Abstract

Two well-known drawbacks in fuzzy clustering are the requirement of assigning in advance the number of clusters and random initialization of cluster centers. The quality of the final fuzzy clusters depends heavily on the initial choice of the number of clusters and the initialization of the clusters, then, it is necessary to apply a validity index to measure the compactness and the separability of the final clusters and run the clustering algorithm several times. We propose a new fuzzy C-means algorithm in which a validity index based on the concepts of maximum fuzzy energy and minimum fuzzy entropy is applied to initialize the cluster centers and to find the optimal number of clusters and initial cluster centers in order to obtain a good clustering quality, without increasing time consumption. We test our algorithm on UCI (University of California at Irvine) machine learning classification datasets comparing the results with the ones obtained by using well-known validity indices and variations of fuzzy C-means by using optimization algorithms in the initialization phase. The comparison results show that our algorithm represents an optimal trade-off between the quality of clustering and the time consumption.

1. Introduction

A validity index is a measure applied in fuzzy clustering to evaluate the compactness of clusters and the separability among clusters.

Numerous validity indices have been applied to measure the compactness and separateness of clusters detected by applying the fuzzy C-means (FCM) algorithm [1,2].

The two well-known main drawbacks of the FCM are the random setting of the initial clusters and the requirement of assigning the number of clusters in advance. The initial selection of the cluster centers can affect the performances of the algorithm in terms of efficiency and number of iterations needed to obtain the convergence. Moreover, the quality of the final fuzzy clusters depends on the choice of the number of clusters, then, it is necessary to use a validity index to evaluate what is the optimal number of clusters.

A simple technique applied to solve these problems is to execute the clustering algorithm several times, varying the initial centers of the clusters and the number of clusters, and to choose the optimal clustering using a validity index to measure the quality of the final clustering. However, this technique can be computationally expensive as the clustering algorithm has to be run many times.

In References [3,4], a technique is proposed which is based on the subtractive clustering algorithm to initialize the clusters, but this method needs to set the maximum peak and the maximum radius parameters.

In Reference [5], a technique, called Fuzzy Silhouette, is proposed: this method generalizes the Average Silhouette Width Criterion [6] applied for evaluating the quality of crisp clustering. The authors of Reference [5] show that the proposed validity measure, unlike other well-known validity measures, such as Fuzzy Hypervolume and Average Partition Density [7] and the Xie-Beni [8] index, can be used as an objective function of an evolutionary algorithm to automatically find the number of clusters; however, this approach requires running FCM many times for each cluster number selection.

In Reference [9], a new optimization method based on the density of the grid cells is proposed to find the optimal initial cluster centers and number of clusters: this approach can reduce run times in high-dimensional clustering.

The K-means algorithm is used in Reference [10] to initialize the centers of the clusters; then, the Partition Coefficient [1,11] and Partition Entropy [12] validity measures are calculated to find the optimal number of clusters. The drawback of this method is that it is highly time consuming and it can be unsuitable for managing massive datasets.

Some authors propose hybrid FCM variations in which meta-heuristic approaches are applied to optimize the initialization of the cluster centers. In Reference [13], a kernel FCM algorithm is proposed in which an evolutive method is applied in order to find the initial cluster centers. A Genetic Algorithm (GA) is proposed in Reference [14] to find the optimal initial FCM cluster centers in image segmentation problems. A Particle Swarm Optimization (PSO) algorithm is proposed in Reference [15] to find the optimal FCM initial cluster centers for sentiment clustering. Three hybrid FCM algorithms, based on Differential Evolution, GA, and PSO methods, are proposed in Reference [16] to optimize the cluster centers’ initialization. These algorithms, while guaranteeing a higher quality of results, require too long execution times, and they too are unsuitable for handling high-dimensional data.

In this paper, we propose a FCM variation in which a new validity index based on the De Luca and Termini Fuzzy Entropy and Fuzzy Energy concepts [17,18] is used to optimize the initialization of the clusters and to find the optimal number of clusters. Our aim is to reach a trade-off between the time consumption and the quality of the clustering algorithm.

Recently, a weighted FCM variation based on the De Luca and Termini Fuzzy Entropy was proposed in order to optimize the initialization of the cluster centers in Reference [19]. To initialize the cluster centers, the authors initially execute a weighted FCM algorithm, in which the weight assigned to a data point is given by a fuzziness measure obtained by calculating the mean fuzzy entropy of the data point and then the initial cluster centers are found when the mean fuzzy entropy of the clustering converges as well.

The algorithm proposed in Reference [19] is less time-consuming than hybrid algorithms using meta-heuristic approaches, but like the algorithm proposed in Reference [10], it applies an iterative method of pre-processing to initialize cluster centers. Furthermore, it does not detect the optimal number of clusters that must be set in advance.

In the proposed algorithm, the validity measure of the quality of clustering based on the fuzzy energy and fuzzy entropy is calculated both in the pre-processing phase to find the optimal initial cluster centers and to determine the optimal number of clusters. We set the number of clusters and randomly assign cluster centers several times, by choosing as initial cluster centers those for which the clustering validity index is greatest; finally, the FCM algorithm runs. We repeat this process by increasing the number of clusters up to a maximum number. After obtaining the final clusters for each setting of the number of clusters, we choose the one with the largest validity index.

In Section 2, we give a brief review on the Fuzzy Energy and Fuzzy Entropy measures of a fuzzy set and of the FCM algorithm. In Section 3, we introduce the proposed FCM algorithm based on the fuzzy energy and entropy-based validity index. In Section 4, we present several experimental results to demonstrate the features of the proposed index by applying to FCM. In Section 5, we present our conclusions.

2. Preliminaries

2.1. Fuzzy Energy and Entropy Measures

Let X be a universe of discourse and F(X) = {A: X → [0, 1]} be the set of all fuzzy sets defined on X. Moreover, let A ∊ F(X) and B ∊ F(Y) be two fuzzy sets defined on the sets X and Y respectively, and let R ⊆ F[X × Y] be a fuzzy relation on X × Y.

In References [17,18], two categories of fuzziness measures of fuzzy sets are defined: fuzzy energy and fuzzy entropy. If X = {x1, …, xm} is a discrete set with cardinality m, the energy measure of fuzziness of the fuzzy set A ∊ F(X) is given by:

where e: [0, 1] → [0, 1] is a continuous function called fuzzy energy function. The following restrictions are required for the function e:

- (1)

- e(0) = 0

- (2)

- e(1) = 1

- (3)

- e is monotonically increasing.

The simplest fuzzy energy function is given by the identity e(u) = u with u ∊ [0.1]. A more general formula for e(u) is:

where p > 0 is a positive number.

The minimal value of the fuzzy energy measure is 0 and the maximal value is given by E(A) = Card(X) = m, where Card(X) is the cardinality of the set X.

The energy measures of a fuzzy set A can be seen as a measure of information contained in this fuzzy set. If E(A) = 0, then A coincides with the empty set; if E(A) = m, then A coincides with the set X.

The entropy measure of fuzziness of the fuzzy set A is given by:

where h: [0, 1] → [0, 1] is a continuous function called fuzzy entropy function. The following restrictions are required for the function h:

- (4)

- h(1) = 0

- (5)

- h(u) = h(1 − u)

- (6)

- h is monotonically increasing in [0, ½]

- (7)

- h is monotonically decreasing in [½, 1].

The simplest fuzzy entropy function is given by:

This fuzzy entropy function has a minimal value of 0 when u is 0 or 1 and a maximum value 1 when u = ½.

De Luca and Termini in Reference [18] propose the following fuzzy entropy function:

This fuzzy entropy function has a maximum value 1 when u = ½, and it is called Shannon’s function.

The entropy measures of a fuzzy set A can be seen as a measure of the fuzziness contained in this fuzzy set. If H(A) = 0, then for each element xi, i = 1, …, m, A(xi) = 0 or A(xi) = 1 and A coincides with a subset of the set X; if H(A) = m, then for each element xi, i = 1, …, m, A(xi) = ½ and the fuzziness of A is maximum.

A problem is to find the fuzzy set from a family of fuzzy sets of F(X) with the highest information content and the lowest fuzziness.

2.2. Fuzzy C-Means Algorithm

Let X = {x1, …, xN} ⊂ Rn be a set of N data points in the n-dimensional space Rn, where xj = (xj1, …, xjn), and let V = {v1, …, vC} ⊂ Rn be the set of centers of the C clusters. Let U be the C × N partition matrix, where uij is the membership degree of the jth data point xj to the ith cluster vi.

The FCM algorithm [1,2] is based on the minimization of the following objective function:

where dij = is the Euclidean distance between the center vi of the ith cluster and the jth object xj, p ∈ [1, +∝] is the fuzzifier parameter (a constant which affects the membership values and defines the degree of fuzziness of the partition). For m = 1, FCM become a hard C-means clustering; the more m tends towards +∝, the more the fuzziness level of the clusters grows.

By considering the following constraints:

and applying the Lagrange multipliers, we obtain the following solutions for (1):

and

An iterative process is proposed in Reference [2] as follows: initially the membership degrees are assigned randomly; in each iteration, the cluster centers are calculated by (4), then the membership degree components are calculated by (5). The iterative process stops at the tth iteration when

where ε > 0 is a parameter assigned a priori to stop the iteration process and

The pseudocodes of the FCM algorithm (Algorithm 1) are shown below.

| Algorithm 1:FCM |

| Input: Dataset X = {x1, …, xN} Output: Cluster centers V = {v1, …, vC}; Partition matrix U Arguments: number of clusters C; fuzzifier p; stop iteration threshold ε

|

3. The Proposed FCM Algorithm Based on a Fuzzy Energy and Entropy Validity Index

Let X = {x1, …, xN} be the set of data points with cardinality N. We consider the fuzzy set Ai ∊ F(X), where Ai(xj) = uij is the membership degree of the jth data point to the ith cluster.

We propose a new validity index based on the fuzzy energy and fuzzy entropy measures to evaluate the compactness of clusters and the separability among clusters.

By using (1) and (3) respectively, we can evaluate the fuzzy energy and the fuzzy entropy of the ith cluster, measuring the fuzzy entropy and the fuzzy energy of the fuzzy set Ai, given by

where the fuzzy energy and entropy are normalized dividing them by the cardinality N of the dataset.

Fuzzy energy (13) measures the quantity of information contained in the ith cluster and fuzzy entropy (14) measures the fuzziness of the ith cluster, namely the quality of the information contained therein.

For example, a cluster with low fuzzy entropy has low fuzziness, so it is compact; however, if it also has a low fuzzy energy, then the information which it contains is low. Hence, even if compact, a very small number of data points will belong to this cluster and this could be due to the presence of noise or outliers in the data. Moreover, a cluster with a high value of fuzzy entropy has high fuzziness and low compactness.

We set the function (2) as fuzzy energy function, where p is given by the value of the fuzzifier parameter. The fuzzy entropy function h(u) is given by the Shannon function (5).

We measure the energy and the entropy of the clustering given by the averages of the energy and entropy of the C clusters:

and

respectively. The proposed validity index, called Partition Energy-Entropy (PEH), is given by the difference between the energy and the entropy of the clustering:

This index varies in the range [−1, 1], the optimal clustering is the one that maximizes PEH, and the greater the value of PEH, the more the clusters are compact and well separated from each other.

We propose a new algorithm, called PEHFCM, in which the PEH index is used to initialize the cluster centers and to find the optimal number of clusters.

In addition to the fuzzifier and iteration error threshold parameters, further arguments of the algorithm are the maximum number of clusters, Cmax, and the number of random selections of initial C clusters, Smax. The PEHFCM algorithm is composed of a For loop in which the number of clusters is initially set to 2 and then cyclically iterated until the Cmax value is reached. In each cycle, Smax sets of cluster centers are initially selected, for each of which the PEH index is calculated. The optimal set of initial cluster centers is the one for which the PEH indicator is maximum. Subsequently, a variation of the FCM algorithm is performed, called FCMV, which, unlike FCM, uses the set of initial cluster centers V0 as a further argument instead of setting it randomly. Finally, the PEH index of the final clustering is calculated.

The PEHFCM algorithm returns the optimal number of C* clusters and the respective sets of cluster centers V* and partition matrix U* corresponding to the highest PEH validity index.

Below, we show the algorithm PEHFCM (Algorithm 2) and the algorithm FCMV (Algorithm 3), called PEHFC.

| Algorithm 2:PEHFCM. |

| Input: Dataset X = {x1, …, xN} Output: Cluster centers V = {v1, …, vC}; Partition matrix U, optimal number of clusters C* Arguments: max num of clusters, Cmax; max num of random selections of the initial cluster centers, Smax, fuzzifier p; stop iteration threshold ε

|

| Algorithm 3:FCMV |

| Input: Dataset X = {x1, …, xN} Initial cluster centers V0 = {v10, …, vC0} Output: Cluster centers V = {v1, …, vC}; Partition matrix U Arguments: Initial cluster centers V0 = {v10, …, vC0}; number of clusters C; fuzzifier p; stop iteration threshold ε

|

We can evaluate the computational complexity of PEHFCM, considering that the computational complexity of the FCM algorithm is by O(N·n·c2·I), where N is the number of objects, n their dimension, c the number of clusters, and I is the number of iterations.

In PEHFCM, for not high Smax values, it is possible to neglect the complexity of the computation of energy and entropy measures of the initial Smax cluster centers, approximating the computational complexity by O(N·n·c2·I·Cmax), where Cmax is the maximum number of clusters and I is the mean number of iterations of each FCM execution.

Then, PEHFCM has the same computational complexity of the FCM in which the measurement of a validity index is performed to calculate the optimal number of clusters.

Moreover, due to the problem of initialization of cluster centers, FCM is generally performed several times, increasing its computational complexity; on the other hand, PEHFCM does not need to be executed several times as the algorithm determines the initial centers of the optimal clusters.

To measure the performances of the proposed algorithm, we compare the results with the ones obtained by applying FCM and applying our method by using other well-known validity indices: Partition Coefficient (PC) [1,11], Partition Entropy (PE) [12], Fukuyama and Sugeno (FS) [20], Xie-Beni (XB) [8], and Partition Coefficient And Exponential Separation (PCAES) [21], described below.

The PC validity index is given by the formula:

It measures the crispness of the clusters. The value C* is obtained when PC is maximum.

The PE validity index is given by:

It measures the mean fuzziness of the clusters, and the optimal number of clusters, C*, is obtained when PE is minimum.

The FS validity index is given by:

where is the average of the cluster centers. The first term in (20) measures the compactness of the clusters, the other one the separability among the same clusters. The optimal number of clusters, C*, is obtained when FS is maximum.

The XB validity index is given by the formula:

The numerator measures the compactness of the clusters, and the denominator indicates the separability between clusters. The optimal number of clusters, C*, is obtained when XB assumes the minimum value.

The PCAES validity index is given by the formula:

where the vector is the average of the cluster center. The first term in (22) measures the compactness of clusters, and the last term the separability among clusters. The optimal number of clusters, C*, is obtained when PCAES assumes the minimum value.

We complete our comparisons by comparing our method with hybrid metaheuristic algorithms.

The comparison tests are performed on well-known UC Irvine (UCI) machine learning classification datasets (http://archive.ics.uci.edu/ml/datasets.html). We measure the quality of the results in terms of accuracy, precision, recall, and F1-score [22,23].

4. Results

We show the results obtained on a set of over 40 classification UCI machine learning datasets. In all experiments, we used an Intel core I5 3.2 GHz processor, m = 2, ε = 0.01, and Smax = 100.

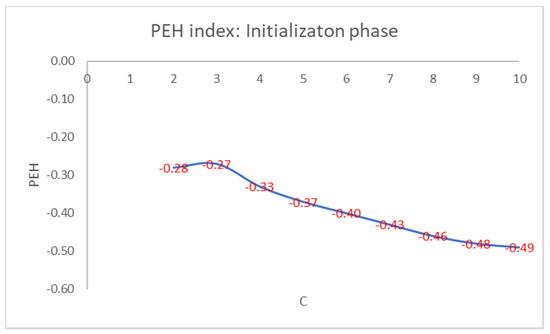

For brevity, we only show in detail the results obtained on the well-known Iris flower dataset. This dataset contains 150 data points with 4 features given by the length and the width of the sepals and petals measured in centimeters: 50 data points are classified as belonging to the type of Iris flower Iris Setosa, 50 data points to the type Iris Versicolor, and 50 data points to the Iris Virginica type. Only the class Iris Setosa is linearly separable from the other two, which are not linearly separable. We set the max number of clusters, Cmax, to 10. In Figure 1, we show the values of the PEH index of the best initial cluster centers obtained for each setting of the number of clusters.

Figure 1.

PEH index calculated in the initialization phase by varying the number of clusters.

As can be seen from Figure 1, the maximum values of the PEH index are obtained for C = 3 by varying the number of clusters.

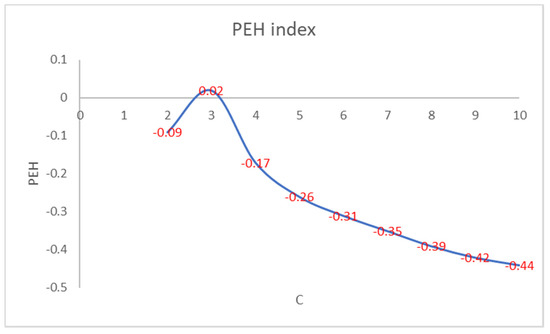

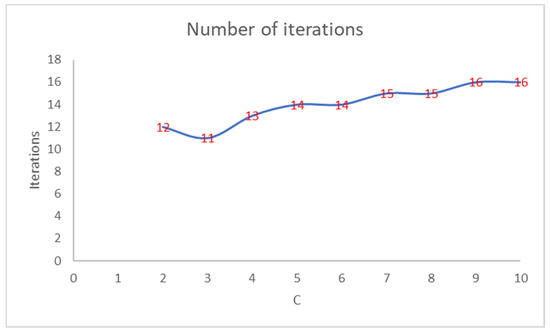

Figure 2 shows that the number of iterations increases as the PEH value of the initial clustering decreases. Figure 3 shows the trend of the number of iterations necessary to reach the convergence by varying the number of clusters in PEHFCM. The least number of iterations (12) is obtained for C = 3.

Figure 2.

PEH index of the final clustering by varying the number of clusters.

Figure 3.

Number of iterations of the PEHFCM algorithm by varying the number of clusters.

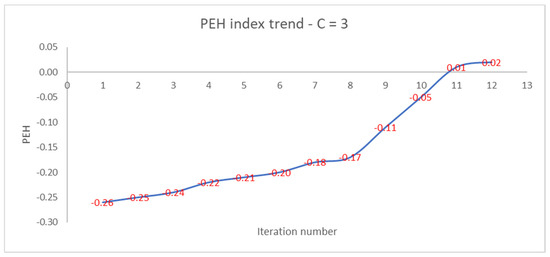

Like the PEH index of the final clustering, the number of iterations increases as the PEH value of the initial clustering decreases. In Figure 4, we show the trend of the PEH in any iteration for C = 3.

Figure 4.

Trend of PEH with respect to the iteration number for C = 3.

The PEH index increases slightly, then increases rapidly after the 8th iteration and reaches a plateau at the 12th iteration. We compare the performances of the PEH index with the ones of the PC, PE, FS, and XB validity indices.

Table 1 shows the optimal number of clusters found using the validity index, the number of iterations necessary for the convergence, and the running time.

Table 1.

Iris Dataset: validity indices comparison of number of iterations and running time.

The best results are obtained by executing PEHFCM with respect to FCM + PC and FCM + PE (resp., FCM + FS and FCM + XB) when the optimal number of clusters obtained is 2 (resp., 3). In both cases, the least number of iterations and the shortest execution time are achieved using PEHFCM. In addition, we compare the results obtained by executing PEHFCM with the ones obtained via the entropy-based weighted FCM algorithm (EwFCM) [19] and the metaheuristic PSOFCM proposed in Reference [15].

Table 2 shows the running time, the accuracy, precision, recall, and F1-Score obtained by executing FCM + FS, FCM + XB, FCM + PCAES, PEHFCM, EwFCM, and PSOFCM.

Table 2.

Dataset Iris: Comparisons of running time and classification performances.

The results in Table 2 show that the best classification performances are given by EwFCM and PSOFCM. PEHFCM has the shortest running time and classification performances comparable with EwFCM and PSOFCM.

These results are confirmed by testing other UCI machine datasets. Here, we present the results obtained on the Wine dataset. This dataset is given by 178 data points having 13 features: each data point represents an Italian wine derived from a specific crop and their features provide information on its chemical composition. The dataset is partitioned in three classes, corresponding to three crops.

In Table 3, we show the results obtained by considering the five validity indices.

Table 3.

Wine Dataset: validity indices comparison of number of iterations and running time.

Even in this case, PEHFCM provides the best number of iterations and running time.

Table 4 shows the running time and the classification performances of all the compared algorithms.

Table 4.

Wine Dataset: Comparisons of running time and classification performances.

Also, here, the results obtained on the Wine dataset show that PEHFCM provides the shortest execution time and classification performances comparable to those obtained by using EwFCM and PSOFCM.

In Table 5, the accuracy values obtained for some datasets used in our comparison tests are shown. These results confirm that the accuracy performances provided by PEHFCM are better than the ones provided by FCM + FS, FCM + XB, and FCM + PCAES, and are comparable to those provided by EwFCM and PSOFCM.

Table 5.

Accuracy comparisons for some UCI machine learning datasets.

We summarize the results obtained on all the classification UCI machine learning datasets used in our tests, calculating:

- -

- The mean percent of gain (or loss) of running time. If TC is a running time calculated by running a FCM-based method and TCPEH is the one calculated with PEHFCM, this index is given by the average of the percentage of (TCPEH − TC)/TCPEH. This value is equal to 0 for PEHFCM.

- -

- The mean percentage gain (or loss) of a classification index. If IC is a classification index value obtained by running a FCM-based method and ICPEH is the one obtained with PEHFCM, this index is given by the average of the percentage of (IC − ICPEH)/ICPEH. This value is equal to 0 for PEHFCM.

If the value of a summarized index is positive, then, by executing the algorithm, we obtain a gain in terms of running time or of the classification index; conversely, we get a loss if that value is negative. In Table 6, we show these results.

Table 6.

Average percentage of gain of running time and classification indices.

The results in Table 6 show that PEHFCM provides the best running time; indeed, the running times measured executing the other FCM-based algorithms were more than 28% longer than the one obtained by executing PEHFCM. The gain of accuracy, precision, recall, and F1-score obtained executing EwFCM and PSOFCM was less than 2%.

5. Conclusions

We proposed a variation of FCM in which a validity index was based on the fuzzy energy and fuzzy entropy of the clustering, in order to find an optimal initialization of the cluster centers and the optimal number of clusters.

The proposed method represents a trade-off between the running time and the clustering performances: it aims to overcome the problems of initializing cluster centers and setting the number of clusters a priori, without, at the same time, requiring long execution times due to the pre-processing phase necessary to optimize the initialization of cluster centers.

The results of experimental tests applied on well-known UCI machine learning classification datasets showed that the PEHFCM algorithm provides shorter running times than the EwFCM and PSOFCM algorithms, which use an optimization method based on fuzzy entropy and a metaheuristic PSO-based method to determine the initial cluster centers, respectively. Furthermore, PEHFCM provides classifier performance comparable to EwFCM and PSO FCM.

Since the proposed method is FCM-based, its computational complexity depends on the number of data points and the number of features, and it may be unsuitable for managing massive and high-dimensional datasets. In the future, we intend to adapt PEHFCM to manage high-dimensional and massive datasets, in which it is essential to guarantee high performances both in terms of quality of results and execution times.

Author Contributions

Conceptualization, F.D.M. and S.S.; methodology, F.D.M. and S.S.; software, F.D.M. and S.S.; validation, F.D.M. and S.S.; formal analysis, F.D.M. and S.S.; investigation, F.D.M. and S.S.; resources, F.D.M. and S.S.; data curation, F.D.M. and S.S.; writing—original draft preparation, F.D.M. and S.S.; writing—review and editing, F.D.M. and S.S.; visualization, F.D.M. and S.S.; supervision, F.D.M. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Kluwer Academic Publishers: Norwell, MA, USA, 1981. [Google Scholar]

- Bezdek, J.C.; Ehrlich, R.; Full, W. FCM: The fuzzy c-means clustering algorithm. Comput. Geosci. 1984, 10, 191–203. [Google Scholar] [CrossRef]

- Chen, J.; Qin, Z.; Jia, J. A Weighted Mean Subtractive Clustering Algorithm. Inf. Technol. J. 2008, 7, 356–360. [Google Scholar] [CrossRef]

- Yang, Q.; Zhang, D.; Tian, F. A initialization method for Fuzzy C-means algorithm using Subtractive Clustering. In Proceedings of the Third International Conference on Intelligent Networks and Intelligent Systems, Shenyang, China, 1–3 November 2010; Volume 10, pp. 393–396. [Google Scholar]

- Campello, R.J.G.B.; Hruschka, E.R. A fuzzy extension of the silhouette width criterion for cluster analysis. Fuzzy Sets Syst. 2006, 157, 2858–2875. [Google Scholar] [CrossRef]

- Everitt, B.S.; Landau, S.; Leese, M.; Stahl, D. Cluster Analysis, 5th ed.; Arnold: Paris, France, 2011; p. 343. ISBN 978-0-470-74991-3. [Google Scholar]

- Gath, I.; Geva, A.B. Unsupervised optimal fuzzy clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 773–781. [Google Scholar] [CrossRef]

- Xie, X.L.; Beni, I.G. A validity measure for fuzzy clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 841–847. [Google Scholar] [CrossRef]

- Zou, K.-Q.; Wang, Z.-P.; Pei, S.-J.; Hu, M. A New Initialization Method for Fuzzy c-Means Algorithm Based on Density. In Advances in Multimedia, Software Engineering and Computing Vol.2; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2009; Volume 54, pp. 547–553. [Google Scholar]

- Hayet, D.; Hamza, C.; Mostefa, B.; Nadjette, D.H. Initialization Methods for K-means and Fuzzy Cmeans Clustering Algorithm. In Proceedings of the International Conference on Automatic, Télécommunication, and Signals, Annaba, Algeria, 16–18 November 2015; 6p. [Google Scholar] [CrossRef]

- Trauvert, E. On the meaning of Dunn’s partition coefficient for fuzzy clusters. Fuzzy Sets Syst. 1988, 25, 217–242. [Google Scholar] [CrossRef]

- Bezdek, J.C. Cluster validity with fuzzy sets. J. Cybern. 1973, 3, 58–73. [Google Scholar] [CrossRef]

- Ding, Y.; Fu, X. Kernel-based fuzzy c-means clustering algorithm based on genetic algorithm. Neurocomputing 2016, 188, 233–238. [Google Scholar] [CrossRef]

- Li, D.; Han, Z.; Zhao, J. A Novel Level Set Method with Improved Fuzzy C-Means Based on Genetic Algorithm for Image Segmentation. In Proceedings of the 2017 3rd International Conference on Big Data Computing and Communications (BIGCOM), Chengdu, China, 10–11 August 2017; pp. 151–157. [Google Scholar]

- Siringoringo, R.; Jamaluddin, J. Initializing the Fuzzy C-Means Cluster Center with Particle Swarm Optimization for Sentiment Clustering. In Proceedings 1st International Conference of SNIKOM 2018; IOP Publishing: Bristol, UK, 2019; Volume 1361, p. 012002. [Google Scholar]

- Franco, D.G.D.B.; Steiner, M.T.A. Clustering of solar energy facilities using a hybrid fuzzy c-means algorithm initialized by metaheuristics. J. Clean. Prod. 2018, 191, 445–457. [Google Scholar] [CrossRef]

- De Luca, A.; Termini, S. Entropy and energy measures of fuzzy sets. In Advances in Fuzzy Set Theory and Applications; Gupta, M.M., Ragade, R.K., Yager, R.R., Eds.; North-Holland: Amsterdam, The Netherlands, 1979; pp. 321–338. [Google Scholar]

- De Luca, A.; Termini, S. A definition of non-probabilistic entropy in the setting of fuzzy sets theory. Inf. Control 1972, 20, 301–312. [Google Scholar] [CrossRef]

- Cardone, B.; Di Martino, F. A Novel Fuzzy Entropy-Based Method to Improve the Performance of the Fuzzy C-Means Algorithm. Electronics 2020, 9, 554. [Google Scholar] [CrossRef]

- Fukuyama, Y.; Sugeno, M. A new method of choosing the number of clusters for the fuzzy C-means method. In Proceedings of the Fifth Fuzzy Systems Symposium, Kobe, Japan, 2–3 June 1989; pp. 247–250. [Google Scholar]

- Wu, K.L.; Yang, M.S. A fuzzy validity index for fuzzy clustering. Pattern Recognit. Lett. 2005, 26, 1275–1291. [Google Scholar] [CrossRef]

- Ming, T.K. Encyclopedia of Machine Learning; Sammut, C., Webb, G.I., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; ISBN 978-0-387-30164-8. [Google Scholar]

- Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2018, 13. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).