1. Introduction

In this work, we present a novel way to estimate a discrete probability distribution, denoted , using both expert knowledge and data. This is a crucial aspect for many applications. Indeed, when deploying a decision support tool, we often rely entirely on expert/domain knowledge at the beginning; the data only comes with the use of the algorithm in real life. However, we need a good model of the environment to directly train the decision support tool with a planning algorithm. This model of the environment is to be refined and corrected as the data flow increases.

We assume here to have some expert knowledge in the form of an initial a priori on the marginals, the moments, and/or the support of or any other relevant information. We also assume that we sequentially receive data. We denote ,…, an independent and identically distributed (i.i.d) sample following a given unknown discrete probability distribution in .

One example of application may come from the objective of building a symptom checker for rare diseases [

1]. In this case,

represents the probabilities of the different possible combinations of symptoms, given the event that the disease of the patient is

D. More precisely, we denote:

We aim to estimate the distribution where D is the random variable disease. ,…, are the typical symptoms of the disease D; all are binary random variables, i.e., the symptom can be present or absent. We aim to estimate the different combinations (as , for example) when we only have an expert a priori on the marginals , for all .

Of course, a first idea would be to assume that the symptoms are conditionally independent given the disease. However, we expect complex correlations between the typical symptoms of a given disease. Indeed, we can imagine two symptoms that are very plausible individually, but which rarely occur together (or even never, in the case of incompatible symptoms like microcephaly and macrocephaly).

Note also that the assumption of conditional independence would make it possible to present a disease without having any of the symptoms related to this disease in the database (when there is no such that ), which should be impossible.

Generally speaking, if we had enough empirical data, we would no longer need the experts. Conversely, without empirical data, our model must be based entirely on experts. We detail here two different approaches to dealing with the intermediate regime where we do not have enough data to do without the a priori given by the experts, but where we have enough data to correct and specify this initial a priori. These approaches are meaningful as long as we do not know how much data have been used to build the initial a priori, and as long as we really try to combine two heterogeneous forms of information: Experts and empirical data.

In

Section 2.1, we first recall the principle of maximum entropy, which is the basic brick we use to build an expert model. We then briefly introduce the proposed approach to mixing experts and data in

Section 2.2. We underline the extent to which this approach is superior to the one we previously proposed in [

1]. The Barycenter approach that we propose here provides an objective way to choose the weight to be given to experts compared to data. On the contrary, the maximum likelihood with entropic penalization approach of [

1] was shown to be sensitive to the choice of the regularization parameter. In

Section 3, we outline a review of the literature. Finally, in

Section 4, we show both empirically and theoretically that our barycenter estimator is always more efficient than the best of the two models (expert or data) within a constant.

It should be noted that even though we will refer throughout the paper to our particular application in medicine, our framework is relevant for any inference problem involving an initial a priori with a particular form (marginals, moments, support, etc.) combined with data. Biology, ecology, and physics, to name a few, are areas where ideas of maximum entropy have been used for a long time and where the ideas developed in this work could be interesting. See [

2] for an overview of the maximum entropy applications for inference in biology.

2. Mixing Expert and Empirical Data

2.1. Building an Expert Model: The Maximum Entropy Principle

Of course, the aim of benefiting simultaneously from expert data and empirical data has a very old history. This is the very essence of Bayesian statistics [

3], which aims to integrate expert data in the form of an a priori, which is updated with empirical data using the Bayes’ theorem to obtain what will be called the posterior.

Note that in our case, we do not have a classical a priori modeling the model parameters with probability distributions. We have an a priori on the marginals, such as a number of constraints on the distribution to be estimated. The absence of an obvious a priori to model the distribution of the parameters naturally leads us to the idea of maximum entropy, theorized by [

4]. Indeed, if no model seems more plausible to us than another, then we will choose the least informative. This is a generalization of the principle of indifference often attributed to Laplace:

“We consider two events as equally probable, when we see no reason that makes one more probable than the other, because, even if there is an unequal possibility between them, since we don’t know which is the biggest, this uncertainty makes us look at one as as likely as the other” [

5].

This principle therefore takes the form of an axiom that allows us to construct a method to choose an a priori: The least informative possible a priori that is consistent with what we know.

We then define the distribution of maximum entropy as follows:

where

.

is the set of constraints fixed by experts and

is the probability simplex of the discrete probability distributions of dimension

K:

Note that is well-defined; namely, it exists and is unique, as long as is a convex set. Indeed, the function is strictly concave; it is a classic result that a strictly concave function under convex constraints admits a unique maximum.

It is well known that if only contains the constraints for the marginals, then is nothing more than the independent distribution.

However, in our case, we can add some information about the structure of the desired distribution as constraints integrated into . We judge that it is impossible to have a disease without having at least a certain number of its associated symptoms: One, two, or more depending on the disease. Indeed, the diseases we are interested in manifest themselves in combinations of symptoms. The combinations which allow the presence of two simultaneous but exclusive symptoms should also have constraints that are equal to 0. All combinations of constraints are conceivable, as long as remains a convex closed space, in order to ensure the existence and uniqueness of .

We therefore construct our a priori by taking the maximum entropy distribution, checking the constraints imposed by the experts. Thus, among the infinite distributions that verify the constraints imposed by the experts, we choose the least informative distribution ; in other words, the one closest to the conditional independence distribution.

We need to add information to move from the information provided by the experts to the final distribution, and we want to add as little as possible to what we do not know. This approach is referred to as maxent (maximum entropy) and has been widely studied in the literature [

4,

6,

7].

2.2. Barycenters between Experts and Data

Our target probability distribution is denoted

and is defined on the probability simplex

of Equation (

3).

The i.i.d. sample of

is denoted

,…,

, where

. The empirical distribution

is given by:

Following the ideas of

Section 2.1, we define the expert distribution as the distribution which maximizes entropy while satisfying the constraints fixed by experts:

where

is the intersection of the simplex probabilities with the set of constraints fixed by experts. In our medical context, the set of constraints is composed of a list of censured combinations and a list of marginals coming from the literature. Censured combinations are combinations of symptoms that are set to zero because they involve the simultaneous presence of two incompatible symptoms and/or combinations that do not involve enough of a presence of typical symptoms.

Note that it is possible to give more or less credit to the marginals given by experts by formulating the constraint as an interval (wider or narrower) rather than as a strict equality. The distribution of experts is then defined as the least informative distribution consistent with what we know.

Let

be any dissimilarity measured between two probability distributions. Our barycenter estimator mixing expert and empirical data is then defined as:

where

and

is the probability measure defined on the product space

,…,

.

is then defined as the closest distribution from the experts, in the sense of the dissimilarity measure , which is consistent with the observed data.

For such a construction to be possible, we will therefore have to choose a measure of dissimilarity so that we have a concentration of the empirical distribution around the true distribution for .

Such a formulation has several advantages over the maximum likelihood with entropic penalization approach previously proposed in [

1]. First, we do not have to choose a regularization parameter, which seems to have a strong impact on the results of the estimator (see [

1]). This parameter is replaced by the parameter

, for which it is reasonable not to take more than

and which appears to have low impact on the result of

(see

Section 5). Secondly, the solution of (

6) can be (depending on the choice of the dissimilarity measure

) easier to compute than that of the optimization problem associated with the penalization approach, for which a closed form of the solution could not be derived [

1]. Mathematically,

is the projection of the experts on the confidence interval centered on the empirical distribution and radius

.

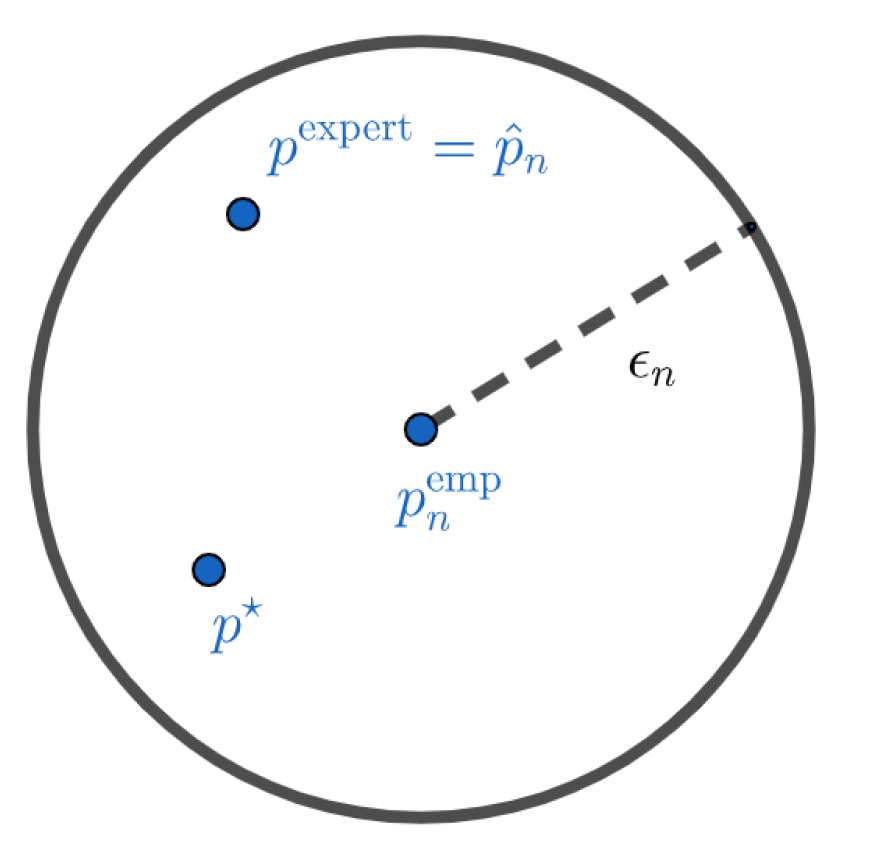

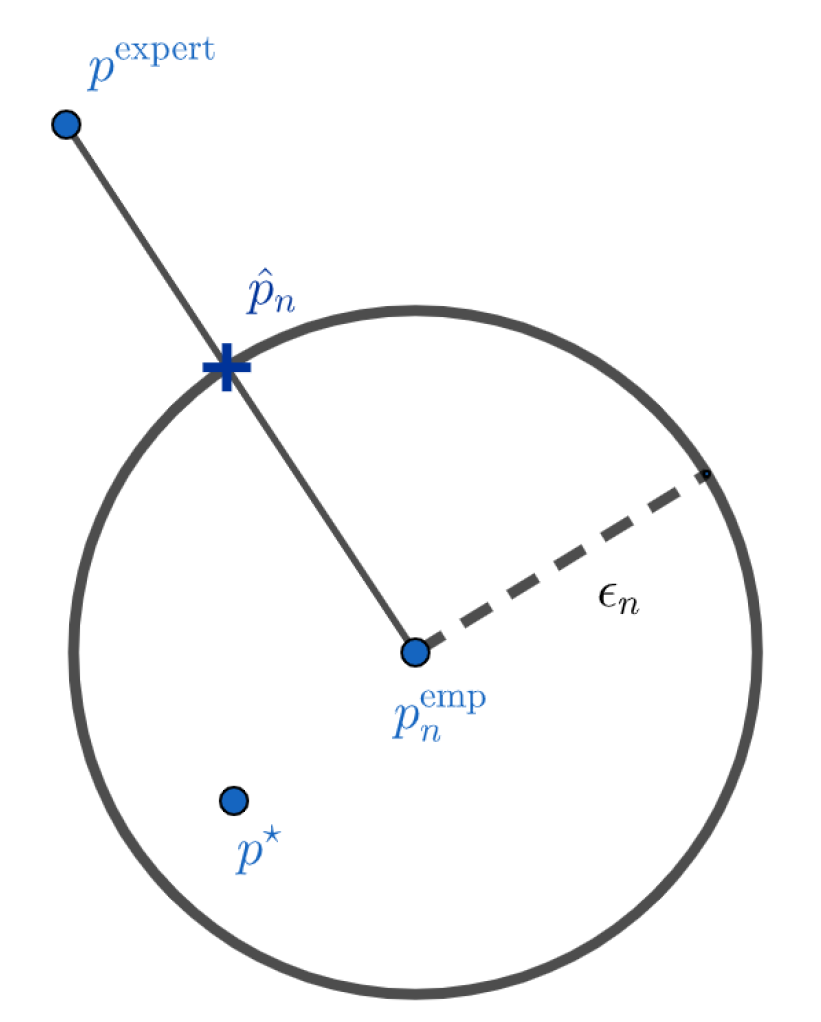

Figure 1 and

Figure 2 give a visual interpretation of such a construction. These representations should not be taken literally. The objects we work on live in the simplex of probabilities, and their geometry is very different from the Euclidean interpretation of

Figure 1 and

Figure 2. These figures are mainly here to illustrate the two different cases we can have. In

Figure 1, we do not have much data and the confidence interval is wide. In this case, the projection of the experts on the confidence interval centered on the empirical distribution is the expert distribution itself. We do not have enough elements to modify our initial a priori. This case can also occur when the initial a priori of the experts is very close to the true distribution. On the contrary, in

Figure 2, we modify the initial a priori because the experts do not belong to the confidence interval anymore.

2.3. A Baseline: Mixture Model with Bayesian Weights

We present here an alternative approach that will be our baseline in the numerical experiments of

Section 5. We still aim to mix the empirical distribution,

, built with an i.i.d. sample of

:

,…,

, with the distribution of the experts

.

The idea is to make a linear combination of these two models:

where the mixture parameters are proportional to the log-likelihood of the data according to the model considered, namely:

and

where

ℓ stands for the log-likelihood.

This is a parametric Bayesian approach, since we apply the Bayes theorem, stating that the posterior is proportional to the product of the prior with the likelihood function.

3. Related Works

3.1. Expert System with Probabilistic Reasoning

The creation of a decision support tool for medical diagnosis has been an objective since the beginning of the computer age. Most of the early work proposed a rule-based expert system, but in the 1980s, a significant part of the community studied the possibility of building an expert system using probabilistic reasoning [

8]. Bayesian probabilities and methods were therefore considered as good ways to model the uncertainty inherent in medical diagnosis relatively early.

The assumption of conditional independence of symptoms given the disease has been intensively discussed, as it is of crucial importance for computational complexity. Some researchers considered this hypothesis harmless [

9], while others already proposed a maximum entropy approach to face this issue [

10,

11,

12].

However, it seems that none of the work of that time considered the expert vs. empirical data trade-off that we face. In the review article [

13] presenting the state-of-the-art of the research of that time (1990) about this issue, it is clearly mentioned that these methods only deal with data of probabilistic forms. More precisely, they assume that they have an a priori on the marginal but also on some of the combinations of symptoms (in our case, we would assume that we have an a priori on

, for example), and propose a maximum entropy approach where these expert data are treated as constraints in the optimization process. Once again, this is not the case for us, since we have only an a priori on the marginal (and a certain number of constraints), as well as experimental data. This field of research was very active in the 1980s and then gradually disappeared, probably due to the computational intractability of the algorithms proposed for the computer resources of the time.

3.2. Bayesian Networks

Bayesian networks [

14] were then quickly considered as a promising alternative for modeling probabilistic dependency relationships between symptoms and diseases [

8]. These are now used in most expert systems, particularly in medicine [

15].

A Bayesian network is generally defined as an acyclically oriented graph. The nodes in this graph correspond to the random variables: Symptoms or diseases in our case. The edges link two correlated random variables by integrating the information of the conditional law of the son node with respect to the father node. The main advantage of such a model is that it can factorize the joint distribution using the so-called global Markov property. The joint law can indeed be expressed as the product of the conditional distributions of each node given its direct parents in the graph [

16].

First of all, the construction of a Bayesian network implies the inference of its structure, i.e., to determine the nodes that must be linked by an edge to those that can be considered conditionally independent of the rest of the graph (structure learning). Then, learning the network implies learning the parameters, i.e., the probabilities linking the nodes (parameter learning).

It is therefore natural to also find works that aimed at mixing expert and empirical data in this area of the literature. In [

17], the experts’ indications take a particular form since they indicate by hand correlations, positive or negative, between variables. The approach of [

18] is also quite distant, because it is prefers being based on data. [

18] only uses expert indications for additional variables for which there are no data, typically rare events never observed in the database. A work closer to ours is [

19], where the authors assume that they have a first Bayesian network built entirely by the experts, to which they associate a degree of trust. The authors then use the available data to correct this expert network. We distinguish ourselves from this work in our effort to find an objective procedure for the weight to be given to experts in relation to the data (and for this weight not to be set by the experts themselves).

Note also that the main interest of Bayesian networks is to take advantage of conditional independence relationships known in advance, as they are pre-filled by experts or inferred from a sufficient amount of data. However, in our case, we do not have such an a priori knowledge about the dependency relationships between symptoms and or enough data to infer them.

3.3. From the Marginals to the Joint Distribution

Estimating the joint distribution from the marginal is an old problem, which is obviously not necessarily related to expert systems. This problem is sometimes referred to in the literature as the “cell probabilities estimation problem in contingency tables with fixed marginals”. The book [

20] gives a good overview of this field. We can trace back to the work of [

21], which assumes knowing the marginal and having access to a sample of empirical data, and aims to estimate the joint distribution. In this article, they proposed the “iterative proportional fitting procedure” (IPFP) algorithm, which is still very popular for solving this problem.

An important assumption of [

21] is that each cell of the contingency table receives data. In [

22], the authors prove that the asymptotic estimator obtained by an IPFP algorithm is the distribution that minimizes the Kullback–Leibler divergence from the empirical distribution under the constraint to respect the marginal experts.

However, an IPFP algorithm is not suitable for our problem for two main reasons: First, we do not have absolute confidence in the marginals given by experts (we want to allow ourselves to modify them as we collect more data) and second, because we are interested in rare diseases, we do not expect to have a sufficient amount of data. In fact, many of the cells in the contingency table we are trying to estimate will not receive data, but it would be disastrous in our application to assign a zero probability to the corresponding symptom combination.

In a sense, an IPFP algorithm does exactly the opposite of what we are aiming for: It modifies empirical data (as little as possible) to adapt them to experts, while we aim to modify experts (as little as possible) to make them consistent, in a less restrictive sense, with empirical data.

We should also mention the work related to our problem in applications of statistics in the social sciences, where researchers aim to construct a synthetic population from the marginal, coming from several inconsistent sources [

23]. Their proposed approach also uses ideas of maximum entropy, but it is still different from our trade-off of expert vs. empirical data, since they built their model without samples.

3.4. The Kullback Centroid

Our optimization problem in Equation (

6) in the particular case where the dissimilarity measure

is the Kullback–Leibler divergence is called moment-projection (M-projection) in the literature. The properties of these projections have been intensely studied [

24].

Note that the Lagrangian associated with such an optimization problem is then nothing more than a Kullback–Leibler centroid. These objects or variations/generalizations of them (with Jeffrey’s, Bregman’s divergences, etc.) have been the subject of research since the paper of [

25]. For example, articles [

26,

27] study cases where an exact formula can be obtained, and propose algorithms when this is not the case.

However, we have not found any use of these centroids to find a good trade-off of expert vs. empirical data as we propose in this paper. Bregman’s divergence centroids have been used to mix several potentially contradictory experts; the interested reader may refer to the work of [

28,

29]. We could certainly consider that the empirical distribution

is a second expert, and that our problem is the same as mixing two experts: Literature and data. However, the question of the weight to be given to each expert, which is the question that interests us here, will not be resolved. In [

28], the aim is rather to synthesize contradictory opinions of different experts by fixing the weight to be given to each expert in advance. We propose, for our part, an objective procedure to determine the weight to be given to experts compared to empirical data.

4. Theoretical Properties of the Barycenter Estimator

4.1. Barycenter in Space

In this section we work in the space. Let us recall that the classic norm on the space is given by: .

Following the ideas presented in

Section 2.2, we define our estimator,

as follows:

where

To control

, we use the concentration inequality obtained in the recent work of [

30]. In the literature, most of the concentration inequalities for the empirical distribution use the

norm. This is why, even though we will present the following results by trying to generalize to spaces

for all p, in practice, only

interests us. The proofs for the different theoretical results of this section are relegated to the

Appendix A.

Proposition 1 (Existence and uniqueness).

The estimator defined by (8) exists for all . is unique if and only if .

In the following, therefore refers to a set of probability measures.

The next proposition shows that one of the solutions of (

8) can always be written as a barycenter between

and

. This property therefore provides us with an explicit expression of a solution of (

8), which was not otherwise trivial to obtain by a direct calculation looking for the saddle points of the Lagrangian (for example, in the case

).

Proposition 2. Let be defined by (8); then for all i, it exists such that :where if and otherwise. Therefore, one of the elements of

can be written under the form of a barycenter. For the sake of simplicity, in the following, we will designate

as the solution of (

8) for

, which can be written under the form of (

10) rather than using the whole set of solutions.

It is now a question of deriving a result proving that mixing experts and data, as we do with , represents an interest rather than a binary choice of one of the two models. For this reason, we show in the following proposition that, with a high probability, our estimator is always better than the best of the models within a constant.

Theorem 1. Let be defined by (8). Then, we have with probability of at least : 4.2. Barycenter Using the Kullback–Leibler Divergence

In this section, we study the theoretical properties of the solution of Equation (

6) in the particular case where the dissimilarity measure

is the Kullback–Leibler divergence. The proofs for the different theoretical results of this section are relegated to the

Appendix B.

The Kullback–Leibler divergence between two discrete probability measures

p and

q is defined as:

Let us recall that the Kullback-Leibler divergence is not a distance, since it is not symmetric and does not satisfy the triangular inequality; however, it is positively defined [

6].

We define our estimator as:

where

To calibrate

, we can use the concentration inequality obtained in [

30]. More precisely, we have:

In the following proposition, we show the existence and uniqueness of our estimator

and the fact that our estimator is a barycenter. However, unlike in the case of

of Equation (

8), it does not seem possible to obtain a closed form for

this time.

Proposition 3. Let be defined by (12); then, exists and is unique. Moreover, can be written under the following form:where is a non-negative real such that: The following proposition is intended to be the analog of the proposition 1 when is the Kullback–Leibler divergence. We prove that the centroid is better than the experts (with high probability). On the other hand, we obtain that when , the barycenter is better than the empirical distribution. To obtain guarantees when seems less obvious and requires control over the quantity .

Theorem 2. Let be defined by (12); then, we have with probability at least :where Remark 1. Note that is infinite if does not have the same support as that of . Nevertheless, obtaining a result for would require us to have a concentration on , which we do not have. Note that is infinite until we have sampled all the elements of the support of at least one time.

5. Numerical Results

For each experiment in this section, we generate a random distribution that we try to estimate. To do this, we simulate some realizations of a uniform distribution and renormalize in order to sum up to 1.

We also generate four different distributions that will serve as a priori for the inference: . The first three priors are obtained by a maximum entropy procedure under constraint to respect marginals of having undergone a modification. We added to the marginals of a Gaussian noise of zero expectation and variance equal to , and , respectively. The last prior is chosen to be equal to the distribution (the experts provided us with the right distribution).

We then sequentially sample data from

, i.e., we generate patients, and update for each new datum and each different a priori, the left centroid

(using an Uzawa algorithm [

31]), the barycenter

, and the empirical distribution

, as well as the divergences

and

and the norms

and

.

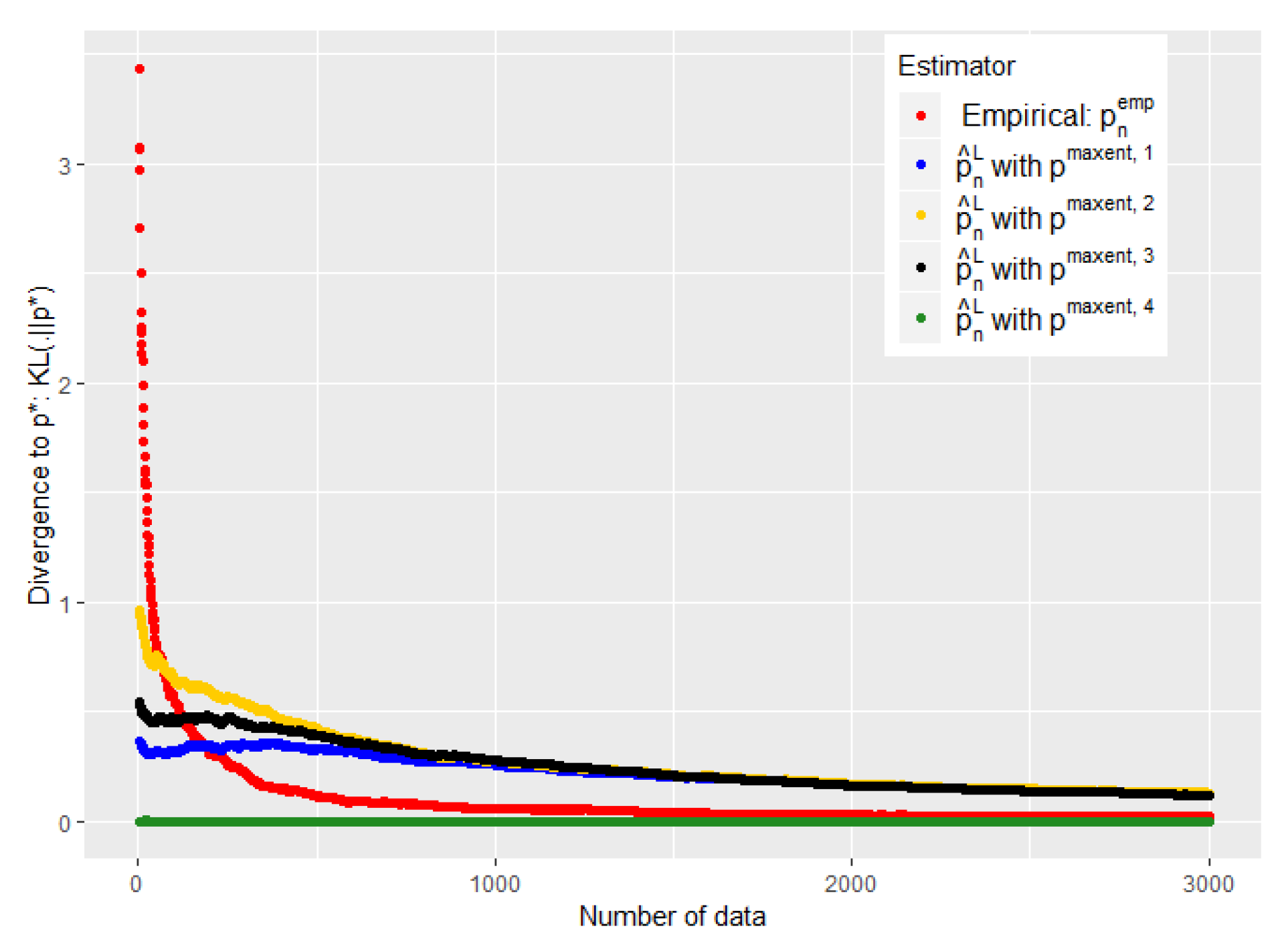

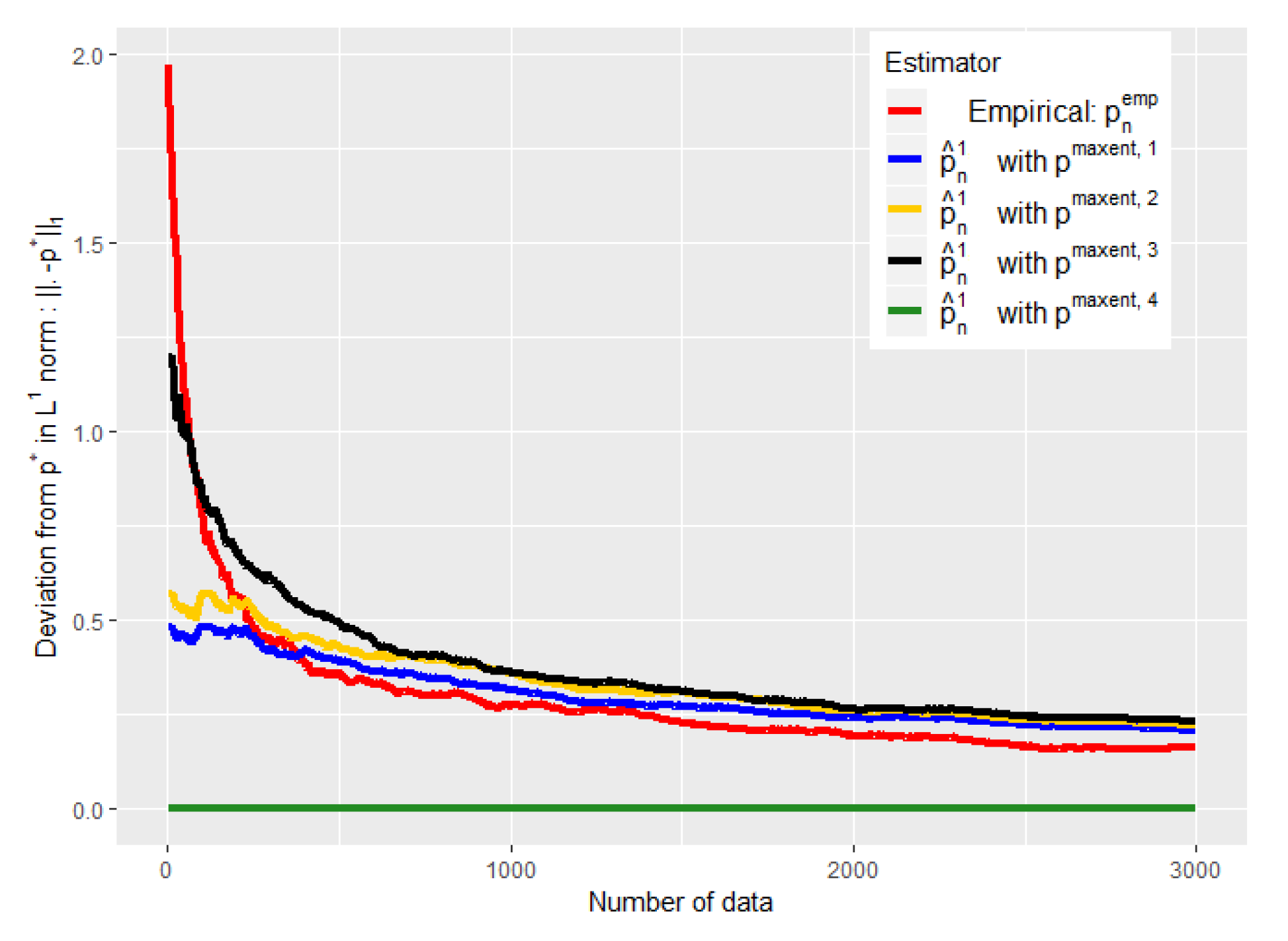

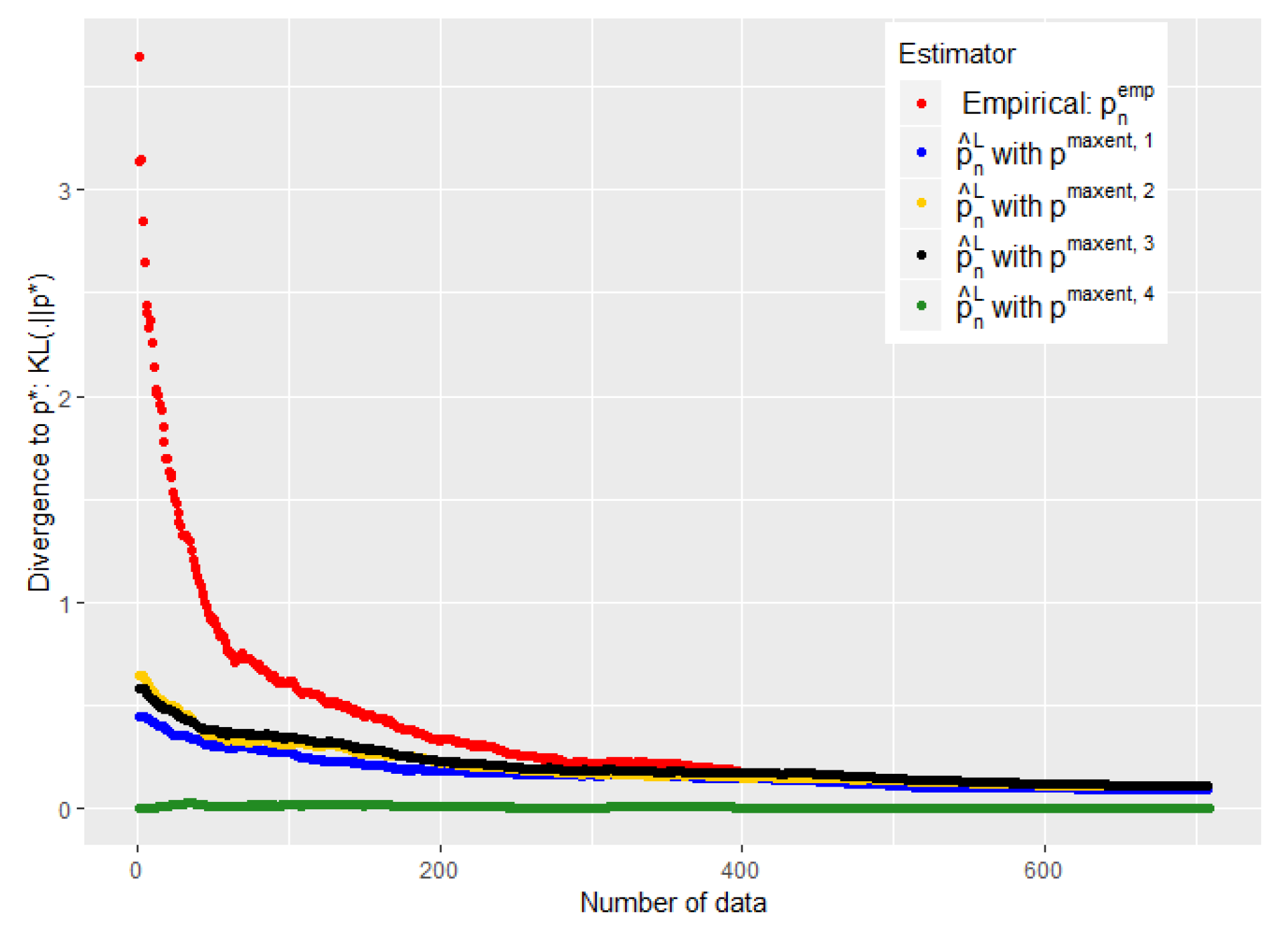

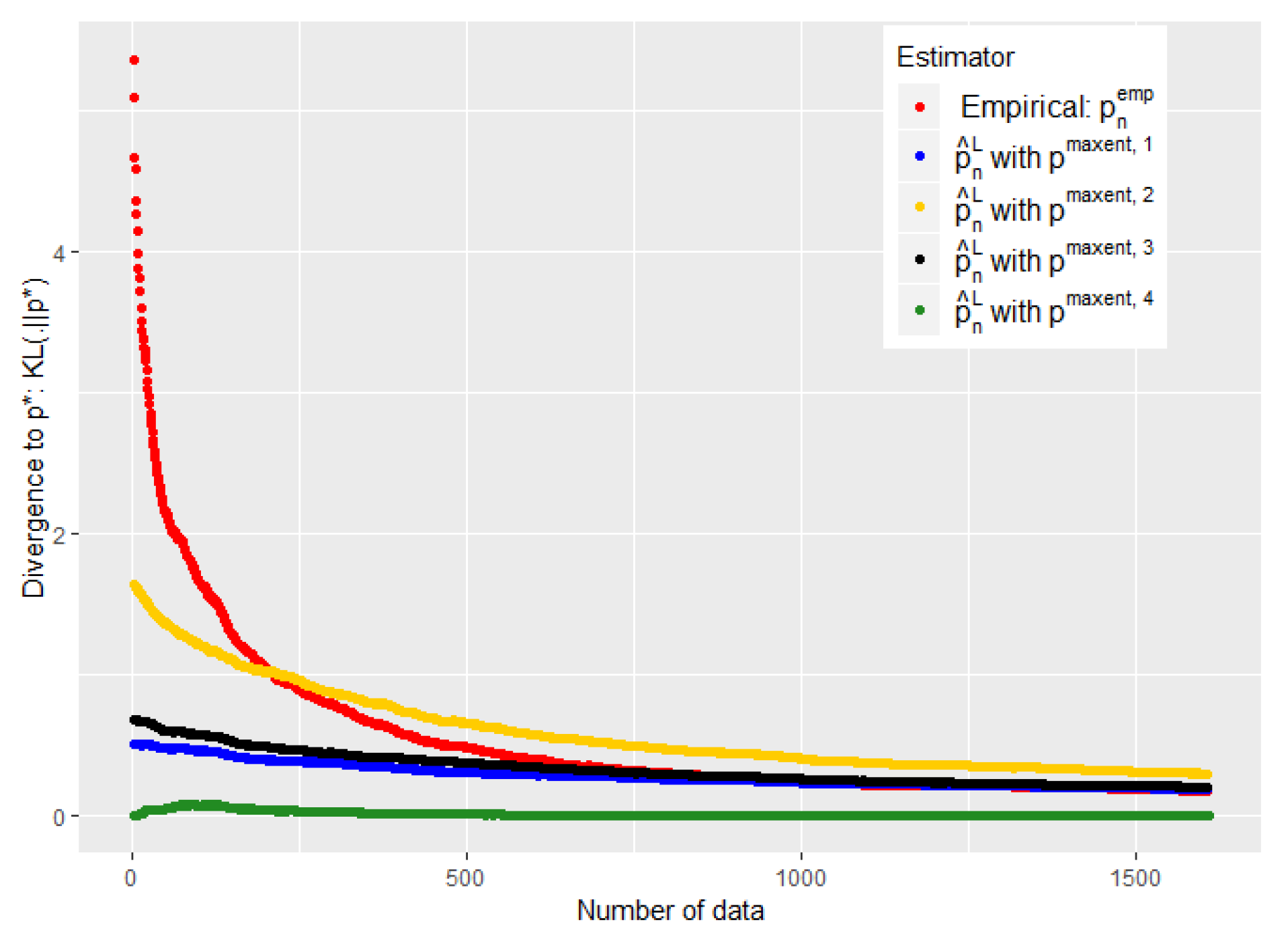

The experiments of

Figure 3,

Figure 4,

Figure 5 and

Figure 6 were conducted on the case of a disease with

typical symptoms and where there are therefore

possible combinations. The experiments of

Figure 7,

Figure 8 and

Figure 9 were conducted on the case of a disease with 9 typical symptoms and where there are therefore

possible combinations.

5.1. General Analysis of the Barycenter Performance and Choice of

The only parameter that we can control is the

used to construct the confidence interval of the concentration of the empirical distribution around the true distribution. Let us recall that for the case of the Kullback centroid of Equation (

12), we set:

where

is defined in Equation (

14).

However, our first numerical experiments show that the choice of

defined by Equation (

18) is a little too conservative (see

Figure 3). We need to converge

faster towards 0 without abandoning our a priori when it is good.

Our experiments suggest taking an

consistent with the concentration proposed in a conjecture of [

30] for Kullback–Leibler divergence:

Note that we added a constant

to the conjecture of [

30]. As for the choice of

, this appears important mainly when

n is small; taking it when sufficiently low avoids an overfitting situation when the number of data is still low, without being harmful when

n is high. We took it equal to

in the experiments of

Figure 3,

Figure 4 and

Figure 5 and

Figure 7, and tried different values in

Figure 6.

The

Figure 5 and

Figure 7 show that such a choice for

makes a good trade-off between expert and empirical data, because we are able to take advantage of these two sources of information when the number of data is small (typically when

), but also to quickly abandon our a priori when it is bad (see the black curves) or to keep it when it is good (the green curves). Eventually, the

Figure 5 and

Figure 7 were performed on problems of 128 and 512, respectively, and this choice of

therefore appears relatively robust with respect to changes in size.

Concerning

, we took, still following the conjectures of [

30]:

The

Figure 4 shows the error made by our barycenter in norm

:

using such an

. We are again able to get rid of a bad a priori relatively quickly to follow the empirical (green curve) while keeping it if it is good (blue curve).

Moreover, we show with these experiments that there is an intermediate regime when we do not have much data, where our estimator is

strictly better than the two individual models (experts and data alone). This is particularly visible when we used the

of the conjecture of [

30] (see

Figure 7 and

Figure 5). It is then empirically evident that mixing these two heterogeneous sources of information, experts and empirical data, can be useful for statistical inference.

One might nevertheless wonder by looking at the experiments of

Figure 3,

Figure 4 and

Figure 5 and

Figure 7 why we propose a mixture of expert and data rather than just a binary choice of the best model. Indeed, both our theoretical and experimental results show that we can lose a constant when making a barycenter between expert and data instead of just a binary choice of the best of the two models. This is particularly true when the number of data tends to grow and when the initial expert a priori was misleading. Nevertheless, this constant is a price that we are willing to pay in order to avoid the undesirable consequences of a binary choice of model.

First, when making a binary choice of model, it is not that easy to determine when we should jump from the expert model to the empirical model. Note also that it would produce an undesirable discontinuity in the function of the data flow. Most importantly, it is crucial in our application that our estimator has the same support as the real distribution. It would be disastrous indeed to consider that a disease is impossible because we never observed a particular combination of symptoms. This remark is somewhat linked to the well-known coupon collector’s problem: How many samples do we need on average to observe all the modalities of the support of a given distribution at least one time? In the equal case (the target distribution is uniform), the average number of samples needed is of the order of

, but it might be much more in the unequal case [

32]. Nevertheless, let us emphasize here once again that we are particularly interested in the moment where we have little data. Then, the empirical distribution alone will never be a really good alternative. We could, of course, consider a Laplace smoothing in order to avoid this difficulty, but this would be nothing more than a less sophisticated maximum entropy approach.

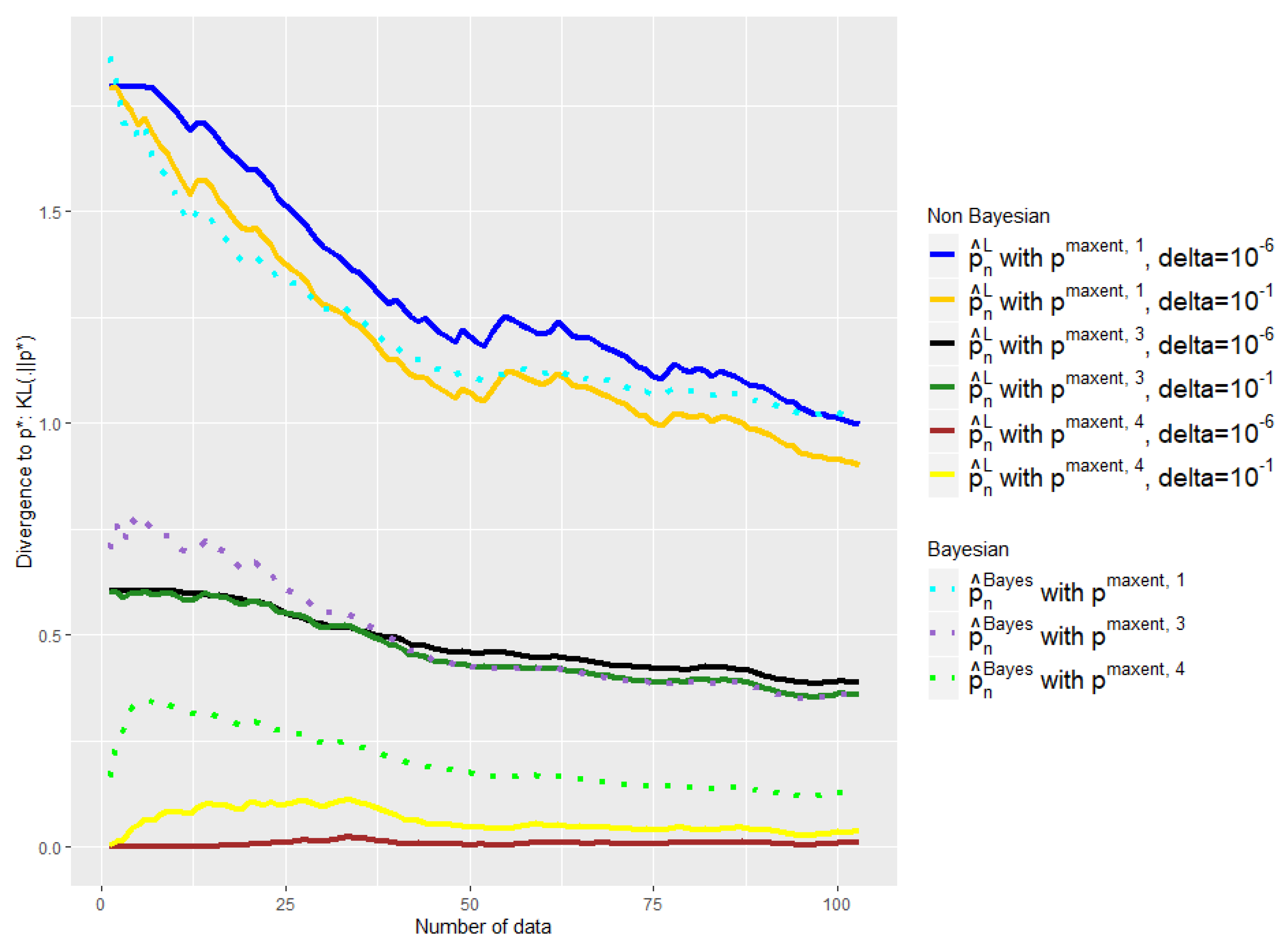

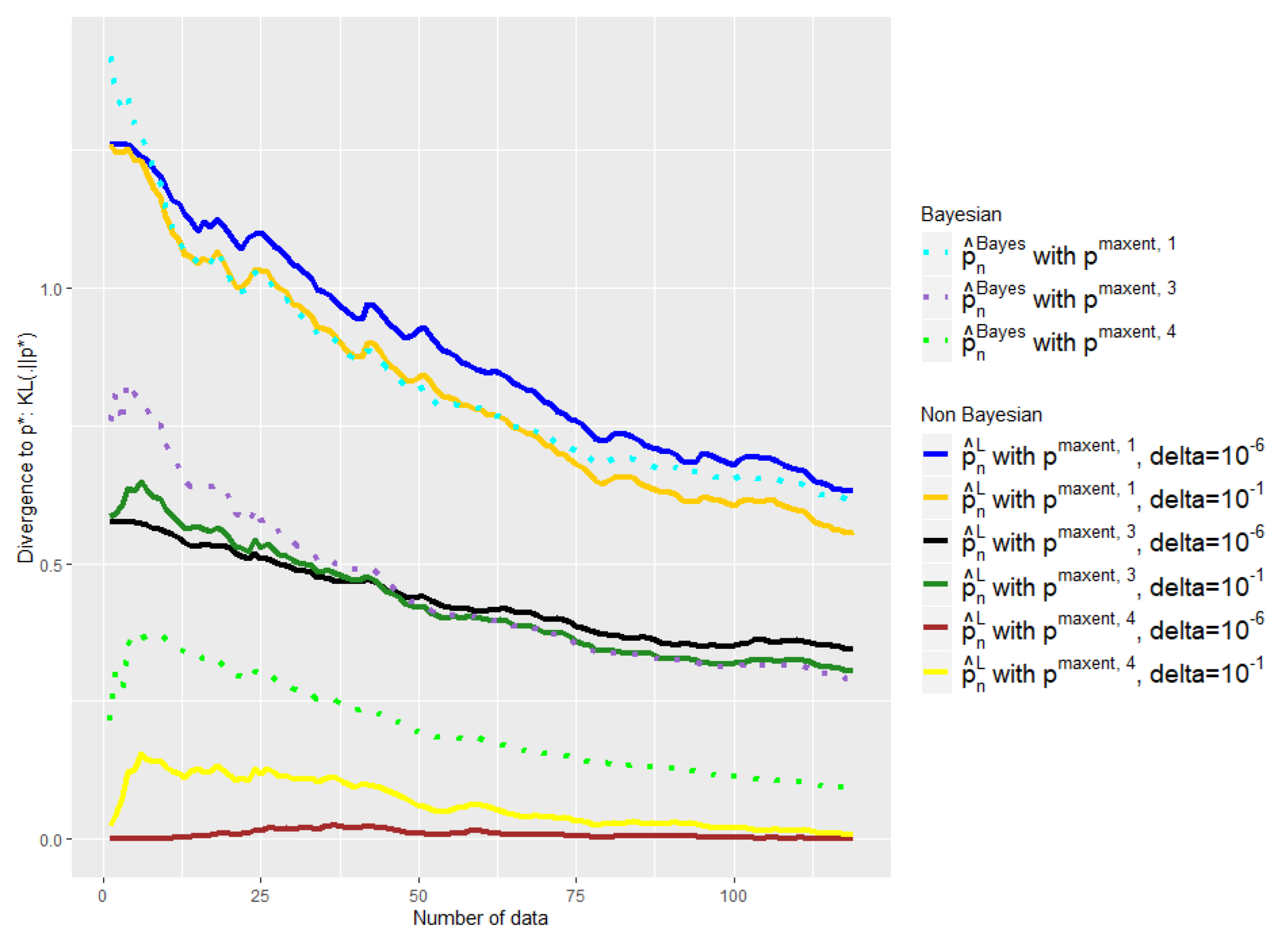

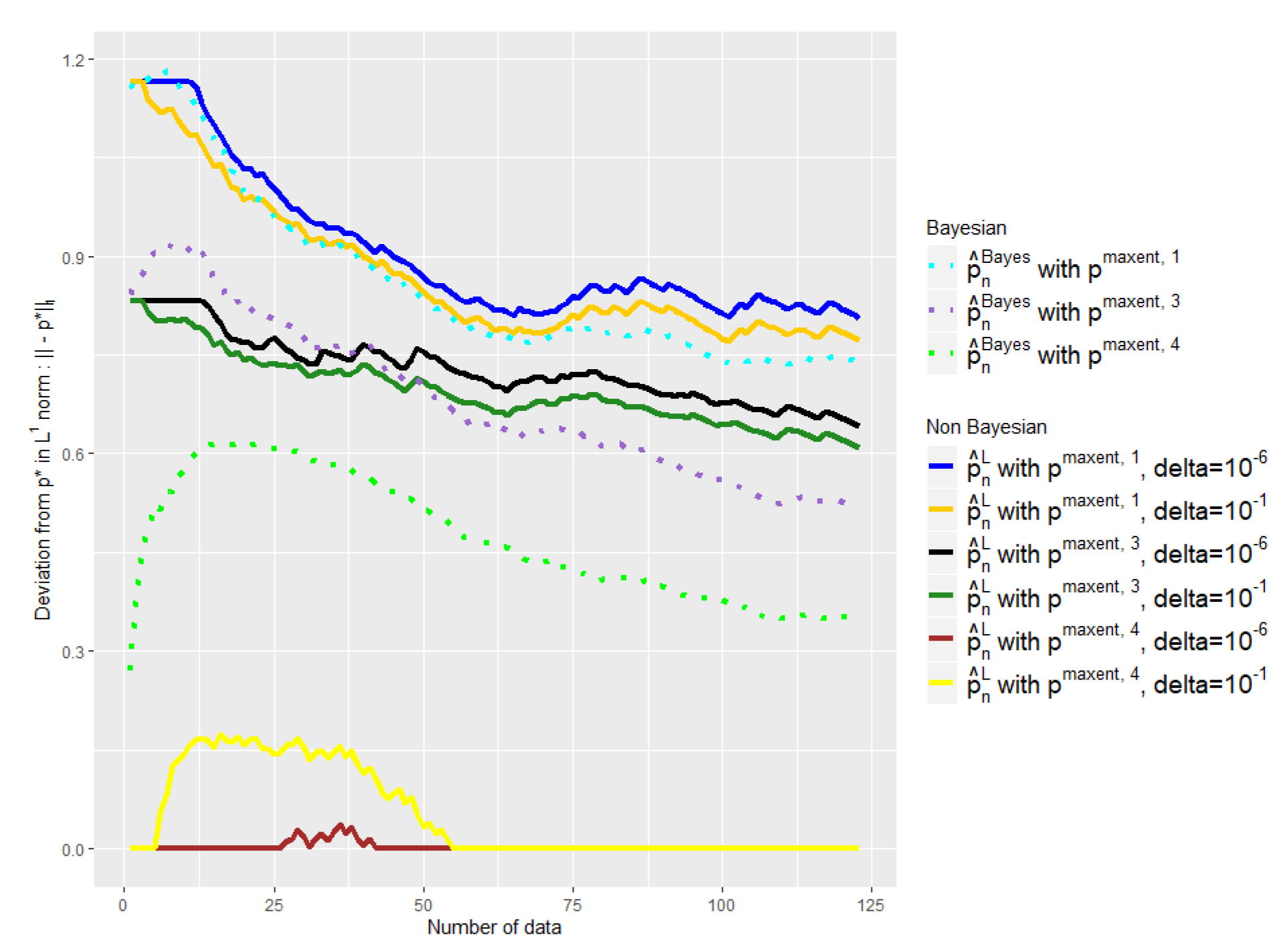

5.2. Comparison with the Baseline and Choice of

In

Figure 6,

Figure 8 and

Figure 9 we compare our approach with the Bayesian mixture of

Section 2.3. We removed the empirical distribution curve for visual reasons, because it is always above the curves presented and thus distorts the representation by stretching the y-axis.

We tried two different values for our only parameter : and . Note that the advantage of our method is that the parameter that we have to choose, , has an intelligible meaning: It refers to the probability that is outside the confidence interval. That is why we do not consider higher values of .

First of all, one can note the influence of the

parameter on

Figure 6,

Figure 8 and

Figure 9. The light yellow curve is a clear example of where the

has been chosen too high,

, giving too much credit to data in comparison with the expert. There is of course a trade-off; to choose a smaller

,

has a cost, as we can see with the black and the dark blue curves, which are a bit too conservative in comparison with the dark green and the dark yellow ones.

Nevertheless, despite the variability of the observed performance of our method as a function of , it leads in any case to a better mixture than the baseline in all our experiments. Our barycenter then outperforms the baseline in this task of finding the right weight to give to data in relation to the expert. This is particularly true when and to a lesser extent when .

Indeed, we aim at finding a mixture that would keep the expert a priori when it is good and quickly move away when it is bad. This is not what the baseline exhibits in our experiments, contrary to our estimator. The light green curve shows clearly that the weight placed on the data in relation to the expert is too high; the beginning of the purple curve also exhibits this behavior.

Once again, the

Figure 8 shows that such observations are robust with respect to changes of dimension, and the

Figure 8 has

symptom combinations; meanwhile, the

Figure 6 has

.

6. Conclusion and Perspectives

In this work, we have presented a way to combine expert knowledge—in the form of marginal probabilities and rules—together with empirical data in order to estimate a given discrete probability distribution. This problem emerged from our application, in which we aimed to learn the probability distribution of the different combinations of symptoms of a given disease. For this objective, we have an initial a priori consisting of the marginal distributions coming from the medical literature; clinical data collected is used as the decision support tool.

The particular form of the prior does not allow us to simply adopt a maximum a posteriori (MAP) approach. The absence of an obvious a priori to model the parameter’s distribution naturally leads us to the idea of maximum entropy: If no model seems more plausible to us than another, then we will choose the least informative.

This idea of maximum entropy brings us back to the works of the 1980s and 1990s, where researchers also aimed to build symptom checkers using marginals. In our work, we go further by gradually integrating empirical data as the algorithm is used.

We are interested in the intermediate regime in which we do not have enough empirical data to do without experts, but have enough to correct them if necessary. Our proposal is to construct our estimator as the distribution closest to the experts’ initial a priori, in the sense of a given dissimilarity measure, that is consistent with the empirical data collected.

We prove, both theoretically and empirically, that our barycenter estimator mixing the two sources of information is always more efficient than the best of the two models (clinical data or experts alone) within a constant.

We have empirically illustrated the effectiveness of the proposed approach by giving an a priori of different quality and incrementally adding empirical data. We have shown that our estimator allows a bad a priori to be abandoned relatively quickly when the inconsistency of the data collected with the initial a priori is observed. At the same time, this same mixture makes it possible to keep the initial a priori if it is good. Moreover, we show with this experiment that, in the intermediate regime, our estimator can be strictly better than the best of the two models (experts and data alone). It empirically confirms the idea that mixing these two heterogeneous sources of information can be profitable in statistical inference.

Future work will concentrate on several refinements, such as the addition of a kernel structure for the construction of the empirical distribution. Indeed, it is possible that there are omissions of some symptoms in the data collected. Then, a kernel approach that would consider closer states that only differ by some presences would capture such a difficulty and make a better use of empirical data. Other dissimilarity measures could also be investigated. Finally, having a true non-parametric Bayesian approach would be very interesting. However, closing the gap between classical Dirichlet priors on the marginal to a single prior on the joint distribution seems to be a real challenge.