Abstract

As product variety is an important feature for modern enterprises, multi-product demand forecasting is essential to support order decision-making and inventory management. However, these well-established forecasting approaches for multi-dimensional time series, such as Vector Autoregression (VAR) or dynamic factor model (DFM), all cannot deal very well with time series with high or ultra-high dimensionality, especially when the time series are short. Considering that besides the demand trends in historical data, that of associated products (including highly correlated ones or ones having significantly causality) can also provide rich information for prediction, we propose new forecasting approaches for multiple products in this study. The demand of associated products is treated as predictors to add in AR model to improve its prediction accuracy. If there are many time series associated with the object, we introduce two schemes to simplify variables to avoid over-fitting. Then procurement data from a grid company in China is applied to test forecasting performance of the proposed approaches. The empirical results reveal that compared with four conventional models, namely single exponential smoothing (SES), autoregression (AR), VAR and DFM respectively, the new approaches perform better in terms of forecasting errors and inventory simulation performance. They can provide more effective guidance for actual operational activities.

1. Introduction

Demand forecasting, a prerequisite for inventory decision-making, plays a vital role in supply chain management. How to improve prediction accuracy has always been the focus of academic circles and enterprises. With the increasingly fierce competition in business, product variety has become an important feature of modern enterprises, which can contribute to meet diverse needs of customers and occupy more market segments [1]. However, many products, where ‘many’ means hundreds or thousands, bring about a new challenge to demand forecasting. Traditional time series algorithms cannot well adapt to the complex high- or even ultra-high dimensionality, resulting in inferior predictive effectiveness in multi-product scenarios.

It is worth noting that the demand of multiple products is not completely isolated, but rather complex relationships exist between them. According to the relevant literature, there are two common association relationships between different products: correlation and Granger causality. For example, the demand for complementary products is highly correlated, having contemporaneous influence with each other [2]. Materials used in engineering projects have a clear sequence, so Granger causality exists in their demand [3]. Obviously, capturing and making full use of such potential information can be helpful to obtain more accurate prediction results. What’s more, when the time series are short, historic trend cannot provide enough information for future demand. Associated relationships can make up for the defects and reduce the bias of prediction. However, as far as we know, there is currently little research taking into account association relationships between products in demand forecasting. In this paper, we incorporate associated relationships among products into the forecasting framework to construct a more accurate prediction approach.

In previous literature, there are mainly three branches of forecasting models for multi-dimensional time series. The first one is a series of statistical methods, represented by the AIRMA model and its extended versions, including VARMA, VARs, BVAR, etc. [4,5,6,7,8,9,10,11,12,13]. They treat multi-dimensional time series as an endogenous system. Target variables are regressed by lag items of all series, considering their relations generally. With the development of econometrics, VARs with different settings are widely applied. For example, [8] proposed five types of VAR and utilized industrial production data from OECD countries to test their forecasting effect. The key defect of VAR is that the number of estimated parameters increases exponentially along with the increase in dimensions. For high-dimensional time series, it is easy to cause overfitting, weakening the prediction ability outside the original sample. Some scholars have assumed that estimated parameters obey a specific prior distribution to reduce their number, i.e., BVAR, applied in macro-economic forecasting [14,15,16,17], market share forecasting [12] and business forecasting [10]. Some other scholars incorporated some unmodeled predictors from exogenous variables to improve original regression models. For example, [18] integrated intra- and inter-category promotional information to construct multistage LASSO regression to forecast the demand of 3222 products. The results are significantly better than the model only using endogenous variables. Unfortunately, these methods can alleviate but not completely solve the problem of overfitting. Accurate results can be obtained only when the time series is long enough.

The second strand of research concentrates on processing high-dimensional time series through the method of dimension reduction, represented by dynamic factor model (DFM). [19] holds the belief that a small number of latent factors are able to interpret fluctuations of observed macroeconomic indices. As long as these potential factors can be portrayed accurately, the task of forecasting is simplified substantially and precise results are achievable. There are many algorithms for the estimation of dynamic factors, including maximum likelihood [19,20,21,22], principle component analysis (PCA) [23,24,25,26,27,28,29,30,31,32], and data shrinking methods [33,34]. As for prediction accuracy, [35] collected relevant literature and confirmed that DFM performs better than single time series prediction models through a meta-analysis method. Reference [36] pointed out that a simple AR model may be better than a DFM model when there is a large structural change in the data. Compared with other high-dimensional time series models like shrinkage forecasts, FDM is also superior [37]. What’s more, [38] introduced U.S. macroeconomic data to compare two forms of DFM estimation methods [39,40]. The results demonstrated that their forecasting precision is not significantly different. However, DFM has the obstacle to tackle sophisticated high-dimensional time series, due to the existence of some isolated series, and the same is true for ultra-high dimensional time series. More specifically, some unique information may be skipped in the process of selecting a limited number of factors, leading to inefficient estimates. If add more dynamic factors, it will fall into the over parameterized problem again.

As the development of artificial intelligence, various machine learning models have been widely used in the area of forecasting, including neural network [41,42,43], support vector machine [44,45,46], nearest neighbor regression [47,48], and so on. They are serious contenders to classical statistical models and form a vital research branch. Different from statistical models, these models construct the dependency between historic data and future values through a black-box and nonlinear process. Reference [49] compared eight types of machine learning models, finding that their rank is unambiguous and does not change much with different features of time series. Reference [50] tested the accuracy of some popular machine learning models. The results demonstrated that their performances were inferior to eight traditional statistical ones. In addition, [50] points out that machine learning models need to become more accurate, reduce their computation load, as well as be less of a black box. Therefore, in this paper, we will continue to optimize the statistics models by associated relationships to get higher accuracy, instead of machine learning models.

By summarizing previous literature related to multi-dimensional time series analysis, we find that these methods all fail to deal with the situation where product dimension is large but time dimension is small. Based on this situation, we innovatively construct an improved forecasting model for the target variable based on its precedent values and endogenous predictors selected from associated relationships. In some scenarios, if there are many time series associated with the object, we adopt two feasible schemes to simplify the variable space. Then we conduct an empirical study by using an actual dataset of a Chinese grid company. The results of forecasting errors and inventory simulation show that new approaches are superior to these conventional time series forecasting models, including SES, AR, VAR and FDM. Generally speaking, the proposed methods have three major advantages. Firstly, the number of estimated parameters is simplified significantly, not depending exponentially on the dimensions. Secondly, each variable has a customized forecasting regression, which can describe isolated time series well. Thirdly, it does not necessary to collect extra data to act as exogenous variables. Therefore, one contribution of our work is that the new approaches innovatively incorporate associated relationships into demand forecasting, getting rid of the transitional dependence on historical data, so it can be applied to forecast short time series with large dimensionality, making up for the void of previous algorithms. In addition, we contribute to solving over-fitting problems, providing a new direction for the subsequent research. Besides the above theoretical implications, the study also has important practical significance. Note that life circles of products especially high-tech products are getting shorter and many new products are born due to the acceleration of technological innovation. Demand forecasting in terms of limited time points is very common and necessary in actual business activities. Therefore, our new approaches have a wide range of application scenarios and can provide more accurate decision-making basis for practitioners.

The remainder of this paper is organized as follows: In Section 2, we give a brief description of two conventional forecasting models for multi-dimensional time series, i.e., VAR and DFM, and then present our new forecasting approaches based on correlation and Granger causality, respectively. Section 3 describes the procurement dataset and analyzes the relationships between the demands for purchased products. An empirical study and its results are discussed in Section 4. Finally, we summarize our conclusions in Section 5.

2. Forecasting Model and Evaluation

In this section, first we review two common models used in multivariable forecasting. Then we detail our new approaches, utilizing correlations and Granger causality among products to improve prediction accuracy respectively. Finally, some indices are introduced to evaluate the forecasting performance.

2.1. VAR and DFM

VAR and DFM are two conventional models used to forecast demand under multi-product scenarios. Both them have specific limitations, struggling with high (or ultra-high) dimensionality and failing to describe evolutions of short time series. Firstly, we introduce the VAR model. Assume that demand of products at time is , t = 1, 2, …, T, where represents the demand of th product at time . The VAR model is as follows:

where is a matrix polynomial with lags in total. is a parameter matrix of th lag and is the lag operator calculated by . is a constant vector, and is a vector of white noisy process, without contemporaneous correlation. According to (1), it is obvious that there are total free parameters need to be estimated in VAR model. With the increase of the number of products (i.e., N), the parameters increase quadratically. Therefore, only time series have moderate dimensionality, i.e., the length of data point is long enough relative to the number of products, can VAR obtain efficient estimates.

As for DFM, it extracts some dynamic factors that can explain the most variation of target variables as predictors, turning the curse of dimensionality into a blessing. However, when the number of products is large, there are some isolated products unavoidably. Common factors cannot explain their demand accurately. Keeping the previous assumptions about , the general DFM model is as follows:

where is a -dimensional column vector, representing values for unobserved factors at time . It can supplant the originally large data. , , and the meanings of these parameters are similar to ’s in the previous part. and are residuals, satisfying some idiosyncratic assumptions. Equation (3) aims to get predictive values of dynamic factors, then applied in Equation (2).

We can see that the quality of factors is the key to determine the accuracy of DFM. As mentioned above, there are many methods to extract factors. Among them, PCA is commonly used in forecasting literature [28]. In PCA estimation, assume that , i.e., original time series are only influenced by contemporaneous factors. Because and are uncorrelated at all lags, we can decompose the covariance matrix of into two parts:

where and are covariance matrices of and respectively. Under the assumptions, the eigenvalues of is and is , the first eigenvalues of are and the remaining eigenvalues are . Therefore, the first principal components of can act as dynamic factors. If is known, the estimator of can be calculated by OLS directly, i.e., . However, is usually unknown for most cases. Similar to regression, the following optimization equation can estimate and :

The first order condition for minimizing (5) with respect to shows that . By substituting this into the objective function, the results demonstrate that equals to the first eigenvectors of , where . More detailed derivation process can refer to [28]. Correspondingly, is the first principal components of . It is the final PCA estimator of dynamic factors in DFM. Finally, let to get predictive values of the original time series.

2.2. The Forecasting Approach Based on Correlation

Associated relationships between multiple products can provide rich information for demand forecasting. Mining effective predictors from associated time series, instead of all series, will be helpful to eliminate some irrelevant information and reduce the number of parameters significantly. Based on this believe, we propose new approaches based on two typical association relationships, namely correlation and Granger causality, respectively. It is proved that they have higher accuracy and can work well even if a wide range of products only have limited data points in the time dimension.

We start with the forecasting approach based on correlation between products. If two products are highly correlated, their demand has specific interactions in the contemporaneous period. For example, if the demand for a product increases, its complementary products will also see a rise in demand at the same time, while its substitutes will experience a decline. Therefore, we utilize such hidden information to modify forecasting algorithms and get more accurate results. There are mainly three steps in the forecasting approach based on correlation. Firstly, find a proper variable subset for each product in terms of correlated relationships. To be specific, calculate the correlation coefficients between the target one and all other products. Those highly correlated to the targeted one, i.e., whose correlation coefficient is more than a certain threshold, constitute the proper variable subset. If a product does not have highly correlated ones, its proper variable subset is empty. Secondly, run autoregressive model (AR) for each product to get originally predictive values of its demand. AR only depends on past values of a time series to forecast its future evolution, ignoring useful information hidden in other correlated time series. Therefore, the third step is that reconstruct forecasting model for products whose proper variable subset is not empty. We can add these proper variables into AR to get final results. It is worth noting that in some cases one product may have many highly correlated products, i.e., many proper variables. If the time point is not enough, adding too many proper variables results in the over-fitted problem, similar to VAR. For example, in this paper, the training sample only contains 36 time points in total. In terms of the principle that the number of estimated parameters should be less than 1/10 of that of observations, there are no more than 3 parameters in the forecasting model. Since the autoregressive process of original time series occupies at least two parameters, only one predictor based on correlation can be selected. Therefore, we propose two feasible schemes to control the scale of the forecasting model as follows:

- Scheme (I): Only select the product having the highest correlation with the object from the proper variable subset as a predictor added in final model.

- Scheme (II): Extract the first principle component of all elements in the proper variable subset as a predictor added in the final model.

More formally, Let represent time series of demand for all products during the T periods, and then the correlation matrix of is as follows:

where is the th row of , i.e., the sophistic demand series of th product. According to , we can pick up products highly correlated to , making up for the proper variable subset for th product. The autoregression can get originally predictive demand for th product in th period. is a lag parameter, determined by the Akaike information criterion (AIC). Based on this, is a matrix, containing original prediction values of all proper variables for th product. Its rows represent time dimension and columns correspond to products, ranked from left to right in terms of their correlation coefficients with in descending order. Assume that is the effective predictor selected from to improve forecasting. Because it corresponds to prediction values, the first time point is missed. The final model is as follows:

Scheme (I) suggests that , where is the first column of , the product having highest correlation with the th one. According to Scheme (II), is the first principle component of . The procedure of calculating principle components is as follows: (i) computing the covariance matrix of , (ii) determining eigenvalues and eigenvectors , , …, of , where , (iii) getting the first principle component .

2.3. The Forecasting Approach Based on Granger Causality

If Granger causality exists between two products, it means that historic observations of one product can explain the future demand of another product (there is a time lag between them). This situation often occurs when the procurement of products has a stable sequence, such as material procurement in engineering projects. The idea of the forecasting approach based on Granger causality is similar to the former one based on correlation, also consisting of three steps. Firstly, find the proper variable subset for every product by doing Granger causal relation test. When the p-value of Granger test satisfies a critical condition, the corresponding product can join the proper variable subset of the target one. Secondly, run AR for every product to get its originally predictive demand. Finally, select effective predictors from the proper variable subset to reconstruct the forecasting model, if a product’s proper variable subset is not empty. Similarly, there are also two schemes to prevent excessive parameters:

- Scheme (I): Only select the product having lowest p-value of Granger test with the object from the proper variable subset as a predictor added in final model.

- Scheme (II): Extract the first principle component of all elements in the proper variable subset as a predictor added in the final model.

Assume is the p-value matrix of Granger test for considering lags, where is determined by AIC. The rows of describe Granger results while columns are Granger causes. According to , we can construct the proper variable subset for the th product, expressed by a matrix . The granger cause with the lowest p-value of the th product arranges in the first column of , and so on. Let represents the effective predictor extracted from . According to Scheme (I) and Scheme (II), and is the first principle component of respectively. The final forecasting model based on Granger causality is as follows:

2.4. The Forecast Accuracy Measures

According to the previous literature, there are two major methods to evaluate the performance for demand forecasting approaches: forecasting errors and inventory performance, from the perspective of forecasting accuracy and actual inventory management, respectively. It is worth noting that the dataset used in this paper has intermittent demand series: the demand of some products is zero in some periods. Therefore, we adopt absolute scaled error (ASE) to measure forecasting errors. It can overcome the drawback of infinities caused by zero division [51]. The formula is as follows:

Then mean absolute scaled error is . A forecasting approach with lower MASE means that it is more accurate during the whole forecasting period in general. Therefore, we can compare different approaches according to their values of MASE. In addition, we also apply relative error (RE) to measure the accuracy of forecasters, i.e., calculating ratios of their ASE to that of a baseline model. In this paper, we set simple exponential smoothing (SES) model as the benchmark, which can refer to [52]. For multi-period demand forecasting, the overall judgement of RE is usually based on the form of geometric mean instead of arithmetic mean [51,53]. Geometric mean relative absolute scaled error is expressed as , where is errors of the baseline model.

In fact, optimizing forecasting accuracy aims to provide better guidance for order strategy and inventory management, finally reducing inventory costs and improving managerial efficiency. How forecasting results influence inventory performance is also a concern for scholars and enterprises. A lot of studies assess forecasts by means of inventory simulations [54,55,56,57,58]. Therefore, we also introduce inventory performance to evaluate forecasting approaches. The order-up-to-level policy, commonly used in practice, is adopted to control inventory simulation. We set the inventory review period as one month, consistent with the prediction period. The order-up-to level S is , where is the predictive demand during the lead time (one month), SS is the safety stock related to the desired service level. At the beginning of each period, check the holding stock H. If H is below S, place an order with the ordering quantity H-S. Otherwise, nothing needs to be done. When face out-of-stocks, the demand will be serviced in the next period. To initialize the simulation system, assume that have full stock at the beginning, i.e., H=S. One index of inventory performance is total inventory costs, consisting of two parts: shortage costs and holding costs, i.e., total inventory costs = unit total cost × (mean inventory per month × + mean stock-out per month × ) [52]. The cost parameters and reflect the trade-off between stock-holding and out-of-stock. means that costs of out stocks are more expensive. When , by contrast, unit stock-holding costs more dollars. In addition, another index is the inventory ratio. A smaller inventory ratio means higher inventory efficiency. It is calculated by the following expression:

3. Data Description

3.1. Data and Pretreatment

In this paper, we obtained a real dataset of material procurement from a large grid company in China over the span from June in 2012 to April in 2016, comprising a total of 47 months. To minimize forecasting errors, we removed trend and seasonal components from the time series, following [38,45].

Because it takes at least three years to estimate seasonal components, we treat the first 36 months as a training set and the remaining 11 months as a test set to evaluate the out-of-sample prediction ability of forecasting approaches. After removing trend and seasonal effect, results of the unit root test suggest that all processed variables are stationary, the subsequent forecasting steps can continue.

Purchased products are mainly infrastructure materials, consisting of cables, transformers, fittings, etc. Note that the demand is intermittent in the dataset. A few products even have zero demand at more than 2/3 of all time points. These products are not suitable to do forecasting and the data have already be cleaned up. Besides, products without procurement in the first 12 months and the last 12 months are also not considered. In total, there are 338 products left. According to product characteristics, they can be aggregated at different levels, forming a hierarchical structure, which is: Family > Category > Subcategory > Product, from the top level to the bottom level. As shown in Table 1, at the most aggregated level, there are two families, namely primary equipment and equipment material respectively, which can be further disaggregated into 15 categories at level 2 and into 59 subcategories at level 3. Besides, it is obvious that the quantity of products and the value of procurement vary significantly within each categories (subcategories).

Table 1.

Description of hierarchical structure and procurement scale of products.

3.2. Correlation Analysis

In this sub-section, we compute correlations coefficients between products at different aggregated levels to make clear their dependencies structure, supporting the subsequent forecasting. At the top level, primary equipment and equipment material are highly correlated. The correlation coefficient of the two families is 0.7784.

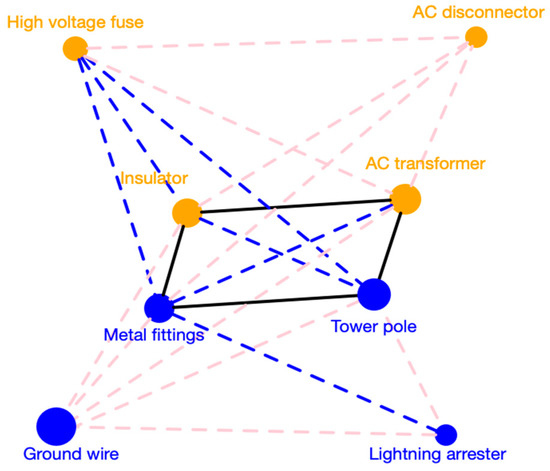

As for 15 categories, their correlations are shown in Figure 1. A pink dotted line indicates that the correlation value of two linked nodes is in the interval . Similarly, a blue one corresponds to , while a black line means more than 0.8. Seen from Figure 1, there exists high correlations among 8 categories, including AC transformer, insulator, metal fittings, tower pole, AC disconnector, lightning arrester, high-voltage fuse, and wire & ground wire, especially the first four categories. In addition, the correlation network is clustered by a method proposed by [59] and nodes with same colors in Figure 1 represent that they are clustered in a same group. To be specific, AC transformer, AC disconnector, high-voltage fuse and insulator are in G1, while lightning arrester, tower pole, ground wire and metal fittings are in G2, which are mainly consumed in line laying. According to Table 2, a series of centrality indices of metal fittings are almost the biggest in the correlation network, reflecting that its demand has high correlations with demand of all other categories.

Figure 1.

Correlation relationships of categories at level 2.

Table 2.

Centrality indices of categories in the correlation network.

3.3. Granger Causality Analysis

We applied the Granger causal relation test to evaluate the relationship between primary equipment and equipment materials. The result shows that they have no statistically significant causality. In other words, demand of primary equipment does not Granger cause that of equipment materials, with p = 0.087. Furthermore, the reverse direction is also not significant, with p = 0.201.

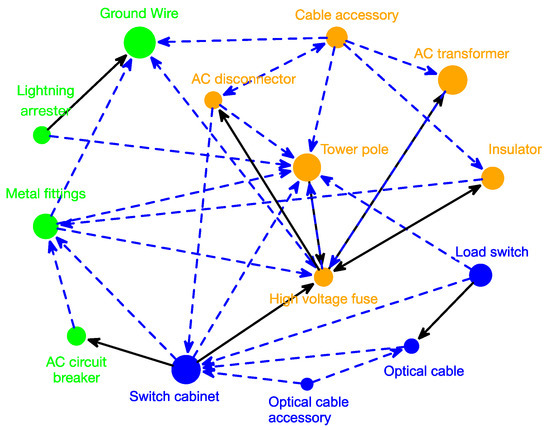

In addition, we evaluate the Granger causality relationships among 15 categories at level 2 and visualize the network in Figure 2. Directions of arrows are from Granger causes to Granger results. When the p-value of Granger test locates in and , the arrow is drawn by a blue dotted line and a black line respectively. Figure 2 shows that the demands of 14 categories have a significant causal influence on each other, except for cable. It is because cables are widely used in power grid construction, not depending on other products. According to the clustering results of causality network, three groups can be found. G1 contains optical cable accessory, optical cable, load switch and switch cabinet, and procurement of first three categories Granger results in that of switch cabinet. G2 consists of cable accessories, AC transformers, insulator, high-voltage fuse, tower pole and AC disconnector, similar to G1 in correlation analysis, existing complex causality relationships. Overall, high-voltage fuse and tower pole locate at core position, which also have causality relationships with out-group products. Lightning arrester, metal fittings, AC circuit breaker and ground wire are clustered in G3, similar to G2 in correlation analysis. The significant direction of causality from the first three categories to wire & ground wire reflects that a growth of their purchase will increase demand for wire & ground wire later.

Figure 2.

Granger causality relationships of categories at level 2.

To further illustrate Granger causality between categories, we calculate some indices, listed in Table 3. High-voltage fuses and tower poles display the biggest values of in-degree, indicating that their procurement can be greatly explained by lag demand of other categories, while referring to out-degree, high-voltage fuse also ranks first, as well as cable accessory, reflecting that they are strong predictor for follow-up demand of other categories. Besides, switch cabinet, tower pole and high-voltage fuse, with largest centrality indices, are the core nodes in the Granger causality network. what’s more, categories in G1 have closer relationship, demonstrated by their higher clustering coefficients.

Table 3.

Centrality indices of categories in the causality network.

4. Empirical Analysis

4.1. Experimental Setup

In this section, we generate demand forecasting for the above dataset by our new approaches and compare their forecasting performance with four traditional time series models, namely SES, AR, VAR, and DFM, respectively. Except for the enterprise level which only has a single time series, we applied these forecasting approaches on the other four levels of the product hierarchy. Because the number of products varies greatly at the different levels, from several to hundreds, it helps us to investigate whether the new approaches can deal with different data dimensions well. Nevertheless, DFM is not necessary to do forecasting at the family level. VAR ignores the product level and the subcategory level due to a large number of products at the two levels.

An essential step of our new methods is to set up the criteria for constructing proper variable sets, in other words, to define the critical conditions of high correlation and significant Granger causality. If the critical conditions are too strict, most products may not find proper variables, and then their forecasting demand cannot be corrected by association relationships. Conversely, an excessively loose condition will bring too much disturbing information in proper variable sets, even impairing originally forecasting accuracy. Therefore, a rational critical condition is a key point to obtain satisfying prediction results. Considering that in the forecasting approach based on correlation, originally forecasting values of AR are used as explanatory variables, which may further increase uncertainty, a stricter selection criterion is necessary. We set the correlation coefficient at more than 0.513 as the threshold condition preliminarily. The significance level of the critical value is 0.001 in terms of the size of the training sample. As for the Granger relationship, the standard is that the p-value of Granger test is no less than 0.1. Based on the above settings, we get proper variable sets and then do final demand forecasting. What’s more, to further investigate the influence of critical conditions on forecasting performance, we set the critical correlation coefficient as 0.6, 0.7, 0.8, 0.9 as well as the critical Granger significant level as 0.05 and 0.01 separately to repeat prediction process.

Finally, we evaluate the forecasting performance for these models. To begin with we calculate their absolute errors and relative errors in terms of the equations mentioned before. The approach with a smaller average error is considered to be more accurate. Then we introduce t-test to verify whether forecasting errors of our new approaches exist statistically significant differences with the baseline model SES. In addition, demand prediction aims to guide subsequent activities including purchase and inventory management. More accurate forecasting may be helpful to avoid high inventory levels or out of stock, reducing the cost loss of enterprises naturally. Therefore, it is common to assess forecasting approaches by simulating the process of inventory management. we also do inventory simulation for 338 products according to forecasting results of different approaches, to compare their performance from the perspective of inventory management.

4.2. Results and Analysis

4.2.1. Forecasting Accuracy Analysis

Table 4 presents mean values of absolute errors and relative errors for the proposed approaches, as well as four conventional models. CI and CII refer to the approach based on correlations adopting scheme (I) and scheme (II) to control model size respectively. Similarly, GI and GII refer to the approach based on Granger causality and the Roman numerals represent different schemes. The brackets indicate the critical conditions to construct proper variable sets. The approach based on correlations requires the correlation coefficient greater than 0.513. As for the approach based on Granger causality, the p-value of Granger test should be less than 0.1.

Table 4.

Forecasting errors of six models at four aggregated levels.

We can see from Table 4 that for the approaches based on correlations, evaluation results are consistent whether base on MASE or GMRASE. CII performs best among the six models, having a minimum deviation from real demand. Conversely, forecasting accuracy of CI is low, especially at more disaggregated levels. As the dimension of products increase, forecasting errors of CI grows rapidly, even inferior to the original predictive results (AR). This reflects that the forecasting values of the most correlated time series distort the effect of original predictors, leading to lower accuracy, not aligned with our theoretical expectations. However, the first principal component is equivalent to weighted average of all highly correlated time series, which not only contains more effective information but also offsets errors of different correlated series. Therefore, CII can get more accurate results. As for the two types of models based on Granger causality, their accuracy is the same basically at all aggregated levels, superior to that of VAR and DFM. When the product dimension is large, Granger II is more advantageous.

We set different critical values to investigate their influence on accuracy of models. For the approach based on correlation, we set the critical values separately as 0.6, 0.7, 0.8 and 0.9. Table 5 shows the forecasting errors in each situation. With the increase of the critical value, CI becomes more precise while CII is absolutely opposite, witnessing an upward tendency in errors. However, even if the critical value is equal to 0.9, CI performs still worse than AR, let alone CII. For the approach based on Granger causality, forecasting results in terms of different critical conditions are displayed in Table 6. We can see that forecasting errors of GII is always lower than that of GI. When the critical value equal to 0.01, GII has the highest accuracy. In conclusion, scheme (II) can help the approach based on association relationships to get more accurate forecasting results, better than these conventional models. Besides, the critical value should be set in a rationally high level, to ensure that only highly associated time series can be selected to eliminate irrelevant information and the proper variable subset has enough members too to offset errors. In this way, the approaches based on associated relationships can be the most effective.

Table 5.

Forecasting errors of the approaches based on correlation under different critical conditions.

Table 6.

Forecasting errors of the approaches based on Granger causality under different critical conditions.

To further validate the accuracy of models, we treat the SES model as the benchmark to do t-tests for all other models. A negative test statistic means that average ASE of the model is smaller than that of the baseline model, i.e., more accurate than SES model. The smaller the negative t value is, the higher the significance level is. As shown in Table 7, the t-test results for absolute errors and relative errors are not consistent. In terms of absolute errors, at the three levels (production, subcategory and category), forecasting errors of GI and GII are all significantly smaller than SES (p-value < 0.01), regardless of the critical values. The models based on correlations outperform SES only when adopt scheme (II) to collect predictors. When it comes to relative errors, CI is still significant superior to the benchmark model only at the category level. GI and GII become insignificant in most cases. In conclusion, significance levels of t-value for relative errors are lower remarkably compared to absolute errors. However, CII still has significantly lower errors, performing best among all models.

Table 7.

T-test of forecasting errors for different approaches at three aggregated levels.

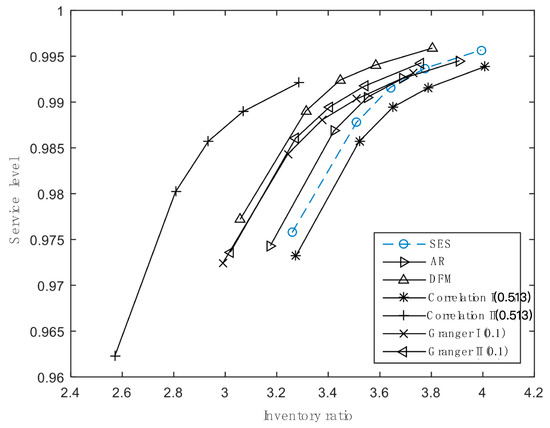

4.2.2. Inventory Performance Analysis

In our study, we set five desired service levels and three kinds of cost parameters to do inventory simulations for 338 products at the product level. Total inventory costs are shown in Table 8 and Figure 3 illustrates inventory ratios in different scenarios. When satisfying the same service level, CII enjoys the lowest total costs and the inventory ratio and, as expected, CI spends more costs and maintains higher inventory ratios than the original approach (AR) as well as SES and DFM. As for the two approaches based on Granger causality, the results are inconsistent with that of forecasting errors. GI has fewer stock costs and a lower inventory ratio than GII, although GII’s forecast accuracy is higher. In addition, compared with AR and DFM, they need higher inventory levels to realize a certain service level.

Table 8.

Total inventory costs for different approaches considered satisfying various service levels.

Figure 3.

Inventory ratios for different approaches satisfying various service levels.

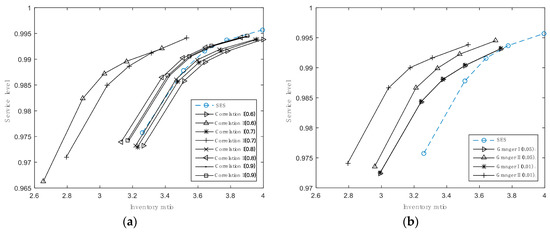

In addition, we also analyze the inventory performance of the proposed approaches satisfying different threshold values. As shown in Table 9, when the critical value rises, total inventory costs creep up if using CII to forecast demand, and the inventory ratios also reach to the top value (in Figure 4a). CI benefits from high critical values, bringing improvement of inventory performance, but never surpasses that of AR.

Table 9.

Total inventory costs for the approach based on correlation with different critical values.

Figure 4.

Inventory ratios for approaches with different critical values satisfying various service levels. (a) is for the approach based on correlation and (b) is for the approach based on Granger causality.

For approaches based on Granger causality, their inventory performance is displayed in Table 10 and Figure 4b. We can see that total costs and inventory ratios of GI basically remain the same, regardless of the change of the critical value. GII is more sensitive to the critical value in comparison. A stricter condition to select time series existing Granger causality is helpful to optimize inventory performance for a given service level. As a whole, CII with the critical value equal to 0.513 is the most accurate forecasting model, which can help to reduce inventory costs and improve the service level to the most extent, followed by GII with the critical value equal to 0.01. Therefore, by setting rational thresholds and choosing appropriate methods to mine predictors, the approaches based on associated relationships can achieve higher forecasting accuracy, thereby improving inventory performance. It is helpful to provide effective guidance for actual inventory management activities.

Table 10.

Total inventory costs for the approach based on Granger causality with different critical values.

5. Conclusions

Along with the trend of technological advance, a rapid increase in product diversity and shortening of product life cycles have become important features for enterprises. This means that practitioners need to use limited historical demand to forecast the future, leading to the failure of traditional forecasting approaches. Fortunately, potential relationships between demand time series of multiple products can provide abundant information to improve forecasting accuracy. Therefore, we proposed an improved approach, whose core idea is utilizing the demand of associated products (including highly correlated ones or ones having significantly causality) as predictors to add in AR model to improve its prediction accuracy. Considering that time series may be short, we introduce two feasible schemes to simplify variables to avoid over-fitting. Then monthly procurement data from a Chinese grid company is used to test the forecasting ability of the proposed approaches, as well as four conventional time series approaches SES, AR, VAR, and FDM.

We adopt two types of indicators including forecasting errors and inventory performance to compare these approaches. There are three main findings. Firstly, our new approaches can perform better than those conventional approaches, especially when the product dimensionality is large. Among them, CII with the critical value equal to 0.513 has the best forecasting performance, enjoying the lowest forecasting errors, total inventory costs and inventory ratio, followed by GII with the critical value equal to 0.01. Secondly, Scheme (II) is more effective for the approaches based on associated relationships to achieve higher accuracy, compared with Scheme (I) because it can refine more information and set off the errors of different time series. Finally, it is vital to set a rational threshold condition to select proper variables, which can help to get ideal prediction results. The condition should make sure that most objects have enough proper variables to improve prediction and while the irrelative information is also as less as possible.

However, due to the limitations of the real dataset, we can only pick up one predictor from the associated relationships, so we cannot discuss the influence of the number of selected predictors on the forecasting accuracy. In the future, we can extend our research from the following aspects. Firstly, we can properly test the new approaches through a large wide of diverse datasets, and analyze the most proper number of predictors that should be added in final forecasting models. Secondly, try more methods to dig for predictors from proper variable sets, not limited to PCA. Then compare their prediction performance to find a better one. In addition, “order-up-to level” is the only inventory strategy used to do inventory simulations in this empirical study. Therefore, we can introduce other widely applied strategies into inventory simulations and explore whether the approaches based on associated relationships are appropriate for different inventory strategies.

Author Contributions

Conceptualization, M.L.; methodology, S.L. and S.Y.; software, S.L.; validation, S.Y.; formal analysis, S.Y. and S.L.; investigation, S.L.; resources, S.L.; data curation, S.L. and S.Y.; writing—original draft preparation, S.Y.; writing—review and editing, S.Y.; visualization, S.L. and S.Y.; supervision, M.L.; project administration, M.L.; funding acquisition, M.L.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- ElMaraghy, H.; Schuh, G.; ElMaraghy, W.; Piller, F.; Schönsleben, P.; Tseng, M.; Bernard, A. Product variety management. CIRP Ann.-Manuf. Tech. 2013, 62, 629–652. [Google Scholar] [CrossRef]

- Ganesh, M.; Raghunathan, S.; Rajendran, C. The value of information sharing in a multi-product, multi-level supply chain: Impact of product substitution, demand correlation, and partial information sharing. Decis Support Syst. 2014, 58, 79–94. [Google Scholar] [CrossRef]

- Przymus, P.; Hmamouche, Y.; Casali, A.; Lakhal, L. Improving multivariate time series forecasting with random walks with restarts on causality graphs. In Proceedings of the IEEE International Conference on Data Mining Workshops, New Orleans, LA, USA, 18–21 November 2017; pp. 924–931. [Google Scholar]

- Riise, T.; Tjozstheim, D. Theory and practice of multivariate ARMA forecasting. J. Forecast. 1984, 3, 309–317. [Google Scholar] [CrossRef]

- Cholette, P.A.; Lamy, R. Mutivariate ARIMA forecasting of irregular time series. Int. J. Forecast. 1986, 2, 201–216. [Google Scholar] [CrossRef]

- Litterman, R.B. Forecasting with Bayesian vector autoregressions—five years of experience. J. Bus. Econ. Stat. 1986, 4, 25–38. [Google Scholar]

- Hafer, R.W.; Sheehan, R.G. The sensitivity of VAR forecasts to alternative lag structures. Int. J. Forecast. 1989, 5, 399–408. [Google Scholar] [CrossRef]

- Funke, M. Assessing the forecasting accuracy of monthly vector autoregressive models: The case of five OECD countries. Int. J. Forecast. 1990, 6, 363–378. [Google Scholar] [CrossRef]

- De Gooijer, J.G.; Klein, A. On the cumulated multi-step-ahead predictions of vector autoregressive moving average processes. Int. J. Forecast. 1992, 7, 501–513. [Google Scholar] [CrossRef]

- Spencer, D.E. Developing a Bayesian vector autoregression forecasting model. Int. J. Forecast. 1993, 9, 407–421. [Google Scholar] [CrossRef]

- Dhrymes, P.J.; Thomakos, D.D. Structural VAR, MARMA and open economy models. Int. J. Forecast. 1998, 14, 187–198. [Google Scholar] [CrossRef][Green Version]

- Ramos, F.F.R. Forecasts of market shares from VAR and BVAR models: A comparison of their accuracy. Int. J. Forecast. 2003, 19, 95–110. [Google Scholar] [CrossRef]

- Wieringa, J.E.; Horváth, C. Computing level-impulse responses of log-specified VAR systems. Int. J. Forecast. 2005, 21, 279–289. [Google Scholar] [CrossRef]

- Ashley, R. On the relative worth of recent macroeconomic forecasts. Int. J. Forecast. 1988, 4, 363–376. [Google Scholar] [CrossRef]

- Artis, M.J.; Zhang, W. BVAR forecasts for the G-7. Int. J. Forecast. 1990, 6, 349–362. [Google Scholar] [CrossRef]

- Holden, K.; Broomhead, A. An examination of vector autoregressive forecasts for the UK economy. Int. J. Forecast. 1990, 6, 11–23. [Google Scholar] [CrossRef]

- Brave, S.A.; Butters, R.A.; Justiniano, A. Forecasting economic activity with mixed frequency BVARs. Int. J. Forecast. 2019, 35, 1692–1707. [Google Scholar] [CrossRef]

- Ma, S.; Fildes, R.; Huang, T. Demand forecasting with high dimensional data: The case of SKU retail sales forecasting with intra- and inter-category promotional information. Eur. J. Oper. Res. 2016, 249, 245–257. [Google Scholar] [CrossRef]

- Sargent, T.J.; Sims, C.A. Business cycle modeling without pretending to have too much a priori economic theory. New Meth. J. Bus. Cycle Res. 1977, 1, 145–168. [Google Scholar]

- Geweke, J. The dynamic factor analysis of economic time series. In Latent Variables in Socio-Economic Models; North-Holland Publishing Company: Amsterdam, The Netherlands, 1977; pp. 365–383. [Google Scholar]

- Engle, R.; Watson, M. A one-factor multivariate time series model of metropolitan wage rates. J. Am. Stat. Assoc. 1981, 76, 774–781. [Google Scholar] [CrossRef]

- Stock, J.H.; Watson, M.W. New indexes of coincident and leading economic indicators. NBER Macroecon. Annu. 1989, 4, 351–394. [Google Scholar] [CrossRef]

- Brillinger, D.R. Time Series: Data Analysis and Theory; Holden-Day: San Francisco, CA, USA, 1981. [Google Scholar]

- Connor, G.; Korajczyk, R.A. Performance measurement with the arbitrage pricing theory: A new framework for analysis. J. Financ. Econ. 1986, 15, 373–394. [Google Scholar] [CrossRef]

- Ding, A.A.; Hwang, J.T.G. Prediction intervals, factor analysis models, and high-dimensional empirical linear prediction. J. Am. Stat. Assoc. 1999, 94, 446–455. [Google Scholar] [CrossRef]

- Forni, M.; Hallin, M.; Lippi, M.; Reichlin, L. The generalized dynamic factor model consistency and rates. J. Econom. 2004, 119, 231–255. [Google Scholar] [CrossRef]

- Bai, J. Inferential theory for factor models of large dimensions. Econometrica 2003, 71, 135–171. [Google Scholar] [CrossRef]

- Stock, J.H.; Watson, M.W. Forecasting with many predictors. In Handbook of Economic Forecasting; Elsevier: Amsterdam, The Netherlands, 2006; Chapter 10; pp. 515–554. [Google Scholar]

- Boivin, J.; Ng, S. Are more data always better for factor analysis? J. Econom. 2006, 132, 169–194. [Google Scholar] [CrossRef]

- Otrok, C.; Whiteman, C.H. Bayesian leading indicators: Measuring and predicting economic conditions in Iowa. Int. Econ. Rev. 1998, 39, 997–1014. [Google Scholar] [CrossRef]

- Kim, C.J.; Nelson, C.R. Business cycle turning points, a new coincident index, and tests of duration dependence based on a dynamic factor model with regime switching. Rev. Econ. Stat. 1998, 80, 188–201. [Google Scholar] [CrossRef]

- Kose, M.A.; Otrok, C.; Whiteman, C.H. International business cycles: World, region, and country-specific factors. Am. Econ. Rev. 2003, 93, 1216–1239. [Google Scholar] [CrossRef]

- Cepni, O.; Guney, I.E.; Swanson, N.R. Forecasting and nowcasting emerging market GDP growth rates: The role of latent global economic policy uncertainty and macroeconomic data surprise factors. J. Forecast. 2018, 1, 1–19. [Google Scholar] [CrossRef]

- Kim, H.H.; Swanson, N.R. Mining big data using parsimonious factor, machine learning, variable selection and shrinkage methods. Int. J. Forecast. 2018, 34, 339–354. [Google Scholar] [CrossRef]

- Eickmeier, S.; Ziegler, C. How successful are dynamic factor models at forecasting output and inflation? A meta-analytic approach. J. Forecast. 2008, 27, 237–265. [Google Scholar] [CrossRef]

- Stock, J.H.; Watson, M.W. Generalized shrinkage methods for forecasting using many predictors. J. Bus. Econ. Stat. 2012, 30, 481–493. [Google Scholar] [CrossRef]

- D’Agostino, A.; Giannone, D. Comparing alternative predictors based on large-panel factor models. Oxf. Bullet. Econom. Stat. 2012, 74, 306–326. [Google Scholar] [CrossRef]

- Stock, J.H.; Watson, M.W. Forecasting using principal components from a large number of predictors. J. Am. Stat. Assoc. 2002, 97, 1167–1179. [Google Scholar] [CrossRef]

- Forni, M.; Hallin, M.; Reichlin, L.L. The Generalized Dynamic Factor Model: One-Sided Estimation and Forecasting. J. Am. Stat. Assoc. 2005, 100, 830–840. [Google Scholar] [CrossRef]

- Khandelwal, I.; Adhikari, R.; Verma, G. Time series forecasting using hybrid ARIMA and ANN models based on DWT decomposition. Procedia Comput. Sci. 2015, 48, 173–179. [Google Scholar] [CrossRef]

- Kandananond, K. Forecasting electricity demand in Thailand with an artificial neural network approach. Energies 2011, 4, 1246–1257. [Google Scholar] [CrossRef]

- Singh, P. Rainfall and financial forecasting using fuzzy time series and neural networks based model. Int. J. Mach. Learn. Cyber. 2018, 9, 491–506. [Google Scholar] [CrossRef]

- Wang, J.; Wang, J. Forecasting stochastic neural network based on financial empirical mode decomposition. Neural Netw. 2017, 90, 8–20. [Google Scholar] [CrossRef]

- Fu, S.; Li, Y.; Sun, S.; Li, H. Evolutionary support vector machine for RMB exchange rate forecasting. Physica A 2019, 521, 692–704. [Google Scholar] [CrossRef]

- Villegas, M.A.; Pedregal, D.J.; Trapero, J.R. A support vector machine for model selection in demand forecasting applications. Comput. Ind. Eng. 2018, 121, 1–7. [Google Scholar] [CrossRef]

- Martínez, F.; Frías, M.P.; Pérez, M.D.; Rivera, A.J. A methodology for applying k-nearest neighbor to time series forecasting. Artif. Intell. Rev. 2019, 52, 2019–2037. [Google Scholar] [CrossRef]

- Dell’Acqua, P.; Bellotti, F.; Berta, R.; De Gloria, A. Time-aware multivariate nearest neighbor regression methods for traffic flow prediction. IEEE Tran. Intell. Transport. Syst. 2015, 16, 3393–3402. [Google Scholar] [CrossRef]

- Ahmed, N.K.; Atiya, A.F.; Gayar, N.E.; EI-Shishiny, H. An Empirical Comparison of Machine Learning Models for Time Series Forecasting. Econom. Rev. 2010, 29, 594–621. [Google Scholar] [CrossRef]

- Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. Statistical and Machine Learning forecasting methods: Concerns and ways forward. PLoS ONE 2018, 13, e0194889. [Google Scholar] [CrossRef]

- Prestwich, S.; Rossi, R.; Tarim, S.A.; Hnich, B. Mean-based error measures for intermittent demand forecasting. Int. J. Prod. Res. 2014, 52, 6782–6791. [Google Scholar] [CrossRef]

- Moon, S.; Hicks, C.; Simpson, A. The development of a hierarchical forecasting method for predicting spare parts demand in the South Korean Navy—A case study. Int. J. Prod. Econom. 2012, 140, 794–802. [Google Scholar] [CrossRef]

- Syntetos, A.A.; Boylan, J.E.; Croston, J.D. On the categorization of demand patterns. J. Oper. Res. Soc. 2005, 56, 95–503. [Google Scholar] [CrossRef]

- Babai, M.Z.; Ali, M.M.; Nikolopoulos, K. Impact of temporal aggregation on stock control performance of intermittent demand estimators: Empirical analysis. Omega 2012, 40, 713–721. [Google Scholar] [CrossRef]

- Kourentzes, N. Intermittent demand forecasts with neural networks. Int. J. Prod. Econom. 2013, 143, 198–206. [Google Scholar] [CrossRef]

- Kourentzes, N.; Petropoulos, F.; Trapero, J.R. Improving forecasting by estimating time series structural components across multiple frequencies. Int. J. Forecast. 2014, 30, 291–302. [Google Scholar] [CrossRef]

- Petropoulos, F.; Kourentzes, N.; Nikolopoulos, K. Another look at estimators for intermittent demand. Int. J. Prod. Econom. 2016, 181, 154–161. [Google Scholar] [CrossRef]

- Petropoulos, F.; Wang, X.; Disney, S.M. The inventory performance of forecasting methods: Evidence from the M3 competition data. Int. J. Forecast. 2018, 35, 251–265. [Google Scholar] [CrossRef]

- Chatfield, D.C.; Kim, J.G.; Harrison, T.P.; Hayya, J.C. The bullwhip effect—impact of stochastic lead time, information quality, and information sharing: A simulation study. Product. Oper. Manag. 2004, 13, 340–353. [Google Scholar] [CrossRef]

- Wakita, K.; Tsurumi, T. Finding community structure in Mega-scale social networks [extended abstract]. In Proceedings of the International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).