1. Introduction

Tishby et al. [1] introduced the Information Bottleneck (IB) objective function, which learns a representation $Z$ of observed variables $(X, Y)$ that retains as little information about $X$ as possible but simultaneously captures as much information about $Y$ as possible:

$$\min \mathrm{IB}_\beta(X, Y; Z) = \min \left[ I(X; Z) - \beta I(Y; Z) \right] \qquad (1)$$

where $I(\cdot\,;\cdot)$ is the mutual information. The hyperparameter $\beta$ controls the trade-off between compression and prediction, in the same spirit as Rate-Distortion Theory [2] but with a learned representation function $P(Z|X)$ that automatically captures some part of the “semantically meaningful” information, where the semantics are determined by the observed relationship between $X$ and $Y$. The IB framework has been extended to and extensively studied in a variety of scenarios, including Gaussian variables [3], meta-Gaussians [4], continuous variables via variational methods [5,6,7], deterministic scenarios [8,9] and geometric clustering [10], and it is used for learning invariant and disentangled representations in deep neural nets [11,12].

From the IB objective (Equation (1)) we see that when $\beta \to 0$ it will encourage $I(X; Z) = 0$, which leads to a trivial representation $Z$ that is independent of $X$, while when $\beta \to +\infty$, it reduces to a maximum likelihood objective (e.g., in classification, it reduces to cross-entropy loss). Therefore, as we vary $\beta$ from 0 to $+\infty$, there must exist a point $\beta_0$ at which IB starts to learn a nontrivial representation where $Z$ contains information about $X$.
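To make Equation (1) concrete, the following minimal sketch evaluates $I(X;Z) - \beta I(Y;Z)$ for tabular (discrete) distributions. The joint table `p_xy` (binary $X$ and $Y$ with 20% label noise), the two candidate encoders and all function names are illustrative assumptions and are not taken from the experiments in this paper.

```python
import math

def mutual_information(joint):
    """Mutual information I(A;B) in nats for a joint table joint[a][b]."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    total = 0.0
    for a, row in enumerate(joint):
        for b, p in enumerate(row):
            if p > 0:
                total += p * math.log(p / (p_a[a] * p_b[b]))
    return total

def ib_objective(p_xy, encoder, beta):
    """IB_beta = I(X;Z) - beta * I(Y;Z) for an encoder[x][z] = p(z|x)."""
    n_x, n_y, n_z = len(p_xy), len(p_xy[0]), len(encoder[0])
    p_x = [sum(row) for row in p_xy]
    p_xz = [[p_x[x] * encoder[x][z] for z in range(n_z)] for x in range(n_x)]
    # Markov chain Z <- X <-> Y: p(y, z) = sum_x p(x, y) p(z|x)
    p_yz = [[sum(p_xy[x][y] * encoder[x][z] for x in range(n_x))
             for z in range(n_z)] for y in range(n_y)]
    return mutual_information(p_xz) - beta * mutual_information(p_yz)

p_xy = [[0.4, 0.1], [0.1, 0.4]]        # assumed toy joint distribution
trivial = [[0.5, 0.5], [0.5, 0.5]]     # p(z|x) = p(z): Z independent of X
identity = [[1.0, 0.0], [0.0, 1.0]]    # Z copies X
for beta in (1.0, 2.0, 4.0):
    print(beta, ib_objective(p_xy, trivial, beta), ib_objective(p_xy, identity, beta))
```

The trivial encoder always scores 0, while the copy encoder only falls below 0 once $\beta$ is large enough. The copy encoder is just one candidate, so the $\beta$ at which it first beats the trivial encoder is an upper estimate of the onset of learning, not the onset itself.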

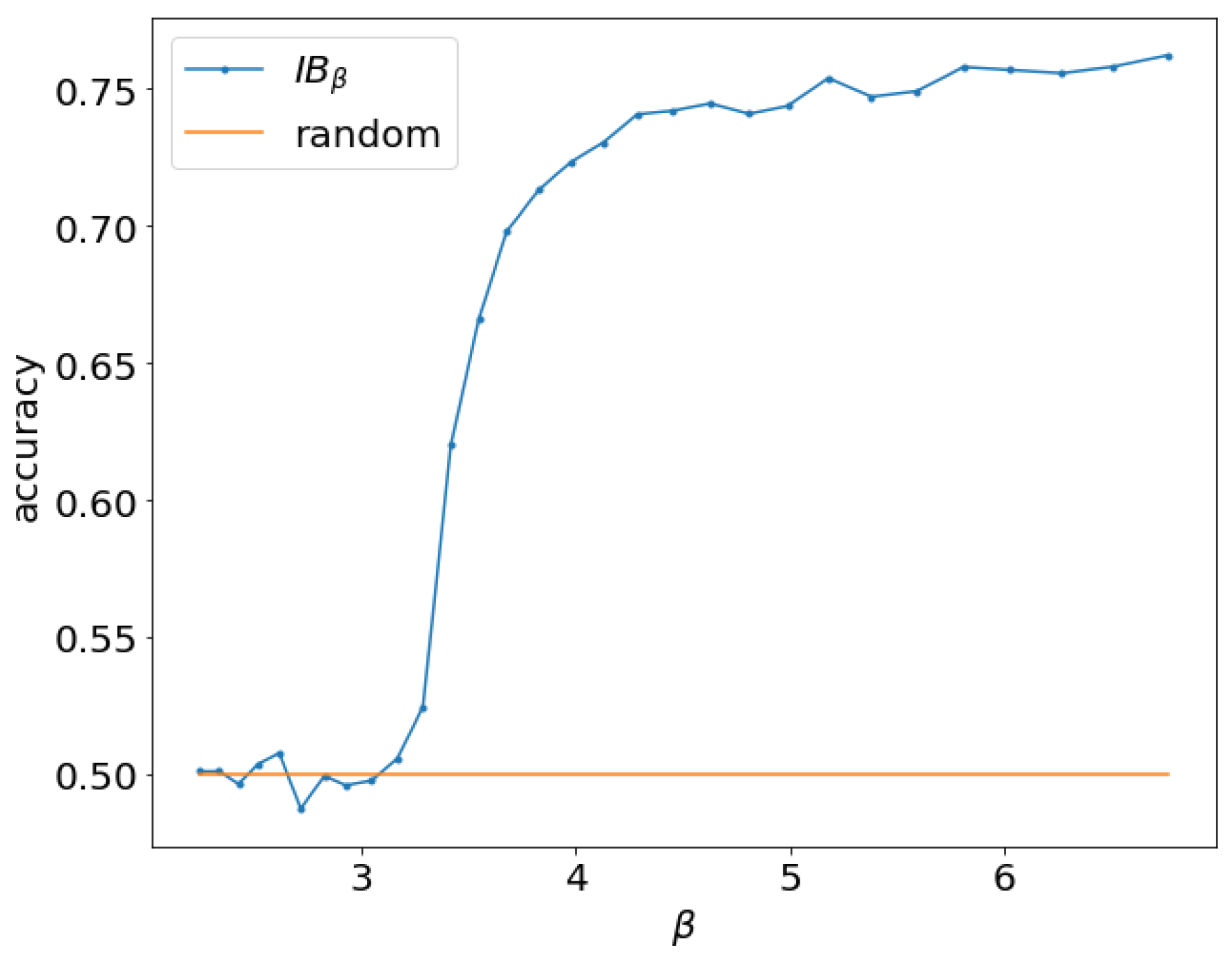

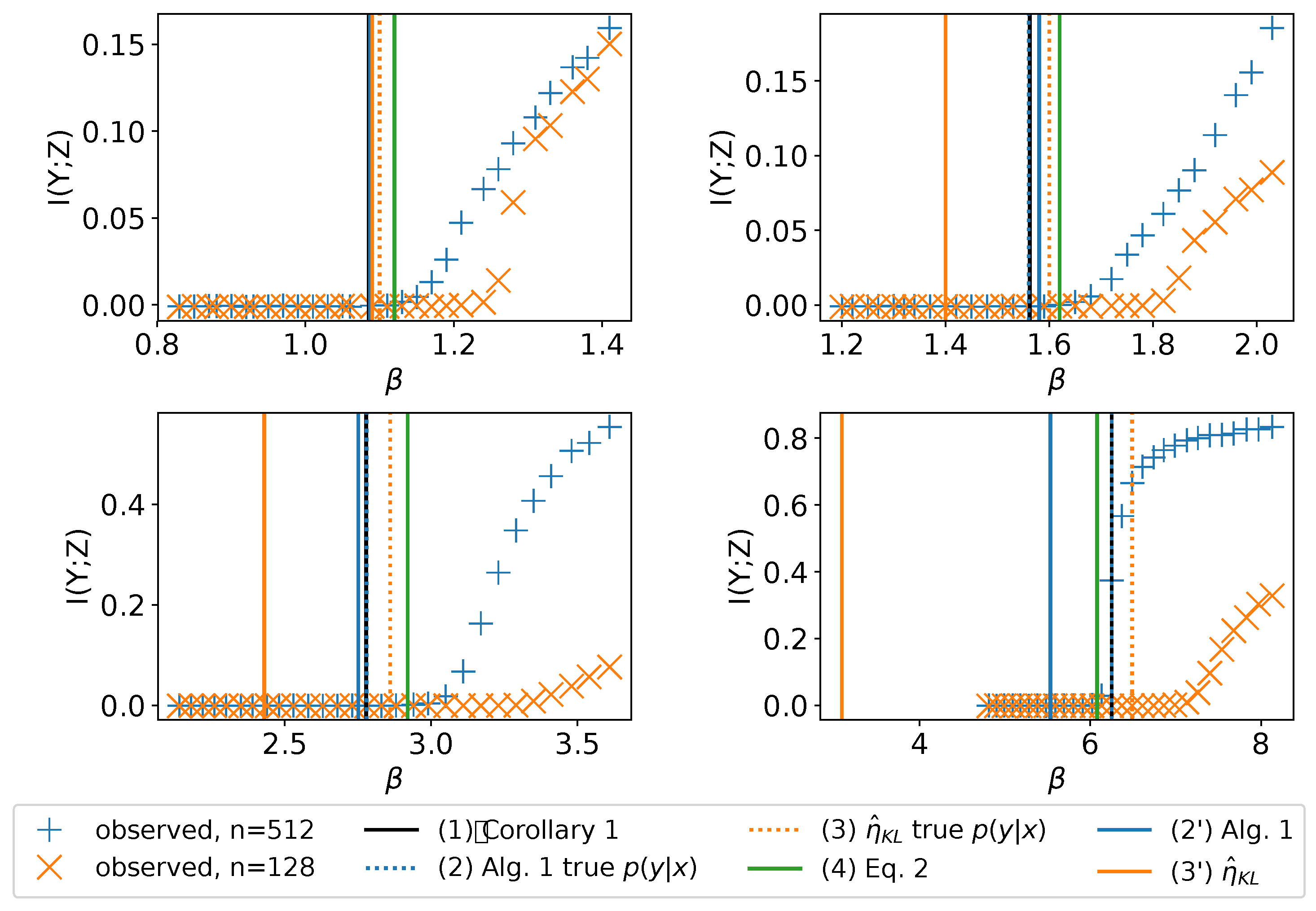

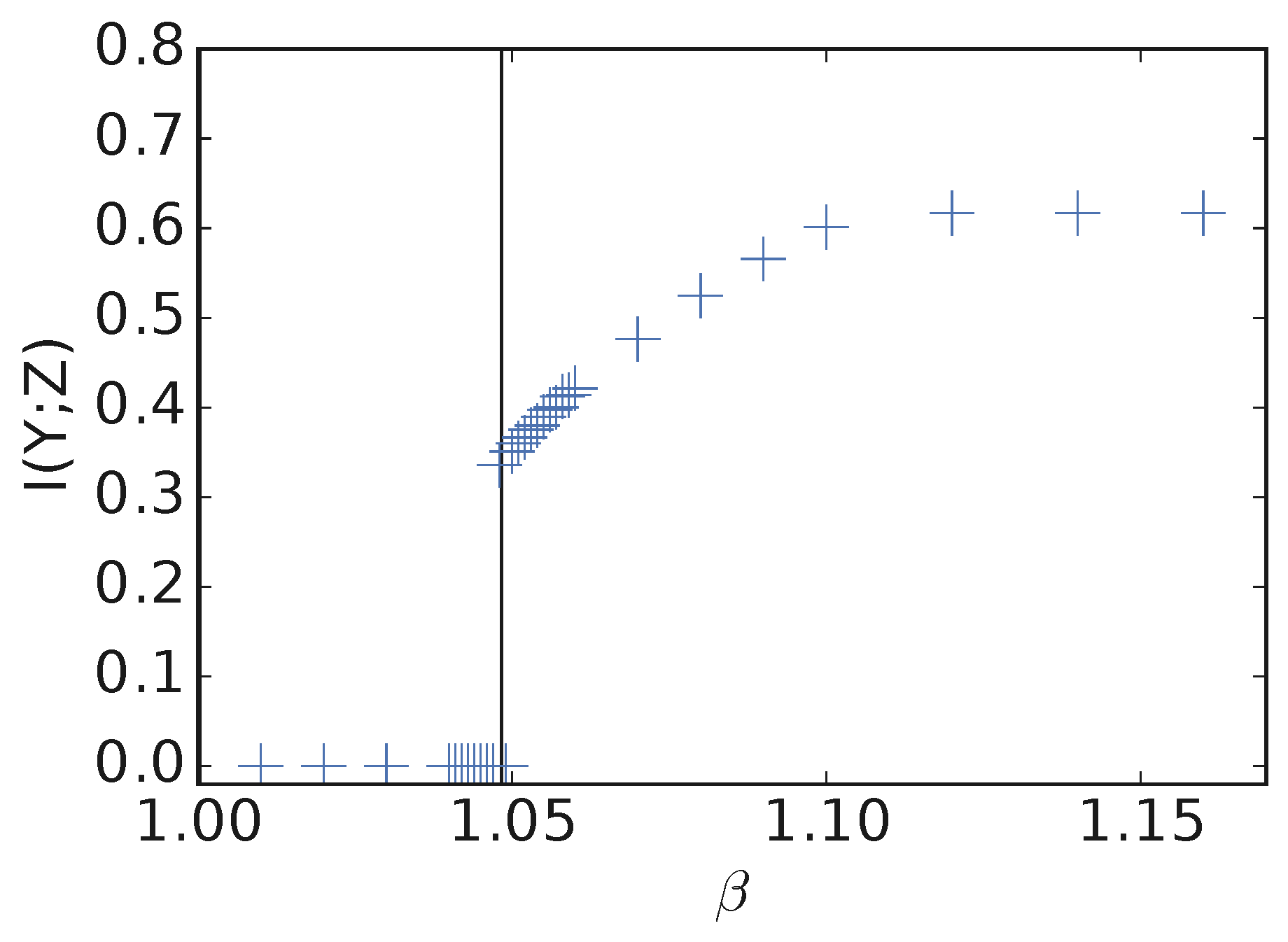

As an example, we train multiple variational information bottleneck (VIB) models on binary classification of MNIST [13] digits 0 and 1 with 20% label noise at different $\beta$. The accuracy vs. $\beta$ is shown in Figure 1. We see that for small $\beta$, no learning happens and the accuracy is the same as random guessing. Beyond a threshold value of $\beta$, there is a clear phase transition where the accuracy sharply increases, indicating that the objective is able to learn a nontrivial representation. In general, we observe that different datasets and model capacities result in different $\beta_0$ at which IB starts to learn a nontrivial representation. How does $\beta_0$ depend on the aspects of the dataset and model capacity, and how can we estimate it? What does an IB model learn at the onset of learning? Answering these questions may provide a deeper understanding of IB in particular and learning on two observed variables in general.

In this work, we begin to answer the above questions. Specifically:

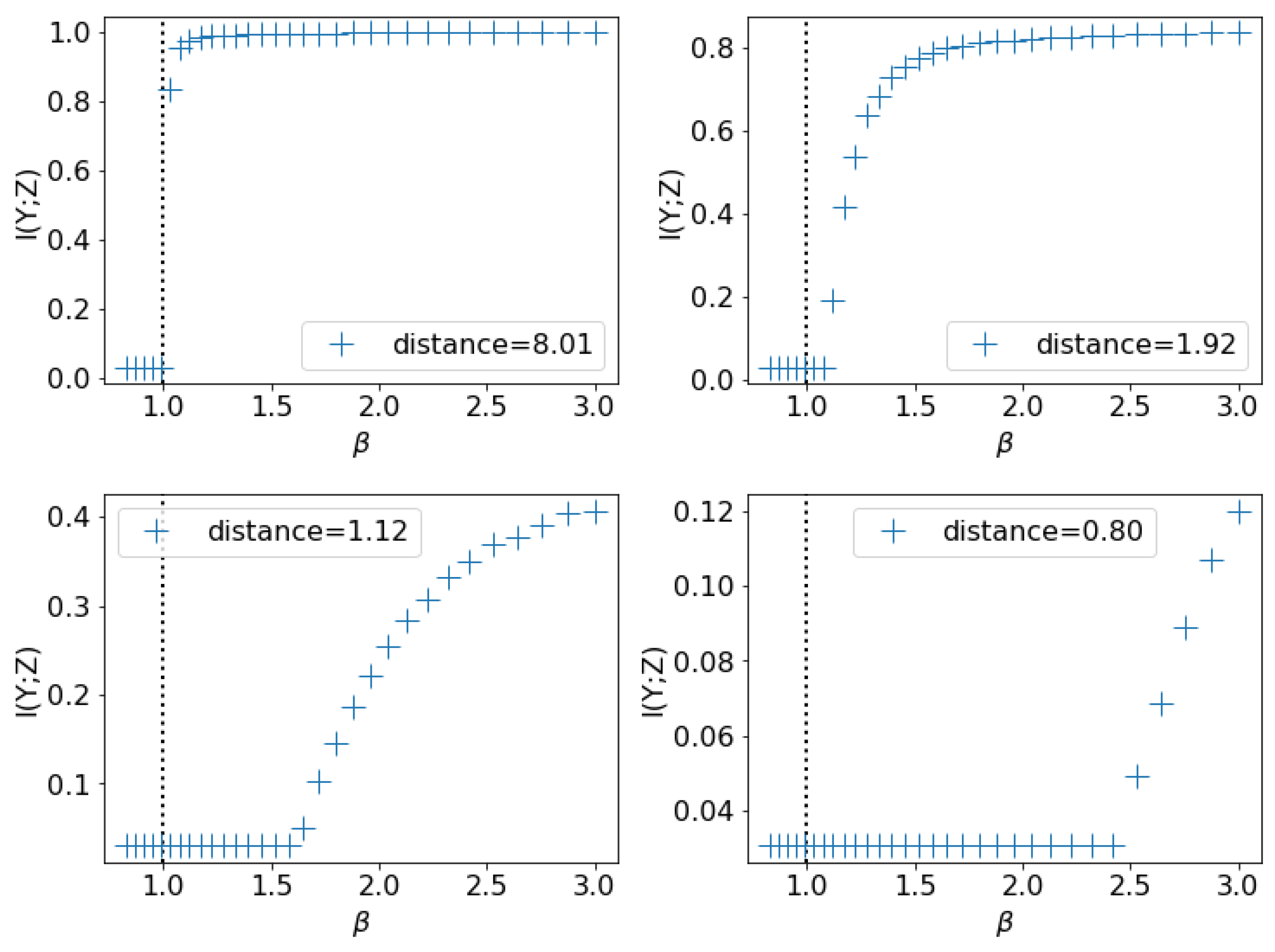

We introduce the concept of

IB-Learnability and show that when we vary

, the IB objective will undergo a phase transition from the inability to learn to the ability to learn (

Section 3).

Using the second-order variation, we derive sufficient conditions for IB-Learnability, which provide upper bounds for the learnability threshold

(

Section 4).

We show that IB-Learnability is determined by the largest

confident,

typical and

imbalanced subset of the examples (the

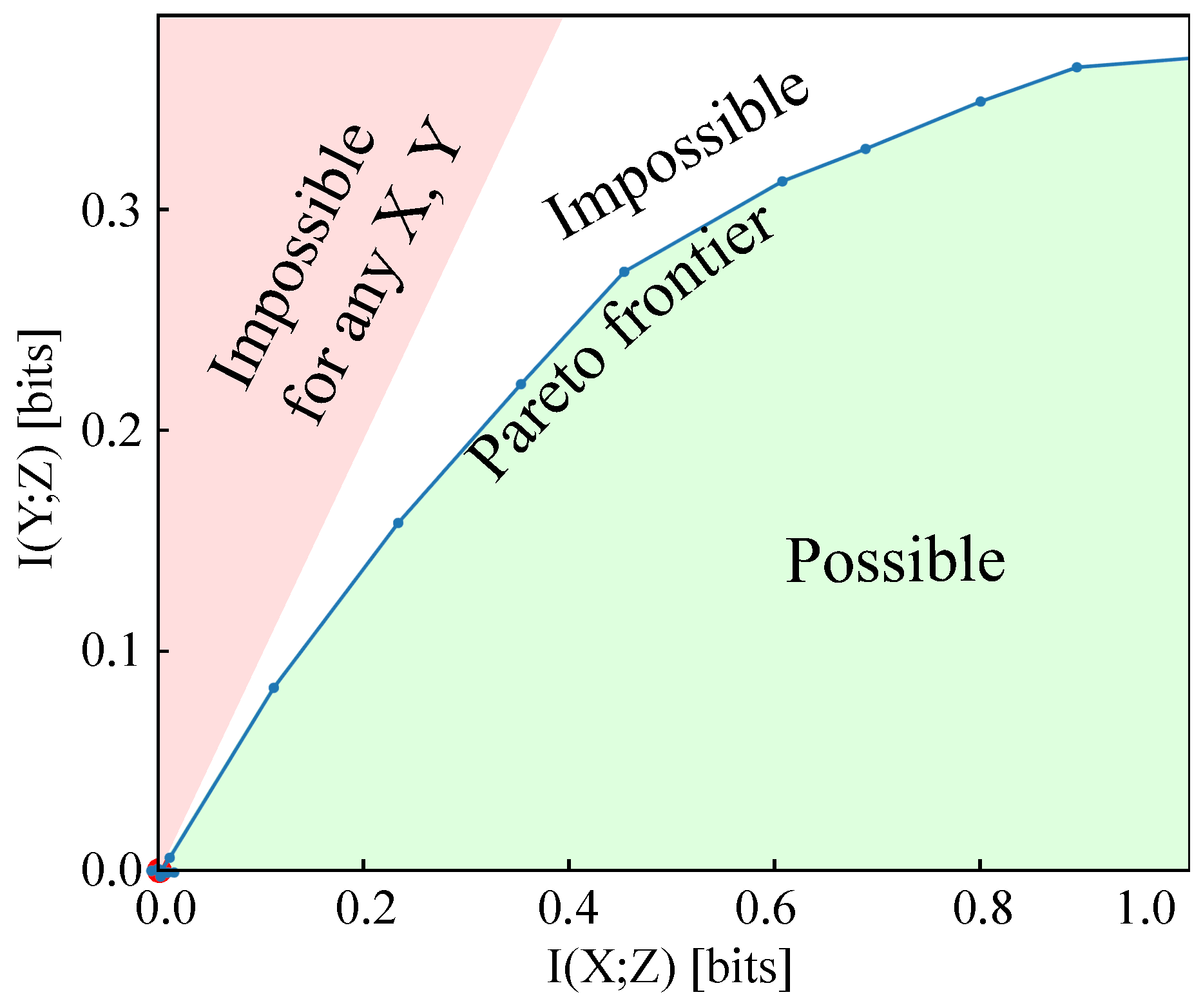

conspicuous subset), reveal its relationship with the slope of the Pareto frontier at the origin on the information plane

vs.

and discuss its relation to model capacity (

Section 5).

We prove a deep relationship between IB-Learnability, our upper bounds on

, the hypercontractivity coefficient, the contraction coefficient and the maximum correlation (

Section 5).

We also present an algorithm for estimating the onset of IB-Learnability and the conspicuous subset, which provide us with a tool for understanding a key aspect of the learning problem

(

Section 6). Finally, we use our main results to demonstrate on synthetic datasets, MNIST [

13] and CIFAR10 [

14] that the theoretical prediction for IB-Learnability closely matches experiment, and show the conspicuous subset our algorithm discovers (

Section 7).

2. Related Work

The seminal IB work [1] provides a tabular method for exactly computing the optimal encoder distribution $P(Z|X)$ for a given $\beta$ and cardinality of the discrete representation, $|Z|$. They did not consider the IB learnability problem as addressed in this work. Chechik et al. [3] present the Gaussian Information Bottleneck (GIB) for learning a multivariate Gaussian representation $Z$ of $(X, Y)$, assuming that both $X$ and $Y$ are also multivariate Gaussians. Under GIB, they derive an analytic formula for the optimal representation as a noisy linear projection to eigenvectors of the normalized regression matrix $\Sigma_{x|y}\Sigma_x^{-1}$, and the learnability threshold $\beta_0$ is then given by $\beta_0 = \frac{1}{1 - \lambda_1}$, where $\lambda_1$ is the largest eigenvalue of the matrix $\Sigma_{x|y}\Sigma_x^{-1}$. This work provides deep insights about relations between the dataset, $\beta_0$ and optimal representations in the Gaussian scenario, but the restriction to multivariate Gaussian datasets limits the generality of the analysis. Another analytic treatment of IB is given in [4], which reformulates the objective in terms of the copula functions. As with the GIB approach, this formulation restricts the form of the data distributions: the copula functions for the joint distribution $(X, Y)$ are assumed to be known, which is unlikely in practice.

Strouse and Schwab [8] present the Deterministic Information Bottleneck (DIB), which minimizes the coding cost of the representation, $H(Z)$, rather than the transmission cost, $I(X;Z)$, as in IB. This approach learns hard clusterings with different code entropies that vary with $\beta$. In this case, it is clear that a hard clustering with minimal $H(Z)$ will result in a single cluster for all of the data, which is the DIB trivial solution. However, no analysis is given beyond this fact to predict the actual onset of learnability.

The first amortized IB objective is in the Variational Information Bottleneck (VIB) of Alemi et al. [5]. VIB replaces the exact, tabular approach of IB with variational approximations of the classifier distribution ($P(Y|Z)$) and marginal distribution ($P(Z)$). This approach cleanly permits learning a stochastic encoder, $P(Z|X)$, that is applicable to any $x \in \mathcal{X}$, rather than just the particular $X$ seen at training time. The cost of this flexibility is the use of variational approximations that may be less expressive than the tabular method. Nevertheless, in practice, VIB learns easily and is simple to implement, so we rely on VIB models for our experimental confirmation.

Closely related to IB is the recently proposed Conditional Entropy Bottleneck (CEB) [7]. CEB attempts to explicitly learn the Minimum Necessary Information (MNI), defined as the point in the information plane where $I(X;Y) = I(X;Z) = I(Y;Z)$. The MNI point may not be achievable even in principle for a particular dataset. However, the CEB objective provides an explicit estimate of how closely the model is approaching the MNI point by observing that a necessary condition for reaching the MNI point occurs when $I(X;Z|Y) = 0$. Because $I(X;Z|Y) = I(X;Z) - I(Y;Z)$ under the Markov chain $Z \leftarrow X \leftrightarrow Y$, the CEB objective $I(X;Z|Y) - \gamma I(Y;Z)$ is equivalent to IB at $\beta = \gamma + 1$, so our analysis of IB-Learnability applies equally to CEB.

Kolchinsky et al. [9] show that when $Y$ is a deterministic function of $X$, the “corner point” of the IB curve (where $I(X;Z) = I(Y;Z) = H(Y)$) is the unique optimizer of the IB objective for all $0 < \beta' < 1$ (with the parameterization of Kolchinsky et al. [9], $\beta' = 1/\beta$), which they consider to be a “trivial solution”. However, their use of the term “trivial solution” is distinct from ours. They are referring to the observation that all points on the IB curve contain uninteresting interpolations between two different but valid solutions on the optimal frontier, rather than demonstrating a non-trivial trade-off between compression and prediction as expected when varying the IB Lagrangian. Our use of “trivial” refers to whether IB is capable of learning at all given a certain dataset and value of $\beta$.

Achille and Soatto [12] apply the IB Lagrangian to the weights of a neural network, yielding InfoDropout. In Achille and Soatto [11], the authors give a deep and compelling analysis of how the IB Lagrangian can yield invariant and disentangled representations. They do not, however, consider the question of the onset of learning, although they are aware that not all models will learn a non-trivial representation. More recently, Achille et al. [15] repurpose the InfoDropout IB Lagrangian as a Kolmogorov Structure Function to analyze the ease with which a previously-trained network can be fine-tuned for a new task. While that work is tangentially related to learnability, the question it addresses is substantially different from our investigation of the onset of learning.

Our work is also closely related to the hypercontractivity coefficient [16,17], defined as $\xi(X;Y) \equiv \sup_{Z - X - Y} \frac{I(Y;Z)}{I(X;Z)}$, which by definition equals the inverse of $\beta_0$, our IB-learnability threshold. In [16], the authors prove that the hypercontractivity coefficient equals the contraction coefficient $\eta_{KL}(P_{Y|X}, P_X)$, and Kim et al. [18] propose a practical algorithm to estimate $\xi(X;Y)$, which provides a measure for potential influence in the data. Although our goal is different, the sufficient conditions we provide for IB-Learnability are also lower bounds for the hypercontractivity coefficient.

3. IB-Learnability

We are given instances of $(x, y) \in \mathcal{X} \times \mathcal{Y}$ drawn from a distribution with probability (density) $P(X, Y)$ with support of $\mathcal{X} \times \mathcal{Y}$, where unless otherwise stated, both $X$ and $Y$ can be discrete or continuous variables. We use capital letters $X, Y, Z$ for random variables and lowercase $x, y, z$ to denote the instance of variables, with $P(\cdot)$ and $p(\cdot)$ denoting their probability or probability density, respectively. $(X, Y)$ is our training data and may be characterized by different types of noise. The nature of this training data and the choice of $\beta$ will be sufficient to predict the transition from unlearnable to learnable.

We can learn a representation $Z$ of $X$ with conditional probability $p(z|x)$, such that $X, Y, Z$ obey the Markov chain $Z \leftarrow X \leftrightarrow Y$. Equation (1) above gives the IB objective with Lagrange multiplier $\beta$, $\mathrm{IB}_\beta(X, Y; Z)$, which is a functional of $p(z|x)$: $\mathrm{IB}_\beta(X, Y; Z) = \mathrm{IB}_\beta[p(z|x)]$. The IB learning task is to find a conditional probability $p(z|x)$ that minimizes $\mathrm{IB}_\beta(X, Y; Z)$. The larger $\beta$, the more the objective favors making a good prediction for $Y$. Conversely, the smaller $\beta$, the more the objective favors learning a concise representation.

How can we select $\beta$ such that the IB objective learns a useful representation? In practice, the selection of $\beta$ is done empirically. Indeed, Tishby et al. [1] recommend “sweeping $\beta$”. In this paper, we provide theoretical guidance for choosing $\beta$ by introducing the concept of IB-Learnability and providing a series of IB-learnable conditions.

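Definition 1. $(X, Y)$ is $\mathrm{IB}_\beta$-learnable if there exists a $Z$ given by some $p_1(z|x)$, such that $\mathrm{IB}_\beta(X, Y; Z)|_{p_1(z|x)} < \mathrm{IB}_\beta(X, Y; Z)|_{p(z|x) = p(z)}$, where $p(z|x) = p(z)$ characterizes the trivial representation where $Z = Z_{\mathrm{trivial}}$ is independent of $X$.

If $(X, Y)$ is $\mathrm{IB}_\beta$-learnable, then when $\mathrm{IB}_\beta(X, Y; Z)$ is globally minimized, it will not learn a trivial representation. On the other hand, if $(X, Y)$ is not $\mathrm{IB}_\beta$-learnable, then when $\mathrm{IB}_\beta(X, Y; Z)$ is globally minimized, it may learn a trivial representation.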

3.1. Trivial Solutions

Definition 1 defines trivial solutions in terms of representations where $I(X;Z) = I(Y;Z) = 0$. Another type of trivial solution occurs when $I(X;Z) > 0$ but $I(Y;Z) = 0$. This type of trivial solution is not directly achievable by the IB objective, as $I(X;Z)$ is minimized, but it can be achieved by construction or by chance. It is possible that starting learning from $I(X;Z) > 0$, $I(Y;Z) = 0$ could result in access to non-trivial solutions not available from $I(X;Z) = 0$. We do not attempt to investigate this type of trivial solution in this work.

3.2. Necessary Condition for IB-Learnability

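From Definition 1, we can see that $\mathrm{IB}_\beta$-Learnability for any dataset $(X, Y)$ requires $\beta > 1$. In fact, from the Markov chain $Z \leftarrow X \leftrightarrow Y$, we have $I(Y;Z) \le I(X;Z)$ via the data-processing inequality. If $\beta \le 1$, then since $I(X;Z) \ge 0$ and $I(Y;Z) \ge 0$, we have $I(X;Z) - \beta I(Y;Z) \ge (1 - \beta) I(X;Z) \ge 0$, so that $\min \left( I(X;Z) - \beta I(Y;Z) \right) = 0 = \mathrm{IB}_\beta(X, Y; Z_{\mathrm{trivial}})$. Hence $(X, Y)$ is not $\mathrm{IB}_\beta$-learnable for $\beta \le 1$.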
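As a numerical sanity check of the data-processing step, the sketch below draws random stochastic encoders for a small discrete joint distribution and verifies that $I(X;Z) - I(Y;Z)$ is never negative, so that no encoder can beat the trivial representation at $\beta \le 1$. The joint table `p_xy`, the number of encoders and the helper names are illustrative assumptions.

```python
import math
import random

def mutual_information(joint):
    """I(A;B) in nats for a joint table joint[a][b]."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    return sum(p * math.log(p / (p_a[a] * p_b[b]))
               for a, row in enumerate(joint)
               for b, p in enumerate(row) if p > 0)

def random_encoder(n_x, n_z, rng):
    """A random row-stochastic matrix encoder[x][z] = p(z|x)."""
    rows = []
    for _ in range(n_x):
        weights = [rng.random() + 1e-3 for _ in range(n_z)]
        norm = sum(weights)
        rows.append([w / norm for w in weights])
    return rows

def informations(p_xy, encoder):
    """Return (I(X;Z), I(Y;Z)) under the Markov chain Z <- X <-> Y."""
    n_x, n_y, n_z = len(p_xy), len(p_xy[0]), len(encoder[0])
    p_x = [sum(row) for row in p_xy]
    p_xz = [[p_x[x] * encoder[x][z] for z in range(n_z)] for x in range(n_x)]
    p_yz = [[sum(p_xy[x][y] * encoder[x][z] for x in range(n_x))
             for z in range(n_z)] for y in range(n_y)]
    return mutual_information(p_xz), mutual_information(p_yz)

rng = random.Random(0)
p_xy = [[0.30, 0.05], [0.10, 0.20], [0.05, 0.30]]   # assumed toy joint
results = [informations(p_xy, random_encoder(3, 4, rng)) for _ in range(200)]
worst_gap = min(i_xz - i_yz for i_xz, i_yz in results)
print("min over sampled encoders of I(X;Z) - I(Y;Z):", worst_gap)
```

A random search of this kind can only illustrate the inequality; the guarantee itself comes from the data-processing inequality.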

Due to the reparameterization invariance of mutual information, we have the following lemma for $\mathrm{IB}_\beta$-Learnability:

Lemma 1. Let $X' = g(X)$ be an invertible map (if $X$ is a continuous variable, $g$ is additionally required to be continuous). Then $(X, Y)$ and $(X', Y)$ have the same $\mathrm{IB}_\beta$-Learnability.

The proof for Lemma 1 is in Appendix A.2. Lemma 1 implies a favorable property for any condition for $\mathrm{IB}_\beta$-Learnability: the condition should be invariant to invertible mappings of $X$. We will inspect this invariance in the conditions we derive in the following sections.

4. Sufficient Conditions for IB-Learnability

Given $(X, Y)$, how can we determine whether it is $\mathrm{IB}_\beta$-learnable? To answer this question, we derive a series of sufficient conditions for $\mathrm{IB}_\beta$-Learnability, starting from its definition. The conditions are in increasing order of practicality, while sacrificing as little generality as possible.

Firstly, Theorem 1 characterizes the $\mathrm{IB}_\beta$-Learnability range for $\beta$, with proof in Appendix A.3:

Theorem 1. If $(X, Y)$ is $\mathrm{IB}_{\beta_1}$-learnable, then for any $\beta_2 > \beta_1$, it is $\mathrm{IB}_{\beta_2}$-learnable.

Based on Theorem 1, the range of $\beta$ such that $(X, Y)$ is $\mathrm{IB}_\beta$-learnable has the form $\beta \in (\beta_0, +\infty)$. Thus, $\beta_0$ is the threshold of IB-Learnability.

Lemma 2. $p(z|x) = p(z)$ is a stationary solution for $\mathrm{IB}_\beta(X, Y; Z)$.

The proof in Appendix A.6 shows that both first-order variations $\delta I(X;Z)$ and $\delta I(Y;Z)$ vanish at the trivial representation $p(z|x) = p(z)$, so $\delta \mathrm{IB}_\beta[p(z|x)] = 0$ at the trivial representation.

Lemma 2 yields our strategy for finding sufficient conditions for learnability: find conditions such that $p(z|x) = p(z)$ is not a local minimum for the functional $\mathrm{IB}_\beta[p(z|x)]$. Based on the necessary condition for the minimum (Appendix A.4), we have the following theorem. (The theorems in this paper deal with learnability w.r.t. true mutual information. If parameterized models are used to approximate the mutual information, the limitation of the model capacity will translate into more uncertainty of $Y$ given $X$, viewed through the lens of the model.)

Theorem 2 (Suff. Cond. 1). A sufficient condition for $(X, Y)$ to be $\mathrm{IB}_\beta$-learnable is that there exists a perturbation function $h(z|x)$ (so that the perturbed probability (density) is $p'(z|x) = p(z|x) + \epsilon \cdot h(z|x)$) with $\int h(z|x)\,dz = 0$, such that the second-order variation $\delta^2 \mathrm{IB}_\beta[p(z|x)] < 0$ at the trivial representation $p(z|x) = p(z)$.

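The proof for Theorem 2 is given in Appendix A.4. Intuitively, if $\delta^2 \mathrm{IB}_\beta[p(z|x)]\big|_{p(z|x) = p(z)} < 0$, we can always find a $p'(z|x) = p(z|x) + \epsilon \cdot h(z|x)$ in the neighborhood of the trivial representation $p(z|x) = p(z)$, such that $\mathrm{IB}_\beta[p'(z|x)] < \mathrm{IB}_\beta[p(z|x)]$, thus satisfying the definition for $\mathrm{IB}_\beta$-Learnability.

To make Theorem 2 more practical, we perturb $p(z|x)$ around the trivial solution, $p'(z|x) = p(z|x) + \epsilon \cdot h(z|x)$, and expand $\mathrm{IB}_\beta[p(z|x) + \epsilon \cdot h(z|x)] - \mathrm{IB}_\beta[p(z|x)]$ to the second order of $\epsilon$. We can then prove Theorem 3: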

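Theorem 3 (Suff. Cond. 2). A sufficient condition for $(X, Y)$ to be $\mathrm{IB}_\beta$-learnable is that $X$ and $Y$ are not independent and

$$\beta > \inf_{h(x)} \beta_0[h(x)] \qquad (2)$$

where the functional $\beta_0[h(x)]$ is given by

$$\beta_0[h(x)] = \frac{\mathbb{E}_{x \sim p(x)}[h(x)^2] - \left(\mathbb{E}_{x \sim p(x)}[h(x)]\right)^2}{\mathbb{E}_{y \sim p(y)}\left[\left(\mathbb{E}_{x \sim p(x|y)}[h(x)]\right)^2\right] - \left(\mathbb{E}_{x \sim p(x)}[h(x)]\right)^2}.$$

Moreover, we have that $\left(\inf_{h(x)} \beta_0[h(x)]\right)^{-1}$ is a lower bound of the slope of the Pareto frontier in the information plane $I(Y;Z)$ vs. $I(X;Z)$ at the origin.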

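The proof is given in Appendix A.7, which also shows that if $\beta > \inf_{h(x)} \beta_0[h(x)]$ in Theorem 3 is satisfied, we can construct a perturbation function $h(z|x)$ with $h(z|x) = h^*(x) h_2(z)$, $h^*(x) = \arg\min_{h(x)} \beta_0[h(x)]$, for some $h_2(z)$, such that $h(z|x)$ satisfies Theorem 2. It also shows that the converse is true: if there exists $h(z|x)$ such that the condition in Theorem 2 is true, then Theorem 3 is satisfied, that is, $\beta > \inf_{h(x)} \beta_0[h(x)]$. (We do not claim that any $h(z|x)$ satisfying Theorem 2 can be decomposed to $h^*(x) h_2(z)$ at the onset of learning. But from the equivalence of Theorems 2 and 3 as explained above, when there exists an $h(z|x)$ such that Theorem 2 is satisfied, we can always construct an $h'(z|x) = h^*(x) h_2(z)$ that also satisfies Theorem 2.) Moreover, letting the perturbation function $h(z|x) = h^*(x) h_2(z)$ at the trivial solution, we have

$$p_\beta(y|x) = p(y) + \epsilon^2 C_z \left(h^*(x) - \bar{h}^*_x\right) \int p(x, y) \left(h^*(x) - \bar{h}^*_x\right) dx \qquad (3)$$

where $p_\beta(y|x)$ is the estimated $p(y|x)$ by IB for a certain $\beta$, $\bar{h}^*_x = \int h^*(x) p(x)\,dx$ and $C_z = \int \frac{h_2^2(z)}{p(z)}\,dz > 0$ is a constant. This shows how the $p_\beta(y|x)$ by IB explicitly depends on $h^*(x)$ at the onset of learning. The proof is provided in Appendix A.8.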

Theorem 3 suggests a method to estimate $\beta_0$: we can parameterize $h(x)$, for example, by a neural network, with the objective of minimizing $\beta_0[h(x)]$. At its minimization, $\beta_0[h(x)]$ provides an upper bound for $\beta_0$, and $h(x)$ provides a soft clustering of the examples corresponding to a nontrivial perturbation of $p(z|x)$ at $p(z|x) = p(z)$ that minimizes $\mathrm{IB}_\beta[p(z|x)]$.
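For a small discrete problem, the functional $\beta_0[h(x)]$ can be evaluated directly, reading it as the variance of $h(X)$ divided by the variance of $\mathbb{E}[h(X)|Y]$. The sketch below is a hedged illustration: it replaces the neural-network parameterization with a one-dimensional grid scan over $h$, and the joint tables, the grid and the function name are assumptions made for the example.

```python
import math

def beta0_functional(p_xy, h):
    """beta_0[h] = Var[h(X)] / Var[E[h(X)|Y]] for a joint table p_xy[x][y]."""
    n_x, n_y = len(p_xy), len(p_xy[0])
    p_x = [sum(row) for row in p_xy]
    p_y = [sum(p_xy[x][y] for x in range(n_x)) for y in range(n_y)]
    mean_h = sum(p_x[x] * h[x] for x in range(n_x))
    numerator = sum(p_x[x] * h[x] ** 2 for x in range(n_x)) - mean_h ** 2
    cond_mean = [sum(p_xy[x][y] * h[x] for x in range(n_x)) / p_y[y]
                 for y in range(n_y)]
    denominator = sum(p_y[y] * cond_mean[y] ** 2 for y in range(n_y)) - mean_h ** 2
    if denominator <= 1e-15:      # h carries no information about Y
        return math.inf
    return numerator / denominator

# Binary X, Y with 20% label noise: any non-constant h gives the same value.
noisy_binary = [[0.4, 0.1], [0.1, 0.4]]
print(beta0_functional(noisy_binary, [0.0, 1.0]))   # approximately 2.778
print(beta0_functional(noisy_binary, [3.0, 5.0]))   # same value: affine invariance

# Three-state X: scan h = (0, 1, t); each value is an upper bound on beta_0.
p_xy = [[0.30, 0.05], [0.10, 0.20], [0.05, 0.30]]
grid = [k / 100 for k in range(-300, 301)]
best_t = min(grid, key=lambda t: beta0_functional(p_xy, [0.0, 1.0, t]))
best = beta0_functional(p_xy, [0.0, 1.0, best_t])
print(best_t, best)
```

Because $\beta_0[h(x)]$ is unchanged when $h$ is shifted or rescaled, fixing two entries of $h$ to 0 and 1 loses only the functions that agree on those two states; a full minimization would search over those as well.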

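Alternatively, based on the property of $\beta_0[h(x)]$, we can also use a specific functional form for $h(x)$ in Equation (2) and obtain a stronger sufficient condition for $\mathrm{IB}_\beta$-Learnability. But we want to choose $h(x)$ as near to the infimum as possible. To do this, we note the following characteristics for the R.H.S. of Equation (2):

We can set $h(x)$ to be nonzero if $x \in \Omega_x$ for some region $\Omega_x \subset \mathcal{X}$ and 0 otherwise. Then we obtain the following sufficient condition:

$$\beta > \inf_{h(x),\, \Omega_x \subset \mathcal{X}} \frac{\dfrac{\mathbb{E}_{x \sim p(x),\, x \in \Omega_x}[h(x)^2]}{\left(\mathbb{E}_{x \sim p(x),\, x \in \Omega_x}[h(x)]\right)^2} - 1}{\displaystyle\int dy\, p(y) \left(\frac{\mathbb{E}_{x \sim p(x|y),\, x \in \Omega_x}[h(x)]}{\mathbb{E}_{x \sim p(x),\, x \in \Omega_x}[h(x)]}\right)^2 - 1} \qquad (4)$$

where an expectation with the additional subscript $x \in \Omega_x$ integrates over the region $\Omega_x$ only.

The numerator of the R.H.S. of Equation (4) attains its minimum when $h(x)$ is a constant within $\Omega_x$. This can be proved using the Cauchy-Schwarz inequality: $\langle u, u \rangle \langle v, v \rangle \ge \langle u, v \rangle^2$, setting $u(x) = h(x) \mathbb{1}[x \in \Omega_x]$, $v(x) = \mathbb{1}[x \in \Omega_x]$ and defining the inner product as $\langle u, v \rangle = \mathbb{E}_{x \sim p(x)}[u(x) v(x)]$. Therefore, the numerator of the R.H.S. of Equation (4) is $\ge \frac{1}{p(\Omega_x)} - 1$ and attains equality when $h(x)$ is constant.

Based on these observations, we can let $h(x)$ be a nonzero constant inside some region $\Omega_x \subset \mathcal{X}$ and 0 otherwise, so that the infimum over an arbitrary function $h(x)$ simplifies to an infimum over $\Omega_x \subset \mathcal{X}$. We then obtain a sufficient condition for $\mathrm{IB}_\beta$-Learnability, which is a key result of this paper: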

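Theorem 4 (Conspicuous Subset Suff. Cond.). A sufficient condition for $(X, Y)$ to be $\mathrm{IB}_\beta$-learnable is that $X$ and $Y$ are not independent and

$$\beta > \inf_{\Omega_x \subset \mathcal{X}} \beta_0(\Omega_x), \qquad \beta_0(\Omega_x) = \frac{\dfrac{1}{p(\Omega_x)} - 1}{\mathbb{E}_{y \sim p(y|\Omega_x)}\left[\dfrac{p(y|\Omega_x)}{p(y)} - 1\right]} \qquad (5)$$

where $\Omega_x$ denotes the event that $x \in \Omega_x$, with probability $p(\Omega_x)$. $\left(\inf_{\Omega_x \subset \mathcal{X}} \beta_0(\Omega_x)\right)^{-1}$ gives a lower bound of the slope of the Pareto frontier in the information plane $I(Y;Z)$ vs. $I(X;Z)$ at the origin.

The proof is given in Appendix A.9. In the proof we also show that this condition is invariant to invertible mappings of $X$.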
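For a discrete $X$ with only a few states, the infimum over subsets in the conspicuous subset condition can be found by exhaustive enumeration. The following sketch is illustrative only: the four-state joint table, the brute-force search and the function name `beta0_subset` are assumptions made for the example, and exhaustive enumeration does not scale to real datasets.

```python
import math
from itertools import combinations

def beta0_subset(p_xy, omega):
    """beta_0(Omega) = (1/p(Omega) - 1) / (sum_y p(y|Omega)^2 / p(y) - 1)."""
    n_y = len(p_xy[0])
    p_y = [sum(row[y] for row in p_xy) for y in range(n_y)]
    p_omega = sum(sum(p_xy[x]) for x in omega)
    p_y_given_omega = [sum(p_xy[x][y] for x in omega) / p_omega
                       for y in range(n_y)]
    denominator = sum(p_y_given_omega[y] ** 2 / p_y[y] for y in range(n_y)) - 1.0
    if denominator <= 1e-15:      # label distribution in Omega equals p(y)
        return math.inf
    return (1.0 / p_omega - 1.0) / denominator

# Assumed toy joint: state 0 is the most confident, states 1 and 2 are noisy.
p_xy = [[0.24, 0.01], [0.15, 0.10], [0.10, 0.15], [0.05, 0.20]]
n_x = len(p_xy)
candidates = [subset for size in range(1, n_x)
              for subset in combinations(range(n_x), size)]
best_omega = min(candidates, key=lambda subset: beta0_subset(p_xy, subset))
best = beta0_subset(p_xy, best_omega)
print(best_omega, best)
```

A subset and its complement give the same value, because their indicator functions differ only by an affine map, so the search could be restricted to half of the candidates.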

6. Estimating the IB-Learnability Condition

Theorem 4 not only reveals the relationship between the learnability threshold for $\beta$ and the least noisy region of $P(Y|X)$ but also provides a way to practically estimate $\beta_0$, both in the general classification case and in more structured settings.

6.1. Estimation Algorithm

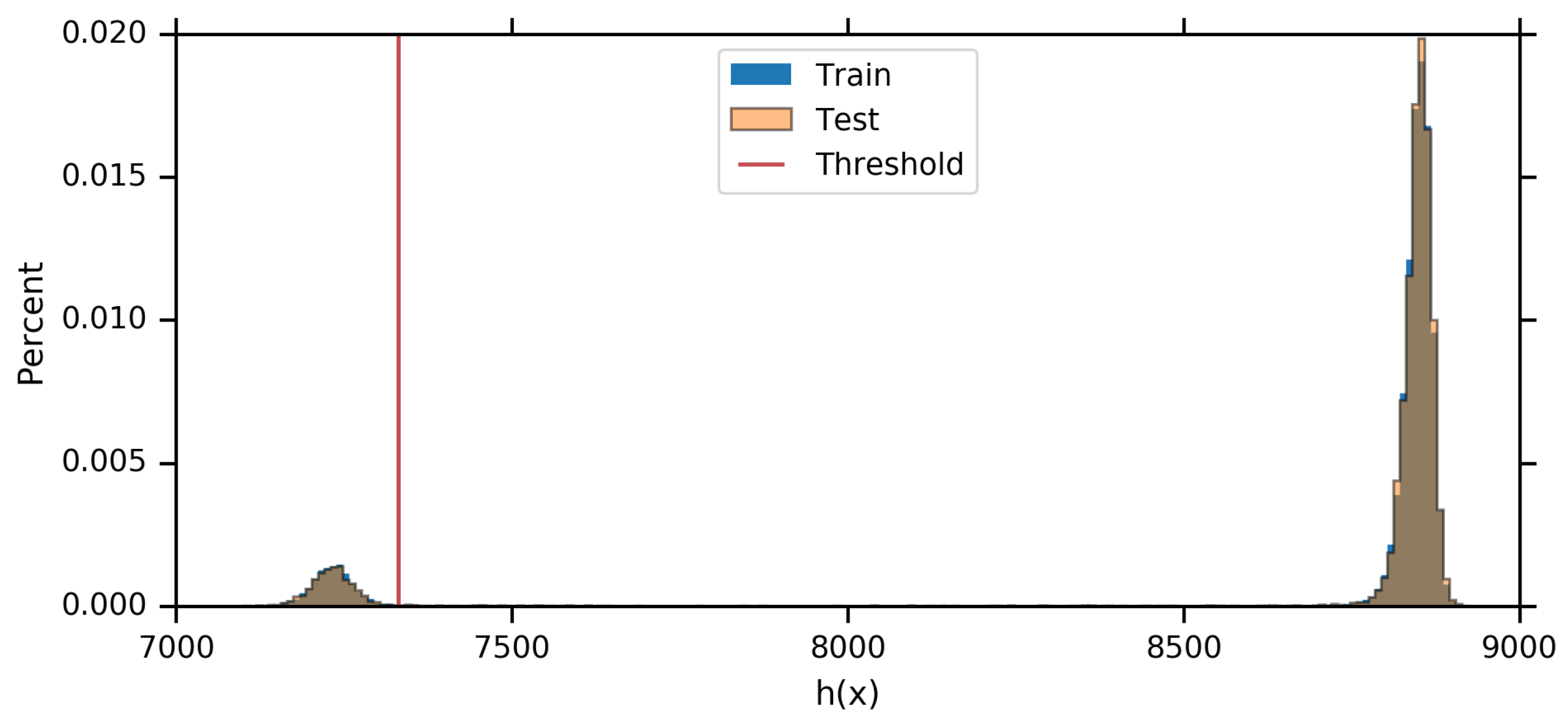

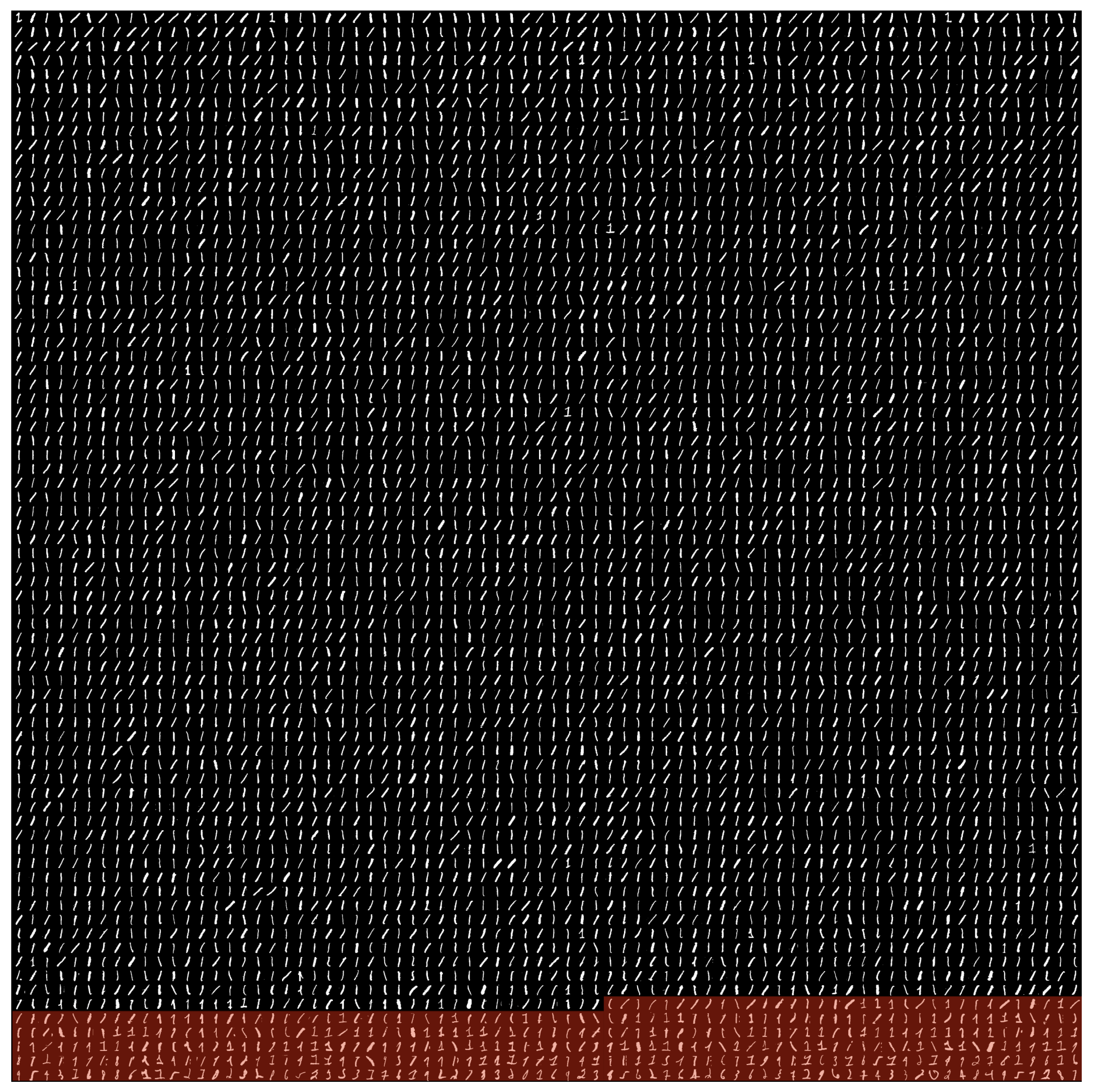

Based on Theorem 4, for general classification tasks we suggest Algorithm 1 to empirically estimate an upper bound $\tilde{\beta}_0 \ge \beta_0$, as well as to discover the conspicuous subset that determines $\tilde{\beta}_0$.

We approximate the probability of each example $p(x_i)$ by its empirical probability, $\hat{p}(x_i)$. For example, for MNIST, $p(x_i) = \frac{1}{N}$, where $N$ is the number of examples in the dataset. The algorithm starts by first learning a maximum likelihood model of $p_\theta(y|x)$, using, for example, feed-forward neural networks. It then constructs a matrix $P_{y|x}$ and a vector $p_y$ to store the estimated $p(y|x)$ and $p(y)$ for all the examples in the dataset. To find the subset $\Omega$ such that $\tilde{\beta}_0$ is as small as possible, by the previous analysis we want to find a conspicuous subset such that its $p(y|x)$ is large for a certain class $j$ (to make the denominator of Equation (5) large) and that contains as many elements as possible (to make the numerator small).

We suggest the following heuristics to discover such a conspicuous subset. For each class $j$, we sort the rows of $P_{y|x}$ according to their probability for the pivot class $j$ in decreasing order and then perform a search over $i_{\mathrm{left}}, i_{\mathrm{right}}$ for $\Omega = \{i_{\mathrm{left}}, i_{\mathrm{left}} + 1, \ldots, i_{\mathrm{right}}\}$. Since $\tilde{\beta}_0$ is large when $\Omega$ contains too few or too many elements, the minimum of $\tilde{\beta}_0^{(j)}$ for class $j$ will typically be reached with some intermediate-sized subset, and we can use binary search or another discrete search algorithm for the optimization. The algorithm stops when $\tilde{\beta}_0^{(j)}$ does not improve by tolerance $\varepsilon$. The algorithm then returns $\tilde{\beta}_0$ as the minimum over all the classes $\tilde{\beta}_0^{(1)}, \ldots, \tilde{\beta}_0^{(C)}$, as well as the conspicuous subset that determines this $\tilde{\beta}_0$.

After estimating $\tilde{\beta}_0$, we can then use it for learning with IB, either directly or as an anchor for a region where we can perform a much smaller sweep than we otherwise would have. This may be particularly important for very noisy datasets, where $\tilde{\beta}_0$ can be very large.

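Algorithm 1. Estimating the upper bound for $\beta_0$ and identifying the conspicuous subset

Require: Dataset $\mathcal{D} = \{(x_i, y_i)\}$, $i = 1, 2, \ldots, N$. The number of classes is $C$.

Require: $\varepsilon$: tolerance for estimating $\beta_0$

1: Learn a maximum likelihood model $p_\theta(y|x)$ using the dataset $\mathcal{D}$.

2: Construct matrix $P_{y|x}$ such that $(P_{y|x})_{ij} = p_\theta(y = y_j | x = x_i)$.

3: Construct vector $p_y = (p_{y1}, \ldots, p_{yC})$ such that $p_{yj} = \frac{1}{N} \sum_{i=1}^{N} (P_{y|x})_{ij}$.

4: for $j$ in $\{1, 2, \ldots, C\}$:

5: $P_{y|x}^{(\mathrm{sort}\, j)} \leftarrow$ Sort the rows of $P_{y|x}$ in decreasing values of $(P_{y|x})_{ij}$.

6: $\tilde{\beta}_0^{(j)}, \Omega^{(j)} \leftarrow$ Search $i_{\mathrm{left}}, i_{\mathrm{right}}$, until $\tilde{\beta}_0^{(j)} = \mathrm{Get}\beta(P_{y|x}, p_y, \Omega)$ is minimal with tolerance $\varepsilon$, where $\Omega = \{i_{\mathrm{left}}, i_{\mathrm{left}} + 1, \ldots, i_{\mathrm{right}}\}$.

7: end for

8: $j^* \leftarrow \arg\min_j \{\tilde{\beta}_0^{(j)}\}$, $j = 1, 2, \ldots, C$.

9: $\tilde{\beta}_0 \leftarrow \tilde{\beta}_0^{(j^*)}$.

10: $P_{y|x}^{(\tilde{\beta}_0)} \leftarrow$ the rows of $P_{y|x}^{(\mathrm{sort}\, j^*)}$ indexed by $\Omega^{(j^*)}$.

11: return $\tilde{\beta}_0$, $P_{y|x}^{(\tilde{\beta}_0)}$

subroutine $\mathrm{Get}\beta(P_{y|x}, p_y, \Omega)$:

s1: $N \leftarrow$ number of rows of $P_{y|x}$.

s2: $C \leftarrow$ number of columns of $P_{y|x}$.

s3: $n \leftarrow$ number of elements of $\Omega$.

s4: $(p_{y|\Omega})_j \leftarrow \frac{1}{n} \sum_{i \in \Omega} (P_{y|x})_{ij}$, $j = 1, 2, \ldots, C$.

s5: $\tilde{\beta}_0 \leftarrow \dfrac{\frac{N}{n} - 1}{\sum_j \left[\frac{(p_{y|\Omega})_j^2}{p_{yj}}\right] - 1}$

s6: return $\tilde{\beta}_0$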
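The estimation procedure can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the implementation used for the experiments: the matrix `P` of predicted class probabilities is taken as given (step 1, training the model, is omitted), the search considers only prefixes of the sorted rows (that is, $i_{\mathrm{left}}$ is fixed at the first row) and scans them exhaustively instead of using a binary search with a tolerance, and the names `get_beta` and `estimate_beta0` are hypothetical.

```python
import math

def get_beta(P, p_y, omega):
    """Empirical beta_0 for the example subset omega (list of row indices)."""
    N, C, n = len(P), len(P[0]), len(omega)
    p_y_omega = [sum(P[i][j] for i in omega) / n for j in range(C)]
    denominator = sum(p_y_omega[j] ** 2 / p_y[j] for j in range(C)) - 1.0
    if denominator <= 1e-12:      # subset carries no label information
        return math.inf
    return (N / n - 1.0) / denominator

def estimate_beta0(P):
    """Return (estimated upper bound on beta_0, indices of the subset found)."""
    N, C = len(P), len(P[0])
    p_y = [sum(P[i][j] for i in range(N)) / N for j in range(C)]
    best_beta, best_omega = math.inf, []
    for j in range(C):
        # Sort examples by decreasing predicted probability of pivot class j.
        order = sorted(range(N), key=lambda i: -P[i][j])
        for n in range(1, N):     # simplification: prefixes only, full scan
            omega = order[:n]
            beta = get_beta(P, p_y, omega)
            if beta < best_beta:
                best_beta, best_omega = beta, omega
    return best_beta, best_omega

# Assumed toy predictions: two balanced classes, each 80% confident.
P = [[0.8, 0.2]] * 50 + [[0.2, 0.8]] * 50
beta_tilde, omega = estimate_beta0(P)
print(beta_tilde, len(omega))
```

On this toy input the subset found is one whole class and the estimate is close to $1/0.36 \approx 2.78$. The exhaustive scan costs on the order of $C \cdot N^2$ operations, so a practical version would maintain running sums and use the discrete search described above.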

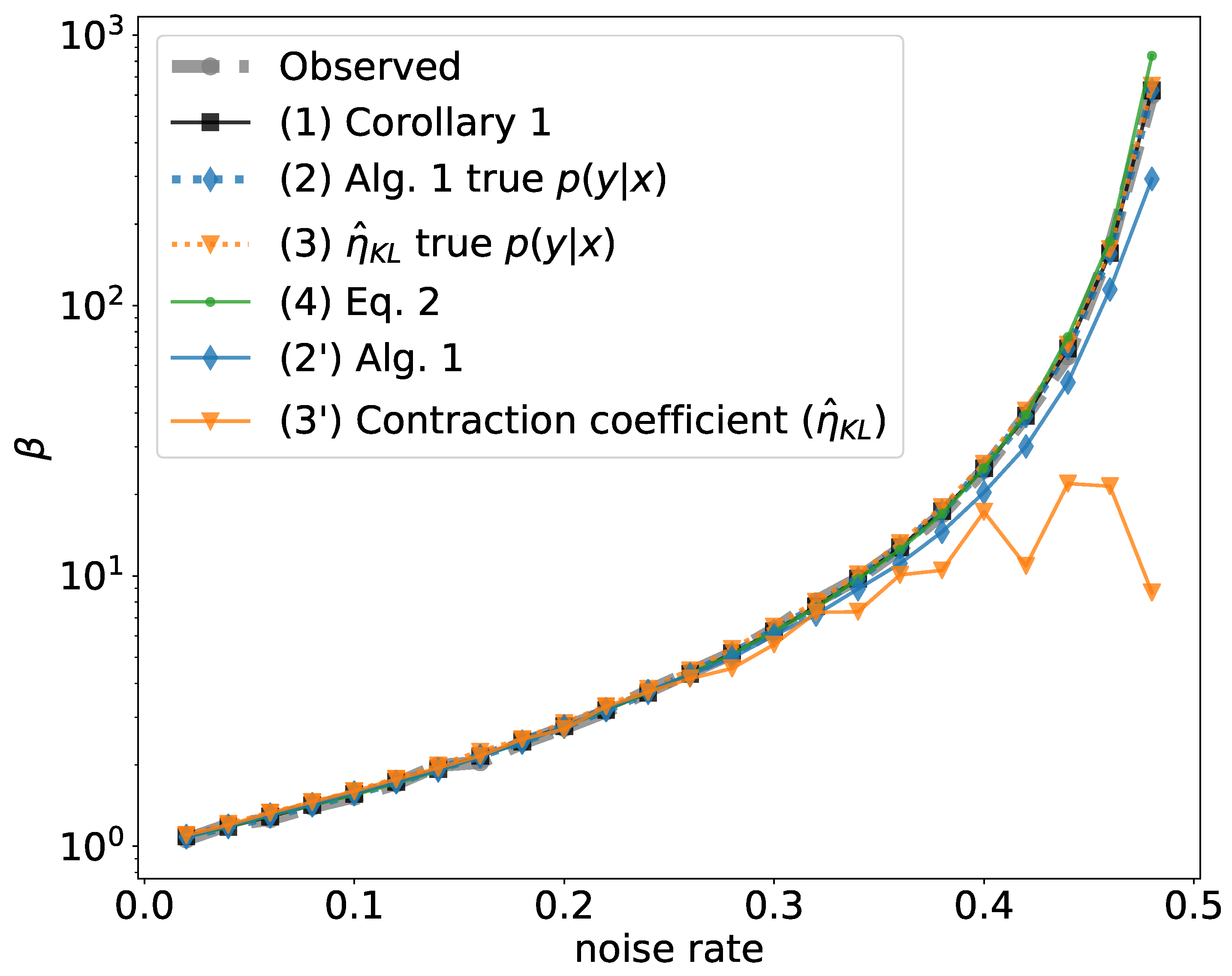

6.2. Special Cases for Estimating $\beta_0$

Theorem 4 may still be challenging to estimate, due to the difficulty of making accurate estimates of $p(\Omega_x)$ and searching over $\Omega_x \subset \mathcal{X}$. However, if the learning problem is more structured, we may be able to obtain a simpler formula for the sufficient condition.

6.2.1. Class-Conditional Label Noise

Classification with noisy labels is a common practical scenario. An important noise model is that the labels are randomly flipped with some hidden class-conditional probabilities and we only observe the corrupted labels. This problem has been studied extensively [22,23,24,25,26]. If IB is applied to this scenario, how large a $\beta$ do we need? The following corollary provides a simple formula.

Corollary 1. Suppose that the true class labels are $y^*$ and the input space belonging to each $y^*$ has no overlap. We only observe the corrupted labels $y$ with class-conditional noise $p(y|x, y^*) = p(y|y^*)$, and $Y$ is not independent of $X$. We have that a sufficient condition for $\mathrm{IB}_\beta$-Learnability is:

$$\beta > \inf_{y^*} \frac{\dfrac{1}{p(y^*)} - 1}{\sum_y \dfrac{p(y|y^*)^2}{p(y)} - 1} \qquad (8)$$

We see that under class-conditional noise, the sufficient condition reduces to a discrete formula which only depends on the noise rates $p(y|y^*)$ and the true class probability $p(y^*)$, which can be accurately estimated via, for example, Northcutt et al. [26]. Additionally, if we know that the noise is class-conditional but the observed $\beta_0$ is greater than the R.H.S. of Equation (8), we can deduce that there is overlap between the true classes. The proof of Corollary 1 is provided in Appendix A.10.
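The class-conditional formula is straightforward to evaluate from a noise matrix and the true class prior. In the sketch below, the function name, the argument layout (`noise[k][j]` holding $p(y = j | y^* = k)$) and the example noise matrices are assumptions made for illustration.

```python
import math

def beta0_class_conditional(p_true, noise):
    """Right-hand side of the class-conditional noise condition.

    p_true[k] = p(y* = k); noise[k][j] = p(y = j | y* = k).
    """
    K, C = len(p_true), len(noise[0])
    # Observed label marginal: p(y) = sum_k p(y*) p(y | y*)
    p_obs = [sum(p_true[k] * noise[k][j] for k in range(K)) for j in range(C)]
    best = math.inf
    for k in range(K):
        denominator = sum(noise[k][j] ** 2 / p_obs[j]
                          for j in range(C) if p_obs[j] > 0) - 1.0
        if denominator > 1e-15:   # skip classes whose labels look like p(y)
            best = min(best, (1.0 / p_true[k] - 1.0) / denominator)
    return best

symmetric = beta0_class_conditional([0.5, 0.5], [[0.8, 0.2], [0.2, 0.8]])
asymmetric = beta0_class_conditional([0.5, 0.5], [[0.9, 0.1], [0.3, 0.7]])
noise_free = beta0_class_conditional([0.3, 0.7], [[1.0, 0.0], [0.0, 1.0]])
print(symmetric, asymmetric, noise_free)
```

For two balanced classes with symmetric noise rate $\rho = 0.2$ the formula evaluates to $1/(1 - 2\rho)^2 = 1/0.36 \approx 2.78$. With no label noise it evaluates to 1, which agrees with the deterministic case treated in Corollary 2.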

6.2.2. Deterministic Relationships

Theorem 4 also reveals that $\beta_0$ relates closely to whether $Y$ is a deterministic function of $X$, as shown by Corollary 2:

Corollary 2. Assume that $Y$ contains at least one value $y$ such that its probability $p(y) > 0$. If $Y$ is a deterministic function of $X$ and not independent of $X$, then a sufficient condition for $\mathrm{IB}_\beta$-Learnability is $\beta > 1$.

The assumption in Corollary 2 is satisfied by classification and certain regression problems. (The following scenario does not satisfy this assumption: for certain regression problems where $Y$ is a continuous random variable and the probability density function $p_Y(y)$ is bounded, then for any $y$, the probability $P(Y = y)$ has measure 0.) This corollary generalizes the result in Reference [9], which only proves it for classification problems. Combined with the necessary condition $\beta > 1$ for any dataset $(X, Y)$ to be $\mathrm{IB}_\beta$-learnable (Section 3), we have that under the assumption, if $Y$ is a deterministic function of $X$, then a necessary and sufficient condition for $\mathrm{IB}_\beta$-learnability is $\beta > 1$; that is, its $\beta_0$ is 1. The proof of Corollary 2 is provided in Appendix A.10.

Therefore, in practice, if we find that $\beta_0 > 1$, we may infer that $Y$ is not a deterministic function of $X$. For a classification task, we may infer that either some classes have overlap or the labels are noisy. However, recall that finite models may add effective class overlap if they have insufficient capacity for the learning task, as mentioned in Section 4. This may translate into a higher observed $\beta_0$, even when learning deterministic functions.