Conditional Gaussian Systems for Multiscale Nonlinear Stochastic Systems: Prediction, State Estimation and Uncertainty Quantification

Abstract

Contents

1. Introduction

2. Overview: Data vs. Model, Data-Driven Modeling Framework, and Efficient Data Assimilation and Prediction Strategies with Solvable Conditional Statistics

3. A Summary of the General Mathematical Structure of Nonlinear Conditional Gaussian Systems

3.1. Conditional Gaussian Nonlinear Dynamical Systems

3.2. Kalman–Bucy Filter: The Simplest and Special Data Assimilation Example within Conditional Gaussian Framework

3.3. Physics-Constrained Nonlinear Models with Conditional Gaussian Statistics

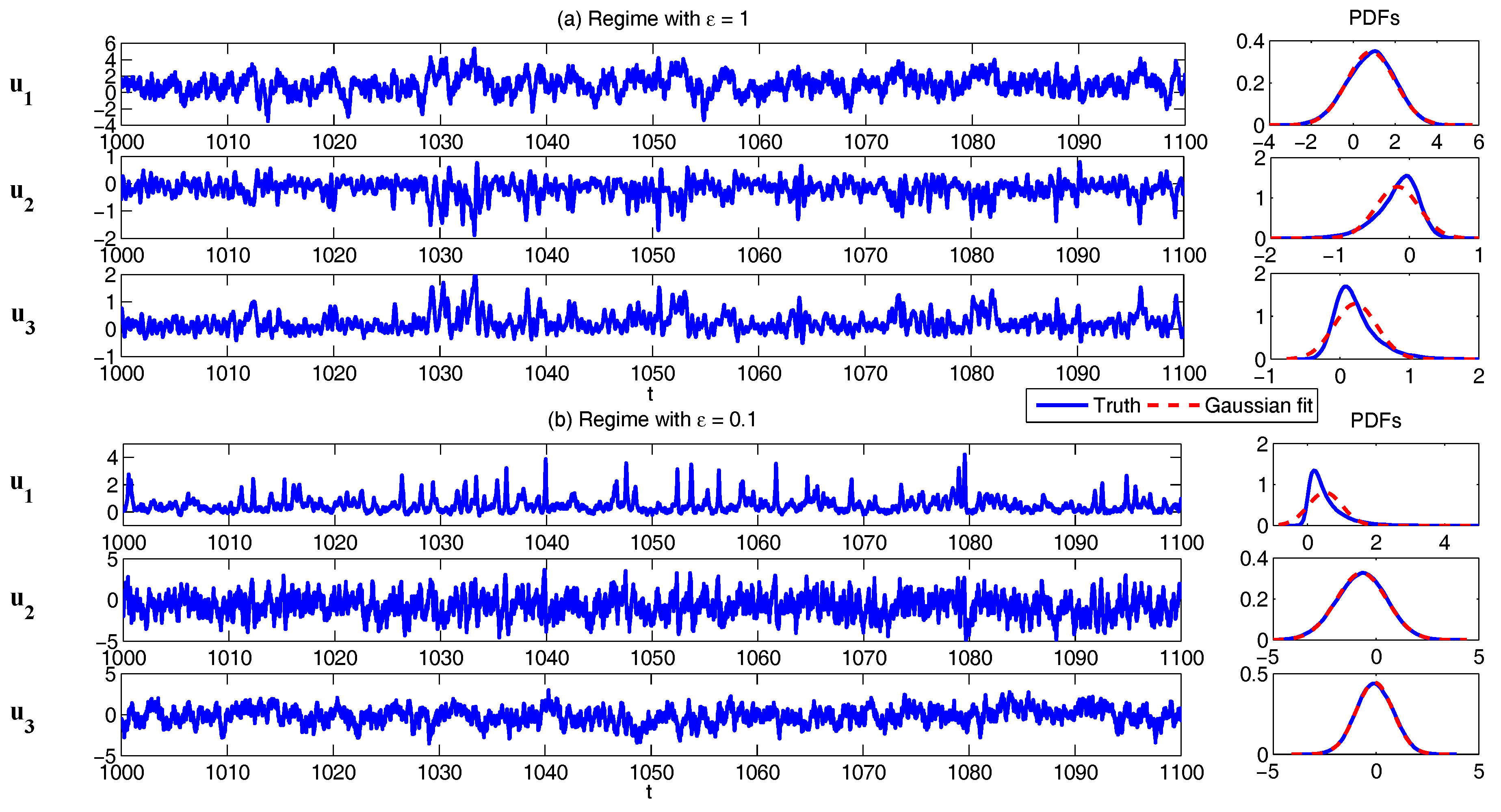

3.4. Multiscale Conditional Gaussian with MTV Stochastic Modeling Strategy

- The equations of motion for the unresolved fast modes are modified by representing the nonlinear self-interaction terms between unresolved modes by stochastic terms.

- The equations of motion for the unresolved fast modes are eliminated using the standard projection technique for stochastic differential equations.

4. A Gallery of Examples of Conditional Gaussian Systems

4.1. Physics-Constrained Nonlinear Low-Order Stochastic Models

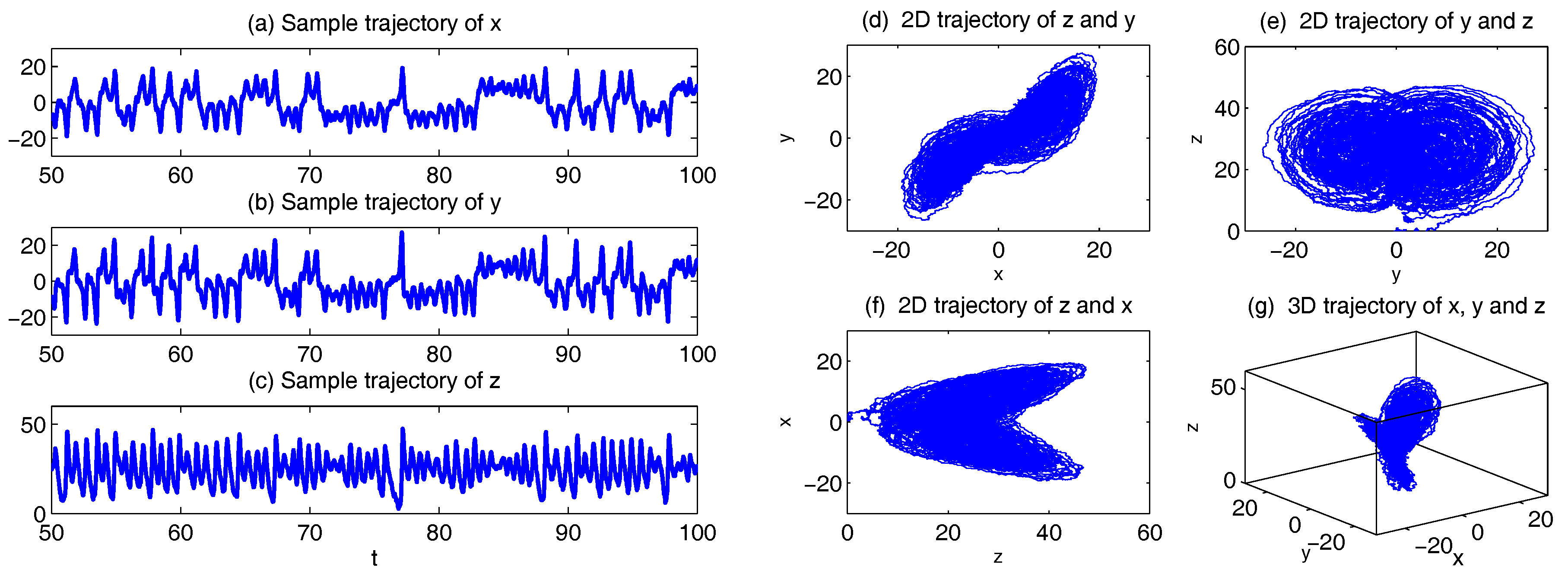

4.1.1. The Noisy Versions of Lorenz Models

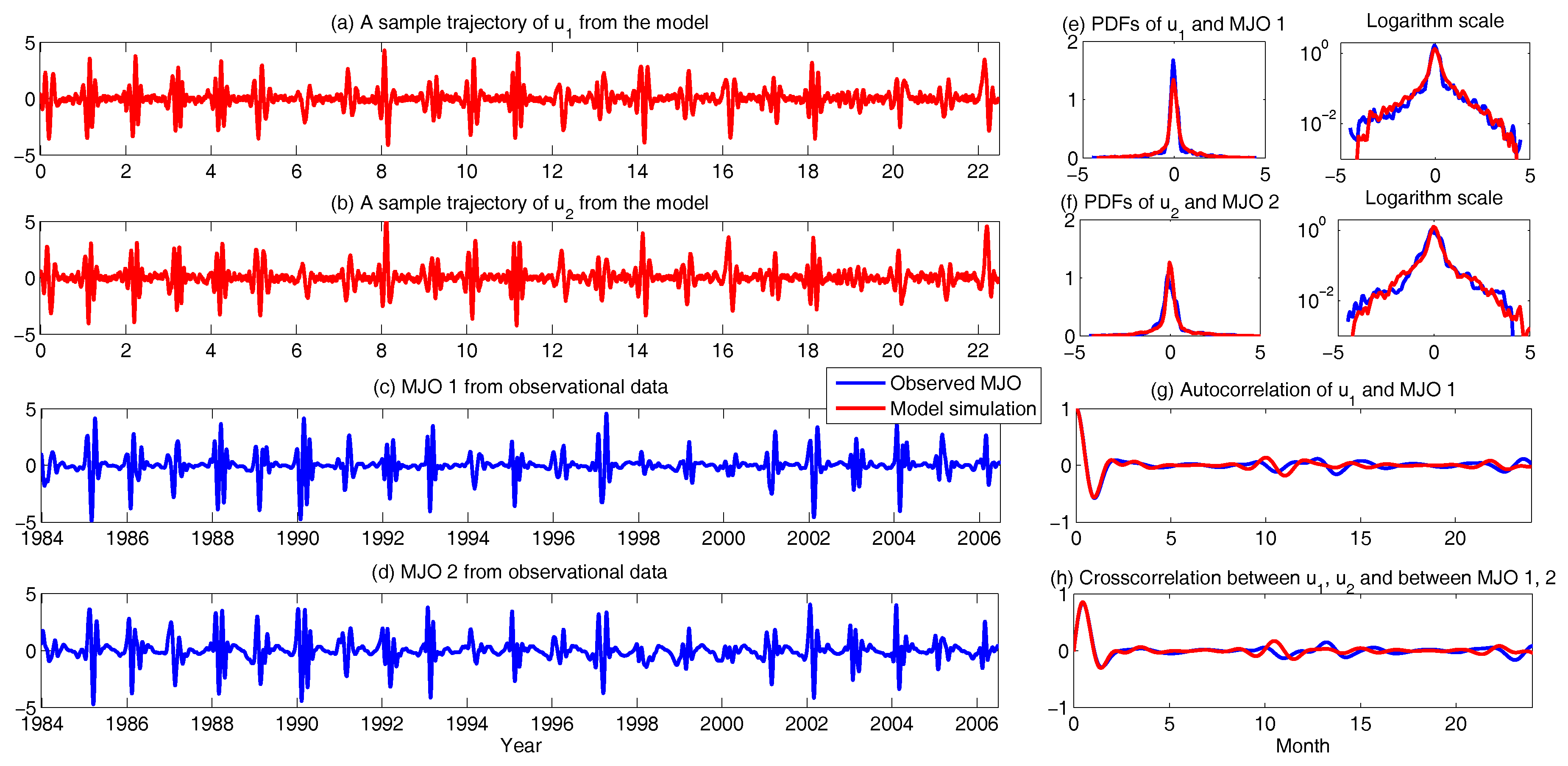

4.1.2. Nonlinear Stochastic Models for Predicting Intermittent MJO and Monsoon Indices

4.1.3. A Simple Stochastic Model with Key Features of Atmospheric Low-Frequency Variability

4.1.4. A Nonlinear Triad Model with Multiscale Features

4.1.5. Conceptual Models for Turbulent Dynamical Systems

4.1.6. A Conceptual Model of the Coupled Atmosphere and Ocean

4.1.7. A Low-Order Model of Charney–DeVore Flows

4.1.8. A Paradigm Model for Topographic Mean Flow Interaction

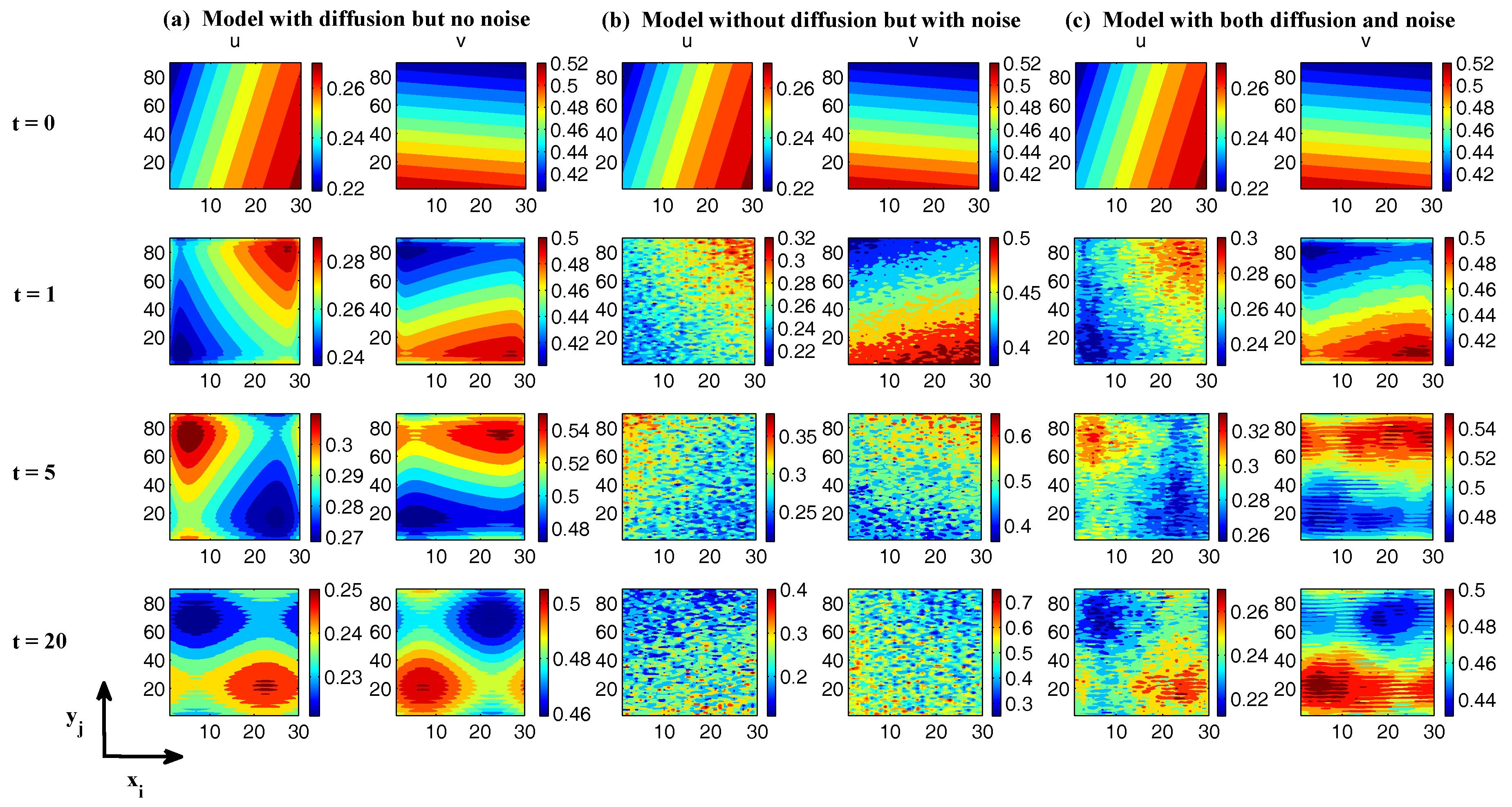

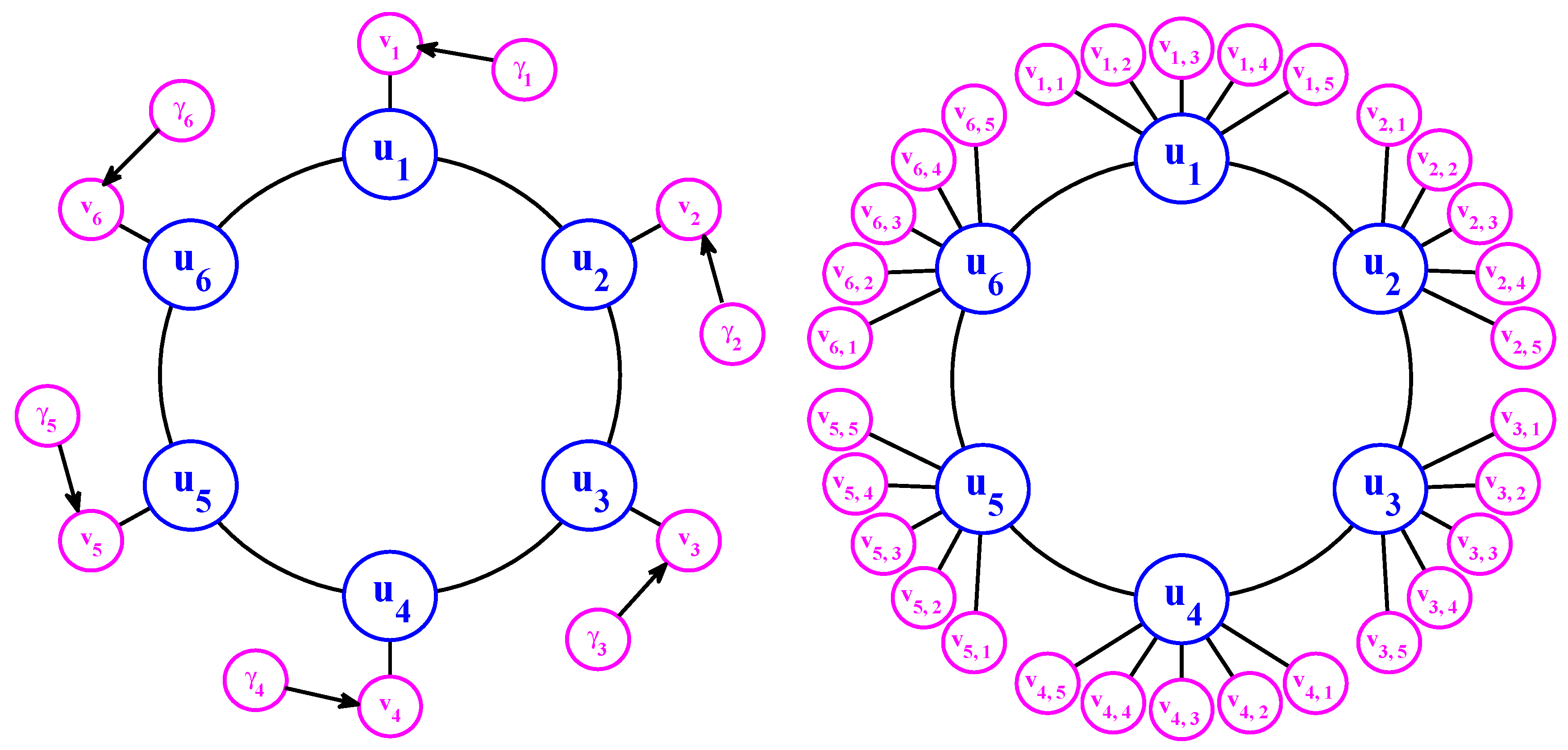

4.2. Stochastically Coupled Reaction–Diffusion Models in Neuroscience and Ecology

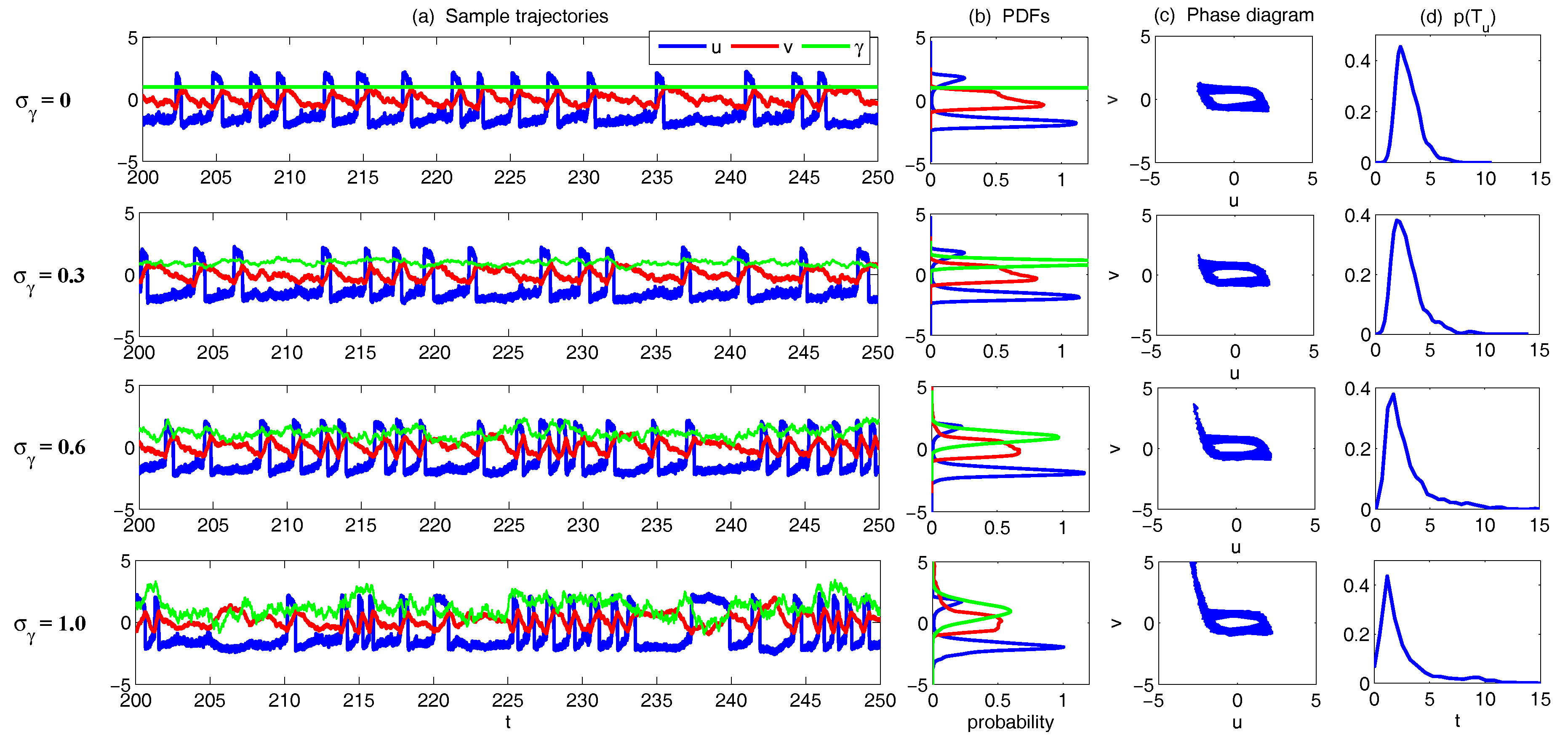

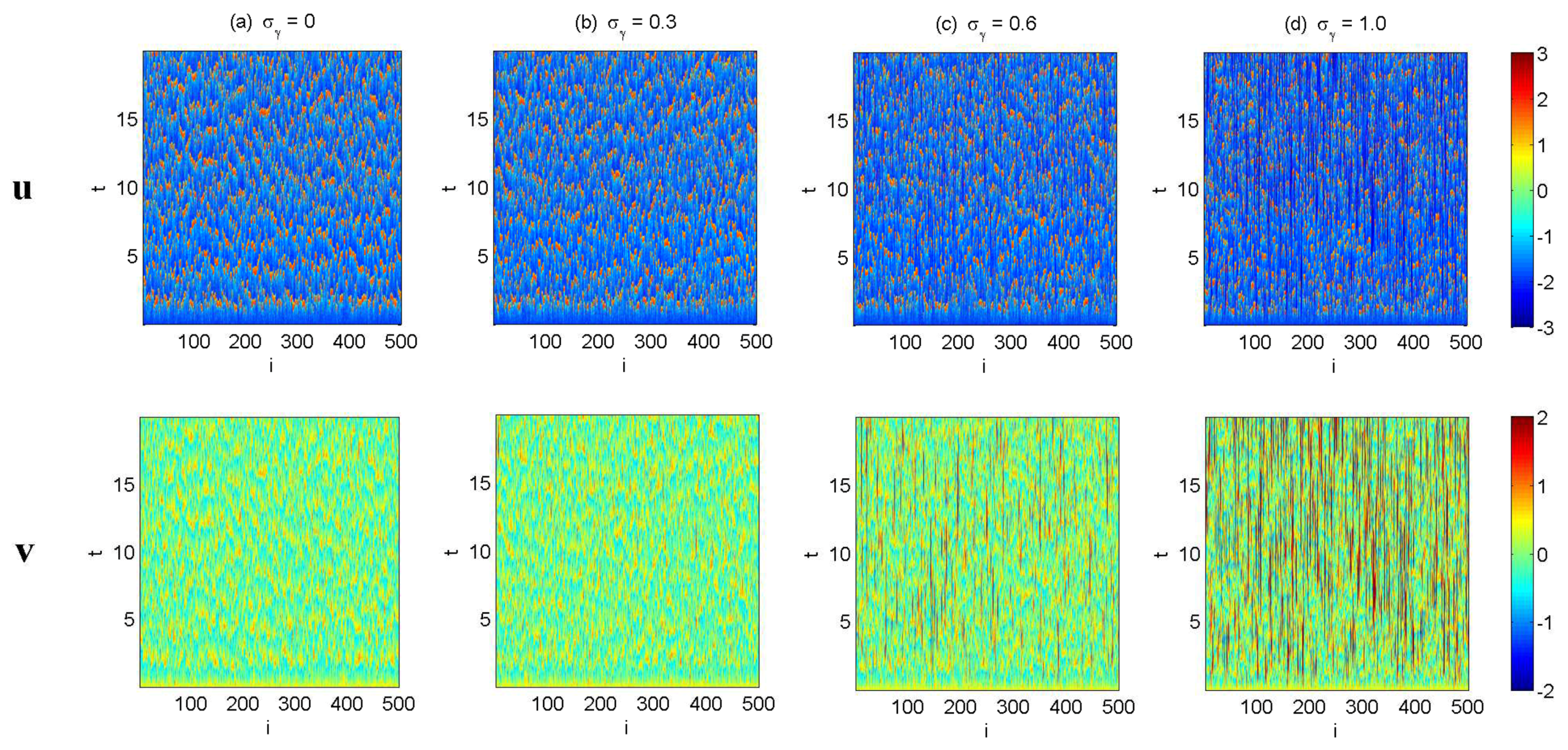

4.2.1. Stochastically Coupled FitzHugh–Nagumo (FHN) Models

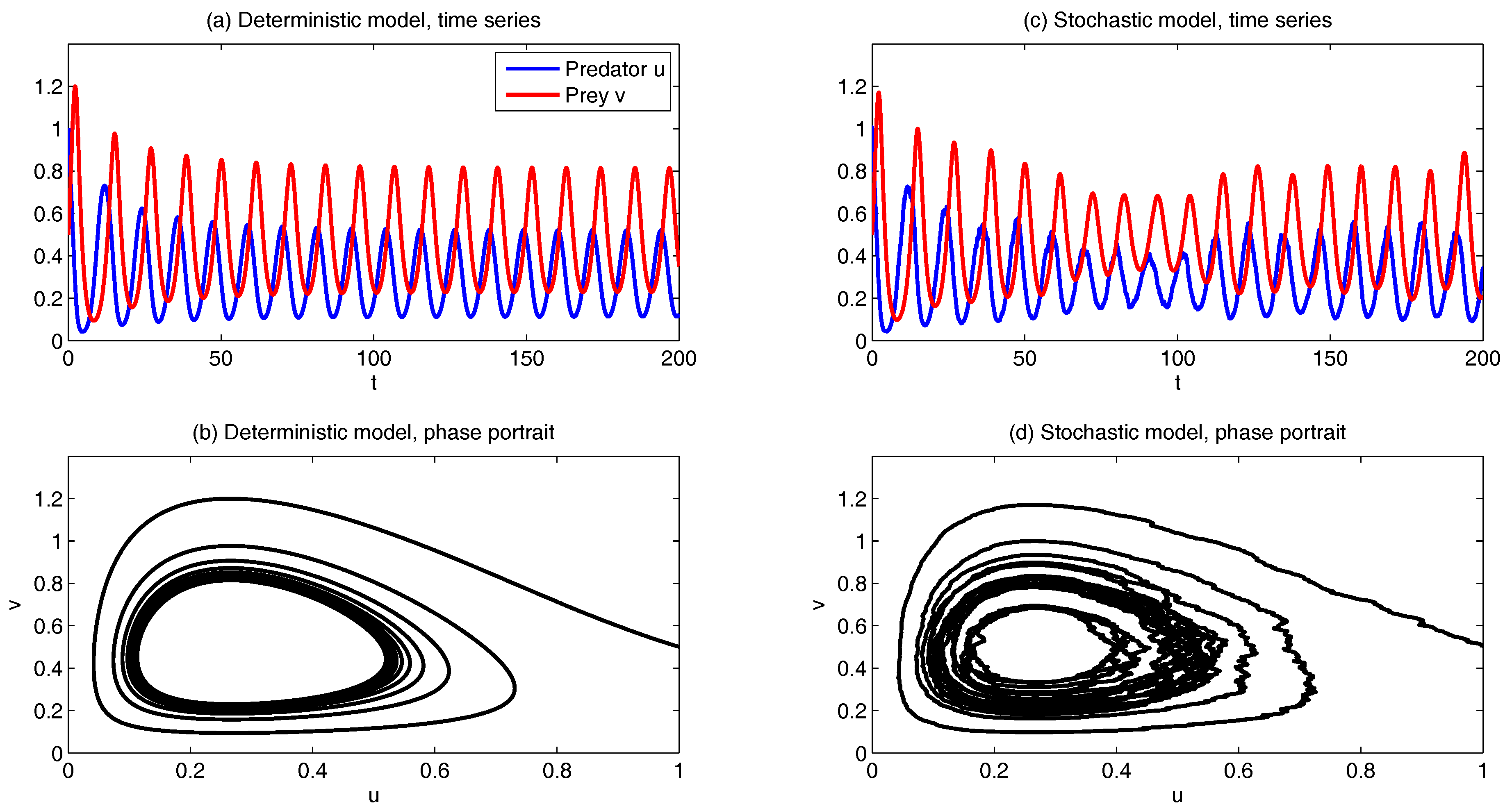

4.2.2. The Predator–Prey Models

4.2.3. A Stochastically Coupled SIR Epidemic Model

4.2.4. A Nutrient-Limited Model for Avascular Cancer Growth

4.3. Large-Scale Dynamical Models in Turbulence, Fluids and Geophysical Flows

4.3.1. The MJO Stochastic Skeleton Model

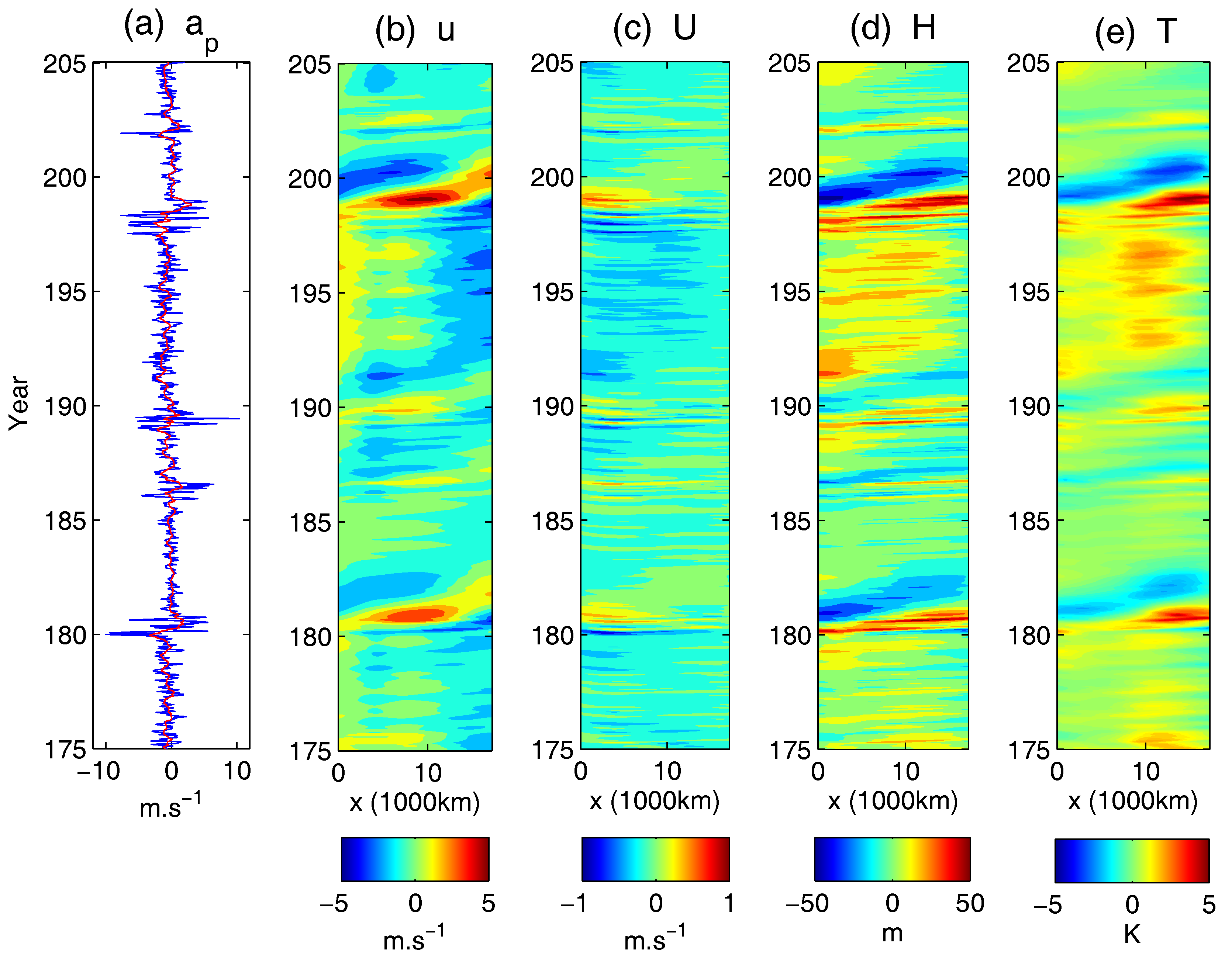

4.3.2. A Coupled El Niño Model Capturing Observed El Niño Diversity

- Atmosphere

- Ocean

- SST

- Coupling

4.3.3. The Boussinesq Equation

4.3.4. Darcy–Brinkman–Oberbeck–Boussinesq System—Convection Phenomena in Porous Media

4.3.5. The Rotating Shallow Water Equations

- Geostrophically balanced (GB) modes: incompressible.

- Gravity modes: compressible.

4.4. Coupled Observation-Filtering Systems for Filtering Turbulent Ocean Flows Using Lagrangian Tracers

4.5. Other Low-Order Models for Filtering and Prediction

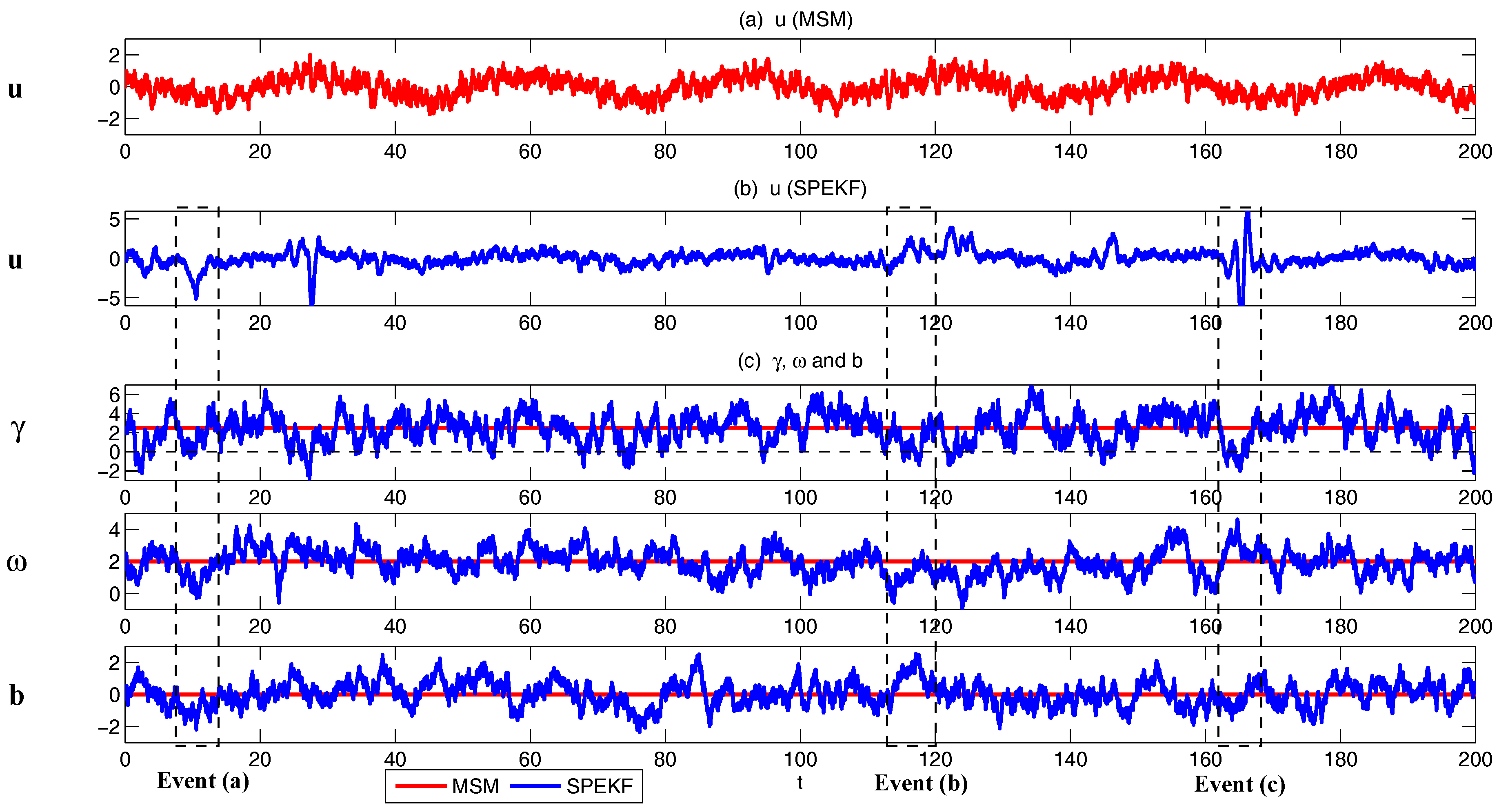

4.5.1. Stochastic Parameterized Extended Kalman Filter Model

4.5.2. An Idealized Surface Wind Model

5. Algorithms Which Beat the Curse of Dimension for Fokker–Planck Equation for Conditional Gaussian Systems: Application to Statistical Prediction

5.1. The Basic Algorithm with a Hybrid Strategy

5.2. Beating the Curse of Dimension with Block Decomposition

5.3. Statistical Symmetry

5.4. Quantifying the Model Error Using Information Theory

5.5. Applications to Statistical Prediction

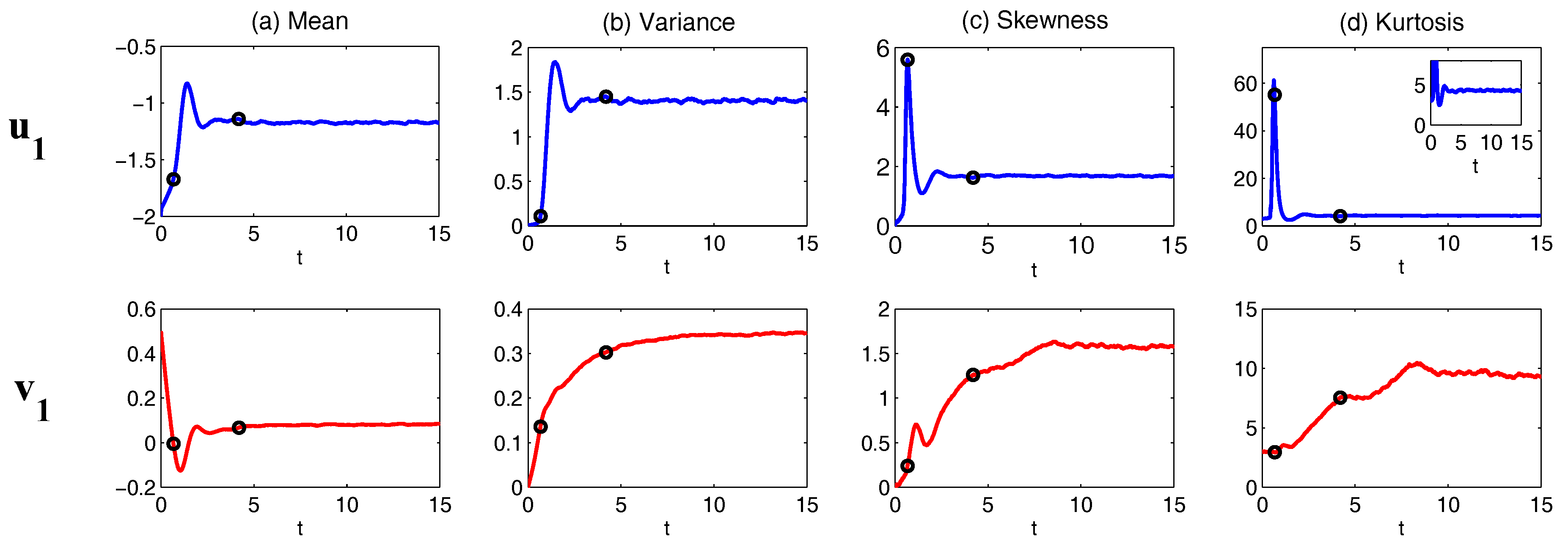

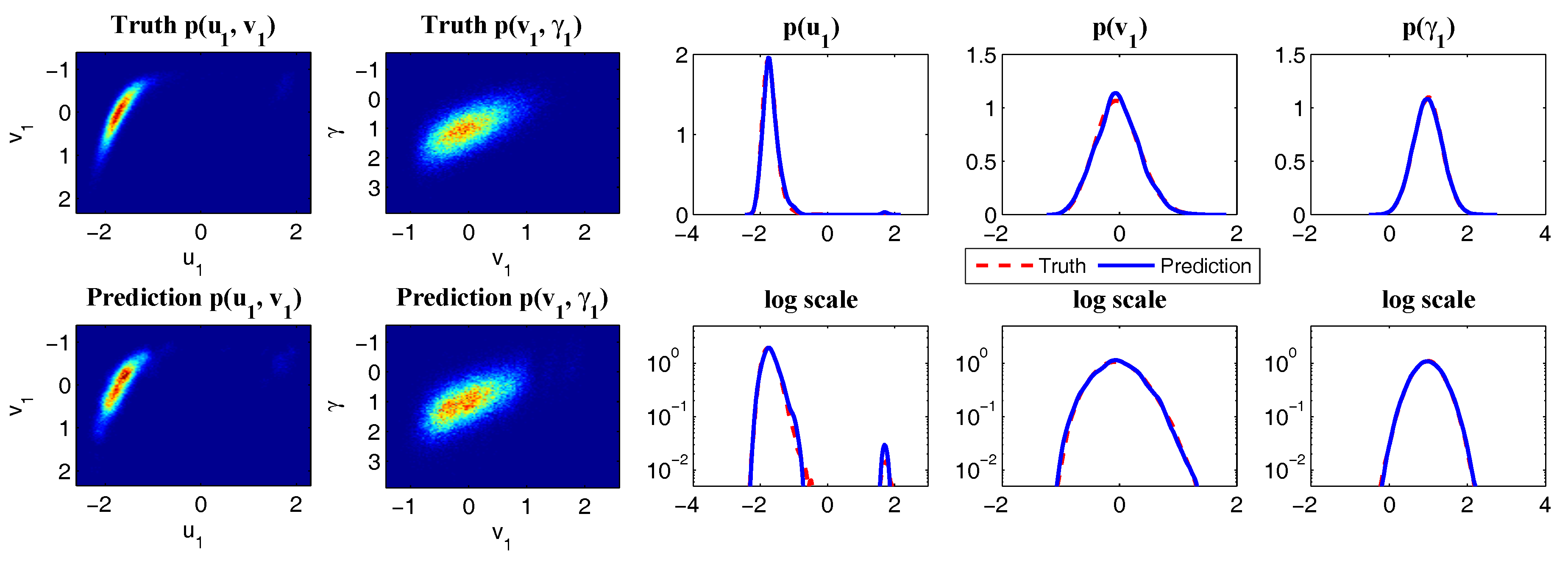

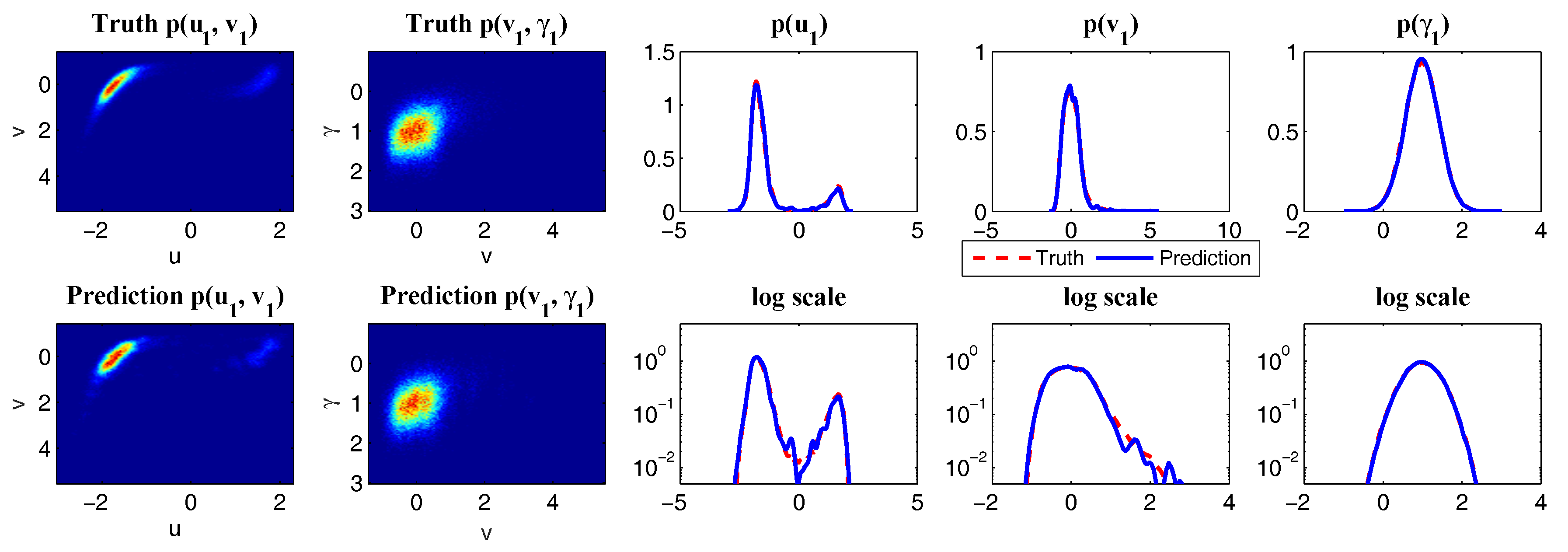

5.5.1. Application to the Stochastically Coupled FHN Model with Multiplicative Noise Using Statistical Symmetry

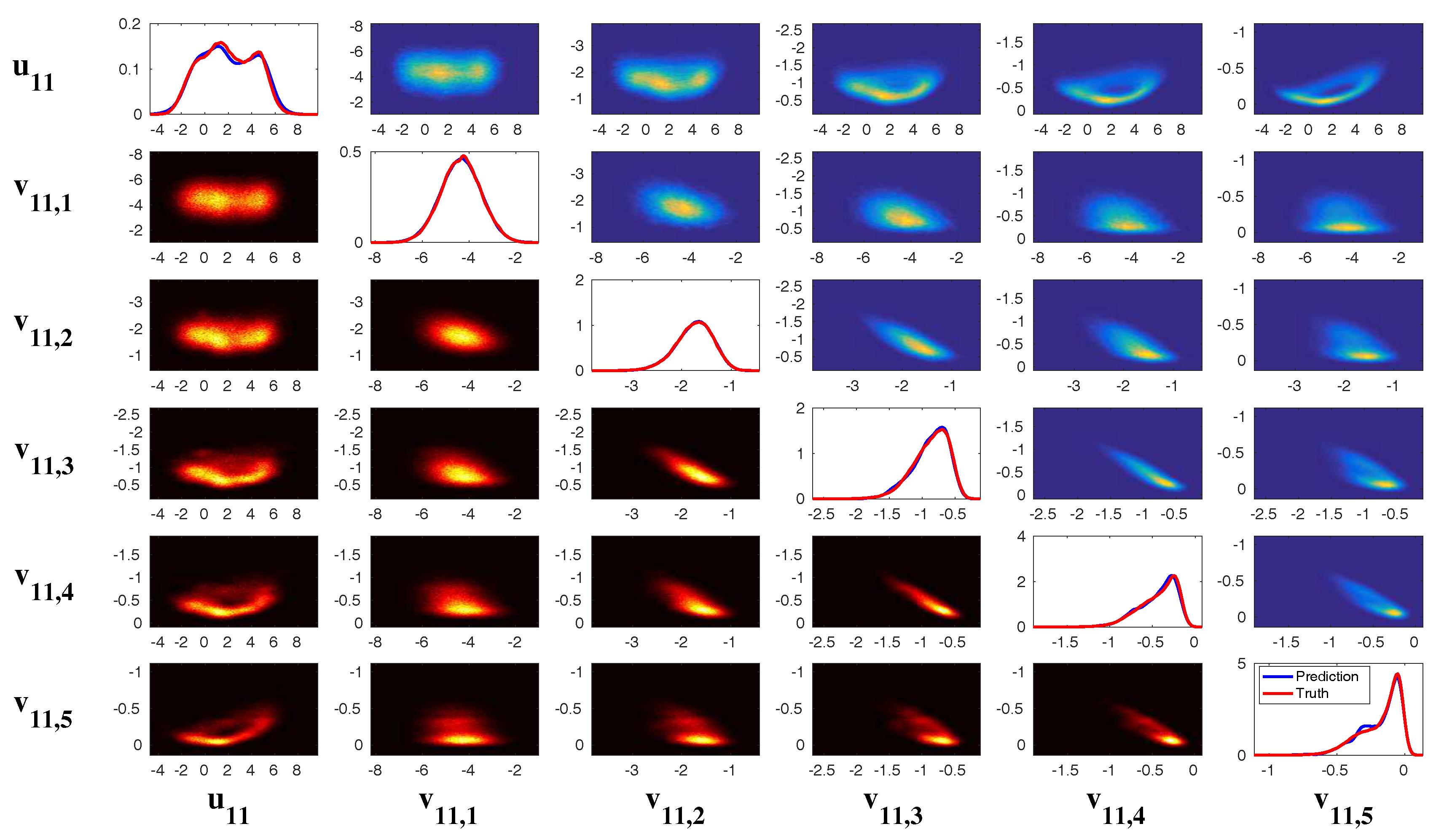

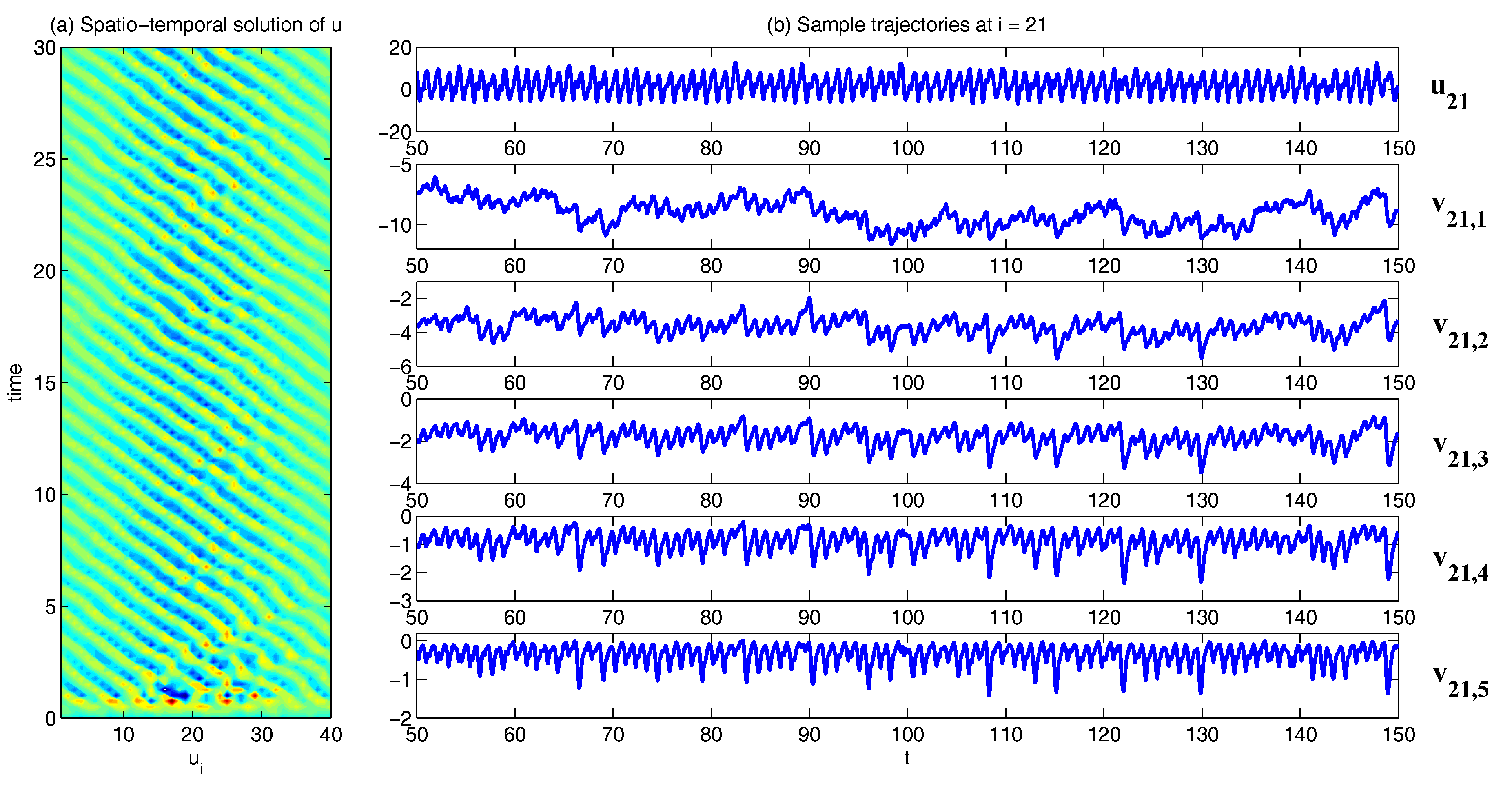

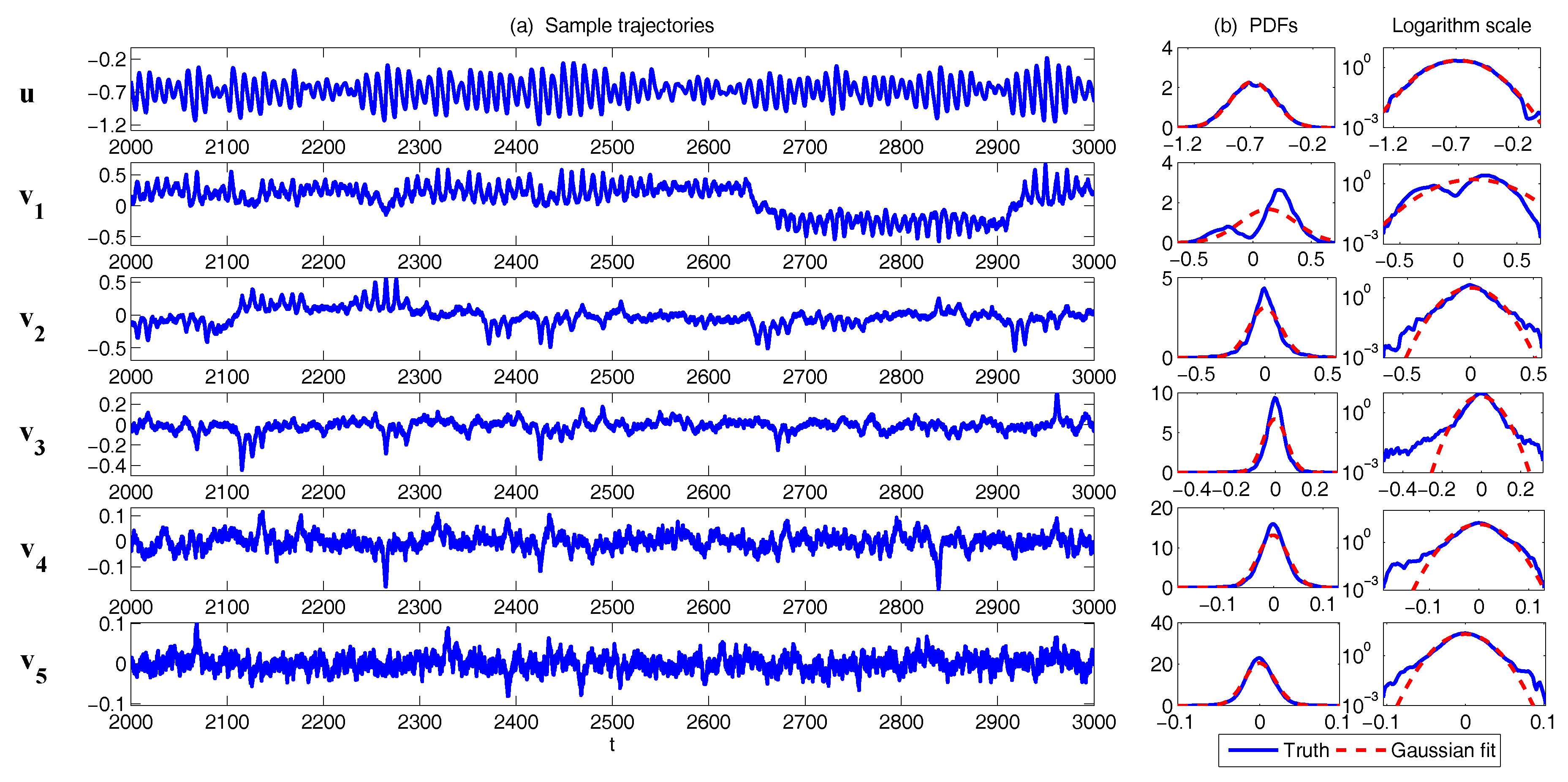

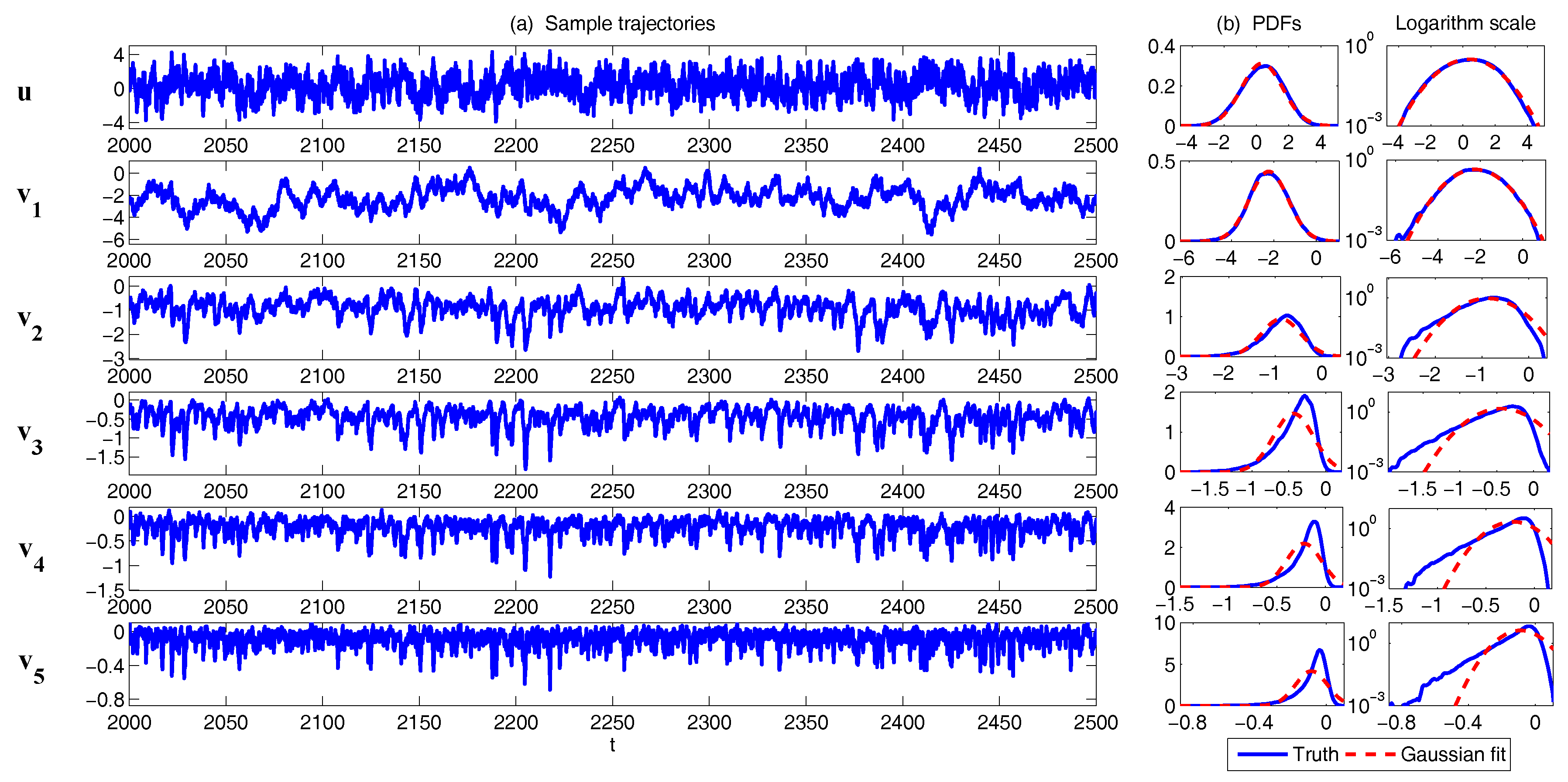

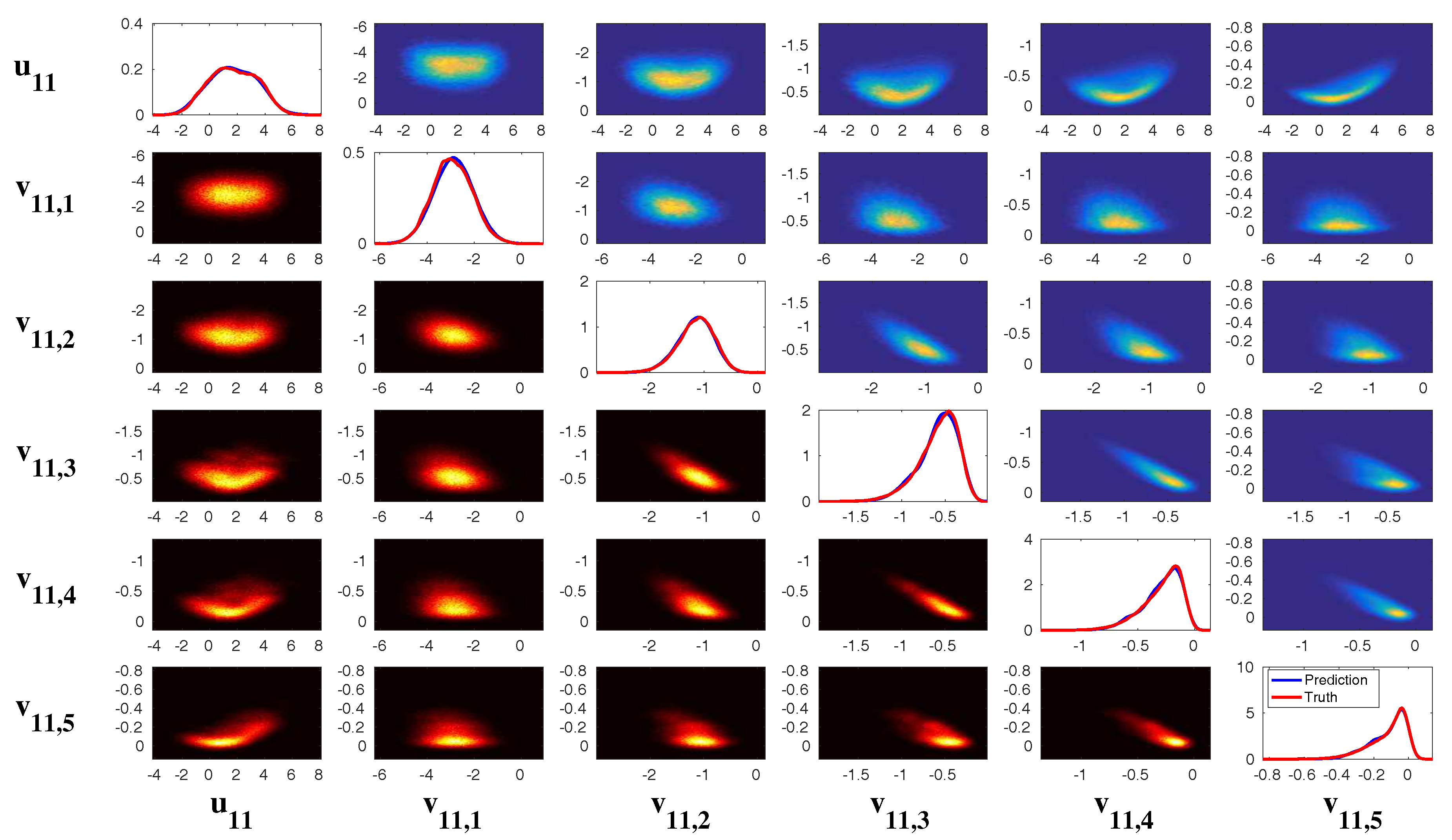

5.5.2. Application to the Two-Layer L-96 Model with Inhomogeneous Spatial Structures

6. Multiscale Data Assimilation, Particle Filters, Conditional Gaussian Systems and Information Theory for Model Errors

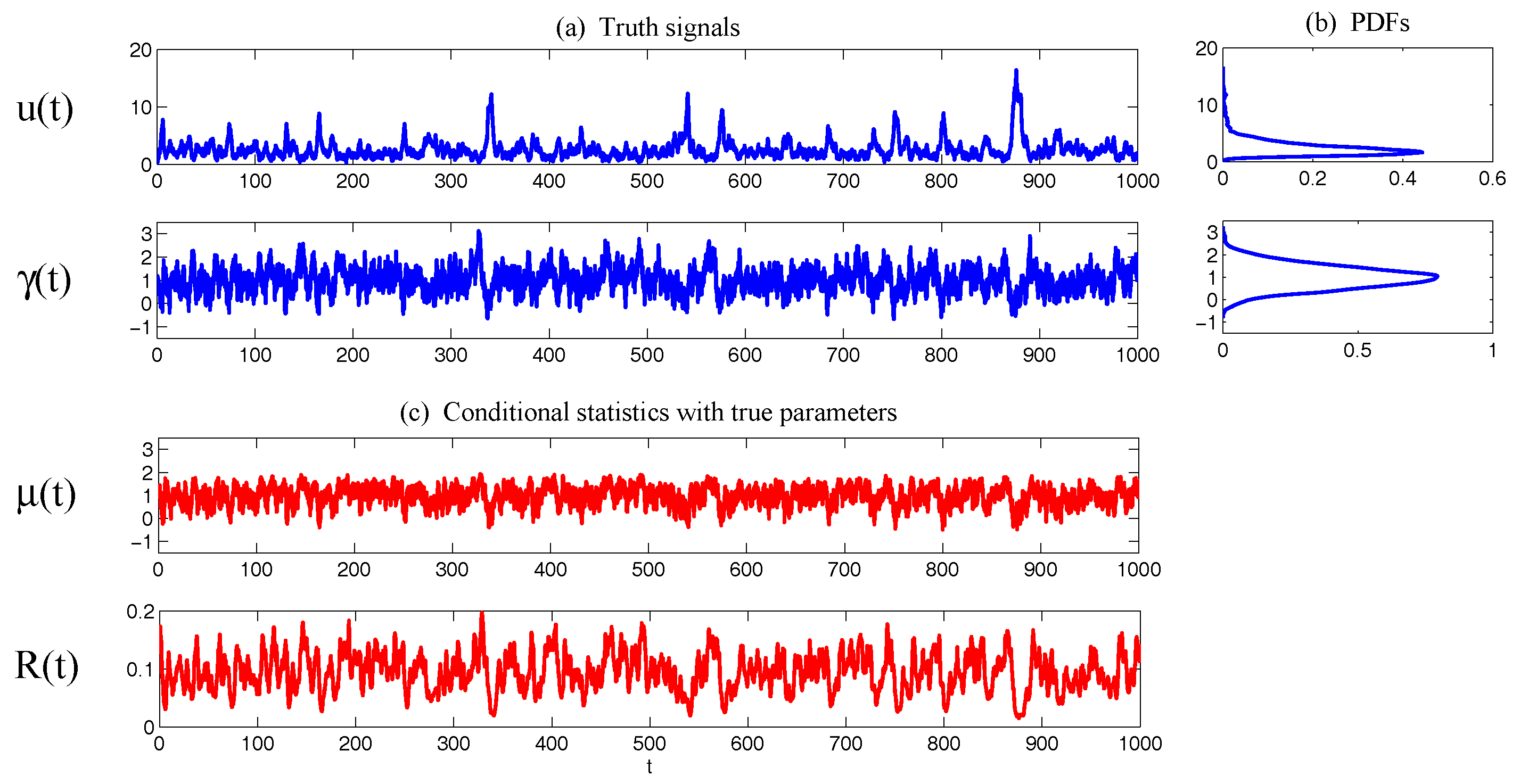

6.1. Parameter Estimation

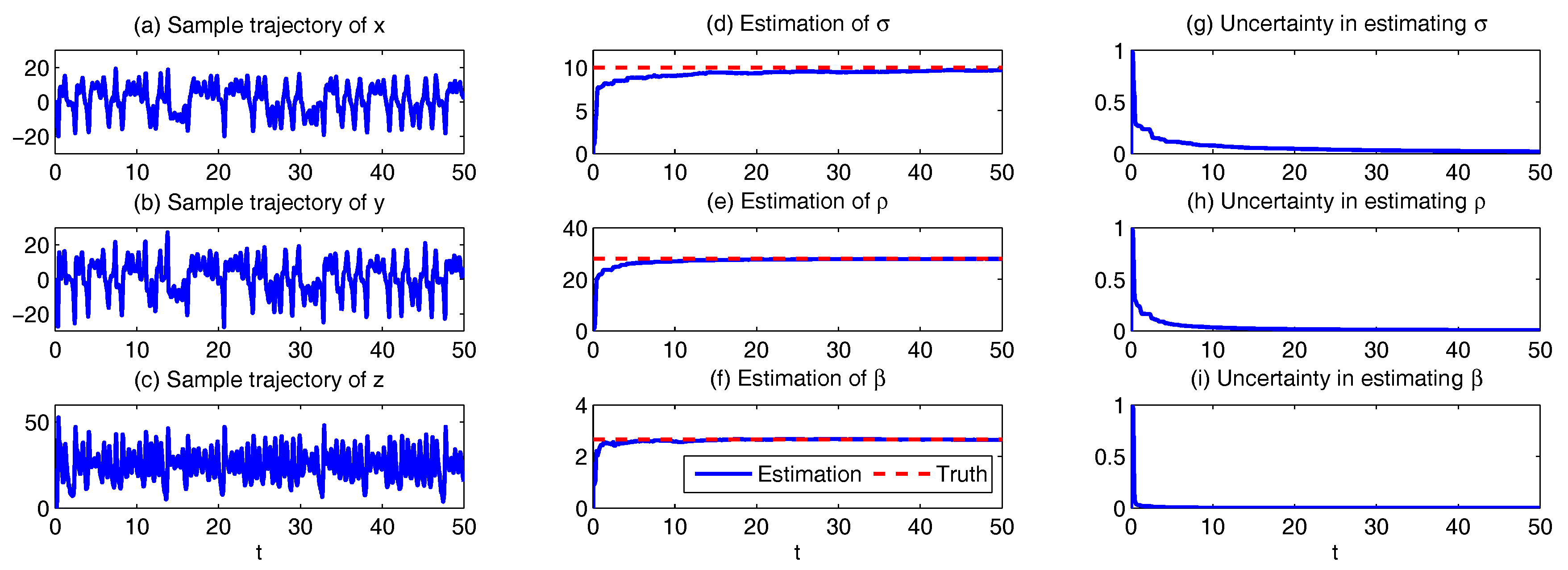

6.1.1. Direct Parameter Estimation Algorithm

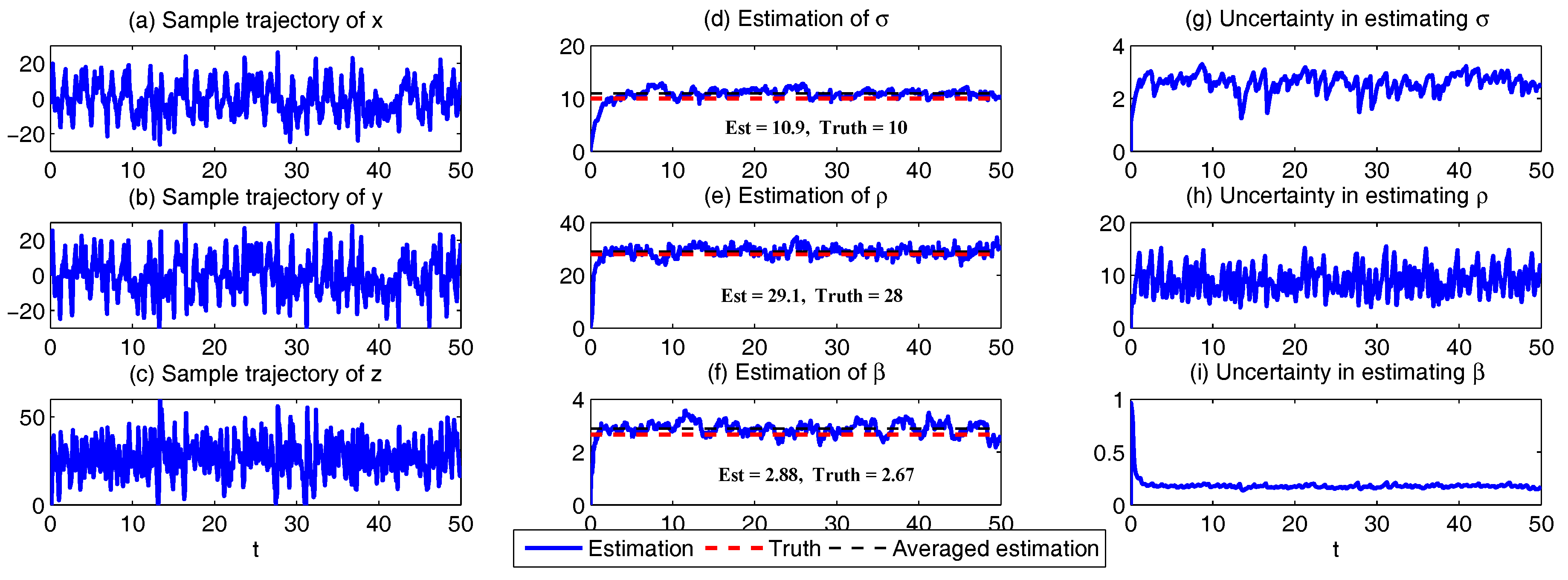

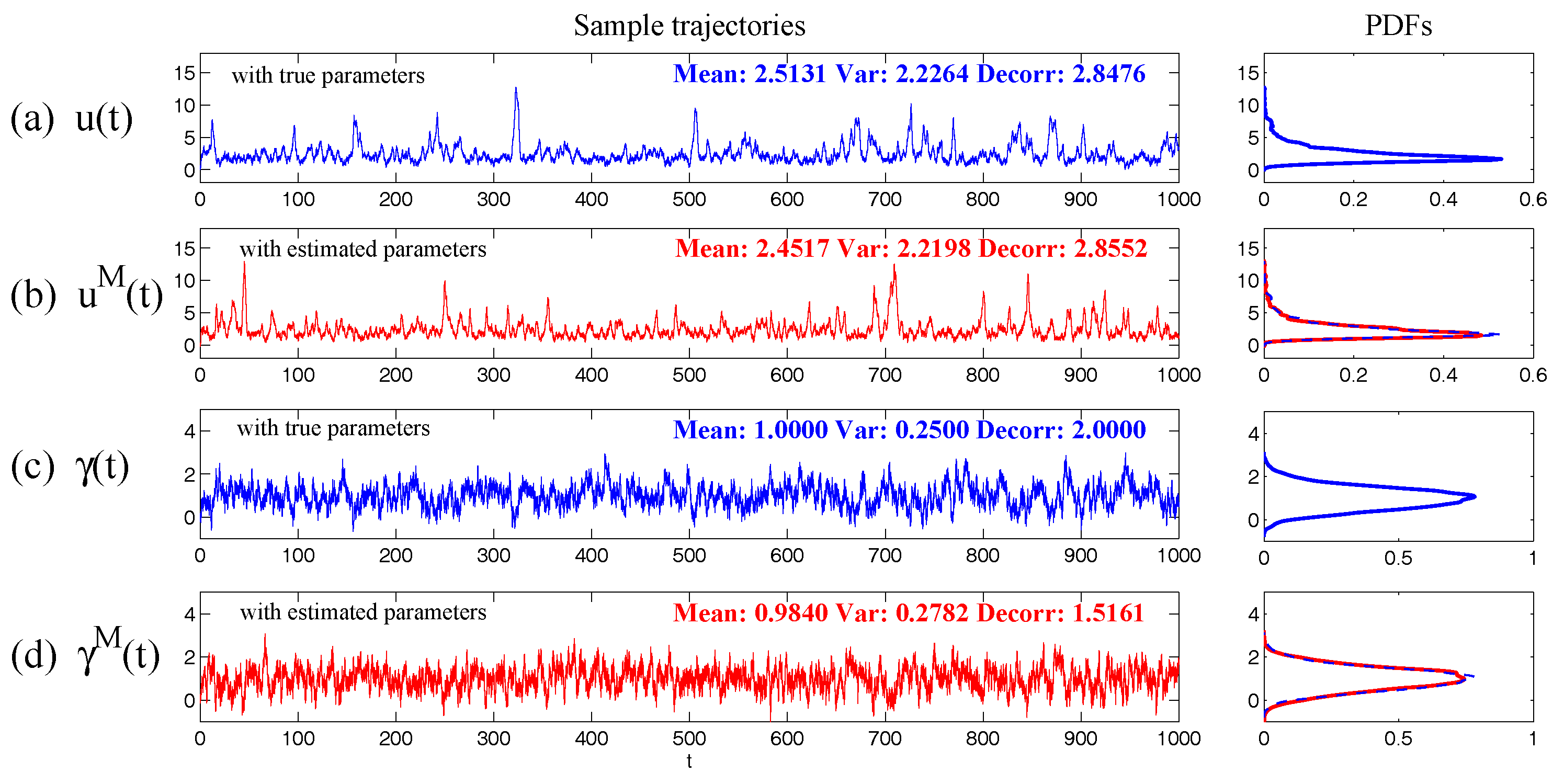

6.1.2. Parameter Estimation Using Stochastic Parameterized Equations

6.1.3. Estimating Parameters in the Unresolved Processes

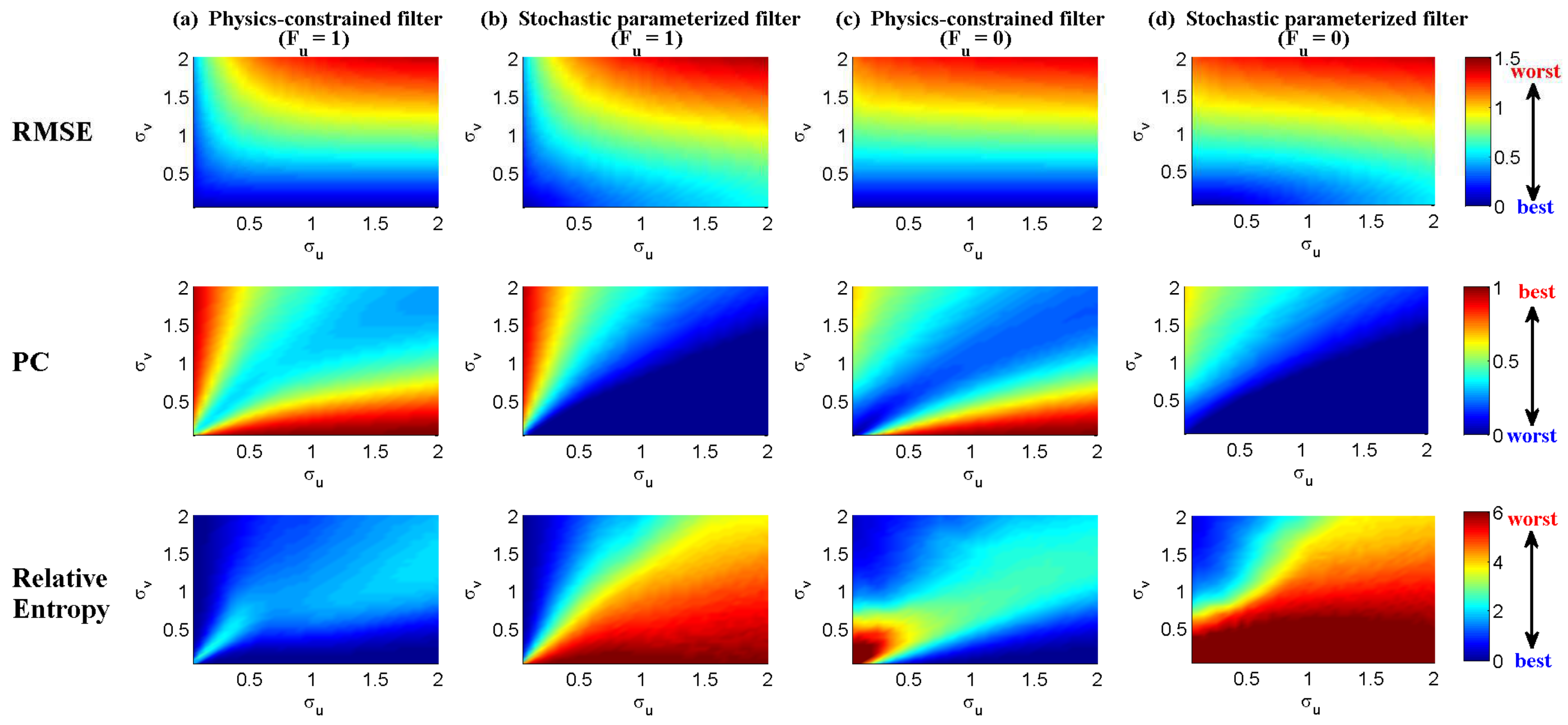

6.2. Data Assimilation with Physics-Constrained Forecast Models and Information Theory for Quantifying Model Errors

6.2.1. An Information Theoretical Framework for Data Assimilation and Prediction

- The root-mean-square error (RMSE)

- The pattern correlation (PC)

- The Shannon entropy residual

- The mutual information

- The relative entropy
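As a rough illustration of the measures listed above, the sketch below computes the two path-wise scores (RMSE and pattern correlation) and the relative entropy in the special case where both distributions are Gaussian. The formulas are the standard textbook ones and are assumed here, since the definitions used in this article are not reproduced in this section. The function names (`rmse`, `pattern_correlation`, `gaussian_relative_entropy`) are hypothetical. The Shannon entropy residual and the mutual information are left out because they depend on the joint distribution of the truth and the estimate.

```python
import numpy as np


def rmse(truth, estimate):
    """Root-mean-square error between a true and an estimated time series."""
    truth = np.asarray(truth, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))


def pattern_correlation(truth, estimate):
    """Pattern correlation: correlation coefficient of the two anomaly series."""
    truth = np.asarray(truth, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    t = truth - truth.mean()
    e = estimate - estimate.mean()
    return float(np.sum(t * e) / np.sqrt(np.sum(t ** 2) * np.sum(e ** 2)))


def gaussian_relative_entropy(mean_p, cov_p, mean_q, cov_q):
    """Relative entropy P(p, q) of a Gaussian reference p and Gaussian model q.

    Returns the two parts of the closed-form expression (an assumption of
    this sketch: both densities are Gaussian):
      signal     = 1/2 (m_p - m_q)^T R_q^{-1} (m_p - m_q)
      dispersion = 1/2 [tr(R_p R_q^{-1}) - N - ln det(R_p R_q^{-1})]
    """
    mean_p = np.atleast_1d(np.asarray(mean_p, dtype=float))
    mean_q = np.atleast_1d(np.asarray(mean_q, dtype=float))
    cov_p = np.atleast_2d(np.asarray(cov_p, dtype=float))
    cov_q = np.atleast_2d(np.asarray(cov_q, dtype=float))
    n = mean_p.size
    diff = mean_p - mean_q
    cov_q_inv = np.linalg.inv(cov_q)
    signal = 0.5 * diff @ cov_q_inv @ diff
    ratio = cov_p @ cov_q_inv
    # slogdet avoids overflow/underflow in the determinant.
    _, logdet = np.linalg.slogdet(ratio)
    dispersion = 0.5 * (np.trace(ratio) - n - logdet)
    return float(signal), float(dispersion)


if __name__ == "__main__":
    t = np.linspace(0.0, 10.0, 200)
    truth = np.sin(t)
    estimate = 0.8 * np.sin(t) + 0.1
    print("RMSE:", rmse(truth, estimate))
    print("PC:", pattern_correlation(truth, estimate))
    print("signal, dispersion:",
          gaussian_relative_entropy([0.0], [[1.0]], [0.5], [[2.0]]))
```

The example makes one point about why both kinds of measure are useful. A rescaled and shifted estimate still has a pattern correlation of one while its RMSE is nonzero, and the signal and dispersion terms separate an error in the mean from an error in the covariance.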

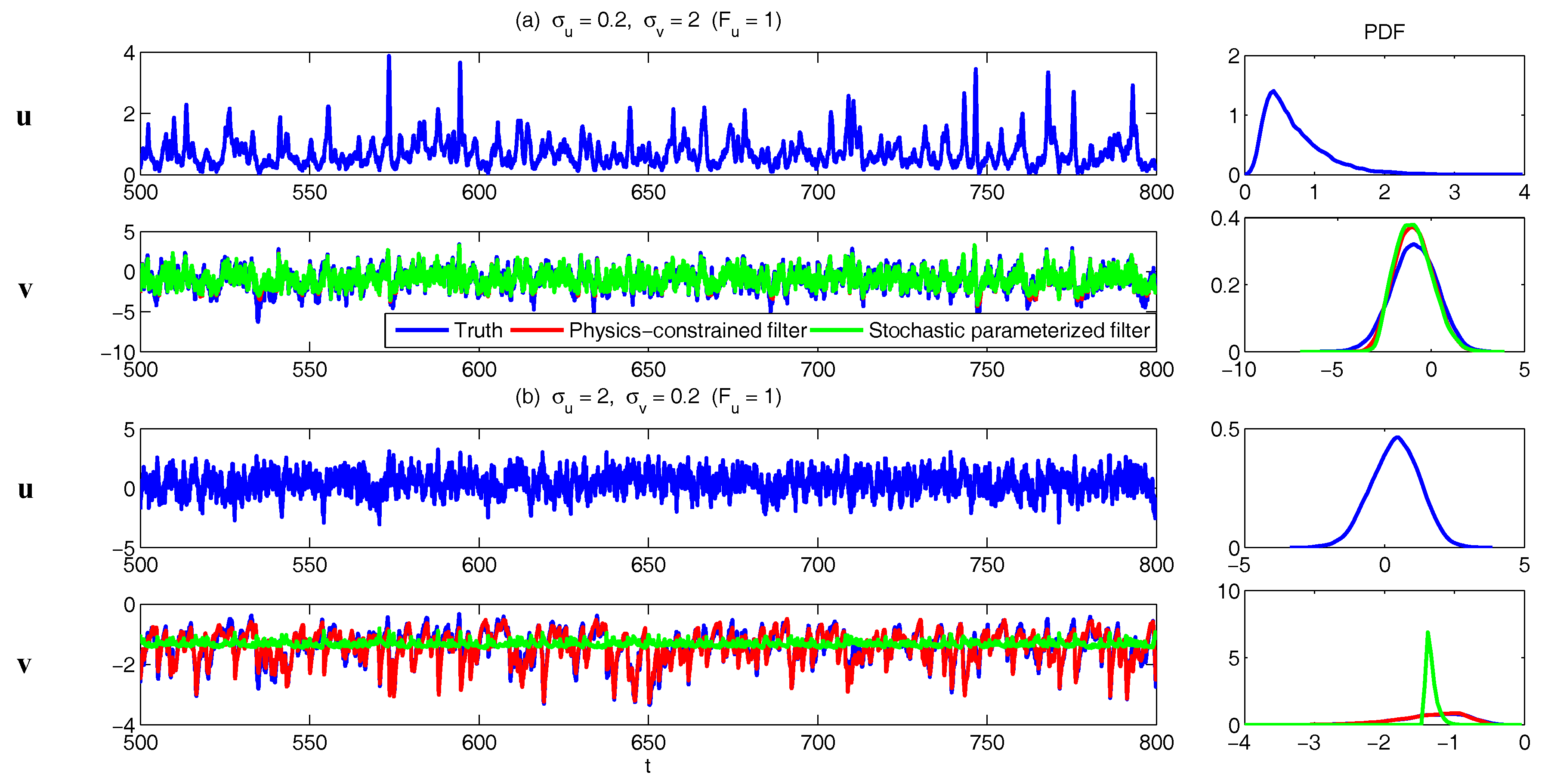

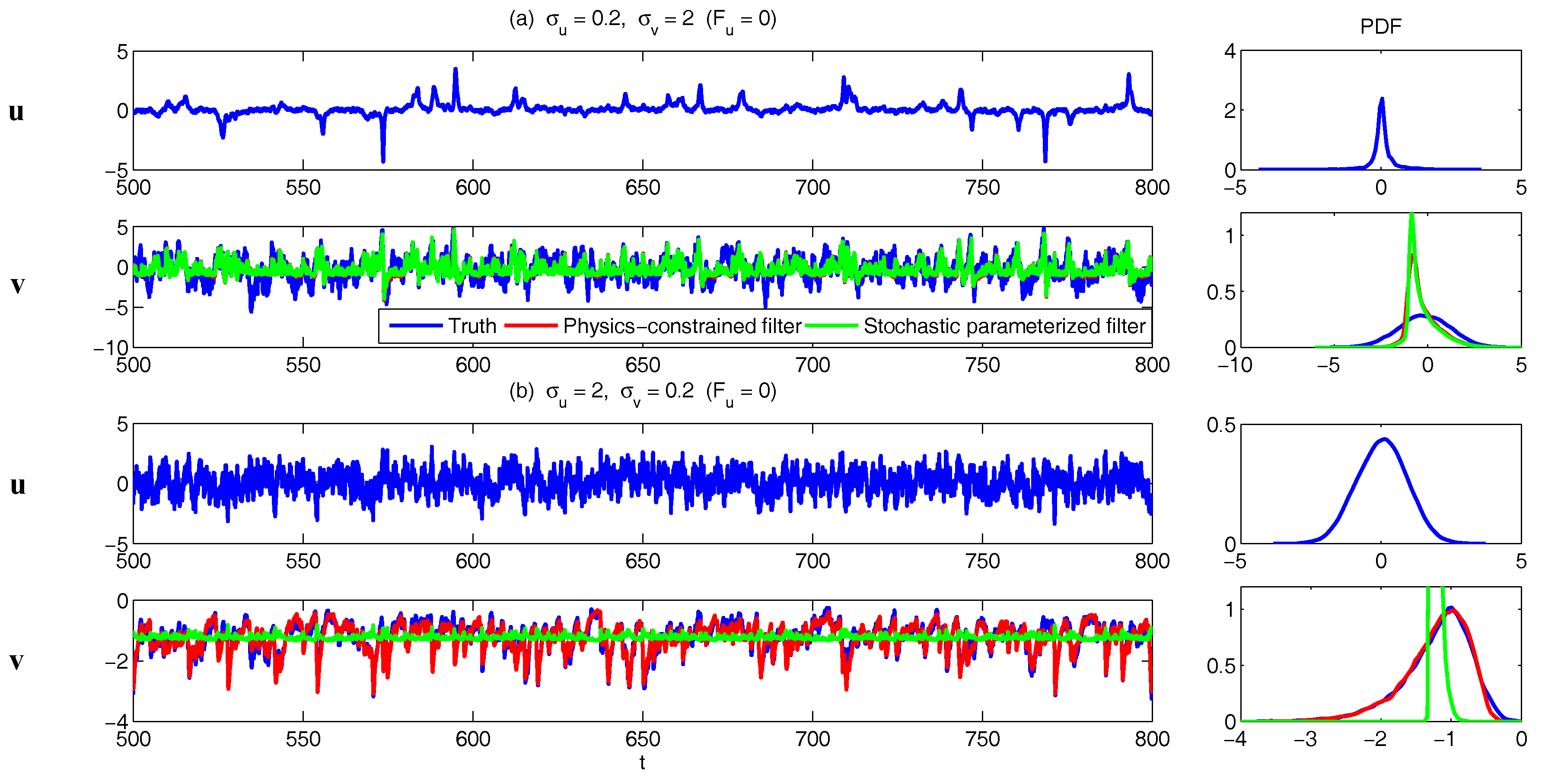

6.2.2. Important Roles of Physics-Constrained Forecast Models in Data Assimilation

6.3. Multiscale Data Assimilation with Particles Interacting with Conditional Gaussian Statistics

6.3.1. A General Description

6.3.2. Particle Filters with Superparameterization

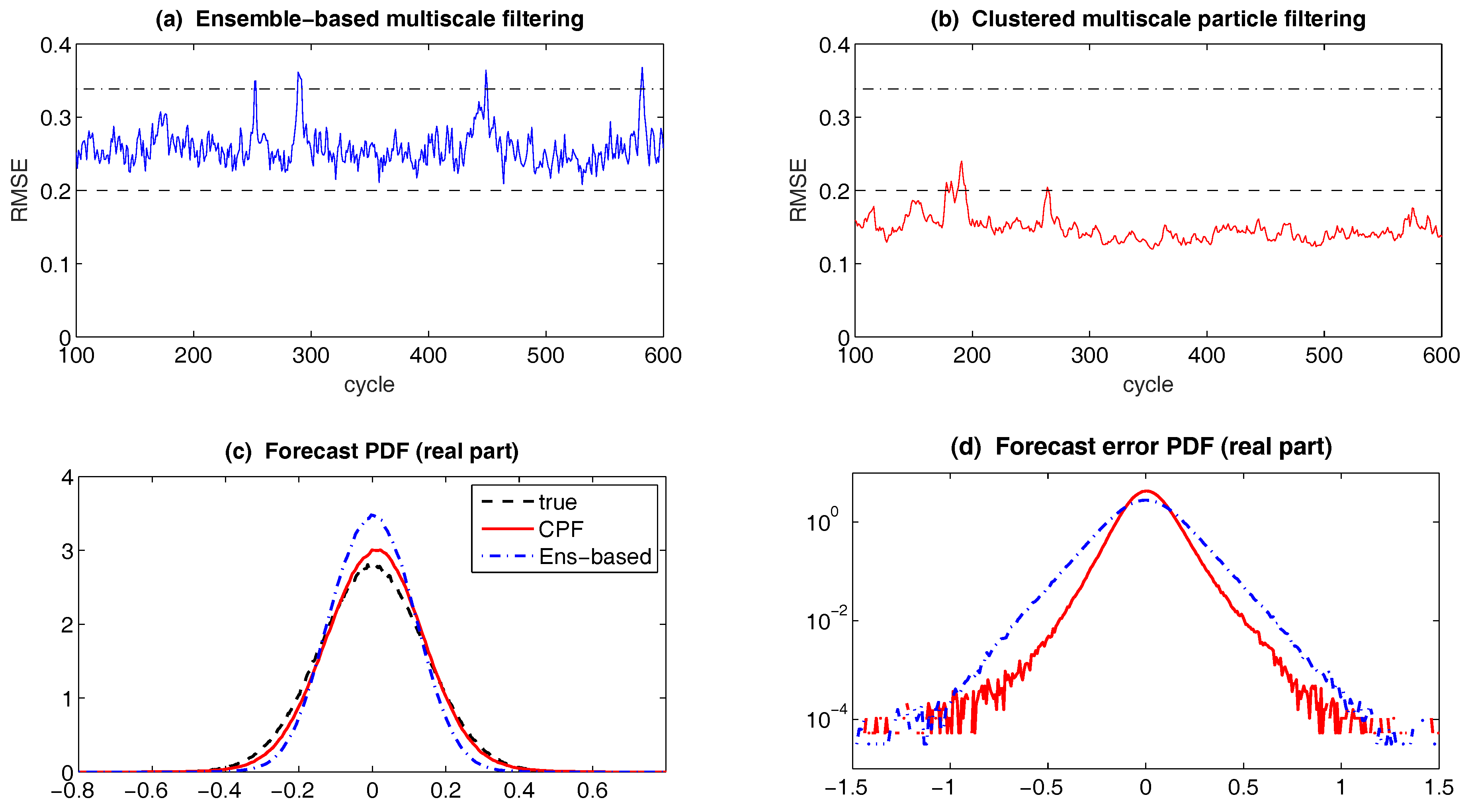

6.3.3. Clustered Particle Filters and Multiscale Data Assimilation

6.3.4. Blended Particle Methods with Adaptive Subspaces for Filtering Turbulent Dynamical Systems

6.3.5. Extremely Efficient Multi-Scale Filtering Algorithms: SPEKF and Dynamic Stochastic Superresolution (DSS)

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Majda, A.J. Introduction to Turbulent Dynamical Systems in Complex Systems; Springer: New York, NY, USA, 2016. [Google Scholar]

- Majda, A.; Wang, X. Nonlinear Dynamics and Statistical Theories for Basic Geophysical Flows; Cambridge University Press: Cambridge, UK, 2006. [Google Scholar]

- Strogatz, S.H. Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar]

- Baleanu, D.; Machado, J.A.T.; Luo, A.C. Fractional Dynamics and Control; Springer: New York, NY, USA, 2011. [Google Scholar]

- Deisboeck, T.; Kresh, J.Y. Complex Systems Science in Biomedicine; Springer: Boston, MA, USA, 2007. [Google Scholar]

- Stelling, J.; Kremling, A.; Ginkel, M.; Bettenbrock, K.; Gilles, E. Foundations of Systems Biology; MIT Press: Cambridge, MA, USA, 2001. [Google Scholar]

- Sheard, S.A.; Mostashari, A. Principles of complex systems for systems engineering. Syst. Eng. 2009, 12, 295–311. [Google Scholar] [CrossRef]

- Wilcox, D.C. Multiscale model for turbulent flows. AIAA J. 1988, 26, 1311–1320. [Google Scholar] [CrossRef]

- Mohamad, M.A.; Sapsis, T.P. Probabilistic description of extreme events in intermittently unstable dynamical systems excited by correlated stochastic processes. SIAM/ASA J. Uncertain. Quantif. 2015, 3, 709–736. [Google Scholar] [CrossRef]

- Sornette, D. Probability distributions in complex systems. In Encyclopedia of Complexity and Systems Science; Springer: New York, NY, USA, 2009; pp. 7009–7024. [Google Scholar]

- Majda, A. Introduction to PDEs and Waves for the Atmosphere and Ocean; American Mathematical Society: Providence, RI, USA, 2003; Volume 9. [Google Scholar]

- Wiggins, S. Introduction to Applied Nonlinear Dynamical Systems and Chaos; Springer: New York, NY, USA, 2003; Volume 2. [Google Scholar]

- Vespignani, A. Predicting the behavior of techno-social systems. Science 2009, 325, 425–428. [Google Scholar] [CrossRef] [PubMed]

- Latif, M.; Anderson, D.; Barnett, T.; Cane, M.; Kleeman, R.; Leetmaa, A.; O’Brien, J.; Rosati, A.; Schneider, E. A review of the predictability and prediction of ENSO. J. Geophys. Res. Oceans 1998, 103, 14375–14393. [Google Scholar] [CrossRef]

- Kalnay, E. Atmospheric Modeling, Data Assimilation and Predictability; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Majda, A.J.; Branicki, M. Lessons in uncertainty quantification for turbulent dynamical systems. Discret. Contin. Dyn. Syst. A 2012, 32, 3133–3221. [Google Scholar]

- Sapsis, T.P.; Majda, A.J. Statistically accurate low-order models for uncertainty quantification in turbulent dynamical systems. Proc. Natl. Acad. Sci. USA 2013, 110, 13705–13710. [Google Scholar] [CrossRef] [PubMed]

- Mignolet, M.P.; Soize, C. Stochastic reduced order models for uncertain geometrically nonlinear dynamical systems. Comput. Methods Appl. Mech. Eng. 2008, 197, 3951–3963. [Google Scholar] [CrossRef]

- Lahoz, W.; Khattatov, B.; Ménard, R. Data assimilation and information. In Data Assimilation; Springer: New York, NY, USA, 2010; pp. 3–12. [Google Scholar]

- Majda, A.J.; Harlim, J. Filtering Complex Turbulent Systems; Cambridge University Press: Cambridge, UK, 2012. [Google Scholar]

- Evensen, G. Data Assimilation: The Ensemble Kalman Filter; Springer: New York, NY, USA, 2009. [Google Scholar]

- Law, K.; Stuart, A.; Zygalakis, K. Data Assimilation: A Mathematical Introduction; Springer: New York, NY, USA, 2015; Volume 62. [Google Scholar]

- Palmer, T.N. A nonlinear dynamical perspective on model error: A proposal for non-local stochastic-dynamic parametrization in weather and climate prediction models. Q. J. R. Meteorol. Soc. 2001, 127, 279–304. [Google Scholar] [CrossRef]

- Orrell, D.; Smith, L.; Barkmeijer, J.; Palmer, T. Model error in weather forecasting. Nonlinear Process. Geophys. 2001, 8, 357–371. [Google Scholar] [CrossRef]

- Hu, X.M.; Zhang, F.; Nielsen-Gammon, J.W. Ensemble-based simultaneous state and parameter estimation for treatment of mesoscale model error: A real-data study. Geophys. Res. Lett. 2010, 37, L08802. [Google Scholar] [CrossRef]

- Benner, P.; Gugercin, S.; Willcox, K. A survey of projection-based model reduction methods for parametric dynamical systems. SIAM Rev. 2015, 57, 483–531. [Google Scholar] [CrossRef]

- Majda, A.J. Challenges in climate science and contemporary applied mathematics. Commun. Pure Appl. Math. 2012, 65, 920–948. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Filtering nonlinear turbulent dynamical systems through conditional Gaussian statistics. Mon. Weather Rev. 2016, 144, 4885–4917. [Google Scholar] [CrossRef]

- Olbers, D. A gallery of simple models from climate physics. In Stochastic Climate Models; Springer: Berlin, Germany, 2001; pp. 3–63. [Google Scholar]

- Liptser, R.S.; Shiryaev, A.N. Statistics ofRandom Processes II: Applications. In Applied Mathematics; Springer: Berlin/Heidelberg, Germany, 2001; Volume 6. [Google Scholar]

- Majda, A.J.; Harlim, J. Physics constrained nonlinear regression models for time series. Nonlinearity 2012, 26, 201. [Google Scholar] [CrossRef]

- Harlim, J.; Mahdi, A.; Majda, A.J. An ensemble Kalman filter for statistical estimation of physics constrained nonlinear regression models. J. Comput. Phys. 2014, 257, 782–812. [Google Scholar] [CrossRef]

- Majda, A.J.; Yuan, Y. Fundamental limitations of ad hoc linear and quadratic multi-level regression models for physical systems. Discret. Contin. Dyn. Syst. B 2012, 17, 1333–1363. [Google Scholar] [CrossRef]

- Lorenz, E.N. Deterministic nonperiodic flow. J. Atmos. Sci. 1963, 20, 130–141. [Google Scholar] [CrossRef]

- Lorenz, E.N. Formulation of a low-order model of a moist general circulation. J. Atmos. Sci. 1984, 41, 1933–1945. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Beating the curse of dimension with accurate statistics for the Fokker–Planck equation in complex turbulent systems. Proc. Natl. Acad. Sci. USA 2017, 114, 12864–12869. [Google Scholar] [CrossRef] [PubMed]

- Majda, A.J.; Lee, Y. Conceptual dynamical models for turbulence. Proc. Natl. Acad. Sci. USA 2014, 111, 6548–6553. [Google Scholar] [CrossRef] [PubMed]

- Chen, N.; Majda, A.J. Efficient statistically accurate algorithms for the Fokker–Planck equation in large dimensions. J. Comput. Phys. 2018, 354, 242–268. [Google Scholar] [CrossRef]

- Ferrari, R.; Cessi, P. Seasonal synchronization in a chaotic ocean–atmosphere model. J. Clim. 2003, 16, 875–881. [Google Scholar] [CrossRef]

- Lee, Y.; Majda, A. Multiscale data assimilation and prediction using clustered particle filters. J. Comput. Phys. 2017, in press. [Google Scholar]

- Chen, N.; Majda, A.J.; Giannakis, D. Predicting the cloud patterns of the Madden–Julian Oscillation through a low-order nonlinear stochastic model. Geophys. Res. Lett. 2014, 41, 5612–5619. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Predicting the real-time multivariate Madden–Julian oscillation index through a low-order nonlinear stochastic model. Mon. Weather Rev. 2015, 143, 2148–2169. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Predicting the Cloud Patterns for the Boreal Summer Intraseasonal Oscillation through a Low-Order Stochastic Model. Math. Clim. Weather Forecast. 2015, 1, 1–20. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J.; Sabeerali, C.; Ajayamohan, R. Predicting Monsoon Intraseasonal Precipitation using a Low-Order Nonlinear Stochastic Model. J. Clim. 2018, 25, 4403–4427. [Google Scholar] [CrossRef]

- Majda, A.J.; Timofeyev, I.; Eijnden, E.V. Models for stochastic climate prediction. Proc. Natl. Acad. Sci. USA 1999, 96, 14687–14691. [Google Scholar] [CrossRef] [PubMed]

- Majda, A.J.; Timofeyev, I.; Vanden Eijnden, E. A mathematical framework for stochastic climate models. Commun. Pure Appl. Math. 2001, 54, 891–974. [Google Scholar] [CrossRef]

- Majda, A.; Timofeyev, I.; Vanden-Eijnden, E. A priori tests of a stochastic mode reduction strategy. Phys. D Nonlinear Phenom. 2002, 170, 206–252. [Google Scholar] [CrossRef]

- Majda, A.J.; Timofeyev, I.; Vanden-Eijnden, E. Systematic strategies for stochastic mode reduction in climate. J. Atmos. Sci. 2003, 60, 1705–1722. [Google Scholar] [CrossRef]

- Majda, A.J.; Franzke, C.; Khouider, B. An applied mathematics perspective on stochastic modelling for climate. Philos. Trans. R. Soc. Lond. A 2008, 366, 2427–2453. [Google Scholar] [CrossRef] [PubMed]

- Majda, A.J.; Franzke, C.; Crommelin, D. Normal forms for reduced stochastic climate models. Proc. Natl. Acad. Sci. USA 2009, 106, 3649–3653. [Google Scholar] [CrossRef] [PubMed]

- Thual, S.; Majda, A.J.; Stechmann, S.N. A stochastic skeleton model for the MJO. J. Atmos. Sci. 2014, 71, 697–715. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Simple stochastic dynamical models capturing the statistical diversity of El Niño Southern Oscillation. Proc. Natl. Acad. Sci. USA 2017, 114, 201620766. [Google Scholar] [CrossRef] [PubMed]

- Castaing, B.; Gunaratne, G.; Heslot, F.; Kadanoff, L.; Libchaber, A.; Thomae, S.; Wu, X.Z.; Zaleski, S.; Zanetti, G. Scaling of hard thermal turbulence in Rayleigh-Bénard convection. J. Fluid Mech. 1989, 204, 1–30. [Google Scholar] [CrossRef]

- Wang, X. Infinite Prandtl number limit of Rayleigh-Bénard convection. Commun. Pure Appl. Math. 2004, 57, 1265–1282. [Google Scholar] [CrossRef]

- Majda, A.J.; Grote, M.J. Model dynamics and vertical collapse in decaying strongly stratified flows. Phys. Fluids 1997, 9, 2932–2940. [Google Scholar] [CrossRef]

- Kelliher, J.P.; Temam, R.; Wang, X. Boundary layer associated with the Darcy–Brinkman–Boussinesq model for convection in porous media. Phys. D Nonlinear Phenom. 2011, 240, 619–628. [Google Scholar] [CrossRef]

- Lindner, B.; Garcıa-Ojalvo, J.; Neiman, A.; Schimansky-Geier, L. Effects of noise in excitable systems. Phys. Rep. 2004, 392, 321–424. [Google Scholar] [CrossRef]

- Medvinsky, A.B.; Petrovskii, S.V.; Tikhonova, I.A.; Malchow, H.; Li, B.L. Spatiotemporal complexity of plankton and fish dynamics. SIAM Rev. 2002, 44, 311–370. [Google Scholar] [CrossRef]

- Shulgin, B.; Stone, L.; Agur, Z. Pulse vaccination strategy in the SIR epidemic model. Bull. Math. Biol. 1998, 60, 1123–1148. [Google Scholar] [CrossRef]

- Ferreira, S., Jr.; Martins, M.; Vilela, M. Reaction-diffusion model for the growth of avascular tumor. Phys. Rev. E 2002, 65, 021907. [Google Scholar] [CrossRef] [PubMed]

- Chen, N.; Majda, A.J.; Tong, X.T. Information barriers for noisy Lagrangian tracers in filtering random incompressible flows. Nonlinearity 2014, 27, 2133. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J.; Tong, X.T. Noisy Lagrangian tracers for filtering random rotating compressible flows. J. Nonlinear Sci. 2015, 25, 451–488. [Google Scholar] [CrossRef]

- Chen, N.; Majda, A.J. Model error in filtering random compressible flows utilizing noisy Lagrangian tracers. Mon. Weather Rev. 2016, 144, 4037–4061. [Google Scholar] [CrossRef]

- Gershgorin, B.; Harlim, J.; Majda, A.J. Improving filtering and prediction of spatially extended turbulent systems with model errors through stochastic parameter estimation. J. Comput. Phys. 2010, 229, 32–57. [Google Scholar] [CrossRef]

- Gershgorin, B.; Harlim, J.; Majda, A.J. Test models for improving filtering with model errors through stochastic parameter estimation. J. Comput. Phys. 2010, 229, 1–31. [Google Scholar] [CrossRef]

- Gardiner, C.W. Handbook of Stochastic Methods for Physics, Chemistry and the Natural Sciences; Springer Series in Synergetics; Springer: Berlin/Heidelberg, Germany, 2004; Volume 13. [Google Scholar]

- Risken, H. The Fokker–Planck Equation—Methods of Solution and Applications; Springer Series in Synergetics; Springer: Berlin/Heidelberg, Germany, 1989; Volume 18, p. 301. [Google Scholar]

- Pichler, L.; Masud, A.; Bergman, L.A. Numerical solution of the Fokker–Planck equation by finite difference and finite element methods-a comparative study. In Computational Methods in Stochastic Dynamics; Springer: Dordrecht, The Netherlands, 2013; pp. 69–85. [Google Scholar]

- Robert, C.P. Monte Carlo Methods; Wiley Online Library: Hoboken, NJ, USA, 2004. [Google Scholar]

- Majda, A.J.; Grote, M.J. Mathematical test models for superparametrization in anisotropic turbulence. Proc. Natl. Acad. Sci. USA 2009, 106, 5470–5474. [Google Scholar] [CrossRef] [PubMed]

- Grooms, I.; Majda, A.J. Stochastic superparameterization in a one-dimensional model for wave turbulence. Commun. Math. Sci 2014, 12, 509–525. [Google Scholar] [CrossRef]

- Grooms, I.; Majda, A.J. Stochastic superparameterization in quasigeostrophic turbulence. J. Comput. Phys. 2014, 271, 78–98. [Google Scholar] [CrossRef]

- Majda, A.J.; Grooms, I. New perspectives on superparameterization for geophysical turbulence. J. Comput. Phys. 2014, 271, 60–77. [Google Scholar] [CrossRef]

- Grooms, I.; Lee, Y.; Majda, A.J. Numerical schemes for stochastic backscatter in the inverse cascade of quasigeostrophic turbulence. Multiscale Model. Simul. 2015, 13, 1001–1021. [Google Scholar] [CrossRef]

- Grooms, I.; Lee, Y.; Majda, A.J. Ensemble Kalman filters for dynamical systems with unresolved turbulence. J. Comput. Phys. 2014, 273, 435–452. [Google Scholar] [CrossRef]

- Grooms, I.; Lee, Y.; Majda, A.J. Ensemble filtering and low-resolution model error: Covariance inflation, stochastic parameterization, and model numerics. Mon. Weather Rev. 2015, 143, 3912–3924. [Google Scholar] [CrossRef]

- Grooms, I.; Majda, A.J. Efficient stochastic superparameterization for geophysical turbulence. Proc. Natl. Acad. Sci. USA 2013, 110, 4464–4469. [Google Scholar] [CrossRef] [PubMed]

- Grooms, I.; Majda, A.J.; Smith, K.S. Stochastic superparameterization in a quasigeostrophic model of the Antarctic Circumpolar Current. Ocean Model. 2015, 85, 1–15. [Google Scholar] [CrossRef]

- Bain, A.; Crisan, D. Fundamentals of stochastic filtering; Springer: New York, NY, USA, 2009; Volume 3. [Google Scholar]

- Majda, A.J.; Qi, D.; Sapsis, T.P. Blended particle filters for large-dimensional chaotic dynamical systems. Proc. Natl. Acad. Sci. USA 2014, 111, 7511–7516. [Google Scholar] [CrossRef] [PubMed]

- Janjić, T.; Cohn, S.E. Treatment of observation error due to unresolved scales in atmospheric data assimilation. Mon. Weather Rev. 2006, 134, 2900–2915. [Google Scholar] [CrossRef]

- Daley, R. Estimating observation error statistics for atmospheric data assimilation. Ann. Geophys. 1993, 11, 634–647. [Google Scholar]

- Qian, C.; Zhou, W.; Fong, S.K.; Leong, K.C. Two approaches for statistical prediction of non-Gaussian climate extremes: A case study of Macao hot extremes during 1912–2012. J. Clim. 2015, 28, 623–636. [Google Scholar] [CrossRef]

- Hjorth, J.U. Computer Intensive Statistical Methods: Validation, Model Selection, and Bootstrap; Routledge: London, UK, 2017. [Google Scholar]

- Alessandrini, S.; Delle Monache, L.; Sperati, S.; Cervone, G. An analog ensemble for short-term probabilistic solar power forecast. Appl. Energy 2015, 157, 95–110. [Google Scholar] [CrossRef]

- Alessandrini, S.; Delle Monache, L.; Sperati, S.; Nissen, J. A novel application of an analog ensemble for short-term wind power forecasting. Renew. Energy 2015, 76, 768–781. [Google Scholar] [CrossRef]

- Nair, P.; Chakraborty, A.; Varikoden, H.; Francis, P.; Kuttippurath, J. The local and global climate forcings induced inhomogeneity of Indian rainfall. Sci. Rep. 2018, 8, 6026. [Google Scholar] [CrossRef] [PubMed]

- Hodges, K.; Chappell, D.; Robinson, G.; Yang, G. An improved algorithm for generating global window brightness temperatures from multiple satellite infrared imagery. J. Atmos. Ocean. Technol. 2000, 17, 1296–1312. [Google Scholar] [CrossRef]

- Winker, D.M.; Vaughan, M.A.; Omar, A.; Hu, Y.; Powell, K.A.; Liu, Z.; Hunt, W.H.; Young, S.A. Overview of the CALIPSO mission and CALIOP data processing algorithms. J. Atmos. Ocean. Technol. 2009, 26, 2310–2323. [Google Scholar] [CrossRef]

- Kravtsov, S.; Kondrashov, D.; Ghil, M. Multilevel regression modeling of nonlinear processes: Derivation and applications to climatic variability. J. Clim. 2005, 18, 4404–4424. [Google Scholar] [CrossRef]

- Wikle, C.K.; Hooten, M.B. A general science-based framework for dynamical spatio-temporal models. Test 2010, 19, 417–451. [Google Scholar] [CrossRef]

- Alexander, R.; Zhao, Z.; Székely, E.; Giannakis, D. Kernel analog forecasting of tropical intraseasonal oscillations. J. Atmos. Sci. 2017, 74, 1321–1342. [Google Scholar] [CrossRef]

- Harlim, J.; Yang, H. Diffusion Forecasting Model with Basis Functions from QR-Decomposition. J. Nonlinear Sci. 2017, 1–26. [Google Scholar] [CrossRef]

- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45. [Google Scholar] [CrossRef]

- Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188. [Google Scholar] [CrossRef]

- Ristic, B.; Arulampalam, S.; Gordon, N. Beyond the Kalman Filter: Particle Filters for Tracking Applications; Artech House: Norwood, MA, USA, 2003. [Google Scholar]

- Tippett, M.K.; Anderson, J.L.; Bishop, C.H.; Hamill, T.M.; Whitaker, J.S. Ensemble square root filters. Mon. Weather Rev. 2003, 131, 1485–1490. [Google Scholar] [CrossRef]

- Anderson, J.L. An ensemble adjustment Kalman filter for data assimilation. Mon. Weather Rev. 2001, 129, 2884–2903. [Google Scholar] [CrossRef]

- Harlim, J.; Majda, A.J. Catastrophic filter divergence in filtering nonlinear dissipative systems. Commun. Math. Sci. 2010, 8, 27–43. [Google Scholar] [CrossRef]

- Tong, X.T.; Majda, A.J.; Kelly, D. Nonlinear stability and ergodicity of ensemble based Kalman filters. Nonlinearity 2016, 29, 657. [Google Scholar] [CrossRef]

- Majda, A.J.; Harlim, J.; Gershgorin, B. Mathematical strategies for filtering turbulent dynamical systems. Discret. Contin. Dyn. Syst. 2010, 27, 441–486. [Google Scholar] [CrossRef]

- Frenkel, Y.; Majda, A.J.; Khouider, B. Using the stochastic multicloud model to improve tropical convective parameterization: A paradigm example. J. Atmos. Sci. 2012, 69, 1080–1105. [Google Scholar] [CrossRef]

- Branicki, M.; Majda, A.J. Dynamic stochastic superresolution of sparsely observed turbulent systems. J. Comput. Phys. 2013, 241, 333–363. [Google Scholar] [CrossRef]

- Branicki, M.; Majda, A.J.; Law, K.J.H. Accuracy of some approximate gaussian filters for the Navier–Stokes equation in the presence of model error. Multiscale Model. Simul. 2018. submitted. [Google Scholar]

- Kalman, R.E.; Bucy, R.S. New results in linear filtering and prediction theory. J. Basic Eng. 1961, 83, 95–108. [Google Scholar] [CrossRef]

- Brammer, K.; Siffling, G. Kalman–Bucy Filters; Artech House: Norwood, MA, USA, 1989. [Google Scholar]

- Bucy, R.S.; Joseph, P.D. Filtering for Stochastic Processes with Applications to Guidance; American Mathematical Society: Providence, RI, USA, 1987; Volume 326. [Google Scholar]

- Jazwinski, A.H. Stochastic Processes and Filtering Theory; Courier Corporation: Washington, DC, USA, 2007. [Google Scholar]

- Bensoussan, A. Stochastic Control of Partially Observable Systems; Cambridge University Press: Cambridge, UK, 2004. [Google Scholar]

- Liptser, R.S.; Shiryaev, A. Statistics of Random Processes; Springer: New York, NY, USA, 1977. [Google Scholar]

- Sparrow, C. The Lorenz Equations: Bifurcations, Chaos, and Strange Attractors; Springer: New York, NY, USA, 2012; Volume 41. [Google Scholar]

- Haken, H. Analogy between higher instabilities in fluids and lasers. Phys. Lett. A 1975, 53, 77–78. [Google Scholar] [CrossRef]

- Knobloch, E. Chaos in the segmented disc dynamo. Phys. Lett. A 1981, 82, 439–440. [Google Scholar] [CrossRef]

- Gorman, M.; Widmann, P.; Robbins, K. Nonlinear dynamics of a convection loop: A quantitative comparison of experiment with theory. Phys. D Nonlinear Phenom. 1986, 19, 255–267. [Google Scholar] [CrossRef]

- Hemati, N. Strange attractors in brushless DC motors. IEEE Trans. Circuits Syst. I 1994, 41, 40–45. [Google Scholar] [CrossRef]

- Cuomo, K.M.; Oppenheim, A.V. Circuit implementation of synchronized chaos with applications to communications. Phys. Rev. Lett. 1993, 71, 65. [Google Scholar] [CrossRef] [PubMed]

- Poland, D. Cooperative catalysis and chemical chaos: A chemical model for the Lorenz equations. Phys. D Nonlinear Phenom. 1993, 65, 86–99. [Google Scholar] [CrossRef]

- Tzenov, S.I. Strange attractors characterizing the osmotic instability. arXiv, 2014; arXiv:1406.0979. [Google Scholar]

- Wilks, D.S. Effects of stochastic parametrizations in the Lorenz’96 system. Q. J. R. Meteorol. Soc. 2005, 131, 389–407. [Google Scholar] [CrossRef]

- Arnold, H.; Moroz, I.; Palmer, T. Stochastic parametrizations and model uncertainty in the Lorenz’96 system. Phil. Trans. R. Soc. A 2013, 371, 20110479. [Google Scholar] [CrossRef] [PubMed]

- Vallis, G.K. Atmospheric and Oceanic Fluid Dynamics; Cambridge University Press: Cambridge, UK, 2017. [Google Scholar]

- Salmon, R. Lectures on Geophysical Fluid Dynamics; Oxford University Press: Oxford, UK, 1998. [Google Scholar]

- Lorenz, E.N. Irregularity: A fundamental property of the atmosphere. Tellus A 1984, 36, 98–110. [Google Scholar] [CrossRef]

- Giannakis, D.; Majda, A.J. Nonlinear Laplacian spectral analysis for time series with intermittency and low-frequency variability. Proc. Natl. Acad. Sci. USA 2012, 109, 2222–2227. [Google Scholar] [CrossRef] [PubMed]

- Majda, A.; Abramov, R.V.; Grote, M.J. Information Theory and Stochastics for Multiscale Nonlinear Systems; American Mathematical Society: Providence, RI, USA, 2005; Volume 25. [Google Scholar]

- Majda, A.J.; Gershgorin, B.; Yuan, Y. Low-frequency climate response and fluctuation–dissipation theorems: Theory and practice. J. Atmos. Sci. 2010, 67, 1186–1201. [Google Scholar] [CrossRef]

- Mantua, N.J.; Hare, S.R. The Pacific decadal oscillation. J. Oceanogr. 2002, 58, 35–44. [Google Scholar] [CrossRef]

- Hinze, J. Turbulence: An introduction to its mechanisms and theory. Mech. Eng. 1959, 1396. [Google Scholar] [CrossRef]

- Townsend, A.A. The Structure of Turbulent Shear Flow; Cambridge University Press: Cambridge, UK, 1980. [Google Scholar]

- Frisch, U. Turbulence: The Legacy of AN Kolmogorov; Cambridge University Press: Cambridge, UK, 1995. [Google Scholar]

- Cessi, P.; Primeau, F. Dissipative selection of low-frequency modes in a reduced-gravity basin. J. Phys. Oceanogr. 2001, 31, 127–137. [Google Scholar] [CrossRef]

- LaCasce, J.; Pedlosky, J. Baroclinic Rossby waves in irregular basins. J. Phys. Oceanogr. 2002, 32, 2828–2847. [Google Scholar] [CrossRef]

- Charney, J.G.; DeVore, J.G. Multiple flow equilibria in the atmosphere and blocking. J. Atmos. Sci. 1979, 36, 1205–1216. [Google Scholar] [CrossRef]

- Treutlein, H.; Schulten, K. Noise Induced Limit Cycles of the Bonhoeffer-Van der Pol Model of Neural Pulses. Ber. Bunsenges. Phys. Chem. 1985, 89, 710–718. [Google Scholar] [CrossRef]

- Lindner, B.; Schimansky-Geier, L. Coherence and stochastic resonance in a two-state system. Phys. Rev. E 2000, 61, 6103. [Google Scholar] [CrossRef]

- Longtin, A. Stochastic resonance in neuron models. J. Stat. Phys. 1993, 70, 309–327. [Google Scholar] [CrossRef]

- Wiesenfeld, K.; Pierson, D.; Pantazelou, E.; Dames, C.; Moss, F. Stochastic resonance on a circle. Phys. Rev. Lett. 1994, 72, 2125. [Google Scholar] [CrossRef] [PubMed]

- Neiman, A.; Schimansky-Geier, L.; Cornell-Bell, A.; Moss, F. Noise-enhanced phase synchronization in excitable media. Phys. Rev. Lett. 1999, 83, 4896. [Google Scholar] [CrossRef]

- Hempel, H.; Schimansky-Geier, L.; Garcia-Ojalvo, J. Noise-sustained pulsating patterns and global oscillations in subexcitable media. Phys. Rev. Lett. 1999, 82, 3713. [Google Scholar] [CrossRef]

- Hu, B.; Zhou, C. Phase synchronization in coupled nonidentical excitable systems and array-enhanced coherence resonance. Phys. Rev. E 2000, 61, R1001. [Google Scholar] [CrossRef]

- Jung, P.; Cornell-Bell, A.; Madden, K.S.; Moss, F. Noise-induced spiral waves in astrocyte syncytia show evidence of self-organized criticality. J. Neurophysiol. 1998, 79, 1098–1101. [Google Scholar] [CrossRef] [PubMed]

- DeVille, R.L.; Vanden-Eijnden, E.; Muratov, C.B. Two distinct mechanisms of coherence in randomly perturbed dynamical systems. Phys. Rev. E 2005, 72, 031105. [Google Scholar] [CrossRef] [PubMed]

- Casado, J. Noise-induced coherence in an excitable system. Phys. Lett. A 1997, 235, 489–492. [Google Scholar] [CrossRef]

- Sadhu, S.; Kuehn, C. Stochastic mixed-mode oscillations in a three-species predator–prey model. Chaos 2018, 28, 033606. [Google Scholar] [CrossRef] [PubMed]

- Sun, G.Q.; Jin, Z.; Liu, Q.X.; Li, B.L. Rich dynamics in a predator–prey model with both noise and periodic force. BioSystems 2010, 100, 14–22. [Google Scholar] [CrossRef] [PubMed]

- McCluskey, C.C. Complete global stability for an SIR epidemic model with delay-distributed or discrete. Nonlinear Anal. 2010, 11, 55–59. [Google Scholar] [CrossRef]

- Kim, K.I.; Lin, Z.; Zhang, Q. An SIR epidemic model with free boundary. Nonlinear Anal. 2013, 14, 1992–2001. [Google Scholar] [CrossRef]

- Allen, L.J.; Brauer, F.; Van den Driessche, P.; Wu, J. Mathematical Epidemiology; Springer: New York, NY, USA, 2008; Volume 1945. [Google Scholar]

- Gray, A.; Greenhalgh, D.; Hu, L.; Mao, X.; Pan, J. A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 2011, 71, 876–902. [Google Scholar] [CrossRef]

- Zhou, Y.; Liu, H. Stability of periodic solutions for an SIS model with pulse vaccination. Math. Comput. Model. 2003, 38, 299–308. [Google Scholar] [CrossRef]

- Madden, R.A.; Julian, P.R. Observations of the 40–50-day tropical oscillation—A review. Mon. Weather Rev. 1994, 122, 814–837.

- Zhang, C. Madden–Julian oscillation. Rev. Geophys. 2005, 43, 2004RG000158.

- Lau, W.K.M.; Waliser, D.E. Intraseasonal Variability in the Atmosphere-Ocean Climate System; Springer: Berlin, Germany, 2011.

- Hendon, H.H.; Wheeler, M.C.; Zhang, C. Seasonal dependence of the MJO–ENSO relationship. J. Clim. 2007, 20, 531–543.

- McPhaden, M.J. Genesis and evolution of the 1997-98 El Niño. Science 1999, 283, 950–954.

- Tang, Y.; Yu, B. MJO and its relationship to ENSO. J. Geophys. Res. Atmos. 2008, 113, D14106.

- Majda, A.J.; Stechmann, S.N. The skeleton of tropical intraseasonal oscillations. Proc. Natl. Acad. Sci. USA 2009, 106, 8417–8422.

- Majda, A.J.; Stechmann, S.N. Nonlinear dynamics and regional variations in the MJO skeleton. J. Atmos. Sci. 2011, 68, 3053–3071.

- Stechmann, S.N.; Majda, A.J. Identifying the skeleton of the Madden–Julian oscillation in observational data. Mon. Weather Rev. 2015, 143, 395–416.

- Stachnik, J.P.; Waliser, D.E.; Majda, A.J.; Stechmann, S.N.; Thual, S. Evaluating MJO event initiation and decay in the skeleton model using an RMM-like index. J. Geophys. Res. Atmos. 2015, 120, 11486–11508.

- Biello, J.A.; Majda, A.J. Modulating synoptic scale convective activity and boundary layer dissipation in the IPESD models of the Madden–Julian oscillation. Dyn. Atmos. Oceans 2006, 42, 152–215.

- Chen, N.; Majda, A.J. Filtering the stochastic skeleton model for the Madden–Julian oscillation. Mon. Weather Rev. 2016, 144, 501–527.

- Thual, S.; Majda, A.J.; Stechmann, S.N. Asymmetric intraseasonal events in the stochastic skeleton MJO model with seasonal cycle. Clim. Dyn. 2015, 45, 603–618.

- Thual, S.; Majda, A.J. A skeleton model for the MJO with refined vertical structure. Clim. Dyn. 2016, 46, 2773–2786.

- Majda, A.J.; Tong, X.T. Geometric ergodicity for piecewise contracting processes with applications for tropical stochastic lattice models. Commun. Pure Appl. Math. 2016, 69, 1110–1153.

- Clarke, A.J. An Introduction to the Dynamics of El Niño and the Southern Oscillation; Elsevier: Amsterdam, The Netherlands, 2008.

- Sarachik, E.S.; Cane, M.A. The El Niño-Southern Oscillation Phenomenon; Cambridge University Press: Cambridge, UK, 2010.

- Holton, J.R.; Dmowska, R. El Niño, La Niña, and the Southern Oscillation; Academic Press: New York, NY, USA, 1989; Volume 46.

- Lee, T.; McPhaden, M.J. Increasing intensity of El Niño in the central-equatorial Pacific. Geophys. Res. Lett. 2010, 37, L14603.

- Kao, H.Y.; Yu, J.Y. Contrasting eastern-Pacific and central-Pacific types of ENSO. J. Clim. 2009, 22, 615–632.

- Ashok, K.; Behera, S.K.; Rao, S.A.; Weng, H.; Yamagata, T. El Niño Modoki and its possible teleconnection. J. Geophys. Res. Oceans 2007, 112, C11.

- Kug, J.S.; Jin, F.F.; An, S.I. Two types of El Niño events: Cold tongue El Niño and warm pool El Niño. J. Clim. 2009, 22, 1499–1515.

- Capotondi, A.; Wittenberg, A.T.; Newman, M.; Di Lorenzo, E.; Yu, J.Y.; Braconnot, P.; Cole, J.; Dewitte, B.; Giese, B.; Guilyardi, E.; et al. Understanding ENSO diversity. Bull. Am. Meteorol. Soc. 2015, 96, 921–938.

- Chen, D.; Lian, T.; Fu, C.; Cane, M.A.; Tang, Y.; Murtugudde, R.; Song, X.; Wu, Q.; Zhou, L. Strong influence of westerly wind bursts on El Niño diversity. Nat. Geosci. 2015, 8, 339.

- Thual, S.; Majda, A.J.; Chen, N.; Stechmann, S.N. Simple stochastic model for El Niño with westerly wind bursts. Proc. Natl. Acad. Sci. USA 2016, 113, 10245–10250.

- Chen, N.; Majda, A.J. Simple dynamical models capturing the key features of the Central Pacific El Niño. Proc. Natl. Acad. Sci. USA 2016, 113, 11732–11737.

- Chen, N.; Majda, A.J.; Thual, S. Observations and mechanisms of a simple stochastic dynamical model capturing El Niño diversity. J. Clim. 2018, 31, 449–471.

- Thual, S.; Majda, A.J.; Chen, N. Mechanisms of the 2014–2016 Delayed Super El Niño Captured by Simple Dynamical Models. Clim. Dyn. 2018, in press.

- Thual, S.; Majda, A.; Chen, N. Seasonal synchronization of a simple stochastic dynamical model capturing El Niño diversity. J. Clim. 2017, 30, 10047–10066.

- Wang, X. A Note on Long-Time Behavior of Solutions to the Boussinesq System at Large Prandtl Number. Contemp. Math. 2005, 371, 315.

- Constantin, P.; Doering, C.R. Infinite Prandtl number convection. J. Stat. Phys. 1999, 94, 159–172.

- Boffetta, G.; Davoudi, J.; Eckhardt, B.; Schumacher, J. Lagrangian tracers on a surface flow: The role of time correlations. Phys. Rev. Lett. 2004, 93, 134501.

- Ide, K.; Kuznetsov, L.; Jones, C.K. Lagrangian data assimilation for point vortex systems. J. Turbul. 2002, 3.

- Apte, A.; Jones, C.K.; Stuart, A.; Voss, J. Data assimilation: Mathematical and statistical perspectives. Int. J. Numer. Methods Fluids 2008, 56, 1033–1046.

- Salman, H.; Ide, K.; Jones, C.K. Using flow geometry for drifter deployment in Lagrangian data assimilation. Tellus A 2008, 60, 321–335.

- Branicki, M.; Gershgorin, B.; Majda, A.J. Filtering skill for turbulent signals for a suite of nonlinear and linear extended Kalman filters. J. Comput. Phys. 2012, 231, 1462–1498.

- Branicki, M.; Chen, N.; Majda, A.J. Non-Gaussian test models for prediction and state estimation with model errors. Chin. Ann. Math. Ser. B 2013, 34, 29–64.

- Harlim, J.; Majda, A.J. Filtering turbulent sparsely observed geophysical flows. Mon. Weather Rev. 2010, 138, 1050–1083.

- Monahan, A.H. Temporal Filtering Enhances the Skewness of Sea Surface Winds. J. Clim. 2018.

- Monahan, A.H. The temporal autocorrelation structure of sea surface winds. J. Clim. 2012, 25, 6684–6700.

- Cousins, W.; Sapsis, T.P. Reduced-order precursors of rare events in unidirectional nonlinear water waves. J. Fluid Mech. 2016, 790, 368–388.

- Botev, Z.I.; Grotowski, J.F.; Kroese, D.P. Kernel density estimation via diffusion. Ann. Stat. 2010, 38, 2916–2957.

- Chen, N.; Majda, A.J.; Tong, X.T. Rigorous analysis for efficient statistically accurate algorithms for solving Fokker–Planck equations in large dimensions. arXiv, 2017; arXiv:1709.05585.

- Majda, A.J.; Gershgorin, B. Quantifying uncertainty in climate change science through empirical information theory. Proc. Natl. Acad. Sci. USA 2010, 107, 14958–14963.

- Majda, A.J.; Gershgorin, B. Link between statistical equilibrium fidelity and forecasting skill for complex systems with model error. Proc. Natl. Acad. Sci. USA 2011, 108, 12599–12604.

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

- Kullback, S. Letter to the editor: The Kullback-Leibler distance. Am. Stat. 1987, 41, 340–341.

- Chen, N.; Giannakis, D.; Herbei, R.; Majda, A.J. An MCMC algorithm for parameter estimation in signals with hidden intermittent instability. SIAM/ASA J. Uncertain. Quantif. 2014, 2, 647–669.

- Andrieu, C.; De Freitas, N.; Doucet, A.; Jordan, M.I. An introduction to MCMC for machine learning. Mach. Learn. 2003, 50, 5–43.

- Beskos, A.; Roberts, G.; Stuart, A.; Voss, J. MCMC methods for diffusion bridges. Stoch. Dyn. 2008, 8, 319–350.

- Golightly, A.; Wilkinson, D.J. Bayesian inference for stochastic kinetic models using a diffusion approximation. Biometrics 2005, 61, 781–788.

- Johansen, S.; Juselius, K. Maximum likelihood estimation and inference on cointegration-with applications to the demand for money. Oxf. Bull. Econ. Stat. 1990, 52, 169–210.

- Bock, R.D.; Aitkin, M. Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika 1981, 46, 443–459.

- Evensen, G. The ensemble Kalman filter for combined state and parameter estimation. IEEE Control Syst. 2009, 29, 83–104.

- Annan, J.; Hargreaves, J.; Edwards, N.; Marsh, R. Parameter estimation in an intermediate complexity earth system model using an ensemble Kalman filter. Ocean Model. 2005, 8, 135–154.

- Taylor, K.E. Summarizing multiple aspects of model performance in a single diagram. J. Geophys. Res. Atmos. 2001, 106, 7183–7192.

- Houtekamer, P.L.; Mitchell, H.L. Data assimilation using an ensemble Kalman filter technique. Mon. Weather Rev. 1998, 126, 796–811.

- Lermusiaux, P.F. Data assimilation via error subspace statistical estimation. Part II: Middle Atlantic Bight shelfbreak front simulations and ESSE validation. Mon. Weather Rev. 1999, 127, 1408–1432.

- Hendon, H.H.; Lim, E.; Wang, G.; Alves, O.; Hudson, D. Prospects for predicting two flavors of El Niño. Geophys. Res. Lett. 2009, 36, L19713.

- Kim, H.M.; Webster, P.J.; Curry, J.A. Seasonal prediction skill of ECMWF System 4 and NCEP CFSv2 retrospective forecast for the Northern Hemisphere Winter. Clim. Dyn. 2012, 39, 2957–2973.

- Majda, A.J.; Chen, N. Model Error, Information Barriers, State Estimation and Prediction in Complex Multiscale Systems. Entropy 2018. submitted.

- Majda, A.J.; Gershgorin, B. Improving model fidelity and sensitivity for complex systems through empirical information theory. Proc. Natl. Acad. Sci. USA 2011, 108, 10044–10049.

- Gershgorin, B.; Majda, A.J. Quantifying uncertainty for climate change and long-range forecasting scenarios with model errors—Part I: Gaussian models. J. Clim. 2012, 25, 4523–4548.

- Branicki, M.; Majda, A.J. Quantifying uncertainty for predictions with model error in non-Gaussian systems with intermittency. Nonlinearity 2012, 25, 2543.

- Branicki, M.; Majda, A. Quantifying Bayesian filter performance for turbulent dynamical systems through information theory. Commun. Math. Sci. 2014, 12, 901–978.

- Kleeman, R. Information theory and dynamical system predictability. Entropy 2011, 13, 612–649.

- Kleeman, R. Measuring dynamical prediction utility using relative entropy. J. Atmos. Sci. 2002, 59, 2057–2072.

- Majda, A.; Kleeman, R.; Cai, D. A mathematical framework for quantifying predictability through relative entropy. Methods Appl. Anal. 2002, 9, 425–444.

- Xu, Q. Measuring information content from observations for data assimilation: Relative entropy versus Shannon entropy difference. Tellus A 2007, 59, 198–209.

- Barato, A.; Seifert, U. Unifying three perspectives on information processing in stochastic thermodynamics. Phys. Rev. Lett. 2014, 112, 090601.

- Kawaguchi, K.; Nakayama, Y. Fluctuation theorem for hidden entropy production. Phys. Rev. E 2013, 88, 022147.

- Pham, D.T. Stochastic methods for sequential data assimilation in strongly nonlinear systems. Mon. Weather Rev. 2001, 129, 1194–1207.

- Majda, A.J. Statistical energy conservation principle for inhomogeneous turbulent dynamical systems. Proc. Natl. Acad. Sci. USA 2015, 112, 8937–8941.

- Harlim, J.; Majda, A. Filtering nonlinear dynamical systems with linear stochastic models. Nonlinearity 2008, 21, 1281.

- Gajic, Z.; Lelic, M. Modern Control Systems Engineering; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1996.

- Grabowski, W.W.; Smolarkiewicz, P.K. CRCP: A cloud resolving convection parameterization for modeling the tropical convecting atmosphere. Phys. D Nonlinear Phenom. 1999, 133, 171–178.

- Grabowski, W.W. An improved framework for superparameterization. J. Atmos. Sci. 2004, 61, 1940–1952.

- Doucet, A.; Godsill, S.; Andrieu, C. On sequential Monte Carlo sampling methods for Bayesian filtering. Stat. Comput. 2000, 10, 197–208.

- Harlim, J.; Majda, A.J. Test models for filtering with superparameterization. Multiscale Model. Simul. 2013, 11, 282–308.

- Lee, Y.; Majda, A.J. Multiscale methods for data assimilation in turbulent systems. Multiscale Model. Simul. 2015, 13, 691–713.

- Daley, R. Atmospheric Data Analysis, Cambridge Atmospheric and Space Science Series; Cambridge University Press: Cambridge, UK, 1991; Volume 6966, p. 25.

- Sorenson, H.W.; Alspach, D.L. Recursive Bayesian estimation using Gaussian sums. Automatica 1971, 7, 465–479.

- Hoteit, I.; Luo, X.; Pham, D.T. Particle Kalman filtering: A nonlinear Bayesian framework for ensemble Kalman filters. Mon. Weather Rev. 2012, 140, 528–542.

- Alspach, D.L.; Samant, V.S.; Sorenson, H.W. Practical Control Algorithms for Nonlinear Stochastic Systems and Investigations of Nonlinear Filters; Technical Report for Statistics and Probability: San Diego, CA, USA, July 1980.

- Lee, Y.; Majda, A.J. State estimation and prediction using clustered particle filters. Proc. Natl. Acad. Sci. USA 2016, 113, 14609–14614.

- Majda, A.; McLaughlin, D.; Tabak, E. A one-dimensional model for dispersive wave turbulence. J. Nonlinear Sci. 1997, 7, 9–44.

- Cai, D.; Majda, A.J.; McLaughlin, D.W.; Tabak, E.G. Dispersive wave turbulence in one dimension. Phys. D Nonlinear Phenom. 2001, 152, 551–572.

- Qi, D.; Majda, A.J. Blended particle methods with adaptive subspaces for filtering turbulent dynamical systems. Phys. D Nonlinear Phenom. 2015, 298, 21–41.

- Sapsis, T.P.; Majda, A.J. Blended reduced subspace algorithms for uncertainty quantification of quadratic systems with a stable mean state. Phys. D Nonlinear Phenom. 2013, 258, 61–76.

- Keating, S.R.; Majda, A.J.; Smith, K.S. New methods for estimating ocean eddy heat transport using satellite altimetry. Mon. Weather Rev. 2012, 140, 1703–1722.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, N.; Majda, A.J. Conditional Gaussian Systems for Multiscale Nonlinear Stochastic Systems: Prediction, State Estimation and Uncertainty Quantification. Entropy 2018, 20, 509. https://doi.org/10.3390/e20070509
