Abstract

Maximum entropy models are increasingly being used to describe the collective activity of neural populations with measured mean neural activities and pairwise correlations, but the full space of probability distributions consistent with these constraints has not been explored. We provide upper and lower bounds on the entropy of the minimum entropy distribution over arbitrarily large collections of binary units with any fixed set of mean values and pairwise correlations. We also construct specific low-entropy distributions for several relevant cases. Surprisingly, the minimum entropy solution has entropy scaling logarithmically with system size for any set of first- and second-order statistics consistent with arbitrarily large systems. We further demonstrate that some sets of these low-order statistics can only be realized by small systems. Our results show how only small amounts of randomness are needed to mimic low-order statistical properties of highly entropic distributions, and we discuss some applications for engineered and biological information transmission systems.

1. Introduction

Maximum entropy models are central to the study of physical systems in thermal equilibrium [], and they have recently been found to model protein folding [,], antibody diversity [], and neural population activity [,,,,] quite well (see [] for a different finding). In part due to this success, these types of models have also been used to infer functional connectivity in complex neural circuits [,,] and to model collective phenomena of systems of organisms, such as flock behavior [].

This broad application of maximum entropy models is perhaps surprising since the usual physical arguments involving ergodicity or equality among energetically accessible states are not obviously applicable for such systems, though maximum entropy models have been justified in terms of imposing no structure beyond what is explicitly measured [,]. With this approach, the choice of measured constraints fully specifies the corresponding maximum entropy model. More generally, choosing a set of constraints restricts the set of consistent probability distributions—the maximum entropy solution being only one of all possible consistent probability distributions. If the space of distributions were sufficiently constrained by observations so that only a small number of very similar models were consistent with the data, then agreement between the maximum entropy model and the data would be an unavoidable consequence of the constraints rather than a consequence of the unique suitability of the maximum entropy model for the dataset in question.

In the field of systems neuroscience, understanding the range of allowed entropies for given constraints is an area of active interest. For example, there has been controversy [,,,,] over the notion that small pairwise correlations can conspire to constrain the behavior of large neural ensembles, which has led to speculation about the possibility that groups of ∼200 neurons or more might employ a form of error correction when representing sensory stimuli []. Recent work [] has extended the number of simultaneously recorded neurons modeled using pairwise statistics up to 120 neurons, pressing closer to this predicted error correcting limit. This controversy is in part a reflection of the fact that pairwise models do not always allow accurate extrapolation from small populations to large ensembles [,], pointing to the need for exact solutions in the important case of large neural systems. Another recent paper [] has also examined specific classes of biologically-plausible neural models whose entropy grows linearly with system size. Intriguingly, these authors point out that entropy can be subextensive, at least for one special distribution that is not well matched to most neural data (coincidentally, that special case was originally studied nearly 150 years ago [] when entropy was a new concept, though not in the present context). Understanding the range of possible scaling properties of the entropy in a more general setting is of particular importance to neuroscience because of its interpretation as a measure of the amount of information communicable by a neural system to groups of downstream neurons.

Previous authors have studied the large scale behavior of these systems with maximum entropy models expanded to second- [], third- [], and fourth-order []. Here we use non-perturbative methods to derive rigorous upper and lower bounds on the entropy of the minimum entropy distribution for any fixed sets of means and pairwise correlations possible for arbitrarily large systems (Equations (1)–(9); Figure 1 and Figure 2). We also derive lower bounds on the maximum entropy distribution (Equation (8)) and construct explicit low and high entropy models (Equations (28) and (29)) for a broad array of cases including the full range of possible uniform first- and second-order constraints realizable for large systems. Interestingly, we find that entropy differences between models with the same first- and second-order statistics can be nearly as large as is possible between any two arbitrary distributions over the same number of binary variables, provided that the solutions do not run up against the boundary of the space of allowed constraint values (see Section 2.1 and Figure 3 for a simple illustration of this phenomenon). This boundary is structured in such a way that some ranges of values for low order statistics are only satisfiable by systems below some critical size. Thus, for cases away from the boundary, entropy is only weakly constrained by these statistics, and the success of maximum entropy models in biology [,,,,,,,,,], when it occurs for large enough systems [], can represent a real triumph of the maximum entropy approach.

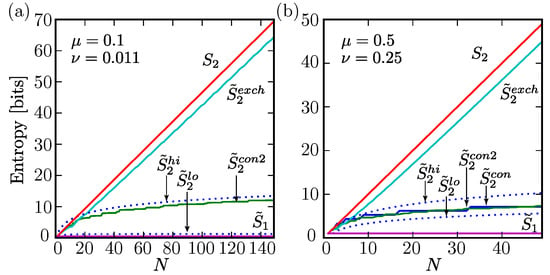

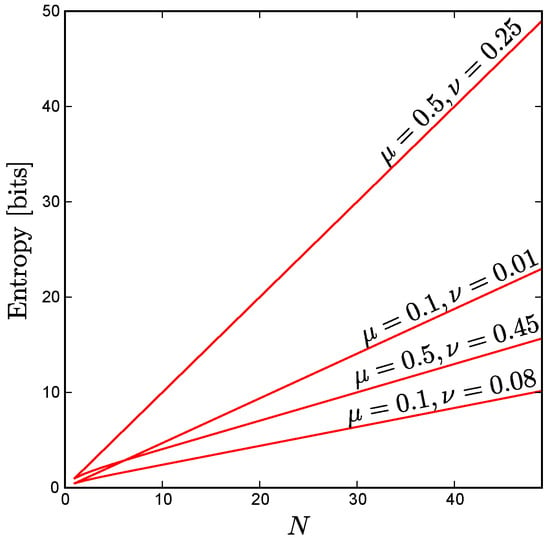

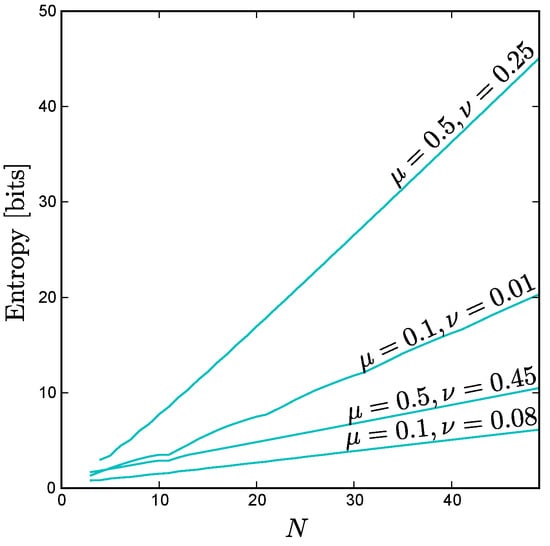

Figure 1.

Minimum and maximum entropy for fixed uniform constraints as a function of N. The minimum entropy grows no faster than logarithmically with the system size N for any mean activity level $\mu$ and pairwise correlation strength $\nu$. (a) In a parameter regime relevant for neural population activity in the retina [,], we can construct an explicit low entropy solution that grows logarithmically with N, unlike the linear behavior of the maximum entropy solution. Note that the linear behavior of the maximum entropy solution is only possible because these parameter values remain within the boundary of allowed $\mu$ and $\nu$ values (see Appendix C); (b) Even for mean activities and pairwise correlations matched to the global maximum entropy solution ($\mu = 1/2$, $\nu = 1/4$), we can construct explicit low entropy solutions and a lower bound on the entropy that each grow logarithmically with N, in contrast to the linear behavior of the maximum entropy solution and the finitely exchangeable minimum entropy solution. The entropy of the minimum entropy distribution consistent with the mean firing rates alone remains constant as a function of N.

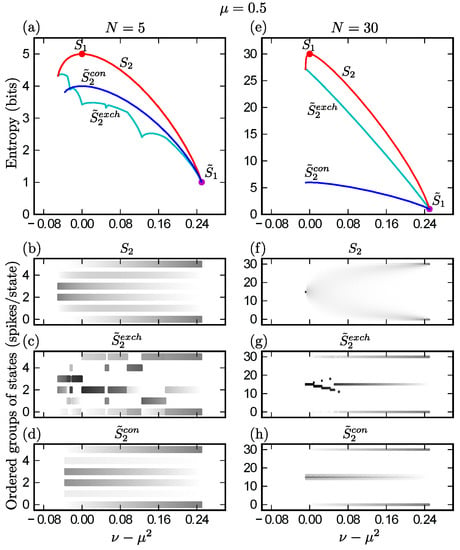

Figure 2.

Minimum and maximum entropy models for uniform constraints. (a) Entropy as a function of the strength of pairwise correlations $\nu$ for the maximum entropy model, the finitely exchangeable minimum entropy model, and a constructed low entropy solution, all corresponding to a fixed mean activity $\mu$ and system size N. Filled circles indicate the global minimum and maximum; (b–d) Support of the maximum entropy (b), finitely exchangeable minimum entropy (c), and low entropy (d) models, corresponding to the three curves in panel (a). States are grouped by the number of active units; darker regions indicate higher total probability for each group of states; (e–h) Same as for panels (a) through (d), but for a larger N. Note that, with rising N, the cusps in the curve become much less pronounced.

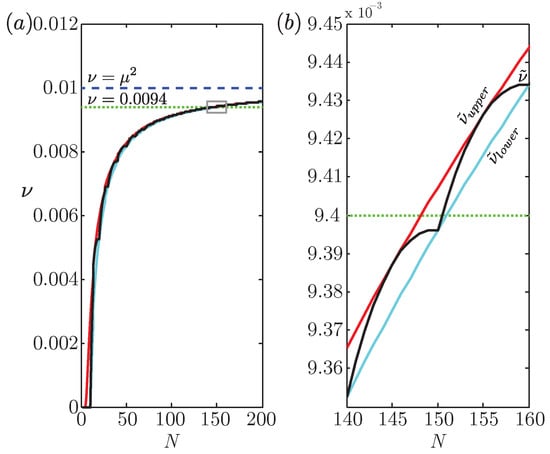

Figure 3.

An example of uniform, low-level statistics that can be realized by small groups of neurons but not by any system greater than some critical size. (a) Upper (red curve) and lower (cyan curve) bounds on the minimum value (black curve) of the pairwise correlation $\nu$ shared by all pairs of neurons are plotted as a function of system size N, assuming that every neuron has the same mean activity $\mu$. Note that all three curves asymptote to $\mu^2$ as $N \to \infty$ (dashed blue line); (b) Enlarged portion of (a) outlined in grey reveals that groups of 150 neurons can exhibit the uniform constraints $\mu$ and $\nu$ (green dotted line), but this is not possible for any larger group.

Our results also have relevance for engineered information transmission systems. We show that empirically measured first-, second-, and even third-order statistics are essentially inconsequential for testing coding optimality in a broad class of such systems, whereas other statistical properties, such as finite exchangeability [], do guarantee information transmission near channel capacity [,], the maximum possible information rate given the properties of the information channel. A better understanding of minimum entropy distributions subject to constraints is also important for minimal state space realization [,], a form of optimal model selection based on an interpretation of Occam’s Razor complementary to that of Jaynes []. Intuitively, maximum entropy models impose no structure beyond that needed to fit the measured properties of a system, whereas minimum entropy models require the fewest “moving parts” in order to fit the data. In addition, our results have implications for computer science, as algorithms for generating binary random variables with constrained statistics and low entropy have found many applications (e.g., [,,,,,,,,,,,,,,,]).

Problem Setup

To make these ideas concrete, consider an abstract description of a neural ensemble consisting of N spiking neurons. In any given time bin, each neuron i has binary state $\sigma_i$ denoting whether it is currently firing an action potential ($\sigma_i = 1$) or not ($\sigma_i = 0$). The state of the full network is represented by $\sigma = (\sigma_1, \ldots, \sigma_N)$. Let $p(\sigma)$ be the probability of state $\sigma$ so that the distribution over all $2^N$ states of the system is represented by $p(\sigma) \ge 0$, $\sum_{\sigma} p(\sigma) = 1$. Although we will use this neural framework throughout the paper, note that all of our results will hold for any type of system consisting of binary variables.
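As a concrete representation, the minimal sketch below stores a distribution as a Python dictionary that maps each state $\sigma \in \{0,1\}^N$ to its probability $p(\sigma)$. It computes the first- and second-order statistics $\mu_i = \langle \sigma_i \rangle$ and $\nu_{ij} = \langle \sigma_i \sigma_j \rangle$ used throughout. The function name `moments` is our own, and this is an illustration rather than code from the original study.

```python
from itertools import product

def moments(p, N):
    """Means mu[i] = <sigma_i> and pairwise rates nu[i][j] = <sigma_i sigma_j>
    of a distribution p: dict mapping N-tuples of 0/1 to probabilities.
    (The diagonal nu[i][i] equals mu[i] because sigma_i**2 = sigma_i.)"""
    mu = [0.0] * N
    nu = [[0.0] * N for _ in range(N)]
    for sigma, prob in p.items():
        active = [i for i in range(N) if sigma[i]]
        for i in active:
            mu[i] += prob
            for j in active:
                nu[i][j] += prob
    return mu, nu

# Example: three independent fair neurons, i.e. uniform over all 2**3 states.
N = 3
p_uniform = {sigma: 1 / 2 ** N for sigma in product((0, 1), repeat=N)}
mu, nu = moments(p_uniform, N)
```

For the uniform distribution, every $\mu_i$ is 1/2 and every off-diagonal $\nu_{ij}$ is 1/4, which are the chance-level values for independent fair neurons.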

In neural studies using maximum entropy models, electrophysiologists typically measure the time-averaged firing rates and pairwise event rates and fit the maximum entropy model consistent with these constraints, yielding a Boltzmann distribution for an Ising spin glass []. This “inverse” problem of inferring the interaction and magnetic field terms in an Ising spin glass Hamiltonian that produce the measured means and correlations is nontrivial, but there has been progress [,,,,,,]. The maximum entropy distribution is not the only one consistent with these observed statistics, however. In fact, there are typically many such distributions, and we will refer to the complete set of these as the solution space for a given set of constraints. Little is known about the minimum entropy permitted for a particular solution space.
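For small N, the inverse problem can be solved by brute force. The minimal sketch below enumerates all $2^N$ states, so it is only practical for a handful of units. It uses plain gradient ascent rather than any of the specialized methods cited above to fit fields $h_i$ and couplings $J_{ij}$ of $P(\sigma) \propto \exp(\sum_i h_i \sigma_i + \sum_{i<j} J_{ij} \sigma_i \sigma_j)$ so that the model reproduces given $\mu_i$ and $\nu_{ij}$. The function name, step size, and stopping rule are illustrative assumptions.

```python
import math
from itertools import product

def fit_pairwise_maxent(mu, nu, lr=1.0, tol=1e-9, max_iter=50_000):
    """Fit P(sigma) proportional to exp(sum_i h_i s_i + sum_{i<j} J_ij s_i s_j)
    on sigma in {0,1}^N so that its means and pairwise rates match mu[i] and
    nu[i][j] (i < j). Plain gradient ascent on the concave log-likelihood,
    enumerating all 2**N states, so only practical for small N."""
    N = len(mu)
    states = list(product((0, 1), repeat=N))
    pairs = [(i, j) for i in range(N) for j in range(i + 1, N)]
    h = [0.0] * N
    J = {pair: 0.0 for pair in pairs}
    for _ in range(max_iter):
        # Log-weights, shifted by their maximum before exponentiating for stability.
        logw = [sum(h[i] * s[i] for i in range(N))
                + sum(J[i, j] * s[i] * s[j] for i, j in pairs) for s in states]
        top = max(logw)
        w = [math.exp(v - top) for v in logw]
        Z = sum(w)
        p = [x / Z for x in w]
        # Gradient of the log-likelihood: data moments minus model moments.
        g1 = [mu[i] - sum(q * s[i] for q, s in zip(p, states)) for i in range(N)]
        g2 = {(i, j): nu[i][j] - sum(q * s[i] * s[j] for q, s in zip(p, states))
              for i, j in pairs}
        if max(abs(g) for g in g1 + list(g2.values())) < tol:
            break
        for i in range(N):
            h[i] += lr * g1[i]
        for pair in pairs:
            J[pair] += lr * g2[pair]
    entropy = -sum(q * math.log2(q) for q in p if q > 0)
    return h, J, dict(zip(states, p)), entropy

# Chance-level coincidences: nu_ij = mu_i * mu_j = 0.09.
h, J, p, S = fit_pairwise_maxent([0.3] * 3, [[0.09] * 3 for _ in range(3)])
```

With chance-level pairwise rates, the fitted couplings should come out near zero. The entropy should then be close to N times the binary entropy of $\mu$, as expected for independent units.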

Our question is: Given a set of observed mean firing rates and pairwise correlations between neurons, what are the possible entropies for the system? We will denote the maximum (minimum) entropy compatible with a given set of imposed correlations up to order n by $S_n^{\max}$ ($S_n^{\min}$). The maximum entropy framework [] provides a hierarchical representation of neural activity: as increasingly higher order correlations are measured, the corresponding model entropy is reduced (or remains the same) until it reaches a lower limit. Here we introduce a complementary, minimum entropy framework: as higher order correlations are specified, the corresponding model entropy is increased (or unchanged) until all correlations are known. The range of possible entropies for any given set of constraints is the gap ($S_n^{\max} - S_n^{\min}$) between these two model entropies, and our primary concern is whether this gap is greatly reduced for a given set of observed first- or second-order statistics. We find that, for many cases, the gap grows linearly with the system size N, up to a logarithmic correction.

2. Results

We prove the following bounds on the minimum and maximum entropies for fixed sets of values of first and second order statistics, $\mu_i = \langle \sigma_i \rangle$ and $\nu_{ij} = \langle \sigma_i \sigma_j \rangle$, respectively. All entropies are given in bits.

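For the minimum entropy:

$$\log_2\left(\frac{N}{1 + (N-1)\alpha}\right) \le S_2^{\min} \le \log_2\left(1 + N + \frac{N(N-1)}{2}\right), \qquad (1)$$

where $\alpha$ is the average of $\alpha_{ij} = (4\nu_{ij} - 2\mu_i - 2\mu_j + 1)^2$ over all $i, j \in \{1, \ldots, N\}$ with $i \neq j$. Perhaps surprisingly, the scaling behavior of the minimum entropy does not depend on the details of the sets of constraint values: for large systems the entropy floor does not contain tall peaks or deep valleys as one varies $\mu_i$, $\nu_{ij}$, or N.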
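The bounds on the minimum entropy are cheap to evaluate. The lower bound is $\log_2(N/(1+(N-1)\alpha))$, where $\alpha$ averages $\alpha_{ij} = (4\nu_{ij} - 2\mu_i - 2\mu_j + 1)^2$ over ordered pairs $i \neq j$. The upper bound is $\log_2(1 + N + N(N-1)/2)$. The minimal sketch below computes both, assuming consistent constraints and $N \ge 2$. The function name is hypothetical.

```python
import math

def min_entropy_bounds(mu, nu):
    """Lower and upper bounds (bits) on the minimum entropy S_2^min for binary
    units with means mu[i] and pairwise rates nu[i][j] (only i != j is used):
        log2(N / (1 + (N - 1) * alpha)) <= S_2^min <= log2(1 + N + N(N-1)/2),
    where alpha averages (4 nu_ij - 2 mu_i - 2 mu_j + 1)**2 over ordered pairs."""
    N = len(mu)
    alpha_sum = sum((4 * nu[i][j] - 2 * mu[i] - 2 * mu[j] + 1) ** 2
                    for i in range(N) for j in range(N) if i != j)
    alpha = alpha_sum / (N * (N - 1))
    lower = math.log2(N / (1 + (N - 1) * alpha))
    upper = math.log2(1 + N + N * (N - 1) // 2)
    return lower, upper

# Uniform constraints mu = 1/2, nu = 1/4 for N = 8 units.
N = 8
lower, upper = min_entropy_bounds([0.5] * N, [[0.25] * N for _ in range(N)])
```

For uniform $\mu = 1/2$ and $\nu = 1/4$, every $\alpha_{ij}$ vanishes and the lower bound becomes $\log_2 N$. For perfectly correlated units ($\nu = \mu$), $\alpha = 1$ and the lower bound drops to zero.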

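We emphasize that the bounds in Equation (1) are valid for arbitrary sets of mean firing rates and pairwise correlations, but we will often focus on the special class of distributions with uniform constraints:

$$\mu_i = \mu \quad \text{for all } i, \qquad (2)$$

$$\nu_{ij} = \nu \quad \text{for all } i \neq j. \qquad (3)$$

The allowed values for $\mu$ and $\nu$ that can be achieved for arbitrarily large systems (see Appendix A) are

$$0 \le \mu \le 1, \qquad \mu^2 \le \nu \le \mu. \qquad (4)$$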

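For uniform constraints, Equation (1) reduces to

$$\log_2\left(\frac{N}{1 + (N-1)\alpha}\right) \le S_2^{\min} \le \log_2\left(1 + N + \frac{N(N-1)}{2}\right), \qquad (5)$$

where $\alpha = (4\nu - 4\mu + 1)^2$. In most cases, the lower bound in Equation (1) asymptotes to a constant, but in the special case for which $\mu$ and $\nu$ have values consistent with the global maximum entropy solution ($\mu = 1/2$ and $\nu = 1/4$), we can give the higher bound:

$$\log_2 N \le S_2^{\min} \le \log_2\left(1 + N + \frac{N(N-1)}{2}\right). \qquad (6)$$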

For the maximum entropy, it is well known that:

$$S_2^{\max} \le N, \qquad (7)$$

which is valid for any set of values for $\mu_i$ and $\nu_{ij}$. Additionally, for any set of uniform constraints that can be achieved by arbitrarily large systems (Equation (4)) other than $\nu = \mu$, which corresponds to the case in which all N neurons are perfectly correlated, we can derive upper and lower bounds on the maximum entropy that each scale linearly with the system size:

where and are independent of N.

An important class of probability distributions is the exchangeable distributions [], which are distributions over multiple variables that are symmetric under any permutation of those variables. For binary systems, exchangeable distributions have the property that the probability of a sequence of ones and zeros is only a function of the number of ones in the binary string. We have constructed a family of exchangeable distributions, with entropy $S_{\text{exch}}$, that we conjecture to be minimum entropy exchangeable solutions. The entropy of our exchangeable constructions scales linearly with N:

where and do not depend on N. We have computationally confirmed that this is indeed a minimum entropy exchangeable solution for .

Figure 1 illustrates the scaling behavior of these various bounds for uniform constraints in two parameter regimes of interest. Figure 2 shows how the entropy depends on the level of correlation ($\nu$) for the maximum entropy solution, the minimum entropy exchangeable solution, and a low entropy solution, for a particular value of mean activity ($\mu$) at each of two system sizes.

2.1. Limits on System Growth

Physicists are often faced with the problem of having to determine some set of experimental predictions (e.g., mean values and pairwise correlations of spins in a magnet) for some model system defined by a given Hamiltonian, which specifies the energies associated with any state of the system. Typically, one is interested in the limiting behavior as the system size tends to infinity. In this canonical situation, no matter how the various terms in the Hamiltonian are defined as the system grows, the existence of a well-defined Hamiltonian guarantees that there exists a (maximum entropy) solution for the probability distribution for any system size.

However, here we are studying the inverse problem of deducing the underlying model based on measured low-order statistics [,,,,,]. In particular, we are interested in the minimum and maximum entropy models consistent with a given set of specified means and pairwise correlations. Clearly, both of these types of (potentially degenerate) models must exist whenever there exists at least one distribution consistent with the specified statistics, but as we now show, some sets of constraints can only be realized for small systems.

A Simple Example

To illustrate this point, consider the following example, which is arguably the simplest case exhibiting a cap on system size. A system of N = 150 neurons can be constructed to satisfy a particular set of uniform constraints $\mu_i = \mu$ and $\nu_{ij} = \nu$ (Figure 3b), but no solution is possible for any $N > 150$. To prove this, we first observe that any set of uniform constraints that admits at least one solution must admit at least one exchangeable solution, since averaging any solution over all permutations of the neurons preserves uniform means and correlations (see Appendix A and Appendix D). Armed with this fact, we can derive closed-form expressions for upper and lower bounds on the minimum value of $\nu$ consistent with N and $\mu$ (Appendix Equation (A25)), as well as the actual minimum value of $\nu$ for any given value of N, as depicted in Figure 3.

Thus, this system defined by its low-level statistics cannot be grown indefinitely, not because the entropy decreases beyond some system size, which is impossible (e.g., Theorem 2.2.1 of []), but rather because no solution of any kind is possible for these particular constraints for ensembles of more than 150 neurons. We believe that this relatively straightforward example captures the essence of the more subtle phenomenon described by Schneidman and colleagues [], who pointed out that a naive extrapolation of the maximum entropy model fit to their retinal data to larger system sizes results in a conundrum at around $N \approx 200$ neurons.
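The cap on system size can be checked with exact arithmetic. Averaging any solution over permutations preserves uniform constraints, so it suffices to look at exchangeable distributions. These are described by the distribution of the number $K$ of active units. Then $\nu = (E[K^2] - E[K])/(N(N-1))$ with $E[K] = N\mu$, and $E[K^2]$ is smallest when $K$ takes only the two integers adjacent to $N\mu$. The sketch below uses this to compute the exact minimum $\nu$ for each N; its function names are our own. The constraint values in the usage example, $\mu = 1/2$ and $\nu = 1/4 - 1/596$, are an illustrative assumption chosen to produce a cap at N = 150. They are not claimed to be the values used for Figure 3.

```python
import math
from fractions import Fraction

def min_nu(mu, N):
    """Exact minimum of the uniform pairwise rate nu = <sigma_i sigma_j> over all
    distributions of N >= 2 binary units that each have mean mu.

    It suffices to consider exchangeable solutions, described by the number K of
    active units: nu = (E[K^2] - E[K]) / (N(N-1)) with E[K] = N*mu. E[K^2] is
    smallest when K takes only the two integers adjacent to N*mu, which gives
    E[K^2] = (N*mu)^2 + f(1 - f), where f is the fractional part of N*mu."""
    mean_k = N * mu
    f = mean_k - math.floor(mean_k)
    return (mean_k ** 2 + f * (1 - f) - mean_k) / (N * (N - 1))

def largest_feasible_N(mu, nu, N_max=10_000):
    """Largest N in [2, N_max] for which uniform (mu, nu) can be realized, or None.
    Feasibility means min_nu(mu, N) <= nu <= mu (nu = mu: all units fire together,
    and every nu in between is reached by mixing the two extreme solutions)."""
    feasible = [N for N in range(2, N_max + 1) if min_nu(mu, N) <= nu <= mu]
    return max(feasible) if feasible else None

# Illustrative constraint values (not taken from Figure 3).
mu = Fraction(1, 2)
nu = Fraction(1, 4) - Fraction(1, 596)
cap = largest_feasible_N(mu, nu, N_max=1000)
```

With these values, every N from 2 to 150 is feasible and no larger N is. Exact fractions are used because the feasibility margin at the cap is far smaller than typical floating-point rounding errors would safely allow.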

For our simple example, it was straightforward to decide what rules to follow when growing the system—both the means and pairwise correlations were fixed and perfectly uniform across the network at every stage. In practice, real neural activities and most other types of data exhibit some variation in their mean activities and correlations across the measured population, so in order to extrapolate to larger systems, one must decide how to model the distribution of statistical values involving the added neurons.

Fortunately, despite this complication, we have been able to derive upper and lower bounds on the minimum entropy for arbitrarily large N. In Appendix C, we derive a lower bound on the maximum entropy for the special case of uniform constraints achievable for arbitrarily large systems, but such a bound for the more general case would depend on the details of how the statistics of the system change with N.

Finally, we mention that this toy model can be thought of as an example of a “frustrated” system [], in that traveling in a closed loop of an odd number of neurons involves an odd number of anticorrelated pairs. By “anticorrelated”, we mean that the probability of simultaneous firing of a pair of neurons is less than chance, $\nu_{ij} < \mu_i \mu_j$, but note that $\nu_{ij}$ is always non-negative due to our convention of labeling active and inactive units with ones and zeros, respectively. However, frustrated systems are not typically defined by their correlational structure, but rather by their Hamiltonians, and there are often many Hamiltonians that are consistent with a given set of observed constraints, so there does not seem to be a one-to-one relationship between these two notions of frustration. In the more general setting of nonuniform constraints, the relationship between frustrated systems as defined by their correlational structure and those that cannot be grown arbitrarily large is much more complex.

2.2. Bounds on Minimum Entropy

Entropy is a strictly concave function of the probabilities and therefore has a unique maximum that can be identified using standard methods [], at least for systems that possess the right symmetries or that are sufficiently small. In Section 2.3, we will show that the maximum entropy for many systems with specified means and pairwise correlations scales linearly with N (Equation (8), Figure 1). We obtain bounds on minimum entropy by exploiting the geometry of the entropy function.

2.2.1. Upper Bound on the Minimum Entropy

We prove below that the minimum entropy distribution exists at a vertex of the allowed space of probabilities, where most states have probability zero []. Our challenge then is to determine in which vertex a minimum resides. The entropy function is nonlinear, precluding obvious approaches from linear programming, and the dimensionality of the probability space grows exponentially with N, making exhaustive search and gradient descent techniques intractable for all but small N. Fortunately, we can compute lower and upper bounds on the entropy of the minimum entropy solution for all N (Figure 1), and we have constructed two families of explicit solutions with low entropies (Figure 1 and Figure 2) for a broad parameter regime covering all allowed values for $\mu$ and $\nu$ in the case of uniform constraints that can be achieved by arbitrarily large systems (see Equation (A24), Figure A2 in the Appendix).

Our goal is to minimize the entropy $S$ as a function of the probabilities $p_i$, where

$$S(p) = -\sum_{i=1}^{n} p_i \log_2 p_i,$$

$n$ is the number of states, the $p_i$ satisfy a set of $m$ independent linear constraints, and $p_i \ge 0$ for all $i$. For the main problem we consider, $n = 2^N$. The number of constraints (normalization, mean firing rates, and pairwise correlations) grows quadratically with N:

$$m = 1 + N + \frac{N(N-1)}{2}.$$

The space of normalized probability distributions is the standard simplex in $n - 1$ dimensions. Each additional linear constraint on the probabilities introduces a hyperplane in this space. If the constraints are consistent and independent, then the intersection of these hyperplanes defines a $d = n - m$ dimensional affine space, which we call $\mathcal{C}$. All solutions are constrained to the intersection between $\mathcal{C}$ and the simplex, and this solution space is a convex polytope of dimension $\le d$, which we refer to as $\mathcal{R}$. A point within a convex polytope can always be expressed as a convex combination of its vertices; therefore, if $v_1, \ldots, v_r$ are the vertices of $\mathcal{R}$, we may express

$$p = \sum_{i=1}^{r} a_i v_i,$$

where $r$ is the total number of vertices, $a_i \ge 0$, and $\sum_{i=1}^{r} a_i = 1$.

Using the concavity of the entropy function, we will now show that the minimum entropy over the solution space is attained at one (or possibly more) of its vertices. Moreover, these vertices correspond to probability distributions with small support, specifically a support size no greater than $m$ (see Appendix B). This means that the global minimum will occur at the (possibly degenerate) vertex that has the lowest entropy, which we denote as $v^*$: writing a feasible distribution as a convex combination $p = \sum_i a_i v_i$ of the vertices $v_i$, concavity gives

$$S(p) \ge \sum_{i=1}^{r} a_i S(v_i) \ge \min_i S(v_i) = S(v^*).$$

It follows that $S_2^{\min} = S(v^*)$.

Moreover, if a distribution satisfying the constraints exists, then there is one with at most $m$ nonzero $p_i$ (e.g., from arguments as in []). Together, these two facts imply that there are minimum entropy distributions with a maximum of $m$ nonzero $p_i$. This means that even though the state space may grow exponentially with N, the support of the minimum entropy solution for fixed means and pairwise correlations will only scale quadratically with N.

The maximum entropy possible for a given support size occurs when the probability is evenly distributed across the support, in which case the entropy equals the logarithm of the support size. This allows us to give an upper bound on the minimum entropy as

$$S_2^{\min} \le \log_2 m = \log_2\left(1 + N + \frac{N(N-1)}{2}\right).$$

Note that this bound is quite general: as long as the constraints are independent and consistent, this result holds regardless of the specific values of the $\mu_i$ and $\nu_{ij}$.
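For N = 3, the geometry can be seen directly. With the three means and three pairwise rates fixed, there are $8 - 7 = 1$ free directions. The solution space is therefore a line segment parameterized by the triple moment $t = \langle \sigma_1 \sigma_2 \sigma_3 \rangle$, and its two endpoints are the vertices. The illustrative sketch below recovers $p(\sigma)$ from the moments by inclusion-exclusion (Möbius inversion), finds the feasible range of $t$, and evaluates the entropy along the segment. Helper names are our own.

```python
import math
from fractions import Fraction
from itertools import combinations, product

def state_probs(moment, N):
    """Inclusion-exclusion (Moebius inversion): p(sigma) from the moments
    m(S) = <prod_{i in S} sigma_i>, given as a dict keyed by frozensets of
    unit indices, with m(empty set) = 1."""
    probs = {}
    for sigma in product((0, 1), repeat=N):
        active = frozenset(i for i in range(N) if sigma[i])
        inactive = [i for i in range(N) if not sigma[i]]
        total = Fraction(0)
        for r in range(len(inactive) + 1):
            for T in combinations(inactive, r):
                total += (-1) ** r * moment[active | frozenset(T)]
        probs[sigma] = total
    return probs

def triple_family(mu, nu):
    """N = 3: with means mu[i] and pairwise rates nu[(i, j)] fixed, every p(sigma)
    is affine in the free triple moment t = <sigma_0 sigma_1 sigma_2>.
    Returns a function t -> p and the feasible interval [lo, hi] of t
    (assumes the constraints are consistent, so lo <= hi)."""
    def probs_at(t):
        m = {frozenset(): Fraction(1), frozenset((0, 1, 2)): t}
        for i in range(3):
            m[frozenset((i,))] = mu[i]
        for (i, j), v in nu.items():
            m[frozenset((i, j))] = v
        return state_probs(m, 3)
    p0, p1 = probs_at(Fraction(0)), probs_at(Fraction(1))
    slope = {s: p1[s] - p0[s] for s in p0}
    # Each state requires p0[s] + slope[s] * t >= 0.
    lo = max(-p0[s] / b for s, b in slope.items() if b > 0)
    hi = min(-p0[s] / b for s, b in slope.items() if b < 0)
    return probs_at, lo, hi

def entropy_bits(probs):
    return -sum(float(q) * math.log2(float(q)) for q in probs.values() if q > 0)

# Uniform constraints matching independent fair bits.
mu = [Fraction(1, 2)] * 3
nu = {(0, 1): Fraction(1, 4), (0, 2): Fraction(1, 4), (1, 2): Fraction(1, 4)}
probs_at, lo, hi = triple_family(mu, nu)
grid = [lo + (hi - lo) * Fraction(k, 20) for k in range(21)]
entropies = [entropy_bits(probs_at(t)) for t in grid]
```

For $\mu_i = 1/2$ and $\nu_{ij} = 1/4$, the entropy is 2 bits at both endpoints, each supported on 4 states (no more than $m = 7$). It rises to 3 bits at $t = 1/8$, where the distribution is uniform. The minimum therefore sits at a vertex, as the concavity argument requires.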

2.2.2. Lower Bound on the Minimum Entropy

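We can also use a concavity argument to derive a lower bound on the entropy as in Equation (1):

$$S_2^{\min}(N, \{\mu_i\}, \{\nu_{ij}\}) \ge \log_2\left(\frac{N^2}{N + \sum_{i \neq j} \alpha_{ij}}\right), \qquad (15)$$

where $S_2^{\min}(N, \{\mu_i\}, \{\nu_{ij}\})$ is the minimum entropy given a network of size N with constraint values $\mu_i$ and $\nu_{ij}$, $\alpha_{ij} = (4\nu_{ij} - 2\mu_i - 2\mu_j + 1)^2$, and the sum is taken over all $i, j \in \{1, \ldots, N\}$ with $i \neq j$. Taken together with the upper bound, this fully characterizes the scaling behavior of the entropy floor as a function of N.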

To derive this lower bound, note that the concavity of the logarithm (Jensen's inequality) allows us to write

$$S(p) = -\sum_i p_i \log_2 p_i \ge -\log_2 \sum_i p_i^2. \qquad (16)$$

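Using this relation to find a lower bound on $S(p)$ requires an upper bound on $\sum_i p_i^2$, provided by the Frobenius norm of the correlation matrix $C_{ij} = \langle s_i s_j \rangle$, where the states $s_i = 2\sigma_i - 1$ are defined to take values in $\{-1, 1\}$ rather than $\{0, 1\}$:

$$\sum_i p_i^2 \le \frac{\|C\|_F^2}{N^2}. \qquad (17)$$

This holds because $\|C\|_F^2 = \sum_{s, s'} p(s)\, p(s')\, (s \cdot s')^2$ is a sum of nonnegative terms, and the diagonal terms $s = s'$ alone contribute $N^2 \sum_s p(s)^2$.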

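In this case, $\|C\|_F^2$ is a simple function of the $\mu_i$ and $\nu_{ij}$, since $C_{ii} = 1$ and $C_{ij} = 4\nu_{ij} - 2\mu_i - 2\mu_j + 1$ for $i \neq j$:

$$\|C\|_F^2 = N + \sum_{i \neq j} (4\nu_{ij} - 2\mu_i - 2\mu_j + 1)^2. \qquad (18)$$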

Using Equations (17) and (18) in Equation (16) gives us our result (see Appendix H for further details).
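The chain of inequalities behind the lower bound can be spot-checked numerically. The sketch below is a hypothetical test harness, not part of the original derivation. It draws random distributions over $\{0,1\}^N$ and checks three things. The Shannon entropy is at least $-\log_2 \sum_i p_i^2$ (Equation (16)). That quantity is in turn at least $\log_2(N^2/\|C\|_F^2)$ (Equation (17)). Finally, $\|C\|_F^2$ computed from $\pm 1$ spins agrees with the expression in terms of $\mu_i$ and $\nu_{ij}$ (Equation (18)).

```python
import math
import random
from itertools import product

def lower_bound_chain(N, seed=0):
    """For a random distribution p over {0,1}^N, return
    (Shannon entropy, -log2 sum p^2, log2(N^2 / ||C||_F^2),
     ||C||_F^2 computed from spins, ||C||_F^2 from the 0/1 moments)."""
    rng = random.Random(seed)
    states = list(product((0, 1), repeat=N))
    w = [rng.random() ** 4 for _ in states]  # skewed random weights
    Z = sum(w)
    p = [x / Z for x in w]
    shannon = -sum(q * math.log2(q) for q in p if q > 0)
    collision = -math.log2(sum(q * q for q in p))  # right-hand side of (16)
    # C_ij = <s_i s_j> with spins s = 2*sigma - 1 in {-1, +1}; C_ii = 1.
    C = [[sum(q * (2 * s[i] - 1) * (2 * s[j] - 1) for q, s in zip(p, states))
          for j in range(N)] for i in range(N)]
    frob_sq = sum(c * c for row in C for c in row)
    # The same quantity from mu_i = <sigma_i> and nu_ij = <sigma_i sigma_j>.
    mu = [sum(q * s[i] for q, s in zip(p, states)) for i in range(N)]
    nu = [[sum(q * s[i] * s[j] for q, s in zip(p, states)) for j in range(N)]
          for i in range(N)]
    frob_sq_moments = N + sum((4 * nu[i][j] - 2 * mu[i] - 2 * mu[j] + 1) ** 2
                              for i in range(N) for j in range(N) if i != j)
    bound = math.log2(N * N / frob_sq)  # (17) substituted into (16)
    return shannon, collision, bound, frob_sq, frob_sq_moments

results = [lower_bound_chain(4, seed) for seed in range(5)]
```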

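Typically, this bound asymptotes to a constant as the number of terms included in the sum over $\alpha_{ij}$ scales with the number of pairs ($\sim N^2$), but in certain conditions this bound can grow with the system size. For example, in the case of uniform constraints, this reduces to

$$S_2^{\min} \ge \log_2\left(\frac{N}{1 + (N-1)\alpha}\right), \qquad (19)$$

where $\alpha = (4\nu - 4\mu + 1)^2$. As $N \to \infty$, this bound approaches the constant $\log_2(1/\alpha)$ unless

$$\alpha = (4\nu - 4\mu + 1)^2 = 0. \qquad (20)$$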

In the large N limit for uniform constraints, we know that $\nu \ge \mu^2$ (see Appendix A); therefore the only values of $\mu$ and $\nu$ satisfying Equation (20) are

$$\mu = \frac{1}{2}, \qquad \nu = \frac{1}{4}. \qquad (21)$$
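For uniform constraints, the lower bound can keep growing with N only if $\alpha = (4\nu - 4\mu + 1)^2$ vanishes. Combining this condition with $\nu \ge \mu^2$ pins down the constraint values in one line:

$$
\begin{aligned}
\alpha = 0 &\iff 4\nu - 4\mu + 1 = 0 \iff \nu = \mu - \tfrac{1}{4}, \\
\nu \ge \mu^2 &\implies \mu - \tfrac{1}{4} \ge \mu^2 \iff \left(\mu - \tfrac{1}{2}\right)^2 \le 0 \iff \mu = \tfrac{1}{2}, \quad \nu = \tfrac{1}{4}.
\end{aligned}
$$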

Although here the lower bound grows logarithmically with N, rather than remaining constant, for many large systems this difference is insignificant compared with the linear dependence of the maximum entropy solution (i.e., N fair i.i.d. Bernoulli random variables). In other words, the gap between the minimum and maximum possible entropies consistent with the measured mean activities and pairwise correlations grows linearly in these cases (up to a logarithmic correction), which is as large as the gap for the space of all possible distributions for binary systems of the same size N with the same mean activities but without restriction on the correlations.

2.3. Bounds on Maximum Entropy

An upper bound on the maximum entropy is well-known and easy to state. For a given number of allowed states $n = 2^N$, the maximum possible entropy occurs when the probability distribution is equally distributed over all allowed states (i.e., the microcanonical ensemble), and the entropy is equal to

$$\log_2 n = \log_2 2^N = N.$$

Lower bounds on the maximum entropy are more difficult to obtain (see Appendix C for further discussion on this point). However, by restricting ourselves to uniform constraints achievable by arbitrarily large systems (Equations (2) and (3)), we can construct a distribution that provides a useful lower bound on the maximum possible entropy consistent with these constraints:

where

and

It is straightforward to verify that w and x are nonnegative constants for all allowed values of $\mu$ and $\nu$ that can be achieved for arbitrarily large systems. Importantly, x is nonzero provided $\nu < \mu$ (i.e., $\nu \neq \mu$), so the entropy of the system will grow linearly with N for any set of uniform constraints achievable for arbitrarily large systems except for the case in which all neurons are perfectly correlated.

2.4. Low-Entropy Solutions

In addition to the bounds we derived for the minimum entropy, we can also construct probability distributions between these minimum entropy boundaries for distributions with uniform constraints. These solutions provide concrete examples of distributions that achieve the scaling behavior of the bounds we have derived for the minimum entropy. We include these so that the reader may gain a better intuition for what low entropy models look like in practice. We remark that these models are not intended as an improvement over maximum entropy models for any particular biological system. This would be an interesting direction for future work. Nonetheless, they are of practical importance to other fields as we discuss further in Section 2.6.

Each of our low entropy constructions, and , has an entropy that grows logarithmically with N (see Appendix E, Appendix F and Appendix G, Equations (1)–(6)):

where is the ceiling function and represents the smallest prime at least as large as its argument. Thus, there is always a solution whose entropy grows no faster than logarithmically with the size of the system, for any observed levels of mean activity and pairwise correlation.

As illustrated in Figure 1a, for large binary systems with uniform first- and second-order statistics matched to typical values of many neural populations, which have low firing rates and correlations slightly above chance ([,,,,,,]; , ), the range of possible entropies grows almost linearly with N, despite the highly symmetric constraints imposed (Equations (2) and (3)).

Consider the special case of first- and second-order constraints (Equation (21)) that correspond to the unconstrained global maximum entropy distribution. For these highly symmetric constraints, both our upper and lower bounds on the minimum entropy grow logarithmically with N (Equation (6)), rather than just the upper bound as we found for the neural regime (Figure 1a). In fact, one can construct [,] an explicit solution (Equation (28); Figure 1b and Figure 2a,d,e,h) that matches the mean, pairwise correlations, and triplet-wise correlations of the global maximum entropy solution and whose entropy is never more than two bits above our lower bound (Equation (15)) for all N. Clearly then, these constraints alone do not guarantee a level of independence of the neural activities commensurate with the maximum entropy distribution. By varying the relative probabilities of states in this explicit construction, we can make it satisfy a much wider range of $\mu$ and $\nu$ values than previously considered, covering most of the allowed region (see Appendix F and Appendix G), while still remaining a distribution whose entropy grows logarithmically with N.
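To make this logarithmic scaling concrete, here is a standard linear-code construction from the derandomization literature. It is offered as an illustration and is not necessarily the construction of Equation (28). Draw $k = 1 + \lceil \log_2 N \rceil$ fair seed bits $r$ and set $\sigma_i = \langle r, c_i \rangle \bmod 2$, where $c_i = (1, \text{binary digits of } i)$. Any three of the $c_i$ are linearly independent over GF(2), so every triple of outputs is uniform on $\{0,1\}^3$. The means, pairwise rates, and triplet rates therefore equal 1/2, 1/4, and 1/8, exactly as for independent fair bits, yet the entropy is only $k < \log_2 N + 2$ bits. Function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations, product

def three_wise_independent_bits(N):
    """Sample space of N unbiased, 3-wise independent bits from k = 1 + ceil(log2 N)
    fair seed bits r: sigma_i = <r, c_i> mod 2 with c_i = (1, binary digits of i).
    Any three c_i are linearly independent over GF(2) (they are distinct, nonzero,
    and the sum of three has leading bit 1), so any three outputs are uniform.
    Returns (Counter of output states, number of equally likely seeds)."""
    width = (N - 1).bit_length()  # equals ceil(log2 N) for N >= 1
    codes = [(1,) + tuple((i >> b) & 1 for b in range(width)) for i in range(N)]
    outcomes = Counter()
    for r in product((0, 1), repeat=1 + width):
        outcomes[tuple(sum(a * c for a, c in zip(r, code)) % 2 for code in codes)] += 1
    return outcomes, 2 ** (1 + width)

def sample_space_stats(outcomes, total, N):
    """Entropy (bits), means, pairwise rates, and triplet rates of the outputs."""
    probs = {s: count / total for s, count in outcomes.items()}
    entropy = -sum(q * math.log2(q) for q in probs.values())
    mean = [sum(q * s[i] for s, q in probs.items()) for i in range(N)]
    pair = {(i, j): sum(q * s[i] * s[j] for s, q in probs.items())
            for i, j in combinations(range(N), 2)}
    triple = {(i, j, l): sum(q * s[i] * s[j] * s[l] for s, q in probs.items())
              for i, j, l in combinations(range(N), 3)}
    return entropy, mean, pair, triple

N = 10
outcomes, total = three_wise_independent_bits(N)
entropy, mean, pair, triple = sample_space_stats(outcomes, total, N)
```

For N = 10, this yields 32 equally likely output patterns and 5 bits of entropy, below $\log_2 10 + 2 \approx 5.32$. Their means, pairwise rates, and triplet rates are exactly 1/2, 1/4, and 1/8.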

2.5. Minimum Entropy for Exchangeable Distributions

We consider the exchangeable class of distributions as an example of distributions whose entropy must scale linearly with the size of the system, unlike the global entropy minimum, which we have shown scales logarithmically. If one has a principled reason to believe some system should be described by an exchangeable distribution, the constraints themselves are sufficient to drastically narrow the allowed range of entropies, although the gap between the exchangeable minimum and the maximum will still scale linearly with the size of the system except in special cases. This result is perhaps unsurprising, as the restriction to exchangeable distributions is equivalent to imposing a set of additional constraints (e.g., $p(\sigma) = p(\sigma')$ whenever $\sigma$ and $\sigma'$ have the same number of active units) whose number is exponential in the size of the system.

While a direct computational solution to the general problem of finding the minimum entropy solution quickly becomes intractable as N grows, the situation for the exchangeable case is considerably different. In this case, the high level of symmetry imposed means that there are only $N + 1$ effective states (one for each number of active neurons) and 3 constraints (one each for normalization, mean, and pairwise firing). This makes the problem of searching the vertices of the solution space for the minimum entropy solution computationally tractable up into the hundreds of neurons.
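A minimal sketch of this vertex search follows, with our own hypothetical naming. An exchangeable distribution is summarized by $q_k$, the probability that exactly $k$ units are active, with each of the $\binom{N}{k}$ such states receiving $q_k / \binom{N}{k}$. The three constraints are $\sum_k q_k = 1$, $\sum_k k\, q_k = N\mu$, and $\sum_k k(k-1)\, q_k = N(N-1)\nu$, so every vertex is supported on at most three values of $k$. The code solves the $3 \times 3$ system exactly, using Python fractions, for every triple of $k$ values. It then keeps the feasible solution of lowest entropy. The number of triples grows only as $N^3/6$.

```python
import math
from fractions import Fraction
from itertools import combinations

def _det3(cols):
    """Determinant of the 3x3 matrix whose columns are given."""
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = cols
    return (a1 * (b2 * c3 - b3 * c2) - b1 * (a2 * c3 - a3 * c2)
            + c1 * (a2 * b3 - a3 * b2))

def min_entropy_exchangeable(mu, nu, N):
    """Minimum entropy (bits) over exchangeable distributions of N >= 2 binary
    units with uniform mean mu and pairwise rate nu, found by enumerating
    vertices: feasible q supported on at most 3 values of k. Each candidate
    solves the 3x3 system for a triple a < b < c (nonsingular for distinct k).
    Returns (entropy, {k: q_k})."""
    targets = (Fraction(1), N * Fraction(mu), N * (N - 1) * Fraction(nu))
    best = None
    for triple in combinations(range(N + 1), 3):
        cols = [(1, k, k * (k - 1)) for k in triple]
        det = _det3(cols)
        # Cramer's rule: replace column j by the target vector.
        q = [_det3([targets if jj == j else cols[jj] for jj in range(3)]) / det
             for j in range(3)]
        if min(q) < 0:
            continue  # infeasible: some probability would be negative
        support = {k: w for k, w in zip(triple, q) if w > 0}
        # Each of the comb(N, k) states with k active units gets q_k / comb(N, k).
        S = sum(float(w) * (math.log2(math.comb(N, k)) - math.log2(float(w)))
                for k, w in support.items())
        if best is None or S < best[0]:
            best = (S, support)
    return best

S, q = min_entropy_exchangeable(Fraction(1, 2), Fraction(1, 4), 3)
```

For N = 3 with $\mu = 1/2$ and $\nu = 1/4$, the minimum is 2 bits. One minimizer puts probability 1/4 on the all-silent state and 3/4 on the three states with two active units.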

Whereas the global lower bound scales logarithmically, our computation illustrates that the exchangeable minimum entropy scales linearly with N, as seen in Figure 1. The large gap between the exchangeable minimum and the global lower bound demonstrates that a distribution can dramatically reduce its entropy if it is allowed to violate sufficiently strong symmetries present in the constraints. This is reminiscent of other examples of symmetry-breaking in physics for which a system finds an equilibrium that breaks symmetries present in the physical laws. However, here the situation can be seen as reversed: observed first- and second-order statistics satisfy a symmetry that is not present in the underlying model.

2.6. Implications for Communication and Computer Science

These results are not only important for understanding the validity of maximum entropy models in neuroscience, but they also have consequences in other fields that rely on information entropy. We now examine consequences of our results for engineered communication systems. Specifically, consider a device such as a digital camera that exploits compressed sensing [,] to reduce the dimensionality of its image representations. A compressed sensing scheme might involve taking inner products between the vector of raw pixel values and a set of random vectors, followed by a digitizing step to output N-bit strings. Theorems exist for expected information rates of compressed sensing systems, but we are unaware of any that do not depend on some knowledge about the input signal, such as its sparse structure [,]. Without such knowledge, it would be desirable to know which empirically measured output statistics could determine whether such a camera is utilizing as much of the N bits of channel capacity as possible for each photograph.

As we have shown, even if the mean of each bit is $\mu_i = 1/2$, and the second- and third-order correlations are at chance level ($\nu_{ij} = 1/4$; $\langle \sigma_i \sigma_j \sigma_k \rangle = 1/8$ for all sets of distinct $i, j, k$), consistent with the maximum entropy distribution, it is possible that the Shannon mutual information shared by the original pixel values and the compressed signal is only on the order of $\log_2 N$ bits, well below the channel capacity (N bits) of this (noiseless) output stream. We emphasize that, in such a system, the transmitted information is limited not by corruption due to noise, which can be neglected for many applications involving digital electronic devices, but instead by the nature of the second- and higher-order correlations in the output.

Thus, measuring pairwise or even triplet-wise correlations between all bit pairs and triplets is insufficient to provide a useful floor on the information rate, no matter what values are empirically observed. However, knowing the extent to which other statistical properties are obeyed can yield strong guarantees of system performance. In particular, exchangeability is one such constraint. Figure 1 illustrates the near linear behavior of the lower bound on information (the minimum entropy over exchangeable distributions) in both the neural regime (cyan curve, panel (a)) and the regime relevant for our engineering example (cyan curve, panel (b)). We find numerically that, in this engineering regime, any exchangeable distribution has as much entropy as the maximum entropy solution, up to terms of order $\log_2 N$ (see Appendices).

This result has potential applications in the field of symbolic dynamics and computational mechanics, which study the consequences of viewing a complex system through a finite state measuring device [,]. If we view each of the various models presented here as a time series of binary measurements from a system, our results indicate that bitstreams with identical mean and pairwise statistics can have entropies with profoundly different scaling as a function of the number of measurements (N), indicating radically different complexity. It would be interesting to explore whether the models presented here appear differently when viewed through the ε-machine framework [].

In computer science, it is sometimes possible to construct efficient deterministic algorithms from randomized ones by utilizing low entropy distributions. One common technique is to replace the independent binary random variables used in a randomized algorithm with those satisfying only pairwise independence []. In many cases, such a randomized algorithm can be shown to succeed even if the original independent random bits are replaced by pairwise independent ones having significantly less entropy. In particular, efficient derandomization can be accomplished in these instances by finding pairwise independent distributions with small sample spaces. Several such designs are known and use tools from finite fields and linear codes [,,,,], combinatorial block designs [,], Hadamard matrix theory [,], and linear programming [], among others []. Our construction here of two families of low entropy distributions fit to specified mean activities and pairwise statistics adds to this literature.
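To make the idea of a small sample space concrete, here is a minimal Python sketch of the classic subset-parity (XOR) construction of pairwise independent bits. It is a standard example from the derandomization literature, not one of the two constructions in this paper, and the function names are ours. From k independent fair seed bits it produces $N = 2^k - 1$ output bits whose means are 1/2 and whose pairwise joint activities are 1/4, yet there are only $2^k$ equally likely outcomes, so the entropy is $\log_2(N + 1)$ bits rather than N.

```python
import itertools
import math
from fractions import Fraction

def xor_pairwise_bits(k):
    """Return the 2**k equally likely outcomes of N = 2**k - 1 bits.
    Bit m (one per nonempty subset m of the k seed bits, encoded as a bitmask)
    is the parity of the seed bits selected by m."""
    masks = range(1, 2 ** k)                      # nonempty subsets of seed bits
    return [tuple(bin(seed & m).count("1") % 2 for m in masks)
            for seed in range(2 ** k)]            # one outcome per seed

def first_two_moments(outcomes):
    """Exact means and pairwise joint activities under the uniform distribution."""
    total, N = len(outcomes), len(outcomes[0])
    mu = [Fraction(sum(o[i] for o in outcomes), total) for i in range(N)]
    nu = [Fraction(sum(o[i] * o[j] for o in outcomes), total)
          for i, j in itertools.combinations(range(N), 2)]
    return mu, nu

k = 4
outcomes = xor_pairwise_bits(k)
mu, nu = first_two_moments(outcomes)
N = len(outcomes[0])
entropy_bits = math.log2(len(set(outcomes)))     # uniform over distinct outcomes
print(N, set(mu), set(nu), entropy_bits)
```

Exact rational arithmetic (`Fraction`) makes the checks equalities rather than floating-point comparisons. Third-order statistics are not matched by this construction: for example, the bits for masks 1, 2, and 3 are never all active together.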

3. Discussion

Ideas and approaches from statistical mechanics are finding many applications in systems neuroscience [,]. In particular, maximum entropy models are powerful tools for understanding physical systems, and they are proving to be useful for describing biology as well, but a deeper understanding of the full solution space is needed as we explore systems less amenable to arguments involving ergodicity or equally accessible states. Here we have shown that second order statistics do not significantly constrain the range of allowed entropies, though other constraints, such as exchangeability, do guarantee extensive entropy (i.e., entropy proportional to system size N).

We have shown that in order for the constraints themselves to impose a linear scaling on the entropy, the number of experimentally measured quantities that provide those constraints must scale exponentially with the size of the system. In neuroscience, this is an unlikely scenario, suggesting that whatever means we use to infer probability distributions from the data (whether maximum entropy or otherwise) will most likely have to agree with other, more direct, estimates of the entropy [,,,,,,]. The fact that maximum entropy models chosen to fit a somewhat arbitrary selection of measured statistics are able to match the entropy of the system they model lends credence to the merits of this approach.

Neural systems typically exhibit a range of values for the correlations between pairs of neurons, with some pairs firing together more often than chance and others less often than chance. Such systems can exhibit a form of frustration, such that they apparently cannot be scaled up to arbitrarily large sizes in such a way that the distribution of correlations and mean firing rates for the added neurons resembles that of the original system. We have presented a particularly simple example of a small system with uniform pairwise correlations and mean firing rates that cannot be grown beyond a specific finite size while maintaining these statistics throughout the network.

We have also indicated how, in some settings, minimum entropy models can provide a floor on information transmission, complementary to channel capacity, which provides a ceiling on system performance. Moreover, we show how highly entropic processes can be mimicked by low entropy processes with matching low-order statistics, which has applications in computer science.

Acknowledgments

The authors would like to thank Jonathan Landy, Tony Bell, Michael Berry, Bill Bialek, Amir Khosrowshahi, Peter Latham, Lionel Levine, Fritz Sommer, and all members of the Redwood Center for many useful discussions. M.R.D. is grateful for support from the Hellman Foundation, the McDonnell Foundation, the McKnight Foundation, the Mary Elizabeth Rennie Endowment for Epilepsy Research, and the National Science Foundation through Grant No. IIS-1219199. C.H. was supported under an NSF All-Institutes Postdoctoral Fellowship administered by the Mathematical Sciences Research Institute through its core grant DMS-0441170. This material is based upon work supported in part by the U.S. Army Research Laboratory and the U.S. Army Research Office under contract number W911NF-13-1-0390.

Author Contributions

Badr F. Albanna and Michael R. DeWeese contributed equally to this project and are responsible for the bulk of the results and writing of the paper. Jascha Sohl-Dickstein contributed substantially to clarifications of the main results and improvements to the text including the connection of these results to computer science. Christopher Hillar also contributed substantially to clarifications of the main results, the method used to identify an analytical lower bound on the minimum entropy solution, and improvements to the clarity of the text. All authors approved of this manuscript before submission.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Allowed Range of ν Given μ Across All Distributions for Large N

In this section we will only consider distributions satisfying uniform constraints

$$\langle x_i \rangle = \mu \ \text{ for all } i, \qquad \langle x_i x_j \rangle = \nu \ \text{ for all } i \neq j,$$

and we will show that

$$\mu^2 \le \nu \le \mu$$

in the large N limit. One could conceivably extend the linear programming methods below to find bounds in the case of general non-uniform constraints, but as of this time we have not been able to do so without resorting to numerical algorithms on a case-by-case basis.

We begin by determining the upper bound on ν, the probability of any pair of neurons being simultaneously active, given μ, the probability of any one neuron being active, in the large N regime, where N is the total number of neurons. Time is discretized and we assume any neuron can spike no more than once in a time bin. We have $\nu \le \mu$ because ν is the probability of a pair of neurons firing together, and thus each neuron in that pair must have a firing probability of at least ν. Furthermore, it is easy to see that the case $\nu = \mu$ is feasible when there are only two states with non-zero probabilities: all neurons silent (with probability $1 - \mu$) or all neurons active (with probability $\mu = \nu$). We use the term “active” to refer to neurons that are spiking, and thus equal to one, in a given time bin, and we also refer to “active” states in a distribution, which are those with non-zero probabilities.

We now proceed to show that the lower bound on ν in the large N limit is $\mu^2$, the value of ν consistent with statistical independence among all N neurons. We can find the lower bound by viewing this as a linear programming problem [,], where the goal is to maximize μ given the normalization constraint and the constraint on ν; the largest achievable μ for a given ν is equivalent to the smallest achievable ν for a given μ.

It will be useful to introduce the notion of an exchangeable distribution [], for which any permutation of the neurons in the binary words labeling the states leaves the probability of each state unaffected. For example, if $N = 3$, an exchangeable solution satisfies

$$p(1,0,0) = p(0,1,0) = p(0,0,1), \qquad p(1,1,0) = p(1,0,1) = p(0,1,1).$$

In other words, the probability of any given word depends only on the number of ones it contains, not their particular locations, for an exchangeable distribution.

In order to find the allowed values of and , we need only consider exchangeable distributions. If there exists a probability distribution that satisfies our constraints, we can always construct an exchangeable one that also does given that the constraints themselves are symmetric (Equations (1) and (2)). Let us do this explicitly: Suppose we have a probability distribution over binary words that satisfies our constraints but is not exchangeable. We construct an exchangeable distribution with the same constraints as follows:

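$$p_e(\mathbf{x}) = \frac{1}{N!}\sum_{\pi \in S_N} p\big(\pi(\mathbf{x})\big),$$

where π is an element of the permutation group $S_N$ on N elements and $\pi(\mathbf{x})$ denotes the word $\mathbf{x}$ with its entries permuted by π. This distribution is exchangeable by construction, and it is easy to verify that it satisfies the same uniform constraints as does the original distribution, p.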
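The symmetrization step can be checked numerically. The Python sketch below is an illustration (the function names are ours, and it assumes N is small enough to enumerate all N! permutations): it averages a random, non-exchangeable distribution over all relabelings of the neurons, confirms that the result depends only on the number of active neurons, and shows that its uniform μ and ν equal the averages of the original $\mu_i$ and $\nu_{ij}$. When those were already uniform, they are therefore unchanged.

```python
import itertools
import numpy as np

def symmetrize(p, N):
    """Average p over all N! relabelings of the neurons (the exchangeable p_e).
    p maps each N-bit tuple to its probability."""
    perms = list(itertools.permutations(range(N)))
    return {x: sum(p[tuple(x[k] for k in perm)] for perm in perms) / len(perms)
            for x in p}

def uniform_summary(p, N):
    """Per-neuron means mu_i and per-pair joint activities nu_ij."""
    mu = np.array([sum(q * x[i] for x, q in p.items()) for i in range(N)])
    nu = np.array([sum(q * x[i] * x[j] for x, q in p.items())
                   for i, j in itertools.combinations(range(N), 2)])
    return mu, nu

N = 4
rng = np.random.default_rng(0)
words = list(itertools.product((0, 1), repeat=N))
weights = rng.random(len(words))
p = dict(zip(words, weights / weights.sum()))      # generic, non-exchangeable
p_e = symmetrize(p, N)

mu, nu = uniform_summary(p, N)
mu_e, nu_e = uniform_summary(p_e, N)
print(mu_e, mu.mean())
print(nu_e, nu.mean())
```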

Therefore, if we wish to find the maximum μ for a given value of ν, it is sufficient to consider exchangeable distributions. From now on in this section we will drop the e subscript on our earlier notation, define p to be exchangeable, and let $p_i$ be the probability of a state with i spikes.

The normalization constraint is

$$\sum_{i=0}^{N}\binom{N}{i}p_i = 1.$$

Here the binomial coefficient $\binom{N}{i}$ counts the number of states with i active neurons.

The firing rate constraint is similar, only now we must consider summing only those probabilities that have a particular neuron active. How many states are there with exactly two active neurons, given that a particular neuron must be active in all of the states? We have the freedom to place the remaining active neuron in any of the remaining $N - 1$ sites, which gives us $N - 1$ states with probability $p_2$. In general, if we consider states with i active neurons, we will have the freedom to place $i - 1$ of them in $N - 1$ sites, yielding:

$$\sum_{i=1}^{N}\binom{N-1}{i-1}p_i = \mu.$$

Finally, for the pairwise firing rate, we must add up states containing a specific pair of active neurons, but the remaining active neurons can be anywhere else:

$$\sum_{i=2}^{N}\binom{N-2}{i-2}p_i = \nu.$$
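As a sanity check on these three binomial sums, the following sketch (our own, using an arbitrary random exchangeable distribution with N = 6) compares them with brute-force sums over all $2^N$ words, using neuron 0 for μ and the pair (0, 1) for ν.

```python
import itertools
import math
import numpy as np

def exchangeable_stats(p_i, N):
    """Normalization, mu and nu from the per-word probabilities p_i (i spikes)."""
    norm = sum(math.comb(N, i) * p_i[i] for i in range(N + 1))
    mu = sum(math.comb(N - 1, i - 1) * p_i[i] for i in range(1, N + 1))
    nu = sum(math.comb(N - 2, i - 2) * p_i[i] for i in range(2, N + 1))
    return norm, mu, nu

def brute_force_stats(p_i, N):
    """Same quantities by enumerating all 2**N words (neuron 0, pair (0, 1))."""
    words = list(itertools.product((0, 1), repeat=N))
    prob = [p_i[sum(x)] for x in words]
    norm = sum(prob)
    mu = sum(q for q, x in zip(prob, words) if x[0] == 1)
    nu = sum(q for q, x in zip(prob, words) if x[0] == 1 and x[1] == 1)
    return norm, mu, nu

N = 6
rng = np.random.default_rng(1)
mass = rng.random(N + 1)
mass /= mass.sum()                                   # total mass per spike count
p_i = [mass[i] / math.comb(N, i) for i in range(N + 1)]
print(exchangeable_stats(p_i, N))
print(brute_force_stats(p_i, N))
```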

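Now our task can be formalized as finding the maximum value of

$$\mu = \sum_{i=1}^{N}\binom{N-1}{i-1}p_i$$

subject to

$$\sum_{i=0}^{N}\binom{N}{i}p_i = 1, \qquad \sum_{i=2}^{N}\binom{N-2}{i-2}p_i = \nu, \qquad p_i \ge 0.$$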

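This gives us the following dual problem, with dual variables $y_1$ (for normalization) and $y_2$ (for the pairwise constraint): Minimize

$$y_1 + \nu\,y_2$$

given the following constraints (each labeled by i)

$$\binom{N}{i}y_1 + \binom{N-2}{i-2}y_2 \ge \binom{N-1}{i-1}, \qquad i = 0, 1, \dots, N,$$

where $\binom{a}{b}$ is taken to be zero for $b < 0$. The principle of strong duality [] ensures that the value of the objective function at the solution is equal to the extremal value of the original objective function μ.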

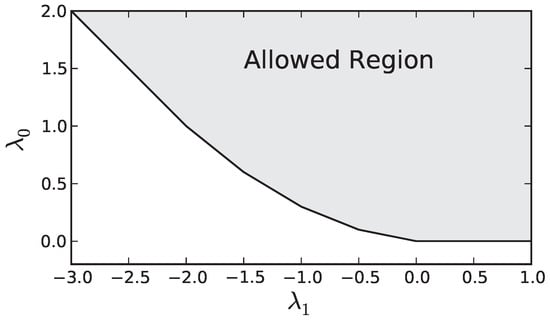

The set of constraints defines a convex region in the $(y_1, y_2)$ plane, as seen in Figure A1. The minimum of our dual objective generically occurs at a vertex of the boundary of the allowed region, or possibly degenerate minima can occur anywhere along an edge of the region. From Figure A1 it is clear that this occurs where Equation (A12) is an equality for two (or three in the degenerate case) consecutive values of i. Calling the first of these two values k, we then have the following two equations that allow us to determine the optimal values of the dual variables $y_1$ and $y_2$ ($y_1^*$ and $y_2^*$, respectively) as a function of k:

$$\binom{N}{k}y_1^* + \binom{N-2}{k-2}y_2^* = \binom{N-1}{k-1}, \qquad \binom{N}{k+1}y_1^* + \binom{N-2}{k-1}y_2^* = \binom{N-1}{k}.$$

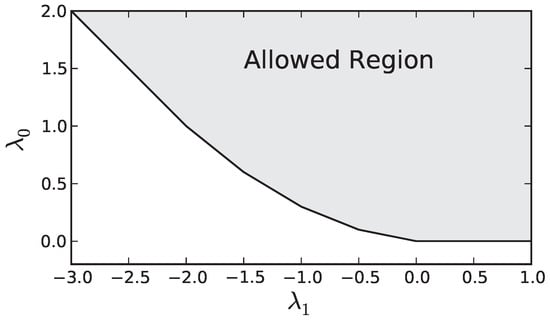

Figure A1.

An example of the allowed values of the dual variables $y_1$ and $y_2$ for the dual problem.

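Solving for $y_1^*$ and $y_2^*$ in terms of k, the first of the two consecutive tight constraints, we find

$$y_1^* = \frac{k+1}{2N}, \qquad y_2^* = \frac{N-1}{2k}.$$

Plugging this into Equation (A11) we find the optimal value is

$$\mu^* = y_1^* + \nu\,y_2^* = \frac{k+1}{2N} + \frac{\nu(N-1)}{2k}.$$

Now all that is left is to express k as a function of ν and take the limit as N becomes large. This expression can be found by noting from Equation (A11) and Figure A1 that at the solution, the slope $-1/\nu$ of the level sets of the dual objective lies between the slopes of the two tight constraints, so that k satisfies

$$m_k \le -\frac{1}{\nu} \le m_{k+1},$$

where $m_i$ is the slope, $dy_2/dy_1$, of the boundary of constraint i. The expression for $m_i$ is determined from Equation (A12),

$$m_i = -\frac{\binom{N}{i}}{\binom{N-2}{i-2}} = -\frac{N(N-1)}{i(i-1)}.$$

This allows us to write

$$\nu N(N-1) = k(k-1) + 2k\theta,$$

where θ is between 0 and 1 for all N. Solving this for k, we obtain

$$k = \frac{1 - 2\theta + \sqrt{(1-2\theta)^2 + 4\nu N(N-1)}}{2}.$$

Taking the large N limit we find that $k/N \to \sqrt{\nu}$, and by the principle of strong duality [] the maximum value of μ is $\mu^* \to \sqrt{\nu}$. Therefore we have shown that for large N, the region of satisfiable constraints is simply

$$\mu^2 \le \nu \le \mu,$$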

as illustrated in Figure A2.
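The primal problem and the closed-form dual optimum can be compared directly. The Python sketch below is an illustration, not the authors' code: it assumes SciPy's `linprog` with the HiGHS solver, and it rescales the variables to $P_i = \binom{N}{i}p_i$ (the total mass on words with i ones) so that the constraint coefficients become $i/N$ and $i(i-1)/(N(N-1))$. It then checks the LP maximum of μ against $(k+1)/(2N) + \nu(N-1)/(2k)$, where k is the smallest integer $\ge 1$ with $k(k+1) \ge \nu N(N-1)$.

```python
import math
import numpy as np
from scipy.optimize import linprog

def max_mu_lp(N, nu):
    """Primal LP over exchangeable distributions, in the variables
    P_i = C(N, i) p_i (total mass on words with i ones)."""
    i = np.arange(N + 1)
    mu_coef = i / N                          # C(N-1, i-1) / C(N, i)
    nu_coef = i * (i - 1) / (N * (N - 1))    # C(N-2, i-2) / C(N, i)
    res = linprog(-mu_coef,                  # linprog minimizes, so negate
                  A_eq=np.vstack([np.ones(N + 1), nu_coef]),
                  b_eq=[1.0, nu], bounds=(0, None), method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return -res.fun

def max_mu_dual(N, nu):
    """Closed form from the two tight dual constraints k and k + 1."""
    x = nu * N * (N - 1)
    k = max(1, math.ceil((math.sqrt(1 + 4 * x) - 1) / 2))  # k(k+1) >= x
    return (k + 1) / (2 * N) + nu * (N - 1) / (2 * k)

for N in (4, 10, 25):
    for nu in (0.05, 0.3, 0.7):
        print(N, nu, round(max_mu_lp(N, nu), 6), round(max_mu_dual(N, nu), 6))
print(max_mu_dual(10**6, 0.25))   # approaches sqrt(0.25) = 0.5 as N grows
```

For large N the dual value approaches $\sqrt{\nu}$, in line with the bound $\nu \ge \mu^2$.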

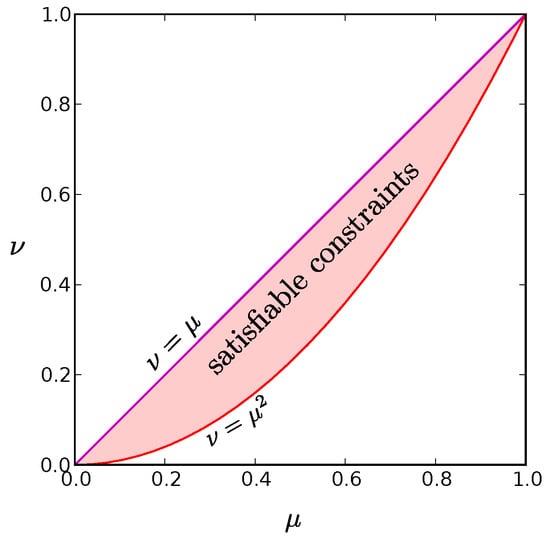

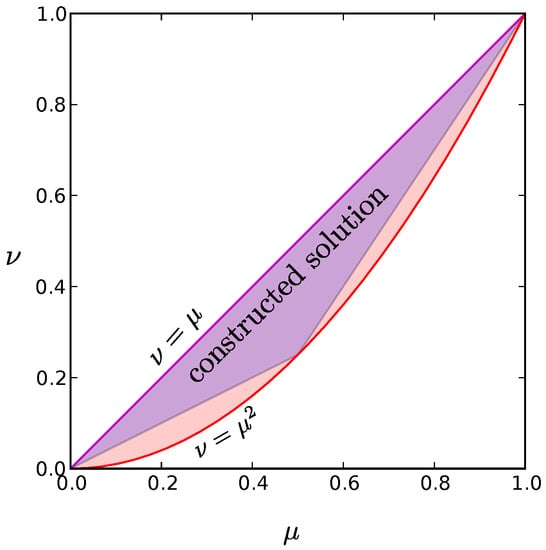

Figure A2.

The red shaded region is the set of values for μ and ν that can be satisfied by at least one probability distribution in the $N \to \infty$ limit. The purple line along the diagonal where $\nu = \mu$ is the distribution for which only the all active and all inactive states have non-zero probability. It represents the global entropy minimum for a given value of μ. The red parabola, $\nu = \mu^2$, at the bottom border of the allowed region corresponds to a wide range of probability distributions, including the global maximum entropy solution for given μ in which each neuron fires independently. We find that low entropy solutions reside at this low boundary as well.

We can also compute upper and lower bounds on the minimum possible value for for finite N by taking the derivative of (Equation (A23)) with respect to and setting that to zero, to obtain . Recalling that , it is clear that the only candidates for extremizing are , and we have:

Appendix B. Minimum Entropy Occurs at Small Support

Our goal is to minimize the entropy function

$$S = -\sum_{i=1}^{M} p_i \log_2 p_i,$$

where M is the number of states, the $p_i$ satisfy a set of K independent linear constraints, and $p_i \ge 0$ for all i. For the main problem we consider, $M = 2^N$. The constraints for normalization, mean firing rates, and pairwise firing rates give

$$K = 1 + N + \frac{N(N-1)}{2}.$$

In this section we will show that the minimum occurs at the vertices of the space of allowed probabilities. Moreover, these vertices correspond to probabilities of small support, specifically a support size equal to K in most cases. These two facts allow us to put an upper bound on the minimum entropy of

$$S_{\min} \le \log_2 K \approx 2\log_2 N$$

for large N.

We begin by noting that the space of normalized probability distributions is the standard simplex in $M - 1$ dimensions. Each linear constraint on the probabilities introduces a hyperplane in this space. If the constraints are consistent and independent, the intersection of these hyperplanes defines a $d = M - K$ dimensional affine space, which we call $\mathcal{A}$. All solutions are constrained to the intersection between $\mathcal{A}$ and the simplex, and this solution space is a convex polytope of dimension ≤ d, which we refer to as $\mathcal{S}$. A point within a convex polytope can always be expressed as a convex combination of its vertices; therefore, if $\mathbf{v}_1, \dots, \mathbf{v}_V$ are the vertices of $\mathcal{S}$, we may write

$$\mathbf{p} = \sum_{j=1}^{V} a_j \mathbf{v}_j,$$

where V is the total number of vertices, $a_j \ge 0$, and $\sum_j a_j = 1$.

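Using the concavity of the entropy function, we will now show that the minimum entropy for a space of probabilities $\mathcal{S}$ is attained on the vertices of that space, so that the global minimum occurs at the vertex that has the lowest entropy. For any $\mathbf{p} = \sum_j a_j \mathbf{v}_j$ in $\mathcal{S}$,

$$S(\mathbf{p}) \ge \sum_{j=1}^{V} a_j\,S(\mathbf{v}_j) \ge \min_j S(\mathbf{v}_j).$$

Therefore,

$$\min_{\mathbf{p} \in \mathcal{S}} S(\mathbf{p}) = \min_j S(\mathbf{v}_j).$$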

Moreover, if a distribution satisfying the constraints exists, then there is one with at most K non-zero $p_i$ (e.g., from arguments as in []). Together, these two facts imply that there are minimum entropy distributions with at most K non-zero $p_i$. This means that even though the state space grows exponentially with N, the support of the minimum entropy solution for fixed means and pairwise correlations scales only quadratically with N.

This allows us to give an upper bound on the minimum entropy as

$$S_{\min} \le \log_2\Big(1 + N + \frac{N(N-1)}{2}\Big) \approx 2\log_2 N$$

for large N. It is important to note how general this bound is: as long as the constraints are independent and consistent, this result holds regardless of the specific values of the $\mu_i$ and $\nu_{ij}$.
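The support bound can be illustrated with a small linear program. In this sketch (our own illustration, assuming SciPy's `linprog` with the dual simplex method `highs-ds`, which returns a basic solution), we take the means and pairwise statistics of a random full-support distribution on N = 4 neurons and ask for a vertex of the feasible polytope. A vertex has at most $K = 1 + N + N(N-1)/2$ non-zero probabilities, so its entropy is at most $\log_2 K$.

```python
import itertools
import math
import numpy as np
from scipy.optimize import linprog

N = 4
states = np.array(list(itertools.product((0, 1), repeat=N)))   # M = 2**N rows
pairs = list(itertools.combinations(range(N), 2))
# One row per constraint: normalization, N means, N(N-1)/2 pairwise products.
A = np.vstack([np.ones(len(states))]
              + [states[:, i] for i in range(N)]
              + [states[:, i] * states[:, j] for i, j in pairs]).astype(float)
K = A.shape[0]                                   # 1 + N + N(N-1)/2

rng = np.random.default_rng(2)
q = rng.random(len(states))
q /= q.sum()                                     # a full-support distribution
b = A @ q                                        # its mu_i and nu_ij

# A random cost vector makes the simplex method stop at a generic vertex
# of {p >= 0 : A p = b}, i.e. a basic solution with at most K non-zeros.
res = linprog(rng.random(len(states)), A_eq=A, b_eq=b,
              bounds=(0, None), method="highs-ds")
p = res.x
support = p > 1e-12
S_vertex = -np.sum(p[support] * np.log2(p[support]))
S_q = -np.sum(q * np.log2(q))
print(K, int(support.sum()), S_vertex, math.log2(K), S_q)
```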

Appendix C. The Maximum Entropy Solution

In the previous Appendix, we derived a useful upper bound on the minimum entropy solution valid for any values of $\mu_i$ and $\nu_{ij}$ that can be achieved by at least one probability distribution. In Appendix H below, we obtain a useful lower bound on the minimum entropy solution. It is straightforward to obtain an upper bound on the maximum entropy distribution valid for arbitrary achievable $\mu_i$ and $\nu_{ij}$: the greatest possible entropy for N neurons is achieved if they all fire independently with probability 1/2, resulting in the bound $S \le N$ bits.

Deriving a useful lower bound for the maximum entropy for arbitrary allowed constraints $\mu_i$ and $\nu_{ij}$ is a subtle problem. In fact, merely specifying how an ensemble of binary units should be grown from some finite initial size to arbitrarily large N in such a way as to “maintain” the low-level statistics of the original system raises many questions.

For example, typical neural populations consist of units with varying mean activities, so how should the mean activities of newly added neurons be chosen? For that matter, what correlational structure should be imposed among these added neurons and between each new neuron and the existing units? For each added neuron, any choice will inevitably change the histograms of mean activities and pairwise correlations for any initial ensemble consisting of more than one neuron, except in the special case of uniform constraints. Even for the relatively simple case of uniform constraints, we have seen that there are small systems that cannot be grown beyond some critical size while maintaining uniform values for μ and ν (see Figure 2 in the main text). The problem of determining whether it is even mathematically possible to add a neuron with some predetermined set of pairwise correlations with existing neurons can be much more challenging for the general case of nonuniform constraints.

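For these reasons, we will leave the general problem of finding a lower bound on the maximum entropy for future work and focus here on the special case of uniform constraints:

$$\langle x_i \rangle = \mu \ \text{ for all } i, \qquad \langle x_i x_j \rangle = \nu \ \text{ for all } i \neq j.$$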

We will obtain a lower bound on the maximum entropy of the system with the use of an explicit construction, which will necessarily have an entropy that does not exceed that of the maximum entropy solution. We construct our model system as follows: with probability α, all N neurons are active and set to 1; otherwise each neuron is independently set to 1 with probability β. The conditions required for this model to match the desired mean activities and pairwise correlations across the population are given by

$$\mu = \alpha + (1-\alpha)\beta, \qquad \nu = \alpha + (1-\alpha)\beta^2.$$

Inverting these equations to isolate α and β yields

$$\alpha = \frac{\nu - \mu^2}{1 - 2\mu + \nu}, \qquad \beta = \frac{\mu - \nu}{1 - \mu}.$$

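The entropy of the system is then

$$S = -q_N\log_2 q_N - \sum_{k=0}^{N-1}\binom{N}{k}q_k\log_2 q_k \approx w + xN,$$

with $q_k = (1-\alpha)\beta^k(1-\beta)^{N-k}$ for $k < N$, $q_N = \alpha + (1-\alpha)\beta^N$, $w = -\alpha\log_2\alpha - (1-\alpha)\log_2(1-\alpha)$, and $x = -(1-\alpha)\big[\beta\log_2\beta + (1-\beta)\log_2(1-\beta)\big]$; the approximation neglects only terms that are exponentially small in N. Here w and x are nonnegative constants for all allowed values of μ and ν that can be achieved for arbitrarily large systems. x is nonzero provided $\nu < \mu$, so the entropy of the system will grow linearly with N for any uniform constraints achievable for arbitrarily large systems except for the case in which all neurons are perfectly correlated.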
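This construction is easy to evaluate exactly by grouping words by their number of active neurons. The sketch below is illustrative (function names are ours) and assumes $\mu^2 \le \nu < \mu < 1$, so that $0 \le \alpha < 1$ and $0 < \beta < 1$. It computes the entropy in bits and compares it with the linear form w + xN, taking w to be the binary entropy of α and x to be $(1-\alpha)$ times the binary entropy of β.

```python
import math

def mixture_params(mu, nu):
    """alpha = P(all neurons forced on); beta = independent firing probability
    otherwise. Assumes mu**2 <= nu < mu < 1."""
    alpha = (nu - mu ** 2) / (1 - 2 * mu + nu)
    beta = (mu - nu) / (1 - mu)
    return alpha, beta

def mixture_entropy_bits(N, alpha, beta):
    """Exact entropy, summing over the number k of active neurons."""
    S = 0.0
    for k in range(N):                 # words with k < N ones: independent branch only
        log_q = (math.log1p(-alpha) + k * math.log(beta)
                 + (N - k) * math.log1p(-beta))
        log_count = math.lgamma(N + 1) - math.lgamma(k + 1) - math.lgamma(N - k + 1)
        S -= math.exp(log_count + log_q) * log_q
    q_all = alpha + (1 - alpha) * beta ** N     # all-ones word: both branches
    if q_all > 0:
        S -= q_all * math.log(q_all)
    return S / math.log(2)

def binary_entropy_bits(q):
    return 0.0 if q in (0, 1) else -q * math.log2(q) - (1 - q) * math.log2(1 - q)

mu, nu = 0.3, 0.15
alpha, beta = mixture_params(mu, nu)
w = binary_entropy_bits(alpha)                 # intercept
x = (1 - alpha) * binary_entropy_bits(beta)    # slope, bits per neuron
for N in (25, 50, 100, 200):
    print(N, mixture_entropy_bits(N, alpha, beta), w + x * N)
```

Working with log-probabilities and `math.lgamma` avoids overflow in the binomial coefficients for large N.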

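Using numerical methods, we can empirically confirm the linear scaling of the entropy of the true maximum entropy solution for the case of uniform constraints that can be achieved by arbitrarily large systems. In general, the constraints can be written

$$\sum_{\mathbf{x}} p(\mathbf{x})\,x_i = \mu_i, \qquad \sum_{\mathbf{x}} p(\mathbf{x})\,x_i x_j = \nu_{ij},$$

where the sums run over all states of the system. In order to enforce the constraints, we can add terms involving Lagrange multipliers $h_i$ and $J_{ij}$ (together with a multiplier $\lambda_0$ for normalization) to the entropy in the usual fashion to arrive at a function to be maximized

$$\mathcal{L} = -\sum_{\mathbf{x}} p(\mathbf{x})\ln p(\mathbf{x}) + \sum_i h_i\Big(\sum_{\mathbf{x}} p(\mathbf{x})\,x_i - \mu_i\Big) + \sum_{i<j} J_{ij}\Big(\sum_{\mathbf{x}} p(\mathbf{x})\,x_i x_j - \nu_{ij}\Big) + \lambda_0\Big(\sum_{\mathbf{x}} p(\mathbf{x}) - 1\Big).$$

Maximizing this function gives us the Boltzmann distribution for an Ising spin glass

$$p(\mathbf{x}) = \frac{1}{Z}\exp\Big(\sum_i h_i x_i + \sum_{i<j} J_{ij}\,x_i x_j\Big),$$

where Z is the normalization factor or partition function. The values of $h_i$ and $J_{ij}$ are left to be determined by ensuring this distribution is consistent with our constraints $\mu_i$ and $\nu_{ij}$.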

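For the case of uniform constraints, the Lagrange multipliers $h_i$ and $J_{ij}$ are themselves uniform:

$$h_i = h, \qquad J_{ij} = J.$$

This allows us to write the following expression for the maximum entropy distribution:

$$p(\mathbf{x}) = \frac{1}{Z}\exp\Big(h\sum_i x_i + J\sum_{i<j} x_i x_j\Big).$$

If there are k neurons active, this becomes

$$p_k = \frac{1}{Z}\exp\Big(hk + J\,\frac{k(k-1)}{2}\Big).$$

Note that there are $\binom{N}{k}$ states with probability $p_k$. Using expression (A48), we find the maximum entropy by using the fsolve function from the SciPy package of Python subject to constraints (A34) and (A35).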
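A minimal version of this numerical procedure is sketched below in Python. It follows the text in using `scipy.optimize.fsolve`, but the function name, the log-space bookkeeping with `scipy.special.logsumexp`, and the starting point (independent units, J = 0) are our own choices. Because the distribution is exchangeable, all sums run over the number k of active neurons rather than over all $2^N$ states.

```python
import numpy as np
from scipy.optimize import fsolve
from scipy.special import gammaln, logsumexp

def uniform_maxent(N, mu, nu):
    """Fit h, J of p_k = exp(h k + J k(k-1)/2) / Z to uniform mu, nu.
    Returns (h, J, entropy in bits, constraint residuals)."""
    k = np.arange(N + 1)
    log_binom = gammaln(N + 1) - gammaln(k + 1) - gammaln(N - k + 1)
    mean_coef = k / N                             # fraction of neurons active
    pair_coef = k * (k - 1) / (N * (N - 1))       # fraction of pairs co-active

    def log_pk_unnorm(h, J):
        return h * k + J * k * (k - 1) / 2

    def residuals(params):
        h, J = params
        log_mass = log_binom + log_pk_unnorm(h, J)    # log of C(N,k) exp(...)
        P = np.exp(log_mass - logsumexp(log_mass))    # mass on k active
        return [P @ mean_coef - mu, P @ pair_coef - nu]

    h0 = np.log(mu / (1 - mu))                    # start from independent units
    h, J = fsolve(residuals, x0=[h0, 0.0])
    log_mass = log_binom + log_pk_unnorm(h, J)
    log_Z = logsumexp(log_mass)
    P = np.exp(log_mass - log_Z)
    log_pk = log_pk_unnorm(h, J) - log_Z          # log prob of one word with k on
    S_bits = -(P @ log_pk) / np.log(2)
    return h, J, S_bits, residuals([h, J])

for N in (10, 20, 40):
    print(N, uniform_maxent(N, 0.3, 0.12)[2])
```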

As Figure A3 shows, for fixed uniform constraints the entropy scales linearly as a function of N, even in cases where the correlations between all pairs of neurons (ν) are quite large, provided that $\nu < \mu$. Moreover, for uniform constraints with anticorrelated units (i.e., pairwise correlations below the level one would observe for independent units), we find empirically that the maximum entropy still scales approximately linearly up to the maximum possible system size consistent with these constraints, as illustrated by Figure 3 in the main text.

Figure A3.

For the case of uniform constraints achievable by arbitrarily large systems, the maximum possible entropy scales linearly with system size, N, as shown here for various values of μ and ν. Note that this linear scaling holds even for large correlations, provided that $\nu < \mu$. For the extreme case $\nu = \mu$, all the neurons are perfectly correlated, so the entropy of the ensemble does not change with increasing N.

Appendix D. Minimum Entropy for Exchangeable Probability Distributions

Although the values of the firing rate (μ) and pairwise correlations (ν) may be identical for each neuron and pair of neurons, the probability distribution that gives rise to these statistics need not be exchangeable, as we have already shown. Indeed, as we explain below, it is possible to construct non-exchangeable probability distributions that have dramatically lower entropy than both the maximum and the minimum entropy for exchangeable distributions. That said, exchangeable solutions are interesting in their own right because they have large N scaling behavior that is distinct from the global entropy minimum, and they provide a symmetry that can be used to lower bound the information transmission rate close to the maximum possible across all distributions.

Restricting ourselves to exchangeable solutions represents a significant simplification. In the general case, there are $2^N$ probabilities to consider for a system of N neurons. There are N constraints on the firing rates (one for each neuron) and $\binom{N}{2}$ pairwise constraints (one for each pair of neurons). This gives us a total number of constraints that grows quadratically with N:

$$N + \binom{N}{2} = \frac{N(N+1)}{2}.$$

However, in the exchangeable case, all states with the same number of spikes have the same probability, so there are only $N + 1$ free parameters. Moreover, the number of constraints becomes 3, as there is only one constraint each for normalization, firing rate, and pairwise firing rate (as expressed in Equations (A5)–(A7), respectively). In general, the minimum entropy solution for exchangeable distributions should have the minimum support consistent with these three constraints. Therefore, the minimum entropy solution should have at most three non-zero probabilities (see Appendix B).

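For the highly symmetrical case with $\mu = 1/2$ and $\nu = 1/4$, we can construct the exchangeable distribution with minimum entropy for all even N. This distribution consists of the all ones state, the all zeros state, and all states with $N/2$ ones. The constraint $\mu = 1/2$ implies that $p_0 = p_N$, and the condition $\nu = 1/4$ implies

$$p_0 = p_N = \frac{1}{2N}, \qquad \binom{N}{N/2}\,p_{N/2} = 1 - \frac{1}{N},$$

which corresponds to an entropy of

$$S = \frac{1}{N}\log_2(2N) - \Big(1 - \frac{1}{N}\Big)\log_2\frac{1 - 1/N}{\binom{N}{N/2}}.$$

Using Stirling’s approximation and taking the large N limit, this simplifies to

$$S = N - \frac{1}{2}\log_2 N + O(1).$$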

For arbitrary values of μ, ν, and N, it is difficult to determine from first principles which three probabilities are non-zero for the minimum entropy solution, but fortunately the number of possibilities is now small enough that we can exhaustively search by computer to find the set of non-zero probabilities corresponding to the lowest entropy.

Using this technique, we find that the exchangeable minimum entropy scales linearly with N, as shown in Figure A4. We find that the asymptotic slope is positive but generally less than that of the maximum entropy curve. For the symmetrical case, $\mu = 1/2$ and $\nu = 1/4$, our exact expression Equation (A51) for the exchangeable distribution consisting of the all ones state, the all zeros state, and all states with $N/2$ ones agrees with the minimum entropy exchangeable solution found by exhaustive search, and in this special case the asymptotic slope is identical to that of the maximum entropy curve.
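Both the exact symmetric-case entropy and an exhaustive search over three-element supports fit in a short Python sketch (ours; names are illustrative). The search enumerates every triple of spike counts, solves the three linear constraints for the masses $P_k = \binom{N}{k}p_k$, discards infeasible (negative) solutions, and keeps the lowest entropy. Every vertex of the exchangeable feasible set arises this way, so the search minimum can never exceed the entropy of the symmetric solution with $p_0 = p_N = 1/(2N)$.

```python
import itertools
import math
import numpy as np

def symmetric_formula_bits(N):
    """Entropy of the exchangeable solution for mu = 1/2, nu = 1/4 (N even):
    p_0 = p_N = 1/(2N), remaining mass 1 - 1/N spread over N/2-spike words."""
    m = 1 - 1 / N
    return math.log2(2 * N) / N - m * math.log2(m / math.comb(N, N // 2))

def exchangeable_min_entropy(N, mu, nu):
    """Exhaustive search over supports of three spike counts."""
    k = np.arange(N + 1)
    rows = np.vstack([np.ones(N + 1), k / N, k * (k - 1) / (N * (N - 1))])
    target = np.array([1.0, mu, nu])
    log2_count = np.array([math.log2(math.comb(N, j)) for j in range(N + 1)])
    best = math.inf
    for cols in itertools.combinations(range(N + 1), 3):
        idx = np.array(cols)
        P = np.linalg.solve(rows[:, idx], target)   # mass on each spike count
        if np.any(P < -1e-12):
            continue                                 # not a feasible vertex
        nz = P > 1e-15
        # each of the C(N, k) words with k spikes has probability P_k / C(N, k)
        S = -np.sum(P[nz] * (np.log2(P[nz]) - log2_count[idx[nz]]))
        best = min(best, S)
    return best

for N in (4, 10, 20):
    print(N, symmetric_formula_bits(N), exchangeable_min_entropy(N, 0.5, 0.25))
```

The 3 × 3 systems are never singular here, because after row operations each one is a Vandermonde matrix in three distinct spike counts.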

Figure A4.

The minimum entropy for exchangeable distributions versus N for various values of μ and ν. Note that, like the maximum entropy, the exchangeable minimum entropy scales linearly with N, albeit generally with a smaller slope. We can calculate the entropy exactly for μ = 0.5 and ν = 0.25 (Equation (A51)), and we find that the leading term is indeed linear: $S = N - \frac{1}{2}\log_2 N + O(1)$.

Appendix E. Construction of a Low Entropy Distribution for All Values of μ and ν

We can construct a probability distribution with roughly $N^2/2$ states with nonzero probability out of the full $2^N$ possible states of the system such that

$$\mu = \frac{n}{N}, \qquad \nu = \frac{n(n-1)}{N(N-1)},$$

where N is the number of neurons in the network and n is the number of neurons that are active in every state. Using this solution as a basis, we can include the states with all neurons active and all neurons inactive to create a low entropy solution for all allowed values for and (See Appendix G). We refer to the entropy of this low entropy construction to distinguish it from the entropy () of another low entropy solution described in the next section. Our construction essentially goes back to Joffe [] as explained by Luby in [].

We derive our construction by first assuming that N is a prime number, but this is not actually a limitation, as we will be able to extend the result to all values of N. Specifically, non-prime system sizes are handled by taking a solution for a larger prime number and removing the appropriate number of neurons. Note that the solution derived from the next largest prime number does not always have the lowest entropy; occasionally a larger prime yields a lower entropy solution. All plots in the main text were obtained by searching for the lowest entropy solution using the 10 smallest primes that are each at least as great as the system size N.

We begin by illustrating our algorithm with a concrete example; following this illustrative case we will prove that each step does what we expect in general. Consider $N = 5$ and $n = 3$. The algorithm is as follows:

1. Begin with the state with $n$ active neurons in a row:
   - 11100
2. Generate new states by inserting progressively larger gaps of 0s before each 1, wrapping active states that go beyond the last neuron back to the beginning. This yields $N - 1 = 4$ unique states, including the original state:
   - 11100
   - 10101
   - 11010
   - 10011
3. Finally, “rotate” each state by shifting each pattern of ones and zeros to the right (again wrapping states that go beyond the last neuron). This yields a total of $N(N-1) = 20$ states:
   - 11100 01110 00111 10011 11001
   - 10101 11010 01101 10110 01011
   - 11010 01101 10110 01011 10101
   - 10011 11001 11100 01110 00111
4. Note that each state is represented twice in this collection; removing duplicates, we are left with $N(N-1)/2 = 10$ total states. By inspection we can verify that each neuron is active in $n(N-1)/2 = 6$ states and each pair of neurons is represented in $n(n-1)/2 = 3$ states. Weighting each state with equal probability gives us the values for μ and ν stated in Equation (A53).
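The four steps can be written compactly: after step 3, spike i of the initial run sits at $(s\,i + d) \bmod N$ for spacing s and rotation d. The Python sketch below (an illustration assuming N is prime and $1 \le n < N$; the names are ours) generates the states, removes duplicates as in step 4, and checks the statistics with exact fractions.

```python
from fractions import Fraction
from itertools import combinations

def prime_construction(N, n):
    """Steps 1-4: spike i of the initial run 0..n-1 goes to (s*i + d) mod N for
    every spacing s = 1..N-1 and rotation d = 0..N-1; duplicates are removed.
    Assumes N is prime and 1 <= n < N."""
    states = set()
    for s in range(1, N):
        for d in range(N):
            spikes = {(s * i + d) % N for i in range(n)}
            states.add("".join("1" if l in spikes else "0" for l in range(N)))
    return sorted(states)

def uniform_stats(states):
    """Exact per-neuron and per-pair activity with all states equally likely."""
    N, total = len(states[0]), len(states)
    mu = [Fraction(sum(st[l] == "1" for st in states), total) for l in range(N)]
    nu = [Fraction(sum(st[a] == st[b] == "1" for st in states), total)
          for a, b in combinations(range(N), 2)]
    return mu, nu

states = prime_construction(5, 3)
mu, nu = uniform_stats(states)
print(len(states), set(mu), set(nu))   # 10 {Fraction(3, 5)} {Fraction(3, 10)}
```

Removing duplicates does not bias the statistics, because every distinct state appears the same number of times in the full collection.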

Now we will prove that this construction works in general for N prime and any value of n by establishing (1) that step 2 of the above algorithm produces a set of states with n spikes; (2) that this method produces a set of states that when weighted with equal probability yield neurons that all have the same firing rates and pairwise statistics; and (3) that this method produces at least double redundancy in the states generated as stated in step 4 (although in general there may be a greater redundancy). In discussing (1) and (2) we will neglect the issue of redundancy and consider the states produced through step 3 as distinct.

First we prove that step 2 always produces states with n active neurons, which is to say that no two spikes are mapped to the same location as we shift them around. We will refer to the identity of the spikes by their location in the original starting state; this is important as the operations in steps 2 and 3 will change the relative ordering of the original spikes in their new states. With this in mind, the ith spike with a spacing of s between spikes is moved to the new location l (here the original state with all spikes in a row is $s = 1$):

$$l = s\,i \bmod N,$$

where $i \in \{0, 1, \dots, n-1\}$ and $s \in \{1, 2, \dots, N-1\}$. In this form, our statement of the problem reduces to demonstrating that for given values of s and N, no two values of i will result in the same l. This is easy to show by contradiction. If two spikes $i_1 \neq i_2$ landed on the same site,

$$s\,i_1 \equiv s\,i_2 \pmod N \quad\Longrightarrow\quad s\,(i_1 - i_2) \equiv 0 \pmod N.$$

For this to be true, either s or $i_1 - i_2$ must contain a factor of N, but both are nonzero and smaller than N in magnitude, so we have a contradiction. This also demonstrates why N must be prime: if it were not, it would be possible to satisfy this equation in cases where s and $i_1 - i_2$ contain between them all the factors of N.

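It is worth noting that this also shows that there is a one-to-one mapping between s and l for any fixed $i \neq 0$. In other words, as s runs from 1 to $N - 1$ in step 2, each spike other than the first ($i = 0$, which always stays at $l = 0$) is taken to every other neuron exactly once. For example, if $N = 5$ and we fix $i = 2$:

$$s = 1, 2, 3, 4 \;\longmapsto\; l = 2, 4, 1, 3.$$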
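The collision argument can also be checked mechanically. This small sketch (ours; the helper names are hypothetical) tests whether any spacing s maps two of the n spikes to the same neuron, and reproduces the mapping for $N = 5$, $i = 2$. With the composite size $N = 6$, the spacing $s = 3$ sends spikes 0 and 2 to the same site.

```python
def step2_positions(N, n, s):
    """Step 2 location l = s*i mod N of each spike i = 0..n-1 for spacing s."""
    return [(s * i) % N for i in range(n)]

def has_collision(N, n):
    """True if some spacing s maps two spikes onto the same neuron."""
    return any(len(set(step2_positions(N, n, s))) < n for s in range(1, N))

# For fixed i != 0 and prime N, s -> s*i mod N hits every nonzero site once.
print([(s * 2) % 5 for s in range(1, 5)])        # [2, 4, 1, 3]
print(has_collision(5, 3), has_collision(6, 3))  # False True
```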

If we now perform the operation in step 3, then the location l of spike i becomes

$$l = (s\,i + d) \bmod N,$$

where d is the amount by which the state has been rotated (the first column in step 3 is $d = 0$, the second is $d = 1$, etc.). Note that step 3 trivially preserves the number of spikes in our states, so we have established that steps 2 and 3 produce only states with n spikes.

We now show that each neuron is active, and each pair of neurons is simultaneously active, in the same number of states. This way when each of these states is weighted with equal probability, we find symmetric statistics for these two quantities.

Beginning with the firing rate, we ask how many states contain a spike at location l. In other words, how many combinations of s, i, and d can we take such that Equation (A56) is satisfied for a given l? For each choice of s and i there is a unique value of d that satisfies the equation. s can take values between 1 and $N - 1$, and i takes values from 0 to $n - 1$, which gives us $n(N-1)$ states that include a spike at location l. Dividing by the total number of states, $N(N-1)$, we obtain an average firing rate of

$$\mu = \frac{n(N-1)}{N(N-1)} = \frac{n}{N}.$$

Consider neurons at $l_1$ and $l_2$; we wish to know how many values of s, d, and $i_1 \neq i_2$ we can pick so that

$$l_1 = (s\,i_1 + d) \bmod N, \qquad l_2 = (s\,i_2 + d) \bmod N.$$

Taking the difference between these two equations, we find

$$l_1 - l_2 \equiv s\,(i_1 - i_2) \pmod N.$$

From our discussion above, we know that this equation uniquely specifies s for any choice of $i_1$ and $i_2$. Furthermore, we must pick d such that Equations (A58) and (A59) are satisfied. This means that for each choice of $i_1$ and $i_2$ there is a unique choice of s and d, which results in a state that includes active neurons at locations $l_1$ and $l_2$. Swapping $i_1$ and $i_2$ will result in a different s and d. Therefore, we have $n(n-1)$ states that include any given pair, one for each ordered choice of $i_1$ and $i_2$. Dividing this number by the total number of states, we find a correlation equal to

$$\nu = \frac{n(n-1)}{N(N-1)},$$

where N is prime.

Finally, we return to the question of redundancy among states generated by steps 1 through 3 of the algorithm. There may be a high level of redundancy for choices of n that are small or close to N, but we can show that there is always at least a twofold degeneracy. This does not affect our calculation of μ and ν above, but it does alter the number of states, and hence the entropy of the system.

The source of the twofold symmetry can be seen immediately by noting that the third and fourth rows of our example contain the same set of states as the second and first, respectively. The reason for this is that each state in the $s = N - 1$ case involves spikes that are one leftward step away from each other, just as the $s = 1$ case involves spikes that are one rightward step away from each other. The labels we have been using to refer to the spikes have reversed order, but the sets of states are identical. Similarly, the $s = N - 2$ case contains all states with spikes separated by two leftward shifts, just as the $s = 2$ case contains those separated by two rightward shifts. Therefore, the set of states with spacing s is equivalent to the set of states with spacing $N - s$. Taking this degeneracy into account, there are at most $N(N-1)/2$ unique states; each neuron spikes in $n(N-1)/2$ of these states, and any given pair spikes together in $n(n-1)/2$ states.

Because these states each have equal probability, the entropy of this system is bounded from above by

$$S \le \log_2\frac{N(N-1)}{2},$$

where N is prime. As mentioned above, we write this as an inequality because further degeneracies among states, beyond the factor of two that always occurs, are possible for some prime numbers. In fact, in order to avoid non-monotonic behavior, the curves for this construction shown in Figure 1 and Figure 3 of the main text were generated using the lowest entropy found for the 10 smallest primes greater than N for each value of N.

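We can extend this result to arbitrary values of N, including non-primes, by invoking the Bertrand–Chebyshev theorem, which states that for any integer $N > 1$ there always exists at least one prime number p with $N < p < 2N$. Constructing the distribution for p neurons and ignoring all but N of them leaves the means and pairwise correlations of the remaining neurons unchanged and cannot increase the entropy, so

$$S \le \log_2\!\left[\frac{p(p-1)}{2}\right] < \log_2\!\left[N(2N-1)\right] < 1 + 2\log_2 N,$$

where $N > 1$ is any integer. Unlike the maximum entropy and the entropy of the exchangeable solution, both of which we have shown to be extensive quantities, this bound scales only logarithmically with the system size N.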
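A small sketch of the Bertrand–Chebyshev step: search for the smallest prime strictly greater than N (matching the theorem's statement; when N is itself prime one could simply use p = N) and evaluate the bound $\log_2[p(p-1)/2]$. The helper names are hypothetical, and trial division is only meant for modest N.

```python
import math


def is_prime(m):
    """Trial division; adequate for the modest sizes used here."""
    if m < 2:
        return False
    f = 2
    while f * f <= m:
        if m % f == 0:
            return False
        f += 1
    return True


def smallest_prime_above(N):
    p = N + 1
    while not is_prime(p):
        p += 1
    return p


def prime_construction_bound(N):
    """log2(p(p-1)/2) for the smallest prime p > N."""
    p = smallest_prime_above(N)
    return math.log2(p * (p - 1) / 2)


for N in (2, 10, 100, 1000, 10_000):
    p = smallest_prime_above(N)
    assert N < p < 2 * N  # Bertrand-Chebyshev guarantees this for N > 1
    print(N, p, round(prime_construction_bound(N), 2),
          round(1 + 2 * math.log2(N), 2))
```

The last two columns compare the prime-based bound with the simpler $1 + 2\log_2 N$.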

Appendix F. Another Low Entropy Construction for the Communications Regime, $\mu = 1/2$ & $\nu = 1/4$

In addition to the probability distribution described in the previous section, we also rediscovered another low entropy construction in the regime most relevant for engineered communications systems ($\mu = 1/2$, $\nu = 1/4$) that allows us to satisfy our constraints for a system of N neurons with only $2N$ active states. Below we describe a recursive algorithm for determining the states for arbitrarily large systems: the states needed for $N = 2^{q+1}$ are built from the states needed for $N = 2^q$, where q is any positive integer. The resulting array is sometimes referred to as a Hadamard matrix. Interestingly, this specific example goes back to Sylvester in 1867 [], and it was recently discussed in the context of neural modeling by Macke and colleagues [].

We begin with $N = 2$. Here we can easily write down a set of states that, when weighted equally, lead to the desired statistics. Listing these states as rows of zeros and ones, we see that they include all possible two-neuron states:

$$\begin{pmatrix} 0 & 0 \\ 0 & 1 \\ 1 & 0 \\ 1 & 1 \end{pmatrix}$$

In order to find the states needed for we replace each 1 in the above by

and each 0 by

to arrive at a new array with twice as many neurons and twice as many states with nonzero probability:

By inspection, we can verify that each new neuron spikes in half of the above states and each pair spikes together in a quarter of them. This procedure preserves $\mu_i = 1/2$, $\nu_{ij} = 1/4$, and the third-order moments $\langle x_i x_j x_k \rangle = 1/8$ for all neurons, thus providing a distribution that mimics the statistics of independent binary variables up to third order (although not at higher orders). Let us consider the proof that $\mu_i = 1/2$ is preserved by this transformation. In the process of doubling the number of states from $2N$ to $4N$, each neuron with firing rate $\mu$ "produces" two new neurons. It is clear from Equations (A65) and (A66) that their firing rates obey the following two relations,

It is clear from these equations that if we begin with $\mu = 1/2$, this value will be preserved by the transformation. By similar, but more tedious, methods one can show that the pairwise and third-order statistics are preserved as well.
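The doubling procedure can be sketched in a few lines. The 2×2 blocks are not reproduced in this copy of the text, so the substitution used here is an assumption: $1 \to \begin{pmatrix}1&1\\1&0\end{pmatrix}$ and $0 \to \begin{pmatrix}0&0\\0&1\end{pmatrix}$, which is the 0/1 image of Sylvester's doubling $H \to \begin{pmatrix}H&H\\H&-H\end{pmatrix}$. The check confirms that moments up to third order match independent fair bits while fourth-order moments do not.

```python
from fractions import Fraction
from itertools import combinations

# Assumed substitution rule (our choice, not necessarily the paper's blocks).
BLOCK = {1: ((1, 1), (1, 0)), 0: ((0, 0), (0, 1))}


def double(states):
    """Replace every entry by its 2x2 block: twice the neurons, twice the states."""
    doubled = []
    for row in states:
        for r in (0, 1):
            doubled.append(tuple(bit for x in row for bit in BLOCK[x][r]))
    return doubled


def sylvester_states(q):
    """The 2N equally weighted states for N = 2**q neurons (q >= 1)."""
    states = [(0, 0), (0, 1), (1, 0), (1, 1)]  # all two-neuron states
    for _ in range(q - 1):
        states = double(states)
    return states


def moments_of_order(states, k):
    """Set of distinct values of <x_i1 ... x_ik> over all k-subsets of neurons."""
    N, total = len(states[0]), len(states)
    return {Fraction(sum(all(st[i] for i in idx) for st in states), total)
            for idx in combinations(range(N), k)}


states = sylvester_states(3)  # N = 8 neurons, 16 states
print(len(states[0]), len(states))  # 8 16
for k in (1, 2, 3, 4):
    # k = 1, 2, 3 each give a single value (1/2, 1/4, 1/8); k = 4 gives 1/16 and 1/8
    print(k, sorted(moments_of_order(states, k)))
```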

Therefore, we are able to build up arbitrarily large groups of neurons that satisfy our statistics using only $2N$ states by repeating the procedure that took us from $N = 2$ to $N = 4$. Since these states are weighted with equal probability, we have an entropy that grows only logarithmically with N:

$$S = \log_2(2N) = 1 + \log_2 N.$$

We mention briefly a geometrical interpretation of this probability distribution. The active states in this distribution can be thought of as a subset of corners on an N-dimensional hypercube with the property that the separation of almost every pair is the same. Specifically, for each active state, all but one of the other active states lie at a Hamming distance of exactly $N/2$ from the original state; the remaining state is on the opposite side of the cube, at a Hamming distance of N. In other words, for any pair of polar opposite active states, there are $2N - 2$ active states around the “equator”.
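The hypercube picture can be verified directly by tabulating Hamming distances between active states. As before, the 2×2 substitution rule in this sketch is an assumption ($1 \to \begin{pmatrix}1&1\\1&0\end{pmatrix}$, $0 \to \begin{pmatrix}0&0\\0&1\end{pmatrix}$), chosen to match Sylvester's doubling.

```python
from collections import Counter


def sylvester_states(q):
    """2N states for N = 2**q neurons, via the assumed block substitution
    1 -> [[1, 1], [1, 0]] and 0 -> [[0, 0], [0, 1]]."""
    block = {1: ((1, 1), (1, 0)), 0: ((0, 0), (0, 1))}
    states = [(0, 0), (0, 1), (1, 0), (1, 1)]
    for _ in range(q - 1):
        states = [tuple(b for x in row for b in block[x][r])
                  for row in states for r in (0, 1)]
    return states


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def distance_profile(states, st):
    """How many other active states lie at each Hamming distance from st."""
    return Counter(hamming(st, other) for other in states if other != st)


states = sylvester_states(4)  # N = 16 neurons, 32 states
N = len(states[0])
for st in states:
    assert distance_profile(states, st) == Counter({N // 2: 2 * N - 2, N: 1})
print(distance_profile(states, states[0]))  # Counter({8: 30, 16: 1})
```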

We can extend Equation (A70) to numbers of neurons that are not powers of 2 by building the construction for the smallest power of 2 at least as great as N and keeping only N of its neurons, so that in general:

$$S \le 1 + \lceil \log_2 N \rceil < 2 + \log_2 N.$$
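A sketch of this extension, assuming the same hypothetical block substitution as above: build the states for $M = 2^{\lceil \log_2 N \rceil}$ neurons and keep the first N columns. Dropping neurons leaves the remaining means and pairwise moments unchanged, and merging any duplicate states can only lower the entropy.

```python
import math
from collections import Counter
from fractions import Fraction
from itertools import combinations


def sylvester_states(q):
    """Assumed doubling rule 1 -> [[1,1],[1,0]], 0 -> [[0,0],[0,1]] (N = 2**q)."""
    block = {1: ((1, 1), (1, 0)), 0: ((0, 0), (0, 1))}
    states = [(0, 0), (0, 1), (1, 0), (1, 1)]
    for _ in range(q - 1):
        states = [tuple(b for x in row for b in block[x][r])
                  for row in states for r in (0, 1)]
    return states


def states_for_any_N(N):
    """Build the construction for the smallest power of 2 that is >= N
    and keep only the first N neurons (a hypothetical helper, N >= 2)."""
    q = max(1, (N - 1).bit_length())
    return [st[:N] for st in sylvester_states(q)]


def entropy_bits(states):
    total = len(states)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(states).values())


for N in (3, 5, 6, 12, 20, 33):
    states = states_for_any_N(N)
    pairs = {Fraction(sum(s[i] * s[j] for s in states), len(states))
             for i, j in combinations(range(N), 2)}
    assert pairs == {Fraction(1, 4)}      # truncation keeps nu_ij = 1/4
    assert entropy_bits(states) < 2 + math.log2(N)
    print(N, len(states), round(entropy_bits(states), 3),
          round(2 + math.log2(N), 3))
```

Using `bit_length` rather than `math.log2` keeps the power-of-two computation exact for every integer N.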

By adding two other states, we can extend this probability distribution so that it covers most of the allowed region for $\mu$ and $\nu$ while remaining a low entropy solution, as we describe in Appendix G.

We remark that the authors of [,] provide a lower bound on the smallest possible sample size of a pairwise independent binary distribution, which shows that the sample size of our construction is essentially optimal.

Appendix G. Extending the Range of Validity for the Constructions

We now show that each of these low entropy probability distributions can be generalized to cover much of the allowed region depicted in Figure A2; in fact, the distribution derived in Appendix E can be extended to include all possible combinations of the constraints $\mu$ and $\nu$. This can be accomplished by including two additional states: the state where all neurons are silent and the state where all neurons are active. If we weight these states by probabilities $p_0$ and $p_1$, respectively, and allow the original states to carry total probability $q$, normalization requires

$$p_0 + p_1 + q = 1.$$

We can express the values of the new constraints ($\mu'$ and $\nu'$) in terms of the original constraint values ($\mu$ and $\nu$) as follows:

$$\mu' = q\,\mu + p_1, \qquad \nu' = q\,\nu + p_1,$$

since the all-silent state contributes nothing to any mean or correlation and the all-active state contributes 1 to each. These values span a triangular region in the ($\mu'$, $\nu'$) plane, with vertices $(0, 0)$, $(1, 1)$, and $(\mu, \nu)$, that covers the majority of satisfiable constraints. Figure A5 illustrates this situation. Note that by starting with other values of $\mu$ and $\nu$, we can construct a low entropy solution for any possible constraints $\mu'$ and $\nu'$.
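The mixing step is linear, so the weights needed for a given target can be solved for directly. In this sketch the names `p0`, `p1`, `q`, `mu_new`, and `nu_new` are our own labels for the weights of the all-silent state, the all-active state, and the original states, and for the target statistics. A target is reachable exactly when all three weights come out non-negative, i.e. when it lies in the triangle with vertices (0, 0), (1, 1), and (μ, ν). The example uses μ = 3/7, ν = 1/7, the values produced by the N = 7, n = 3 prime construction.

```python
from fractions import Fraction as F


def mixed_moments(mu, nu, p0, p1, q):
    """Uniform (mu, nu) of the original states after mixing in the all-silent
    state (weight p0) and the all-active state (weight p1); the original
    states keep total weight q. Names are ours, for illustration."""
    assert abs(p0 + p1 + q - 1) < 1e-12, "weights must be normalized"
    # The all-silent state adds 0 to every moment; the all-active state adds 1.
    return q * mu + p1, q * nu + p1


def mixture_weights(mu, nu, mu_new, nu_new):
    """Invert mixed_moments for (p0, p1, q), assuming mu > nu. Returns None
    when the target lies outside the triangle (0, 0), (1, 1), (mu, nu)."""
    q = (mu_new - nu_new) / (mu - nu)
    p1 = mu_new - q * mu
    p0 = 1 - q - p1
    if min(p0, p1, q) < 0:
        return None
    return p0, p1, q


# Example: original statistics mu = 3/7, nu = 1/7; target mu' = 1/2, nu' = 1/4.
weights = mixture_weights(F(3, 7), F(1, 7), F(1, 2), F(1, 4))
print(weights)  # (Fraction(0, 1), Fraction(1, 8), Fraction(7, 8))
assert mixed_moments(F(3, 7), F(1, 7), *weights) == (F(1, 2), F(1, 4))
assert mixture_weights(F(3, 7), F(1, 7), F(1, 2), F(0)) is None
```

Subtracting the two mixing equations eliminates $p_1$ and gives $q = (\mu' - \nu')/(\mu - \nu)$, which is why the solver needs $\mu > \nu$.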

With the addition of these two states, the entropy of the expanded system is bounded from above by

For given values of and , the are fixed and only the first term depends on N. Using Equations (A73) and (A74),

This allows us to rewrite Equation (A75) as

We are free to select and to minimize the first coefficient for a desired and , but in general we know this coefficient is less than 1, giving us a simple bound:

Like the original distribution, the entropy of this distribution scales logarithmically with N. Therefore, by picking our original distribution properly, we can find low entropy distributions for any allowed $\mu$ and $\nu$ for which the number of active states grows as a polynomial in N (see Figure A5).
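The entropy of the extended distribution can be computed with the grouping rule for entropy: first pick one of the three groups (all-silent, all-active, original states) with probabilities $(p_0, p_1, q)$, then pick a state within the original group. This sketch assumes the original states never include the all-silent or all-active state, which holds for the prime construction whenever $1 \le n \le N-1$. The illustrative numbers, K = 21 equally weighted states with weights (0, 1/8, 7/8), correspond to the N = 7, n = 3 case.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits, ignoring zero-probability entries."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def extended_entropy(p0, p1, q, base_entropy):
    """Grouping rule: S = H(p0, p1, q) + q * S_base. Valid when the original
    distribution gives no weight to the all-silent or all-active state."""
    return entropy_bits([p0, p1, q]) + q * base_entropy


# Illustrative numbers (assumptions): K = 21 equally weighted original states,
# as for the N = 7, n = 3 progression construction, mixed with weights below.
K = 21
p0, p1, q = 0.0, 1 / 8, 7 / 8
direct = entropy_bits([p0, p1] + [q / K] * K)
grouped = extended_entropy(p0, p1, q, math.log2(K))
assert math.isclose(direct, grouped)
assert direct <= math.log2(K + 2)           # never more than log2(#active states)
assert direct <= math.log2(3) + math.log2(K)
print(round(direct, 4))
```

Only the $q \log_2 K$ term depends on N, which is the sense in which the extended solution inherits the logarithmic scaling of the original one.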

Figure A5.

The full shaded region includes all allowed values for the constraints $\mu$ and $\nu$ for all possible probability distributions, replotted from Figure A2. As described in Appendix E and Appendix G, one of our low-entropy constructed solutions can be matched to any of the allowed constraint values in the full shaded region, whereas the constructed solution described in Appendix F can achieve any of the values within the triangular purple shaded region. Note that even with this second solution, we can cover most of the allowed region. Each of our constructed solutions has an entropy that scales as $\log N$.

Similarly, we can extend the range of validity for the construction described in Appendix F to the triangular region shown in Figure A2 by assigning probabilities $p_0$, $p_1$, and $q$ to the all-silent state, the all-active state, and (in total) the remaining states of the original model, respectively. The entropy of this extended distribution can be no greater than the entropy of the original distribution (Equation (A71)): the same number of states are active (the all-silent and all-active states already belong to the original construction), but they are no longer weighted equally, so this remains a low entropy distribution.

Appendix H. Proof of the Lower Bound on Entropy for Any Distribution Consistent with Given $\mu_i$ & $\nu_{ij}$

Using the concavity of the logarithm function, we can derive a lower bound on the minimum entropy. Our lower bound asymptotes to a constant except for the special case $\mu_i = 1/2\ \forall i$ and $\nu_{ij} = 1/4\ \forall i \neq j$, which is especially relevant for communication systems since it matches the low-order statistics of the global maximum entropy distribution for an unconstrained set of binary variables.

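We begin by bounding the entropy from below as follows:

$$S(\mathbf{p}) = -\sum_i p_i \log_2 p_i = -\left\langle \log_2 p_i \right\rangle_{\mathbf{p}} \ge -\log_2 \left\langle p_i \right\rangle_{\mathbf{p}} = -\log_2 \sum_i p_i^2,$$

where $\mathbf{p}$ represents the full vector of all state probabilities, and we have used $\langle \cdot \rangle_{\mathbf{p}}$ to denote an average over the distribution $\mathbf{p}$. The third step follows from Jensen’s inequality applied to the convex function $-\log_2(x)$.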
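The Jensen step says that Shannon entropy is never smaller than $-\log_2 \sum_i p_i^2$ (the collision, or Rényi order-2, entropy). A quick randomized check, with equality for a uniform distribution:

```python
import math
import random


def shannon_bits(p):
    return -sum(x * math.log2(x) for x in p if x > 0)


def collision_bound_bits(p):
    """-log2(sum_i p_i**2): the Jensen lower bound on the Shannon entropy."""
    return -math.log2(sum(x * x for x in p))


rng = random.Random(0)
for _ in range(1000):
    w = [rng.uniform(0.01, 1.0) for _ in range(rng.randint(1, 50))]
    p = [x / sum(w) for x in w]
    assert shannon_bits(p) >= collision_bound_bits(p) - 1e-12

uniform = [1 / 16] * 16
print(shannon_bits(uniform), collision_bound_bits(uniform))  # 4.0 4.0
```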

Now we seek an upper bound on $\sum_i p_i^2$. This can be obtained by starting with the matrix representation C of the constraints (for now, we consider each state of the system, $\mathbf{x}^i$, as a binary column vector, where i denotes the state and each of the N components is either 1 or 0):

$$C = \sum_i p_i\, \mathbf{x}^i (\mathbf{x}^i)^{T},$$

where C is an $N \times N$ matrix. In this form, the diagonal entries of C, $C_{jj}$, are equal to $\mu_j$, and the off-diagonal entries, $C_{jk}$, are equal to $\nu_{jk}$.

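For the calculation that follows, it is expedient to represent words of the system as $\boldsymbol{\sigma}^i \in \{-1, 1\}^N$ rather than $\mathbf{x}^i \in \{0, 1\}^N$ (i.e., −1 represents a silent neuron instead of 0). The relationship between the two can be written

$$\mathbf{x}^i = \tfrac{1}{2}\left(\boldsymbol{\sigma}^i + \mathbf{1}\right),$$

where $\mathbf{1}$ is the vector of all ones. Using this expression, we can relate the analogous matrix $\tilde{C} = \sum_i p_i\, \boldsymbol{\sigma}^i (\boldsymbol{\sigma}^i)^{T}$ to C:

$$C = \frac{1}{4} \sum_i p_i \left(\boldsymbol{\sigma}^i + \mathbf{1}\right)\left(\boldsymbol{\sigma}^i + \mathbf{1}\right)^{T}.$$

This reduces to

$$\tilde{C} = 4C - 2\left(\boldsymbol{\mu}\mathbf{1}^{T} + \mathbf{1}\boldsymbol{\mu}^{T}\right) + \mathbf{1}\mathbf{1}^{T},$$

where $\boldsymbol{\mu}$ is the vector of mean values, so that $\tilde{C}_{jj} = 1$ and $\tilde{C}_{jk} = 4\nu_{jk} - 2\mu_j - 2\mu_k + 1$ for $j \neq k$.

Returning to Equation (A80) to find an upper bound on $\sum_i p_i^2$, we take the square of the Frobenius norm of $\tilde{C}$:

$$\|\tilde{C}\|_F^2 = \sum_{i, i'} p_i\, p_{i'} \left[(\boldsymbol{\sigma}^i)^{T} \boldsymbol{\sigma}^{i'}\right]^2 \ge \sum_i p_i^2 \left[(\boldsymbol{\sigma}^i)^{T} \boldsymbol{\sigma}^{i}\right]^2 = N^2 \sum_i p_i^2.$$

The final step is where our new representation pays off: in this representation, $(\boldsymbol{\sigma}^i)^{T}\boldsymbol{\sigma}^i = N$. This gives us the desired upper bound for $\sum_i p_i^2$:

$$\sum_i p_i^2 \le \frac{\|\tilde{C}\|_F^2}{N^2}.$$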

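Combining this result with Equations (A87) and (A79), we obtain a lower bound for the entropy of any distribution consistent with any given sets of values $\mu_i$ and $\nu_{ij}$:

$$S \ge \log_2\!\left[\frac{N}{1 + (N-1)\,\bar{\alpha}}\right],$$

where $\alpha_{jk} = \left(4\nu_{jk} - 2\mu_j - 2\mu_k + 1\right)^2$ and $\bar{\alpha}$ is the average value of $\alpha_{jk}$ over all $j, k$ with $j \neq k$.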
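The chain of bounds can be checked on random distributions. This sketch (helper names are ours) builds the ±1 second-moment matrix, here called `C_tilde`, both directly and from the 0/1 statistics via $\tilde C_{jk} = 4\nu_{jk} - 2\mu_j - 2\mu_k + 1$, then confirms $\sum_i p_i^2 \le \|\tilde C\|_F^2/N^2$ and $S \ge \log_2(N^2/\|\tilde C\|_F^2)$. States are drawn without repetition so that each listed probability is the probability of a distinct state.

```python
import math
import random


def spin_second_moments(states, p):
    """C_tilde[j][k] = sum_i p_i * sigma_j^i * sigma_k^i for +/-1 states."""
    N = len(states[0])
    return [[sum(pi * s[j] * s[k] for pi, s in zip(p, states)) for k in range(N)]
            for j in range(N)]


def from_binary_moments(mu, nu):
    """The same matrix from 0/1 statistics via x = (sigma + 1) / 2:
    ones on the diagonal, 4 nu_jk - 2 mu_j - 2 mu_k + 1 off the diagonal."""
    N = len(mu)
    return [[1.0 if j == k else 4 * nu[j][k] - 2 * mu[j] - 2 * mu[k] + 1
             for k in range(N)] for j in range(N)]


def random_distribution(rng, N, n_states):
    """Random weights on n_states distinct +/-1 states of N units."""
    codes = rng.sample(range(2 ** N), n_states)
    states = [[1 if (c >> j) & 1 else -1 for j in range(N)] for c in codes]
    w = [rng.uniform(0.01, 1.0) for _ in codes]
    total = sum(w)
    return states, [x / total for x in w]


def check_bounds(states, p):
    N = len(states[0])
    xs = [[(v + 1) // 2 for v in s] for s in states]  # back to 0/1 words
    mu = [sum(pi * x[j] for pi, x in zip(p, xs)) for j in range(N)]
    nu = [[sum(pi * x[j] * x[k] for pi, x in zip(p, xs)) for k in range(N)]
          for j in range(N)]
    ct = spin_second_moments(states, p)
    alt = from_binary_moments(mu, nu)
    assert all(math.isclose(a, b, abs_tol=1e-12)
               for ra, rb in zip(ct, alt) for a, b in zip(ra, rb))
    frob2 = sum(v * v for row in ct for v in row)
    assert sum(pi * pi for pi in p) <= frob2 / N ** 2 + 1e-12
    entropy = -sum(pi * math.log2(pi) for pi in p)
    lower = math.log2(N ** 2 / frob2)
    assert entropy >= lower - 1e-12
    return entropy, lower


rng = random.Random(1)
print(check_bounds(*random_distribution(rng, 6, 10)))
```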

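In the case of uniform constraints, this becomes

$$S \ge \log_2\!\left[\frac{N}{1 + (N-1)\,\alpha}\right],$$

where $\alpha = \left(4\nu - 4\mu + 1\right)^2$.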

For large values of N this lower bound asymptotes to the constant

$$-\log_2\left(4\nu - 4\mu + 1\right)^2.$$
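To see the two regimes side by side, the uniform bound can be evaluated for a few constraint pairs. The closed form used here, $\log_2[N/(1 + (N-1)\alpha)]$ with $\alpha = (4\nu - 4\mu + 1)^2$, is what $\log_2(N^2/\|\tilde C\|_F^2)$ becomes when all $\mu_i = \mu$ and all $\nu_{ij} = \nu$. The pairs are chosen to be realizable for large N (in particular $\nu \ge \mu^2$).

```python
import math


def uniform_lower_bound(N, mu, nu):
    """log2(N / (1 + (N - 1) * alpha)), alpha = (4 nu - 4 mu + 1)**2: the form
    log2(N**2 / ||C_tilde||_F**2) takes when all mu_i = mu and nu_ij = nu."""
    alpha = (4 * nu - 4 * mu + 1) ** 2
    return math.log2(N / (1 + (N - 1) * alpha))


for mu, nu in ((0.5, 0.3), (0.1, 0.02), (0.5, 0.25)):
    alpha = (4 * nu - 4 * mu + 1) ** 2
    limit = -math.log2(alpha) if alpha > 0 else math.inf
    bounds = [round(uniform_lower_bound(N, mu, nu), 3) for N in (10, 100, 10_000)]
    print(f"mu={mu}, nu={nu}: bounds {bounds}, large-N limit {limit:.3f}")
```

Only the communication regime ($\mu = 1/2$, $\nu = 1/4$, so $\alpha = 0$) gives a bound that keeps growing, as $\log_2 N$.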

The one exception is when . In the large N limit, this case is limited to when and for all i, j. Each is positive semi-definite; therefore, only when each . In other words,

But in the large N limit,

Without loss of generality, we assume that . In this case,

and

Of course, that means that in order to satisfy this condition, each pair must have one $\mu$ less than or equal to 1/2 and the other greater than or equal to 1/2. The only way this may be true for all possible pairs is if all $\mu_i$ are equal to 1/2. According to Equation (A96), all $\nu_{ij}$ must then be equal to 1/4. This is precisely the communication regime, and in this case our lower bound scales logarithmically with N,

$$S \ge \log_2 N.$$

References

- Pathria, R. Statistical Mechanics; Butterworth-Heinemann: Oxford, UK, 1972.

- Russ, W.; Lowery, D.; Mishra, P.; Yaffe, M.; Ranganathan, R. Natural-like function in artificial WW domains. Nature 2005, 437, 579–583.

- Socolich, M.; Lockless, S.W.; Russ, W.P.; Lee, H.; Gardner, K.H.; Ranganathan, R. Evolutionary information for specifying a protein fold. Nature 2005, 437, 512–518.

- Mora, T.; Walczak, A.M.; Bialek, W.; Callan, C.G. Maximum entropy models for antibody diversity. Proc. Natl. Acad. Sci. USA 2010, 107, 5405–5410.

- Schneidman, E.; Berry, M.J.; Segev, R.; Bialek, W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature 2006, 440, 1007–1012.

- Shlens, J.; Field, G.D.; Gauthier, J.L.; Grivich, M.I.; Petrusca, D.; Sher, A.; Litke, A.M.; Chichilnisky, E.J. The structure of multi-neuron firing patterns in primate retina. J. Neurosci. 2006, 26, 8254–8266.

- Tkačik, G.; Schneidman, E.; Berry, M.J., II; Bialek, W. Ising models for networks of real neurons. arXiv, 2006; arXiv:q-bio/0611072.

- Tang, A.; Jackson, D.; Hobbs, J.; Chen, W.; Smith, J.; Patel, H.; Prieto, A.; Petrusca, D.; Grivich, M.I.; Sher, A.; et al. A maximum entropy model applied to spatial and temporal correlations from cortical networks in vitro. J. Neurosci. 2008, 28, 505–518.

- Shlens, J.; Field, G.D.; Gauthier, J.L.; Greschner, M.; Sher, A.; Litke, A.M.; Chichilnisky, E.J. The Structure of Large-Scale Synchronized Firing in Primate Retina. J. Neurosci. 2009, 29, 5022–5031.

- Ganmor, E.; Segev, R.; Schneidman, E. Sparse low-order interaction network underlies a highly correlated and learnable neural population code. Proc. Natl. Acad. Sci. USA 2011, 108, 9679–9684.

- Yu, S.; Huang, D.; Singer, W.; Nikolic, D. A small world of neuronal synchrony. Cereb. Cortex 2008, 18, 2891–2901.

- Köster, U.; Sohl-Dickstein, J. Higher order correlations within cortical layers dominate functional connectivity in microcolumns. arXiv, 2013; arXiv:1301.0050v1.

- Hamilton, L.S.; Sohl-Dickstein, J.; Huth, A.G.; Carels, V.M.; Deisseroth, K.; Bao, S. Optogenetic activation of an inhibitory network enhances feedforward functional connectivity in auditory cortex. Neuron 2013, 80, 1066–1076.

- Bialek, W.; Cavagna, A.; Giardina, I.; Mora, T.; Silvestri, E.; Viale, M.; Walczak, A. Statistical mechanics for natural flocks of birds. arXiv, 2011; arXiv:1107.0604.

- Jaynes, E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957, 106, 620–630.

- Bethge, M.; Berens, P. Near-maximum entropy models for binary neural representations of natural images. In Advances in Neural Information Processing Systems; Platt, J., Koller, D., Singer, Y., Roweis, S., Eds.; MIT Press: Cambridge, MA, USA, 2008; Volume 20, pp. 97–104.

- Roudi, Y.; Nirenberg, S.H.; Latham, P.E. Pairwise maximum entropy models for studying large biological systems: When they can and when they can’t work. PLoS Comput. Biol. 2009, 5, e1000380.

- Nirenberg, S.H.; Victor, J.D. Analyzing the activity of large populations of neurons: How tractable is the problem? Curr. Opin. Neurobiol. 2007, 17, 397–400.

- Azhar, F.; Bialek, W. When are correlations strong? arXiv, 2010; arXiv:1012.5987.

- Tkačik, G.; Marre, O.; Amodei, D.; Schneidman, E.; Bialek, W.; Berry, M.J. Searching for collective behavior in a large network of sensory neurons. PLoS Comput. Biol. 2014, 10, e1003408.

- Macke, J.H.; Opper, M.; Bethge, M. Common Input Explains Higher-Order Correlations and Entropy in a Simple Model of Neural Population Activity. Phys. Rev. Lett. 2011, 106, 208102.

- Sylvester, J. Thoughts on inverse orthogonal matrices, simultaneous sign successions, and tessellated pavements in two or more colours, with applications to Newton’s rule, ornamental tile-work, and the theory of numbers. Philos. Mag. 1867, 34, 461–475.

- Diaconis, P. Finite forms of de Finetti’s theorem on exchangeability. Synthese 1977, 36, 271–281.

- Shannon, C. A mathematical theory of communication, I and II. Bell Syst. Tech. J. 1948, 27, 379–423.