Abstract

Support vector machine (SVM) is one of the most successful learning methods for solving classification problems. Despite its popularity, SVM has the serious drawback that it is sensitive to outliers in training samples. The penalty on misclassification is defined by a convex loss called the hinge loss, and the unboundedness of the convex loss causes the sensitivity to outliers. To deal with outliers, robust SVMs have been proposed by replacing the convex loss with a non-convex bounded loss called the ramp loss. In this paper, we study the breakdown point of robust SVMs. The breakdown point is a robustness measure that is the largest amount of contamination such that the estimated classifier still gives information about the non-contaminated data. The main contribution of this paper is to show an exact evaluation of the breakdown point of robust SVMs. For learning parameters such as the regularization parameter, we derive a simple formula that guarantees the robustness of the classifier. When the learning parameters are determined with a grid search using cross-validation, our formula works to reduce the number of candidate search points. Furthermore, the theoretical findings are confirmed in numerical experiments. We show that the statistical properties of robust SVMs are well explained by a theoretical analysis of the breakdown point.

1. Introduction

1.1. Background

Support vector machine (SVM) is a highly developed classification method that is widely used in real-world data analysis [1,2]. The most popular implementation is called C-SVM, which uses the maximum margin criterion with a penalty for misclassification. The positive parameter C tunes the balance between the maximum margin and penalty, and the resulting classification problem can be formulated as a convex quadratic problem based on training data. A separating hyper-plane for classification is obtained from the optimal solution of the problem. Furthermore, complex non-linear classifiers are obtained by using the reproducing kernel Hilbert space (RKHS) as a statistical model of the classifiers [3]. There are many variants of SVM for solving binary classification problems, such as ν-SVM, Eν-SVM and least squares SVM [4,5,6]. Moreover, the generalization ability of SVM has been analyzed in many studies [7,8,9].

In practical situations, however, SVM has drawbacks. A notable feature of SVM is that the separating hyperplane is determined mainly from misclassified samples. Thus, the most misclassified samples significantly affect the classifier, meaning that the standard SVM is extremely susceptible to outliers. In C-SVM, the penalties of sample points are measured in terms of the hinge loss, which is a convex surrogate of the 0-1 loss for misclassification. The convexity of the hinge loss causes SVM to be unstable in the presence of outliers, since the convex function is unbounded and puts an extremely large penalty on outliers. One way to remedy the instability is to replace the convex loss with a non-convex bounded loss to suppress outliers. Loss clipping is a simple method to obtain a bounded loss from a convex loss [10,11]. For example, clipping the hinge loss leads to the ramp loss [12,13], which is a loss function used in robust SVMs. Yu et al. [11,14] showed that clipping a convex loss yields a non-convex loss function and proposed a convex relaxation of the resulting non-convex optimization problem to obtain a computationally efficient learning algorithm. The SVM using the ramp loss is regarded as a robust variant of $L_1$-SVM. Recently, Feng et al. [15] also proposed a robust variant of $L_2$-SVM.

1.2. Our Contribution

In this paper, we provide a detailed analysis of the robustness of SVMs. In particular, we deal with a robust variant of kernel-based ν-SVM. The standard ν-SVM [5] has a regularization parameter ν, and it is equivalent to C-SVM; i.e., both methods provide the same classifier for the same training data if the regularization parameters, ν and C, are properly tuned. We generate a robust variant of ν-SVM, called robust (ν, μ)-SVM, by clipping the loss function of ν-SVM with another learning parameter μ. The parameter μ denotes the ratio of samples to be removed from the training dataset as outliers. When the ratio of outliers in the training dataset is bounded above by μ, robust (ν, μ)-SVM is expected to provide a robust classifier.

Robust (ν, μ)-SVM is closely related to other robust SVMs, such as CVaR-$(\alpha_L, \alpha_U)$-SVM [16], the robust outlier detection (ROD) algorithm [17] and extended robust SVM (ER-SVM) [18,19]. In particular, it is equivalent to CVaR-$(\alpha_L, \alpha_U)$-SVM. In this paper, the learning algorithm we consider is referred to as robust (ν, μ)-SVM to emphasize that it is a robust variant of ν-SVM. On the other hand, ROD is to robust (ν, μ)-SVM what C-SVM is to ν-SVM. ER-SVM is another robust extension of ν-SVM, and it includes robust (ν, μ)-SVM as a special case. Both ROD and ER-SVM have a parameter corresponding to μ; i.e., the ratio of outliers to be removed from the training samples. The above learning algorithms share almost the same learning model. The main concern of the past studies was to develop computationally efficient learning algorithms and to confirm the robustness property in numerical experiments.

In this paper, our purpose is a theoretical investigation of the statistical properties of robust SVMs. In particular, we derive the exact finite-sample breakdown point of robust (ν, μ)-SVM. The finite-sample breakdown point indicates the largest amount of contamination such that the estimator still gives information about the non-contaminated data [20] (Chapter 3.2). In order to investigate the breakdown point, we show that the robustness of the learning method is closely related to the dual representation of the optimization problem in the learning algorithm. Indeed, the dual representation provides an intuitive picture of how each sample affects the estimated classifier. Based on this intuition, we calculate the exact breakdown point. This is a new approach to the theoretical analysis of robust statistics.

In the detailed analysis of the breakdown point, we reveal that the finite-sample breakdown point of robust (ν, μ)-SVM is equal to μ if ν and μ satisfy a simple condition. Conversely, we prove that the finite-sample breakdown point is strictly less than μ if the condition is violated. An important point is that our findings provide a way to specify a region of the learning parameters (ν, μ), such that robust (ν, μ)-SVM has the desired robustness property. As a result, one can reduce the number of candidate learning parameters when the grid search of the learning parameters is conducted with cross-validation.

Some of the previous studies are related to ours. In particular, the breakdown point was used to assess the robustness of kernel-based estimators in [14]. In that paper, the influence of a single outlier is considered for a general class of robust estimators in regression problems. In contrast, we focus on a variant of SVM and provide a detailed analysis of the robustness property based on the breakdown point. Our analysis takes into account an arbitrary number of outliers.

The paper is organized as follows. In Section 2, we introduce the problem setup and briefly review the topic of learning algorithms using the standard SVM. Section 3 introduces the robust variant of ν-SVM. We propose a modified learning algorithm of robust (ν, μ)-SVM in order to guarantee the robustness property of local optimal solutions. We show that the dual representation of robust (ν, μ)-SVM has an intuitive interpretation that is of great help for evaluating the breakdown point. In Section 4, we introduce a finite-sample breakdown point as a measure of robustness. Then, we evaluate the breakdown point of robust (ν, μ)-SVM. The robustness of other SVMs is also considered. In Section 5, we discuss a method of tuning the learning parameters ν and μ on the basis of the robustness analysis in Section 4. Section 6 examines the generalization performance of robust (ν, μ)-SVM via numerical experiments. The conclusion is in Section 7. Detailed proofs of the theoretical results are presented in the Appendix.

2. Brief Introduction to Learning Algorithms

First of all, we summarize the notation used throughout this paper. Let $\mathbb{N}$ be the set of positive integers, and let $[m]$ for $m \in \mathbb{N}$ denote the finite subset of $\mathbb{N}$ defined as $\{1, \ldots, m\}$. The set of all real numbers is denoted as $\mathbb{R}$. The function $[z]_+$ is defined as $\max\{z, 0\}$ for $z \in \mathbb{R}$. For a finite set A, the size of A is expressed as $|A|$. For a reproducing kernel Hilbert space (RKHS) $\mathcal{H}$, the norm on $\mathcal{H}$ is denoted as $\|\cdot\|_{\mathcal{H}}$. See [3] for a description of RKHS.

Next, let us introduce the classification problem with an input space $\mathcal{X}$ and binary output labels $\{+1, -1\}$. Given i.i.d. training samples $D = \{(x_i, y_i) : i \in [m]\} \subset \mathcal{X} \times \{+1, -1\}$ drawn from a probability distribution over $\mathcal{X} \times \{+1, -1\}$, a learning algorithm produces a decision function $g : \mathcal{X} \to \mathbb{R}$ such that its sign predicts the output labels for input points in test samples. The decision function $g(x)$ predicts the correct label on the sample $(x, y)$ if and only if the inequality $y g(x) > 0$ holds. The product $y g(x)$ is called the margin of the sample $(x, y)$ for the decision function g [21]. To make an accurate decision function, the margins on the training dataset should take large positive values.

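In kernel-based ν-SVM [5], an RKHS $\mathcal{H}$ endowed with a kernel function $k : \mathcal{X}^2 \to \mathbb{R}$ is used to estimate the decision function $g(x) = f(x) + b$, where $f \in \mathcal{H}$ and $b \in \mathbb{R}$. The misclassification penalty is measured by the hinge loss. More precisely, ν-SVM produces a decision function $g(x) = f(x) + b$ as the optimal solution of the convex problem,

$$\min_{f \in \mathcal{H},\ b, \rho \in \mathbb{R}} \ \frac{1}{2}\|f\|_{\mathcal{H}}^2 - \nu\rho + \frac{1}{m}\sum_{i=1}^{m}\big[\rho - y_i(f(x_i) + b)\big]_+, \tag{1}$$

where $[\rho - y_i(f(x_i) + b)]_+$ is the hinge loss of the margin with the threshold ρ. The second term $-\nu\rho$ is the penalty for the threshold ρ. The parameter ν in the interval (0, 1) is the regularization parameter. Usually, the range of ν that yields a meaningful classifier is narrower than the interval (0, 1), as shown in [5]. The first term in (1) is a regularization term to avoid overfitting to the training data. A large positive margin is preferable for each training sample. The optimal ρ of ν-SVM is non-negative. Indeed, since the objective value at $f = 0$, $b = 0$, $\rho = 0$ is zero, the optimal solution $(f, b, \rho)$ satisfies:

$$-\nu\rho \ \le\ \frac{1}{2}\|f\|_{\mathcal{H}}^2 - \nu\rho + \frac{1}{m}\sum_{i=1}^{m}\big[\rho - y_i(f(x_i) + b)\big]_+ \ \le\ 0.$$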

The representer theorem [22,23] indicates that the optimal decision function of (1) is of the form,

$$g(x) = \sum_{j=1}^{m} \alpha_j k(x, x_j) + b \tag{2}$$

for $\alpha_j \in \mathbb{R}$. Thanks to this theorem, even when $\mathcal{H}$ is an infinite dimensional space, the above optimization problem can be reduced to a finite dimensional convex quadratic problem. This is the great advantage of using RKHS for non-parametric statistical inference [5]. The input point $x_j$ with a non-zero coefficient $\alpha_j$ is called a support vector. A remarkable property of ν-SVM is that the regularization parameter ν provides a lower bound on the fraction of support vectors.
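The kernel expansion given by the representer theorem can be illustrated with a minimal sketch. The Gaussian kernel, its width `gamma`, the coefficients and the sample points below are illustrative assumptions, not values from the paper.

```python
import math


def rbf_kernel(x, z, gamma=1.0):
    """Gaussian kernel k(x, z) = exp(-gamma * ||x - z||^2) (an assumed choice of kernel)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def decision_function(x, train_x, alpha, b, kernel=rbf_kernel):
    """Evaluate g(x) = sum_j alpha_j * k(x, x_j) + b."""
    return sum(a * kernel(x, xj) for a, xj in zip(alpha, train_x)) + b


def support_vectors(train_x, alpha, tol=1e-12):
    """Input points whose coefficient alpha_j is non-zero."""
    return [xj for a, xj in zip(alpha, train_x) if abs(a) > tol]


# Hypothetical training inputs and coefficients, for illustration only.
train_x = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
alpha = [0.5, 0.0, -0.5]
b = 0.1

value = decision_function((0.0, 0.0), train_x, alpha, b)
svs = support_vectors(train_x, alpha)
```

Only the points returned by `support_vectors` contribute to the decision function, which is why the fraction of support vectors is a natural measure of model complexity.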

As pointed out in [24], ν-SVM is closely related to a financial risk measure called conditional value at risk (CVaR) [25]. Suppose that $m\nu \in \mathbb{N}$ holds for a parameter $\nu \in (0, 1)$. Then, the CVaR of samples $r_1, \ldots, r_m \in \mathbb{R}$ at level ν is defined as the average of its ν-tail, i.e., $\frac{1}{m\nu}\sum_{i=1}^{m\nu} r_{\sigma(i)}$, where σ is a permutation on $[m]$ such that $r_{\sigma(1)} \ge \cdots \ge r_{\sigma(m)}$ holds. The definition of CVaR for general random variables is presented in [25].

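In the literature, $r_i$ is defined as the negative margin $r_i = -y_i(f(x_i) + b)$. For a regularization parameter ν satisfying $m\nu \in \mathbb{N}$ and a fixed decision function $g(x) = f(x) + b$, the objective function in (1), minimized over ρ, is expressed as:

$$\min_{\rho \in \mathbb{R}} \left\{ \frac{1}{2}\|f\|_{\mathcal{H}}^2 - \nu\rho + \frac{1}{m}\sum_{i=1}^{m}[\rho + r_i]_+ \right\} = \frac{1}{2}\|f\|_{\mathcal{H}}^2 + \nu \cdot \frac{1}{m\nu}\sum_{i=1}^{m\nu} r_{\sigma(i)}. \tag{3}$$

The proof is presented in Theorem 10 of [25]. Hence, ν-SVM yields a decision function that minimizes the sum of the regularization term and the CVaR of the negative margins at level ν, scaled by ν.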
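The relation between the ν-SVM loss term and CVaR can be checked numerically. The sketch below assumes the loss term has the form $-\nu\rho + \frac{1}{m}\sum_i [\rho + r_i]_+$ with negative margins $r_i$; the margin values are made up for illustration.

```python
def cvar_tail_average(values, nu):
    """Average of the m*nu largest values (the nu-tail); m*nu must be a positive integer."""
    m = len(values)
    k = round(m * nu)
    if k <= 0 or abs(k - m * nu) > 1e-9:
        raise ValueError("m * nu must be a positive integer")
    return sum(sorted(values, reverse=True)[:k]) / k


def nu_svm_loss_term(values, nu, rho):
    """-nu * rho + (1/m) * sum_i [rho + r_i]_+ for negative margins r_i."""
    m = len(values)
    return -nu * rho + sum(max(rho + r, 0.0) for r in values) / m


neg_margins = [-2.0, -1.5, -0.5, 0.3, 1.0]  # hypothetical r_i = -y_i * g(x_i)
nu = 0.4                                    # m * nu = 2

cvar = cvar_tail_average(neg_margins, nu)
# The loss term is convex and piecewise linear in rho, so its minimum is
# attained at one of the kinks rho = -r_i.
best = min(nu_svm_loss_term(neg_margins, nu, -r) for r in neg_margins)
```

Here `best` coincides with `nu * cvar`, i.e., minimizing over the threshold ρ leaves ν times the average of the two largest negative margins.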

In C-SVM [1], the decision function is obtained by solving:

$$\min_{f \in \mathcal{H},\ b \in \mathbb{R}} \ \frac{1}{2}\|f\|_{\mathcal{H}}^2 + C\sum_{i=1}^{m}\big[1 - y_i(f(x_i) + b)\big]_+, \tag{4}$$

in which the hinge loss with the fixed threshold $\rho = 1$ is used. A positive regularization parameter $C > 0$ is used instead of ν. For each training dataset, ν-SVM and C-SVM can be made to provide the same decision function by appropriately tuning ν and C. In this paper, we focus on ν-SVM and its robust variants rather than C-SVM. The parameter ν has the explicit meaning shown above, and this interpretation will be significant when we derive the robustness property of our method.

The hinge loss in (4) is replaced with the so-called ramp loss:

in the robust C-SVM proposed in [10,13,17]. By truncating the hinge loss, the influence of outliers is suppressed, and the estimated classifier is expected to be robust against outliers in the training data.
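The effect of truncation can be seen in a small sketch that compares the hinge loss with a clipped version of it. The clipping level `clip=1.0` is an assumption made for illustration; the exact scaling of the ramp loss differs between references.

```python
def hinge_loss(margin, rho=1.0):
    """Hinge loss [rho - margin]_+ of a margin y * g(x) with threshold rho."""
    return max(rho - margin, 0.0)


def ramp_loss(margin, rho=1.0, clip=1.0):
    """Hinge loss truncated at `clip` (the clipping level is an assumed parameter)."""
    return min(clip, hinge_loss(margin, rho))


# Margins of four hypothetical samples; the last one is a gross outlier.
margins = [2.0, 0.5, -1.0, -100.0]
hinge = [hinge_loss(t) for t in margins]
ramp = [ramp_loss(t) for t in margins]
```

The hinge penalty of the outlier grows linearly with its negative margin, whereas the clipped penalty never exceeds `clip`, so a single extreme sample cannot dominate the objective.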

3. Robust Variants of SVM

3.1. Outlier Indicators for Robust Learning Methods

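Here, we introduce robust (ν, μ)-SVM, which is a robust variant of ν-SVM. To remove the influence of outliers, an outlier indicator, $\eta_i \in [0, 1]$, is assigned to each training sample, where $\eta_i = 0$ is intended to indicate that the sample $(x_i, y_i)$ is an outlier. The same idea is used in ROD [17]. Assume that the ratio of outliers is less than or equal to μ. For ν and μ such that $0 \le \mu < \nu < 1$, robust (ν, μ)-SVM can be formalized using RKHS as follows:

$$\min_{f, b, \rho, \eta} \ \frac{1}{2}\|f\|_{\mathcal{H}}^2 - (\nu - \mu)\rho + \frac{1}{m}\sum_{i=1}^{m}\eta_i\big[\rho - y_i(f(x_i) + b)\big]_+ \quad \text{subject to } f \in \mathcal{H},\ b, \rho \in \mathbb{R},\ \eta \in [0, 1]^m,\ \sum_{i=1}^{m}\eta_i = m(1 - \mu). \tag{5}$$

The optimal solution, $f \in \mathcal{H}$ and $b \in \mathbb{R}$, provides the decision function $g(x) = f(x) + b$ for classification. The optimal ρ is non-negative, the same as with ν-SVM. Influence from samples with large negative margins can be removed by setting $\eta_i$ to zero.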

The representer theorem ensures that the optimal decision function of (5) is represented by (2). Suppose that the decision function of the form (2), threshold ρ and outlier indicator η satisfy the KKT (Karush–Kuhn–Tucker) condition [26] (Chapter 5) of (5). As in the case of the standard ν-SVM, the number of support vectors in $f$ is bounded below by $m(\nu - \mu)$. In addition, the margin error on the training samples with $\eta_i = 1$ is bounded above by $m(\nu - \mu)$; i.e.,

$$\big|\{i : \eta_i = 1,\ y_i g(x_i) < \rho\}\big| \ \le\ m(\nu - \mu) \ \le\ \big|\{i : \alpha_i \neq 0\}\big|$$

holds.

In the following sections, we develop a learning algorithm and investigate its robustness property against outliers. In order to avoid technical difficulties in the theoretical analysis of robust (ν, μ)-SVM, we assume that $m\nu$ and $m\mu$ are positive integers throughout this paper. This is not a severe limitation unless the sample size is extremely small. This assumption ensures that the optimal solution of η in (5) lies in the binary product set $\{0, 1\}^m$.

Now, let us show the equivalence of robust -SVM and CVaR--SVM [16]. Given ν and μ, the optimization problem (5) can be represented as:

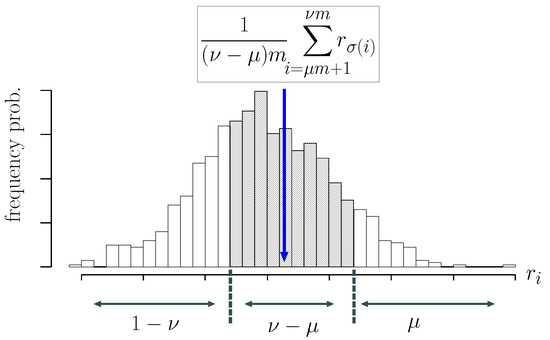

where is the negative margin and is the permutation such that as defined in Section 2. The second term in (6) is the average of the negative margins included in the middle interval presented in Figure 1, and it is expressed by the difference of CVaRs at levels ν and μ. A learning algorithm based on this interpretation is proposed in [16] under the name CVaR--SVM with and .

Figure 1.

Distribution of negative margins $r_i = -y_i g(x_i)$, $i \in [m]$, for a fixed decision function $g(x) = f(x) + b$.
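The middle-interval term can be computed by sorting the negative margins. The following sketch assumes that $m\nu$ and $m\mu$ are integers and that the outlier indicator discards the $m\mu$ samples with the largest negative margins; the margin values are invented for illustration.

```python
def tail_sum(neg_margins, level):
    """(1/m) * sum of the m*level largest negative margins (m*level assumed integer)."""
    m = len(neg_margins)
    k = round(m * level)
    return sum(sorted(neg_margins, reverse=True)[:k]) / m


def outlier_indicator(neg_margins, mu):
    """eta_i = 0 for the m*mu samples with the largest negative margins, 1 otherwise."""
    m = len(neg_margins)
    k_mu = round(m * mu)
    worst = sorted(range(m), key=lambda i: neg_margins[i], reverse=True)[:k_mu]
    eta = [1] * m
    for i in worst:
        eta[i] = 0
    return eta


# Hypothetical negative margins; the first sample is a gross outlier.
neg_margins = [5.0, 0.4, 0.2, -0.1, -0.3, -0.6, -0.8, -1.0, -1.2, -2.0]
nu, mu = 0.4, 0.1

eta = outlier_indicator(neg_margins, mu)
# Difference of the (scaled) tail averages at levels nu and mu.
middle_term = tail_sum(neg_margins, nu) - tail_sum(neg_margins, mu)

# Same quantity computed from the samples kept by the outlier indicator.
kept = sorted((r for r, e in zip(neg_margins, eta) if e == 1), reverse=True)
k = round(len(neg_margins) * nu) - round(len(neg_margins) * mu)
via_indicator = sum(kept[:k]) / len(neg_margins)
```

The outlier with negative margin 5.0 is excluded by `eta`, so it has no effect on `middle_term`, regardless of how large its value is.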

Robust (ν, μ)-SVM is also closely related to the robust outlier detection (ROD) algorithm [17], which is a robust variant of C-SVM. In ROD, the classifier is given by the optimal solution of:

where $\lambda > 0$ is a regularization parameter and η is an outlier indicator. The linear kernel is used in the original ROD [17]. To obtain a classifier, ROD solves a semidefinite relaxation of (7). In [18], it is proven that a KKT point of (7) with the learning parameter μ corresponds to that of robust (ν, μ)-SVM for some parameter ν.

3.2. Learning Algorithm

It is hard to obtain a global optimal solution of (5), since the objective function is non-convex. The difference of convex functions algorithm (DCA) [27] and concave-convex programming (CCCP) [28] are popular methods to efficiently obtain practical numerical solutions of non-convex optimization problems. Indeed, DCA is used in robust C-SVM using the ramp loss [12] and ER-SVM [18].

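Let us show an expression of the objective function in (5) as a difference of convex functions. The set of feasible outlier indicators is denoted as:

$$E_\mu = \Big\{\eta = (\eta_1, \ldots, \eta_m) \in [0, 1]^m : \sum_{i=1}^{m}\eta_i = m(1 - \mu)\Big\}.$$

For the negative margin $r_i = -y_i(f(x_i) + b)$, the objective function in robust (ν, μ)-SVM is then represented as:

$$\frac{1}{2}\|f\|_{\mathcal{H}}^2 + \min_{\rho \in \mathbb{R}}\Big\{-\nu\rho + \frac{1}{m}\sum_{i=1}^{m}[\rho + r_i]_+\Big\} - \max_{\eta \in E_\mu}\frac{1}{m}\sum_{i=1}^{m}(1 - \eta_i)\, r_i, \tag{8}$$

which is derived from (3) and (6).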

We derive the DCA using the decomposition (8). The optimization algorithm is a simplified variant of the learning algorithm proposed in [18]. The representer theorem ensures that the optimal decision function is represented by $g(x) = \sum_{i=1}^{m}\alpha_i k(x, x_i) + b$ when the kernel function of the RKHS $\mathcal{H}$ is $k$. From (8), the objective function of the robust (ν, μ)-SVM is expressed as:

using the convex functions and defined as:

where α is the column vector and is the Gram matrix defined by . Let be the solution obtained after t iterations of the DCA. Next, the solution is updated to the optimal solution of:

where with is an element of the subgradient of at . Let be the convex hull of the set S, and let denote component-wise multiplication of two vectors a and b. Accordingly, the subgradient of can be expressed as:

where denotes an m-dimensional vector of all ones. A parameter that meets the condition in the above subgradient is obtained by sorting the negative margin , at .

Let us describe the learning algorithm for robust -SVM. We propose a modification of DCA to guarantee the robustness of the local optimal solution. The DCA for robust -SVM based on Expression (9) is used to obtain a good numerical solution of the outlier indicator. Let be the objective function of robust -SVM:

The learning algorithm is presented in Algorithm 1. Given training samples , the learning algorithm outputs the decision function . The dual problem of (10) is presented as (11) in Algorithm 1.

The numerical solution given by DCA is modified in Steps 7 and 8. Step 7 of Algorithm 1 is equivalent to solving (5) with the additional equality constraint that fixes η to the outlier indicator obtained by DCA. This is almost the same as the standard ν-SVM using the training samples with $\eta_i = 1$ and the regularization parameter $(\nu - \mu)/(1 - \mu)$ instead of ν. Hence, the optimal solution is efficiently obtained. In Step 8, the problem is reduced to the optimization of a one-dimensional piecewise linear function of b. This fact is shown in Appendix C, where we prove the robustness property of the bias term stated in Section 4.2. Hence, finding a local optimal solution of the problem in Step 8 is tractable.

Throughout the learning algorithm, the objective value monotonically decreases. Indeed, the DCA has the monotone decreasing property of the objective value [27]. Let be the numerical solution obtained at the last iteration of DCA. Then, we have:

It is straightforward to guarantee the monotone decrease of the objective value even if is a local optimal solution.

Algorithm 1. Learning Algorithm of Robust (ν, μ)-SVM

Input: Training dataset , Gram matrix defined as , and training labels . The matrix is defined as . Let be the initial decision function.


3.3. Dual Problem and Its Interpretation

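The partial dual problem of (5) with a fixed outlier indicator η has an intuitive geometric picture. Some variants of ν-SVM can be geometrically interpreted on the basis of the dual form [29,30,31]. Substituting (2) into the objective function in (5), we obtain the Lagrangian of problem (5) with a fixed feasible η as:

$$L_\eta(\alpha, b, \rho, \xi; \beta, \gamma) = \frac{1}{2}\sum_{i,j=1}^{m}\alpha_i\alpha_j k(x_i, x_j) - (\nu - \mu)\rho + \frac{1}{m}\sum_{i=1}^{m}\eta_i\xi_i + \sum_{i=1}^{m}\beta_i\Big(\rho - \xi_i - y_i\Big(\sum_{j=1}^{m}k(x_i, x_j)\alpha_j + b\Big)\Big) - \sum_{i=1}^{m}\gamma_i\xi_i,$$

where non-negative slack variables $\xi_i$, $i \in [m]$, are introduced to represent the hinge loss. Here, the parameters $\beta_i$ and $\gamma_i$ for $i \in [m]$ are non-negative Lagrange multipliers. For a fixed η, the Lagrangian is convex in the parameters α, b, ρ and ξ and concave in β and γ. Hence, the min-max theorem [32] (Proposition 6.4.3) yields:

$$\inf_{\alpha, b, \rho, \xi}\ \sup_{\beta, \gamma \ge 0} L_\eta = \sup_{\beta, \gamma \ge 0}\ \inf_{\alpha, b, \rho, \xi} L_\eta = \max_{\beta}\Big\{-\frac{1}{2}\sum_{i,j=1}^{m}\beta_i\beta_j y_i y_j k(x_i, x_j) : \sum_{i=1}^{m}\beta_i = \nu - \mu,\ \sum_{i=1}^{m}y_i\beta_i = 0,\ 0 \le \beta_i \le \frac{\eta_i}{m}\Big\}.$$

The last equality comes from the optimality condition with respect to the variables α, b, ρ and ξ. Given the optimal solution β of the dual problem, the optimal coefficient $\alpha_i$ in the primal problem is given by $\alpha_i = y_i\beta_i$, and the bias term b is obtained from the complementary slackness of the samples such that $\eta_i = 1$ and $0 < \beta_i < 1/m$.

Let us give a geometric interpretation of the above expression. For the training data $D = \{(x_i, y_i) : i \in [m]\}$, the convex sets, $U_\eta^{+}$ and $U_\eta^{-}$, are defined as the reduced convex hulls of the data points for each label, i.e.,

$$U_\eta^{\pm} = \Big\{\sum_{i : y_i = \pm 1} c_i k(\cdot, x_i) \in \mathcal{H} : \sum_{i : y_i = \pm 1} c_i = 1,\ 0 \le c_i \le \frac{2\eta_i}{m(\nu - \mu)}\Big\}.$$

The coefficients $c_i$ in $U_\eta^{\pm}$ are bounded above by a non-negative real number. Hence, the reduced convex hull is a subset of the convex hull of the data points in the RKHS $\mathcal{H}$. Let $V_\eta$ be the Minkowski difference of two subsets,

$$V_\eta = U_\eta^{+} \ominus U_\eta^{-},$$

where $A \ominus B$ of subsets A and B denotes $\{a - b : a \in A,\ b \in B\}$. Substituting $c_i = 2\beta_i/(\nu - \mu)$ into the dual problem, we obtain:

$$\inf_{\alpha, b, \rho, \xi}\ \sup_{\beta, \gamma \ge 0} L_\eta = -\frac{(\nu - \mu)^2}{8}\min\big\{\|f\|_{\mathcal{H}}^2 : f \in V_\eta\big\}$$

for each feasible η. As a result, the optimal value of (5) is given as $-\frac{(\nu - \mu)^2}{8}\,\mathrm{opt}(\nu, \mu; D)$, where:

$$\mathrm{opt}(\nu, \mu; D) = \max_{\eta}\ \min_{f \in V_\eta}\|f\|_{\mathcal{H}}^2, \tag{12}$$

and the maximum is taken over the feasible outlier indicators. Therefore, the dual form of robust (ν, μ)-SVM can be expressed as the maximization of the minimum distance between two reduced convex hulls, $U_\eta^{+}$ and $U_\eta^{-}$. The estimated decision function in robust (ν, μ)-SVM is provided by the optimal solution of (12) up to a scaling factor depending on $\nu - \mu$. Moreover, the optimal value is proportional to the squared RKHS norm of $f$ in the decision function $g(x) = f(x) + b$.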

4. Breakdown Point of Robust SVMs

4.1. Finite-Sample Breakdown Point

Let us describe how to evaluate the robustness of learning algorithms. There are a number of robustness measures for evaluating the stability of estimators as discussed later in Section 4.3. In this paper, we use the finite-sample breakdown point, and it will be referred to as the breakdown point for short. The breakdown point quantifies the degree of impact that the outliers have on the estimators when the contamination ratio is not necessarily infinitesimal [33]. In this section, we present an exact evaluation of the breakdown point of robust SVMs.

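The breakdown point indicates the largest amount of contamination such that the estimator still gives information about the non-contaminated data [20] (Chapter 3.2). More precisely, for an estimator $\theta_D$ based on a dataset D of size m that takes a value in a normed parameter space, the finite-sample breakdown point is defined as:

$$\varepsilon^{*} = \max_{\kappa = 0, 1, \ldots, m}\Big\{\frac{\kappa}{m} : \theta_{D'} \text{ is uniformly bounded for } D' \in \mathcal{D}_\kappa\Big\},$$

where $\mathcal{D}_\kappa$ is the family of datasets of size m including at least $m - \kappa$ elements in common with the non-contaminated dataset D, i.e.,

$$\mathcal{D}_\kappa = \big\{D' : |D'| = m,\ |D' \cap D| \ge m - \kappa\big\}.$$

For simplicity, the dependency of $\mathcal{D}_\kappa$ on the dataset D is dropped. The condition of the breakdown point $\varepsilon^{*}$ can be rephrased as:

$$\sup_{D' \in \mathcal{D}_\kappa}\|\theta_{D'}\| < \infty,$$

where $\|\cdot\|$ is the norm on the parameter space. In most cases of interest, $\varepsilon^{*}$ does not depend on the dataset D. For example, the breakdown point of the one-dimensional median estimator is $\varepsilon^{*} = \lfloor (m - 1)/2 \rfloor / m$.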
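The median example can be checked with a small experiment. The sketch below assumes the usual sample median (the average of the two middle values for even m) and replaces some points with an arbitrarily large value; the helper names and the data are hypothetical.

```python
import statistics


def median_breakdown_point(m):
    """Finite-sample breakdown point floor((m - 1) / 2) / m of the sample median."""
    return ((m - 1) // 2) / m


def contaminated_median(clean, n_outliers, magnitude):
    """Replace the n_outliers largest points with `magnitude` and return the median."""
    kept = sorted(clean)[: len(clean) - n_outliers]
    return statistics.median(kept + [magnitude] * n_outliers)


clean = list(range(1, 10))  # m = 9 clean observations
eps = median_breakdown_point(len(clean))

bounded = contaminated_median(clean, 4, 1e12)     # 4 outliers: median stays in the clean range
broken = contaminated_median(clean, 5, 1e12)      # 5 outliers: median follows the outliers
```

With four corrupted points out of nine the median remains inside the range of the clean data, while a fifth corrupted point drags it to the outlier value, in agreement with a breakdown point of 4/9.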

4.2. Breakdown Point of Robust -SVM

The parameters of robust (ν, μ)-SVM have a clear meaning, unlike those of robust C-SVM and ROD. In fact, $m(\nu - \mu)$ is a lower bound of the number of support vectors and an upper bound of the margin error, as mentioned in Section 3.1. In addition, we show that the parameter μ is exactly equal to the breakdown point of the decision function under a mild assumption. Such an intuitive interpretation will be of great help in tuning the parameters in the learning algorithm. Section 5 describes how to tune the learning parameters.

To start with, let us derive a lower bound of the breakdown point for the optimal value of Problem (5) that is expressed as $\mathrm{opt}(\nu, \mu; D)$ up to a constant factor. As shown in Section 3.3, the boundedness of $\mathrm{opt}(\nu, \mu; D)$ is equivalent to the boundedness of the RKHS norm of $f$ in the estimated decision function $g(x) = f(x) + b$. Given a labeled dataset $D = \{(x_i, y_i) : i \in [m]\}$, let us define the label ratio r as:

$$r = \frac{1}{m}\min\big\{|\{i : y_i = +1\}|,\ |\{i : y_i = -1\}|\big\}.$$

In what follows, we assume $r > 0$ to avoid technical difficulty.

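Theorem 1.

Let D be a labeled dataset of size m with a label ratio $r > 0$. For the parameters ν and μ such that $0 < \mu < \nu < 1$ and $m\nu, m\mu \in \mathbb{N}$, we assume $\mu < r/2$. Then, the following two conditions are equivalent:

- (i) The inequality $\nu - \mu \le 2(r - \mu)$ (14) holds.

- (ii) Uniform boundedness, $\sup\{\mathrm{opt}(\nu, \mu; D') : D' \in \mathcal{D}_{m\mu}\} < \infty$, holds, where $\mathcal{D}_{m\mu}$ is the family of contaminated datasets defined from D.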

The proof of the above theorem is given in Appendix A. The inequality $\mu < r/2$ has an intuitive interpretation. If $\mu < r/2$ is violated, the majority of, say, positive labeled samples in the non-contaminated training dataset can be replaced with outliers. In such a situation, the statistical features in the original dataset will not be retained.

Remark 1.

The condition (14) has an intuitive interpretation. Assume that . After removing some training samples due to the optimal outlier indicator η, there exist at least positive training samples for any . In the standard ν-SVM, the condition guarantees the boundedness of the optimal value, , for a non-contaminated dataset D [29]. For the robust -SVM, ν and r are replaced with and , respectively. As a result, the inequality (14) is obtained as a sufficient condition of for each . This implies the pointwise boundedness of . However, this interpretation does not prove the uniform boundedness of for any . In the proof in Appendix A, we prove the uniform boundedness over .

The inequality (14) indicates the trade-off between the ratio of outliers μ and the ratio of support vectors $\nu - \mu$. This result is reasonable. The number of support vectors corresponds to the dimension of the statistical model. When the ratio of outliers is large, a simple statistical model should be used to obtain robust estimators.

When the contamination ratio in the training dataset is greater than the parameter μ of robust (ν, μ)-SVM, the estimated decision function is not necessarily bounded.

Theorem 2.

Suppose that ν and μ are rational numbers such that and . Then, there exists a dataset D of size m with the label ratio r such that and:

hold, where is defined from D.

The proof is given in Appendix B. Theorems 1 and 2 provide lower and upper bounds of the breakdown point, respectively. Hence, the breakdown point of the function part $f$ in the estimated decision function $g(x) = f(x) + b$ is exactly equal to μ, when the learning parameters of robust (ν, μ)-SVM satisfy $\mu < r/2$ and $\nu - \mu \le 2(r - \mu)$. Otherwise, the breakdown point of f is strictly less than μ. Note that the results in Theorems 1 and 2 hold for the global optimal solution.

Remark 2.

Let us consider the robustness of the local optimal solution obtained by robust -SVM. Let be the global optimal solution of robust -SVM. For the outlier indicator in Algorithm 1, we have:

where the last equality is guaranteed by the result in Section 3.3. Therefore, is less sensitive to contamination than the RKHS element of the global optimal solution.

Now, we will show the robustness of the bias term b. Let be the estimated bias parameter obtained by Algorithm 1. We will derive a lower bound of the breakdown point of the bias term. Then, we will show that the breakdown point of robust -SVM with a bounded kernel is given by a simple formula.

Theorem 3.

Let D be an arbitrary dataset of size m with a label ratio r that is greater than zero. Suppose that ν and μ satisfy , , and . For a non-negative integer ℓ, we assume:

Then, uniform boundedness,

holds, where is defined from D.

The proof is given in Appendix C, in which a detailed analysis is needed especially when the kernel function is unbounded. The proof shows that the uniform boundedness holds even if is a local optimal solution in Algorithm 1. Note that the inequality (15) is a sufficient condition of Inequality (14). Theorem 3 guarantees that the breakdown point of the estimated decision function is not less than , when (15) holds.

The robustness of for a bounded kernel is considered in the theorem below.

Theorem 4.

Let D be an arbitrary dataset of size m with a label ratio r that is greater than zero. For parameters such that and , suppose that and hold. In addition, assume that the kernel function of the RKHS is bounded, i.e., . Then, uniform boundedness,

holds, where is defined from D.

The proof is given in Appendix D. Compared with Theorem 3 in which arbitrary kernel functions are treated, Theorem 4 ensures that a tighter lower bound of the breakdown point is obtained for bounded kernels. The above result agrees with those of other studies. The authors of [14] proved that bounded kernels produce robust estimators for regression problems in the sense of bounded response, i.e., robustness against a single outlier.

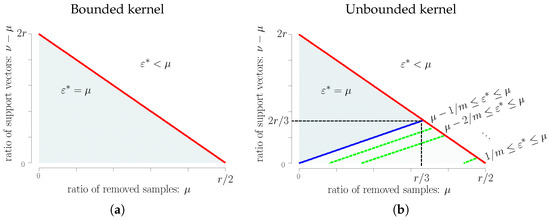

Combining Theorems 1–4, we find that the breakdown point of robust -SVM with is given as follows.

- Bounded kernel: For , the breakdown point of is less than μ. For , the breakdown point of is equal to μ.

- Unbounded kernel: For , the breakdown point of is less than μ. For , the breakdown point of is equal to μ. When , the breakdown point of is equal to μ, and the breakdown point of is bounded from below by and from above by μ, where depends on ν and μ, as shown in Theorem 3.

Figure 2 shows the breakdown point of robust -SVM. The line is critical. For unbounded kernels, we only obtain a bound of the breakdown point.

Figure 2.

(a) breakdown point of given by robust -SVM with bounded kernel; (b) breakdown point of given by robust -SVM with unbounded kernel.

4.3. Breakdown Point Revisited

Let us reconsider the breakdown point of learning methods.

4.3.1. Effective Case of Breakdown Point

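Suppose that the function $f_D \in \mathcal{H}$ is obtained by a learning method using the dataset D. Learning methods are categorized into two types according to the norm of $f_D$. The first type consists of the learning methods satisfying $\sup_D \|f_D\|_{\mathcal{H}} = \infty$, and the second type consists of those such that $\sup_D \|f_D\|_{\mathcal{H}} < \infty$, where the supremum is taken over arbitrary datasets of size m, i.e., $D \in (\mathcal{X} \times \{+1, -1\})^m$.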

For learning methods of the first type, the breakdown point indicates the number of outliers such that the estimator remains in a uniformly bounded region. This is meaningful information about the robustness of the learning method. In this case, a method with a larger breakdown point is regarded as more robust. As shown in Theorems 1 and 2, the robust (ν, μ)-SVM is a learning method of the first type.

The second type implies that the hypothesis space of the learning method is bounded regardless of datasets. The C-SVM, robust C-SVM and ROD belong to learning methods of the second type. Indeed, given a labeled dataset $D = \{(x_i, y_i) : i \in [m]\}$, the non-negative property of the hinge loss in C-SVM leads to:

$$\frac{1}{2}\|f_D\|_{\mathcal{H}}^2 \ \le\ \frac{1}{2}\|f_D\|_{\mathcal{H}}^2 + C\sum_{i=1}^{m}\big[1 - y_i(f_D(x_i) + b_D)\big]_+ \ \le\ Cm,$$

where $g_D(x) = f_D(x) + b_D$ is the optimal decision function and the last inequality comes from the fact that the objective value at $f = 0$ and $b = 0$ is greater than or equal to the optimal value. Likewise, one can prove that robust C-SVM and ROD have the same property. In this case, the naive definition of the breakdown point shown in Section 4.1 is not adequate, because the boundary effect of the hypothesis set is not taken into account. In the general definition of the breakdown point, the boundary of the hypothesis space is taken into account [20] (Chapter 3.2.5).

In this paper, we focus on the breakdown point of learning algorithms of the first type. Then, the analysis based on the breakdown point suggests proper choices of hyperparameters as shown in succeeding sections.

4.3.2. Other Robust Estimators

Robust statistical inference has been studied for a long time in mathematical statistics, and a number of robust estimators have been proposed for many kinds of statistical problems [20,34,35]. In mathematical analysis, one needs to quantify the influence of samples on estimators. Here, the influence function, change of variance and breakdown point are often used as measures of robustness. In the machine learning literature, these measures have been used to analyze the theoretical properties of SVM and its robust variants. In [36], the robustness of a learning algorithm using a convex loss function was investigated on the basis of an influence function defined over an RKHS. When the influence function is uniformly bounded on the RKHS, the learning algorithm is regarded as robust against outliers. It was proven that the least squares loss provides a robust learning algorithm for classification problems in this sense [36].

From the standpoint of the breakdown point, however, convex loss functions do not provide robust estimators, as shown in [20] (Chapter 5.16). Yu et al. [14] proved that the breakdown point of a learning algorithm using clipped loss is greater than or equal to $1/m$ in regression problems. In Section 4.2, we show a detailed analysis of the breakdown point for robust (ν, μ)-SVM.

5. Admissible Region for Learning Parameters

The theoretical analysis in Section 4.2 suggests that robust (ν, μ)-SVM satisfying $\nu - \mu \le 2(r - \mu)$ is a good choice for obtaining a robust classifier, especially when a bounded kernel is used. Here, r is the label ratio of the non-contaminated original data D, and usually, it is unknown in real-world data analysis. Thus, we need to estimate r from the contaminated dataset $D'$.

If an upper bound of the outlier ratio is known to be , we have , where is defined from D. Let be the label ratio of . Then, the label ratio of the original dataset D should satisfy , where and . Let and be:

Robust -SVM with reaches the breakdown point μ for any non-contaminated dataset D such that for given . On the other hand, the parameters on the outside of are not necessary. Indeed, for any non-contaminated data D such that for given , the parameters satisfying do not yield a learning method that reaches the breakdown point μ.

When the upper bound is unknown, we set . As shown in the comments after Theorem 1, the outlier ratio greater than can totally violate the statistical features of the original dataset. In such a case, we need to reconsider the observation process. For , we obtain , where and . Hence, in the worst case, the admissible set of learning parameters ν and μ is:

Given contaminated training data , for any D of size m with a label ratio , such that with , robust -SVM with provides a classifier with the breakdown point μ. A parameter on the outside of is not necessary, for the same reasons as for .

The admissible region of is useful when the parameters are determined by a grid search based on cross-validation. On the other hand, C of robust C-SVM and λ in ROD can take a wide range of positive real numbers. Hence, unlike robust (ν, μ)-SVM, these algorithms need heuristics to determine the region of the grid search for the learning parameters.

The numerical experiments presented in Section 6 applied a grid search to the region .
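The restriction of a grid search to an admissible region can be sketched as follows. This is only an illustration: the function `admissible_grid`, the candidate values and, in particular, the predicate `placeholder` are hypothetical. The placeholder is not the admissible region derived in this section; the actual inequalities have to be supplied by the user as `is_admissible`.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple


def admissible_grid(
    nus: Sequence[float],
    mus: Sequence[float],
    is_admissible: Callable[[float, float], bool],
) -> List[Tuple[float, float]]:
    """Return the (nu, mu) pairs of the full grid that satisfy the predicate."""
    return [(nu, mu) for nu, mu in product(nus, mus) if is_admissible(nu, mu)]


# Hypothetical candidate values for the two learning parameters.
nus = [0.1 * k for k in range(1, 10)]
mus = [0.05 * k for k in range(0, 6)]


def placeholder(nu: float, mu: float) -> bool:
    """Placeholder constraint for illustration only (NOT the paper's region)."""
    return mu < nu


grid = admissible_grid(nus, mus, placeholder)
print(len(grid), "of", len(nus) * len(mus), "grid points kept")
```

Only the pairs returned by `admissible_grid` would then be passed to cross-validation, so the number of optimization problems to solve shrinks with the size of the region.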

6. Numerical Experiments

We conducted numerical experiments on synthetic and benchmark datasets to compare a number of SVMs. Algorithm 1 was used for robust (ν, μ)-SVM, and DCA in [12] was used for robust C-SVM with the ramp loss. We used CPLEX to solve the convex quadratic problems.

6.1. DCA versus Global Optimization Methods

As has been shown in many studies including [37], DCA quite often gives global optimal solutions to a wide variety of non-convex optimization problems. We examined how often DCA produces global optimal solutions to robust (ν, μ)-SVM with the 0-1 valued outlier indicator. Here, the numerical solution of DCA in robust (ν, μ)-SVM denotes the output of Step 5 in Algorithm 1. In these numerical experiments, the optimization problem was formulated as a mixed integer programming (MIP) problem, and the CPLEX MIP solver was used to compute the global optimal solution of robust (ν, μ)-SVM based on a relatively small dataset. The numerical solution given by DCA was compared with the global optimal solution.

In binary classification problems, positive (resp. negative) samples were generated from a multivariate normal distribution with mean (resp. ) and a variance-covariance matrix , where I is the identity matrix and c is a positive constant. Each class had 20 samples. For such a small dataset, the global optimal solution was obtained by the CPLEX MIP solver. Outliers were added by flipping positive labels randomly, and the outlier ratio was . The DCA with the multi-start method was used to solve the robust -SVM using the linear kernel. In the multi-start method, a number of initial points were randomly generated, and for each initial point, a numerical solution was obtained by DCA. Among these numerical solutions, the point that attained the smallest objective value was chosen as the output of the multi-start method. was the objective value at the numerical solution of DCA, and was the global optimal value. Note that the optimal value of the problem in robust -SVM is non-positive, i.e., . In addition, one can find that any numerical solution obtained by DCA satisfies .
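The multi-start strategy described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: `local_descent` is a toy one-dimensional local search standing in for DCA, `toy_objective` is a hypothetical non-convex function with two local minima, and all names are introduced here for illustration only.

```python
import random
from typing import Callable, Tuple


def local_descent(f: Callable[[float], float], x0: float,
                  step: float = 0.5, tol: float = 1e-6) -> float:
    """Toy derivative-free local search in one dimension (a stand-in for DCA)."""
    x = x0
    while step > tol:
        # Try both neighbours; move if the better one improves f, else shrink the step.
        candidate = min((x - step, x + step), key=f)
        if f(candidate) < f(x):
            x = candidate
        else:
            step *= 0.5
    return x


def multi_start(f: Callable[[float], float], local_solver, n_starts: int,
                low: float, high: float, seed: int = 0) -> Tuple[float, float]:
    """Run the local solver from random initial points and keep the best solution."""
    rng = random.Random(seed)
    solutions = [local_solver(f, rng.uniform(low, high)) for _ in range(n_starts)]
    best = min(solutions, key=f)
    return best, f(best)


def toy_objective(x: float) -> float:
    """Hypothetical non-convex objective with two local minima near x = -1 and x = 1."""
    return (x * x - 1.0) ** 2 + 0.3 * x


x_single, f_single = multi_start(toy_objective, local_descent, 1, -2.0, 2.0)
x_multi, f_multi = multi_start(toy_objective, local_descent, 10, -2.0, 2.0)
print(f_single, f_multi)
```

Because both calls share the same seed, the first initial point of the ten-start run coincides with the single-start run, so the multi-start objective value can never be worse in this sketch.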

In the numerical experiments, 100 training datasets such that were randomly generated, and was computed for each dataset. Table 1 shows the number of times that holds out of 100 trials. When the achievable lowest test error, i.e., the Bayes error, was large, the DCA tended to yield a local optimal solution that was not globally optimal. When the Bayes error was small, DCA produced approximately global optimal solutions in almost all trials. Even when DCA using a single initial point failed to find the global optimal solution, the multi-start method with five or 10 initial points greatly improved the quality of the numerical solutions. In numerical experiments, DCA was more than 50 times more computationally efficient than the MIP solver.

Table 1.

Number of times that the numerical solution of the difference of convex functions algorithm (DCA) satisfies out of 100 trials. The number of initial points used in the multi-start method is denoted as #initial points. The “Dim.” and “Cov.” columns denote the dimension d and the covariance matrix of the input vectors in each label. The column labeled “Err.” shows the Bayes error of each problem setting.

6.2. Computational Cost

We conducted numerical experiments to compare the computational cost of robust (ν, μ)-SVM with that of robust C-SVM. Both learning algorithms employed the DCA. The numerical experiments were conducted on AMD Opteron 6176 processors (2.3 GHz) with 48 cores, running CentOS Linux Release 6.4. We used three benchmark datasets, Sonar, BreastCancer and spam, which were also used in the experiments in Section 6.5. From each dataset, m training samples were randomly chosen, and each dataset was contaminated by outliers. The outlier ratio was , and outliers were added by flipping the labels randomly. Robust (ν, μ)-SVM and robust C-SVM with the linear kernel were used to obtain classifiers from the contaminated datasets. This process was repeated 20 times for each dataset. Table 2 presents the average computation time and average ratio of support vectors (SV ratio) together with standard deviations. A support vector was numerically identified as a data point having the coefficient such that is greater than . Although the SV ratio is bounded below by , the bound was not necessarily tight. A similar tendency is often observed in ν-SVM. In terms of the computation time, the two learning algorithms were not significantly different except in the case of robust C-SVM with a small C that induces a strong regularization.

Table 2.

Computation time (Time) and ratio of support vectors (SV Ratio) of robust (ν, μ)-SVM and robust C-SVM with standard deviations.

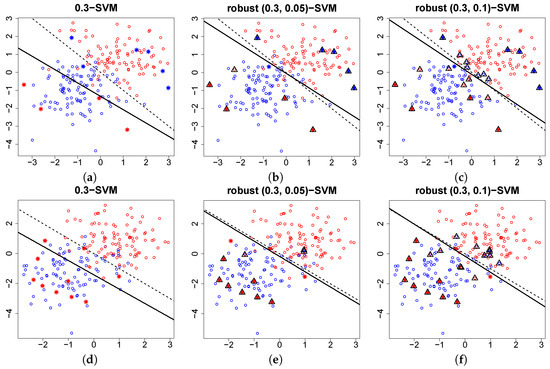
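The numerical identification of support vectors in Section 6.2 amounts to thresholding the coefficients of the estimated classifier. The sketch below assumes that the absolute value of each coefficient is compared with a small tolerance; the function name `sv_ratio`, the tolerance value and the example coefficients are hypothetical and are not taken from the experiments.

```python
from typing import Sequence


def sv_ratio(alpha: Sequence[float], tol: float) -> float:
    """Fraction of samples whose coefficient exceeds tol in absolute value."""
    if len(alpha) == 0:
        raise ValueError("alpha must contain at least one coefficient")
    n_support = sum(1 for a in alpha if abs(a) > tol)
    return n_support / len(alpha)


# Hypothetical coefficients: two of the five samples count as support vectors.
example_alpha = [0.0, 1e-12, 0.2, 0.05, 0.0]
print(sv_ratio(example_alpha, tol=1e-8))
```

A tolerance is needed because numerical solvers rarely return exact zeros, so the reported SV ratio depends mildly on this choice.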

6.3. Outlier Detection

Robust (ν, μ)-SVM uses an outlier indicator to suppress the influence of outliers. Figure 3 shows that the outlier indicator in the robust (ν, μ)-SVM using the linear kernel is able to detect outliers in a synthetic setting. Similar results have been reported for learning methods using outlier indicators such as ROD and ER-SVM. Systematic experiments using a recall-precision criterion were presented in [17,19].

Figure 3.

Plot of contaminated dataset of size . The outlier ratio is , and the asterisks (∗) denote the outliers. In the panels of the upper (resp. lower) row, outliers are added by flipping labels (resp. flipping positive labels) randomly. The dashed line is the true decision boundary, and the solid line is the decision boundary estimated using ν-SVM with in (a,d); robust (ν, μ)-SVM with in (b,e); and in (c,f). The triangles denote the samples on which is assigned.
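A recall-precision evaluation of outlier detection, as mentioned in this subsection, can be computed from two index sets. The sketch below is a generic illustration and not the protocol of [17,19]: `flagged` is assumed to hold the indices that the outlier indicator marks as outliers, `true_outliers` the indices that were actually contaminated, and returning 0.0 for an empty set is a convention chosen here.

```python
from typing import Set, Tuple


def precision_recall(flagged: Set[int], true_outliers: Set[int]) -> Tuple[float, float]:
    """Precision and recall of the flagged indices against the true outliers."""
    true_positives = len(flagged & true_outliers)
    # Convention chosen for this sketch: an empty denominator yields 0.0.
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(true_outliers) if true_outliers else 0.0
    return precision, recall


# Hypothetical example: three samples flagged, four samples truly contaminated.
precision, recall = precision_recall({1, 4, 7}, {1, 7, 9, 12})
print(precision, recall)
```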

6.4. Breakdown Point

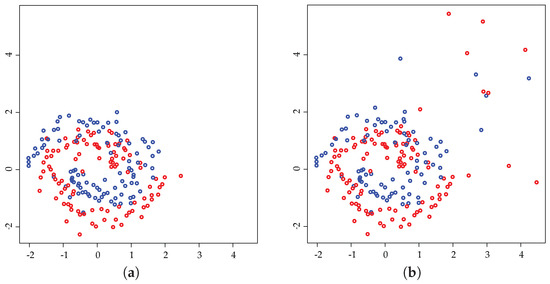

We investigated the validity of Inequality (14) in Theorem 1. In the numerical experiments, the original data D were generated using mlbench.spirals in the mlbench library of the R language [38]. Given an outlier ratio μ, positive samples of size were randomly chosen from D, and they were replaced with randomly-generated outliers to obtain a contaminated dataset . The original data D and an example of the contaminated data are shown in Figure 4. The decision function was estimated from by using robust (ν, μ)-SVM. Here, the true outlier ratio μ was used as the parameter of the learning algorithm. The norms of f and b were then calculated. The above process was repeated 30 times for each pair of parameters , and the maximum values of and were computed.

Figure 4.

(a) original data D; (b) contaminated data . In this example, the sample size is , and the outlier ratio is .

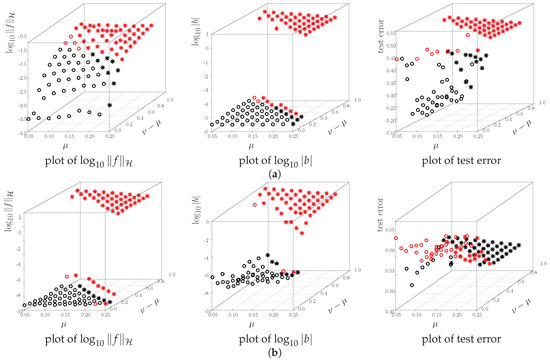

Figure 5 shows the results of the numerical experiments. The maximum norm of the estimated decision function is plotted for the parameter on the same axis as in Figure 2. The top (bottom) panels show the results for a Gaussian (linear) kernel. The left and middle columns show the maximum norm of f and b, respectively. The maximum test errors are presented in the right column. In all panels, the red points denote the top 50 percent of values, and the asterisks (∗) denote the points that violate the inequality . In this example, the numerical results agree with the theoretical analysis in Section 4; i.e., the norm becomes large when the inequality is violated. Accordingly, the test error gets close to , meaning that the classifier provides no information for classification. Even when the unbounded linear kernel is used, robustness is confirmed for the parameters in the left lower region in the right panel of Figure 2.

Figure 5.

Plots of maximum norms and worst-case test errors. The top (bottom) panels show the results for a Gaussian (linear) kernel. Red points denote the top 50 percent of values; the asterisks (∗) are points that violate the inequality . (a) Gaussian kernel; (b) linear kernel.

In the bottom right panel, the test error gets large when the inequality holds. This result comes from the problem setup. Even with non-contaminated data, the test error of the standard ν-SVM is approximately , because the linear kernel works poorly for spiral data. Thus, the worst-case test error under the target distribution can go beyond . For the parameter at which (14) is violated, the test error is always close to . Thus, a learning method with such parameters does not provide any useful information for classification.

6.5. Prediction Accuracy

As shown in Section 5, the theoretical analysis of the breakdown point yields the admissible region, such as , for learning parameters in robust (ν, μ)-SVM. Learning parameters outside the admissible region produce an unstable learning algorithm. Hence, one can reduce the computational cost of tuning the learning parameters by ignoring parameters outside the admissible region. In this section, we verify the usefulness of the admissible region.

We compared the generalization ability of robust (ν, μ)-SVM with that of ν-SVM and robust C-SVM using the ramp loss. In robust (ν, μ)-SVM, a grid search of the region was used to choose the learning parameters, ν and μ.

The datasets are presented in Table 3. The datasets are from the mlbench and kernlab libraries of the R language [38]. The number of positive samples in these datasets is less than or equal to the number of negative samples. Before running the learning algorithms, we standardized each input variable to be mean zero and standard deviation one.
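The standardization step can be sketched as below. The code assumes that the population standard deviation is used and that a constant column is mapped to zeros; neither detail is specified in the text, and the function name `standardize_columns` is introduced here for illustration only.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def standardize_columns(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Rescale each input variable (column) to mean zero and standard deviation one."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) for col in columns]  # assumption: population standard deviation
    return [
        # A constant column has zero spread; map it to 0.0 instead of dividing by zero.
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, means, stds)]
        for row in rows
    ]


# Hypothetical input with two variables on very different scales.
data = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
standardized = standardize_columns(data)
print(standardized)
```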

Table 3.

Test error and standard deviation of robust (ν, μ)-SVM, robust C-SVM and ν-SVM. The dimension of the input vector, number of training samples, number of test samples and label ratio of all samples with no outliers are shown for each dataset. Linear and Gaussian kernels were used to build the classifier in each method. The outlier ratio in the training data ranged from 0% to 15%, and the test error was evaluated on the non-contaminated test data. The asterisks (*) mark the best result for a fixed kernel function in each dataset, and the double asterisks (**) mean that the corresponding method is significantly better than the second best method at the 5% level under a one-sided t-test. The learning parameters were determined by five-fold cross-validation on the contaminated training data.

We randomly split the dataset into training and test sets. To evaluate the robustness, the training data were contaminated by outliers. More precisely, we randomly chose positive labeled samples in the training data and changed their labels to negative; i.e., we added outliers by flipping the labels. After that, robust (ν, μ)-SVM, robust C-SVM using the ramp loss and the standard ν-SVM were used to obtain classifiers from the contaminated training dataset. The prediction accuracy of each classifier was evaluated over test data that had no outliers. Linear and Gaussian kernels were employed for each learning algorithm. The learning parameters, such as and C, were determined by conducting a grid search based on five-fold cross-validation over the training data. For robust (ν, μ)-SVM, the parameter was selected from the admissible region in (16). For standard ν-SVM, the candidate of the regularization parameter ν was selected from the interval , where is the label ratio of the contaminated training data. For robust C-SVM, the regularization parameter C was selected from the interval . In the grid search of the parameters, 24 or 25 candidates were examined for each learning method. Thus, we needed to solve convex or non-convex optimization problems more than times in order to obtain a classifier. The above process was repeated 30 times, and the average test error was calculated.
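The contamination step described above can be sketched as follows. The code assumes labels coded as +1 and -1 and an outlier ratio measured relative to the whole training set; both are assumptions made for this illustration, and the function name `flip_positive_labels` is hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def flip_positive_labels(labels: Sequence[int], outlier_ratio: float,
                         seed: int = 0) -> Tuple[List[int], List[int]]:
    """Flip randomly chosen positive labels to negative; return labels and flipped indices."""
    rng = random.Random(seed)
    # Assumption: the outlier ratio is relative to the total number of training samples.
    n_flip = int(round(outlier_ratio * len(labels)))
    positives = [i for i, y in enumerate(labels) if y == 1]
    if n_flip > len(positives):
        raise ValueError("not enough positive samples to flip")
    flipped = sorted(rng.sample(positives, n_flip))
    contaminated = list(labels)
    for i in flipped:
        contaminated[i] = -1
    return contaminated, flipped


# Hypothetical training labels: 40 positive and 60 negative samples.
clean = [1] * 40 + [-1] * 60
noisy, flipped_idx = flip_positive_labels(clean, outlier_ratio=0.1)
print(len(flipped_idx), noisy.count(1), noisy.count(-1))
```

The test labels are left untouched in this scheme, so the reported test error measures how well each method recovers the non-contaminated decision boundary.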

The results are presented in Table 3. For non-contaminated training data, robust (ν, μ)-SVM and robust C-SVM were comparable to the standard ν-SVM. When the outlier ratio is high, robust (ν, μ)-SVM and robust C-SVM tend to work better than the standard ν-SVM. In this experiment, the kernel function does not affect the relative prediction performance of these learning methods. In large datasets, such as spam and Satellite, robust (ν, μ)-SVM tends to outperform robust C-SVM. When the learning parameters, such as and C, are appropriately chosen by using a large dataset, the learning algorithms with multiple learning parameters clearly work better than those with a single learning parameter. In addition, in robust C-SVM, there is a difficulty in choosing the regularization parameter. Indeed, the parameter C does not have a clear meaning, and thus, it is not easy to determine its candidates in the grid search. In contrast, ν in ν-SVM and its robust variant has a clear meaning, i.e., a lower bound of the ratio of support vectors and an upper bound of the margin error on the training data [5]. Such a clear meaning is helpful for choosing candidate points of regularization parameters.

7. Concluding Remarks

We have investigated the breakdown point of robust variants of SVMs. The theoretical analysis provides inequalities of learning parameters, ν and μ, in robust (ν, μ)-SVM that guarantee the robustness of the learning algorithm. Numerical experiments showed that the inequalities are critical to obtaining a robust classifier. The exact evaluation of the breakdown point for robust (ν, μ)-SVM enables us to restrict the range of the learning parameters and to increase the chance of finding a robust classifier with good performance for the same computational cost.

In our paper, the dual representation of robust SVMs is applied to the calculation of the breakdown point. Theoretical analysis using the dual representation can be a powerful tool for the detailed analysis of other learning algorithms.

On the theoretical side, it is interesting to establish the relationship between the robustness, say breakdown point, and the convergence speed of learning algorithms, as presented for the parametric inference in mathematical statistics [34] (Chapter 2.4). Furthermore, it is important to determine the optimal parameter choice of in robust (ν, μ)-SVM as an extension of the parameter choice for ν-SVM [39]. Another important issue is to develop efficient optimization algorithms. Although the DC algorithm [12,27] and convex relaxation [14,17] are promising methods, more scalable algorithms will be required to deal with massive datasets that are often contaminated by outliers. Recently, a computationally-efficient algorithm, called iteratively weighted SVM (IWSVM), was developed to solve optimization problems in the robust C-SVM and its variants [40]. Moreover, a fixed point of IWSVM is assured to be a local optimal solution obtained by the DC algorithm. It will be worthwhile to investigate the applicability of IWSVM to robust (ν, μ)-SVM.

Acknowledgments

This work was supported by JSPS KAKENHI, Grant Numbers 16K00044 and 15K00031.

Author Contributions

Takafumi Kanamori and Akiko Takeda contributed the theoretical analysis; Takafumi Kanamori and Shuhei Fujiwara performed the experiments; Takafumi Kanamori and Akiko Takeda wrote the paper. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

The proof is decomposed into two lemmas. Lemma A1 shows that Condition (i) is sufficient for Condition (ii), and Lemma A2 shows that Condition (ii) does not hold if Inequality (14) is violated. For the dataset , let and be the index sets defined as . When the parameter μ is equal to zero, the theorem holds according to the argument on the standard ν-SVM [29]. Below, we assume .

Lemma A1.

Under the assumptions of Theorem 1, Condition (i) leads to Condition (ii).

Proof of Lemma A1.

We will show that is not empty for any . For a contaminated dataset , let us define as an index set, such that the sample for is replaced with as an outlier. In the same way, is defined for negative samples in D. Therefore, for any index i in or , we have . The assumptions of the theorem ensure . Let us define and . These sets are not empty. Indeed, we have:

where Condition (i) in Theorem 1 is used in the second inequality. Likewise, we have .

We define two points in as:

Then, we have:

Because and are both less than or equal to due to (A1), holds for all .

Now, let us prove the inequality,

The above argument leads to:

for any . Let us define:

for the original dataset D. Then, we obtain:

The upper bound does not depend on the contaminated dataset . Thus, the inequality (A2) holds. ☐

Lemma A2.

Under the condition of Theorem 1, we assume . Then, we have:

Proof of Lemma A2.

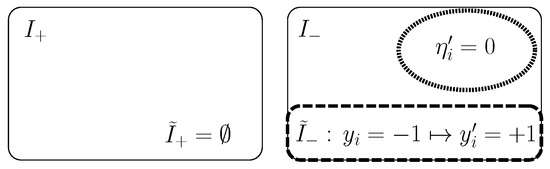

We will use the same notation as in the proof of Lemma A1. Without loss of generality, we can assume . We will prove that there exists a feasible parameter and a contaminated training set such that becomes empty. The construction of the dataset is illustrated in Figure A1. Suppose that and and that holds for all , meaning that all outliers in are made by flipping the labels of the negative samples in D. This is possible, because holds. The outlier indicator is defined by for samples in , and otherwise. This assignment is possible because . Then, we have:

where is used in the last inequality. In addition, holds only when . Therefore, we have . The infeasibility of the dual problem means that the primal problem is unbounded or infeasible. In this case, the infeasibility of the primal problem is excluded. Hence, a contaminated dataset and an outlier indicator exist such that:

holds. ☐

Figure A1.

Index sets and value of defined in the proof of Lemma A2.

Appendix B. Proof of Theorem 2

Proof.

For a rational number , there exists an such that and hold. For such m, let be training data such that and , where the index sets are defined in the proof of Appendix A. Since the label ratio of D is , and we have . For defined from D, let be a contaminated dataset of D such that outliers are made by flipping the labels of the negative samples in D. Thus, there are negative samples in . Let us define the outlier indicator such that for negative samples in . Then, any sample in with should be a positive one. Hence, we have . The infeasibility of the dual problem means that the primal problem is unbounded. Thus, we obtain . ☐

Appendix C. Proof of Theorem 3

Let us define with as the decision function estimated using robust -SVM based on the dataset D.

Proof.

The non-contaminated dataset is denoted as . For the dataset D, let and be the index sets defined by . Inequality (14) holds under the conditions of Theorem 3. Given a contaminated dataset , let be the negative margin of , i.e., for . For , the function is defined as:

where the index set is defined by the sorted negative margins as follows:

The estimated bias term is a local optimal solution of because of (6). The function is continuous. In addition, is linear on the interval such that is unchanged. Hence, is a continuous piecewise linear function. Below, we prove that local optimal solutions of are uniformly bounded regardless of the contaminated dataset . To prove the uniform boundedness, we control the slope of .

For the non-contaminated data D, let R be a positive real number such that:

The existence of R is guaranteed. Indeed, one can choose:

because the RKHS norm of is uniformly bounded above for and D is a finite set. For the contaminated dataset , let us define the index sets and for each label by:

For any non-contaminated sample , we have . Hence, for should be an outlier that is not included in D. This fact leads to:

On the basis of the argument above, we can prove two propositions:

- The function is increasing for .

- The function is decreasing for .

In addition, for any , the Lipschitz constant of is greater than or equal to for .

Let us prove the first statement. If holds, we have:

from the definition of the index set . Let us consider two cases:

- (i)

- for all , holds and

- (ii)

- there exists an index such that .

For a fixed b such that , let us assume (i) above. Then, for any index i in , we have , meaning that . Hence, the size of the set is less than or equal to . Therefore, the size of the set is greater than or equal to . The first inequality of (15) leads to . Therefore, in the set , the number of negative samples is more than the number of positive samples.

For a fixed b such that , let us assume (ii) above. Due to the inequality (A3), for any index , the negative margin is at the top of those ranked in the descending order. Hence, the size of the set is greater than or equal to . Therefore, the size of the set is less than or equal to . The second inequality of (15) leads to . Furthermore, in the case of (ii), the negative label dominates the positive label in the set .

For negative (resp. positive) samples, the negative margin is expressed as (resp. ) with a constant . Thus, the continuous piecewise linear function is expressed as:

where are constants as long as is unchanged. As proven above, is a positive integer, since negative samples outnumber positive samples in when . As a result, local optimal solutions of the bias term should satisfy:

In the same manner, we can prove the second statement by using the fact that is a sufficient condition of:

Then, we have:

In summary, we obtain:

☐

Appendix D. Proof of Theorem 4

Proof.

We will use the same notation as in the proof of Theorem 3 in Appendix C. Note that Inequality (14) holds under the assumption of Theorem 4. The reproducing property of the RKHS inner product yields:

for any due to the boundedness of the kernel function and Inequality (14). Hence, for a sufficiently large , the sets and become empty for any .

Under Inequality (A3), suppose that holds for all . Then, for , we have . Thus, holds. Since is the empty set, is also the empty set. Therefore, has only negative samples. Let us consider the other case; i.e., there exists an index , such that . Assuming that , we can prove that the negative labels dominate the positive labels in in the same manner as the proof of Theorem 3. For any , the function is strictly increasing for . In the same way, we can prove that is strictly decreasing for . Moreover, for any and for , one can prove that the absolute value of the slope of is bounded below by according to the argument in the proof of Theorem 3. As a result, we obtain . ☐

References

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Schölkopf, B.; Smola, A.J. Learning with Kernels; MIT Press: Cambridge, MA, USA, 2002.

- Berlinet, A.; Thomas-Agnan, C. Reproducing Kernel Hilbert Spaces in Probability and Statistics; Kluwer Academic: Boston, MA, USA, 2004.

- Perez-Cruz, F.; Weston, J.; Hermann, D.J.L.; Schölkopf, B. Extension of the ν-SVM Range for Classification. In Advances in Learning Theory: Methods, Models and Applications 190; IOS Press: Amsterdam, The Netherlands, 2003; pp. 179–196.

- Schölkopf, B.; Smola, A.; Williamson, R.; Bartlett, P. New support vector algorithms. Neural Comput. 2000, 12, 1207–1245.

- Suykens, J.A.K.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.

- Bartlett, P.L.; Jordan, M.I.; McAuliffe, J.D. Convexity, classification, and risk bounds. J. Am. Stat. Assoc. 2006, 101, 138–156.

- Steinwart, I. On the influence of the kernel on the consistency of support vector machines. J. Mach. Learn. Res. 2001, 2, 67–93.

- Zhang, T. Statistical behavior and consistency of classification methods based on convex risk minimization. Ann. Stat. 2004, 32, 56–134.

- Shen, X.; Tseng, G.C.; Zhang, X.; Wong, W.H. On ψ-learning. J. Am. Stat. Assoc. 2003, 98, 724–734.

- Yu, Y.; Yang, M.; Xu, L.; White, M.; Schuurmans, D. Relaxed Clipping: A Global Training Method for Robust Regression and Classification. In Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2010; pp. 2532–2540.

- Collobert, R.; Sinz, F.; Weston, J.; Bottou, L. Trading Convexity for Scalability. In Proceedings of the ICML06, 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: New York, NY, USA, 2006; pp. 201–208.

- Wu, Y.; Liu, Y. Robust truncated hinge loss support vector machines. J. Am. Stat. Assoc. 2007, 102, 974–983.

- Yu, Y.; Aslan, Ö.; Schuurmans, D. A Polynomial-Time Form of Robust Regression. In Advances in Neural Information Processing Systems 25; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 2483–2491.

- Feng, Y.; Yang, Y.; Huang, X.; Mehrkanoon, S.; Suykens, J.A. Robust Support Vector Machines for Classification with Nonconvex and Smooth Losses. Neural Comput. 2016, 28, 1217–1247.

- Tsyurmasto, P.; Uryasev, S.; Gotoh, J. Support Vector Classification with Positive Homogeneous Risk Functionals; Technical Report, Research Report 2013-4; Department of Industrial and Systems Engineering, University of Florida: Gainesville, FL, USA, 2013.

- Xu, L.; Crammer, K.; Schuurmans, D. Robust Support Vector Machine Training Via Convex Outlier Ablation. In Proceedings of the AAAI, Boston, MA, USA, 16–20 July 2006; pp. 536–542.

- Fujiwara, S.; Takeda, A.; Kanamori, T. DC Algorithm for Extended Robust Support Vector Machine; Technical Report METR 2014–38; The University of Tokyo: Tokyo, Japan, 2014.

- Takeda, A.; Fujiwara, S.; Kanamori, T. Extended robust support vector machine based on financial risk minimization. Neural Comput. 2014, 26, 2541–2569.

- Maronna, R.; Martin, R.D.; Yohai, V. Robust Statistics: Theory and Methods; Wiley: Hoboken, NJ, USA, 2006.

- Schapire, R.E.; Freund, Y.; Bartlett, P.L.; Lee, W.S. Boosting the margin: A new explanation for the effectiveness of voting methods. Ann. Stat. 1998, 26, 1651–1686.

- Kimeldorf, G.S.; Wahba, G. Some results on Tchebycheffian spline functions. J. Math. Anal. Appl. 1971, 33, 82–95.

- Wahba, G. Advances in Kernel Methods; Chapter Support Vector Machines, Reproducing Kernel Hilbert Spaces, and Randomized GACV; MIT Press: Cambridge, MA, USA, 1999; pp. 69–88.

- Takeda, A.; Sugiyama, M. ν-Support Vector Machine as Conditional Value-at-Risk Minimization. In Proceedings of the ICML, ACM International Conference Proceeding Series, Yokohama, Japan, 3–5 December 2008; Cohen, W.W., McCallum, A., Roweis, S.T., Eds.; ACM: New York, NY, USA, 2008; Volume 307, pp. 1056–1063.

- Rockafellar, R.T.; Uryasev, S. Conditional value-at-risk for general loss distributions. J. Bank. Financ. 2002, 26, 1443–1472.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Le Thi, H.A.; Dinh, T.P. Convex analysis approach to d.c. programming: Theory, algorithms and applications. Acta Math. Vietnam. 1997, 22, 289–355.

- Yuille, A.L.; Rangarajan, A. The concave-convex procedure. Neural Comput. 2003, 15, 915–936.

- Crisp, D.J.; Burges, C.J.C. A Geometric Interpretation of ν-SVM Classifiers. In Advances in Neural Information Processing Systems 12; Solla, S.A., Leen, T.K., Müller, K.-R., Eds.; MIT Press: Cambridge, MA, USA, 2000; pp. 244–250.

- Kanamori, T.; Takeda, A.; Suzuki, T. Conjugate relation between loss functions and uncertainty sets in classification problems. J. Mach. Learn. Res. 2013, 14, 1461–1504.

- Takeda, A.; Mitsugi, H.; Kanamori, T. A Unified Robust Classification Model. In Proceedings of the 29th International Conference on Machine Learning (ICML-12), ICML’12, Edinburgh, Scotland, 26 June–1 July 2012; Langford, J., Pineau, J., Eds.; Omnipress: New York, NY, USA, 2012; pp. 129–136.

- Bertsekas, D.; Nedic, A.; Ozdaglar, A. Convex Analysis and Optimization; Athena Scientific: Belmont, MA, USA, 2003.

- Donoho, D.; Huber, P. The Notion of Breakdown Point. In A Festschrift for Erich L. Lehmann; CRC Press: Boca Raton, FL, USA, 1983; pp. 157–184.

- Hampel, F.R.; Rousseeuw, P.J.; Ronchetti, E.M.; Stahel, W.A. Robust Statistics. The Approach Based on Influence Functions; John Wiley and Sons, Inc.: Hoboken, NJ, USA, 1986.

- Huber, P.J.; Ronchetti, E.M. Robust Statistics, 2nd ed.; Wiley: New York, NY, USA, 2009.

- Christmann, A.; Steinwart, I. On robustness properties of convex risk minimization methods for pattern recognition. J. Mach. Learn. Res. 2004, 5, 1007–1034.

- Le Thi, H.A.; Dinh, T.P. The DC (Difference of Convex Functions) Programming and DCA Revisited with DC Models of Real World Nonconvex Optimization Problems. Ann. Oper. Res. 2005, 133, 23–46.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2014.

- Steinwart, I. On the optimal parameter choice for ν-support vector machines. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1274–1284.

- Wu, Y.; Liu, Y. Adaptively weighted large margin classifiers. J. Comput. Graph. Stat. 2013, 22, 416–432.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).