Preference Inconsistence-Based Entropy

Abstract

1. Introduction

2. Preliminary Knowledge

2.1. Information Entropy

2.2. Preference Relation

3. Preference Inconsistence-Based Entropy

- upward preference inconsistent set (UPIS):

- downward preference inconsistent set (DPIS):

- upward preference inconsistent degree (UPID):

- downward preference inconsistent degree (DPID): where the sets involved are the upward and downward preference inconsistent sets, respectively.

- upward preference inconsistent entropy (UPIE):

- downward preference inconsistent entropy (DPIE): where the degrees involved are the upward preference inconsistent degree and the downward preference inconsistent degree, respectively. For a preference-consistent ordinal decision system, the preference decisions are certain and both measures are equal to 0, which is consistent with Shannon entropy.

- upward preference inconsistent joint entropy (UPIJE):

- downward preference inconsistent joint entropy (DPIJE):

- upward preference inconsistent conditional entropy:

- downward preference inconsistent conditional entropy:

- upward preference inconsistent information granules:

- downward preference inconsistent information granules:

- upward preference inconsistent information entropy:

- downward preference inconsistent information entropy:

- upward preference inconsistent conditional entropy:

- downward preference inconsistent conditional entropy:
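The remark in the DPIE item above, that the measures vanish for a preference-consistent system in line with Shannon entropy, rests on a basic property: a certain outcome carries zero uncertainty. The following minimal Python sketch illustrates only that Shannon property; the function name `shannon_entropy` is illustrative and is not taken from the article.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits, using the convention 0 * log(0) = 0."""
    # Terms with p == 0 are skipped, which implements the convention above.
    return sum(-p * math.log2(p) for p in probs if p > 0)


# A certain decision (one outcome with probability 1) has zero entropy.
certain = shannon_entropy([1.0])
# Two equally likely outcomes carry exactly one bit of uncertainty.
coin = shannon_entropy([0.5, 0.5])
```

Here `certain` evaluates to 0 and `coin` to 1, matching the intuition that entropy measures how undetermined the decision is.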

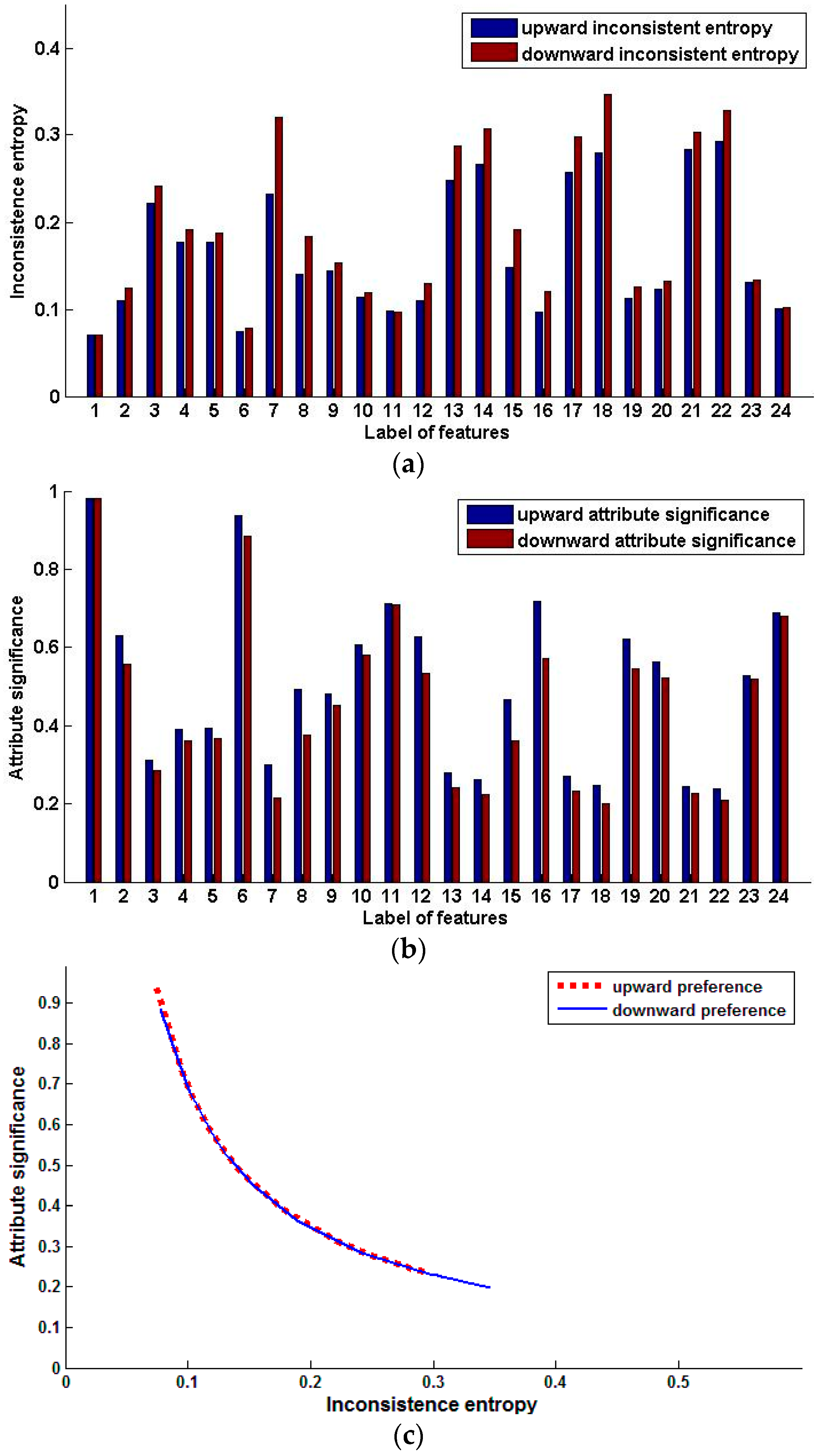

4. The Application of PIE

4.1. Feature Selection

- upward relative significance:

- downward relative significance:

| Algorithm 1 Forward feature selection (FFS) based on PIE |
|---|
| Input: the preference inconsistent ordinal decision system ; |
| Output: selected feature subset . |
| 1. for each |
| 2. if |
| 3. |
| 4. end if |
| 5. end for |
| 6. for each |
| 7. compute |
| 8. end for |
| 9. find the minimal and the corresponding attribute |
| 10. |
| 11. while |
| 12. for each |
| 13. compute |
| 14. end for |
| 16. find the maximal and the corresponding attribute |
| 17. if |
| 18. |
| 19. else |
| 20. exit while |
| 21. end if |
| 22. end while |
| 23. return |
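The control flow of Algorithm 1 (seed with the attribute of minimal entropy, then repeatedly add the attribute of maximal significance until none helps) can be sketched in Python as follows. Because the step formulas are not shown in the listing, several parts are assumptions rather than the authors' definitions: `entropy` is a hypothetical callable returning the inconsistency entropy of a feature subset, relative significance is taken to be the entropy reduction from adding an attribute, `eps` is a hypothetical stopping threshold, and the pre-filtering in steps 1 to 5 is omitted. `toy_entropy` is a made-up stand-in used only to make the sketch runnable.

```python
from typing import Callable, FrozenSet, Iterable, List

Entropy = Callable[[FrozenSet[str]], float]


def forward_select(features: Iterable[str], entropy: Entropy,
                   eps: float = 0.0) -> List[str]:
    """Greedy forward selection driven by an entropy-like measure."""
    remaining = list(features)
    if not remaining:
        return []
    # Steps 6-10: start from the single attribute with minimal entropy.
    first = min(remaining, key=lambda a: entropy(frozenset([a])))
    selected = [first]
    remaining.remove(first)
    # Steps 11-22: add the most significant attribute while it still helps.
    while remaining:
        current = entropy(frozenset(selected))
        # Assumed significance: how much the entropy drops when `a` is added.
        gains = {a: current - entropy(frozenset(selected + [a]))
                 for a in remaining}
        best = max(remaining, key=lambda a: gains[a])
        if gains[best] > eps:
            selected.append(best)
            remaining.remove(best)
        else:
            break
    return selected


# Hypothetical stand-in measure: each feature removes a fixed share of
# inconsistency; this is NOT the preference inconsistent entropy itself.
WEIGHTS = {"a": 0.6, "b": 0.5, "c": 0.1}


def toy_entropy(subset: FrozenSet[str]) -> float:
    return max(0.0, 1.0 - sum(WEIGHTS[f] for f in subset))


chosen = forward_select(["a", "b", "c"], toy_entropy)
```

With the toy measure, `chosen` is `["a", "b"]`: once the entropy of the selected subset reaches 0, no further attribute has positive significance and the loop stops.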

| Algorithm 2 Backward feature selection (BFS) based on PIE |
|---|
| Input: the preference inconsistent ordinal decision system ; |
| Output: selected feature subset . |
| 1. for each |
| 2. if |
| 3. |
| 4. end if |
| 5. end for |
| 6. |
| 7. for each |
| 8. compute |
| 9. if |
| 10. |
| 11. end if |
| 12. end for |
| 13. return |
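Algorithm 2 makes a single pass over the attributes and discards those that are not needed. A minimal Python sketch of that pattern is given below. The removal test is an assumption, since the condition in step 9 is not shown: an attribute is dropped when removing it raises the entropy by no more than a hypothetical tolerance `eps`. The `entropy` callable and `toy_entropy` are illustrative stand-ins, not the article's measure.

```python
from typing import Callable, FrozenSet, Iterable, List

Entropy = Callable[[FrozenSet[str]], float]


def backward_select(features: Iterable[str], entropy: Entropy,
                    eps: float = 0.0) -> List[str]:
    """Single-pass backward elimination driven by an entropy-like measure."""
    selected = list(features)
    for a in list(selected):
        if len(selected) == 1:
            break  # always keep at least one attribute
        without = [f for f in selected if f != a]
        # Assumed test: drop `a` if the entropy does not rise without it.
        if entropy(frozenset(without)) - entropy(frozenset(selected)) <= eps:
            selected = without
    return selected


# Hypothetical stand-in measure in which any two features suffice.
WEIGHTS = {"x": 0.5, "y": 0.5, "z": 0.5}


def toy_entropy(subset: FrozenSet[str]) -> float:
    return max(0.0, 1.0 - sum(WEIGHTS[f] for f in subset))


kept_forward_order = backward_select(["x", "y", "z"], toy_entropy)
kept_reverse_order = backward_select(["z", "y", "x"], toy_entropy)
```

The two calls return `["y", "z"]` and `["y", "x"]` respectively, so a single pass of this kind depends on the order in which attributes are visited. This would be in line with the backward selection results in the experiment tables, where differently sorted feature lists yield different subsets.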

4.2. Sample Condensation

| Algorithm 3 Sample Condensation based on PIE (BFS) |
|---|
| Input: the preference inconsistent ordinal decision system ; |
| Output: the preference consistent sample subset. |
| 1. for each |
| 2. |
| 3. compute the preference inconsistence entropy |
| 4. while |
| 5. for each |
| 6. compute |
| 7. end for |
| 8. find the maximum and the corresponding sample x |
| 9. |
| 10. compute the preference inconsistence entropy |
| 11. end while |
| 12. end for |
| 13. ; |
| 14. for each |
| 15. ; |
| 16. end for |
| 17. return |
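The inner loop of Algorithm 3 (steps 4 to 11) repeatedly removes the sample with the maximum inconsistency until none remains. The Python sketch below illustrates that loop under loudly stated assumptions: the per-sample score is a simple count of dominance violations, used here as a stand-in for the article's per-sample entropy, and larger attribute values and larger decision values are assumed to be preferred. The data are the A1 and D rows of the ten-sample table (x1 to x10) given in this article; the outer loop and the final merging steps 13 to 16 are not modelled.

```python
from typing import Callable, List, Sequence

# (A1 value, decision D) for samples x1..x10, taken from the sample table.
DATA = {
    "x1": (0.28, 1), "x2": (0.25, 1), "x3": (0.60, 1), "x4": (0.48, 2),
    "x5": (0.42, 2), "x6": (0.55, 2), "x7": (0.78, 2), "x8": (0.75, 3),
    "x9": (0.83, 3), "x10": (0.85, 3),
}


def violations(x: str, kept: Sequence[str]) -> int:
    """Stand-in score: samples whose order on A1 contradicts the order on D."""
    ax, dx = DATA[x]
    count = 0
    for y in kept:
        ay, dy = DATA[y]
        # x is at least as good on A1 but worse on D, or the reverse.
        if (ax >= ay and dx < dy) or (ay >= ax and dy < dx):
            count += 1
    return count


def condense(samples: Sequence[str],
             score: Callable[[str, Sequence[str]], int]) -> List[str]:
    """Greedily drop the most inconsistent sample until all scores are 0."""
    kept = list(samples)
    while kept:
        scores = [score(x, kept) for x in kept]
        worst = max(range(len(kept)), key=scores.__getitem__)
        if scores[worst] <= 0:
            break  # the remaining samples are mutually consistent
        del kept[worst]
    return kept


consistent = condense(list(DATA), violations)
```

On attribute A1 alone, x3 conflicts with x4, x5 and x6, and x7 conflicts with x8, so the loop removes x3 first and then x7 (ties are broken by position), leaving the other eight samples.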

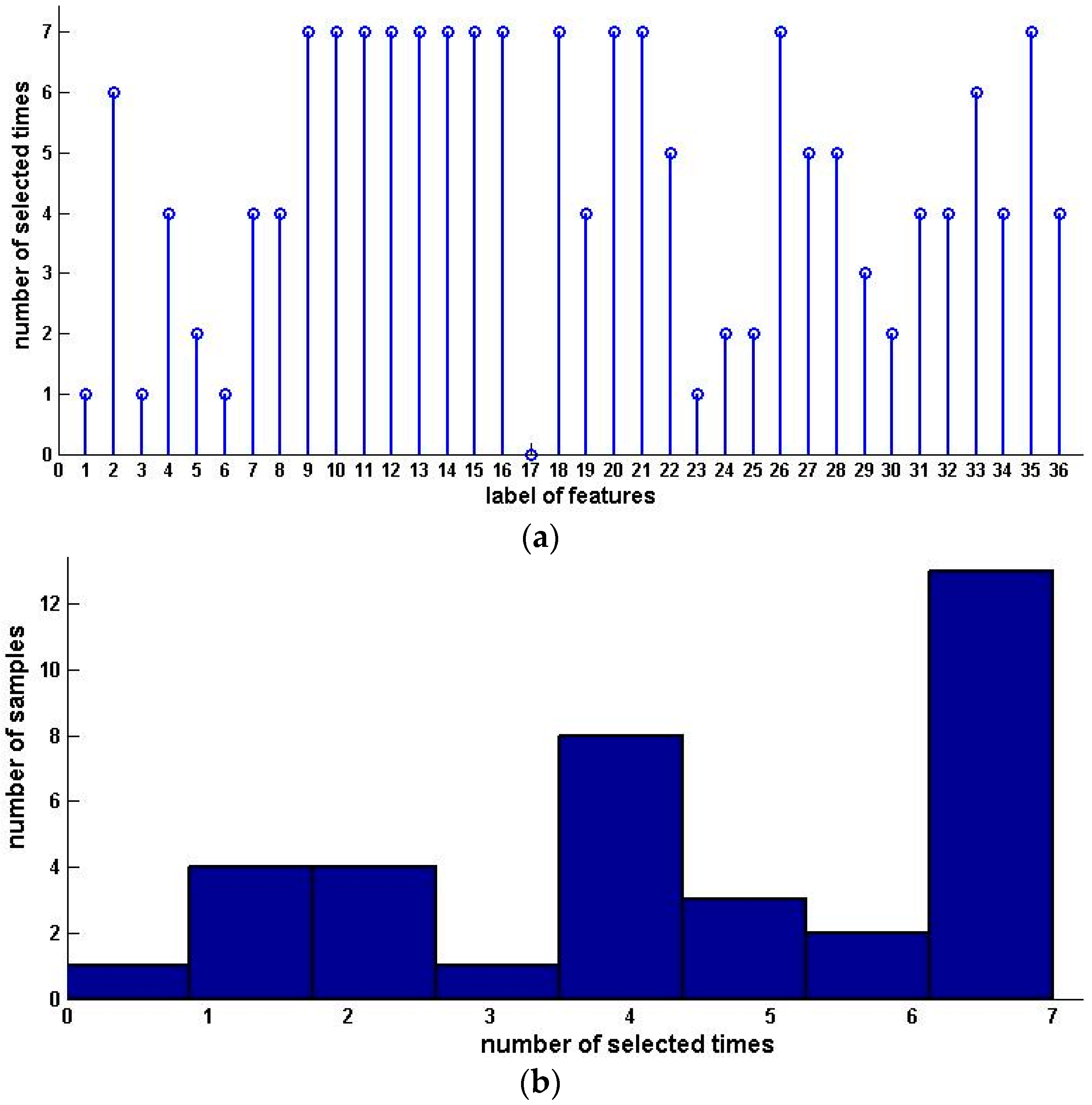

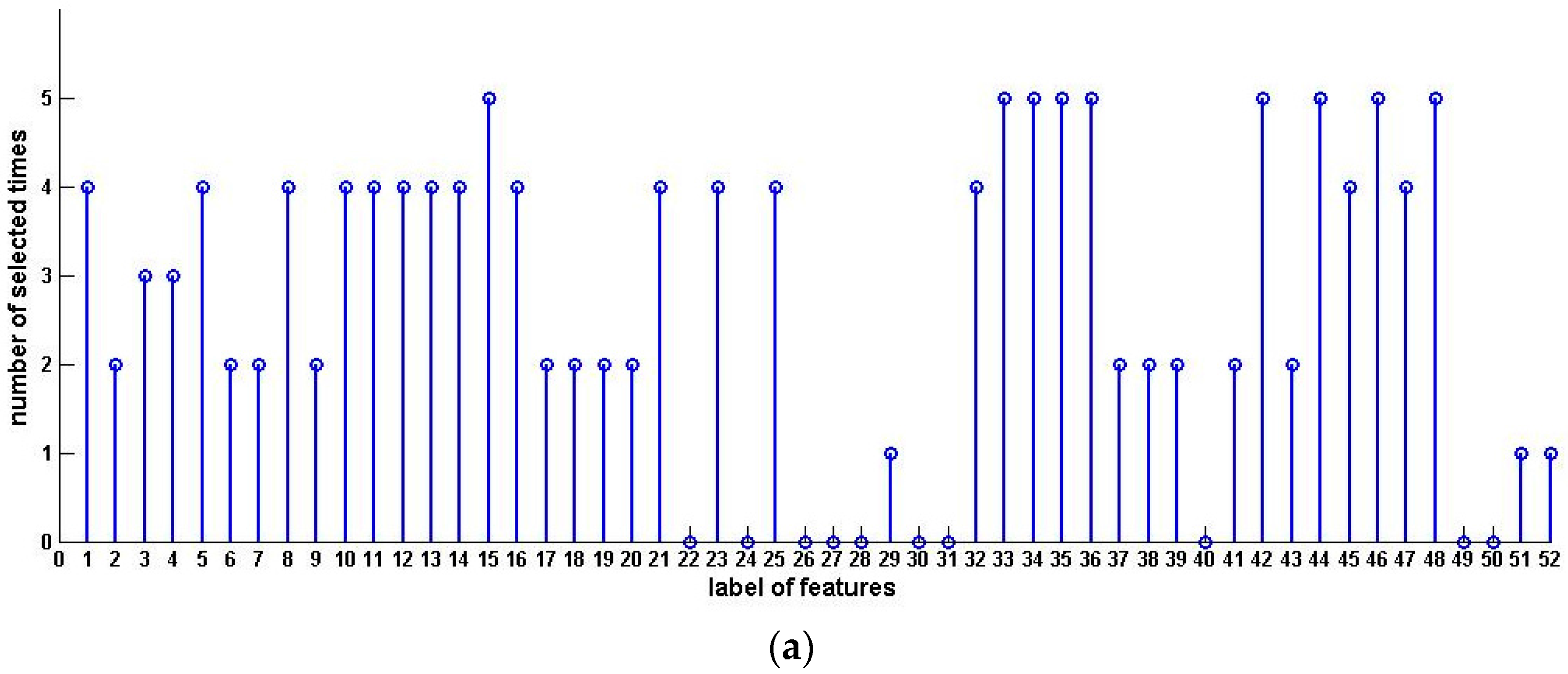

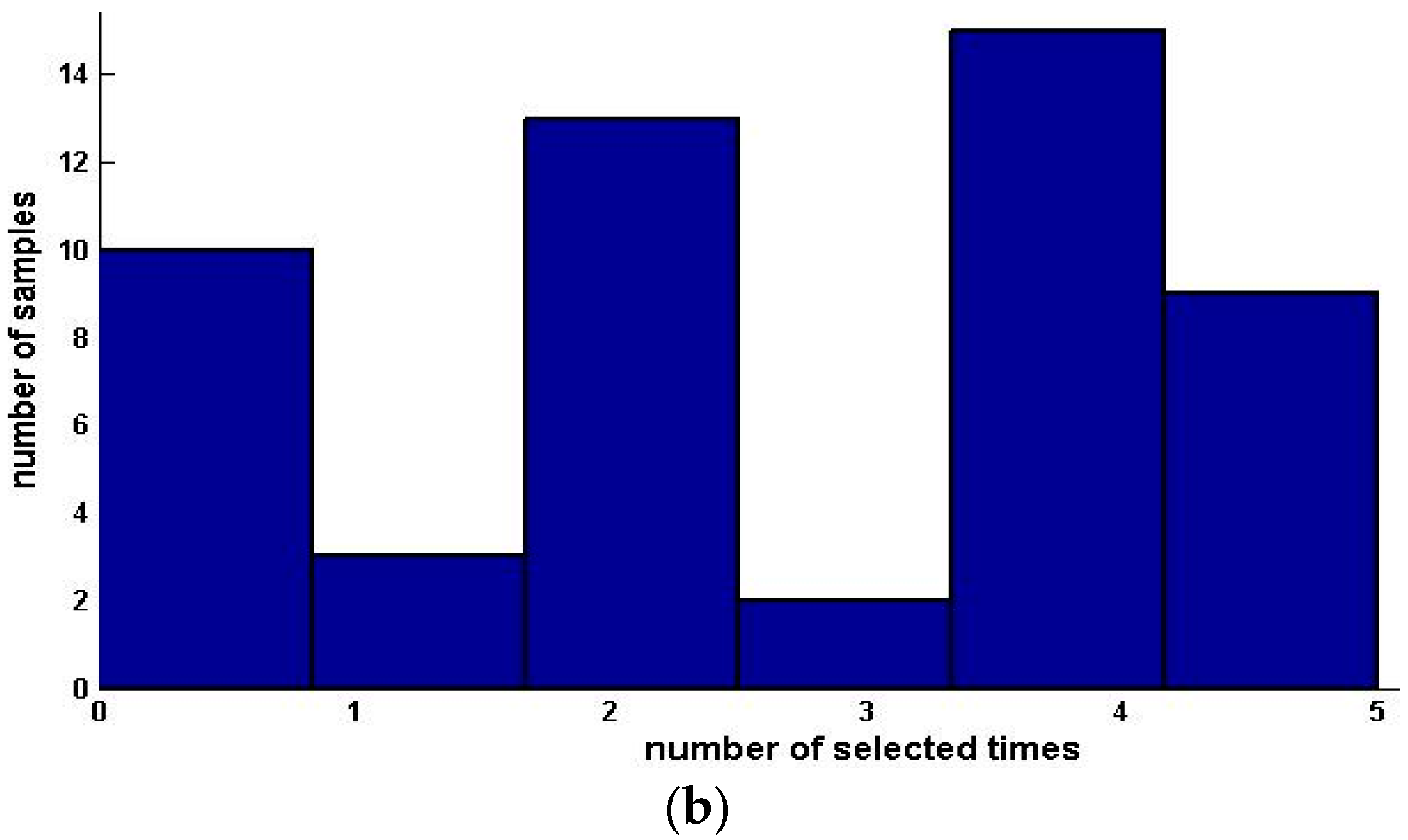

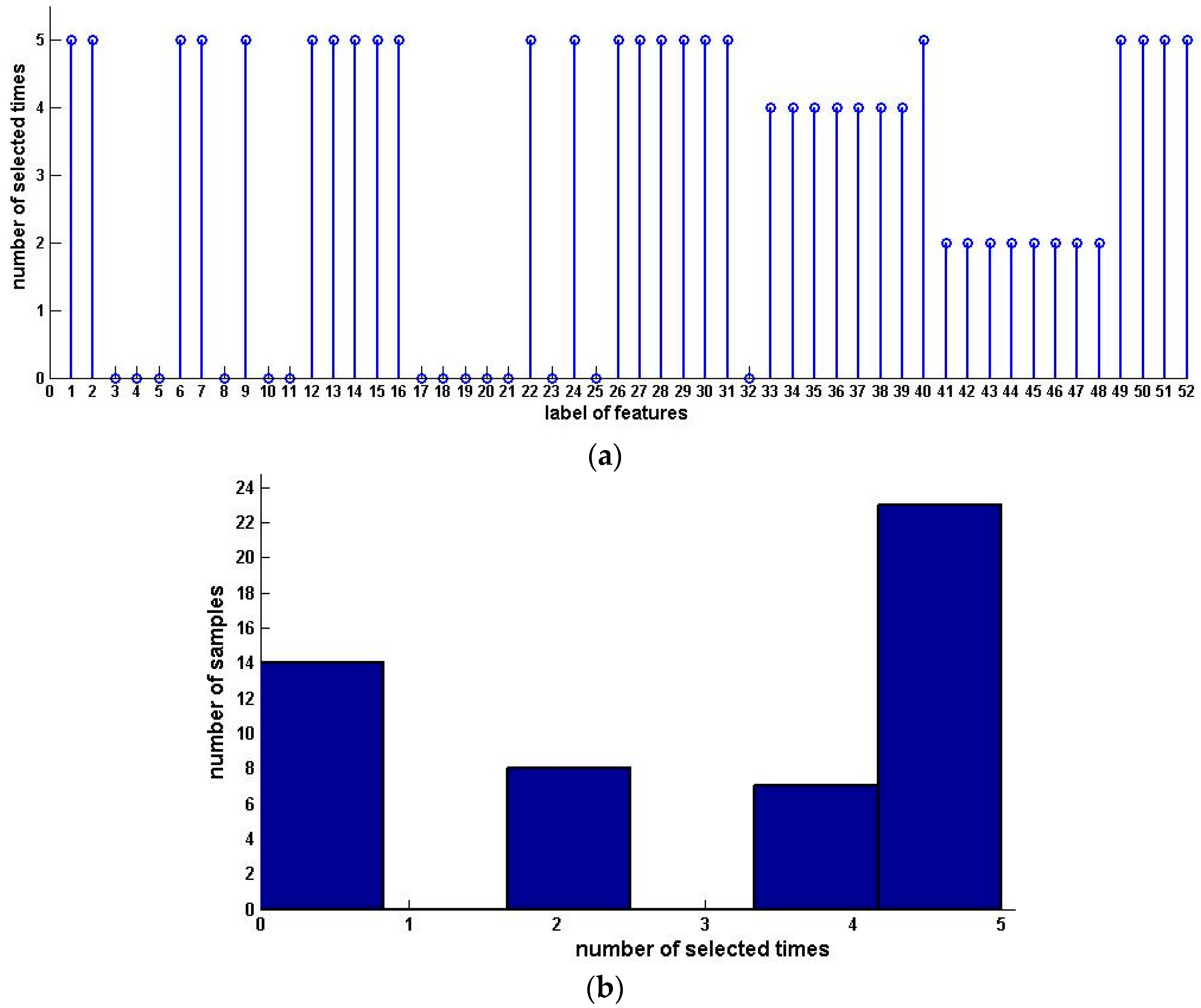

5. Experimental Analysis

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| | x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | x10 |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.28 | 0.25 | 0.60 | 0.48 | 0.42 | 0.55 | 0.78 | 0.75 | 0.83 | 0.85 |
| A2 | 0.28 | 0.31 | 0.42 | 0.47 | 0.51 | 0.66 | 0.55 | 0.65 | 0.80 | 0.91 |
| D | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 3 |

| Sequence | Attribute | Description | Type |
|---|---|---|---|
| 1 | fertiliser | {LL, LN, HN, HH} | enumerated |
| 2 | slope | Slope of the paddock | numeric |
| 3 | aspect-dev-NW | The deviation from the north-west | numeric |
| 4 | olsenP | | numeric |
| 5 | minN | | numeric |
| 6 | TS | | numeric |
| 7 | Ca-Mg | Calcium magnesium ratio | numeric |
| 8 | LOM | Soil lom (g/100g) | numeric |
| 9 | NFIX-mean | A mean calculation | numeric |
| 10 | Eworms-main-3 | Main 3 spp earth worms per g/m2 | numeric |
| 11 | Eworms-No-species | Number of spp | numeric |
| 12 | KUnSat | mm/h | numeric |
| 13 | OM | | numeric |
| 14 | Air-Perm | | numeric |
| 15 | Porosity | | numeric |
| 16 | HFRG-pct-mean | Mean percent | numeric |
| 17 | legume-yield | kgDM/ha | numeric |
| 18 | OSPP-pct-mean | Mean percent | numeric |
| 19 | Jan-Mar-mean-TDR | | numeric |
| 20 | Annual-Mean-Runoff | In mm | numeric |
| 21 | root-surface-area | m2/m3 | numeric |
| 22 | Leaf-P | ppm | numeric |
| Class | Pasture prod-class | {Low, Median, High} | enumerated |

| Data Set | Algorithm | Preference | Selected Features Subset | Significance of Feature Subset | Inconsistence Entropy of Feature Subset |
|---|---|---|---|---|---|
| Data set 1 | Forward Feature Selection | upward | 4, 11, 1 | 0.97, 0.25, 0.29 | 0 |
| | | downward | 4, 11, 1 | 0.97, 0.24, 0.31 | 0 |
| | Backward Feature Selection | upward | 17, 18, 20, 22 | 0.4, 0.10, 0.19, 0.97 | 0 |
| | | downward | 17, 18, 20, 22 | 0.48, 0.10, 0.17, 0.97 | 0 |
| Data set 2 | Forward Feature Selection | upward | 4, 11, 22 | 0.36, 0.27, 0.97 | 0 |
| | | downward | 4, 11, 22 | 0.32, 0.26, 0.97 | 0 |
| | Backward Feature Selection | upward | 5, 4, 3, 1 | 0.10, 0.15, 0.19, 0.97 | 0 |
| | | downward | 5, 4, 3, 1 | 0.10, 0.13, 0.17, 0.97 | 0 |
| Data set 3 | Backward Feature Selection | upward | 10, 16, 4 | 0.55, 0.92, 0.97 | 0 |
| | | downward | 10, 16, 4 | 0.52, 0.92, 0.97 | 0 |
| Data set 4 | Forward Feature Selection | upward | 4, 11, 16 | 0.97, 0.25, 0.92 | 0 |
| | | downward | 4, 11, 16 | 0.97, 0.24, 0.92 | 0 |

| Features Subset | Upward Preference | Downward Preference |
|---|---|---|
| All features | 2,4,7,8,9,10,11,12,13,14,15,16,18,19,20,21,22,26,27,28,33,35 | 1,2,3,4,5,6,7,8,12,17,22,23,24,25,28,29,30,31,32,34,35,36 |
| 4,11,1 | 2,9,10,11,12,13,14,15,16,18,19,20,21,22,26,27,28,29,30,31,32,33,34,35,36 | 1,2,3,4,5,6,7,8,23,24,25,28,29,30,31,32,34,35,36 |
| 4,11,22 | 2,4,7,8,9,10,11,12,13,14,15,16,18,19,20,21,22,26,27,28,33,35 | 1,2,3,4,5,6,7,8,12,17,22,23,24,25,28,29,30,31,32,34,35,36 |
| 17,18,20,22 | 1,2,4,7,8,9,10,11,12,13,14,15,16,18,19,20,21,22,26,27,28,33,35 | 1,2,3,4,5,6,7,8,12,17,22,23,24,25,28,29,30,31,32,34,35,36 |
| 1,3,4,5 | 5,9,10,11,12,13,14,15,16,18,20,21,24,25,26,31,32,33,34,35,36 | 1,2,4,6,11,12,19,20,21,22,23,24,25,28,29,30,31,32,34,35,36 |
| 10,16,4 | 2,3,4,8,9,10,11,12,13,14,15,16,18,20,21,26,27,29,31,32,33,34,35,36 | 1,3,5,6,7,17,18,19,22,23,24,25,28,29,30,31,32,34,35,36 |
| 4,11,16 | 2,5,6,7,9,10,11,12,13,14,15,16,18,20,21,22,23,24,25,26,28,29,30,31,32,34,35,36 | 1,2,3,4,5,6,7,8,10,14,17,19,22,23,24,25,27,28,29,30,31,32,33,34,35,36 |

| Sequence | Attribute | Description | Type |
|---|---|---|---|
| 1 | site | where fruit is located | enumerated |
| 2 | daf | number of days after flowering | enumerated |
| 3 | fruit | individual number of the fruit | enumerated |
| 4 | weight | weight of whole fruit in grams | numeric |
| 5 | storewt | weight of fruit after storage | numeric |
| 6 | pene | penetrometer indicates maturity of fruit at harvest | numeric |
| 7 | solids_% | a test for dry matter | numeric |
| 8 | brix | a refractometer measurement used to indicate sweetness or ripeness of the fruit | numeric |
| 9 | a* | the a* coordinate of the HunterLab L*, a*, b* notation of color measurement | numeric |
| 10 | egdd | the heat accumulation above a base of 8 °C from emergence of the plant to harvest of the fruit | numeric |
| 11 | fgdd | the heat accumulation above a base of 8 °C from flowering to harvesting | numeric |
| 12 | ground spot | the number indicating color of skin where the fruit rested on the ground | numeric |
| 13 | glucose | measured in mg/100g of fresh weight | numeric |
| 14 | fructose | measured in mg/100g of fresh weight | numeric |
| 15 | sucrose | measured in mg/100g of fresh weight | numeric |
| 16 | total | measured in mg/100g of fresh weight | numeric |
| 17 | glucose + fructose | measured in mg/100g of fresh weight | numeric |
| 18 | starch | measured in mg/100g of fresh weight | numeric |
| 19 | sweetness | the mean of eight taste panel scores | numeric |
| 20 | flavour | the mean of eight taste panel scores | numeric |
| 21 | dry/moist | the mean of eight taste panel scores | numeric |
| 22 | fibre | the mean of eight taste panel scores | numeric |
| 23 | heat_input_emerg | the amount of heat emergence after harvest | numeric |
| 24 | heat_input_flower | the amount of heat input before flowering | numeric |
| 25 | acceptability | the acceptability of the fruit | enumerated |

| Data Set | Algorithm | Preference | Selected Features Subset | Significance of Feature Subset | Inconsistent Entropy of Feature Subset |
|---|---|---|---|---|---|
| Original data | Forward Feature Selection | upward | 1, 2, 14 | 0.98, 0.63, 0.26 | 0 |
| | | downward | 1, 2, 14 | 0.98, 0.55, 0.22 | 0 |
| | Backward Feature Selection | upward | 15, 17, 18, 22, 24 | 0.66, 0.38, 0.35, 0.33, 0.98 | 0 |
| | | downward | 15, 17, 18, 22, 24 | 0.66, 0.38, 0.35, 0.33, 0.98 | 0 |
| Sorted by feature label descending | Forward Feature Selection | upward | 1, 2, 14 | 0.98, 0.63, 0.26 | 0 |
| | | downward | 1, 2, 14 | 0.98, 0.55, 0.22 | 0 |
| | Backward Feature Selection | upward | 9, 3, 2, 1 | 0.98, 0.32, 0.72, 0.94 | 0 |
| | | downward | 9, 3, 2, 1 | 0.82, 0.30, 0.75, 0.98 | 0 |
| Sorted by significance descending | Forward Feature Selection | upward | 1, 2, 14 | 0.98, 0.63, 0.26 | 0 |
| | | downward | 1, 2, 14 | 0.98, 0.55, 0.22 | 0 |
| Sorted by significance ascending | Backward Feature Selection | upward | 8, 12, 2, 16, 1 | 0.49, 0.62, 0.63, 0.71, 0.98 | 0 |
| | | downward | 8, 12, 2, 16, 1 | 0.37, 0.53, 0.55, 0.57, 0.98 | 0 |

| Features Subset | Upward Preference | Downward Preference |
|---|---|---|
| All features | 1,5,8,10,11,12,13,14,15,16,21,23,25,32,33,34,35,36,42,44,45,46,47,48 | 1,2,6,7,9,12,13,14,15,16,22,24,26,27,28,29,30,31,33,34,35,36,37,38,39,40,49,50,51,52 |
| 1,2,14 | 1,3,4,15,33,34,35,36,42,44,46,48,52 | 1,2,6,7,9,12,13,14,15,16,22,24,26,27,28,29,30,31,40,49,50,51,52 |
| 15,17,18,22,24 | 5,8,10,11,12,13,14,15,16,21,23,25,32,33,34,35,36,42,44,45,46,47,48 | 1,2,6,7,9,12,13,14,15,16,22,24,26,27,28,29,30,31,33,34,35,36,37,38,39,40,49,50,51,52 |
| 9,3,2,1 | 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,23,25,32,33,34,35,36,37,38,39,41,42,43,44,45,46,47,48,51 | 1,2,6,7,9,12,13,14,15,16,22,24,26,27,28,29,30,31,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52 |
| 8,12,2,16,1 | 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,23,25,29,32,33,34,35,36,37,38,39,41,42,43,44,45,46,47,48 | 1,2,6,7,9,12,13,14,15,16,22,24,26,27,28,29,30,31,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Pan, W.; She, K.; Wei, P. Preference Inconsistence-Based Entropy. Entropy 2016, 18, 96. https://doi.org/10.3390/e18030096
