1. Introduction

Pseudorandom binary sequences play a significant role in many fields, such as error control coding, spread spectrum communications, stochastic computation, Monte Carlo simulations in numerical analysis, statistical sampling, and cryptography [1,2,3]. The usefulness of these sequences in all these fields rests on their good randomness. To test the randomness of binary sequences, several test suites have been proposed, such as SP800 [4], TestU01 [5], FIPS140-1 [6], and Crypt-XS [7]. The indices in these test suites are drawn from statistics and complexity theory and, to a larger extent, from information science.

Information science describes an information process or data sequence by a probability measure over its random states and uses Shannon’s entropy as the uncertainty function of these states. Early on, Fisher and Boekee proposed information measures for maximum-likelihood estimates in statistics [8,9]. In 1948, Shannon introduced the concept of “entropy” into information science and proposed an uncertainty measure of random states [10]. Shannon’s entropy remains one of the most widely used measures for evaluating the randomness of sequences. Moreover, Renyi [11], Stratonovich [12], and Kullback and Leibler [13] all generalized Shannon’s entropy from different perspectives. Another interesting issue is the relationship between Shannon’s entropy and other complexity measures, on which research is rather limited. Lempel and Ziv proposed the so-called Lempel–Ziv complexity in [14] and analyzed its relationship to Shannon’s entropy. Liu revealed the relationship of Shannon’s entropy with the eigenvalue complexity and the nonlinear complexity in [15,16], respectively.

In 2002, Bandt proposed a natural complexity measure for time series called permutation entropy (PE) [17]. PE is easy to implement, is computationally much faster than comparable methods such as Lyapunov exponents, and is robust to noise [18], which has made it increasingly popular [19,20,21,22,23,24,25,26]. On the theoretical side, the authors of [19] proposed a generalized PE based on a recently postulated entropic form and compared it with the original PE. Fan et al. [20] proposed a multiscale PE as a new complexity measure of nonlinear time series, and the authors of [21] generalized it by introducing weights. Unakafova et al. [22] discussed the relationship between PE and Kolmogorov–Sinai entropy in the one-dimensional case. On the application side, the authors of [23] used PE to quantify the nonstationarity effect in vertical velocity records. Li et al. [24] investigated PE as a tool to predict absence seizures in genetic absence epilepsy rats from Strasbourg. Zunino et al. [25] identified the delay time of delay dynamical systems by using PE analysis. Mateos et al. [26] developed a PE method to characterize electrocardiograms and electroencephalographic records in the treatment of a chronic epileptic patient. PE is an interesting complexity measure based on Shannon’s entropy and can detect certain phenomena. However, this does not mean that PE goes beyond Shannon’s entropy or can completely replace it; it is only a supplement to Shannon’s entropy.

PE is a randomness measure for time series based on comparisons of neighboring values. This definition makes it difficult to apply to binary sequences: a binary sequence has only two kinds of symbols, “0” and “1”, so comparisons of neighboring values frequently yield equality rather than a strict inequality, and the order type of a window is then undefined. Almost all applications of PE concern real-valued time series.

In this paper, we generalize the PE measure to binary sequences. First, we propose a modified PE measure for binary sequences. Then, we analyze the theoretical value of PE for random binary sequences; this value can serve as one criterion for measuring the randomness of binary sequences. Next, we reveal the relationship between this PE measure and other randomness measures, namely Shannon’s entropy and Lempel–Ziv complexity. The results show that PE is consistent with these two measures. Finally, we use PE as one of the randomness measures to evaluate the randomness of chaotic binary sequences.

The rest of this paper is organized as follows. The modified PE for binary sequences and its theoretical analysis for random binary sequences are introduced in Section 2. The relationship between PE, Shannon’s entropy, and Lempel–Ziv complexity for random binary sequences is revealed in Section 3. In Section 4, we use this PE to measure the randomness of chaotic binary sequences. Finally, Section 5 concludes the paper.

2. PE and Its Theoretical Limitation for Random Binary Sequences

First, we briefly review the definition of PE for time series given in [17].

Example 1: Consider a time series with eight values x = (3 5 1 9 16 8 4 10). For order n = 2, we compare the seven pairs of neighbors: 3 < 5, 5 > 1, 1 < 9, 9 < 16, 16 > 8, 8 > 4, and 4 < 10. Four of the seven pairs satisfy $x_t < x_{t+1}$, which is represented by 01, and three of the seven satisfy $x_t > x_{t+1}$, which is represented by 10. The PE of order n = 2 is then −(4/7)log(4/7) − (3/7)log(3/7) ≈ 0.9852, where, here and throughout, log denotes the base-2 logarithm. For order n = 3, we compare the six triples of consecutive values, representing each triple by the ranks of its entries (0 for the smallest). (1 9 16) is represented by 012; (3 5 1) and (9 16 8) by 120; (5 1 9) and (8 4 10) by 102; and (16 8 4) by 210. The PE of order n = 3 is then −2(1/6)log(1/6) − 2(2/6)log(2/6) ≈ 1.9183. The definition of PE is as follows.

Definition 1 [17]:

Consider a time series $\{x_t\}_{t=1,\ldots,T}$. We study all $n!$ permutations $M$ of order $n$, which are considered here as possible order types of $n$ different numbers. For each $M$, we determine the relative frequency (# means number)

$$p(M) = \frac{\#\{t \mid 0 \le t \le T-n,\ (x_{t+1}, \ldots, x_{t+n}) \text{ is of type } M\}}{T-n+1}.$$

This estimates the frequency of $M$ as well as possible for a finite series of values. The permutation entropy of order $n \ge 2$ is defined as

$$H(n) = -\sum p(M) \log p(M),$$

where the sum runs over all $n!$ permutations $M$ of order $n$.

According to Definition 1, PE is a measure based on Shannon’s entropy. It supplements Shannon’s entropy and can detect some additional information.
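Definition 1 and Example 1 can be reproduced with a short Python sketch. The helper names `ordinal_pattern` and `permutation_entropy` are our own; patterns are encoded as rank tuples (0 for the smallest entry), as in Example 1, logarithms are base 2, and the sketch assumes there are no ties inside a window. It is an illustration under these assumptions, not the reference implementation of [17].

```python
import math
from collections import Counter

def ordinal_pattern(window):
    """Rank tuple of a window (0 = smallest), e.g. (3, 5, 1) -> (1, 2, 0)."""
    order = sorted(range(len(window)), key=lambda i: window[i])
    ranks = [0] * len(window)
    for rank, idx in enumerate(order):
        ranks[idx] = rank
    return tuple(ranks)

def permutation_entropy(x, n):
    """Base-2 PE of order n over all T - n + 1 windows (Definition 1)."""
    windows = [tuple(x[t:t + n]) for t in range(len(x) - n + 1)]
    counts = Counter(ordinal_pattern(w) for w in windows)
    total = len(windows)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

x = [3, 5, 1, 9, 16, 8, 4, 10]
print(f"{permutation_entropy(x, 2):.4f}")  # 0.9852
print(f"{permutation_entropy(x, 3):.4f}")  # 1.9183
```

Dividing by the number of windows, T − n + 1, is exactly the denominator of $p(M)$ in Definition 1.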

Example 2: Consider a time series with sixteen values x = (4 3 2 1 3 2 1 4 2 1 4 3 2 4 3 1). Each of the values 1, 2, 3, and 4 occurs four times, so x is uniformly distributed, and its Shannon entropy is −4(1/4)log(1/4) = 2, which equals the ideal value. In this sense, x is an ideal random sequence. Now we calculate the PE of order n = 3. (4 3 2), (3 2 1), (3 2 1), (4 2 1), (4 3 2), and (4 3 1) are represented by 210; (2 1 3), (2 1 4), (2 1 4), and (3 2 4) are represented by 102; and (1 3 2), (1 4 2), (1 4 3), and (2 4 3) are represented by 021. The PE of order n = 3 is then −(6/14)log(6/14) − 2(4/14)log(4/14) ≈ 1.5567, which is much lower than the PE of a completely random sequence, as shown below. This result indicates that x is not an ideal random sequence, since the permutations 012, 120, and 201 never appear, which is inconsistent with the result of Shannon’s entropy.

It is clear that for a completely random sequence, in which all n! possible permutations appear with the same probability, H(n) reaches its maximum value log n! (for n = 3, log 3! ≈ 2.585).
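Example 2 and the bound log n! can be cross-checked mechanically. The sketch below (helper names are ours, base-2 logarithms) computes Shannon’s entropy of x and counts its order-3 rank patterns using the rank convention of Example 1. Note that (1 3 2), (1 4 2), (1 4 3), and (2 4 3) all have the shape smallest, largest, middle, so they necessarily share one pattern, 021.

```python
import math
from collections import Counter

def shannon_entropy(values):
    """Base-2 Shannon entropy of the empirical symbol distribution."""
    counts = Counter(values)
    total = len(values)
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def ordinal_pattern(window):
    """Rank tuple of a window, 0 = smallest (Example 1 notation)."""
    order = sorted(range(len(window)), key=lambda i: window[i])
    ranks = [0] * len(window)
    for rank, idx in enumerate(order):
        ranks[idx] = rank
    return tuple(ranks)

def pattern_counts(x, n):
    """Count the rank patterns of all T - n + 1 windows of length n."""
    return Counter(ordinal_pattern(x[t:t + n]) for t in range(len(x) - n + 1))

x = [4, 3, 2, 1, 3, 2, 1, 4, 2, 1, 4, 3, 2, 4, 3, 1]
counts = pattern_counts(x, 3)
total = sum(counts.values())
pe = -sum(c / total * math.log2(c / total) for c in counts.values())

print(shannon_entropy(x))                     # 2.0
print(sorted(counts.items()))                 # [((0, 2, 1), 4), ((1, 0, 2), 4), ((2, 1, 0), 6)]
print(f"{pe:.4f}")                            # 1.5567
print(f"{math.log2(math.factorial(3)):.4f}")  # 2.5850
```

Only three of the six possible patterns occur, which is why the PE stays well below log 3! even though the symbol distribution is perfectly uniform.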

However, if the time series $\{x_t\}_{t=1,\ldots,T}$ is a binary sequence, containing only the two symbols “0” and “1”, the theory above no longer holds.

Let us consider the permutations M. Are there n! possible permutations in total? The answer is no. For example, for binary sequences consisting of two 0s and two 1s, the total number of distinct arrangements is 4!/(2!·2!) = 6, not 4! = 24, because the symbols repeat. Furthermore, for a completely random binary sequence, the possible permutations do not appear with the same probability. The total number of possible permutations and their probabilities are determined below, after the following definition of PE for binary sequences.

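Definition 2: Consider a binary sequence $\{s_t\}_{t=1,\ldots,T}$.

We study all permutations $M$ of order $n$, which are considered here as possible order types of $n$ different numbers. Assume that $s_{i_1} = s_{i_2} = \cdots = s_{i_k} = 0$ are the 0s and $s_{j_1} = s_{j_2} = \cdots = s_{j_p} = 1$ are the 1s in the sequence $\{s_t\}$, where $i_1 < i_2 < \cdots < i_k$, $j_1 < j_2 < \cdots < j_p$, the indices $i_1, \ldots, i_k, j_1, \ldots, j_p$ are different from each other, and $k + p = T$. We set $x_{i_l} = l$, $1 \le l \le k$, and $x_{j_m} = k + m$, $1 \le m \le p$; the binary sequence is thus transformed into a series of distinct integer values $\{x_t\}$. We calculate the relative frequency of each $M$ as

$$p(M) = \frac{\#\{t \mid 0 \le t \le T-n,\ (x_{t+1}, \ldots, x_{t+n}) \text{ is of type } M\}}{T-n+1}.$$

The permutation entropy of order $n \ge 2$ is defined as

$$H(n) = -\sum p(M) \log p(M),$$

where the sum runs over all permutations $M$ of order $n$.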

The main step in Definition 2 is to transform the binary sequence into a series of distinct integer values: the 0s are numbered 1, 2, …, k from left to right, and the 1s are numbered k + 1, …, T from left to right. For example, the sequence 000000 is transformed into 1 2 3 4 5 6, 100000 into 6 1 2 3 4 5, and 111000 into 4 5 6 1 2 3. The following example shows how PE is calculated by Definition 2.

Example 3: Consider the binary sequence with nine symbols 001010110. Its PE cannot be calculated by Definition 1 because of repeated symbols in the sequence (e.g., 00 and 11), which make neighboring values equal. Using Definition 2, this binary sequence is transformed into 1 2 6 3 7 4 8 9 5, in which no value is repeated. For order n = 3, we compare three consecutive values. (1 2 6) and (4 8 9) correspond to the permutation 123; (2 6 3) and (3 7 4) correspond to the permutation 132; (6 3 7) and (7 4 8) correspond to the permutation 213; and (8 9 5) corresponds to the permutation 231. The PE of order n = 3 is H(3) = −3(2/7)log(2/7) − (1/7)log(1/7) ≈ 1.9502.
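The transformation of Definition 2 and the calculation in Example 3 can be sketched in Python as follows. The function names are hypothetical; patterns are 1-based rank tuples to match the notation 123, 132, … of Example 3, and logarithms are base 2.

```python
import math
from collections import Counter

def binary_to_ranks(bits):
    """Definition 2: the l-th 0 (left to right) gets value l and the
    m-th 1 gets value k + m, where k is the number of 0s."""
    k = bits.count(0)
    zeros_seen = ones_seen = 0
    values = []
    for bit in bits:
        if bit == 0:
            zeros_seen += 1
            values.append(zeros_seen)
        else:
            ones_seen += 1
            values.append(k + ones_seen)
    return values

def rank_pattern(window):
    """1-based rank tuple, e.g. (2, 6, 3) -> (1, 3, 2)."""
    order = sorted(range(len(window)), key=window.__getitem__)
    ranks = [0] * len(window)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return tuple(ranks)

def binary_pe(bits, n):
    """Base-2 PE of order n of a binary sequence via Definition 2."""
    x = binary_to_ranks(bits)
    counts = Counter(rank_pattern(x[t:t + n]) for t in range(len(x) - n + 1))
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())

bits = [int(c) for c in "001010110"]
print(binary_to_ranks(bits))      # [1, 2, 6, 3, 7, 4, 8, 9, 5]
print(f"{binary_pe(bits, 3):.4f}")  # 1.9502
```

The same function reproduces the transformations listed after Definition 2; for instance, `binary_to_ranks([1, 1, 1, 0, 0, 0])` returns `[4, 5, 6, 1, 2, 3]`.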

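If $\{s_t\}$ is a completely random binary sequence, PE reaches its maximum value. Consider an infinitely long, completely random (i.i.d.) binary sequence in which 0s and 1s occur with probabilities $p_0$ and $p_1$, respectively, and $p_0 = p_1 = 0.5$. In this case, the total number of possible permutations $M$ is $2^n - n$, and their probabilities are

$$p(1) = \frac{n+1}{2^n}, \qquad p(2) = p(3) = \cdots = p(2^n - n) = \frac{1}{2^n},$$

where $p(1)$ is the probability of the permutation “12…n” and $p(2), p(3), \ldots, p(2^n - n)$ are the probabilities of the other possible permutations. The count follows from Definition 2: within any window, the 0s take the smallest values in left-to-right order and the 1s take the larger values in left-to-right order, so the $n + 1$ words $0^a 1^{n-a}$ ($a = 0, 1, \ldots, n$) all give “12…n”, while each of the remaining $2^n - n - 1$ words of length $n$ gives a distinct permutation. Substituting these probabilities into $H(n)$, we obtain

$$H(n) = -\frac{n+1}{2^n}\log\frac{n+1}{2^n} - \frac{2^n-n-1}{2^n}\log\frac{1}{2^n} = n - \frac{(n+1)\log(n+1)}{2^n}. \qquad (1)$$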

The value

is the maximum value of PE under order

n for binary sequence and stands for the completely random binary sequences. In other words, a binary sequence is random if its PE is close to

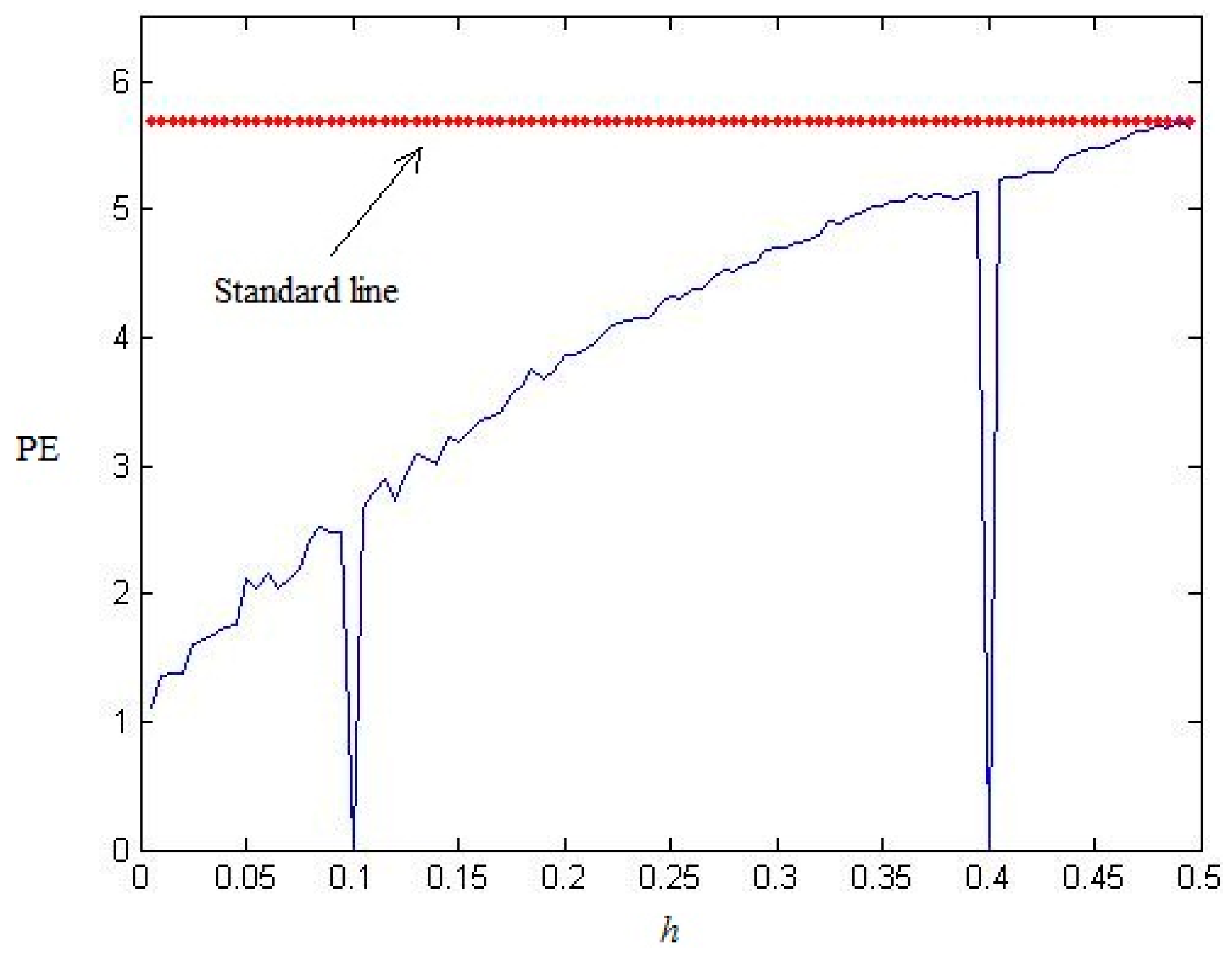

. We use the “rand” function in Matlab to generate 100 random binary sequences with

p0 =

p1 = 0.5; the PE values with different order

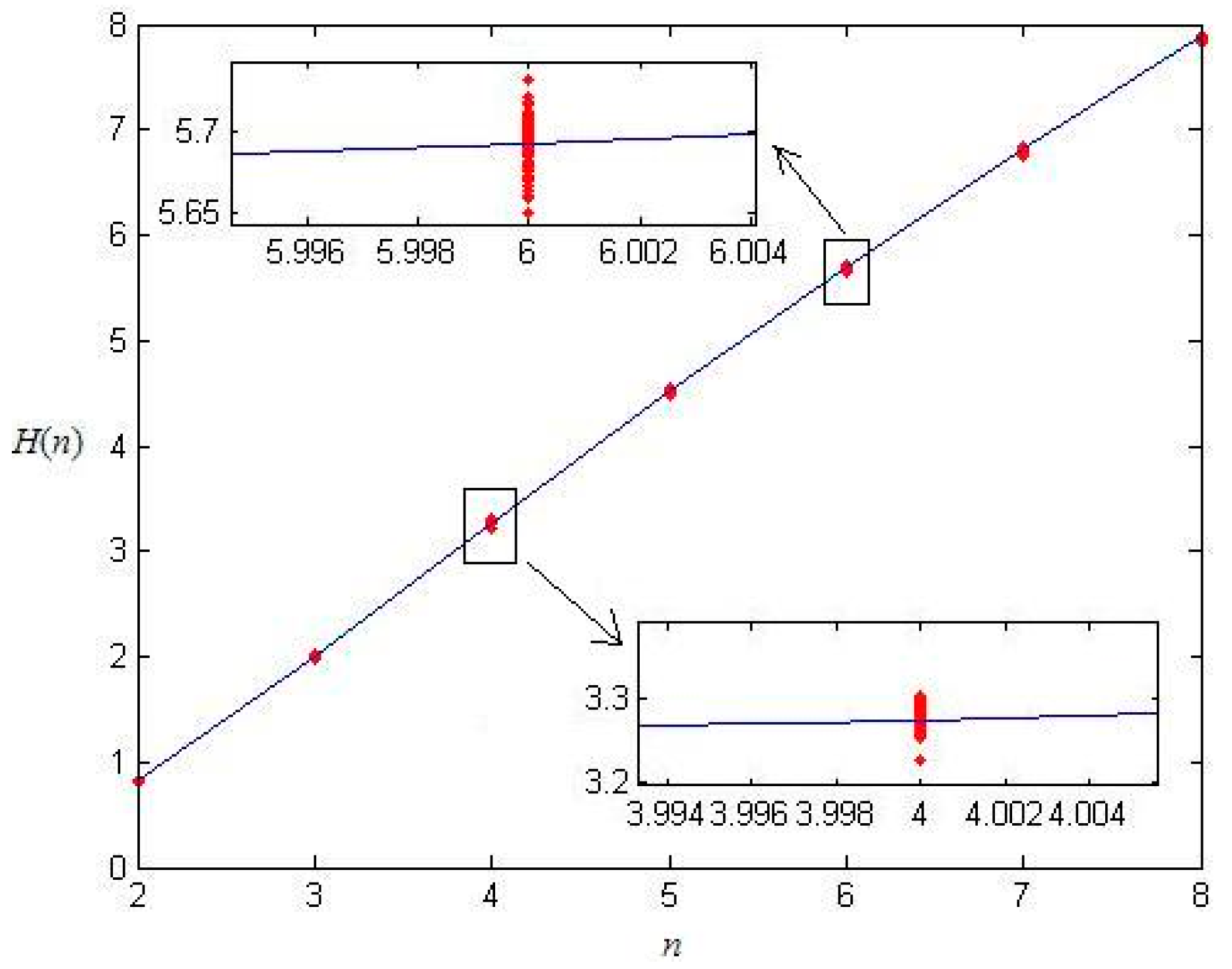

n are shown in

Figure 1. From

Figure 1, we find that all the PE values of these binary sequences (red dots in the figure) are close to the theoretical curve (blue line in the figure), which proves our theoretical result.
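The count $2^n - n$ and the closed form $n - (n+1)\log(n+1)/2^n$ can be checked by enumerating all $2^n$ equally likely words of length $n$, and the Monte Carlo experiment can be imitated in Python. This is a sketch under stated assumptions: it uses Python’s `random` module instead of Matlab’s `rand`, and the sequence length (2000) and seed are hypothetical choices, since they are not specified in the text. Function names are ours.

```python
import itertools
import math
import random
from collections import Counter

def binary_to_ranks(bits):
    """Definition 2: the l-th 0 (left to right) -> l, the m-th 1 -> k + m, k = #0s."""
    k = bits.count(0)
    zeros = ones = 0
    values = []
    for b in bits:
        if b == 0:
            zeros += 1
            values.append(zeros)
        else:
            ones += 1
            values.append(k + ones)
    return values

def rank_pattern(window):
    """1-based rank tuple of a window of distinct values."""
    order = sorted(range(len(window)), key=window.__getitem__)
    ranks = [0] * len(window)
    for r, i in enumerate(order, start=1):
        ranks[i] = r
    return tuple(ranks)

def entropy_from_counts(counts):
    """Base-2 Shannon entropy of a Counter of pattern frequencies."""
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def binary_pe(bits, n):
    """PE of order n of a binary sequence via Definition 2."""
    x = binary_to_ranks(bits)
    return entropy_from_counts(
        Counter(rank_pattern(x[t:t + n]) for t in range(len(x) - n + 1)))

def theoretical_pe(n):
    """Closed form n - (n + 1) log2(n + 1) / 2**n for p0 = p1 = 0.5."""
    return n - (n + 1) * math.log2(n + 1) / 2 ** n

def exact_counts(n):
    """Pattern of each of the 2**n equally likely words of length n."""
    return Counter(rank_pattern(binary_to_ranks(list(w)))
                   for w in itertools.product((0, 1), repeat=n))

def simulated_mean_pe(n, num_seqs=100, length=2000, seed=0):
    """Mean PE over num_seqs i.i.d. fair binary sequences (length, seed: assumptions)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_seqs):
        bits = [rng.getrandbits(1) for _ in range(length)]
        total += binary_pe(bits, n)
    return total / num_seqs

for n in range(2, 6):
    counts = exact_counts(n)
    print(n, len(counts), 2 ** n - n,
          f"{entropy_from_counts(counts):.4f}",
          f"{theoretical_pe(n):.4f}",
          f"{simulated_mean_pe(n):.4f}")
```

Each printed row lists n, the number of distinct patterns found by enumeration, $2^n - n$, the exact entropy, the closed form, and the simulated mean PE. The first two counts and the two exact values should agree, while the simulated mean should be close to them; plug-in entropy estimates from finite sequences are slightly biased downward.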

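Furthermore, we can generalize our result to a general random binary sequence with $p_0 \ne p_1$. In this case, the total number of possible permutations $M$ is still $2^n - n$, but their probabilities differ. The theoretical PE value can be written as

$$H(n) = -p(1)\log p(1) - \sum_{k=1}^{n-1}\left[\binom{n}{k} - 1\right] p_0^{k} p_1^{n-k}\log\left(p_0^{k} p_1^{n-k}\right), \qquad (2)$$

where $p(1) = \sum_{k=0}^{n} p_0^{k} p_1^{n-k}$ is the probability of the permutation “12…n”, and $\binom{n}{k} - 1$ is the number of other permutations arising from words with $k$ zeros, each of which has probability $p_0^{k} p_1^{n-k}$. If we set $p_0 = p_1 = 0.5$, then $p(1) = (n+1)/2^n$, and Equation (2) degenerates into Equation (1), since the following identity always holds:

$$\sum_{k=1}^{n-1}\left[\binom{n}{k} - 1\right] = 2^n - n - 1.$$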

Figure 1.

Permutation entropy (PE) of completely random binary sequences with different n.


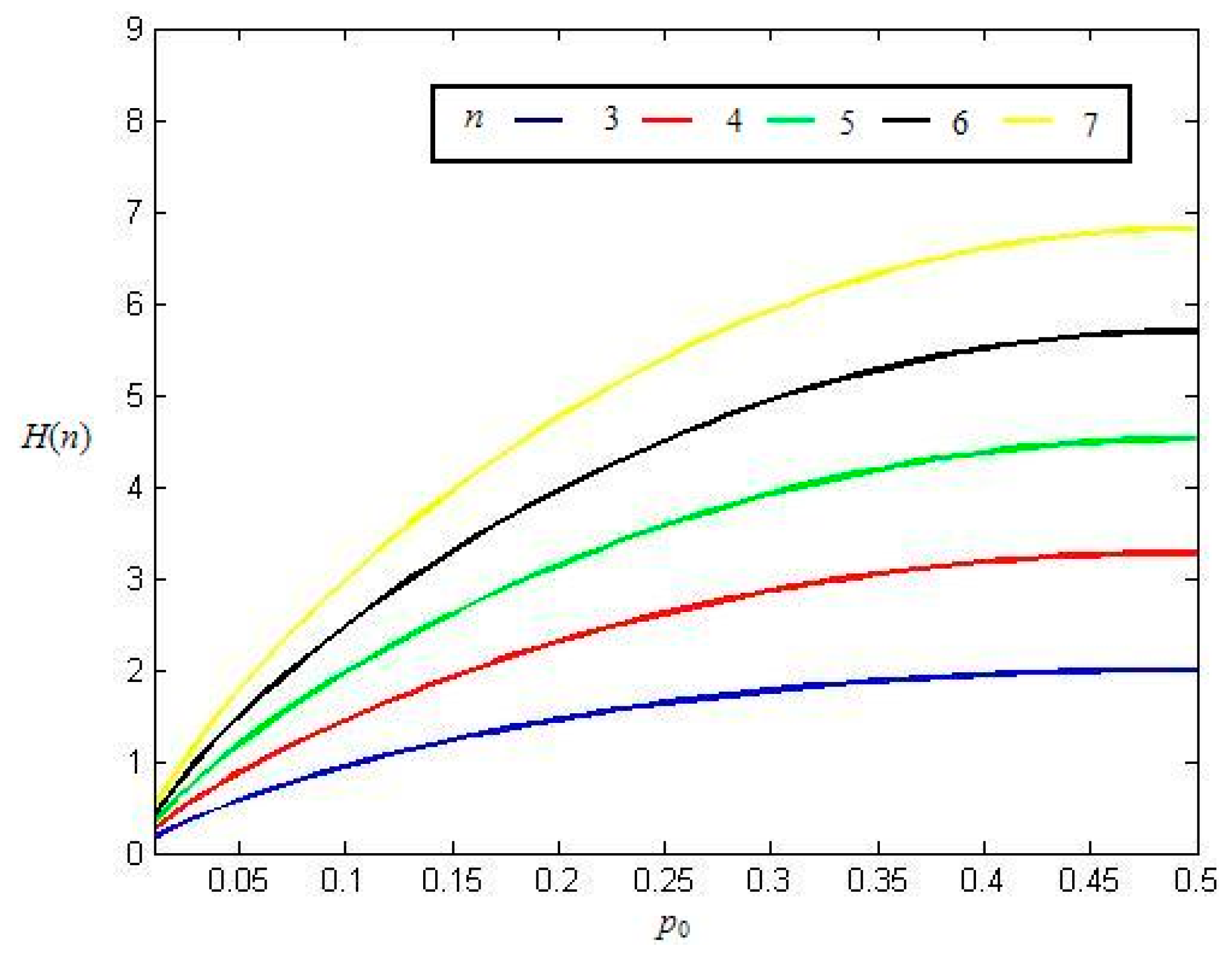

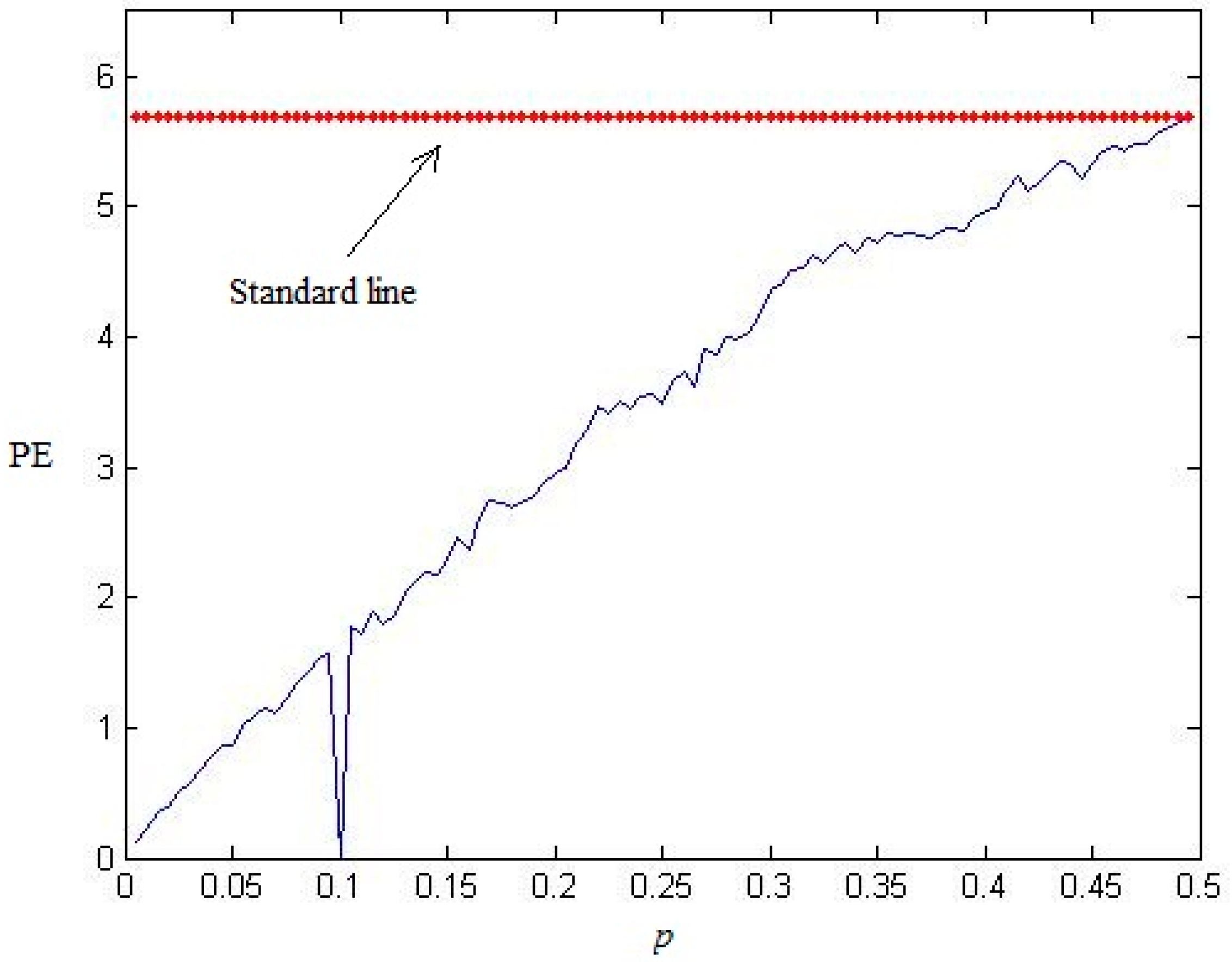

Figure 2 shows the relationship between H(n) and p0 for different orders n. From Figure 2, we can see that H(n) increases as p0 increases from 0 to 0.5, which is consistent with our intuition. Furthermore, the larger the order n, the larger H(n). For different n, the curves have similar shapes and differ only in magnitude. Therefore, we say that PE is robust to its order n.
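For the general case $p_0 \ne p_1$, the theoretical PE can be evaluated directly from the pattern probabilities implied by Definition 2: the permutation “12…n” collects the words $0^k 1^{n-k}$ and so has probability $\sum_{k=0}^{n} p_0^k p_1^{n-k}$, while each of the other $\binom{n}{k} - 1$ permutations coming from words with $k$ zeros has probability $p_0^k p_1^{n-k}$. The Python sketch below (hypothetical function names, base-2 logarithms) evaluates that formula, cross-checks it against brute-force enumeration of all words, and tabulates H(n) on a grid of p0 of the kind plotted in Figure 2.

```python
import itertools
import math
from collections import defaultdict

def xlog2x(p):
    """p * log2(p), with the usual convention 0 * log 0 = 0."""
    return p * math.log2(p) if p > 0 else 0.0

def theoretical_pe_general(n, p0):
    """Closed-form PE of order n for an i.i.d. binary source with P(0) = p0."""
    p1 = 1.0 - p0
    # Words 0^k 1^(n-k), k = 0..n, all map to the permutation 12...n.
    p_identity = sum(p0 ** k * p1 ** (n - k) for k in range(n + 1))
    h = -xlog2x(p_identity)
    for k in range(1, n):
        q = p0 ** k * p1 ** (n - k)  # probability of one word with k zeros
        h -= (math.comb(n, k) - 1) * xlog2x(q)
    return h

def pattern_of_word(word):
    """Definition 2 pattern of a word: 0s rank first, then 1s, each left to right."""
    zeros = [i for i, b in enumerate(word) if b == 0]
    ones = [i for i, b in enumerate(word) if b == 1]
    ranks = [0] * len(word)
    for r, i in enumerate(zeros + ones, start=1):
        ranks[i] = r
    return tuple(ranks)

def brute_force_pe(n, p0):
    """Sum word probabilities per pattern, then take the Shannon entropy."""
    probs = defaultdict(float)
    for word in itertools.product((0, 1), repeat=n):
        k = word.count(0)
        probs[pattern_of_word(word)] += p0 ** k * (1.0 - p0) ** (n - k)
    return -sum(xlog2x(p) for p in probs.values())

for n in (2, 4, 6):
    row = [theoretical_pe_general(n, p0) for p0 in (0.1, 0.2, 0.3, 0.4, 0.5)]
    print(n, " ".join(f"{h:.3f}" for h in row))
```

Because the formula is symmetric under $p_0 \leftrightarrow p_1$, evaluating $p_0 \in [0, 0.5]$ is enough to describe the whole curve.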

Figure 2.

The relationship between H(n) and p0 for different order n of random binary sequences.
