Calculation of Differential Entropy for a Mixed Gaussian Distribution

Abstract

1. Introduction

2. Conclusions

Acknowledgements

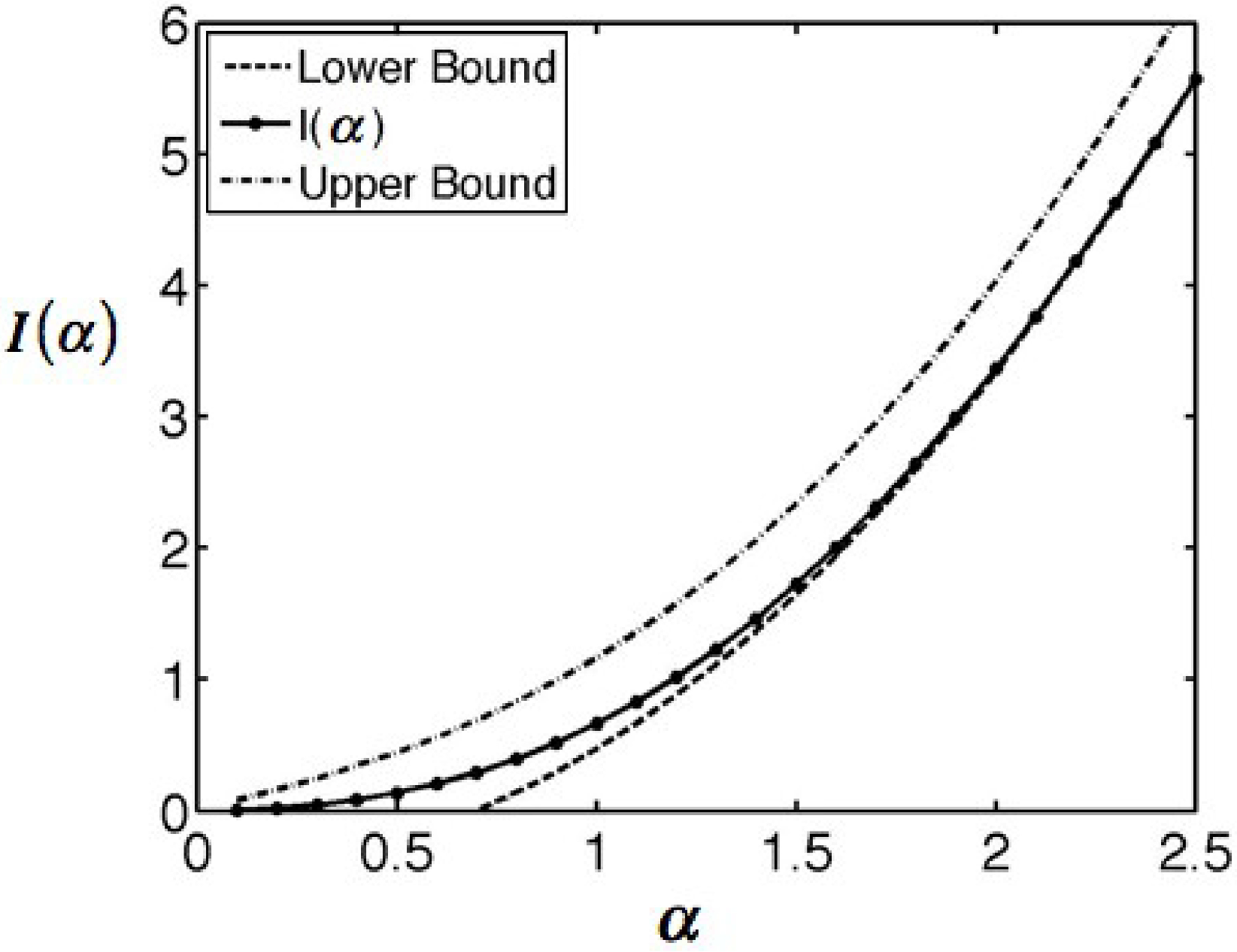

| α | I(α) | α² − I(α) | α | I(α) | α² − I(α) |
|---|---|---|---|---|---|
| 0.0 | 0.000 | 0.000 | | | |
| 0.1 | 0.005 | 0.005 | 2.1 | 3.765 | 0.645 |
| 0.2 | 0.020 | 0.020 | 2.2 | 4.185 | 0.656 |
| 0.3 | 0.047 | 0.043 | 2.3 | 4.626 | 0.664 |
| 0.4 | 0.086 | 0.074 | 2.4 | 5.089 | 0.671 |
| 0.5 | 0.139 | 0.111 | 2.5 | 5.574 | 0.676 |
| 0.6 | 0.207 | 0.153 | 2.6 | 6.080 | 0.680 |
| 0.7 | 0.292 | 0.198 | 2.7 | 6.607 | 0.683 |
| 0.8 | 0.396 | 0.244 | 2.8 | 7.154 | 0.686 |
| 0.9 | 0.519 | 0.291 | 2.9 | 7.722 | 0.688 |
| 1.0 | 0.663 | 0.337 | 3.0 | 8.311 | 0.689 |
| 1.1 | 0.829 | 0.381 | 3.1 | 8.920 | 0.690 |
| 1.2 | 1.018 | 0.422 | 3.2 | 9.549 | 0.691 |
| 1.3 | 1.230 | 0.460 | 3.3 | 10.198 | 0.692 |
| 1.4 | 1.465 | 0.495 | 3.4 | 10.868 | 0.692 |
| 1.5 | 1.723 | 0.527 | 3.5 | 11.558 | 0.692 |
| 1.6 | 2.005 | 0.555 | 3.6 | 12.267 | 0.693 |
| 1.7 | 2.311 | 0.579 | 3.7 | 12.997 | 0.693 |
| 1.8 | 2.640 | 0.600 | 3.8 | 13.747 | 0.693 |
| 1.9 | 2.992 | 0.618 | 3.9 | 14.517 | 0.693 |
| 2.0 | 3.367 | 0.633 | 4.0 | 15.307 | 0.693 |
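The tabulated values can be cross-checked numerically. The sketch below rests on assumptions that are not stated in the surviving text: that the mixed Gaussian is the equal-weight mixture of N(μ, σ²) and N(−μ, σ²), that α = μ/σ, and that the entropy in nats is ½ ln(2πeσ²) + (α² − I(α)), so that the third column is the excess over the entropy of a single Gaussian. The function names `mixed_gaussian_pdf` and `entropy_excess` are hypothetical, and the simple Riemann sum is only an illustrative choice of quadrature.

```python
import math


def mixed_gaussian_pdf(x, mu, sigma):
    """Assumed density: equal-weight mixture of N(mu, sigma^2) and N(-mu, sigma^2)."""
    norm = 1.0 / (2.0 * sigma * math.sqrt(2.0 * math.pi))
    left = math.exp(-0.5 * ((x + mu) / sigma) ** 2)
    right = math.exp(-0.5 * ((x - mu) / sigma) ** 2)
    return norm * (left + right)


def entropy_excess(alpha, n=20001, half_width=12.0):
    """Estimate h(X) - 0.5*ln(2*pi*e*sigma^2) in nats, taking sigma = 1 and mu = alpha.

    Under the stated assumptions this should match the alpha^2 - I(alpha) column.
    """
    sigma = 1.0
    lo = -(alpha + half_width)
    hi = alpha + half_width
    dx = (hi - lo) / (n - 1)
    h = 0.0
    for i in range(n):
        x = lo + i * dx
        p = mixed_gaussian_pdf(x, alpha, sigma)
        if p > 0.0:  # skip underflowed tail values, where p*ln(p) tends to 0
            h -= p * math.log(p) * dx
    return h - 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)


if __name__ == "__main__":
    # A few rows copied from the table: alpha -> alpha^2 - I(alpha)
    tabulated = {0.5: 0.111, 1.0: 0.337, 2.0: 0.633, 4.0: 0.693}
    for alpha, value in tabulated.items():
        print(f"alpha={alpha:.1f}  numeric={entropy_excess(alpha):.3f}  table={value:.3f}")
```

Under these assumptions the excess starts at 0 for α = 0, where the two components coincide, and levels off near ln 2 ≈ 0.693 once the components are well separated, which agrees with the last rows of the table.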

© 2008 by the authors. Licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Michalowicz, J.V.; Nichols, J.M.; Bucholtz, F. Calculation of Differential Entropy for a Mixed Gaussian Distribution. Entropy 2008, 10, 200-206. https://doi.org/10.3390/entropy-e10030200
