Panchromatic Image Super-Resolution Via Self Attention-Augmented Wasserstein Generative Adversarial Network

Abstract

1. Introduction

- (1)

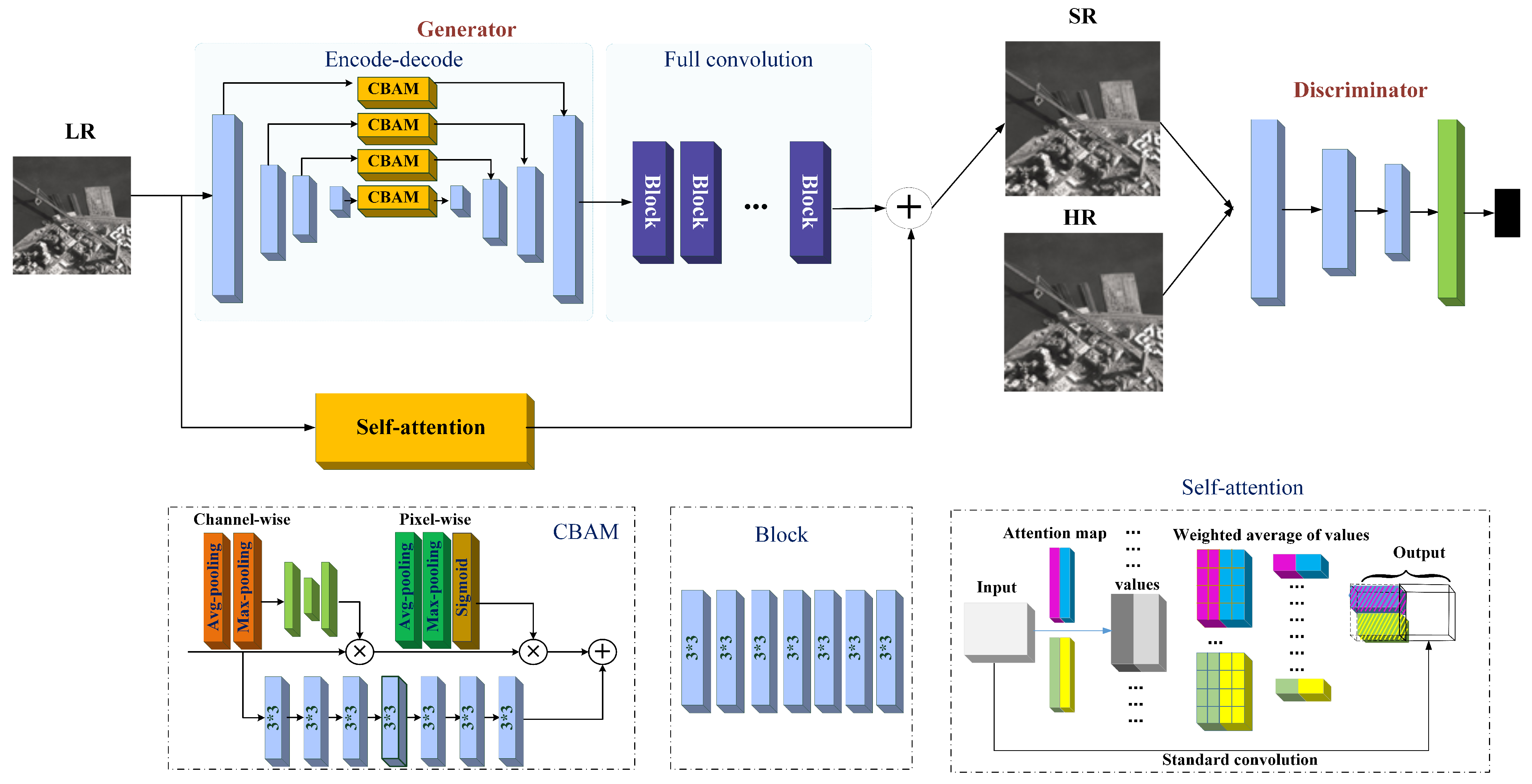

- We propose a WGAN-based network (SAA-WGAN) for PAN image SR, which is integrated with the encode-decode structure and CBAM.

- (2)

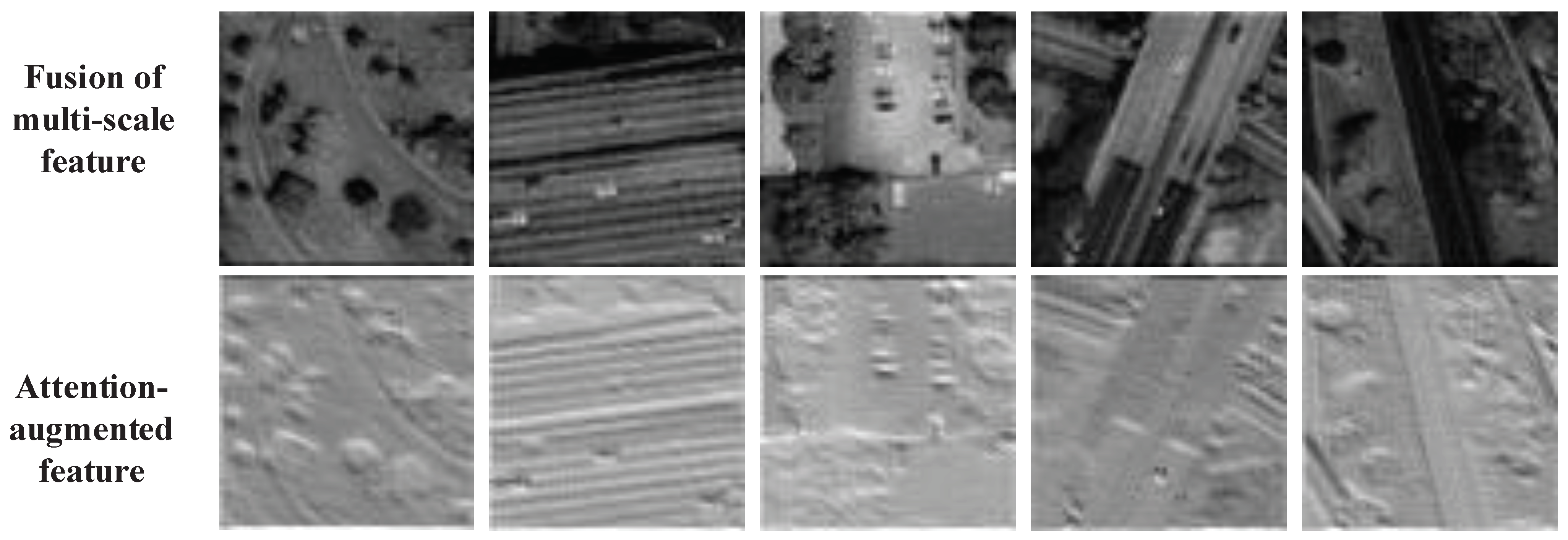

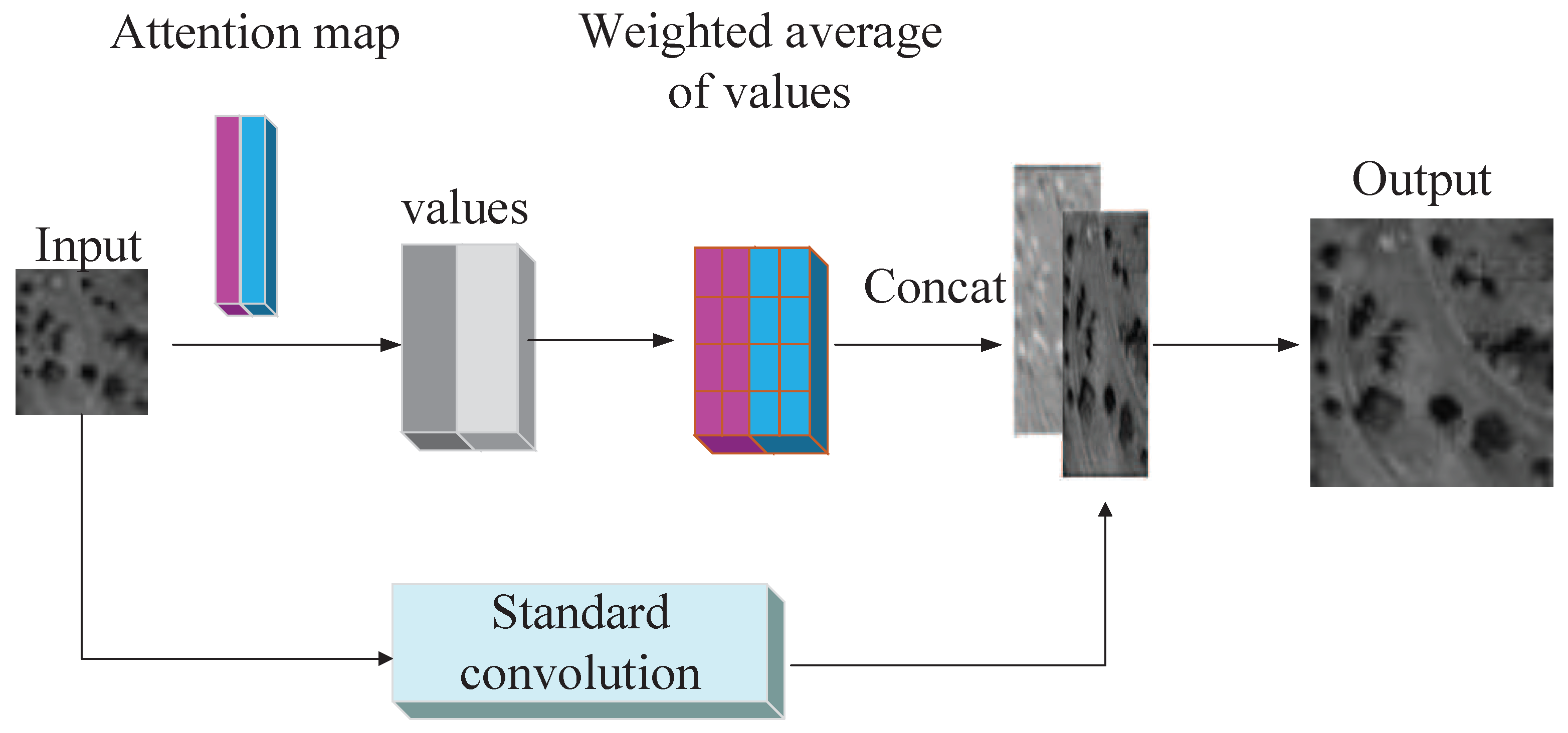

- We apply the self-attention module into the WGAN network, from which the long-range features can be well preserved and transferred.

- (3) The generator loss combines pixel loss, perceptual loss, and gradient loss, so that training is supervised in terms of both image quality and visual effect.

- (4) Extensive evaluations have been conducted to verify the above contributions.
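To make the idea of long-range feature preservation in contribution (2) concrete, the following is a minimal sketch of generic scaled dot-product self-attention over the flattened positions of a feature map. It is not the SAA convolution of this work: the projection matrices `w_q`, `w_k`, `w_v`, the residual addition, and all function names are assumptions introduced only for illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Scaled dot-product self-attention over N tokens with C channels.

    tokens: N x C list (spatial positions of a feature map, flattened).
    w_q, w_k: C x D projections; w_v: C x C projection (hypothetical weights).
    Returns (output N x C, attention weights N x N).
    """
    q = matmul(tokens, w_q)
    k = matmul(tokens, w_k)
    v = matmul(tokens, w_v)
    d = len(w_q[0])
    k_t = [list(col) for col in zip(*k)]          # transpose K
    scores = matmul(q, k_t)                       # similarity of every position pair
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    attended = matmul(weights, v)                 # mix features from all positions
    # Assumed residual connection: local feature plus long-range context.
    out = [[t + a for t, a in zip(t_row, a_row)]
           for t_row, a_row in zip(tokens, attended)]
    return out, weights

# Toy usage: a 2x2 feature map with 2 channels, flattened to 4 tokens.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
out, weights = self_attention(tokens, identity, identity, identity)
```

Each row of `weights` sums to one, so every output position is a weighted mixture of all positions in the map rather than of a local neighborhood only, which is the property that lets distant structures influence each other.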

2. Related Work

2.1. Generative Adversarial Networks

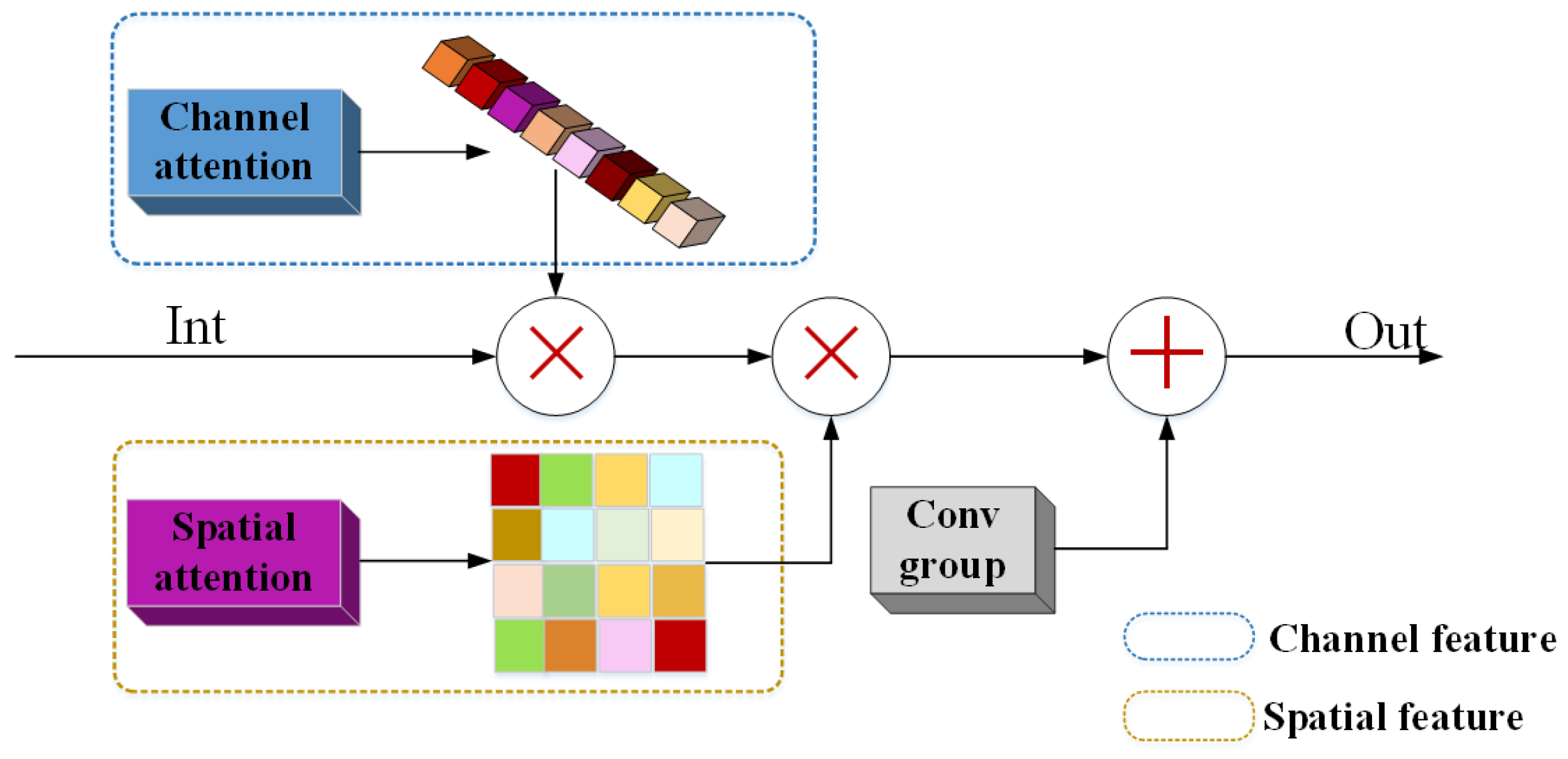

2.2. Attention Features

3. Method

3.1. Architecture

3.2. Loss function
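As stated in the contributions, the generator loss combines pixel loss, perceptual loss, and gradient loss. The sketch below shows one way such a weighted sum could be assembled for single-channel images. Everything in it is an assumption made for illustration: the weights `w_pixel`, `w_perceptual`, `w_gradient`, the use of mean squared error for each term, the forward-difference gradients, and in particular `toy_features`, which is a hypothetical stand-in for a pretrained feature extractor. Any adversarial term is omitted.

```python
def mse(a, b):
    """Mean squared error between two equally sized 2-D images (lists of rows)."""
    n = len(a) * len(a[0])
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n

def gradients(img):
    """Forward-difference horizontal and vertical gradients of an image."""
    gx = [[row[j + 1] - row[j] for j in range(len(row) - 1)] for row in img]
    gy = [[img[i + 1][j] - img[i][j] for j in range(len(img[0]))]
          for i in range(len(img) - 1)]
    return gx, gy

def gradient_loss(sr, hr):
    """Penalize differences between the edge maps of the two images."""
    sr_gx, sr_gy = gradients(sr)
    hr_gx, hr_gy = gradients(hr)
    return mse(sr_gx, hr_gx) + mse(sr_gy, hr_gy)

def toy_features(img):
    """Hypothetical feature extractor: non-overlapping 2x2 average pooling."""
    return [[(img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4.0
             for j in range(0, len(img[0]) - 1, 2)]
            for i in range(0, len(img) - 1, 2)]

def perceptual_loss(sr, hr, features=toy_features):
    """Compare the images in feature space instead of pixel space."""
    return mse(features(sr), features(hr))

def generator_loss(sr, hr, w_pixel=1.0, w_perceptual=1.0, w_gradient=1.0):
    """Weighted sum of the three terms; the weights are illustrative."""
    return (w_pixel * mse(sr, hr)
            + w_perceptual * perceptual_loss(sr, hr)
            + w_gradient * gradient_loss(sr, hr))

# Toy usage: a checkerboard target and a uniformly gray (blurry) estimate.
hr = [[0.0, 1.0, 0.0, 1.0],
      [1.0, 0.0, 1.0, 0.0],
      [0.0, 1.0, 0.0, 1.0],
      [1.0, 0.0, 1.0, 0.0]]
sr = [[0.5] * 4 for _ in range(4)]
loss = generator_loss(sr, hr)
```

In this toy case the pixel term is small and the pooled-feature term vanishes, while the gradient term dominates because the gray estimate has lost every edge. This illustrates why a gradient term can complement a pixel term when sharp structure matters.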

4. Experimental Evaluation

4.1. Implementation Details

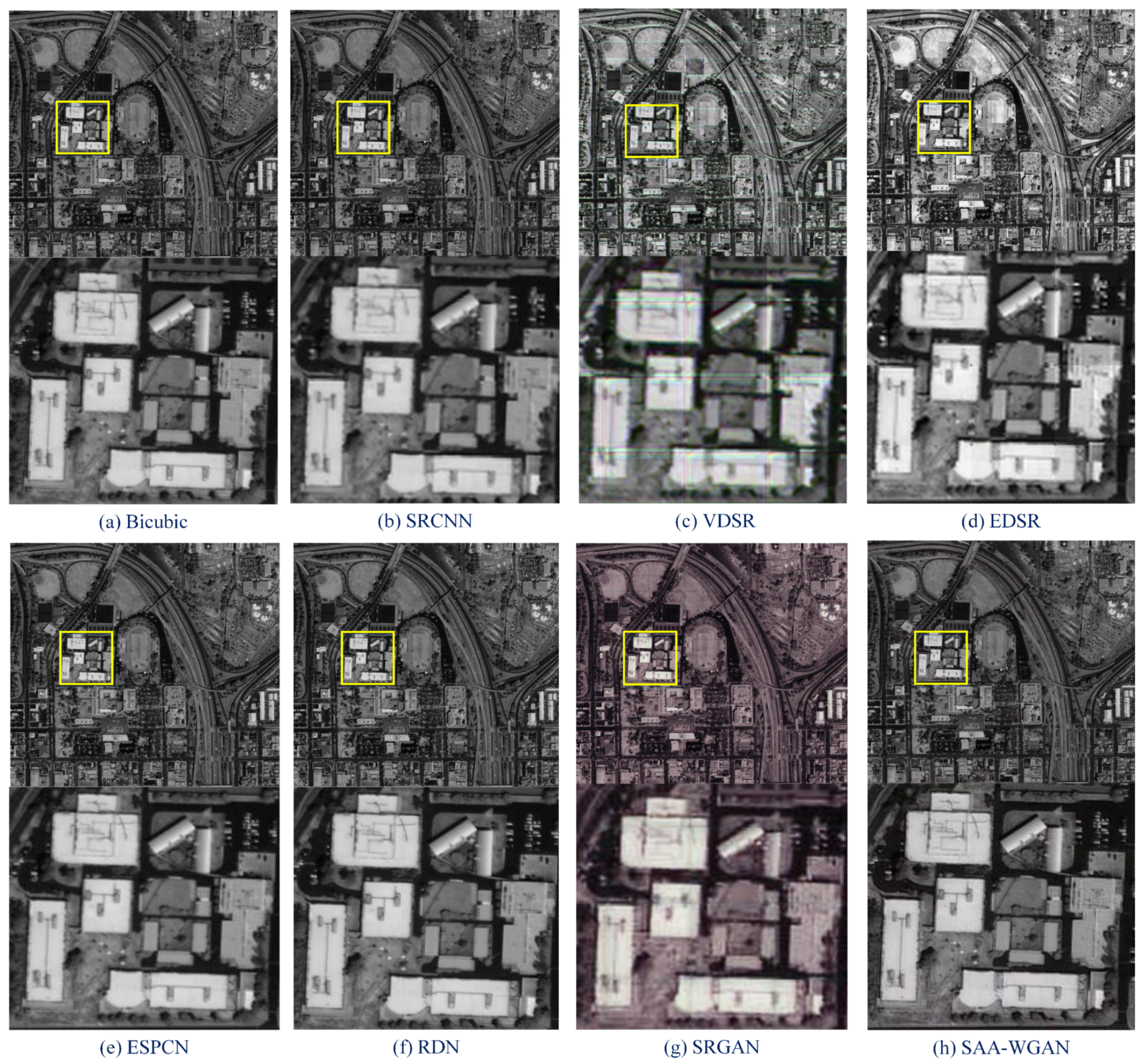

4.2. Comparisons and Results

- Comparison test between the ablated model without SAA convolution and the full SAA-WGAN.

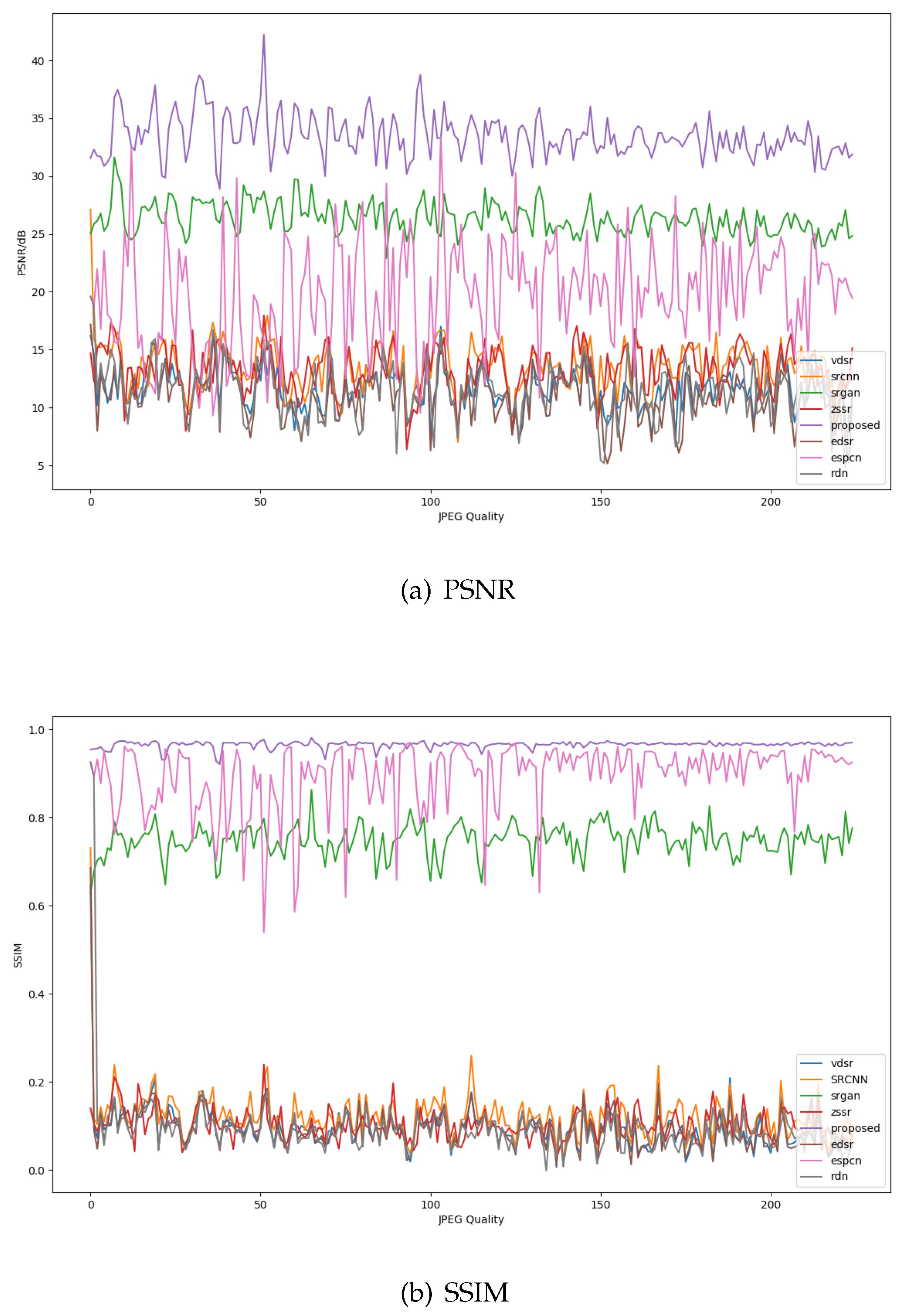

- Comparison test on the DOTA and GEO PAN datasets, with PSNR and SSIM as evaluation indices on the GEO images.

- Comparison with benchmark networks on classic datasets, including Set5, Set14, BSD100, and Urban100.
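The comparisons above are reported in PSNR and SSIM. For reference, PSNR follows the standard definition $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, which can be sketched as below. The function name `psnr` and the default peak value of 255 (8-bit images) are assumptions. The evaluation protocol behind the reported numbers, such as border cropping or the channel used, is not specified here and is not reproduced.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    n = len(reference) * len(reference[0])
    mse = sum((r - e) ** 2
              for row_r, row_e in zip(reference, estimate)
              for r, e in zip(row_r, row_e)) / n
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Toy usage: the estimate is off by one gray level at every pixel.
reference = [[10.0, 20.0], [30.0, 40.0]]
estimate = [[11.0, 21.0], [31.0, 41.0]]
value = psnr(reference, estimate)
```

Because PSNR is logarithmic in the mean squared error, halving the error adds roughly 3 dB, which helps in reading the gaps between methods in the result tables.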

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Loss term 1 | Loss term 2 | Loss term 3 | PSNR |
|---|---|---|---|---|
| 1 | ✓ | ✕ | ✕ | 32.23 |
| 2 | ✕ | ✓ | ✓ | 32.37 |
| 3 | ✓ | ✓ | ✕ | 32.45 |
| 4 | ✓ | ✕ | ✓ | 32.71 |
| 5 | ✓ | ✓ | ✓ | 33.29 |

| Method | Scale | Set5 PSNR | Set5 SSIM | Set14 PSNR | Set14 SSIM | BSD100 PSNR | BSD100 SSIM | Urban100 PSNR | Urban100 SSIM |
|---|---|---|---|---|---|---|---|---|---|
| Bicubic | ×2 | 33.66 | 0.9299 | 30.24 | 0.8688 | 29.56 | 0.8431 | 26.88 | 0.8403 |
| SRCNN | ×2 | 36.66 | 0.9542 | 32.45 | 0.9067 | 31.36 | 0.8879 | 29.50 | 0.8946 |
| SCN | ×2 | 37.05 | 0.9576 | 33.17 | 0.9120 | 31.56 | 0.8923 | 30.32 | 0.9021 |
| RDN | ×2 | 38.24 | 0.9614 | 34.01 | 0.9212 | 32.34 | 0.9017 | 32.89 | 0.9353 |
| VDSR | ×2 | 37.53 | 0.9590 | 33.05 | 0.9130 | 31.90 | 0.8960 | 30.77 | 0.9140 |
| EDSR | ×2 | 38.11 | 0.9602 | 33.92 | 0.9195 | 32.32 | 0.9013 | 32.93 | 0.9351 |
| LapSRN | ×2 | 37.52 | 0.9591 | 33.08 | 0.9130 | 31.05 | 0.8950 | 30.41 | 0.9101 |
| RCAN | ×2 | 38.27 | 0.9614 | 34.12 | 0.9216 | 32.41 | 0.9027 | 33.34 | 0.9384 |
| SAA-WGAN | ×2 | 38.34 | 0.9733 | 34.71 | 0.9310 | 33.91 | 0.9130 | 34.53 | 0.9453 |
| Bicubic | ×4 | 28.42 | 0.8104 | 26.00 | 0.7027 | 25.96 | 0.6675 | 23.14 | 0.6577 |
| SRCNN | ×4 | 30.48 | 0.8628 | 27.50 | 0.7513 | 26.90 | 0.7101 | 24.52 | 0.7221 |
| DRCN | ×4 | 31.45 | 0.8714 | 28.00 | 0.7677 | 27.14 | 0.7312 | 25.67 | 0.7556 |
| RDN | ×4 | 32.47 | 0.8990 | 28.81 | 0.7871 | 27.72 | 0.7419 | 26.61 | 0.8028 |
| VDSR | ×4 | 31.35 | 0.8830 | 28.02 | 0.7680 | 27.29 | 0.7260 | 25.18 | 0.7540 |
| EDSR | ×4 | 32.46 | 0.8968 | 28.80 | 0.7876 | 27.71 | 0.7420 | 26.64 | 0.8033 |
| LapSRN | ×4 | 31.45 | 0.8850 | 28.19 | 0.7720 | 27.32 | 0.7270 | 25.21 | 0.7560 |
| RCAN | ×4 | 32.73 | 0.9013 | 28.98 | 0.7910 | 27.85 | 0.7455 | 27.10 | 0.8142 |
| SAA-WGAN | ×4 | 33.03 | 0.9115 | 29.45 | 0.8110 | 29.65 | 0.9101 | 28.53 | 0.8372 |
| Bicubic | ×8 | 24.40 | 0.6580 | 23.10 | 0.5660 | 23.67 | 0.5480 | 20.74 | 0.5167 |
| SRCNN | ×8 | 25.33 | 0.6900 | 24.13 | 0.5660 | 21.29 | 0.5440 | 22.46 | 0.6950 |
| SCN | ×8 | 25.59 | 0.7071 | 24.02 | 0.6028 | 24.30 | 0.5698 | 21.52 | 0.5571 |
| VDSR | ×8 | 25.93 | 0.7240 | 24.26 | 0.6140 | 24.49 | 0.5830 | 21.70 | 0.5710 |
| EDSR | ×8 | 26.96 | 0.7762 | 24.91 | 0.6420 | 24.81 | 0.5985 | 22.51 | 0.6221 |
| LapSRN | ×8 | 26.15 | 0.7380 | 24.35 | 0.6200 | 24.54 | 0.5860 | 21.81 | 0.5810 |
| DRRN | ×8 | 24.87 | 0.8290 | 24.81 | 0.7734 | 20.79 | 0.7968 | 21.84 | 0.7896 |
| RCAN | ×8 | 27.31 | 0.7878 | 25.23 | 0.6511 | 24.98 | 0.6058 | 23.00 | 0.6452 |
| SAA-WGAN | ×8 | 26.17 | 0.8338 | 25.33 | 0.7742 | 27.97 | 0.8816 | 24.97 | 0.8224 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Du, J.; Cheng, K.; Yu, Y.; Wang, D.; Zhou, H. Panchromatic Image Super-Resolution Via Self Attention-Augmented Wasserstein Generative Adversarial Network. Sensors 2021, 21, 2158. https://doi.org/10.3390/s21062158
