1. Introduction

User-generated content such as product and app reviews is not only indicative of customer satisfaction but also rich in emotional expression, offering insight into users’ experiences, frustrations and expectations. Traditional sentiment analysis techniques typically categorize textual data into broad polarity labels such as “positive”, “negative” or “neutral” [1,2]. While this approach offers a general indication of customer satisfaction, it often lacks the granularity required to capture the complex emotional dimensions that underpin consumer attitudes and behaviors. Emotion detection, by contrast, enables a more nuanced understanding by identifying specific emotional states and uncovering the underlying motivations and expectations that drive customer responses. This added depth is essential for comprehensively analyzing consumer feedback, informing strategic marketing decisions and enhancing brand perception. Understanding emotions like joy, anger, sadness, fear, love and surprise provides a more granular and actionable perspective on customer feedback. For instance, anger may highlight critical product defects or service failures, while joy can signal effective value propositions or successful user experiences. This emotional granularity enables e-commerce platforms and app developers to go beyond reactive customer service and instead engage in proactive strategy design, enhancing product features, customizing marketing campaigns and improving user interfaces based on emotional cues [3,4].

Additionally, extracting emotions from reviews, rather than simply classifying them as positive or negative, provides a richer and more actionable understanding of customer experiences [5]. Emotions such as anger, joy, sadness, fear or surprise offer more nuanced insights that are essential for businesses seeking to understand and respond to user sentiment effectively [6]. For example, two reviews labeled as negative might stem from entirely different emotional contexts, one expressing anger over a defective product and the other conveying sadness about a disappointing experience. Each of these emotions implies different expectations and demands a different response strategy [7].

Emotion detection also enables more effective customer segmentation and personalization. Customers who express joy or love in their reviews can be targeted with loyalty programs or promotional offers, while those who show signs of fear or frustration might benefit from proactive customer support. These insights help customize communication and improve retention [8]. Moreover, analyzing emotional patterns in user reviews informs product development and user experience (UX) design. Identifying recurring feelings of disappointment or delight associated with specific features helps teams focus on what truly matters to users. For instance, joy related to intuitive design usually validates UX decisions, while frustration over delays or bugs can guide prioritization in development. Beyond marketing and design, emotion-aware systems are also increasingly valuable in AI applications. Virtual assistants, recommendation engines and customer support bots all benefit from the ability to interpret user emotions, enabling more empathetic and context-sensitive interactions [9,10]. While traditional sentiment analysis provides a broad overview, extracting emotions uncovers the deeper, more specific user experiences behind reviews. This emotional granularity empowers businesses to make more empathetic and effective decisions in the field of e-commerce [11].

One of the contributions of the current research consists of leveraging fine-tuned transformer models, specifically DistilBERT, to perform emotion classification on customer reviews. This approach allows us to extract high-level semantic features from text and classify them with high accuracy, even in the presence of informal language, slang or typographical errors common in online reviews. By applying this methodology, we aim to demonstrate how emotion-aware analytics significantly enhance decision-making in the e-commerce ecosystem, driving both customer satisfaction and business performance.

In this research, we apply emotion classification to customer reviews in order to gain more fine-grained insights from their feedback on products and applications. We use the emotions dataset (https://huggingface.co/datasets/dair-ai/emotion, accessed on 15 May 2025) available through the Hugging Face Hub (HFH) [12]. In the initial phase, the review texts are tokenized and passed through a pre-trained DistilBERT model (distilbert-base-uncased), which is used as a feature extractor. The extracted features are then used to train several traditional classifiers implemented via the scikit-learn library, including Logistic Regression (LR), Support Vector Classification (SVC) and Random Forest (RF). The performance of each model is evaluated to establish a baseline.

In the second phase, the same pre-trained transformer is fine-tuned end-to-end using different training strategies, allowing the model to adapt its internal representations to the specific task of emotion classification. Fine-tuning the hidden states that serve as inputs to the classifier helps mitigate issues that arise when pre-trained features are not well-aligned with the downstream task. The training process leverages the Weights & Biases (wandb) API for experiment tracking and logging. For this phase, we use AutoModelForSequenceClassification to place a classification head on top of the pre-trained model, allowing the model to learn both feature representations and classification jointly. The performance of the fine-tuned models is compared to that of the baseline classifiers, with special attention given to the impact of different training configurations provided by the Hugging Face Trainer API. Finally, the best-performing model is applied to a corpus of real-world customer reviews (sealuzh/app_reviews, https://huggingface.co/datasets/sealuzh/app_reviews, accessed on 16 May 2025) [13] to identify the predominant emotions expressed, providing deeper insights into UX and product perception.

To the best of our knowledge, this two-phase framework, combining feature-based baseline evaluation with strategically fine-tuned transformer models for emotion classification on real-world customer feedback, is original in its design and has not been previously applied in this specific configuration within the context of e-commerce analytics.

2. Literature Review

Emotion extraction from customer reviews has emerged as an important area within Natural Language Processing (NLP), sentiment analysis and affective computing [14,15]. This section presents a comprehensive review of the existing literature on methods and models developed to identify and classify emotions in customer-generated reviews. It explores foundational theories of emotion representation, the evolution of rule-based and machine learning approaches and the growing adoption of deep learning and transformer-based architectures. Additionally, it highlights key application domains such as e-commerce, healthcare [16], hospitality [17,18], the airline industry [19], energy and telecommunication and social media, where emotion mining contributes to UX management and targeted marketing strategies.

Grasping customer emotions and preferences is important for driving success in product design [20]. The cited research focuses on analyzing customer reviews of eco-friendly products by developing a prediction pipeline that performs both aspect detection and sentiment analysis. Two advanced pre-trained language models, BERT and T5, are fine-tuned using a mix of synthetic and manually labeled datasets to identify specific product features (aspects) mentioned in the reviews and to classify the associated sentiments as positive, negative or neutral. Experimental results show that both models perform well, with BERT slightly outperforming T5 (92% accuracy versus 91%). BERT also demonstrates superior performance across other evaluation metrics such as precision, recall, F1-score and computational efficiency, leading to its selection for the final prediction pipeline.

Analyzing customer satisfaction through online reviews has also emerged as an innovative and valuable approach [21]. The cited study analyzes hotel customer satisfaction using online reviews from Ctrip.com. By applying TF-IDF and K-means clustering, the researchers identify 10 factors influencing satisfaction: epidemic prevention, consumption emotion, convenience, environment, facilities, catering, target group, perceived value, price and service. Using a backpropagation neural network, they assess each factor’s impact. The findings highlight that consumption emotion, perceived value, epidemic prevention, target group and convenience significantly influence satisfaction, especially epidemic prevention, a newly recognized factor. Another study aims to deepen the analysis of customer emotions in hotel reviews and develop a machine learning-based model to detect and classify emotional states [22]. Using 80,593 Vietnamese-language reviews from Agoda and Booking.com (2009–2022), the research proposes a data mining and emotion detection framework. The model categorizes emotions into four states (happy, angry, depressed and hopeful), achieving up to 81% exact match, 90% precision and 90% recall. Moreover, Sutoyo et al. [23] emphasize the importance of recognizing emotions in communication, particularly within online product reviews, which significantly influence purchasing decisions. Given the scarcity of emotion-labeled datasets in local languages, the research contributes a curated dataset of 5400 Indonesian-language product reviews spanning 29 product categories. Each review is annotated with one of five distinct emotions and verified by a clinical psychology expert. The proposed dataset lays the foundation for developing machine or deep learning models to automatically classify emotions, enabling more effective emotion recognition in local-language product reviews. Additionally, Punetha and Jain [24] introduce a novel unsupervised sentiment classification model to analyze both sentiments and emotions in restaurant reviews, aiming to improve customer satisfaction. Unlike traditional machine learning methods, which require large, labeled datasets and high computational resources, the proposed model uses a mathematical optimization framework that is domain- and language-independent. It was tested on two datasets and achieved state-of-the-art performance, validated through statistical analysis. Furthermore, Wu and Gao [25] explore Emotional Customer Experience (ECX) by identifying emotions, their triggers and constructors, and the associated co-creation behaviors during hotel service interactions. Using appraisal theory and thematic analysis on 1063 TripAdvisor reviews of luxury hotels in Ireland, the research reveals that multiple emotions can arise from a single service interaction. The study identifies three co-creation behaviors (reinforcing intention, active and resourceful behaviors) that contribute to a more positive ECX.

Another study investigates the qualitative aspects of user-generated content to understand and predict customers’ brand attitudes based on 10,000 TripAdvisor reviews [26]. By applying logistic regression, artificial neural networks and structural modeling, the research identifies which features (sentiment, emotion or parts of speech) are most predictive. The findings show that sentiment is the strongest predictor of brand attitude: positive sentiment and content polarity are positively associated with favorable brand attitudes, while negative high- and low-arousal emotions correlate negatively. In the e-commerce market, Rashid et al. [27] focus on understanding consumer sentiment in the Bangladeshi market through a richly annotated dataset from Daraz and Pickaboo, comprising Bengali and English reviews. The reviews span a wide array of products and express emotions both textually and via emojis. Expert annotators classified each review into one of five emotions (happiness, sadness, fear, anger and love) and grouped them under positive (happiness, love) and negative (sadness, anger, fear) sentiment categories. Another study investigates how three discrete emotions (anger, fear and sadness) influence the perceived helpfulness of online reviews, using verified purchase reviews from Amazon.com [28]. The research reveals that product type plays a moderating role in shaping the impact of these emotions. Anger embedded in customer reviews tends to reduce perceived helpfulness more significantly for experience goods than for search goods. In contrast, fear is shown to positively influence perceived helpfulness, likely because it conveys more persuasive and cautionary messages. On the other hand, as the level of sadness in a review increases, its perceived helpfulness tends to decline.

The issue of information asymmetry in e-commerce was addressed by examining the emotional content of online customer reviews as a potential signal of product quality [29]. Drawing on signaling theory, the authors propose a model that also considers the moderating roles of perceived empathy and cognitive effort. Through a controlled laboratory experiment involving 120 participants, divided equally into two treatment groups, the research uses ANOVA, linear regression and binary logistic regression to test its hypotheses. The results show that the emotional content of reviews significantly enhances perceived product quality, which in turn positively influences purchase decisions. Also, the challenge of information overload in e-commerce reviews was addressed [30] by proposing an efficient machine-based method for extracting user clustering information from review texts. Specifically, it integrates an emotional correlation analysis model with a Self-Organizing Map (SOM) to construct fine-grained user emotion vectors. These vectors are then used for visual cluster analysis, enabling the rapid identification of user groups and their characteristics. The empirical analysis, based on real Amazon book reviews, demonstrates that the method achieves an average precision of 0.71.

Recognizing the growing significance of user-generated content on platforms such as blogs, forums and e-commerce sites, Demircan et al. [31] explore sentiment analysis of social media texts using machine learning methods. The research finds that the most accurate alignment between text and emotions occurs in product reviews and ratings on e-commerce websites. To leverage this, product reviews and their associated scores were extracted and organized into a structured dataset. Sentiments were labeled as positive, negative or neutral based on review scores. The study developed Turkish-language sentiment analysis models using several machine learning algorithms, including SVM, RF, decision tree, LR and k-nearest neighbors (KNN). Cross-validation on independent test data revealed that SVM and RF models consistently outperformed the others in terms of accuracy. Moreover, Yang [32] proposes a deep learning-based method to enhance emotion analysis of consumer reviews in the context of e-commerce big data, addressing the limitations of insufficient feature extraction in traditional sentiment analysis. The approach begins with generating contextualized word vectors using ALBERT, a lightweight pretrained language model. It then applies a BiGRU model to capture semantic information from both forward and backward directions, followed by a CNN model to extract local features of the text. An attention mechanism is used to compute the weight distribution, emphasizing the most relevant parts of the input for emotion detection. Experiments conducted on a public consumer review dataset demonstrate that the proposed method achieves high performance, with a recall of 0.9417, precision of 0.9552 and F1-score of 0.9484.

Additionally, Zhang et al. [33] present a novel sentiment multiclassification method designed to handle the complexity of consumer reviews, which often contain multiple emotional expressions due to varying experiences with products and services. The proposed approach is based on a directed weighted model, where sentiment entity vocabularies, i.e., words or phrases with emotional attributes, are represented as nodes, and the relationships between them are represented as directed weighted links that reflect their sentiment similarity. Furthermore, Yelisetti and Geethanjali [34] focus on sentiment analysis by detecting emotions in unstructured social media and review data, which reflect public opinions on political events, products and social issues. To this end, the paper proposes an advanced deep learning model for text classification. Features are initially extracted using TF-IDF and then converted into vector representations using Doc2Vec. The core of the method is a deep learning architecture called convolutional bidirectional long short-term memory (CBLSTM), which classifies sentiments as positive (good) or negative (bad). To enhance performance, the model’s hyperparameters are optimized using a meta-heuristic Frog Leap Algorithm (FLA). Experiments conducted on four datasets, including product reviews and Twitter data, show that the proposed CBLSTM-FLA model outperforms traditional models like LSTM-RNN and LSTM-CNN, achieving 98.1% accuracy on review datasets and 97.5% accuracy on Twitter datasets.

Another study focuses on automatic emotion classification from Bengali text, a task critical for enhancing Human–Computer Interaction (HCI) applications where text is a primary communication medium [35]. Given the unstructured and disorganized nature of textual content on social media, blogs and e-commerce platforms, and the lack of tools for low-resource languages like Bengali, the paper addresses a significant gap. The researchers developed an emotion corpus of 8047 Bengali texts and classified six primary emotions: anger, fear, disgust, sadness, surprise and joy. They applied eight standard machine learning algorithms, including LR, multinomial naive Bayes, SVM, random forest, decision tree, KNN and adaptive boosting, and tested an ensemble model combining LR, RF and SVM. Using both Bag of Words (BoW) and TF-IDF for feature extraction, the best result was achieved by the ensemble model with TF-IDF, which obtained a weighted F1-score of 62.39%.

The effectiveness of different deep learning models for sentiment classification in e-commerce review texts was further examined [36], focusing on how the transformer-based BERT model outperforms traditional architectures like CNNs and RNNs. Recognizing the transformative impact of BERT in NLP, the authors fine-tuned it on mobile e-commerce review data and used its output as embeddings for additional deep learning models, specifically CNN and RNN. The performance of several configurations (BERT, BERT-RNN and BERT-CNN) was compared to identify the most effective approach for binary sentiment classification. Experimental results reveal that the BERT-CNN model achieves the best performance. Another study proposes a deep learning-based sentiment analysis system designed to handle large-scale e-commerce product reviews more efficiently than traditional classifier-based methods [37]. The system follows a three-phase pipeline: data collection and pre-processing; feature extraction using a count vectorizer and dimensionality reduction; and sentiment classification using a hybrid LSTM-SVM model with incremental learning. Feature extraction includes identifying keywords, text length and word count. These features are then passed to a hybrid model that combines LSTM for sequential feature learning and SVM for final classification. The model incorporates incremental learning, enabling it to adapt to new data dynamically. The proposed system outperforms existing techniques with a reported accuracy of 92%, along with strong precision, recall, sensitivity and specificity scores. Furthermore, Märtin, Bissinger and Asta [38] introduce a novel approach that leverages emotion recognition and situation-aware software adaptation to personalize key touchpoints of the digital customer journey, thereby enhancing UX and the effectiveness of e-commerce applications. The method incorporates eye-tracking, emotion detection and user personas to enable real-time, individualized adaptations of interactive web applications.

Recent advancements in the field are reflected by:

- (a) Adoption of advanced NLP techniques:
  - Several studies demonstrate strong performance using pre-trained transformer models such as BERT, T5 and ALBERT for emotion and sentiment classification [18,32,36,37].
  - Hybrid and deep learning models (e.g., BiGRU, CNN, LSTM-SVM, CBLSTM) enhance both local and global text understanding [32,34,37].
- (b) Domain and language diversity:
  - Research spans various sectors: e-commerce, hospitality, social-media and restaurants, showing domain-wide applicability [21,22,25,27].
  - Local-language datasets were developed for Indonesian [23], Bengali [27,35], Vietnamese [22] and Turkish [31], addressing multilingual emotion analysis.
- (c) Unsupervised and low-resource techniques:
  - Unsupervised models and optimization-based methods show promising results without requiring large labeled datasets [24,30].
  - Some models work effectively in low-resource settings by leveraging novel clustering or correlation approaches [30,35].
- (d) Emotion categorization beyond polarity:
  - Several studies move beyond simple sentiment polarity to classify discrete emotions like happiness, anger, sadness, fear and hopefulness [22,27,28].
  - Emotion triggers and their impact on behaviors (e.g., co-creation, brand attitude) are also examined [25,26,29].
- (e) Real-world applications and practical implications:
  - Studies provide actionable insights for product design, service improvement, marketing strategies and personalized digital experiences [20,25,38,39].

A comparison is provided in Table 1, considering the objective, methods, data sources, main results and whether large language models (LLMs) or transformers have been used.

In terms of limitations and gaps of the previous studies, we identified the following: (a) lack of standard emotion taxonomies, as emotions are often defined inconsistently (e.g., four vs. five vs. six classes), making cross-study comparison and dataset alignment difficult; (b) data limitations, as many studies use platform-specific data (e.g., Amazon, TripAdvisor), which may limit generalizability across industries or languages, and some datasets are synthetic or manually labeled in small volumes, limiting robustness in large-scale or real-time applications [20,23]; (c) limited real-time and streaming capabilities, as most models are trained on static datasets and only a few consider real-time emotion detection for dynamic environments [22,38]; (d) underexplored multimodality, as nearly all studies focus on textual data, neglecting other emotional cues like voice, facial expression or emojis (except [27]); (e) limited interpretability and transparency, as explainability of emotion predictions, which is essential for decision support, is generally lacking; (f) lack of benchmarking across models, as only a handful of studies compare multiple architectures or establish clear benchmarks across traditional machine learning, deep learning and transformer-based models [31,36].

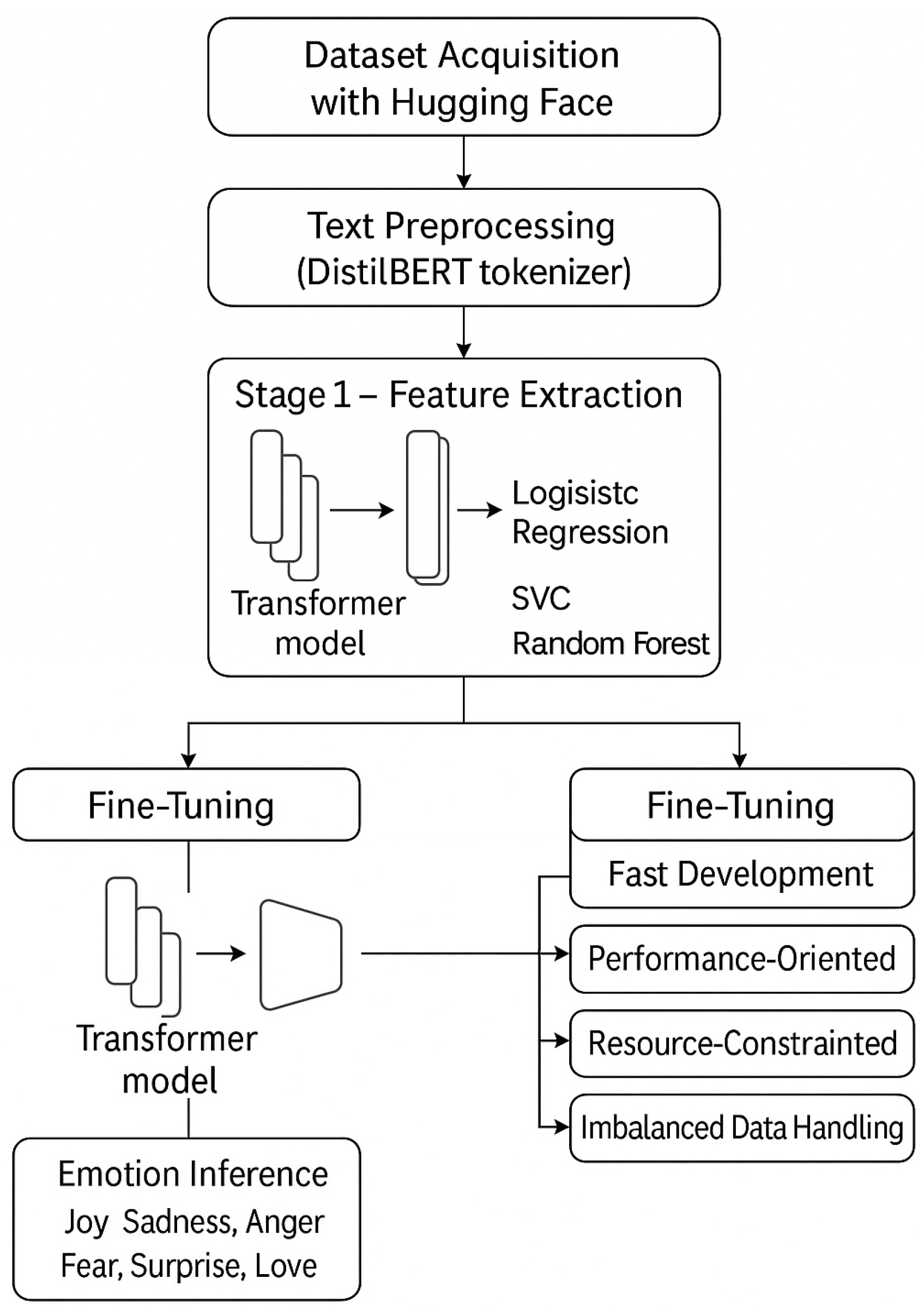

3. Methodology

To advance emotion-aware analytics in e-commerce, we employ fine-tuned transformer models, specifically DistilBERT, to perform emotion classification on customer reviews. This approach enables the extraction of high-level semantic features from textual data, allowing for accurate emotion detection even in the presence of informal expressions, slang or typographical errors that frequently occur in user-generated content. By applying this method, we aim to demonstrate how emotion classification enhances both customer satisfaction insights and business decision-making processes.

Our methodology is structured in two phases. In the first phase, we adopt the publicly available emotions dataset from the Hugging Face Hub (HFH), which hosted 386,176 datasets in total as of May 2025, to develop and benchmark baseline classifiers. The review texts are tokenized and passed through the pre-trained distilbert-base-uncased model, which serves as a feature extractor and embeds each text. These feature vectors are then fed into traditional machine learning classifiers implemented using the scikit-learn library, namely Logistic Regression (LR), Support Vector Classification (SVC) and Random Forest (RF). Each model’s performance is evaluated to establish a benchmark for subsequent experimentation.
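The first phase can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact pipeline used in the experiments: it assumes that the hidden state of the first token is taken as the feature vector, and the helper names (`batched`, `extract_cls_features`, `fit_baseline`), the batch size and the `max_iter` value are hypothetical.

```python
from typing import Iterator, List, Sequence


def batched(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def extract_cls_features(texts: Sequence[str],
                         model_name: str = "distilbert-base-uncased",
                         batch_size: int = 32):
    """Return one feature vector per text (hidden state of the first token).

    Third-party imports live inside the function so that the batching
    helper above works without torch/transformers installed.
    """
    import numpy as np
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()

    chunks = []
    with torch.no_grad():  # the encoder stays frozen in this phase
        for batch in batched(texts, batch_size):
            inputs = tokenizer(batch, padding=True, truncation=True,
                               return_tensors="pt")
            hidden = model(**inputs).last_hidden_state  # (batch, tokens, dim)
            chunks.append(hidden[:, 0].cpu().numpy())   # first-token vector
    return np.concatenate(chunks, axis=0)


def fit_baseline(train_texts, train_labels):
    """Fit a Logistic Regression baseline on the frozen features."""
    from sklearn.linear_model import LogisticRegression

    features = extract_cls_features(train_texts)
    clf = LogisticRegression(max_iter=3000)  # hypothetical setting
    return clf.fit(features, train_labels)
```

The same feature matrix could be passed to `sklearn.svm.SVC` or `sklearn.ensemble.RandomForestClassifier` to obtain the other two baselines.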

In the second phase, we fine-tune the same pre-trained DistilBERT model in an end-to-end manner, enabling it to adjust its internal representations specifically for the task of emotion classification. This step addresses the potential misalignment between generic pre-trained features and the target classification task. Fine-tuning is conducted using the AutoModelForSequenceClassification module from Hugging Face Transformers, allowing for joint optimization of both feature extraction and classification layers. Training and experiment tracking are facilitated through the Weights & Biases (wandb) API, which supports detailed monitoring of different configurations.
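A minimal sketch of how the classifier for this phase might be constructed is given below. The label order is an assumption (it follows the order in which the six emotion classes are listed in this paper), and `build_classifier` is a hypothetical helper name.

```python
# Assumed label order for the six emotion classes.
LABELS = ["sadness", "joy", "love", "anger", "fear", "surprise"]
ID2LABEL = dict(enumerate(LABELS))
LABEL2ID = {label: idx for idx, label in ID2LABEL.items()}


def build_classifier(model_name: str = "distilbert-base-uncased"):
    """Load the pre-trained encoder with a randomly initialized
    classification head sized for the six emotion classes."""
    # Imported lazily so the label mappings can be used on their own.
    from transformers import AutoModelForSequenceClassification

    return AutoModelForSequenceClassification.from_pretrained(
        model_name,
        num_labels=len(LABELS),
        id2label=ID2LABEL,
        label2id=LABEL2ID,
    )
```

Because the head is trained together with the encoder, all weights are updated during fine-tuning, in contrast to the frozen feature extractor of the first phase.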

We propose four fine-tuning strategies (TA) using the Hugging Face Trainer API and compare the resulting models’ performance against the baseline classifiers. The best-performing model is subsequently applied to a real-world dataset of customer reviews (sealuzh/app_reviews) to identify the dominant emotions expressed in user feedback. This analysis offers actionable insights into product perception and UX, reinforcing the value of emotion classification in e-commerce applications.

As mentioned above, we propose four TrainingArguments (TA1–TA4) settings, each tailored for different use cases when fine-tuning transformer models like DistilBERT for emotion classification:

TA1. Fast development. This setup is optimized for quick experimentation or debugging. It uses just one epoch, a small batch size and evaluates frequently (every few steps rather than per epoch). This is helpful when testing data pipelines, tokenization or model structure without waiting for a full training run. Saving and logging are lightweight to speed up execution. This is useful for prototyping, checking code correctness and debugging on small subsets of data.

TA2. Performance-oriented fine-tuning. This configuration aims to maximize model performance. It increases the number of epochs, uses a slightly larger learning rate and introduces warmup steps to stabilize early training. It also tracks and loads the best-performing model based on a chosen metric (e.g., F1-score) and evaluates and saves the model at each epoch. It is suited to cases where the best possible accuracy and generalization are the priority.

TA3. Resource-constrained or low memory settings. This variant is designed for situations where computing resources are limited, such as training on a single GPU or using Colab. It reduces the per-device batch size but uses gradient accumulation to simulate larger batches. It also enables mixed precision (fp16) training to save memory and speed up computations if supported. It is useful for training on laptops, free GPU instances or constrained environments where efficiency is essential.

TA4. Imbalanced dataset handling. This strategy focuses on datasets with class imbalance. While the TrainingArguments themselves do not directly support class weighting, this setup is designed to be used together with an externally defined class-weighted loss function. It runs for a moderate number of epochs and tracks the best model based on F1-score to balance precision and recall. It is useful for emotion classification tasks with unbalanced class frequencies, where classes like “surprise” or “love” are underrepresented compared to “joy” or “sadness”. The methodology flow is presented in Figure 1.
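The four settings described above might be expressed as keyword arguments for `TrainingArguments`, as in the sketch below. All numeric values, the output directory and the dictionary names are hypothetical placeholders chosen to mirror the descriptions of TA1–TA4; they are not the values used in the experiments.

```python
# Keyword arguments intended for transformers.TrainingArguments(**cfg).
# Every value below is a hypothetical placeholder, not a reported setting.
# "eval_strategy" is spelled "evaluation_strategy" in older transformers
# releases.
COMMON = {"output_dir": "distilbert-emotion", "report_to": "wandb"}

TA1_FAST_DEV = {
    **COMMON,
    "num_train_epochs": 1,
    "per_device_train_batch_size": 8,
    "learning_rate": 2e-5,
    "eval_strategy": "steps",   # evaluate every few steps, not per epoch
    "eval_steps": 50,
    "logging_steps": 50,
    "save_strategy": "no",      # lightweight: no checkpoints
}

TA2_PERFORMANCE = {
    **COMMON,
    "num_train_epochs": 5,
    "per_device_train_batch_size": 32,
    "learning_rate": 3e-5,
    "warmup_steps": 500,
    "eval_strategy": "epoch",
    "save_strategy": "epoch",
    "load_best_model_at_end": True,
    "metric_for_best_model": "f1",  # needs a compute_metrics returning "f1"
}

TA3_LOW_MEMORY = {
    **COMMON,
    "num_train_epochs": 3,
    "per_device_train_batch_size": 4,
    "gradient_accumulation_steps": 8,  # simulates a larger batch
    "fp16": True,                      # only on hardware that supports it
    "eval_strategy": "epoch",
    "save_strategy": "epoch",
}

TA4_IMBALANCED = {
    **COMMON,
    "num_train_epochs": 4,
    "per_device_train_batch_size": 32,
    "eval_strategy": "epoch",
    "save_strategy": "epoch",
    "load_best_model_at_end": True,
    "metric_for_best_model": "f1",
}


def effective_batch_size(cfg: dict, n_devices: int = 1) -> int:
    """Samples contributing to one optimizer step."""
    return (cfg.get("per_device_train_batch_size", 8)
            * cfg.get("gradient_accumulation_steps", 1)
            * n_devices)
```

With these placeholder values, TA3 reaches the same effective batch size as TA2 (4 × 8 = 32) while holding only four samples in memory at a time. Note that `load_best_model_at_end` requires the evaluation and save strategies to match.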

The proposed steps for the emotion classification pipeline are shown in Table 2.

TA2 is designed as a general-purpose configuration optimized for overall accuracy and generalization across balanced or moderately skewed datasets. It does not assume or require explicit class weighting, and it serves as a default production-ready setup. TA4 specifically targets datasets with severe imbalance. It introduces externally defined class-weighted loss functions and focuses on improving F1-score across minority classes, which may otherwise be neglected in standard performance-optimized training. TA4 is useful in contexts where fairness across classes or representation of underrepresented emotions is prioritized over overall accuracy.
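One common way to derive the class weights that TA4 relies on is the inverse-frequency ("balanced") heuristic, sketched below. The heuristic itself is an assumption, since the weighting scheme is not specified here; the class shares are those reported for the emotions dataset in Section 4, and `inverse_frequency_weights` is a hypothetical helper name.

```python
def inverse_frequency_weights(shares: dict) -> dict:
    """Map class -> weight proportional to 1 / class share.

    weight = total / (n_classes * share), so a perfectly uniform
    dataset would give every class a weight of 1.
    """
    total = sum(shares.values())
    n_classes = len(shares)
    return {label: total / (n_classes * share)
            for label, share in shares.items()}


# Class shares (in %) of the emotions dataset, as reported in Section 4.
EMOTION_SHARES = {
    "joy": 33.5, "sadness": 29.2, "anger": 13.5,
    "fear": 12.1, "love": 8.2, "surprise": 3.6,
}

weights = inverse_frequency_weights(EMOTION_SHARES)
for label, weight in sorted(weights.items(), key=lambda kv: kv[1]):
    print(f"{label:>8}: {weight:.2f}")
```

Under this heuristic, “surprise” receives a weight of roughly 4.6 and “joy” roughly 0.5, so errors on the rarest class count about nine times as much as errors on the most frequent one. Such weights could, for example, be passed to a weighted cross-entropy loss such as `torch.nn.CrossEntropyLoss(weight=...)`.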

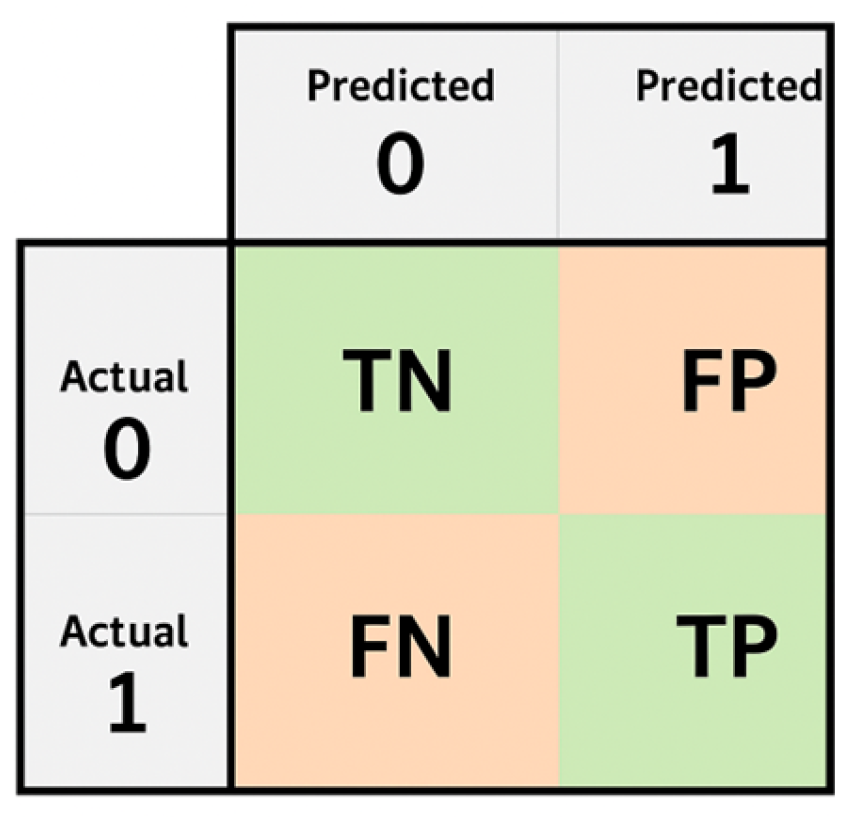

After training the models on the training set, the testing phase takes place. In classification problems, several statistical indicators are used to describe a model’s performance and generalization capabilities. A useful tool in describing the classification power of models is the confusion matrix. The general form of a confusion matrix for binary classification problems is presented in Figure 2.

Actual represents the true label of the instances in the test set. Predicted is what the ML model returns, i.e., the label/flag that the ML model considers. True Negative (TN) is the number of instances where the ML model correctly predicts the label 0. False Positive (FP) is the number of instances classified by the ML model as 1, when in reality, they are negative (0). False Negative (FN) is the number of instances classified by the model as 0, although in reality, they are positive (1). True Positive (TP) represents observations that are correctly classified by the ML model as 1. Based on all these indicators, we may calculate the following metrics:
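For reference, the metrics most commonly derived from these four counts, which are also the ones referred to elsewhere in this paper (accuracy, precision, recall and F1-score), have the following standard definitions. These are the usual textbook formulas and are given here as an assumption about the intended set of metrics:

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{Precision} = \frac{TP}{TP + FP},
$$

$$
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.
$$

In a multi-class setting such as six-way emotion classification, precision, recall and F1-score are typically computed per class in a one-vs-rest fashion and then averaged.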

Binary classification models typically do not output a class directly; instead, they return the probability that an instance belongs to class 1. The default decision threshold is 0.5: if the predicted probability for class 1 is greater than 0.5, the instance is classified as class 1; otherwise, it is classified as class 0. Adjusting this threshold makes it possible to trade off precision against recall, depending on the classification problem.
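The effect of the threshold can be illustrated with a small, self-contained sketch. The labels and probabilities below are hypothetical toy data, and the helper names are illustrative only.

```python
def classify(probabilities, threshold=0.5):
    """Turn class-1 probabilities into hard 0/1 labels."""
    return [1 if p > threshold else 0 for p in probabilities]


def precision_recall(y_true, y_pred):
    """Precision and recall for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical toy data: true labels and predicted class-1 probabilities.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
probs = [0.95, 0.8, 0.6, 0.3, 0.7, 0.45, 0.2, 0.1]

for threshold in (0.25, 0.5, 0.75):
    precision, recall = precision_recall(y_true, classify(probs, threshold))
    print(f"threshold={threshold}: precision={precision:.2f}, "
          f"recall={recall:.2f}")
```

On this toy data, raising the threshold from 0.25 to 0.75 increases precision from 0.67 to 1.00 while recall drops from 1.00 to 0.50. In the six-class emotion setting, the predicted label is usually the class with the highest probability rather than the result of a single threshold.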

4. Results

Two datasets are employed in our experiments. The emotions dataset from the HFH consists of 20,000 records; a sample is provided in Table 3. The second dataset, also hosted on the HFH, consists of 288,000 app reviews; a sample is presented in Table 4.

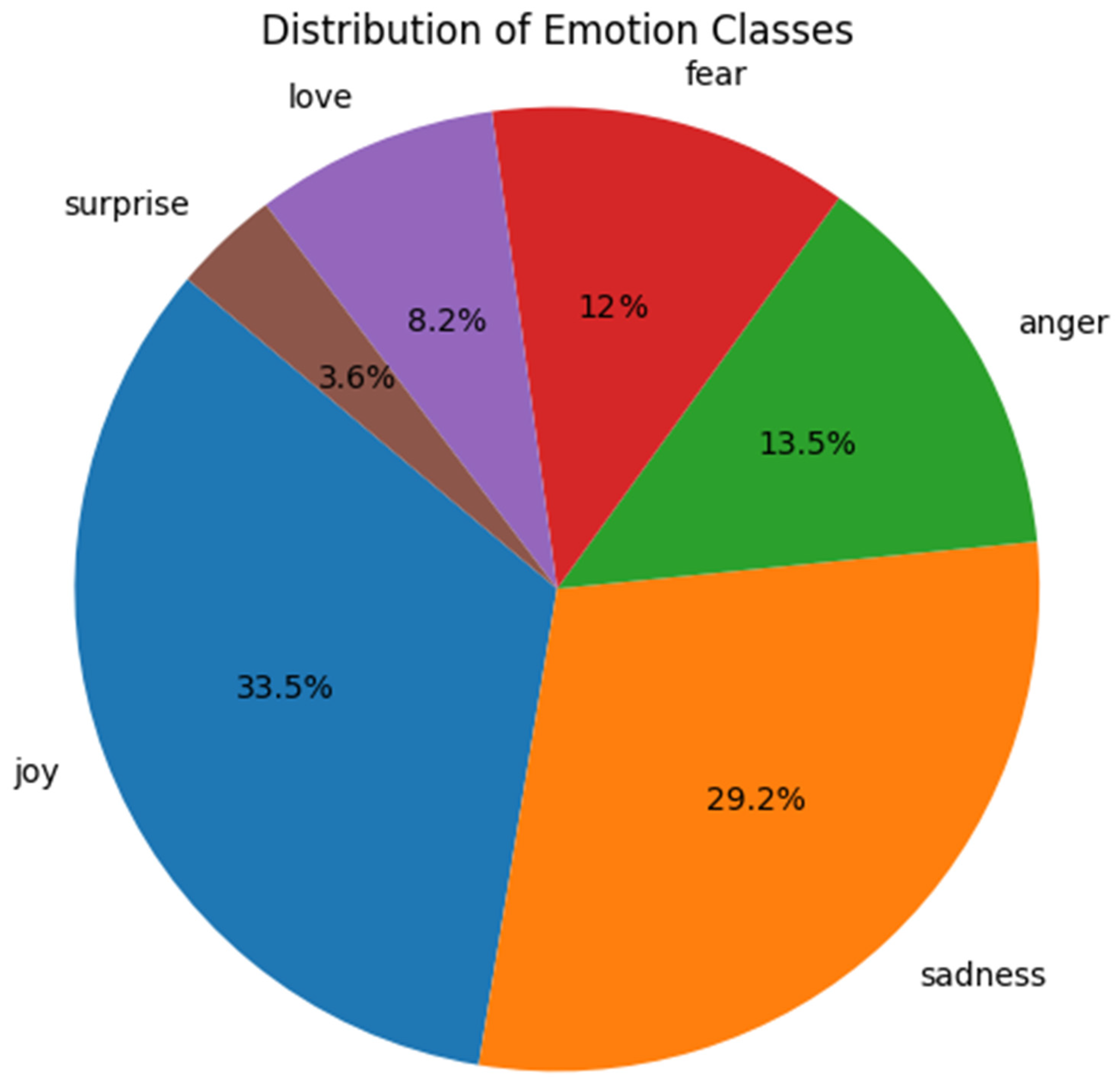

Figure 3 illustrates how the various emotions are represented within the emotions dataset. “Joy” is the most prominent emotion, accounting for 33.5% of the total, which makes it the largest single class. “Sadness” follows closely behind at 29.2%, indicating a strong presence of negative sentiment as well. “Anger” and “fear” contribute 13.5% and 12.1%, respectively, showing that intense negative emotions are also well represented. “Love” makes up 8.2% of the data, reflecting another positive emotion, though it appears less frequently than joy. “Surprise” is the least common emotion, appearing in only 3.6% of the dataset. These figures indicate that the dataset is imbalanced: “joy” and “sadness” together account for 62.7% of the records, the negative emotions (“sadness”, “anger” and “fear”) jointly make up 54.8% against 41.7% for the positive ones (“joy” and “love”), and “surprise” is significantly underrepresented.

The 2D t-SNE embedding visualization in Figure 4 illustrates the structure of emotion-labeled textual data, where each subplot corresponds to a distinct emotion: sadness, joy, love, anger, fear and surprise. The density of each hexbin region represents how many data points (e.g., sentence embeddings) fall within that area of the 2D space. For emotions like “joy”, “love” and “sadness”, there is a clear presence of dense central clusters. This suggests that the textual representations for these emotions tend to group closely in the high-dimensional embedding space, indicating internal consistency in how these emotions are expressed. On the other hand, emotions such as “anger”, “fear” and especially “surprise” exhibit more dispersed distributions, with less pronounced clustering. This implies a wider variation in how these emotions are linguistically conveyed or greater semantic ambiguity in their expressions.

Since t-SNE aims to preserve local relationships, the tight clustering in certain emotions indicates that those classes have more coherent features, which might make them easier to classify. In contrast, the looser structures observed in emotions like “anger” and “surprise” suggest that those categories may require more sophisticated modeling approaches or additional contextual data to distinguish effectively.

The variation in hexbin density may also reflect data imbalance, where some emotion classes are underrepresented in the training data, or linguistic characteristics that cause certain emotions to appear more diffusely in the embedding space.

Figure 4 reveals how emotion classes are distributed in a compressed space and offers insights into the separability and consistency of each class.
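The contrast between tight and dispersed classes can also be summarized numerically. The sketch below computes, for each class, the mean distance of its 2D points to the class centroid. The coordinates are hypothetical stand-ins for t-SNE output, not data from this study, and because t-SNE mainly preserves local neighborhoods, such a figure is at best a rough descriptive aid rather than a rigorous separability measure.

```python
import math
from collections import defaultdict


def class_dispersion(points, labels):
    """Mean Euclidean distance of each class's 2D points to that class's centroid."""
    grouped = defaultdict(list)
    for (x, y), label in zip(points, labels):
        grouped[label].append((x, y))

    dispersion = {}
    for label, pts in grouped.items():
        cx = sum(p[0] for p in pts) / len(pts)
        cy = sum(p[1] for p in pts) / len(pts)
        dispersion[label] = sum(math.hypot(x - cx, y - cy) for x, y in pts) / len(pts)
    return dispersion


# Hypothetical 2D coordinates standing in for t-SNE output (illustrative only).
points = [
    (0.0, 0.0), (0.2, 0.1), (-0.1, 0.2), (-0.1, -0.3),  # a tight cluster
    (5.0, 5.0), (9.0, 1.0), (2.0, 8.0), (8.0, 9.0),     # a scattered class
]
labels = ["joy"] * 4 + ["surprise"] * 4

spread = class_dispersion(points, labels)
for label, value in spread.items():
    print(f"{label}: mean distance to centroid = {value:.2f}")
```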

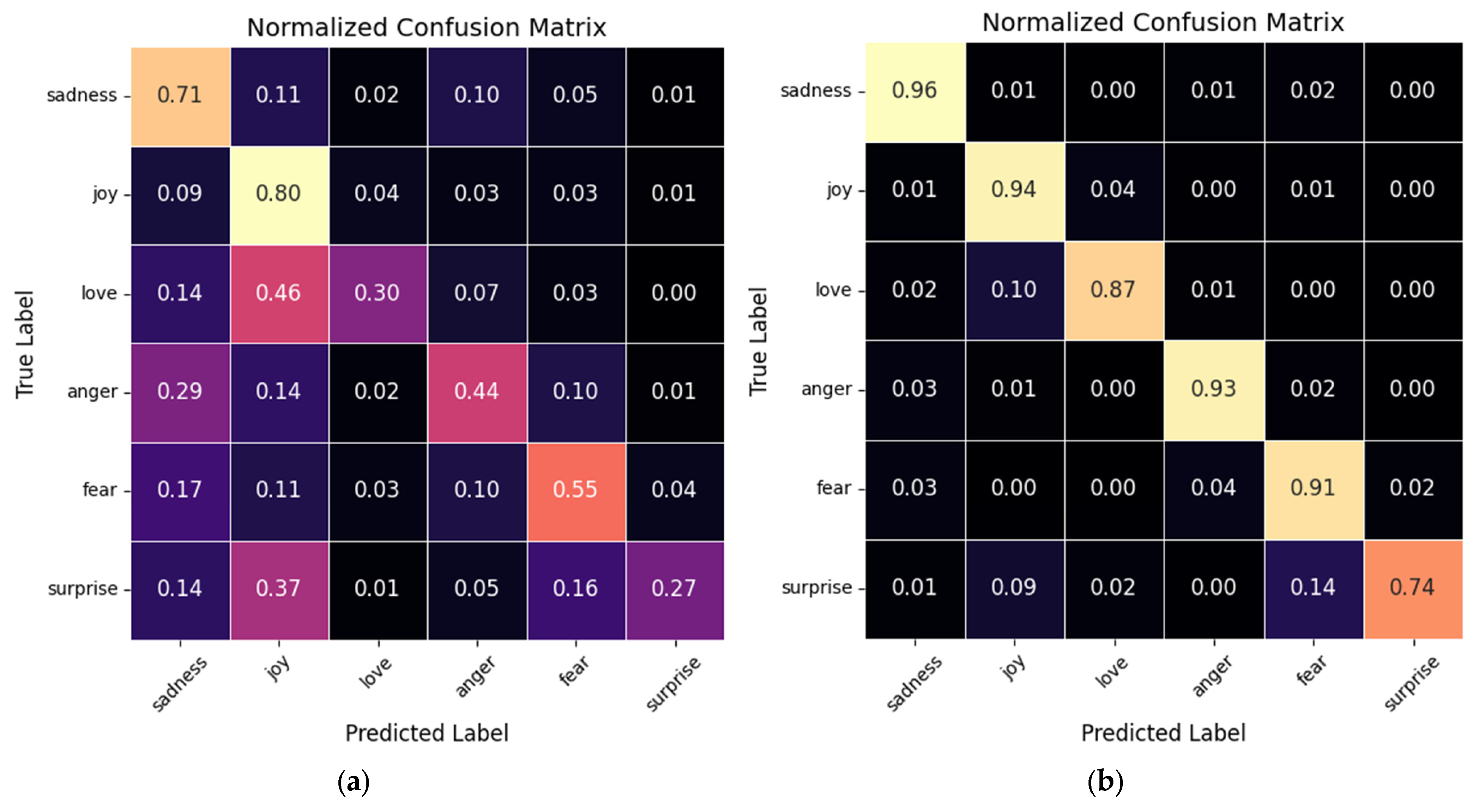

The two normalized confusion matrices in Figure 5 compare the performance of a pretrained model (left) and a fine-tuned model (right) on an emotion classification task. In the pretrained model, performance is moderate, with the highest per-class accuracy seen for “joy” (80%) and “sadness” (71%). However, several classes are confused with one another. For instance, “love” is often misclassified as “joy” (46%) and “sadness” (14%), and “surprise” is commonly predicted as “joy” (37%). “Anger” and “fear” also show considerable confusion with “sadness”, “joy” and each other, which weakens the model’s ability to distinguish between more nuanced or less frequent emotional states. In contrast, the fine-tuned model improves across all classes. “Sadness” and “joy” now reach accuracies of 96% and 94%, respectively. “Love” and “anger” are both recognized with high accuracy (87% and 93%), and “fear” reaches 91%. Even “surprise”, which was poorly predicted by the pretrained model, improves to 74%. Misclassifications are minimal, indicating the model has learned more refined distinctions between emotion classes after fine-tuning.

Therefore, fine-tuning significantly improves classification performance across all emotion classes, particularly for less frequent or ambiguous emotions (love, surprise, anger, fear). Compared to the pretrained model, the fine-tuned version achieves higher accuracy and fewer misclassifications, indicating that task-specific training enables the model to learn more precise distinctions between emotions.
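For readers less familiar with normalized confusion matrices, the sketch below shows how raw counts are divided row by row so that each diagonal entry becomes the share of a true class that was predicted correctly (per-class recall). The counts and the three-class layout are hypothetical and chosen only for illustration; row normalization is assumed, since that is the reading under which the diagonal values can be interpreted as per-class accuracy.

```python
def normalize_rows(matrix):
    """Divide each row by its sum so entry [i][j] is the share of true class i predicted as j."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([value / total if total else 0.0 for value in row])
    return normalized


labels = ["sadness", "joy", "love"]

# Hypothetical raw counts (rows = true class, columns = predicted class).
counts = [
    [8, 1, 1],
    [2, 7, 1],
    [1, 4, 5],
]

norm = normalize_rows(counts)
per_class_recall = {label: norm[i][i] for i, label in enumerate(labels)}

# Off-diagonal entries show where a class is being confused.
love_as_joy = norm[labels.index("love")][labels.index("joy")]

print(per_class_recall)
print(f"love predicted as joy: {love_as_joy:.0%}")
```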

The results obtained with the pre-trained models for tokenization and the three classifiers are presented in Table 5.

The results obtained with the fine-tuned model under the various training strategies are presented in Table 6. Python 3.x was employed for simulations in a Google Colaboratory environment equipped with an NVIDIA T4 GPU.

In Table 7, the hyperparameter values and training options are presented.

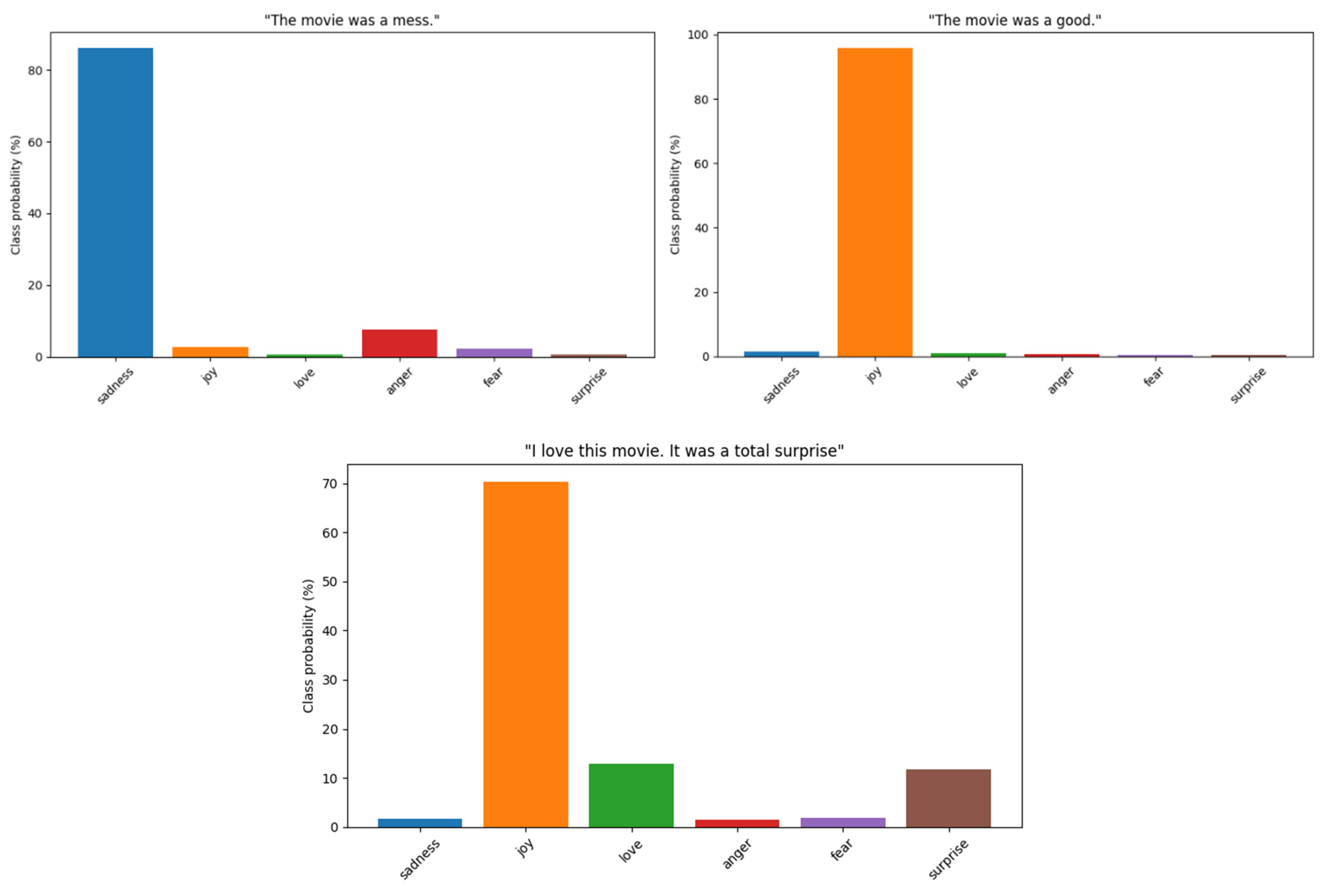

The subplots in Figure 6 represent emotion classification probabilities for three different text inputs. Each subplot visualizes the model’s confidence in assigning a specific emotion to the input sentence.

“The movie was a mess”. The model predicts “sadness” with high confidence (above 80%), followed by a small probability for “anger” (~10%). This indicates the model interprets the phrase as expressing disappointment or regret, which aligns with a negative sentiment dominated by “sadness”.

“The movie was a good”. Despite the grammatical error, the model confidently predicts “joy” (near 95%), with negligible probabilities for other emotions. This suggests that the model is robust to minor textual issues and can still identify positive sentiment when key words like “good” are present.

“I love this movie. It was a total surprise”. This sentence triggers a dominant “joy” prediction (~70%), with notable secondary probabilities for both “love” (~13%) and “surprise” (~12%). The model recognizes the overall positive tone of the message, capturing the emotional mix conveyed: affection and delight.

In these examples, the model shows strong semantic sensitivity, distinguishing between clearly negative, positive and mixed-emotion texts with confident and intuitive probability distributions.
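The per-emotion probabilities discussed above can be obtained from a classifier’s raw scores as sketched below. This is a minimal illustration that assumes the model emits one logit per class and that probabilities come from a softmax. The logits are hypothetical, and the label order in `EMOTIONS` is also an assumption that should be checked against the actual model configuration.

```python
import math

# Assumed label order; verify against the deployed model's configuration.
EMOTIONS = ["sadness", "joy", "love", "anger", "fear", "surprise"]


def softmax(logits):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank_emotions(logits, labels=EMOTIONS):
    """Return (label, probability) pairs sorted from most to least likely."""
    probs = softmax(logits)
    return sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)


# Hypothetical logits for a sentence with a mixed positive tone.
logits = [0.1, 3.0, 1.3, -0.5, -1.0, 1.2]
ranking = rank_emotions(logits)

for label, prob in ranking:
    print(f"{label:9s} {prob:.3f}")
```

Keeping the full ranking, rather than only the top label, is what makes secondary emotions visible.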

Also, the three subplots in Figure 7 display emotion classification probabilities for user reviews or app feedback. Each subplot shows how likely the model is to assign each emotion to the corresponding sentence.

“Awsome App! Easy to use works great on Notes w/Realtek dongle”. The model predicts “joy” with overwhelming confidence (around 95%), indicating clear recognition of strong positive sentiment. All other emotion classes register near-zero probabilities, meaning there is little ambiguity in the emotional tone of the statement.

“I’ll forgo the refund. But no go with Watson dongle… Nexus 9. Yet to try on Nexus 6p. So very disappointed!!! :-(”. The dominant emotion is “sadness”, with a near-perfect confidence close to 100%. This result reflects the user’s expressed disappointment and frustration. The model correctly identifies that this message contains no elements of “joy” or “surprise” and only very faint traces of “anger” or “fear”.

“I hate it u need to upgrade this app to upgrade/download another app! hate it”. The primary prediction here is “anger” (around 60%), with “sadness” also present (~30%). This reflects a clear expression of irritation and frustration, possibly mixed with a feeling of helplessness or dissatisfaction. Minor probabilities for “fear” and “joy” are likely noise or model uncertainty.

The model accurately distinguishes positive reviews (“joy”) from negative feedback (“sadness” or “anger”). Mixed feelings (as in the third case) are reasonably reflected through the distribution across multiple emotion classes.

These emotion classification probabilities further reinforce the value of emotion-specific modeling and show how its outputs translate into actionable strategies in e-commerce or app development contexts:

- (a) For the joy-dominant review, “Awesome App! Easy to use works great on Notes w/Realtek dongle”, with the predicted emotion “joy” (~95%), the analysis suggests the following:

Main insight: the model confidently identifies a highly positive UX, likely associated with ease of use and device compatibility.

Feature refinement: the interface elements or workflows praised here can be reinforced and replicated in other parts of the app.

Marketing personalization: this feedback can be used as a testimonial or to promote compatibility with Realtek dongles.

Retention: users with similar devices can be targeted with loyalty or referral campaigns.

- (b) For the sadness-dominant review, “I’ll forgo the refund. But no go with Watson dongle… Nexus 9. Yet to try on Nexus 6p. So very disappointed!!! :-(”, with the predicted emotion “sadness” (~100%), the analysis suggests the following:

Main insight: this message expresses disappointment, potentially over compatibility issues or unmet expectations.

Proactive engagement: the prediction can trigger a support follow-up or automated outreach offering troubleshooting for the Watson dongle.

Feature refinement: this specific device and dongle combination can be flagged for QA review or bug tracking.

Content strategy: documentation or product pages can be updated to clarify device compatibility, reducing future confusion.

- (c) For the review mixing anger and sadness, “I hate it u need to upgrade this app to upgrade/download another app! hate it”, with the predicted emotions “anger” (~60%) and “sadness” (~30%), the analysis suggests the following:

Main insight: this feedback reveals not just negative sentiment, but specific user frustration with forced app dependencies or downloads.

UX redesign: the team should consider decoupling mandatory upgrades or rethinking how dependencies are communicated to users.

Crisis control: high anger scores can trigger priority flags in moderation or support dashboards.

Retention: if multiple users express anger around this issue, it signals a critical churn risk that needs urgent intervention.

The probabilistic outputs from the classifier (rather than just the final label) offer a richer picture of the user’s emotional state. They help detect nuance (e.g., anger coexisting with sadness). They assist in ranking issues by intensity or urgency. They can power real-time analytics that prioritize feedback with extreme emotion scores for faster handling.
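The following sketch illustrates how such probability distributions could be used to detect mixed emotions and rank feedback by urgency. The dominant probabilities are the approximate values discussed for Figure 7; the remaining probability mass, the review keys, the 0.25 threshold and the urgency weights are all hypothetical choices made for illustration, not part of the evaluated pipeline.

```python
def dominant_and_secondary(probs, secondary_threshold=0.25):
    """Return the top emotion and any other emotions at or above the threshold."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    top = ranked[0][0]
    secondary = [label for label, p in ranked[1:] if p >= secondary_threshold]
    return top, secondary


def urgency(probs, weights=None):
    """Weighted sum of negative-emotion probabilities (weights are illustrative)."""
    weights = weights or {"anger": 1.0, "fear": 0.7, "sadness": 0.5}
    return sum(weights.get(label, 0.0) * p for label, p in probs.items())


# Approximate dominant values from Figure 7; the small remainders are made up.
reviews = {
    "realtek": {"joy": 0.95, "sadness": 0.01, "anger": 0.01,
                "fear": 0.01, "love": 0.01, "surprise": 0.01},
    "watson": {"sadness": 0.98, "anger": 0.01, "fear": 0.01,
               "joy": 0.0, "love": 0.0, "surprise": 0.0},
    "upgrade": {"anger": 0.60, "sadness": 0.30, "fear": 0.06,
                "joy": 0.04, "love": 0.0, "surprise": 0.0},
}

# Most urgent feedback first.
queue = sorted(reviews, key=lambda name: urgency(reviews[name]), reverse=True)

for name in queue:
    top, secondary = dominant_and_secondary(reviews[name])
    print(f"{name:8s} urgency={urgency(reviews[name]):.2f} top={top} secondary={secondary}")
```

With these illustrative weights, the anger-dominant review is handled first and the joy-dominant review last; a production system would need to calibrate both the weights and the threshold on real data.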

The analysis of the emotion classification probabilities in Figure 7, together with the strategic applications of emotion-aware analytics and the focus on the “why” behind user sentiment for more empathetic decision-making, leads to the following findings:

The results illustrated in Figure 7 underscore the practical value of emotion-aware analytics in uncovering “why” users feel a certain way, not just whether they feel positive or negative. By going beyond sentiment polarity and assigning probability distributions across specific emotions, we gain richer, multidimensional insights that directly inform empathetic, user-centric strategies.

The review “Awesome App! Easy to use works great on Notes w/Realtek dongle” with the predicted emotion joy (95%) clearly signals satisfaction and ease of use, and the high confidence score removes ambiguity. Recognizing the emotional source of positivity, in this case, feature stability and hardware compatibility, allows the product team to intentionally preserve and promote these experiences.

The review “I’ll forgo the refund. But no go with Watson dongle… Nexus 9. Yet to try on Nexus 6p. So very disappointed!!!”, with predicted emotion sadness (~100%), reflects user resignation and disappointment, not rage or hostility. This distinction is critical. A support response rooted in empathy may include proactive assistance, clear device support documentation, and a tone that acknowledges the user’s frustration without escalating conflict. Such emotional intelligence is only possible when the model helps reveal the “why” behind the dissatisfaction.

The review “I hate it u need to upgrade this app to upgrade/download another app! hate it”, with the predicted emotions anger (60%) and sadness (30%), communicates irritation over app dependencies. The emotion mix reveals both frustration (anger) and a sense of helplessness (sadness). Instead of dismissing this as mere “negativity”, understanding this emotional blend may guide the product team to reconsider intrusive UX patterns and empower users with more transparent choices. It is a form of design empathy driven by emotion analytics.

Overall, the fine-tuned emotion classifier not only predicts the dominant emotion accurately but also provides meaningful probability distributions across multiple emotions. This probabilistic insight enables nuanced understanding of user sentiment, distinguishing between pure positive/negative reactions and mixed emotional states (e.g., anger + sadness). These richer outputs allow for actionable, emotion-aware strategies in product development and customer support:

Positive feedback (high joy confidence) can guide feature reinforcement and marketing.

High sadness scores enable empathetic support and troubleshooting interventions.

Mixed anger–sadness feedback flags UX issues and churn risks, triggering design changes and crisis mitigation.

To validate the effectiveness of fine-tuning, we compared the performance of baseline classifiers using pre-trained DistilBERT features with four end-to-end fine-tuned variants. As shown in Table 8, the fine-tuned models significantly outperform feature-based approaches, confirming the value of optimizing transformer representations specifically for emotion classification.
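Since the comparison relies on F1-scores and accuracy, the sketch below shows how these metrics can be derived from a confusion matrix. It computes both macro and support-weighted F1 because the averaging scheme behind the reported scores is not restated here; which one applies is an assumption the reader should verify. The two-class counts are hypothetical.

```python
def per_class_f1(matrix):
    """Per-class F1 from a square confusion matrix (rows = true, columns = predicted)."""
    n = len(matrix)
    scores = []
    for k in range(n):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp
        fp = sum(matrix[i][k] for i in range(n)) - tp
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def macro_f1(matrix):
    """Unweighted mean of per-class F1, so every class counts equally."""
    scores = per_class_f1(matrix)
    return sum(scores) / len(scores)


def weighted_f1(matrix):
    """Mean of per-class F1 weighted by the number of true samples per class."""
    scores = per_class_f1(matrix)
    support = [sum(row) for row in matrix]
    return sum(s * w for s, w in zip(scores, support)) / sum(support)


def accuracy(matrix):
    """Share of all samples on the diagonal."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


# Hypothetical imbalanced two-class counts (100 vs. 20 true samples).
counts = [
    [90, 10],
    [5, 15],
]

print(f"macro F1    = {macro_f1(counts):.4f}")
print(f"weighted F1 = {weighted_f1(counts):.4f}")
print(f"accuracy    = {accuracy(counts):.4f}")
```

On imbalanced data the macro average is pulled down by weak minority classes, whereas the weighted average and accuracy are dominated by the majority class, which is why the choice of average matters when comparing across studies.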

As summarized in Table 8, our fine-tuned DistilBERT model achieves an F1-score of 0.9587, which is highly competitive when compared to recent studies leveraging large language models and deep learning for emotion classification in consumer reviews. For instance, ALBERT-BiGRU-CNN in [30] achieved an F1-score of 0.9484, while the hybrid CBLSTM approach in [32] reached 98.1% accuracy on a different dataset. Our model not only performs at a similar or higher level, but it also offers greater flexibility through tailored training strategies (TA1–TA4) that account for deployment contexts such as class imbalance, computational constraints, and rapid development, an advantage not commonly addressed in prior works. Compared to [18,35], which report 92% accuracy with BERT-based models, our approach demonstrates improved accuracy (up to 96.9%) while using a lighter and faster model (DistilBERT), further reinforcing its applicability in real-world e-commerce scenarios.

Moreover, unlike several studies that use binary or sentiment-level classification ([29,34,35]), our model classifies across six emotion-specific categories, enabling more nuanced and actionable insights into customer feedback. While some approaches explore multilingual or region-specific datasets ([20,21,25,33]), they often do not benchmark against transformer-based models or fine-tune them for performance optimization. Our end-to-end comparative framework, from feature-based baselines to advanced transformer fine-tuning, therefore represents a novel contribution, particularly in applying emotion-aware analytics at scale in the e-commerce context.

Our results demonstrate that emotion-aware analytics (a) reveal root emotional drivers, not just the outcome; (b) enable more nuanced support interactions (e.g., anger and sadness call for different resolutions); (c) guide product refinements with user sentiment context; (d) allow marketing to tap into authentic emotional moments (joy, love); and (e) ultimately foster trust and loyalty by treating customers as emotionally complex individuals, not sentiment scores.

5. Conclusions

The results of our research confirm that fine-tuned transformer models, particularly DistilBERT, significantly improve the performance of emotion classification tasks when applied to customer reviews in e-commerce and app development contexts. The two-phase methodology, beginning with baseline classifiers using extracted features and progressing to end-to-end fine-tuning, demonstrates significant gains in accuracy, F1-score and overall emotional granularity. For example, while classical models like Random Forest achieved F1-scores around 0.80, the fine-tuned models surpassed 0.95 under optimized training configurations. This confirms the value of adapting the model’s internal representations to the specifics of emotion classification rather than relying solely on pre-trained, general-purpose embeddings.

The introduction of four distinct training strategies (TA1 to TA4) allows for a flexible and context-aware application of the methodology. These strategies cater to various operational needs such as rapid debugging, maximum performance, constrained computational resources or class imbalance, making the pipeline scalable and broadly applicable. This level of adaptability ensures that researchers and practitioners may tailor training to their environment without sacrificing model integrity.

More importantly, the use of emotion-specific classification, rather than simple sentiment polarity, unlocks more nuanced insights into user feedback. The model distinguishes among six emotions (joy, sadness, anger, fear, love and surprise), providing a richer and more actionable understanding of the UX. Our analysis enables more precise and empathetic decisions across product design, customer support and user engagement strategies. In the examples examined, the model tolerated informal language, grammatical errors and typographical noise, which suggests that it is suitable for real-world user-generated content where the input text is often not cleanly structured. For instance, the model correctly identified “joy” in a grammatically incorrect but sentimentally positive sentence, demonstrating good semantic understanding.

Visual tools such as t-SNE plots and confusion matrices offer insight into the internal consistency and separability of emotion classes. The t-SNE visualization reveals that emotions such as “joy” and “sadness” cluster tightly in embedding space, indicating internal coherence, while more ambiguous or rare classes like “surprise” exhibit looser patterns. This aligns with the observed confusion in pre-trained models and the improved clarity brought by fine-tuning. Notably, the confusion matrix shows that classes like “love” and “surprise” benefit significantly from fine-tuning, with accuracy improvements that reflect more refined decision boundaries.

Emotion probability distributions, rather than simple predicted labels, also provide practical advantages. They help reveal mixed emotions in user feedback, such as “anger” combined with “sadness”, and allow teams to prioritize feedback based on emotional intensity or urgency. This richer representation supports real-time analytics and enables proactive responses to emotionally charged reviews.

When applied to real-world app reviews, the fine-tuned model identified distinct emotional drivers. Reviews expressing “joy” helped highlight successful features or compatibility cases that can be leveraged for promotional or retention purposes. Reviews expressing “sadness” reflected disappointment or unmet expectations, prompting QA investigations and better user communication. Reviews marked by “anger” or emotional complexity indicated usability pain points and signaled areas for crisis intervention or redesign. These insights translate directly into actionable strategies that enhance both product experience and customer trust.

For example, Amazon integrates emotion detection to identify negative sentiment in reviews and route flagged items for faster resolution or automated support follow-up. Alibaba uses emotion-aware AI in its customer service chatbots to adapt conversational tone and escalate angry customers to human agents, reducing churn. Sephora leverages emotional insights from product reviews to segment marketing campaigns, e.g., targeting joyful users with referral incentives or tailoring ad copy to address user-reported frustrations with specific product lines.

In our own experimental analysis, we showed how specific emotions identified in user reviews can drive actionable decisions. A review expressing high sadness due to device incompatibility could trigger documentation updates or proactive support outreach, while a review marked by anger toward forced app dependencies suggests the need for UX redesign. These examples illustrate how emotion-aware analytics detect what users feel and also reveal why, an important distinction for developing empathetic, user-centric e-commerce strategies. As customer experience becomes a key differentiator, integrating emotional intelligence into analytics pipelines offers a competitive advantage in both operational response and long-term loyalty building.

Ultimately, our research demonstrates that emotion-aware analytics powered by fine-tuned transformers enables the classification of what users feel and an understanding of why they feel that way. This distinction is critical for fostering empathy in product development, crafting more personalized marketing approaches, and delivering customer support that acknowledges the emotional context of feedback. By integrating probabilistic emotion modeling with scalable, flexible training setups, this methodology empowers organizations to move beyond surface-level sentiment and make decisions rooted in emotional intelligence.

Despite strong performance, the proposed approach has several limitations. The emotion classification relies on a fixed set of six discrete classes, which may oversimplify the complexity of real-world emotions (for example, sarcastic text can be confused with “joy”). Imbalanced class distributions, particularly for underrepresented emotions like “surprise” and “love”, affect model accuracy despite mitigation strategies. Future work should explore multi-label or dimensional emotion models and improved balancing techniques. Additionally, the model focuses solely on textual input, missing out on multimodal signals such as emojis, audio or visual cues that are common in user feedback. Expanding to multimodal emotion recognition could enhance insight depth. Furthermore, the model’s real-time deployment and interpretability are limited. Integrating it into live systems, enhancing transparency through explainable AI, and improving interpretability with multimodal language models would improve usability and trust. Future work should address these challenges to make emotion-aware analytics more scalable, adaptive and user-aligned.

We also acknowledge that relying solely on app store reviews may not capture the full spectrum of customer feedback, especially when compared to diverse sources such as e-commerce websites, social media platforms or email-based customer support. These channels may reflect different emotional tones, vocabulary, and behavioral patterns, and incorporating multi-platform data would enhance the generalizability of our findings. Furthermore, emotions and user concerns may evolve over time, particularly in fast-moving domains such as mobile applications and AI-based services. Some reviews in our dataset date back to 2016 and may not fully reflect current user expectations or emotional expressions. Future research may therefore include more recent datasets to capture evolving sentiment trends and technological perceptions.

Additionally, as part of future work, we intend to explore multi-label classification or emotion blending techniques to better represent reviews that express mixed emotions, rather than forcing a single-label decision. Future work will also focus on integrating multimodal data sources (e.g., emojis, images and voice signals) alongside text, enabling a more comprehensive understanding of user emotions that better reflects real communication patterns.