When Generative AI Meets Abuse: What Are You Anxious About?

Abstract

1. Introduction

2. Literature Review and Hypotheses

2.1. Generative AI Abuse Anxiety

2.2. Technology Acceptance Model

2.3. Trust

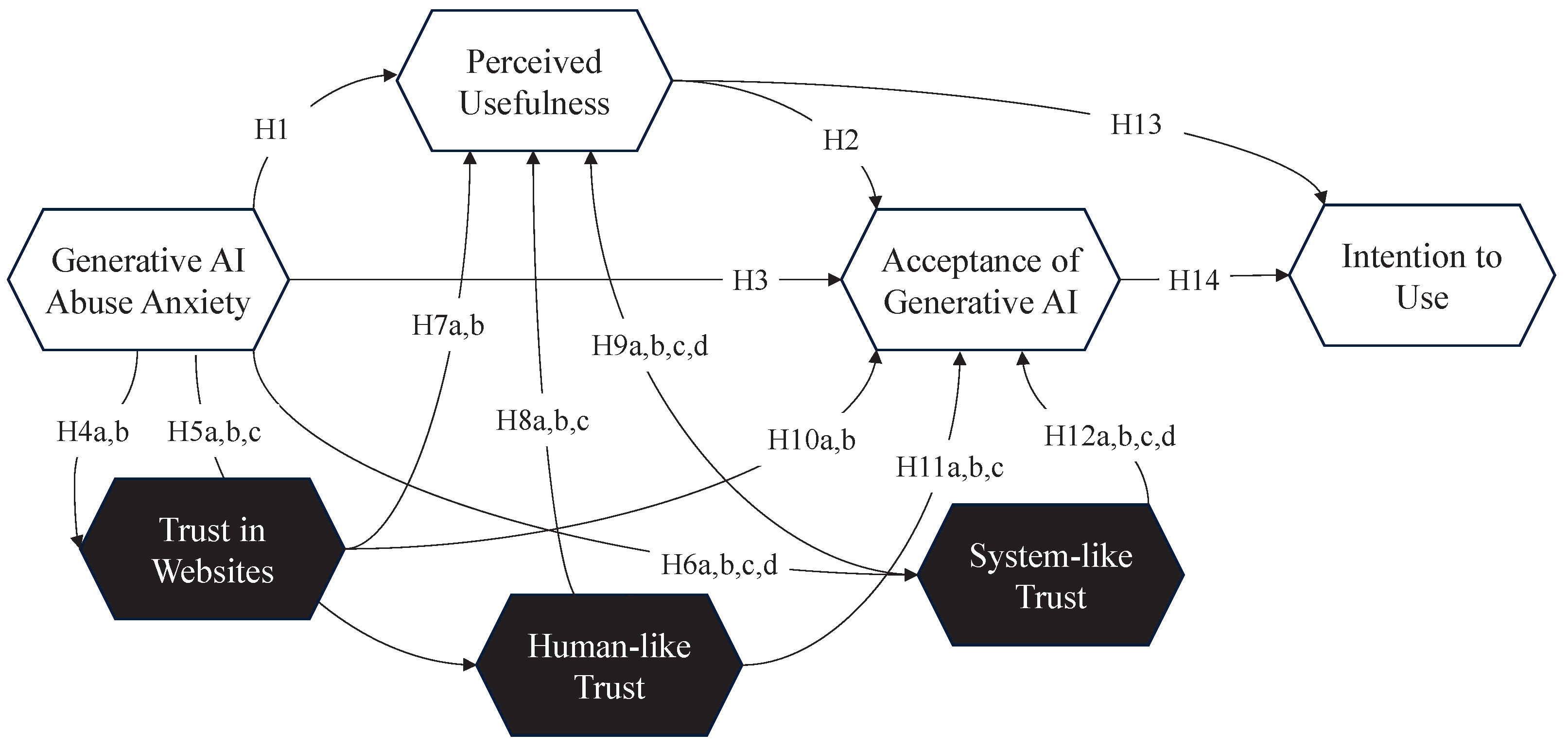

2.4. Hypothesis and Theoretical Model

3. Method

3.1. Measures and Questionnaire

3.2. Participants and Data Collection

3.3. Measurement Model Assessment

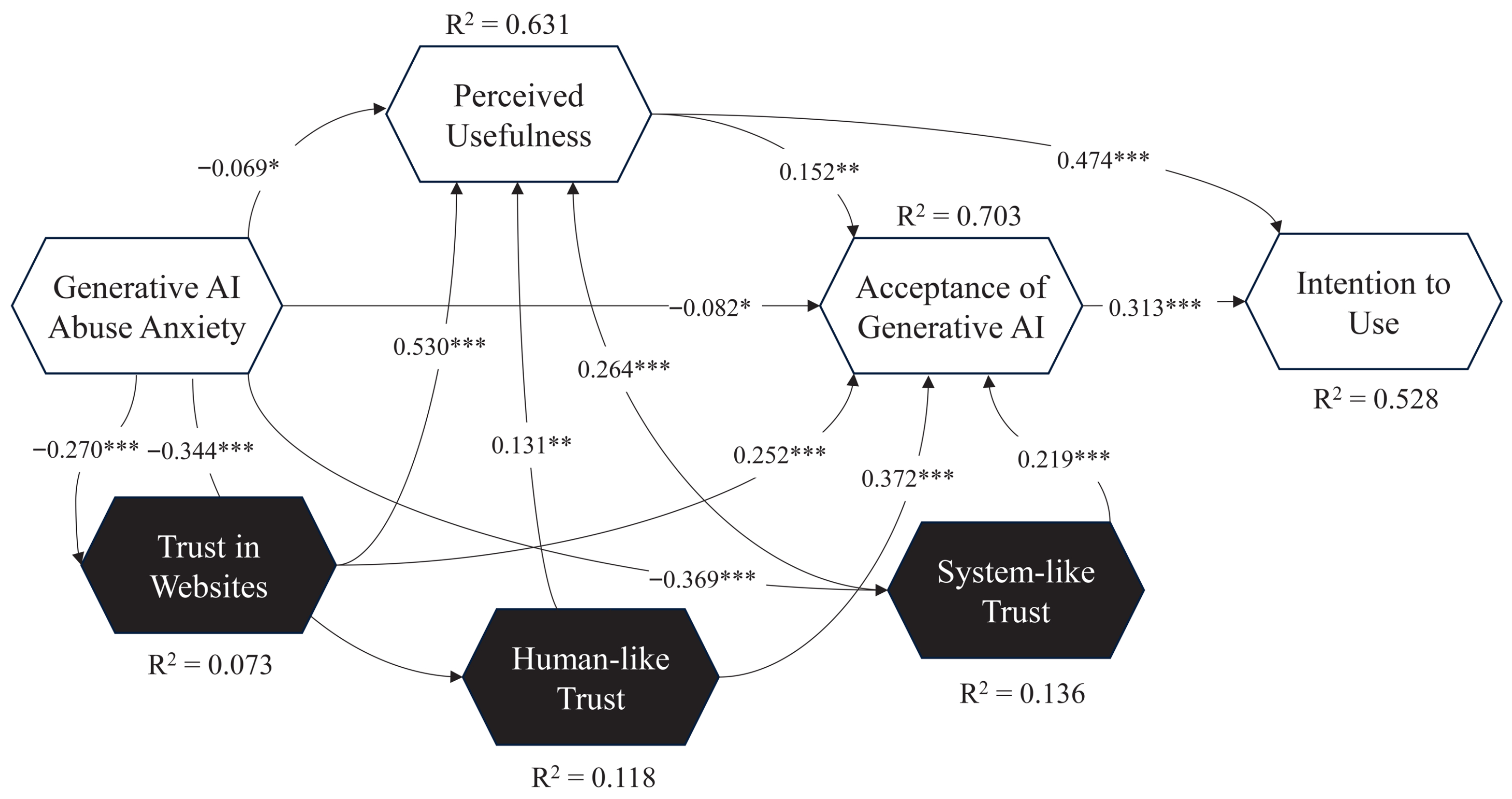

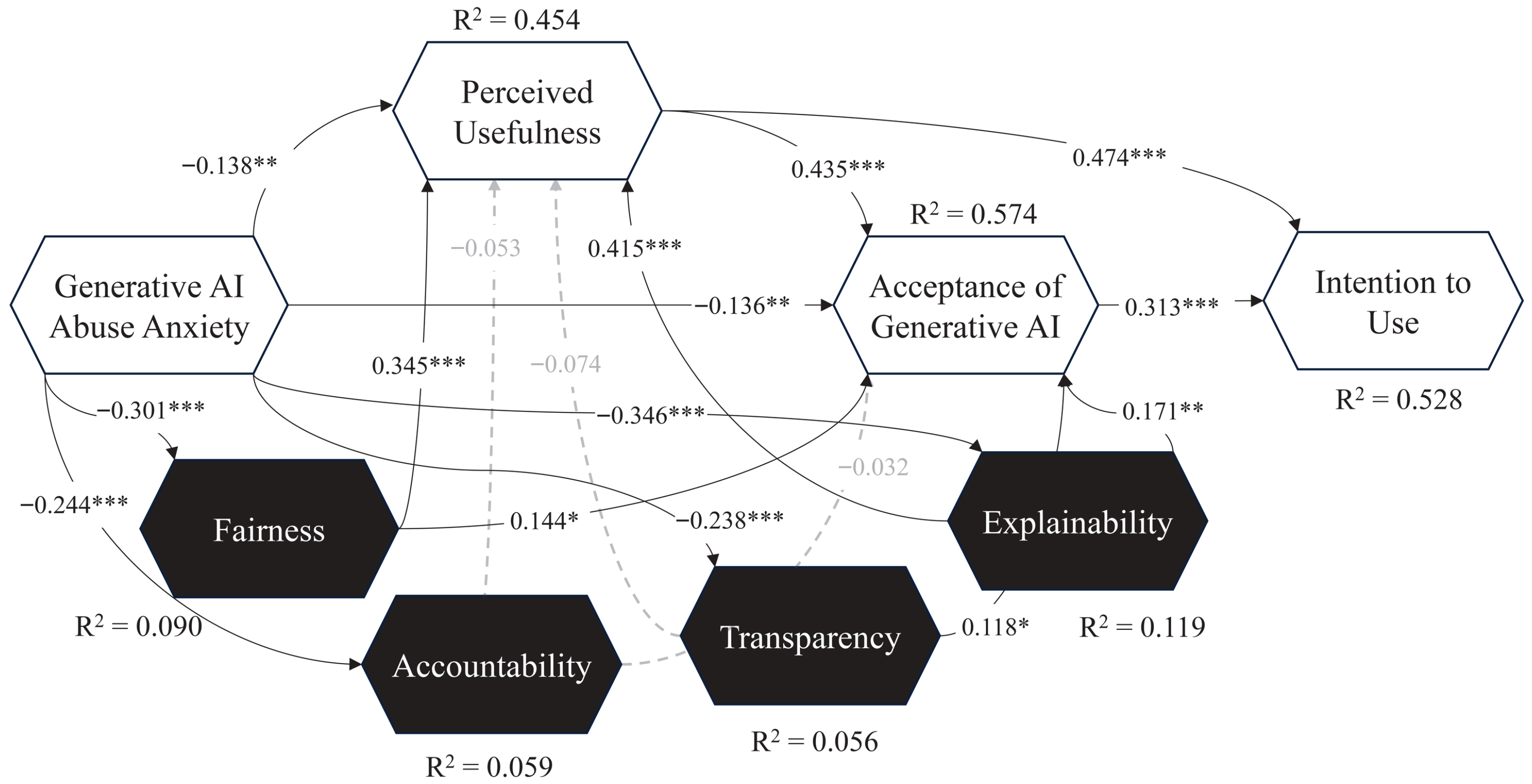

3.4. Structural Model

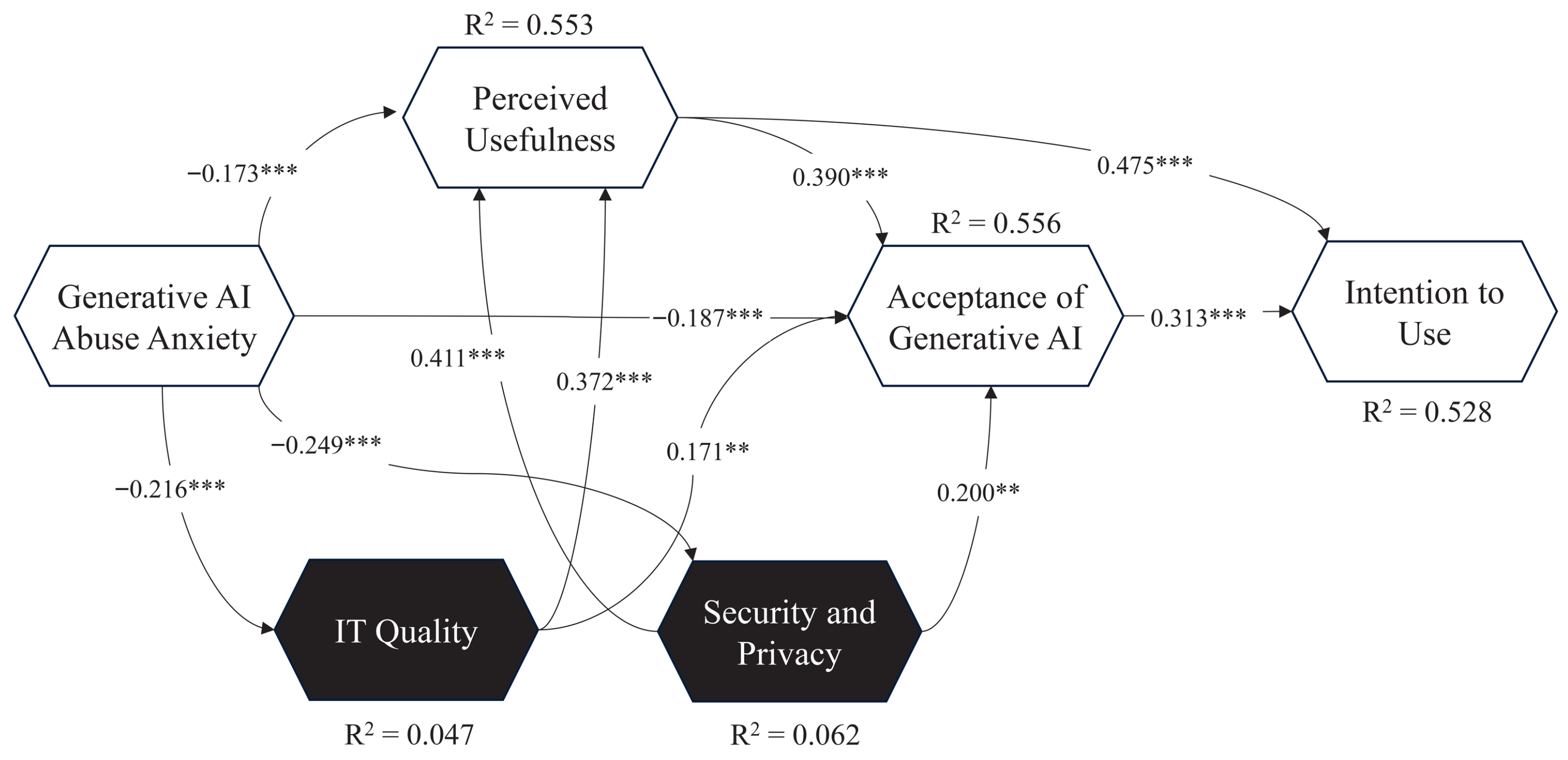

3.5. Structural Model for Trust in Websites

- IT Quality → PU → AGAI

- IT Quality → System-like Trust → AGAI

- IT Quality → Human-like Trust → AGAI

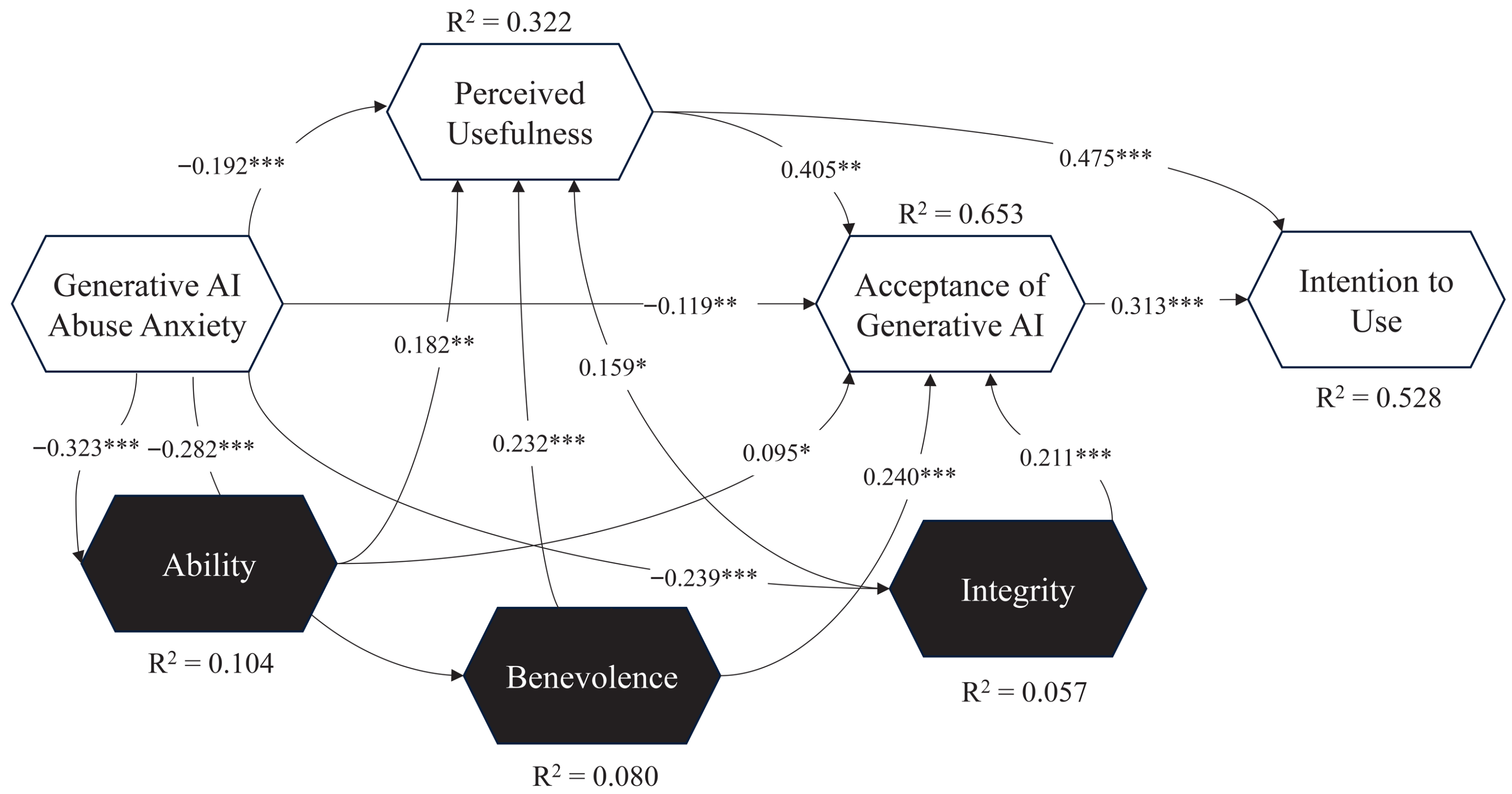

3.6. Structural Model for Human-like Trust

3.7. Structural Model for System-like Trust

4. Discussion

4.1. Theoretical Implications

4.2. Practical Implications

4.3. Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Variables | Source | Survey Items |
|---|---|---|
| Generative AI Abuse Anxiety | Wang and Wang [53] | I am concerned that generative AI may be misused. I worry about potential problems associated with generative AI. I fear that generative AI could malfunction or spiral out of control. |
| Perceived Usefulness | Choung et al. [14] | Using generative AI helps me complete tasks more quickly. Generative AI improves my task performance. Generative AI boosts my efficiency in completing tasks. I find generative AI to be useful for my tasks. |
| Intention to Use | Choung et al. [14] | I plan to keep using generative AI. I anticipate continuing to use generative AI. Using generative AI is something I would continue to do. |
| Generative AI Acceptance | Choung et al. [14] | I have a positive attitude toward generative AI. I find using generative AI enjoyable. I believe using generative AI is a good choice. I consider generative AI a smart way to accomplish tasks. |
| IT Quality | Hsu et al. [16] | I find the generative AI on this website user-friendly. I am satisfied with the services offered by the generative AI on this website. |
| Security and Privacy | Hsu et al. [16] | This website has implemented security measures to protect users’ privacy and data when using generative AI. I feel that using generative AI on this website is safe. This website guarantees that my personal information is protected. |
| Ability | Lankton et al. [12] | Generative AI is competent and effective in completing tasks. Generative AI fulfills its role of content generation effectively. Generative AI is a capable and proficient system for task execution. |
| Benevolence | Choung et al. [14] | Generative AI cares about our needs and interests. Generative AI aims to solve the problems faced by human users. Generative AI strives to be helpful and does not act out of self-interest. |
| Integrity | Choung et al. [14] | Generative AI is honest in human-computer interaction. Generative AI honors its commitments and fulfills its promises. Generative AI does not abuse the information and advantages it has over its users. |
| Fairness | Shin [17] | I feel that the content generated by generative AI is fair. I feel that generative AI is impartial and does not discriminate against anyone. I trust that generative AI follows fair and unbiased procedures. |
| Accountability | Shin [17] | I feel that there will be someone responsible for the negative impacts caused by generative AI. I feel that the algorithms of generative AI will allow third-party inspection and review. If generative AI is misused, I believe malicious users will be held accountable. |
| Transparency | Shin [17] | I feel that the evaluation criteria and standards of generative AI’s algorithms are transparent. I feel that any output generated by generative AI algorithms can be explained. I feel that the evaluation criteria and standards of generative AI’s algorithms are understandable. |
| Explainability | Shin [17] | I feel it is easy to understand how generative AI generates content. I feel that the content generated by generative AI is explainable. I feel that the explanations provided by generative AI can validate whether the content is correct. |

| Constructs | Step 2: Original Correlation | Step 2: 5.00% Quantile | Partial MI | Step 3a: Original Differences | Step 3a: Confidence Interval | Step 3b: Original Differences | Step 3b: Confidence Interval | Full MI |
|---|---|---|---|---|---|---|---|---|
| AGAI | 1 | 0.999 | Yes | −0.014 | [−0.220, 0.221] | −0.02 | [−0.271, 0.276] | Yes/Yes |
| ABL | 0.999 | 0.998 | Yes | 0.046 | [−0.214, 0.215] | 0.191 | [−0.281, 0.283] | Yes/Yes |
| ACC | 1 | 0.995 | Yes | 0.028 | [−0.216, 0.225] | 0.09 | [−0.374, 0.392] | Yes/Yes |
| BEN | 1 | 0.998 | Yes | −0.033 | [−0.213, 0.223] | −0.055 | [−0.255, 0.259] | Yes/Yes |
| EXP | 1 | 0.997 | Yes | −0.051 | [−0.217, 0.225] | −0.005 | [−0.333, 0.331] | Yes/Yes |
| FAI | 0.999 | 0.999 | Yes | −0.019 | [−0.216, 0.220] | 0.11 | [−0.357, 0.356] | Yes/Yes |
| GAIA | 0.998 | 0.995 | Yes | 0.116 | [−0.214, 0.214] | −0.159 | [−0.308, 0.312] | Yes/Yes |
| ITQ | 1 | 0.999 | Yes | −0.134 | [−0.218, 0.228] | 0.114 | [−0.288, 0.291] | Yes/Yes |
| INT | 0.999 | 0.997 | Yes | 0.075 | [−0.214, 0.224] | −0.119 | [−0.289, 0.301] | Yes/Yes |
| ITU | 1 | 0.999 | Yes | −0.014 | [−0.216, 0.218] | −0.08 | [−0.335, 0.324] | Yes/Yes |
| PU | 1 | 1 | Yes | −0.147 | [−0.214, 0.225] | 0.06 | [−0.307, 0.315] | Yes/Yes |
| SAP | 1 | 0.999 | Yes | −0.033 | [−0.216, 0.227] | −0.042 | [−0.290, 0.290] | Yes/Yes |
| TRA | 0.996 | 0.993 | Yes | 0.105 | [−0.225, 0.226] | 0.045 | [−0.371, 0.406] | Yes/Yes |

| Constructs | Step 2: Original Correlation | Step 2: 5.00% Quantile | Partial MI | Step 3a: Original Differences | Step 3a: Confidence Interval | Step 3b: Original Differences | Step 3b: Confidence Interval | Full MI |
|---|---|---|---|---|---|---|---|---|
| AGAI | 1 | 0.999 | Yes | 0.04 | [−0.217, 0.222] | 0.101 | [−0.267, 0.264] | Yes/Yes |
| ABL | 1 | 0.999 | Yes | 0.015 | [−0.213, 0.223] | 0.146 | [−0.278, 0.278] | Yes/Yes |
| ACC | 0.999 | 0.995 | Yes | 0.064 | [−0.219, 0.216] | −0.252 | [−0.384, 0.382] | Yes/Yes |
| BEN | 1 | 0.998 | Yes | −0.08 | [−0.220, 0.220] | 0.009 | [−0.254, 0.251] | Yes/Yes |
| EXP | 1 | 0.996 | Yes | −0.054 | [−0.216, 0.224] | −0.122 | [−0.339, 0.349] | Yes/Yes |
| FAI | 1 | 0.999 | Yes | −0.054 | [−0.216, 0.223] | 0.013 | [−0.358, 0.349] | Yes/Yes |
| GAIA | 0.998 | 0.995 | Yes | 0.005 | [−0.221, 0.216] | −0.07 | [−0.308, 0.316] | Yes/Yes |
| ITQ | 1 | 0.999 | Yes | 0.152 | [−0.219, 0.224] | 0.104 | [−0.290, 0.278] | Yes/Yes |
| INT | 0.999 | 0.997 | Yes | −0.034 | [−0.219, 0.216] | −0.008 | [−0.298, 0.288] | Yes/Yes |
| ITU | 1 | 0.999 | Yes | 0.001 | [−0.217, 0.216] | 0.021 | [−0.329, 0.320] | Yes/Yes |
| PU | 1 | 1 | Yes | −0.059 | [−0.219, 0.224] | 0.226 | [−0.311, 0.313] | Yes/Yes |
| SAP | 0.999 | 0.999 | Yes | 0.075 | [−0.219, 0.228] | 0.013 | [−0.284, 0.284] | Yes/Yes |
| TRA | 1 | 0.993 | Yes | −0.009 | [−0.220, 0.214] | −0.094 | [−0.387, 0.395] | Yes/Yes |

| Constructs | Step 2: Original Correlation | Step 2: 5.00% Quantile | Partial MI | Step 3a: Original Differences | Step 3a: Confidence Interval | Step 3b: Original Differences | Step 3b: Confidence Interval | Full MI |
|---|---|---|---|---|---|---|---|---|
| AGAI | 1 | 0.999 | Yes | 0.073 | [−0.222, 0.221] | −0.115 | [−0.277, 0.281] | Yes/Yes |
| ABL | 0.999 | 0.998 | Yes | 0.166 | [−0.221, 0.229] | −0.136 | [−0.279, 0.300] | Yes/Yes |
| ACC | 0.997 | 0.994 | Yes | 0.195 | [−0.223, 0.219] | −0.329 | [−0.375, 0.399] | Yes/Yes |
| BEN | 0.999 | 0.998 | Yes | 0.148 | [−0.221, 0.227] | −0.082 | [−0.249, 0.263] | Yes/Yes |
| EXP | 1 | 0.996 | Yes | 0.174 | [−0.215, 0.222] | −0.125 | [−0.341, 0.347] | Yes/Yes |
| FAI | 1 | 0.999 | Yes | 0.125 | [−0.228, 0.229] | −0.063 | [−0.356, 0.365] | Yes/Yes |
| GAIA | 0.997 | 0.995 | Yes | −0.076 | [−0.225, 0.220] | −0.202 | [−0.315, 0.328] | Yes/Yes |
| ITQ | 1 | 0.999 | Yes | −0.142 | [−0.218, 0.220] | 0.097 | [−0.283, 0.305] | Yes/Yes |
| INT | 0.999 | 0.997 | Yes | 0.126 | [−0.220, 0.220] | −0.17 | [−0.293, 0.298] | Yes/Yes |
| ITU | 1 | 0.999 | Yes | −0.017 | [−0.219, 0.220] | −0.221 | [−0.316, 0.346] | Yes/Yes |
| PU | 1 | 1 | Yes | 0.046 | [−0.219, 0.217] | −0.131 | [−0.300, 0.322] | Yes/Yes |
| SAP | 1 | 0.999 | Yes | −0.108 | [−0.222, 0.227] | −0.055 | [−0.300, 0.288] | Yes/Yes |
| TRA | 0.996 | 0.993 | Yes | 0.113 | [−0.224, 0.219] | −0.129 | [−0.380, 0.405] | Yes/Yes |
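
The "Yes" entries in the three measurement-invariance tables above can be reproduced with a small check. The sketch below assumes the usual reading of a permutation-based invariance (MICOM-style) output, which the tables themselves do not spell out: Step 2 holds when the original correlation is at least the 5.00% quantile, and Steps 3a and 3b hold when each original difference lies inside its confidence interval. The function name `micom_decision` is hypothetical.

```python
def micom_decision(orig_corr, quantile_5, diff_a, ci_a, diff_b, ci_b):
    """Return (partial, step_3a, step_3b) as booleans.

    Assumed decision rules (not stated in the source tables):
    - partial invariance: original correlation >= 5% quantile
    - step 3a / 3b: original difference lies within its confidence interval
    """
    partial = orig_corr >= quantile_5
    step_3a = ci_a[0] <= diff_a <= ci_a[1]
    step_3b = ci_b[0] <= diff_b <= ci_b[1]
    return partial, step_3a, step_3b


# ACC row of the third table: the Step 3b difference (-0.329) is the
# closest any row comes to an interval bound, yet it is still inside.
acc = micom_decision(0.997, 0.994, 0.195, (-0.223, 0.219), -0.329, (-0.375, 0.399))
print(acc)
```

Under these assumed rules, a row is reported as "Yes" for partial invariance and "Yes/Yes" for full invariance only when all three values are true.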

| Path Structural Relationships | Estimate | S.E. | C.R. | p |
|---|---|---|---|---|
| ITQ ← Trust in Websites | 0.668 | Regression weight of reference | | |
| SAP ← Trust in Websites | 0.854 | 0.160 | 6.936 | *** |
| FAI ← System-like Trust | 0.837 | Regression weight of reference | | |
| ACC ← System-like Trust | 0.715 | 0.076 | 9.763 | *** |
| TRA ← System-like Trust | 0.766 | 0.070 | 9.908 | *** |
| EXP ← System-like Trust | 0.761 | 0.085 | 9.108 | *** |
| ABL ← Human-like Trust | 0.729 | Regression weight of reference | | |
| BEN ← Human-like Trust | 0.793 | 0.113 | 8.534 | *** |
| INT ← Human-like Trust | 0.809 | 0.117 | 8.597 | *** |

| Paths | CI [2.5%, 97.5%] | Estimate | p |
|---|---|---|---|
| ITQ → System-like Trust | [0.190, 0.413] | 0.299 | *** |
| System-like Trust → AGAI | [0.220, 0.393] | 0.306 | *** |
| ITQ → System-like Trust → AGAI (Indirect effect) | [0.053, 0.140] | 0.092 | *** |
| ITQ → Human-like Trust | [0.144, 0.388] | 0.264 | *** |
| Human-like Trust → AGAI | [0.364, 0.538] | 0.452 | *** |
| ITQ → Human-like Trust → AGAI (Indirect effect) | [0.062, 0.187] | 0.119 | *** |
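
The indirect effects in the table above are consistent with the product of their two component paths. The sketch below assumes the numeric column next to each confidence interval holds the path estimate (each value falls inside its interval), and that the indirect effect is computed as the simple product a × b; the helper name `indirect_effect` is hypothetical.

```python
def indirect_effect(a, b):
    """Product-of-coefficients indirect effect for a single mediator."""
    return a * b


# (a: ITQ -> mediator, b: mediator -> AGAI, reported indirect effect)
paths = {
    "ITQ -> System-like Trust -> AGAI": (0.299, 0.306, 0.092),
    "ITQ -> Human-like Trust -> AGAI": (0.264, 0.452, 0.119),
}

for name, (a, b, reported) in paths.items():
    product = indirect_effect(a, b)
    # The inputs are already rounded to three decimals, so agreement is
    # only expected to within about 0.001.
    print(f"{name}: a*b = {product:.4f}, reported = {reported:.3f}")
```

Both products match the reported indirect effects to within rounding of the three-decimal inputs.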

References

1. Kumar, P.C.; Cotter, K.; Cabrera, L.Y. Taking Responsibility for Meaning and Mattering: An Agential Realist Approach to Generative AI and Literacy. Read. Res. Q. 2024, 59, 570–578.

2. Kang, H.; Lou, C. AI Agency vs. Human Agency: Understanding Human–AI Interactions on TikTok and Their Implications for User Engagement. J. Comput.-Mediat. Commun. 2022, 27, zmac014.

3. Stokel-Walker, C.; Van Noorden, R. What ChatGPT and Generative AI Mean for Science. Nature 2023, 614, 214–216.

4. Omrani, N.; Rivieccio, G.; Fiore, U.; Schiavone, F.; Agreda, S.G. To Trust or Not to Trust? An Assessment of Trust in AI-Based Systems: Concerns, Ethics and Contexts. Technol. Forecast. Soc. Change 2022, 181, 121763.

5. Liang, S.; Shi, C. Understanding the Role of Privacy Issues in AIoT Device Adoption within Smart Homes: An Integrated Model of Privacy Calculus and Technology Acceptance. Aslib J. Inf. Manag. 2025. ahead-of-print.

6. Tang, Z.; Goh, D.H.L.; Lee, C.S.; Yang, Y. Understanding Strategies Employed by Seniors in Identifying Deepfakes. Aslib J. Inf. Manag. 2024. ahead-of-print.

7. Malik, A.; Kuribayashi, M.; Abdullahi, S.M.; Khan, A.N. DeepFake Detection for Human Face Images and Videos: A Survey. IEEE Access 2022, 10, 18757–18775.

8. Shin, D.; Park, Y.J. Role of Fairness, Accountability, and Transparency in Algorithmic Affordance. Comput. Hum. Behav. 2019, 98, 277–284.

9. Johnson, D.G.; Verdicchio, M. AI Anxiety. J. Assoc. Inf. Sci. Technol. 2017, 68, 2267–2270.

10. Jacovi, A.; Marasović, A.; Miller, T.; Goldberg, Y. Formalizing Trust in Artificial Intelligence: Prerequisites, Causes and Goals of Human Trust in AI. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event, Canada, 3–10 March 2021; pp. 624–635.

11. Gefen, D.; Karahanna, E.; Straub, D.W. Trust and TAM in Online Shopping: An Integrated Model. MIS Q. 2003, 27, 51–90.

12. Lankton, N.; McKnight, D.; Tripp, J. Technology, Humanness, and Trust: Rethinking Trust in Technology. J. Assoc. Inf. Syst. 2015, 16, 880–918.

13. Glikson, E.; Woolley, A.W. Human Trust in Artificial Intelligence: Review of Empirical Research. Acad. Manag. Ann. 2020, 14, 627–660.

14. Choung, H.; David, P.; Ross, A. Trust in AI and Its Role in the Acceptance of AI Technologies. Int. J. Hum.-Comput. Interact. 2023, 39, 1727–1739.

15. Botsman, R. Who Can You Trust? How Technology Brought Us Together and Why It Might Drive Us Apart; Perseus Books: New York, NY, USA, 2017.

16. Hsu, M.H.; Chuang, L.W.; Hsu, C.S. Understanding Online Shopping Intention: The Roles of Four Types of Trust and Their Antecedents. Internet Res. 2014, 24, 332–352.

17. Shin, D. The Effects of Explainability and Causability on Perception, Trust, and Acceptance: Implications for Explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.

18. Beckers, J.J.; Schmidt, H.G. The Structure of Computer Anxiety: A Six-Factor Model. Comput. Hum. Behav. 2001, 17, 35–49.

19. Feuerriegel, S.; Hartmann, J.; Janiesch, C.; Zschech, P. Generative AI. Bus. Inf. Syst. Eng. 2024, 66, 111–126.

20. Alasadi, E.A.; Baiz, C.R. Generative AI in Education and Research: Opportunities, Concerns, and Solutions. J. Chem. Educ. 2023, 100, 2965–2971.

21. Dolata, M.; Feuerriegel, S.; Schwabe, G. A Sociotechnical View of Algorithmic Fairness. Inf. Syst. J. 2022, 32, 754–818.

22. Babic, B.; Gerke, S.; Evgeniou, T.; Cohen, I.G. Beware Explanations from AI in Health Care. Science 2021, 373, 284–286.

23. Schiavo, G.; Businaro, S.; Zancanaro, M. Comprehension, Apprehension, and Acceptance: Understanding the Influence of Literacy and Anxiety on Acceptance of Artificial Intelligence. Technol. Soc. 2024, 77, 102537.

24. Peres, R.; Schreier, M.; Schweidel, D.; Sorescu, A. On ChatGPT and beyond: How Generative Artificial Intelligence May Affect Research, Teaching, and Practice. Int. J. Res. Mark. 2023, 40, 269–275.

25. Jovanović, M.; Campbell, M. Generative Artificial Intelligence: Trends and Prospects. Computer 2022, 55, 107–112.

26. Kreps, S.; McCain, R.M.; Brundage, M. All the News That’s Fit to Fabricate: AI-Generated Text as a Tool of Media Misinformation. J. Exp. Political Sci. 2022, 9, 104–117.

27. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

28. King, W.R.; He, J. A Meta-Analysis of the Technology Acceptance Model. Inf. Manag. 2006, 43, 740–755.

29. Rafique, H.; Almagrabi, A.O.; Shamim, A.; Anwar, F.; Bashir, A.K. Investigating the Acceptance of Mobile Library Applications with an Extended Technology Acceptance Model (TAM). Comput. Educ. 2020, 145, 103732.

30. Rauniar, R.; Rawski, G.; Yang, J.; Johnson, B. Technology Acceptance Model (TAM) and Social Media Usage: An Empirical Study on Facebook. J. Enterp. Inf. Manag. 2014, 27, 6–30.

31. Scherer, R.; Siddiq, F.; Tondeur, J. The Technology Acceptance Model (TAM): A Meta-Analytic Structural Equation Modeling Approach to Explaining Teachers’ Adoption of Digital Technology in Education. Comput. Educ. 2019, 128, 13–35.

32. Wu, B.; Chen, X. Continuance Intention to Use MOOCs: Integrating the Technology Acceptance Model (TAM) and Task Technology Fit (TTF) Model. Comput. Hum. Behav. 2017, 67, 221–232.

33. Wallace, L.G.; Sheetz, S.D. The Adoption of Software Measures: A Technology Acceptance Model (TAM) Perspective. Inf. Manag. 2014, 51, 249–259.

34. Wu, I.L.; Chen, J.L. An Extension of Trust and TAM Model with TPB in the Initial Adoption of On-Line Tax: An Empirical Study. Int. J. Hum.-Comput. Stud. 2005, 62, 784–808.

35. Califf, C.B.; Brooks, S.; Longstreet, P. Human-like and System-like Trust in the Sharing Economy: The Role of Context and Humanness. Technol. Forecast. Soc. Change 2020, 154, 119968.

36. Lam, T. Continuous Use of AI Technology: The Roles of Trust and Satisfaction. Aslib J. Inf. Manag. 2025. ahead-of-print.

37. Habbal, A.; Ali, M.K.; Abuzaraida, M.A. Artificial Intelligence Trust, Risk and Security Management (AI TRiSM): Frameworks, Applications, Challenges and Future Research Directions. Expert Syst. Appl. 2024, 240, 122442.

38. Vorm, E.S.; Combs, D.J.Y. Integrating Transparency, Trust, and Acceptance: The Intelligent Systems Technology Acceptance Model (ISTAM). Int. J. Hum.-Comput. Interact. 2022, 38, 1828–1845.

39. Al-Adwan, A.S.; Li, N.; Al-Adwan, A.; Abbasi, G.A.; Albelbisi, N.A.; Habibi, A. Extending the Technology Acceptance Model (TAM) to Predict University Students’ Intentions to Use Metaverse-Based Learning Platforms. Educ. Inf. Technol. 2023, 28, 15381–15413.

40. Rousseau, D.M.; Sitkin, S.B.; Burt, R.S.; Camerer, C. Not So Different After All: A Cross-Discipline View Of Trust. Acad. Manag. Rev. 1998, 23, 393–404.

41. Keohane, R.O. Reciprocity in International Relations. Int. Organ. 1986, 40, 1–27.

42. Hancock, P.A.; Kessler, T.T.; Kaplan, A.D.; Brill, J.C.; Szalma, J.L. Evolving Trust in Robots: Specification Through Sequential and Comparative Meta-Analyses. Hum. Factors 2021, 63, 1196–1229.

43. Došilović, F.K.; Brčić, M.; Hlupić, N. Explainable Artificial Intelligence: A Survey. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 0210–0215.

44. Molm, L.D.; Takahashi, N.; Peterson, G. Risk and Trust in Social Exchange: An Experimental Test of a Classical Proposition. Am. J. Sociol. 2000, 105, 1396–1427.

45. Zhou, T.; Ma, X. Examining Generative AI User Continuance Intention Based on the SOR Model. Aslib J. Inf. Manag. 2025. ahead-of-print.

46. Memarian, B.; Doleck, T. Fairness, Accountability, Transparency, and Ethics (FATE) in Artificial Intelligence (AI) and Higher Education: A Systematic Review. Comput. Educ. Artif. Intell. 2023, 5, 100152.

47. Shin, D. User Perceptions of Algorithmic Decisions in the Personalized AI System: Perceptual Evaluation of Fairness, Accountability, Transparency, and Explainability. J. Broadcast. Electron. Media 2020, 64, 541–565.

48. Liang, L.; Sun, Y.; Yang, B. Algorithm Characteristics, Perceived Credibility and Verification of ChatGPT-Generated Content: A Moderated Nonlinear Model. Aslib J. Inf. Manag. 2025. ahead-of-print.

49. Al-Debei, M.M.; Akroush, M.N.; Ashouri, M.I. Consumer Attitudes towards Online Shopping: The Effects of Trust, Perceived Benefits, and Perceived Web Quality. Internet Res. 2015, 25, 707–733.

50. Amin, M.; Ryu, K.; Cobanoglu, C.; Nizam, A. Determinants of Online Hotel Booking Intentions: Website Quality, Social Presence, Affective Commitment, and e-Trust. J. Hosp. Mark. Manag. 2021, 30, 845–870.

51. Seckler, M.; Heinz, S.; Forde, S.; Tuch, A.N.; Opwis, K. Trust and Distrust on the Web: User Experiences and Website Characteristics. Comput. Hum. Behav. 2015, 45, 39–50.

52. Jeon, M.M.; Jeong, M. Customers’ Perceived Website Service Quality and Its Effects on e-Loyalty. Int. J. Contemp. Hosp. Manag. 2017, 29, 438–457.

53. Wang, Y.Y.; Wang, Y.S. Development and Validation of an Artificial Intelligence Anxiety Scale: An Initial Application in Predicting Motivated Learning Behavior. Interact. Learn. Environ. 2022, 30, 619–634.

54. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to Use and How to Report the Results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

55. Henseler, J.; Hubona, G.; Ray, P.A. Using PLS Path Modeling in New Technology Research: Updated Guidelines. Ind. Manag. Data Syst. 2016, 116, 2–20.

56. Rai, A. Explainable AI: From Black Box to Glass Box. J. Acad. Mark. Sci. 2020, 48, 137–141.

57. Dehling, T.; Sunyaev, A. A Design Theory for Transparency of Information Privacy Practices. Inf. Syst. Res. 2024, 35, iii–x.

58. Ye, X.; Yan, Y.; Li, J.; Jiang, B. Privacy and Personal Data Risk Governance for Generative Artificial Intelligence: A Chinese Perspective. Telecommun. Policy 2024, 48, 102851.

59. Troisi, O.; Fenza, G.; Grimaldi, M.; Loia, F. Covid-19 sentiments in smart cities: The role of technology anxiety before and during the pandemic. Comput. Hum. Behav. 2022, 126, 106986.

60. Guo, Y.; An, S.; Comes, T. From Warning Messages to Preparedness Behavior: The Role of Risk Perception and Information Interaction in the Covid-19 Pandemic. Int. J. Disaster Risk Reduct. 2022, 73, 102871.

61. Choi, J.; Yoo, D. The Impacts of Self-Construal and Perceived Risk on Technology Readiness. J. Theor. Appl. Electron. Commer. Res. 2021, 16, 1584–1597.

62. Legood, A.; van der Werff, L.; Lee, A.; den Hartog, D.; van Knippenberg, D. A Critical Review of the Conceptualization, Operationalization, and Empirical Literature on Cognition-Based and Affect-Based Trust. J. Manag. Stud. 2023, 60, 495–537.

63. Lazcoz, G.; de Hert, P. Humans in the GDPR and AIA Governance of Automated and Algorithmic Systems. Essential Pre-Requisites against Abdicating Responsibilities. Comput. Law Secur. Rev. 2023, 50, 105833.

64. Cobbe, J.; Singh, J. Artificial Intelligence as a Service: Legal Responsibilities, Liabilities, and Policy Challenges. Comput. Law Secur. Rev. 2021, 42, 105573.

65. Dong, H.; Chen, J. Meta-Regulation: An Ideal Alternative to the Primary Responsibility as the Regulatory Model of Generative AI in China. Comput. Law Secur. Rev. 2024, 54, 106016.

| Characteristics | Option | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 169 | 53.1 |
| | Female | 149 | 46.9 |
| Age | <23 | 58 | 18.2 |
| | 23–30 | 107 | 33.6 |
| | 31–40 | 104 | 32.7 |
| | 41–50 | 39 | 12.3 |
| | >50 | 10 | 3.1 |
| Education | Associate degree and below | 41 | 12.9 |
| | Undergraduate | 142 | 44.7 |
| | Graduate | 135 | 42.6 |
| Usage Frequency | Once or more times daily | 39 | 12.3 |
| | Once a week | 99 | 31.1 |
| | Once every two weeks | 67 | 21.1 |
| | Once a month | 113 | 35.5 |

| Variable | Items | Factor Loading | AVE | CR | Cronbach Alpha |
|---|---|---|---|---|---|
| Generative AI Abuse Anxiety (GAIA) | GAIA1 | 0.907 | 0.791 | 0.919 | 0.868 |
| | GAIA2 | 0.926 | | | |
| | GAIA3 | 0.832 | | | |
| Perceived Usefulness (PU) | PU1 | 0.892 | 0.779 | 0.934 | 0.906 |
| | PU2 | 0.899 | | | |
| | PU3 | 0.857 | | | |
| | PU4 | 0.883 | | | |
| Acceptance of Generative AI (AGAI) | AGAI1 | 0.850 | 0.722 | 0.912 | 0.871 |
| | AGAI2 | 0.829 | | | |
| | AGAI3 | 0.856 | | | |
| | AGAI4 | 0.863 | | | |
| Intention to Use (ITU) | ITU1 | 0.921 | 0.838 | 0.939 | 0.903 |
| | ITU2 | 0.916 | | | |
| | ITU3 | 0.909 | | | |
| IT Quality (ITQ) | ITQ1 | 0.936 | 0.882 | 0.938 | 0.867 |
| | ITQ2 | 0.942 | | | |
| Security and Privacy (SAP) | SAP1 | 0.902 | 0.795 | 0.921 | 0.871 |
| | SAP2 | 0.905 | | | |
| | SAP3 | 0.867 | | | |
| Ability (ABL) | ABL1 | 0.907 | 0.799 | 0.923 | 0.874 |
| | ABL2 | 0.893 | | | |
| | ABL3 | 0.881 | | | |
| Benevolence (BEN) | BEN1 | 0.868 | 0.774 | 0.911 | 0.853 |
| | BEN2 | 0.913 | | | |
| | BEN3 | 0.856 | | | |
| Integrity (INT) | INT1 | 0.850 | 0.731 | 0.891 | 0.816 |
| | INT2 | 0.865 | | | |
| | INT3 | 0.851 | | | |
| Fairness (FAI) | FAI1 | 0.874 | 0.818 | 0.931 | 0.888 |
| | FAI2 | 0.923 | | | |
| | FAI3 | 0.915 | | | |
| Accountability (ACC) | ACC1 | 0.891 | 0.790 | 0.919 | 0.867 |
| | ACC2 | 0.885 | | | |
| | ACC3 | 0.891 | | | |
| Transparency (TRA) | TRA1 | 0.886 | 0.761 | 0.905 | 0.844 |
| | TRA2 | 0.887 | | | |
| | TRA3 | 0.843 | | | |
| Explainability (EXP) | EXP1 | 0.811 | 0.697 | 0.873 | 0.783 |
| | EXP2 | 0.855 | | | |
| | EXP3 | 0.838 | | | |
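
The AVE and CR columns above can be recovered from the factor loadings. The sketch below uses the standard formulas for standardized loadings, AVE = mean of squared loadings and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)); whether the authors' software applied exactly these formulas is an assumption, and the function names are hypothetical.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardized loadings.

    Each indicator's error variance is taken as 1 - loading**2.
    """
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total * total / (total * total + error)


# GAIA loadings from the table above
gaia = [0.907, 0.926, 0.832]
print(round(ave(gaia), 3))                    # 0.791
print(round(composite_reliability(gaia), 3))  # 0.919
```

Both values match the GAIA row of the table, which suggests the reported figures follow these formulas.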

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. ABL | 0.894 | | | | | | | | | | | | |
| 2. AGAI | 0.538 | 0.850 | | | | | | | | | | | |
| 3. ACC | 0.102 | 0.321 | 0.889 | | | | | | | | | | |
| 4. BEN | 0.474 | 0.618 | 0.212 | 0.880 | | | | | | | | | |
| 5. EXP | 0.434 | 0.594 | 0.357 | 0.464 | 0.835 | | | | | | | | |
| 6. FAI | 0.289 | 0.562 | 0.496 | 0.404 | 0.544 | 0.904 | | | | | | | |
| 7. GAIA | −0.323 | −0.413 | −0.244 | −0.282 | −0.346 | −0.301 | 0.889 | | | | | | |
| 8. ITQ | 0.247 | 0.550 | 0.157 | 0.300 | 0.394 | 0.316 | −0.215 | 0.939 | | | | | |
| 9. INT | 0.533 | 0.591 | 0.147 | 0.535 | 0.439 | 0.355 | −0.239 | 0.251 | 0.855 | | | | |
| 10. ITU | 0.434 | 0.640 | 0.377 | 0.430 | 0.618 | 0.665 | −0.360 | 0.455 | 0.389 | 0.915 | | | |
| 11. PU | 0.438 | 0.689 | 0.251 | 0.458 | 0.602 | 0.547 | −0.355 | 0.614 | 0.426 | 0.690 | 0.883 | | |
| 12. SAP | 0.386 | 0.581 | 0.115 | 0.423 | 0.429 | 0.373 | −0.248 | 0.498 | 0.363 | 0.457 | 0.639 | 0.892 | |
| 13. TRA | 0.193 | 0.390 | 0.666 | 0.259 | 0.399 | 0.523 | −0.238 | 0.190 | 0.205 | 0.476 | 0.270 | 0.112 | 0.872 |
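
The diagonal of the matrix above matches the square root of each construct's AVE (for example, √0.791 ≈ 0.889 for GAIA), which is the layout of a Fornell-Larcker discriminant validity check. A minimal sketch of that check follows, assuming the criterion is that each diagonal entry exceeds the absolute value of every correlation involving that construct; the function name and the four-construct subset are illustrative only.

```python
def fornell_larcker(names, lower):
    """Check each diagonal (sqrt of AVE) against the construct's correlations.

    `lower` is a lower-triangular matrix given as a list of rows, where
    row i holds i + 1 values and the last value is the diagonal entry.
    """
    n = len(names)

    def corr(i, j):
        # Read the symmetric entry from the stored lower triangle.
        return lower[max(i, j)][min(i, j)]

    result = {}
    for i in range(n):
        largest = max(abs(corr(i, j)) for j in range(n) if j != i)
        result[names[i]] = lower[i][i] > largest
    return result


# First four constructs from the table above
names = ["ABL", "AGAI", "ACC", "BEN"]
lower = [
    [0.894],
    [0.538, 0.850],
    [0.102, 0.321, 0.889],
    [0.474, 0.618, 0.212, 0.880],
]
print(fornell_larcker(names, lower))
```

Passing the full 13-row triangle in the same format applies the check to every construct.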

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. ABL | | | | | | | | | | | | | |
| 2. AGAI | 0.616 | | | | | | | | | | | | |
| 3. ACC | 0.117 | 0.369 | | | | | | | | | | | |
| 4. BEN | 0.546 | 0.715 | 0.246 | | | | | | | | | | |
| 5. EXP | 0.523 | 0.717 | 0.428 | 0.565 | | | | | | | | | |
| 6. FAI | 0.327 | 0.636 | 0.562 | 0.461 | 0.645 | | | | | | | | |
| 7. GAIA | 0.367 | 0.471 | 0.278 | 0.322 | 0.412 | 0.336 | | | | | | | |
| 8. ITQ | 0.284 | 0.632 | 0.179 | 0.350 | 0.476 | 0.361 | 0.238 | | | | | | |
| 9. INT | 0.630 | 0.701 | 0.175 | 0.639 | 0.550 | 0.416 | 0.280 | 0.297 | | | | | |
| 10. ITU | 0.488 | 0.721 | 0.427 | 0.488 | 0.729 | 0.742 | 0.405 | 0.513 | 0.453 | | | | |
| 11. PU | 0.492 | 0.775 | 0.282 | 0.521 | 0.712 | 0.610 | 0.394 | 0.693 | 0.495 | 0.763 | | | |
| 12. SAP | 0.442 | 0.666 | 0.133 | 0.491 | 0.517 | 0.424 | 0.283 | 0.572 | 0.431 | 0.514 | 0.718 | | |
| 13. TRA | 0.224 | 0.453 | 0.779 | 0.307 | 0.489 | 0.606 | 0.272 | 0.222 | 0.249 | 0.546 | 0.308 | 0.129 | |

| Paths | CI [2.5%, 97.5%] | Hypotheses | Supported? |
|---|---|---|---|
| GAIA → PU | [−0.135, 0.000] | H1 | Yes |
| PU → AGAI | [0.031, 0.251] | H2 | Yes |
| GAIA → AGAI | [−0.145, −0.015] | H3 | Yes |
| GAIA → Trust in Websites | [−0.376, −0.166] | | |
| GAIA → Human-like Trust | [−0.445, −0.240] | | |
| GAIA → System-like Trust | [−0.466, −0.274] | | |
| Trust in Websites → PU | [0.423, 0.648] | | |
| Human-like Trust → PU | [0.029, 0.224] | | |
| System-like Trust → PU | [0.172, 0.354] | | |
| Trust in Websites → AGAI | [0.152, 0.382] | | |
| Human-like Trust → AGAI | [0.281, 0.457] | | |
| System-like Trust → AGAI | [0.140, 0.301] | | |
| PU → ITU | [0.359, 0.581] | H13 | Yes |
| AGAI → ITU | [0.197, 0.430] | H14 | Yes |

| Paths | CI [2.5%, 97.5%] | Hypotheses | Supported? |
|---|---|---|---|
| GAIA → ITQ | [−0.334, −0.098] | H4a | Yes |
| GAIA → SAP | [−0.363, −0.136] | H4b | Yes |
| ITQ → PU | [0.268, 0.485] | H7a | Yes |
| SAP → PU | [0.277, 0.536] | H7b | Yes |
| ITQ → AGAI | [0.057, 0.305] | H10a | Yes |
| SAP → AGAI | [0.058, 0.355] | H10b | Yes |

| Paths | CI [2.5%, 97.5%] | Hypotheses | Supported? |
|---|---|---|---|
| GAIA → ABL | [−0.426, −0.216] | H5a | Yes |
| GAIA → BEN | [−0.388, −0.170] | H5b | Yes |
| GAIA → INT | [−0.345, −0.129] | H5c | Yes |
| ABL → PU | [0.052, 0.308] | H8a | Yes |
| BEN → PU | [0.108, 0.360] | H8b | Yes |
| INT → PU | [0.033, 0.285] | H8c | Yes |
| ABL → AGAI | [0.011, 0.179] | H11a | Yes |
| BEN → AGAI | [0.142, 0.332] | H11b | Yes |
| INT → AGAI | [0.118, 0.306] | H11c | Yes |

| Paths | CI [2.5%, 97.5%] | Hypotheses | Supported? |
|---|---|---|---|
| GAIA → FAI | [−0.403, −0.201] | H6a | Yes |
| GAIA → ACC | [−0.343, −0.145] | H6b | Yes |
| GAIA → TRA | [−0.348, −0.129] | H6c | Yes |
| GAIA → EXP | [−0.439, −0.253] | H6d | Yes |
| FAI → PU | [0.219, 0.465] | H9a | Yes |
| ACC → PU | [−0.186, 0.082] | H9b | No |
| TRA → PU | [−0.187, 0.048] | H9c | No |
| EXP → PU | [0.308, 0.514] | H9d | Yes |
| FAI → AGAI | [0.034, 0.250] | H12a | Yes |
| ACC → AGAI | [−0.133, 0.072] | H12b | No |
| TRA → AGAI | [0.009, 0.231] | H12c | Yes |
| EXP → AGAI | [0.058, 0.280] | H12d | Yes |
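
The "Supported?" column in the table above agrees with a simple rule: a path counts as supported when its 95% bootstrap confidence interval excludes zero. The sketch below encodes that rule as an assumption about how the decisions were reached, not as the authors' stated procedure; the function name is hypothetical.

```python
def ci_excludes_zero(lower, upper):
    """True when the interval [lower, upper] lies entirely on one side of zero."""
    return lower > 0 or upper < 0


# Selected rows from the system-like trust table above
rows = {
    "GAIA -> FAI": (-0.403, -0.201),  # reported: Yes
    "ACC -> PU": (-0.186, 0.082),     # reported: No
    "TRA -> AGAI": (0.009, 0.231),    # reported: Yes
}

for path, (lower, upper) in rows.items():
    verdict = "Yes" if ci_excludes_zero(lower, upper) else "No"
    print(f"{path}: {verdict}")
```

Because the bounds are rounded to three decimals, an interval whose bound prints as 0.000 cannot be judged reliably from the rounded value alone.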

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, Y.; Tan, H. When Generative AI Meets Abuse: What Are You Anxious About? J. Theor. Appl. Electron. Commer. Res. 2025, 20, 215. https://doi.org/10.3390/jtaer20030215
