1. Introduction

In the digital age, unstructured text data have grown rapidly, necessitating advanced processing and analysis techniques. Topic labelling, the process of assigning labels that correspond to subjects or categories to unstructured text, is a significant application of text categorisation. Its main goal is to extract general tags that facilitate a variety of analytical applications. The approach has potential applications in many fields, each with its own distinct set of opportunities and challenges.

Content tagging apps use these techniques to improve user experience and search engine rankings by automatically classifying a range of digital content. Additionally, text classification aids in common applications such as automated response generation and email spam detection. By grouping user content, businesses can identify emerging trends and more accurately characterise different types of customers. Specifically, by enabling the extraction of valuable information from customer comments, text classification removes the need for time-consuming human evaluation in the product review process. Sentiment analysis, a related field, goes even further by predicting people’s feelings about specific topics based on text input. This is helpful for social and political discourse analysis in addition to economic analysis. For example, Ferrara and Ciano (2024) [1] recently demonstrated that short-term ESG stock price forecasts are considerably sharpened when explainable AI models are combined with news and social media signals, which serve as a proxy for market sentiment.

Storytelling skills are becoming more and more important in digital wine retail. Price, availability, and consumer trust can all be affected by a single expert review; however, these reviews are often long, full of metaphors, and written in a vocabulary (tannin, terroir, finish, etc.) that is difficult for sentiment analysis tools to interpret. Transforming such language into organised, e-commerce-ready information is therefore both a linguistic and a business challenge. In relation to this, Croijmans et al. [2] performed a computational analysis of reviews by wine experts and discovered that, despite assertions that wine descriptions lack information, experts describe wines consistently and informatively. Their research demonstrated how expertise can overcome limitations in describing sensory experiences like flavour and smell by revealing domain-specific vocabulary and the capacity to predict wine properties from review text. Reviews serve as dynamic quality signals in markets, as evidenced by the strong correlation between consumer price responses and expert wine ratings [3].

The main goal of this study is to investigate the use of state-of-the-art neural network architectures for the classification of wine reviews and the extraction of key attributes that characterise the quality and style of wine. By making it easier to create eye-catching advertisements and enhance websites, these techniques seek to support data analysis, decision-making, and the marketing process. The usefulness of deep learning methods in sentiment analysis, especially for social media content, has been reaffirmed by recent studies. For example, Rakshit and Sarkar [4] carried out a comprehensive study comparing different deep learning architectures that use Word2Vec and GloVe embeddings. Their results demonstrate how well Word2Vec-embedded models, particularly bidirectional recurrent architectures such as Bi-LSTM and Bi-GRU, classify tweet polarity. Furthermore, recent research demonstrates that graph convolutional network methods for text classification perform well on domain-specific tasks [5]. Similarly, Prakash and Vijay [6] show that hierarchical transformer-based sentiment analysis frameworks can combine explainability and aspect extraction, resulting in precise predictions and clear identification of unique sentiment signals for a given domain. These studies support the potential of cutting-edge machine learning techniques to enhance text classification and sentiment analysis across a range of applications. Other research [7] shows that generative AI systems can produce more persuasive visual content than human marketers, suggesting a natural connection between textual review extraction and the automated production of e-commerce marketing assets. However, conventional bag-of-words or flat RNN classifiers frequently misinterpret domain-specific polarity (‘dry’ is not a negative term) and fail to recognise the hierarchical structure of wine notes (word → sentence → review). As a result, prior research either hand-codes small lexica or avoids the issue of how text insights should be incorporated into marketing materials.

To fill these gaps, we present an end-to-end pipeline that (i) uses a Hierarchical Attention Network to classify expert reviews, (ii) uses a domain-specific lexicon to recalibrate sentiment, and (iii) transforms the resulting descriptors into AI-generated visuals that are then evaluated by users. The objective is to demonstrate how narrative language can be recoloured into content aimed at consumers after being grayscaled in the data. We examine this through four interrelated research questions (RQs), each focused on a crucial component of the pipeline’s architecture, functionality, and use.

- RQ1: How is the perceived stylistic difference between the two image sets related to (a) participants’ overall set-level preference and (b) the gap in their mean relevance/appeal ratings?

- RQ2: Which self-reported rating factors are predictive of appeal and relevance scores, and do these predictive weights change depending on wine marketing experience?

- RQ3: In terms of categorising expert wine reviews by style and quality, how does our Hierarchical Attention Network (with and without GloVe augmentation) compare with the Spectral-HAN and LSTM baselines?

- RQ4: When compared to a generic sentiment tool, does recalibrating sentiment scores using a wine-tuned lexicon improve aspect-level accuracy and decrease polarity errors?

Our primary contributions, which connect linguistic analysis, model innovation, and real-world e-commerce applications, are guided by these RQs:

- (1) We demonstrate a proof-of-concept prompt-engineering pipeline that converts key descriptors into AI-generated images, and we validate its efficacy through a web-based user study assessing stylistic preferences and rating factors (addressing RQ1 and RQ2).

- (2) We develop a hierarchical attention classifier that surpasses flat LSTM and Spectral-HAN baselines and also rivals published DistilBERT results (addressing RQ3).

- (3) We release a cleaned corpus of 46,000 Wine Enthusiast reviews, plus the full web interface and assets used in our user study, to enable reproducible benchmarking and domain-specific sentiment research (supporting RQ3 and RQ4).

The state of the art in sentiment analysis, wine informatics, and text classification is reviewed in Section 2. The dataset, neural architectures, and evaluation methodology are explained in Section 3. The experimental results are presented in Section 4, and the commercial implications, limitations, and future work directions are examined in Section 5. Section 6 concludes the study.

2. Literature Review

Natural language processing (NLP) and machine learning have fueled recent advances in text classification and sentiment analysis, enabling the extraction of useful details from large amounts of unstructured data. This section examines the evolution of text categorisation methods, explores the creation of neural network designs, and illustrates how these tools are applied to the analysis of complex textual domains such as wine reviews.

2.1. Evolution of Text Classification and Topic Labelling

Text classification, the process of assigning predefined categories to textual data, has been a foundational task in natural language processing (NLP). Many approaches rely on statistical and machine learning methods, such as Random Forest, Naïve Bayes classifiers, and Support Vector Machines (SVMs), for tasks such as spam filtering [8], content filtering [9], or document classification [10]. One notable advance in the area was Latent Dirichlet Allocation (LDA), which represents documents as mixtures of topics, where each topic is characterised by a distribution over words [11]. It works especially well for uncovering hidden patterns in large datasets, making it possible to find recurring themes in documents [12]. By adding more statistical information to improve topic coherence, LDA variants such as the Doubly Correlated Topic Model expand on its potential [13]. Deep learning and clustering methods are also frequently used for topic modelling [14].
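The description of LDA as a mixture model can be written compactly. The block below is a sketch of the standard generative formulation; the symbols ($\alpha$, $\beta$, $\theta_d$, $\phi_k$, $K$) are conventional notation assumed here and are not taken from this paper.

```latex
\begin{align}
\theta_d &\sim \mathrm{Dirichlet}(\alpha), &
\phi_k &\sim \mathrm{Dirichlet}(\beta), \\
z_{d,n} &\sim \mathrm{Categorical}(\theta_d), &
w_{d,n} &\sim \mathrm{Categorical}(\phi_{z_{d,n}}), \\
p(w \mid d) &= \sum_{k=1}^{K} \phi_{k,w}\,\theta_{d,k}
\end{align}
```

Here $\theta_d$ is the topic mixture of document $d$, $\phi_k$ is the word distribution of topic $k$, and the last line states that the probability of a word in a document is a topic-weighted average of the per-topic word probabilities.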

Class imbalance and label ambiguity pose challenges that must be understood, including when evaluating results. Some studies have investigated the relationship between changes in a classifier’s confusion matrix and the resulting metrics. One such study introduces the concept of “measure invariance,” a critical property that determines whether a performance measure is sensitive to certain changes in classification outcomes [15]. Tasks involving human communication, such as sentiment analysis, may require measures, like the area under the curve (AUC), that are more sensitive to shifts in both positive and negative classifications.
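The idea of measure invariance can be illustrated with a small numerical check. The sketch below uses made-up confusion-matrix counts (an assumption for illustration only) and shows that precision and recall do not change when only the number of true negatives changes, whereas accuracy does.

```python
def metrics(tp, fn, fp, tn):
    """Return accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }

# Illustrative counts only; they are not results from this study.
base = metrics(tp=40, fn=10, fp=20, tn=30)
more_tn = metrics(tp=40, fn=10, fp=20, tn=130)  # only true negatives change

# Measures whose value is unaffected by the change in true negatives.
invariant = [name for name in base if abs(base[name] - more_tn[name]) < 1e-12]
print(invariant)
```

A measure that ignores true negatives may be a poor choice when correct handling of the negative class matters, which is one reason to consider measures that respond to both classes.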

2.2. Sentiment Analysis and Its Diverse Applications

Sentiment analysis involves determining the emotional tone behind a body of text, ranging from positive and negative sentiments to more nuanced emotional states. It has applications across domains, including market research, social media monitoring, and customer feedback analysis [16]. Traditional sentiment analysis methods employed lexicon-based approaches, which matched words with predefined sentiment scores. However, these methods often failed to account for context, sarcasm, and idiomatic expressions.
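A minimal sketch of lexicon-based scoring is given below, together with a domain override of the kind that a specialised vocabulary calls for. The word lists and scores are hypothetical and chosen only for illustration; they are not the lexicon used in this study.

```python
import re

# Hypothetical generic lexicon: word -> polarity score.
GENERIC_LEXICON = {"dry": -1.0, "sharp": -1.0, "flat": -1.0,
                   "elegant": 1.0, "balanced": 1.0}

# Hypothetical wine-specific overrides: neutral descriptors in this domain.
WINE_OVERRIDES = {"dry": 0.0, "sharp": 0.0}

def score(text, lexicon):
    """Sum the polarity of every known word; unknown words count as 0."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(lexicon.get(tok, 0.0) for tok in tokens)

# Domain lexicon = generic lexicon with wine-specific entries replaced.
wine_lexicon = {**GENERIC_LEXICON, **WINE_OVERRIDES}

review = "A dry, elegant white with a balanced finish."
generic_score = score(review, GENERIC_LEXICON)
wine_score = score(review, wine_lexicon)
print(generic_score, wine_score)
```

With the generic lexicon, "dry" pulls the score down even though it is a neutral style descriptor for wine; the override removes that penalty. The sketch still ignores context, negation, and sarcasm, which is the limitation noted above.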

Deep learning models have significantly advanced sentiment analysis by learning from large datasets to recognise complex patterns and contextual cues. The effectiveness of embedding techniques like Word2Vec and GloVe combined with bidirectional architectures such as Bi-LSTM and Bi-GRU in capturing the polarity of tweets has been demonstrated [4]. This highlights the importance of pretrained word embeddings, paired with context-sensitive architectures, in improving sentiment classification accuracy.

Aspect-based Sentiment Analysis (AbSA) has emerged as a specialised domain of sentiment analysis, offering a more granular approach by analysing sentiments at the level of specific aspects or entities within text. Unlike traditional sentiment analysis, which often evaluates the overall tone of a document or sentence, AbSA focuses on determining sentiments tied to distinct aspects, enabling a better understanding of user opinions [16,17,18]. Despite its potential, the field presents several challenges that continue to inspire research and innovation.

One major challenge in AbSA is aspect extraction (AE), which involves identifying explicit aspects, implicit aspects, and Opinion Target Expressions (OTEs). Researchers have explored various machine learning and deep learning approaches to improve AE. However, handling cross-domain and cross-lingual scenarios remains a significant hurdle, and effective mapping of relationships between aspects and sentiments is an ongoing concern [19].

Similarly, the classification process, which assigns sentiment polarities to extracted aspects, faces its own set of difficulties. Models must account for interactions, dependencies, and contextual–semantic relationships between aspects, sentiment words, and surrounding text. Challenges such as handling multiword targets and managing inter-aspect dependencies remain active areas of research [20].

Another emerging area within AbSA is sentiment evolution (SE), which seeks to understand and predict how sentiments about aspects change over time using mechanisms such as social network analysis or epistemic network analysis. Recognising dynamic factors such as cognitive attitudes, social behaviours, and external influences poses a significant challenge. Additionally, predicting these changes in dynamic social media environments or within a blended learning context is an open problem that requires further exploration [21].

2.3. Advanced Neural Network Architectures for Text Analysis

Many deep learning architectures have facilitated text analysis innovations in recent years. Originally designed for image recognition, Convolutional Neural Networks (CNNs) have been adapted to NLP by applying convolutional filters over embedding matrices to capture local textual information. Especially on large annotated corpora, these filters greatly improve classification performance and are effective at spotting n-gram-like patterns [22]. CNNs by themselves, however, can struggle to handle the longer dependencies inherent in natural language.
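To make the n-gram intuition concrete, the sketch below slides a single filter of width two over a toy sequence of word vectors and applies max-over-time pooling. The vectors and filter weights are invented for illustration; a trained model would learn many such filters.

```python
def conv1d_max_pool(embeddings, kernel):
    """Slide one filter over consecutive word vectors, apply ReLU, then max-pool."""
    width = len(kernel)
    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter and the current window of word vectors.
        total = sum(
            w * x
            for k_row, e_row in zip(kernel, window)
            for w, x in zip(k_row, e_row)
        )
        activations.append(max(0.0, total))  # ReLU non-linearity
    return max(activations), activations

# Toy 2-dimensional embeddings for a four-word sentence (illustrative values).
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# A width-2 filter, i.e. a bigram detector (illustrative weights).
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]

pooled, feature_map = conv1d_max_pool(sentence, bigram_filter)
print(feature_map, pooled)
```

Each entry of the feature map responds to one two-word window, so the filter only ever sees local context; this is the source of the difficulty with longer dependencies.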

Recurrent Neural Networks (RNNs), especially Long Short-Term Memory (LSTM) networks [23] and their bidirectional variants (i.e., BiLSTM), address this problem by using gating mechanisms to store and retrieve information across long text sequences. Effective at representing temporal and contextual interactions, these designs suit text categorisation, sentiment analysis, and other applications involving sequential data. Nevertheless, because of vanishing or exploding gradients, conventional RNNs can still have difficulty processing extremely long documents.
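For reference, the gating behaviour of an LSTM cell is commonly written as follows. This is the widely used formulation with a forget gate, given as a sketch; the weight names ($W$, $U$, $b$) are conventional notation assumed here rather than symbols from this paper.

```latex
\begin{align}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{align}
```

The forget gate $f_t$ and input gate $i_t$ decide how much of the previous cell state $c_{t-1}$ is kept and how much new information is written, which is what allows information to persist across long sequences. A bidirectional variant runs one such recurrence left to right and another right to left, then concatenates the two hidden states.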

Transformer-based models, most famously BERT, extend these capabilities through multi-head attention, enabling them to process whole sequences in parallel rather than step by step. BERT achieves state-of-the-art performance on several NLP benchmarks by learning context from both left and right tokens using a bidirectional training objective. Transformers can, however, overfit smaller domain-specific datasets and can be computationally costly; hence, careful fine-tuning is advised.
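The core operation behind this parallel processing is scaled dot-product attention. The sketch below implements a single attention head in plain Python without the learned query, key, and value projections or the multiple heads that a real transformer uses; the token vectors are invented for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(QK^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three toy token vectors; self-attention uses them as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = attention(tokens, tokens, tokens)
print(contextual)
```

Every output row depends on all tokens at once, to the left and to the right, and no row has to wait for another, which is why the computation can be carried out for the whole sequence in parallel.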

Hierarchical Attention Networks (HANs) [24] combine recurrent units with attention at both the word and sentence levels. By modelling text hierarchically, with words grouped into sentences and sentences into documents, HANs give finer-grained control over which words or sentences matter most for classification. This is particularly important for wine reviews, which frequently contain several sentences describing flavour, scent, and quality in different ways. Moreover, attention mechanisms enable the model to focus on domain-specific vocabulary, possibly capturing subtle variations in wine language [25].
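The two-level pooling can be sketched as follows. This is a simplified illustration, not the architecture evaluated in this study: the recurrent encoders are omitted, the learned projection inside the attention is replaced by a plain tanh, and all vectors, including the two context vectors, are made-up values.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(vectors, context):
    """Score each vector against a context vector and return the weighted sum."""
    # Simplification: tanh(vector) stands in for tanh(W * vector + b).
    scores = [sum(math.tanh(x) * c for x, c in zip(vec, context)) for vec in vectors]
    weights = softmax(scores)
    dim = len(vectors[0])
    pooled = [sum(w * vec[j] for w, vec in zip(weights, vectors)) for j in range(dim)]
    return pooled, weights

# A toy review: two sentences, each a list of 2-dimensional word vectors.
review = [
    [[0.1, 0.0], [2.0, 0.5]],
    [[0.0, 0.1], [0.2, 0.1], [0.1, 1.5]],
]
word_context = [1.0, 1.0]      # hypothetical word-level context vector
sentence_context = [1.0, 0.0]  # hypothetical sentence-level context vector

# Level 1: pool the words of each sentence into a sentence vector.
sentence_vectors = [attention_pool(words, word_context)[0] for words in review]
# Level 2: pool the sentence vectors into a single review vector.
review_vector, sentence_weights = attention_pool(sentence_vectors, sentence_context)
print(sentence_weights, review_vector)
```

The attention weights at each level indicate which words and which sentences contributed most to the final representation, which is what makes the hierarchy useful for inspecting domain-specific vocabulary.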

Taken together, these designs (CNNs, RNNs such as LSTM and BiLSTM, transformers such as BERT, and hierarchical models such as HAN) offer complementary approaches to text analysis. Combining them with rich word embeddings (e.g., Word2Vec, GloVe) improves semantic understanding and therefore supports more accurate classification of complex material, including expert wine evaluations. The next sections address how these designs might be adapted and assessed for the specific goal of wine review classification.

2.4. Analyzing Wine Reviews: Challenges and Techniques

2.4.1. The Complexity of Wine Language

When analysing wine categories based on evaluations, one must first understand the wine language, or winespeak. People who often drink or taste wine have their own vocabulary for referring to a wine’s acidity, sweetness, alcohol level, flavour, colour, and other properties. Flavour is the most subjective and most richly described characteristic, evoking associations with fruits, flowers, spices, herbs, or chocolate. In this respect, choosing a wine is quite similar to choosing a coffee. Both resemble the arts, where music, paintings, or sculptures may be interpreted differently depending on the subjectivity, sensibility, or experience of the consumer.

Several papers examine whether the language and expressions used in wine reviews influence the price of wines. For instance, Ref. [26] tests and supports the following hypotheses: higher-priced wine reviews will be more verbose; wine reviewers will use more emotional language with lower-priced wines; wine reviewers will use more logical language with higher-priced wines; and wines of higher price will have more social language in reviews. It was stated that sophisticated reviews usually appear for expensive, and therefore presumably better, wines, while concise and straightforward comments accompany cheap wines. In any case, understanding wine language increases a consumer’s confidence in selecting a type of wine and knowing what to expect.

Online marketing became extremely important as the COVID-19 pandemic raised both the number of social media users and the time spent online. Any product can become prominent and go viral with effective promotion and sharing, and social platforms have caused unexpected viewpoints and products to emerge suddenly. One study [27] examined how wine companies use Instagram in response to the rise of visual communication tools and the pressure to stay on trend while showcasing brand reputation. It underlines the importance not just of the language used but also of who speaks it and through which communication channels. Another study [28] examined the digital presence and consumer engagement of 311 Greek wineries on Facebook and Instagram between 2019 and 2022, a period that includes the COVID-19 pandemic. The results show consistently low levels of interaction on wineries’ profiles, which emphasises the need for more study of winery marketing strategies and implies that the suggested three-phase social media analytics methodology can act as a model for improving brand engagement across many sectors.

Reviews of red and white wines have also been compared lexically [29]. Expert reviews are more analytical and detailed for red wines, and more impersonal and credible for white wines. White wine reviews are therefore considered more reliable.

Whether the reviewer is an expert or not, they might use many metaphors when describing the properties of wine, depending on their knowledge and background. Expressions coming from textiles (e.g., silky, velvety, satiny, pillowy), architecture (e.g., structure and balance), physiology (e.g., breeding or ageing), and anatomy (e.g., skeleton or spine) may occur in the language that describes wine [30].

A quantitative analysis indicated that wine reviewers mainly use the WAW (Wine Aroma Wheel) and WSET (Wine and Spirit Education Trust) standard vocabularies, composed by oenologists in 1984 and 2023, respectively [31]. Only 10.5% of reviewers follow other wine language patterns, in which the terms “juicy”, “dark”, “crisp”, “firm”, and “structured” occur with the highest frequency.

2.4.2. Machine Learning and Neural Networks in Wineinformatics

The field of wineinformatics was proposed at the intersection of data science, artificial intelligence, and oenology. It is used mostly for wine quality prediction, but it also has other applications, such as market analysis, viticulture optimisation, and flavour profiling. Wine quality prediction is performed by applying machine learning models to reviews, feedback, or wine competition results.

Comparative studies demonstrate the effectiveness of ensemble methods in wine quality prediction. According to one study, AdaBoost and Random Forest excel in classifying Pinot Noir [32], while another finds Random Forest to be especially successful at predicting white wine quality [33].

Classifying numerical data is relatively straightforward, but the textual elements of wine reviews and feedback introduce linguistic nuances that demand more sophisticated methods. Neural networks, including CNN, BiLSTM, and BERT, have proven particularly adept at modelling these complexities. For instance, research applying ANNs to wine technology illustrates their ability to uncover intricate patterns in data that simpler approaches may miss [34].

Text analysis in wine research has shifted from traditional machine learning to deep learning, which employs architectures such as Feedforward Neural Networks (FNNs), Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs), and transformer networks (such as BERT). One study [35] used acidity, sugar, and alcohol content to predict red and white wine quality. Another, dual-method technique employed exploratory data analysis and a VGG19 CNN [36] to estimate the likelihood of distinct quality groups, demonstrating deep learning’s ability to capture complex connections between chemical properties and overall quality. NLP research has also shown potential in the analysis of consumer-facing wine data such as ratings, reviews, and social media posts. A recent study [37] developed a recommendation system that uses BERT to identify wine varietals, with the aim of raising consumer expectations and overall quality perception.

Data from an electronic nose (i.e., an intelligent sensor system used in the food and beverage industries) were examined [38] using Artificial Neural Networks (ANNs) and basic machine learning techniques such as KNN and SVM. A similar detection method employed a backpropagation neural network (a form of FNN) to classify wines and liquors [39]. Deep learning models have demonstrated remarkable effectiveness in extracting knowledge from challenging datasets, frequently improving through synergy with other analytical methods. For example, merging feedforward neural networks with evolutionary algorithms resulted in accurate prediction of fermentation factors [40]. Another study [41] used molecular composition and ANN-based spectroscopic analysis to categorise grape varietals and measure ripeness, while research on hyperspectral images of grapes [42] shows that CNNs, Inception Time Networks, and MiniRocket can estimate sugar content.

In terms of market analysis, text mining of wine reviews revealed diverse customer profiles across countries. The American market favours berry-like, chocolate, and citrus overtones, whilst the British market prefers jammy, berry, cherry, and dark chocolate flavours. Chinese consumers want refreshing, delicate flavours with mellow scents [43]. Consumer reviews have also been harvested from Twitter to identify sentiment towards red wines [44]. In terms of variety categorisation, Lefever et al. [45] employed text mining on wine reviews to predict a wine’s colour, grape variety, and country of origin. Chemometric and sensometric approaches [46] and language processing techniques [47] have also been used.

Recent advances in wineinformatics show a growing trend toward integrating sophisticated data analysis techniques into oenology. One notable approach employs a tri-clustering algorithm that discovers latent groupings spanning wine characteristics, attributes, and vintage information [48]. Other researchers used Naïve Bayes and SVM classifiers to unravel the complexities inherent in Bordeaux wines [49]; however, neural networks outperformed both Naïve Bayes and SVM on a Bordeaux dataset comprising more than 10,000 wines [51]. Adding further depth to the field, a recent contribution introduces the Computational Wine Wheel, a natural language processing tool specifically designed to sift through wine reviews [50].

Most studies of wine reviews focus on quality prediction or on classical text mining using AdaBoost, Random Forest, Siamese Neural Networks, or CNNs. Hierarchical Attention Networks (HANs) have rarely been applied to wine reviews, even though their word-to-sentence-to-document structure is well suited to the complexities of wine language.

By extension, the classification of wine reviews can also be applied to assortments of coffee, chocolate, beer, or tea, and even to food pairing, for example using Generative Adversarial Networks as a route to generative artificial intelligence [52] or Siamese Neural Networks, i.e., twin neural networks [53].

Collectively, these studies highlight the promise of deep learning techniques for identifying links and trend analysis in wine datasets. They yield scalable, accurate solutions for quality assessment and control while also proving that wine language can predict key features, with review length and word choice influencing pricing and rating.

2.5. Bridging Textual Analysis and Visual Content Generation

2.5.1. Generative Models and Marketing Innovation

Digital marketing is one of the most dynamic areas of growth for a company. Recent developments in technology, coupled with the widespread accessibility of digital devices, are driving its evolution from a subcategory of classic marketing into a new, almost independent field. As V. Soni [54] highlights, digital marketing is increasingly focusing on the user, and measurable, interactive, and generative models are playing an essential role in this evolution.

Generative artificial intelligence (GenAI) models offer revolutionary capabilities for marketing, a field that, according to [55], is predicted to experience the greatest impact from this technology, with projected productivity gains of up to 15% of marketing expenditure, translating to an estimated $463 billion annually. GenAI can generate personalised marketing content, such as product descriptions, emails, and advertisements tailored to individual preferences and behaviours [56]; it can produce dynamic advertisements that adapt in real time to user behaviour or market trends; and it can automate the analysis of consumer feedback, social media data, and market trends [57]. It is thus unsurprising that many companies have already begun leveraging GenAI for essential and innovative marketing initiatives. A study [58] involving several marketing professionals showed that all the specialists interviewed had already explored the possibilities offered by different text-to-image models, such as DALL-E, Midjourney, or Stable Diffusion.

The DALL-E model can convert textual inputs into vivid visual representations. By mapping abstract textual descriptions into detailed images using learned text representations, models like this bridge the gap between linguistic data and creative visual outputs, thus facilitating the creation of targeted advertisements and enabling deeper consumer engagement. Studies show that personalised marketing materials generated using AI increase click-through and conversion rates by more than 30% [59]. In addition, AI-generated content reduces production costs and time, empowering companies to innovate, foster engagement, and expand their reach in a competitive market.

2.5.2. Applications in the Wine Industry

The wine industry, known for its deep connection to tradition and craftsmanship, would greatly benefit from exploring the potential of GenAI to bring innovation to areas such as marketing, product design, and consumer education while maintaining its artisanal roots.

We have already established that wine aficionados have their own rich vocabulary for describing product characteristics, and that this vocabulary often evokes vivid imagery. Wine reviews are often almost artistic in their description of the product, so the wine industry can benefit from combining textual analysis of reviews with visual content generation.

By extracting key descriptors from reviews, wineries can create imagery that reflects the sensory attributes and narratives associated with their products. As Refs. [60,61] emphasise, the connection between sensory descriptors and visual narratives fosters a stronger emotional bond with consumers. Using generative models like DALL-E [62] to convert sensory descriptors into visually compelling imagery would enhance brand perception and consumer loyalty. Consumers’ understanding and appreciation of complex wine characteristics can be significantly enhanced by translating sensory data into visuals. For example, a wine described as “crisp with notes of green apple” can be represented with imagery that reflects freshness and lightness [63].
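As a rough illustration of how extracted descriptors might be turned into input for a text-to-image model, the sketch below assembles a prompt string from a ranked list of terms. The function name, the template wording, and the `max_terms` parameter are hypothetical choices made for this example; they are not the prompt-engineering pipeline used in this study, and no image-generation API is called.

```python
def build_image_prompt(descriptors, wine_style, max_terms=4):
    """Compose a text-to-image prompt from ranked review descriptors."""
    seen = []
    for term in descriptors:
        cleaned = term.strip().lower()
        # Keep the first occurrence of each non-empty descriptor, in rank order.
        if cleaned and cleaned not in seen:
            seen.append(cleaned)
    chosen = seen[:max_terms]
    # Hypothetical template; real prompts would be tuned for the target model.
    return (
        f"Product photograph of a {wine_style} wine bottle, "
        f"evoking {', '.join(chosen)}; soft natural light, minimal background"
    )

prompt = build_image_prompt(["crisp", "Green Apple", "crisp", "citrus"], "white")
print(prompt)
```

Keeping the descriptors in ranked order and capping their number is one simple way to stop a long review from producing an overloaded prompt.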

Another area of the wine industry in which generative models could have a significant impact is the design of wine labels, a critical marketing tool that allows products to stand out in competitive markets. A promising use of AI in this area would be to generate customised label designs based on sensory keywords extracted from wine reviews. Ref. [64] indicated that aesthetically engaging labels significantly influence consumer purchasing decisions, making this application both practical and profitable. Moreover, such personalised designs make it easier to take cultural and regional preferences into account, whether they are generally known or derived from textual product reviews. For example, in the Chinese market, where elegance and tradition are highly appreciated by consumers, generative models could create designs that include subtle patterns and traditional motifs that align with local tastes [43]. By bridging cultural nuances with advanced AI technologies, wineries can expand their global appeal.

2.6. Applications of Neural Networks in E-Commerce

There is little doubt that e-commerce’s future is data driven, customer-centric, and highly experiential. AI models enable marketers to listen to the customer’s voice at scale, distilling actionable sentiment and intent signals from a constant flow of data.

Recent reviews on machine learning (ML) and deep learning (DL) in e-commerce show several research directions, including sentiment analysis, recommendation systems, disinformation detection, fraud detection, prediction of customer churn, and product classification [65,66,67]. Researchers increasingly employ transformer-based models, such as BERT and GPT, which can capture nuanced contextual meanings and outperform earlier approaches based on RNNs or CNNs. Hierarchical Attention Networks (HANs) can effectively summarise lengthy customer reviews by identifying critical sentences or aspects [68]. Deep learning techniques and neural factorisation can aid personalisation, enabling e-commerce platforms to offer more precise product recommendations adapted to individual user behaviour [69]. Meanwhile, CNN-based and hybrid neural network approaches, aided by rule-based classifiers, can distinguish genuine reviews from manipulated ones, reinforcing trustworthiness on e-commerce platforms [70]. Although powerful, these models present ongoing challenges, including multilingual application difficulties, interpretability issues, and computational complexity, particularly with respect to real-time processing [71].

Another recent trend in digital commerce is AI-driven marketing personalisation. Researchers have been developing sophisticated recommender systems and targeting algorithms that go beyond one-size-fits-all approaches. Yıldız et al. (2023) [72] present a hyper-personalised product recommendation system for fashion retail. Their approach integrated multiple data mining techniques: (1) RFM-based customer segmentation to group shoppers by behaviour, (2) association rule mining on each segment (considering location and purchase history) to learn preferences, and (3) a recommendation engine tailored to each segment’s profile. By segmenting customers and then providing group-specific recommendations, this system attempts to address the gap between individual personalisation and scalable marketing. The result is a more context-aware recommender that can, for example, suggest seasonal apparel relevant to a customer’s region and buying patterns. In this regard, the common thread is using AI to learn from consumer interactions (clicks, views, past purchases) and then optimise the marketing mix for each consumer.
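The first step of such a pipeline, RFM (recency, frequency, monetary) segmentation, can be sketched in a few lines. The order data, the segment names, and the thresholds below are invented for illustration and are not taken from the cited study.

```python
from datetime import date

def rfm_scores(orders, today):
    """Return {customer: (recency_days, frequency, monetary)} from (customer, date, amount) rows."""
    summary = {}
    for customer, day, amount in orders:
        last, count, spend = summary.get(customer, (None, 0, 0.0))
        last = day if last is None or day > last else last  # most recent purchase
        summary[customer] = (last, count + 1, spend + amount)
    return {c: ((today - last).days, n, total) for c, (last, n, total) in summary.items()}

def segment(recency_days, frequency, monetary):
    """Assign a segment using hypothetical thresholds (monetary is unused here)."""
    if recency_days <= 30 and frequency >= 2:
        return "loyal"
    if recency_days > 90:
        return "lapsed"
    return "occasional"

# Illustrative order history.
orders = [
    ("ana", date(2024, 5, 1), 40.0),
    ("ana", date(2024, 5, 20), 25.0),
    ("ben", date(2024, 1, 10), 120.0),
]
today = date(2024, 6, 1)

scores = rfm_scores(orders, today)
segments = {c: segment(*vals) for c, vals in scores.items()}
print(scores, segments)
```

Association rule mining and the recommendation engine would then be run separately within each resulting segment, which is what keeps the approach scalable while remaining more specific than a single global model.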

2.7. Synthesis and Research Gap

As established through the literature review, significant progress has been made in textual and sentiment analysis, enhancing their applicability across diverse domains, including wine reviews. These advances allow us to better understand subjective content, with new subdomains such as AbSA (Aspect-based Sentiment Analysis) giving us the opportunity to capture finer details regarding the consumer perceptions of wines, wineries, or producers.

Likewise, developments in neural network topologies, notably Convolutional Neural Networks, Inception Recurrent Convolutional Neural Networks, and BERT models, have pushed the boundaries of text analysis capabilities, providing reliable and accurate predictions and demonstrating robust generalisation abilities.

However, there are also a few challenges that must be considered gaps when looking at the application of such techniques, especially in the particularly complex field of oenology.

Research in computational wine analysis has revealed significant challenges in processing review text. Current methods struggle with context-dependent interpretations and regional variations in wine descriptions. These linguistic complexities particularly affect Aspect-based Sentiment Analysis, as overlapping references and subjective expressions make it difficult to accurately identify and classify wine components. Reviewer preferences and cultural backgrounds further compound these challenges, affecting how sentiments are expressed and interpreted across different markets.

Despite these advances, gaps exist in the application of advanced text categorisation and sentiment analysis techniques to specialised domains such as wine reviews. Current models can struggle to capture the subtle vocabulary, complicated descriptions, and industry-specific terminology found in wine-related literature. Aspect-based Sentiment Analysis (AbSA) can have difficulties in accurately extracting and understanding implicit aspects and multi-word expressions specific to this discipline. Furthermore, the issue of class imbalance exists in wine evaluations, where specific sentiment classes or characteristics may be underrepresented, compromising the strength of classification algorithms. The distinct vocabulary and interpretive elements of wine reviews pose unique analytical challenges. More advanced language models would enhance our understanding of wine evaluation and description practices.

Deep learning models are widely employed in text analysis; however, the adoption of advanced models like Hierarchical Attention Networks (HANs) for wine review classification has been relatively limited. In instances where the HAN has been applied, its effectiveness in sensory descriptor extraction has varied, suggesting a need for more tailored approaches.

Another related area for which relatively limited research has been conducted so far is the one regarding the possibilities of connecting textual analysis and visual content generation. Generative AI has shown considerable promise in developing visual content for personalised marketing materials. Furthermore, several advantages of using generative models such as DALL-E or Midjourney have been clearly identified, such as increasing conversion rates, reducing necessary production resources, and increasing efficiency. However, there is still a scarcity of literature on combining textual analysis with other data modalities, such as sensory profiles or regional characteristics, to enhance wine categorisation.

Moreover, the scarcity of large-scale, annotated datasets specific to wine reviews presents a significant barrier to the development and validation of robust text analysis models.

The goal of our research is to further explore the application of advanced neural network architectures to classify wine reviews and extract the salient characteristics that define the quality and style of wine, as well as to investigate the possibilities of automatically generating narratives based on topic modelling results. To achieve this, we scraped a large dataset from Winemag.com, which includes over 46,000 wine reviews collected between 2020 and 2023. This dataset contains diverse attributes such as ratings, descriptions, prices, and wine origins, providing a rich foundation for our analysis. We will evaluate the performance of four different neural network models on a wine classification task: the Hierarchical Attention Network (HAN), the Spectral Hierarchical Attention Network (SpectralHAN), HAN augmented with pre-trained GloVe embeddings (HAN + GloVe), and a Long Short-Term Memory network with GloVe embeddings (LSTM + GloVe).

3. Methods

Figure 1 presents the general methodology of our study, illustrating the process from data collection through analysis to the generation of marketing content. The process consists of five main stages: (1) data collection and pre-processing of wine reviews, (2) sentiment analysis using multiple tools, (3) neural network modelling with four different architectures, (4) characteristic extraction from high-quality reviews, and (5) marketing content generation. Each stage builds upon the results of previous stages, creating a framework for wine review analysis and marketing content generation. The following subsections detail each component of this pipeline.

3.1. Data Scraping

We constructed a web scraper in Python 3.9.12 to collect wine reviews from Winemag.com. It uses BeautifulSoup to parse HTML content from multiple pages, extracting details like ratings, descriptions, prices, and wine origins. The structure of the extracted data is shown in

Table 1.

Table 2 presents the number of wine reviews scraped over a four-year period from 2020 to 2023.

The country distribution of the reviewed wine is shown in

Table 3.

As highlighted in

Table 3, in our dataset, the United States has the highest number of winery reviews, with more than 21k, significantly exceeding France and Italy. Other countries like Portugal, Spain, and Australia also have notable representation, while smaller wine-producing countries such as Uruguay and Romania are also represented.

After the scraping process was completed, the data were saved to a JSON file, which was used in the next steps of the methodology.

3.2. Data Preprocessing

The data were read from the JSON file constructed during the data scraping step. By using NLTK, a popular text processing Python library, we initiated our preprocessing by tokenising text and applying fundamental cleaning steps—converting to lowercase and removing common words (like “the”, “is”, “at”) and punctuation marks. This created a cleaner corpus where each review was represented as a sequence of meaningful words.

To build our vocabulary, we analysed how frequently each word appeared across the training dataset. Words appearing fewer than five times were filtered out to reduce noise and keep the vocabulary manageable. We then created a mapping that converts words into integer indices that our model can process, including special markers for padding shorter texts and for handling new words not seen during training.
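The vocabulary step above can be illustrated with a minimal sketch. The marker names `<pad>` and `<unk>`, the function names, and the toy reviews are assumptions made for illustration; only the frequency threshold of five comes from the text.

```python
from collections import Counter

PAD, UNK = "<pad>", "<unk>"  # hypothetical marker names, not taken from the paper


def build_vocab(tokenised_reviews, min_freq=5):
    """Map every token seen at least `min_freq` times to an integer index."""
    counts = Counter(tok for review in tokenised_reviews for tok in review)
    vocab = {PAD: 0, UNK: 1}  # reserve the first two indices for the markers
    for tok, freq in counts.most_common():
        if freq >= min_freq:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Convert tokens to indices, sending unseen or rare words to UNK."""
    return [vocab.get(tok, vocab[UNK]) for tok in tokens]


# Toy corpus: "ripe" and "tannin" occur five times, "rare" only once.
reviews = [["ripe", "tannin"]] * 5 + [["rare"]]
vocab = build_vocab(reviews)
encoded = encode(["ripe", "rare", "tannin"], vocab)
```

In this toy example, "rare" falls below the threshold, so it is absent from the vocabulary and is encoded with the unknown-word index.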

Given the hierarchical nature of our model architecture, we used a two-level structure to process reviews. Each review was broken down into sentences, with a limit of 15 sentences per review. We limited the word count in each sentence to 50. Reviews that exceeded these limits were trimmed, while shorter ones were padded to ensure consistent dimensions. This hierarchical structuring balances structural information with fixed input sizes via padding and truncation.
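As an illustration of the two-level structure, the sketch below pads or truncates an index-encoded review to a fixed 15 × 50 grid. The padding index 0 and the function name are assumptions; the limits of 15 sentences and 50 words are those stated above.

```python
MAX_SENTS, MAX_WORDS, PAD_ID = 15, 50, 0  # PAD_ID = 0 is an assumption


def pad_review(sentences, max_sents=MAX_SENTS, max_words=MAX_WORDS, pad_id=PAD_ID):
    """Truncate or pad a list of index-encoded sentences to a fixed grid."""
    grid = []
    for sent in sentences[:max_sents]:  # drop sentences beyond the limit
        sent = sent[:max_words]  # drop words beyond the limit
        grid.append(sent + [pad_id] * (max_words - len(sent)))
    while len(grid) < max_sents:  # add all-padding sentences if needed
        grid.append([pad_id] * max_words)
    return grid


# A two-sentence review: one short sentence and one with 60 words.
grid = pad_review([[5, 6, 7], list(range(1, 61))])
```

Every review then has the same shape, which allows reviews to be batched regardless of their original length.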

3.3. Sentiment Analysis

VADER, TextBlob, and Flair are tools for sentiment analysis, each with its own approach, strengths, and weaknesses, as further discussed in

Table 4. VADER [

73] and TextBlob [

74] are mainly lexicon-based tools for sentiment analysis. VADER evaluates the emotional intensity of words and phrases, providing sentiment scores on a scale from −1 (most negative) to 1 (most positive), with 0 indicating neutral sentiment. TextBlob offers comparable polarity scoring, complemented by a subjectivity score, and its noun-phrase extraction can be used to relate sentiment to specific subjects or topics in the text. Flair [

75] employs neural network models and contextual string embeddings, allowing it to capture more nuanced sentiment based on the surrounding context of words and phrases. These techniques have been widely used together or apart to capture the public sentiment on a wide range of problems from sustainability [

76] to political discourse [

77].

Using the tools from

Table 4, we assessed the sentiment polarity of the reviews in our dataset and analysed the results through correlation matrices, descriptive statistics, and the percentage of positive sentiments identified by each tool. The results are shown in

Table 5.

The correlation between sentiment scores and critic ratings is low, 0.316 for VADER, 0.131 for TextBlob, and 0.282 for Flair, showing that a high sentiment score does not necessarily map to a high wine rating and vice versa. Descriptive statistics reinforce the point: all three tools mark most reviews as positive (80.94% for VADER, 80.21% for TextBlob, and a striking 91.10% for Flair). Such skew may reflect the generally appreciative tone of professional wine prose, yet it also suggests that generic analyzers overestimate positivity because they miss subtle critiques embedded in specialised language.
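For illustration, the two statistics discussed above (the Pearson correlation between sentiment scores and ratings, and the share of reviews marked positive) can be computed as in the following sketch. The scores and ratings are made-up toy values, and treating any score above 0 as positive is an assumption rather than the threshold used in the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def share_positive(scores, threshold=0.0):
    """Percentage of scores above the (assumed) positivity threshold."""
    return 100.0 * sum(s > threshold for s in scores) / len(scores)


# Toy values for illustration only; these are not data from the study.
sentiment = [0.8, 0.1, 0.6, -0.2, 0.9]
ratings = [92, 88, 87, 85, 94]
r = pearson_r(sentiment, ratings)
pct_positive = share_positive(sentiment)
```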

To test whether the U.S.-heavy composition of the corpus biases these results, we compared reviews about U.S. wines with those about all other countries combined. The mean compound polarity differs by only 0.037 points (0.478 vs. 0.515), and the correlation with critic score shifts by just 0.06 (0.29 vs. 0.35). These small gaps imply that, within English-language notes, the generic tools do not systematically inflate or deflate sentiment for non-U.S. wines.

Even so, generic analyzers often invert the polarity of prized wine descriptors.

Table A1 from the appendix lists ten tasting note fragments that include approving cues a sommelier would value, phrases such as “light, crisp, and refreshing”, “zesty nose”, “ripe acidity” and “familiar and likable”, but each fragment receives a neutral (0.00) or negative compound score. This mismatch hints at how general lexicons misread oenological language and underscores the need for a domain-tuned sentiment model.

Together, these findings highlight the limitations of off-the-shelf tools for wine discourse and motivate training a domain-specific analyzer.

3.4. Neural Network-Based Data Analyses

We used neural networks to classify wine quality from the written descriptions, relying on the specialised language of the reviews. If a wine’s point score is 90 or higher, we classify it as high quality; otherwise, it is labeled as not high quality.

We split the data into three sets: training, validation, and test. First, we set aside 20% of the data for the test set. Then, from the remaining 80%, we took 12.5% for validation (which amounts to 10% of the total data). This setup allows us to train the model on the training set, use the validation set for hyperparameter tuning or early stopping, and keep the test set completely held out for the final evaluation and comparison between models.
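The labelling rule and the two-stage split can be sketched as follows. The total of 46,576 reviews is the sum of the subset sizes reported in Section 4.1; the random seed, the function names, and the choice to round each held-out subset up are assumptions made for this sketch, not details confirmed by the text.

```python
import math
import random


def label_quality(points, threshold=90):
    """Return 1 for high quality (score of 90 or more), otherwise 0."""
    return 1 if points >= threshold else 0


def split_indices(n, test_frac=0.20, val_frac_of_rest=0.125, seed=42):
    """Shuffle indices, hold out the test set first, then the validation set."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seed is an arbitrary assumption
    n_test = math.ceil(test_frac * n)  # assumed: held-out sizes are rounded up
    test, rest = idx[:n_test], idx[n_test:]
    n_val = math.ceil(val_frac_of_rest * len(rest))
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


train, val, test = split_indices(46576)
```

Under these assumptions, the sketch yields 32,602 training, 4658 validation, and 9316 test indices, which agrees with the subset sizes reported in Section 4.1.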

3.4.1. Hierarchical Attention Network (HAN)

The Hierarchical Attention Network [

24] was adapted to better understand the hierarchical structure of documents, capturing both word- and sentence-level representations. Initially, each word in a sentence was converted into an embedding vector using a pre-trained embedding matrix. A bidirectional Gated Recurrent Unit (GRU) processed these embeddings to produce hidden states for each word. Subsequently, word-level attention processes were employed on these hidden states to derive a weighted representation of the sentence, highlighting terms that significantly enhance its meaning.

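For each word $w_{it}$ in sentence $s_i$, we obtained the embedding $x_{it}$ and computed the hidden state $h_{it}$ using a bidirectional GRU. The word-level representation was calculated as $u_{it} = \tanh(W_w h_{it} + b_w)$, and the attention weights $\alpha_{it}$ were obtained using a softmax function on the dot product with a word-level context vector $u_w$, that is, $\alpha_{it} = \exp(u_{it}^\top u_w) / \sum_{t'} \exp(u_{it'}^\top u_w)$. The sentence vector is calculated as the weighted sum of the word hidden states, $s_i = \sum_t \alpha_{it} h_{it}$.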

The sentence vectors were processed through another bidirectional GRU to capture inter-sentence dependencies and sentence-level attention was applied similarly to obtain the document representation v. Finally, this document vector v was passed through a softmax classifier to produce the probability distribution over classes.

The model uses the Adam optimiser and binary cross-entropy loss.
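To make the attention step concrete, the following sketch implements attention pooling in plain Python: each hidden state is projected through a tanh layer, scored against a context vector, and the softmax-normalised scores weight the sum of hidden states. The toy hidden states, weights, and context vector are illustrative assumptions; in the model these would be GRU outputs and learned parameters.

```python
import math


def attention_pool(hidden_states, W, b, context):
    """Return the attention-weighted sum of hidden states and the weights."""
    scores = []
    for h in hidden_states:
        # u = tanh(W h + b), then score = u . context
        u = [math.tanh(sum(w * x for w, x in zip(row, h)) + b_i)
             for row, b_i in zip(W, b)]
        scores.append(sum(u_i * c_i for u_i, c_i in zip(u, context)))
    # Numerically stable softmax over the scores
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    dim = len(hidden_states[0])
    pooled = [sum(a * h[j] for a, h in zip(alphas, hidden_states))
              for j in range(dim)]
    return pooled, alphas


# Toy inputs: three 2-dimensional hidden states, identity projection.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
context = [1.0, 1.0]
sentence_vec, alphas = attention_pool(H, W, b, context)
```

The same routine applies at both levels of the hierarchy: over word hidden states to obtain a sentence vector, and over sentence hidden states to obtain the document vector.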

3.4.2. Spectral-Normalised Hierarchical Attention Network (S-HAN)

The Spectral-Normalised HAN extended the HAN model by incorporating spectral normalisation to improve training stability and generalisation [

84]. Spectral normalisation constrains the Lipschitz constant of the network by normalising the weight matrices. Specifically, for each weight matrix $W$ in the network, we computed the largest singular value $\sigma(W)$ using the power iteration method and scaled the weights as $\hat{W} = W / \sigma(W)$. We apply this normalisation to all weight matrices in the attention mechanisms and GRU layers, maintaining the same overall architecture as HAN while ensuring that the normalised weights have controlled spectral norms. Spectral normalisation thereby helps to mitigate exploding or vanishing gradients and promotes smoother optimisation landscapes. The complete implementation is detailed in Algorithm 1.

| Algorithm 1 Hierarchical Attention Network with Spectral Normalisation |

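Require: Document $D$ consisting of sentences $s_1, \dots, s_N$
Ensure: Classification probability $y$
/* Spectral Normalisation Function */
1: function SpectralNormalize($W$)
2:   initialise $u$ randomly
3:   for $k = 1$ to num_iterations do
4:     $v \leftarrow W^\top u / \lVert W^\top u \rVert_2$
5:     $u \leftarrow W v / \lVert W v \rVert_2$
6:   end for
7:   $\sigma \leftarrow u^\top W v$
8:   return $W / \sigma$
9: end function
/* Apply Spectral Normalisation to Attention and Classifier Weights */
10: Spectrally normalize attention and classifier weights:
11–15: $\hat{W} \leftarrow$ SpectralNormalize($W$) for each attention and classifier weight matrix $W$
/* Word Encoder and Attention */
16: for each sentence $s_i$ do
17:   for each word $w_{it}$ in $s_i$ do
18:     $x_{it} \leftarrow$ embedding of $w_{it}$
19:   end for
20:   $h_{i1}, \dots, h_{iT_i} \leftarrow \mathrm{BiGRU}(x_{i1}, \dots, x_{iT_i})$
21:   for $t = 1$ to $T_i$ do
22:     $u_{it} \leftarrow \tanh(\hat{W}_w h_{it} + b_w)$
23:   end for
24:   $\alpha_{it} \leftarrow \exp(u_{it}^\top u_w) / \sum_{t'} \exp(u_{it'}^\top u_w)$
25:   $s_i \leftarrow \sum_t \alpha_{it} h_{it}$
26: end for
/* Sentence Encoder and Attention */
27: $h_1, \dots, h_N \leftarrow \mathrm{BiGRU}(s_1, \dots, s_N)$
28: for $i = 1$ to $N$ do
29:   $u_i \leftarrow \tanh(\hat{W}_s h_i + b_s)$
30: end for
31: $\alpha_i \leftarrow \exp(u_i^\top u_s) / \sum_{i'} \exp(u_{i'}^\top u_s)$
32: $v \leftarrow \sum_i \alpha_i h_i$
/* Classification */
33: $y \leftarrow \mathrm{softmax}(\hat{W}_c v + b_c)$
34: return $y$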

|

Spectral normalisation helps neural networks generalise better in several ways. It does this by limiting how much each layer can change its output based on input changes. This makes the model more stable and less likely to overreact to small differences in data. It also acts like a brake on the network’s weights, keeping them from getting too big. This helps prevent overfitting, where a model learns the training data too well and cannot handle new information.
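The power iteration behind spectral normalisation can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions: it uses a fixed starting vector instead of a random one so that the result is reproducible, and a small diagonal matrix whose largest singular value (3) is known in advance.

```python
import math


def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def normalise(x):
    """Scale x to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x]


def spectral_normalise(W, num_iterations=50):
    """Estimate the largest singular value of W and divide W by it."""
    Wt = transpose(W)
    u = [1.0] * len(W)  # fixed start for reproducibility (random in practice)
    for _ in range(num_iterations):
        v = normalise(matvec(Wt, u))
        u = normalise(matvec(W, v))
    sigma = sum(u_i * w_i for u_i, w_i in zip(u, matvec(W, v)))
    return [[w / sigma for w in row] for row in W], sigma


W = [[3.0, 0.0], [0.0, 1.0]]
W_hat, sigma = spectral_normalise(W)
```

After normalisation, the largest singular value of the rescaled matrix is approximately 1, which is what bounds how much the layer can amplify its input.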

3.4.3. Long Short-Term Memory Network (LSTM) with GloVe Embeddings

The LSTM model [

23] can capture long-term dependencies within the text by sequentially processing the input sequence of words.

As shown in Algorithm 2, our implementation uses pre-trained GloVe embeddings [

85] to enhance the model’s understanding of word meanings and similarities. The wine reviews dataset, which includes labels showing the rating of each wine and textual descriptions of the wines themselves, remains the main data used to train the LSTM model. During training, the model learns the patterns, attitudes, and language usage relevant to this domain. GloVe embeddings, which are pre-trained on massive general-purpose text corpora such as Common Crawl and Wikipedia, provide a rich, general-purpose semantic representation of words. Because they supply prior knowledge about word meanings and associations, the model can optimise its parameters on the wine reviews dataset from a well-informed starting point. By training on wine reviews and fine-tuning the embeddings, the model becomes domain-specific. Starting with pre-trained embeddings provides a solid foundation, often leading to faster convergence during training and potentially better performance on general language aspects. Furthermore, by keeping the embedding layer trainable and handling Out-of-Vocabulary (OOV) words appropriately, the model can adapt the pre-trained embeddings to better fit the wine review domain.

An embedding matrix is created for the model’s vocabulary, initialising the embeddings with corresponding GloVe vectors. The model then maps each word in the input sequence to its embedding vector, creating a sequence of embedding vectors that represent the semantic content of the text. The sequence is fed into a bidirectional LSTM network, which updates its hidden and cell states based on the current input and previous states. The model computes the mean of all hidden states across the sequence, providing a comprehensive representation that captures the overall context. Next, it is trained using a suitable loss function and an optimiser (i.e., Adam), ensuring it does not become biased toward the majority class. The Adam optimisation algorithm [

86] is an extension to stochastic gradient descent that has gained popularity for deep learning applications, including natural language processing. It is designed to update network weights iteratively based on training data, offering several benefits such as simplicity, computational efficiency, minimal memory requirements, and adaptability to diagonal rescaling of gradients. The approach presented offers several advantages, including semantic enrichment, improved generalisation, fine-tuning, domain-specific vocabulary, mean pooling over hidden states, and robustness to vocabulary gaps.

| Algorithm 2 Long Short-Term Memory Network with GloVe Embeddings |

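Require: Input sequence of words $w_1, \dots, w_T$
Ensure: Classification probabilities $\hat{y}$
/* Initialize Embedding Layer with GloVe Embeddings */
1: Load pre-trained GloVe embeddings
2: Build vocabulary $V$ from training data
3: Initialize embedding matrix $E \in \mathbb{R}^{|V| \times d}$ where $d$ is embedding dimension
4: for each word $w \in V$ do
5:   if $w$ in GloVe vocabulary then
6:     $E[w] \leftarrow \mathrm{GloVe}[w]$ ▹ Assign pre-trained GloVe embedding
7:   else
8:     Initialize $E[w]$ randomly ▹ For words not in GloVe
9:   end if
10: end for
/* Embedding Layer */
11: for $t = 1$ to $T$ do
12:   $x_t \leftarrow E[w_t]$ ▹ Map word to embedding vector
13: end for
/* LSTM Encoder */
14: Initialize hidden state $h_0$ and cell state $c_0$ to zero vectors
15: for $t = 1$ to $T$ do
16:   $f_t \leftarrow \sigma(W_f [h_{t-1}; x_t] + b_f)$ ▹ Forget gate
17:   $i_t \leftarrow \sigma(W_i [h_{t-1}; x_t] + b_i)$ ▹ Input gate
18:   $\tilde{c}_t \leftarrow \tanh(W_c [h_{t-1}; x_t] + b_c)$ ▹ Candidate cell state
19:   $c_t \leftarrow f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$ ▹ Update cell state
20:   $o_t \leftarrow \sigma(W_o [h_{t-1}; x_t] + b_o)$ ▹ Output gate
21:   $h_t \leftarrow o_t \odot \tanh(c_t)$ ▹ Update hidden state
22: end for
/* Sequence Representation */
23: Compute mean of hidden states: $\bar{h} \leftarrow \frac{1}{T} \sum_{t=1}^{T} h_t$
/* Classification Layer */
24: Apply dropout: $\tilde{h} \leftarrow \mathrm{Dropout}(\bar{h})$
25: Compute logits: $z \leftarrow W_y \tilde{h} + b_y$
26: Compute class probabilities: $\hat{y} \leftarrow \mathrm{softmax}(z)$
27: return $\hat{y}$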

|
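The embedding initialisation and mean pooling steps of Algorithm 2 can be sketched as follows. The toy GloVe dictionary, the three-dimensional vectors, the uniform range for out-of-vocabulary words, and the all-zero padding row are assumptions made for illustration; a real run would load the published GloVe vectors from file.

```python
import random


def build_embedding_matrix(vocab, glove, dim, pad_token="<pad>", seed=0):
    """Copy GloVe vectors where available, initialise other words randomly."""
    rng = random.Random(seed)
    matrix = [[0.0] * dim for _ in vocab]  # the padding row stays all zeros
    oov = []
    for word, idx in vocab.items():
        if word == pad_token:
            continue
        if word in glove:
            matrix[idx] = list(glove[word])
        else:
            # Assumed initialisation range for words missing from GloVe
            matrix[idx] = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
            oov.append(word)
    return matrix, oov


def mean_pool(hidden_states):
    """Average a sequence of vectors element-wise."""
    n = len(hidden_states)
    return [sum(col) / n for col in zip(*hidden_states)]


# Toy stand-in for the pre-trained vectors (not real GloVe values).
glove = {"oak": [0.1, 0.2, 0.3], "finish": [0.4, 0.5, 0.6]}
vocab = {"<pad>": 0, "oak": 1, "finish": 2, "garrigue": 3}
E, oov = build_embedding_matrix(vocab, glove, dim=3)
pooled = mean_pool([E[1], E[2]])
```

In the full model, mean pooling is applied to the LSTM hidden states rather than directly to the embeddings; the toy call above only demonstrates the averaging itself.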

3.4.4. Hierarchical Attention Network (HAN) with GloVe Embeddings

The LSTM + GloVe approach previously discussed treats the entire text as a sequence of words and processes it using an LSTM network, capturing temporal dependencies but potentially struggling with long documents due to issues like vanishing gradients and computational complexity. To address these problems, we also combined GloVe embeddings with the HAN, as presented in Algorithm 3. The semantic richness of GloVe embeddings is useful for both LSTM and HAN models, but the latter’s design makes it possible for these embeddings to engage with attention mechanisms on several levels, which can result in more accurate feature representations.

Careful hyperparameter tuning and allocation of computational resources are necessary for HAN because of the increased complexity introduced by its extra GRU layers and attention mechanisms. Nevertheless, compared to the LSTM method, the model’s increased complexity allows it to grasp more subtle data patterns, which frequently leads to better generalisation and higher classification accuracy.

We can think of GloVe embeddings as a language processing agent that has learned a great deal about the links between words and their meanings by studying a wide variety of texts. Although it lacks subject expertise, this agent has a general grasp of language semantics. The agent can concentrate on and learn domain-specific information with the help of the HAN, which functions as an adaptive mechanism while processing specialised content such as wine evaluations. Words like “tannin,” “bouquet,” “oak,” and “finish” are specific to wine evaluations and are essential for the agent to recognise and highlight in order to grasp the finer points of the text. To determine the overall sentiment or quality evaluation of the wine, the agent can prioritise content at the sentence level using the attention mechanism. For example, sentences that emphasise notable qualities or offer comparative assessments are given greater weight. The agent refines its general language representations to match the specialised vocabulary and stylistic expressions of the wine industry by training on the specific dataset of wine reviews. By providing a hierarchical framework for the texts, the HAN makes it easier for the agent to adapt by directing its attention to the most informative words and sentences.

| Algorithm 3 Hierarchical Attention Network with GloVe Embeddings |

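Require: Document $D$ consisting of sentences $s_1, \dots, s_N$
Ensure: Classification probabilities $\hat{y}$
/* Load Pre-trained GloVe Embeddings */
1: Load GloVe embedding matrix
/* Initialize Embedding Layer */
2: Build vocabulary $V$ from training data
3: Initialize embedding matrix $E \in \mathbb{R}^{|V| \times d}$
4: for each word $w \in V$ do
5:   if $w$ in GloVe then
6:     $E[w] \leftarrow \mathrm{GloVe}[w]$
7:   else
8:     Initialize $E[w]$ randomly
9:   end if
10: end for
/* Word Encoder and Attention */
11: for each sentence $s_i$ do
12:   for each word $w_{it}$ in $s_i$ do
13:     $x_{it} \leftarrow E[w_{it}]$ ▹ Embed word
14:   end for
15:   $h_{i1}, \dots, h_{iT_i} \leftarrow \mathrm{BiGRU}(x_{i1}, \dots, x_{iT_i})$
16:   for $t = 1$ to $T_i$ do
17:     $u_{it} \leftarrow \tanh(W_w h_{it} + b_w)$
18:   end for
19:   Compute word attention weights:
20:   $\alpha_{it} \leftarrow \exp(u_{it}^\top u_w) / \sum_{t'} \exp(u_{it'}^\top u_w)$
21:   Compute sentence representation: $s_i \leftarrow \sum_t \alpha_{it} h_{it}$
22: end for
/* Sentence Encoder and Attention */
23: $h_1, \dots, h_N \leftarrow \mathrm{BiGRU}(s_1, \dots, s_N)$
24: for $i = 1$ to $N$ do
25:   $u_i \leftarrow \tanh(W_s h_i + b_s)$
26: end for
27: Compute sentence attention weights:
28: $\alpha_i \leftarrow \exp(u_i^\top u_s) / \sum_{i'} \exp(u_{i'}^\top u_s)$
29: Compute document representation: $v \leftarrow \sum_i \alpha_i h_i$
/* Apply Dropout */
30: $\tilde{v} \leftarrow \mathrm{Dropout}(v)$
/* Classification Layer */
31: Initialize classifier weight $W_c$ using Xavier initialisation
32: Compute logits: $z \leftarrow W_c \tilde{v} + b_c$
33: Compute class probabilities: $\hat{y} \leftarrow \mathrm{softmax}(z)$
34: return $\hat{y}$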

|

3.5. Extracting Wine Characteristics and Generating Marketing Images

Next, we employed a multistage natural language processing approach to extract salient characteristics from high-scoring wine reviews and generate image prompts for AI-based visualisation to be used for marketing purposes. The approach is shown in Algorithm 4.

| Algorithm 4 Wine Review Image Prompt Generation |

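Require: DataFrame $D$ containing wine reviews and scores
Ensure: Set of image prompts $P$
Parameters: $N$: Number of top wines to consider; $K$: Number of top characteristics to extract; $M$: Number of prompts to generate
/* Extract Top Wine Reviews */
1: $R \leftarrow$ the $N$ reviews in $D$ with the highest scores
/* Extract Characteristics */
2: $C \leftarrow \emptyset$
3: for each review $r \in R$ do
4:   tokens $\leftarrow$ Tokenise($r$)
5:   bigrams $\leftarrow$ Bigrams(tokens)
6:   tags $\leftarrow$ POSTag(tokens)
7:   $C \leftarrow C \cup \{$tokens tagged JJ or NN$\} \cup$ bigrams
8: end for
/* Select Top Characteristics */
9: $C_{top} \leftarrow$ the $K$ most frequent characteristics in $C$
/* Generate Prompts */
10: $P \leftarrow \emptyset$
11: for $i = 1$ to $M$ do
12:   $C_{sample} \leftarrow$ random sample of characteristics from $C_{top}$
13:   $p \leftarrow$ GeneratePrompt($C_{sample}$)
14:   $P \leftarrow P \cup \{p\}$
15: end for
16: return $P$
17: function GeneratePrompt($C_{sample}$)
18–19:   initialise the prompt with a base description
20:   for each characteristic $c \in C_{sample}$ do
21:     append a visual element for $c$ to the prompt
22:   end for
23–24:   append standard elements about lighting, background, and image quality
25:   return the prompt
26: end function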

|

As shown in Algorithm 4, the top N wines, ranked by their point scores, were selected for analysis to ensure a focus on the characteristics of premium wines (line 1). Each review was tokenised (line 4), followed by bigram extraction (line 5) and part-of-speech tagging (line 6). The algorithm specifically selects adjectives (tagged as JJ) and nouns (tagged as NN), which usually carry the most descriptive information in wine reviews, along with the extracted bigrams (line 7).

The extracted characteristics, including individual words and bigrams, were combined from all reviews (lines 3–8). These characteristics were then ranked by frequency, and the K most common characteristics were selected to represent the core attributes of premium wines in the dataset (line 9).

To generate prompts, we randomly sampled eight characteristics from the top K (line 12). A semi-structured template for image generation prompts was developed in the GeneratePrompt function (lines 17–26), incorporating the sampled characteristics and additional descriptive elements.

The algorithm does not explicitly categorise characteristics into predefined oenological dimensions (colour, body, taste, and aroma). Instead, it treats all characteristics equally, relying on their frequency and on random sampling for diversity ($C_{sample}$). It then generates M unique prompts (lines 11–15), each designed to guide an AI image generation tool in creating visually compelling representations of premium wines for marketing purposes.

The GeneratePrompt function creates a base prompt and then adds visual elements for each characteristic sampled (lines 20–22). It also includes standard elements about lighting, background, and image quality (lines 23–24).

By sampling from the top K characteristics, the approach ensures variability in the generated prompts while maintaining a focus on the most relevant characteristics of premium wines, as determined by their frequency in top-rated wine reviews.
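The prompt generation procedure can be sketched as follows. To keep the example self-contained, it takes reviews that are already tokenised and part-of-speech tagged; the base description, the "evoking" phrasing, the closing style elements, the random seed, and the toy reviews are hypothetical stand-ins for the template used in the study.

```python
import random
from collections import Counter


def extract_characteristics(tagged_tokens):
    """Keep adjectives (JJ), nouns (NN), and all word bigrams of one review."""
    words = [word.lower() for word, _ in tagged_tokens]
    keep = [word.lower() for word, tag in tagged_tokens if tag in ("JJ", "NN")]
    bigrams = [" ".join(pair) for pair in zip(words, words[1:])]
    return keep + bigrams


def top_characteristics(tagged_reviews, k):
    """Rank characteristics by frequency across reviews and keep the top k."""
    counts = Counter()
    for review in tagged_reviews:
        counts.update(extract_characteristics(review))
    return [c for c, _ in counts.most_common(k)]


def generate_prompt(sample):
    """Fill a hypothetical template with the sampled characteristics."""
    parts = ["A premium bottle of wine"]  # hypothetical base description
    parts += [f"evoking {c}" for c in sample]
    parts.append("soft studio lighting, neutral background, high resolution")
    return ", ".join(parts)


def generate_prompts(top, m, sample_size=8, seed=0):
    """Create m prompts, each from a random sample of the top characteristics."""
    rng = random.Random(seed)
    size = min(sample_size, len(top))
    return [generate_prompt(rng.sample(top, size)) for _ in range(m)]


# Toy pre-tagged reviews for illustration only.
reviews = [
    [("ripe", "JJ"), ("cherry", "NN"), ("and", "CC"), ("firm", "JJ"), ("tannin", "NN")],
    [("ripe", "JJ"), ("plum", "NN"), ("with", "IN"), ("silky", "JJ"), ("finish", "NN")],
]
top = top_characteristics(reviews, k=10)
prompts = generate_prompts(top, m=3)
```

Because each prompt draws a fresh random sample from the same frequency-ranked pool, the prompts differ from one another while staying anchored to the most common descriptors.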

3.6. Evaluating the Generated Marketing Images

Inspired by the approach of Oppenlaender et al. (2024) [

87], we first sampled 20 diverse, wine-specific prompts. Each prompt was rendered once by ChatGPT 4o Image Generation and, using the identical text, once by Midjourney v6. ChatGPT returns a single image per prompt, whereas Midjourney outputs four variants; we consistently selected the first variant to avoid cherry-picking, yielding a corpus of 40 images (20 per generator).

For every image, participants later provided two 5-point Likert ratings: relevance (brief-fit) and appeal (commercial viability). After completing all image ratings, participants indicated their perceived stylistic difference between the two sets, a general preference, the factors that influenced their judgments (visual appeal, creativity, emotional appeal, brand/commercial viability, technical quality, realistic presentation), and basic demographics, including self-reported wine-marketing experience (1 = none, 5 = expert).

The evaluation ran on a custom Python/Flask website hosted on an Ubuntu virtual machine (Oracle Cloud) with Gunicorn, Nginx and Let’s Encrypt SSL. Gunicorn served the Flask app concurrently to multiple users, Nginx handled static content, and HTTPS encryption ensured secure transmission of responses.

The website consisted of four sequential pages: (1) participant information, (2–3) evaluation of Image Sets 1 and 2, and (4) demographic and post-evaluation questions. For each image, participants provided ratings on two 5-point Likert scales: relevance (how well the image matches the brief) and appeal (commercial viability for advertising). The images were presented with their corresponding briefs and the AI source was not disclosed to ensure blind evaluation.

Each session received a unique ID; all responses were stored row-wise in a comma-separated file with 91 columns (demographics, post-task items, and per-image ratings). The website’s source code and the 40 generated images are openly available in the GitHub repository cited in the Data Availability Statement.

4. Results

For RQ1, we correlated the three-level style difference scale (

1 =“Very similar”, 3 =“Very different”) with preference strength and with set-level rating gaps using Pearson’s

r. For RQ2, we decomposed binary factor checkboxes and ran point-biserial correlations plus logistic regressions featuring the interaction term (factor × expertise). For RQ3, we benchmarked four classifiers—HAN, Spectral-HAN, HAN + GloVe, and LSTM + GloVe. Accuracy, precision, recall, and macro-F

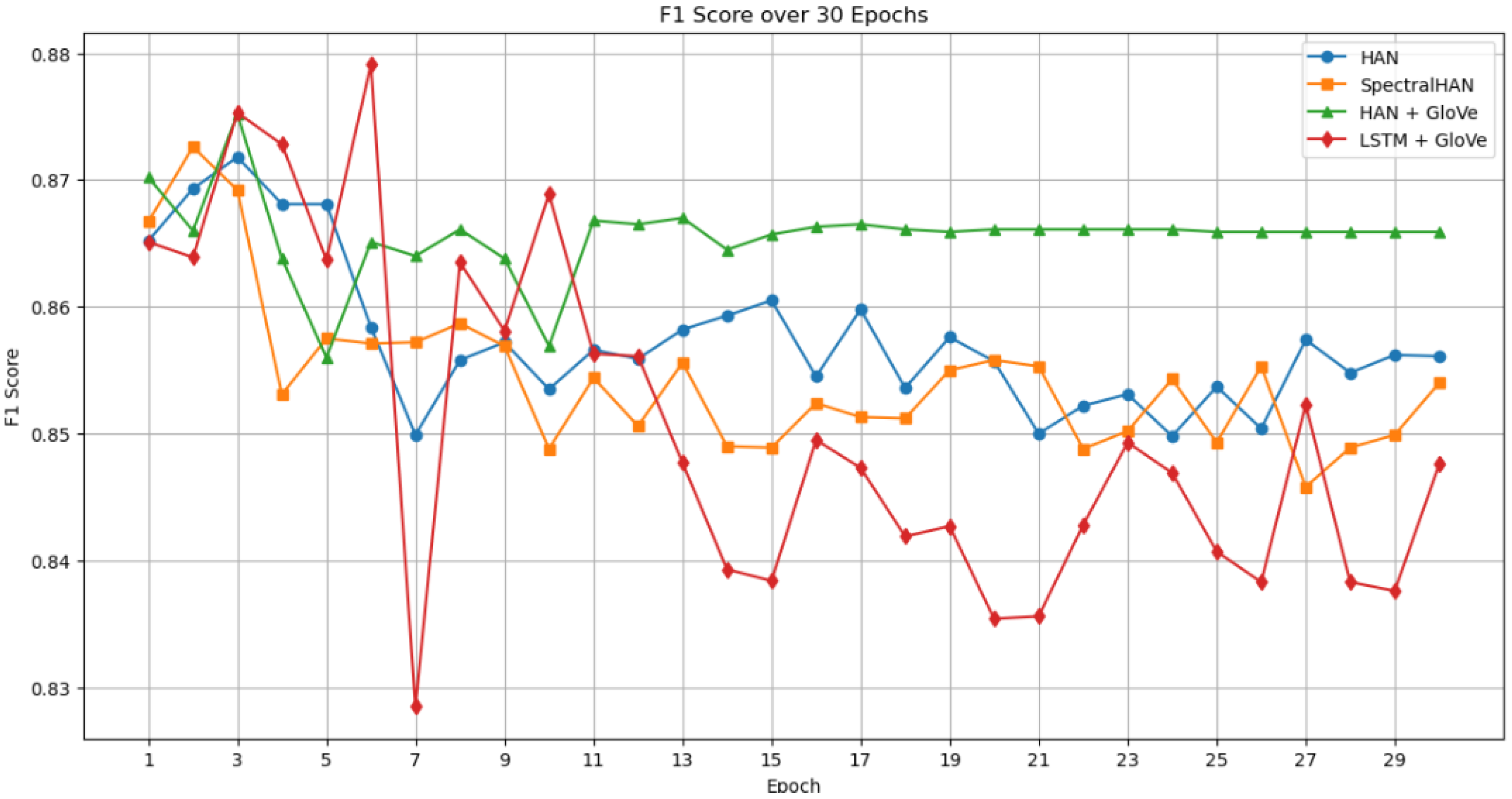

1 are summarised in

Table 6, while paired McNemar tests on held-out errors appear in

Table 7. Validation-epoch curves are plotted in

Figure 2 and

Figure 3. For RQ4, we compared a generic sentiment tool with our wine-tuned lexicon on a manually labelled subset of 1200 sentences. Polarity accuracy and macro-F1 are reported in

Table 5; tool characteristics are contrasted in

Table 4.

All analyses were executed in Python 3.9.12 (pandas 2.2.3, scipy 1.13.1, statsmodels 0.15.0).

4.1. HAN Analyses

We evaluated the performance of four different neural network models on a wine classification task: the Hierarchical Attention Network (HAN), the Spectral Hierarchical Attention Network (SpectralHAN), HAN augmented with pre-trained GloVe embeddings (HAN + GloVe), and a Long-Short-Term Memory network with GloVe embeddings (LSTM + GloVe). The dataset was divided into a training set of 32,602 samples, a validation set of 4658 samples, and a test set of 9316 samples, with a vocabulary size of 6461 unique tokens.

Table 6 summarises the key performance metrics for each model, including accuracy, precision, recall, and F1 score across 30 training epochs.

Figure 2 and

Figure 3 illustrate the trends in validation accuracy and F1 scores over the training epochs for all models.

We tracked how the validation metrics of each model evolved, and how well each model generalised, over the course of training.

HAN showed consistent performance, peaking at 83.98% validation accuracy early in training and staying relatively close to this level all the way to 82.57% at the end. SpectralHAN had a similar pattern, peaking early at 83.66% accuracy and finishing at 81.88%. HAN + GloVe achieved 84.71% peak accuracy, the highest of all models, and continued to perform well, completing at 83.75%. On the other hand, LSTM + GloVe equaled HAN + GloVe’s early performance, hitting 84.69%, but then showed a significant downturn in the last stages, finishing at 80.87%.

HAN + GloVe consistently provided high precision, peaking at 89.66% and remaining around 87% near the conclusion, indicating trustworthy positive identifications. LSTM + GloVe reached the highest precision of 90.73%; however, this dropped to 82.38% in later epochs. SpectralHAN initially had the highest recall of 92.53%, indicating a good capacity to recognise positive cases early in training. The F1 scores for all models peaked between the second and sixth epochs, with HAN + GloVe and LSTM + GloVe both above 87%, indicating a good balance of precision and recall throughout the early training periods.

Notably, HAN + GloVe demonstrated exceptional stability throughout the training phase. After the initial improvements peaked, its validation accuracy and F1 score changed little, and its metrics do not change at all after epoch 17. This plateau follows from the learning rate schedule: the StepLR scheduler in the training code multiplies the learning rate by 0.1 every five epochs, starting from an initial learning rate of 0.001, so the learning rate undergoes a stepwise exponential decay as training progresses. It stays at 0.001 for the first five epochs, drops to 0.0001 for the sixth to tenth, falls to 0.00001 for the eleventh to fifteenth, and then continues to fall by an order of magnitude every five epochs. The learning rate drops to 0.000001 by epoch 16, and it reaches 0.00000001 at epoch 26. With learning rates this low, the optimiser makes only negligible parameter updates after epoch 15. Since the gradient descent steps are so small, they have little effect on the model’s weights, so the model’s accuracy and loss are essentially constant throughout subsequent epochs, and training effectively comes to a standstill. We tried adjusting the decay factor, increasing the step size, and switching to a different scheduler (ReduceLROnPlateau), but none of these changes improved the peak values of the model metrics; instead, they deteriorated.
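The learning rate values quoted above follow directly from the step decay rule and can be reproduced with a short calculation. The function name and the 1-indexed epoch convention are assumptions of this sketch; the initial rate, decay factor, and step size are those stated in the text.

```python
def step_lr(epoch, base_lr=1e-3, step_size=5, gamma=0.1):
    """Learning rate for a 1-indexed epoch under step decay."""
    return base_lr * gamma ** ((epoch - 1) // step_size)


# Learning rate for each of the 30 training epochs
schedule = {epoch: step_lr(epoch) for epoch in range(1, 31)}
```

For example, `schedule[16]` is approximately 1e-6 and `schedule[26]` is approximately 1e-8, three and five orders of magnitude below the initial rate, which is consistent with the negligible parameter updates observed late in training.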

Overall, the combination of hierarchical attention and domain-informed embeddings proved to be the most balanced and dependable option. This synergy allowed the model to focus on the most telling words and phrases in each review while also taking advantage of broader contextual clues captured by GloVe vectors. Put simply, the attention mechanism’s ability to highlight especially meaningful segments of text worked hand-in-hand with the embeddings’ capacity to encode semantic relationships learned from the corpora.

McNemar’s test was applied to the hold-out (validation) set to determine whether the classification accuracies of the models differ to a statistically significant degree. By comparing the models’ predictions on the same set of wine quality instances, the test evaluates whether one model consistently outperforms the other. The results are presented in Table 7.

The results in Table 7 show that HAN + GloVe consistently performs better than the other models, indicating that its improved accuracy is statistically significant and practically relevant for our task. The incorporation of GloVe embeddings improves the model’s precision in categorising wine reviews. Even though all models demonstrated strengths in capturing nuances of wine language, it was the hierarchical attention approach, when supported by rich pretrained embeddings, that consistently rose to the top.
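To make the paired comparison concrete, the sketch below implements the exact (binomial) form of McNemar’s test in plain Python. It is an illustration under stated assumptions, not the procedure used to produce Table 7: the function name `mcnemar_exact`, the choice of the exact rather than the chi-squared variant, and the toy labels are all hypothetical.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact two-sided McNemar test on paired predictions.

    b counts instances model A classifies correctly and model B does not;
    c counts the reverse. Only these discordant pairs carry information.
    Returns (b, c, p_value).
    """
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    n = b + c
    if n == 0:
        return b, c, 1.0  # the models never disagree on correctness
    # Under H0 each discordant pair favours either model with probability 0.5.
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


# Hypothetical toy data: model A is right on all ten items, model B on two.
y_true = [1] * 10
pred_a = [1] * 10
pred_b = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
b, c, p_value = mcnemar_exact(y_true, pred_a, pred_b)
print(b, c, p_value)
```

A small p-value indicates that the two models’ error patterns differ by more than chance would explain on the same validation instances.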

4.2. Comparison of the Classification Results

Table 8 juxtaposes our Hierarchical Attention Network with GloVe embeddings (HAN + GloVe) against the CNN, BiLSTM, and BERT pipelines of Katumullage et al. [22] (their models were trained on ∼140 k Wine Spectator notes, whereas we use 46 k Wine Enthusiast reviews; absolute accuracies are therefore not directly comparable) and a multilingual DistilBERT baseline fine-tuned on our corpus.

Although its accuracy trails the heavier CNN/BiLSTM/BERT pipelines, our model achieves the highest precision (89.7%) and overall F1 (88.3%), edging out the BERT baseline by 0.4 pp. In the pipeline from Figure 1, the classifier acts as a gatekeeper: with a 0.5 decision threshold it discards 41% of low-scoring reviews while retaining 92% of those rated ≥90 points, thus supplying a high-purity pool for keyword extraction and prompt generation. Our HAN + GloVe model contains ≈6 million parameters (about 4% of the 135 million in multilingual DistilBERT), achieves a comparable F1 (88.3% vs. 84.9%), and processes a review ≈4× faster on a T4 GPU (9 ms vs. 38 ms) while still providing interpretable attention weights. These weights appear as two vectors, one word-level and one sentence-level, which can be visualised as a heat-map overlay on the review text; marketers can see at a glance which words and sentences drove the prediction and can lift the top-weighted descriptors directly into their creative briefs. In high-stakes curation tasks, explainability can outweigh the small numeric margin.
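The gatekeeper role described above can be sketched as a simple threshold filter. This is a minimal illustration, not the pipeline code: the function name `gate_reviews`, the `>=` comparison at the 0.5 threshold, and the toy probabilities are assumptions, and the sketch assumes both high-rated and low-rated reviews are present.

```python
def gate_reviews(probabilities, is_high_rated, threshold=0.5):
    """Keep reviews whose predicted probability meets the threshold.

    Returns (kept_flags, retained_high, discarded_low), where
    retained_high is the share of high-rated reviews that pass the gate
    and discarded_low is the share of low-rated reviews that are dropped.
    """
    kept = [p >= threshold for p in probabilities]
    high = [k for k, h in zip(kept, is_high_rated) if h]
    low = [k for k, h in zip(kept, is_high_rated) if not h]
    retained_high = sum(high) / len(high)
    discarded_low = 1 - sum(low) / len(low)
    return kept, retained_high, discarded_low


# Hypothetical classifier outputs for six reviews (three rated >= 90 points).
probs = [0.9, 0.8, 0.4, 0.7, 0.2, 0.6]
high_rated = [True, True, True, False, False, False]
kept, retained_high, discarded_low = gate_reviews(probs, high_rated)
print(kept, round(retained_high, 2), round(discarded_low, 2))
```

Raising the threshold would trade retention of high-rated reviews for a purer pool, which is the balance the 0.5 setting in the text is reported to strike.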

4.3. Wine Characteristic Extraction

The algorithm presented in Section 3.5 extracted and analysed the top characteristics from the wine reviews, yielding the following 15 most frequent terms: wine, tannins, acidity, palate, black, time, drink, years, magnificent, fruits, structure, leather, red, aromas, and new. These terms represent a mix of sensory descriptors, temporal references, and structural elements commonly associated with high-quality wines.

Using these characteristics, the system generated multiple prompts. For example,

“Create a captivating wine advertisement image featuring a glass of deep red, full-bodied wine. The wine should appear rich and fruity. Include elegant lighting that accentuates the wine’s colour and texture. In the background, subtly showcase elements that suggest luxury and sophistication. Incorporate visual elements or symbols that evoke the sense of acidity. Incorporate visual elements or symbols that evoke the sense of fruits. Incorporate visual elements or symbols that evoke the sense of drink. The overall style should be high-quality, photorealistic, and visually striking.”

All prompts consistently included instructions for elegant lighting, luxury elements in the background, and a high-quality photorealistic style.
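The relationship between extracted terms and generated prompts can be illustrated with a small template sketch. It is not the algorithm of Section 3.5: the helper names `top_terms` and `build_prompt`, the regular-expression tokeniser, and the toy reviews are assumptions, and the fixed sentences are copied from the example prompt quoted above.

```python
import re
from collections import Counter

# Fixed parts of the prompt, taken from the quoted example.
BASE = (
    "Create a captivating wine advertisement image featuring a glass of "
    "deep red, full-bodied wine. The wine should appear rich and fruity. "
    "Include elegant lighting that accentuates the wine’s colour and texture. "
    "In the background, subtly showcase elements that suggest luxury and "
    "sophistication."
)
CUE = "Incorporate visual elements or symbols that evoke the sense of {term}."
STYLE = ("The overall style should be high-quality, photorealistic, "
         "and visually striking.")


def top_terms(reviews, k, stopwords=frozenset()):
    """Return the k most frequent lowercase word tokens across the reviews."""
    counts = Counter(
        word
        for review in reviews
        for word in re.findall(r"[a-z]+", review.lower())
        if word not in stopwords
    )
    return [term for term, _ in counts.most_common(k)]


def build_prompt(terms):
    """Insert one cue sentence per term between the fixed opening and style."""
    cues = " ".join(CUE.format(term=term) for term in terms)
    return f"{BASE} {cues} {STYLE}"


prompt = build_prompt(["acidity", "fruits", "drink"])
print(prompt)
```

Because only the cue sentences vary, every prompt built this way keeps the lighting, background, and photorealistic-style instructions constant.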

Figure 4 and Figure 5 present sample images generated using the same prompts on ChatGPT and Midjourney, respectively, allowing a direct comparison between the two AI image generation systems.

These visual examples show the distinct artistic styles and interpretation approaches employed by each platform when processing the same textual descriptions. All forty images can be found in the wine-evaluation (https://github.com/vladdiac/wine-evaluation, branch main, commit 1f3a964, 13 June 2025) and winereviews (https://github.com/vladdiac/winereviews, branch main, commit 27e9d97, 13 June 2025) GitHub repositories mentioned in the Data Availability Statement at the end of the article.

4.4. Image Evaluation

Participants were asked whether the two image sets looked “very similar,” “somewhat different,” or “very different.” Table 9 reports, for each category:

n: number of participants;

% Set 2 pref.: percentage whose preference strength was above zero (they chose “prefer Set 2” or “strongly prefer Set 2”);

Preference strength: mean on a five-point scale (−2 = strongly Set 1 … +2 = strongly Set 2);

Relevance and appeal: the average rating difference (Set 2 minus Set 1); positive values favour Set 2, negative values favour Set 1.

Table 9. Preference and rating gaps by perceived stylistic difference.

| Style Difference | n | % Set 2 Pref. | Pref. Strength | Relevance | Appeal |
|---|---|---|---|---|---|
| Somewhat different | 16 | 31.25 | −0.25 | −0.15 | −0.23 |
| Very different | 8 | 87.50 | 0.88 | −0.09 | 0.19 |
| Very similar | 5 | 20.00 | −0.80 | 0.18 | −0.03 |

Table 9 makes the pattern explicit. When participants judged the two image sets as very different (n = 8), 88% expressed at least a mild preference for Set 2 and their average preference strength was 0.88 on the −2 to +2 scale. By contrast, those who found the sets very similar (n = 5) preferred Set 2 only 20% of the time and scored −0.80 on the same scale. The resulting Pearson correlation indicates a moderate positive association. As participants moved one step up the three-level style difference scale (for example, from “very similar” to “somewhat different”), their mean preference score increased by about 0.5 points on the five-point scale.
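The per-category summaries and the correlation discussed above can be computed as in the following sketch. It is illustrative only: the coding of style difference as 0, 1, 2, the function names, and the toy responses are assumptions and do not reproduce the survey data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def summarise_by_style(responses):
    """Summarise (style_level, preference) pairs per style level.

    preference is on a -2..+2 scale; returns
    {style_level: (n, percent preferring Set 2, mean preference)}.
    """
    summary = {}
    for level in sorted({lvl for lvl, _ in responses}):
        prefs = [p for lvl, p in responses if lvl == level]
        pct_set2 = 100 * sum(p > 0 for p in prefs) / len(prefs)
        summary[level] = (len(prefs), pct_set2, sum(prefs) / len(prefs))
    return summary


# Hypothetical responses: 0 = very similar, 1 = somewhat different,
# 2 = very different.
responses = [(0, -1), (0, -1), (1, 0), (1, -1), (2, 1), (2, 2)]
summary = summarise_by_style(responses)
r = pearson([lvl for lvl, _ in responses], [p for _, p in responses])
print(summary, round(r, 3))
```

A positive `r` here means that preference for Set 2 grows as the perceived style difference increases, which is the direction of association reported in the text.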

For the expertise analysis, the self-reported wine-marketing scale (1 = none, 5 = expert) was split into novices (1–2) and experts (3–5).

Table 10 shows, for each rating factor:

Endorsement rates (percentage ticking the factor) in novices and experts;

Point-biserial correlation r between endorsing the factor (1 = yes, 0 = no) and appeal scores within each group (positive r = higher appeal when the factor is cited).

Table 10. Factor endorsement rates and correlations with appeal.

| Factor | % Novices | % Experts | r (Novices) | r (Experts) |
|---|---|---|---|---|
| Visual appeal/aesthetics | 83.3 | 90.9 | 0.20 | −0.29 |
| Creativity/originality | 50.0 | 36.4 | 0.25 | 0.16 |
| Emotional appeal | 38.9 | 27.3 | 0.05 | 0.49 |
| Brand/commercial viability | 16.7 | 27.3 | −0.23 | −0.17 |
| Technical image quality | 44.4 | 45.5 | −0.30 | −0.10 |
| Realistic product presentation | 55.6 | 45.5 | −0.13 | −0.43 |

Using the five-point experience item, participants were split into novices (levels 1–2, n = 18) and experts (levels 3–5, n = 11). Table 10 shows that novices mentioned creativity in 50% of cases and, when they did, their mean appeal ratings rose (point-biserial r = 0.25). Experts endorsed creativity less often (36%) and showed almost no association with appeal (r = 0.16). Instead, experts in our sample penalised technical lapses and brand-fit issues, whereas novices were largely insensitive to those factors. The factor-by-expertise interaction therefore confirms that the criteria driving appeal shift markedly with professional experience.
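The point-biserial correlations reported per expertise group can be computed as in the sketch below. It is a minimal illustration under stated assumptions: the function names and the toy participant records are hypothetical, and the novice/expert cut-off (levels 1–2 versus 3–5) follows the split described in the text.

```python
from math import sqrt


def point_biserial(endorsed, scores):
    """Point-biserial r between a 0/1 indicator and a numeric score.

    Uses r = (M1 - M0) / s * sqrt(p * (1 - p)) with the population
    standard deviation s, which equals Pearson's r for a binary variable.
    """
    n = len(scores)
    group1 = [s for e, s in zip(endorsed, scores) if e == 1]
    group0 = [s for e, s in zip(endorsed, scores) if e == 0]
    if not group1 or not group0:
        raise ValueError("both endorsement groups must be non-empty")
    mean_all = sum(scores) / n
    sd = sqrt(sum((s - mean_all) ** 2 for s in scores) / n)
    if sd == 0:
        raise ValueError("scores have zero variance")
    p = len(group1) / n
    mean1, mean0 = sum(group1) / len(group1), sum(group0) / len(group0)
    return (mean1 - mean0) / sd * sqrt(p * (1 - p))


def expertise_group(level):
    """Map the five-point experience item to the two groups used in the text."""
    return "novice" if level <= 2 else "expert"


# Hypothetical records: (experience level, endorsed factor?, appeal rating).
participants = [(1, 1, 4), (2, 1, 5), (1, 0, 2), (2, 0, 3),
                (4, 1, 2), (5, 0, 4), (3, 1, 3), (3, 0, 5)]
r_by_group = {}
for group in ("novice", "expert"):
    rows = [(e, a) for lvl, e, a in participants if expertise_group(lvl) == group]
    r_by_group[group] = point_biserial([e for e, _ in rows], [a for _, a in rows])
print(r_by_group)
```

Computing the coefficient separately within each group is what allows the sign of the association to differ between novices and experts.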

5. Discussion

In this study, we used a Hierarchical Attention Network (HAN) with GloVe embeddings to handle the nuanced language of wine reviews. Although HANs and pre-trained embeddings have been employed in other text classification tasks, this work underscores their utility in capturing fine-grained wine descriptors for improved classification. The model processes text in a hierarchical way, analysing both words and sentences, to pinpoint the terms most indicative of quality, style, or flavour. Drawing on GloVe word embeddings allows the classifier to align closely with domain-specific nuances such as “tannin,” “oak,” or “citrus,” improving its ability to map textual clues to meaningful wine attributes. This hierarchical attention captures context more effectively than traditional flat models. For example, word- and sentence-level attention weights focus on the multiword cue “ripe black cherry at the end”, while a bag-of-words baseline would allocate equal weight to each individual token. By incorporating pre-trained GloVe embeddings, the model also benefits from rich semantic knowledge; wine-related terms are understood in context (e.g., “dry” vs. “sweet” or “Bordeaux” vs. “Rioja”), even if those exact word combinations never appeared in the training data. This combination of HAN + GloVe thus provides both accuracy and interpretability. It outperforms simpler classifiers (like bag-of-words or basic LSTMs) by a substantial margin, aligning with what prior studies have found for HANs on general text tasks. Equally important, the attention weights offer guidance that a sommelier or marketer can appreciate: the model can explain its predictions by highlighting the tasting notes that drove the classification, which adds trust and transparency to the AI’s decisions.
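The contrast between attention weighting and a flat bag-of-words weighting can be shown with a toy example. This is a single-level sketch, not the trained HAN: the relevance scores are hypothetical values chosen for illustration, whereas in the model they would be learned and applied at both the word and the sentence level.

```python
from math import exp


def softmax(scores):
    """Convert raw scores into non-negative weights that sum to one."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["ripe", "black", "cherry", "at", "the", "end"]
# Hypothetical relevance scores; a trained model would produce these.
scores = [2.0, 1.5, 2.5, -1.0, -1.0, 0.5]

attention = softmax(scores)
uniform = [1 / len(tokens)] * len(tokens)  # equal weight per token

for token, a, u in zip(tokens, attention, uniform):
    print(f"{token:>7}: attention = {a:.3f}, uniform = {u:.3f}")
```

Under these assumed scores, the descriptor words receive most of the weight and the function words very little, whereas the uniform baseline treats all six tokens identically.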

In our data, reviews from the United States make up the largest single country segment (46.5 percent) and almost all New World reviews (see Table 3), but overall there is a nearly even split between Old World (European) and New World wine regions, at around 47 percent versus 53 percent. However, even though the geographic distribution is almost balanced, there are some stylistic differences that can affect the performance of the model. In particular, Old World regions often produce more sparkling wines, which come with their own unique vocabulary (terms such as “mousse,” “autolytic,” “perlage,” and “dosage”). Meanwhile, New World reviews can be heavily skewed toward still wines, especially bold reds. Sparkling wine descriptors appear in only seven to eight percent of reviews, although sparkling wines account for a significant portion of global wine production, especially in the Old World.

Our error analysis showed a slight accuracy gap of just under 2% in favour of New World wines, suggesting that the model largely captures the distinct language patterns of both traditions. Still, the under-representation of sparkling wine terminology may limit the model’s ability to accurately classify these wines or detect key quality markers.

If necessary, we could address this by using style-based sampling in addition to regional considerations so we capture a wider range of wine types. This approach would help ensure that specialised categories such as sparkling wines, which have their own unique set of descriptors, receive sufficient attention in the model.

5.1. Research Questions: Synthesis and Key Insights

RQ1 hints that visual distinctiveness resonates with consumers. As Table 9 shows, larger style gaps boost both absolute preference and the rating spread between image sets. This echoes the multi-domain sentiment gain that hierarchical context delivers in textual classification [88] and aligns with Cao et al.’s U-shaped congruence curve, where either perfect fit or deliberate dissonance drives choice [89]. Together, these results imply that clear visual–verbal signalling, whether via distinctive style or hierarchical language cues, helps consumers differentiate products.

RQ2 suggests that aesthetics lure novices; authenticity reassures experts. Point-biserial tests in Table 10 rank “label design” as the top novice driver, whereas the significant factor × expertise interaction shows experts pivot to authenticity cues. Wine, perfume, and food reviews indeed use largely non-overlapping sensory vocabularies, each tuned to its domain [90], and a graph-neural model that injects external lexica lifts aspect accuracy in a similar way [91]. Luo et al. further show that a visual sweetness scale helps novices choose but leaves experts unmoved [92], underscoring how cue utilisation shifts with knowledge.

Hierarchical context demonstrates clear advantages over depth alone, as evidenced in our analysis of RQ3. While LSTM+GloVe achieves superior peak precision (90.73%) and F1 scores (87.91%) in

Table 6, HAN+GloVe consistently delivers the highest classification accuracy (84.71%) and demonstrates significantly better error patterns in paired McNemar tests (