Analyzing the Dynamics of Customer Behavior: A New Perspective on Personalized Marketing through Counterfactual Analysis

Abstract

1. Introduction

2. Time Series Segmentation Algorithms

3. Materials and Methods

3.1. Data Understanding and Preprocessing

- Recency (R) is the number of days between the customer's last purchase before the current time interval and their first purchase within it;

- Frequency (F) is the total number of purchases within the current time interval;

- Monetary (M) is the total amount spent on purchases during the current time interval;

- Lifespan (L) is the number of days between the customer's first purchase and their last purchase in the current time interval.
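The four definitions can also be stated compactly. The notation below is introduced here only for illustration and follows the steps of Algorithm A1 in Appendix A. For customer c and time interval k starting on date s_k, let T_c be all of the customer's purchases and P_{c,k} the subset made within interval k. Purchase p has date d_p and value v_p, and date differences are measured in days. R is undefined when the customer has no purchase before s_k.

```latex
\begin{aligned}
R_{c,k} &= \min_{p \in P_{c,k}} d_p \;-\; \max_{p \in T_c,\; d_p < s_k} d_p, \\
F_{c,k} &= \lvert P_{c,k} \rvert, \\
M_{c,k} &= \sum_{p \in P_{c,k}} v_p, \\
L_{c,k} &= \max_{p \in P_{c,k}} d_p \;-\; \min_{p \in T_c} d_p.
\end{aligned}
```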

3.2. Customer Behavior Segmentation

3.3. Customer Behavior Status Prediction

3.4. Counterfactual Analysis and Personalized Strategies

4. Results

4.1. Customer Purchasing Behavior Segmentation

4.2. Customer Purchasing Status Prediction

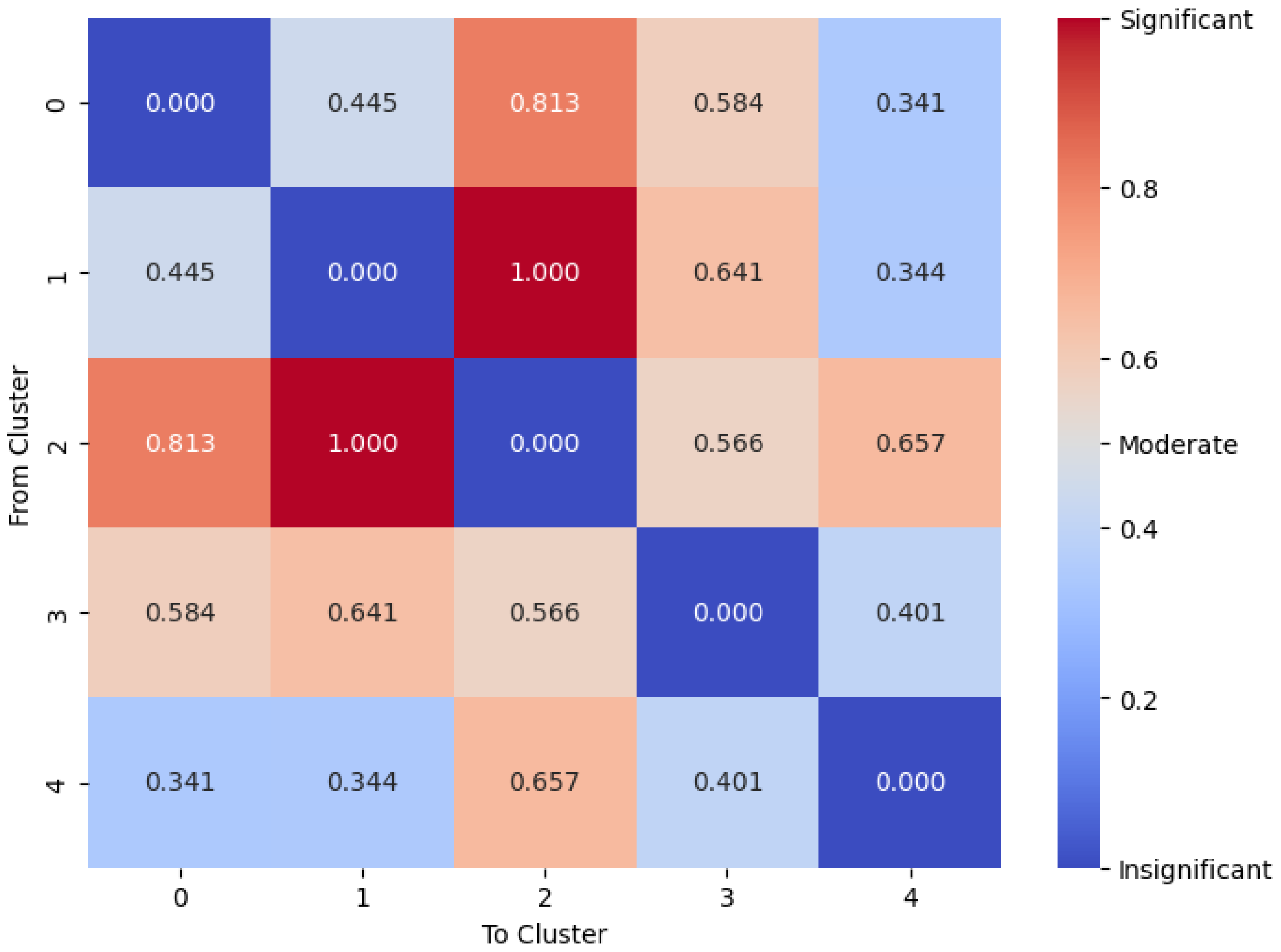

4.3. Counterfactual Analysis and Personalized Strategies

5. Discussion and Managerial Implications

6. Conclusions

7. Limitations and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Customer Purchasing Behavior Feature Extraction Algorithm

| Algorithm A1: Extracting Behavioral Feature Vectors |
|---|
| Data: A chronologically ordered sequence of transactions in which each row contains the columns (CustomerId, InvoiceNo., TransactionDate, Value) |
| Result: Feature vectors consisting of the R, F, M, and L values calculated for each customer in each month |
| 1 Read the input data into df_data; |
| 2 Extract the year and month from the TransactionDate column; |
| 3 Group df_data by customer, year, and month into df_rfml; |
| 4 foreach unique (customer, year, month) in df_rfml do |
| 5 Find the first and last purchase dates in the current month; |
| 6 Find the most recent purchase date before the current month; |
| 7 R ← difference in days between the first purchase date in the current month and the previous purchase date; |
| 8 Temp ← count and sum of the customer's purchases in df_data within the current month; |
| 9 F ← Temp_count; |
| 10 M ← Temp_sum; |
| 11 L ← difference in days between the last purchase date in the current month and the customer's first purchase date; |
| 12 end |
| 13 return df_rfml |
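Algorithm A1 can be sketched in Python with only the standard library. This is a minimal illustration under stated assumptions, not the authors' implementation: each transaction is a (CustomerId, InvoiceNo., TransactionDate, Value) tuple, F counts transaction rows, and R is left as None for a customer's first active month, where no earlier purchase exists. The function name extract_rfml and the sample invoice data are hypothetical.

```python
from bisect import bisect_left
from collections import defaultdict
from datetime import date
from typing import Dict, List, Optional, Tuple

# One input row: (CustomerId, InvoiceNo., TransactionDate, Value)
Transaction = Tuple[str, str, date, float]
# One output vector: (R, F, M, L); R is None when no earlier purchase exists (assumption)
Features = Tuple[Optional[int], int, float, int]


def extract_rfml(transactions: List[Transaction]) -> Dict[Tuple[str, int, int], Features]:
    """Compute monthly R, F, M, L feature vectors per customer (after Algorithm A1)."""
    # Steps 2-3: group rows by customer, year, and month
    groups: Dict[Tuple[str, int, int], List[Transaction]] = defaultdict(list)
    # Sorted purchase history per customer, used for steps 6 and 11
    history: Dict[str, List[date]] = defaultdict(list)
    for row in transactions:
        customer, _, day, _ = row
        groups[(customer, day.year, day.month)].append(row)
        history[customer].append(day)
    for days in history.values():
        days.sort()

    features: Dict[Tuple[str, int, int], Features] = {}
    for (customer, year, month), rows in groups.items():  # step 4
        dates = [row[2] for row in rows]
        first_in_month, last_in_month = min(dates), max(dates)  # step 5
        past = history[customer]
        # Index of the first purchase on or after the 1st of this month
        idx = bisect_left(past, date(year, month, 1))
        previous = past[idx - 1] if idx > 0 else None  # step 6
        recency = (first_in_month - previous).days if previous is not None else None  # step 7
        frequency = len(rows)  # steps 8-9: number of transactions this month
        monetary = sum(row[3] for row in rows)  # step 10
        lifespan = (last_in_month - past[0]).days  # step 11
        features[(customer, year, month)] = (recency, frequency, monetary, lifespan)
    return features  # step 13: one feature vector per customer-month


if __name__ == "__main__":
    # Hypothetical sample data for a single customer
    sample = [
        ("C1", "536365", date(2011, 1, 5), 20.0),
        ("C1", "536366", date(2011, 1, 20), 30.0),
        ("C1", "536400", date(2011, 2, 10), 15.0),
        ("C1", "536401", date(2011, 2, 25), 5.0),
    ]
    for key, vector in sorted(extract_rfml(sample).items()):
        print(key, vector)
```

With the sample above, January 2011 yields (None, 2, 50.0, 15) and February 2011 yields (21, 2, 20.0, 51): 21 days separate the last January purchase from the first February one, and 51 days separate the customer's first purchase from the last February purchase.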

References

- Xiahou, X.; Harada, Y. B2C E-Commerce Customer Churn Prediction Based on K-Means and SVM. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 458–475. [Google Scholar] [CrossRef]

- Kotler, P.; Armstrong, G. Principles of Marketing, 11th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2006. [Google Scholar]

- Huang, S.-C.; Chang, E.-C.; Wu, H.-H. A Case Study of Applying Data Mining Techniques in an Outfitter’s Customer Value Analysis. Expert Syst. Appl. 2009, 36, 5909–5915. [Google Scholar] [CrossRef]

- Chang, E.-C.; Huang, S.-C.; Wu, H.-H. Using K-means Method and Spectral Clustering Technique in an Outfitter’s Value Analysis. Qual. Quant. 2010, 44, 807–815. [Google Scholar] [CrossRef]

- Alves Gomes, M.; Meisen, T. A Review on Customer Segmentation Methods for Personalized Customer Targeting in E-commerce Use Cases. Inf. Syst. e-Bus. Manag. 2023, 21, 527–570. [Google Scholar] [CrossRef]

- Aksoy, N.C.; Kabadayi, E.T.; Yilmaz, C.; Alan, A.K. A Typology of Personalisation Practices in Marketing in the Digital Age. J. Mark. Manag. 2021, 37, 1091–1122. [Google Scholar] [CrossRef]

- Sarkar, M.; Puja, A.R.; Chowdhury, F.R. Optimizing Marketing Strategies with RFM Method and K-Means Clustering-Based AI Customer Segmentation Analysis. J. Bus. Manag. Stud. 2024, 6, 54–60. [Google Scholar] [CrossRef]

- Dibb, S. Market Segmentation: Strategies for Success. Mark. Intell. Plan. 1998, 16, 394–406. [Google Scholar] [CrossRef]

- Miguéis, V.L.; Camanho, A.S.; Cunha, J.F.E. Customer Data Mining for Lifestyle Segmentation. Expert Syst. Appl. 2012, 39, 9359–9366. [Google Scholar] [CrossRef]

- Safari, F.; Safari, N.; Montazer, G.A. Customer Lifetime Value Determination Based on RFM Model. Mark. Intell. Plan. 2016, 34, 446–461. [Google Scholar] [CrossRef]

- Manjunath, K.; Suhas, Y.; Kashef, R. Distributed Clustering Using Multi-Tier Hierarchical Overlay Super-Peer Peer-to-Peer Network Architecture for Efficient Customer Segmentation. Electron. Commer. Res. Appl. 2021, 47, 101040. [Google Scholar] [CrossRef]

- Calvet, L.; Ferrer, A.; Gomes, M.I.; Juan, A.A.; Masip, D. Combining Statistical Learning with Metaheuristics for the Multi-Depot Vehicle Routing Problem with Market Segmentation. Comput. Ind. Eng. 2016, 94, 93–104. [Google Scholar] [CrossRef]

- Murray, P.W.; Agard, B.; Barajas, M.A. Market Segmentation Through Data Mining: A Method to Extract Behaviors from a Noisy Data Set. Comput. Ind. Eng. 2017, 109, 233–252. [Google Scholar] [CrossRef]

- Song, M.; Zhao, X.; E, H.; Ou, Z. Statistics-based CRM Approach via Time Series Segmenting RFM on Large-scale Data. Knowl. Based Syst. 2017. [Google Scholar] [CrossRef]

- Abbasimehr, H.; Shabani, M. A New Methodology for Customer Behavior Analysis using Time Series Clustering: A Case Study on a Bank’s Customers. Kybernetes, 2019; ahead-of-print. [Google Scholar] [CrossRef]

- Guney, S.; Peker, S.; Turhan, C. A Combined Approach for Customer Profiling in Video on Demand Services Using Clustering and Association Rule Mining. IEEE Access 2020, 8, 84326–84335. [Google Scholar] [CrossRef]

- Abbasimehr, H.; Shabani, M. A New Framework for Predicting Customer Behavior in Terms of RFM by Considering the Temporal Aspect Based on Time Series Techniques. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 515–531. [Google Scholar] [CrossRef]

- Galal, M.; Salah, T.; Aref, M.; ElGohary, E. Smart Support System for Evaluating Clustering as a Service: Behavior Segmentation Case Study. Int. J. Intell. Comput. Inf. Sci. 2022, 22, 35–43. [Google Scholar] [CrossRef]

- Abbasimehr, H.; Sheikh Baghery, F. A Novel Time Series Clustering Method with Fine-tuned Support Vector Regression for Customer Behavior Analysis. Expert Syst. Appl. 2022, 204, 117584. [Google Scholar] [CrossRef]

- Abbasimehr, H.; Bahrini, A. An Analytical Framework Based on the Recency, Frequency, and Monetary Model and Time Series Clustering Techniques for Dynamic Segmentation. Expert Syst. Appl. 2022, 192, 116373. [Google Scholar] [CrossRef]

- Sun, Y.; Liu, H.; Gao, Y. Research on Customer Lifetime Value Based on Machine Learning Algorithms and Customer Relationship Management Analysis Model. Heliyon 2023, 9, e13384. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.-H. Apply Robust Segmentation to the Service Industry Using Kernel-Induced Fuzzy Clustering Techniques. Expert Syst. Appl. 2010, 37, 8395–8400. [Google Scholar] [CrossRef]

- Wei, J.-T.; Lin, S.-Y.; Wu, H.-H. A Review of the Application of RFM Model. Afr. J. Bus. Manag. Dec. Spec. Rev. 2010, 4, 4199–4206. [Google Scholar]

- Djurisic, V.; Kascelan, L.; Rogic, S.; Melovic, B. Bank CRM Optimization Using Predictive Classification Based on the Support Vector Machine Method. Appl. Artif. Intell. 2020, 34, 941–955. [Google Scholar] [CrossRef]

- Dogan, O.; Ayçin, E.; Bulut, Z. Customer Segmentation by Using RFM Model and Clustering Methods: A Case Study in the Retail Industry. Int. J. Contemp. Econ. Adm. Sci. 2018, 8, 1–19. [Google Scholar]

- Amoozad Mahdiraji, H.; Tavana, M.; Mahdiani, P.; Abbasi Kamardi, A.A. A Multi-Attribute Data Mining Model for Rule Extraction and Service Operations Benchmarking. Benchmarking Int. J. 2022, 29, 456–495. [Google Scholar] [CrossRef]

- Parvaneh, A.; Tarokh, M.; Abbasimehr, H. Combining Data Mining and Group Decision Making in Retailer Segmentation Based on LRFMP Variables. Int. J. Ind. Eng. Prod. Res. 2014, 25, 197–206. [Google Scholar]

- Peker, S.; Kocyigit, A.; Eren, P.E. LRFMP Model for Customer Segmentation in the Grocery Retail Industry: A Case Study. Mark. Intell. Plan. 2017, 35, 544–559. [Google Scholar] [CrossRef]

- Wei, J.T.; Lin, S.Y.; Yang, Y.Z.; Wu, H.H. The Application of Data Mining and RFM Model in Market Segmentation of a Veterinary Hospital. J. Stat. Manag. Syst. 2019, 22, 1049–1065. [Google Scholar] [CrossRef]

- Akhondzadeh-Noughabi, E.; Albadvi, A. Mining the Dominant Patterns of Customer Shifts Between Segments by Using Top-k and Distinguishing Sequential Rules. Manag. Decis. 2015, 53, 1976–2003. [Google Scholar] [CrossRef]

- Mosaddegh, A.; Albadvi, A.; Sepehri, M.M.; Teimourpour, B. Dynamics of Customer Segments: A Predictor of Customer Lifetime Value. Expert Syst. Appl. 2021, 172, 114606. [Google Scholar] [CrossRef]

- Seret, A.; vanden Broucke, S.K.; Baesens, B.; Vanthienen, J. A Dynamic Understanding of Customer Behavior Processes Based on Clustering and Sequence Mining. Expert Syst. Appl. 2014, 41, 4648–4657. [Google Scholar] [CrossRef]

- Xu, D.; Tian, Y. A Comprehensive Survey of Clustering Algorithms. Ann. Data Sci. 2015, 2, 165–193. [Google Scholar] [CrossRef]

- Aghabozorgi, S.; Seyed Shirkhorshidi, A.; Ying Wah, T. Time-Series Clustering—A Decade Review. Inf. Syst. 2015, 53, 16–38. [Google Scholar] [CrossRef]

- Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Introduction to Cluster Analysis; Wiley Online Library: Hoboken, NJ, USA, 1990. [Google Scholar]

- Sakoe, H.; Chiba, S. A Dynamic Programming Approach to Continuous Speech Recognition. In Proceedings of the Seventh International Congress on Acoustics, Budapest, Hungary, 18–26 August 1971; Volume 3, pp. 65–69. [Google Scholar]

- Sakoe, H.; Chiba, S. Dynamic Programming Algorithm Optimization for Spoken Word Recognition. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 43–49. [Google Scholar] [CrossRef]

- Wang, X.; Smith, K.; Hyndman, R. Characteristic-Based Clustering for Time Series Data. Data Min. Knowl. Discov. 2006, 13, 335–364. [Google Scholar] [CrossRef]

- Ezugwu, A.E.; Ikotun, A.M.; Oyelade, O.O.; Abualigah, L.; Agushaka, J.O.; Eke, C.I.; Akinyelu, A.A. A Comprehensive Survey of Clustering Algorithms: State-of-the-Art Machine Learning Applications, Taxonomy, Challenges, and Future Research Prospects. Eng. Appl. Artif. Intell. 2022, 110, 104743. [Google Scholar] [CrossRef]

- Ahmad, A.; Dey, L. A K-Means Clustering Algorithm for Mixed Numeric and Categorical Data. Data Knowl. Eng. 2007, 63, 503–527. [Google Scholar] [CrossRef]

- MacQueen, J. Some Methods for Classification and Analysis of Multivariate Observations. Available online: https://digitalassets.lib.berkeley.edu/math/ucb/text/math_s5_v1_article-17.pdf (accessed on 22 April 2024).

- Fayyad, U.; Reina, C.; Bradley, P.S. Initialization of Iterative Refinement Clustering Algorithms. In Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 27–31 August 1998; pp. 194–198. [Google Scholar]

- Antunes, C.; Oliveira, A.L. Temporal data mining: An overview. In Proceedings of the KDD Workshop on Temporal Data Mining, San Francisco, CA, USA, 26–29 August 2001; pp. 1–13. [Google Scholar]

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Kluwer Academic Publishers: London, UK, 1981. [Google Scholar]

- Dunn, J.C. A Fuzzy Relative of the ISODATA Process and Its Use in Detecting Compact Well-Separated Clusters. Cybern. Syst. 1973, 3, 32–57. [Google Scholar] [CrossRef]

- Krishnapuram, R.; Joshi, A.; Nasraoui, O.; Yi, L. Low-Complexity Fuzzy Relational Clustering Algorithms for Web Mining. IEEE Trans. Fuzzy Syst. 2001, 9, 595–607. [Google Scholar] [CrossRef]

- Shavlik, J.W.; Dietterich, T.G. Readings in Machine Learning; Morgan Kaufmann: Cambridge, MA, USA, 1990. [Google Scholar]

- Wang, X.; Smith, K.A.; Hyndman, R.J.; Alahakoon, D. A Scalable Method for Time Series Clustering. Available online: https://api.semanticscholar.org/CorpusID:8168184 (accessed on 21 April 2024).

- Andreopoulos, B.; An, A.; Wang, X. A Roadmap of Clustering Algorithms: Finding a Match for a Biomedical Application. Brief. Bioinform. 2009, 10, 297–314. [Google Scholar] [CrossRef]

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996. [Google Scholar]

- Chandrakala, S.; Chandra, C. A Density-Based Method for Multivariate Time Series Clustering in Kernel Feature Space. In Proceedings of the IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 1885–1890. [Google Scholar]

- Wang, W.; Yang, J.; Muntz, R. STING: A Statistical Information Grid Approach to Spatial Data Mining. In Proceedings of the International Conference on Very Large Data Bases, San Francisco, CA, USA, 25–29 August 1997; pp. 186–195. [Google Scholar]

- Sheikholeslami, G.; Chatterjee, S.; Zhang, A. WaveCluster: A Multi-Resolution Clustering Approach for Very Large Spatial Databases. In Proceedings of the International Conference on Very Large Data Bases, San Francisco, CA, USA, 24–27 August 1998; pp. 428–439. [Google Scholar]

- Aghabozorgi, S.; Wah, T.Y.; Herawan, T.; Jalab, H.A.; Shaygan, M.A.; Jalali, A. A Hybrid Algorithm for Clustering of Time Series Data Based on Affinity Search Technique. Sci. World J. 2014, 2014, 562194. [Google Scholar] [CrossRef] [PubMed]

- Lai, C.-P.; Chung, P.-C.; Tseng, V.S. A Novel Two-Level Clustering Method for Time Series Data Analysis. Expert Syst. Appl. 2010, 37, 6319–6326. [Google Scholar] [CrossRef]

- Venkataanusha, P.; Anuradha, C.; Murty, P.S.R.C.; Kiran, C.S. Detecting Outliers in High-Dimensional Datasets Using Z-Score Methodology. Int. J. Innov. Technol. Explor. Eng. (IJITEE) 2019, 9, 48–53. [Google Scholar] [CrossRef]

- Chikodili, N.B.; Abdulmalik, M.D.; Abisoye, O.A.; Bashir, S.A. Outlier Detection in Multivariate Time Series Data Using a Fusion of K-Medoid, Standardized Euclidean Distance, and Z-Score. In Information and Communication Technology and Applications. ICTA 2020. Communications in Computer and Information Science; Misra, S., Muhammad-Bello, B., Eds.; Springer: Cham, Switzerland, 2021. [Google Scholar] [CrossRef]

- Yıldız, E.; Güngör Şen, C.; Işık, E.E. A Hyper-Personalized Product Recommendation System Focused on Customer Segmentation: An Application in the Fashion Retail Industry. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 571–596. [Google Scholar] [CrossRef]

- Hughes, A.M. Boosting Response with RFM. Mark. Tools 1996, 3, 48. [Google Scholar]

- Hosseini, S.M.S.; Maleki, A.; Gholamian, M.R. Cluster Analysis Using a Data Mining Approach to Develop CRM Methodology to Assess Customer Loyalty. Expert Syst. Appl. 2010, 37, 5259–5264. [Google Scholar] [CrossRef]

- Wei, J.-T.; Lin, S.-Y.; Weng, C.-C.; Wu, H.-H. A Case Study of Applying LRFM Model in Market Segmentation of a Children’s Dental Clinic. Expert Syst. Appl. 2012, 39, 5529–5533. [Google Scholar] [CrossRef]

- Li, D.-C.; Dai, W.-L.; Tseng, W.-T. A Two-Stage Clustering Method to Analyze Customer Characteristics to Build Discriminative Customer Management: A Case of Textile Manufacturing Business. Expert Syst. Appl. 2011, 38, 7186–7191. [Google Scholar] [CrossRef]

- Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques; Elsevier Science: Amsterdam, The Netherlands, 2011. [Google Scholar]

- Paparrizos, J.; Gravano, L. k-Shape: Efficient and Accurate Clustering of Time Series. ACM SIGMOD Rec. 2016, 45, 69–76. [Google Scholar] [CrossRef]

- Pearl, J. Theoretical Impediments to Machine Learning with Seven Sparks from the Causal Revolution. Technical report. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018. [Google Scholar]

- Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Cheng, D.; Zhu, Q.; Huang, J.; Wu, Q.; Yang, L. A Novel Cluster Validity Index Based on Local Cores. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 985–999. [Google Scholar] [CrossRef]

- Desgraupes, B. Clustering Indices. Available online: https://cran.r-project.org/web/packages/clusterCrit/vignettes/clusterCrit.pdf (accessed on 21 April 2024).

- Paparrizos, J.; Gravano, L. Fast and Accurate Time-Series Clustering. ACM Trans. Database Syst. 2017, 42, 13. [Google Scholar] [CrossRef]

- Ramos, P.; Santos, N.; Rebelo, R. Performance of State Space and ARIMA Models for Consumer Retail Sales Forecasting. Robot. Comput. Integr. Manuf. 2015, 34, 151–163. [Google Scholar] [CrossRef]

- Chouakria, A.D.; Nagabhushan, P.N. Adaptive Dissimilarity Index for Measuring Time Series Proximity. Adv. Data Anal. Classif. 2007, 1, 5–21. [Google Scholar] [CrossRef]

- Montero, P.; Vilar, J.A. TSclust: An R Package for Time Series Clustering. J. Stat. Softw. 2014, 62, 1–43. [Google Scholar] [CrossRef]

- Batista, G.; Keogh, E.; Tataw, O.; Alves de Souza, V. CID: An Efficient Complexity-Invariant Distance for Time Series. Data Min. Knowl. Discov. 2013, 28, 634–669. [Google Scholar] [CrossRef]

- Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:2008.05756. [Google Scholar]

- Martínez, F.; Charte, F.; Frías, M.P.; Martínez-Rodríguez, A.M. Strategies for Time Series Forecasting with Generalized Regression Neural Networks. Neurocomputing 2022, 491, 509–521. [Google Scholar] [CrossRef]

| Study | Features | Methodology | Dataset | Conclusion |
|---|---|---|---|---|
| Song et al. [14] 2017 | Recency, frequency, and monetary | K-means clustering is applied to the RFM model in various time intervals. Multiple Correspondence Analysis (MCA) is used to regularize the three dimensions of the RFM model into unified clustering centers for better business implications. | The dataset comes from a telecom service and includes approximately 481,905,749 rows of data. | The study proposed a methodology that uses the RFM model and MCA to analyze large-scale data, with clustering experiments on both the RFM dimensions and time intervals. It concluded that fitting the RFM model separately in each time interval guarantees precise performance. |
| Abbasimehr and Shabani [15] 2019 | Recency, frequency, and monetary | Ward's clustering method was used with four dissimilarity measures: Euclidean distance, COR, CORT, and DTW. The validity of the obtained clusters was assessed using the silhouette index. | The data comprise seven months of transactions by a bank's B2B POS customers: 259,000 transactions involving 2531 customers. | The study identified clusters of customer purchasing behavior (CPB) through time series clustering and then analyzed them to reveal distinctive customer characteristics, enabling tailored marketing recommendations for each group. |
| Guney et al. [16] 2020 | Length, recency, frequency, monetary, and product category | This study uses the LRFMP model to categorize customers, normalizes the data using min-max normalization, and identifies customer segments using K-means clustering. It then applies the Apriori algorithm for association rule mining to determine the content preferences of each segment. | The dataset is sourced from an Internet protocol television operator and comprises set-top box (STB) data from 195,493 subscribers over two years. | Customers were categorized into four groups and their rental preferences were determined. The study suggests that the proposed approach effectively identifies customer groups, aiding the development of suitable marketing strategies. |
| Abbasimehr and Shabani [17] 2021 | Recency, frequency, and monetary | Agglomerative hierarchical clustering with Ward's method is used with several distance measures, including Euclidean distance, CORT, DTW, and CID. Time series forecasting methods such as ARIMA, SMA, and KNN are then used to predict customers' future clusters. | The dataset includes eleven months of customers' POS transactions from a bank. | The proposed segment-level customer behavior forecasting method outperforms all individual forecasters in terms of symmetric mean absolute percentage error (SMAPE) and is designed to enhance customer relationship management. |
| Galal et al. [18] 2022 | Behavioral and purchase features (not explicitly stated) | Hierarchical clustering is used to segment customers by purchasing behavior, with the linkage criterion and the number of clusters tuned to optimize performance. The silhouette score is used to assess cluster quality. | The dataset comprises 1659 customers in the food sector and the 5685 orders they placed. | The authors proposed a system to automate the clustering process. Tested on two datasets from the supermarket and restaurant industries, it achieved a silhouette score of 0.69, indicating cohesive, well-separated customer segments. |
| Abbasimehr and Sheikh Baghery [19] 2022 | Time series features, including measures of central tendency, variability, etc. | It proposes a new method combining Laplacian feature ranking and hybrid Support Vector Regression for customer behavior analysis, evaluated with four clustering algorithms: k-medoids, K-means, fuzzy c-means, and self-organizing maps. | The data comprise eleven months of POS transactions in various guilds, such as home appliance stores, grocery shops, and supermarkets. | The optimal clustering algorithm varied across datasets: K-means was most effective for grocery data, and k-medoids performed best for home appliance and supermarket data. In terms of mean SMAPE, the proposed method outperformed other methods in certain clusters and achieved superior forecasting accuracy on the grocery and supermarket datasets. |
| Abbasimehr and Bahrini [20] 2022 | Recency, frequency, and monetary | Four distance measures (DTW, CID, CORT, and SBD) and three clustering algorithms (hierarchical, spectral, and k-shape) are used to identify customer groups with similar behavior. The silhouette and CID indexes are used to evaluate and select the best clustering results. Customer segments are then labeled and analyzed based on their behavior to inform marketing strategies. | It was derived from a bank over eleven months and consists of 2,156,394 transactions by consumers at grocery and appliance retailers. | Customers were categorized into four groups (high-value, middle-value, middle-to-low-value, and low-value) based on their behavior. Analyzing these segments revealed distinct behavioral patterns, providing insights for designing effective marketing strategies and enhancing customer lifetime value. |
| Sun et al. [21] 2023 | Recency, frequency, and monetary | The study uses the RFM model for customer classification, the BG/NBD model to predict expected purchases, and the gamma-gamma model to forecast consumption amounts. Customer lifetime value (CLV) is then calculated from these predictions. | The study uses the online retail dataset, generated from non-store online retail transactions registered in the UK. | The research concluded that the proposed method improves the accuracy of customer value estimation, user-level correlation analysis, and the explanation of intermediary effects. It can provide marketing strategies for diverse customer segments while ensuring the quantity and quality of these groups. |

| Number of Clusters | Number of Samples in Each Cluster |
|---|---|
| 3 | 271,125; 10; 17 |
| 4 | 271,122; 7; 16; 7 |
| 5 | 271,121; 3; 16; 7; 5 |
| 6 | 271,113; 3; 10; 7; 5; 14 |
| 7 | 271,105; 3; 10; 7; 5; 14; 8 |
| 8 | 271,109; 3; 10; 7; 11; 2; 7; 3 ¹ |
| 9 | 270,529; 3; 10; 7; 11; 2; 7; 3; 580 |

| K | Euclidean | Manhattan | Chebyshev | CORT | DTW | CID |
|---|---|---|---|---|---|---|
| 4 | 0.461 | 0.457 | 0.426 | 0.114 | 0.450 | 0.283 |
| 5 | 0.486¹ | 0.485 | 0.382 | 0.206 | 0.392 | 0.200 |
| 6 | 0.427 | 0.458 | 0.425 | 0.205 | 0.373 | 0.227 |
| 7 | 0.469 | 0.461 | 0.446 | 0.151 | 0.385 | 0.227 |
| 8 | 0.438 | 0.470 | 0.404 | 0.122 | 0.417 | 0.221 |
| 9 | 0.447 | 0.427 | 0.416 | 0.128 | 0.428 | 0.215 |
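The column headers name six dissimilarity measures for comparing customers' behavioral time series. The sketch below gives one standard-library Python version of each for two univariate series, assuming equal lengths for the lock-step measures. It is illustrative rather than the paper's configuration: CORT follows the adaptive form of Chouakria and Nagabhushan with tuning function f(u) = 2 / (1 + exp(k·u)) and k = 2 (the default of TSclust's diss.CORT), DTW is unconstrained with an absolute-difference local cost, and CID follows Batista et al. The function names and the MEASURES mapping are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence

Series = Sequence[float]


def euclidean(x: Series, y: Series) -> float:
    """Lock-step Euclidean distance between equal-length series."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def manhattan(x: Series, y: Series) -> float:
    """Sum of absolute point-wise differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def chebyshev(x: Series, y: Series) -> float:
    """Largest absolute point-wise difference."""
    return max(abs(a - b) for a, b in zip(x, y))


def _diffs(x: Series) -> List[float]:
    """First differences x[t+1] - x[t]."""
    return [x[t + 1] - x[t] for t in range(len(x) - 1)]


def cort(x: Series, y: Series, k: float = 2.0) -> float:
    """Adaptive dissimilarity f(CORT) * Euclidean, with f(u) = 2 / (1 + exp(k * u)).
    CORT is the first-order temporal correlation of the two series, in [-1, 1]."""
    dx, dy = _diffs(x), _diffs(y)
    norm = math.sqrt(sum(a * a for a in dx)) * math.sqrt(sum(b * b for b in dy))
    corr = sum(a * b for a, b in zip(dx, dy)) / norm if norm > 0 else 0.0
    return 2.0 / (1.0 + math.exp(k * corr)) * euclidean(x, y)


def dtw(x: Series, y: Series) -> float:
    """Unconstrained dynamic time warping with |a - b| as the local cost."""
    n, m = len(x), len(y)
    acc = [[math.inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(x[i - 1] - y[j - 1])
            acc[i][j] = local + min(acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1])
    return acc[n][m]


def cid(x: Series, y: Series) -> float:
    """Complexity-invariant distance: Euclidean distance scaled by
    max(CE(x), CE(y)) / min(CE(x), CE(y)), where CE is the length of the differenced series."""
    ce_x = math.sqrt(sum(d * d for d in _diffs(x)))
    ce_y = math.sqrt(sum(d * d for d in _diffs(y)))
    low, high = min(ce_x, ce_y), max(ce_x, ce_y)
    if low == 0.0:
        # A flat series compared with a non-flat one gets an infinite correction factor
        factor = 1.0 if high == 0.0 else math.inf
    else:
        factor = high / low
    return euclidean(x, y) * factor


MEASURES: Dict[str, Callable[[Series, Series], float]] = {
    "Euclidean": euclidean,
    "Manhattan": manhattan,
    "Chebyshev": chebyshev,
    "CORT": cort,
    "DTW": dtw,
    "CID": cid,
}

if __name__ == "__main__":
    a = [3.0, 5.0, 4.0, 6.0]  # illustrative monthly values for one customer
    b = [2.0, 4.0, 5.0, 5.0]
    for name, fn in MEASURES.items():
        print(f"{name}: {fn(a, b):.3f}")
```

CORT rescales the Euclidean distance so that series moving in the same direction look closer (f < 1) and series moving in opposite directions look farther apart (f > 1), while CID penalizes pairs whose "complexity" differs.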

| Cluster | Size | Cluster Compactness |
|---|---|---|
| 0 | 1264 | 63.98 |
| 1 | 13,552 | 116.02 |
| 2 | 2731 | 135.06 |
| 3 | 1443 | 210.41 |
| 4 | 6684 | 108.67 |

| Classifier | Accuracy | F1-Score | Cohen’s Kappa | sMAPE |
|---|---|---|---|---|
| Random Forest | 0.969 | 0.953 | 0.982 | 2.821 |
| Logistic Regression | 0.998¹ | 0.999 | 0.999 | 0.345 |
| Decision Tree | 0.986 | 0.959 | 0.981 | 0.361 |
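The four columns can be computed from true and predicted cluster labels as sketched below. The averaging used for F1 and the way sMAPE was applied to predicted statuses are not stated in this section, so the sketch assumes macro-averaged F1 and the common sMAPE definition (in percent, with a 0/0 term counted as zero), applied directly to numeric labels. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence

Labels = Sequence[int]  # cluster (status) identifiers


def accuracy(y_true: Labels, y_pred: Labels) -> float:
    """Share of predictions that match the true status."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Labels, y_pred: Labels) -> float:
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    scores: List[float] = []
    for c in set(y_true) | set(y_pred):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def cohens_kappa(y_true: Labels, y_pred: Labels) -> float:
    """(p_o - p_e) / (1 - p_e), where p_e is chance agreement from the label marginals."""
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if p_e == 1.0:
        return math.nan  # undefined when both sides use one identical class
    return (p_o - p_e) / (1.0 - p_e)


def smape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Symmetric MAPE in percent; a term with both values zero counts as 0."""
    total = 0.0
    for a, f in zip(actual, forecast):
        denom = (abs(a) + abs(f)) / 2.0
        total += abs(f - a) / denom if denom else 0.0
    return 100.0 * total / len(actual)


if __name__ == "__main__":
    truth = [0, 0, 1, 1, 2, 2]
    pred = [0, 0, 1, 2, 2, 2]
    print(accuracy(truth, pred), macro_f1(truth, pred), cohens_kappa(truth, pred), smape(truth, pred))
```

For true labels [0, 0, 1, 1, 2, 2] and predictions [0, 0, 1, 2, 2, 2], these functions give an accuracy of 5/6, a macro F1 of about 0.822, and a Cohen's kappa of 0.75, since chance agreement from the marginals is 1/3.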

| Potential Scenarios | Current Status | Desired Status | ||

|---|---|---|---|---|

| Cluster 0 | Cluster 2 | Cluster 3 | Cluster 4 | |

| Current Status | X | |||

| X  X X | |||

| X  X X | |||

| X  X X | |||

| Potential Scenarios | Current Status | Undesired Status | ||

|---|---|---|---|---|

| Cluster 3 | Cluster 0 | Cluster 2 | Cluster 4 | |

| Current Status | X | |||

| X | |||

| X | |||

| X  X X | |||

| X  X X | |||

| X  X X | |||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ebadi Jalal, M.; Elmaghraby, A. Analyzing the Dynamics of Customer Behavior: A New Perspective on Personalized Marketing through Counterfactual Analysis. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1660-1681. https://doi.org/10.3390/jtaer19030081

Ebadi Jalal M, Elmaghraby A. Analyzing the Dynamics of Customer Behavior: A New Perspective on Personalized Marketing through Counterfactual Analysis. Journal of Theoretical and Applied Electronic Commerce Research. 2024; 19(3):1660-1681. https://doi.org/10.3390/jtaer19030081

Chicago/Turabian Style: Ebadi Jalal, Mona, and Adel Elmaghraby. 2024. "Analyzing the Dynamics of Customer Behavior: A New Perspective on Personalized Marketing through Counterfactual Analysis" Journal of Theoretical and Applied Electronic Commerce Research 19, no. 3: 1660-1681. https://doi.org/10.3390/jtaer19030081

APA Style: Ebadi Jalal, M., & Elmaghraby, A. (2024). Analyzing the Dynamics of Customer Behavior: A New Perspective on Personalized Marketing through Counterfactual Analysis. Journal of Theoretical and Applied Electronic Commerce Research, 19(3), 1660-1681. https://doi.org/10.3390/jtaer19030081