Adopting Artificial Intelligence to Strengthen Legal Safeguards in Blockchain Smart Contracts: A Strategy to Mitigate Fraud and Enhance Digital Transaction Security

Abstract

1. Introduction

2. Related Works

2.1. Complexity of Evolved Architectures

2.2. Basic Concepts of CNNs

2.3. Integrated Approach to CNN Architecture Optimization

3. Main Approach

- RQ1: How can we effectively utilize CNN architectures to identify and mitigate the sophisticated fraud mechanisms within blockchain smart contracts?

- RQ2: What are the optimal CNN architectural configurations that maximize detection accuracy while ensuring computational efficiency for real-time applications in blockchain environments?

- Crossover operator (Algorithm 1): The crossover operator combines genetic information from two parent CNN architectures to produce offspring that may outperform both. Two high-performing parents are selected, and segments of their binary-encoded topologies are exchanged, so each offspring inherits successful traits from both parents. This recombination broadens the explored region of the architectural search space and can reach novel, effective configurations that manual design or isolated optimization might miss. Exploring these new regions is fundamental to the performance and robustness of the CNN: it lets the model adapt to the unique and complex patterns present in smart contract data, which improves its ability to detect fraudulent activities.

- Mutation operator (Algorithm 2): After crossover, the mutation operator introduces random changes into the newly generated offspring architectures. It selects random points within the binary-encoded topology of an offspring and flips bits (or applies other modifications) there, which alters aspects of the architecture such as the number of layers, the arrangement of nodes, or the connections between them. This diversifies the population of CNN models, reaches configurations that might otherwise be overlooked, and helps avoid premature convergence on locally optimal solutions that may not represent the global optimum. By keeping the search broad, mutation increases the likelihood of discovering highly effective CNN architectures. The iterative cycle of crossover and mutation, followed by a repair phase that ensures all nodes are correctly connected, continuously refines the CNN model and enhances its ability to identify and mitigate fraudulent activities within smart contracts.
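The two operators described above can be illustrated with a minimal Python sketch. It assumes a one-point crossover and an independent per-bit flip; the text only says that segments are interchanged and bits are flipped, so both choices, along with the function names, the 6-bit example encodings, and the mutation rate of 0.1, are assumptions made for illustration rather than the configuration used in the paper.

```python
import random


def one_point_crossover(parent_a, parent_b, rng):
    """Exchange the tails of two equal-length bit lists at a random cut point."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must share the same encoding length")
    if len(parent_a) < 2:
        raise ValueError("encoding needs at least two bits to be cut")
    # The cut lies strictly inside the string, so each child mixes both parents.
    cut = rng.randint(1, len(parent_a) - 1)
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b


def bit_flip_mutation(genome, rate, rng):
    """Flip each bit independently with probability `rate` (assumed scheme)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must lie in [0, 1]")
    return [1 - bit if rng.random() < rate else bit for bit in genome]


# Hypothetical 6-bit encodings of two parent topologies.
rng = random.Random(42)
parent_a = [1, 0, 1, 1, 0, 0]
parent_b = [0, 1, 0, 0, 1, 1]
child_a, child_b = one_point_crossover(parent_a, parent_b, rng)
mutant = bit_flip_mutation(child_a, rate=0.1, rng=rng)
print(child_a, child_b, mutant)
```

A seeded `random.Random` instance is passed in explicitly so that a run can be reproduced. A low mutation rate keeps most inherited bits intact while still allowing occasional jumps to unexplored topologies.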

Algorithm 1. Crossover operator.

Algorithm 2. Mutation operator.
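The repair phase that follows crossover and mutation is described only as ensuring that all nodes are correctly connected. One way this could work is sketched below, under loudly stated assumptions: the bit string is taken to encode the upper-triangular adjacency of a directed acyclic graph over ordered nodes (in the style of Genetic CNN encodings), node 0 acts as the input, and the last node acts as the output. The helper names `edge_index` and `repair` are hypothetical, and the actual repair rule in the paper may differ.

```python
def edge_index(i, j):
    """Position of edge i -> j (with i < j) in the flattened bit string."""
    return j * (j - 1) // 2 + i


def repair(bits, num_nodes):
    """Return a copy of `bits` in which no node is left without an
    incoming or outgoing connection (assumed repair rule)."""
    expected = num_nodes * (num_nodes - 1) // 2
    if len(bits) != expected:
        raise ValueError(f"expected {expected} bits for {num_nodes} nodes")
    fixed = list(bits)
    last = num_nodes - 1
    # Every non-input node needs a predecessor; fall back to the input node.
    for j in range(1, num_nodes):
        if not any(fixed[edge_index(i, j)] for i in range(j)):
            fixed[edge_index(0, j)] = 1
    # Every non-output node needs a successor; fall back to the output node.
    for i in range(last):
        if not any(fixed[edge_index(i, j)] for j in range(i + 1, num_nodes)):
            fixed[edge_index(i, last)] = 1
    return fixed


# A fully disconnected 4-node topology gets the minimum wiring it needs.
repaired = repair([0, 0, 0, 0, 0, 0], num_nodes=4)
print(repaired)
```

Because the rule only adds edges, it never removes a connection produced by crossover or mutation, and applying it twice gives the same result as applying it once.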

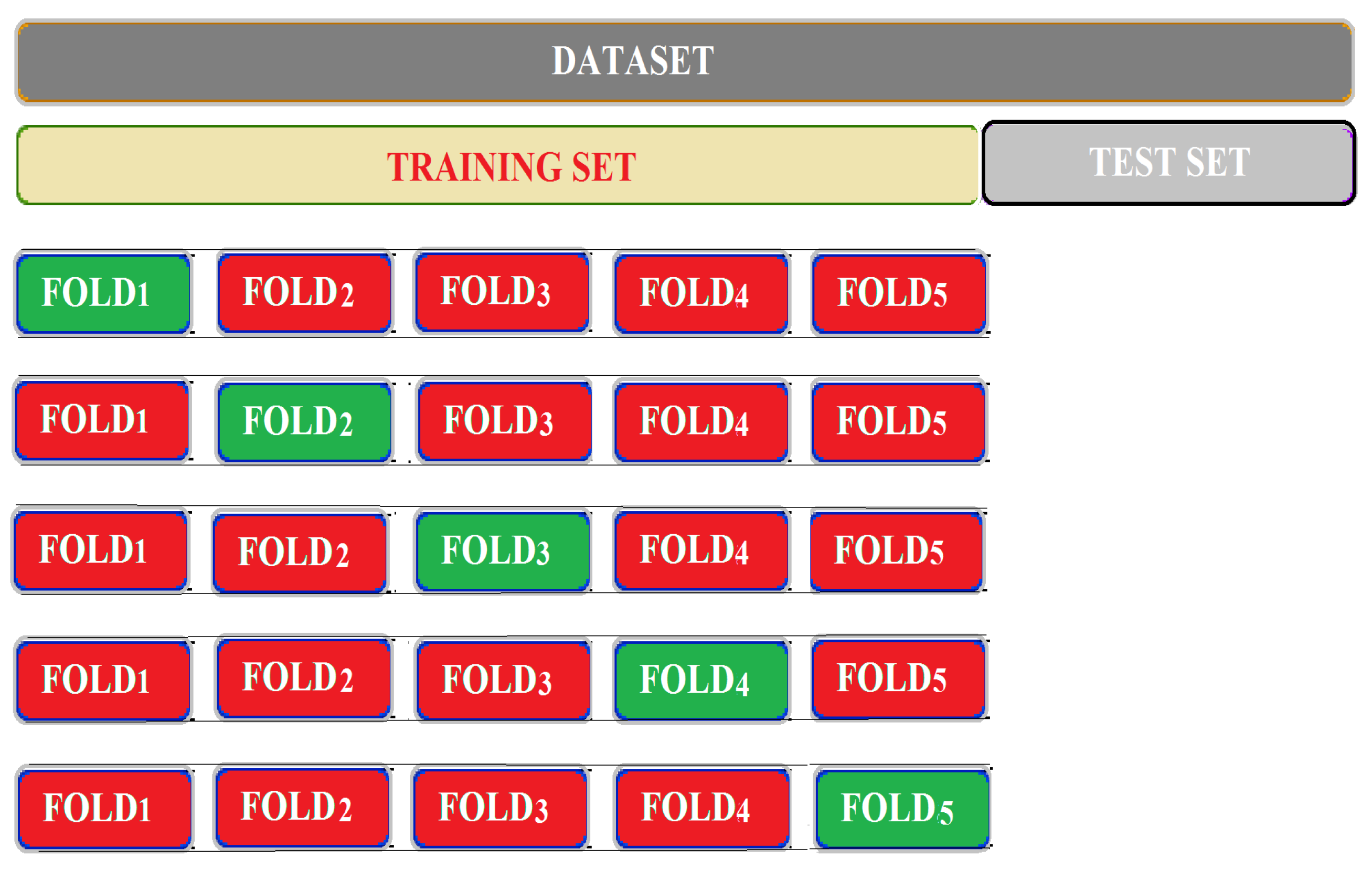

4. Experimentation

4.1. Benchmarking

4.2. Parameter Setup

4.3. Results

4.3.1. Benchmarking of Evolutionary Design

4.3.2. Discussion

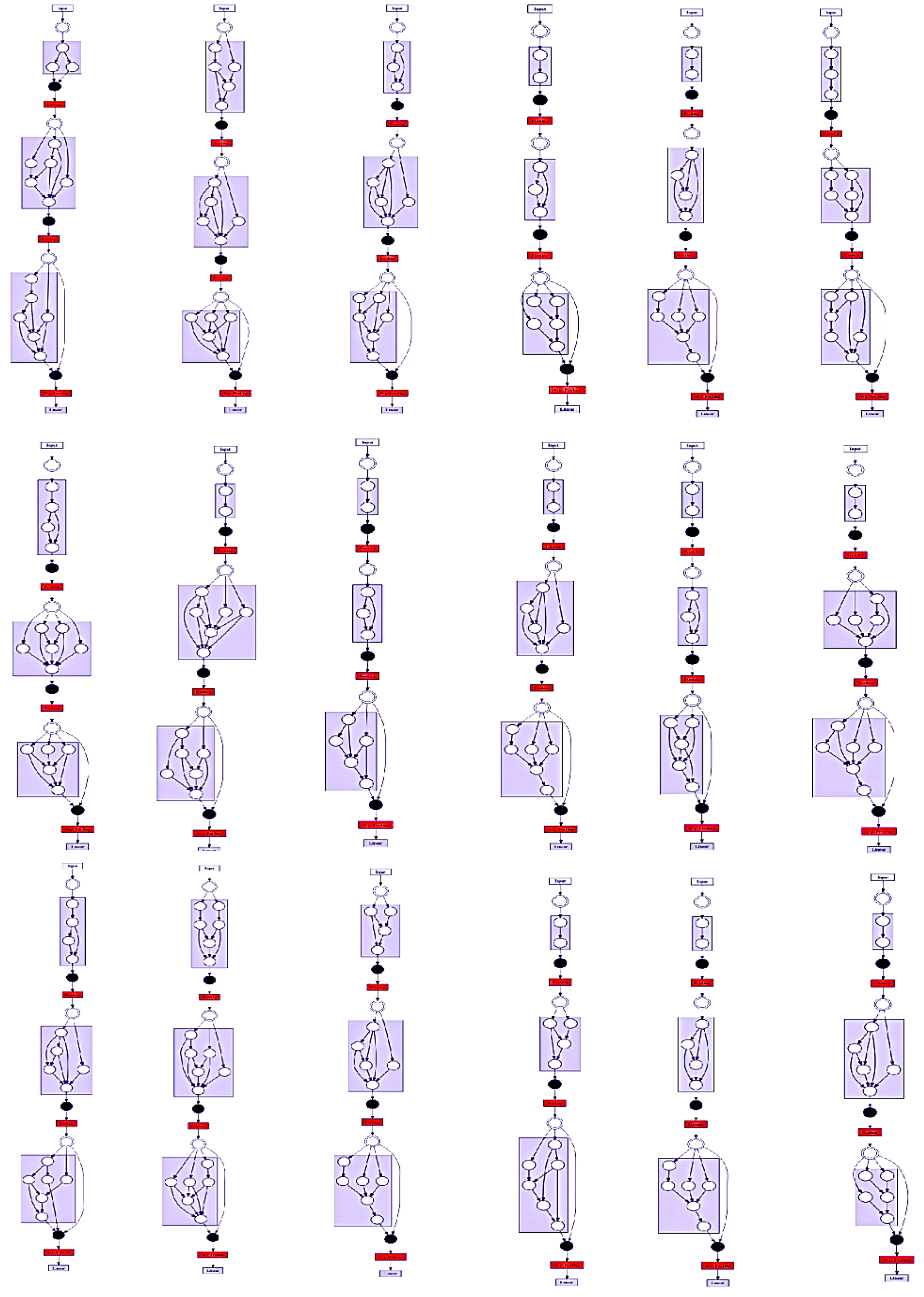

4.4. Brief Analysis of the Best Architectures

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Smith, J.D.; Jones, A.B. Blockchain Technology and Smart Contract Security: A Comprehensive Study. J. Blockchain Res. 2021, 7, 45–67.

2. Doe, R.; Roe, P.; Black, S. Evolving Methods in Detecting Fraud in Blockchain Transactions. Int. J. Cybersecur. Appl. Trends 2022, 5, 112–130.

3. Brown, C. Challenges in Traditional Fraud Detection Mechanisms in Blockchain. J. Digit. Financ. Fraud Prev. 2023, 4, 203–219.

4. Wilson, E.; Taylor, M. Pattern Recognition in Smart Contracts: A Neural Network Approach. J. Artif. Intell. Law 2021, 19, 377–402.

5. Patel, D.; Singh, H. Optimizing CNN Architectures for Blockchain Security Applications. IEEE Trans. Neural Networks Learn. Syst. 2022, 33, 2349–2363.

6. Zhao, Y.; Chung, T. Analyzing Smart Contract Vulnerabilities: An AI-Enhanced Framework. Adv. Comput. Intell. J. 2023, 15, 88–107.

7. Tran, B.; Xue, B.; Zhang, M. Adaptive multi-subswarm optimisation for feature selection on high-dimensional classification. In Proceedings of the Genetic and Evolutionary Computation Conference, Prague, Czech Republic, 13–17 July 2019; pp. 481–489.

8. Barros, R.C.; de Carvalho, A.C.; Freitas, A.A. HEAD-DT: Automatic Design of Decision-Tree Algorithms. In Automatic Design of Decision-Tree Induction Algorithms; SpringerBriefs in Computer Science; Springer: Cham, Switzerland, 2015; pp. 59–76.

9. Rosales-Perez, A.; Garcia, S.; Terashima-Marin, H.; Coello, C.A.C.; Herrera, F. Mc2esvm: Multiclass classification based on cooperative evolution of support vector machines. IEEE Comput. Intell. Mag. 2018, 13, 18–29.

10. Zhao, H.; Wang, Q.; Liu, C.; Shang, Y.; Wen, F.; Wang, F.; Liu, W.; Xiao, W.; Li, W. A role for the respiratory chain in regulating meiosis initiation in Saccharomyces cerevisiae. Genetics 2018, 208, 1181–1194.

11. Darwish, A.; Hassanien, A.E.; Das, S. A survey of swarm and evolutionary computing approaches for deep learning. Artif. Intell. Rev. 2020, 53, 1767–1812.

12. Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2902–2911.

13. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

14. Martín, A.; Lara-Cabrera, R.; Fuentes-Hurtado, F.; Naranjo, V.; Camacho, D. EvoDeep: A new evolutionary approach for automatic deep neural networks parametrisation. J. Parallel Distrib. Comput. 2018, 117, 180–191.

15. Kumar, R.; Lee, Y. Legal Implications of Artificial Intelligence in Digital Transactions. Law Technol. Rev. 2024, 12, 159–180.

16. Taylor, K.; Hughes, B.; Li, S. The Role of AI in Shaping Digital Contract Law. Int. Rev. Law Comput. Technol. 2023, 37, 75–92.

17. Johnson, G. Towards a New Era of Cybersecurity: Integrating CNN in Blockchain Platforms. J. Cybersecur. Blockchain 2024, 9, 50–72.

18. Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System. 2008. Available online: https://bitcoin.org/bitcoin.pdf (accessed on 12 April 2024).

19. Szabo, N. The Idea of Smart Contracts. Available online: http://www.fon.hum.uva.nl/rob/Courses/InformationInSpeech/CDROM/Literature/LOTwinterschool2006/szabo.best.vwh.net/smart.contracts.html (accessed on 12 April 2024).

20. Christidis, K.; Devetsikiotis, M. Blockchains and Smart Contracts for the Internet of Things. IEEE Access 2016, 4, 2292–2303.

21. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

22. Zoph, B.; Le, Q.V.; Vasudevan, V.; Shlens, J. BlockQNN: Efficient Design of Deep Learning Models with Reinforcement Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5393–5401.

23. Baker, B.; Gupta, O.; Naik, N.; Raskar, R. Designing Neural Network Architectures using Reinforcement Learning. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

24. Xie, L.; Yuille, A. Genetic CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1379–1388.

25. Pham, H.; Guan, M.Y.; Zoph, B.; Le, Q.V.; Dean, J. Efficient Neural Architecture Search via Parameter Sharing. In Proceedings of the 35th International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018; pp. 4095–4104.

26. Hsu, C.C.; Zhang, K.; Li, J.; Kumar, A.; Bhattacharya, S.; Kandasamy, K.; Winograd, T.; Lee, D.K. MONAS: Multi-Objective Neural Architecture Search using Reinforcement Learning. arXiv 2018, arXiv:1806.10332.

27. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

28. Rahul, M.; Gupta, H.P.; Dutta, T. A survey on deep neural network compression: Challenges, overview, and solutions. arXiv 2020, arXiv:2010.03954.

29. Francisco, E.; Fernandes, J.; Yen, G.G. Pruning Deep Convolutional Neural Networks Architectures with Evolution Strategy. Inf. Sci. 2021, 552, 29–47.

30. Hao, L.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710.

31. Luo, J.; Wu, J.; Lin, W. ThiNet: A filter level pruning method for deep neural network compression. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 5058–5066.

32. Denton, E.L.; Zaremba, W.; Bruna, J.; LeCun, Y.; Fergus, R. Exploiting linear structure within convolutional networks for efficient evaluation. In Proceedings of the NIPS, Montreal, QC, Canada, 8–13 December 2014; pp. 1269–1277.

33. Hu, Y.; Sun, S.; Li, J.; Wang, X.; Gu, Q. A novel channel pruning method for deep neural network compression. arXiv 2018, arXiv:1805.11394.

34. He, Y.; Zhang, X.; Sun, J. Channel pruning for accelerating very deep neural networks. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 1389–1397.

35. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

36. Chen, S.; Lin, L.; Zhang, Z.; Gen, M. Evolutionary netarchitecture search for deep neural networks pruning. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 189–196.

37. Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. arXiv 2015, arXiv:1510.00149.

38. He, X.; Zhou, Z.; Thiele, L. Multi-task zipping via layer-wise neuron sharing. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 6016–6026.

39. Cai, H.; Chen, T.; Zhang, W.; Yu, Y.; Wang, J. Efficient Architecture Search by Network Transformation. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018; pp. 2787–2794.

40. Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

41. Miikkulainen, R.; Liang, J.; Meyerson, E.; Rawal, A.; Fink, D.; Francon, O.; Raju, B.; Shahrzad, H.; Navruzyan, A.; Duffy, N.; et al. Evolving Deep Neural Networks. In Proceedings of the Artificial Intelligence in the Age of Neural Networks and Brain Computing, Honolulu, HI, USA, 27–30 January 2019; pp. 293–312.

42. Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized Evolution for Image Classifier Architecture Search. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Honolulu, HI, USA, 27 January–1 February 2019; pp. 4780–4789.

43. Kim, H.; Lee, J.Y.; Jung, B.; Rho, H.J.; Han, J.; Yoon, J.H.; Kim, E. NEMO: Neuro-Evolution with Multiobjective Optimization of Deep Neural Network for Speed and Accuracy. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO), Berlin, Germany, 15–19 July 2017; pp. 419–426.

44. Elsken, T.; Metzen, J.H.; Hutter, F. Neural Architecture Search: A Survey. In Proceedings of the Journal of Machine Learning Research, Long Beach, CA, USA, 9–15 June 2019; Volume 20, pp. 1–21.

45. Liu, C.; Zoph, B.; Neumann, M.; Shlens, J.; Hua, W.; Li, L.J.; Fei-Fei, L.; Yuille, A.; Huang, J.; Murphy, K. Progressive Neural Architecture Search. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 19–35.

46. Gao, S.; Zhuang, Z.; Zhang, C.; Lin, H.; Tan, M.; Zhao, J.; Shao, L. Parameterized Pruning via Partial Path Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11041–11050.

47. Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable Architecture Search. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

48. Kandasamy, K.; Neiswanger, W.; Schneider, J.; Poczos, B.; Xing, E.P. Neural Architecture Search with Bayesian Optimisation and Optimal Transport. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 2016–2025.

49. Luo, R.; Tian, F.; Qin, T.; Chen, E.; Liu, T.Y. Neural Architecture Optimization. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 7816–7827.

50. Chen, X.; Dong, X.; Tan, M.; Chen, K.; Pang, Y.; Li, Y.; Yu, S. Progressive Differentiable Architecture Search: Bridging the Depth Gap between Search and Evaluation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1294–1303.

51. Liu, X.; Deng, Z.; Yang, Y. Recent progress in semantic image segmentation. Artif. Intell. Rev. 2019, 52, 1089–1106.

52. Xie, D.; Zhang, L.; Bai, L. Deep learning in visual computing and signal processing. Appl. Comput. Intell. Soft Comput. 2017, 2017, 1320780.

53. Sainath, T.N.; Mohamed, A.R.; Kingsbury, B.; Ramabhadran, B. Deep convolutional neural networks for LVCSR. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8614–8618.

54. Deng, L.; Yu, D. Deep learning: Methods and applications. Foundations and Trends in Signal Processing 2014, 7, 197–387.

55. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

56. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

57. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

58. Huang, G.; Liu, Z.; Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

59. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

60. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

61. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

62. Al-Sahaf, H.; Bi, Y.; Chen, Q.; Lensen, A.; Mei, Y.; Sun, Y.; Tran, B.; Xue, B.; Zhang, M. A survey on evolutionary machine learning. J. R. Soc. New Zealand 2019, 49, 205–228.

63. Cheung, B.; Sable, C. Hybrid evolution of convolutional networks. In Proceedings of the 2011 10th International Conference on Machine Learning and Applications and Workshops, Honolulu, HI, USA, 18–21 December 2011; pp. 293–297.

64. Shinozaki, T.; Watanabe, S. Structure discovery of deep neural network based on evolutionary algorithms. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing, South Brisbane, Australia, 19–24 April 2015; pp. 4979–4983.

65. Mirjalili, S. Evolutionary algorithms and neural networks. Stud. Comput. Intell. 2019, 780, 43–53.

66. Lu, Z.; Whalen, I.; Boddeti, V.; Dhebar, Y.; Deb, K.; Goodman, E.; Banzhaf, W. NSGA-Net: Neural architecture search using multi-objective genetic algorithm. In Proceedings of the Genetic and Evolutionary Computation Conference, Prague, Czech Republic, 13–17 July 2019; pp. 419–427.

67. Liang, J.; Guo, Q.; Yue, C.; Qu, B.; Yu, K. A self-organizing multi-objective particle swarm optimization algorithm for multimodal multi-objective problems. In Proceedings of the International Conference on Swarm Intelligence, Rome, Italy, 29–31 October 2018; pp. 550–560.

68. Garcia, C.; Delakis, M. Convolutional face finder: A neural architecture for fast and robust face detection. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1408–1423.

| Method | Hardware Used | GPU Time | Objective(s) |
|---|---|---|---|
| BlockQNN [22] | 32 Nvidia 1080Ti | 3 Days | Accuracy |
| MetaQNN [23] | 10 Nvidia | 8–10 Days | Accuracy |
| EAS [39] | 5 Nvidia 1080Ti | 2 Days | Accuracy |
| ENAS [25] | 1 Nvidia 1080Ti | 16 Hours | Accuracy |
| MONAS [26] | Nvidia 1080Ti | - | Accuracy/Power |
| NASNet [27] | 500 Nvidia P100 | 2000 h | Accuracy |
| Zoph and Le [40] | 800 Nvidia K80 | 22,400 h | Accuracy |
| CoDeepNEAT [41] | 1 Nvidia 980 | 5–7 Days | Accuracy |
| AmoebaNet [42] | 450 Nvidia K40 | ~7 Days | Accuracy |
| NEMO [43] | 60 Nvidia Tesla M40 GPUs | 6–8 Days | Accuracy/Latency |
| LEMONADE [44] | Titan X GPUs | 56 Days | Accuracy |
| PNAS [45] | - | - | Accuracy |
| PPP-Net [46] | Nvidia Titan X Pascal | 14 Days | Accuracy/Params/FLOPS/Time |
| Liu et al. [47] | 200 Nvidia P100 | - | Accuracy |
| GeNet [24] | 10 GPUs | 17 Days | Accuracy |
| NASBOT [48] | 2–4 Nvidia 980 | 13 Days | Accuracy |
| NAO [49] | 370 GPUs | 1 Week | Accuracy |
| DPC [50] | 200 Nvidia V100 | 1 Day | Accuracy |
| DARTS [47] | 1 Nvidia 1080Ti | 1.5–4 Days | Accuracy |

| Architecture | Fraud Detection Error | #Params | GPU Days |
|---|---|---|---|
| Genetic-CNN | 7.15 | - | 20 |
| CNN-GA | 5.12 | 3.0 M | 40 |
| NSGA-3 | 3.45 | 2.5 M | 30 |
| NSGA-Net | 2.89 | 4.5 M | 29 |
| Bi-CNN-D-C | 2.50 | 2.0 M | 32 |
| Our Approach | 2.10 | 1.3 M | 34 |
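The table above reports three cost-like quantities per architecture (error, parameter count, and GPU days), which ties in with RQ2's trade-off between detection accuracy and computational efficiency. As an illustrative reading only, and not an analysis taken from the source, the following sketch checks which rows are Pareto non-dominated when all three values are to be minimized. The function names `dominates` and `pareto_front` are hypothetical, and Genetic-CNN is left out because its parameter count is not reported.

```python
# (name, error, parameters in millions, GPU days), copied from the table.
rows = [
    ("CNN-GA", 5.12, 3.0, 40),
    ("NSGA-3", 3.45, 2.5, 30),
    ("NSGA-Net", 2.89, 4.5, 29),
    ("Bi-CNN-D-C", 2.50, 2.0, 32),
    ("Our Approach", 2.10, 1.3, 34),
]


def dominates(a, b):
    """True if `a` is no worse than `b` everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(entries, objectives=slice(1, None)):
    """Names of entries not dominated on the selected (minimized) columns."""
    front = []
    for entry in entries:
        others = (e for e in entries if e is not entry)
        if not any(dominates(o[objectives], entry[objectives]) for o in others):
            front.append(entry[0])
    return front


front_all = pareto_front(rows)                     # error, params, GPU days
front_err_params = pareto_front(rows, slice(1, 3)) # error and params only
print(front_all)
print(front_err_params)
```

On error and parameter count alone, the proposed approach dominates every other listed row. Once GPU days are included, several rows remain non-dominated because they trade a higher error for a shorter search.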

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Louati, H.; Louati, A.; Almekhlafi, A.; ElSaka, M.; Alharbi, M.; Kariri, E.; Altherwy, Y.N. Adopting Artificial Intelligence to Strengthen Legal Safeguards in Blockchain Smart Contracts: A Strategy to Mitigate Fraud and Enhance Digital Transaction Security. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 2139-2156. https://doi.org/10.3390/jtaer19030104
