Abstract

The relationship between online review types and their outcomes is dynamic. However, it remains unclear how the influence of the three prominent kinds of online reviews (ratings, photos, and text) evolves from the initial to the later phases of the restaurant visit cycle. To address this gap in the literature, this study administered a two-wave survey in mainland China. Following the data collection methodology proposed in the consumption-system approach, the survey separated the pre-visit (T1) and post-visit (T2) stages of specific restaurant visits. Rating reviews’ direct impact on behavioral intentions remains significant across the visit cycle, whereas that of photo reviews is significant only before restaurant visits. Text reviews do not directly influence behavioral intentions before a restaurant visit; however, their impact increases after a visit, highlighting a difference in behavioral responses between the pre- and post-visit phases. Rating reviews’ direct effect on review skepticism is negatively significant after visiting a restaurant; moreover, review skepticism mediates the relationship between rating reviews and behavioral intentions after a visit.

1. Introduction

Consider the following scenario: a consumer is planning a get-together with friends and is searching for restaurants they have never visited. To select a location, the consumer sifts through various text, rating, and photo reviews of potential restaurants. The contents vary, prompting them to wonder which type is most accurate. Now consider: does this consumer’s behavioral response to a review change after visiting the restaurant? While the consumer may actively react to the reviews they initially engage with, does their perception of these reviews become diluted over subsequent restaurant visits?

This study aims to address these questions. While researchers have extensively examined online reviews and recognized their importance as a construct [,,,,], one recent study has demonstrated that the effects of online review types (text, rating, and photo reviews) are dynamic over time, indicating that consumers should not view all reviews as equally valid []. This suggests that review skepticism is similarly subject to change. In other words, if changes in online review content affect review skepticism, how do such changes directly or indirectly affect behavioral intentions over time? In particular, little research has examined whether the review skepticism–behavioral intention linkage strengthens, weakens, or remains unchanged during subsequent restaurant visits.

Previous studies have focused mainly on online reviews’ positive or negative effects [,,,]. However, a comprehensive understanding of these impacts is critical for establishing a theoretical mechanism that explains how consumers interpret online reviews and how review skepticism is enhanced or diluted over time. To this end, the stimulus-organism-response (SOR) model and a coping framework provide an important foundation for extending the study of emotional responses to stimuli from its traditional cross-sectional focus to a longitudinal one. To date, these theories have been applied primarily in cross-sectional studies, but extending them is warranted given the evidence that emotional responses to review stimuli change during subsequent episodes. Thus, our analysis will help managers predict changes in behavioral intentions and allow researchers to discern how the impacts of the three types of online reviews differ over time.

Using two fundamental approaches, we sought to identify the evolutionary mechanism of behavioral intentions and their drivers. First, we investigated how they evolve from before to after restaurant visits during consumption episodes. As behavioral intentions are updated through subsequent consumption phases [,], the overall structural relationships between online reviews and review outcomes should likewise change from before to after restaurant visits. Nevertheless, empirical evidence on this question remains scarce. Second, we updated the SOR mechanism and coping framework by exploring temporal and carryover effects that researchers have yet to investigate. Our examination of the similarities and differences in these effects reveals differences in the magnitude and significance of these changes.

This study makes two theoretical contributions to the academic literature. First, we expand the theoretical framework for analyzing, from a cross-sectional perspective, how the three online review types affect review skepticism before a restaurant visit and subsequently impact behavioral intentions. In doing so, we provide important insight into how consumers’ judgments of online reviews evolve as drivers of behavioral reactions when these reviews are ambiguous or viewed with skepticism. Second, we advance online review and consumer behavior research by identifying an evolutionary mechanism that comprises two consumption phases: consumers’ responses to online reviews before and after restaurant visits. While previous studies have emphasized the importance of the sequential process between online reviews and their outcomes [,,,], our research, by accounting for the actual dynamics of online reviews that arise from variation in review type, lays a foundation for addressing how consumer responses to reviews change across consumption episodes.

This article commences with an overview of marketing construct dynamics and an explanation of online reviews and their outcomes. We then outline how we adapted the SOR model and coping framework and develop this study’s hypotheses. Following this, we describe our two-wave (T1 and T2) design and test our proposed hypotheses. Finally, we compare our findings concerning the pre- and post-consumption phases and discuss their theoretical and practical implications.

2. The Dynamics of Online Reviews

To date, most online review research has been cross-sectional. While recent studies have highlighted the dynamism of marketing constructs [,], few have applied this perspective to online reviews [,]. Furthermore, researchers have generally agreed that behavioral responses should be approached from an evolutionary perspective that considers the function of time [,]. For example, Duan et al. [] demonstrate that the effects of E-WOM dilute with time. Nevertheless, researchers have not yet reached a consensus on the direction in which these effects evolve. In particular, because of the temporal proximity between consumption experiences and online reviews [], the effects of online review types are likely to strengthen or weaken over time.

Supporting our approach and expanding the key theoretical rationales in the literature requires a full understanding of online reviews’ dynamic impacts on review skepticism and behavioral intentions. First, the SOR model can explain the online review–review skepticism–behavioral response linkage. This mechanism emphasizes that certain stimuli influence consumers’ internal evaluations, which in turn facilitate behavioral responses, as an evolutionary step in consumer behavior []. Because external stimuli influence behavioral intentions (or responses), online reviews can significantly stimulate and shape consumers’ evaluations [,]. Here, the organism refers to the internal structure that links external stimuli and behavioral responses [,]. Thus, behavioral responses derive from a series of stimuli and the organism’s evaluations (e.g., emotional or cognitive judgments), resulting in various responses. That is, text, rating, and photo reviews may produce different degrees of stimulation, meaning that evaluations may change during consumption episodes.

The second theoretical foundation is the coping framework, which helps elucidate the impacts of online reviews and shifts in consumer behavior []. For example, when choosing a new restaurant, consumers evaluate informational cues based on online review types. When assessing them, they experience stressful feelings (e.g., skepticism or frustration) []. We thus argue that this process can increase or decrease skepticism, inducing behavioral intentions that may trigger further actions over time. This is consistent with Bagozzi’s coping framework [], which highlights the appraisal processes–emotional reactions–coping response linkage. As such, researchers examining tourism and hospitality services have increasingly focused on how consumers cope with chaotic situations (e.g., trying to select a restaurant while on vacation) through cognitive appraisal processes [,].

2.1. The Three Types of Online Reviews and the Differential Responses Elicited

Online reviews are important in consumers’ decisions about purchasing or using new or unfamiliar services. Reviews can be broadly divided into three categories: text-only, rating, and photo-only reviews. We excluded text reviews accompanied by photos, as they may overlap or be confused with pure text reviews. Here, a text review refers to a review containing only text; a rating review refers to a cognitive evaluation indicated mainly by a rating system, such as a certain number of stars, with some text (e.g., Yelp); and a photo review includes only pictures, without any text (e.g., Instagram).

More specifically, consumers’ evaluations depend on how they perceive these review types []. For example, while researchers have demonstrated that text reviews positively influence consumers’ behavioral intentions over time [], non-text reviews are more useful because of their informative persuasiveness []. Specifically, photo reviews provide salient visual cues that can enhance consumers’ understanding of the other review types. However, consumers often regard reviews without photos with skepticism or distrust []. Additionally, a recent study demonstrated that text reviews can improve ratings [], suggesting that the effects of the review types are mixed. Thus, the differences in review skepticism that reflect the specific characteristics of each online review type should be identified.

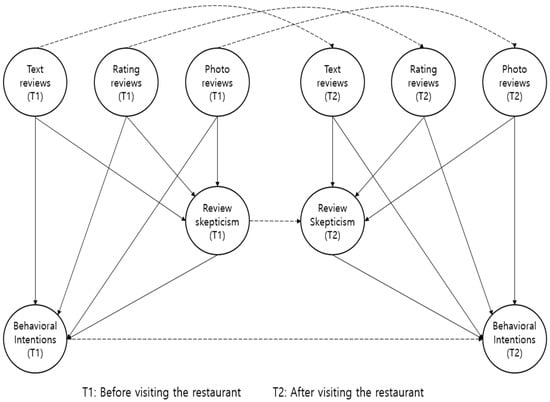

Figure 1 presents our main research approach. Changes in emotions and behavioral intentions deriving from specific stimuli should coincide with changes in attitudes over time. Because updated online reviews influence attitudes [], analyzing how the effects of individual review types differ over time (before and after a consumption event) is critical.

Figure 1.

Conceptual model. Note: the dotted lines indicate carryover effects.

2.2. Review Skepticism and Its Outcomes

Skepticism is a psychological tendency to be sensitive to negative evidence and to reject positive data []. The academic literature on consumer behavior defines it as a consumer’s psychological tendency to doubt, disbelieve, and question []. This attitude naturally extends to online reviews. In other words, it is a psychological condition that results in doubting a message []. Here, we define review skepticism as a consumer’s doubt concerning online reviews related to a particular service.

Researchers have already emphasized the importance of online reviews in decisions to use, visit, or purchase new services. Smith and Anderson [] found that nearly half of American consumers are uncertain whether online reviews are trustworthy and unbiased. Additionally, consumers believe firms often manipulate online reviews []. Recent studies have underscored the importance of consumer skepticism of online reviews in decision-making processes [,]. Indeed, the literature has convincingly demonstrated that consumer skepticism is critical in facilitating consumer withdrawal (Mendini et al., 2018), indicating that the cognitive accumulation of review skepticism potentially discourages consumption behavior [] and can hinder interactions with service providers []. This highlights the need for research that traces changes in review skepticism, together with its antecedents and outcomes, over time.

As the outcome of review skepticism, this study focuses its analysis on behavioral intentions. From a consumption system perspective, behavioral intentions can be influenced by repeated restaurant visit cycles []. These intentions include word-of-mouth (WOM) and loyalty intentions. Thus, we define behavioral intentions as a customer’s desire to visit a particular restaurant repeatedly, engage in WOM about it, and remain loyal to it.

2.3. Research Hypotheses

Although previous studies have quantified each review type’s effect on behavioral intentions [,,], these effects may weaken after a restaurant visit compared with beforehand. For example, a recent study has demonstrated that sensory reviews (e.g., photos) are less effective than other types after consumption experiences []. While customers may initially react to reviews, their perceptions of these reviews may be diluted after subsequent restaurant visits. Nonetheless, because text reviews are written after a consumption experience and are more detailed than other types [], we expect them to impact behavioral intentions more strongly than the other types. Thus, we propose the following hypothesis:

H1.

Text reviews more strongly impact behavioral intentions than the other two review types, but the impacts of all three dilute over time.

Consumers often reject good reviews and accept bad ones. This is because trust in online reviews is often low, and suspicious cases increase customer skepticism [,]. In particular, many review skeptics react more strongly to rating reviews and accept them more rapidly in later (as opposed to earlier) consumption stages [] because their earlier judgments are updated during subsequent consumption episodes. However, we argue that consumers tend to share more objective information through photos on Instagram or other social networking services (SNS), which helps decrease review skepticism. Altruism theory suggests that users help others reduce uncertainty by sharing useful and objective information (e.g., photo reviews) on social media []. As photos are usually posted before meals are consumed in restaurants, they may help reduce visually perceived risks or trust-related biases. Thus, we propose the following hypothesis:

H2.

The three types of online reviews negatively affect review skepticism, but the relationships strengthen as consumers’ review perceptions improve over time.

Numerous studies have shown that skeptical consumers respond negatively to certain products or services [,,]. While they suggest that skepticism can negatively affect behavioral intentions, they do not address exactly how this relationship evolves. Earlier marketing and communication studies emphasized shifts in review skepticism (i.e., from neutral to weak) [], whereas Huifeng and Ha [] demonstrated that the relationship between review skepticism and behavioral intentions strengthens over time. These findings indicate that the direction of this relationship’s evolution is mixed. Although we predict a negative linkage between review skepticism and behavioral intentions, we expect it to weaken or dilute over time. This is because (aside from one-time restaurant visits) the difference between actual consumption experiences and review skepticism may decrease during subsequent visits. Thus, we propose the following hypothesis:

H3.

The impact of review skepticism on behavioral intentions decreases over time as subsequent restaurant visits dilute negative perceptions of online reviews.

The presence of carryover effects is illustrated in Figure 1. Carryover effects refer to each construct’s direct effect on itself across subsequent periods (T1 → T2). The three online review types, review skepticism, and behavioral intentions at T1 are anchors that can affect subsequent judgments at T2 []. Adaptation-level theory suggests that consumer evaluations of earlier stimuli are key referents by which later stimuli are judged []. Because the impacts of online reviews in assisting consumer decision-making tend to weaken over time [], the three review types are unlikely to generate carryover effects. However, we anticipate that review skepticism and behavioral intentions will have positive carryover effects. Conceptually, attitude theory emphasizes that individuals’ current attitudes toward certain objects remain consistent with their past attitudes [], and the satisfaction cycle model also focuses on the repetitive nature of behavioral intentions []. Thus, we propose the following final hypothesis:

H4.

While review skepticism and behavioral intentions generate positive carryover effects, the three review types do not.

Notably, this study does not formally hypothesize the mediating role of review skepticism between the three online review types and behavioral intentions. However, the SOR model and coping framework underscore the mediating effect of emotional responses to review stimuli, which facilitate behavioral intentions. Through the mediating role of review skepticism, we argue that the three review types influence behavioral intentions more dynamically late in a restaurant visit cycle, when customers update their emotional responses to review stimuli [].

3. Methodology

3.1. Data Collection

This study tested the proposed hypotheses using data from a tracking study on customers’ successive restaurant visits. We administered a survey (see Appendix A) in mainland China using two time-lag intervals. Based on the data collection methodology proposed in the consumption-system approach [,], this survey was conducted by separating the pre- (T1) and post- (T2) stages of specific restaurant visits. Because mainland China is a vast and diverse area, we collected data specifically from first-tier cities such as Beijing, Shanghai, Guangzhou, and Shenzhen.

We excluded fast food establishments, instead focusing on casual and fine dining restaurants, as they had the most online text and rating reviews. To obtain an appropriate sample, we included only participants who had (1) searched for a new restaurant within the past month, (2) read all the text, rating, and photo reviews of that restaurant, and (3) made a reservation at that restaurant based on these reviews. To reduce respondent bias, we excluded those who already knew of a particular restaurant they had wanted to visit or had read online reviews before participating in the initial survey (T1). In addition, to verify the respondents’ eligibility, we asked several screening questions at the beginning of the survey.

A professional online research firm (www.wjx.cn; 8 February 2023) collected data for both phases, which were approximately two months apart. The first survey (T1) was conducted from early to mid-May 2023, and the second (T2) from mid-July to the end of July 2023. The interval between T1 (pre-visit) and T2 (post-visit) was limited mainly to minimize the dilution of participants’ consumption experiences. For example, while many participants visited 1–2 weeks after making a reservation, some visited at least one month later. However, the interval for all responses was kept under two months, primarily to reduce common method bias [,].

At T1, we contacted 2250 respondents who met the sample criteria through email and text messages. A total of 1032 participated in the T1 survey, but we excluded 36 who provided careless responses; thus, the T1 sample totaled 996. Two months later (T2), we administered the second survey to the same 996 participants. To increase the response rate at T2, we texted and emailed respondents twice. A total of 794 completed the T2 survey, but we excluded 56 who did not visit their preferred restaurant after the T1 survey and a further nine who provided careless responses. Thus, we used the remaining 729 viable responses to test our hypotheses (response rate = 32.4%). All participants reported on the same restaurant at T2 as they had at T1; for example, if Participant A reported on Restaurant B at T1, that participant also reported on Restaurant B at T2. The 729 responses therefore correspond to 729 participant–restaurant pairs. However, because some respondents visited the same restaurants as other respondents, the participants visited 517 different restaurants in total. Each participant reported on their restaurant once at T1 and once at T2.
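The sample flow described above can be checked with a few lines of arithmetic. The following Python sketch uses only the counts reported in this section; the function and key names are hypothetical, and the response rate is computed relative to the 2250 respondents contacted at T1.

```python
def sample_flow(contacted=2250, t1_participated=1032, t1_careless=36,
                t2_completed=794, t2_no_visit=56, t2_careless=9):
    """Reproduce the two-wave sample counts reported in Section 3.1."""
    t1_sample = t1_participated - t1_careless                # valid T1 responses
    t2_dropouts = t1_sample - t2_completed                   # T1 respondents absent at T2
    final_sample = t2_completed - t2_no_visit - t2_careless  # usable matched responses
    return {
        "t1_sample": t1_sample,
        "t2_dropouts": t2_dropouts,
        "final_sample": final_sample,
        "response_rate": final_sample / contacted,
    }


flow = sample_flow()
print(flow["t1_sample"], flow["final_sample"], f"{flow['response_rate']:.1%}")
# prints: 996 729 32.4%
```

The `t2_dropouts` value (202) is the group later compared with completers in the non-response analysis.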

Of the respondents, 56.8% (n = 406) were female, and participant ages ranged from 19 to 62, with a mean of 38.7. The main restaurant types visited were Chinese (43.1%), Western (27.7%), Japanese (6.4%), and other (22.8%). The distribution of restaurant types was representative, as it resembled the market share of restaurants in China [].

3.2. Measures

Because all measurement items were originally written in English, we translated them into Chinese and used back-translation to verify the translation. One marketing professor fluent in both English and Chinese and two Chinese doctoral students participated in this work. After the questionnaire was completed, 27 Chinese undergraduate students participated in a pilot test to ensure face validity. Based on the results, we concluded that there were no face validity issues.

All constructs were measured using multiple items from previously published scales. As shown in Table 1, text reviews were measured using two items adapted from Ong [] and Yayli and Bayram []. These items were used in a 2012 study; more recently, they have been adopted in multidisciplinary studies [,,,]. While previous studies have mainly evaluated text reviews using text mining techniques [,,], we used a scale because we focused on changes in temporal and carryover effects. For the same reason, we used two items partially modified from Gavilan et al. [] and Park et al. [] to measure rating reviews and two items adapted from Rieh [] and Ren et al. [] to measure photo reviews. Prior research has divided online reviews into two classifications: text reviews from a qualitative perspective and ratings from a quantitative perspective []. Therefore, this study approached online reviews by separating these two perspectives.

Table 1.

Measurement items and CFA results.

Our analysis measured review skepticism using four items adapted from Zhang et al. []. Their scales cover three main features: truthfulness, motivation, and identity; we focused on these features based on their guidelines. Finally, we measured behavioral intentions using three items adapted from Ryu and Jang []. All constructs were measured on a seven-point Likert-type scale (1 = strongly disagree to 7 = strongly agree; 1 = very unlikely to 7 = very likely) at T1 and T2.

3.3. Measurement Comparison

Each construct’s mean change is a useful metric for assessing consumer responses. Thus, we compared the means at T1 and T2. As shown in Table 2, our paired t-test analysis demonstrated that the means of most constructs, excluding text reviews, increased or decreased significantly. In other words, the mean scores changed substantially from before to after restaurant visits. However, all Cronbach’s alphas remained stable across consumption periods, indicating that internal consistency was stable [].
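The paired comparison and reliability check described above can be sketched with the Python standard library. This is a minimal illustration rather than the authors’ analysis script: `paired_t` returns the paired-samples t statistic and its degrees of freedom (a p-value additionally requires the t distribution, for example via `scipy.stats.ttest_rel`), and `cronbach_alpha` implements the usual formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed scale). The function names and all scores are hypothetical.

```python
import math
import statistics


def paired_t(before, after):
    """Paired-samples t statistic and degrees of freedom for (after - before)."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_d = statistics.fmean(diffs)
    se_d = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean_d / se_d, n - 1


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of item columns (one list of scores per item)."""
    k = len(items)
    item_var_sum = sum(statistics.variance(col) for col in items)
    totals = [sum(scores) for scores in zip(*items)]  # summed scale score per respondent
    return k / (k - 1) * (1 - item_var_sum / statistics.variance(totals))


# Hypothetical seven-point scores for the same five respondents at T1 and T2
t1_scores = [4, 5, 3, 6, 5]
t2_scores = [5, 6, 4, 6, 6]
t_stat, df = paired_t(t1_scores, t2_scores)

# Hypothetical two-item construct measured at one wave
alpha = cronbach_alpha([[4, 5, 3, 6, 5], [5, 5, 3, 6, 4]])
```

Computing alpha separately on the T1 and T2 item scores and comparing the two values mirrors the stability check reported in Table 2.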

Table 2.

Mean (SD) and Cronbach’s alphas for the scales at T1 and T2.

3.4. Common Method Bias

As most research designs inevitably involve tradeoffs, reducing the possibility of common method bias is critical []. To address this, we assessed the possibility of common method bias using the single-method factor approach []. Specifically, we created a common method factor that included indicators from all the main constructs and, in SmartPLS 4.0, decomposed each indicator’s variance into the portions substantively explained by its principal construct and by the method factor. The results showed that the average substantively explained indicator variance was 0.759, whereas the average method-based variance was 0.012, a ratio of approximately 63:1. Additionally, we assessed common method bias using Harman’s single-factor test at both time points. A single factor explained less than 50% of the variance in both periods (T1: 40.1%; T2: 38.6%). Thus, we concluded that common method bias was not an issue.
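Harman’s single-factor test is often approximated by the share of total variance captured by the first unrotated principal component of the item correlation matrix, that is, its largest eigenvalue divided by the number of items. The sketch below computes that share by power iteration and reproduces the substantive-to-method variance ratio reported above. It is an illustration under stated assumptions: the function name and the toy correlation matrix are hypothetical, not the study’s data, and power iteration assumes the start vector is not orthogonal to the leading eigenvector (which holds when all correlations are positive).

```python
import math


def first_component_share(corr, iters=1000):
    """Largest eigenvalue of a correlation matrix divided by its size (power iteration)."""
    p = len(corr)
    v = [1.0] * p
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    cv = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
    largest_eigenvalue = sum(a * b for a, b in zip(v, cv))  # Rayleigh quotient
    return largest_eigenvalue / p


# Toy 3-item correlation matrix with all inter-item correlations equal to 0.2
toy_corr = [[1.0, 0.2, 0.2],
            [0.2, 1.0, 0.2],
            [0.2, 0.2, 1.0]]
share = first_component_share(toy_corr)
single_factor_dominates = share >= 0.5  # conventional 50% threshold

# Ratio of average substantive to average method variance reported in the text
variance_ratio = 0.759 / 0.012
```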

3.5. Non-Response Bias

Because the response rate for the second wave (T2) was relatively low, we used an extrapolation method to test for non-response bias. Researchers [] recommend that although “a low response rate does not affect the validity of the data collected, it is still necessary to test for non-response bias” (p. 91). To this end, we compared respondents who participated in the initial survey but not in the second (n = 996 − 794 = 202) with those who participated in both (n = 202). Based on Armstrong and Overton’s extrapolation method [], we performed t-tests to compare the means of key constructs and demographic variables. The results revealed no significant differences at p < 0.05. Thus, we concluded that non-response bias was not a problem.

4. Results

4.1. Analytic Methods

We tested our research hypotheses using partial least squares (PLS) models. The PLS approach is suitable for analyzing complex theoretical models such as ours []. In particular, scholars have recommended using PLS modeling when estimating behavioral change dynamics [] or marketing construct evolution [,]. Furthermore, Roemer [] highlighted that using a single multi-period model with matched consumer surveys (i.e., a survey with the same respondents from T1 to T2) is critical when estimating carryover effects using the PLS approach. In line with these observations, we tested our model using SmartPLS 4.0.

4.2. Measurement Model

Anderson and Gerbing [] recommended that researchers use a two-stage analytical procedure when conducting structural equation modeling. The first stage verifies the outer loadings by establishing a measurement model that demonstrates internal consistency reliability as well as convergent and discriminant validity. As shown in Table 1, all loadings exceeded the critical value of 0.7, and all average variance extracted (AVE) values ranged from 0.68 to 0.86, exceeding the cutoff value of 0.5 []. These results demonstrate convergent validity. Meanwhile, the composite reliability and Cronbach’s alpha values were acceptable, indicating internal consistency reliability [].
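For standardized outer loadings λ1, …, λk of a reflective construct, AVE is the mean squared loading, and composite reliability (ρc) is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), assuming uncorrelated measurement errors. A minimal Python sketch of both formulas and the thresholds cited above follows; the loadings are hypothetical and are not the values in Table 1.

```python
def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(loading * loading for loading in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized loadings, assuming uncorrelated errors."""
    total = sum(loadings)
    error_variance = sum(1 - loading * loading for loading in loadings)
    return total ** 2 / (total ** 2 + error_variance)


hypothetical_loadings = [0.85, 0.88, 0.82]
checks = {
    "all_loadings_above_0.7": all(loading > 0.7 for loading in hypothetical_loadings),
    "ave_above_0.5": ave(hypothetical_loadings) > 0.5,
    "composite_reliability": composite_reliability(hypothetical_loadings),
}
```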

We checked for discriminant validity using Fornell and Larcker’s criterion [], which stipulates that the square root of each construct’s AVE should exceed its correlations with all other constructs. As detailed in Table 3, our results met this criterion. However, researchers have recently criticized the Fornell–Larcker criterion and proposed the heterotrait–monotrait ratio of correlations (HTMT) as an alternative [,], identifying an HTMT cutoff of 0.85 as the optimal assessment of discriminant validity. As Table 3 shows, our results also met the HTMT criterion, confirming discriminant validity.
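Both discriminant validity checks can be expressed compactly. In the sketch below, `htmt` divides the mean correlation between the items of two constructs by the geometric mean of their average within-construct item correlations, and `fornell_larcker_ok` checks that the square root of each construct’s AVE exceeds the inter-construct correlation. The function names and the toy item correlation matrix are hypothetical, and the sketch assumes items are coded so that within-construct correlations are positive and each construct has at least two items.

```python
import math
import statistics
from itertools import combinations


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs given an item correlation matrix."""
    hetero = statistics.fmean(corr[a][b] for a in items_i for b in items_j)
    mono_i = statistics.fmean(corr[a][b] for a, b in combinations(items_i, 2))
    mono_j = statistics.fmean(corr[a][b] for a, b in combinations(items_j, 2))
    return hetero / math.sqrt(mono_i * mono_j)


def fornell_larcker_ok(ave_i, ave_j, construct_corr):
    """True if sqrt(AVE) of both constructs exceeds their absolute correlation."""
    return min(math.sqrt(ave_i), math.sqrt(ave_j)) > abs(construct_corr)


# Toy matrix: items 0-1 belong to construct A, items 2-3 to construct B
toy = [[1.0, 0.7, 0.3, 0.3],
       [0.7, 1.0, 0.3, 0.3],
       [0.3, 0.3, 1.0, 0.6],
       [0.3, 0.3, 0.6, 1.0]]
ratio = htmt(toy, [0, 1], [2, 3])
passes_htmt = ratio < 0.85  # cutoff cited in the text
```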

Table 3.

Discriminant validity: correlations and HTMT results.

4.3. Structural Model

We applied a cross-validated predictive ability test (CVPAT) to assess the model’s predictive accuracy. Recent studies have suggested that significant CVPAT results are useful for assessing the out-of-sample predictive validity of structural models in specific contexts []. As presented in Table 4, CVPAT was conducted at both the overall model level and for each of the seven constructs []. A negative average loss difference indicates that the PLS model’s average prediction loss is smaller than that of the indicator-average benchmark, so the model is preferable []. At the overall model level, the results indicate strong predictive validity.
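The core quantity in CVPAT is the average difference in out-of-sample prediction loss between the PLS model and a naive benchmark. The sketch below is deliberately simplified: it assumes holdout predictions have already been produced and omits the k-fold cross-validation and repetition loop of the full procedure. It compares squared-error losses against the indicator-average (IA) benchmark, i.e., training-sample item means, and returns the mean loss difference with a one-sample t statistic. Function names and toy values are hypothetical.

```python
import math
import statistics


def avg_loss_difference(observed, model_pred, train_means):
    """Mean (model loss - IA loss) across holdout cases, plus its t statistic.

    observed, model_pred: one row of indicator values per holdout case.
    train_means: per-indicator means from the training data (the IA benchmark).
    """
    diffs = []
    for obs, pred in zip(observed, model_pred):
        loss_model = statistics.fmean((o - p) ** 2 for o, p in zip(obs, pred))
        loss_ia = statistics.fmean((o - m) ** 2 for o, m in zip(obs, train_means))
        diffs.append(loss_model - loss_ia)
    mean_diff = statistics.fmean(diffs)
    t_stat = mean_diff / (statistics.stdev(diffs) / math.sqrt(len(diffs)))
    return mean_diff, t_stat


# Toy holdout data: two indicators, three cases
observed = [[5, 6], [3, 4], [6, 6]]
model_pred = [[5.2, 5.8], [3.1, 4.3], [5.7, 6.1]]
toy_diff, toy_t = avg_loss_difference(observed, model_pred, train_means=[4, 5])
```

A negative `toy_diff` corresponds to the case described above, in which the model outpredicts the indicator-average benchmark.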

Table 4.

CVPAT results.

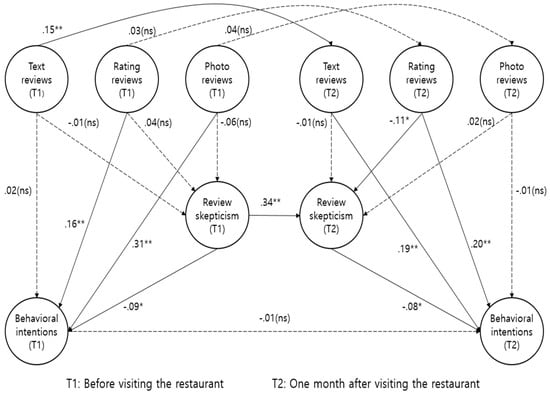

Figure 2 summarizes the overall structural estimates of our hypothesized model. Although it presents complex estimates, our key approach was to test the significance of the temporal, carryover, and mediated effects. Table 5 reports whether each relationship changed significantly over time; specifically, it shows whether these relationships strengthened, weakened, or remained unchanged over subsequent restaurant visits.

Figure 2.

Path estimates. Note: the dotted lines are not significant at p < 0.05.

Table 5.

Changes in path coefficients.

While text reviews’ direct effect on behavioral intentions was not significant at T1 (β = 0.02, p > 0.05), the same relationship had strengthened significantly at T2 (β = 0.19, p < 0.01). Rating reviews’ direct effect on behavioral intentions was significant at T1 (β = 0.16, p < 0.05) and remained significantly positive at T2 (β = 0.20, p < 0.01); however, the change was not significant (Δ β = 0.04, p > 0.05). Although the direct effect of photo reviews on behavioral intentions was significant at T1 (β = 0.31, p < 0.01), it was not significant at T2 (β = −0.01, p > 0.05), and the relationship weakened significantly (Δ β = −0.32, p < 0.01). This shift indicates that the relationship was diluted over time. Thus, the temporal effects differed across the three review types, strengthening for text reviews, remaining unchanged for rating reviews, and weakening for photo reviews, which partially supports H1.
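Whether a path changes between waves (Δ β) can be assessed by bootstrapping respondents, because the same people answered at T1 and T2. The sketch below illustrates the idea for a single standardized bivariate path using simulated data; it is not the full PLS estimation behind Table 5, and the simulated effect sizes, function names, and 95% percentile interval are assumptions for illustration.

```python
import math
import random
import statistics


def std_slope(x, y):
    """Standardized slope of y on x in a one-predictor model (equals Pearson's r)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_delta(x1, y1, x2, y2, n_boot=1000, seed=7):
    """Point estimate and 95% percentile CI of beta(T2) - beta(T1), resampling respondents."""
    rng = random.Random(seed)
    n = len(x1)
    estimate = std_slope(x2, y2) - std_slope(x1, y1)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # same respondents drawn for both waves
        draws.append(std_slope([x2[i] for i in idx], [y2[i] for i in idx])
                     - std_slope([x1[i] for i in idx], [y1[i] for i in idx]))
    draws.sort()
    return estimate, draws[int(0.025 * n_boot)], draws[int(0.975 * n_boot) - 1]


# Simulated panel: a positive path at T1 that vanishes at T2 (hypothetical values)
sim = random.Random(42)
n_resp = 400
x1 = [sim.gauss(0, 1) for _ in range(n_resp)]
y1 = [0.5 * a + sim.gauss(0, 1) for a in x1]
x2 = [0.6 * a + sim.gauss(0, 0.8) for a in x1]  # predictor carries over between waves
y2 = [sim.gauss(0, 1) for _ in range(n_resp)]
delta, ci_low, ci_high = bootstrap_delta(x1, y1, x2, y2)
```

An interval that excludes zero, as expected for this simulated weakening, corresponds to a significant change in the path coefficient.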

The direct effect of text reviews on review skepticism was not significant at T1 (β = −0.01, p > 0.05) or T2 (β = −0.01, p > 0.05), and the temporal effect remained unchanged over time. Meanwhile, although rating reviews’ direct effect on review skepticism was not significant at T1 (β = 0.04, p > 0.05), the same relationship was significant at T2 (β = −0.11, p < 0.05), and the effect decreased significantly over time (Δ β = −0.15, p < 0.01). Finally, although photo reviews’ direct effect on review skepticism was not significant at T1 (β = −0.06, p > 0.05) or T2 (β = 0.02, p > 0.05), it increased significantly over time (Δ β = 0.08, p < 0.05). Thus, H2 was partially supported.

The direct effect of review skepticism on behavioral intentions was significant at T1 (β = −0.09, p < 0.05) and at T2 (β = −0.08, p < 0.05). The relationship was negatively significant in both periods, and the effect remained unchanged over time (Δ β = 0.01, p > 0.05). As the relationship did not weaken over time, H3 was not supported. H4 focused on potential carryover effects, and our analysis showed that these effects occurred for text reviews (β = 0.15, p < 0.01) and review skepticism (β = 0.34, p < 0.01) but not for rating reviews, photo reviews, or behavioral intentions. Thus, H4 was partially supported.

Interestingly, our findings revealed that review skepticism mediated the effect of rating reviews on behavioral intentions at T2 (β = 0.09, p < 0.05). Because the direct effect of rating reviews on behavioral intentions was also significant in both periods, this mediation is partial: at T2, rating reviews influence behavioral intentions both directly and indirectly through review skepticism. This result suggests that, relative to the other review types, rating reviews play a distinctive role in reducing review skepticism.
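In the usual formulation, an indirect effect is the product of the stimulus-to-mediator path (a) and the mediator-to-outcome path controlling for the stimulus (b), with significance typically judged from a bootstrap confidence interval. The sketch below illustrates that logic with ordinary least squares on simulated observed scores; it is not the PLS estimation used in this study, and the function names, simulated coefficients, and 95% percentile interval are assumptions.

```python
import random
import statistics


def _center(values):
    m = statistics.fmean(values)
    return [v - m for v in values]


def slope(x, y):
    """OLS slope of y on x (with intercept)."""
    xc, yc = _center(x), _center(y)
    return sum(a * b for a, b in zip(xc, yc)) / sum(a * a for a in xc)


def two_predictor_slopes(x, m, y):
    """OLS slopes of y on x and m (with intercept), solving the 2x2 normal equations."""
    xc, mc, yc = _center(x), _center(m), _center(y)
    sxx = sum(a * a for a in xc)
    smm = sum(a * a for a in mc)
    sxm = sum(a * b for a, b in zip(xc, mc))
    sxy = sum(a * b for a, b in zip(xc, yc))
    smy = sum(a * b for a, b in zip(mc, yc))
    det = sxx * smm - sxm ** 2
    return (smm * sxy - sxm * smy) / det, (sxx * smy - sxm * sxy) / det


def indirect_effect(x, m, y):
    """a * b, where a: x -> m and b: m -> y controlling for x."""
    a = slope(x, m)
    _, b = two_predictor_slopes(x, m, y)
    return a * b


def bootstrap_ci(x, m, y, n_boot=1000, seed=3):
    """95% percentile bootstrap interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    return draws[int(0.025 * n_boot)], draws[int(0.975 * n_boot) - 1]


# Simulated data: ratings lower skepticism, skepticism lowers intentions (hypothetical)
sim = random.Random(11)
ratings = [sim.gauss(0, 1) for _ in range(400)]
skepticism = [-0.5 * r + sim.gauss(0, 1) for r in ratings]
intentions = [0.3 * r - 0.4 * s + sim.gauss(0, 1) for r, s in zip(ratings, skepticism)]
ab = indirect_effect(ratings, skepticism, intentions)
low, high = bootstrap_ci(ratings, skepticism, intentions)
```

Two negative paths yield a positive indirect effect, which is the pattern described above: rating reviews lower skepticism, lower skepticism raises intentions.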

5. Discussion

Although the academic literature highlights the importance of online review dynamics, researchers have mainly focused on the relationship between behavioral intentions and their drivers from a cross-sectional perspective. In particular, while researchers have extensively investigated the direct relationships between the three main online review types and their outcomes, studies examining review skepticism’s direct and indirect roles over time have been relatively limited. Our study fills this critical gap in the hospitality literature by providing evidence that the effects of the three online review types differ between the pre-visit and post-visit phases.

Regarding these two stages, this study provides novel insights into online reviews and consumer behavior. From a dynamic perspective on online reviews [], behavioral responses develop differently over time, and the impact of rating reviews (which can be processed cognitively more easily than the other online review types) persists. Put simply, while prior research [] found that rating reviews’ influence is positive from a cross-sectional perspective, our outcomes show that rating reviews’ impact on behavioral intentions remains significant both before and after restaurant visits (β = 0.16 at T1 vs. β = 0.20 at T2). In contrast, photo reviews had no significant direct impact on behavioral intentions after restaurant visits. This finding supports prior research showing that photo reviews are useful as information cues before purchasing or visiting a particular service [,,]. Interestingly, while text reviews did not significantly impact behavioral intentions at T1, their impact increased significantly at T2, highlighting a difference in behavioral responses between the pre-restaurant-visit and post-restaurant-visit phases. As such, our identification of the dynamic nature of online reviews’ influence on consumer behavior is a key theoretical finding that updates previous work in this area.

As noted earlier, review skepticism plays critical direct and indirect roles across consumption episodes. We identified no significant relationships between the three main online review types and review skepticism at T1. At T2, however, rating reviews had a significant negative direct effect on review skepticism, and review skepticism in turn played an important mediating role in the relationship between rating reviews and behavioral intentions. Notably, the link between review skepticism and behavioral intentions was significant and negative at both T1 and T2, and its strength remained unchanged. This suggests that review skepticism changes little from one restaurant visit to the next, possibly because the stimuli for review skepticism are relatively weak, so that the state of review skepticism does not easily change over time.
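The mediation result (rating reviews → review skepticism → behavioral intentions) follows product-of-coefficients logic: the indirect effect is a × b, where a is the path from rating reviews to skepticism and b is the path from skepticism to intentions, controlling for rating reviews. The minimal sketch below illustrates this with ordinary least squares and a percentile bootstrap on simulated construct scores. It is not the authors' PLS-SEM estimation; the function names, sample size, and true coefficients are hypothetical.

```python
import numpy as np


def ols_slopes(y, *xs):
    """OLS slope estimates of y on the given predictors (intercept fitted, then dropped)."""
    X = np.column_stack([np.ones(len(y)), *xs])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


def indirect_effect(rating, skepticism, intention):
    """Product-of-coefficients indirect effect a * b."""
    a = ols_slopes(skepticism, rating)[0]             # rating -> skepticism
    b = ols_slopes(intention, rating, skepticism)[1]  # skepticism -> intention, controlling for rating
    return a * b


def bootstrap_ci(rating, skepticism, intention, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(rating)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        estimates[i] = indirect_effect(rating[idx], skepticism[idx], intention[idx])
    lo, hi = np.quantile(estimates, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


if __name__ == "__main__":
    # Hypothetical standardized scores (NOT the study's data).
    rng = np.random.default_rng(42)
    n = 300
    rating = rng.standard_normal(n)
    skepticism = -0.4 * rating + rng.standard_normal(n)                   # assumed a = -0.4
    intention = 0.3 * rating - 0.5 * skepticism + rng.standard_normal(n)  # assumed b = -0.5
    ab = indirect_effect(rating, skepticism, intention)
    lo, hi = bootstrap_ci(rating, skepticism, intention)
    print(f"indirect effect = {ab:.3f}, 95% CI = [{lo:.3f}, {hi:.3f}]")
```

With a negative a and a negative b, the indirect effect is positive: rating reviews raise intentions partly by lowering skepticism. Mediation is typically inferred when the bootstrap interval excludes zero; bootstrapping the indirect effect, rather than relying on a normal-theory test, is the approach generally recommended in the PLS-SEM literature.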

By providing ample evidence regarding decision-making stimuli and highlighting the diminishing role of review skepticism during subsequent restaurant visits, this study contributes to theories involving the SOR mechanism and the coping framework. Although prior research has focused on online review types, how their relationships with review skepticism evolve over time has remained largely unexplored. First, our analysis revealed that the SOR mechanism had limited explanatory power when consumers searched for new restaurants at T1. If consumers perceive online reviews negatively when choosing a restaurant, the three online review types should stimulate their skepticism []. However, at T1, we found that not all review types directly stimulated review skepticism, and only rating and photo reviews directly affected behavioral intentions. Interestingly, only rating reviews directly affected review skepticism at T2. Our findings thus demonstrate that the impact of these review types on review skepticism changes over time.

Second, although the coping framework, which describes the sequence of appraisal processes, emotional reactions, and coping responses, had limited explanatory power at T1, it accurately captured the rating review–review skepticism–behavioral intention linkage at T2. Conceptually, coping is key to behavioral adaptation [], but our results show that consumer acceptance through coping processes varies over time. Specifically, the coping framework appears to operate in a specific context over time rather than immediately when a stimulus is encountered. In the initial stage of exposure to online reviews, coping strategies should focus on reducing the problem's negative emotional impact and diverting attention from skepticism []. However, this logic is more relevant in the diffusion stage (T2) than in the initial phase (T1) of online review stimulation, and our results show that consumers need time to process the substance of their appraisals and emotional responses.

Third, our findings both update and extend the scholarly understanding of photo reviews. We found that photo reviews did not directly affect review skepticism at either T1 or T2. Zhu et al. [] showed a direct relationship between photo reviews and behavioral intentions in the early stage of searching for specific objects; our results suggest, however, that this relationship is diluted or becomes ineffective over time. Furthermore, we observed a gap between cross-sectional and longitudinal findings when decision-making processes involve photo reviews: while photo reviews are a significant driver of behavioral intentions in the early consumption stage, their effectiveness declines sharply in the late phase.

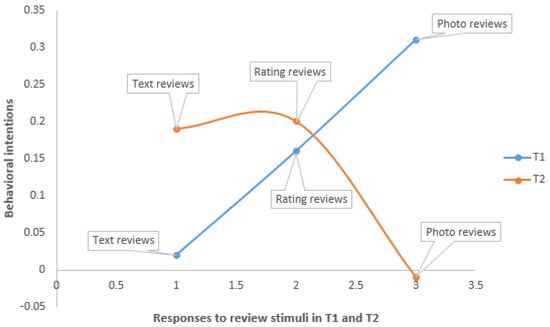

Our findings have important implications for managers responsible for designing online review strategies. When managing reviews before and after a consumption episode, managers should focus on identifying the impact of each online review type. As shown in Figure 3, our analysis of the three types lays a foundation for a deeper understanding of changes in consumer behavior. In particular, the impact of text reviews increases dramatically after restaurant visits, while the influence of photo reviews decreases sharply. Meanwhile, the impact of rating reviews remains stable before and after a restaurant visit.

Figure 3.

Summary of online review impacts.

As the importance of online reviews before and after purchases gains recognition in the marketplace, a deeper understanding of each review type has become critical. Notably, although text reviews' impact on behavioral intentions was insignificant at T1, their influence increased significantly over time. Based on these results, we recommend that managers respond strategically to initial reviews as they are posted. For example, when customer reviews are posted on their websites, communication managers should personally write a response addressing the comments and share it promptly with customers. Alternatively, managers can adopt a policy of timely responses to both positive and negative reviews, increasing the likelihood that repeat (or potential) customers assess these responses favorably. In this way, as the number of text reviews grows over time, their usefulness and effectiveness may foster positive evaluations and favorable emotions among prospective and existing customers alike.

As mentioned earlier, another practical implication of our findings concerns the role of rating reviews. Of the three types, only rating reviews reduced review skepticism, and this effect was evident at the late consumption stage rather than at the early stage. Thus, customer relationship managers in the hospitality industry can take comfort in knowing that review skepticism can decrease. Put simply, review skepticism can be attenuated, or at least does not necessarily prevent consumers from developing favorable intentions as time passes. Specifically, we found that the impact of skepticism regarding rating reviews decreases over time. Such reviews should nonetheless be managed carefully when the consumer base is highly skeptical of online reviews, because rating reviews involve a cognitive evaluation rather than the largely subjective evaluative comments found in text reviews. Used well, rating reviews can also help generate revisits. For example, rather than merely displaying simple ratings, service operation managers in the hospitality sector could disclose how ratings have changed over time, giving customers a more objective picture of how their service is evaluated. Consequently, communication, service operation, and customer relationship managers must be cognizant of changes in online reviews and share evidence of consumer evaluations with potential and repeat customers. In this way, analyzing and responding to changes in consumer behavior can improve a business's strategic capability to leverage customer comments, ratings, and photos and to respond to them appropriately.

Although this study provides ample evidence that the drivers of behavioral intentions evolve between the initial and later phases of the restaurant visit cycle, it has some limitations. First, this study focused on dinners with family or friends and did not consider other types of dinners, such as business dinners. However, if a consumer initially visits a particular restaurant for a social gathering and has a positive experience, he or she may later hold a business meeting there. Moreover, invited guests visiting a new restaurant also tend to search for it online and check its reviews. Thus, future studies should consider whether the impact of online reviews on review skepticism depends on the purpose of the visit. Another limitation is that our panel could be divided into two categories: those who search for online reviews and those who write reviews or post comments. Future studies should therefore control for this participant characteristic. Furthermore, although this study uses a time-lag interval approach, our findings may not fully capture the longitudinal dynamics of the relationships among variables, which could be critical for understanding how consumer behavior evolves. To address this limitation, we recommend that future studies extend our findings with longitudinal designs using three or more measurement intervals.

Finally, this study did not account for the particularities of the Chinese cultural environment, which may limit the value of our findings. Although previous studies demonstrated that the measurement items used in this study are applicable to Chinese cultural settings [,,], we suggest that future research further adapt them to the Chinese context to increase their robustness. Accounting for these idiosyncrasies in future research may help establish the generalizability of this study's results.

Author Contributions

Conceptualization, H.-Y.H. and T.W.; methodology, H.-Y.H.; software, T.W.; validation, T.W. and H.-Y.H.; formal analysis, H.-Y.H.; investigation, T.W.; resources, T.W.; data curation, T.W.; writing—original draft preparation, H.-Y.H.; writing—review and editing, T.W.; visualization, T.W.; supervision, H.-Y.H.; project administration, H.-Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data will be made available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. This Study’s Questionnaire

This survey asks about your personal opinions regarding your restaurant experiences. Please indicate the extent to which you think restaurants offering services should have the features described in each statement, using the scale presented below. If you strongly disagree that these restaurants should have a certain feature, place a one on the line preceding the statement, where 1 = “strongly disagree”. If you strongly agree that these restaurants should have a specific feature, place a seven on the line, where 7 = “strongly agree”. (Note that some questions use different anchors.) If your evaluations (or feelings) are not strong, place one of the numbers between one and seven on the line; all we are interested in is the number that best represents your perceptions of restaurants and the services they offer.

<Sample criteria selection questions>

1. Have you searched for a new restaurant in the last month? (1) Yes (2) No
2. Have you read all the text, rating, and photo reviews of the restaurant you want to visit? (1) Yes (2) No
3. Did you make a reservation at that restaurant based on these reviews? (1) Yes (2) No
4. With respect to the restaurant indicated in this survey, please specify which statement is true by ticking the appropriate box.
   - I am a first-time customer at this restaurant. ( )
   - I am a repeat customer at this restaurant. ( )
5. Please write the name of the restaurant that you will visit. ( )

⊙ Please respond to this survey only for the restaurant you have listed above.

Please tick the most appropriate number on the 7-point scale.

| Online Reviews | Strongly Disagree | | | | | | Strongly Agree |
|---|---|---|---|---|---|---|---|
| Pure text reviews (without photos or ratings) help me make a final decision. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| A recently posted pure text review related to a restaurant affects my decision-making. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| The overall rating associated with a particular restaurant is very bad. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Rating reviews for a particular restaurant (with some text) are trustworthy. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Pure photo reviews that include reviewers’ experience are realistic. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Photos containing multiple entities are useful for my decision-making. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

For each of the statements below, please indicate the extent to which it describes your experience with the restaurant. Please circle the most appropriate number on the 7-point scale, where 1 = strongly disagree and 7 = strongly agree.

| Review Skepticism | Strongly Disagree | | | | | | Strongly Agree |
|---|---|---|---|---|---|---|---|
| Online restaurant reviews are generally not truthful. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Those writing restaurant reviews are not necessarily the real customers. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Online restaurant reviews are often inaccurate. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| The same person often posts reviews under different names. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

The following questions relate to behavioral intentions. Please circle the most appropriate number on the 7-point scale, where 1 = Very unlikely and 7 = Very likely.

| Behavioral Intentions | Very Unlikely | | | | | | Very Likely |
|---|---|---|---|---|---|---|---|
| I would like to return to this restaurant in the future. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| I would recommend this restaurant to my friends. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| I am willing to stay longer than I planned at this restaurant. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

References

- Bilgihan, A.; Seo, S.; Choi, J. Identifying Restaurant Satisfiers and Dissatisfiers: Suggestions from Online Reviews. J. Hosp. Mark. Manag. 2018, 27, 601–625. [Google Scholar] [CrossRef]

- Cheng, Y.; Ho, H. Social Influence’s Impact on Reader Perceptions of Online Reviews. J. Bus. Res. 2015, 68, 883–887. [Google Scholar] [CrossRef]

- Kim, R.Y. The Influx of Skeptics: An Investigation of the Diffusion Cycle Effect on Online Review. Electron. Mark. 2020, 30, 821–835. [Google Scholar] [CrossRef]

- Zhang, Z.; Ye, Q.; Law, R.; Li, Y. The Impact of E-word-of-mouth on the Online Popularity of Restaurants: A Comparison of Consumer Reviews and Editor Reviews. Int. J. Hosp. Manag. 2010, 29, 694–700. [Google Scholar] [CrossRef]

- Ramadhani, D.P.; Alamsyah, A.; Febrianta, M.Y.; Damayanti, L.Z.A. Exploring Tourists’ Behavioral Patterns in Bali’s Top-Rated Destinations: Perception and Mobility. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 743–773. [Google Scholar] [CrossRef]

- Lee, E.; Shin, S.Y. When Do Consumers Buy Online Product Reviews? Effects of Review Quality, Product Type, and Reviewer’s Photo. Comput. Hum. Behav. 2014, 31, 356–366. [Google Scholar] [CrossRef]

- Cheng, X.; Fu, S.; Sun, J.; Bilgihan, A.; Okumus, F. An Investigation on Online Reviews in Sharing Economy Driven Hospitality Platforms: A Viewpoint of Trust. Tour. Manag. 2019, 71, 366–377. [Google Scholar] [CrossRef]

- Filieri, R. What Makes Online Reviews Helpful? A Diagnosticity-Adoption Framework to Explain Informational and Normative Influences in e-WOM. J. Bus. Res. 2015, 68, 1261–1270. [Google Scholar] [CrossRef]

- Ho-Dac, N.N.; Carson, S.J.; Moore, W.L. The Effects of Positive and Negative Online Customer Reviews: Do Brand Strength and Category Maturity Matter? J. Mark. 2013, 77, 37–53. [Google Scholar] [CrossRef]

- Wang, B.; Jia, T. Seize the Favorable Impression: How Hosts should Manage Positive Online Reviews. Int. J. Contemp. Hosp. Manag. 2024, 36, 1375–1392. [Google Scholar] [CrossRef]

- Godes, D.; Silva, J.C. Sequential and Temporal Dynamics of Online Reviews. Mark. Sci. 2012, 31, 448–473. [Google Scholar] [CrossRef]

- Zhang, Y.; Ha, H. The Evolution of Consumer Restaurant Selection: Changes in Restaurant and Food Delivery Application Attributes over Time. J. Bus. Res. 2024, 170, 114323. [Google Scholar] [CrossRef]

- Duan, W.; Gu, B.; Whinston, A.B. The Dynamics of Online Word-of-Mouth and Product Sales: An Empirical Investigation of the Movie Industry. J. Retail. 2008, 84, 233–242. [Google Scholar] [CrossRef]

- Kim, J.M.; Jun, M.; Kim, C.K. The Effects of Culture on Consumers’ Consumption and Generation of Online Reviews. J. Interact. Mark. 2018, 43, 134–150. [Google Scholar] [CrossRef]

- Wan, Y.; Ma, B.; Pan, Y. Opinion Evolution of Online Consumer Reviews in the e-commerce Environment. Electron. Commer. Res. 2018, 18, 291–311. [Google Scholar] [CrossRef]

- Bergkvist, L.; Eisend, M. The Dynamic Nature of Marketing Constructs. J. Acad. Mark. Sci. 2021, 49, 521–541. [Google Scholar] [CrossRef]

- Zhang, J.; Watson, G.F.; Palmatier, R.W.; Dant, R.P. Dynamic Relationship Marketing. J. Mark. 2016, 80, 53–75. [Google Scholar] [CrossRef]

- Oliver, R.L. Satisfaction: A Behavioral Perspective on the Consumer, 2nd ed.; M.E. Sharpe: New York, NY, USA, 2010. [Google Scholar]

- Singer, J.D.; Willett, J.B. Applied Longitudinal Data Analysis: Modeling Change and Event Occurrence; Oxford University Press: Oxford, UK, 2003. [Google Scholar]

- Kim, S.; Kim, Y. Regulatory Framing in Online Hotel Reviews: The Moderating Roles of Temporal Distance and Temporal Orientation. J. Hosp. Tour. Manag. 2022, 50, 139–147. [Google Scholar] [CrossRef]

- Jacoby, J. Stimulus-Organism-Response Reconsidered: An Evolutionary Step in Modeling (Consumer) Behavior. J. Consum. Psychol. 2002, 12, 51–57. [Google Scholar] [CrossRef]

- Liu, F.; Lai, K.; Wu, J.; Duan, W. Listening to Online Reviews: A Mixed-Methods Investigation of Customer Experience in the Sharing Economy. Decis. Support Syst. 2021, 149, 113609. [Google Scholar] [CrossRef]

- Su, L.; Yang, Q.; Swanson, S.R.; Chen, N.C. The Impact of Online Reviews on Destination Trust and Travel Intention: The Moderating Role of Online Review Trustworthiness. J. Vacat. Mark. 2022, 28, 406–423. [Google Scholar] [CrossRef]

- Ma, W.; Tariq, A.; Ali, M.W.; Nawaz, M.A.; Wang, X. An Empirical Investigation of Virtual Networking Sites Discontinuance Intention: Stimuli Organism Response-based Implication of User Negative Disconfirmation. Front. Psychol. 2022, 13, 862568. [Google Scholar] [CrossRef] [PubMed]

- Sherman, E.; Mathur, A.; Smith, R.B. Store Environment and Consumer Purchase Behavior: Mediating Role of Consumer Emotions. Psychol. Mark. 1997, 14, 361–378. [Google Scholar] [CrossRef]

- Jain, A.; Dash, S.; Malhotra, N.K. Consumption Coping to Deal with Pandemic Stress: Impact on Subjective Well-being and Shifts in Consumer Behavior. Eur. J. Mark. 2023, 57, 1467–1501. [Google Scholar] [CrossRef]

- Bagozzi, R.P. The Self-Regulation of Attitudes, Intentions, and Behavior. Soc. Psychol. Q. 1992, 55, 178–204. [Google Scholar] [CrossRef]

- Ho, G.K.S.; Lam, C.; Law, R. Conceptual Framework of Strategic Leadership and Organizational Resilience for the Hospitality and Tourism Industry for Coping with Environmental Uncertainty. J. Hosp. Tour. Insights 2023, 6, 835–852. [Google Scholar] [CrossRef]

- Jordan, E.J.; Vogt, C.A.; DeShon, R.P. A Stress and Coping Framework for Understanding Resident Responses to Tourism Development. Tour. Manag. 2015, 48, 500–512. [Google Scholar] [CrossRef]

- Ghose, A.; Ipeirotis, P.G. Estimating the Helpfulness and Economic Impact of Product Reviews: Mining Text and Reviewer Characteristics. IEEE Trans. Knowl. Data Eng. 2011, 23, 1498–1512. [Google Scholar] [CrossRef]

- Korfiatis, N.; García-Bariocanal, E.; Sánchez-Alonso, S. Evaluating Context Quality and Helpfulness of Online Product Reviews: The Interplay of Review Helpfulness vs. Review Context. Electron. Commer. Res. Appl. 2012, 11, 205–217. [Google Scholar] [CrossRef]

- An, Q.; Ma, Y.; Du, Q.; Xiang, Z.; Fan, W. Role of User-generated Photos in Online Hotel Reviews: An Analytical Approach. J. Hosp. Tour. Manag. 2020, 45, 633–640. [Google Scholar] [CrossRef]

- Altab, H.; Yinping, M.; Sajjad, H.; Frimpong, A.N.; Frempong, M.F.; Adu-Yeboah, S.S. Understanding Online Consumer Textual Reviews and Rating: Review Length with Moderated Multiple Regression Analysis Approach. SAGE Open 2022, 12, 1–21. [Google Scholar] [CrossRef]

- Lis, B.; Fisher, M. Analyzing Different Types of Negative Online Consumer Reviews. J. Prod. Brand Manag. 2020, 29, 637–653. [Google Scholar] [CrossRef]

- Hogarth, R.M.; Einhorn, H.J. Order Effects in Belief Updating: The Belief-adjustment Model. Cogn. Psychol. 1992, 24, 1–55. [Google Scholar] [CrossRef]

- Obermiller, C.; Spangenberg, E.R.; MacLachlan, D.L. Ad Skepticism: The Consequence of Disbelief. J. Advert. 2005, 34, 7–17. [Google Scholar] [CrossRef]

- Webb, D.J.; Mohr, L.A. A Typology of Consumer Responses to Cause-related Marketing: From Skeptics to Socially Concerned. J. Public Policy Mark. 1998, 17, 226–239. [Google Scholar] [CrossRef]

- Smith, A.; Anderson, M. Online Reviews. Available online: www.pewinternet.org/2016/12/19/online-reviews/ (accessed on 17 July 2023).

- Gammon, J. Americans Rely on Online Reviews to Make Purchase Decisions But at the Same Time They Do Not Trust That Reviews Are True and Fair. Available online: https://today.yougov.com/topics/lifestyle/articles-reports/2014/11/24/americans-relyonline-reviews-despite-not-trusting/ (accessed on 17 July 2023).

- Ahmad, F.; Guzmán, F. Consumer Skepticism about Online Reviews and Their Decision-making Process: The Role of Review Self-efficacy and Regulatory Focus. J. Consum. Mark. 2021, 38, 587–600. [Google Scholar] [CrossRef]

- Huifeng, P.; Ha, H.; Lee, J. Perceived Risks and Restaurant Visit Intentions in China: Do Online Consumer Reviews Matter? J. Hosp. Tour. Manag. 2020, 43, 179–189. [Google Scholar] [CrossRef]

- Huifeng, P.; Ha, H. Relationship Dynamics of Review Skepticism using Latent Growth Curve Modeling in the Hospitality Industry. Curr. Issues Tour. 2023, 26, 496–510. [Google Scholar] [CrossRef]

- Zhang, X.; Ko, M.; Carpenter, D. Development of a Scale to Measure Skepticism toward Electronic Word-of-mouth. Comput. Hum. Behav. 2016, 56, 198–208. [Google Scholar] [CrossRef]

- Javed, M.; Malik, F.A.; Awan, T.M.; Khan, R. Food Photo Posting on Social Media while Dining: An Evidence Using Embedded Correlational Mixed Methods Approach. J. Food Prod. Mark. 2021, 27, 10–26. [Google Scholar] [CrossRef]

- Jin, S.; Phua, J. Making Reservations Online: The Impact of Consumer-written and System-aggregated User-generated Content (UGC) in Travel Booking Websites on Consumers’ Behavioral Intentions. J. Travel Tour. Mark. 2016, 33, 101–117. [Google Scholar] [CrossRef]

- Liu, X.; Lin, J.; Jiang, X.; Chang, T.; Lin, H. eWOM Information Richness and Online User Review Behavior: Evidence from TripAdvisor. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 880–898. [Google Scholar] [CrossRef]

- Lopez, A.; Garza, E. Do Sensory Reviews Make more Sense? The Mediation of Objective Perception in Online Review Helpfulness. J. Res. Interact. Mark. 2022, 16, 438–456. [Google Scholar] [CrossRef]

- Cadario, R. The Impact of Online Word-of-mouth on Television Show Viewership: An Inverted U-shaped Temporal Dynamic. Mark. Lett. 2015, 26, 411–422. [Google Scholar] [CrossRef]

- Groening, C.; Sarkis, J.; Zhu, Q. Green Marketing Consumer-level Theory Review: A Compendium of Applied Theories and Further Research Directions. J. Clean. Prod. 2018, 172, 1848–1866. [Google Scholar] [CrossRef]

- Kwon, J.; Ahn, J. The Effect of Green CSR Skepticism on Positive Attitude, Reactance, and Behavioral Intention. J. Hosp. Tour. Insights 2021, 4, 59–76. [Google Scholar] [CrossRef]

- Yoon, D.; Chen, R. A Green Shadow: The Influence of Hotel Customers’ Environmental Knowledge and Concern on Green Marketing Skepticism and Behavioral Intentions. Tour. Anal. 2017, 22, 281–293. [Google Scholar] [CrossRef]

- Brzozowska-Woś, M.; Schivinski, B. The Effect of Online Reviews on Consumer-based Brand Equity: Case-study of the Polish Restaurant Sector. Cent. Eur. Manag. J. 2019, 27, 2–27. [Google Scholar] [CrossRef]

- Johnson, M.D.; Herrmann, A.; Huber, F. The Evolution of Loyalty Intentions. J. Mark. 2006, 70, 122–132. [Google Scholar] [CrossRef]

- Bowling, N.A.; Beehr, T.A.; Wagner, S.H.; Libkuman, T.M. Adaptation-level Theory, Opponent Process Theory, and Dispositions: An Integrated Approach to the Stability of Job Satisfaction. J. Appl. Psychol. 2005, 90, 1044–1053. [Google Scholar] [CrossRef]

- Eagly, A.H.; Chaiken, S. The Psychology of Attitudes; Harcourt College Publishers: Fort Worth, TX, USA, 1993. [Google Scholar]

- Li, H.; Meng, F.; Pan, B. How Does Review Disconfirmation Influence Customer Online Review Behavior? A Mixed-method Investigation. Int. J. Contemp. Hosp. Manag. 2020, 32, 3685–3703. [Google Scholar] [CrossRef]

- Mittal, V.; Kumar, P.; Tsiros, M. Attribute-level Performance, Satisfaction, and Behavioral Intentions over Time: A Consumption-system Approach. J. Mark. 1999, 63, 88–101. [Google Scholar] [CrossRef]

- Anser, M.K.; Ali, M.; Usman, M.; Rana, M.; Yousaf, Z. Ethical Leadership and Knowledge Hiding: An Intervening and Interactional Analysis. Serv. Ind. J. 2020, 41, 307–329. [Google Scholar] [CrossRef]

- Usman, M.; Ali, M.; Ogbonnaya, C.; Babalola, M.T. Fueling the Intrapreneurial Spirit: A Closer Look at How Spiritual Leadership Motivates Employee Intrapreneurial Behaviors. Tour. Manag. 2021, 83, 104227. [Google Scholar] [CrossRef]

- Blazyte, A. Market Share of Restaurants in China in 2019, by Category. 2023. Available online: https://www.statista.com/statistics/1120173/china-major-types-of-restaurants/ (accessed on 29 July 2023).

- Ong, B.S. The Perceived Influence of User Reviews in the Hospitality Industry. J. Hosp. Mark. Manag. 2012, 21, 463–485. [Google Scholar] [CrossRef]

- Yayli, A.; Bayram, M. E-WOM: The Effects of Online Consumer Reviews on Purchasing Decisions. Int. J. Internet Mark. Advert. 2012, 7, 51–64. [Google Scholar]

- Lei, Z.; Yin, D.; Mitra, S.; Zhang, H. Swayed by the Reviews: Disentangling the Effects of Average Ratings and Individual Reviews in Online Word-of-Mouth. Prod. Oper. Manag. 2022, 31, 2393–2411. [Google Scholar] [CrossRef]

- Ahn, H.; Park, E. The Impact of Consumers’ Sustainable Electronic-Word-of-Mouth in Purchasing Sustainable Mobility: An Analysis for Online Review Comments of E-commerce. Res. Transp. Bus. Manag. 2024, 52, 101086. [Google Scholar] [CrossRef]

- Guerreiro, J.; Rita, P. How to Predict Explicit Recommendations in Online Reviews Using Text Mining and Sentiment Analysis. J. Hosp. Tour. Manag. 2020, 43, 269–272. [Google Scholar] [CrossRef]

- Jia, S. Motivation and Satisfaction of Chinese and U.S. Tourists in Restaurants: A Cross-cultural Text Mining of Online Reviews. Tour. Manag. 2020, 78, 104071. [Google Scholar] [CrossRef]

- Sheng, J.; Amankwah-Amoah, J.; Wang, X.; Khan, Z. Managerial Responses to Online Reviews: A Text Analytics Approach. Br. J. Manag. 2019, 30, 315–327. [Google Scholar] [CrossRef]

- Gavilan, D.; Avello, M.; Martinez-Navarro, G. The Influence of Online Ratings and Reviews on Hotel Booking Consideration. Tour. Manag. 2018, 66, 53–61. [Google Scholar] [CrossRef]

- Park, C.W.; Sutherland, I.; Lee, S.K. Effects of Online Reviews, Trust, and Picture-superiority on Intention to Purchase Restaurant Services. J. Hosp. Tour. Manag. 2021, 47, 228–236. [Google Scholar] [CrossRef]

- Rieh, S.Y. Judgment of Information Quality and Cognitive Authority in the Web. J. Am. Soc. Inf. Sci. Technol. 2002, 53, 145–161. [Google Scholar] [CrossRef]

- Ren, M.; Vu, H.Q.; Li, G.; Law, R. Large-scale Comparative Analyses of Hotel Photo Content Posted by Managers and Customers to Review Platforms based on Deep Learning: Implications for Hospitality Marketers. J. Hosp. Mark. Manag. 2021, 30, 96–119. [Google Scholar] [CrossRef]

- Li, X.; Wu, C.; Mai, F. The Effect of Online Reviews on Product Sales: A Joint Sentiment-topic Analysis. Inf. Manag. 2019, 56, 171–184. [Google Scholar] [CrossRef]

- Ryu, K.; Jang, S. The Effect of Environmental Perceptions on Behavioral Intentions through Emotions: The Case of Upscale Restaurants. J. Hosp. Tour. Res. 2007, 31, 56–72. [Google Scholar] [CrossRef]

- MacKenzie, S.B.; Podsakoff, P.M. Common Method Bias in Marketing: Causes, Mechanisms, and Procedural Remedies. J. Retail. 2012, 88, 542–555. [Google Scholar] [CrossRef]

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.; Podsakoff, N.P. Common Method Biases in Behavioral Research: A Critical Review of the Literature and Recommended Remedies. J. Appl. Psychol. 2003, 88, 879–903. [Google Scholar] [CrossRef] [PubMed]

- Templeton, L.; Deehan, A.; Taylor, C.; Strang, J. Surveying General Practitioners: Does a Low Response Rate Matter? Br. J. Gen. Pract. 1997, 47, 91–94. [Google Scholar] [PubMed]

- Armstrong, J.S.; Overton, T.S. Estimating Nonresponse Bias in Mail Surveys. J. Mark. Res. 1977, 14, 396–402. [Google Scholar] [CrossRef]

- Anderson, R.E.; Swaminathan, S. Customer Satisfaction and Loyalty in e-markets: A PLS Path Modeling Approach. J. Mark. Theory Pract. 2011, 19, 221–234. [Google Scholar] [CrossRef]

- Lehto, T.; Oinas-Kukkonen, H. Explaining and Predicting Perceived Effectiveness and Use Continuance Intention of a Behavior Change Support System for Weight Loss. Behav. Inf. Technol. 2015, 34, 176–189. [Google Scholar] [CrossRef]

- Yang, C.Z.; Ha, H. The Evolution of E-WOM Intentions: A Two Time-lag Interval Approach after Service Failures. J. Hosp. Tour. Manag. 2023, 56, 147–154. [Google Scholar] [CrossRef]

- Roemer, E. A Tutorial on the Use of PLS Path Modeling in Longitudinal Studies. Ind. Manag. Data Syst. 2016, 116, 1901–1921. [Google Scholar] [CrossRef]

- Anderson, J.C.; Gerbing, D.W. Structural Equation Modeling in Practice: A Review and Recommended Two-step Approach. Psychol. Bull. 1988, 103, 411–423. [Google Scholar] [CrossRef]

- Hair, J.F.; Hult, G.T.M.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2017. [Google Scholar]

- Vaske, J.J.; Beaman, J.; Sponarski, C.C. Rethinking Internal Consistency in Cronbach’s Alpha. Leis. Sci. 2017, 39, 163–173. [Google Scholar] [CrossRef]

- Fornell, C.; Larcker, D.F. Structural Equation Models with Unobservable Variables and Measurement Error: Algebra and Statistics. J. Mark. Res. 1981, 18, 382–388. [Google Scholar] [CrossRef]

- Roemer, E.; Schuberth, F.; Henseler, J. HTMTs: An Improved Criterion for Assessing Discriminant Validity in Structural Equation Modeling. Ind. Manag. Data Syst. 2021, 121, 2637–2650. [Google Scholar] [CrossRef]

- Voorhees, C.M.; Brady, M.K.; Calantone, R.; Ramirez, E. Discriminant Validity Testing in Marketing: An Analysis, Causes for Concerns, and Proposed Remedies. J. Acad. Mark. Sci. 2016, 44, 119–134. [Google Scholar] [CrossRef]

- Hair, J.; Alamer, A. Partial Least Squares Structural Equation Modeling (PLS-SEM) in Second Language and Education Research: Guidelines using an Applied Example. Res. Methods Appl. Linguist. 2022, 1, 100027. [Google Scholar] [CrossRef]

- Sharma, P.N.; Liengaard, B.D.; Hair, J.F.; Sarstedt, M.; Ringle, C.M. Predictive Model Assessment and Selection in Composite-based Modeling Using PLS-SEM: Extensions and Guidelines for Using CVPAT. Eur. J. Mark. 2023, 57, 1662–1677. [Google Scholar] [CrossRef]

- Liengaard, B.D.; Sharma, P.N.; Hult, G.T.M.; Jensen, M.B.; Sarstedt, M.; Hair, J.F.; Ringle, C.M. Prediction: Coveted, yet Forsaken? Introducing a Cross-validated Predictive Ability Test in Partial Least Squares Path Modeling. Decis. Sci. 2021, 52, 362–392. [Google Scholar] [CrossRef]

- Chen, Y.; Fay, S.; Wang, Q. The Role of Marketing in Social Media: How Online Consumer Reviews Evolve. J. Interact. Mark. 2011, 25, 85–94. [Google Scholar] [CrossRef]

- Li, H.; Zhang, L.; Guo, R.; Ji, H.; Yu, B. Information Enhancement or Hindrance? Unveiling the Impacts of User-generated Photos in Online Reviews. Int. J. Contemp. Hosp. Manag. 2023, 35, 2322–2351. [Google Scholar] [CrossRef]

- Kim, R.Y. When Does Online Review Matter to Consumers? The Effect of Product Quality Information Cues. Electron. Commer. Res. 2021, 21, 1011–1030. [Google Scholar] [CrossRef]

- Nach, H.; Lejeune, A. Coping with Information Technology Challenges to Identity: A Theoretical Framework. Comput. Hum. Behav. 2010, 26, 618–629. [Google Scholar] [CrossRef]

- Folkman, S.; Lazarus, R. An Analysis of Coping in a Middle-aged Community Sample. J. Health Soc. Behav. 1980, 21, 219–239. [Google Scholar] [CrossRef] [PubMed]

- Zhu, Z.; Zhang, X.; Wang, J.; Chen, S. Research on the Influence of Online Photograph Reviews on Tourists’ Travel Intentions: Rational and Irrational Perspectives. Asia Pac. J. Mark. Logist. 2023, 35, 17–34. [Google Scholar] [CrossRef]

- Zhang, S.; Liu, W.; Zhang, T.; Han, W.; Zhu, Y. Harms of Inconsistency: The Impact of User-generated and Marketing-generated Photos on Hotel Booking Intentions. Tour. Manag. Perspect. 2024, 51, 101249. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).