Abstract

Solver participation plays a critical role in the sustained development of knowledge-intensive crowdsourcing (KI-C) systems. Extant theory has highlighted numerous factors that influence solvers’ participation behaviors in KI-C. However, a structured investigation and integration of the significant influencing factors is still lacking. This study consolidates the state of academic research on the factors that affect solver behaviors in KI-C. Based on a systematic review of the literature published from 2006 to 2021, it identifies five major solver behaviors in KI-C, together with eight solver motives and seventeen factors in four categories (task attributes, solver characteristics, requester behaviors, and platform designs) that affect these behaviors. It also demonstrates the roles that solver motives and the identified factors play in shaping each behavior, and it suggests several areas meriting future research.

1. Introduction

Knowledge-intensive crowdsourcing (KI-C) is becoming a domain of innovation and an increasingly widespread phenomenon in today’s knowledge-intensive economy [1,2,3]. KI-C refers to a type of participative online activity in which an individual or an organization proposes a defined knowledge-intensive task on an intermediary platform, via a flexible open call, to a group of individuals with varying knowledge, expertise, skills, or experience in order to acquire their ideas, suggestions, or solutions for the task. Unlike simple tasks [4], routine tasks, and content collection tasks [5,6], knowledge-intensive tasks are typically far more complex, cannot easily be decomposed into clear subtasks, have no predefined correct answer(s), and are proposed through a flexible open call [1,3,7]. Moreover, their completion relies heavily on the knowledge, expertise, skills, and experience of the engaged solvers. Common knowledge-intensive tasks include product design, software development, graphic design, ideation, and content creation.

Generally, a KI-C system consists of four major components: the task, the solver, the requester, and the online intermediary platform [8]. Solvers are the essential actors in KI-C systems, and their broad and active participation largely determines the sustained development of these systems [9,10,11]. To understand and stimulate solvers’ participation in KI-C, prior studies have made considerable efforts to identify the factors that influence it. These factors include solver motives [12,13,14,15], solver expertise [7,16,17], participation experience [18,19], cultural background [20,21], fairness of the requester and platform [22,23], platform designs [24,25], requester feedback [26,27], and task attributes [28,29,30]. Moreover, the relationships among some of these factors and their joint effects on solvers’ participation have been examined as well. For example, Zheng, Li and Hou [29] found that contest autonomy, variety, and analyzability were associated with solvers’ intrinsic motivation, which in turn affected their participation intention. Franke, Keinz and Klausberger [22] showed that the identity of the requester and certain design features of the crowdsourcing system acted as antecedents of solvers’ perceived fairness, which in turn shaped solvers’ willingness to participate and their ex post identification with the requester and the crowdsourcing system. Tang et al. [31] proposed that the type and valence of feedback information affected solvers’ intrinsic motivation and thus influenced their knowledge contribution performance.

These studies indicate that multiple factors influence solver participation in crowdsourcing. Nevertheless, to the best of our knowledge, few studies have attempted to summarize and integrate these factors, which have mostly been investigated separately and partially, into a relatively comprehensive list. In addition, as prior studies note, solvers’ participation can take the form of different behaviors, e.g., participating in a crowdsourcing platform [22,32] or engaging and contributing in a task [7,13,33], and these behaviors are affected by different factors. Therefore, another essential task is to link the identified factors to specific solver participation behaviors.

This study offers a “state-of-the-art” overview of the KI-C literature to address the following questions: (1) What behaviors do solvers exhibit in KI-C? (2) Why (i.e., with what motives) do solvers exhibit these behaviors? (3) What factors influence solvers’ behaviors? (4) What roles do these factors play in solvers’ behaviors?

Several reviews of crowdsourcing have already been conducted. They have focused on clarifying the definition of crowdsourcing [6,8]; categorizing crowdsourcing [34] and positioning it in relation to other concepts (such as open innovation [35], open-source software [36], and organizational forms [37]); broadly describing and exploring the development of the crowdsourcing literature [38,39,40,41]; revealing the applications of crowdsourcing in different areas (such as the medical and health sectors [42], data management [43], and information systems [40]); defining the components of crowdsourcing systems and their relationships [44,45]; and investigating a specific component or issue of crowdsourcing (such as privacy challenges [46], social mechanisms [47], gamification designs [48], task affordance [49], and factors influencing decisions to crowdsource [50]). Unlike these reviews, the present review concentrates on solver behaviors in KI-C and synthesizes the factors that influence them. On the one hand, by summarizing and integrating the most significant factors investigated in the KI-C literature and categorizing them into multiple facets, our review offers a comprehensive understanding of solver behaviors in KI-C and their influencing factors. On the other hand, it supports academics and practitioners in developing theoretical models and managerial measures that can attract and retain solvers’ active participation and contribution in KI-C.

2. Methods Used

This study employed a systematic review, a structured and comprehensive method that aims to make the literature selection and review process replicable and transparent [51]. We followed a three-stage procedure: planning, execution, and reporting.

2.1. Planning the Review

At this stage, we defined the research scope and purposes and identified key data sources. This work focused on KI-C and aimed to investigate solver behaviors and their influencing factors. We limited our data sources to peer-reviewed journals, since these can be considered validated knowledge and are likely to have high quality and impact in the field [52]. Two indexes from the ISI Web of Science, the Science Citation Index Expanded (SCI-EXPANDED) and the Social Sciences Citation Index (SSCI), were chosen because they are among the most comprehensive databases of peer-reviewed journals in the science and social science fields. In addition, Web of Science breaks search results down by dimensions such as research type, publication year, and citation count, which allowed us to analyze and depict the results more conveniently and effectively.

2.2. Conducting the Review

Four steps, i.e., identification of keywords and search terms, assessment and selection of studies, data extraction, and data synthesis, were executed in this stage. The first two steps pertained to the collection, filtering, and organization of the data, and the last two steps involved data processing and analysis.

2.2.1. Identification of Keywords and Search Terms

This study chose the term “crowdsourcing” as the search phrase because it fits our research focus well and is a representative term that has been employed in other relevant reviews as well. The related keywords “crowdsource” and “crowdsourced” were also included to ensure that we did not overlook important literature. Although the notion of the “crowd” has given rise to other major research streams, such as crowdfunding and crowdsensing, we excluded these from this review a priori. Consequently, we used the terms crowdsourcing, crowdsource, and crowdsourced (i.e., TS = (crowdsourcing or crowdsource or crowdsourced)) as the topic criterion (title, keywords, or abstract) for our initial search.

The other search filters were: language “English”; document type “article” or “review”; publication years 2006 to 2021; and subject area “computer science information systems” or “management” or “information science library science” or “behavioral sciences” or “social science interdisciplinary” or “business” or “public environmental occupational health” or “operations research management science” or “urban studies” or “psychology multidisciplinary” or “ergonomics” or “public administration”.
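As an illustration only, the topic, language, document-type, year, and subject-area criteria above can be combined into a single Boolean query string. The sketch below assumes Web of Science advanced-search field tags (TS for topic, LA for language, DT for document type, PY for publication year, WC for Web of Science category) and reuses the subject-area labels exactly as written above; the exact category labels, and whether the filters were applied in the query or as post-search refinements, are assumptions rather than a record of the search actually run.

```python
from __future__ import annotations

# A sketch, not the search actually executed: compose a Web of Science style
# advanced-search string from the criteria in Section 2.2.1.
# Field tags (TS, LA, DT, PY, WC) are assumed; category labels are copied from the text.

TOPIC_TERMS = ["crowdsourcing", "crowdsource", "crowdsourced"]
DOC_TYPES = ["Article", "Review"]
SUBJECT_AREAS = [
    "computer science information systems",
    "management",
    "information science library science",
    "behavioral sciences",
    "social science interdisciplinary",
    "business",
    "public environmental occupational health",
    "operations research management science",
    "urban studies",
    "psychology multidisciplinary",
    "ergonomics",
    "public administration",
]


def or_group(tag: str, values: list[str], quote: bool = False) -> str:
    """Join values with OR inside one field-tagged group, e.g. TS=(a OR b)."""
    items = [f'"{v}"' if quote else v for v in values]
    return f"{tag}=({' OR '.join(items)})"


def build_query(first_year: int = 2006, last_year: int = 2021) -> str:
    """AND together the topic, language, document-type, year, and subject clauses."""
    clauses = [
        or_group("TS", TOPIC_TERMS),
        "LA=(English)",
        or_group("DT", DOC_TYPES),
        f"PY=({first_year}-{last_year})",
        or_group("WC", SUBJECT_AREAS, quote=True),
    ]
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_query())
```

Keeping the criteria as data rather than as a hand-typed string makes the search easier to rerun and report, which serves the replicability goal of a systematic review.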

We conducted the search on 29 January 2022 and obtained an initial sample of 2549 papers, comprising 2487 articles, 96 review articles, 96 early-access papers, 1 data paper, and 1 retracted publication (some papers carry more than one document type, so these counts sum to more than 2549).

2.2.2. Assessment and Selection of Studies

At this step, we examined the titles, abstracts, and, where necessary, the full texts of the papers and retained the articles that met our refined criteria: those concerning the crowdsourcing of knowledge-intensive tasks; focusing on crowdsourcing with identifiable requesters, solvers, and online platforms or websites; and investigating solver behaviors, solver motivations, or factors affecting solver behaviors. Meanwhile, we excluded papers that concentrated on simple tasks, indoor localization tasks, or spatial tasks; specific crowdsourcing systems outside our research scope, such as crowdfunding, crowdsensing, and citizen sensing; techniques for building crowdsourcing system infrastructure or algorithms for matching tasks and solvers; and user-generated content contributed voluntarily online, such as Wikipedia and OpenStreetMap.
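The inclusion and exclusion rules above can be read as a single screening predicate: a paper is kept only if it meets every inclusion criterion and none of the exclusion criteria. The following Python sketch encodes that logic; the `Candidate` fields and the string labels are hypothetical codings of a reviewer's judgments, not fields of any real screening tool.

```python
from dataclasses import dataclass

# Hypothetical exclusion labels taken from the criteria in Section 2.2.2.
EXCLUDED_TASKS = {"simple", "indoor localization", "spatial"}
EXCLUDED_SYSTEMS = {"crowdfunding", "crowdsensing", "citizen sensing"}


@dataclass
class Candidate:
    """A reviewer's (hypothetical) coding of one paper after reading it."""
    title: str
    knowledge_intensive: bool  # crowdsourcing of knowledge-intensive tasks
    has_all_actors: bool       # identifiable requesters, solvers, and platform
    studies_solvers: bool      # solver behaviors, motivations, or influencing factors
    task_type: str = ""
    system_type: str = ""
    infrastructure_or_matching: bool = False  # infrastructure or task-solver matching algorithms
    voluntary_ugc: bool = False               # e.g., Wikipedia, OpenStreetMap


def keep(p: Candidate) -> bool:
    """Retain a paper only if all inclusion and no exclusion criteria hold."""
    included = p.knowledge_intensive and p.has_all_actors and p.studies_solvers
    excluded = (
        p.task_type in EXCLUDED_TASKS
        or p.system_type in EXCLUDED_SYSTEMS
        or p.infrastructure_or_matching
        or p.voluntary_ugc
    )
    return included and not excluded


if __name__ == "__main__":
    contest = Candidate("Design contest study", True, True, True, task_type="logo design")
    sensing = Candidate("Crowdsensing study", True, True, True, system_type="crowdsensing")
    print([keep(contest), keep(sensing)])  # [True, False]
```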

This step substantially reduced the initial pool, leaving 119 papers.

2.2.3. Data Extraction

In this step, we examined the selected papers using a data extraction form, a tool used in systematic reviews to reduce human error and bias when reviewing and assessing papers [51]. The form also served as the data repository from which the analysis emerged, a historical record of the decisions made during the review process, and a visual representation of the features of the selected papers. Based on the research questions and aims of this paper, the data extraction form contained the following information: title, authors, institution of the first author, publication year, publication venue, citation count, article type, research approach, task type, crowdsourcing taxonomy, research questions, theories employed, solver behaviors, solver motivations, and factors that influenced solver behaviors.
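One way to picture the extraction form is as a record type with one field per item listed above. The sketch below is an assumed representation for illustration (the field names are ours, not those of the actual form); list-valued fields hold items such as the several behaviors or factors that a single paper may report.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field


@dataclass
class ExtractionRecord:
    """Hypothetical record mirroring the fifteen items of the data extraction form."""
    title: str
    authors: list[str] = field(default_factory=list)
    first_author_institution: str = ""
    publication_year: int | None = None
    publication_venue: str = ""
    citation_count: int = 0
    article_type: str = ""
    research_approaches: list[str] = field(default_factory=list)
    task_type: str = ""
    crowdsourcing_taxonomy: str = ""
    research_questions: list[str] = field(default_factory=list)
    theories: list[str] = field(default_factory=list)
    solver_behaviors: list[str] = field(default_factory=list)
    solver_motivations: list[str] = field(default_factory=list)
    influencing_factors: list[str] = field(default_factory=list)


if __name__ == "__main__":
    record = ExtractionRecord(
        title="Placeholder paper",  # illustrative values only
        publication_year=2020,
        research_approaches=["survey"],
        solver_behaviors=["making effort in a task"],
        influencing_factors=["task complexity"],
    )
    print(len(asdict(record)))  # 15
```

Using `default_factory=list` gives each record its own lists, so coding one paper never leaks values into another.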

3. Results and Analysis

3.1. Descriptive Analysis

3.1.1. Publications by Year

The research on exploring the effects of various factors on solver participation in KI-C has gained prominence over the last several years. Table 1 shows the distribution of all the studies from 2006 to 2021.

Table 1.

Publications by year from 2006 to 2021.

Overall, the number of published articles on the topic of this study has increased recently, especially from 2017 to 2021. This increase is consistent with the development of the crowdsourcing field and may also reflect a growing awareness of the importance of attracting and sustaining solvers’ participation in KI-C.

3.1.2. Publications by Journal

The 119 selected articles were published in 64 journals covering information systems and information management, management and business, social science, psychology and ergonomics, and public and urban studies. The journals publishing at least three articles were Information Systems Research (5), Production and Operations Management (5), International Journal of Information Management (5), Computers in Human Behavior (5), Research Policy (5), Journal of Management Information Systems (4), Online Information Review (4), Behaviour & Information Technology (4), Internet Research (3), Journal of Product Innovation Management (3), R&D Management (3), and Technological Forecasting and Social Change (3). These journals rank highly in their respective fields, which suggests that the topic of this paper is an important area within crowdsourcing research. The range of disciplines these journals represent also indicates that the topic has a broad audience.

3.1.3. Types of Knowledge-Intensive Task

Based directly on the descriptions in the selected papers, KI-C tasks can be broadly classified into the following groups: complex tasks (e.g., IT-related services, data modeling, translation and programming, technical problems, logo design); idea generation; innovative or creative tasks; citizen ideation and innovation tasks (e.g., open government projects, civic problems, public transportation); knowledge sharing or exchange; open-source software projects; and cultural crowdsourcing projects (e.g., correcting errors in digitized newspapers, manuscript translation).

3.1.4. Research Methods Used

At a high level, the research methods used in the sample can be divided into empirical and non-empirical methods. The empirical methods included case studies, surveys, interviews, field experiments, and primary data; the non-empirical methods included conceptual or theoretical studies, optimization modeling, and literature reviews. Empirical studies dominated the 119 studies. The most used empirical methods were surveys (45), primary data (i.e., analysis of data collected directly from crowdsourcing platforms) (35), field experiments (19), interviews (18), and case studies (11). The non-empirical methods consisted of conceptual or theoretical analyses (3), optimization modeling (2), and lab experiments (simulation experiments) (1). Additionally, 25 (21.01%) studies used a combination of methods.
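The reported figures can be checked with a few lines of arithmetic. The sketch below simply re-enters the counts given above; because the five empirical counts alone sum to 128, more than the 119 studies, we assume that a study combining methods is counted under each method it used.

```python
from __future__ import annotations

# Counts as reported in Section 3.1.4. Studies combining methods presumably
# appear under each method they used, so the counts overlap.
EMPIRICAL = {
    "surveys": 45,
    "primary data": 35,
    "interviews": 18,
    "field experiments": 19,
    "case studies": 11,
}
TOTAL_STUDIES = 119
MIXED_METHOD_STUDIES = 25


def ranked(counts: dict[str, int]) -> list[tuple[str, int]]:
    """Methods ordered from most to least used."""
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=True)


def share(part: int, whole: int) -> float:
    """Percentage of `whole`, rounded to two decimals."""
    return round(100 * part / whole, 2)


if __name__ == "__main__":
    print(ranked(EMPIRICAL))
    print(sum(EMPIRICAL.values()))                     # 128
    print(share(MIXED_METHOD_STUDIES, TOTAL_STUDIES))  # 21.01
```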

3.1.5. Theoretical Foundations

In the sample, 69 papers grounded their studies in an explicit theoretical basis, whereas the remaining 50 did not invoke a strong underlying theory. The most-used theories were motivation theory (9), self-determination theory (8), expectancy–value theory (4), social exchange theory (3), justice theory (3), and identity theory (2).

3.2. RQ1: What Behaviors Do Solvers Exhibit in KI-C?

This study categorized the solver behaviors examined in prior studies into five types: participating in a KI-C platform, choosing a task to participate in, making effort in a task, contributing high-quality solutions, and participating and contributing continuously [53].

3.2.1. Participating in a KI-C Platform

Participating in a KI-C platform was the first and most fundamental step, which solvers had to take before they could access published tasks and interact with others [54,55]. This behavior was mainly measured by solvers’ self-expressed willingness to participate in a platform [22,32,56].

3.2.2. Choosing a Task to Participate in

Choosing a task from the task pool of the preferred platform to participate in and contribute to was the next behavior that solvers could exhibit, and it was directly related to subsequent solution development. Solvers’ self-expressed willingness to participate in a specific task [29,30], the number of solvers engaged in a task [57,58,59], and task choice [33] were commonly employed as indicators of this behavior.

3.2.3. Making Effort in a Task

Making effort referred to the extent to which a solver dedicated his or her time, expertise, and energy to completing the selected task. Two types of indicators, status-based and outcome-based, were proposed to measure the level of effort that solvers made in a task. The status-based indicators included the intensive and persistent engagement of energies [13]; the physical, cognitive, and emotional engagement of energies [28,60]; and the resources a solver committed [61]. The outcome-based indicators were the number of solutions [62,63] and comments [64] that solvers submitted in a task. Solvers sometimes invested some effort in a task but ultimately abandoned it, a behavior termed task abandonment [65]; this study included it in the category of making effort in a task.
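The outcome-based indicators, and a simple proxy for task abandonment, can be computed from platform activity data. The sketch below assumes a hypothetical event log of `(solver_id, task_id, event)` tuples with events `register`, `solution`, and `comment`; real platforms expose different data, and treating "registered but never submitted a solution" as abandonment is our simplifying assumption.

```python
from __future__ import annotations

from collections import Counter


def effort_indicators(
    log: list[tuple[str, str, str]],
) -> tuple[Counter, Counter, set[tuple[str, str]]]:
    """Count solutions and comments per (solver, task) and flag abandoned tasks.

    Each log entry is a hypothetical (solver_id, task_id, event) tuple, where
    event is "register", "solution", or "comment".
    """
    solutions: Counter = Counter()
    comments: Counter = Counter()
    registered: set[tuple[str, str]] = set()
    for solver, task, event in log:
        key = (solver, task)
        if event == "register":
            registered.add(key)
        elif event == "solution":
            solutions[key] += 1
        elif event == "comment":
            comments[key] += 1
    # Abandonment proxy (assumption): registered for a task but submitted no solution.
    abandoned = {key for key in registered if solutions[key] == 0}
    return solutions, comments, abandoned


if __name__ == "__main__":
    log = [
        ("s1", "t1", "register"),
        ("s1", "t1", "solution"),
        ("s1", "t1", "solution"),
        ("s2", "t1", "register"),
        ("s2", "t1", "comment"),
    ]
    solutions, comments, abandoned = effort_indicators(log)
    print(solutions[("s1", "t1")], comments[("s2", "t1")], abandoned)  # 2 1 {('s2', 't1')}
```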

3.2.4. Contributing High-Quality Solutions

The quality of a solution could be evaluated based on requesters’ scores [19,66], team rank in the contest [67], the likelihood of the solution being implemented [18], solution innovativeness [7], and solution appropriateness [68]. This behavior drew the most attention from requesters, who aimed to find the best solutions for their tasks, and, as Table 2 indicates, it has become the most studied solver behavior in KI-C.

Table 2.

Solvers’ participation behaviors and their indicators.

3.2.5. Continuous Participation and Contribution

After participating in and contributing to a platform initially, a solver faced the decision of whether to continue participating and contributing. Scholars have paid most attention to solvers’ intention to continue participating in and contributing to the KI-C platforms in which they are engaged. The main indicator proposed to measure this behavior was solvers’ self-expressed willingness to continue participating and contributing [69,70,71]. Positive participation experience and satisfaction are critical for sustaining solvers’ continuous participation and contribution, and prior studies have accordingly paid considerable attention to the antecedents of solvers’ satisfaction with past participation [71,72].

To sum up, the solver behaviors and their major indicators are shown in Table 2.

As shown in Table 2, the quality of solutions submitted by solvers in a task drew the most attention in prior studies, followed by task choice and effort. Solvers’ continuous participation and contribution in KI-C received less attention than the other four behaviors. In addition, according to our analysis, most studies focused on a single behavior, and studies of the relationships between different behaviors were limited. However, solvers’ behaviors are not independent. For example, the more effort solvers exerted in a task, the more likely they were to develop high-quality solutions [73], and solvers’ prior experience affected their future participation [11,74].

3.3. RQ2: What Motivates Solvers to Exhibit These Behaviors?

3.3.1. The Solver Motives

Many specific solver motives were mentioned in prior studies, including nearly twenty in [12], fourteen in [13], and ten in [14]. However, some of these motives overlapped or had similar meanings. This study therefore focuses on the eight motives that were emphasized most.

(1) Monetary reward was an important motive that stimulated solver participation and contribution in crowdsourcing. For a specific task, a large monetary reward raised solvers’ expected returns and compensated them for their costs, and was thus highly attractive [9,10,75]. However, the quality of solvers’ submissions did not necessarily keep increasing as the reward grew [10,76]. Consequently, some scholars have explored efficient reward schemes [77,78] or non-monetary rewards, such as free final products, free services, and training [12,76,79].

(2) Learning or developing competence was another important extrinsic motive emphasized in prior studies [80]. It reflected solvers’ tendency to acquire new and valuable knowledge and to practice skills by participating in crowdsourcing. However, few studies examined the effect of this motive on solvers’ behaviors. Solvers’ learning motivation was positively associated with their intention to participate in a crowdsourcing platform [9] but negatively associated with solution appropriateness [68].

(3) Crowdsourcing helped solvers work as freelancers by providing them with many work opportunities, so accessing new work and improving career prospects became one of solvers’ major motives. This motive has been found to affect solvers’ participation in internal or firm-hosted crowdsourcing systems, especially crowdsourcing for employment [79,81], and in online collaboration communities, such as question-and-answer websites [82,83].

(4) Gaining reputation or recognition was a relevant motive when solvers had opportunities to share their know-how, gratify their ego, and be visible to and recognized by others [14]. Building interactive social communities and online reputation systems have become the two main approaches that crowdsourcing platforms adopt in response to this motive [68,81,84].

(5) Being challenged and having fun was the purest intrinsic motive. It is also captured by terms with similar meanings, such as curiosity [30], playfulness [56], enjoyment and addiction [80], and pastime [14]. This motive has been investigated in different crowdsourcing contexts, especially problem-solving contests, which allow solvers to showcase their abilities and enjoy the process of completing tasks [13,68,85].

(6) Self-efficacy, solvers’ belief that their specific knowledge, skills, and experience could meet the requirements of the requester and the task, stimulated them to participate and contribute. Self-efficacy led solvers to exert more effort under competition conditions but less under non-competition conditions, and, in turn, good performance increased their self-efficacy [86]. In addition, task complexity could moderate the influence of this motive: when task complexity was high (low), the relationship between solvers’ self-efficacy and effort was convex (concave) [87].
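To make the moderated curvilinear pattern concrete, one illustrative specification (our assumption, not necessarily the model estimated in [87]) regresses effort $E$ on self-efficacy $S$, its square, task complexity $C$, and their interactions:

```latex
\begin{align}
E &= \beta_0 + \beta_1 S + \beta_2 S^2 + \beta_3 C + \beta_4 S C + \beta_5 S^2 C + \varepsilon,\\
\frac{\partial^2 E}{\partial S^2} &= 2\left(\beta_2 + \beta_5 C\right).
\end{align}
```

The relationship is convex in $S$ where $\beta_2 + \beta_5 C > 0$ and concave where $\beta_2 + \beta_5 C < 0$, so the reported pattern (convex at high complexity, concave at low complexity) corresponds to $\beta_5 > 0$, with the sign of $\beta_2 + \beta_5 C$ changing between low and high values of $C$.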

(7) Altruism or reciprocity captured solvers’ goal of helping others and enjoying the appreciation and respect of others through their engagement in crowdsourcing [14,88]. This motive was positively associated with solvers’ willingness to share ideas [88] and to participate continuously [80].

(8) Last but not least, a sense of belonging to a community was an important intrinsic motive. Solvers could identify themselves as members of the community and align their interests and goals with those of other members [81,89], which helped sustain their participation in the crowdsourcing system [84,90]. As Fedorenko et al. [91] indicated, with sustained involvement in a community, solvers’ identity shifted from “I” to “me” and “we”.

3.3.2. The Interactive Effect of Solvers’ Motives

In practice, solvers’ different motives typically act together to stimulate their activities in KI-C [14,76]. However, most current studies of the influence of solvers’ motives have considered these motives separately. Only a few studies in our sample investigated the relationships among motives and their combined effects. For example, Pee, Koh and Goh [33] clarified the effect of solvers’ motives on their task choice from a distal–proximal perspective and indicated that solvers motivated by payment tended to focus on demonstrating higher competence relative to others. Frey et al. [92] noted that the interaction between a strong desire for monetary rewards and higher intrinsic enjoyment of contributing decreased the number of non-substantial contributions to open projects. Sun, Fang and Lim [10] reported that solvers’ self-efficacy positively moderated the relationship between solvers’ intrinsic motives and continuance intention. Liang, Wang, Wang and Xue [60] examined the interaction effect of solvers’ intrinsic and extrinsic motives on their effort in crowdsourcing contests and found that the positive association between intrinsic motivation and effort was weaker when extrinsic incentives were high. Extrinsic incentives were also found to negatively moderate the relationship between intrinsic motivation and solvers’ engagement.
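The moderation finding of Liang, Wang, Wang and Xue [60] can be read through a standard interaction model. As an illustrative assumption (not their exact specification), let $E$ be effort, $I$ intrinsic motivation, and $X$ the level of extrinsic incentives:

```latex
\begin{align}
E &= \gamma_0 + \gamma_1 I + \gamma_2 X + \gamma_3 (I \times X) + \varepsilon,\\
\frac{\partial E}{\partial I} &= \gamma_1 + \gamma_3 X.
\end{align}
```

With $\gamma_1 > 0$ and $\gamma_3 < 0$, the marginal effect of intrinsic motivation on effort is positive when incentives are absent ($X = 0$) and shrinks as $X$ grows, which is what a negative moderating effect of extrinsic incentives means.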

3.3.3. The Transformation of Solvers’ Motives

Solvers’ motives to exhibit behaviors in KI-C were not fixed; they changed with solvers’ degree of involvement, the tasks in which they participated, and the innovation stage.

Firstly, solvers in different roles had different motives. For instance, Frey, Lüthje and Haag [92] found that the most valuable contributors to open projects were those motivated by high levels of intrinsic enjoyment of contributing. Battistella and Nonino [12] classified solvers on web-based crowdsourcing platforms into four categories according to their roles (champion and expert roles; relationship roles; process roles; and expert, power, and process roles) and identified different motives for each group. Mack and Landau [93] compared the motives of winners, losers, and deniers in innovation crowdsourcing contests. Moreover, extrinsic motivation was found to distinguish successful from unsuccessful participants better than intrinsic motivation did.

Secondly, solvers’ motives evolved in different participation stages. For example, Soliman and Tuunainen [74] suggested that there was a transformation of solvers’ motives in their initial and continuous participation in a photography crowdsourcing platform. The solvers’ initial participation seemed to be inspired by selfish motives, but sustained participation seemed to be driven by both selfish and social motivations. Alam and Campbell [80] indicated that volunteers in a newspaper digitization crowdsourcing project showed intrinsic motives initially, though both intrinsic and extrinsic motivations played a critical role in their continued participation. Heo and Toomey [26] also demonstrated that participants’ motivations to share knowledge in crowdsourcing changed over time.

Thirdly, solvers engaged in different tasks had different motives. Battistella and Nonino [76] found that the motives on successful web-based crowdsourcing platforms were a function of the innovation phase: the more “concrete” the phase became, the more extrinsic motivations were used. Alam and Campbell [80] noted that volunteers’ intrinsic or extrinsic motives changed with the kind of contribution, from data shaping to knowledge shaping, in a newspaper digitization crowdsourcing project. Wijnhoven, Ehrenhard and Kuhn [14] found that citizens’ motivations to participate in open government projects differed across citizen innovation, collaborative democracy, and citizen sourcing projects.

3.4. RQ3: What Factors Influence the Solvers’ Behaviors?

According to the components of the KI-C system [8], this study categorized the factors that influenced the solver behaviors into four groups. They were task attributes, solver characteristics, requester behaviors, and platform designs. Under each group, the specific factors were identified from the selected articles. Table 3 presents the specific factors that corresponded to each of the solvers’ behaviors.

Table 3.

The factors influencing the solvers’ behaviors in KI-C.

The number at the intersection of a factor and a behavior is the number of articles that investigated their relationship; “-” indicates that no article examined that relationship. Two rules were applied in building this table. First, a factor was retained only if it was proposed in multiple studies; a factor proposed in a single study was omitted because it might reflect author bias or apply only to a particular context. Second, we assigned a factor to the behaviors it influenced according to the explicit findings of the articles that reported the relationship, without extrapolating beyond them.
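The two rules can be expressed as a small tabulation routine. The sketch below assumes the extracted findings are stored as hypothetical `(article_id, factor, behavior)` triples, one per explicitly reported relationship (so the second rule is enforced when the triples are recorded); it counts distinct articles per cell, writes "-" for empty cells, and drops factors proposed by only one article. The factor and behavior names in the example are placeholders.

```python
from __future__ import annotations

from collections import defaultdict


def build_factor_table(
    links: list[tuple[str, str, str]],
    behaviors: list[str],
) -> dict[str, dict[str, str]]:
    """Tabulate how many distinct articles link each factor to each behavior."""
    per_cell = defaultdict(set)    # (factor, behavior) -> article ids
    per_factor = defaultdict(set)  # factor -> article ids
    for article, factor, behavior in links:
        per_cell[(factor, behavior)].add(article)
        per_factor[factor].add(article)
    # Rule 1: keep only factors proposed in more than one study.
    kept = sorted(f for f, articles in per_factor.items() if len(articles) > 1)
    table = {}
    for factor in kept:
        row = {}
        for behavior in behaviors:
            n = len(per_cell.get((factor, behavior), ()))
            row[behavior] = str(n) if n else "-"
        table[factor] = row
    return table


if __name__ == "__main__":
    links = [
        ("a1", "factor A", "making effort"),
        ("a2", "factor A", "making effort"),
        ("a2", "factor A", "choosing a task"),
        ("a3", "factor B", "making effort"),  # single study: dropped
    ]
    behaviors = ["choosing a task", "making effort", "continuous participation"]
    print(build_factor_table(links, behaviors))
    # {'factor A': {'choosing a task': '1', 'making effort': '2', 'continuous participation': '-'}}
```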

Several observations can be drawn from Table 3. First, in terms of the number of supporting articles, “task attributes” was the most investigated factor category, followed by “platform designs”, “solver characteristics”, and “requester behaviors”. However, if solver motivation is treated as a solver characteristic, then “solver characteristics” becomes the category most often examined as affecting solver behaviors in KI-C. In total, seventeen specific factors under these four categories were identified as influencing solvers’ behaviors.

Second, each solver behavior was influenced by multiple factors. For example, twelve specific factors spanning all four factor categories affected the effort solvers made in their selected tasks. In terms of the number of related factors, task selection, effort, and solution contribution were the three most studied behaviors, in line with the findings presented in Section 3.2.

Third, the main factors influencing each solver behavior differed. In particular, factors in the “task attributes” and “solver characteristics” categories were investigated chiefly in relation to behaviors such as task selection and effort, whereas factors in the “platform designs” category mainly affected solvers’ initial and continuous participation in and contribution to a crowdsourcing platform.

Last but not least, the attention paid to specific factors varied within each factor category. For example, within “task attributes”, task reward was one of the most important attributes affecting solvers’ behaviors. Within “solver characteristics”, solvers’ domain expertise was the factor found to influence their behaviors most. Among requester behaviors, giving feedback to solvers was emphasized as necessary for encouraging them to contribute more and over the long term. Among platform designs, incentive design was one of the priorities for crowdsourcing platforms seeking to improve their solver services and sustain solver engagement.

3.4.1. Task Attributes

A task’s attributes referred to the information reflecting its context, problem, requirements, and other facets. The attributes were defined by requesters and presented to solvers to help them make sense of the task, and thus also influenced solvers’ behaviors in the task. The major task attributes discussed in our sample are described below.

(1) Monetary reward

Monetary reward was an important task attribute, emphasized not only in academic studies but also by solvers themselves. It acted as an informational cue signaling fairness and appreciation of solvers’ participation and effort, which solvers internalized [54,94]. A certain level of monetary reward was indispensable for attracting solvers to a knowledge-intensive task, especially in crowdsourcing contests [54,57]. However, increasing the monetary reward for a task did not always raise solvers’ willingness to participate [95]. For example, Liu, Hatton, Kull, Dooley and Oke [59] found an inverted U-shaped relationship between reward size and crowd size, meaning that moderate rewards maximized crowd size.
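An inverted U-shape is commonly captured with a quadratic term. As an illustrative assumption (not necessarily the specification used in [59]), let $N$ be crowd size and $R$ reward size:

```latex
\begin{align}
N &= \alpha_0 + \alpha_1 R + \alpha_2 R^2 + \varepsilon, \qquad \alpha_1 > 0,\ \alpha_2 < 0,\\
\frac{dN}{dR} &= \alpha_1 + 2\alpha_2 R = 0 \quad\Longrightarrow\quad R^{*} = -\frac{\alpha_1}{2\alpha_2} > 0.
\end{align}
```

Because $d^2N/dR^2 = 2\alpha_2 < 0$, $R^{*}$ is a maximum: below $R^{*}$ a larger reward attracts more solvers, whereas above it a larger reward attracts fewer, which is the sense in which moderate rewards maximize crowd size.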

For solvers who chose a task, monetary reward generally had a positive effect on the effort they exerted [87,96,97] but contributed little to the quality or novelty of submitted solutions [95]. To strengthen the effect of monetary reward on solvers’ efforts and contributions, scholars suggested that requesters consider the award structure (e.g., a performance-contingent award) [3] and a prize guarantee (ensuring that a winner will be picked and paid) [98] when setting a task’s monetary reward.

(2) Task complexity

Task complexity referred to the difficulty level of a task [59,99] and could be characterized along two dimensions, i.e., analyzability and variability [29]. Analyzability reflected the availability of concrete knowledge about a task, and variability indicated the frequency of unexpected events and contingencies that could arise while performing the task. Task complexity was an important inherent characteristic that influenced solvers’ task selection and subsequent effort. It was positively associated with solvers’ intrinsic motivation [29,96]; nevertheless, less complex tasks were preferable for attracting solvers’ participation [29,99]. In addition, task complexity played a mediating role in the relationships between solvers’ effort in a task and both task reward [59] and solvers’ self-efficacy [87].

(3) Task autonomy and variety

Because these two attributes have been considered together in prior studies and play similar roles in affecting solvers’ behaviors, we discuss them jointly. Specifically, task autonomy referred to the degree of freedom solvers had in executing the task. Task variety referred to the degree to which the task required solvers to apply a wide range of skills and perform a variety of activities in order to complete it [29,30]. On the one hand, task autonomy and variety were positively related to solvers’ intrinsic motivation [29,30,96]; on the other hand, they played an important role in creating favorable crowd working conditions [54]. As a result, scholars have found that both attributes are positively associated with solvers’ participation in a task and also positively affect solvers’ effort and contribution quality [73,96].

(4) Task instruction

Task instructions often constitute the first point of contact between requesters and potential solvers [28]. Generally, clarity, accuracy, and targeting of potential solvers were the major indicators of the quality of task instructions. A task that was described inaccurately, or whose definition was highly tacit and vague, could signal that requesters lacked clear and specific criteria for evaluating outcomes. Solvers might then doubt the objectivity of the evaluation process and perceive a risk that their outcomes would not be legitimately evaluated. In contrast, a well-articulated task statement reduced potential solvers’ uncertainty and increased their willingness to participate and put further effort into the task [100,101]. Specifically, requesters were advised to clearly specify a solver’s responsibility in contributing ideas [102], offer sufficient instructions and exemplars of expected outcomes [28,103], and explain the intellectual property rights arrangement [104] when presenting their task statements. However, good delineation did not mean bounding a task strictly; lower task autonomy greatly hindered the novelty and originality of solvers’ solutions [102,105].

(5) Task in-process status

This attribute reflected the execution progress of a task, which could be indicated by the number of participants, submitted solutions, requesters’ feedback, etc. Among these indicators, scholars emphasized the quantity and quality of submitted solutions most. In a crowdsourcing contest, high-quality submitted solutions decreased the likelihood of high-quality solvers entering, resulting in lower overall quality in subsequent submissions [57,58,75]. In addition, such solutions led later entrants to exert less effort than early entrants because of their lower probability of winning the contest [3,63]. However, Körpeoğlu and Cho [106] noted that high-expertise solvers may raise their effort in response to increased competition. This was because more entrants raised the expected best performance among competitors, giving solvers an incentive to exert higher effort to win the contest. Similarly, Dissanayake, Mehta, Palvia, Taras and Amoako-Gyampah [86] found that solvers’ self-efficacy had a positive effect on their effort under competitive conditions.

3.4.2. Solver Characteristics

This study mainly presents the following solver characteristics, which have been discussed extensively in prior studies. Characteristics such as age, gender, educational background, and employment status [14,66,107] were not included because few studies investigated their impacts directly and specifically.

(1) Domain expertise

A solver’s domain expertise, to some extent, reflected his or her knowledge, skills, experience, and even creativity [3,67]. In KI-C, solvers’ domain expertise, especially expertise related to the task domain, was a critically important characteristic that determined solvers’ capacity to understand a task statement and, in turn, their likelihood of participating [28,93]. In addition, solvers with high expertise may have had more latitude to take ownership of their behaviors and were more likely to develop high-quality solutions. For example, Dissanayake, Zhang, Yasar and Nerur [62] indicated that solvers, especially highly skilled ones, tended to increase their effort toward the end of tournaments or as they grew closer to winning positions. Zhang et al. [108] suggested that participants’ domain knowledge had a significant effect on their manuscript transcription quality. Boons and Stam [66] argued that having a task-related perspective stemming from one’s educational background positively influenced individuals’ idea performance. Beyond the depth of domain expertise, expertise diversity also played a key role in solvers’ substantive contributions [16,92].

(2) Participation experience

Solvers’ participation experience was often inferred from their participation history, past submissions, and past interactions with others in KI-C. In general, solvers’ participation experience had a positive influence on their performance [19]. However, rich participation experience, especially past success, may lead solvers to propose ideas similar to their previous ones [18]. Koh [103] also found that solvers’ domain experience negatively moderated the relationship between solvers’ adoption of exemplars provided by requesters and their advertised design quality. Additionally, positive and rich participation experience inspired solvers to participate and contribute continuously [11,72].

(3) Cultural background

In practice, many crowdsourcing platforms host both foreign and local tasks and draw on solvers from all over the world. On these platforms, solvers from different countries may differ in culture and wealth, which affects their task choice, effort, and contribution quality [20,21]. Specifically, Chua, Roth and Lemoine [20] found that solvers from countries with strict cultures were more likely to participate and succeed at foreign creative tasks than counterparts from loose cultures. Bockstedt, Druehl and Mishra [21] investigated the influence of contestants’ national cultural background, i.e., its performance orientation and uncertainty avoidance, on their problem-solving efforts. Furthermore, both studies confirmed that a small cultural distance between solvers and requesters had a positive effect on solvers’ performance.

(4) Personality traits

In this study, solver personality traits mainly referred to solvers’ value orientations during participation in KI-C. For example, Steils and Hanine [28] found that individuals who were oriented toward monetary rewards were less likely to choose tasks with constraining instructions. Zhang et al. [108] suggested that solvers’ social value orientation (pro-social or pro-self) interacted with their domain expertise to influence their manuscript transcription quality. Schäper, Foege, Nüesch and Schäfer [88] argued that individuals’ psychological ownership of ideas would weaken their willingness to share ideas on a specific crowdsourcing platform. Shi et al. [109] examined the effects of solver personality traits, including conscientiousness, extraversion, and neuroticism, on solver engagement in crowdsourcing communities, as mediated by solver self-efficacy and task complexity.

(5) Interaction with peers

A solver’s interaction with peers, i.e., other solvers, mainly takes the form of peer feedback, such as likes, votes, and comments on his or her contributions. Such feedback brings solvers cognitive, integrative, and affective benefits. These benefits may enhance solvers’ credibility, confidence, and enjoyment, thus strengthening their willingness to develop ideas and communicate with peers [64,110]. Specific characteristics of feedback, including response speed [64], diversity, constructiveness, and integrativeness [111], differed in their value for stimulating solvers. In addition, Chan et al. [112] demonstrated that peer-to-peer interaction, measured by interaction size, direction, and strength, significantly affected participants’ likelihood of subsequent idea generation in a crowdsourcing community. To increase their opportunities to be recognized by peers, solvers can share their online profiles [113].

3.4.3. Requester Behaviors

Requesters are the people who seek solutions from solvers and communicate with them during task completion; their characteristics and actions can affect solvers’ behaviors. In this study, we concentrated on two major requester behaviors: fairness and giving feedback.

(1) Fairness

Solvers on a crowdsourcing platform or in a task want not only a good deal but also a fair deal [22]. Fairness comprised three dimensions: distribution fairness (the fairness of resource allocation among solvers), procedural fairness (the fairness of requesters’ evaluation and selection of submissions), and interactional fairness (the fairness of requesters treating solvers with respect and politeness and offering information about the process and results of task completion) [22,23,71]. Fairness shown by requesters influenced solvers’ perceptions of the trustworthiness of requesters and crowdsourcing platforms [71,87]. Fairness mattered to solvers and affected their task selection [22], effort making [87], solution development [23], and future participation in and contribution to a crowdsourcing platform [71]. However, the influences of the three fairness dimensions were asymmetric. As Faullant et al. [114] indicated, distribution fairness acted as a basic factor that must be fulfilled to avoid negative behavioral consequences, while procedural fairness could be considered an excitement factor that produced genuinely positive behavioral consequences. In addition, Liu and Liu [71] revealed that solvers’ perceived distribution and interactional fairness affected their trust in requesters, while their perceived procedural and informational fairness significantly affected their trust in crowdsourcing platform management.

(2) Giving feedback

Feedback given by requesters could make solvers perceive that their contributions were important. Furthermore, solvers regarded feedback on their contributions as a genuine sign of appreciation; consequently, feedback stimulated the effort they exerted in a task [73,115], improved their solution quality [27,116], and increased the likelihood of their continuous participation [72]. Therefore, requesters were encouraged to give feedback to solvers, even negative feedback [115]. However, the positive effect of feedback did not arise automatically; it depended on the feedback’s form and content. Feedback forms included no feedback, random feedback, indirect feedback, and direct feedback [73,115]. Feedback content could include a rejection with an explanation or one written in a linguistic style similar to that of the submitted ideas [70], or messages framed around cooperation, individualism, or competition in relation to the content proposed by a solver [116]. Furthermore, requesters needed to consider the timing of feedback in a contest (e.g., early stage versus late stage) [115], the provision and volume of in-process feedback [98], and the direction and strength of feedback [112,117].

3.4.4. Platform Designs

Crowdsourcing platforms link requesters and solvers and help them conduct activities effectively. Scholars have offered many specific design recommendations for crowdsourcing platforms to improve their solver services and to attract and retain solvers’ participation and contribution. Of these, this study focused on four designs discussed in prior studies: presenting system-generated visual feedback, being trustworthy, having an incentive system, and fostering a community.

(1) System-generated visual feedback

In general, the visual feedback presented by a crowdsourcing platform takes forms such as status, rank contrast, reputation score, reward points, average, and top assimilation [26,69]. These types of feedback are sometimes also called gamification artifacts [56]. Such feedback offers solvers individual-level status information, giving them a sense of being fairly treated and respected [118]. Consequently, system-generated visual feedback could affect solvers’ initial and continuous participation in a crowdsourcing platform by affecting their intrinsic motivation. For example, Heo and Toomey [69] indicated that simple, system-generated visual feedback on social comparisons could shape solvers’ motivation to continuously share knowledge in crowdsourcing. Feng, Jonathan Ye, Yu, Yang and Cui [56] also found that solvers’ intrinsic motives, e.g., self-presentation, self-efficacy, and playfulness, positively mediated the relationship between gamification artifacts, e.g., point rewarding and feedback giving, and their willingness to participate in a crowdsourcing platform.

(2) Be trustworthy

In a virtual environment, solvers need psychological assurance because interaction on a virtual platform is exposed to various risks and uncertainties [119]. A high level of trust in a platform can generally meet solvers’ psychological needs and thus alleviate their hesitation. Being trustworthy was critical for a crowdsourcing platform to motivate solvers to participate and contribute [119,120]. To demonstrate its trustworthiness, a crowdsourcing platform could establish various mechanisms and regulations. For instance, Hanine and Steils [120] suggested preventing solvers’ negative feelings by addressing three sources of such feelings: information about the use of contributions and overpromising, protection of intellectual property rights, and prize allocation and selection criteria. Similarly, Bauer, Franke and Tuertscher [119] emphasized the importance of well-established norms regulating the use of intellectual property within a crowdsourcing community. In addition, enhancing solvers’ feelings of pride and respect played a central role in driving their ongoing activity on a crowdsourcing platform [118].

(3) Incentive design

Incentive design is essential for crowdsourcing platforms because incentives appeal to or match solvers’ motives [121]. Therefore, developing incentives based on potential solvers’ motivations has become an approach adopted by crowdsourcing platforms. Accordingly, platforms should offer a comprehensive package of incentives that responds to the various motives identified in Section 3.3 [89,122]. Among these incentives, prizes and reputation were the two most highlighted. For example, Kohler [123] identified prizes, a reputation system, socialization, feedback, and framing as important components of a crowdsourcing platform’s incentive system. With respect to prize incentives, reward structure received considerable attention as a means of maximizing their value in activating solvers. For instance, Tang, Li, Cao, Zhang and Yu [77] proposed a novel payment scheme combining ex-ante and ex-post components to curb solvers’ malicious behaviors in macrotasking crowdsourcing systems. Malhotra and Majchrzak [124] suggested dual reward incentives, one for contributing the best solutions and the other for contributing to the knowledge integration process. For reputation incentives, providing opportunities for solvers to showcase themselves was critical [84]. These opportunities included featured solver profiles [125], multiple channels through which solvers could be recognized [123], and the aforementioned system-generated visual feedback [56,69]. Finally, in innovation contests, a mixture of cooperative and competitive design features positively affected the creative process and solvers’ effort in developing solutions [105].

(4) Foster a community

A sense of belonging to a community was one of solvers’ important motivations to participate and contribute in crowdsourcing [81,89]. It is, therefore, hardly surprising that fostering a community for solvers and requesters has become a priority for crowdsourcing platforms. Moreover, a well-functioning community helped sustain solvers’ participation [84,126]. Scholars have suggested that a community include many functions, such as publishing news, offering tips and tricks for developing solutions, supporting interaction among solvers and requesters, and supporting self-demonstration [123,127]. Through these functions, crowdsourcing platforms mainly aim to facilitate identity creation and foster social interaction among solvers [91,127]. As Fedorenko, Berthon and Rabinovich [91] suggested, identity construction offers powerful tools for adding value to solvers, developing sustainable relationships, and generating enhanced-value co-creation.

3.5. RQ4: What Roles Do the Factors Play in the Solvers’ Behaviors?

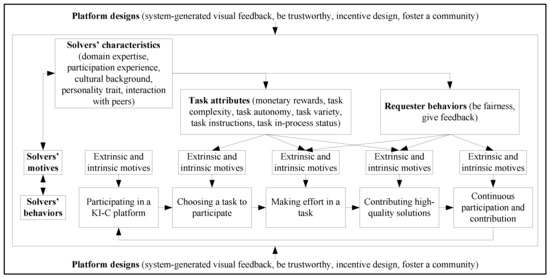

Drawing on the motive-incentive-activation-behavior (MIAB) model [121,128], we illustrated how the identified factors and solvers’ motives affected solvers’ behaviors. The MIAB model suggests that individuals undertake a particular action when their motivations are activated. Moreover, to stimulate individuals, the right mix of incentives appealing to their motives must be provided. Accordingly, we developed a framework demonstrating the roles of the factors and solvers’ motives in affecting solvers’ behaviors, presented in Figure 1. Admittedly, the framework cannot cover every specific situation, but it provides a perspective for understanding the functions of these factors.

Figure 1. A framework for demonstrating the roles of the identified factors.

The five solver behaviors were sequentially related, and engagement increased as participation behaviors progressed. A solver could undertake a later behavior only after completing the preceding ones. Meanwhile, the outcomes of an earlier behavior influenced subsequent behaviors. For example, the more effort solvers expended on a task, the better they might perform [86,97]. With respect to continuous participation and contribution, solvers’ satisfaction and the financial and non-financial benefits they earned in the preceding four behaviors possibly had separate or combined effects [69,93].

Each solver behavior may be affected by factors related to solver motives and characteristics, task attributes, requester behaviors, and platform designs. As shown in Table 3, the influential factors differed between behaviors. Moreover, the identified factors played different roles in affecting solvers’ behaviors, as illustrated below.

(1) The solver motives

Motivational factors are the stable psychological drivers within solvers that stimulate goal-directed actions [129]. Eight major solver motives were summarized from prior studies: monetary rewards, learning, career prospects, reputation or recognition, fun, self-efficacy, altruism or reciprocity, and belonging to a community. These motives drove solvers to undertake different actions in KI-C. Four considerations are important for understanding the role of solvers’ motivations. First, multiple motives generally worked together to affect each solver behavior. For example, Ebner, Leimeister and Krcmar [79] found that solvers who decided to participate in SAPiens had five different motives, and Chris Zhao and Zhu [13] identified eight major motives affecting the level of effort solvers exerted in a task. Second, solvers’ motives to participate in KI-C varied from initiative to initiative. Third, solvers with different roles and characteristics had different motives. Finally, as indicated in Section 3.3.3, a solver’s motives to participate in crowdsourcing were not fixed but evolved with his or her participation experience over time.

(2) Task attributes

As presented in Table 3, this factor category was particularly important for solvers’ task selection and their subsequent efforts and contributions in tasks. For these behaviors, task attributes mainly acted as incentives that appealed to or matched solvers’ motives [54,96]. Specifically, task rewards and recognition acted as extrinsic motivators, while task autonomy, complexity, and variety were associated with intrinsic motivation [29,30,96]. In addition, solvers’ motivations could be influenced by how a task was designed for innovative solution search [68], and solvers could be motivated by the linguistic cues used in task instructions. Consequently, requesters were encouraged to keep task instructions simple and free of overly strict restrictions in order to stimulate solvers to develop highly original and valuable solutions [101,102].

(3) Requester behaviors

Being fair and giving feedback were two important requester behaviors that affected solvers’ efforts and contributions in a task and, subsequently, their continuous participation and contribution. These two behaviors acted as incentives that appealed to solvers’ motives. More specifically, being fair was associated with solvers’ trust in requesters and their expectation of receiving something in return for what they gave [71,114,117]. Depending on solvers’ extrinsic and intrinsic motives to participate in crowdsourcing, these expectations could involve financial rewards as well as non-financial benefits. Violating distribution fairness breached the norm of reciprocity with respect to tangible rewards, while violations of procedural and interactional fairness concerned solvers’ expectations regarding ideals and intangible values [22,94].

Requester feedback could help solvers focus on task accomplishment, goal pursuit, and social values [72,116]. Moreover, feedback gave solvers a means of social interaction with requesters and alleviated their worries about uncertainty in requesters’ behavior [98,116]. In addition, feedback could be a very effective motivator, tapping into solvers’ altruistic, benevolent, self-efficacy, and learning motivations [27,72,116].

(4) Solver characteristics

Solver characteristics affected solvers’ behaviors in three main ways. First, solvers with different roles and characteristics had different motives and, thus, undertook different actions in crowdsourcing. For instance, Battistella and Nonino [12] showed that users with different roles were attracted by various motives. Wijnhoven, Ehrenhard and Kuhn [14] verified that citizens with different characteristics had different motives for involvement in open government projects.

Second, solvers’ performance in a task was directly related to their characteristics, especially expertise, experience, and cultural background. For example, Bayus [18] found that an individual’s past success in generating implemented ideas was negatively related to the likelihood of developing an implemented idea again. Bockstedt, Druehl and Mishra [21] and Chua, Roth and Lemoine [20] indicated that solvers’ national cultural background was associated with the number of ideas they submitted in a given contest. Mack and Landau [7] found an inverted U-shaped relationship between individuals’ domain-relevant skills and the innovativeness of their proposed ideas. Khasraghi and Aghaie [19] demonstrated that most factors of competitors’ participation history, including participation frequency, winning frequency, and last performance, had a significant effect on their performance in a contest.

Finally, solvers’ characteristics influenced their perception of task attributes, requester behaviors, and platform designs. In other words, different solvers may interpret the same object differently. For instance, with respect to task attributes, solvers with different expertise and experience viewed a task’s instructions, autonomy, and even requester-provided exemplars differently [54,103]. Liu, Yang, Adamic and Chen [75] found that experienced users were more likely than inexperienced users to select tasks with high rewards. Regarding requester feedback, cooperatively framed feedback was more effective at motivating female users than male users to generate more content, whereas competitively framed feedback was more effective at motivating male users [116]. Moreover, Chan, Li, Ni and Zhu [72] found that experienced ideators were less demotivated to improve their subsequent ideation performance when they received negative firm feedback, while inexperienced ideators were more motivated by positive firm feedback.

Notably, a solver’s accumulated participation updated his or her individual characteristics, including skills, interests, participation experience, and past success. These accumulated actions were also likely to change a solver’s motives and perceptions of various incentives [80,92,93]. These transformations could, in turn, exert positive or negative influences on solvers’ future participation behaviors.

(5) Platform designs

In this study, we mainly paid attention to four important platform designs: system-generated visual feedback, being trustworthy, incentive design, and fostering a community. However, other designs, such as norms of IPR allocation [119], explicit instructions [124], and the user interface [84], also matter to solvers and influence their behaviors. Platform designs affected solvers’ behaviors in three ways. First, platform designs are generally activation-supporting IT artifacts for influencing solvers’ behaviors [82,83,121,122,130]. These IT artifacts correspond directly to the incentives that match solvers’ motives. For instance, various prizes (e.g., requester and platform prizes) and fee arrangements (e.g., membership fees, service fees, and possible taxes) were set up to match the motive of earning monetary rewards. With respect to the motive of learning, platforms offered solvers three ways to practice their skills: feedback, disclosed solutions, and suggestions (techniques, tips, and tricks) for developing solutions. As another example, virtual prizes and solver showcase systems were two kinds of designs for matching the motives of gaining reputation and self-demonstration.

Second, platform designs provided solvers with a good environment in which to participate and contribute. This environment included not only work opportunities, such as various tasks and real problems, but also a space, such as a community or forum, where solvers could interact with peers and requesters. Finally, platform designs supported solvers in performing effectively on a platform. This support covered the full process of solver participation, including pre-participation, task selection, submission development, post evaluation, winner selection, completion of payment, IPR transfer and use, and the handling of possible conflicts and disputes.

4. Discussion and Identification of Future Research Needs

Based on the aforementioned results, this section aims to suggest potential ways to move research on solvers’ behaviors and their influential factors forward.

4.1. Linking the Factors Together and Investigating Their Relations

The selected articles proposed a wide range of factors and explored their relations with solvers’ behaviors. However, in-depth investigation of how these factors were related to one another and how they might affect each other was lacking.

Regarding the relationships among the factors, attention should first be paid to the interactions among solvers’ motives. As indicated in Section 3.3.2, only a few studies examined the relations among solvers’ motives and their interactive impact on solvers’ behaviors [10,33,92]. Most of the reviewed studies considered the influences of solvers’ motives separately.

Solvers’ expectations and motives exerted a direct effect on their decision to participate and contribute in KI-C [33,121]. As indicated in Section 3.5, the other identified factors influenced solvers’ behaviors mainly by affecting solvers’ perceived likelihood that their motives or expectations would be satisfied [32,56,71,121]. Therefore, another necessary focus is to identify which factors respond to solvers’ major motives and how they should be prioritized. In addition, the appropriate form, content, and strength or level of some factors likely need to be considered when deploying them to activate solvers’ motives [70,102].

Finally, attention is required to the relations among the other factors, namely solver characteristics, task attributes, requester behaviors, and platform designs. Some studies have begun to examine this aspect, showing, for example, that similar feedback from requesters or a platform can mean different things to different solvers, and that improved platform designs can regulate task design and requester behaviors [22,55,79,98,119].

Consequently, it is important to understand these factors from a holistic perspective rather than viewing them independently. Therefore, an important task for future research is to examine and map the relationships among the identified factors. Meeting this need first requires a framework that covers these factors and links them together, built on established theories or on new ones developed for this purpose.

4.2. Exploring the Transformation of Solvers’ Behaviors and Their Influential Factors

In this study, we showed that the behaviors exhibited by a solver are interrelated. Prior studies have invested considerable effort in investigating factors that influence individual solver behaviors, but knowledge and practice regarding how to connect these interrelated behaviors and move solvers from one behavior to the next remain limited. This makes it difficult to fully harness the wisdom of solvers. For example, in practice a large proportion of solvers never participate in or contribute to a task after signing up. Therefore, it is imperative for a crowdsourcing platform to focus on solvers’ initial participation and adopt effective incentives to encourage deeper participation. In short, a crowdsourcing platform that expects solvers to participate continuously should make considerable efforts to ensure that solvers are satisfied with their initial participation. An important theme for future research is therefore the transformation of solvers’ participation behaviors and the drivers, barriers, and factors that promote these transformations.

4.3. Improving Solvers’ Behaviors from a Global Perspective and a Local Perspective

This suggestion concentrates mainly on improving solvers’ behaviors from a practical perspective. Previous findings indicate that solvers in different roles exhibit different behaviors driven by different motives, and that the same factor can carry different meanings for different solvers. These findings have implications for how crowdsourcing platforms and requesters can improve solvers’ behaviors. Specifically, from the platform’s standpoint, managers want an ever-growing number of qualified solvers to register and contribute. If managers adopt governance mechanisms or policies tailored to a specific group of solvers with similar backgrounds and motives while neglecting other solvers, these measures may substantially improve the participation intention and effort of that group but drive away solvers with different backgrounds and motives. We therefore suggest that crowdsourcing platforms implement designs that improve solver behaviors from either a local perspective (improving the behaviors of a specific group of solvers) or a global perspective (improving the behaviors of solvers with different roles and motives). From this viewpoint, future studies may investigate the roles that solvers play in KI-C, the differences in motives and behaviors among solvers in different roles, and the effects of the factors identified in this study on the behaviors of solvers in different roles.

From the requester’s standpoint, the situation may be simpler, since a requester generally handles only one task on a platform at a time. Table 3 shows that task attributes (prizes, complexity, instructions, and autonomy), as well as requester fairness and feedback, affect solvers’ behaviors. In addition, some scholars have begun to emphasize the importance of requesters keeping in contact with solvers who contribute to their tasks, especially high performers, and treating them as high-quality external assets [91,123,124,131]. These findings could guide requesters to design their tasks carefully and to interact with solvers constructively, thereby supporting both the achievement of current task goals and long-term benefits.

5. Conclusions

This study aimed to build a comprehensive view of what the literature has reported on the factors that affect solver behaviors in KI-C. Through a thorough selection process, a total of 119 articles were selected from the SCI-EXPANDED and SSCI databases. Our review indicated that the number of studies investigating solver behaviors in KI-C and their influential factors has increased. Furthermore, these studies have relied on empirical methods, especially surveys, case studies, and field experiments, together with primary data, and have drawn on various theories to support research on this topic.

The review divided solvers’ participation in KI-C into five specific, interrelated behaviors: participating in a KI-C platform, choosing a task to participate in, making an effort in a task, contributing high-quality solutions, and participating and contributing continuously. With respect to each of these behaviors, eight major solver motives were summarized: monetary rewards, learning, career prospects, reputation or recognition, fun, self-efficacy, altruism, and a sense of belonging to a community. In addition, we identified seventeen specific factors in four groups: task attributes, solver characteristics, requester behaviors, and platform designs. The factors influencing each behavior were found to differ. To deepen the understanding of these factors and solver motives, we also described their roles in affecting solver behaviors. Building on these results, and with the aim of advancing the literature, we proposed research needs along three themes: exploring the relationships among the identified factors, examining the transformation of solvers’ behaviors and its influential factors, and improving solvers’ participation behaviors in KI-C from global and local perspectives.

This study also has several limitations. First, although we thoroughly reviewed journal articles published from 2006 to 2021, we excluded conference papers, which account for a share of the related studies; given the growing number of studies, we therefore cannot guarantee that all material related to the topic was captured. Second, this study extracted the factors reported in the literature as influencing solvers’ behaviors but paid less attention to investigating and summarizing specifically how each factor influences each behavior. Third, the generalizability of our KI-C results to other crowdsourcing systems, such as the crowdsourcing of simple tasks, is limited.

Author Contributions

X.Z.: conceptualization, methodology, formal analysis, investigation, data curation, validation, visualization, writing—original draft, review, and editing. E.X.: visualization, supervision, validation, writing—review and editing. C.S. and J.S.: visualization, validation, writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (71802002), the Anhui Education Department (gxyqZD2022045), and Excellent Young Scholars funded by Anhui Polytechnic University and the Anhui Education Department (2008085QG336).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We sincerely thank Mats Magnusson, a distinguished professor at KTH Royal Institute of Technology, for his constructive and very helpful suggestions, especially regarding the review methodology and writing.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Basu Roy, S.; Lykourentzou, I.; Thirumuruganathan, S.; Amer-Yahia, S.; Das, G. Task assignment optimization in knowledge-intensive crowdsourcing. VLDB J. 2015, 24, 467–491.

- Kittur, A.; Nickerson, J.V.; Bernstein, M.; Gerber, E.; Shaw, A.; Zimmerman, J.; Lease, M.; Horton, J. The future of crowd work. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work, San Antonio, TX, USA, 23–27 February 2013; pp. 1301–1318.

- Terwiesch, C.; Xu, Y. Innovation Contests, Open Innovation, and Multiagent Problem Solving. Manag. Sci. 2008, 54, 1529–1543.

- Zhao, Y.; Zhu, Q. Evaluation on crowdsourcing research: Current status and future direction. Inf. Syst. Front. 2014, 16, 417–434.

- Cappa, F.; Oriani, R.; Pinelli, M.; De Massis, A. When does crowdsourcing benefit firm stock market performance? Res. Policy 2019, 48, 103825.

- Penin, J.; Burger-Helmchen, T. Crowdsourcing of inventive activities: Definition and limits. Int. J. Innov. Sustain. Dev. 2011, 5, 246–263.

- Mack, T.; Landau, C. Submission quality in open innovation contests—An analysis of individual-level determinants of idea innovativeness. R&D Manag. 2020, 50, 47–62.

- Estellés-Arolas, E. Towards an integrated crowdsourcing definition. J. Inf. Sci. 2012, 38, 189–200.

- Ye, H.; Kankanhalli, A. Solvers’ participation in crowdsourcing platforms: Examining the impacts of trust, and benefit and cost factors. J. Strateg. Inf. Syst. 2017, 26, 101–117.

- Sun, Y.; Fang, Y.; Lim, K.H. Understanding sustained participation in transactional virtual communities. Decis. Support Syst. 2012, 53, 12–22.

- Wang, X.; Khasraghi, H.J.; Schneider, H. Towards an Understanding of Participants’ Sustained Participation in Crowdsourcing Contests. Inf. Syst. Manag. 2020, 37, 213–226.

- Battistella, C.; Nonino, F. Exploring the impact of motivations on the attraction of innovation roles in open innovation web-based platforms. Prod. Plan. Control. 2013, 24, 226–245.

- Chris Zhao, Y.; Zhu, Q. Effects of extrinsic and intrinsic motivation on participation in crowdsourcing contest: A perspective of self-determination theory. Online Inf. Rev. 2014, 38, 896–917.

- Wijnhoven, F.; Ehrenhard, M.; Kuhn, J. Open government objectives and participation motivations. Gov. Inf. Q. 2015, 32, 30–42.

- Cappa, F.; Rosso, F.; Hayes, D. Monetary and Social Rewards for Crowdsourcing. Sustainability 2019, 11, 2834.

- Zhu, J.J.; Li, S.Y.; Andrews, M. Ideator Expertise and Cocreator Inputs in Crowdsourcing-Based New Product Development. J. Prod. Innov. Manag. 2017, 34, 598–616.

- Piazza, M.; Mazzola, E.; Perrone, G. How can I signal my quality to emerge from the crowd? A study in the crowdsourcing context. Technol. Forecast. Soc. Chang. 2022, 176, 121473.

- Bayus, B.L. Crowdsourcing New Product Ideas over Time: An Analysis of the Dell IdeaStorm Community. Manag. Sci. 2013, 59, 226–244.

- Khasraghi, H.J.; Aghaie, A. Crowdsourcing contests: Understanding the effect of competitors’ participation history on their performance. Behav. Inf. Technol. 2014, 33, 1383–1395.

- Chua, R.Y.J.; Roth, Y.; Lemoine, J.-F. The Impact of Culture on Creativity: How Cultural Tightness and Cultural Distance Affect Global Innovation Crowdsourcing Work. Adm. Sci. Q. 2014, 60, 189–227.

- Bockstedt, J.; Druehl, C.; Mishra, A. Problem-solving effort and success in innovation contests: The role of national wealth and national culture. J. Oper. Manag. 2015, 36, 187–200.

- Franke, N.; Keinz, P.; Klausberger, K. “Does This Sound Like a Fair Deal?”: Antecedents and Consequences of Fairness Expectations in the Individual’s Decision to Participate in Firm Innovation. Organ. Sci. 2012, 24, 1495–1516.

- Zou, L.; Zhang, J.; Liu, W. Perceived justice and creativity in crowdsourcing communities: Empirical evidence from China. Soc. Sci. Inf. 2015, 54, 253–279.

- Kohler, T.; Chesbrough, H. From collaborative community to competitive market: The quest to build a crowdsourcing platform for social innovation. R&D Manag. 2019, 49, 356–368.

- Randhawa, K.; Wilden, R.; West, J. Crowdsourcing without profit: The role of the seeker in open social innovation. R&D Manag. 2019, 49, 298–317.

- Heo, M.; Toomey, N. Motivating continued knowledge sharing in crowdsourcing: The impact of different types of visual feedback. Online Inf. Rev. 2015, 39, 795–811.

- Wooten, J.O.; Ulrich, K.T. Idea Generation and the Role of Feedback: Evidence from Field Experiments with Innovation Tournaments. Prod. Oper. Manag. 2017, 26, 80–99.

- Steils, N.; Hanine, S. Recruiting valuable participants in online IDEA generation: The role of brief instructions. J. Bus. Res. 2019, 96, 14–25.

- Zheng, H.; Li, D.; Hou, W. Task Design, Motivation, and Participation in Crowdsourcing Contests. Int. J. Electron. Commer. 2011, 15, 57–88.

- Garcia Martinez, M. Inspiring crowdsourcing communities to create novel solutions: Competition design and the mediating role of trust. Technol. Forecast. Soc. Chang. 2017, 117, 296–304.

- Tang, J.; Zhou, X.; Zhao, Y.; Wang, T. How the type and valence of feedback information influence volunteers’ knowledge contribution in citizen science projects. Inf. Process. Manag. 2021, 58, 102633.

- Taylor, J.; Joshi, K.D. Joining the crowd: The career anchors of information technology workers participating in crowdsourcing. Inf. Syst. J. 2019, 29, 641–673.

- Pee, L.G.; Koh, E.; Goh, M. Trait motivations of crowdsourcing and task choice: A distal-proximal perspective. Int. J. Inf. Manag. 2018, 40, 28–41.

- Nakatsu, R.T.; Grossman, E.B.; Iacovou, C.L. A taxonomy of crowdsourcing based on task complexity. J. Inf. Sci. 2014, 40, 823–834.

- Cricelli, L.; Grimaldi, M.; Vermicelli, S. Crowdsourcing and open innovation: A systematic literature review, an integrated framework and a research agenda. Rev. Manag. Sci. 2021, 16, 1269–1310.

- Olson, D.L.; Rosacker, K. Crowdsourcing and open source software participation. Serv. Bus. 2013, 7, 499–511.

- Martinez-Corral, A.; Grijalvo, M.; Palacios, M. An organisational framework for analysis of crowdsourcing initiatives. Int. J. Entrep. Behav. Res. 2019, 25, 1652–1670.

- Modaresnezhad, M.; Iyer, L.; Palvia, P.; Taras, V. Information Technology (IT) enabled crowdsourcing: A conceptual framework. Inf. Process. Manag. 2020, 57, 102135.

- Hossain, M.; Kauranen, I. Crowdsourcing: A comprehensive literature review. Strateg. Outsourcing: Int. J. 2015, 8, 2–22.

- Nevo, D.; Kotlarsky, J. Crowdsourcing as a strategic IS sourcing phenomenon: Critical review and insights for future research. J. Strateg. Inf. Syst. 2020, 29, 101593.

- Assis Neto, F.R.; Santos, C.A.S. Understanding crowdsourcing projects: A systematic review of tendencies, workflow, and quality management. Inf. Process. Manag. 2018, 54, 490–506.