Abstract

Skin diseases represent a major worldwide health burden, affecting millions of people each year and placing substantial strain on healthcare systems. Standard diagnostic techniques, which rely mostly on visual inspection and clinical experience, are frequently subjective, time-consuming, and prone to mistakes, particularly in areas where dermatologists are scarce. This investigation undertakes a comparative analysis of four state-of-the-art deep learning architectures, YOLO11, YOLOv8, VGG16, and ResNet50, for skin disease identification. Building on the YOLO family, whose recent versions strengthen spatial attention and multi-scale representation, this study evaluates the models using accuracy, precision, recall, and F1 score. A carefully selected collection of 900 high-quality dermatological images covering nine disease categories was used. Data augmentation and hyperparameter tuning were applied to improve robustness and generalizability. Outperforming the benchmark models in balancing precision and recall while limiting false positives and false negatives, YOLO11 obtained a test accuracy of 80.72%, precision of 88.7%, recall of 86.7%, and an F1 score of 87.0%. This performance suggests a promising direction for the development of accurate skin disease detection models. Our analysis highlights the strengths and weaknesses of each model and underscores the rapid development of deep learning techniques in medical imaging.

Keywords:

YOLO; skin disease; CNN; machine learning; neural networks; deep learning; medical image analysis

1. Introduction

Many people throughout the world suffer from skin conditions, which are a serious public health concern because they can cause both physical and psychological harm. Various reports indicate that approximately 30–70% of individuals worldwide experience some form of skin disease, influenced by environmental, demographic, and genetic factors [1]. According to the World Health Organization, an estimated 325,000 new melanoma cases and 57,000 related deaths occur annually [2]. If current trends continue, these figures are projected to reach 510,000 new cases and 96,000 deaths per year by 2040 [3]. The increasing prevalence of skin diseases, from eczema and acne to melanoma and psoriasis, underscores the need for better diagnostic strategies, particularly in resource-limited regions, to reduce misdiagnosis and improve access to care [4,5].

Current diagnostic approaches rely primarily on visual examination, supported by dermatoscopic imaging and histopathological analysis [6]. However, their subjective nature and inter-observer variability often lead to errors, especially for rare or visually similar conditions [7]. In developing countries, the shortage of dermatologists further delays diagnosis, resulting in poorer outcomes for diseases like melanoma [8]. To overcome these challenges, deep learning and artificial intelligence (AI) have emerged as essential tools for automating and enhancing dermatological diagnosis [9,10].

Recent developments in computer vision, especially convolutional neural networks (CNNs) and deep learning models, have shown enormous promise for medical image analysis, particularly in dermatology [11,12,13,14,15,16]. AI methods have demonstrated an impressive ability to classify skin conditions with accuracy comparable to or exceeding that of skilled dermatologists [10,17]. Researchers have suggested that deep learning-based skin disease classification systems could reduce diagnostic effort in clinical settings, improve treatment planning, and enhance early detection [12,16,18,19]. However, several challenges persist, including dataset biases, class imbalance, and difficulty in distinguishing dermatological disorders that share visual similarities [13,19]. Standardizing datasets, improving model generalization, and incorporating domain-specific knowledge are all important steps toward advancing automated dermatological classification [14].

A variety of CNN architectures, including ResNet, VGG, and Inception models, have been explored in previous research for dermatology applications [12,17,20,21,22,23,24,25,26]. Despite their notable achievements in image classification, the efficacy of these systems often depends on large, meticulously annotated datasets [27]. Additionally, hybrid approaches that combine CNNs with recurrent neural networks (RNNs) or attention mechanisms, as well as lightweight designs such as MobileNet, have been proposed to increase classification accuracy and processing efficiency [15,20,28]. More recently, transformer-based models have demonstrated improved performance in skin disease diagnosis compared to traditional architectures. One deep learning approach combined Vision Transformers, Swin Transformers, and DINOv2 with explainable AI tools to enhance classification transparency and support diagnostic applications [29]. DermaTransNet integrated MaxViT and U-Net architectures with attention mechanisms for precise skin layer segmentation across multiple resolutions [30]. YoTransViT merged ViT-, CNN-, and YOLOv8-based segmentation techniques to enable robust skin disease classification and real-time diagnostic applications [31]. However, recent research indicates that convolutional neural network-based models still struggle with the high inter-class similarity and intra-class variability found in skin disease datasets [23,24,32].

Several benchmark datasets exist for skin disease diagnosis, but many classes remain under-represented, with few samples available for these categories. One of the biggest challenges is that many disease patterns appear visually similar, making it difficult for models, as well as doctors, to identify them accurately. As a result, misclassification is a significant issue, and further analysis by doctors is often required for a proper diagnosis. Although several techniques exist to improve model accuracy, models still struggle to correctly identify diseases that share similar visual patterns [33,34].

To address these misclassification challenges, we apply YOLO11, a recent deep learning model from the YOLO family, to the classification of dermatological disorders. By integrating C3k2 blocks and the C2PSA module, YOLO11 enhances feature extraction, localization precision, and classification efficacy, offering improvements over previous YOLO versions [32,35,36]. The model was evaluated on a dataset of 900 high-resolution images spanning nine distinct skin disease classes, where it achieved improved performance compared to established architectures including YOLOv8, VGG16, and ResNet50. Because YOLO11 is a newly developed model, to the best of our knowledge there has been no prior research applying it to skin disease classification; therefore, direct comparison with existing studies was not feasible.

2. Methodology

2.1. Dataset Collection and Preparation

2.1.1. Dataset

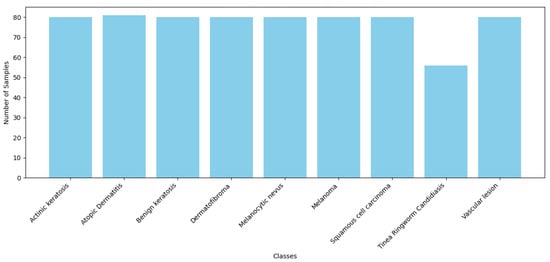

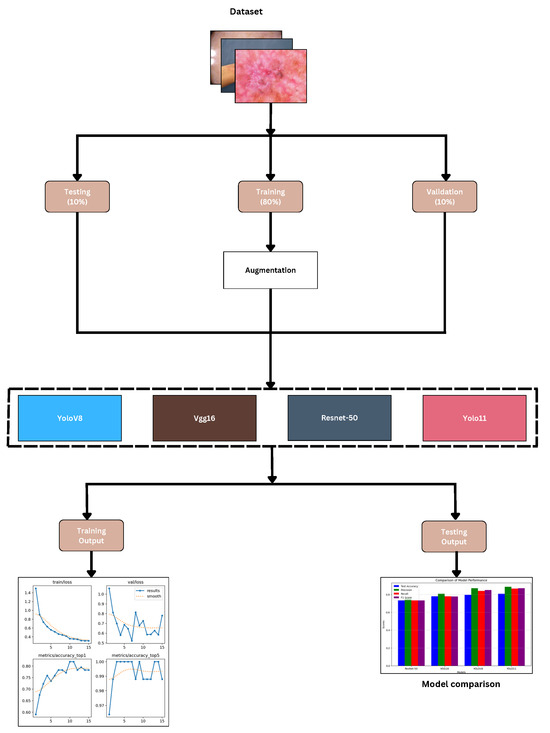

The study used 900 high-resolution dermatological images acquired from Kaggle, covering nine distinct skin disorders, as shown in Figure 1 and Figure 2. To ensure a systematic and objective assessment, the dataset was divided into three parts, 80% for training, 10% for validation, and 10% for testing, as illustrated in Figure 3. This split keeps the validation and test images separate from the training data, so that model tuning and final evaluation are performed on unseen images. We also performed cross-validation to help detect overfitting of the models. The following categories of skin conditions were included in the classification task:

Figure 1.

Sample images of skin disorders from the dataset.

Figure 2.

Number of samples per class in training dataset.

Figure 3.

Workflow of research.

- Actinic Keratosis: A type of pre-cancerous skin lesion that can develop due to prolonged exposure to ultraviolet (UV) radiation from the sun.

- Atopic Dermatitis: A persistent inflammatory skin disorder.

- Benign Keratosis: A non-cancerous skin growth.

- Dermatofibroma: A benign skin tumor.

- Melanocytic Nevus: Commonly known as a mole, a benign proliferation of melanocytes.

- Melanoma: A skin cancer originating from melanocytes.

- Squamous Cell Carcinoma: A type of skin cancer that arises from squamous epithelial cells.

- Tinea (Ringworm) Candidiasis: A fungal skin infection.

- Vascular Lesion: An abnormal clustering of blood vessels in the skin.
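The 80/10/10 division described above can be performed per class (stratified), so that each of the nine classes appears in the training, validation, and test sets. The sketch below is only an illustration: the function name, the fixed seed, and per-class rounding are our assumptions, and the labels are hypothetical (100 images per class), since the actual per-class counts are shown only in Figure 2.

```python
import random
from collections import defaultdict

# The nine classes listed above.
CLASSES = [
    "Actinic Keratosis", "Atopic Dermatitis", "Benign Keratosis",
    "Dermatofibroma", "Melanocytic Nevus", "Melanoma",
    "Squamous Cell Carcinoma", "Tinea Ringworm Candidiasis", "Vascular Lesion",
]

def stratified_split(labels, train_frac=0.8, val_frac=0.1, seed=42):
    """Split sample indices into train/val/test lists, class by class.

    Each class is shuffled independently and cut at the requested fractions;
    whatever remains after the train and validation cuts goes to the test set.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_train = round(train_frac * len(idxs))
        n_val = round(val_frac * len(idxs))
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]
    return train, val, test

# Hypothetical balanced labels: 100 images per class, 900 in total.
labels = [c for c in CLASSES for _ in range(100)]
train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting before any augmentation is a common precaution, since it keeps altered copies of evaluation images out of the training set.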

2.1.2. Dataset Augmentation

We used Roboflow to apply data augmentation techniques intended to improve model generalization and reduce overfitting. The augmentations were chosen to mimic real-world variation in dermatological imaging, with the aim of making the trained models more reliable. Random horizontal and vertical flips were applied to produce a range of viewpoints, together with 90° clockwise, 90° counterclockwise, and upside-down (180°) rotations to reflect different camera orientations. Zoom-based cropping, from 0% up to a maximum magnification of 20%, accounted for scale fluctuations, and random rotation between −45° and +45° increased rotational invariance. To replicate different lighting and illumination conditions, brightness was varied from −20% to +20% and exposure from −15% to +15%.
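To make the reported ranges concrete, the following sketch samples one set of augmentation parameters and applies the simple geometric and brightness operations to a small grayscale grid. It does not reproduce Roboflow's implementation: the 50% flip probabilities, uniform sampling within each range, and the multiplicative brightness model are our assumptions, and arbitrary-angle rotation, zoom-cropping, and exposure are only sampled here, not applied.

```python
import random

# Ranges reported above; uniform sampling within each range is assumed.
CONTINUOUS_RANGES = {
    "rotation_deg": (-45.0, 45.0),   # random rotation
    "crop_zoom": (0.0, 0.20),        # zoom-based crop, up to 20%
    "brightness": (-0.20, 0.20),     # relative brightness change
    "exposure": (-0.15, 0.15),       # relative exposure change
}

def sample_augmentation(rng):
    """Draw one set of augmentation parameters within the reported ranges."""
    params = {
        "hflip": rng.random() < 0.5,  # assumed 50% probability
        "vflip": rng.random() < 0.5,  # assumed 50% probability
        # 90-degree clockwise turns: 0 none, 1 = 90 CW, 2 = upside down, 3 = 90 CCW
        "rot90_k": rng.choice([0, 1, 2, 3]),
    }
    for name, (lo, hi) in CONTINUOUS_RANGES.items():
        params[name] = rng.uniform(lo, hi)
    return params

def rot90_cw(img):
    """Rotate a 2D grid (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def apply_simple(img, params):
    """Apply flips, 90-degree turns and brightness to a grayscale grid in [0, 255].

    Arbitrary-angle rotation, zoom-cropping and exposure are sampled above but
    would be handled by an image library; they are not applied here.
    """
    out = [row[:] for row in img]
    if params["hflip"]:
        out = [row[::-1] for row in out]
    if params["vflip"]:
        out = out[::-1]
    for _ in range(params["rot90_k"]):
        out = rot90_cw(out)
    scale = 1.0 + params["brightness"]  # assumed multiplicative brightness model
    return [[min(255, max(0, round(px * scale))) for px in row] for row in out]
```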

To address the shortage of samples in under-represented classes, we applied augmentation to increase the number of samples in these classes, ensuring a more balanced and effective training process. Additionally, we separated a portion of the dataset from the main data to create an independent evaluation set, allowing for a more reliable and unbiased assessment of the model’s performance.
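One simple way to plan such class balancing is to compute how many extra samples each class needs to reach the size of the largest class. The sketch below assumes that target; the function names and the class counts are hypothetical and do not reflect the actual dataset.

```python
import math

def augmentation_plan(class_counts, target=None):
    """Extra samples each class needs to reach `target` (default: the largest class)."""
    if target is None:
        target = max(class_counts.values())
    return {cls: max(0, target - n) for cls, n in class_counts.items()}

def variants_per_image(n_original, n_extra):
    """Augmented variants to create from each original image (rounded up)."""
    if n_extra <= 0:
        return 0
    return math.ceil(n_extra / n_original)

# Hypothetical training-set counts, for illustration only.
counts = {"Melanoma": 100, "Dermatofibroma": 60, "Vascular Lesion": 30}
plan = augmentation_plan(counts)
```

Because rounding up can overshoot the target (30 images × 3 variants gives 90 new samples when only 70 are needed), only the required number of generated variants would be kept.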

To guarantee uniformity among the model input layers, each image was resized to a constant 224-by-224 pixel resolution.

These preparation techniques aimed to enhance the models’ generalization capacity, robustness to variation, and stability when applied to new data. The enlarged dataset is intended to improve the accuracy and dependability of skin disease classification by letting deep learning algorithms learn intricate dermatological traits and variations.

2.2. Performance Metrics

We evaluated the classification models using metrics commonly used in machine learning and deep learning classification systems. Together, these metrics give a more comprehensive picture of how effectively each model distinguishes between diseases.

- Precision: The proportion of predicted positive cases that are truly positive, TP/(TP + FP), where TP and FP denote true positives and false positives. Fewer false positives mean higher precision, which is vital in medical diagnosis to prevent needless patient anxiety.

- Recall: The proportion of actual positive cases that the model correctly identifies, TP/(TP + FN), where FN denotes false negatives. In dermatological classification, high recall is required to reduce false negatives and ensure that cases of skin disorders are not missed.

- F1 Score: The harmonic mean of precision and recall, 2 × Precision × Recall/(Precision + Recall), providing a balanced assessment of a model’s performance, particularly under class imbalance. It ensures that the evaluation considers both false negatives and false positives.

Taken together, these metrics show the performance of the YOLO11 model relative to the benchmark models VGG16, ResNet-50, and YOLOv8, providing a thorough and reliable evaluation that highlights the strengths and weaknesses of each architecture in the classification of skin diseases.
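The three definitions above can be computed per class from paired true and predicted labels. The sketch below additionally assumes macro averaging (an unweighted mean over classes) to summarize the nine classes as single numbers; the text does not state which averaging was used, and the function names are hypothetical.

```python
from collections import Counter

def per_class_prf(y_true, y_pred):
    """Return {class: (precision, recall, f1)} from paired true/predicted labels."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    predicted = Counter(y_pred)  # TP + FP per class
    actual = Counter(y_true)     # TP + FN per class
    scores = {}
    for c in labels:
        precision = tp[c] / predicted[c] if predicted[c] else 0.0
        recall = tp[c] / actual[c] if actual[c] else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[c] = (precision, recall, f1)
    return scores

def macro_average(scores):
    """Unweighted mean of per-class precision, recall and F1."""
    n = len(scores)
    return tuple(sum(s[i] for s in scores.values()) / n for i in range(3))
```

For example, with true labels `["mel", "mel", "nev", "nev"]` and predictions `["mel", "nev", "nev", "nev"]`, the "mel" class has precision 1.0 and recall 0.5, while "nev" has precision 2/3 and recall 1.0.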

2.3. Proposed Model

YOLO11 Architecture

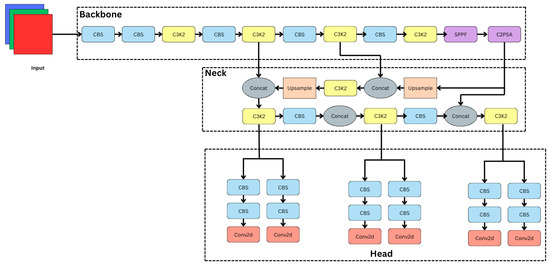

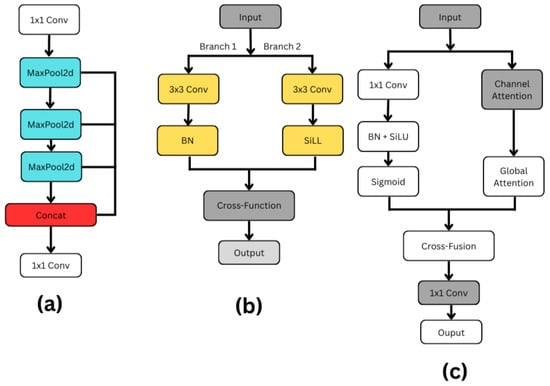

As shown in Figure 4, the YOLO11 architecture used in this study provides a deep learning framework for the classification of dermatological diseases. Its C3k2 blocks, together with the C2PSA module, improve multi-scale representation, spatial attention, and feature extraction. The architecture consists of three fundamental elements, the backbone, neck, and head, which handle hierarchical feature extraction, feature fusion, and classification, respectively.

Figure 4.

YOLO11 architecture.

2.3.1. Backbone: Hierarchical Feature Extraction

The backbone is critical for extracting a range of features, from low-level to high-level, from the input dermatological images. It consists of C3k2 blocks, multiple CBS (Convolution–BatchNorm–SiLU) layers, and dedicated feature enhancement modules:

- CBS Layers: These layers perform a convolution followed by batch normalization and the SiLU activation function, which stabilizes training and supports effective feature learning.

- C3k2 Blocks: As illustrated in Figure 5b, YOLO11 replaces the C2f block of earlier YOLO versions with the C3k2 block, which strongly influences the efficient feature extraction of the YOLO11 design. To improve information flow and computational efficiency, this block splits the input feature map into two distinct branches. Branch 1 applies a 3 × 3 convolution with a stride of 2, preserving significant structural information while lowering the spatial dimensions; batch normalization (BN) then normalizes the feature distributions, stabilizing and speeding up training. Branch 2 also executes a 3 × 3 convolution with a stride of 2 but applies a SiLU (Sigmoid Linear Unit) activation instead of batch normalization, providing a computationally efficient non-linearity. Once both branches have been processed, their results are combined into a refined output. Compared with earlier designs, this gives the C3k2 block faster processing, better parameter efficiency, and better multi-scale feature extraction. The c3k option adds flexibility: the C3k2 block can function as a conventional bottleneck (when c3k = False) or as an enhanced C3 module (when c3k = True), which improves detection performance in YOLO11 [35,36].

Figure 5. Architecture components: (a) SPPF; (b) C3k2; (c) C2PSA.

- SPPF (Spatial Pyramid Pooling Fast): As shown in Figure 5a, the SPPF module is designed to capture multi-scale spatial features efficiently. The process begins with a 1 × 1 convolution, which adjusts or reduces the number of feature channels without affecting the spatial resolution of the input. The feature map then passes through a sequence of three MaxPool2d operations; each uses a stride of 1 with padding, so the spatial size is preserved while the effective receptive field grows with every pass, retaining the strongest responses. The output of every max-pooling operation is saved, creating multiple branches that summarize the input at increasingly coarse scales. Once all pooling operations are completed, these intermediate feature maps, along with the output of the initial 1 × 1 convolution, are concatenated along the channel dimension. This concatenation fuses information from different scales, giving the network a richer understanding of both fine and coarse details in the input. A final 1 × 1 convolution is then applied to the concatenated output to integrate and compress the multi-scale features into a cohesive representation, refining the features and potentially reducing the number of output channels for the next stages of the model. Overall, the SPPF module is a lightweight yet powerful structure that captures broad context with minimal computational overhead, making it especially useful in real-time applications such as object detection [35,36].

- C2PSA (Cross Stage Partial with Spatial Attention): As can be seen in Figure 5c, the C2PSA block in YOLO11 enhances feature extraction by incorporating spatial and channel attention. The input feature map is split into two parallel branches. In the main branch, a 1 × 1 convolution compresses the data, followed by batch normalization and SiLU activation for stability and non-linearity, and finally a sigmoid activation that generates a spatial attention map. The second branch gathers broad contextual information using global attention and emphasizes important feature channels using channel attention. A cross-fusion step combines the results from both branches, allowing the model to use spatial and channel-wise information at the same time. A final set of 1 × 1 convolutions then refines the combined features before they pass to the next stage. By combining precise spatial focus with enhanced channel attention, the C2PSA block increases YOLO11’s ability to identify small, occluded, or complex objects, improving detection accuracy compared with prior YOLO versions [35,36].
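The pooling path of SPPF can be illustrated with a small pure-Python sketch. We assume a 5 × 5 kernel with stride 1 and padding of k // 2, which is our understanding of the Ultralytics SPPF configuration; the surrounding 1 × 1 convolutions are omitted and the function names are hypothetical. Chaining three 5 × 5 pools yields maps equivalent to single 5 × 5, 9 × 9, and 13 × 13 pools, which is how SPPF obtains multi-scale context from three cheap operations.

```python
def max_pool_same(grid, k):
    """k x k max pooling with stride 1 on a 2D grid (list of rows).

    Out-of-range positions are ignored, which is equivalent to padding with
    -inf, so the output has the same height and width as the input.
    """
    h, w, r = len(grid), len(grid[0]), k // 2
    return [
        [
            max(
                grid[a][b]
                for a in range(max(0, i - r), min(h, i + r + 1))
                for b in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]

def sppf_pooled_maps(grid, k=5):
    """Input plus the outputs of three chained k x k pools, as concatenated in SPPF."""
    maps = [grid]
    for _ in range(3):
        maps.append(max_pool_same(maps[-1], k))
    return maps
```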

2.3.2. Neck: Multi-Scale Feature Fusion

The neck refines and fuses the extracted features before classification, as shown in Figure 4. It consists of the following:

- Feature Concatenation: Intermediate feature maps from several backbone layers are concatenated to enhance the representation of dermatological patterns.

- Upsampling Operations: Low-resolution, semantically rich features are upsampled so they can be fused with higher-resolution features that retain fine details, supporting accurate classification of small-scale lesions.

- C3k2 Blocks: C3k2 blocks further refine the fused features and extract high-level representations, strengthening the network for classification.
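The upsample-then-concatenate pattern of the neck can be illustrated on toy feature maps stored as nested lists in (channels, height, width) order. This is a sketch assuming nearest-neighbour 2× upsampling, the mode we understand the Ultralytics YOLO configuration files to use; a real implementation operates on tensors, and the function names here are hypothetical.

```python
def upsample_nearest_2x(fmap):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map stored as nested lists."""
    return [
        [[v for v in row for _ in range(2)] for row in channel for _ in range(2)]
        for channel in fmap
    ]

def concat_channels(*fmaps):
    """Concatenate (C, H, W) maps along the channel axis; spatial sizes must match."""
    sizes = {(len(f[0]), len(f[0][0])) for f in fmaps}
    if len(sizes) != 1:
        raise ValueError(f"spatial sizes differ: {sorted(sizes)}")
    return [channel for f in fmaps for channel in f]

# A deep, low-resolution map (1 channel, 2x2) and a shallower map (2 channels, 4x4).
deep = [[[1, 2], [3, 4]]]
shallow = [[[0] * 4 for _ in range(4)], [[5] * 4 for _ in range(4)]]
fused = concat_channels(upsample_nearest_2x(deep), shallow)
```

Upsampling first is what makes the concatenation possible: channel-wise concatenation requires both maps to share the same height and width.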

2.3.3. Head: Classification and Prediction

The head is responsible for final classification and prediction, distinguishing among the disease categories, as illustrated in Figure 4. Its components are as follows:

- CBS Layers: These layers refine the feature maps while maintaining the spatial information required for classification.

- Conv2D Output Layers: The final Conv2D output layers generate the classification scores for each skin disorder.
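Raw class scores from an output layer are typically turned into predictions as follows: a softmax converts them to probabilities, and top-k accuracy (the top-1 and top-5 metrics shown for the YOLO models in Figures 8 and 9) counts a sample as correct when its true class is among the k highest-scoring classes. The sketch below uses plain Python lists in place of tensors, and the function names are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_accuracy(score_rows, targets, k):
    """Fraction of samples whose true class is among the k highest-scoring classes."""
    hits = 0
    for scores, target in zip(score_rows, targets):
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        hits += target in ranked[:k]
    return hits / len(targets)
```

With nine classes, top-5 accuracy is a lenient measure, so top-1 accuracy is the figure that corresponds to ordinary classification accuracy.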

2.3.4. Advantages of YOLO11

The C3k2 block and the C2PSA module give YOLO11 several advantages:

- The C3k2 block’s enhanced hierarchical feature extraction aids in the localization of dermatological patterns.

- By emphasizing the most informative spatial features, the C2PSA module makes classification more reliable.

- Combining SPPF and upsampling operations helps the model handle lesions of various sizes.

- Despite these architectural additions, YOLO11 remains computationally efficient enough for practical dermatological applications.

Because its enhanced architecture maintains processing efficiency while increasing classification accuracy, YOLO11 is a feasible approach for the classification of dermatological diseases.

2.4. State-of-the-Art Models

2.4.1. ResNet-50

ResNet-50 is a deep convolutional neural network designed to address the vanishing gradient problem through residual learning. The architecture is 50 layers deep, combining convolutional, batch normalization, pooling, and fully connected layers, and uses skip connections that let gradients flow efficiently through the network. Its residual blocks allow very deep networks to learn complex features without the loss of performance that affects plain deep networks, which has made ResNet-50 widely used for image recognition and medical image analysis. Given its effectiveness in feature extraction and classification, it is also a common choice for transfer learning.
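The residual learning idea can be summarized in two lines. Each block learns a residual mapping F with weights W_i and adds its input x back through the skip connection; applying the chain rule to a loss L shows why gradients vanish less easily, because the identity term I passes them through unchanged. This is the standard residual formulation, written here for a block whose input and output dimensions match.

```latex
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x},
\qquad
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
  = \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
    \left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right)
```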

2.4.2. VGG16

VGG16 is a convolutional neural network with a simple yet strong design of sixteen weight layers (13 convolutional and 3 fully connected). It stacks small 3 × 3 convolutional kernels and uses max pooling layers to progressively reduce the spatial dimensions, building a hierarchy of spatial features. Although it achieves high classification accuracy, its roughly 138 million parameters make it demanding in memory and computing resources. It remains popular in transfer learning, as its detailed feature representations make it useful for many image-based tasks, such as diagnosing skin conditions and categorizing medical images.
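The parameter count of VGG16 can be reproduced from its standard layer configuration (the ImageNet setting: a 224 × 224 RGB input and 1000 output classes). The sketch below is a worked check, not the configuration used in this study; how the classifier head was adapted to nine classes here is not stated, so `num_classes` is left as a parameter.

```python
# Standard VGG16 layout: integers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def vgg16_param_counts(num_classes=1000, in_channels=3, image_size=224):
    """Return (conv_params, fc_params) for VGG16, counting weights and biases."""
    conv_params, c_in, size = 0, in_channels, image_size
    for v in VGG16_CFG:
        if v == "M":
            size //= 2  # each pooling halves the spatial resolution
        else:
            conv_params += c_in * v * 3 * 3 + v  # 3x3 kernels plus one bias per filter
            c_in = v
    # Flattened features (512 x 7 x 7 for a 224 x 224 input) feed two 4096-unit layers.
    dims = [c_in * size * size, 4096, 4096, num_classes]
    fc_params = sum(a * b + b for a, b in zip(dims, dims[1:]))
    return conv_params, fc_params

conv, fc = vgg16_param_counts()
print(f"conv: {conv:,}  fc: {fc:,}  total: {conv + fc:,}")
# prints: conv: 14,714,688  fc: 123,642,856  total: 138,357,544
```

The breakdown shows why VGG16 is memory-hungry: about 123.6 million of its parameters sit in the three fully connected layers, against about 14.7 million in the thirteen convolutional layers.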

2.4.3. YOLOv8

YOLOv8, developed for fast and precise visual recognition, represents the state of the art in real-time object detection. Unlike traditional anchor-based detection systems, YOLOv8 uses an anchor-free approach that lowers processing requirements while preserving accuracy. Feature extraction is performed by a CSPDarknet-based backbone, and the C2f module is included to increase efficiency. The model supports several visual tasks, including object detection, segmentation, and image classification. In dermatological applications, YOLOv8 has been used for lesion recognition and segmentation, improving real-time performance and the efficacy of automated skin disease analysis.

2.5. Experimental Setup

This research was conducted on Google Colab, using an environment with 13 GB of random access memory (RAM) and an Intel Xeon central processing unit (CPU) with two virtual CPUs (vCPUs). This configuration was chosen to emulate limited-resource conditions, ensuring that the proposed approach is feasible for practitioners and researchers with only basic computational resources. The study thus demonstrates the potential use of these models where high-end infrastructure is not readily accessible, which also improves the reproducibility of the results and their relevance across different working settings.

3. Results and Discussion

3.1. Evaluation

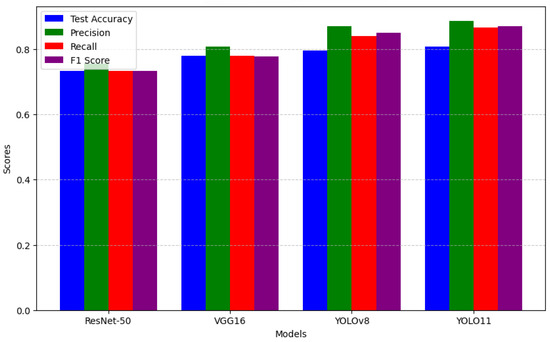

The evaluation reveals that YOLO11 performs slightly better than the other models across key performance metrics in the classification task. As shown in Table 1, YOLO11 outperforms ResNet50, VGG16, and YOLOv8, with a test accuracy of 80.72%, precision of 88.7%, recall of 86.7%, and an F1 score of 87.0%. Its higher precision and recall show a better balance between identifying true positives and avoiding false positives and false negatives, although the improvement over YOLOv8 is small.

Table 1.

The following table shows the performance of the models.

As listed in Table 2, all models were trained for 25 epochs using the AdamW optimizer with a learning rate of 1 × 10⁻⁴, weight decay of 0.0005, momentum of 0.937, and a batch size of 32. With these settings all models performed well, and YOLO11 achieved the best results.

Table 2.

Hyperparameter settings. The following table presents the hyperparameter settings used for training the models.
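To clarify how these hyperparameters interact, the sketch below applies one AdamW update to a single scalar weight. Several points are assumptions on our part: that the reported momentum of 0.937 acts as Adam’s first-moment coefficient β1 (our understanding of how Ultralytics maps `momentum` when an Adam-family optimizer is selected), and the values β2 = 0.999 and ε = 1e-8, which are common defaults not reported in Table 2. The dictionary keys mirror Ultralytics `train()` argument names; the code is illustrative and is not the authors’ training script.

```python
import math

# Training settings reported in Table 2; imgsz reflects the 224 x 224 resizing step.
HPARAMS = {
    "epochs": 25,
    "batch": 32,
    "optimizer": "AdamW",
    "lr0": 1e-4,
    "weight_decay": 0.0005,
    "momentum": 0.937,  # assumed to act as beta1 for AdamW
    "imgsz": 224,
}

def adamw_step(p, g, m, v, t, lr, weight_decay, beta1, beta2=0.999, eps=1e-8):
    """One AdamW update of scalar weight p with gradient g at step t (t >= 1).

    Weight decay is decoupled: it shrinks p directly instead of being added to g.
    Returns the updated (p, m, v).
    """
    p = p * (1 - lr * weight_decay)
    m = beta1 * m + (1 - beta1) * g      # first-moment (momentum) estimate
    v = beta2 * v + (1 - beta2) * g * g  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)         # bias corrections for early steps
    v_hat = v / (1 - beta2 ** t)
    p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
    return p, m, v

p, m, v = adamw_step(0.0, 1.0, 0.0, 0.0, t=1, lr=HPARAMS["lr0"],
                     weight_decay=HPARAMS["weight_decay"], beta1=HPARAMS["momentum"])
```

After bias correction, the first step moves the weight by roughly the learning rate regardless of the gradient’s magnitude, which is one reason a small learning rate such as 1 × 10⁻⁴ is typical for fine-tuning with AdamW.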

3.2. Discussion

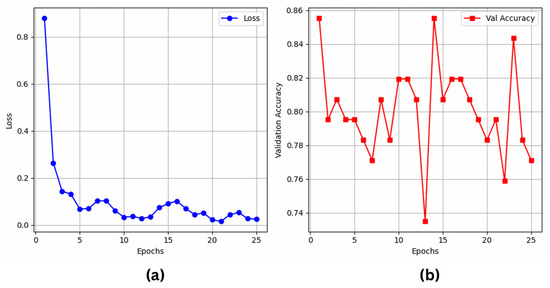

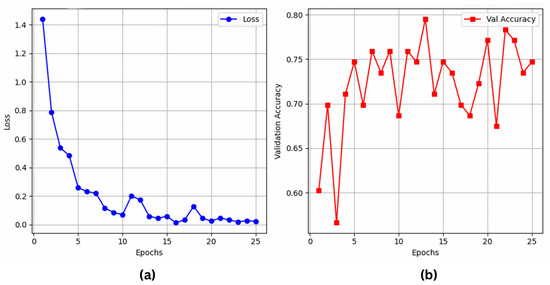

The results indicate that YOLO11 outperforms YOLOv8, ResNet50, and VGG16 in classification, with 80.72% accuracy and an F1 score of 87.0%. Although YOLOv8 performs well, with 87.0% precision, YOLO11 is a more robust classifier because it better balances precision (88.7%) and recall (86.7%). As shown in Figure 6a,b, ResNet50 is competitive with 79.07% accuracy, although it falls below the YOLO-based models. VGG16, by contrast, shows clear limitations on this classification task, reaching 72.09% accuracy, as illustrated in Figure 7a,b.

Figure 6.

ResNet50’s (a) training loss; (b) validation accuracy over epochs.

Figure 7.

VGG16’s (a) training loss; (b) validation accuracy over epochs.

These results suggest that YOLO11 learns more discriminative features and is more robust on this dataset, making it a suitable candidate for real-world dermatological applications. Future studies could investigate its efficiency and scalability in other settings and domains.

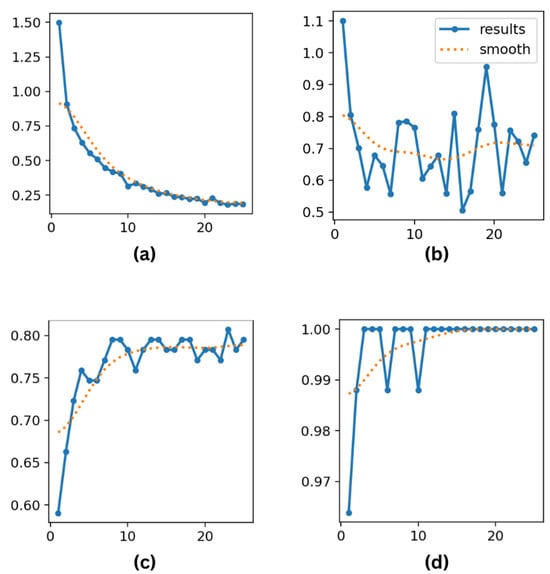

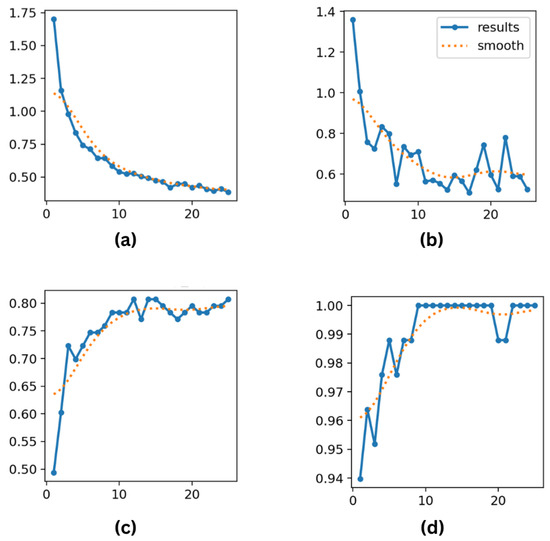

Although their primary purpose is object detection, YOLOv8 and YOLO11 both perform well in classification tasks. As shown in Figure 8a–d for YOLO11 and Figure 9a–d for YOLOv8, YOLOv8 achieves a test accuracy of 79.51%, with a precision of 87.0%, recall of 84.0%, and F1 score of 85.0%. YOLO11 improves on each of these measures, obtaining the best precision (88.7%) and recall (86.7%), which indicates more precise classification and better generalization. This modest gain is likely due to YOLO11’s improved architecture and optimization methods, which may enhance feature extraction and reduce overfitting in classification tasks.

Figure 8.

(a) Training loss; (b) validation loss; (c) top-1 accuracy; and (d) top-5 accuracy metrics for YOLO11 over 25 epochs.

Figure 9.

(a) Training loss; (b) validation loss; (c) top-1 accuracy; and (d) top-5 accuracy metrics for YOLOv8 over 25 epochs.

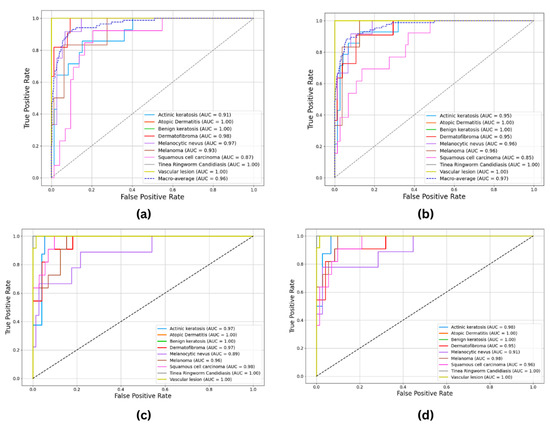

The ROC curves and AUC values for ResNet50 (Figure 10a), VGG16 (Figure 10b), YOLO11 (Figure 10c), and YOLOv8 (Figure 10d) provide a visual comparison of classification performance across models. YOLO11 achieved per-class AUC scores ranging from 0.89 to 1.00, including perfect scores (1.00) for atopic dermatitis, benign keratosis, vascular lesion, and tinea ringworm candidiasis. High AUC values were also recorded for melanoma (0.96), squamous cell carcinoma (SCC) (0.98), and dermatofibroma (0.97), indicating strong and consistent performance across all classes.

Figure 10.

ROC curves with per-class AUC values: (a) ResNet50; (b) VGG16; (c) YOLO11; (d) YOLOv8.
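Per-class AUC values in a multi-class setting follow the one-vs-rest convention: for each class, that class is treated as positive and all others as negative. The dependency-free sketch below uses the rank interpretation of AUC (the probability that a randomly chosen positive image scores higher than a randomly chosen negative one, with ties counted as half). The function names are hypothetical; in practice a library routine such as scikit-learn’s `roc_auc_score` would normally be used.

```python
def binary_auc(scores, labels):
    """Area under the ROC curve via its rank interpretation.

    Equals the probability that a randomly chosen positive sample scores higher
    than a randomly chosen negative one, counting ties as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(prob_rows, targets, n_classes):
    """Per-class AUC: class c is positive, all other classes are negative."""
    return [
        binary_auc([row[c] for row in prob_rows], [t == c for t in targets])
        for c in range(n_classes)
    ]
```

The pairwise loop is quadratic in the number of samples, which is acceptable for a test set of about 90 images.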

YOLO11 demonstrates slightly higher classification accuracy and precision compared to YOLOv8, making it a suitable choice for applications where marginal improvements in performance are critical. While YOLOv8 continues to offer acceptable and competitive results, the observed enhancements in YOLO11 suggest its potential for achieving state-of-the-art outcomes in specific contexts. As illustrated in Figure 11, these findings underscore the importance of selecting models based on available computational resources and the specific requirements of a given task while also highlighting the adaptability of YOLO-based architectures in addressing diverse classification challenges.

Figure 11.

Performance comparison bar chart.

4. Conclusions and Future Research

This study evaluates the effectiveness of YOLO11 in comparison with three state-of-the-art deep learning architectures (YOLOv8, VGG16, and ResNet50) for skin disease classification. YOLO11 achieved the highest performance among the models, with an accuracy exceeding 80%. Specifically, YOLO11’s test accuracy (80.72%) was approximately 1.02 times that of ResNet50 (79.07%), 1.12 times that of VGG16 (72.09%), and 1.02 times that of YOLOv8 (79.51%). YOLO11’s key strengths lie in its architectural design, particularly the integration of the C2PSA module and C3k2 blocks, which enhance feature extraction, as well as its improved computational efficiency [35,36]. This study addresses significant challenges in dermatological image classification, particularly diseases that exhibit visual similarities but can still be individually identified. YOLO11 helps reduce misclassification, supporting the advancement of more reliable automated diagnostic systems.
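The relative comparisons between models are simple ratios of the reported test accuracies. The short check below recomputes them from the accuracies reported in this study, together with the absolute differences in percentage points; the function name is hypothetical.

```python
# Test accuracies (%) reported in this study.
ACCURACY = {"YOLO11": 80.72, "YOLOv8": 79.51, "ResNet50": 79.07, "VGG16": 72.09}

def compare_to(reference, table):
    """Accuracy ratio and percentage-point gap between a reference model and each other model."""
    base = table[reference]
    return {
        name: (round(base / acc, 2), round(base - acc, 2))
        for name, acc in table.items()
        if name != reference
    }

for name, (ratio, gap) in compare_to("YOLO11", ACCURACY).items():
    print(f"YOLO11 vs {name}: {ratio:.2f}x, +{gap:.2f} points")
```

Expressed in percentage points, the margins over YOLOv8 (1.21) and ResNet50 (1.65) are small, whereas the gap to VGG16 (8.63) is substantial.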

The high rate of visual similarity between certain skin diseases often leads to misclassifications, making it difficult to consistently achieve high accuracy. As a result, progress in this field tends to be small and incremental rather than groundbreaking. Although the performance of YOLO11 was not groundbreaking, it was nonetheless noteworthy and meaningful. Future work should aim to validate YOLO11 on larger, clinically diverse datasets that include rare skin conditions. Moreover, exploring model compression and optimization strategies could make YOLO11 more suitable for deployment in mobile and telemedicine applications. In conclusion, while YOLO11 presents promising advancements in model architecture and efficiency, its impact should be understood as a part of the gradual evolution of AI-based dermatological diagnostics as well as clinical integration.

Author Contributions

Conceptualization, R.A.D.; methodology, R.A.D.; software, R.A.D.; validation, R.A.D. and S.B.; formal analysis, R.A.D.; investigation, R.A.D.; resources, R.A.D.; data curation, R.A.D.; writing—original draft preparation, R.A.D.; writing—review and editing, R.A.D. and S.B.; visualization, R.A.D. and S.B.; supervision, S.B.; project administration, R.A.D. and S.B.; funding acquisition, S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The experimental study reported here uses a dataset collected via Kaggle. It contains 900 dermatoscopic images of various individuals worldwide, representing a diverse range of skin diseases. The following link provides public access to this dataset: https://www.kaggle.com/datasets/riyaelizashaju/skin-disease-classification-image-dataset (accessed on 1 January 2025).

Acknowledgments

This study had no external funding. We also appreciate the use of publicly available data that supported this effort.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Richard, M.; Paul, C.; Nijsten, T.; Gisondi, P.; Salavastru, C.; Taieb, C.; Trakatelli, M.; Puig, L.; Stratigos, A.; EADV Burden of Skin Diseases Project Team. Prevalence of most common skin diseases in Europe: A population-based study. J. Eur. Acad. Dermatol. Venereol. 2022, 36, 1088–1096. [Google Scholar] [CrossRef] [PubMed]

- Moustaqim-Barrette, A.; Conte, S.; Kelly, A.; Lebeau, J.; Alli, S.; Lagacé, F.; Litvinov, I.V. Evaluation of weather and environmental factors and their association with cutaneous melanoma incidence: A national ecological study. JAAD Int. 2024, 16, 264–271. [Google Scholar] [CrossRef] [PubMed]

- Arnold, M.; Singh, D.; Laversanne, M.; Vignat, J.; Vaccarella, S.; Meheus, F.; Cust, A.E.; De Vries, E.; Whiteman, D.C.; Bray, F. Global burden of cutaneous melanoma in 2020 and projections to 2040. JAMA Dermatol. 2022, 158, 495–503. [Google Scholar] [CrossRef]

- Behara, K.; Bhero, E.; Agee, J.T. AI in dermatology: A comprehensive review into skin cancer detection. PeerJ Comput. Sci. 2024, 10, e2530. [Google Scholar] [CrossRef]

- Flohr, C.; Hay, R. Putting the burden of skin diseases on the global map. Br. J. Dermatol. 2021, 184, 189–190. [Google Scholar] [CrossRef] [PubMed]

- De, A.; Mishra, N.; Chang, H.T. An approach to the dermatological classification of histopathological skin images using a hybridized CNN-DenseNet model. PeerJ Comput. Sci. 2024, 10, e1884. [Google Scholar] [CrossRef]

- Thomsen, I.M.N.; Heerfordt, I.M.; Karmisholt, K.E.; Mogensen, M. Detection of cutaneous malignant melanoma by tape stripping of pigmented skin lesions–A systematic review. Skin Res. Technol. 2023, 29, e13286. [Google Scholar] [CrossRef]

- Garrison, Z.R.; Hall, C.M.; Fey, R.M.; Clister, T.; Khan, N.; Nichols, R.; Kulkarni, R.P. Advances in early detection of melanoma and the future of at-home testing. Life 2023, 13, 974. [Google Scholar] [CrossRef]

- Aksoy, S.; Demircioglu, P.; Bogrekci, I. Advanced Artificial Intelligence Techniques for Comprehensive Dermatological Image Analysis and Diagnosis. Dermato 2024, 4, 173–186. [Google Scholar] [CrossRef]

- Luo, N.; Zhong, X.; Su, L.; Cheng, Z.; Ma, W.; Hao, P. Artificial intelligence-assisted dermatology diagnosis: From unimodal to multimodal. Comput. Biol. Med. 2023, 165, 107413. [Google Scholar] [CrossRef]

- Zhang, B.; Zhou, X.; Luo, Y.; Zhang, H.; Yang, H.; Ma, J.; Ma, L. Opportunities and challenges: Classification of skin disease based on deep learning. Chin. J. Mech. Eng. 2021, 34, 112. [Google Scholar] [CrossRef]

- Qu, H.Q.; Kao, C.; Hakonarson, H. Implications of the non-neuronal cholinergic system for therapeutic interventions of inflammatory skin diseases. Exp. Dermatol. 2024, 33, e15181.

- Rusydiyah, E.; Novitasari, D.C.R.; Mushlihul, M.; Amin, A.N.A.; Nurrohman, H.F.; Haq, D.Z.; Putri, E.R.S. Skin cancer diagnosis system on object detection using various CNN YOLOv5 in Android mobile. J. Theor. Appl. Inf. Technol. 2024, 102, 1–11.

- Noronha, S.S.; Mehta, M.A.; Garg, D.; Kotecha, K.; Abraham, A. Deep learning-based dermatological condition detection: A systematic review with recent methods, datasets, challenges, and future directions. IEEE Access 2023, 11, 140348–140381.

- Ahmad, B.; Usama, M.; Ahmad, T.; Khatoon, S.; Alam, C.M. An ensemble model of convolution and recurrent neural network for skin disease classification. Int. J. Imaging Syst. Technol. 2022, 32, 218–229.

- Goswami, T.; Dabhi, V.K.; Prajapati, H.B. Skin disease classification from image-a survey. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 599–605.

- Minarno, A.E.; Lusianti, A.; Azhar, Y.; Wibowo, H. Classification of Skin Cancer Images Using Convolutional Neural Network with ResNet50 Pre-trained Model. JOIV Int. J. Inform. Vis. 2024, 8, 2013–2019.

- Akay, M.; Du, Y.; Sershen, C.L.; Wu, M.; Chen, T.Y.; Assassi, S.; Mohan, C.; Akay, Y.M. Deep learning classification of systemic sclerosis skin using the MobileNetV2 model. IEEE Open J. Eng. Med. Biol. 2021, 2, 104–110.

- Aziz, F.; Saputri, D.U.E. Efficient skin lesion detection using YOLOv9 network. J. Med. Inform. Technol. 2024, 2, 11–15.

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

- Sadik, R.; Majumder, A.; Biswas, A.A.; Ahammad, B.; Rahman, M.M. An in-depth analysis of Convolutional Neural Network architectures with transfer learning for skin disease diagnosis. Healthc. Anal. 2023, 3, 100143.

- Agarwal, R.; Godavarthi, D. Skin disease classification using CNN algorithms. EAI Endorsed Trans. Pervasive Health Technol. 2023, 9, 1–8.

- ElGhany, S.A.; Ibraheem, M.R.; Alruwaili, M.; Elmogy, M. Diagnosis of Various Skin Cancer Lesions Based on Fine-Tuned ResNet50 Deep Network. Comput. Mater. Contin. 2021, 68, 117–135.

- Anand, V.; Gupta, S.; Koundal, D.; Mahajan, S.; Pandit, A.K.; Zaguia, A. Deep learning based automated diagnosis of skin diseases using dermoscopy. Comput. Mater. Contin. 2022, 71, 1–16.

- Fan, J.; Kim, J.; Jung, I.; Lee, Y. A study on multiple factors affecting the accuracy of multiclass skin disease classification. Appl. Sci. 2021, 11, 7929.

- Li, H.; Pan, Y.; Zhao, J.; Zhang, L. Skin disease diagnosis with deep learning: A review. Neurocomputing 2021, 464, 364–393.

- Ahammed, M.; Al Mamun, M.; Uddin, M.S. A machine learning approach for skin disease detection and classification using image segmentation. Healthc. Anal. 2022, 2, 100122.

- Veni, N.K.; Deepapriya, B.; Vardhini, P.H.; Kalyani, B.; Sharmila, L. A Novel Method for Prediction of Skin Diseases Using Supervised Classification Techniques. Skin 2022, 20, 21.

- Mohan, J.; Sivasubramanian, A.; Ravi, V. Enhancing skin disease classification leveraging transformer-based deep learning architectures and explainable AI. Comput. Biol. Med. 2025, 190, 110007.

- Salam, A.A.; Akram, M.U.; Yousaf, M.H.; Rao, B. DermaTransNet: Where Transformer Attention Meets U-Net for Skin Image Segmentation. IEEE Access 2025, 13, 64305–64329.

- Saha, D.K.; Joy, A.M.; Majumder, A. YoTransViT: A transformer and CNN method for predicting and classifying skin diseases using segmentation techniques. Inform. Med. Unlocked 2024, 47, 101495.

- Sapkota, R.; Meng, Z.; Churuvija, M.; Du, X.; Ma, Z.; Karkee, M. Comprehensive performance evaluation of YOLO11, YOLOv10, YOLOv9 and YOLOv8 on detecting and counting fruitlet in complex orchard environments. arXiv 2024, arXiv:2407.12040.

- Alruwaili, M.; Mohamed, M. An Integrated Deep Learning Model with EfficientNet and ResNet for Accurate Multi-Class Skin Disease Classification. Diagnostics 2025, 15, 551.

- Daneshjou, R.; Vodrahalli, K.; Liang, W.; Novoa, R.A.; Jenkins, M.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in dermatology AI: Assessments using diverse clinical images. arXiv 2021, arXiv:2111.08006.

- Rao, S. YOLOv11 Architecture Explained: Next-Level Object Detection with Enhanced Speed and Accuracy. Medium. Oct 2024, 22.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).