Federated Learning Strategies for Atrial Fibrillation Detection

Abstract

1. Introduction

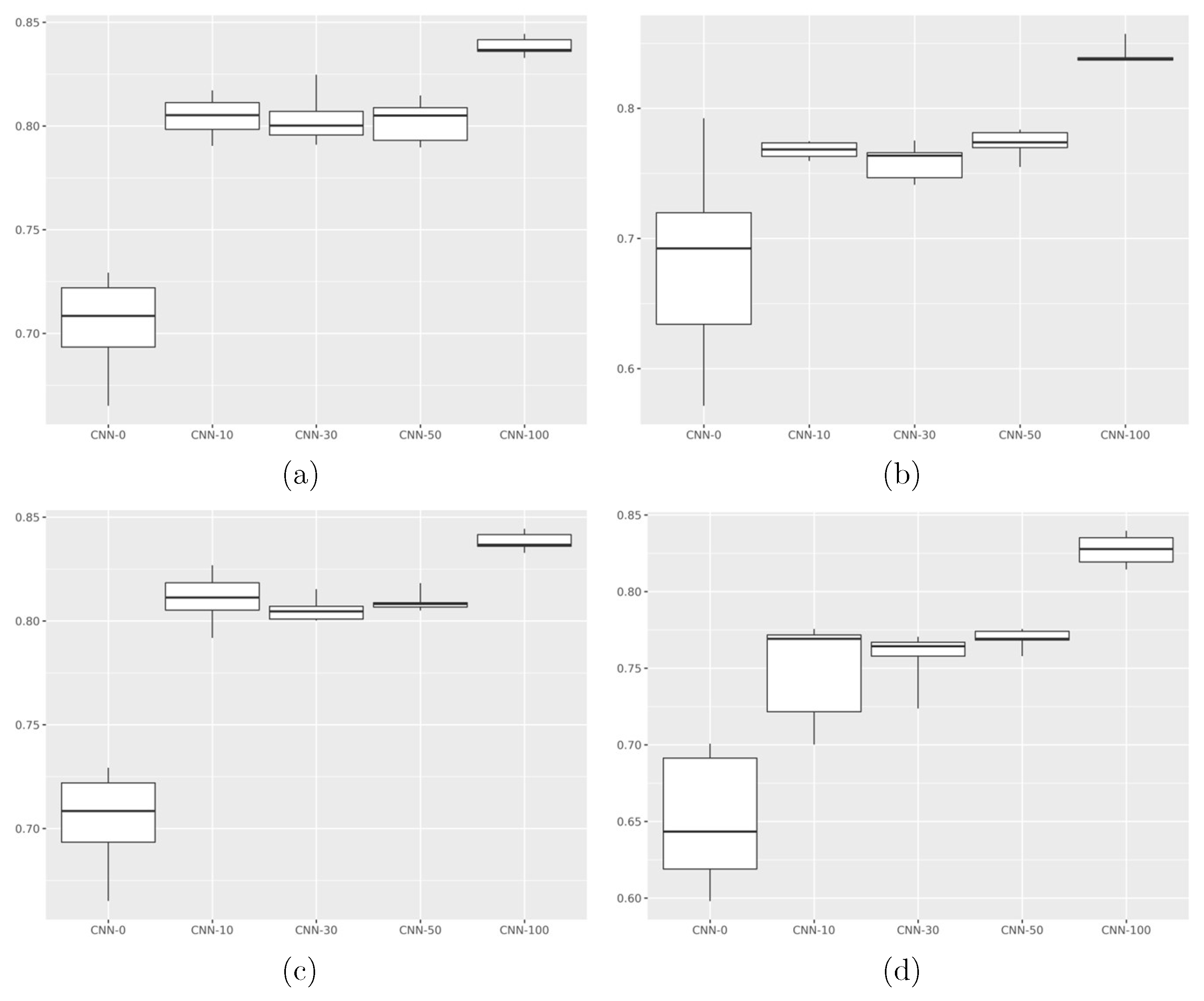

- How machine learning models perform in scenarios where data is not independently and identically distributed;

- That sharing a small amount of data makes a statistically significant impact on model performance;

- That federated models perform worse than their non-federated counterparts.

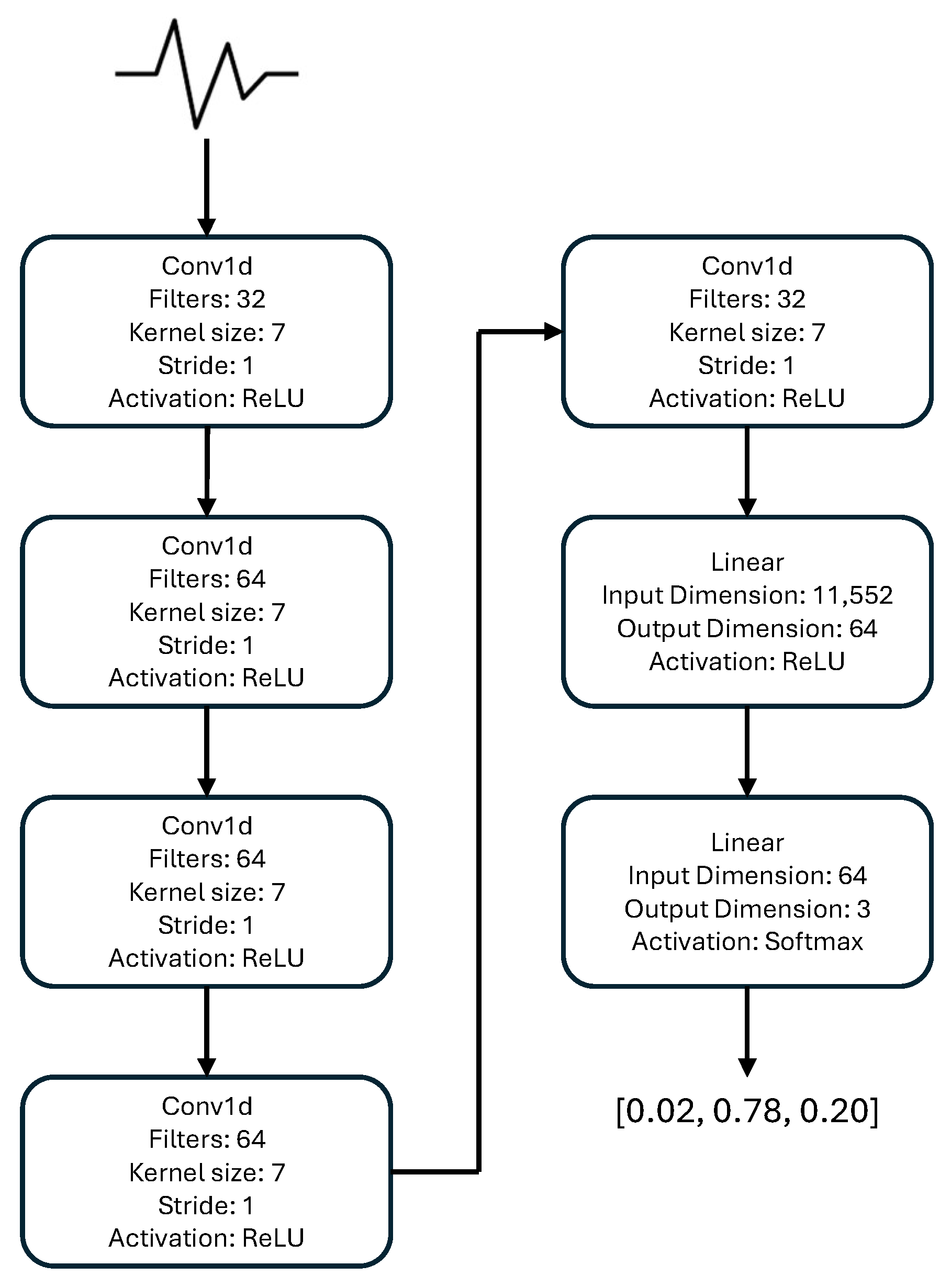

2. Materials and Methods

Statistical Analysis

3. Results

Explainability

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tsao, C.W.; Aday, A.W.; Almarzooq, Z.I.; Anderson, C.A.; Arora, P.; Avery, C.L.; Baker-Smith, C.M.; Beaton, A.Z.; Boehme, A.K.; Buxton, A.E.; et al. Heart disease and stroke statistics—2023 update: A report from the American Heart Association. Circulation 2023, 147, e93–e621.

2. De Bacquer, D.; De Backer, G.; Kornitzer, M.; Blackburn, H. Prognostic value of ECG findings for total, cardiovascular disease, and coronary heart disease death in men and women. Heart 1998, 80, 570–577.

3. Haider, S.S.; Bath, A. A bundle in the heart: Wolff-Parkinson-White syndrome presenting as cardiac arrest. Chest 2019, 156, A748.

4. Tison, G.H.; Zhang, J.; Delling, F.N.; Deo, R.C. Automated and interpretable patient ECG profiles for disease detection, tracking, and discovery. Circ. Cardiovasc. Qual. Outcomes 2019, 12, e005289.

5. Kashou, A.H.; Noseworthy, P.A.; Beckman, T.J.; Anavekar, N.S.; Cullen, M.W.; Angstman, K.B.; Sandefur, B.J.; Shapiro, B.P.; Wiley, B.W.; Kates, A.M.; et al. ECG interpretation proficiency of healthcare professionals. Curr. Probl. Cardiol. 2023, 48, 101924.

6. Jones, N. How machine learning could help to improve climate forecasts. Nature 2017, 548.

7. Abouelmehdi, K.; Beni-Hessane, A.; Khaloufi, H. Big healthcare data: Preserving security and privacy. J. Big Data 2018, 5, 1.

8. Kyoso, M.; Uchiyama, A. Development of an ECG identification system. In Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Istanbul, Turkey, 25–28 October 2001; Volume 4, pp. 3721–3723.

9. Singh, Y.N.; Gupta, P. ECG to individual identification. In Proceedings of the 2008 IEEE Second International Conference on Biometrics: Theory, Applications and Systems, Washington, DC, USA, 29 September–1 October 2008; pp. 1–8.

10. Konečný, J. Federated Learning: Strategies for Improving Communication Efficiency. arXiv 2016, arXiv:1610.05492.

11. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics; PMLR: Cambridge, MA, USA, 2017; pp. 1273–1282.

12. Covert, I.; Ji, W.; Hashimoto, T.; Zou, J. Scaling laws for the value of individual data points in machine learning. arXiv 2024, arXiv:2405.20456.

13. Sakib, S.; Fouda, M.M.; Fadlullah, Z.M.; Abualsaud, K.; Yaacoub, E.; Guizani, M. Asynchronous federated learning-based ECG analysis for arrhythmia detection. In Proceedings of the 2021 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Athens, Greece, 7–10 September 2021; pp. 277–282.

14. Raza, A.; Tran, K.P.; Koehl, L.; Li, S. Designing ECG monitoring healthcare system with federated transfer learning and explainable AI. Knowl.-Based Syst. 2022, 236, 107763.

15. Goto, S.; Solanki, D.; John, J.E.; Yagi, R.; Homilius, M.; Ichihara, G.; Katsumata, Y.; Gaggin, H.K.; Itabashi, Y.; MacRae, C.A.; et al. Multinational federated learning approach to train ECG and echocardiogram models for hypertrophic cardiomyopathy detection. Circulation 2022, 146, 755–769.

16. Chorney, W.; Wang, H. Towards federated transfer learning in electrocardiogram signal analysis. Comput. Biol. Med. 2024, 170, 107984.

17. Gruwez, H.; Barthels, M.; Haemers, P.; Verbrugge, F.H.; Dhont, S.; Meekers, E.; Wouters, F.; Nuyens, D.; Pison, L.; Vandervoort, P.; et al. Detecting paroxysmal atrial fibrillation from an electrocardiogram in sinus rhythm: External validation of the AI approach. Clin. Electrophysiol. 2023, 9, 1771–1782.

18. Ma, C.; Liu, C.; Wang, X.; Li, Y.; Wei, S.; Lin, B.S.; Li, J. A multistep paroxysmal atrial fibrillation scanning strategy in long-term ECGs. IEEE Trans. Instrum. Meas. 2022, 71, 4004010.

19. Myrovali, E.; Hristu-Varsakelis, D.; Tachmatzidis, D.; Antoniadis, A.; Vassilikos, V. Identifying patients with paroxysmal atrial fibrillation from sinus rhythm ECG using random forests. Expert Syst. Appl. 2023, 213, 118948.

20. Baek, Y.S.; Lee, S.C.; Choi, W.; Kim, D.H. A new deep learning algorithm of 12-lead electrocardiogram for identifying atrial fibrillation during sinus rhythm. Sci. Rep. 2021, 11, 12818.

21. Wang, Y.; Liu, S.; Jia, H.; Deng, X.; Li, C.; Wang, A.; Yang, C. A two-step method for paroxysmal atrial fibrillation event detection based on machine learning. Math. Biosci. Eng. 2022, 19, 9877–9894.

22. Parsi, A.; Glavin, M.; Jones, E.; Byrne, D. Prediction of paroxysmal atrial fibrillation using new heart rate variability features. Comput. Biol. Med. 2021, 133, 104367.

23. Surucu, M.; Isler, Y.; Perc, M.; Kara, R. Convolutional neural networks predict the onset of paroxysmal atrial fibrillation: Theory and applications. Chaos Interdiscip. J. Nonlinear Sci. 2021, 31, 113119.

24. Maghawry, E.; Ismail, R.; Gharib, T.F. An efficient approach for paroxysmal atrial fibrillation events prediction using extreme learning machine. J. Intell. Fuzzy Syst. 2021, 40, 5087–5099.

25. Castro, H.; Garcia-Racines, J.D.; Bernal-Norena, A. Methodology for the prediction of paroxysmal atrial fibrillation based on heart rate variability feature analysis. Heliyon 2021, 7, e08244.

26. Sürücü, M.; İşler, Y.; Kara, R. Diagnosis of paroxysmal atrial fibrillation from thirty-minute heart rate variability data using convolutional neural networks. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 2886–2900.

27. Liu, B.; Ding, M.; Shaham, S.; Rahayu, W.; Farokhi, F.; Lin, Z. When machine learning meets privacy: A survey and outlook. ACM Comput. Surv. (CSUR) 2021, 54, 31.

28. He, J.; Cao, T.; Duffy, V.G. Machine learning techniques and privacy concerns in human-computer interactions: A systematic review. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2023; pp. 373–389.

29. Alreshidi, F.S.; Alsaffar, M.; Chengoden, R.; Alshammari, N.K. Fed-CL-an atrial fibrillation prediction system using ECG signals employing federated learning mechanism. Sci. Rep. 2024, 14, 21038.

30. Khan, M.A.; Alsulami, M.; Yaqoob, M.M.; Alsadie, D.; Saudagar, A.K.J.; AlKhathami, M.; Farooq Khattak, U. Asynchronous federated learning for improved cardiovascular disease prediction using artificial intelligence. Diagnostics 2023, 13, 2340.

31. Manocha, A.; Sood, S.K.; Bhatia, M. Federated learning-inspired smart ECG classification: An explainable artificial intelligence approach. Multimed. Tools Appl. 2024, 84, 21673–21696.

32. Jimenez Gutierrez, D.M.; Hassan, H.M.; Landi, L.; Vitaletti, A.; Chatzigiannakis, I. Application of federated learning techniques for arrhythmia classification using 12-lead ECG signals. In International Symposium on Algorithmic Aspects of Cloud Computing; Springer: Cham, Switzerland, 2023; pp. 38–65.

33. Ying, Z.; Zhang, G.; Pan, Z.; Chu, C.; Liu, X. FedECG: A federated semi-supervised learning framework for electrocardiogram abnormalities prediction. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 101568.

34. Mehta, S.; Rathour, A. ECG Data Security and Privacy: Leveraging Federated Learning for Better Classification. In Proceedings of the 2023 4th International Conference on Intelligent Technologies (CONIT), Hubli, India, 23–25 June 2023; pp. 1–6.

35. Mane, D.; Jain, J.; Jaju, U.; Agarwal, K.; Kalamkar, A. A Federated Approach Towards Detecting ECG Arrhythmia. In Proceedings of the 2024 2nd International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 10–12 July 2024; pp. 106–111.

36. Raza, A.; Tran, K.P.; Koehl, L.; Li, S. AnoFed: Adaptive anomaly detection for digital health using transformer-based federated learning and support vector data description. Eng. Appl. Artif. Intell. 2023, 121, 106051.

37. Wolff, J.; Matschinske, J.; Baumgart, D.; Pytlik, A.; Keck, A.; Natarajan, A.; von Schacky, C.E.; Pauling, J.K.; Baumbach, J. Federated machine learning for a facilitated implementation of Artificial Intelligence in healthcare–a proof of concept study for the prediction of coronary artery calcification scores. J. Integr. Bioinform. 2022, 19, 20220032.

38. Chorney, W.; Rahman, A.; Wang, Y.; Wang, H.; Peng, Z. Federated Learning for Heterogeneous Multi-Site Crop Disease Diagnosis. Mathematics 2025, 13, 1401.

39. Nesheiwat, Z.; Goyal, A.; Jagtap, M. Atrial Fibrillation; StatPearls: Treasure Island, FL, USA, 2023.

40. Lip, G.Y.; Hee, F.L.S. Paroxysmal atrial fibrillation. QJM 2001, 94, 665–678.

41. Nattel, S.; Dobrev, D. Electrophysiological and molecular mechanisms of paroxysmal atrial fibrillation. Nat. Rev. Cardiol. 2016, 13, 575–590.

42. Boriani, G.; Laroche, C.; Diemberger, I.; Fantecchi, E.; Popescu, M.I.; Rasmussen, L.H.; Dan, G.A.; Kalarus, Z.; Tavazzi, L.; Maggioni, A.P.; et al. ‘Real-world’ management and outcomes of patients with paroxysmal vs. non-paroxysmal atrial fibrillation in Europe: The EURObservational Research Programme–Atrial Fibrillation (EORP-AF) General Pilot Registry. EP Eur. 2016, 18, 648–657.

43. Al-Makhamreh, H.; Alrabadi, N.; Haikal, L.; Krishan, M.; Al-Badaineh, N.; Odeh, O.; Barqawi, T.; Nawaiseh, M.; Shaban, A.; Abdin, B.; et al. Paroxysmal and non-paroxysmal atrial fibrillation in middle eastern patients: Clinical features and the use of medications. Analysis of the Jordan atrial fibrillation (JoFib) study. Int. J. Environ. Res. Public Health 2022, 19, 6173.

44. Peigh, G.; Zhou, J.; Rosemas, S.C.; Roberts, A.I.; Longacre, C.; Nayak, T.; Schwab, G.; Soderlund, D.; Passman, R.S. Impact of atrial fibrillation burden on health care costs and utilization. Clin. Electrophysiol. 2024, 10, 718–730.

45. Stahrenberg, R.; Weber-Krüger, M.; Seegers, J.; Edelmann, F.; Lahno, R.; Haase, B.; Mende, M.; Wohlfahrt, J.; Kermer, P.; Vollmann, D.; et al. Enhanced detection of paroxysmal atrial fibrillation by early and prolonged continuous Holter monitoring in patients with cerebral ischemia presenting in sinus rhythm. Stroke 2010, 41, 2884–2888.

46. Zhu, H.; Cheng, C.; Yin, H.; Li, X.; Zuo, P.; Ding, J.; Lin, F.; Wang, J.; Zhou, B.; Li, Y.; et al. Automatic multilabel electrocardiogram diagnosis of heart rhythm or conduction abnormalities with deep learning: A cohort study. Lancet Digit. Health 2020, 2, e348–e357.

47. Li, X.; Huang, K.; Yang, W.; Wang, S.; Zhang, Z. On the convergence of FedAvg on non-IID data. arXiv 2019, arXiv:1907.02189.

48. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

49. Moody, G.E. Spontaneous termination of atrial fibrillation: A challenge from PhysioNet and Computers in Cardiology 2004. In Proceedings of the Computers in Cardiology, Chicago, IL, USA, 19–22 September 2004; pp. 101–104.

50. Wang, X.; Ma, C.; Zhang, X.; Gao, H.; Clifford, G.D.; Liu, C. Paroxysmal atrial fibrillation events detection from dynamic ECG recordings: The 4th China Physiological Signal Challenge 2021. Proc. PhysioNet 2021, 1–83.

51. Tran, R.; Rankin, A.; Abdul-Rahim, A.; Lip, G.; Rankin, A.; Lees, K. Short runs of atrial arrhythmia and stroke risk: A European-wide online survey among stroke physicians and cardiologists. J. R. Coll. Physicians Edinb. 2016, 46, 87–92.

52. Li, T.; Sanjabi, M.; Beirami, A.; Smith, V. Fair resource allocation in federated learning. arXiv 2019, arXiv:1905.10497.

53. Joshi, K.; Patel, M.I. Recent advances in local feature detector and descriptor: A literature survey. Int. J. Multimed. Inf. Retr. 2020, 9, 231–247.

54. Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

55. Nogimori, Y.; Sato, K.; Takamizawa, K.; Ogawa, Y.; Tanaka, Y.; Shiraga, K.; Masuda, H.; Matsui, H.; Kato, M.; Daimon, M.; et al. Prediction of adverse cardiovascular events in children using artificial intelligence-based electrocardiogram. Int. J. Cardiol. 2024, 406, 132019.

56. Gibson, C.M.; Mehta, S.; Ceschim, M.R.; Frauenfelder, A.; Vieira, D.; Botelho, R.; Fernandez, F.; Villagran, C.; Niklitschek, S.; Matheus, C.I.; et al. Evolution of single-lead ECG for STEMI detection using a deep learning approach. Int. J. Cardiol. 2022, 346, 47–52.

57. Chorney, W.; Wang, H.; Fan, L.W. AttentionCovidNet: Efficient ECG-based diagnosis of COVID-19. Comput. Biol. Med. 2024, 168, 107743.

58. Chaddad, A.; Peng, J.; Xu, J.; Bouridane, A. Survey of explainable AI techniques in healthcare. Sensors 2023, 23, 634.

59. Rozemberczki, B.; Watson, L.; Bayer, P.; Yang, H.T.; Kiss, O.; Nilsson, S.; Sarkar, R. The Shapley value in machine learning. In The 31st International Joint Conference on Artificial Intelligence and the 25th European Conference on Artificial Intelligence; International Joint Conferences on Artificial Intelligence Organization: Los Angeles, CA, USA, 2022; pp. 5572–5579.

60. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

61. Nilsson, A.; Smith, S.; Ulm, G.; Gustavsson, E.; Jirstrand, M. A performance evaluation of federated learning algorithms. In Proceedings of the Second Workshop on Distributed Infrastructures for Deep Learning, Rennes, France, 10 December 2018; pp. 1–8.

62. Haddadpour, F.; Mahdavi, M. On the convergence of local descent methods in federated learning. arXiv 2019, arXiv:1910.14425.

63. Cui, S.; Pan, W.; Liang, J.; Zhang, C.; Wang, F. Addressing algorithmic disparity and performance inconsistency in federated learning. Adv. Neural Inf. Process. Syst. 2021, 34, 26091–26102.

64. Wang, Z.; Song, M.; Zhang, Z.; Song, Y.; Wang, Q.; Qi, H. Beyond inferring class representatives: User-level privacy leakage from federated learning. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 2512–2520.

65. Hatamizadeh, A.; Yin, H.; Molchanov, P.; Myronenko, A.; Li, W.; Dogra, P.; Feng, A.; Flores, M.G.; Kautz, J.; Xu, D.; et al. Do gradient inversion attacks make federated learning unsafe? IEEE Trans. Med. Imaging 2023, 42, 2044–2056.

66. Shi, J.; Wan, W.; Hu, S.; Lu, J.; Zhang, L.Y. Challenges and approaches for mitigating Byzantine attacks in federated learning. In Proceedings of the 2022 IEEE International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Wuhan, China, 9–11 December 2022; pp. 139–146.

67. Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

68. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for federated learning on user-held data. arXiv 2016, arXiv:1611.04482.

| Year | Privacy | Uniform | pAF | Accuracy (%) | Reference |
|---|---|---|---|---|---|
| 2021 | ✓ | ✗ | ✗ | 89.9 | [30] |
| 2021 | ✗ | ✓ | ✓ | 97.7 | [22] |
| 2021 | ✗ | ✓ | ✓ | 100 | [23] |
| 2021 | ✗ | ✓ | ✓ | 97 | [24] |
| 2021 | ✗ | ✓ | ✓ | 89.01 | [25] |
| 2021 | ✗ | ✓ | ✓ | 100 | [26] |
| 2022 | ✓ | ✓ | ✗ | 67.25 | [37] |
| 2022 | ✗ | ✓ | ✓ | 76.5 | [21] |
| 2022 | ✗ | ✓ | ✓ | 76.5 | [20] |
| 2022 | ✗ | ✓ | ✓ | 99.33 | [18] |
| 2023 | ✗ | ✗ | ✓ | 78.1 | [17] |
| 2023 | ✗ | ✓ | ✓ | 93.45 | [19] |
| 2023 | ✓ | ✗ | ✗ | 63.3 | [32] |
| 2023 | ✓ | ✓ | ✗ | 94.8 | [33] |
| 2023 | ✓ | ✗ | ✗ | 98 | [36] |
| 2023 | ✓ | ✓ | ✗ | 89.9 | [30] |
| 2024 | ✓ | ✗ | ✗ | 96.32 | [29] |
| 2024 | ✓ | ✓ | ✗ | 99.12 | [31] |
| 2024 | ✓ | ✗ | ✗ | 87.7 | [34] |
| 2024 | ✓ | ✗ | ✗ | 99.2 | [35] |

| Hospital Number | Parent Dataset | Sampling Rate (Hz) | Samples |
|---|---|---|---|
| 1 | CinC 2001 | 128 | 850 |
| 2 | CinC 2004 | 128 | 180 |
| 3 | CPSC 2021 | 200 | 30,000 |
| 4 | CPSC 2021 | 200 | 20,000 |
| 5 | CPSC 2021 | 200 | 6198 |
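The sample counts above differ by more than two orders of magnitude, which matters for any aggregation rule that weights clients by data size. As a minimal sketch, assuming sample-weighted averaging in the style of FedAvg (McMahan et al.), the snippet below computes each hospital's weight and applies one aggregation step. The aggregation rule actually used in this work is not stated in this section, and the two-parameter client models are hypothetical placeholders used only to exercise the function.

```python
# Sample counts per hospital, copied from the table above.
samples = {1: 850, 2: 180, 3: 30_000, 4: 20_000, 5: 6_198}


def fedavg_weights(counts):
    """Return each client's share of the total sample count."""
    total = sum(counts.values())
    return {client: n / total for client, n in counts.items()}


def fedavg(client_params, weights):
    """One FedAvg-style aggregation step: weighted mean of parameter vectors."""
    length = len(next(iter(client_params.values())))
    return [
        sum(weights[c] * params[i] for c, params in client_params.items())
        for i in range(length)
    ]


weights = fedavg_weights(samples)
for hospital, w in weights.items():
    print(f"Hospital {hospital}: weight {w:.4f}")

# Hypothetical client parameters (assumption, not from the paper).
toy_params = {h: [float(h), 1.0] for h in samples}
global_params = fedavg(toy_params, weights)
print("Aggregated toy parameters:", global_params)
```

Under this assumption, Hospitals 1 and 2 together receive under 2% of the total weight, so the global model is dominated by the three CPSC 2021 hospitals.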

| Hospital Number | Healthy | pAF | npAF |
|---|---|---|---|
| 1 | 425 | 425 | 0 |
| 2 | 0 | 120 | 60 |
| 3 | 19,173 | 3412 | 7415 |
| 4 | 11,136 | 1466 | 7398 |
| 5 | 3366 | 403 | 2429 |
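To make the label skew in the table above concrete, the following sketch converts the counts to per-hospital label proportions and measures how far each hospital is from the pooled distribution. The total variation distance is an illustrative choice made here, not a metric taken from the paper.

```python
# Label counts per hospital as (Healthy, pAF, npAF), copied from the table above.
label_counts = {
    1: (425, 425, 0),
    2: (0, 120, 60),
    3: (19_173, 3_412, 7_415),
    4: (11_136, 1_466, 7_398),
    5: (3_366, 403, 2_429),
}
LABELS = ("Healthy", "pAF", "npAF")


def proportions(counts):
    """Normalize raw counts to a probability distribution."""
    total = sum(counts)
    return tuple(c / total for c in counts)


def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


# Pooled label distribution over all five hospitals.
pooled = proportions(tuple(sum(col) for col in zip(*label_counts.values())))

for hospital, counts in label_counts.items():
    p = proportions(counts)
    shares = ", ".join(f"{name} {x:.1%}" for name, x in zip(LABELS, p))
    tv = total_variation(p, pooled)
    print(f"Hospital {hospital}: {shares} | TV distance to pooled: {tv:.3f}")
```

Hospital 2 has no healthy recordings and Hospital 1 has no npAF recordings, so both sit much further from the pooled distribution than Hospitals 3 to 5.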

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| CNN-0 | | | | |
| CNN-10 | | | | |
| CNN-30 | | | | |
| CNN-50 | | | | |
| CNN-100 | | | | |

| Model | Accuracy (CI) | Precision (CI) | Recall (CI) | F1 Score (CI) | p-Value (CNN-0) | p-Value (CNN-100) |
|---|---|---|---|---|---|---|
| CNN-0 | (67.192, 73.535) | (57.767, 78.628) | (67.192, 73.535) | (59.492, 70.594) | ∼ | |
| CNN-10 | (79.139, 81.754) | (75.970, 77.595) | (79.422, 82.720) | (70.475, 79.048) | | |
| CNN-30 | (78.739, 82.005) | (74.101, 77.608) | (79.807, 81.316) | (73.299, 78.021) | | |
| CNN-50 | (78.911, 81.541) | (75.856, 78.686) | (80.305, 81.576) | (76.029, 77.761) | | |
| CNN-100 | (83.252, 84.406) | (83.0771, 85.251) | (83.252, 84.406) | (81.409, 84.031) | | ∼ |
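This section does not say how the intervals in the table above were obtained. As one possible approach, the sketch below computes a percentile bootstrap 95% confidence interval for the mean of a metric over repeated training runs. The per-run accuracies are hypothetical placeholders, not values from the paper, and the bootstrap itself is an assumption about the procedure.

```python
import random
import statistics


def bootstrap_ci(values, n_resamples=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(values, k=len(values)))
        for _ in range(n_resamples)
    )
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical per-run accuracies in percent (assumption, NOT from the paper).
runs = [80.1, 81.3, 79.6, 80.9, 80.4]
low, high = bootstrap_ci(runs)
print(f"mean {statistics.fmean(runs):.3f}, 95% CI ({low:.3f}, {high:.3f})")
```

With only a handful of runs a bootstrap interval is coarse, and a t-based interval would be a reasonable alternative under a normality assumption.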

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chorney, W.; Ling, S.H. Federated Learning Strategies for Atrial Fibrillation Detection. J. Exp. Theor. Anal. 2025, 3, 23. https://doi.org/10.3390/jeta3030023
