Enabling Progressive Server-Side Rendering for Traditional Web Template Engines with Java Virtual Threads

Abstract

1. Introduction

2. Background and Related Work

2.1. Web Templates

1. Domain-specific language idiom

2. Supported data model APIs

3. Asynchronous support

4. Type safety and HTML safety

5. Progressive rendering

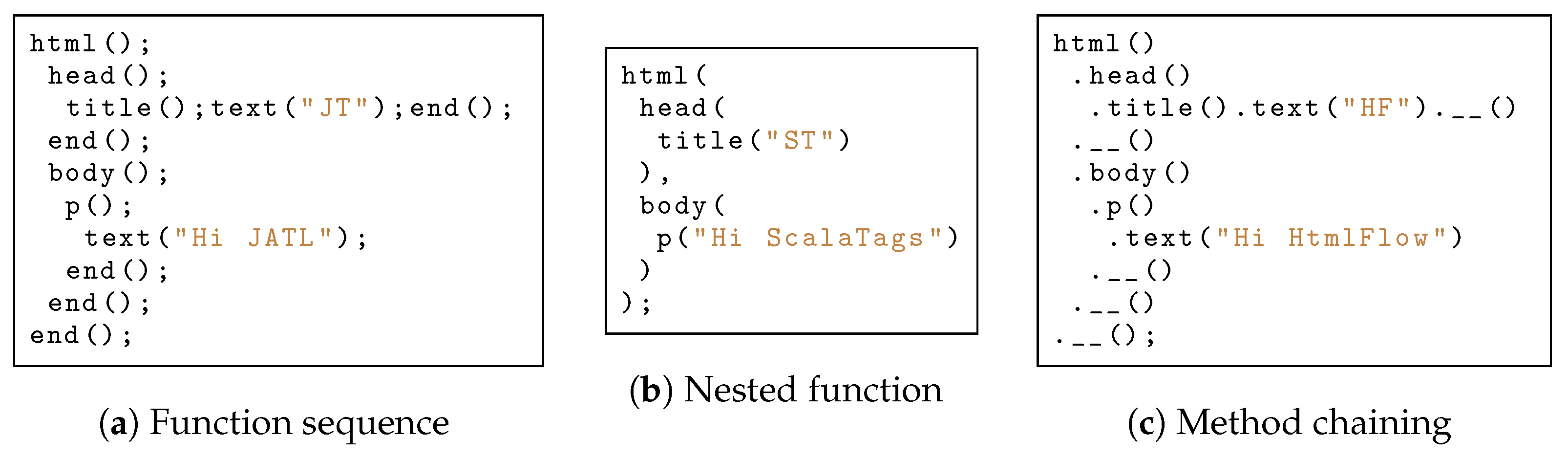

2.1.1. Domain-Specific Language Idiom

- `<% for (String item : items) { %>` in JSP

- `<% For Each item In items %>` in legacy ASP with VBScript

- `#foreach($item in $items)` in Velocity

- `<?php foreach ($items as $item): ?>` in PHP

1. Type safety: Because the templates are defined in the host programming language, the compiler can check their syntax and types at compile time. This helps catch errors earlier in the development process.

2. IDE support: Many modern IDEs provide code completion, syntax highlighting, and other features for the host language. These features make templates easier to write and maintain.

3. Flexibility: Templates can use all the features of the host programming language to generate HTML. This can make it easier to write complex templates and reuse code.

4. Integration: Because the templates are defined in the host language (for example, Java), they integrate easily with the rest of the application code, such as controllers, services, repositories, and models.
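The sketch below makes the type-safety and flexibility points concrete. It assumes a tiny, hypothetical internal DSL; `HtmlBuilder`, `Talk`, and `TalkListTemplate` are illustrative names and are not the API of HtmlFlow, j2html, or any other library. The template is an ordinary Java method, so the compiler checks the model type, and a plain `for` loop replaces an external directive such as `#foreach`. The `text` method also escapes markup characters, which hints at the HTML-safety concern discussed later.

```java
import java.util.List;
import java.util.function.Consumer;

// Hypothetical, minimal internal DSL for illustration only; not the API of any real library.
final class HtmlBuilder {
    private final StringBuilder out = new StringBuilder();

    // Writes an opening tag, lets the caller emit the children, then writes the closing tag.
    HtmlBuilder tag(String name, Consumer<HtmlBuilder> children) {
        out.append('<').append(name).append('>');
        children.accept(this);
        out.append("</").append(name).append('>');
        return this;
    }

    // Writes text content, escaping characters that would otherwise be parsed as markup.
    HtmlBuilder text(String value) {
        for (char c : value.toCharArray()) {
            switch (c) {
                case '<' -> out.append("&lt;");
                case '>' -> out.append("&gt;");
                case '&' -> out.append("&amp;");
                case '"' -> out.append("&quot;");
                default -> out.append(c);
            }
        }
        return this;
    }

    @Override
    public String toString() {
        return out.toString();
    }
}

// Hypothetical model type used by the template below.
record Talk(String title) {}

final class TalkListTemplate {
    // The template is an ordinary Java method: misspelling talk.title() or passing the
    // wrong model type is rejected by the compiler instead of failing at render time.
    static String render(List<Talk> talks) {
        HtmlBuilder html = new HtmlBuilder();
        html.tag("ul", ul -> {
            for (Talk talk : talks) { // a plain Java loop replaces a DSL directive such as #foreach
                ul.tag("li", li -> li.text(talk.title()));
            }
        });
        return html.toString();
    }
}
```

For example, `TalkListTemplate.render(List.of(new Talk("A & B"), new Talk("C")))` produces `<ul><li>A &amp; B</li><li>C</li></ul>`.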

2.1.2. Supported Data Model APIs

2.1.3. Asynchronous Support

2.1.4. HTML Safety

2.1.5. Progressive Rendering

2.2. Web Framework Architectures and Approaches to PSSR

2.2.1. Spring MVC

- Sending large responses without buffering the entire output in memory.

- Writing dynamic HTML in fragments as data is fetched or computed.

- Reducing time-to-first-byte (TTFB) and improving perceived performance.

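Listing 1. StreamingResponseBody handler in Spring MVC.

```kotlin
import org.springframework.web.bind.annotation.GetMapping
import org.springframework.web.bind.annotation.RestController
import org.springframework.web.servlet.mvc.method.annotation.StreamingResponseBody

@RestController
class StreamController {

    @GetMapping("/stream")
    fun handleStream(): StreamingResponseBody {
        return StreamingResponseBody { outputStream ->
            for (i in 0..9) {
                val htmlFragment = "<p>Chunk $i</p>\n"
                outputStream.write(htmlFragment.toByteArray())
                outputStream.flush() // ensure the partial response is sent
                Thread.sleep(500)    // simulate delay
            }
        }
    }
}
```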

2.2.2. Spring WebFlux

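Listing 2. Progressive Server-Side Rendering in Spring WebFlux.

```java
import java.time.Duration;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

@RestController
public class PssrController {

    @GetMapping(value = "/pssr", produces = MediaType.TEXT_HTML_VALUE)
    public Flux<String> renderChunks() {
        return Flux.range(1, 10)
                .delayElements(Duration.ofMillis(500)) // emit one chunk every 500 ms
                .map(i -> "<p>Chunk " + i + "</p>\n");
    }
}
```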

2.2.3. Quarkus

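Listing 3. Progressive Server-Side Rendering in Quarkus using StreamingOutput.

```java
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;
import jakarta.ws.rs.core.StreamingOutput;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.nio.charset.StandardCharsets;

@Path("/")
public class StreamResource {

    @GET
    @Path("/stream")
    @Produces(MediaType.TEXT_HTML)
    public StreamingOutput streamHtml() {
        return output -> {
            PrintWriter writer = new PrintWriter(new OutputStreamWriter(output, StandardCharsets.UTF_8));
            for (int i = 1; i <= 5; i++) {
                writer.println("<p>Chunk " + i + "</p>");
                writer.flush(); // flush each chunk incrementally
                try {
                    Thread.sleep(500); // simulate processing delay
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return; // stop streaming if the thread is interrupted
                }
            }
            writer.flush();
        };
    }
}
```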
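Listings 1 to 3 rely on framework-specific streaming types. For comparison, the sketch below reproduces the same chunked streaming with only the JDK, and it is not code from this paper. It uses the built-in `com.sun.net.httpserver` server, configured with a virtual-thread-per-task executor. `JdkStreamingServer`, the `/stream` path, and the 50 ms delay are illustrative assumptions. Passing a response length of 0 to `sendResponseHeaders` selects chunked transfer encoding, so each `flush()` can push a fragment to the client before the page is complete.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class JdkStreamingServer {

    // Starts a server on an ephemeral local port; each exchange runs on a thread from `executor`.
    static HttpServer start(ExecutorService executor) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.setExecutor(executor);
            server.createContext("/stream", exchange -> {
                exchange.getResponseHeaders().set("Content-Type", "text/html; charset=utf-8");
                exchange.sendResponseHeaders(200, 0); // length 0 => chunked transfer encoding
                try (OutputStream out = exchange.getResponseBody()) {
                    for (int i = 0; i < 10; i++) {
                        out.write(("<p>Chunk " + i + "</p>\n").getBytes(StandardCharsets.UTF_8));
                        out.flush();      // send this chunk now
                        Thread.sleep(50); // simulate a slow data source; parks only a virtual thread
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Fetches the whole streamed page; the client simply waits for the last chunk.
    static String fetch(int port) {
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://127.0.0.1:" + port + "/stream")).build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

A typical use passes `Executors.newVirtualThreadPerTaskExecutor()` to `start`. A browser pointed at the returned port would then display each paragraph as it arrives, rather than waiting for the full response.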

3. Problem Statement

Listing 4. HtmlFlow reactive presentation template in Kotlin with an Observable model.

```kotlin
await { div, model, onCompletion ->
    model
        .doOnNext { presentation ->
            presentationFragmentAsync
                .renderAsync(presentation)
                .thenApply { frag -> div.raw(frag) }
        }
        .doOnComplete { onCompletion.finish() }
        .subscribe()
}
```

Listing 5. HtmlFlow suspend presentation template in Kotlin with a Flow model.

```kotlin
suspending { model ->
    model
        .toFlowable()
        .asFlow()
        .collect { presentation ->
            presentationFragmentAsync
                .renderAsync(presentation)
                .thenApply { frag -> raw(frag) }
        }
}
```

Listing 6. Presentation HTML template using JStachio.

```html
{{#presentationItems}}
<div class="card mb-3 shadow-sm rounded">
  <div class="card-header">
    <h5 class="card-title">
      {{title}} - {{speakerName}}
    </h5>
  </div>
  <div class="card-body">
    {{summary}}
  </div>
</div>
{{/presentationItems}}
```

1. Separation of Concerns: HTML templates are decoupled from application logic, enabling front-end developers to contribute without modifying back-end code.

2. Cross-Language Compatibility: External DSLs are portable across languages and frameworks, easing integration in multi-language environments.

3. Familiarity: Many developers are comfortable with HTML syntax, lowering the barrier to entry and improving maintainability.
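Listing 6 loops over `presentationItems` with a Mustache section. As the comparison table indicates, engines such as JStachio accept only a blocking `Iterable` as the data model. The sketch below shows one way a push-based, asynchronous source can be exposed as such an `Iterable`. It assumes a hypothetical `BlockingIterable` adapter backed by a `BlockingQueue`; this is not part of JStachio and not necessarily the mechanism used in this paper. The producer calls `onNext` and `onComplete`, while the template's loop blocks in `hasNext()` until the next item arrives.

```java
import java.util.Iterator;
import java.util.NoSuchElementException;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical adapter (not part of any template engine): exposes a push-based source
// as a single-pass blocking Iterable that an external-DSL template can loop over.
final class BlockingIterable<T> implements Iterable<T> {
    private static final Object END = new Object(); // sentinel signalling completion
    private final BlockingQueue<Object> queue = new LinkedBlockingQueue<>();

    // Invoked by the asynchronous producer for every item (items must be non-null).
    void onNext(T item) {
        queue.add(item);
    }

    // Invoked by the producer once no more items will arrive.
    void onComplete() {
        queue.add(END);
    }

    @Override
    public Iterator<T> iterator() {
        return new Iterator<T>() {
            private Object next; // item taken from the queue but not yet returned

            @Override
            public boolean hasNext() {
                if (next == null) {
                    try {
                        next = queue.take(); // blocks the rendering thread until data arrives
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        throw new IllegalStateException(e);
                    }
                }
                return next != END;
            }

            @Override
            @SuppressWarnings("unchecked")
            public T next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                T item = (T) next;
                next = null;
                return item;
            }
        };
    }
}
```

The cost of the blocking `take()` depends on where rendering runs. On a platform thread it holds an OS thread for the whole wait. On a virtual thread it parks only that virtual thread and frees its carrier for other requests. This contrast is why a blocking `Iterable` need not limit scalability.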

4. Benchmark Implementation

Listing 7. Stock class.

```kotlin
data class Stock(
    val name: String,
    val name2: String,
    val url: String,
    val symbol: String,
    val price: Double,
    val change: Double,
    val ratio: Double
)
```

Listing 8. Presentation class.

```kotlin
data class Presentation(
    val id: Long,
    val title: String,
    val speakerName: String,
    val summary: String
)
```

- Reactive: The template engine is used in a reactive context, where the HTML content is rendered using the reactive programming model. An example of this approach is the Thymeleaf template engine when using the ReactiveDataDriverContextVariable in conjunction with a non-blocking Spring ViewResolver.

- Suspendable: The template engine is used in a suspendable context, where the HTML content is rendered within a suspending function. An example of this approach is the HtmlFlow template engine, which supports suspendable templates that consume a Kotlin Flow from the kotlinx.coroutines library.

- Virtual: The template engine is used in a non-blocking context, where the HTML content is rendered within virtual threads. This approach is used for template engines that do not natively support non-blocking I/O. Some of these engines use external DSLs and consequently support only the blocking Iterable interface. Others do not support asynchronous rendering of HTML content at all.

- Blocking: The template engine is used in a blocking context, where the HTML content is rendered on a platform (OS) thread using the blocking interface of the Observable class.

- Blocking: The template engine is used in a blocking context, where the HTML content is rendered using the blocking interface of the Observable class.

- Virtual: The template engine is used in a non-blocking context, where the HTML content is rendered within the context of virtual threads.

- Blocking: The template engine is used in a blocking context, where the HTML content is rendered using the blocking interface of the Observable class.

- Virtual: The template engine is used in a non-blocking context, where the HTML content is rendered within the context of virtual threads.

- Reactive: The template engine is used in a reactive context, where the HTML content is rendered using the reactive programming model. In this case, we use the HtmlFlow template engine, which supports asynchronous rendering through the writeAsync method.
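The Virtual approach can be sketched with the standard Java 21 API. Each request renders a blocking template on its own virtual thread, created by `Executors.newVirtualThreadPerTaskExecutor()`. `renderBlocking`, `slowTitles`, and the 10 ms delay are hypothetical stand-ins for a traditional template engine and a slow data source; they are not the benchmark code used in this paper. A `StringWriter` stands in for the HTTP response writer.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class VirtualThreadRendering {

    // Stand-in for a traditional blocking template engine: it pulls items from a
    // blocking Iterable and writes one HTML fragment per item to the response writer.
    static void renderBlocking(Iterable<String> titles, Writer out) {
        try {
            out.write("<div>");
            for (String title : titles) {
                out.write("<p>" + title + "</p>");
                out.flush(); // emit the fragment as soon as it is rendered
            }
            out.write("</div>");
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Hypothetical slow data source: each call to next() blocks for delayMillis.
    static Iterable<String> slowTitles(int count, long delayMillis) {
        return () -> new Iterator<String>() {
            private int produced = 0;

            @Override
            public boolean hasNext() {
                return produced < count;
            }

            @Override
            public String next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                try {
                    Thread.sleep(delayMillis); // on a virtual thread this parks it, freeing the carrier
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException(e);
                }
                produced++;
                return "Talk " + produced;
            }
        };
    }

    // Renders `requests` pages concurrently, one virtual thread per request.
    static List<String> renderAll(int requests) {
        List<Future<String>> pages = new ArrayList<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int r = 0; r < requests; r++) {
                pages.add(executor.submit(() -> {
                    StringWriter out = new StringWriter(); // stand-in for the HTTP response writer
                    renderBlocking(slowTitles(3, 10), out);
                    return out.toString();
                }));
            }
        } // close() waits until every submitted render has finished
        List<String> html = new ArrayList<>();
        for (Future<String> page : pages) {
            html.add(page.resultNow()); // all tasks are done; a failed render throws IllegalStateException
        }
        return html;
    }
}
```

The template code stays unchanged and fully blocking. Only the thread it runs on changes, so a thousand concurrent renders do not require a thousand OS threads. This is the property the Virtual configurations rely on.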

5. Results

5.1. Environment Specifications

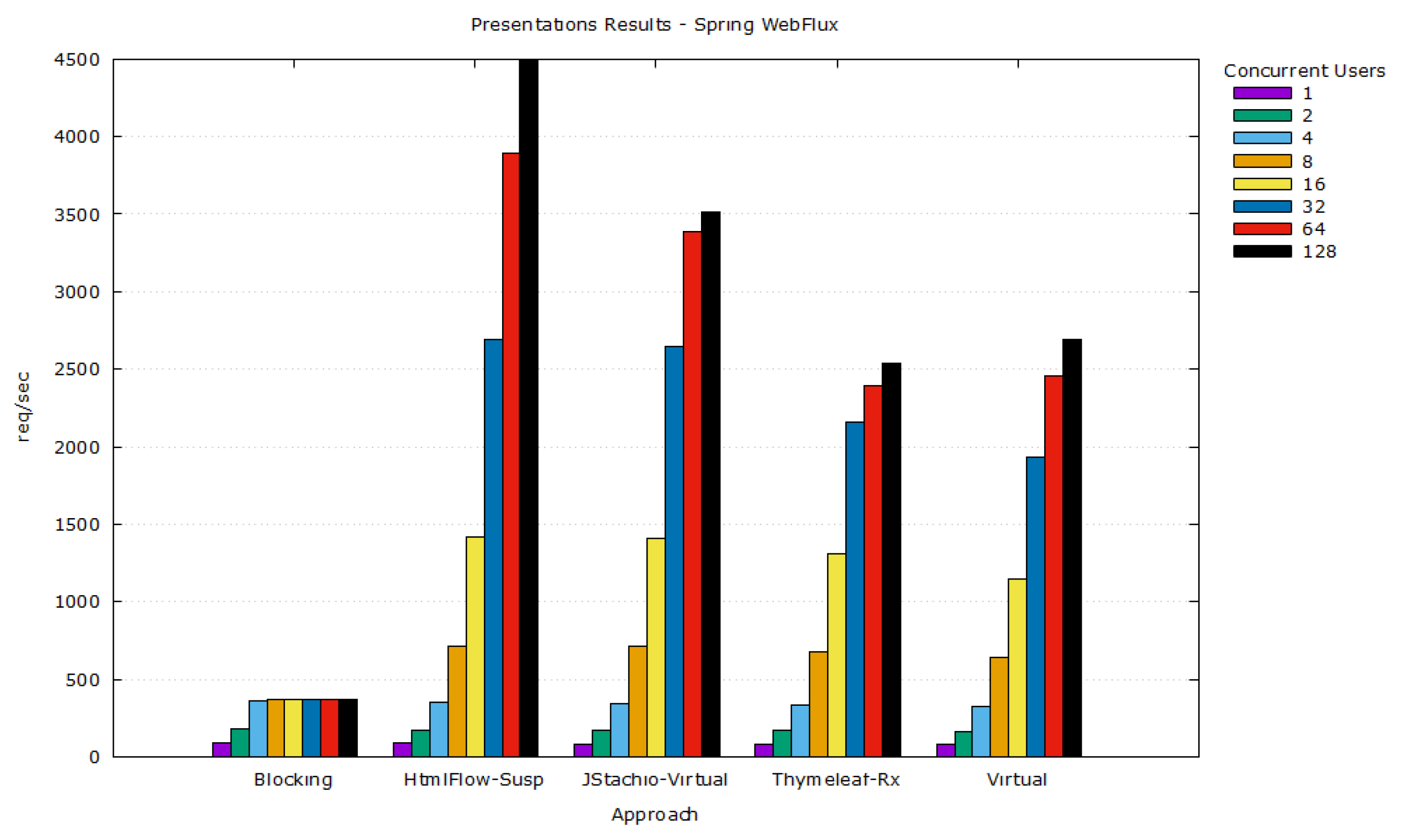

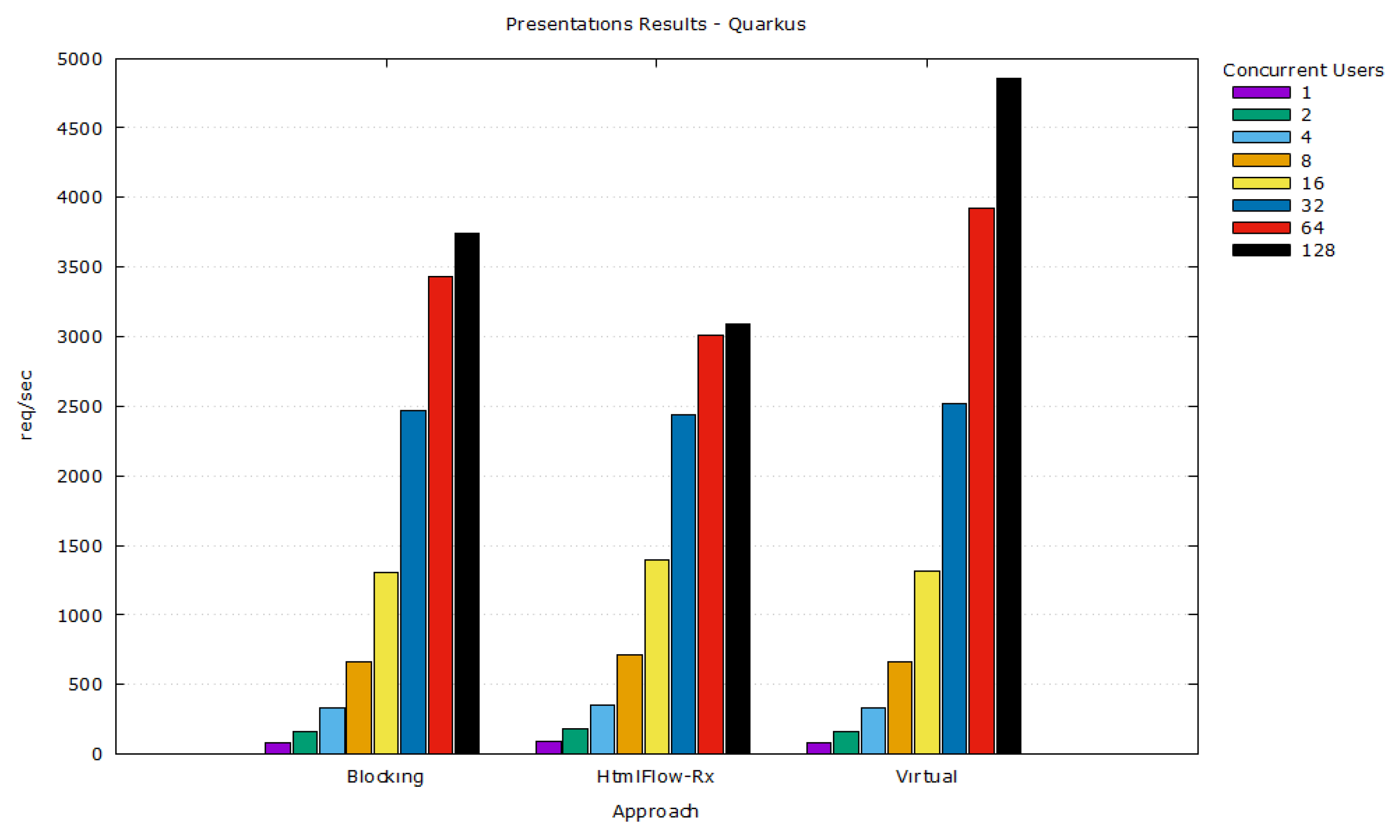

5.2. Scalability Results for the Presentations Class

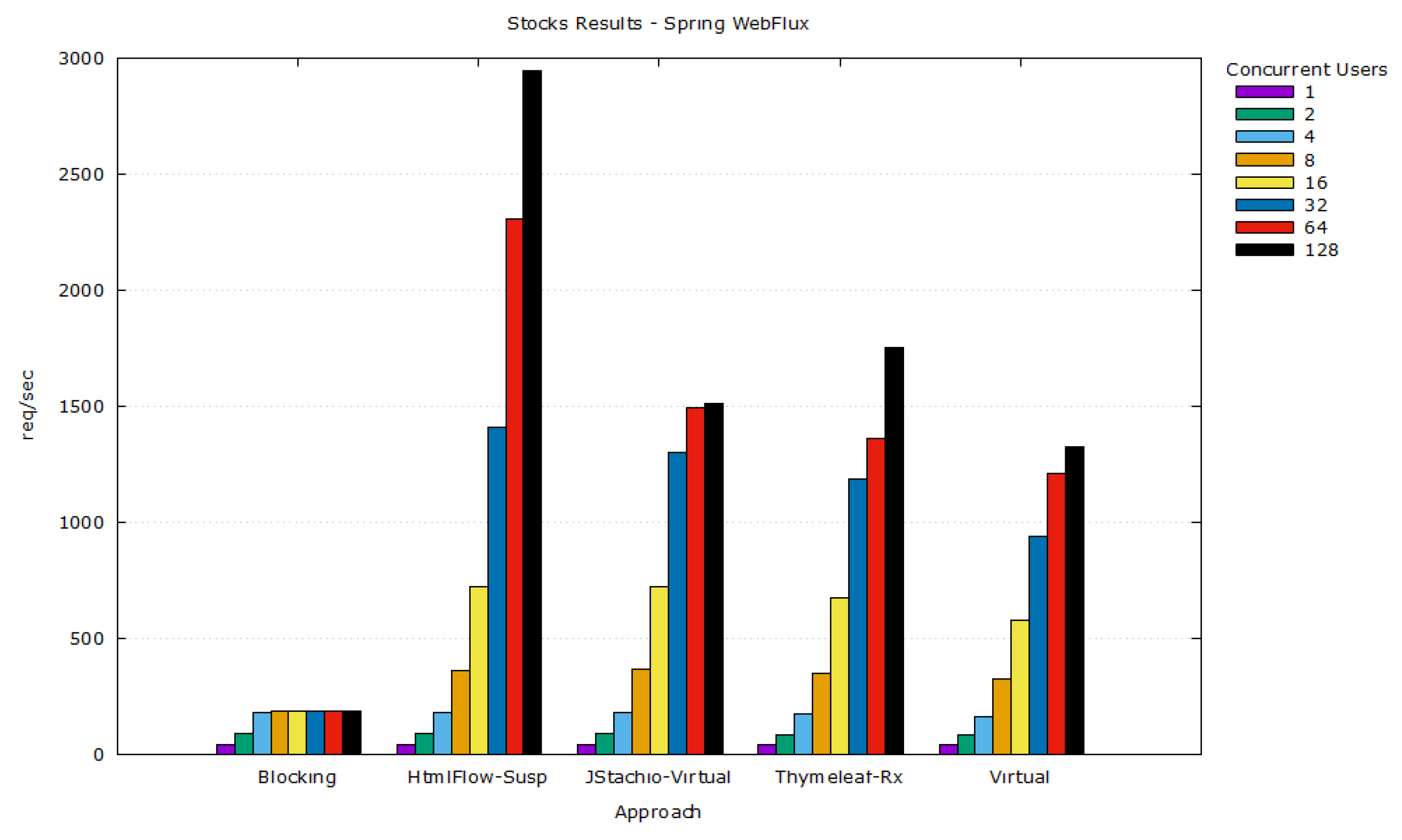

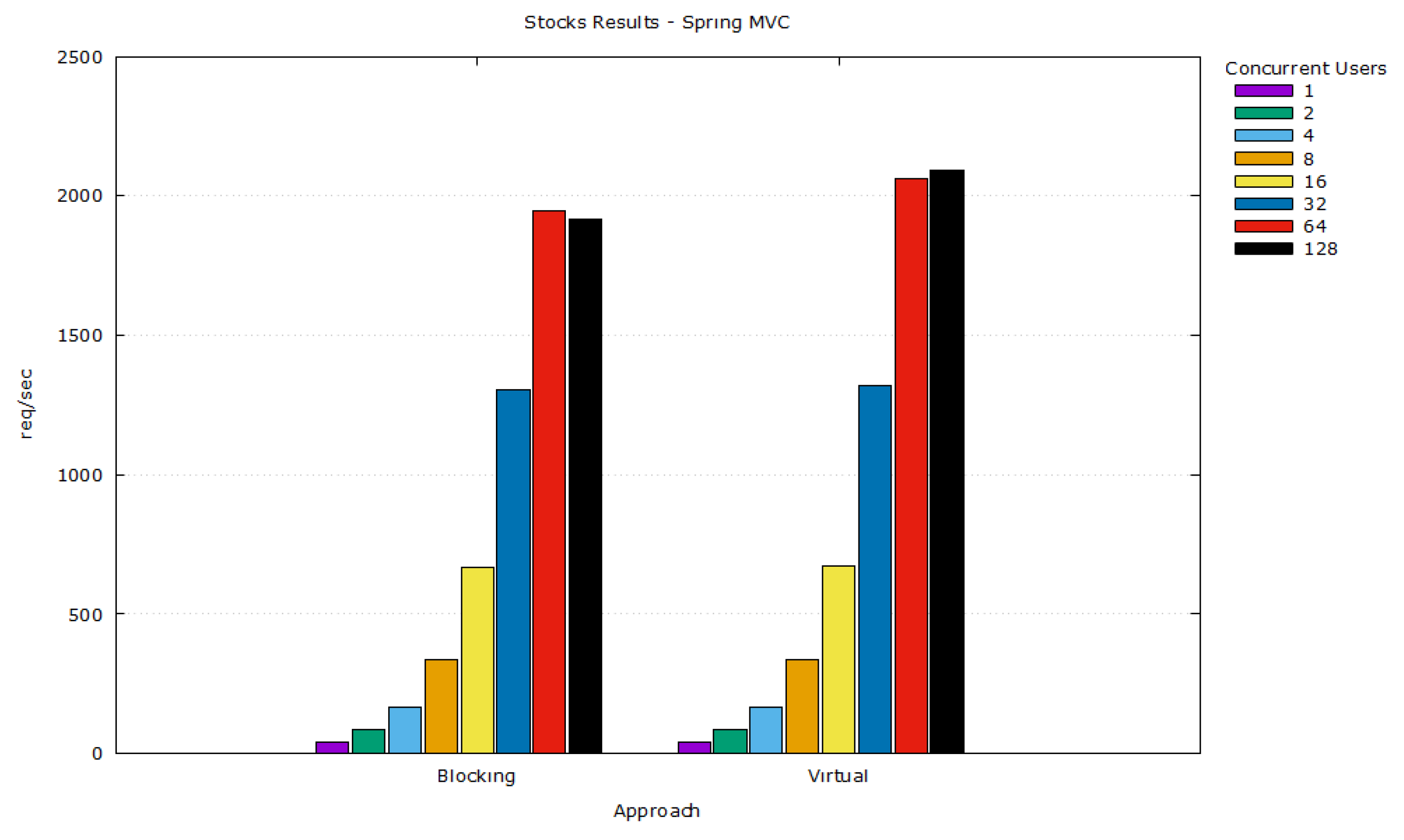

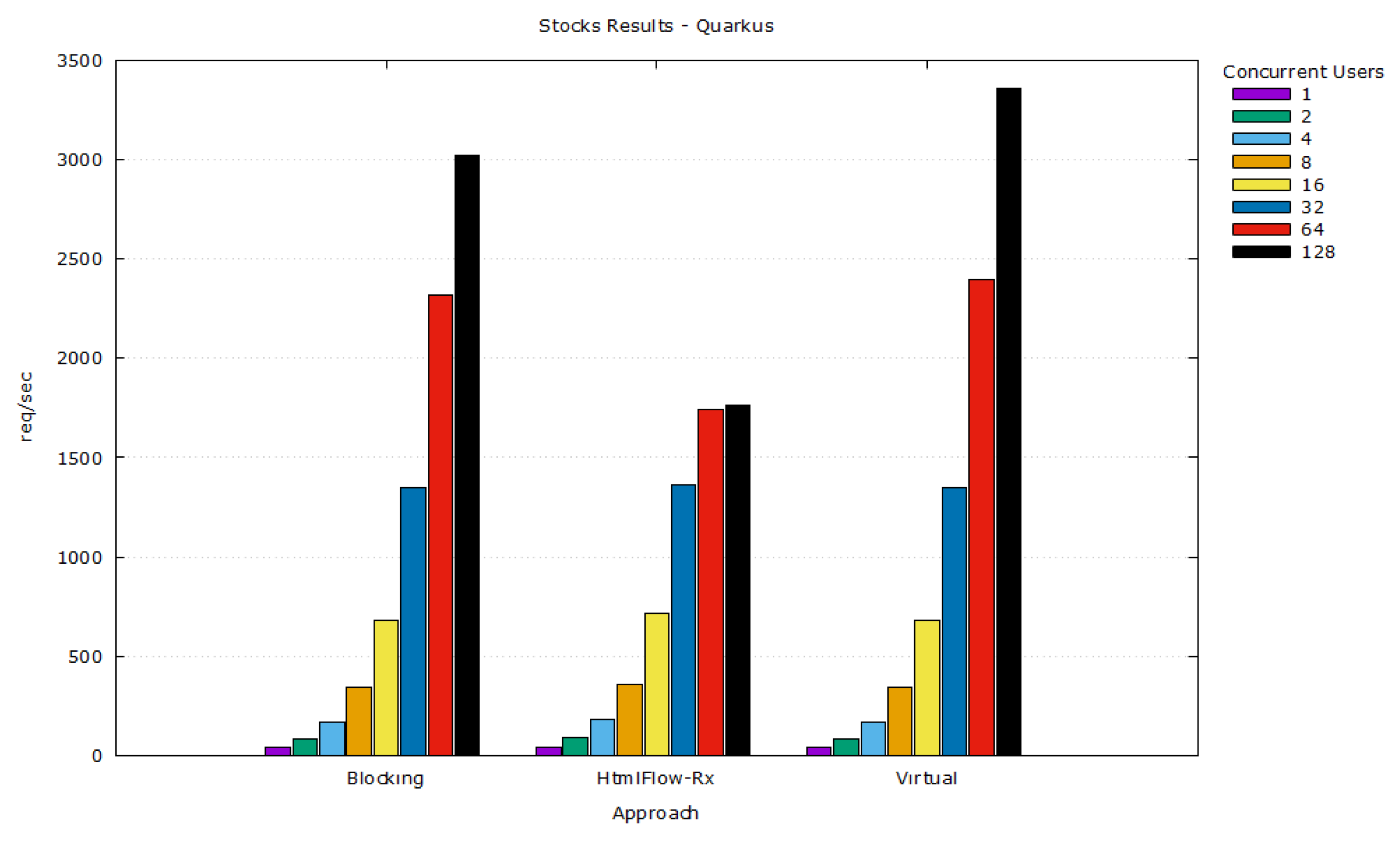

5.3. Scalability Results for the Stocks Class

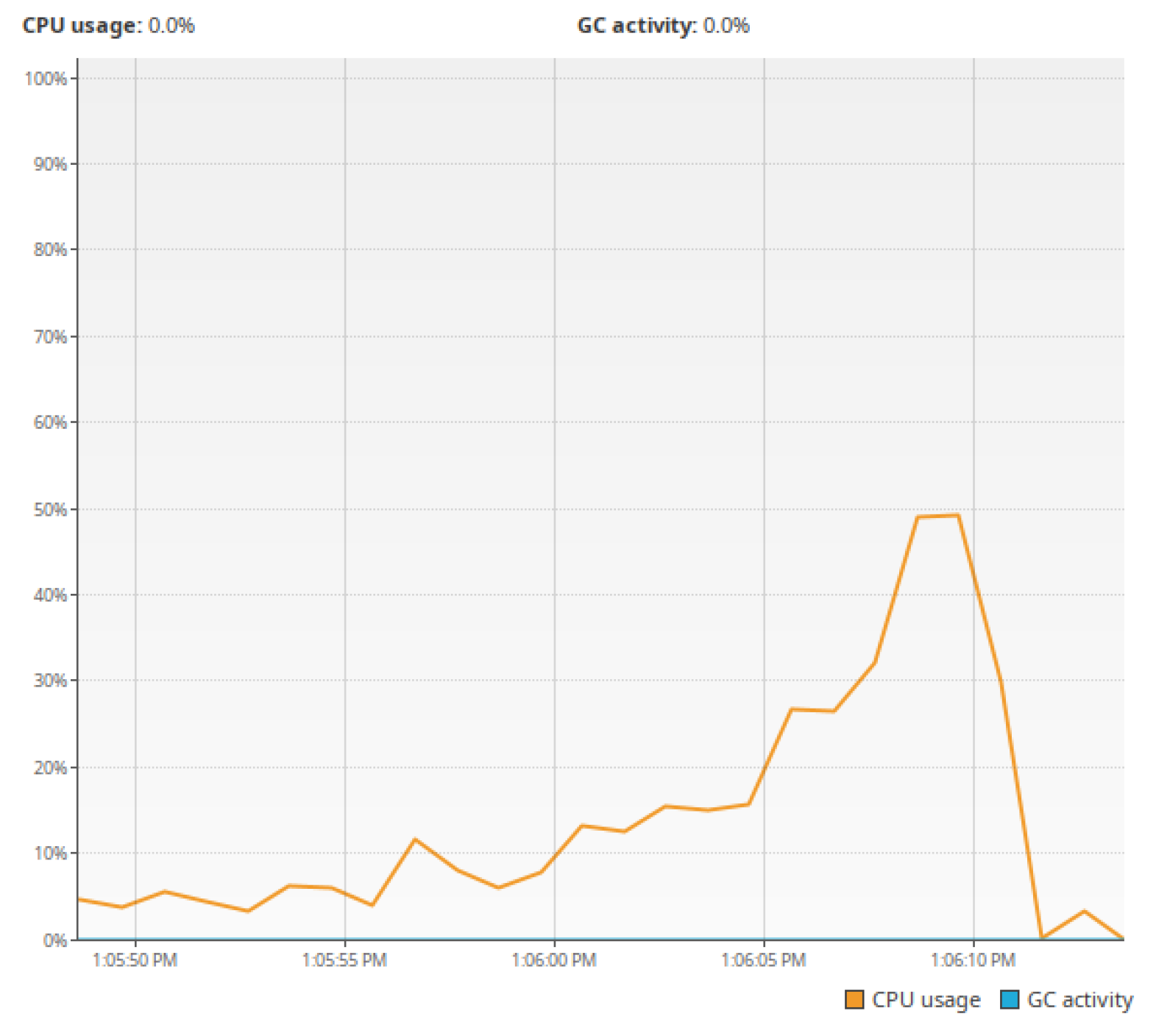

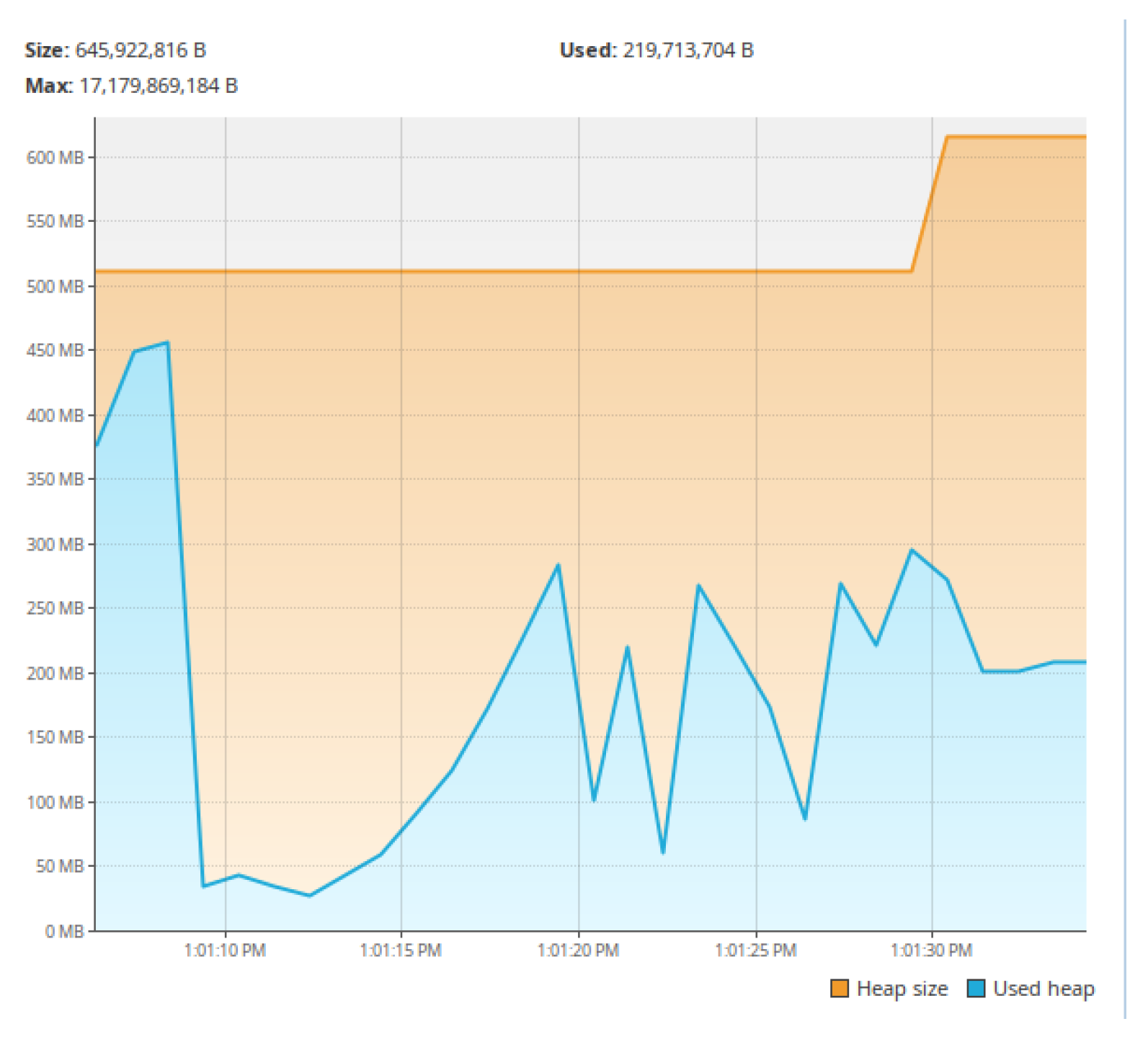

5.4. Memory Consumption and Resource Utilization Analysis

Virtual Threads vs. Structured Concurrency

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Library | DSL Idiom | Data Model APIs | Asynchronous Support | Type Safety | HTML Safety | Progressive Rendering |
|---|---|---|---|---|---|---|
| Freemarker | External DSL | Iterable | ✕ | ✕ | ✕ | ✔ |
| JSP | External DSL | Iterable | ✕ | ✕ | ✕ | ✕ |
| JStachio | External DSL | Iterable | ✕ | ✔(1) | ✕ | ✔ |
| Pebble | External DSL | Iterable | ✕ | ✕ | ✕ | ✔ |
| Qute | External DSL | Iterable | ✕ | ✔(2) | ✕ | ✔ |
| Rocker | External DSL | Iterable | ✕ | ✕ | ✕ | ✔ |
| Thymeleaf | External DSL | Iterable, Stream, Publisher(3) | Publisher(3) | ✕ | ✕ | ✔ |
| Trimou | External DSL | Iterable | ✕ | ✕ | ✕ | ✔ |
| Velocity | External DSL | Iterable, Sequence(4) | ✕ | ✕ | ✕ | ✔ |
| Clojure Hiccup | Nested Eager | All | ✕ | ✔ | ✕ | ✕ |
| Groovy MarkupBuilder | Nested Lazy | All | ✕ | ✔ | ✕ | ✔ |
| HtmlFlow | Chaining | All | ✔ | ✔ | ✔ | ✔ |
| j2html | Nested Eager | All | ✕ | ✔ | ✕ | ✕ |
| KotlinX | Nested Lazy | All | ✕ | ✔ | ✔(5) | ✔ |
| ScalaTags | Nested Eager | All | ✕ | ✔ | ✕ | ✕ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pereira, B.; Carvalho, F.M. Enabling Progressive Server-Side Rendering for Traditional Web Template Engines with Java Virtual Threads. Software 2025, 4, 20. https://doi.org/10.3390/software4030020
