1. Introduction

Today, software has become indispensable in almost every aspect of our lives, making them more convenient, user-friendly, and efficient. In 2001, 17 software developers who had adopted methods different from traditional software development techniques met to discuss their principles and methods, and they presented the results as the Agile Software Development Manifesto [1]. Agile software development methodologies derived from the Manifesto, together with DevOps [2], have since enabled development practices that keep pace with the speed of business. Full-stack engineers, who have a wide range of skills and can handle every stage of the development process (from defining system requirements to design, development, operations, and maintenance), have become prominent by integrating development and operations, prioritizing automation and monitoring, and improving development efficiency. Engineers who keep pace with these new development technologies support the systems that connect our lives, and each of them continues to strive to improve quality.

On 18 July 2024, a faulty update to security software from CrowdStrike Holdings, Inc. [3] caused blue screen errors on Windows devices worldwide. The incident is estimated to have affected approximately 8.5 million Windows devices, making it the largest such incident in history. The global economic damage from the outage is estimated at no less than USD 10 billion, and it disrupted numerous systems, including those of medical facilities and public transportation, affecting people’s lives around the world.

In Japan, system outages at banks and securities firms are frequently reported; these outages have prevented users from accessing systems or conducting transactions, causing widespread disruption to business operations.

Given that such critical outages occur even in systems that require high reliability and have social impact, improving development productivity alone is not enough to reduce outages. Quality management technologies must also advance, without compromising development productivity.

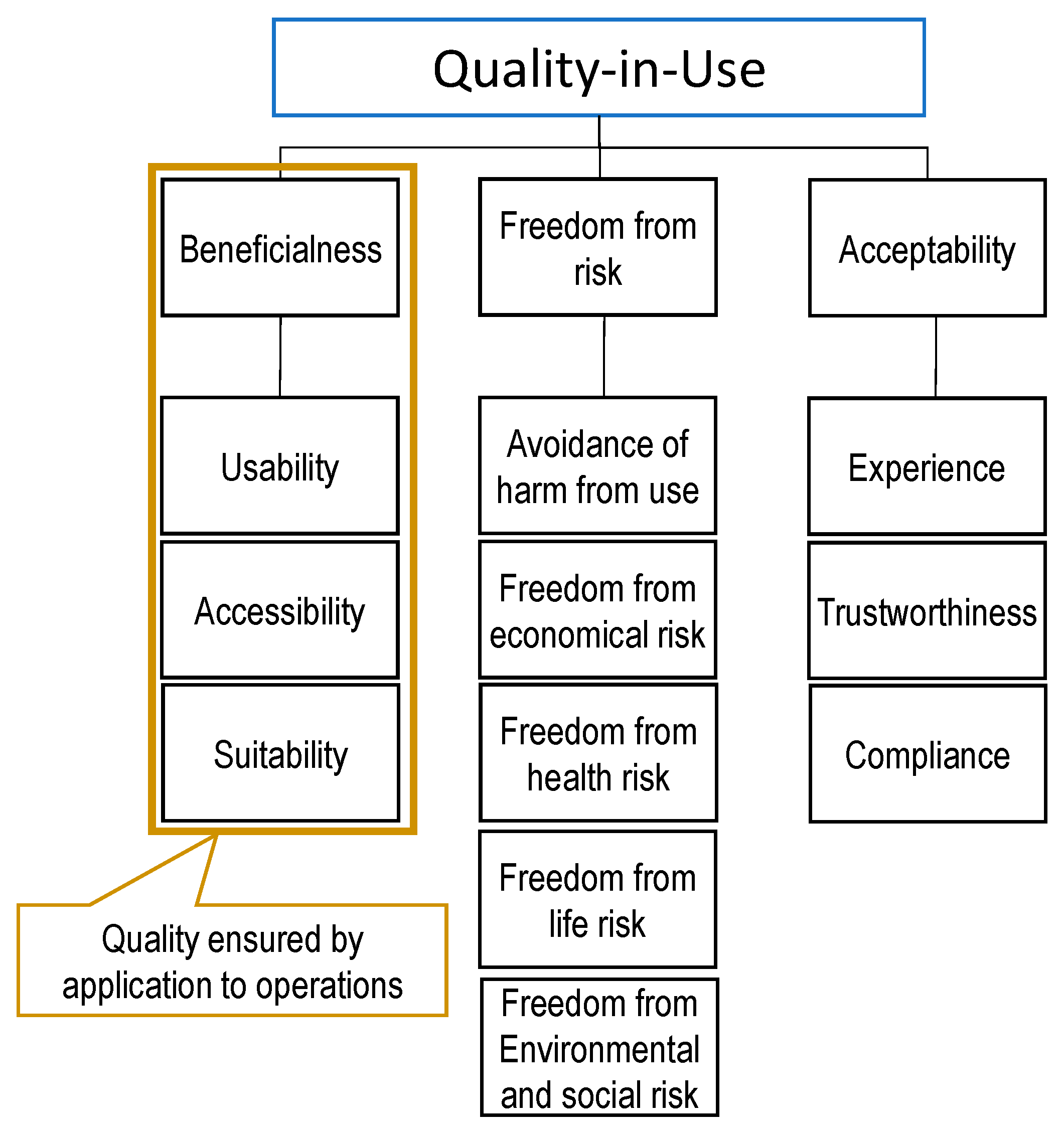

The ISO 25000 [4] series of international standards for software quality requirements and evaluation, known as SQuaRE (Systems and software Quality Requirements and Evaluation), is widely used by software quality management engineers. Its latest quality-in-use model adds “environmental and societal risk” as a sub-characteristic of “freedom from risk,” which requires consideration of measures to mitigate the social impact of systems (Figure 1). Thus, when considering software quality, software developers must consider the social impact of failures and ensure that users can use the software safely and with confidence.

Definition of environmental and societal risk:

The extent to which a product or system mitigates the potential risk to the environment and society at large in the intended contexts of use.

Source:

ISO/IEC 25019:2023 [5] Systems and software engineering – Systems and software Quality Requirements and Evaluation (SQuaRE) – Quality-in-use model, 3.2.2.2 freedom from environmental and societal risk.

Companies developing SaaS and web systems are adopting agile development methodologies to develop systems rapidly while maintaining high quality without sacrificing productivity. Various quality management and testing methodologies suited to agile development have emerged, and to maintain development productivity, it is necessary to focus on the efficiency and automation of software testing, which is often said to account for the majority of development effort.

To effectively implement quality management, it is necessary to use a project Kanban board to visualize the pass rate of automated tests, the number of bugs identified, and the number of fixes, thereby clarifying the status of development productivity and quality.

Mugridge et al. [6] addressed the redundancy, repetition, and high maintenance costs associated with automation using capture-replay tools by developing a custom tool for efficient automation of ATDD (Acceptance Test-Driven Development). Regarding the impact of automation, Berłowski et al. [7] automated over 70% of functional tests in a Java EE/JSF-based project using Selenium WebDriver and JUnit, established a process for continuous execution in Scrum sprints, and demonstrated a 48% reduction in defect leakage rate and a reduction in customer acceptance test duration from 5 days to 3 days after implementation.

Jussi et al. [8] studied barriers to test automation through interviews and observational data from 12 teams in six Indian IT companies, and inductively built a theory of the barriers and success factors for adopting and scaling test automation in agile projects. They found that skill shortages and fear of fragile tests lead to a passive attitude, whereas management’s demand for quality metrics and the team’s experimental culture act as drivers.

In addition, Kato et al. [9] proposed a method for applying the quality characteristics defined in international software quality standards to agile software development using DevOps. They demonstrated that using quality characteristics to clarify target quality in Scrum development and sprint plans can significantly reduce the number of defects detected, even as test density increases. Using quality characteristics as project quality KPIs makes it possible to visualize DoR (Deliverable/Required/Acceptable). Furthermore, by using metrics for each quality characteristic as quality gates for each sprint, they demonstrated that built-in quality can be achieved.

So how can we maximize the effectiveness of test automation to facilitate the collection of quality data? Organizational maturity in software development is commonly assessed using CMMI® [10]. Gibson et al. [11] systematically examined the extent to which CMMI-based process improvement works in practice, using publicly available data and 13 case studies from 10 companies. A cross-sectional analysis of six indicators (cost, schedule, productivity, quality, customer satisfaction, and ROI) showed a median cost reduction of 34%, an average schedule reduction of 22%, and a productivity improvement of up to 62%.

Meanwhile, the maturity of software testing activities can be assessed with TMMi® [12]. Garousi et al. [13] conducted a multivocal literature review covering 181 sources (130 academic, 51 from industry) on software test maturity assessment and test process improvement (TMA/TPI) and extracted 58 maturity models, of which TMMi was the most frequently used (34 sources). The reported business benefits of TMMi include up to a 63% reduction in defect costs, ROI payback within 12 months, and improved customer satisfaction; the operational benefits include prevention of release delays, shorter test cycles, improved risk management, and more accurate measurement-based estimates; and the technical benefits include fewer critical defects, improved traceability, test automation, and improved design techniques. The review concludes that TMMi is effective regardless of organization size or development process. It also identifies factors that inhibit the adoption of TMMi, such as lack of resources, resistance, and ROI uncertainty, and organizes measures to overcome them, providing practical guidance for practitioners considering test process improvement. Also, Eldh et al. [14] proposed the Test Automation Improvement Model (TAIM) as a new approach to improving the quality and efficiency of test automation, complementing TPI and TMMi, which do not specifically address test automation.

The effectiveness of test automation depends on how well an organization adheres to automation principles and the guidelines for achieving them. Objectively evaluating and analyzing this adherence makes it possible to measure the maturity of test automation within a development organization. However, no existing maturity model explicitly maps its practices to ISO 9001 [15] clauses, leaving compliance-driven organizations without clear guidance.

We have devised the research questions listed in Table 1 and elected to develop a quantifiable test automation maturity model (TAMM) that employs a checklist to fill this gap, taking into account the validity of the following hypotheses.

H1: Lower maturity is associated with larger individual differences among automation engineers, greater variation in design and code, and more issues identified during reviews; that is, maturity scores correlate negatively with these measures.

H2: A consensus-based, multi-reviewer assessment approach yields consistent checklist scores with no material divergence after group discussion, with agreement among the three evaluators reaching κ ≥ 0.75.

Research Gap: None of the existing maturity models explicitly link E2E test-automation maturity with an ISO 9001-based QMS.

Research Goal: To devise a TAMM that bridges E2E automation and an ISO 9001-based QMS, and to validate it in three industrial projects.

Figure 2 positions TAMM against CMMI, TMMi, and TAIM along two strategic axes (ISO 9001 alignment and automation scope). Through this research, we provide methodologies for improving test automation maturity, for maximizing the productivity of test work (which accounts for the majority of development tasks) through automated testing, and for raising development productivity and software quality control to a higher level.

2. Related Work for Test Automation

2.1. Study of Test Automation Effectiveness

Early empirical work on test automation showed that where automation is focused is as important as whether automation is used at all. Elbaum et al. [17] showed that prioritizing regression tests so that the cases most likely to reveal faults run first increases early defect detection and gives developers faster feedback loops. Rothermel et al. [18] examined whether test-case prioritization accelerates fault detection in regression testing. Using 10,000+ test suites and >150,000 mutants, the study provides strong quantitative evidence that inexpensive structural heuristics can nearly double early fault-detection effectiveness in practical regression-testing scenarios. Building on this, Elbaum et al. compared multiple prioritization strategies across program types and release cadences and concluded that history-based strategies outperform structural ones when change volatility is high. Together, these three studies established test-case ordering as an inexpensive automation strategy that improves quality without requiring new test artifacts. In addition, Kato [19] demonstrated that scenario testing, which incorporates use cases such as those employed in performance and load testing, can improve the effectiveness of automated system testing when it includes use cases involving user errors such as operational mistakes.
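To make test-case prioritization concrete, the following Python sketch implements greedy “additional statement coverage” ordering, one of the structural heuristics studied in this line of work, together with the APFD (Average Percentage of Faults Detected) metric commonly used to compare orderings. The coverage and fault data are hypothetical, and the sketch is an illustration of the technique rather than the tooling used in the cited studies.

```python
from typing import Dict, List, Set


def additional_coverage_order(coverage: Dict[str, Set[int]]) -> List[str]:
    """Order tests greedily by 'additional' coverage: each step picks the test
    that covers the most statements not yet covered by earlier picks."""
    remaining = dict(coverage)
    covered: Set[int] = set()
    order: List[str] = []
    while remaining:
        # Iterate in name order so ties are broken deterministically.
        best = max(sorted(remaining), key=lambda t: len(remaining[t] - covered))
        if not remaining[best] - covered:
            if covered:
                covered = set()  # all reachable statements covered: start a new round
                continue
            order.extend(sorted(remaining))  # leftover tests cover nothing at all
            break
        order.append(best)
        covered |= remaining.pop(best)
    return order


def apfd(order: List[str], detects: Dict[str, Set[str]]) -> float:
    """Average Percentage of Faults Detected for a test order.

    APFD = 1 - (TF_1 + ... + TF_m) / (n * m) + 1 / (2n), where TF_i is the
    1-based position of the first test that reveals fault i. Assumes every
    fault is revealed by at least one test in `order`.
    """
    faults = set().union(*detects.values())
    n, m = len(order), len(faults)
    first_pos: Dict[str, int] = {}
    for pos, test in enumerate(order, start=1):
        for fault in detects.get(test, set()):
            first_pos.setdefault(fault, pos)
    return 1 - sum(first_pos[f] for f in faults) / (n * m) + 1 / (2 * n)
```

For example, with coverage `{"t1": {1, 2, 3}, "t2": {4, 5}, "t3": {1, 2, 3, 4}}` the greedy order is `t3, t2, t1`; if `t1` reveals fault F1, `t2` reveals F2, and `t3` reveals F1 and F3, this order scores an APFD of 13/18 (about 0.72), versus 0.5 for the naive order `t1, t2, t3`.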

Automation then moved beyond unit-level suites. Memon et al. [20] analyzed 12 rapidly evolving GUI applications and found that GUI-specific test cases expose faults that code-centric suites miss. Memon et al. also introduced “GUI smoke tests”: short, automatically generated event sequences that detected 64% of field faults in just 10% of the original execution time. These papers validated the feasibility of high-level automation for preventing user-interface defects.
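The idea of short, automatically generated event sequences can be sketched as bounded path enumeration over an event-flow graph. This minimal Python example assumes the GUI has already been modeled as such a graph (an edge e1 to e2 means e2 can be executed immediately after e1); the event names and the `smoke_sequences` function are hypothetical and do not reproduce Memon et al.’s tooling.

```python
from typing import Dict, List, Tuple


def smoke_sequences(efg: Dict[str, List[str]], initial: List[str],
                    max_len: int) -> List[Tuple[str, ...]]:
    """Enumerate every event sequence of length 1..max_len that starts at an
    initial event and follows edges of the event-flow graph `efg`."""
    sequences: List[Tuple[str, ...]] = []
    frontier: List[Tuple[str, ...]] = [(e,) for e in initial]
    for _ in range(max_len):
        sequences.extend(frontier)
        # Extend each sequence by one follow-up event allowed by the graph.
        frontier = [seq + (nxt,) for seq in frontier for nxt in efg.get(seq[-1], [])]
    return sequences
```

With the graph `{"Open": ["Save", "Close"], "Save": ["Close"], "Close": []}` and `max_len=2`, the sketch yields `("Open",)`, `("Open", "Save")`, and `("Open", "Close")`; keeping `max_len` small is what keeps such a suite short enough to run as a smoke test.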

Bertolino et al. [21] quantified the economic dimension with an ROI model that balances scripting and maintenance costs against fault-removal savings; organizations that adopted their guidelines reported payback periods of under nine months. Nagappan et al. [22] linked Test-Driven Development (TDD), often automated via continuous unit testing, to 40–90% lower defect density across four industrial projects. This suggests that automation embedded in the workflow multiplies the ROI predicted by earlier static models.
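The trade-off between up-front scripting cost, ongoing maintenance, and savings can be illustrated with a deliberately simplified model. This Python sketch is not Bertolino et al.’s ROI model; it assumes a one-time scripting cost and constant monthly maintenance and savings, all expressed in the same unit (for example, person-hours), and the function names are hypothetical.

```python
import math
from typing import Optional


def payback_months(scripting_cost: float, monthly_maintenance: float,
                   monthly_savings: float) -> Optional[int]:
    """Months until cumulative net savings cover the up-front scripting cost.
    Returns None when maintenance consumes all savings (no payback)."""
    net = monthly_savings - monthly_maintenance
    if net <= 0:
        return None
    return math.ceil(scripting_cost / net)


def roi(scripting_cost: float, monthly_maintenance: float,
        monthly_savings: float, months: int) -> float:
    """Return on investment over a fixed horizon: (benefit - cost) / cost."""
    cost = scripting_cost + monthly_maintenance * months
    benefit = monthly_savings * months
    return (benefit - cost) / cost
```

With illustrative figures of 400 h of scripting, 20 h/month of maintenance, and 80 h/month of saved manual execution, `payback_months` returns 7 and the 12-month `roi` is 0.5; if maintenance grows to match the savings, no payback occurs, which is why script maintainability matters so much to ROI.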

The scope and maturity of automation became the next order of business. The Test Automation Improvement Model (TAIM) outlines capability stages that mirror the Capability Maturity Model Integration (CMMI) but emphasize the maintainability of scripts and the analysis of results. A mapping of 63 empirical studies showed that organizations reaching TAIM Level 3 reduced release lead times by ~30%. Garousi and Mäntylä [23] complemented this with a multivocal literature review (MLR) that distilled practical “when/what to automate” heuristics (e.g., automate stable regression checks first and postpone flaky integration steps), grounded in the literature and practitioner interviews.

The practitioner voice is further amplified by Rafi et al. [24], who surveyed 115 engineers. The main issues raised were fragile scripts, which created false expectations and made maintenance difficult. The survey questions were designed based on a literature review indicating that false alarms (false detections) are a widely recognized issue in industry. Parry et al. [25] systematically analyzed the fragility issues that often arise in end-to-end (E2E) testing, classified their root causes as timing, test order, and environment, and recommended isolation and retry mechanisms. They reiterated that the effectiveness of automation hinges on the reliability of the tests themselves.
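A retry mechanism is only useful if it surfaces instability instead of hiding it. The following Python sketch assumes a test is a zero-argument callable that raises on failure; the names `run_with_retry` and `RetryResult` are hypothetical and are not taken from Parry et al. or from any test framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RetryResult:
    name: str
    verdict: str   # "pass", "fail", or "flaky"
    attempts: int


def run_with_retry(name: str, test: Callable[[], None],
                   max_attempts: int = 3) -> RetryResult:
    """Re-run a failing test up to max_attempts times. A test that fails and
    then passes is reported as "flaky" rather than silently as "pass"."""
    for attempt in range(1, max_attempts + 1):
        try:
            test()
        except Exception:
            continue  # in this sketch, any exception counts as a failed attempt
        return RetryResult(name, "pass" if attempt == 1 else "flaky", attempt)
    return RetryResult(name, "fail", max_attempts)
```

Reporting “flaky” as a separate verdict lets the team track unstable tests (for example, timing-dependent waits) as their own backlog item, while a consistent failure is still reported as “fail.”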

The evolution of software tools has paralleled methodological insight. Berłowski et al. documented an SME’s year-long Selenium WebDriver adoption, recording a 48% drop in production escapes and an 18% decline in customer-reported issues after only 320 h of automation effort. This is evidence that modern open-source frameworks lower entry barriers outside Fortune 500 contexts. Liu et al. [26] benchmarked 11 automated test-generation tools for Java and found that large language model-powered generators achieve 14% higher branch coverage than search-based tools but still lag behind developers in oracle quality, which indicates the current limits of AI-assisted automation.

Continuous integration (CI) systems, such as Jenkins [27], operationalize these techniques, enabling fast feedback and automatic enforcement of quality gates. The ubiquitous adoption of Jenkins thus acts as a sociotechnical enabler for the gains observed in many empirical studies.

Pérez-Verdejo et al. [28] took a quality-model perspective, mapping quality characteristics to automation effects. They found that maintainability and reliability improve most consistently, whereas portability shows mixed outcomes. Mariani et al. [29] synthesized these findings into a manifesto that positions test automation as the “central pillar” of modern QA and urged researchers to treat automation debt as seriously as code debt. Bridging research and DevOps, Kato et al. [30] introduced a quality classification rubric that maps testing tasks to DevOps pipeline stages. Their field data showed that categorizing tests by business risk rather than by layer improved stakeholder buy-in for automation investments. Kato further argued that designing tests “automation-first” increases script reuse by 22% across sprints, in line with the behavior-driven design philosophies advocated in the agile literature.

Readability also matters. Buse et al. [31] learned a metric for code readability and correlated it with post-release defects. When combined with test creation triggered by static analysis, readable test code was associated with 16% fewer production bugs. This suggests that the way automated tests are written influences how well they shield against defects over the long term.

Pressman and Maxim [32] summarize foundational process knowledge in their practitioner text, which integrates decades of automation research into lifecycle guidance. Their chapters underpin many industry training programs, helping academic findings propagate into practice.

In this way, the effects of test automation are being analyzed both academically and commercially, and research is continuing toward the realization of even better automation.

2.2. Considering Test Automation from Quality Control Perspective

Test automation is commonly used to make functional testing more efficient. However, organizing test automation according to international software quality standards can yield benefits beyond functional conformity. Consequently, those involved in quality management need a thorough understanding of the quality characteristics on which the quality model is built.

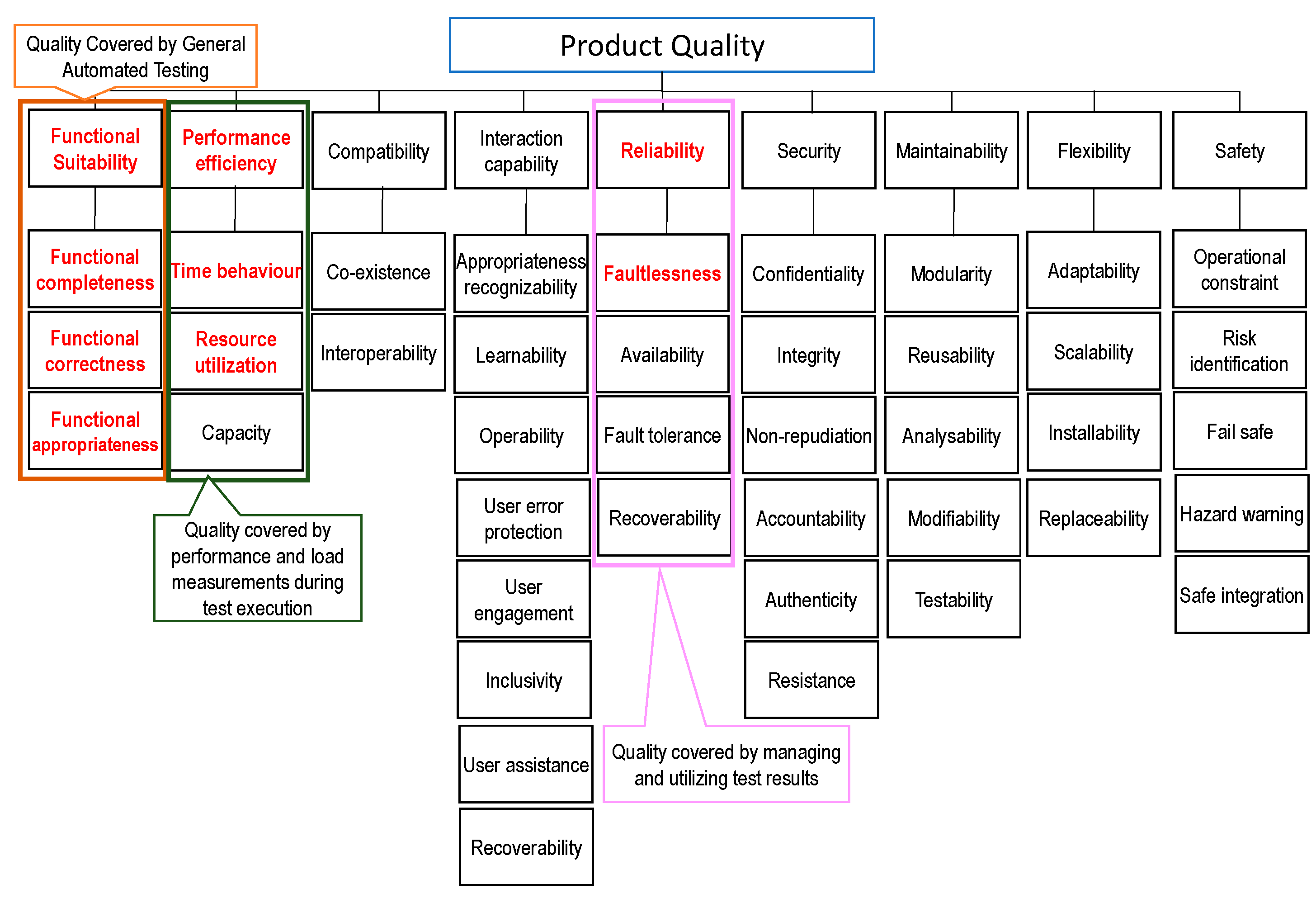

In general, the effects of software test automation on each quality characteristic are shown in Figure 3 for product quality and in Figure 4 for quality-in-use. Ensuring “functional suitability” is the fundamental purpose of functional testing. However, measuring the processing time and load of each function during testing also provides data for ensuring “performance efficiency.” In addition, aggregating and analyzing test result reports can reveal the status and trends of weak functions. Moreover, repeated execution of the automated tests can contribute to ensuring the system’s “reliability.”
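As a minimal sketch of how functional test results can double as performance and weak-function data, the following Python example assumes each automated test records the product function it exercises, its verdict, and the measured processing time. The record fields and the functions `summarize` and `weakest_functions` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List


@dataclass
class ResultRecord:
    function: str       # product function exercised by the test
    passed: bool
    duration_s: float   # processing time measured during the functional test


def summarize(records: Iterable[ResultRecord]) -> Dict[str, Dict[str, float]]:
    """Group results by function: run count, pass rate, and mean duration."""
    groups: Dict[str, List[ResultRecord]] = defaultdict(list)
    for rec in records:
        groups[rec.function].append(rec)
    return {
        fn: {
            "runs": len(recs),
            "pass_rate": sum(r.passed for r in recs) / len(recs),
            "mean_duration_s": mean(r.duration_s for r in recs),
        }
        for fn, recs in groups.items()
    }


def weakest_functions(summary: Dict[str, Dict[str, float]], n: int = 1) -> List[str]:
    """Functions with the lowest pass rate, i.e., candidates for closer review."""
    return sorted(summary, key=lambda fn: summary[fn]["pass_rate"])[:n]
```

The pass rate per function feeds “functional suitability” and “reliability” trends across repeated runs, while the mean duration gives a first indicator for “performance efficiency” without a separate test campaign.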

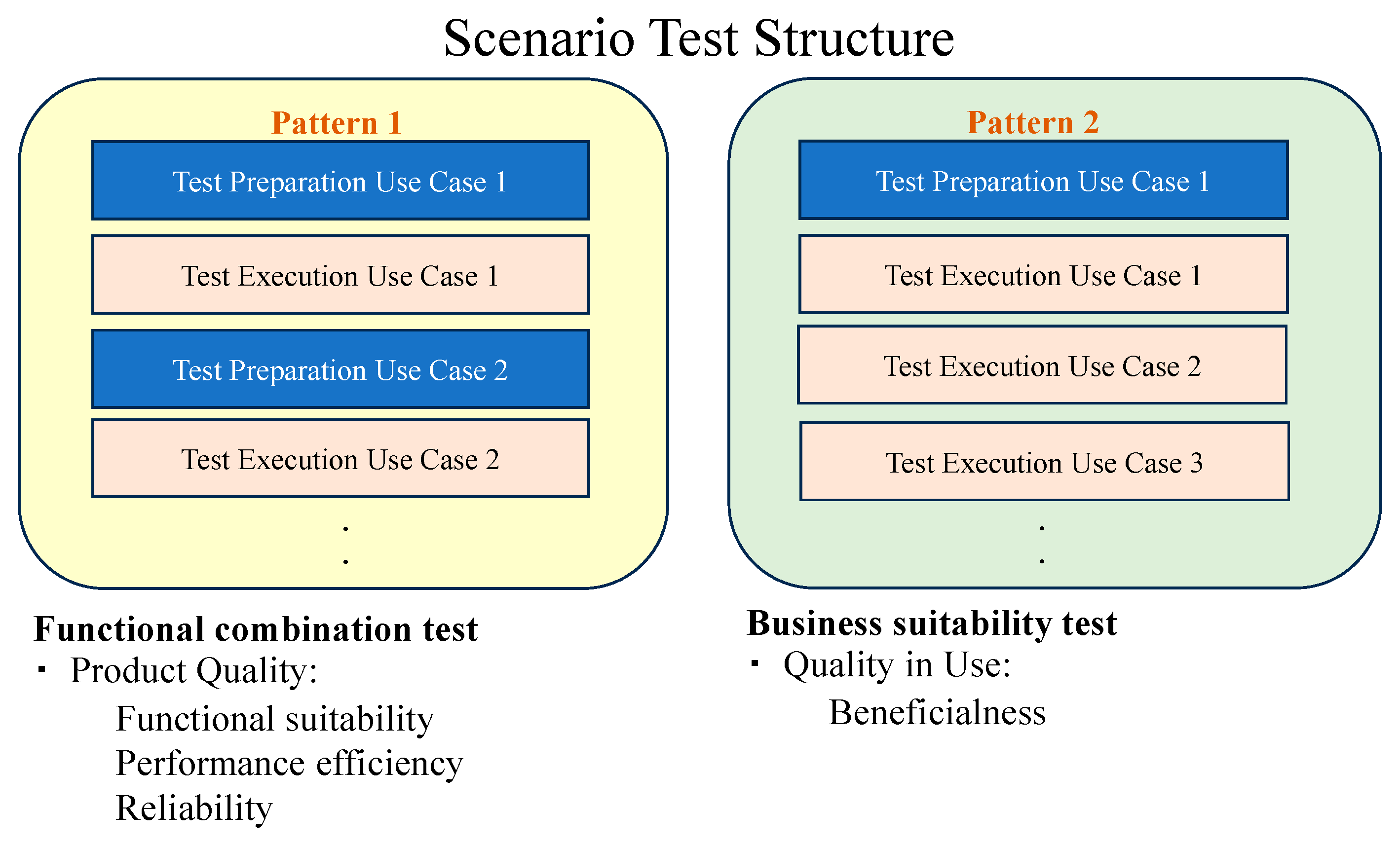

In the case of scenario testing, whether the product quality is to be confirmed as a functional combination test or quality-in-use is to be confirmed as a business adaptation test depends on how the scenario is implemented.

This approach is the same for manual and automated testing; in automated testing, however, omitting the preparatory test cases from the result report makes the results applicable to both test patterns. If a problem occurs in a preparatory test case, marking the result as “NG” instead of “Fail” clearly indicates that the problem occurred before test execution and records that the test could not be executed.
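The NG/Fail convention can be sketched as follows. This Python example assumes a scenario consists of named preparatory steps and named check steps, each a zero-argument callable that raises on failure; `run_case` and the report format are hypothetical illustrations, not a specific tool’s behavior.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_case(case: str, preparation: List[Step],
             checks: List[Step]) -> Tuple[str, List[str]]:
    """Run one scenario and return (verdict, report_lines).

    Preparatory steps are not written to the report. If one of them raises,
    the case is marked "NG" (not executed) instead of "Fail"."""
    for step_name, step in preparation:
        try:
            step()
        except Exception:
            return "NG", [f"{case}: NG (not executed; preparation '{step_name}' failed)"]
    verdict, report = "Pass", []
    for check_name, check in checks:
        try:
            check()
            report.append(f"{case}/{check_name}: Pass")
        except Exception:
            report.append(f"{case}/{check_name}: Fail")
            verdict = "Fail"
    return verdict, report
```

Because only check steps appear in the report, the same scenario can be read as a functional combination test or as a business adaptation test, and an NG entry signals an environment or data problem rather than a product defect.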

As shown in Figure 5, both product quality and application quality can be managed based on the results of automated testing.

In agile development, using automated unit test pass rates and use case automation as sprint completion criteria has been shown to facilitate the use of test automation for quality control. Furthermore, graphs of pass rates and reliability growth curves for each test provide a more comprehensive understanding of the current quality status.
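Sprint completion criteria of this kind can be expressed as an automated gate. The following Python sketch is illustrative only: the metric fields, the function `gate_violations`, and the default thresholds (100% unit test pass rate, 80% use case automation) are assumptions, not values prescribed in this study.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SprintMetrics:
    unit_tests_total: int
    unit_tests_passed: int
    use_cases_total: int
    use_cases_automated: int


@dataclass
class SprintGate:
    min_unit_pass_rate: float = 1.0        # illustrative threshold
    min_use_case_automation: float = 0.8   # illustrative threshold


def gate_violations(m: SprintMetrics, gate: SprintGate) -> List[str]:
    """Return the unmet completion criteria; an empty list means the sprint passes."""
    def ratio(part: int, whole: int) -> float:
        return part / whole if whole else 0.0

    pass_rate = ratio(m.unit_tests_passed, m.unit_tests_total)
    automation = ratio(m.use_cases_automated, m.use_cases_total)
    violations = []
    if pass_rate < gate.min_unit_pass_rate:
        violations.append(
            f"unit test pass rate {pass_rate:.0%} < {gate.min_unit_pass_rate:.0%}")
    if automation < gate.min_use_case_automation:
        violations.append(
            f"use case automation {automation:.0%} < {gate.min_use_case_automation:.0%}")
    return violations
```

For instance, a sprint with 198 of 200 unit tests passing and 9 of 10 use cases automated yields the single violation `unit test pass rate 99% < 100%`; recording these values per sprint also provides the data points for pass-rate graphs.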

Moreover, by validating the quality assured for each quality characteristic, test automation can be used to improve the visibility of quality.

To perform quality control more accurately, testing work must be quantified through test automation. In other words, measuring the maturity of test automation makes it possible to measure the maturity of testing and understand its contribution to quality control. Furthermore, integrating test automation with an ISO 9001-based QMS can improve test automation maturity throughout the entire development organization.

2.3. Research Methodology for Test Automation

Wang et al. [34] developed a self-assessment tool to evaluate the maturity of test automation in the software industry. In this study:

15 key areas (KAs) were identified through a literature review.

An initial tool (including 77 assessment items) was developed.

The tool was improved based on the feedback collected.

The novelty of this study is as follows:

It identifies 15 key areas for evaluating test automation maturity.

It develops and evaluates an assessment tool based on scientific methods.

It discusses the importance of addressing bias (especially response bias) in self-assessment tools.

It uses the Content Validity Index (CVI) and the cognitive interview method to confirm the effectiveness of the assessment tool.

It addresses response bias by identifying problems in the design of self-assessment tools and suggesting improvements.

It also provides the following two benefits:

Industry practicality: It is useful for companies to identify problem areas and create improvement plans. It provides an objective and quantitative assessment of test automation maturity.

Academic contribution: It is a demonstration of the process of development of assessment tools that can be applied to other areas of software engineering.

However, the initial 77-item tool is time-consuming to complete, which limits its practicality in the field. It also lacks specific guidelines for comparison with the industry as a whole and for each maturity level.

TAIM provides an objective improvement process that removes the subjectivity of the TPI and TMMi models. It defines 10 KAs and one general area (GA) on the basis of scientifically valid measures and provides a framework for evaluating and implementing improvements in each KA in a stepwise fashion. Its main characteristics are as follows.

It comprehensively evaluates the entire testing process, including test code design, environment configuration, and tool integration, and uses measurements in each KA to quantify the quality and efficiency of the test suite and make the improvement process transparent. The results reveal high test code maintenance costs and lack of architecture as areas for improvement. TAIM is more of a capability model for full automation than a maturity model.

This study employed a mixed-methods design comprising (a) checklist-based maturity assessment, (b) statistical agreement analysis among three assessors, and (c) short-term remediation followed by re-assessment.

Sample: Three industrial teams (RPA, Power Automate, Generative-AI) selected via maximum-variation sampling.

Assessment procedure: Interviews and audits of the test suite, the guidelines, other documents for test execution or maintenance, and the test results.

Reliability: Inter-rater agreement quantified by Cohen’s κ (target ≥ 0.75).

Hypothesis testing: H1 using Spearman’s ρ between the maturity score and (i) design variance σ², (ii) review comment count; H2 using κ.
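Both statistics can be computed with a few lines of standard-library Python. Because Cohen’s κ is defined for two raters, this sketch assumes agreement among the three assessors is summarized pairwise (Fleiss’ κ would be an alternative); the assessor names, checklist levels, and function names are hypothetical. Spearman’s ρ is computed as the Pearson correlation of average ranks, which handles ties.

```python
from collections import Counter
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def cohen_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(a)
    p_observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    p_expected = sum(ca[k] * cb[k] for k in ca) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)


def pairwise_kappa(ratings: Dict[str, Sequence[str]]) -> Dict[Tuple[str, str], float]:
    """Cohen's kappa for every pair of assessors."""
    return {(r1, r2): cohen_kappa(ratings[r1], ratings[r2])
            for r1, r2 in combinations(sorted(ratings), 2)}


def average_ranks(xs: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks.
    Raises ZeroDivisionError if either input is constant."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)
```

Under H1, `spearman_rho(maturity_scores, design_variances)` would be expected to be negative; under H2, every value returned by `pairwise_kappa` would be expected to reach at least 0.75.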

Development organizations increasingly add new features to, and enhance the quality of, existing systems or services through derivative development, and they manage incidents using a quality management system (QMS). Test automation has been shown to contribute to development productivity and quality improvement; if it is enhanced in conjunction with the QMS, it can be improved concurrently with the development process. Consequently, TAMM must be examined carefully in relation to ISO 9001, in addition to being assessed as a maturity model.

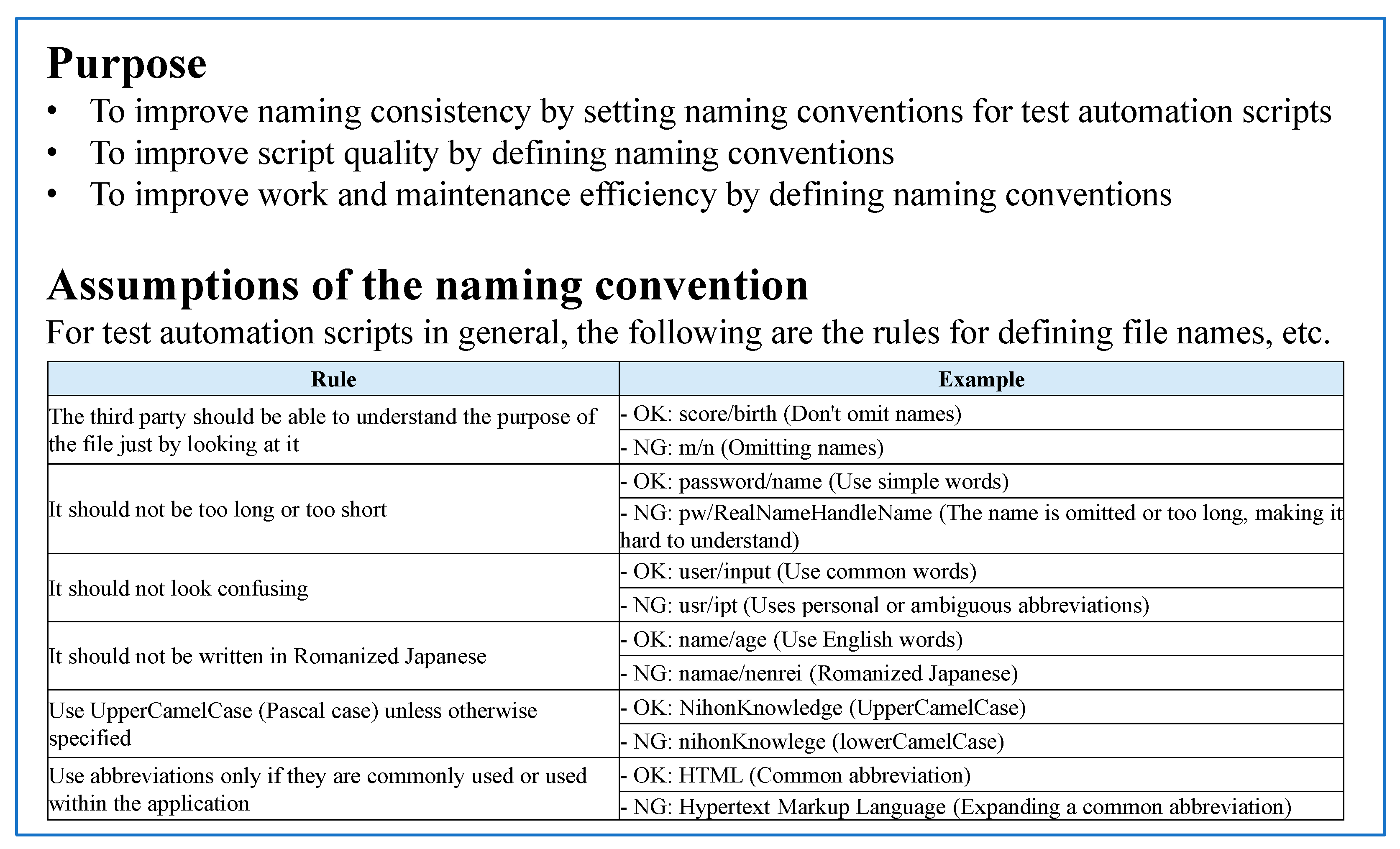

3. Effectiveness of Guidelines for Test Automation

In general, software development work tends to depend heavily on individual developers. Consequently, software engineering research has extensively studied coding conventions, review processes, and related subjects. Improving code readability has a substantial influence on code quality. Therefore, when developing software, we consistently adhere to coding conventions and verify compliance through reviews.

In agile development, the project charter is a document that provides an overview of the project, covering its purpose, scope, budget, schedule, and risks. It is created at the inception of the project and serves as a framework for project progression (Table 2). Establishing a project charter helps share the project objectives among its members.

As with general software development, to increase the effectiveness of test automation, the development of automated tests must not depend on specific individuals. As shown in Table 3, the actors involved in test automation can be categorized naturally, in the same way as for general development work. Depending on their skills, designers and developers also serve as reviewers. Based on this classification, a guideline structured as in Listing 1 is often considered most appropriate. However, such guidelines only list activities and standardize the tasks to be performed; they do not improve the quality of each task. It is therefore necessary to establish criteria for each task. Clearly setting these criteria and managing compliance with them makes it easier to link the guidelines with the QMS.

Listing 1. Draft table of contents for test automation guidelines.

1. Preparation (Common)
   - Installing the test tool
   - Building a test environment
   - Checking the operation of test tool samples
2. Test design/script implementation (Test script designers)
   - Test scenario design
   - Design/implementation of use cases
   - Design/implementation of test data
3. Test script development (Test script developers)
   - Script development
   - Script debugging
   - Test suite creation
4. Test execution (Test executors)
   - Script execution
   - Test results analysis
5. Test maintenance/Test operations (Maintenance developers)
   - Development of a general-purpose test environment (CI environment)
   - Maintenance and updating of the test environment
   - Maintenance and updating of test tools
   - Script maintenance

Based on the key points of the identified guidelines, we created guidelines for projects using Ranorex [35] and Power Automate [36].

For projects using Power Automate, we created a separate document outlining coding conventions for flows that function as automation scripts. This difference stems from the fundamental differences between Power Automate and Ranorex. Power Automate is a GUI-based, no-code development tool, whereas Ranorex requires coding. This results in lower update frequencies for coding conventions compared to guidelines, hence the separate document.

Ranorex also supports GUI-based development; however, user code can be implemented in C#, and user coding is essential to improving the quality of automated testing. In other words, logic recorded and edited on a GUI basis can be converted to user code and then converted into reusable components. As with general development, these components increase the reusability of test code.

Listing 2 is the table of contents for the Power Automate guidelines, which span over 150 pages.

The guidelines provide step-by-step explanations of development and operational procedures, including installing Power Automate and reusing scenario tests created for existing manual tests as automated tests. The guidelines also explain why subflows are necessary and how to create them. The review section describes the criteria for implementing flows and offers tips to prevent flows from becoming dependent on specific individuals. The Operations section describes how to perform build verification tests (BVT) within the project’s shared environment and provides points to consider when executing BVT in the shared environment and in the implementer’s local environment. It also describes the criteria for continuously upgrading Power Automate to ensure long-term stable operation.

In contrast, for projects that adopted Ranorex, the guidelines were developed using Confluence [37], a wiki-based development document management tool. Confluence was chosen because the project uses Jira [38], a ticket management tool, for task and bug management. Furthermore, Bitbucket [39], a source code management tool that can be integrated with Ranorex, was adopted to manage test suites created with Ranorex. The guidelines are documented in the Confluence space and integrated with Jira.

The main differences between the Power Automate and Ranorex guidelines stem from these tools’ inherent design differences, with little variation in the rules governing test operations. Ranorex can convert code created in an integrated development environment into user code and transform it further into components.

The Ranorex guidelines also specify that test suites should be used as test case management tools with clearly defined test modules for each case. Additionally, the guidelines outline the implementation process for user code.

Listing 2. Table of contents of the guideline for projects using Power Automate.

1. About this guideline
   - 1.1 Purpose of the Guidelines
   - 1.2 Subject of the Guideline
2. Test Environment construction
   - 2.1 Execution environment
   - 2.2 Power Automate License
   - 2.3 How to install Power Automate for Desktop
   - 2.4 How to connect from Cloud Flow to Desktop Flow
3. Test Design Documentation
   - 3.1 Positioning of Test Design Documentation
   - 3.2 Template files for test design
   - 3.3 How to design
4. Backup data creation
5. Test files creation
   - 5.1 Test Case sheets
   - 5.2 Test Data sheet
   - 5.3 Generic Data Conversion Tool
   - 5.4 Global Variables
6. Flow creation
   - 6.1 Cloud Flow Configuration
   - 6.2 Desktop Flow Configuration
   - 6.3 Creation rules
   - 6.4 Exception Handling
   - 6.5 Precautions
   - 6.6 Confirmation of Operation after Creation
   - 6.7 Merge into shared environment
7. Review
   - 7.1 Purpose of review
   - 7.2 Review target
   - 7.3 Reviewer
   - 7.4 Example of review perspectives
   - 7.5 Checklist for review
   - 7.6 Record of review
8. Operation
   - 8.1 Operational flow
   - 8.2 Frequency and timing of test execution
   - 8.3 Test execution method
   - 8.4 Test result management
   - 8.5 Operation for daily build testing
   - 8.6 Test Result Reporting
   - 8.7 Revision up Scenario
   - 8.8 Conversion Scenario
   - 8.9 Setup test
   - 8.10 Past trouble collection
9. Maintenance
   - 9.1 Product Revision Upgrade (without scenario update)
   - 9.2 Product Revision Upgrade (with scenario update)
   - 9.3 PAD Version Upgrade
   - 9.4 Script management

For the Ranorex project, we used Jira, a ticket management tool, to oversee task execution and track software defects, and managing test automation documentation in Confluence spaces has proven effective in improving efficiency. As noted above, the main difference between the Power Automate and Ranorex guidelines lies in the tools themselves. Because Ranorex can convert code created in the GUI into user code and then into components, increasing reusability through componentization, the Ranorex guidelines focus primarily on reusability. They explain how to use test suites as test case management tools and how to use the test modules associated with each test case, and they provide important considerations for implementing user code.

These guidelines clearly define the use of test suites and test modules for managing Ranorex test cases and provide important considerations for implementing user code. When used with coding conventions (Figure 6), they prevent inconsistencies between developers and reduce dependency on specific individuals. Furthermore, they clearly explain the concept and implementation methods of script verification, clarifying test design and implementation rules and enabling the measurement of test coverage.

Ranorex includes a data binding feature that allows tests to be executed repeatedly using different data sets. Utilizing this feature increases test coverage and enables the seamless automation of screen operation tests incorporating diverse data sets. To use the data binding feature without creating dependency on individuals, it is necessary to establish rules. Additionally, to distinguish between software and script issues, it is essential to clearly define test result reporting rules. Depending on the test execution order or environment, “flaky tests” may arise, causing test results to become unstable. Therefore, test reports are important for reducing these issues, and report rules play a crucial role in this process.
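The principle behind data binding (one test procedure, many data rows, one verdict per row) can be sketched in plain Python. This is not Ranorex’s API: `validate_login` is a hypothetical stand-in for the system under test, and the CSV data set is invented for illustration.

```python
import csv
import io
from typing import Callable, Dict, List, Tuple

# Hypothetical data set; in a real project this would be an external file
# under version control, governed by the project's data binding rules.
LOGIN_DATA = """user,password,expected
alice,correct-horse,ok
bob,short,error
,whatever123,error
carol,12345678,error
"""


def validate_login(user: str, password: str) -> bool:
    """Stand-in for the system under test (hypothetical rule)."""
    return bool(user) and len(password) >= 8


def check_login(row: Dict[str, str]) -> None:
    """One test procedure, executed once per data row."""
    expected_ok = row["expected"] == "ok"
    assert validate_login(row["user"], row["password"]) == expected_ok


def run_data_driven(test: Callable[[Dict[str, str]], None],
                    rows: List[Dict[str, str]]) -> List[Tuple[int, str]]:
    """Run the same test for every row and report the verdict per row number."""
    results = []
    for i, row in enumerate(rows, start=1):
        try:
            test(row)
            results.append((i, "Pass"))
        except AssertionError:
            results.append((i, "Fail"))
    return results


rows = list(csv.DictReader(io.StringIO(LOGIN_DATA)))
```

Here `run_data_driven(check_login, rows)` returns Pass for rows 1 to 3 and Fail for row 4. Reporting the row number makes it possible to decide whether such a failure stems from wrong test data or from the software, which is exactly what the reporting rules need to capture.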

Ranorex can be integrated with Jenkins to build a continuous integration (CI) environment. Recovery scripts and error handling for test failures improve test continuity by enabling repeated execution of automated tests and early detection of degradation.
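The role of a recovery script can be illustrated with a small suite runner. In this Python sketch, `run_suite` and the `recover` callback are hypothetical; `recover` stands for whatever returns the system under test to a known state (for example, closing dialogs or restarting the application), and the sketch does not model Ranorex or Jenkins themselves.

```python
from typing import Callable, List, Tuple


def run_suite(tests: List[Tuple[str, Callable[[], None]]],
              recover: Callable[[], None]) -> List[Tuple[str, str]]:
    """Run all tests in order. After a failure, call `recover` to bring the
    system under test back to a known state so later tests can still run.
    If recovery itself fails, the remaining tests are marked "NotRun"."""
    results: List[Tuple[str, str]] = []
    for idx, (name, test) in enumerate(tests):
        try:
            test()
        except Exception:
            results.append((name, "Fail"))
            try:
                recover()
            except Exception:
                # The state is no longer trustworthy, so stop rather than
                # report misleading failures for the remaining tests.
                results.extend((rest, "NotRun") for rest, _ in tests[idx + 1:])
                break
        else:
            results.append((name, "Pass"))
    return results
```

Distinguishing “NotRun” from “Fail” keeps a single broken environment from inflating the failure count in nightly CI runs, so that degradation trends reflect the product rather than the test infrastructure.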

Establishing guidelines reduces dependency on specific individuals, ensures data quality, improves test accuracy, and contributes to the development of test automation personnel.

In agile development, the effectiveness of test automation can be enhanced by combining it with guidelines on the quality characteristics to be checked in each sprint. This combination ensures that quality checks are performed effectively in every sprint and that each quality characteristic is still met when new features are added.

Standardizing test automation provides the same benefits as standardizing the software engineering development process; for example, it improves the accuracy of development man-hour estimates and contributes to quality control. In other words, test automation guidelines should be aligned from the outset with the development rules and their effects on the development process, and it is desirable to apply general development process rules to test automation as well.

As with organizational process maturity models such as CMMI, developing a test automation maturity model and establishing assessment methods enables objective measurement of guideline effectiveness. Furthermore, integration with a QMS based on ISO 9001 clarifies how far test automation has been adopted and what challenges to quality improvement remain within the development organization.

4. Developing TAMM

4.1. Preparation for Maturity Model Building

Because TAIM does not consider compatibility with ISO 9001, we decided it was necessary to examine methods that link test automation to quality management without compromising the usefulness of previous studies.

In constructing TAMM, we note that CMMI is commonly used as a guideline and reference framework for improving test processes. We also considered the international standard ISO/IEC 33000 family for the evaluation of TMMi and for assessment methodologies. Because the TMMi maturity model, a testing counterpart of CMMI, is widely used by test teams, we decided to base TAMM on the TMMi model.

The resulting model places significant emphasis on E2E testing, with an underlying focus on continuous improvement. It uses the degree of compliance with established policies and rules as its measure of maturity, which allows an organization’s overall position to be assessed comprehensively.

4.2. Development of TAMM

CMMI and TMMi are both five-level models. Because organizations that do not implement test automation at all are excluded, TAMM has four levels. Each level of the test automation process is shown in Figure 7.

Table 4 presents an overview of the status of each level. It also indicates the status that should be aimed for in order to reach the next level.

The model shows the steps necessary to reach the next level and makes the present state of test automation, together with the issues that must be addressed to progress, understandable even without a formal assessment. It was first presented at a seminar on test automation, where it received favorable feedback from many attendees.

Level 1 is a state in which engineers automate without any established rules, relying exclusively on their own methods; in essence, the effectiveness of automation depends on individuals. E2E test automation code and flows created in this state are generally difficult to share with other engineers and to maintain and operate in the long term. Even unit test code, which is frequently written by the developers who implement the software, must be designed to avoid dependence on individuals. To advance beyond this stage, it is essential to clarify the purpose of test automation, strengthen teamwork awareness, and establish and disseminate minimum rules, such as coding conventions and test pass/fail criteria, as guidelines.

Level 2 signifies the ability to design and execute automated tests that focus on improving quality and maintaining a certain level of quality, which is distinct from merely automating manual test scenarios. The team begins to recognize the benefits of test automation, and guidelines and rules for test automation are established. To progress to the next level, the team must establish a reproducible operational environment, use build verification testing (BVT) to detect degradation quickly, and integrate its automated tests into a continuous integration (CI) environment.

Level 3 is the state in which automated tests can be executed repeatedly using external data, that is, automated testing is used as data-driven testing. Achieving data-driven testing requires designing and implementing tests with automation in mind, and automation goals should be set by considering the potential benefits of test automation for the entire development organization or project. An organization that reaches this state gains more of the benefits of automation and, as the level of automation increases, can use its results to improve quality visibility and productivity.

The final level 4 indicates a state in which the organization maintains the level 3 status quo while continuously improving the test automation process by addressing various issues that arise. At this level, test automation is considered a standard component of software development, and the purpose and objectives of test design, implementation, and operation are not dependent on specific individuals. Instead, the implementation of test automation is based on a common understanding within the organization. Automated test results are utilized for the purpose of quality control and are represented visually in real time. Furthermore, various issues that arise in the lifecycle of automated test design, implementation, execution, and operation are addressed within the organization, and activities are carried out to further enhance the effectiveness of automation.

However, applying this maturity model alone is insufficient to reach Level 4. We therefore developed an assessment checklist, drawing on previous examples as well as CMMI and TMMi, because issues across the entire test automation lifecycle must be identified and countermeasures considered. The objective of evaluation with the checklist is to establish a long-term roadmap for visualizing quality, which will enable the organization to reach the next maturity level and contribute to reorganizing its development processes and improving development productivity.

5. Methodology of Assessment Checklist

5.1. Consideration of Assessment Methods

The checklists used in the assessment are designed to align with quality improvement based on quality management principles. In addition to CMMI and TMMi, which were referenced during maturity model development, we referenced ISO 9004 [40] extensively. ISO 9004 is an international standard that complements ISO 9001, which specifies the requirements for quality management, and it provides a self-assessment tool. TAMM requirement categories 7 (Process) and 8 (Improvement) refer to ISO 9004, which allows them to be linked to clause 9 of ISO 9001, Performance evaluation. Additionally, all automation-related issues are linked to improvement activities through management reviews.

First, the requirements for test automation are organized into eight categories. The rationale is that test automation is effective for quantitatively assessing software quality. In quality management that follows ISO 9001 and fosters continuous improvement, it is essential to evaluate the maturity of the test automation lifecycle process and to promote improvement processes. By referring to established guidelines and rules, it is possible to determine the level of compliance within projects and organizations, measure the maturity of test automation, and understand the capability to meet requirements. Little research has examined the maturity of test automation in the context of a QMS, particularly with regard to promoting improvement. Given that many organizations add features and enhance software quality through derivative development, a correlation between advances in test automation and the QMS can reasonably be expected.

Each requirement comprises four to five items that go beyond the mere presence or absence of guidelines or policies; they also cover, for example, the modularization and reusability of test scripts and flows and the use of parallel execution during testing. The requirements listed in Table 5 were formulated with consideration for the utilization, implementation, and operation of design documents and scripts.

5.2. Development of Assessment Checklists

For each requirement, we prepared assessment questions that can be scored. Referring to Annex A of ISO 9004 and to TMMi, we prepared 11 questions that can be used to determine the compliance status of each requirement; answering these questions makes it possible to assess each requirement (Table 6).

Considering the four levels of the maturity model and the process and improvement categories, which are based on ISO 9001, the checklist scores range from 1 to 5, as in ISO 9004. Note, however, that a single score is assigned to each requirement, and the maximum score of 5 indicates that the requirement is completely fulfilled.

The checklist is completed by the organization being assessed; an assessor with extensive experience in automation then conducts interviews and corrects the responses to ensure that there are no problems with the descriptions.

Finally, a cumulative score is determined for each category, and the scores of the eight categories are presented in a radar chart to show how the scores vary across requirements. The score for each item was discussed and decided by multiple assessors to avoid inconsistencies.
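The aggregation step can be sketched as follows, under explicit assumptions: each category score is taken as the mean of its agreed item scores on the 1 to 5 scale (the text says “cumulative”; a sum would serve equally well if the radar-chart axes are scaled accordingly), items on which assessors still disagree are returned for discussion rather than averaged, and the item identifiers such as `7-a` are hypothetical. The resulting dictionary could then be passed to any plotting library to draw the radar chart.

```python
from statistics import mean

# category -> item -> one score (1-5) per assessor
Scores = dict[str, dict[str, list[int]]]


def aggregate(scores: Scores) -> tuple[dict[str, float], list[tuple[str, str]]]:
    """Return per-category scores and the items that still need a consensus discussion.

    A category is scored only when every one of its items has an agreed score;
    the category score is assumed to be the mean of its item scores.
    """
    category_scores: dict[str, float] = {}
    to_discuss: list[tuple[str, str]] = []
    for category, items in scores.items():
        agreed: list[int] = []
        for item, per_assessor in items.items():
            if not per_assessor or any(not 1 <= s <= 5 for s in per_assessor):
                raise ValueError(f"{category}/{item}: scores must be in 1..5")
            if len(set(per_assessor)) == 1:  # all assessors gave the same score
                agreed.append(per_assessor[0])
            else:
                to_discuss.append((category, item))
        if items and len(agreed) == len(items):
            category_scores[category] = float(mean(agreed))
    return category_scores, to_discuss


# Hypothetical scores from three assessors for two of the eight categories.
EXAMPLE: Scores = {
    "7. Process": {"7-a": [2, 2, 2], "7-b": [3, 3, 3], "7-c": [2, 2, 2], "7-d": [1, 1, 1]},
    "8. Improvement": {"8-a": [2, 2, 2], "8-b": [2, 3, 2]},
}

if __name__ == "__main__":
    print(aggregate(EXAMPLE))
```

Withholding a category score until every item is agreed keeps the chart from mixing settled and disputed results.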

6. Result

6.1. Assessment Implementation Policy

The assessment of the test automation project was conducted in accordance with a predetermined policy. Under this policy, members of the organization being assessed first completed the checklist, interviews with members of the target organization followed, and various pieces of actual evidence were also confirmed.

In particular, the sufficiency of each checklist item was determined firmly by combining several methods, including confirming test scripts and flows through sampling and checking test result reports.

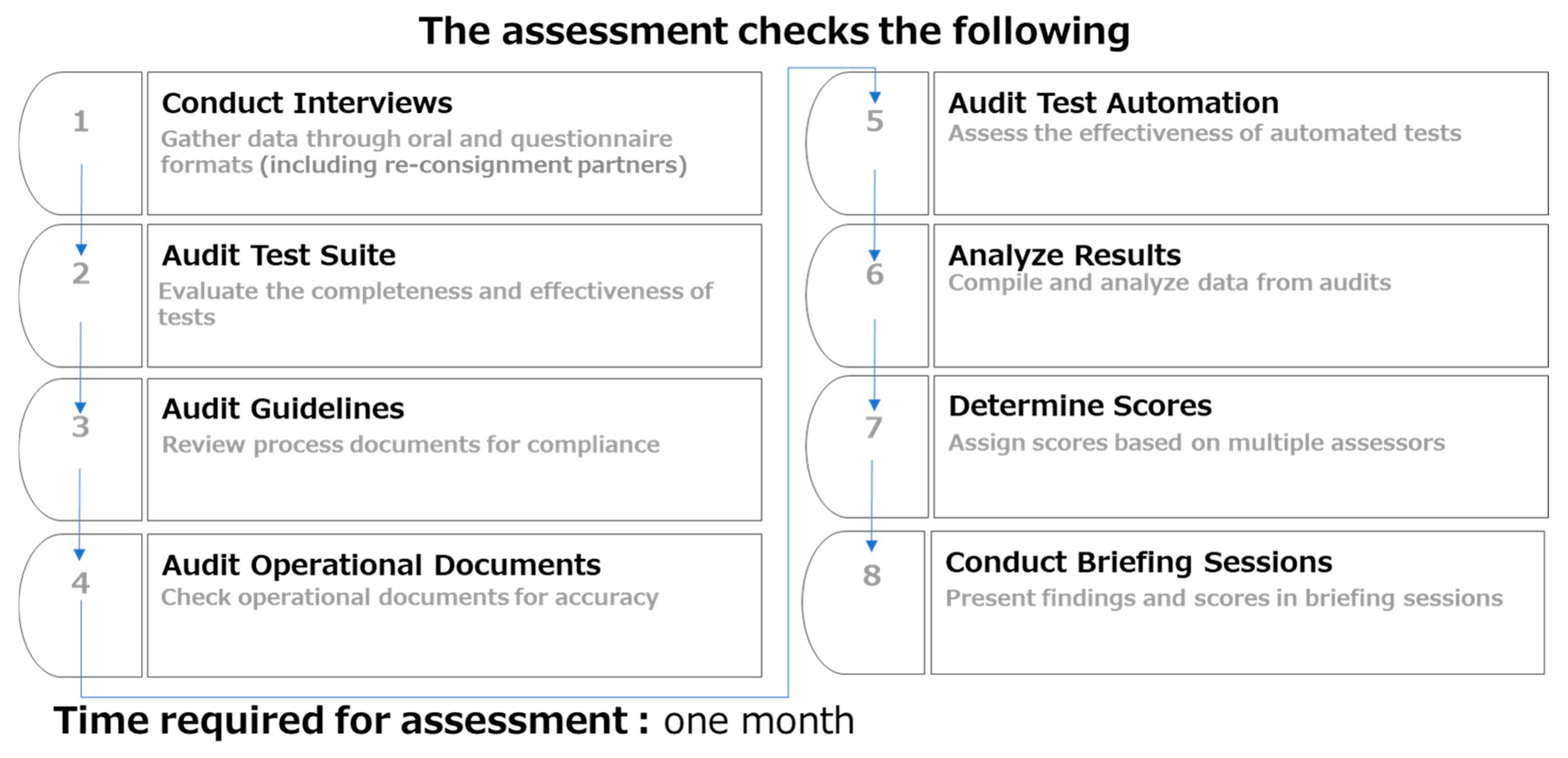

The final assessment implementation policy is illustrated in Figure 8.

6.2. Result of Assessment

We initially considered simply assigning scores to each question on the checklist. However, we decided that scores must first be assigned to all requirements and that the assessor must then review the checklist again, because the results of related questions need to be reviewed in light of whether policies exist and what state the processes are in. To ensure the consistency and reliability of the assessment, the item scores were determined by a panel of multiple assessors, which avoids inconsistencies and variations in interpretation.

For example, even if a project or organization has a policy, it may be that only part of the policy is being used or that some people are not following it, so it is necessary to review the overall score.

It is difficult to decide where assessment results should be placed on the maturity model, but we believe the level is best judged on the basis of whether policies and audit rules exist and how well they are implemented.
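As an illustration only, the level descriptions in Section 4.2 can be read as a sequence of gates in which each level requires the evidence of the levels below it. The sketch assumes hypothetical boolean findings from the checklist, interviews, and evidence review; it yields only an integer level and does not replace the assessors’ judgment or reproduce fractional maturity values.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """Hypothetical assessment findings; field names are illustrative only."""
    guidelines_established: bool   # rules and guidelines exist (Level 2)
    guidelines_followed: bool      # compliance confirmed by sampling and interviews
    ci_and_bvt_in_place: bool      # reproducible environment, BVT, and CI (toward Level 3)
    data_driven_testing: bool      # tests run repeatedly on external data (Level 3)
    continuous_improvement: bool   # issues handled by an organizational process (Level 4)
    results_visualized: bool       # results used for quality control in real time (Level 4)


def tamm_level(e: Evidence) -> int:
    """Return the highest level whose gate, and every lower gate, is satisfied."""
    if not (e.guidelines_established and e.guidelines_followed):
        return 1  # automation depends on individuals
    if not (e.ci_and_bvt_in_place and e.data_driven_testing):
        return 2
    if not (e.continuous_improvement and e.results_visualized):
        return 3
    return 4
```

Requiring that a policy is both present and followed before granting Level 2 mirrors the point that a policy may exist yet be only partly applied.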

Rather than simply presenting the results of the analysis, we believe that adding short- and long-term improvement points makes it easier to target the next level.

Short-term improvement activities focus on identifying issues in the current process and improving them to increase maturity. Long-term improvement items, on the other hand, include issues that are likely to arise after implementing short-term improvements, examples of issues that will become apparent through repeated improvements, and other items necessary to increase test automation maturity over the long term. Finally, we decided to present a roadmap for reaching Level 4, which is an expandable level, through repeated improvements.

The evaluation results report includes not only a summary of the evaluation results but also the analysis results for each question item of the requirements. It clearly states short-term improvement actions for each requirement and identifies the issues for each item.

- (1)

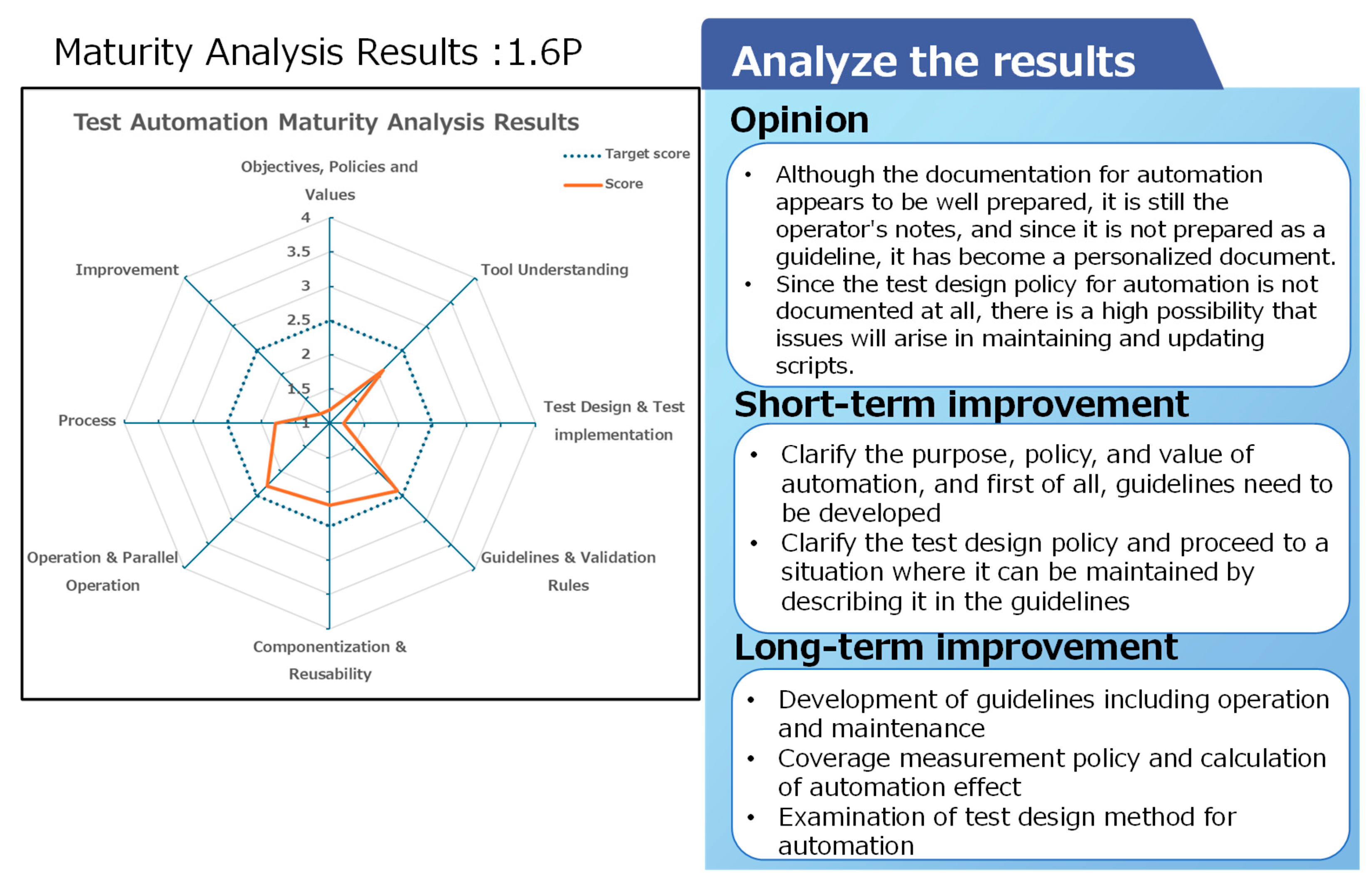

Example of a team using the capture replay tool

The team performing E2E testing with a capture replay tool, OpenText Functional Testing [41], has a low overall score (Figure 9).

Although the team has documented automation rules in a wiki, these are essentially notes by the script writers, and third parties cannot maintain the automation based on these records. There is no documentation of the purpose, goals, or value of automation, which makes it difficult to share the rationale for automation within the team.

Test design for automation has not been performed; existing manual tests are simply being automated, and priorities for automation appear to be set on an ad hoc basis. As a result, test results do not show what level of quality the automated tests ensure, so the effectiveness of testing remains unclear.

Despite progress in automating E2E testing, the effectiveness of automation remains unclear because the work is highly specialized. We believe that clarifying the guidelines for the tests to be automated would allow the effectiveness of automated testing to be shared across the team.

- (2)

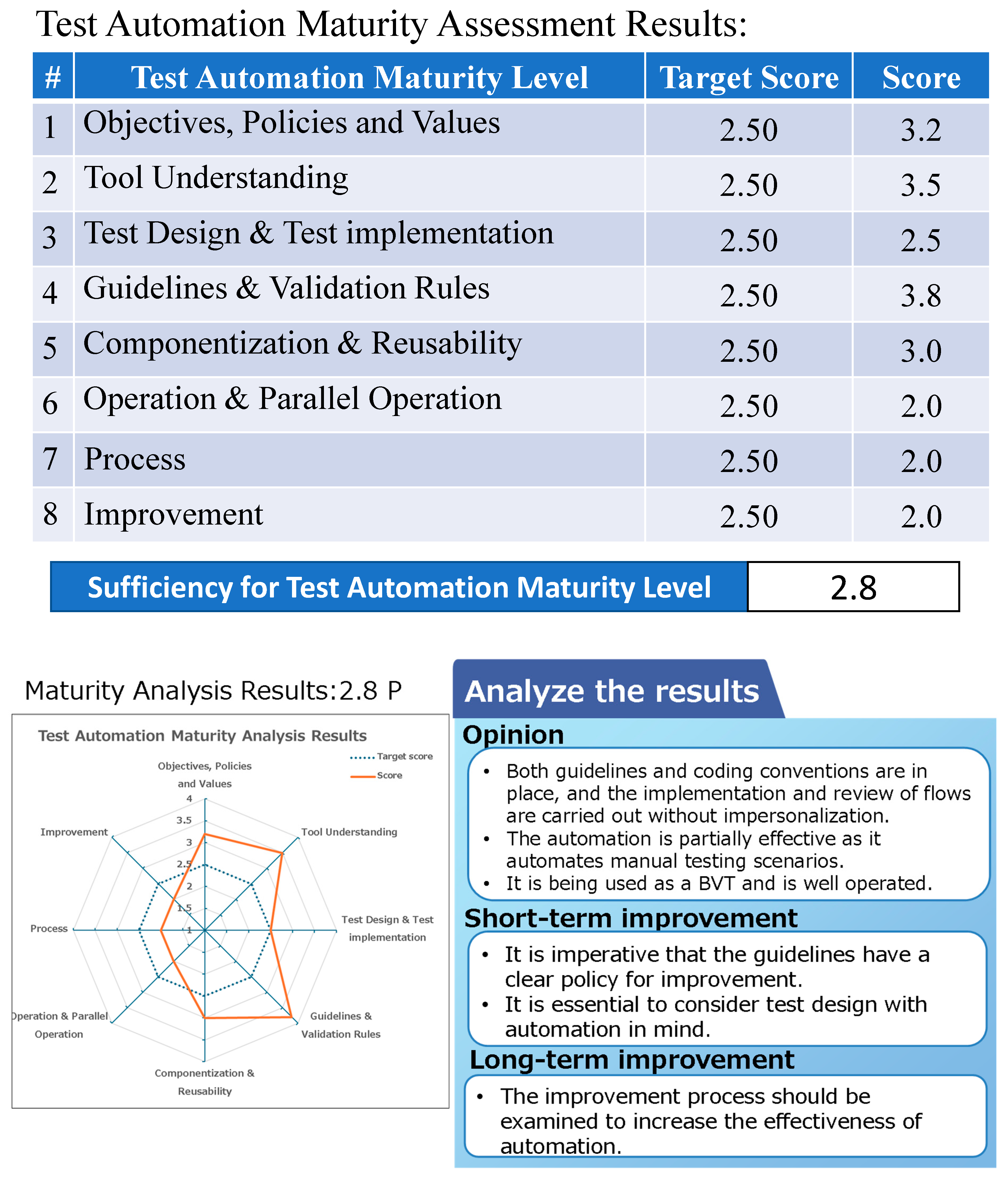

Examples of test automation with RPA tools

Figure 10 shows the evaluation results for E2E testing with Power Automate, which is also covered by the guidelines. The guidelines are well established, and test design, test flow implementation, review, and operation are performed without reliance on specific individuals, resulting in a high overall score. However, the automated tests mainly reproduce the E2E scenarios used in manual testing from a business perspective, so only part of the benefits of test automation is realized. Although the tests are used for BVT, their effectiveness as degradation checks is low because they enter operation very late in the project timeline. In addition, the guidelines and review processes rely heavily on external consulting teams, making it difficult to establish an internal improvement process.

- (3)

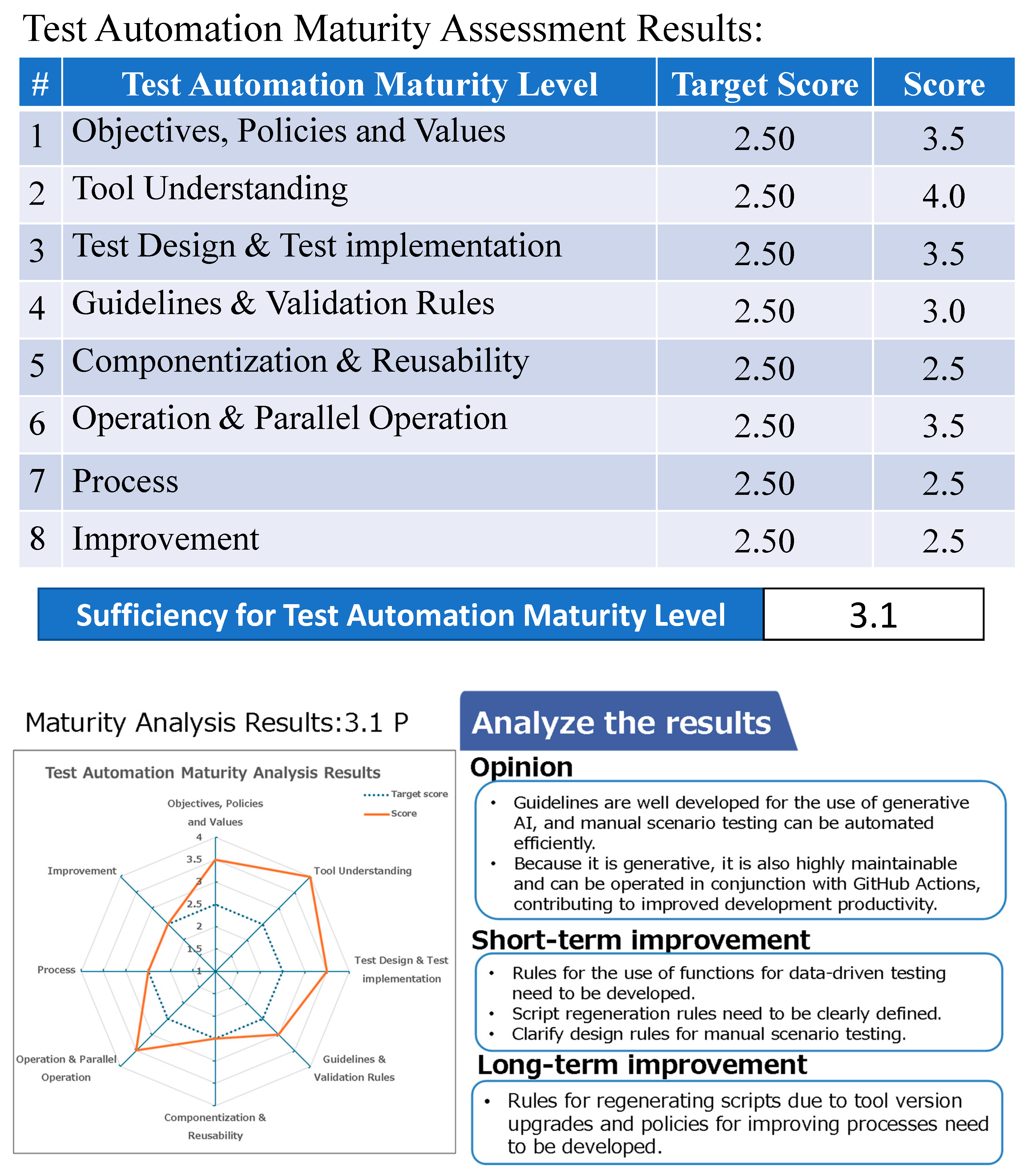

Example of E2E test automation using generative AI

Finally, we present the evaluation results for a team that uses a proprietary generative AI tool to generate pytest [42] scripts with Playwright from manual E2E test scenarios and to automate the process (Figure 11).

Because the tool can create automated tests immediately after test design is complete, automation coverage for E2E testing is high. It supports automated scenario testing not only for functional combinations but also for business verification, contributing significantly to development productivity. However, because the tool is used to automate manual test scenarios, its data-driven testing capability is currently underutilized, which is a key challenge.

Processes, guidelines, and improvement plans for test design rules need to be established to handle script regeneration after tool version updates and to drive automation further.

6.3. Statistical Tests

Hypothesis 1: Fluctuations in the number of review comments should be analyzed quantitatively; however, data collected with the same testing tool are not available. For the RPA tool, review comments frequently concern the automated test designs, because these designs reuse existing manual scenario tests without modification and those scenario tests lack design rules and criteria.

Therefore, quality improvement through reviews is necessary.

Currently, multiple evaluators analyze the results qualitatively, and the effectiveness of automated testing in identifying software defects is also being evaluated qualitatively.

Under Hypothesis 2, when the scores given by the evaluators differ, the quality of the target software is classified by quality characteristic; the evaluators then discuss the qualitative analysis results, and the final decision is made by consensus among the three evaluators.

Hypothesis 2 assumes that Cohen’s kappa (κ) among the three evaluators’ scores for the 39 requirements should be 0.75 or higher; the existing approach meets this criterion.
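Cohen’s kappa compares two raters as κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each rater’s score distribution. For three evaluators, a common option, assumed here rather than stated in the text, is to average kappa over all evaluator pairs (Light’s kappa). The sketch treats the 1 to 5 scores as unweighted categories; a weighted kappa would also account for how far apart two ordinal scores are.

```python
from collections import Counter
from itertools import combinations


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("raters must score the same, non-empty set of items")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n          # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[k] * cb[k] for k in ca) / (n * n)      # chance agreement
    if p_e == 1.0:
        # Both raters used one identical score for every item: perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def mean_pairwise_kappa(raters: list[list[int]]) -> float:
    """Average Cohen's kappa over all rater pairs (Light's kappa)."""
    if len(raters) < 2:
        raise ValueError("need at least two raters")
    pairs = list(combinations(raters, 2))
    return sum(cohens_kappa(a, b) for a, b in pairs) / len(pairs)
```

With three lists of 39 scores each, `mean_pairwise_kappa(scores) >= 0.75` would express the criterion of Hypothesis 2 under these assumptions.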

Going forward, we plan to introduce a mechanism to calculate Spearman’s rho (ρ) in Hypothesis 1 and Cohen’s kappa (κ) in Hypothesis 2.
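Spearman’s rho is the Pearson correlation of the ranks of two samples, with tied values given the average of their rank positions. Because the text does not specify which two variables Hypothesis 1 would correlate (hypothetically, review-comment counts against some measure of test design maturity), the sketch below takes two generic, equally long numeric lists; it is a standard-library illustration, not the authors’ planned mechanism.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rho computed as the Pearson correlation of tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 points")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("rho is undefined when one sample is constant")
    return cov / math.sqrt(sxx * syy)
```

Because rho depends only on ranks, it captures any monotonic relationship, which suits count data such as review comments that need not grow linearly.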

7. Discussion

Based on the findings of the TAMM assessment, we recommend that the teams implementing automation with Power Automate promptly revise their guidelines as follows.

The revised guidelines organize chapters, policies, and stakeholders into the following categories: automation objectives and improvements, preparation, automation design, implementation, design/implementation review, operation, and maintenance.

Implementing automation in accordance with the revised guidelines is expected to raise the target values of TAMM’s “8. Improvement” and “7. Process” requirements from 2.0 to 2.5. Clarifying the processes in the guidelines enables a more thorough evaluation of each task’s compliance and improves automation quality by clarifying the criteria for each process. A better test automation process, in turn, improves the test automation activities managed under the QMS. By integrating test automation issues with the QMS, TAMM increases maturity and thereby improves overall development quality.

However, revising the guidelines takes two to three months. Implementing and operating test automation in accordance with the revised guidelines and conducting another TAMM evaluation after the product is released takes at least six months to a year.

8. Conclusions

This study has two limitations: sample size (n = 3) and follow-up period (six months). Nevertheless, the applicability of the proposed method has been demonstrated with three types of test tools. It has also been shown that implementing short-term measures based on assessment results can raise the maturity level immediately from 2.75 to 2.85, an increase of 0.1 points.

To increase the effectiveness of test automation, organizations must comprehensively review their policies and development processes. However, the absence of a mechanism to analyze the current state poses a significant challenge, hindering the identification of areas for improvement and the determination of necessary actions. Furthermore, even if such improvements are successful, it is difficult to apply unified policies and enhanced development processes to different organizations within the same company because of differences in maturity levels.

With the spread of agile development methods, scenario testing based on use cases has expanded, and its evaluation results are used as criteria for various decisions. Consequently, the number of organizations adopting end-to-end (E2E) test automation is expected to increase. Measuring the maturity of E2E test automation therefore makes it easier to identify areas for improvement from the current situation and to consider countermeasures, contributing to the improvement of the development process. To address this issue, we developed TAMM, which is compatible with QMS, defined its maturity levels and requirements, and devised an assessment checklist and an evaluation method that includes sample surveys and interviews.

The key features of this evaluation method are as follows:

First, empirical evaluations are conducted to obtain quantitative measurements for organizations that use multiple evaluation tools.

Second, three evaluators determine the scores and reach agreement on them, with the target of κ ≥ 0.75.

When combined with a QMS based on ISO 9001, TAMM can contribute to the maturity of development organizations and improve productivity by addressing automation issues, particularly in processes and improvement measures.

Test automation significantly improves software development productivity and enables quality visualization through automated test results.

From a quality management perspective, conducting test automation maturity assessments to evaluate development organization maturity and identify future challenges is a major benefit. Additionally, the consulting services provided by the author’s organization include identifying support measures for customers based on assessment results and developing countermeasures jointly. Deploying this method to various development organizations and combining it with data accumulation for improvement is expected to achieve quality visualization and productivity improvements through test automation.

To improve organizational maturity, it is essential to incorporate human resource development elements, such as accumulating test automation knowledge and establishing training programs, into the evaluation framework in addition to technical capabilities. Therefore, formulating test automation guidelines that consider the four Ps of ITIL [43] (people, process, products, and partners) is a prerequisite for considering long-term improvement measures. Furthermore, strengthening the evaluation of test automation by utilizing generative AI and promoting the evolution of evaluation methods is essential.

In future work on TAMM, we will collect additional evaluation data and prepare a score determination process based on quantitative analysis. We will also develop a mechanism for setting target scores according to the software domain and required quality, and we will consider analyzing evaluation results in relation to development productivity.

Our goal is to promote the adoption of the proposed methodology and disseminate TAMM guidelines and evaluation checklists to support self-assessment and self-improvement processes within a broader range of organizations, alongside their quality management systems (QMS).