Empirical Formal Methods: Guidelines for Performing Empirical Studies on Formal Methods

Abstract

1. Introduction

- A practical foundation for empirical formal methods, aiming to encourage further empirical research in this field;

- A comprehensive overview of research strategies that can be applied to contribute to theory building in FM, together with their fundamental characteristics, and specific threats to validity;

- For each strategy, a reflection of the main difficulties and potential weaknesses for its application in FM;

- For each strategy, pointers to papers within FM and software engineering, as well as an up-to-date list of references providing detailed guidelines;

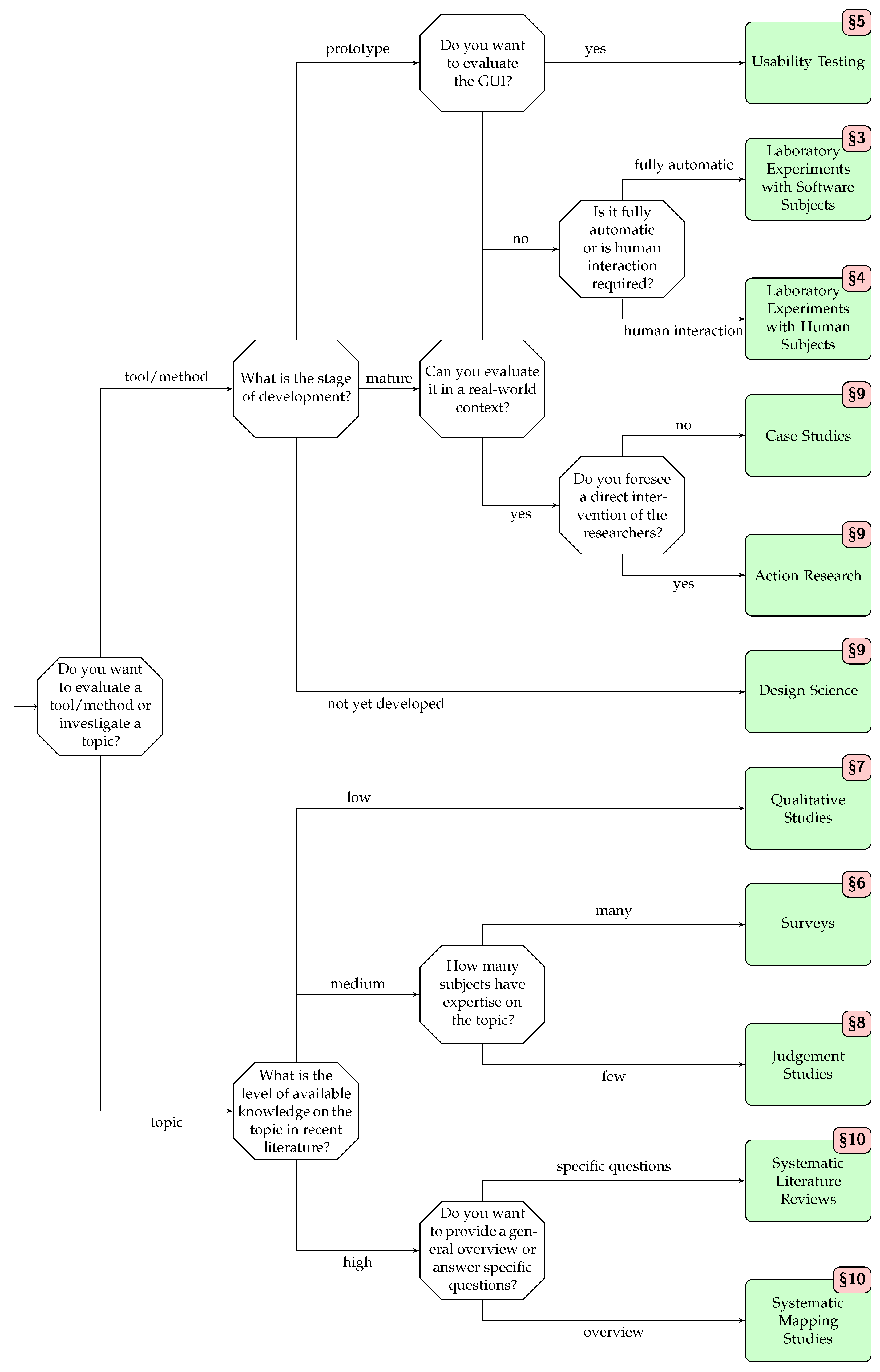

- An easy-to-use informal guide for selecting the most appropriate empirical strategy (cf. Figure 1).

2. Fundamental Ingredients

- Research Questions (RQs): these are statements in the interrogative form that drive the research. They are useful as a guideline for the researchers, who have a set of clear objectives to address, but also for the reader. The RQs also typically include the constructs of interest, which are the abstract concepts (e.g., efficiency, usability) to be investigated through the research.

- Data Collection Procedure: since empirical studies stem from data, these need to be collected, and a systematic and repeatable procedure needs to be established. The data collection procedure specifies what the data sources are and how the data are collected. Data are related to the constructs of interest, as one aims to use the data to measure or evaluate such constructs.

- Data Analysis Procedure: this specifies how the data are elaborated and interpreted to answer the RQs, thus establishing a chain of evidence that goes from data to constructs of interest. In other terms, the data analysis procedure establishes a link between empirical data and RQs. Both data collection and analysis procedures require considering possible validity threats, and countermeasures to prevent these threats need to be established and made explicit.

- Execution and Results: these specify how data collection and analysis have been carried out, and what the specific output of these procedures is. This part also systematically answers the RQs, based on the available evidence. While the data collection and analysis procedures in principle abstract away from concrete data, here the focus is specifically on the data and their interpretation.

- Threats to Validity: these specify the possible uncontrolled threats that could have occurred in data collection and analysis, and that could have influenced the observed results. In this part, the researchers should restate the mitigation strategies adopted to address typical threats to validity, and acknowledge residual threats. Different threats can typically occur depending on the type of study. Nevertheless, there are three main categories of threats, which in principle apply only to experiments, but which introduce a reasoning framework that can be useful for other types of studies:


- Construct Validity: indicates to what extent the abstract constructs are correctly operationalised into variables that can be quantitatively measured, or qualitatively evaluated. To ensure construct validity, the researcher should show that the constructs of interest are well-defined and well-understood based on existing literature. Furthermore, the researcher should argue about the soundness of the proposed quantitative measures or evaluation strategies. For example, if a researcher wishes to measure the effectiveness of a certain tool T, they should present related literature defining the concept of effectiveness, and defining a sound measure for this construct.


- Internal Validity: indicates to what extent the researcher has ensured control of confounding external factors that could have impacted the results. These factors include researcher bias, i.e., expectations/inclinations of the researcher that may have impacted the study design (e.g., in a questionnaire definition, or in the data analysis), and any aspect related to subjectivity or context-dependency in the production of the results. Internal validity can also be threatened by time-related aspects, e.g., with a maturation effect that could occur when the participants perform multiple tasks one after the other, or with fatigue effects due to long experimental treatments. For example, consider the case of comparing two tools A and B on a certain task K. If the subjects first use tool A and then tool B on task K, a learning effect could occur. Indeed, with tool A, they could have learned about task K, and this would have helped them perform the same task when using tool B. To address this issue, the researcher could allocate some subjects only to tool A and others only to tool B.


- External Validity: indicates to what extent the results can be applicable to contexts other than the one of the study, or, in other terms, to what extent the results can be considered general, i.e., what is the scope of validity of the study.

3. Laboratory Experiments with Software Subjects

- Definition of the Strategy: a laboratory experiment is a research strategy carried out in a contrived setting in which the researcher wishes to minimise the influence of confounding factors on the study results. In an experiment with software subjects, the researcher typically compares different tools, algorithms or techniques, to collect evidence, e.g., of their efficiency or effectiveness on a certain representative set of problems.

- Crucial characteristics: in a laboratory experiment with software subjects, one typically defines measurable constructs to be assessed and used for comparison between different software subjects. More specifically, the researcher identifies the constructs of interest, and how these constructs are mapped into variables that can be quantitatively measured, or, if this is not feasible, qualitatively estimated. The constructs of interest are typically strictly connected with the RQs, and the data are the source information that can be used to answer the RQs. Therefore, the researcher also needs to specify how one aims to collect the data associated with the variables. For example, in a quantitative study one may want to focus on the construct of effectiveness of a certain tool, with the RQ: What is the effectiveness of tool T? If the tool T is designed to find bugs in a certain artefact, this construct can be measured with the variable bug identification rate = number of identified bugs / total bugs. The data collection strategy could consist of measuring the number of bugs found by tool T on a specific dataset given as input (number of identified bugs), which contains a pre-defined set of bugs (total bugs). The dataset, also called benchmark, should be representative of the set of programs that the tool T aims to verify. If the tool T is designed for a specific type of artefact, then the artefacts should vary across different variables that characterise the artefact: e.g., language, size of the artefact, complexity. It is important to always report the characteristics of the dataset across these salient dimensions.
Furthermore, to assess whether the effectiveness of a certain software subject is ‘good enough’, one also needs to define one or more baselines, i.e., other tools previously developed, or an artificial predictor (e.g., random, majority class), that allow the researchers to state that the software subject outperforms the existing baselines on the given dataset.

- Weaknesses/Difficulties in FM: several difficulties may occur when applying this type of strategy in FM. Typically, a software subject is a tool such as, e.g., a model checker or a theorem prover. If the tool requires some interaction with the user, then this can affect the results, as the variable that is measured, e.g., bug identification rate, also depends on the human operator. To address this issue, the experiments should also include design elements that are typical of laboratory experiments with human subjects (cf. Section 4). Another pitfall can occur when the tool uses some random or probabilistic principle, and thus the results of the experiment can vary from one execution to the other. In these cases, the tool should be executed multiple times, and confidence intervals, e.g., on its effectiveness or other performance-related constructs, should be estimated and reported with appropriate p-values [14]. Furthermore, if one aims to report differences between different tools across multiple runs, appropriate statistical tests, e.g., t-test or Mann–Whitney U test, should also be performed, again reporting p-values and evaluating effect size. Another typical difficulty is identifying a baseline. Indeed, formal tools often target specific fine-grained objectives, e.g., runtime verification vs. property proving, and, in the case of model checkers, can use different modelling languages and different logics for expressing properties. Therefore, a direct comparison between tools is often hardly feasible. In these cases, one can (i) define simple artificial baselines, against which the tools can be compared; (ii) restrict the comparison to the subset of the dataset for which a comparison is possible; and (iii) complement the quantitative evaluation with a qualitative evaluation, involving human subjects in the assessment of the effectiveness of the tool, e.g., with a usability study/judgement study (cf. Section 5 and Section 8), a questionnaire provided to users after using the tool, or qualitative effect analysis [15].

- Typical threats to validity: typical threats are related to the representativeness of the dataset (external validity), the soundness of the research design (internal validity), and the definition of variables and associated measures (construct validity). An inherent threat of this type of study, as for laboratory experiments in general, is the limited realism, as the lab setting is typically contrived and does not account for real-world aspects, e.g., learning curve required to learn a tool, incremental and iterative interaction with tools, or iterative nature of artefact development, which is normally not captured by a fixed dataset.

- Maturity in FM: laboratory experiments with software subjects, and in particular tool comparisons, are relatively mature in FM. Many different competitions exist in which tools are evaluated in terms of performance (evaluation of their usability is rare). Experiments are typically conducted on a representative set of benchmark problems and executed by benchmarking environments like BenchExec [16], BenchKit [17], DataMill [18], or StarExec [19]. The oldest competitions concern Boolean satisfiability (SAT) solvers [20], initiated three decades ago, and Automated Theorem Provers (ATP) [21]. In 2019, 16 competitions in FM joined TOOLympics [22] to celebrate the 25th anniversary of the International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS). For several years now, TACAS and other FM conferences have also featured artefact evaluations to improve and reward reproducibility, following their success in software engineering conferences, where they were introduced over a decade ago [23,24]. A recent survey on FM ([25], Section 5.9) showed that their adoption in industry would benefit a lot from the construction of benchmarks and datasets for FM.

- Pointers to external guidelines: guidelines and recommendations for this kind of study, also called benchmarking study, are provided by Beyer et al. [16] and Vitek et al. [26]. Guidelines for combining these studies with other assessment methods are part of the DESMET (Determining an Evaluation Methodology for Software Methods and Tools) methodology by Kitchenham et al. [15].

- Pointers to studies outside FM: an example in the field of automatic program repair is the study by Ye et al. [27], which applies different automatic program repair techniques to the QuixBugs dataset and also extensively reports the characteristics of the dataset. In automated GUI testing, Su et al. [28] compare different tools for the constructs of effectiveness, stability and efficiency. This is also a good example of applying statistical tests to the comparison of different tools. Herbold et al. [29] also use statistical tests to compare cross-project defect prediction strategies from the literature on a common dataset. Another representative example, in the field of requirements engineering, is the work by Falessi et al. [30]. This can be taken as a reference in case a set of building blocks needs to be combined to produce different variants to be compared. The work is particularly interesting because it also illustrates the empirical principles that underpin the comparison. It should be noted that the paper does not use a public and representative dataset as benchmark, but a dataset that is specifically from a company, thus including the laboratory experiment in the context of a case study (cf. Section 9). A similar problem of composition of building blocks is considered also by Maalej et al. [31], in the field of app review analysis, and by Abualhaija et al. [32], in the field of natural language processing applied to requirements engineering. This latter study is particularly interesting as it complements the performance evaluation with a survey with experts.
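The measurement described in this section (bug identification rate on a benchmark, compared against a baseline over repeated runs) can be sketched in Python as follows. This is only a minimal illustration under stated assumptions: the bug identifiers, the run data and the function names (`bug_identification_rate`, `mann_whitney_u`, `a12`) are hypothetical and do not come from any of the cited studies. To stay dependency-free, the sketch computes the Mann–Whitney U statistic by hand and reports the Vargha-Delaney A12 effect size derived from it; the associated p-value would in practice be obtained from a statistics library.

```python
from statistics import mean

def bug_identification_rate(found, seeded):
    """Fraction of the benchmark's known bugs that a tool reported."""
    seeded = set(seeded)
    if not seeded:
        raise ValueError("benchmark must contain at least one known bug")
    return len(set(found) & seeded) / len(seeded)

def mann_whitney_u(xs, ys):
    """U statistic for xs versus ys; each tie counts as one half."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in xs for y in ys)

def a12(xs, ys):
    """Vargha-Delaney A12: chance that a value drawn from xs exceeds one from ys."""
    return mann_whitney_u(xs, ys) / (len(xs) * len(ys))

# Hypothetical benchmark with four seeded bugs (the "total bugs").
seeded_bugs = {"b1", "b2", "b3", "b4"}

# Hypothetical reports from three runs of a randomised tool T and of a baseline.
tool_runs = [{"b1", "b2", "b3"}, {"b1", "b2", "b3", "b4"}, {"b1", "b2", "b3"}]
baseline_runs = [{"b1"}, {"b1", "b2"}, {"b2"}]

tool_rates = [bug_identification_rate(r, seeded_bugs) for r in tool_runs]
baseline_rates = [bug_identification_rate(r, seeded_bugs) for r in baseline_runs]

print("mean rate, tool T:  ", mean(tool_rates))
print("mean rate, baseline:", mean(baseline_rates))
print("A12 (tool vs baseline):", a12(tool_rates, baseline_rates))
```

An A12 of 0.5 would indicate no difference between the two sets of runs, while values close to 1 indicate that the tool almost always achieves a higher rate than the baseline on this dataset.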

4. Laboratory Experiments with Human Subjects

- Definition of the Strategy: similarly to laboratory experiments with software subjects, an experiment with human subjects is a research strategy carried out in a contrived setting, in which the researcher wishes to minimise the influence of confounding factors on the study results. In these experiments, the typical goal is to evaluate constructs (e.g., understandability, effectiveness) concerning notations, tools, or methodologies, when their evaluation depends on human interaction.

- Crucial characteristics: in a laboratory experiment with human subjects, one typically defines measurable constructs to be assessed and used for comparison between different study objects, i.e., notations, tools, or methodologies. More technically, a laboratory experiment with human subjects typically evaluates the effect of certain independent variables (e.g., the tool under study, or the experience of the subjects) on other dependent variables (e.g., understandability, effectiveness). The researcher thus identifies the constructs of interest, and how these constructs are mapped into variables that can be measured quantitatively or, if this is not feasible, estimated qualitatively. The constructs of interest are typically strictly connected with the RQs, and the data are the source information that can be used to answer the RQs. As for laboratory experiments with software subjects, the researcher also needs to specify how one aims to collect the data associated with the variables. To this end, the researcher typically recruits a set of human subjects, either professionals or, more often, students, and asks them to perform a given task using the objects of the study, i.e., notations, tools, or methodologies. Subjects are typically divided into groups, or treatments: the experimental group and the control group. The former uses the object of the study to perform the task. The latter performs the task without using the object of the study. In the task, data are collected concerning the dependent variables, and in relation to the RQs. In these experiments, it is typical to refine the RQs into hypotheses to be statistically tested, based on evidence collected from the data of the experiment itself. An experiment with statistical hypothesis testing can be seen as two sequential black boxes, an experiment box, and a statistical assessment box.
The first box represents the experiment itself, which produces data, while the second one represents the actual procedure of hypothesis testing, which uses the data produced by the experiment box to state to what extent one can be confident that, given the data, the effect observed in the data is not due to chance. In the experiment box: (i) the inputs are the so-called independent variables, i.e., the variables that the researcher wants to manipulate, for example the type of tool to be used in the treatment; (ii) the outputs are dependent variables, i.e., the variables that represent the constructs that one wishes to observe, e.g., effectiveness, understandability; and (iii) there are additional input parameters, e.g., maximum time to execute the task, exercise used in the task. These are the controlled variables that are not considered independent variables, but that could influence the effect of independent variables on dependent variables, if not properly controlled, and if their effect is not properly cancelled. In the hypothesis testing box, the inputs are all the data associated with the dependent and independent variables, and the main outputs are: (i) the effect size, which represents the degree of the observed impact of independent variables on dependent variables; and (ii) the statistical significance of the results obtained, which is given by the p-value. The statistical significance roughly indicates how likely it is that the results obtained are due to chance, and not to the treatment. Therefore, lower p-values are preferable, and one normally identifies a significance level, called $\alpha$, often set to $0.05$. When the p-value is below $\alpha$, results are considered significant. In the hypothesis testing box, the output is produced from the input using a certain statistical test (e.g., t-test, ANOVA) that depends on the type of experimental design, and the nature of the variables under study (e.g., rate variables, categorical).

- Weaknesses/Difficulties in FM: applying this type of strategy in FM is made hard by the inherent complexity of most FM. A laboratory experiment typically wants to assess the effectiveness of a tool, but the subjects who will use the tool sometimes also need to be trained on the theory that underlies the tool, e.g., formal language, notation, and usage itself. This means that laboratory experiments may need to involve ‘experts’ in FM. However, experts in FM are typically proficient in a specific and well-defined set of approaches (e.g., theorem proving vs. model checking), and even tools [33,34]. Therefore, comparable subjects with similar expertise, and in a sufficient number to achieve both statistical power and significance, are hard to recruit, and this makes it difficult to carry out experiments in FM. A possible solution is to focus experiments on fine-grained, simple aspects that can be taught in the time span of a class or a limited tutorial, e.g., a graphical notation or a specific temporal logic. If one wishes to evaluate formal tools, and in particular their user interfaces, it is feasible to perform usability studies (cf. Section 5). These do not normally require a large sample size (in many settings, 10 ± 2 subjects are considered sufficient [35,36], when one adopts specific usability techniques), as they do not aim to assess significance but rather to spot specific usability pitfalls. If, instead, one wishes to evaluate entire methodologies, it is recommended to decompose them into steps, and design experiments that evaluate one step at a time, e.g., distinguishing between comprehension, modelling phase, verification phase.

- Typical threats to validity: typical threats to validity are associated with construct validity, i.e., to what extent the constructs are correctly operationalised into variables; internal validity, i.e., to what extent the research design is sound, and all possible factors that could have affected the outcome have been properly controlled; external validity, i.e., to what extent the results obtained are applicable to other settings, for example a real-world setting; and conclusion validity, which specifies to what extent the statistical tests provide confidence in the conclusion. For conclusion validity, one needs to specify: (i) that the assumptions of the statistical tests are considered and properly assessed, since most of the tests (the so-called parametric ones) assume a normal distribution of the variables; and (ii) the value of the statistical power of the tests, which can be estimated based on the number of subjects involved, and which gives an indication of how likely it is that one has incorrectly missed an effect between the variables when an effect is actually present. Similarly to laboratory experiments with software subjects, an inherent threat is the low degree of realism, as human subjects undertake a task in a constrained environment which only partially simulates how the task would be carried out in the real world. In other terms, the external validity is inherently limited for these types of study, which tend to maximise internal validity.

- Maturity in FM: not surprisingly, given the complexity of many FM, laboratory experiments with human subjects are not extremely mature in FM, but quite some experiments exist. Sobel and Clarkson [37] conducted one of the first quasi-experiments, in an instructional setting, where undergraduate students developed an elevator scheduling system—with and without using FM. “The FM group produced better designs and implementations than the control group”. Debatably [38,39], this contradicts Pfleeger and Hatton [40], who investigated the effects of using FM in a case study, in an industrial setting, where professionals developed an air-traffic-control information system. They “found no compelling quantitative evidence that formal design techniques alone produced code of higher quality than informal design techniques”, yet “conclude that formal design, combined with other techniques, yielded highly reliable code”. We are also aware of some well-conducted controlled experiments for the comprehensibility of FM like Petri nets [41], Z [42,43], OBJ [44,45], and B [46,47], for a set of state-based (semi-)formal languages like Statecharts and the Requirements State Machine Language (RSML) [48], and for domain-specific methods and languages in business process modelling [49,50,51], software product lines [52,53] and security [54,55,56]. Further empirical studies on the usability of such FM would be very welcome. The same holds for human comprehensibility and usability of other well-known FM (e.g., Abstract State Machines (ASM), the Temporal Logic of Actions (TLA), and calculi like the Calculus of Communicating Systems (CCS) and Communicating Sequential Processes (CSP)), even prior to evaluating the effectiveness of tools based on such FM. We note that the formalisms of attack trees and attack-defense trees, popularised by Schneier [57] and formalised by Mauw et al. [58,59], are claimed to have an easily understandable human-readable notation [57,60]. 
However, as reported in [61,62], there have apparently been no empirical studies on their human comprehensibility. Thus, laboratory experiments with human subjects are much needed in this case as well.

- Pointers to external guidelines: Wohlin et al. [7] published a book on experimentation in software engineering and the principles expressed in the book also apply to experiments in FM. A practical guide on conducting experiments with tools involving human participants is provided by Ko et al. [63]. Guidelines for analysing families of experiments or replications are provided by Santos et al. [64]. To gain more insight into experiment design with human subjects, one can also refer to the Research Methods Knowledge Base (https://conjointly.com/kb/, accessed on 20 September 2022) [65], an online manual primarily designed for social science, but appropriate also for experiments in FM. When psychometrics is involved because questionnaires are used to evaluate certain variables, the reader should refer to the guidelines by Graziotin et al. [66], specific to software engineering research. To acquire background on the statistics used in experiments, the handbook by Box et al. [14] is a major reference. To focus on hypothesis testing, with clear and intuitive guidelines for the selection of the types of tests to apply, one of the main references is the book by Motulsky [67]. The book is, in principle, oriented to biologists, but the provided guidelines are presented in an intuitive and general way, which is appropriate also for an FM readership. It should be noted that, though widely adopted, hypothesis testing has several shortcomings that have been criticised by the research community. Bayesian Data Analysis has been advocated as an alternative option, and guidelines in the field of software engineering have been provided by Furia et al. [68].

- Pointers to studies outside FM: in software engineering it is quite common to use this strategy, for example to evaluate visual/model-based languages, as done by Abrahão et al. [69,70], who focus on modelling notations. In the evaluation of methodologies, a representative work is the one by Santos et al. [71] on test-driven development. When one wants to focus on a single specific methodological step, a reference work is the one by Mohanani et al. [72], about different strategies for framing requirements and their impact on creativity. When the focus is human factors, e.g., competence or domain knowledge, a representative work is the one by Aranda et al. [73], on the effect of domain knowledge on elicitation activities. Finally, a comparison between an automated procedure and a manual one for feature location is presented by Perez et al. [74].
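The hypothesis testing box described in this section takes the data of the experimental and control groups as input and outputs an effect size and a p-value. The following Python sketch illustrates this under explicit assumptions: the scores are invented, the names (`cohens_d`, `permutation_p_value`, `ALPHA`) are hypothetical, and an exact permutation test is used as a dependency-free stand-in for the t-test or ANOVA mentioned in the text. Exhaustive enumeration is only practical for small groups such as the ones shown here.

```python
from itertools import combinations
from statistics import mean, stdev

ALPHA = 0.05  # significance level, fixed before running the experiment

def cohens_d(a, b):
    """Effect size: mean difference in units of the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    return (mean(a) - mean(b)) / pooled_var ** 0.5

def permutation_p_value(a, b):
    """Exact two-sided permutation test on the difference of group means."""
    pooled = list(a) + list(b)
    observed = abs(mean(a) - mean(b))
    total_sum = sum(pooled)
    extreme = total = 0
    # Enumerate every way of relabelling len(a) of the pooled scores as group A.
    for idx in combinations(range(len(pooled)), len(a)):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / len(a) - (total_sum - sum_a) / len(b))
        total += 1
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return extreme / total

# Hypothetical comprehension scores (dependent variable) for the two treatments.
experimental = [8, 9, 7, 9, 8]  # subjects using the notation under study
control = [5, 6, 6, 4, 7]       # subjects not using it

d = cohens_d(experimental, control)
p = permutation_p_value(experimental, control)
print(f"effect size d = {d:.2f}, p-value = {p:.4f}, significant: {p < ALPHA}")
```

The permutation test makes no normality assumption, which sidesteps one of the conclusion validity concerns listed above, but it does not replace a power analysis when deciding how many subjects to recruit.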

5. Usability Testing

- Definition of the strategy: usability testing focuses on observing users working with a product, and performing realistic tasks that are meaningful to them. The objective of the test is to measure usability-related variables (e.g., efficiency, effectiveness, satisfaction), and analyse users’ qualitative feedback. It can be seen as a laboratory experiment, but (i) with a more standardised design; (ii) with a limited number of subjects (6 to 12, belonging to 2–3 user profile groups, are considered sufficient by Dumas and Redish [75]); (iii) collecting both quantitative and qualitative data; and (iv) whose goal is to identify usability issues, rather than testing hypotheses and achieving statistical significance, which typically require larger samples. If larger groups of subjects are available, though, quantitative results of usability tests can be evaluated with statistical tests.

- Crucial characteristics: usability studies can be classified into three main types: (i) heuristic inspections (or expert reviews), in which a usability expert critically analyses a product according to pre-defined usability criteria (cf. the list from Nielsen and Molich [76]), without involving users; (ii) cognitive walkthrough, in which a researcher goes through the steps of the main tasks that one expects to perform with a product, and reflects on potential user reactions [77]; and (iii) usability testing, in which users are directly involved. Here we focus on usability testing, which is also the most common and most studied technique. Usability and usability tests are also the topic of the ISO 9241-11:2018 standard [78]. In usability tests, the constructs to evaluate, and the related RQs, are pre-defined by the literature, as the researcher typically wants to assess a product according to a set of usability attributes. The usability attributes considered by the ISO standard are effectiveness (to what extent users’ goals are achieved), efficiency (how many resources are used), and satisfaction (the user’s personal judgement of the experience of using the tool). Another possible framing of the usability attributes is the 5E expected from a product, i.e., efficient, effective, engaging (equivalent to satisfaction), error tolerant, and easy to learn (i.e., time to become proficient with the tool) [79]. Holzinger, instead, considers learnability, efficiency, satisfaction, low error rate (analogous to effectiveness), and also memorability (to what extent a casual user can return to work with the tool without a full re-training) [80]. After a selection of the usability attributes (constructs) that the researcher wants to assess, one needs to define the user profile that will be considered in the test.
Test subjects will be selected accordingly and a screening and/or pre-test (i.e., a sort of demographic questionnaire) will be carried out to assess that the expected profile is actually matched by the subjects. Data collection is performed through the test itself, which is supposed to last about one hour for each subject. A set of task-based scenarios is defined (e.g., installation, loading a model, modifying a model, verification, etc.), which the user needs to perform with the tool. Typically, a moderator is present at the test, who will interact with the users, instruct them, incrementally assign tasks and tests, and be available for support, if needed. An observer should also be appointed, who will take notes on users’ physical and verbal reactions. Video equipment, as well as microphones, logging computers, logging software (e.g., Inputlog (https://www.inputlog.net/overview/, accessed on 20 September 2022), Userlytics (https://www.userlytics.com/, accessed on 20 September 2022), ShareX (https://www.goodfirms.co/software/sharex, accessed on 20 September 2022)), and eye-tracking devices can be used, depending on the available resources. During the usability test sessions, it is highly recommended to ask the participants to think aloud, which means verbalising actions, expectations, decisions, and reactions (e.g., “now I am pressing the button to verify the model, I expect it to start verification, and to have the results immediately”; “the tool is stuck, I do not know if it is doing something or not”; “now I see this result, and I cannot interpret it”). After each task, the user should answer at least the Single Ease Question (SEQ), which asks how easy the task was on a 7-point scale from 1 (Very Difficult) to 7 (Very Easy). Other short questions about the perceived time required, and the intention to use, can also be asked.
After completion of all the tasks, the user typically fills in a post-test questionnaire, which measures perception-related variables. Several standard questionnaires exist, e.g., SUS (System Usability Scale) and CSUQ (Computer System Usability Questionnaire); cf. Sauro and Lewis [81] for a complete list. Data from the test are both quantitative and qualitative. Quantitative data include: time on tasks, success or completion rate for the tasks, error rate with recovery from errors, failure rate (no completion or no recovery), assistance requests, search (support from documentation) and other data that can be logged. Results of the post-task and post-test questionnaires, associated with perception-related aspects, are also quantitative. Typical variables evaluated in usability studies, with associated metrics, are reported by Hornbaek [82]. Qualitative data include think aloud and observation. Data analysis for quantitative data consists of assessing to what extent the different rates are acceptable, after establishing expected rates beforehand. Since, in the end, what matters is the user perception, the results of the post-task and post-test questionnaires are given priority in terms of relevance, together with qualitative data. For the SUS test, scores go from 0 to 100, and scores above 68 are considered above average. For qualitative data, coding and thematic analysis can be used, similar to qualitative studies (cf. Section 7). Overall, as for laboratory experiments with human subjects, it is important to establish a clear link between the constructs to evaluate and the measured variables. Quantitative and qualitative data should also be triangulated to identify further insight. For example, qualitative data may indicate satisfaction in learning the tool, although, e.g., the error rate is high.
When more subjects are available for the test, e.g., 30 or more, and one wants to establish statistical relations between variables such as effectiveness, perceived usability and intention to use, one can refer to the Technology Acceptance Model (TAM) [83]. The relation between usability dimensions and TAM is discussed by Lin [84].
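
As an illustration of how a post-test questionnaire is turned into a number, the following minimal sketch computes a SUS score for one participant. It assumes the standard SUS scoring rule (ten items answered on a 1 to 5 scale; odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response; the sum is multiplied by 2.5). The function name and the example answers are hypothetical.

```python
def sus_score(responses):
    """Compute the System Usability Scale score (0-100) for one participant.

    `responses` holds the ten SUS answers in questionnaire order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for position, r in enumerate(responses, start=1):
        if position % 2 == 1:
            total += r - 1  # odd items are positively worded
        else:
            total += 5 - r  # even items are negatively worded
    return total * 2.5


# Hypothetical answers of one participant (illustrative data only)
example = [4, 2, 4, 1, 5, 2, 4, 2, 4, 3]
print(sus_score(example))
```

The resulting value can then be compared against the threshold of 68 mentioned above, keeping in mind that scores of individual participants are usually averaged before such a comparison is made.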

- Weaknesses/Difficulties in FM: applying usability tests for FM tools is complicated by the typical need to first learn the underlying theory and principles, and only then use an FM tool. It is sometimes difficult to separate difficulty in learning, e.g., a modelling language or a temporal logic, from the usability of a model checker. Therefore, the researcher should first assess the learnability of the theory, e.g., with laboratory experiments with human subjects, and afterwards should evaluate the FM tool. Selected subjects should have learned the theory before using the tool, and usability of FM tools should preferably be evaluated also after some time of usage, so that initial learning barriers have already been overcome by users. If one aims to make a first usability test with users that are not acquainted with FM (which can happen if the target users are industrial practitioners), one can present a tool, perform some tasks and use available post-test questionnaires, like SUS, to get a first measurable feedback, as was done in previous studies [85]. When industrial subjects are involved, the difficulty for the researcher is to find problems that are meaningful to the domain of the users, so that these can perceive the potential relevance of the tool. Another difficulty is the typical focus of FM tool developers on the performance of tools, especially for model checkers, rather than on usability aspects, because tools often come from research, which rewards technical aspects instead of user-relevant ones. To be effective, usability tests should be iterative, with versions of an FM tool that are incrementally improved based on the test output. This requires resources specifically dedicated to usability testing. However, if this is not possible, heuristic inspections or cognitive walkthroughs should at least be performed on an intermediate version of the tool.
Another issue with FM tools is that these are not websites, but complex systems, which can have several functionalities to test (e.g., simulation or different types of verification as in many model checkers). Given the complexity, one should focus on the most critical aspects to be tested. A further issue is the time that is sometimes required to perform verification, which often makes a realistic test on a complex model infeasible. In these cases, it is useful to use the so-called Wizard of Oz method (WOZ) [86], in which the output is prepared and produced beforehand, or part of the interaction is simulated remotely by a human.

- Typical threats to validity: the typical construct validity threats are generally addressed thanks to the usage of well-defined usability attributes and measures. Particular care should be dedicated to the selection of the subjects, so that these are actually representative of the user group considered in the study. Pre-tests or initial screening can mitigate threats. Additional threats to construct validity are related to the way questionnaires are presented. Depending on the formulation of the tests, error of central tendency (the tendency to avoid the selection of extreme values in a scale), consistent response bias (responding with the same answer to similar questions), and serial position effect (tendency to select the first or final items in a list) need to be prevented. Error of central tendency can be addressed by eliminating central answers, or by asking respondents to explicitly rank items. Consistent response bias can be addressed by using negative versions of the same question, and shuffling the questions. Serial position effect is addressed by shuffling the list of possible answers. To guarantee that the answers to the different questions are a correct proxy of the constructs that one wishes to evaluate, it is also important to perform inter-item correlation analysis [87]. Internal validity can be hampered by think-aloud activities, which can influence the behaviour of the user, interaction with the moderators, expectations from the tool and possible rewards given after the activity. These threats cannot be entirely mitigated, but the researcher should clarify the following with the user: (i) what is the status of the tool and the goal of the activity, so that expectations are clear; (ii) interaction should be minimised; (iii) it is the tool that is under evaluation and not the user; and (iv) the reward will be given regardless of the results. 
Overall, to ensure that the analysis is not biased, it is also important to perform triangulation, that is, reasoning about relations between think aloud, observations, and post-task and post-test questionnaires. External validity can be limited by the low degree of realism of the test environment, as happens for laboratory experiments. To reduce this threat, one can perform the test in the real environment in which the user typically works, so that interruptions, noise and other factors make the evaluation more realistic.

- Maturity in FM: throughout the years there have been efforts to address usability, but it has by no means become standard practice and many FM tools have never been analysed with respect to their usability. The PhD thesis of Kadoda [88] addresses the usability aspects of FM tools. First, using the usability evaluation criteria proposed by Shackel [89], two syntax-directed editing tools for writing formal specifications are compared in a practical setting; second, using the cognitive dimensions framework proposed by Green and Petre [90], the usability of 17 theorem provers is analysed. Hussey et al. [91] demonstrate usability analysis of Object-Z user-interface designs through two small case studies. In parallel, there have been many attempts at improving the usability of specific FM through the use of dedicated user-friendly toolsets and the like to hide FM intricacies from non-expert users like practitioners, ranging from the SCR Requirements Reuse (SC(R)) toolset [92] through the IFADIS toolkit [93,94] to the FRAMA-C platform [95] and the ASMETA toolset [96] built around the ASM method. Recently, a preliminary comparative usability study of seven FM (verification) tools involving railway practitioners was conducted [85]. The importance of usability studies of FM is confirmed by the recent FM survey by Garavel et al., in which over two-thirds of the 130 experts that participated responded that it is a top priority for FM researchers to “develop more usable software tools” ([25], Section 4.5).

- Pointers to external guidelines: for a prescriptive introduction to usability, the reader should refer to the ISO 9241-11:2018 Part 11 standard [78]. A main reference for usability testing is the handbook by Rubin and Chisnell [97]. Nielsen and Molich provide 10 ways to perform heuristic evaluation [76], while Mahatody et al. [77] report the state of the art of cognitive walkthrough. Quantitative metrics to measure usability attributes are given by Hornbaek [82]. Several resources are made available also via specialised websites (e.g., https://usabilitygeek.com/, accessed on 20 September 2022).

- Pointers to papers outside FM: A systematic literature review on usability testing, with references scored by their quality, is provided by Sagar and Anju [98]. A recent work addressing usability of two modelling tools is presented by Planas [99]. For works using TAM, and focusing on the assessment of attributes related to usability, also including understandability of languages, the reader can consider the works by Abrahão et al. [70,100].

6. Surveys

- Definition of the Strategy: A survey is a method to systematically gather qualitative and quantitative data related to certain constructs of interest from a group of individuals that are representative of a population of interest. The constructs are concepts that one wants to evaluate, e.g., usability of a certain tool or developers’ habits. The population of interest (also target population or population) is the group of individuals that is the focus of the survey, e.g., users of tool T, companies in a certain area, users of tool T from University A vs. users from University B, potential users of tool T with a background in computer science, etc. Surveys are normally oriented to produce statistics, so their output normally takes a quantitative form. Surveys are typically conducted by means of questionnaires, but they can also be carried out through interviews.

- Crucial characteristics: The survey process starts from RQs, and the identification of the constructs of interest just as for the other methods discussed. Then, one needs to characterise the target population, i.e., what are the characteristics of the subjects that will take the survey. Based on these characteristics, the researcher performs sampling, which means selecting a subset of subjects that can be considered representative for the population. This is normally carried out with probability sampling, in which subjects are selected according to some probability function (random, or stratified—i.e., based on subgroups of the population) from a sampling frame (i.e., an identifiable list of subjects that in an optimal scenario should cover the entire population of interest, for example the list of e-mail addresses of a company). The sample size required for the survey can be computed considering the size of the target population, desired confidence level, confidence interval and other parameters [101,102]. When designing a survey, one also needs to consider that a relevant portion of the selected subjects, usually about 80–90%, will not respond to the inquiry. Therefore, to have significant results, one needs to plan for a broad dissemination of the survey, so that, even with a low response rate, the desired sample is reached. If personal data is collected, it is also important to make sure to adhere to the GDPR [103] and to present an informed consent to the subjects. In this phase, it is also important to define the data management plan (for a template, check https://ec.europa.eu/research/participants/docs/h2020-funding-guide/cross-cutting-issues/open-access-data-management/data-management_en.htm#A1-template, accessed on 20 September 2022), which includes how the data will be stored and when it will be deleted. After determining the sample size, one needs to design the survey instrument, which can be composed of open-ended and/or close-ended questions. 
Each type of question has its own advantages and disadvantages, e.g., open-ended questions are richer in information but harder to process, while close-ended questions enable less spontaneous and extensive answers, but are easier to analyse and lead to comparable results between subjects. Regardless of the types of questions selected, a well-designed survey has the following attributes: (i) clarity, i.e., to what extent the questions are sufficiently clear to elicit the desired information; (ii) comprehensiveness, i.e., to what extent the questions and answers are relevant and cover all the important information required to answer the RQs; and (iii) acceptability, i.e., to what extent the questions are acceptable in terms of time required to answer them and preservation of privacy and ethical issues. To address these attributes, researchers should perform repeated pilots of the survey instrument, with relevant subjects. If the researcher is not sufficiently confident with the topic of the survey or the type of respondents, it is also useful to perform a set of interviews with selected subjects, to better define the questions to be included in the survey instrument. After piloting, the survey can be distributed to the selected sample, and the answers need to be recorded, following the data management plan defined beforehand. Then data is analysed and interpreted. In this phase, researchers should perform some form of coding for answers to open-ended questions (cf. Section 7), and should adjust the data considering missing answers. Data analysis and reporting can be performed by first presenting quantitative statistics, with percentages of respondents, possibly followed by more advanced statistical analysis. For example, if RQs concern relationships between variables, statistical hypothesis tests can be performed similar to laboratory experiments with human subjects (cf. Section 4).
Other advanced methods include Structural Equation Modeling (SEM), which allows researchers to identify relationships between high-level, conceptual and so-called ‘latent’ variables (e.g., background, success, industrial adoption), by analysing multiple observable indicators that can be extracted from the survey (e.g., educational degree and current profession can be considered as indicators of background) [104,105].
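
The sample size computation and the planning for non-response described above can be sketched as follows. This is a minimal illustration that assumes Cochran's formula with a finite population correction, which is one common choice and not necessarily the exact procedure of [101,102]. The function names, the default parameters (95% confidence, 5% margin of error, maximum variability p = 0.5) and the example figures are assumptions made for illustration.

```python
import math


def required_sample_size(population, z=1.96, margin=0.05, p=0.5):
    """Estimate the number of completed responses needed.

    Uses Cochran's formula with finite population correction (assumed
    method). `z` is the z-score of the desired confidence level,
    `margin` the margin of error, `p` the expected proportion.
    """
    if population < 1:
        raise ValueError("population must be at least 1")
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)  # infinite-population size
    n = n0 / (1 + (n0 - 1) / population)         # finite population correction
    return math.ceil(n)


def invitations_needed(sample_size, response_rate):
    """Number of subjects to contact so that the expected number of
    responses reaches `sample_size`, given a response rate in (0, 1]."""
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in (0, 1]")
    return math.ceil(sample_size / response_rate)


# Hypothetical target population of 1000 tool users, 15% response rate
needed = required_sample_size(1000)
print(needed, invitations_needed(needed, 0.15))
```

In this hypothetical setting the number of invitations exceeds the size of the population, which makes explicit why a low response rate can render the desired sample unreachable for small target populations such as FM experts.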

- Weaknesses/Difficulties in FM: similar to the case of laboratory experiments with human subjects, the main issue is the selection of the participants, i.e., the respondents to the survey. FM experts are an inherently limited population, and each expert is specialised in a limited number of methods or tools. In practice, random sampling is often not practicable, and one needs to recruit as many subjects as possible, thus resorting to the so-called convenience sampling. Furthermore, the actual population of FM users, which could be the target of a survey about an FM tool, or about FM adoption in general, cannot be known in advance. Therefore, reasonable assumptions and arguments need to be provided to show that the sample of respondents is actually representative of a certain target population. The FM domain also uses technical jargon, which could make questions and answers not sufficiently clear to a sufficiently wide range of potential respondents. Therefore, in some cases the researchers are constrained to ask only general questions, which however limit the degree of insight that one can achieve.

- Typical threats to validity: the main threats to validity are associated with construct validity, which in this case can be actually measured by using different survey questions to measure the same construct, and then performing an inter-item correlation analysis [87]. This allows the researcher to discard some items related to a certain construct of interest, because the responses do not appear to be correlated with other items associated with the same construct, or because they are not sufficiently discriminative with respect to other items measuring different constructs. In principle, survey research distinguishes between validity (criterion, face, content, and construct) and reliability [106]. Here, we use the term internal validity to account for the different validity types, in order to make the explanation more intuitive and consistent with respect to the other strategies described. Threats to internal validity concern the way in which the questionnaire is formulated, which could lead to preferred answers (e.g., all the first answers are checked in a long list of options), and which, if too long, could lead to fatigue effects. The first issue is addressed by shuffling answers between respondents. The second one could be addressed by reducing the length of the questionnaire, and also by shuffling the questions between respondents, so that fatigue effects are compensated. Internal validity can also be affected by systematic response bias, especially when Likert scales are used. This can occur when similar questions to measure the same construct are always presented in the same affirmative form. The respondent may simply check the same answer, since the questions look similar. To prevent this, the opposing question format is used, in which the same question is asked in positive and negative form. Shuffling the questions also helps in this regard.
In survey research, external validity should be maximised, as one wants to collect information about an entire population. Claims about the appropriateness of the sample size should be included to support external validity. An additional threat, typical of surveys, concerns reliability, which is to what extent similar results in terms of distribution are obtained if the survey is repeated with a different sample on the same population. In practical scenarios, this means that the questions should trigger the same answers if asked to similar respondents, and can be addressed by verifying that the questions are sufficiently clear by piloting the questionnaire with a subset of respondents.
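
The combination of the opposing question format with inter-item correlation analysis can be sketched as follows. This is a minimal illustration, assuming 5-point Likert items and the Pearson coefficient as the correlation measure; rank-based coefficients are often preferred for ordinal data, so the choice is an assumption. The function names and the example answers are hypothetical.

```python
import math


def reverse_score(value, scale_min=1, scale_max=5):
    """Map an answer to a negatively worded item onto the positive scale."""
    return scale_max + scale_min - value


def pearson(xs, ys):
    """Pearson correlation between the answers to two items."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long lists with at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant answers")
    return cov / (spread_x * spread_y)


# Hypothetical answers of five respondents to two items on the same construct
item_positive = [5, 4, 2, 1, 3]   # e.g., "The tool is easy to use"
item_negative = [1, 2, 4, 5, 3]   # e.g., "The tool is hard to use"

aligned = [reverse_score(v) for v in item_negative]
print(pearson(item_positive, aligned))
```

A high correlation after reverse scoring suggests that the two items measure the same construct, whereas a low one is a signal that an item may need to be reworded or discarded.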

- Maturity in FM: while not particularly mature in FM, some seminal surveys exist. The first systematic survey of the use of FM in the development of industrial applications was conducted by Craigen et al. [107]. This extensive survey is based on twelve ‘case studies’ from industry and it was widely publicized [108,109,110]. One of these case studies is also reported in the classical survey on FM by Clarke, Wing et al. [111], together with other ‘case studies’ in specification and verification. The comprehensive survey on FM by Woodcock et al. [112] reviews the application of formal methods in no less than 62 different industrial projects world-wide. Basile et al. [113] and Ter Beek et al. [114] conducted a survey with FM industrial practitioners from the railway domain, aimed at identifying the main requirements of FM for the railway industry. Finally, Garavel et al. [25] conducted a survey on the past, present and future of FM in research, industry and education among a selection of internationally renowned FM experts, while Gleirscher and Marmsoler [115] conducted a survey on the academic and industrial use of FM in safety-critical software domains among FM professionals from Europe and North America.

- Pointers to external guidelines: Guidelines and suggestions specific to the software engineering domain are: the introductory technical report by Linåker et al. [116]; the comprehensive article, especially covering survey design, by Kitchenham and Pfleeger [117]; the article by Wagner et al. [118], which has a primary focus on data analysis strategies, and related challenges; the checklist by Molleri et al. [119]; the guidelines specific to sampling in software engineering, by Baltes and Ralph [120], especially concerning cases in which probabilistic sampling is hardly applicable; the guidelines by Ralph and Tempero about threats to construct validity [121]. More general textbooks on survey research are: the introductory textbook from Rea and Parker [102], covering all the relevant topics in an accessible way; the technical book by Heeringa et al. [122], specific for data analysis; the extensive book on categorical data analysis by Agresti [123], also covering topics that go beyond survey research in a technical, yet accessible way, and including several examples. For SEM, a primary reference is the book by Kline “Principles and Practice of Structural Equation Modeling” [105].

- Pointers to studies outside FM: a reference survey, oriented to uncover pain points in requirements engineering, involving several companies across the globe, is the NaPiRE (Naming the Pain in Requirements Engineering) initiative (http://www.re-survey.org/#/home, accessed on 20 September 2022). The results of this family of surveys have been published by Méndez-Fernández et al. [124]. Another recent and rigorous survey, using SEM, is the one by Ralph et al. [125], on the effects of COVID-19 on developers’ work. A survey using hypothesis testing based on multiple regression models is the one by Chou and Kao [126], about critical factors on agile software processes. Finally, a survey about modelling practices, also using hypothesis testing but with different types of tests, is the work by Torchiano et al. [127].

7. Qualitative Studies

- Definition of the Strategy: qualitative studies aim at collecting qualitative data by means of interviews, focus groups, workshops, observations, documentation inspection or other qualitative data collection strategies [128], and systematically analyse these data. These studies aim at inducing theories about constructs, based on the analysis of the data. Constructs and RQs can be defined beforehand, or—less frequently in FM—can emerge from the data themselves. Qualitative studies are typically used when the constructs of interest are abstract, conceptual and hardly measurable (e.g., human factors, social aspects, viewpoints, practices). Qualitative studies include the general framework of Grounded Theory (GT) [129,130,131,132], which has recently been specialised for the analysis of socio-technical systems [133].

- Crucial characteristics: qualitative studies typically start with general RQs about abstract constructs, and perform iterations of data collection and analysis to provide answers to the general RQs. Like surveys, qualitative studies require sampling of subjects or objects from which data is collected. However, while surveys typically resort to probabilistic sampling, qualitative studies typically use purposive sampling. With purposive sampling, given the RQ, the researcher samples strategically, by selecting the units that, in the given context, are the most appropriate to give different internal perspectives to come to a (locally) complete view and answer the RQ. In qualitative studies, the fundamental characteristic is the qualitative nature of the data analysed, in most cases sentences produced by human subjects during interviews. For example, one could formulate a general RQ: What are the human factors that characterise the understanding of the notation N?, with associated constructs (i.e., human factors, understanding). Typically, RQs in qualitative studies, and in particular in GT, are why and how questions [133], which are oriented to investigate the meaning of the analysed situations. However, what questions are also common, especially to induce descriptive theories. Furthermore, in the aforementioned RQ, human factors and understanding are classes, not constructs, as in qualitative studies one often aims at providing classifications rather than measuring properties [121]. In this paper, we treat them as constructs to facilitate the reader in making analogies with the more quantitative strategies: while in quantitative studies one measures properties related to the constructs of interest, in qualitative studies one typically creates a classification related to the constructs. Coming back to our RQ, one can answer it by acquiring information through interviews involving novice users of the notation.
The interview transcripts will be the qualitative data to be analysed. Data analysis is carried out by means of so-called thematic analysis [134,135]. Thematic analysis aims to identify concepts and relations thereof, based on the interpretation of the data. Thematic analysis makes use of coding, which means associating essence-capturing labels (called ‘codes’) to relevant chunks of data (e.g., sentences, paragraphs, lines). The codes represent concepts, which are then aggregated into categories, which in turn can be aggregated and linked to one another. It should be noted that different terminology is used by different researchers and schools of thought in GT. The terminology used here (e.g., concepts, categories) generally follows from Strauss and Corbin [130]. Later, we refer to coding families, which comes from Glaser [131]. For example, one interviewee may say “I often suffer from fatigue when reading large diagrams”. This can be coded with the labels fatigue, read, large diagrams. Another interviewee may say: “I find it hard to memorise all the types of graphical constructs, they are too many, and this makes it frustrating to read the diagrams”. The labels could be: construct types, read, frustration. The concepts fatigue and frustration could then be aggregated into the more general category, coded as feelings. Once concepts and categories are identified, one can identify relationships (hierarchical, causal, similarity, etc.), between concepts and categories. The process is iterative, and through the iterations some concepts and categories may be added or removed. The iterations need to resort to memos and constant comparison. Memos are notes that the researcher writes to justify codes, reflect on possible relations between concepts/categories, or about the analysis process itself. 
Constant comparison means comparing the emerging graph of concepts and categories with the data, so that there is clear evidence of the link between the more abstract categorisation and the data. The process of data collection and analysis is normally carried out until saturation is reached, i.e., until no further information appears to emerge from the collection and analysis of novel data. The theory, which answers the RQ, is represented by the conceptualisation that emerges from the data. In our example, the theory is a graph of all human factors affecting different dimensions of understanding different aspects of the notation N. The theory can also be represented by means of different classical coding families, which are typical patterns of concepts and relations thereof [129,131]. This general procedure, which we refer to as thematic analysis, is applied as part of the general framework of GT. With GT, data collection and analysis are executed as intertwined and iterative activities, and one applies so-called theoretical sampling to identify subjects to interview or objects to analyse based on the theory that has emerged from the data so far. Here, we do not discuss the GT framework, but we recommend the reader interested in qualitative studies to refer to the guidelines of Hoda [133], which are defined for the software engineering field, and can apply also to FM cases.
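
The coding example above can be made concrete with a small data structure. The following is a minimal sketch, not a replacement for dedicated qualitative analysis tools: it stores the two interview excerpts with their codes, aggregates the concepts fatigue and frustration into the category feelings, and retrieves the excerpts that support a category, which is the kind of link between categorisation and data that constant comparison relies on. All variable and function names are hypothetical.

```python
from collections import defaultdict

# Interview excerpts paired with the codes assigned by the researcher.
coded_segments = [
    ("I often suffer from fatigue when reading large diagrams",
     ["fatigue", "read", "large diagrams"]),
    ("I find it hard to memorise all the types of graphical constructs, "
     "they are too many, and this makes it frustrating to read the diagrams",
     ["construct types", "read", "frustration"]),
]

# Concepts aggregated into more general categories.
categories = {"feelings": {"fatigue", "frustration"}}


def segments_by_code(segments):
    """Map each code to the indices of the segments labelled with it."""
    index = defaultdict(list)
    for position, (_text, codes) in enumerate(segments):
        for code in codes:
            index[code].append(position)
    return dict(index)


def evidence_for_category(category, segments, categories):
    """Return the excerpts that support a category through any of its codes."""
    wanted = categories[category]
    return [text for text, codes in segments if wanted & set(codes)]


index = segments_by_code(coded_segments)
print(index["read"])
print(len(evidence_for_category("feelings", coded_segments, categories)))
```

Keeping the excerpts attached to codes and categories in this way makes it straightforward to report quotes that exemplify each concept when presenting the results.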

- Weaknesses/Difficulties in FM: An inherent difficulty in applying qualitative research methods in FM is the type of skills and attitude required from a qualitative researcher, who typically takes a constructivist (humans construct knowledge through interaction with the environment) rather than a positivist stance (humans discover knowledge through logical deduction from observation) in developing their research. This means that while FM practitioners search for proofs, and assume that mathematical objectivity can, and shall be achieved, qualitative research takes subjectivity and contradiction as intrinsic characteristics of reality. For this reason, the type of profile that is fit to do qualitative research in FM, and therefore has FM competence plus a constructivist mindset, is rare. Another difficulty in FM is the limited application of FM in industrial fields. Qualitative studies in FM should be based on interviews and observations of subjects practicing FM in real-world settings. Since these subjects are limited, one needs to resort to observations and interviews in research contexts, where FM are practiced, tools are developed and interaction with industrial partners takes place. These studies should complement the viewpoint of researchers with that of industrial partners, in order to have a complete, possibly contrasting view of the subject matter.

- Typical threats to validity: threats to validity in qualitative studies can hardly be categorised according to the classes presented in Section 2. Other validity criteria shall be fulfilled, and different categorisations are provided in the literature; cf., e.g., Guba and Lincoln [136] (trustworthiness and authenticity) vs. Charmaz [132] (credibility, originality, resonance and usefulness) vs. Leung [137] (validity, reliability and generalizability). We refer to Charmaz and Thornberg for a discussion on the topic [138]. Regardless of the type of classification selected, the researcher should ensure that four main practices are followed, which are oriented to ensure that, despite the inherent subjectivity of qualitative research, interpretations are sound and reasonable: (i) clearly report the method adopted for data analysis, with at least one complete example that describes how the researcher passed from data to concepts, categories and relations thereof; (ii) in the results section, report quotes that exemplify concepts/categories and relations thereof; (iii) perform member checking/respondent validation: the researcher needs to (a) agree with the participants that what is transcribed and reported is actually what was meant by the participants; and (b) show the findings to the participants to understand to what extent these are accepted and considered reasonable; and (iv) perform triangulation: this means looking into multiple data sources (e.g., interviews, observations, documents) to corroborate the findings and involving more than one subject in the data analysis. A reference set of steps for structured triangulation between different analysts is reported in the guidelines by Cruzes [135].

- Maturity in FM: qualitative studies are not mature at all. We are only aware of [139], where Snook and Harrison report on five structured interviews, lasting around two hours each, conducted with FM practitioners from different companies, all with some experience of using FM in real systems (e.g., B, Z, VDM, CCS, CSP, refinement, model checking, and theorem proving). They discuss the impact of FM on the company, its products, and its development processes, as well as their scalability, understandability, and tool support. It is worth mentioning that for the aforementioned systematic survey by Craigen et al. [107,110], the authors conducted 23 interviews involving about 50 individuals in both North America and Europe, lasting from half an hour to 11 h. Moreover, the authors of [140] mention that they interviewed FM practitioners, sponsors, and other technology stakeholders in an informal manner.

- Pointers to external guidelines: guidelines for conducting interviews are provided in the book Social Research Methods by Bryman [141], which also contains a comprehensive introductory manual with a relevant part on qualitative methods, including GT. For observational studies—which belong to the field of ethnography [142]—a relevant reference is Zhang [143]. A primary reference for coding is the book of Saldaña [144]. For GT, one can refer to the already cited article of Hoda [133]—which will be followed by an upcoming manual in the form of a book—and to the guidelines of Stol [145].

- Pointers to papers outside FM: Examples of qualitative studies based on interviews are: the one by Ågren et al. [146], studying the interplay between requirements and development speed in the context of a multi-case study; Yang et al. [147], on the use of execution logs in software development; Strandberg et al. [148], on the information flow in software testing. Example studies using GT in software engineering are: Masood et al. [149], about the difference between Scrum by the book and Scrum in practice; Leite et al. [150], on the organisation of software teams in DevOps contexts.

8. Judgement Studies

- Definition of the strategy: a judgement study is a research strategy in which the researcher selects experts on a certain topic and aims to elicit opinions around a set of questions, possibly triggered by some hands-on experience, with the goal of reaching consensus among the experts.

- Crucial characteristics: in judgement studies, RQs cover aspects that require specific expertise to be answered, and for which a survey may not provide sufficient insight, or for which research is not sufficiently mature, like, e.g., What are the main problems of applying FM in industry? In which way can the use of tool T improve the identification of design issues? In judgement studies, the researcher typically selects a sample of subjects that are considered experts on the topic of interest. The results of a judgement study can be used to drive the design of questionnaires to later be elaborated into surveys. For instance, once one has identified the typical problems of FM in industry, these problems can be presented as possible options to a larger set of participants. Data collection is typically qualitative, and it is performed by means of focus groups, brainstorming workshops, or Delphi studies. With focus groups, the experts (normally 8 to 10) participate in a synchronous meeting in which they are asked to provide their viewpoint on a topic of interest. Before the meeting, a moderator and a note taker are appointed, and recording, possibly with video, is set up. Before the focus group, the experts can be faced with a reflection-triggering task, for example observing a model of a system, playing with a tool interface or a more complex task (e.g., designing a model with tool T). This latter case typically occurs when one wants to evaluate a certain tool involving the opinion of multiple experts, e.g., building on top of the DESMET project methodology [15]. During the focus group, general warm-up questions are asked, also to elicit the expertise of each expert (e.g., In which projects did you use FM in industry?), followed by more specific questions (e.g., What could be the difficulties of using tool T in industry?) and, after a summary, closing with a question for final remarks (e.g., Do you have something to add?).
During focus groups, it is recommended to have a whiteboard, on which the moderator reports the results of the discussion so far. The moderator should make sure that all participants express their opinion, and that consensus is eventually reached or, if not, that contrasting opinions are clearly stated and agreed upon. Focus groups typically last one hour. If needed, multiple focus groups can be organised in parallel, and participants share the final findings in a plenary meeting. Focus groups can be carried out following the Nominal Group Technique (NGT) designed by Delbecq and Van de Ven [151]. Workshops are meetings that include between 10 and 30 participants, and are similar to focus groups in terms of their goal, i.e., brainstorming opinions and reaching consensus. The main difference is that workshops typically address more general questions, and can include different types of experts, with different degrees of expertise, whereas focus groups consist of more homogeneous participants focused on a more specific topic. Workshops can be carried out through adaptations of the NGT [151], in which each participant answers a general question using one or more sticky notes (e.g., What are the problems of applying FM in industry?). Then the sticky notes are read out loud, explained, attached to a whiteboard by the participants, and iteratively grouped and prioritised. In focus groups and workshops, a crucial role is played by the moderator, who needs to ensure that none of the participants dominates the meeting, and that all participants are able to express their viewpoint. With Delphi studies, a larger number of experts is normally involved than with other methods (i.e., more than 30 subjects) and for longer periods of time (weeks to months), and the goal is to identify best practices or define procedures, aiming also at quantitatively measuring the consensus.
The selected experts are individually asked to express their opinions around a certain problem or question, normally in written form and anonymously. The opinions are then shared with the other participants, and discussion takes place in order to reach consensus, similar to a paper reviewing process. The process typically takes place asynchronously. However, in practice, the discussion can also be carried out by means of a dedicated focus group, depending on the goal and the complexity of the RQs. With Delphi studies, multiple rounds of iterations are carried out to reach consensus. The initial round normally consists of an open question oriented to define the items to be discussed in later rounds (e.g., What are the best practices for introducing FM in industry?). The second round can give ratings of relevance or agreement to the different items that have been identified collectively (e.g., How relevant is it to have an internal contact person having some knowledge of modelling?). Therefore, in this round, quantitative answers are collected. In a third round, participants can re-evaluate their opinions based on the average results of the group. Therefore, in the end, consensus about, e.g., relevance or agreement, can be measured quantitatively. Focus groups, workshops and initial rounds of Delphi studies typically produce qualitative data. Data analysis in all these cases is carried out with thematic analysis, as described in Section 7. Quantitative analysis in Delphi studies aims at establishing that about 75% consensus is reached about the identified items [152].
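The quantitative step of a Delphi round can be illustrated with a minimal sketch. It assumes, purely for illustration, that experts rate each item on a 5-point scale and that consensus on an item means that at least 75% of the experts rate it 4 or higher; the scale, the threshold, the function names and the ratings are all hypothetical, and other operationalisations of consensus are possible.

```python
from statistics import median


def consensus_share(ratings, threshold=4):
    """Fraction of experts whose rating is at or above `threshold` (assumed 5-point scale)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return sum(r >= threshold for r in ratings) / len(ratings)


def delphi_round_summary(ratings_by_item, threshold=4, cutoff=0.75):
    """Summarise one rating round: median, share of agreement, and consensus flag per item."""
    summary = {}
    for item, ratings in ratings_by_item.items():
        share = consensus_share(ratings, threshold)
        summary[item] = {
            "median": median(ratings),
            "share": share,
            # Assumption: consensus means the share of agreement reaches the cutoff.
            "consensus": share >= cutoff,
        }
    return summary


# Hypothetical second-round ratings from eight experts (illustrative data only).
ratings = {
    "internal contact person with modelling knowledge": [5, 4, 4, 5, 3, 4, 5, 4],
    "tool qualification support": [2, 5, 3, 4, 1, 4, 3, 5],
}

summary = delphi_round_summary(ratings)
for item, stats in summary.items():
    print(item, stats)
```

Items that do not reach the cutoff would be candidates for re-evaluation in a further round, in which participants see the group results before rating again.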

- Weaknesses/Difficulties in FM: no particular difficulties characterise judgement studies in FM, which should therefore actually be encouraged, as only a few experts are typically available on certain techniques or tools, and the issues under discussion are normally particularly complex, e.g., industrial acceptance or scalability of FM tools. One practical difficulty arises with focus groups and workshops, in which one needs to record many different voices, and it is not always easy to reconstruct the event from voice recordings only. Furthermore, poor equipment can make it difficult to record all the voices in a room. Video recording can address part of these issues, together with extensive note taking and transcriptions made soon after the meeting.

- Typical threats to validity: an inherent threat to validity of judgement studies is the limited generalisability across subjects, given the limited sample, which affects external validity. However, an accurate and extensive selection of experts on the topic of interest can improve external validity by allowing generalisability across responses [12]. Other threats are related to internal validity, since the results of the study may be biased by dominant, disruptive or reluctant behaviour of participants. These issues can be addressed by the moderator and by ensuring balanced protocols for participation. Concerning construct validity, the main issue resides in the communication of questions, and the definition of a shared terminology. Piloting the study and defining a common vocabulary beforehand can mitigate this issue. As for data analysis, typical threats of qualitative studies apply here.

- Maturity in FM: we are aware of only one judgement study on FM. In [6], nine different FM tools are analysed by 17 experts with experience in FM applied to railway systems. The study identifies specific strengths and weaknesses of the tools and characterises them by their suitability in specific development contexts.

- Pointers to external guidelines: for focus groups, the book by Krueger and Casey [153] is a primary reference, while, for a quicker tour on this methodology, one should refer to Breen [154]. A reflection on focus groups for software engineering is reported by Kontio et al. [155]. For Delphi studies, the initial guidelines have been proposed by Dalkey and Helmer [156], but over 20 variants exist [157]. For the most commonly used guidelines, we recommend referring to the survey by Varndell et al. [158]. For the NGT, which can be seen as a hybrid between focus groups and Delphi, the reader can refer to the original article [151], to the comparative study between NGT and Delphi by McMillan et al. [159] or to the simple guidelines by Dunham [160]. A good overview of different group-based brainstorming techniques is reported by Shestopalov [161].

- Pointers to papers outside FM: Delphi studies are not common in FM and not as common in software engineering research as they are in healthcare and social sciences. In software engineering, Delphi studies have been used for software cost estimation, since their introduction as one of the most suitable techniques in [162], as well as for the identification of the most important skills required to excel in the software engineering industry [163,164,165]. Another good example using the Delphi method is reported by Murphy et al. [166], in the field of emergency nursing. An example of usage of the NGT is presented by Harvey et al. [167]. Focus groups are more frequently used in software engineering, typically to involve industrial participants in the validation of prototypical solutions, cf., e.g., Abbas et al. [168]. An example of a focus group study in software engineering, carried out via online tools, is presented by Martakis and Daneva [169]. An example of a combination of in-person focus groups and workshops in requirements engineering is presented by De Angelis et al. [170].

9. Case Studies, Action Research, and Design Science

- Definition of the strategy: a case study is an empirical inquiry about a certain phenomenon carried out in a real-world context, in which it is difficult to isolate the studied phenomenon from the environment in which it occurs. In the software engineering and FM literature, the term ‘case study’ is frequently misused, as it often refers to retrospective experience reports with lessons learned, or to exemplary applications of a technique on a specific case [171]. In principle, the researcher does not take an active role in the phenomenon under investigation. When the researcher develops an artefact (a tool or method) and applies it to a real-world context, one should design the study as Action Research [172] or Design Science [173]. However, the term case study is extremely common, and has established guidelines for reporting [174]. These guidelines are generally applicable also to those cases in which the researcher develops an artefact, applies it to data or people belonging to one or more companies, and possibly refines the artefact based on the feedback acquired through multiple iterations. Therefore, in this paper we will discuss only case study research as a unifying framework, including also those cases in which the researcher actively intervenes in the context, as is common in software engineering and FM.

- Crucial characteristics: a crucial characteristic of a case study is the extensive characterisation of the context in which the investigation takes place, typically one or more companies or organisations; when more than one is considered, we speak of a multiple case study. The researcher needs to clearly specify what process is typically followed by the company, what documents are produced, who the actors involved are, which tools are used to support the process, and other salient characteristics. Then, one needs to make explicit the unit(s) of analysis, i.e., the case being investigated. The unit can be the entire company, a team, a project, a document, etc., or sets thereof, in case the researcher wants to perform a comparison between different units. Furthermore, one needs to characterise the subjects involved in the research, their profile, as well as the objects, e.g., documents or artefacts. As for other research inquiries, a case study starts from the RQs. This is also what mainly differentiates a case study from an experience report, which is typically a retrospective reflection, and is not guided by explicit RQs. In case studies, RQs typically start from the needs of the company in which the study is carried out. The RQs of a case study can include questions related to the application of a certain artefact, e.g., What is the applicability of tool T? with sub-questions: To what extent can we reduce the bugs by using tool T? To what extent is the performance of tool T considered acceptable by practitioners? In other cases, the RQs can be related to understanding the process, e.g., What is the process of adoption of FM in the company? or What is the process of V&V through FM? As one can see, RQs in case studies can include both qualitative and quantitative aspects, and therefore quantitative and qualitative approaches are used for data collection and analysis, to answer the RQs.
For example, to answer a general RQ such as What is the applicability of FM in company C? and associated sub-questions outlined above, one can first measure the performance in terms of bug reduction ensured by the application of FM (quantitative) and then interview practitioners to understand if these measures are acceptable (qualitative). More specifically, one can first observe the number of bugs in one or more projects carried out without FM, and compare this number with similar projects in which FM are applied—always providing an extensive account of the characteristics of the projects. To understand the perception of practitioners, one can interview them after they have experienced the usage of FM in the projects. Overall, these different types of data contribute to give an answer to the initial RQ. Techniques such as laboratory experiments, usability studies, surveys, qualitative studies and judgement studies can be carried out in the context of case studies. However, one needs to consider the limited data points normally available in case studies, and reasonably adapt the available techniques, applying their principles rather than their full prescriptions, and reduce expectations about generality of the findings.
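The quantitative part of the example above, comparing bugs in projects with and without FM, can be sketched as follows. This is only an illustration under stated assumptions: the project records, the field names, and the choice of normalising bug counts by project size (bugs per KLOC) are hypothetical and not prescribed by the text. With so few data points the result is descriptive only, and it would be complemented by the project characterisation and practitioner interviews discussed above.

```python
from statistics import mean


def defect_density(bugs, kloc):
    """Bugs per thousand lines of code (an assumed normalisation, not prescribed by the text)."""
    if kloc <= 0:
        raise ValueError("project size must be positive")
    return bugs / kloc


# Hypothetical project records from one company (illustrative numbers only).
projects = [
    {"name": "P1", "uses_fm": False, "bugs": 40, "kloc": 20.0},
    {"name": "P2", "uses_fm": False, "bugs": 30, "kloc": 10.0},
    {"name": "P3", "uses_fm": True, "bugs": 12, "kloc": 12.0},
    {"name": "P4", "uses_fm": True, "bugs": 9, "kloc": 6.0},
]


def mean_density(records, uses_fm):
    """Mean defect density over the projects that did (or did not) apply FM."""
    densities = [
        defect_density(p["bugs"], p["kloc"])
        for p in records
        if p["uses_fm"] == uses_fm
    ]
    return mean(densities)


without_fm = mean_density(projects, uses_fm=False)
with_fm = mean_density(projects, uses_fm=True)

# Relative reduction in mean defect density; descriptive only, given the tiny sample.
relative_reduction = (without_fm - with_fm) / without_fm

print(f"without FM: {without_fm:.2f} bugs/KLOC")
print(f"with FM:    {with_fm:.2f} bugs/KLOC")
print(f"relative reduction: {relative_reduction:.0%}")
```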