A Framework for Rapid Robotic Application Development for Citizen Developers

Abstract

1. Introduction

2. The Beaten Path in Robotic Software Development

2.1. State of the Art Robotic Middleware and Frameworks

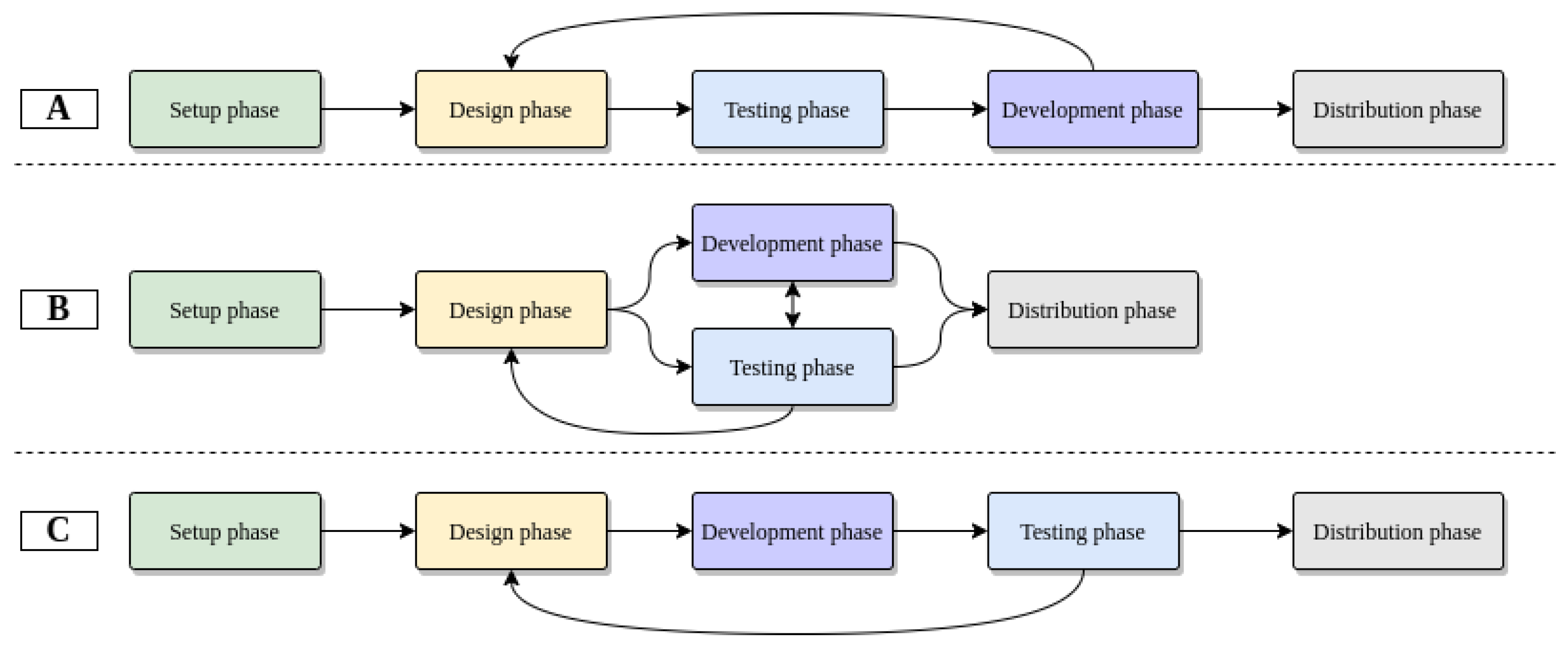

2.2. Robotic Applications: The Conventional Way

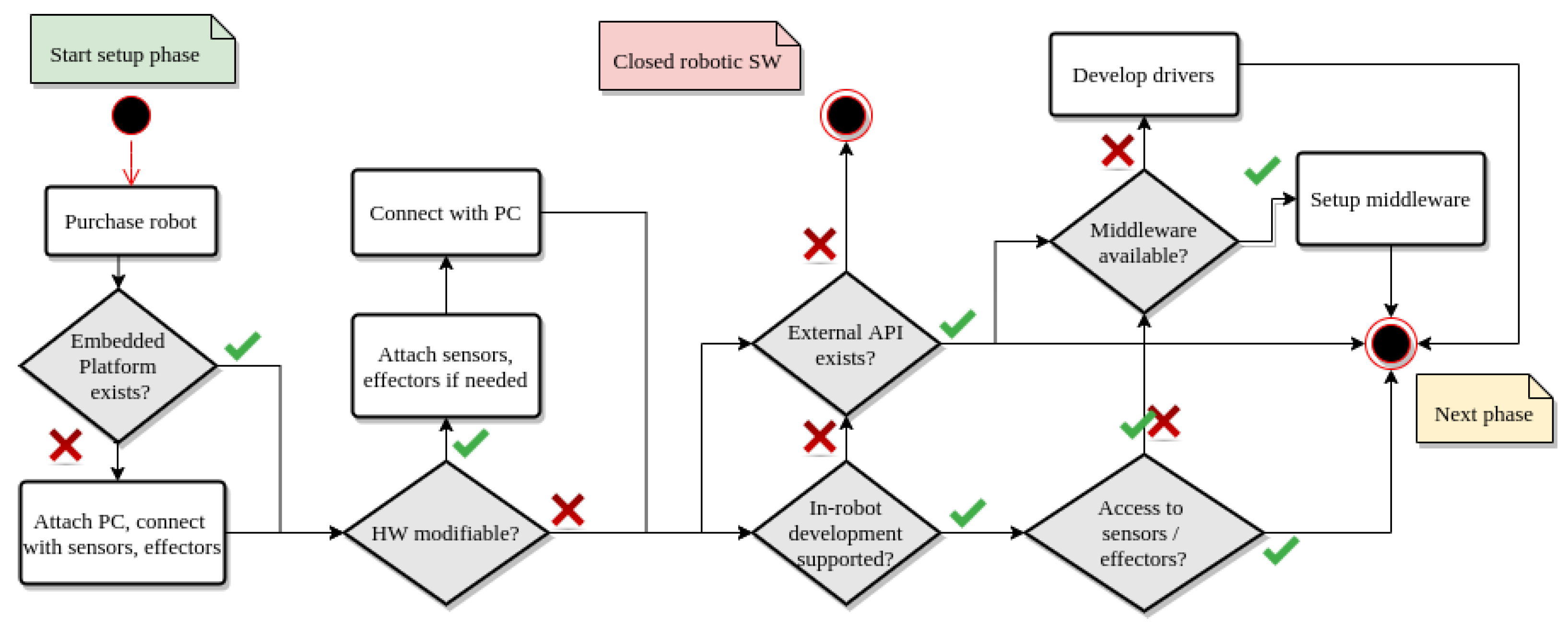

2.2.1. Setup Phase

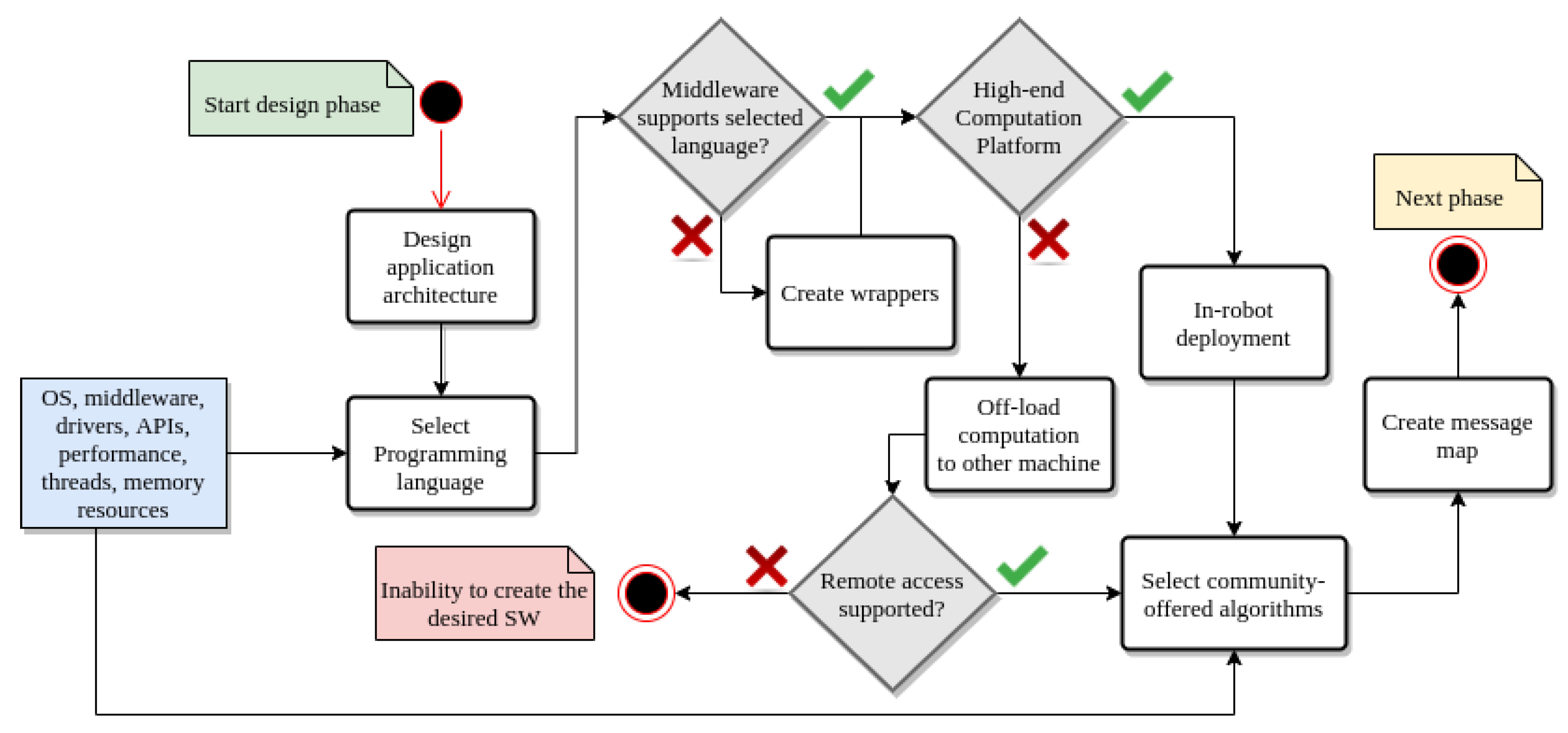

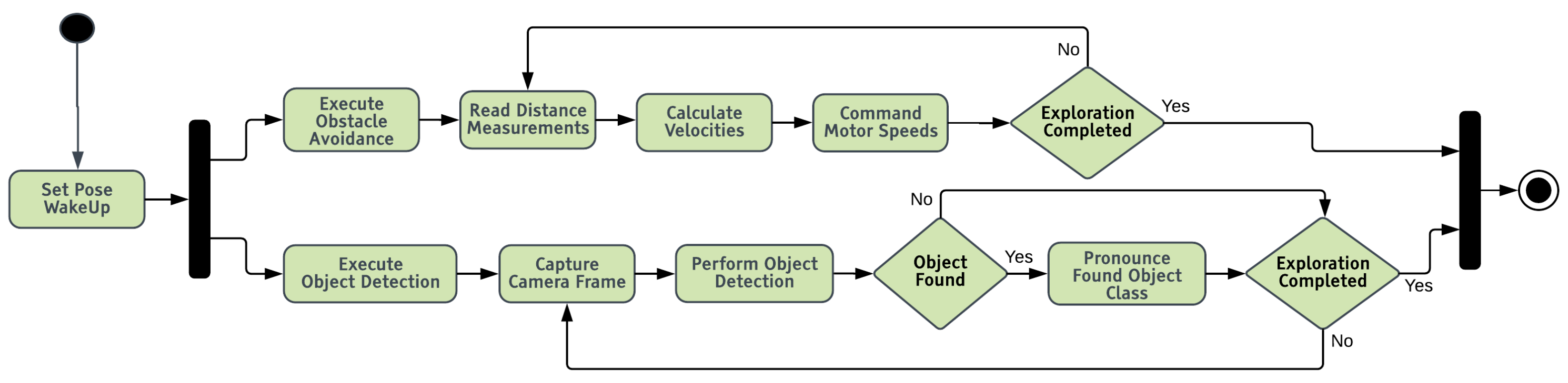

2.2.2. Design Phase

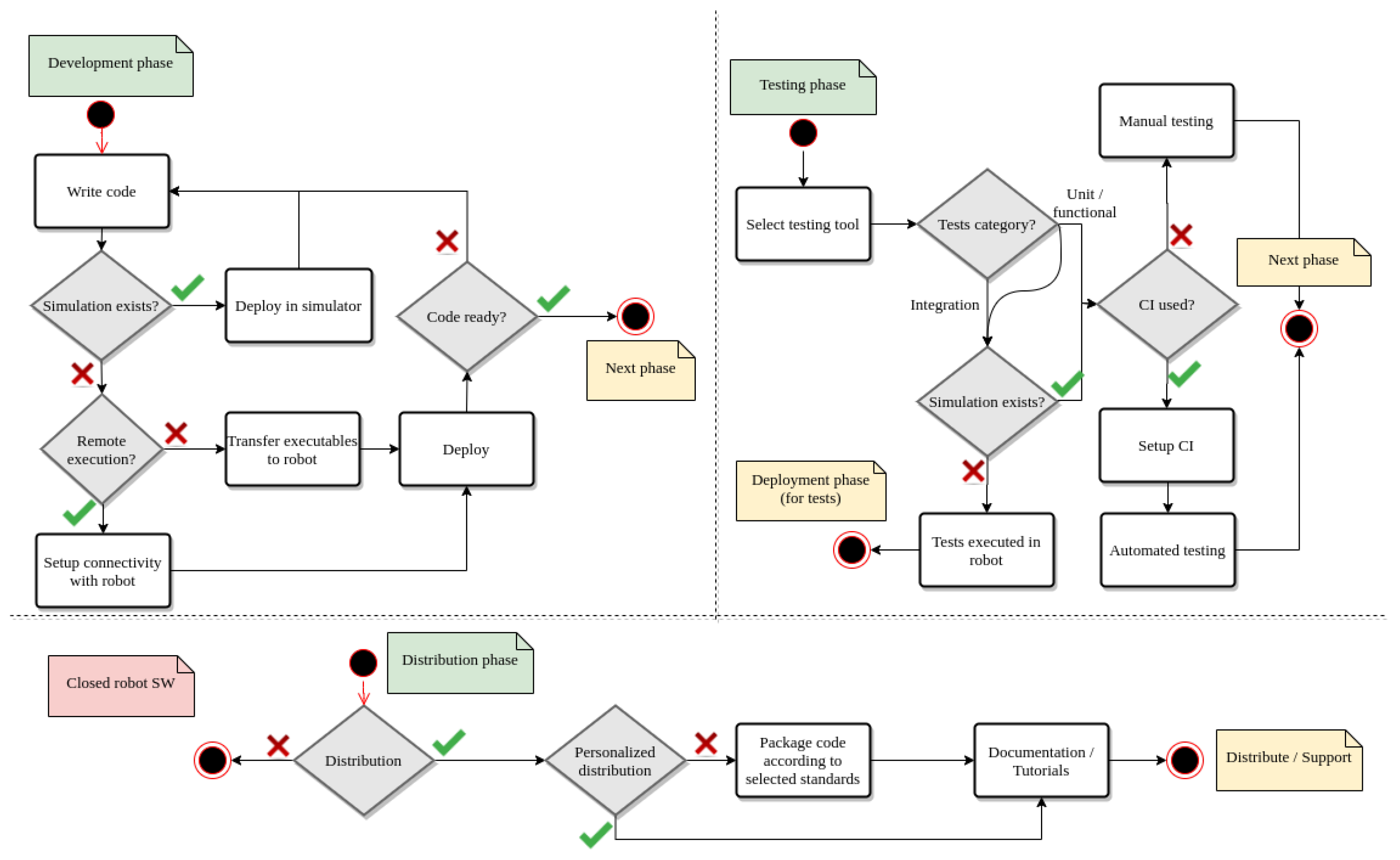

2.2.3. Development Phase

2.2.4. Testing Phase

2.2.5. Distribution Phase

- Make the pre-compiled binaries available to the web via a public link;

- Package and upload to OS-specific upstream repositories (e.g., Debian package);

- Package and upload to specialized repositories of robotics software, according to the selected middleware (for example, ROS upstream repositories).

2.3. Identifying the Limitations for Citizen-Developers

2.3.1. Resource-Related Inability to Meet the Requirements

2.3.2. Middleware-Related Inability to Meet the Requirements

2.3.3. Portability Problems to Other Robots

2.3.4. Complexity Due to Distributed Application Deployment

2.3.5. Physical Damage Due to Insufficient Testing

2.3.6. Erroneous 3rd Party Setup/Execution

2.4. Leveraging the Robotic Software Development Problems

2.4.1. Installation and Configuration

2.4.2. Remote Data Acquisition and Control of On-Robot Effectors

2.4.3. Simplified Testing Procedures

2.4.4. Seamless Portability/Distribution

3. R4A Approach

3.1. Specifications

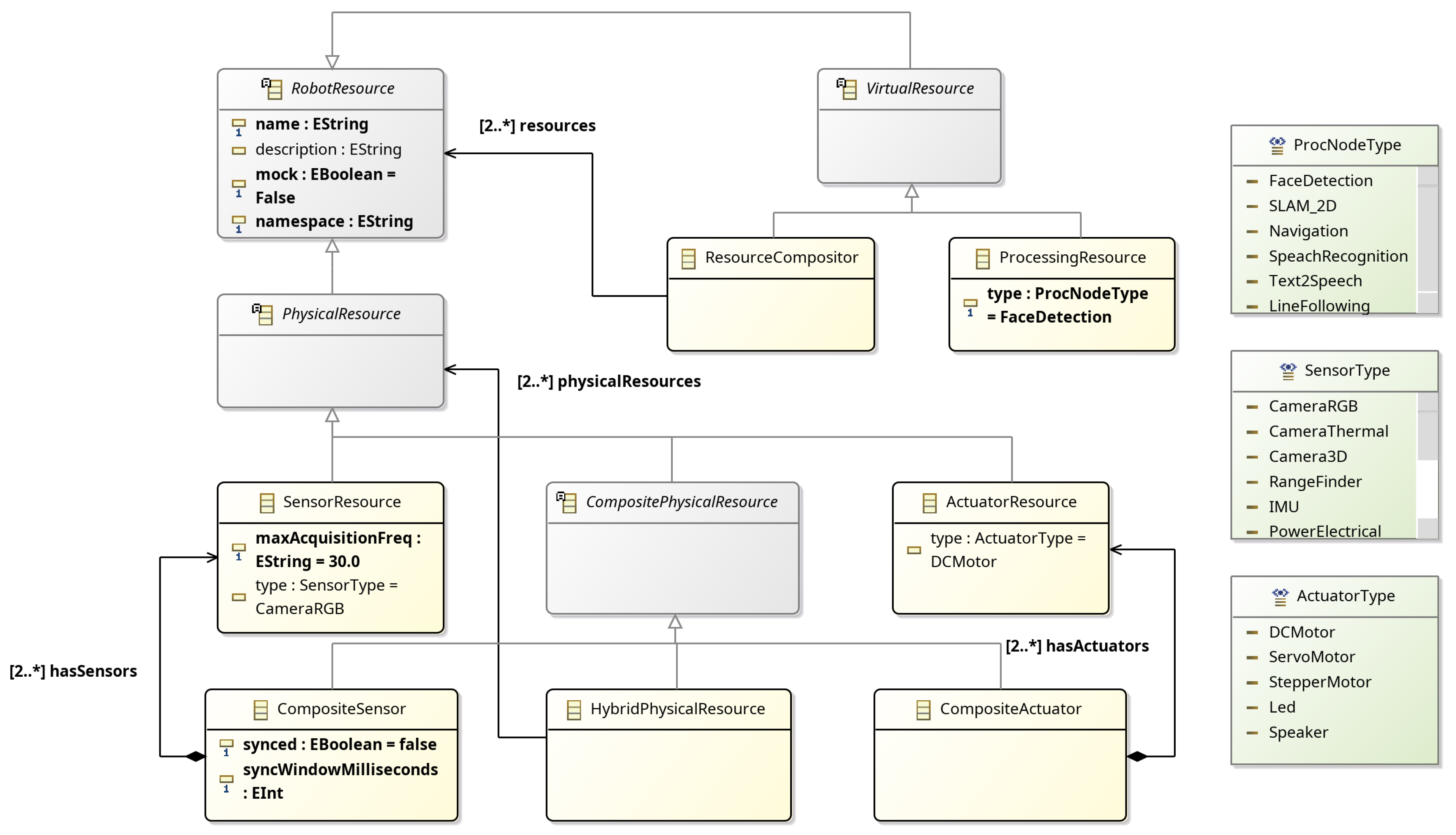

3.1.1. Robot Resource Abstraction

3.1.2. Rapid Development

3.1.3. Robotic Middleware Incorporation

3.1.4. Remote Execution

3.1.5. Multi-Robot Support

3.1.6. Enhanced Connectivity

3.1.7. Robot Runtime and Persistent Memory

3.1.8. Extendability

3.1.9. Deployment Flexibility

3.2. Resource Abstraction

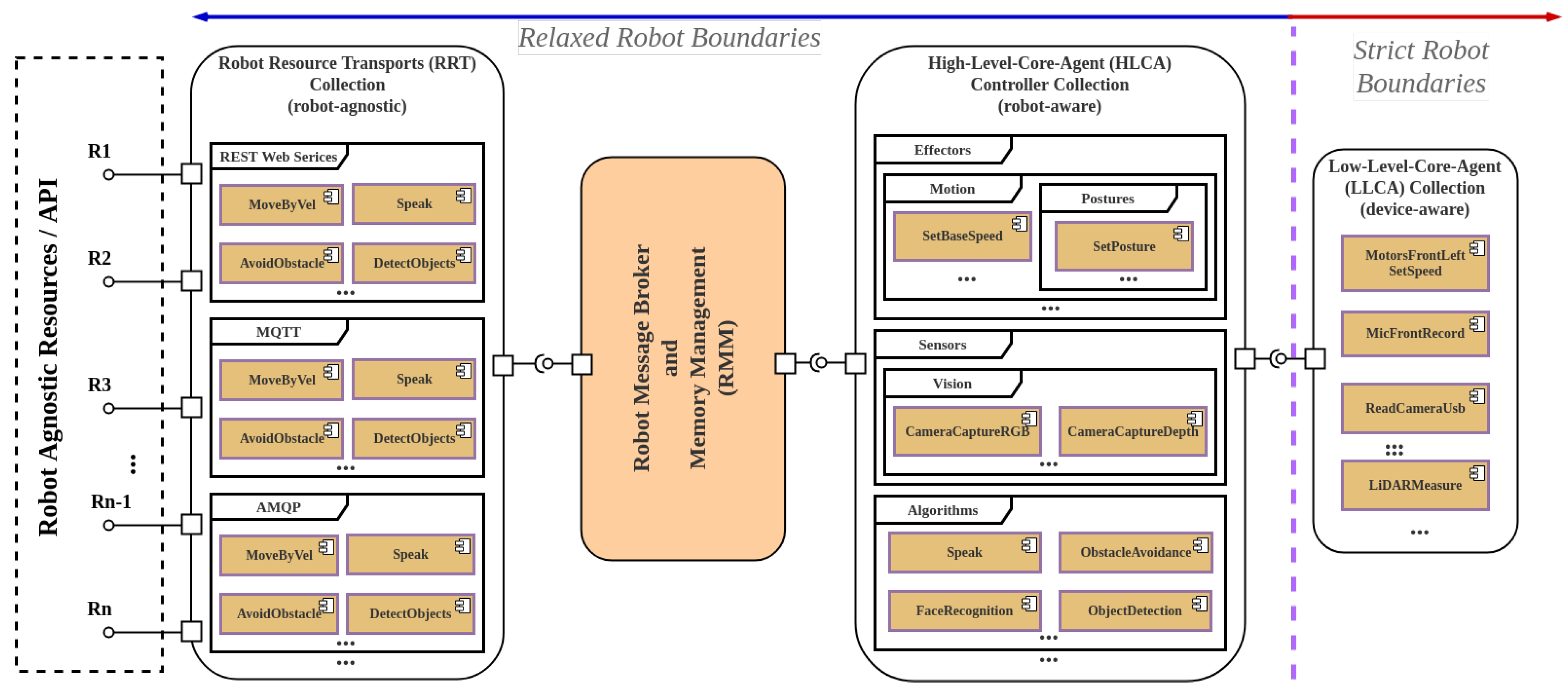

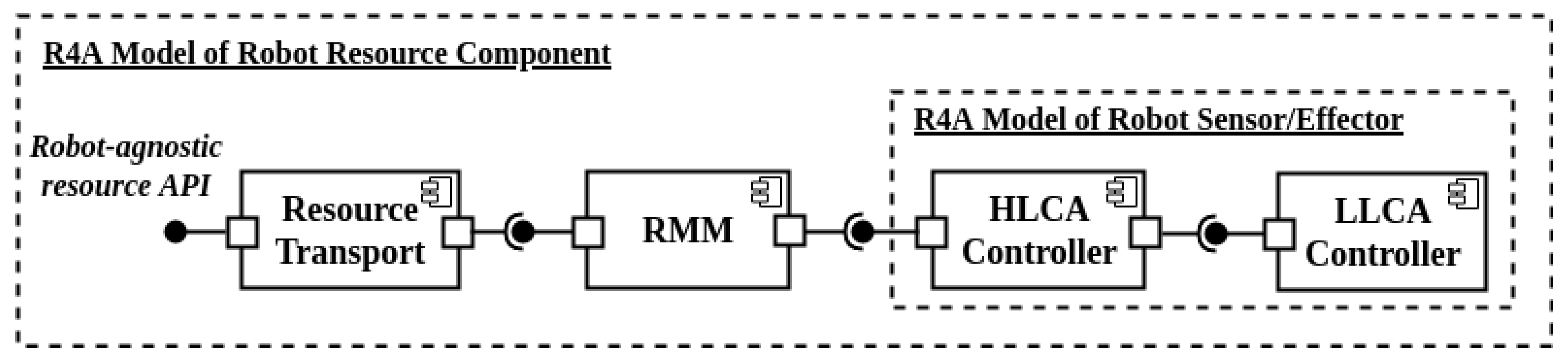

3.3. Resource-Oriented Architectural Approach

3.3.1. LLCA—Low Level Core Agent

3.3.2. HLCA—High Level Core Agent

3.3.3. RMM—Robot Message Broker and Memory Management

3.3.4. RRT—Robot Resource Transport

3.3.5. RAPI—Robot API

3.4. R4A Robotic Resources as Components

3.5. Rapid Development of Robotic Applications

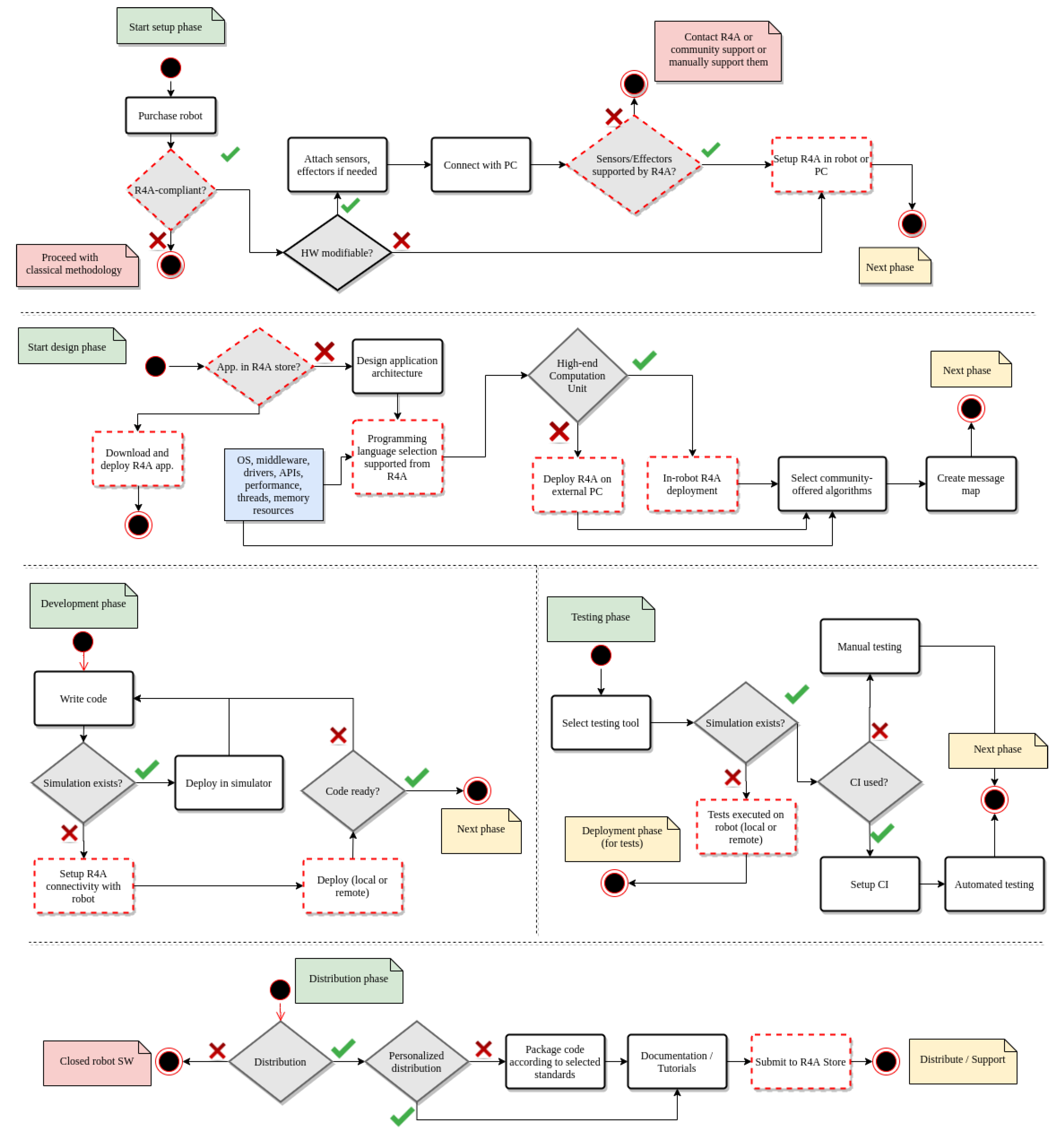

3.5.1. Setup Phase

3.5.2. Design Phase

3.5.3. Development and Testing Phases

3.5.4. Distribution Phase

4. Robot-Agnostic Application Example—A Case Study

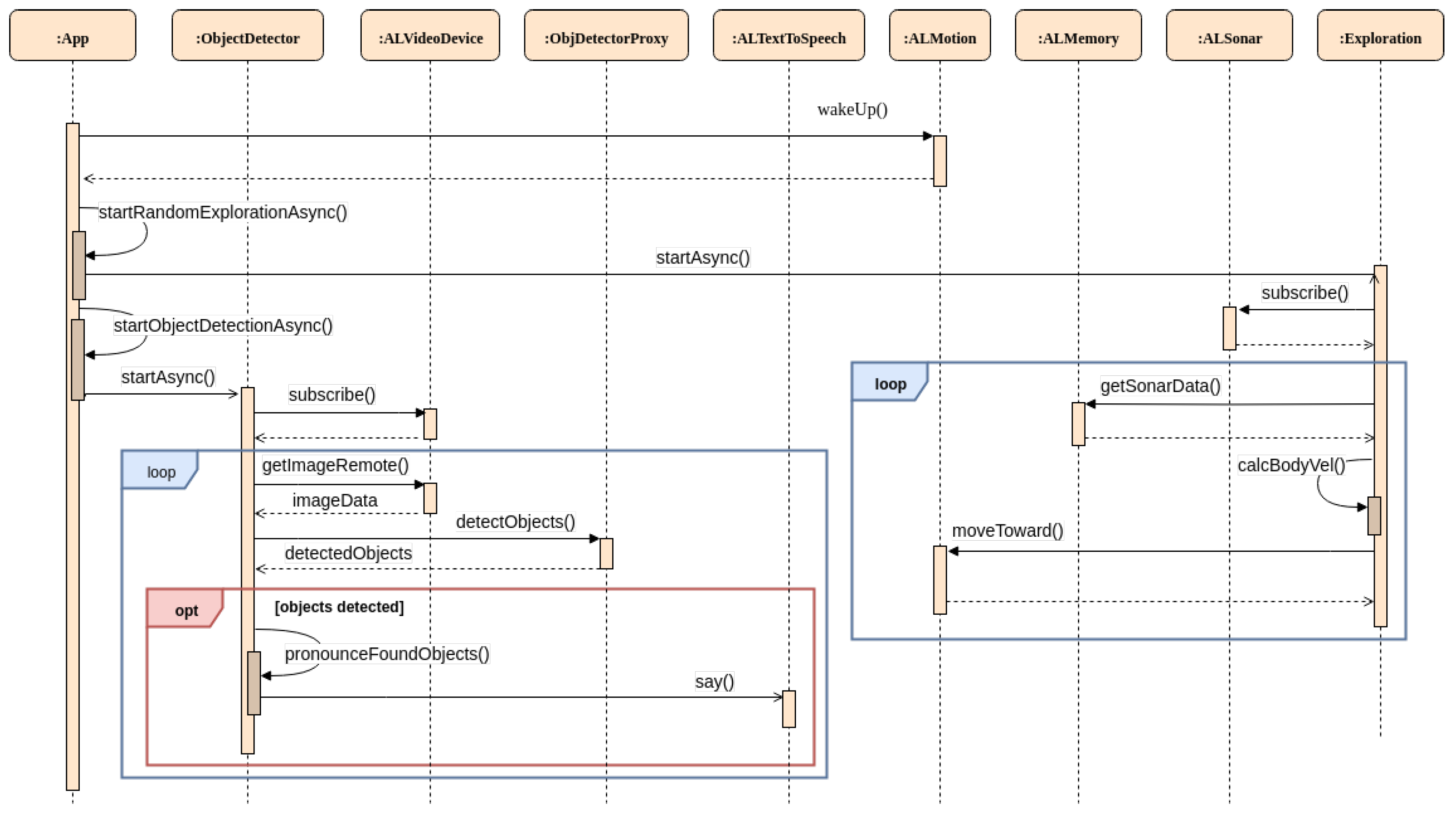

4.1. NAO Humanoid Robot—The Conventional Way

- ALMotionProxy::wakeUp: This is the initial command sent to the robot. It wakes the robot up: the motors are set to active, the robot is commanded to its initial (standing) position and, finally, the motor stiffness is set;

- ALMotionProxy::moveToward: This commands the robot to move at the given x, y and theta velocities. This operation affects the overall body of the robot and not individual motors/joints;

- ALTextToSpeech::say: This utilizes the in-robot speech synthesis engine, transforming plain text to audio data, and performs playback via the NAO embedded speakers. This operation is used to announce detected objects;

- ALVideoDevice: This module provides image streams obtained from the robot’s embedded front camera. It is used to obtain scene image frames to be subsequently sent to an object detector;

- ALSonar and ALMemory: The ALSonar module gives access to the ultrasonic sensor hardware and allows data acquisition to be started. In combination with the ALMemory module, it is possible to subscribe to sonar events and collect data as they become available.
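The call sequence described in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: to stay runnable without the NAOqi SDK, the proxies are replaced by the hypothetical stand-ins `FakeMotion` and `FakeSpeech`, which only record calls. The method names `wakeUp`, `moveToward` and `say` come from the list; the function `control_step`, the 0.5 m safety distance, the velocity values and the spoken phrase are assumptions made for illustration.

```python
class FakeMotion:
    """Hypothetical stand-in for the motion proxy; records calls instead of moving a robot."""

    def __init__(self):
        self.calls = []

    def wakeUp(self):
        self.calls.append(("wakeUp",))

    def moveToward(self, x, y, theta):
        self.calls.append(("moveToward", x, y, theta))


class FakeSpeech:
    """Hypothetical stand-in for the text-to-speech proxy; records the spoken text."""

    def __init__(self):
        self.spoken = []

    def say(self, text):
        self.spoken.append(text)


def control_step(motion, speech, sonar_distance_m, detected_labels, safe_distance_m=0.5):
    """One iteration: rotate away from a close obstacle, otherwise walk forward,
    then announce every detected object."""
    if sonar_distance_m < safe_distance_m:
        motion.moveToward(0.0, 0.0, 0.5)  # rotate in place (assumed value)
    else:
        motion.moveToward(0.5, 0.0, 0.0)  # walk forward (assumed value)
    for label in detected_labels:
        speech.say("I see a " + label)


motion, speech = FakeMotion(), FakeSpeech()
motion.wakeUp()  # initial command, as in the list above
control_step(motion, speech, sonar_distance_m=0.3, detected_labels=["cup"])
control_step(motion, speech, sonar_distance_m=1.2, detected_labels=[])
print(motion.calls)
print(speech.spoken)
```

On a real robot the stand-ins would be replaced by the corresponding NAOqi proxies, and the sonar distance and detected labels would come from ALMemory and from the object detector, respectively.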

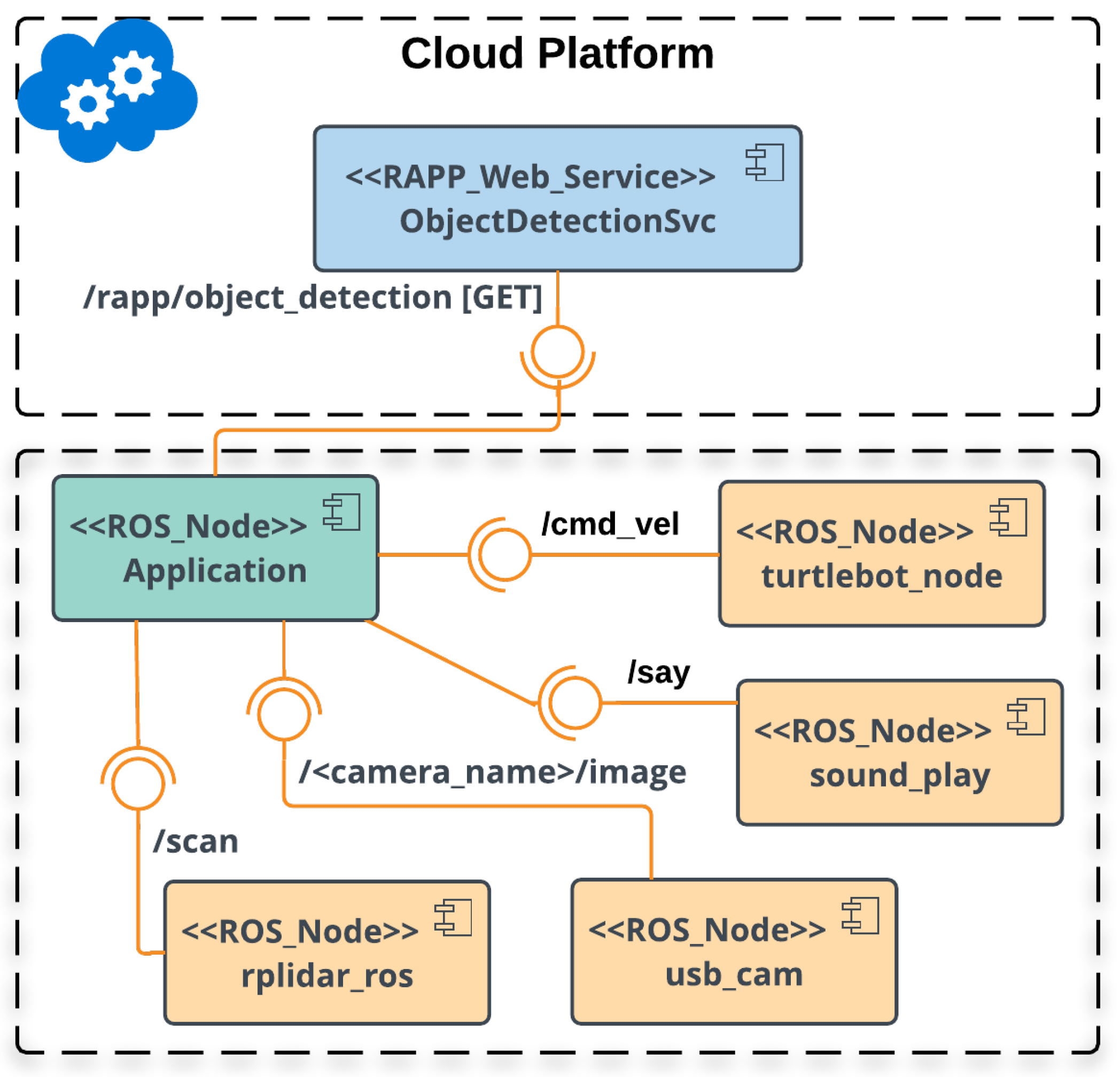

4.2. Turtlebot2—The Conventional Way

- A ROS subscriber that binds the LiDAR ROS topic to a callback, which will handle the data. This ROS topic is usually named;

- A ROS publisher to be used for setting velocities. The publisher must be bound to the topic and the velocities must be of type ;

- A callback function that will receive messages, compute the velocities and publish them in the topic;

- A ROS subscriber that binds the RGB camera topic to a callback ();

- A callback that will receive the published images from the camera topic in the form and implement a call to the ObjectDetection service of the R4A platform.
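The velocity-computing callback from the list above can be sketched in plain Python. This is a hedged illustration, not the authors' node: ROS itself is left out so that the logic runs anywhere, and the names `velocities_from_scan` and `ScanHandler`, the 0.5 m safety distance and the speed values are all assumptions. `publish` stands for whatever callable forwards the computed velocities to the velocity topic.

```python
import math
from typing import Callable, Sequence, Tuple


def velocities_from_scan(
    ranges: Sequence[float],
    safe_distance_m: float = 0.5,
    forward_speed: float = 0.2,
    turn_speed: float = 0.5,
) -> Tuple[float, float]:
    """Return (linear, angular) velocity for one LiDAR scan."""
    # Discard readings that are infinite, NaN or non-positive.
    valid = [r for r in ranges if math.isfinite(r) and r > 0.0]
    if not valid:
        return 0.0, 0.0  # no usable data: stop
    if min(valid) < safe_distance_m:
        return 0.0, turn_speed  # obstacle too close: rotate in place
    return forward_speed, 0.0  # path is clear: drive forward


class ScanHandler:
    """Pairs the scan callback with a publisher-like callable (hypothetical helper)."""

    def __init__(self, publish: Callable[[Tuple[float, float]], None]):
        self._publish = publish

    def on_scan(self, ranges: Sequence[float]) -> None:
        # Called once per incoming scan; computes and forwards the velocities.
        self._publish(velocities_from_scan(ranges))


published = []
handler = ScanHandler(published.append)
handler.on_scan([1.0, 2.0, float("inf")])
handler.on_scan([0.3, 2.0])
print(published)
```

Keeping the decision logic in a pure function separates it from the middleware-specific subscriber and publisher code, which makes it testable without a running robot or simulator.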

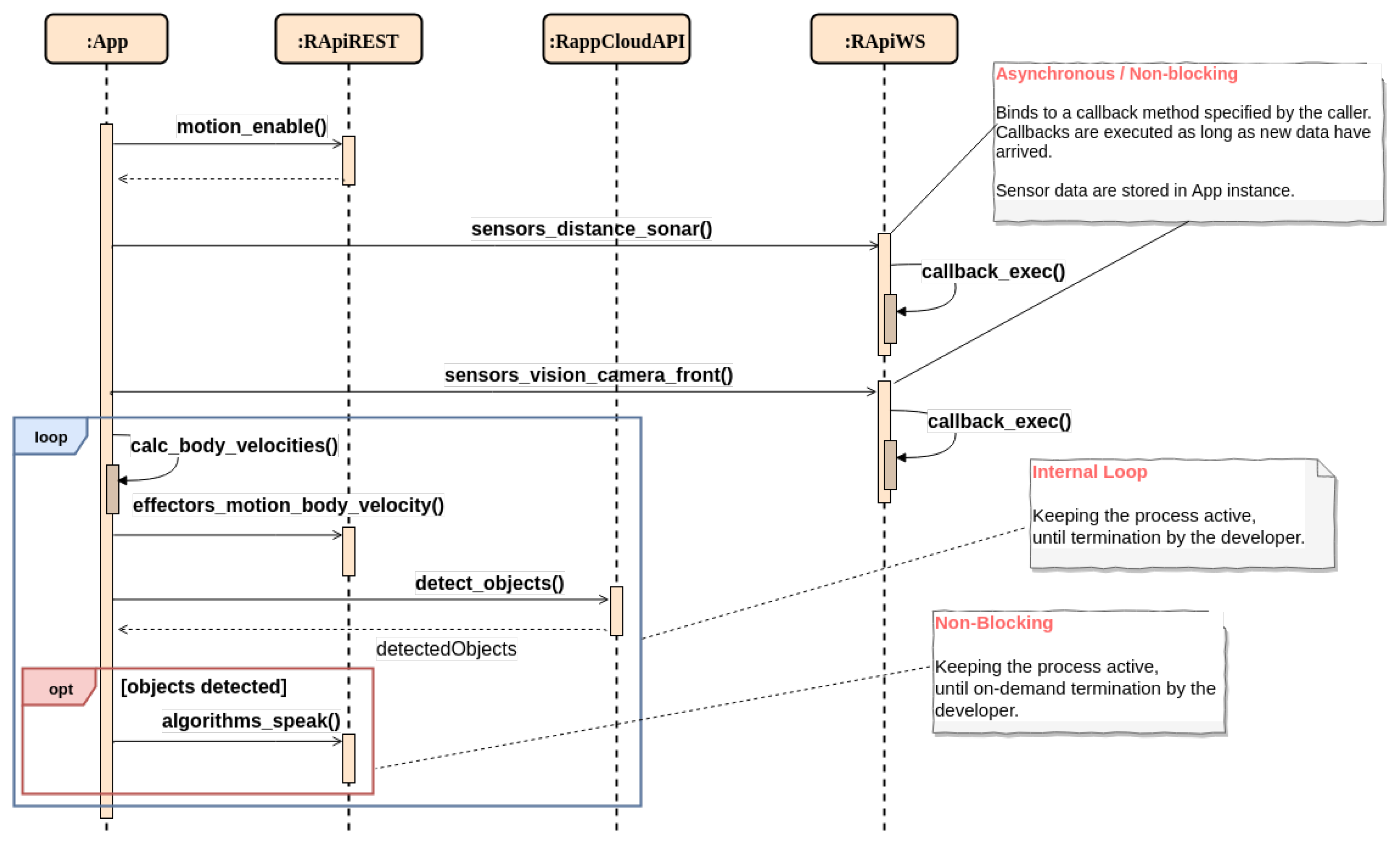

4.3. Robot-Agnostic Development of Applications

5. Discussion

5.1. Limitations

- Dependence on network availability;

- No support for real-time operation;

- Incomplete robot agnosticism;

- Continuous effort required to support robots;

- Extendability of robot resources.

5.2. Threats to Validity

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| R4A | Robotics4All |
| SW | Software |
| HW | Hardware |
| PC | Personal Computer |
| LLCA | Low-level Core Agent |
| HLCA | High-level Core Agent |
| RMM | Robot Message Broker and Memory Management |
| RRT | Robot Resource Transport |
| RAPI | Robot Application Interface |
| SLAM | Simultaneous Localization and Mapping |
| OS | Operating System |
| SDK | Software Development Kit |
| API | Application Programming Interface |
| CI | Continuous Integration |
| REST | Representational State Transfer |
| MDE | Model-Driven Engineering |
| QoS | Quality of Service |


| Model Class | Category | Examples |
|---|---|---|
| Sensors | Acoustic | Microphones |
| Sensors | Vision | RGB/Depth camera |
| Sensors | Distance | LiDAR, Sonar, IR, point cloud |
| Sensors | Chemical | CO, humidity |
| Sensors | Electric | Battery voltage, power consumption |
| Sensors | Navigation | Accelerometers, odometry |
| Sensors | Position | GPS, compasses |
| Sensors | Speed | Tachometers, IMU |
| Sensors | Pressure | Tactile sensors, bumpers |
| Sensors | Temperature | Temperature sensors |
| Effectors | Optical | LEDs, lights |
| Effectors | Motion | Motors, base, posture |
| Effectors | Acoustic | Speakers, beepers |
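The resource taxonomy in the table above maps naturally onto a nested dictionary. The sketch below is only an illustration of that idea and not the R4A data model: the names `RESOURCE_MODEL` and `find_resource` are hypothetical, and the entries are transcribed from the table.

```python
from typing import Dict, Optional, Tuple

# Model class -> category -> example resources, transcribed from the table.
RESOURCE_MODEL: Dict[str, Dict[str, Tuple[str, ...]]] = {
    "Sensors": {
        "Acoustic": ("Microphones",),
        "Vision": ("RGB/Depth camera",),
        "Distance": ("LiDAR", "Sonar", "IR", "point cloud"),
        "Chemical": ("CO", "humidity"),
        "Electric": ("Battery voltage", "power consumption"),
        "Navigation": ("Accelerometers", "odometry"),
        "Position": ("GPS", "compasses"),
        "Speed": ("Tachometers", "IMU"),
        "Pressure": ("Tactile sensors", "bumpers"),
        "Temperature": ("Temperature sensors",),
    },
    "Effectors": {
        "Optical": ("LEDs", "lights"),
        "Motion": ("Motors", "base", "posture"),
        "Acoustic": ("Speakers", "beepers"),
    },
}


def find_resource(example: str) -> Optional[Tuple[str, str]]:
    """Return (model class, category) for an example resource, ignoring case."""
    wanted = example.lower()
    for model_class, categories in RESOURCE_MODEL.items():
        for category, examples in categories.items():
            if wanted in (e.lower() for e in examples):
                return model_class, category
    return None  # resource not covered by the taxonomy


print(find_resource("lidar"))
print(find_resource("Speakers"))
```

Note that the "Acoustic" category appears under both model classes, which is why the lookup returns the model class together with the category.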

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Panayiotou, K.; Tsardoulias, E.; Zolotas, C.; Symeonidis, A.L.; Petrou, L. A Framework for Rapid Robotic Application Development for Citizen Developers. Software 2022, 1, 53-79. https://doi.org/10.3390/software1010004