Abstract

Proximal nested sampling was introduced recently to open up Bayesian model selection for high-dimensional problems such as computational imaging. The framework is suitable for models with a log-convex likelihood, which are ubiquitous in the imaging sciences. The purpose of this article is two-fold. First, we review proximal nested sampling in a pedagogical manner in an attempt to elucidate the framework for physical scientists. Second, we show how proximal nested sampling can be extended in an empirical Bayes setting to support data-driven priors, such as deep neural networks learned from training data.

1. Introduction

In many of the sciences, not only is one interested in estimating the parameters of an underlying model, but deciding which model is best among a number of alternatives is of critical scientific interest. Bayesian model comparison provides a principled approach to model selection [1] that has found widespread application in the sciences [2].

Bayesian model comparison requires computation of the model evidence:

$$\mathcal{Z} = p(y \mid M) = \int \mathcal{L}(x)\, \pi(x)\, \mathrm{d}x, \tag{1}$$

also called the marginal likelihood, where $y$ denotes data, $x$ parameters of interest, and $M$ the model under consideration. We adopt the shorthand notation $\mathcal{L}(x) = p(y \mid x, M)$ for the likelihood and $\pi(x) = p(x \mid M)$ for the prior. Evaluating the multi-dimensional integral of the model evidence is computationally challenging, particularly in high dimensions. While a number of highly successful approaches to computing the model evidence have been developed, such as nested sampling (e.g., [2,3,4,5,6,7,8]) and the learned harmonic mean estimator [9,10,11], previous approaches do not scale to the very high-dimensional settings of computational imaging, which is our driving motivation.

The proximal nested sampling framework was introduced recently by a number of authors of the current article in order to open up Bayesian model selection for high-dimensional imaging problems [12]. Proximal nested sampling is suitable for models for which the likelihood is log-convex, which are ubiquitous in the imaging sciences. By restricting the class of models considered, it is possible to exploit structure of the problem to enable computation in very high-dimensional settings of and beyond.

Proximal nested sampling draws heavily on convex analysis and proximal calculus. In this article we present a pedagogical review of proximal nested sampling, sacrificing some mathematical rigor in an attempt to provide greater accessibility. We also provide a concise review of convexity and proximal calculus to introduce the background underpinning the framework. We assume the reader is familiar with nested sampling, and hence we avoid repeating an introduction to nested sampling and instead refer the reader to other sources that provide excellent descriptions [2,3,8]. Finally, for the first time, we show in an empirical Bayes setting how proximal nested sampling can be extended to support data-driven priors, such as deep neural networks learned from training data.

2. Convexity and Proximal Calculus

We present a concise review of convexity and proximal calculus to introduce the background underpinning proximal nested sampling to make it more accessible.

2.1. Convexity

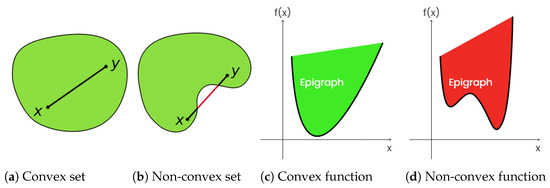

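Proximal nested sampling draws on convexity, key concepts of which are illustrated in Figure 1. A set $\mathcal{C}$ is convex if for any $x_1, x_2 \in \mathcal{C}$ and $\alpha \in (0, 1)$ we have $\alpha x_1 + (1 - \alpha) x_2 \in \mathcal{C}$. The epigraph of a function $f: \mathbb{R}^n \to \mathbb{R}$ is defined by $\mathrm{epi}(f) = \{(x, \gamma) \in \mathbb{R}^n \times \mathbb{R} \mid f(x) \leq \gamma\}$. The function $f$ is convex if and only if its epigraph is convex. A convex function is lower semicontinuous if its epigraph is closed (i.e., includes its boundary).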

Figure 1.

Proximal nested sampling considers likelihoods that are log-convex and lower semicontinuous. A lower semicontinuous convex function has a convex and closed epigraph.

2.2. Proximity Operator

Proximal nested sampling leverages proximal calculus [13,14], a key component of which is the proximity operator, or prox. The proximity operator of the function f with parameter is defined by

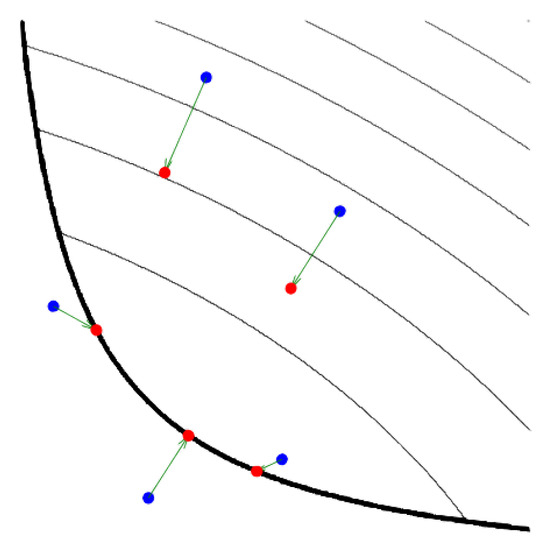

The proximity operator maps a point x towards the minimum of f while remaining in the proximity of the original point. The parameter controls how close the mapped point remains to x. An illustration is given in Figure 2.

Figure 2.

Illustration of the proximity operator (reproduced from [14]). The proximal operator maps the blue points to red points (i.e., from base to head of arrows). The thick black line defines the domain boundary, while the thin black lines define level sets (iso-contours) of f. The proximity operator maps points towards the minimum of f while remaining in the proximity of the original point.
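As a minimal numerical sketch (not part of the original work), the standard definition $\mathrm{prox}_f^\lambda(x) = \arg\min_u [f(u) + (u - x)^2/(2\lambda)]$ can be evaluated by brute force in one dimension. The example assumes $f(u) = |u|$, for which the prox is known in closed form as soft thresholding; the helper names `prox_grid` and `soft_threshold` are illustrative only.

```python
import numpy as np

def prox_grid(f, x, lam, grid):
    """Brute-force prox: minimise f(u) + (u - x)^2 / (2 lam) over a 1D grid."""
    objective = f(grid) + (grid - x) ** 2 / (2.0 * lam)
    return grid[np.argmin(objective)]

def soft_threshold(x, t):
    """Closed-form prox of |.| with parameter t (soft thresholding)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

grid = np.linspace(-5.0, 5.0, 200001)  # grid spacing of 5e-5
x = 2.0
for lam in (0.1, 0.5, 1.0, 3.0):
    numeric = prox_grid(np.abs, x, lam, grid)
    exact = soft_threshold(x, lam)
    print(f"lam={lam}: grid prox={numeric:.4f}, soft threshold={exact:.4f}")
```

Small values of the parameter keep the mapped point close to `x`, while large values move it all the way to the minimiser of $f$ at zero.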

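The proximity operator can be considered as a generalisation of the projection onto a convex set $\mathcal{C}$. Indeed, the projection operator $P_{\mathcal{C}}$ can be expressed as a prox by

$$P_{\mathcal{C}}(x) = \mathrm{prox}_{\chi_{\mathcal{C}}}(x), \tag{3}$$

with function $f$ given by the characteristic function $\chi_{\mathcal{C}}(x) = \infty$ if $x \notin \mathcal{C}$ and zero otherwise.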
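The following sketch illustrates this relationship for a set where the projection is known in closed form. It assumes the convex set is a Euclidean ball (our choice for illustration): since the characteristic function is infinite outside the set, minimising the prox objective reduces to finding the nearest point of the set, whatever the value of the prox parameter.

```python
import numpy as np

def project_l2_ball(x, centre, radius):
    """Euclidean projection of x onto the ball {u : ||u - centre||_2 <= radius}."""
    d = x - centre
    norm = np.linalg.norm(d)
    if norm <= radius:
        return x.copy()  # points already in the set are left unchanged
    return centre + radius * d / norm

rng = np.random.default_rng(0)
centre = np.zeros(3)
x_out = np.array([3.0, 4.0, 0.0])
p = project_l2_ball(x_out, centre, 1.0)

# The projection minimises ||u - x|| over the ball: compare with random feasible points.
u = rng.normal(size=(1000, 3))
u /= np.maximum(1.0, np.linalg.norm(u, axis=1, keepdims=True))  # force points inside the ball
closest_random = np.min(np.linalg.norm(u - x_out, axis=1))
print(p, np.linalg.norm(p - x_out), closest_random)
```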

2.3. Moreau–Yosida Regularisation

The final component required in the development of proximal nested sampling is Moreau–Yosida regularisation (e.g., [14]). The Moreau–Yosida envelope of a convex function $f$ with regularisation parameter $\lambda > 0$ is given by the infimal convolution:

$$f^\lambda(x) = \inf_u \left[ f(u) + \frac{\|u - x\|_2^2}{2\lambda} \right]. \tag{4}$$

The Moreau–Yosida envelope of a function can be interpreted as taking its convex conjugate, adding regularisation, before taking the conjugate again [14]. Consequently, it provides a smooth regularised approximation of $f$, which is very useful to enable the use of gradient-based computational algorithms (e.g., [15]).

The Moreau–Yosida envelope exhibits the following properties. First, $\lambda$ controls the degree of regularisation with $f^\lambda(x) \to f(x)$ as $\lambda \to 0$. Second, the gradient of the Moreau–Yosida envelope of $f$ can be computed through its prox by $\nabla f^\lambda(x) = (x - \mathrm{prox}_f^\lambda(x)) / \lambda$.
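Both properties can be checked numerically in a simple case. The sketch below assumes $f(u) = |u|$ (our choice for illustration), whose Moreau–Yosida envelope is the Huber function; it evaluates the envelope at the prox point, compares the prox-based gradient $(x - \mathrm{prox}(x))/\lambda$ with finite differences, and shows the envelope approaching $|x|$ as the regularisation parameter shrinks.

```python
import numpy as np

def soft_threshold(x, t):
    """Prox of |.| with parameter t."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def moreau_envelope_abs(x, lam):
    """Envelope of f(u) = |u|, evaluated at the minimiser u = prox(x)."""
    u = soft_threshold(x, lam)
    return np.abs(u) + (u - x) ** 2 / (2.0 * lam)

def grad_envelope_abs(x, lam):
    """Gradient of the envelope computed through the prox: (x - prox(x)) / lam."""
    return (x - soft_threshold(x, lam)) / lam

x = np.linspace(-3.0, 3.0, 601)
lam = 0.5
env = moreau_envelope_abs(x, lam)
grad = grad_envelope_abs(x, lam)
fd = np.gradient(env, x)  # finite-difference gradient for comparison
print("max |prox gradient - finite difference|:", np.max(np.abs(grad[1:-1] - fd[1:-1])))

# The gap between the envelope and |x| shrinks with lam (it equals lam / 2 for |x| >= lam).
for l in (1.0, 0.1, 0.01):
    print(l, np.max(np.abs(moreau_envelope_abs(x, l) - np.abs(x))))
```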

3. Proximal Nested Sampling

The challenge of nested sampling in high-dimensional settings is to sample from the prior distribution subject to a hard likelihood constraint [2,3,8]. Proximal nested sampling addresses this challenge for the case of log-convex likelihoods, which are widespread in computational imaging problems. In this section we review the proximal nested sampling framework [12] in a pedagogical manner, sacrificing some mathematical rigor in an attempt to improve readability and accessibility.

3.1. Constrained Sampling Formulation

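Consider a prior $\pi(x)$ and likelihood $\mathcal{L}(x)$, where the negative log-likelihood $-\log \mathcal{L}(x)$ is a convex lower semicontinuous function. The log-prior need only be differentiable or convex (it need not be convex if it is differentiable).

We consider sampling from the prior $\pi(x)$, such that $\mathcal{L}(x) > L^*$ for some likelihood value $L^*$. Let $\iota_{L^*}(x)$ and $\chi_{L^*}(x)$ be the indicator function and characteristic function corresponding to this constraint, respectively, defined as

$$\iota_{L^*}(x) = \begin{cases} 1, & \mathcal{L}(x) > L^*, \\ 0, & \text{otherwise}, \end{cases} \qquad \chi_{L^*}(x) = \begin{cases} 0, & \mathcal{L}(x) > L^*, \\ +\infty, & \text{otherwise}. \end{cases} \tag{5}$$

Since log is monotonic, $\mathcal{L}(x) > L^*$ is equivalent to $-\log \mathcal{L}(x) < \tau$ for $\tau = -\log L^*$. Explicitly define the convex set of the likelihood constraint by $\mathcal{B}_\tau = \{x \mid -\log \mathcal{L}(x) < \tau\}$. Then, $\chi_{L^*}(x)$ is equivalent to $\chi_{\mathcal{B}_\tau}(x)$, where $\chi_{\mathcal{B}_\tau}(x) = \infty$ if $x \notin \mathcal{B}_\tau$ and zero otherwise.

Let $\pi_{L^*}(x) = \pi(x)\, \iota_{L^*}(x)$ represent the prior distribution with the hard likelihood constraint $\mathcal{L}(x) > L^*$. Since $\iota_{L^*}(x) = \exp(-\chi_{L^*}(x))$, then we have

$$-\log \pi_{L^*}(x) = -\log \pi(x) + \chi_{\mathcal{B}_\tau}(x). \tag{6}$$

To sample from the constrained prior we require sampling techniques that firstly can scale to high-dimensional settings and that secondly can support the convex constraint $\mathcal{B}_\tau$.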

3.2. Langevin MCMC Sampling

Langevin Markov chain Monte Carlo (MCMC) sampling has been demonstrated to be highly effective at sampling in high-dimensional settings by exploiting gradient information [15,16]. The Langevin stochastic differential equation associated with distribution $p(x)$ is a stochastic process defined by

$$\mathrm{d}x(t) = \frac{1}{2} \nabla \log p(x(t))\, \mathrm{d}t + \mathrm{d}w(t), \tag{7}$$

where $w(t)$ is Brownian motion. This process converges to $p(x)$ as time $t$ increases and is therefore useful for generating samples from $p(x)$. In practice, we compute a discrete-time approximation of $x(t)$ by the conventional Euler–Maruyama discretisation:

$$x^{(k+1)} = x^{(k)} + \frac{\delta}{2} \nabla \log p(x^{(k)}) + \sqrt{\delta}\, w^{(k+1)}, \tag{8}$$

where $w^{(k)}$ is a sequence of standard Gaussian random variables and $\delta$ is a step size.

Equation (8) provides a strategy for sampling in high dimensions. However, notice that the updates rely on the score of the target distribution, $\nabla \log p(x)$. Nominally the target distribution must therefore be differentiable, which is not the case for our target of interest given by Equation (6). The prior may or may not be differentiable but the likelihood constraint certainly is not. Proximal versions of Langevin sampling have been developed to address the setting where the distribution is log-convex but not necessarily differentiable [15,16]. We follow a similar approach.
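To make the discretised update concrete, the following is a minimal sketch of the unadjusted Langevin iteration $x \leftarrow x + (\delta/2)\, \nabla \log p(x) + \sqrt{\delta}\, w$ for a differentiable target. It assumes a two-dimensional Gaussian target with a known score; the function name `ula_chain`, the step size, and the chain length are illustrative choices rather than settings from the original work.

```python
import numpy as np

def ula_chain(score, x0, step, n_steps, rng):
    """Unadjusted Langevin chain: x <- x + (step / 2) * score(x) + sqrt(step) * w."""
    x = np.array(x0, dtype=float)
    samples = np.empty((n_steps,) + x.shape)
    for k in range(n_steps):
        w = rng.standard_normal(x.shape)  # standard Gaussian innovation
        x = x + 0.5 * step * score(x) + np.sqrt(step) * w
        samples[k] = x
    return samples

# Assumed toy target N(mu, s^2 I), whose score is -(x - mu) / s^2.
mu, s = 1.0, 2.0
score = lambda x: -(x - mu) / s**2

rng = np.random.default_rng(1)
samples = ula_chain(score, x0=np.zeros(2), step=0.1, n_steps=50000, rng=rng)
burned = samples[5000:]  # discard burn-in
print("mean:", burned.mean(axis=0), "std:", burned.std(axis=0))
```

The chain recovers the target moments only approximately, since the discretisation introduces a bias that grows with the step size.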

3.3. Proximal Nested Sampling Framework

The proximal nested sampling framework follows by taking the constrained sampling formulation of Equation (6), adopting Langevin MCMC sampling of Equation (8), and applying Moreau–Yosida regularisation of Equation (4) to the convex constraint to yield a differentiable target. This strategy yields (see [12]) the update equation:

where is the step size and is the Moreau–Yosida regularisation parameter.

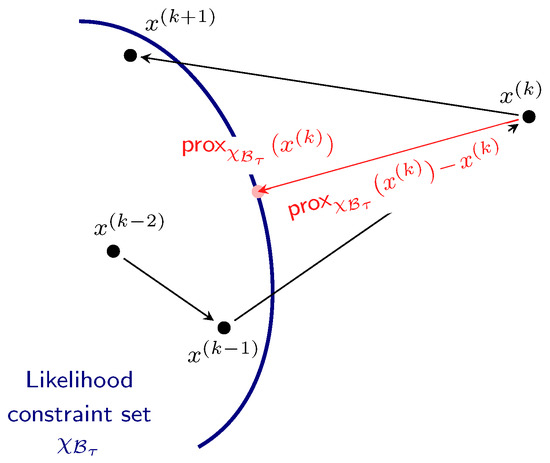

Further intuition regarding proximal nested sampling can be gained by examining the term , together with Figure 3. The vector points from the sample to its projection onto the likelihood constraint. If the sample is already in the likelihood-restricted prior support , i.e., , the term disappears and the Markov chain iteration simply involves the standard Langevin MCMC update. In contrast, if is not in , i.e., , then a step is taken in the direction , which acts to move the next iteration of the Markov chain in the direction of the projection of onto the convex set . This term therefore acts to push the Markov chain back into the constraint set if it wanders outside of it. (Note that proximal nested sampling has some similarity with Galilean [17] and constrained Hamiltonian [18] nested sampling. In these approaches, Makov chains are also considered and if the Markov chain steps outside of the likelihood-constraint then it is reflected by an approximation of the shape of the boundary.)

Figure 3.

Diagram illustrating proximal nested sampling. If a sample $x^{(k)}$ outside of the likelihood constraint is considered, then proximal nested sampling introduces a term in the direction of the projection of $x^{(k)}$ onto the convex set defining the likelihood constraint, thereby acting to push the Markov chain back into the constraint set if it wanders outside of it. A subsequent Metropolis–Hastings step can be introduced to enforce strict adherence to the convex likelihood constraint.
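The behaviour described above can be sketched in a toy setting. The example below assumes a standard Gaussian prior (score $-x$) and a Gaussian likelihood with identity measurement operator, so that the likelihood constraint is a Euclidean ball around the data and its projection is available in closed form. The function names, the step size, and the regularisation parameter are illustrative assumptions, and the Metropolis–Hastings correction is omitted for brevity.

```python
import numpy as np

def project_ball(x, centre, radius):
    """Projection onto {u : ||u - centre||_2 <= radius}."""
    d = x - centre
    n = np.linalg.norm(d)
    return x.copy() if n <= radius else centre + radius * d / n

def prox_ns_step(x, score_prior, project, delta, lam, w):
    """One update: Langevin drift on the prior plus a pull towards the constraint set."""
    pull = -(delta / (2.0 * lam)) * (x - project(x))  # zero when x is inside the set
    return x + 0.5 * delta * score_prior(x) + pull + np.sqrt(delta) * w

# Assumed toy problem: ||y - x||^2 / (2 sigma^2) < tau is a ball of radius sqrt(2 sigma^2 tau).
y, sigma, tau = np.array([2.0, 0.0]), 1.0, 0.5
radius = np.sqrt(2.0 * sigma**2 * tau)
score_prior = lambda x: -x
project = lambda x: project_ball(x, y, radius)

rng = np.random.default_rng(2)
delta, lam = 1e-2, 5e-2  # illustrative values only
x, inside, n_steps = y.copy(), 0, 20000
for _ in range(n_steps):
    x = prox_ns_step(x, score_prior, project, delta, lam, rng.standard_normal(2))
    inside += int(np.linalg.norm(x - y) <= radius)
print("fraction of iterates inside the constraint set:", inside / n_steps)
```

Because the constraint is only enforced softly, some iterates fall outside the set, which is why a subsequent accept/reject step is needed to enforce the constraint strictly.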

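We have so far assumed that the (log) prior is differentiable (see Equation (9)). This may not be the case, as is typical for sparsity-promoting priors (e.g., $-\log \pi(x) = \mu \|\Psi^\dagger x\|_1 + \text{const.}$ for some wavelet dictionary $\Psi$ and regularisation parameter $\mu$). Then, we make a Moreau–Yosida approximation of the log-prior, yielding the update equation:

$$x^{(k+1)} = x^{(k)} - \frac{\delta}{2\lambda} \left[ x^{(k)} - \mathrm{prox}^{\lambda}_{-\log \pi}(x^{(k)}) \right] - \frac{\delta}{2\lambda} \left[ x^{(k)} - \mathrm{prox}_{\chi_{\mathcal{B}_\tau}}(x^{(k)}) \right] + \sqrt{\delta}\, w^{(k+1)}. \tag{10}$$

For notational simplicity here we have adopted the same regularisation parameter $\lambda$ for each Moreau–Yosida approximation.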

With the current formulation we are not guaranteed to recover samples from the prior subject to the hard likelihood constraint due to the approximation introduced in the Moreau–Yosida regularisation and due to the approximation in discretising the underlying Langevin stochastic differential equation. We therefore introduce a Metropolis–Hastings correction step to eliminate the bias introduced by these approximations and ensure convergence to the required target density (see [12] for further details).

Finally, we adopt this strategy for sampling from the constrained prior in the standard nested sampling strategy to recover the proximal nested sampling framework. The algorithm can be initialised with samples from the prior as described by the update equations above but with the likelihood term removed (i.e., taking the constraint set to be the whole parameter space).
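The way a constrained sampler slots into the standard nested sampling loop can be sketched as follows. This is a generic nested sampling skeleton, not the ProxNest implementation: `sample_constrained` is a hypothetical placeholder for the constrained-prior sampler, which in proximal nested sampling would be the proximal Langevin chain. To keep the example verifiable, we assume a one-dimensional toy problem (uniform prior on [0, 1], likelihood $\exp(-x/s)$) where the constrained prior can be sampled exactly and the evidence is known analytically.

```python
import numpy as np

def nested_sampling(log_like, sample_prior, sample_constrained, n_live, n_iter, rng):
    """Standard nested sampling loop; returns an estimate of the log-evidence.

    sample_constrained(log_l_star, rng) must return a prior draw satisfying
    log_like(x) > log_l_star.
    """
    live = [sample_prior(rng) for _ in range(n_live)]
    live_logl = np.array([log_like(x) for x in live])
    log_z = -np.inf
    x_prev = 1.0  # enclosed prior volume before any compression
    for i in range(1, n_iter + 1):
        worst = int(np.argmin(live_logl))  # lowest-likelihood live point becomes a dead point
        x_cur = np.exp(-i / n_live)        # expected prior-volume shrinkage
        log_z = np.logaddexp(log_z, live_logl[worst] + np.log(x_prev - x_cur))
        live[worst] = sample_constrained(live_logl[worst], rng)
        live_logl[worst] = log_like(live[worst])
        x_prev = x_cur
    # Add the contribution of the remaining live points.
    log_mean_l = np.log(np.mean(np.exp(live_logl)))
    return np.logaddexp(log_z, np.log(x_prev) + log_mean_l)

# Assumed toy problem with exact constrained sampling: log L(x) > l* is equivalent to x < -s l*.
s = 0.1
log_like = lambda x: -x / s
sample_prior = lambda rng: rng.uniform(0.0, 1.0)
sample_constrained = lambda log_l_star, rng: rng.uniform(0.0, -s * log_l_star)

rng = np.random.default_rng(5)
log_z = nested_sampling(log_like, sample_prior, sample_constrained,
                        n_live=500, n_iter=6000, rng=rng)
log_z_true = np.log(s * (1.0 - np.exp(-1.0 / s)))
print("estimated log-evidence:", log_z, "analytic:", log_z_true)
```

In a high-dimensional imaging problem only `sample_constrained` changes; the bookkeeping of dead points and prior volumes is unaffected.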

3.4. Explicit Forms of Proximal Nested Sampling

While we have discussed the general framework for proximal nested sampling, we have yet to address the issue of computing the proximity operators involved. As Equation (2) demonstrates, computing proximity operators involves solving an optimisation problem. Only in certain cases are closed form solutions available [13]. Explicit forms of proximal nested sampling must therefore be considered for the problem at hand.

We focus on a common high-dimensional inverse imaging problem where we acquire noisy observations, $y = \Phi x + n$, of an underlying image $x$ via some measurement model $\Phi$, in the presence of Gaussian noise $n$ (without loss of generality we consider independent and identically distributed noise here). We consider a Gaussian negative log-likelihood, $-\log \mathcal{L}(x) = \|y - \Phi x\|_2^2 / (2\sigma^2) + \text{const.}$ (with $\sigma$ the noise standard deviation), and a sparsity-promoting prior, $-\log \pi(x) = \mu \|\Psi^\dagger x\|_1 + \text{const.}$, for some wavelet dictionary $\Psi$. The prox of the prior can be computed in closed form by [13]

$$\mathrm{prox}^{\lambda}_{-\log \pi}(x) = x + \Psi \left( \mathrm{soft}_{\lambda\mu}(\Psi^\dagger x) - \Psi^\dagger x \right), \tag{11}$$

where $\mathrm{soft}_{\lambda\mu}(\cdot)$ is the soft thresholding function with threshold $\lambda\mu$ (recall $\mu$ is the scale of the sparsity-promoting prior, i.e., the regularisation parameter, defined above). However, the prox of the likelihood is not so straightforward. The prox for the likelihood can be recast as a saddle-point problem that can be solved iteratively by a primal dual method initialised by the current sample position (see [12] for further details).

Combining these algorithms to efficiently compute prox operators with the proximal nested sampling framework, we can compute the model evidence to perform Bayesian model comparison in high-dimensional settings. We can also obtain posterior distributions with the usual weighted samples from the dead points of nested sampling. This allows one to recover, for example, point estimates such as the posterior mean image.
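The closed-form prox of the sparsity-promoting prior can be illustrated with a short sketch. We assume an orthonormal dictionary, for which the prox of $\mu \|\Psi^\dagger x\|_1$ with parameter $\lambda$ takes the form $x + \Psi(\mathrm{soft}_{\lambda\mu}(\Psi^\dagger x) - \Psi^\dagger x)$; a random orthonormal matrix stands in for a wavelet basis here, and the parameter values are arbitrary. The check confirms that no nearby point achieves a lower value of the prox objective.

```python
import numpy as np

def soft(v, t):
    """Element-wise soft thresholding with threshold t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def prox_l1_dictionary(x, Psi, lam, mu):
    """Prox of mu * ||Psi^T x||_1 with parameter lam, assuming Psi is orthonormal."""
    coeffs = Psi.T @ x
    return x + Psi @ (soft(coeffs, lam * mu) - coeffs)

rng = np.random.default_rng(3)
n = 8
Psi, _ = np.linalg.qr(rng.standard_normal((n, n)))  # random orthonormal stand-in for a wavelet basis
x = rng.standard_normal(n)
lam, mu = 0.3, 2.0  # arbitrary illustrative values
p = prox_l1_dictionary(x, Psi, lam, mu)

def objective(u):
    """Prox objective: mu ||Psi^T u||_1 + ||u - x||^2 / (2 lam)."""
    return mu * np.sum(np.abs(Psi.T @ u)) + np.sum((u - x) ** 2) / (2.0 * lam)

# The prox should not be beaten by nearby perturbations.
trials = p + 1e-2 * rng.standard_normal((500, n))
best_trial = min(objective(u) for u in trials)
print("objective at prox:", objective(p), "best perturbed value:", best_trial)
```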

4. Deep Data-Driven Priors

While hand-crafted priors, such as wavelet-based sparsity-promoting priors, are common in computational imaging, they provide only limited expressivity. If example images are available, an empirical Bayes approach with data-driven priors can be taken, where the prior is learned from training data. Since proximal nested sampling requires only the log-likelihood to be convex, complex data-driven priors, such as those represented by deep neural networks, can be integrated into the framework. Through Tweedie’s formula we describe how proximal nested sampling can be adapted to support data-driven priors, opening up Bayesian model selection for data-driven approaches. We take a similar approach to [19], where data-driven priors are integrated into Langevin MCMC sampling strategies, although in that work model selection is not considered.

4.1. Tweedie’s Formula and Data-Driven Priors

Tweedie’s formula is a remarkable result in Bayesian estimation credited to personal correspondence with Maurice Kenneth Tweedie [20]. Tweedie’s formula has gained renewed interest in recent years [19,21,22,23] due to its connection to score matching [24,25,26] and denoising diffusion models [27,28], which, as of this writing, provide state-of-the-art performance in deep generative modelling.

Tweedie’s result follows by considering the following scenario. Consider $x$ sampled from a prior distribution $q(x)$ and noisy observations $z \sim \mathcal{N}(x, \sigma^2 I)$. Tweedie’s formula gives the posterior expectation of $x$ given $z$ as

$$\mathbb{E}(x \mid z) = z + \sigma^2 \nabla \log p(z), \tag{12}$$

where $p(z)$ is the marginal distribution of $z$ (for further details see, e.g., [21]). The critical advantage of Tweedie’s formula is that it does not require knowledge of the underlying distribution $q(x)$ but rather only the marginalised distribution of the observation. Equation (12) can be interpreted as a denoising strategy to estimate $x$ from noisy observations $z$. Moreover, Tweedie’s formula can also be used to relate a denoiser (potentially a trained deep neural network) to the score $\nabla \log p(z)$.
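Tweedie's formula, $\mathbb{E}(x \mid z) = z + \sigma^2 \nabla \log p(z)$ for Gaussian noise of variance $\sigma^2$, can be verified numerically in a case where everything is available analytically. The sketch below assumes a one-dimensional zero-mean Gaussian prior with standard deviation `s` (our choice for illustration), so that the marginal of $z$ is Gaussian with variance $s^2 + \sigma^2$, and compares the formula with an empirical conditional mean.

```python
import numpy as np

rng = np.random.default_rng(4)
s, sigma = 2.0, 1.0                    # assumed prior and noise standard deviations
x = s * rng.standard_normal(200000)    # x ~ N(0, s^2)
z = x + sigma * rng.standard_normal(x.size)

def marginal_score(z):
    """Score of the marginal p(z) = N(0, s^2 + sigma^2)."""
    return -z / (s**2 + sigma**2)

def tweedie_denoise(z):
    """Posterior mean of x given z via Tweedie's formula."""
    return z + sigma**2 * marginal_score(z)

# Empirical conditional mean of x for observations in a narrow bin around z0.
z0 = 1.5
in_bin = np.abs(z - z0) < 0.05
print("empirical E[x | z ~ z0]:", x[in_bin].mean(), "Tweedie:", tweedie_denoise(z0))

# Denoising with the formula reduces the mean squared error relative to the raw observation.
print("MSE noisy:", np.mean((z - x) ** 2), "MSE denoised:", np.mean((tweedie_denoise(z) - x) ** 2))
```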

In a data-driven setting, where the underlying prior is implicitly specified by training data (which are considered to be samples from the prior), there is no guarantee that the underlying prior, and therefore the posterior, is well-suited for gradient-based Bayesian computation such as Langevin sampling; e.g., it may not be differentiable. Therefore, we consider a regularised version of the prior defined by Gaussian smoothing:

$$\pi_\epsilon(x) = (2\pi\epsilon)^{-n/2} \int \exp\left( -\|x - x'\|_2^2 / (2\epsilon) \right) \pi(x')\, \mathrm{d}x', \tag{13}$$

where $n$ is the dimension of $x$ and $\epsilon$ is the variance of the smoothing kernel. This regularisation can also be viewed as adding a small amount of regularising Gaussian noise. We can therefore leverage Tweedie’s formula to relate the regularised prior distribution to a denoiser $D_\epsilon$ trained to recover $x$ from noisy observations $x_\epsilon \sim \mathcal{N}(x, \epsilon I)$; i.e., the score of the regularised prior can be related to the denoiser by

$$\nabla \log \pi_\epsilon(x) = \epsilon^{-1} \left( D_\epsilon(x) - x \right). \tag{14}$$

Denoisers are commonly integrated into proximal optimisation algorithms in place of proximity operators, giving rise to so-called plug-and-play (PnP) methods [29,30], and, more recently, into Bayesian computational algorithms [19]. Typically, denoisers are represented by deep neural networks, which can be trained by injecting a small amount of noise in training data and learning to denoise the corrupted data. While a noise level needs to be chosen, as discussed above, this is considered a regularisation of the prior and so the denoiser need not be trained on the noise level of a problem at hand. In this manner, the same denoiser can be used for multiple subsequent problems (hence the PnP name). The learned score of the regularised prior inherits the same properties as the denoiser, such as smoothness, and hence the denoiser should be considered carefully. Well-behaved denoisers have been considered already in PnP methods (in order to provide convergence guarantees) and a popular approach for imaging problems is the DnCNN model [30], which is based on a deep convolutional neural network and is (Lipschitz) continuous.

4.2. Proximal Nested Sampling with Data-Driven Priors

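By Tweedie’s formula, the standard proximal nested sampling update of Equation (9) can be revised to integrate a learned denoiser, yielding

$$x^{(k+1)} = x^{(k)} - \frac{\alpha\delta}{2\epsilon} \left[ x^{(k)} - D_\epsilon(x^{(k)}) \right] - \frac{\delta}{2\lambda} \left[ x^{(k)} - \mathrm{prox}_{\chi_{\mathcal{B}_\tau}}(x^{(k)}) \right] + \sqrt{\delta}\, w^{(k+1)}, \tag{15}$$

where $D_\epsilon$ is the denoiser associated with noise level $\epsilon$ and we have included a regularisation parameter $\alpha$ that allows us to balance the influence of the prior and the data fidelity terms [19]. We typically consider a deep convolutional neural network based on the DnCNN model [30] since it is (Lipschitz) continuous and has been demonstrated to perform very well in PnP settings [19,30]. Again, this sampling strategy can then be integrated into the standard nested sampling framework.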

We can therefore support data-driven priors in the proximal nested sampling framework by integrating a deep denoiser that learns to denoise training data, using Tweedie’s formula to relate this to the score of a regularised data-driven prior.
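A minimal sketch of such an update is given below, with the prior score replaced by the denoiser residual $(D_\epsilon(x) - x)/\epsilon$. No neural network is used here: as a loudly stated assumption, the analytic MMSE denoiser of a zero-mean Gaussian prior stands in for a trained DnCNN, and the constraint set is a Euclidean ball. All function names and parameter values are hypothetical and chosen for illustration only.

```python
import numpy as np

def learned_prox_ns_step(x, denoiser, project, delta, lam, eps, alpha, w):
    """Langevin-type update with a denoiser-based prior term and a constraint pull."""
    prior_term = -(alpha * delta / (2.0 * eps)) * (x - denoiser(x))
    constraint_term = -(delta / (2.0 * lam)) * (x - project(x))
    return x + prior_term + constraint_term + np.sqrt(delta) * w

# Stand-in denoiser (assumption): MMSE denoiser for a N(0, s2 I) prior at noise variance eps.
s2, eps = 1.0, 0.05
denoiser = lambda x: s2 / (s2 + eps) * x

def project_ball(x, centre, radius):
    """Projection onto {u : ||u - centre||_2 <= radius}."""
    d = x - centre
    n = np.linalg.norm(d)
    return x.copy() if n <= radius else centre + radius * d / n

y, radius = np.array([2.0, 0.0]), 1.0
project = lambda x: project_ball(x, y, radius)

delta, lam, alpha = 1e-3, 5e-3, 1.0  # illustrative values only
rng = np.random.default_rng(7)
x = y.copy()
for _ in range(5000):
    x = learned_prox_ns_step(x, denoiser, project, delta, lam, eps, alpha,
                             rng.standard_normal(2))
print("final iterate:", x)
```

For this stand-in denoiser the residual $(D_\epsilon(x) - x)/\epsilon$ equals $-x/(s^2 + \epsilon)$, the score of the Gaussian-smoothed prior, which makes the sketch easy to check by hand.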

5. Numerical Experiments

The new methodology presented allows us to perform Bayesian model comparison between a data-driven and hand-crafted prior (validation of proximal nested sampling in a setting where the ground truth can be computed directly has been performed already [12]). We consider a simple radio interferometric imaging reconstruction problem as an illustration. We assume the same observational model as Section 3.4, with white Gaussian noise giving a signal-to-noise ratio (SNR) of 15 dB. The measurement operator is a masked Fourier transform as a simple model of a radio interferometric telescope. The mask is built by randomly selecting of the Fourier coefficients. A Gaussian likelihood is used in both models. For the hand-crafted prior, we consider a sparsity-promoting prior using a Daubechies 6 wavelet dictionary. We base the data-driven prior on a DnCNN [30] model trained on galaxy images extracted from the IllustrisTNG simulations [31]. We also consider an IllustrisTNG galaxy simulation, not used in training, as the ground-truth test image. We generate samples following the proximal nested sampling strategies of Equations (10) and (15) for the hand-crafted and data-driven priors, respectively. Posterior inferences (e.g., posterior mean image) and the model evidence can then be computed from nested sampling samples in the usual manner. The step size is set to , the Moreau–Yosida regularisation parameter to , and the regularisation strength of the wavelet-based model to . We consider noise level and set the regularisation parameter of the data-driven prior to . For the nested sampling methods, the number of live and dead samples is set to and , respectively. For the Langevin sampling, we use a thinning factor of 20 and set the number of burn-in iterations to .
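For readers wishing to set up a comparable toy experiment, the observational model can be sketched as follows. This is not the ProxNest code: the image is random rather than a galaxy simulation, the fraction of retained Fourier coefficients is a placeholder value, and the SNR conventions used to set the noise level and to score a reconstruction are assumptions on our part.

```python
import numpy as np

def make_mask(shape, fraction, rng):
    """Boolean mask retaining a random `fraction` of the Fourier coefficients."""
    mask = np.zeros(shape, dtype=bool)
    n_keep = int(round(fraction * mask.size))
    mask.flat[rng.choice(mask.size, size=n_keep, replace=False)] = True
    return mask

def forward(x, mask):
    """Masked unitary 2D FFT: image -> retained Fourier coefficients."""
    return np.fft.fft2(x, norm="ortho")[mask]

def adjoint(y, mask):
    """Adjoint operator: zero-fill the unmeasured coefficients and invert the FFT."""
    grid = np.zeros(mask.shape, dtype=complex)
    grid[mask] = y
    return np.fft.ifft2(grid, norm="ortho")

def noise_std_for_snr(clean, snr_db):
    """Noise level giving 20 log10(||clean|| / (sqrt(M) sigma)) = snr_db (assumed convention)."""
    return np.linalg.norm(clean) / np.sqrt(clean.size) * 10.0 ** (-snr_db / 20.0)

def reconstruction_snr(x_true, x_hat):
    """Reconstruction SNR in dB: 20 log10(||x_true|| / ||x_true - x_hat||) (assumed convention)."""
    return 20.0 * np.log10(np.linalg.norm(x_true) / np.linalg.norm(x_true - x_hat))

rng = np.random.default_rng(6)
x_true = rng.random((32, 32))                          # stand-in test image
mask = make_mask(x_true.shape, fraction=0.5, rng=rng)  # placeholder fraction
clean = forward(x_true, mask)
sigma = noise_std_for_snr(clean, snr_db=15.0)
noise = sigma * (rng.standard_normal(clean.shape)
                 + 1j * rng.standard_normal(clean.shape)) / np.sqrt(2.0)
y = clean + noise
dirty = adjoint(y, mask).real                          # dirty reconstruction
snr_emp = 20.0 * np.log10(np.linalg.norm(clean) / np.linalg.norm(noise))
print("empirical data SNR (dB):", snr_emp)
print("dirty reconstruction SNR (dB):", reconstruction_snr(x_true, dirty))
```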

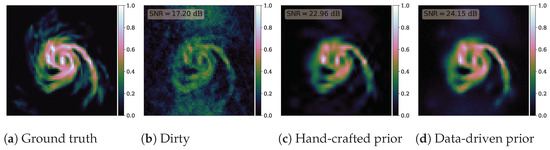

Results are presented in Figure 4. The data-driven prior results in a superior reconstruction with an improvement in SNR of dB, although it may be difficult to tell simply from visual inspection of the recovered images. Computing the SNR of the reconstructed images requires knowledge of the ground truth, which clearly is not accessible in realistic settings involving real observational data. The Bayesian model evidence, computed by proximal nested sampling, provides a way to compare the hand-crafted and data-driven models without requiring knowledge of the ground truth and is therefore applicable in realistic scenarios. We compute log evidences of for the hand-crafted prior and for the data-driven prior. Consequently, the data-driven model is preferred by the model evidence, which agrees with the SNR levels computed from the ground truth. These results are all as one might expect since learned data-driven priors are more expressive than hand-crafted priors and can better adapt to model high-dimensional images.

Figure 4.

Results of radio interferometric imaging reconstruction problem. (a) Ground truth galaxy image. (b) Dirty reconstruction based on pseudo-inverting the measurement operator. (c) Posterior mean reconstruction computed from proximal nested samples for the hand-crafted wavelet-sparsity prior. (d) Posterior mean reconstruction for the data-driven prior based on a deep neural network (DnCNN) trained on example images. Reconstruction SNR is shown on each image. The computed SNR levels demonstrate that the data-driven prior results in a superior reconstruction quality, although this may not be obvious from a visual assessment of the reconstructed images. Computing the reconstructed SNR requires knowledge of the ground truth, which is not available in realistic settings. The Bayesian model evidence provides a way to compare the hand-crafted and data-driven models without requiring knowledge of the ground truth. For this example, the Bayesian evidence correctly selects the data-driven prior as the best model.

6. Conclusions

Proximal nested sampling leverages proximal calculus to extend nested sampling to high-dimensional settings for problems involving log-convex likelihoods, which are ubiquitous in computational imaging. The purpose of this article is two-fold. First, we review proximal nested sampling in a pedagogical manner in an attempt to elucidate the framework for physical scientists. Second, we show how proximal nested sampling can be extended in an empirical Bayes setting to support data-driven priors, such as deep neural networks learned from training data. We show only preliminary results for learned proximal nested sampling and will present a more thorough study in a follow-up article.

Author Contributions

Conceptualization, J.D.M. and M.P.; methodology, J.D.M., X.C. and M.P.; software, T.I.L., M.A.P. and X.C.; validation, T.I.L., M.A.P. and X.C.; resources, J.D.M.; data curation, M.A.P.; writing—original draft preparation, J.D.M. and T.I.L.; writing—review and editing, J.D.M., T.I.L., M.A.P., X.C. and M.P.; supervision, J.D.M.; project administration, J.D.M.; funding acquisition, J.D.M., M.A.P. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by EPSRC grant number EP/W007673/1.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The ProxNest code and experiments are available at https://github.com/astro-informatics/proxnest (accessed on 13 July 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Robert, C.P. The Bayesian Choice; Springer: New York, NY, USA, 2007.

2. Ashton, G.; Bernstein, N.; Buchner, J.; Chen, X.; Csányi, G.; Fowlie, A.; Feroz, F.; Griffiths, M.; Handley, W.; Habeck, M.; et al. Nested sampling for physical scientists. Nat. Rev. Methods Prim. 2022, 2, 39.

3. Skilling, J. Nested sampling for general Bayesian computation. Bayesian Anal. 2006, 1, 833–859.

4. Mukherjee, P.; Parkinson, D.; Liddle, A.R. A nested sampling algorithm for cosmological model selection. Astrophys. J. 2006, 638, L51–L54.

5. Feroz, F.; Hobson, M.P. Multimodal nested sampling: An efficient and robust alternative to MCMC methods for astronomical data analysis. Mon. Not. R. Astron. Soc. (MNRAS) 2008, 384, 449–463.

6. Feroz, F.; Hobson, M.P.; Bridges, M. MULTINEST: An efficient and robust Bayesian inference tool for cosmology and particle physics. Mon. Not. R. Astron. Soc. (MNRAS) 2009, 398, 1601–1614.

7. Handley, W.J.; Hobson, M.P.; Lasenby, A.N. POLYCHORD: Nested sampling for cosmology. Mon. Not. R. Astron. Soc. Lett. 2015, 450, L61–L65.

8. Buchner, J. Nested sampling methods. arXiv 2021, arXiv:2101.09675.

9. McEwen, J.D.; Wallis, C.G.R.; Price, M.A.; Docherty, M.M. Machine learning assisted Bayesian model comparison: The learnt harmonic mean estimator. arXiv 2022, arXiv:2111.12720.

10. Spurio Mancini, A.; Docherty, M.M.; Price, M.A.; McEwen, J.D. Bayesian model comparison for simulation-based inference. RAS Tech. Instrum. 2023, 2, 710–722.

11. Polanska, A.; Price, M.A.; Spurio Mancini, A.; McEwen, J.D. Learned harmonic mean estimation of the marginal likelihood with normalising flows. Phys. Sci. Forum 2023, 9, 10.

12. Cai, X.; McEwen, J.D.; Pereyra, M. Proximal nested sampling for high-dimensional Bayesian model selection. Stat. Comput. 2022, 32, 87.

13. Combettes, P.; Pesquet, J.C. Proximal Splitting Methods in Signal Processing; Springer: New York, NY, USA, 2011; pp. 185–212.

14. Parikh, N.; Boyd, S. Proximal algorithms. Found. Trends Optim. 2013, 1, 123–231.

15. Pereyra, M. Proximal Markov chain Monte Carlo algorithms. Stat. Comput. 2016, 26, 745–760.

16. Durmus, A.; Moulines, E.; Pereyra, M. Efficient Bayesian computation by proximal Markov chain Monte Carlo: When Langevin meets Moreau. SIAM J. Imaging Sci. 2018, 1, 473–506.

17. Skilling, J. Bayesian computation in big spaces-nested sampling and Galilean Monte Carlo. In Proceedings of the AIP Conference 31st American Institute of Physics, Zurich, Switzerland, 29 July–3 August 2012; Volume 1443, pp. 145–156.

18. Betancourt, M. Nested sampling with constrained Hamiltonian Monte Carlo. AIP Conf. Proc. 2011, 1305, 165–172.

19. Laumont, R.; Bortoli, V.D.; Almansa, A.; Delon, J.; Durmus, A.; Pereyra, M. Bayesian imaging using Plug & Play priors: When Langevin meets Tweedie. SIAM J. Imaging Sci. 2022, 15, 701–737.

20. Robbins, H. An Empirical Bayes Approach to Statistics. In Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, December 1954 and July–August 1955; University of California Press: Berkeley, CA, USA, 1956; Volume 3.1, pp. 157–163.

21. Efron, B. Tweedie’s formula and selection bias. J. Am. Stat. Assoc. 2011, 106, 1602–1614.

22. Kim, K.; Ye, J.C. Noise2score: Tweedie’s approach to self-supervised image denoising without clean images. Adv. Neural Inf. Process. Syst. 2021, 34, 864–874.

23. Chung, H.; Sim, B.; Ryu, D.; Ye, J.C. Improving diffusion models for inverse problems using manifold constraints. arXiv 2022, arXiv:2206.00941.

24. Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. PMLR 2015, 37, 2256–2265.

25. Song, Y.; Ermon, S. Generative modeling by estimating gradients of the data distribution. In Proceedings of the 33rd Annual Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, Canada, 8–14 December 2019.

26. Song, Y.; Ermon, S. Improved techniques for training score-based generative models. Adv. Neural Inf. Process. Syst. 2020, 33, 12438–12448.

27. Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-based generative modeling through stochastic differential equations. arXiv 2020, arXiv:2011.13456.

28. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

29. Venkatakrishnan, S.V.; Bouman, C.A.; Wohlberg, B. Plug-and-play priors for model based reconstruction. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, IEEE, Austin, TX, USA, 3–5 December 2013; pp. 945–948.

30. Ryu, E.; Liu, J.; Wang, S.; Chen, X.; Wang, Z.; Yin, W. Plug-and-play methods provably converge with properly trained denoisers. In Proceedings of the International Conference on Machine Learning. PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 5546–5557.

31. Nelson, D.; Springel, V.; Pillepich, A.; Rodriguez-Gomez, V.; Torrey, P.; Genel, S.; Vogelsberger, M.; Pakmor, R.; Marinacci, F.; Weinberger, R.; et al. The IllustrisTNG Simulations: Public Data Release. Comput. Astrophys. Cosmol. 2019, 6, 2.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).