Upscaling Reputation Communication Simulations

Abstract

1. Introduction

2. The Reputation Game

2.1. Principles

2.2. Steps towards Reality

2.3. Large Groups

2.3.1. Group Formation

2.3.2. One-to-Many Conversations

2.3.3. A Seemingly Infinite Network

3. Results and Discussion

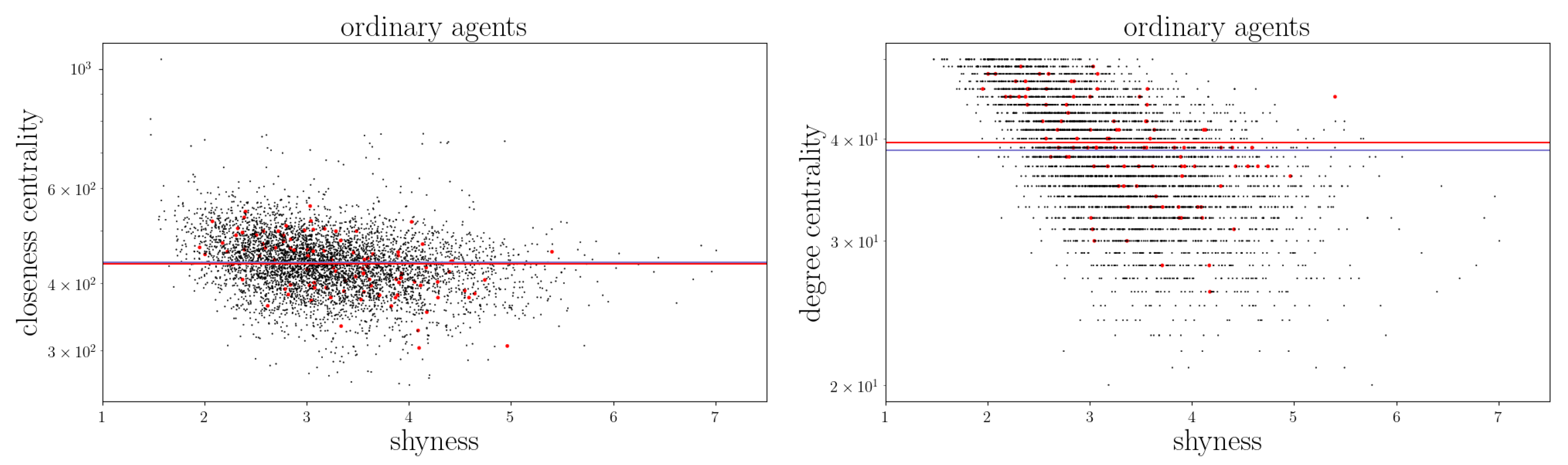

3.1. Network Structure

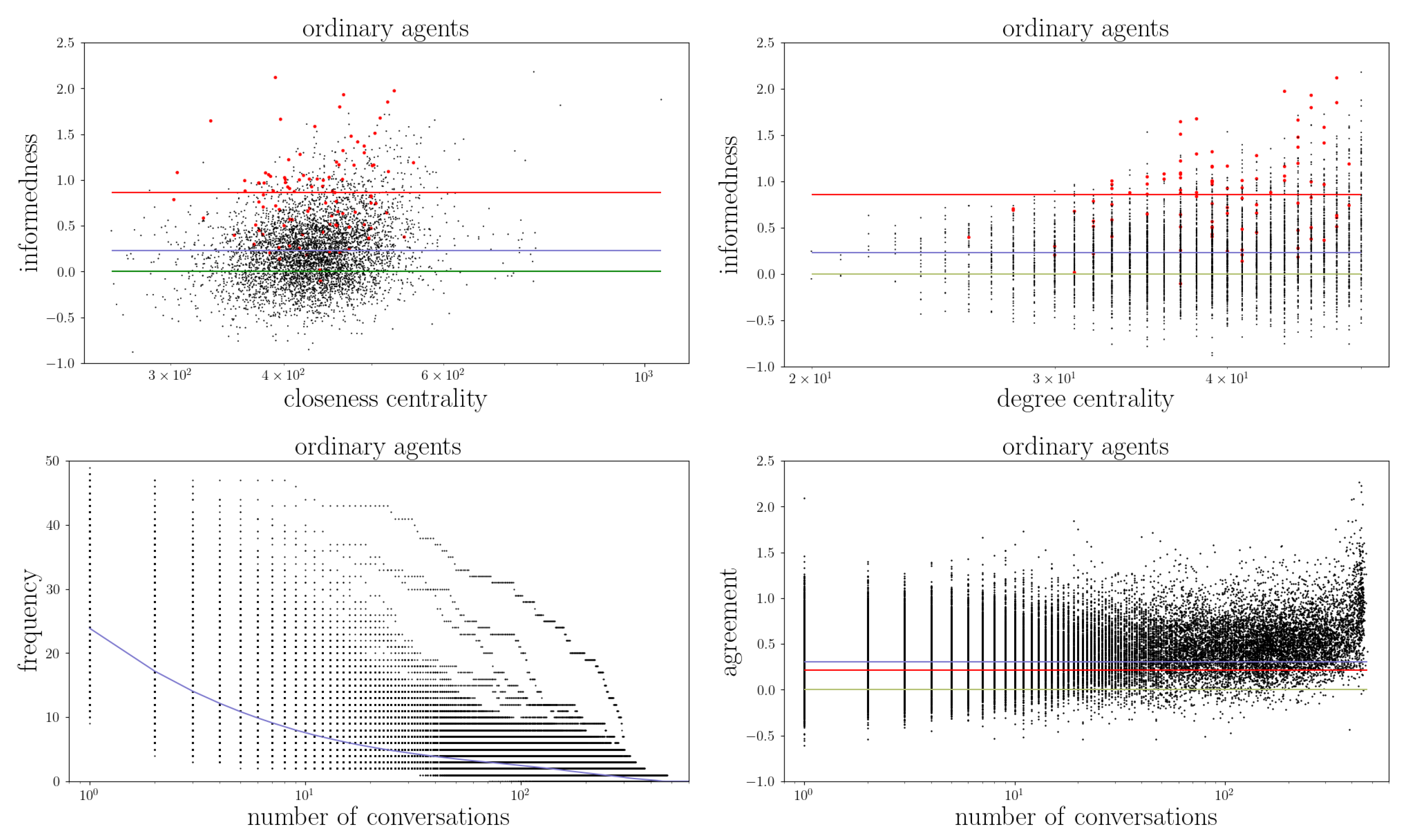

3.2. Information Transmission

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Enßlin, T.; Kainz, V.; Bœhm, C. A Reputation Game Simulation: Emergent Social Phenomena from Information Theory. arXiv 2021, arXiv:2106.05414.

- Toma, C.L.; Hancock, J.T. Self-affirmation underlies Facebook use. Personal. Soc. Psychol. Bull. 2013, 39, 321–331.

- Zhang, J.; Luo, Y. Degree centrality, betweenness centrality, and closeness centrality in social network. In Proceedings of the 2017 2nd International Conference on Modelling, Simulation and Applied Mathematics (MSAM2017), Bangkok, Thailand, 26–27 March 2017; Volume 132, pp. 300–303.

- Hasher, L.; Goldstein, D.; Toppino, T. Frequency and the conference of referential validity. J. Verbal Learn. Verbal Behav. 1977, 16, 107–112.

| Agent a | Friendship | Shyness | Deception | Receiver | | | | |
|---|---|---|---|---|---|---|---|---|
| Affinity | ∝ | ∝ | = | = | Strategy | Strategy | | |
| ordinary | ; else | critical | | | | | | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kainz, V.; Bœhm, C.; Utz, S.; Enßlin, T. Upscaling Reputation Communication Simulations. Phys. Sci. Forum 2022, 5, 39. https://doi.org/10.3390/psf2022005039
