Youth and ChatGPT: Perceptions of Usefulness and Usage Patterns of Generation Z in Polish Higher Education

Abstract

1. Introduction

2. Theoretical Background

2.1. Generation Z’s Attitude Toward ChatGPT

2.2. Roles of ChatGPT in Education

3. Materials and Methods

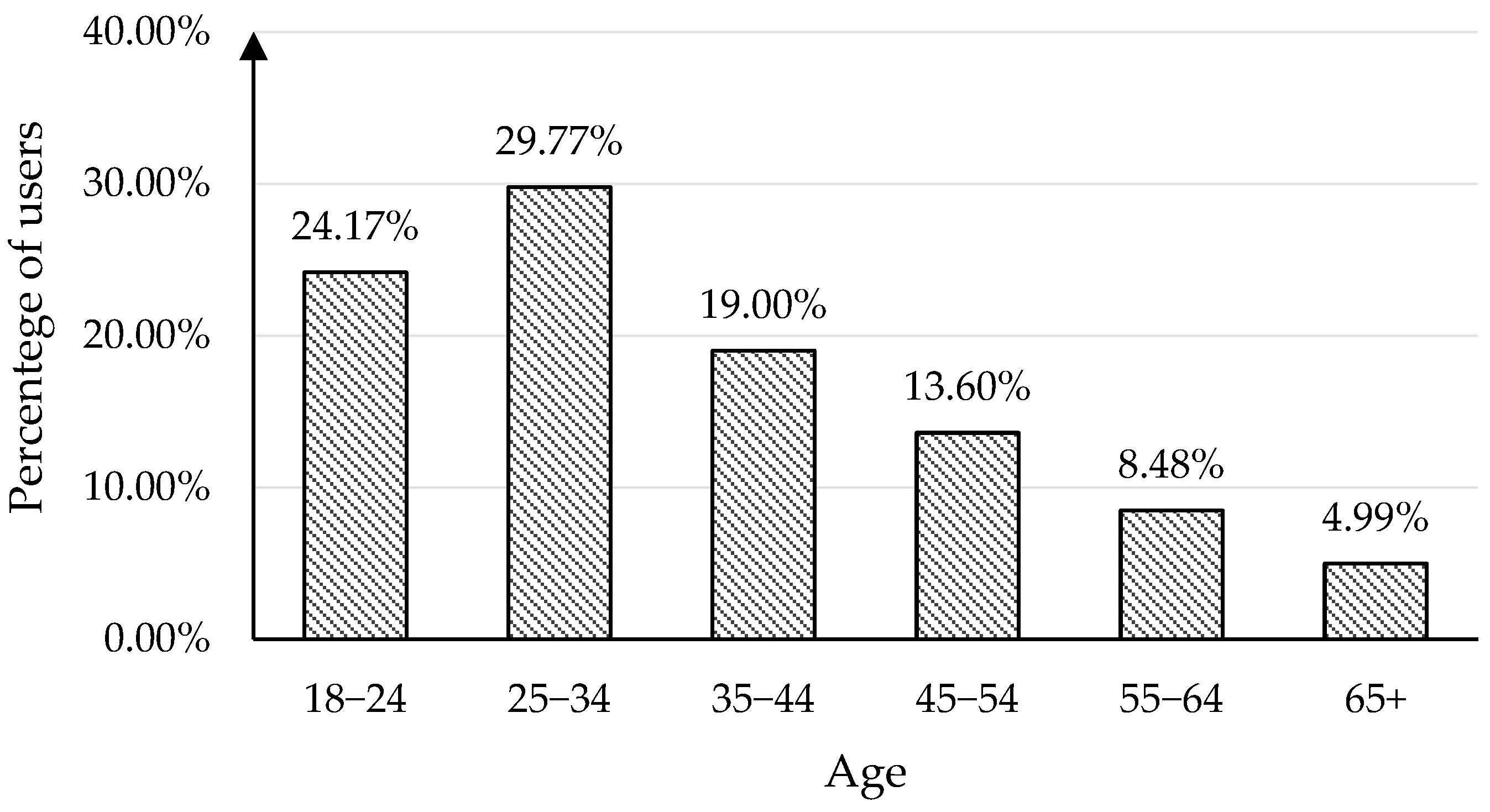

3.1. Characteristics of the Research Sample

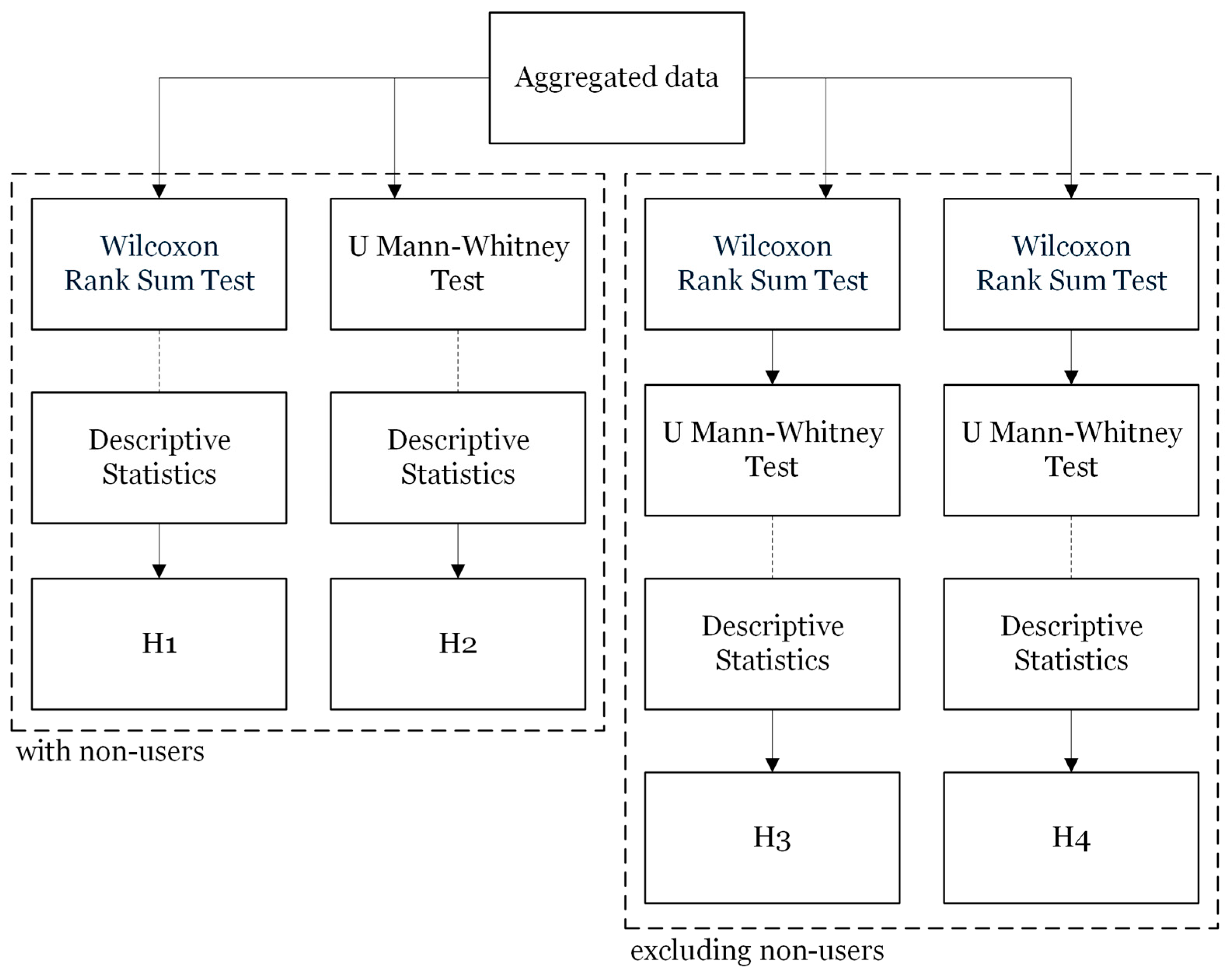

3.2. Research Framework

3.3. Hypothesis Development

4. Results

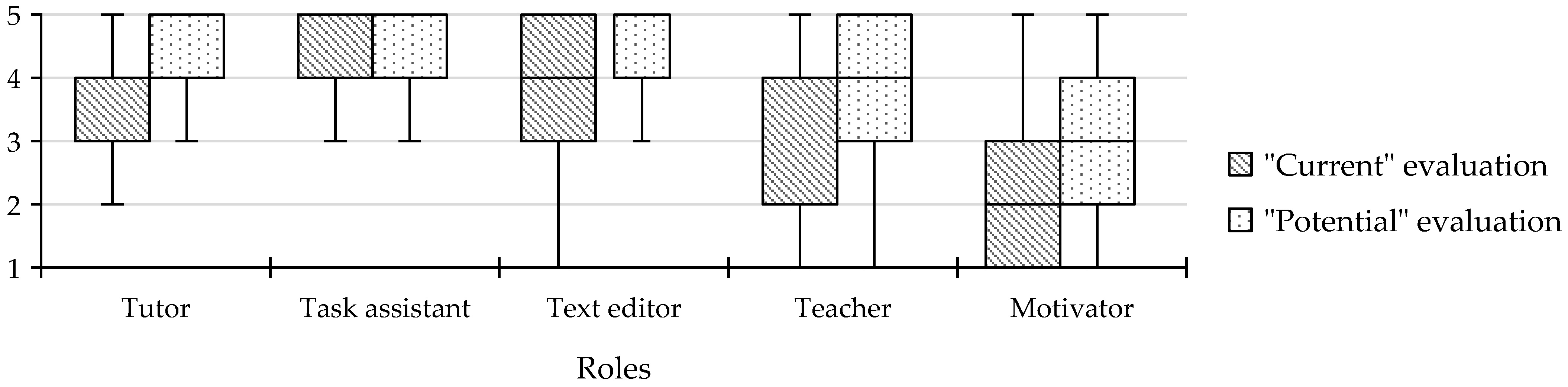

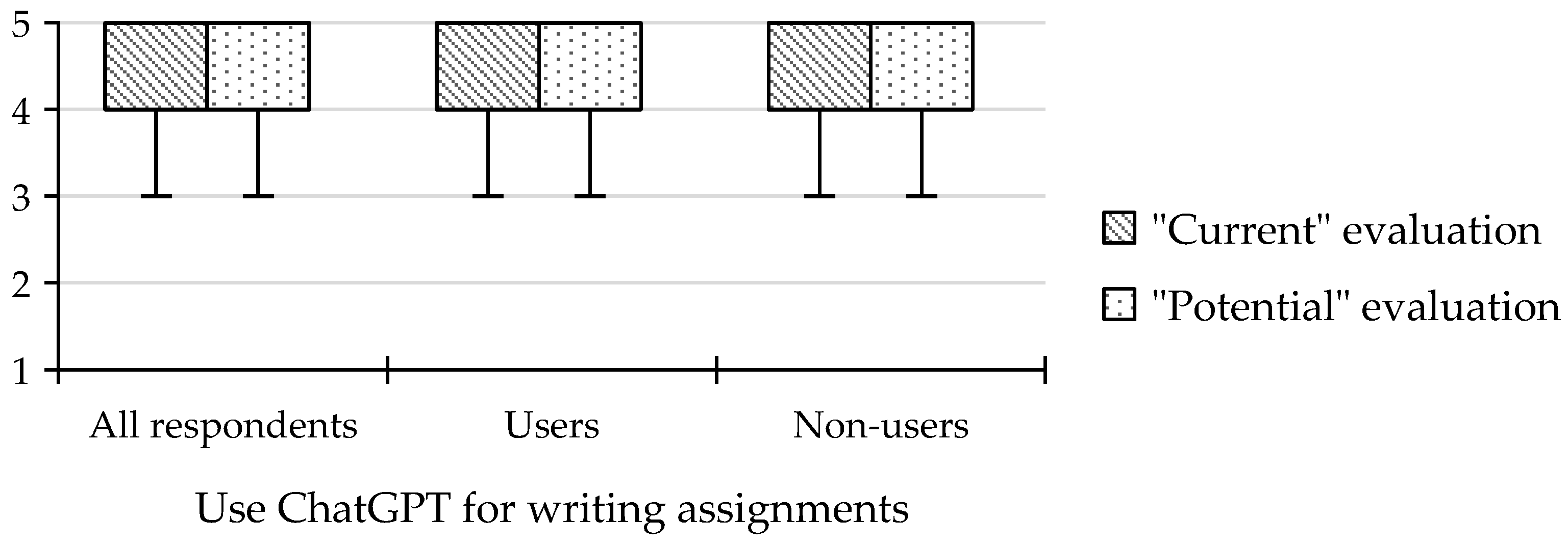

4.1. Perception of “Current” and “Potential” Usefulness of ChatGPT in Education

4.2. Evaluation of Instructional Roles Among Non-Users

4.3. Evaluation of ChatGPT’s Usefulness as a “Task Assistant” Among Users

4.4. Evaluation of ChatGPT’s Usefulness as a “Teacher” Among Users

5. Discussion

5.1. Perception of “Current” and “Potential” Usefulness of ChatGPT in Education

5.2. Evaluation of Instructional Roles Among Non-Users

5.3. Evaluation of ChatGPT’s Usefulness as an Assistant Among Users

5.4. Evaluation of ChatGPT’s Usefulness as a Teacher Among Users

- personalization, that is, dynamic adjustment of content and difficulty to student needs (Looi & Jia, 2025);

- metacognitive self-regulation, where ChatGPT supports planning and monitoring of learning (Dahri et al., 2024);

- structuring self-regulated learning (SRL) activities, aligned with motivational frameworks (Chiu, 2024).

5.5. Implications for AI Literacy

5.6. Implications for Pedagogy

5.7. Ethical and Institutional Implications

6. Limitations and Future Recommendations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- Have you ever used ChatGPT?
  - ☐ Yes.
  - ☐ No.

- For what purposes do you use ChatGPT? (multiple options possible)
  - ☐ Assistance in writing routine letters (e.g., official letters, applications, etc.).
  - ☐ Assistance in writing academic papers, articles, etc.
  - ☐ Help in learning.
  - ☐ Searching for information.
  - ☐ Other (please specify) _____________________________________________

- For each role below, please rate how useful ChatGPT is in education now and how useful it will be, in your opinion, in the near future (2–3 years). (Use the scale: 1 = Definitely NOT useful; 2 = Rather NOT useful; 3 = Neither useful nor not useful; 4 = Rather useful; 5 = Definitely useful.)

| Role | Characteristics | Current Usefulness | Potential Usefulness |
|---|---|---|---|
| Tutor | Explaining complex topics and answering students’ questions at various educational levels. | | |
| Assistant in educational tasks | For example, create an essay plan or write a few sentences of a given text for an essay, etc. | | |
| Text editor | Will correct the text, find errors, propose changes. | | |
| Teacher | Prepare quizzes, tests, and other educational materials that facilitate learning. | | |
| Motivator | Motivating individuals in education to continue their efforts, pointing out progress in learning, setting goals. | | |

References

- Acosta-Enriquez, B. G., Ballesteros, M. A. A., Vargas, C. G. A. P., Ulloa, M. N. O., Ulloa, C. R. G., Romero, J. M. P., Jaramillo, N. D. G., Orellana, H. U. C., Anzoátegui, D. X. A., & Roca, C. L. (2024). Knowledge, attitudes, and perceived ethics regarding the use of ChatGPT among generation Z university students. International Journal for Educational Integrity, 20(1), 10.

- Adel, A., Ahsan, A., & Davison, C. (2024). ChatGPT promises and challenges in education: Computational and ethical perspectives. Education Sciences, 14(8), 814.

- Adiguzel, T., Kaya, M. H., & Cansu, F. K. (2023). Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemporary Educational Technology, 15(3), ep429.

- AlAfnan, M. A., Dishari, N. S., Jovic, N. M., & Lomidze, N. K. (2023). ChatGPT as an educational tool: Opportunities, challenges, and recommendations for communication, business writing, and composition courses. Journal of Artificial Intelligence and Technology, 3(2), 60–68.

- Allen, T. J., & Mizumoto, A. (2024). ChatGPT over my friends: Japanese English-as-a-Foreign-Language learners’ preferences for editing and proofreading strategies. RELC Journal.

- Almarashdi, H. S., Jarrah, A. M., Khurma, O. A., & Gningue, S. M. (2024). Unveiling the potential: A systematic review of ChatGPT in transforming mathematics teaching and learning. Eurasia Journal of Mathematics Science and Technology Education, 20(12), em2555.

- Almashy, A., Ahmed, A. S. M. M., Jamshed, M., Ansari, M. S., Banu, S., & Warda, W. U. (2024). Analyzing the impact of CALL tools on English learners’ writing skills: A comparative study of errors correction. World Journal of English Language, 14(6), 657.

- Almulla, M. A. (2024). Investigating influencing factors of learning satisfaction in AI ChatGPT for research: University students perspective. Heliyon, 10(11), e32220.

- Alsaweed, W., & Aljebreen, S. (2024). Investigating the accuracy of ChatGPT as a writing error correction tool. International Journal of Computer-Assisted Language Learning and Teaching, 14(1), 1–18.

- Anders, B. A. (2023). Is using ChatGPT cheating, plagiarism, both, neither, or forward thinking? Patterns, 4(3), 100694.

- Antoniou, C., Pavlou, A., & Ikossi, D. G. (2025). Let’s chat! Integrating ChatGPT in medical student assignments to enhance critical analysis. Medical Teacher, 47(5), 791–793.

- Aydın, Ö., & Karaarslan, E. (2023). Is ChatGPT leading generative AI? What is beyond expectations? Academic Platform Journal of Engineering and Smart Systems, 11(3), 118–134.

- Baig, M. I., & Yadegaridehkordi, E. (2025). Factors influencing academic staff satisfaction and continuous usage of generative artificial intelligence (GenAI) in higher education. International Journal of Educational Technology in Higher Education, 22(1), 5.

- Bašić, Ž., Banovac, A., Kružić, I., & Jerković, I. (2023). ChatGPT-3.5 as writing assistance in students’ essays. Humanities and Social Sciences Communications, 10(1), 750.

- Bender, S. M. (2024). Awareness of Artificial Intelligence as an essential digital literacy: ChatGPT and Gen-AI in the classroom. Changing English, 31(2), 161–174.

- Berman, J., McCoy, L., & Camarata, T. (2024). LLM-generated multiple choice practice quizzes for pre-clinical medical students; use and validity. Physiology, 39(S1), 118–134.

- Bettayeb, A. M., Talib, M. A., Altayasinah, A. Z. S., & Dakalbab, F. (2024). Exploring the impact of ChatGPT: Conversational AI in education. Frontiers in Education, 9, 1379796.

- Bhaskar, P., & Rana, S. (2024). The ChatGPT dilemma: Unravelling teachers’ perspectives on inhibiting and motivating factors for adoption of ChatGPT. Journal of Information Communication and Ethics in Society, 22(2), 219–239.

- Bhattacherjee, A. (2001). Understanding information systems continuance: An expectation-confirmation model. MIS Quarterly, 25(3), 351.

- Biloš, A., & Budimir, B. (2024). Understanding the adoption dynamics of ChatGPT among generation Z: Insights from a modified UTAUT2 model. Journal of Theoretical and Applied Electronic Commerce Research, 19(2), 863–879.

- Chan, C. K. Y., & Hu, W. (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(1), 43.

- Chan, C. K. Y., & Lee, K. K. W. (2023). The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learning Environments, 10(1), 60.

- Chaudhuri, J., & Terrones, L. (2024). Reshaping academic library information literacy programs in the advent of ChatGPT and other generative AI technologies. Internet Reference Services Quarterly, 29(1), 1–25.

- Chiu, T. K. F. (2024). A classification tool to foster self-regulated learning with generative artificial intelligence by applying self-determination theory: A case of ChatGPT. Educational Technology Research and Development, 72(4), 2401–2416.

- Clark, R. E. (1983). Reconsidering research on learning from media. Review of Educational Research, 53(4), 445–459.

- Črček, N., & Patekar, J. (2023). Writing with AI: University students’ use of ChatGPT. Journal of Language and Education, 9(4), 128–138.

- Dahri, N. A., Yahaya, N., Al-Rahmi, W. M., Aldraiweesh, A., Alturki, U., Almutairy, S., Shutaleva, A., & Soomro, R. B. (2024). Extended TAM based acceptance of AI-powered ChatGPT for supporting metacognitive self-regulated learning in education: A mixed-methods study. Heliyon, 10(8), e29317.

- Da Silva, C. A. G., Ramos, F. N., De Moraes, R. V., & Santos, E. L. D. (2024). ChatGPT: Challenges and benefits in software programming for higher education. Sustainability, 16(3), 1245.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319.

- Deng, R., Jiang, M., Yu, X., Lu, Y., & Liu, S. (2024). Does ChatGPT enhance student learning? A systematic review and meta-analysis of experimental studies. Computers & Education, 227, 105224.

- Ding, L., Li, T., Jiang, S., & Gapud, A. (2023). Students’ perceptions of using ChatGPT in a physics class as a virtual tutor. International Journal of Educational Technology in Higher Education, 20(1), 63.

- ElSayary, A. (2023). An investigation of teachers’ perceptions of using ChatGPT as a supporting tool for teaching and learning in the digital era. Journal of Computer Assisted Learning, 40(3), 931–945.

- Fawns, T., Henderson, M., Matthews, K., Oberg, G., Liang, Y., Walton, J., Corbin, T., Bearman, M., Shum, S. B., McCluskey, T., McLean, J., Shibani, A., Bakharia, A., Lim, L., Pepperell, N., Slade, C., Chung, J., & Seligmann, A. (2024). Gen AI and student perspectives of use and ambiguity. Proceedings of Australasian Society for Computers in Learning in Tertiary Education, 132–134.

- Fokides, E., & Peristeraki, E. (2024). Comparing ChatGPT’s correction and feedback comments with that of educators in the context of primary students’ short essays written in English and Greek. Education and Information Technologies, 30(2), 2577–2621.

- Foroughi, C. K., Monfort, S. S., Paczynski, M., McKnight, P. E., & Greenwood, P. M. (2016). Placebo effects in cognitive training. Proceedings of the National Academy of Sciences, 113(27), 7470–7474.

- García-López, I. M., González, C. S. G., Ramírez-Montoya, M., & Molina-Espinosa, J. (2024). Challenges of implementing ChatGPT on education: Systematic literature review. International Journal of Educational Research Open, 8, 100401.

- Glasman, L. R., & Albarracín, D. (2006). Forming attitudes that predict future behavior: A meta-analysis of the attitude-behavior relation. Psychological Bulletin, 132(5), 778–822.

- Goodhue, D. L., & Thompson, R. L. (1995). Task-technology fit and individual performance. MIS Quarterly, 19(2), 213.

- Guo, A. A., & Li, J. (2023). Harnessing the power of ChatGPT in medical education. Medical Teacher, 45(9), 1063.

- Guo, K., & Wang, D. (2023). To resist it or to embrace it? Examining ChatGPT’s potential to support teacher feedback in EFL writing. Education and Information Technologies, 29(7), 8435–8463.

- Gupta, A., Yousaf, A., & Mishra, A. (2020). How pre-adoption expectancies shape post-adoption continuance intentions: An extended expectation-confirmation model. International Journal of Information Management, 52, 102094.

- Hamerman, E. J., Aggarwal, A., & Martins, C. (2024). An investigation of generative AI in the classroom and its implications for university policy. Quality Assurance in Education, 33(2), 253–266.

- Han, J., & Li, N. M. (2024). Exploring ChatGPT-supported teacher feedback in the EFL context. System, 126, 103502.

- Hsiao, C., & Tang, K. (2024). Beyond acceptance: An empirical investigation of technological, ethical, social, and individual determinants of GenAI-supported learning in higher education. Education and Information Technologies, 30(8), 10725–10750.

- Huang, W., Jiang, J., King, R. B., & Fryer, L. K. (2025). Chatbots and student motivation: A scoping review. International Journal of Educational Technology in Higher Education, 22(1), 26.

- Imran, M., & Almusharraf, N. (2023). Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Contemporary Educational Technology, 15(4), ep464.

- Karataş, F., Abedi, F. Y., Gunyel, F. O., Karadeniz, D., & Kuzgun, Y. (2024). Incorporating AI in foreign language education: An investigation into ChatGPT’s effect on foreign language learners. Education and Information Technologies, 29(15), 19343–19366.

- Karataş, F., & Yüce, E. (2024). AI and the future of teaching: Preservice teachers’ reflections on the use of artificial intelligence in open and distributed learning. The International Review of Research in Open and Distributed Learning, 25(3), 304–325.

- Kavitha, K., Joshith, V. P., & Sharma, S. (2024). Beyond text: ChatGPT as an emotional resilience support tool for Gen Z—A sequential explanatory design exploration. E-Learning and Digital Media, 1–27.

- Kiryakova, G., & Angelova, N. (2023). ChatGPT—A challenging tool for the university professors in their teaching practice. Education Sciences, 13(10), 1056.

- Kıyak, Y. S., & Emekli, E. (2024). ChatGPT prompts for generating multiple-choice questions in medical education and evidence on their validity: A literature review. Postgraduate Medical Journal, 100(1189), 858–865.

- Kohnke, L., Moorhouse, B. L., & Zou, D. (2023). ChatGPT for language teaching and learning. RELC Journal, 54(2), 537–550.

- Koltovskaia, S., Rahmati, P., & Saeli, H. (2024). Graduate students’ use of ChatGPT for academic text revision: Behavioral, cognitive, and affective engagement. Journal of Second Language Writing, 65, 101130.

- Lai, C., & Lin, C. (2025). Analysis of learning behaviors and outcomes for students with different knowledge levels: A case study of intelligent tutoring system for coding and learning (ITS-CAL). Applied Sciences, 15(4), 1922.

- Lin, S., & Crosthwaite, P. (2024). The grass is not always greener: Teacher vs. GPT-assisted written corrective feedback. System, 127, 103529.

- Lin, Z. (2023). Why and how to embrace AI such as ChatGPT in your academic life. Royal Society Open Science, 10(8), 230658.

- Liu, F., Chang, X., Zhu, Q., Huang, Y., Li, Y., & Wang, H. (2024). Assessing clinical medicine students’ acceptance of large language model: Based on technology acceptance model. BMC Medical Education, 24(1), 1251.

- Liu, Y., Park, J., & McMinn, S. (2024). Using generative artificial intelligence/ChatGPT for academic communication: Students’ perspectives. International Journal of Applied Linguistics, 34(4), 1437–1461.

- Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4), 410.

- Looi, C., & Jia, F. (2025). Personalization capabilities of current technology chatbots in a learning environment: An analysis of student-tutor bot interactions. Education and Information Technologies, 30, 14165–14195.

- Mahapatra, S. (2024). Impact of ChatGPT on ESL students’ academic writing skills: A mixed methods intervention study. Smart Learning Environments, 11(1), 9.

- Malik, A., Khan, M. L., Hussain, K., Qadir, J., & Tarhini, A. (2024). AI in higher education: Unveiling academicians’ perspectives on teaching, research, and ethics in the age of ChatGPT. Interactive Learning Environments, 33(3), 2390–2406.

- Menges, J. I., Tussing, D. V., Wihler, A., & Grant, A. M. (2016). When job performance is all relative: How family motivation energizes effort and compensates for intrinsic motivation. Academy of Management Journal, 60(2), 695–719.

- Mirriahi, N., Marrone, R., Barthakur, A., Gabriel, F., Colton, J., Yeung, T. N., Arthur, P., & Kovanovic, V. (2025). The relationship between students’ self-regulated learning skills and technology acceptance of GenAI. Australasian Journal of Educational Technology, 41(2), 16–33.

- Munaye, Y. Y., Admass, W., Belayneh, Y., Molla, A., & Asmare, M. (2025). ChatGPT in education: A systematic review on opportunities, challenges, and future directions. Algorithms, 18(6), 352.

- Ngo, T. T. A. (2023). The perception by university students of the use of ChatGPT in education. International Journal of Emerging Technologies in Learning (IJET), 18(17), 4–19.

- Nugroho, A., Andriyanti, E., Widodo, P., & Mutiaraningrum, I. (2024). Students’ appraisals post-ChatGPT use: Students’ narrative after using ChatGPT for writing. Innovations in Education and Teaching International, 62(2), 499–511.

- Oates, A., & Johnson, D. (2025). ChatGPT in the classroom: Evaluating its role in fostering critical evaluation skills. International Journal of Artificial Intelligence in Education.

- OpenAI. (2025, February 20). Building an AI-ready workforce: A look at college student ChatGPT adoption in the US. Available online: https://openai.com/global-affairs/college-students-and-chatgpt/ (accessed on 28 July 2025).

- Paradeda, R. B., Torres, D. T. B., & Takahashi, A. (2025). Generative AI in education: A study of undergraduate students’ expectations of ChatGPT. RENOTE, 22(3), 174–185.

- Prajzner, A. (2023). Selected indicators of effect size in psychological research. Annales Universitatis Mariae Curie-Skłodowska Sectio J—Paedagogia-Psychologia, 35(4), 139–157.

- Rejeb, A., Rejeb, K., Appolloni, A., Treiblmaier, H., & Iranmanesh, M. (2024). Exploring the impact of ChatGPT on education: A web mining and machine learning approach. The International Journal of Management Education, 22(1), 100932.

- Rogers, E. M. (1983). Diffusion of innovations (3rd ed.). The Free Press.

- Rojas, A. J. (2024). An investigation into ChatGPT’s application for a scientific writing assignment. Journal of Chemical Education, 101(5), 1959–1965.

- Saif, N., Khan, S. U., Shaheen, I., ALotaibi, F. A., Alnfiai, M. M., & Arif, M. (2023). Chat-GPT; validating Technology Acceptance Model (TAM) in education sector via ubiquitous learning mechanism. Computers in Human Behavior, 154, 108097.

- Sallam, M., Salim, N., Barakat, M., & Al-Tammemi, A. (2023). ChatGPT applications in medical, dental, pharmacy, and public health education: A descriptive study highlighting the advantages and limitations. Narra J, 3(1), e103.

- Shah, C. S., Mathur, S., & Vishnoi, S. K. (2024). Is ChatGPT enhancing youth’s learning, engagement and satisfaction? Journal of Computer Information Systems, 1–16.

- Similarweb. (n.d.). chatgpt.com. Available online: https://pro.similarweb.com/#/digitalsuite/websiteanalysis/overview/website-performance/*/999/1m?webSource=Total&key=chatgpt.com (accessed on 28 July 2025).

- Sirisathitkul, C., & Jaroonchokanan, N. (2025). Implementing ChatGPT as tutor, tutee, and tool in physics and chemistry. Substantia, 9(1), 89–101.

- Su, J., & Yang, W. (2023). Unlocking the power of ChatGPT: A framework for applying generative AI in education. ECNU Review of Education, 6(3), 355–366.

- Su, Y., Lin, Y., & Lai, C. (2023). Collaborating with ChatGPT in argumentative writing classrooms. Assessing Writing, 57, 100752.

- Tarchi, C., Zappoli, A., Ledesma, L. C., & Brante, E. W. (2024). The use of ChatGPT in source-based writing tasks. International Journal of Artificial Intelligence in Education, 35(2), 858–878.

- Tiwari, C. K., Bhat, M. A., Khan, S. T., Subramaniam, R., & Khan, M. A. I. (2023). What drives students toward ChatGPT? An investigation of the factors influencing adoption and usage of ChatGPT. Interactive Technology and Smart Education, 21(3), 333–355.

- Tummalapenta, S., Pasupuleti, R., Chebolu, R., Banala, T., & Thiyyagura, D. (2024). Factors driving ChatGPT continuance intention among higher education students: Integrating motivation, social dynamics, and technology adoption. Journal of Computers in Education.

- Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185(4157), 1124–1131.

- Vaccino-Salvadore, S. (2023). Exploring the ethical dimensions of using ChatGPT in language learning and beyond. Languages, 8(3), 191.

- Vetter, M. A., Lucia, B., Jiang, J., & Othman, M. (2024). Towards a framework for local interrogation of AI ethics: A case study on text generators, academic integrity, and composing with ChatGPT. Computers and Composition, 71, 102831.

- Wang, L., Chen, X., Wang, C., Xu, L., Shadiev, R., & Li, Y. (2023). ChatGPT’s capabilities in providing feedback on undergraduate students’ argumentation: A case study. Thinking Skills and Creativity, 51, 101440.

- Wardat, Y., Tashtoush, M. A., AlAli, R., & Jarrah, A. M. (2023). ChatGPT: A revolutionary tool for teaching and learning mathematics. Eurasia Journal of Mathematics Science and Technology Education, 19(7), em2286.

- Wu, T., Lee, H., Li, P., Huang, C., & Huang, Y. (2023). Promoting self-regulation progress and knowledge construction in blended learning via ChatGPT-based learning aid. Journal of Educational Computing Research, 61(8), 3–31.

- Xiao, Y., & Zhi, Y. (2023). An exploratory study of EFL learners’ use of ChatGPT for language learning tasks: Experience and perceptions. Languages, 8(3), 212.

- Xu, T., Weng, H., Liu, F., Yang, L., Luo, Y., Ding, Z., & Wang, Q. (2024). Current status of ChatGPT use in medical education: Potentials, challenges, and strategies. Journal of Medical Internet Research, 26, e57896.

- Xu, X., Wang, X., Zhang, Y., & Zheng, R. (2024). Applying ChatGPT to tackle the side effects of personal learning environments from learner and learning perspective: An interview of experts in higher education. PLoS ONE, 19(1), e0295646.

- Yang, J., Jin, H., Tang, R., Han, X., Feng, Q., Jiang, H., Zhong, S., Yin, B., & Hu, X. (2024). Harnessing the power of LLMs in practice: A survey on ChatGPT and beyond. ACM Transactions on Knowledge Discovery From Data, 18(6), 1–32.

- Zhang, Y., Yang, X., & Tong, W. (2025). University students’ attitudes toward ChatGPT profiles and their relation to ChatGPT intentions. International Journal of Human-Computer Interaction, 41(5), 3199–3212.

- Zou, M., & Huang, L. (2023). The impact of ChatGPT on L2 writing and expected responses: Voice from doctoral students. Education and Information Technologies, 29(11), 13201–13219.

| Field of Study (Polish Classification) | Full-Time Bachelor | Part-Time Bachelor | Full-Time Master | Part-Time Master | Total |
|---|---|---|---|---|---|
| Humanities | 6 | 1 | 2 | - | 9 |
| Engineering and technology | 54 | 6 | 13 | 1 | 74 |
| Agricultural sciences | 1 | 1 | - | - | 2 |
| Social sciences | 137 | 58 | 59 | 6 | 260 |
| Natural sciences | 23 | 5 | 25 | - | 53 |
| Other | 8 | 2 | 1 | - | 11 |
| Total | 229 | 73 | 100 | 7 | 409 |

| Role | Short Description (TTF) | Example Literature |
|---|---|---|
| Tutor | Step-by-step explanations and Q&A that align with study tasks | (Almarashdi et al., 2024; Da Silva et al., 2024; A. A. Guo & Li, 2023; Sallam et al., 2023; Xiao & Zhi, 2023) |
| Task assistant | Outlining, planning, and summarizing that fit workflow support | (Črček & Patekar, 2023; Mahapatra, 2024; Nugroho et al., 2024; Y. Su et al., 2023; Zou & Huang, 2023) |
| Text editor | Clarity improvement, revision, and tone adjustment for writing tasks | (Almashy et al., 2024; Alsaweed & Aljebreen, 2024; Fokides & Peristeraki, 2024; S. Lin & Crosthwaite, 2024) |
| Teacher | Formative feedback and guidance for learning activities | (Berman et al., 2024; K. Guo & Wang, 2023; Han & Li, 2024; Kıyak & Emekli, 2024; Wang et al., 2023) |
| Motivator | Prompts to start and persist, effort regulation with human scaffolding | (Chiu, 2024; ElSayary, 2023; Karataş et al., 2024; X. Xu et al., 2024; Wu et al., 2023) |

| Role | N * | T | Z | p | r |
|---|---|---|---|---|---|
| Tutor | 204 | 3872.50 | 7.7973 | 0.0000 | 0.5459 |
| Task assistant | 155 | 2630.50 | 6.0999 | 0.0000 | 0.4900 |
| Text editor | 192 | 2761.50 | 8.4339 | 0.0000 | 0.6087 |
| Teacher | 205 | 3038.50 | 8.8417 | 0.0000 | 0.6175 |
| Motivator | 188 | 2560.00 | 8.4635 | 0.0000 | 0.6173 |
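The r column in the table above is numerically consistent with the common effect-size convention r = Z/√N, where N is the number of observations entering the test. The following is a minimal Python sketch that recomputes the column under that assumption. The formula is inferred from the tabulated values rather than quoted from the article, and the helper names `effect_size_r` and `check_rows` are illustrative.

```python
import math

# (role, N, Z, reported r), copied from the table above.
ROWS = [
    ("Tutor", 204, 7.7973, 0.5459),
    ("Task assistant", 155, 6.0999, 0.4900),
    ("Text editor", 192, 8.4339, 0.6087),
    ("Teacher", 205, 8.8417, 0.6175),
    ("Motivator", 188, 8.4635, 0.6173),
]


def effect_size_r(z: float, n: int) -> float:
    """Effect size under the assumed convention r = Z / sqrt(N)."""
    return z / math.sqrt(n)


def check_rows(rows=ROWS, tol=1e-4):
    """Recompute r for each row and flag agreement with the reported value."""
    results = []
    for role, n, z, reported in rows:
        recomputed = effect_size_r(z, n)
        results.append((role, recomputed, reported, abs(recomputed - reported) < tol))
    return results


if __name__ == "__main__":
    for role, recomputed, reported, ok in check_rows():
        print(f"{role}: recomputed r = {recomputed:.4f}, reported r = {reported:.4f}, match = {ok}")
```

Under this convention, every recomputed value agrees with the reported r to four decimal places.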

| Role | N | Median | Mode | Mode Count |
|---|---|---|---|---|
| “Current” evaluation | | | | |
| Tutor | 409 | 4 | 4 | 212 |
| Assistant in educational tasks | 409 | 4 | 4 | 183 |
| Text editor | 409 | 4 | 4 | 162 |
| Teacher | 409 | 4 | 4 | 164 |
| Motivator | 409 | 2 | 2 | 125 |
| “Potential” evaluation | | | | |
| Tutor | 409 | 4 | 5 | 203 |
| Assistant in educational tasks | 409 | 5 | 5 | 244 |
| Text editor | 409 | 5 | 5 | 224 |
| Teacher | 409 | 4 | 5 | 150 |
| Motivator | 409 | 3 | 2 | 113 |

| Role | N (Users) | N (Non-Users) | Avg. Rank (Users) | Avg. Rank (Non-Users) | U | Z (Corrected) | p | r | Cliff’s δ |
|---|---|---|---|---|---|---|---|---|---|
| Tutor | 362 | 47 | 187.9268 | 336.5000 | 2326.500 | 8.2307 | 0.0000 | 0.4008 | 0.7265 |
| Teacher | 362 | 47 | 206.2831 | 195.1170 | 8042.500 | −0.6653 | 0.5059 | −0.0301 | −0.0546 |
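The Cliff’s δ values in the table above can be reproduced from the U statistic and the group sizes. The sketch below assumes the standard relation |δ| = 1 − 2U/(n₁n₂), with U the smaller of the two Mann-Whitney statistics. It also assumes that δ is positive when non-users have the higher average rank; that sign convention is inferred from the two tabulated rows, not stated in the source. The function names are illustrative.

```python
def u_from_average_rank(avg_rank: float, n: int) -> float:
    """Mann-Whitney U of one group from its average rank: U = R - n(n + 1)/2."""
    return avg_rank * n - n * (n + 1) / 2


def cliffs_delta(u_min: float, n_users: int, n_non_users: int,
                 rank_users: float, rank_non_users: float) -> float:
    """Cliff's delta from the smaller U; the sign convention is an assumption."""
    magnitude = 1.0 - 2.0 * u_min / (n_users * n_non_users)
    # Assumed convention: positive when non-users rank higher than users.
    sign = 1.0 if rank_non_users > rank_users else -1.0
    return sign * magnitude


if __name__ == "__main__":
    # Values copied from the Tutor and Teacher rows of the table above.
    print(f"Tutor:   {cliffs_delta(2326.5, 362, 47, 187.9268, 336.5000):.4f}")
    print(f"Teacher: {cliffs_delta(8042.5, 362, 47, 206.2831, 195.1170):.4f}")
```

The reported U values also follow from the average ranks: the Tutor U corresponds to the users’ group and the Teacher U to the non-users’ group, in each case the group with the lower average rank.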

| Role | N | Median | Mode | Mode Count | Lower Quartile | Upper Quartile |
|---|---|---|---|---|---|---|
| Users | | | | | | |
| Tutor | 362 | 0 | 0 | 87 | −1 | 1 |
| Teacher | 362 | 0 | 0 | 188 | 0 | 1 |
| Non-users | | | | | | |
| Tutor | 47 | 3 | 3 | 16 | 2 | 3 |
| Teacher | 47 | 0 | 0 | 29 | 0 | 1 |

| Use of ChatGPT for Writing Assignments | N | Median | Mode | Mode Count |
|---|---|---|---|---|
| All respondents | 362 | 5 | 5 | 223 |
| Users | 145 | 5 | 5 | 103 |
| Non-users | 217 | 5 | 5 | 120 |

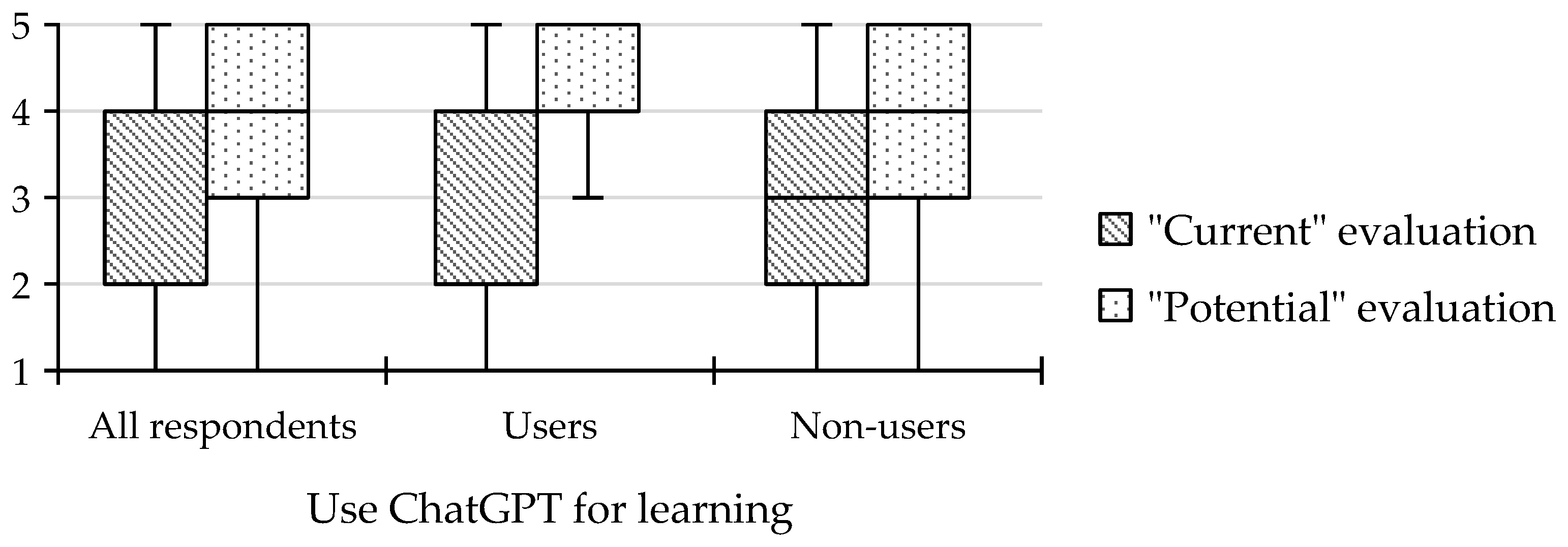

| Use of ChatGPT for Learning | N | Median | Mode | Mode Count |
|---|---|---|---|---|
| All respondents | 362 | 4 | 5 | 136 |
| Users | 247 | 4 | 5 | 104 |
| Non-users | 115 | 4 | 4 | 47 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Oliński, M.; Sieciński, K. Youth and ChatGPT: Perceptions of Usefulness and Usage Patterns of Generation Z in Polish Higher Education. Youth 2025, 5, 106. https://doi.org/10.3390/youth5040106
