Onicescu’s Informational Energy and Correlation Coefficient in Exponential Families

Abstract

1. Introduction

1.1. Onicescu’s Informational Energy

1.2. Onicescu’s Correlation Coefficient

1.3. Exponential Families

2. Onicescu’s Informational Energy and Correlation Coefficient in Exponential Families

2.1. Closed-Form Formula

2.2. Divergences Related to Onicescu’s Correlation Coefficient

3. Some Illustrative Examples

3.1. Exponential Family of Exponential Distributions

3.2. Exponential Family of Poisson Distributions

3.3. Exponential Family of Univariate Normal Distributions

3.4. Exponential Family of Multivariate Normal Distributions

3.5. Exponential Family of Pareto Distributions

3.6. Instantiating Formula with a Computer Algebra System

```maxima
/* Pareto densities form an exponential family */
assume(k>0);
assume(a>0);
Pareto(x,a):=a*(k**a)/(x**(a+1));
/* check that it is a density (=1) */
integrate(Pareto(x,a),x,k,inf);
/* calculate Onicescu’s informational energy */
integrate(Pareto(x,a)**2,x,k,inf);
/* method bypassing the integral calculation */
omega:k;
(Pareto(omega,a)**2)/Pareto(omega,2*a+1);
```
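The integral-free expression in the listing above can also be checked numerically outside a computer algebra system. The following Python sketch compares the ratio evaluated at omega = k with a midpoint-rule estimate of the integral of the squared Pareto density. The function names, the sample values a = 2 and k = 1.5, and the choice of quadrature are illustrative assumptions, not part of the original listing.

```python
def pareto_pdf(x, a, k):
    """Pareto density a*k^a / x^(a+1) on [k, inf), zero elsewhere."""
    if x < k:
        return 0.0
    return a * k**a / x**(a + 1)


def energy_closed_form(a, k):
    """Integral-free expression p_a(omega)^2 / p_{2a+1}(omega) at omega = k."""
    omega = k
    return pareto_pdf(omega, a, k) ** 2 / pareto_pdf(omega, 2 * a + 1, k)


def energy_numeric(a, k, n=200_000):
    """Midpoint-rule estimate of the integral of p^2 over [k, inf).

    The substitution x = k/u maps [k, inf) onto (0, 1], with dx = k/u^2 du,
    so the improper integral becomes a proper one on the unit interval.
    """
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h          # midpoint of the i-th subinterval
        x = k / u                  # corresponding point in [k, inf)
        total += pareto_pdf(x, a, k) ** 2 * k / u**2
    return total * h


if __name__ == "__main__":
    a, k = 2.0, 1.5  # hypothetical shape and scale values
    print("closed form:", energy_closed_form(a, k))
    print("numeric    :", energy_numeric(a, k))
```

For these Pareto densities both quantities should agree with a^2 / (k (2a + 1)), which is what the symbolic integration in the listing simplifies to.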

4. Informational Energy and the Laws of Thermodynamics

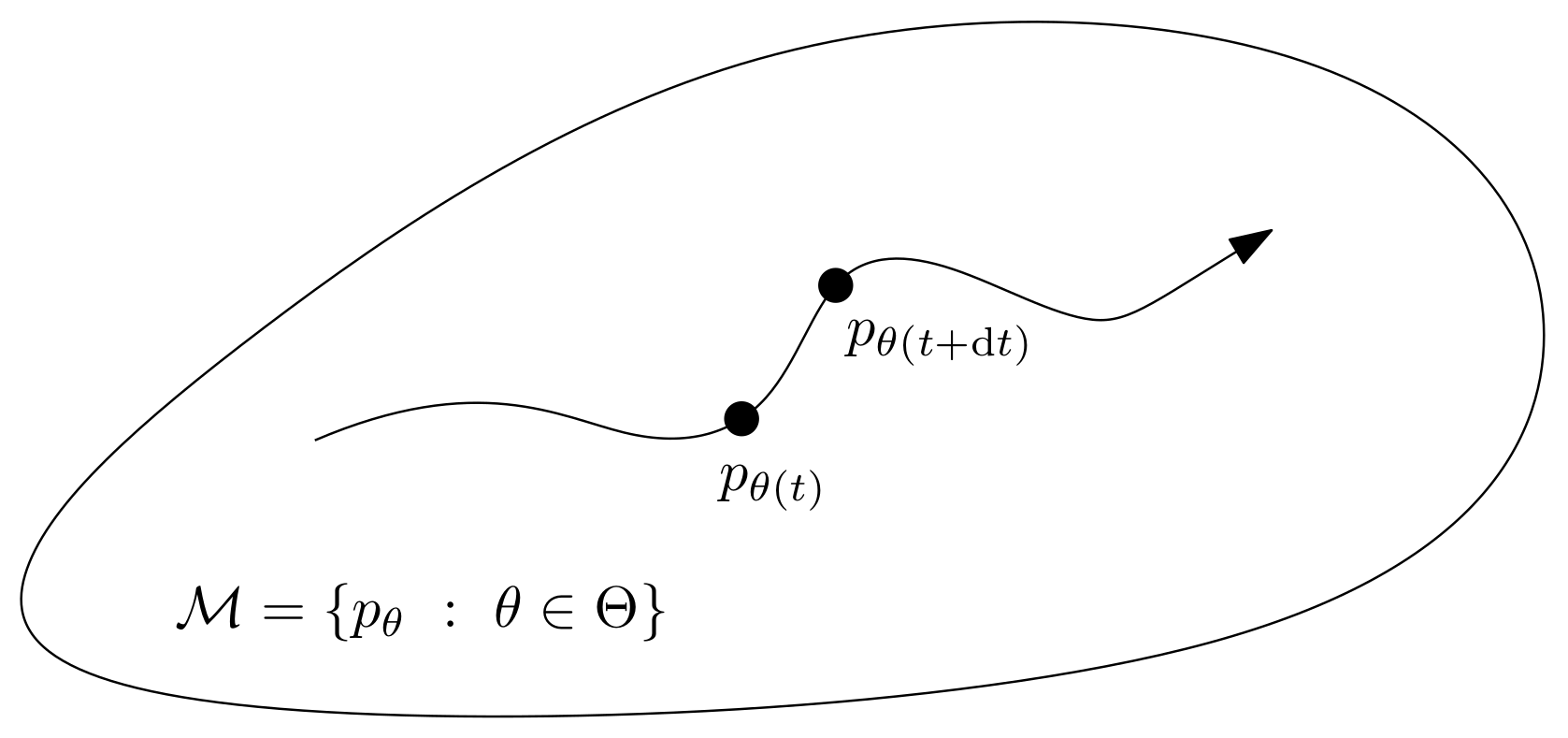

4.1. Exponential Family Manifolds

4.2. Location-Scale Manifolds

5. Summary and Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Entropy | Informational Energy |
|---|---|---|
| convexity | strictly concave | strictly convex |
| range | can be negative | always positive |
| uncertainty measure | increases with disorder | decreases with disorder |
| uniform discrete distribution u (with alphabet size ) | | |
| bound | | |
| Inequality: | | |

| Family | Entropy | Informational Energy |
|---|---|---|
| Generic | | |
| Univar. normal | | |
| Multivar. normal | | |

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nielsen, F. Onicescu’s Informational Energy and Correlation Coefficient in Exponential Families. Foundations 2022, 2, 362-376. https://doi.org/10.3390/foundations2020025