Trainable Noise Model as an Explainable Artificial Intelligence Evaluation Method: Application on Sobol for Remote Sensing Image Segmentation †

Abstract

1. Introduction

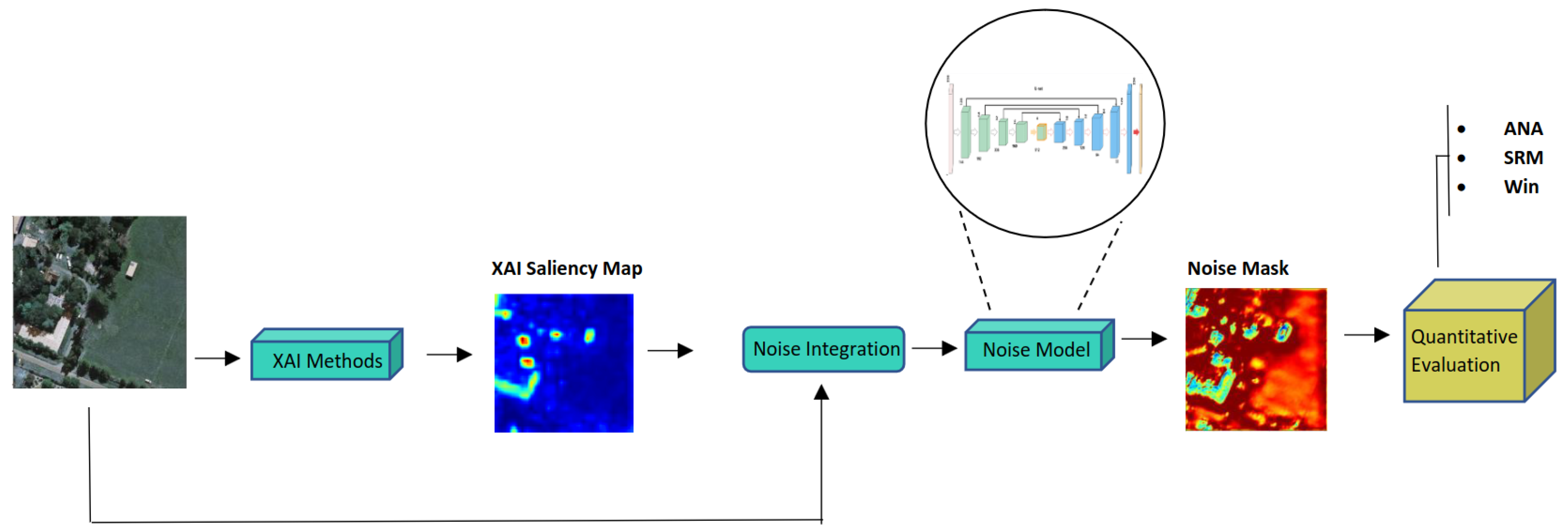

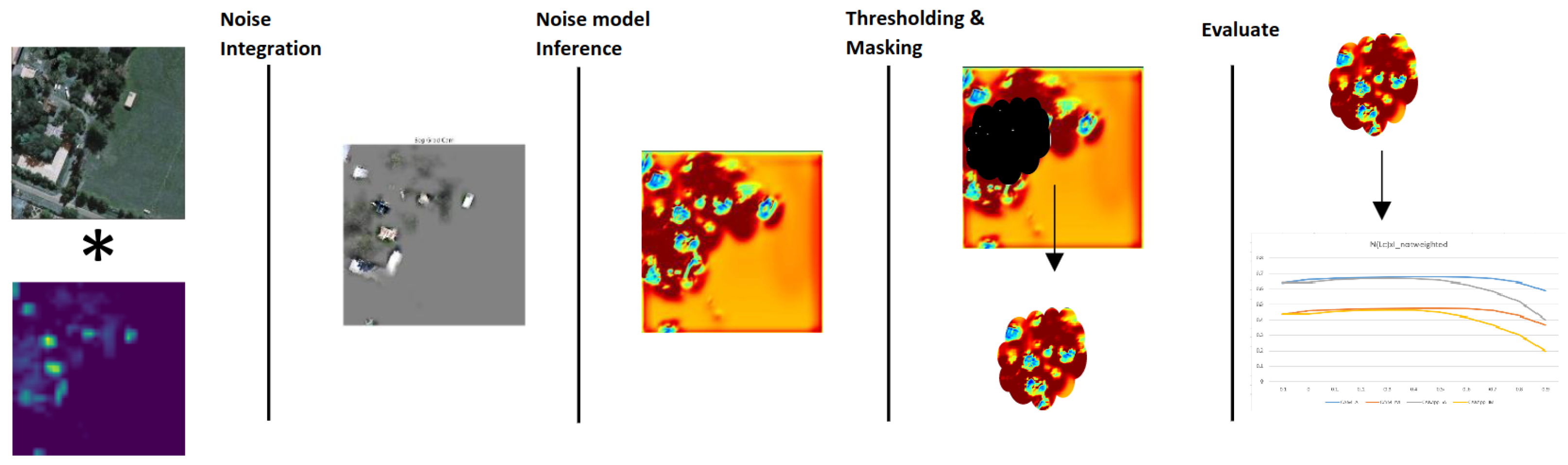

- We propose a quantitative XAI evaluation approach using a learnable noise model. Our evaluation methodology is based on feeding the saliency map combined with the input image to the noise model. Then, on the basis of the generated noise mask, statistical metrics are computed to quantitatively evaluate the performance of any XAI method.

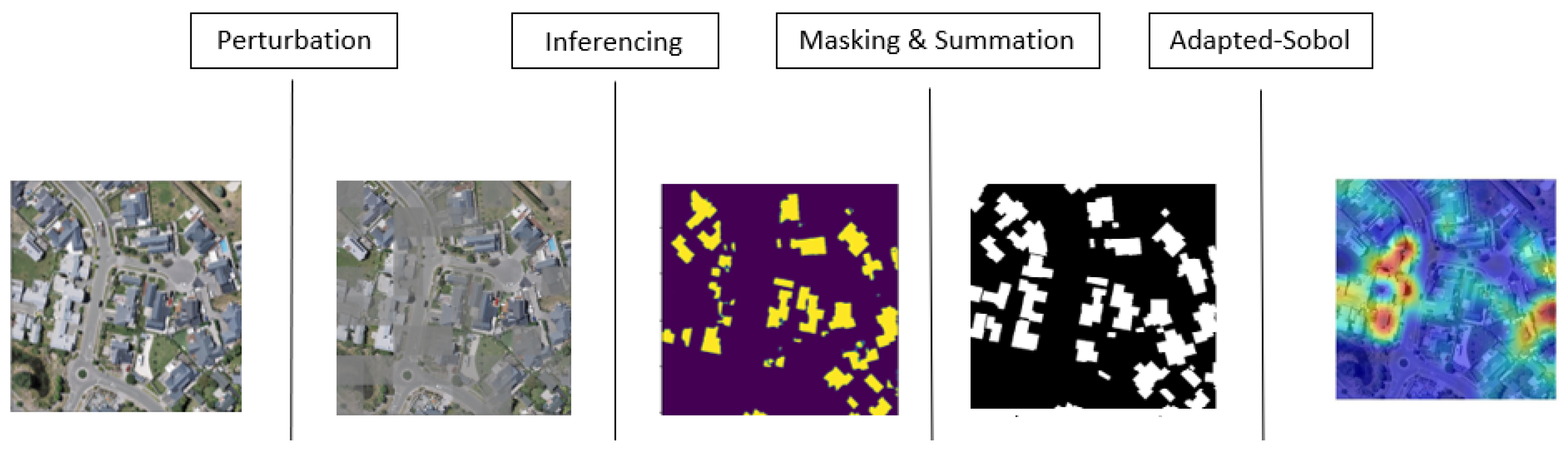

- We adapt the recently proposed perturbation-based Sobol XAI method from classification to semantic segmentation.

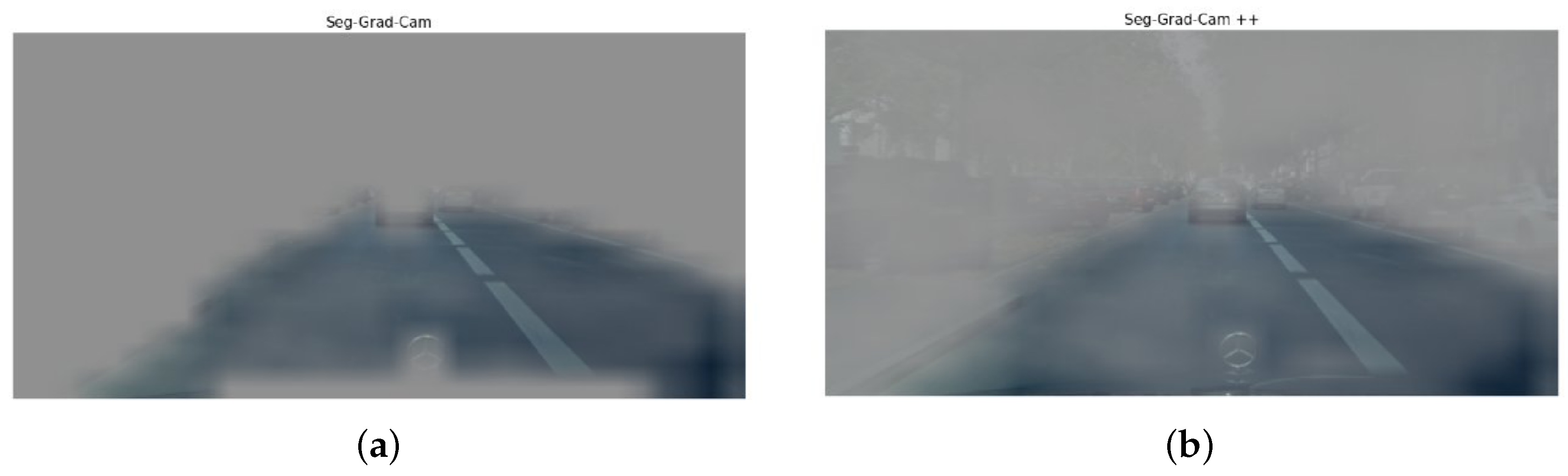

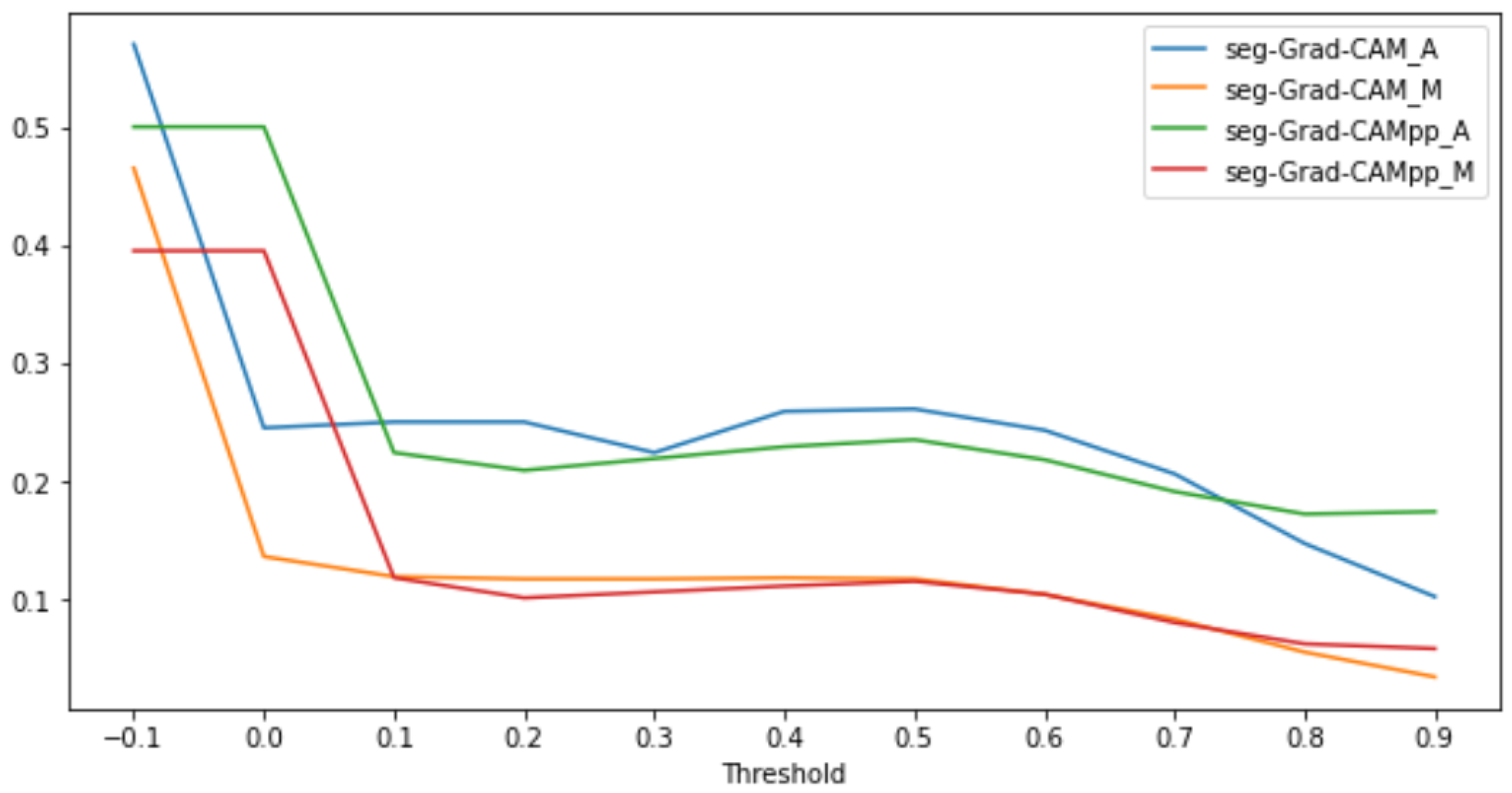

- We benchmark the adapted Sobol method against the gradient-based XAI methods Seg-Grad-CAM and Seg-Grad-CAM++ using the WHU dataset for building footprint segmentation.

2. Proposed Trainable Noise Model XAI Evaluation

2.1. Methodology

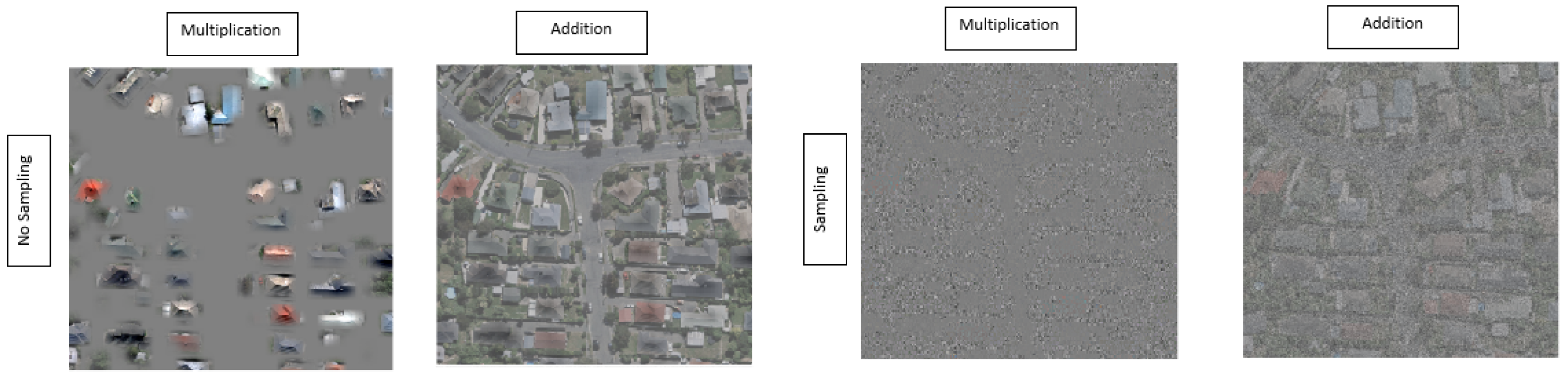

- Multiplication: The original input image is directly multiplied by the saliency map, highlighting regions of the image assumed important by the XAI method, as shown in Equation (1):

- Addition: By adding the saliency map to the original image, we augment the image with importance scores, potentially highlighting regions of interest, as shown in Equation (2):

- Normal sampling with Multiplication: Similar to the “Normal sampling with Addition” method described below, but with multiplication instead of addition. This method emphasizes or de-emphasizes regions based on the importance scores and the sampled noise, as shown in Equation (3):

- Normal sampling with Addition: To introduce variability in the pixels of the explanation map, values are sampled from a normal distribution. The sampled values are then added to the original image, as shown in Equation (4):
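The four combination strategies above can be illustrated with a minimal sketch. The equations are not reproduced in this text, so the code rests on assumptions: the image and saliency map are flattened lists of floats scaled to [0, 1], the sampling variants redraw each pixel from a normal distribution centred on its saliency value, and the function name `combine`, the mode strings, and the `sigma` parameter are all hypothetical. In practice an array library would replace the plain lists.

```python
import random

MODES = ("mul", "add", "normal_mul", "normal_add")


def combine(image, saliency, mode, sigma=0.1, rng=None):
    """Combine a flattened image with a saliency map of the same length.

    mode selects one of the four strategies; sigma is a hypothetical
    standard deviation used only by the two sampling variants.
    """
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    if len(image) != len(saliency):
        raise ValueError("image and saliency must have the same length")
    rng = rng or random.Random()

    if mode.startswith("normal"):
        # Assumption: each map pixel is redrawn from a normal distribution
        # centred on its saliency value, with standard deviation sigma.
        saliency = [rng.gauss(s, sigma) for s in saliency]

    if mode.endswith("mul"):
        # Element-wise product of image and (possibly sampled) map.
        return [x * s for x, s in zip(image, saliency)]
    # Element-wise sum of image and (possibly sampled) map.
    return [x + s for x, s in zip(image, saliency)]


image = [0.2, 0.5, 0.8, 1.0]
saliency = [0.0, 0.5, 1.0, 0.25]

multiplied = combine(image, saliency, "mul")
added = combine(image, saliency, "add")
sampled = combine(image, saliency, "normal_add", sigma=0.05,
                  rng=random.Random(0))
```

Passing a seeded `random.Random` instance keeps the sampling variants reproducible, which matters when the combined input is later compared across XAI methods.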

2.2. Metrics

- Average Noise Added (ANA): This metric computes the mean value of the output of the U-noise model. A higher ANA indicates that the XAI method introduces more noise to the input image, so lower values are better.

- Second raw moment (SRM): This metric is the mean of the squared noise values, which equals the variance of the noise distribution only when its mean is zero. A higher SRM suggests that the noise introduced by the trained noise model is spread further away from zero, so lower values are again better.
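As a rough illustration of the two metrics, the sketch below computes them from a noise mask. It assumes the mask is available as a flattened list of floats; the function names and the sample values are hypothetical and not taken from the paper.

```python
import statistics


def average_noise_added(noise_mask):
    """ANA: mean of the noise-mask values."""
    if not noise_mask:
        raise ValueError("noise_mask must not be empty")
    return sum(noise_mask) / len(noise_mask)


def second_raw_moment(noise_mask):
    """SRM: mean of the squared noise-mask values, E[n^2]."""
    if not noise_mask:
        raise ValueError("noise_mask must not be empty")
    return sum(n * n for n in noise_mask) / len(noise_mask)


# Hypothetical noise mask, flattened to a list of per-pixel values.
noise_mask = [0.0, 0.5, 1.0, 0.5]

ana = average_noise_added(noise_mask)   # 0.5
srm = second_raw_moment(noise_mask)     # 0.375

# The population variance is the SRM minus the squared mean,
# so the two only coincide when the mean is zero.
variance = statistics.pvariance(noise_mask)
```

Under the reading given above, an XAI method whose saliency maps yield lower ANA and SRM values would be ranked higher.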

3. Results

3.1. Cityscapes

3.2. WHU

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jung, H.; Oh, Y. Towards better explanations of class activation mapping. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1336–1344.

- Fel, T.; Cadène, R.; Chalvidal, M.; Cord, M.; Vigouroux, D.; Serre, T. Look at the variance! Efficient black-box explanations with Sobol-based sensitivity analysis. Adv. Neural Inf. Process. Syst. 2021, 34, 26005–26014.

- Koker, T.; Mireshghallah, F.; Titcombe, T.; Kaissis, G. U-noise: Learnable noise masks for interpretable image segmentation. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; IEEE: New York, NY, USA, 2021; pp. 394–398.

- Nielsen, I.E.; Dera, D.; Rasool, G.; Ramachandran, R.P.; Bouaynaya, N.C. Robust explainability: A tutorial on gradient-based attribution methods for deep neural networks. IEEE Signal Process. Mag. 2022, 39, 73–84.

- Vinogradova, K.; Dibrov, A.; Myers, G. Towards interpretable semantic segmentation via gradient-weighted class activation mapping (student abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13943–13944.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Nasrallah, H.; Samhat, A.E.; Shi, Y.; Zhu, X.X.; Faour, G.; Ghandour, A.J. Lebanon Solar Rooftop Potential Assessment Using Buildings Segmentation From Aerial Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4909–4918.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-Based Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shreim, H.; Gizzini, A.K.; Ghandour, A.J. Trainable Noise Model as an Explainable Artificial Intelligence Evaluation Method: Application on Sobol for Remote Sensing Image Segmentation. Environ. Sci. Proc. 2024, 29, 49. https://doi.org/10.3390/ECRS2023-16609
