1. Introduction

Phase holography has revolutionized the generation and reconstruction of three-dimensional images by encoding spatial information in the phase of a light beam [1]. Phase holograms can be generated using optical or computational approaches. A fundamental limitation of traditional optical methods, such as interferometric and diffraction-based recording, is that the object must be physically present in the experimental system, which restricts what can be recorded [2]. Holographic recording becomes impractical if the object is inaccessible, too large, or incompatible with laser illumination. Computer-generated holograms (CGHs) overcome this limitation by allowing holograms of any scene or object to be generated and manipulated digitally, without requiring its physical presence [3]. This not only removes the restrictions associated with object size and accessibility but also opens up applications in scientific visualization, virtual reality, and optical communications, where the flexibility and adaptability of CGHs are essential.

Computational methods, in turn, have gained popularity due to their flexibility and accuracy. These methods use algorithms to calculate the phase to be printed or displayed on a spatial light modulator (SLM) [2,4]. Furthermore, advanced techniques have been developed for precise control of both phase and amplitude in computer-generated holograms, improving the fidelity of three-dimensional reconstruction [5].

Phase holograms are essential because of their applications in fields such as microscopy, metrology, medical visualization, and optical communications [6,7,8]. In this context, optimizing phase hologram generation has become a crucial goal for improving the accuracy and efficiency of these applications, offering new capabilities in three-dimensional image capture and reconstruction [2,9].

In microscopy, digital holography has emerged as an advanced method for phase imaging of transparent and semi-transparent objects. This approach provides high resolution and contrast when imaging small specimens, enabling quantitative characterization of the three-dimensional morphology and refractive index of the observed objects [10]. These capabilities have been essential in biomedical and biotechnology studies, where detailed and accurate images are paramount for analysis and diagnosis [11]. Furthermore, digital holography has enabled the integration of confocal and widefield microscopy techniques, further expanding the capabilities of microscopic observation [12,13].

In medical visualization, phase holography has enabled significant advances in obtaining detailed and accurate images of biological structures. Digital holographic tomography, in particular, has emerged as a tool for capturing high-resolution three-dimensional images for biomedical research and clinical diagnosis; it enables the quantitative measurement of optical parameters of samples when analyzing tissues and cells at the subcellular level [1,14]. The ability of this technology to produce high-quality images in real time has transformed areas such as image-guided surgery and the monitoring of dynamic processes in cell biology [15].

In metrology, phase holograms have proven useful for surface measurement and three-dimensional reconstruction of microscopic structures because they capture both the amplitude and the phase of reflected or transmitted light. These capabilities are relevant in the fabrication of microelectromechanical systems (MEMS) and the inspection of advanced materials [16,17]. Digital holography in optical metrology has also facilitated the non-destructive inspection of industrial components, providing data on surface topography and defects at the nanoscale [18].

Finally, phase holograms have become vital for efficiently encoding and decoding information in optical communications. Precise manipulation of the phase of light enables high-speed data transmission, which is essential for communication networks that handle large volumes of information with low latency and high fidelity [19].

Phase holograms are classified into two types: single-phase and dual-phase. Single-phase holograms exclusively modulate the phase of the incident light to form an image, which makes the process energy-efficient and less prone to reconstruction errors. Dual-phase holograms, in contrast, decompose a complex-valued hologram into two phase-only distributions and combine them to generate a more robust final image. This technique, while practical, often introduces unwanted diffraction orders that must be filtered out to improve image quality [20,21].
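The dual-phase idea can be illustrated with the classic double-phase decomposition, which writes a normalized complex value $A e^{i\phi}$ (with $0 \le A \le 1$) as the average of two unit phasors with phases $\phi \pm \arccos A$, since $\tfrac{1}{2}(e^{i(\phi+\delta)} + e^{i(\phi-\delta)}) = e^{i\phi}\cos\delta$. The sketch below assumes this textbook decomposition; it is not necessarily the exact scheme used in [20,21], and the function names are hypothetical. In practice the two phase maps are typically interleaved on the SLM (for example, in a checkerboard pattern), which is one source of the unwanted diffraction orders mentioned above.

```python
import cmath
import math


def double_phase_decompose(field):
    """Split complex samples into pairs of phase-only values (theta1, theta2).

    Amplitudes are normalised by the largest magnitude so that A lies in [0, 1].
    """
    a_max = max(abs(c) for c in field) or 1.0  # avoid dividing by zero for an all-zero field
    pairs = []
    for c in field:
        amp = min(abs(c) / a_max, 1.0)  # clamp rounding errors slightly above 1
        phi = cmath.phase(c)
        delta = math.acos(amp)
        pairs.append((phi + delta, phi - delta))
    return pairs, a_max


def recombine(pairs, a_max):
    """Average the two unit phasors: (e^{i t1} + e^{i t2}) / 2 = A e^{i phi}."""
    return [a_max * 0.5 * (cmath.exp(1j * t1) + cmath.exp(1j * t2)) for t1, t2 in pairs]


field = [0.3 + 0.4j, -1.0 + 0j, 0.5j, 0j]
pairs, scale = double_phase_decompose(field)
restored = recombine(pairs, scale)
error = max(abs(r - c) for r, c in zip(restored, field))  # only floating-point rounding remains
```

Each complex sample is thus represented exactly by two phase-only values, which is why a pair of phase distributions can stand in for a complex-valued hologram.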

One of the most widely recognized techniques for computer hologram generation is the Gerchberg-Saxton (GS) algorithm [22], which is widely used in phase retrieval problems. GS is an iterative algorithm that alternates between the spatial and Fourier domains, adjusting the phase so that the reconstructed intensity matches a desired pattern in a specific plane. Although it has been instrumental in the development of digital holography, its objective function is rigid, and it usually requires a lens to perform the Fourier transform, which limits its flexibility and adaptability across applications. Variants such as the weighted Gerchberg-Saxton (WGS) algorithm [23] introduce weighting schemes to improve convergence, while the hybrid input-output (HIO) method [24] allows adjustments in both phase and amplitude, offering greater robustness. However, these techniques retain dependencies on fixed optical components and objective functions, which may restrict their applicability in contexts that require greater flexibility or specific modifications to the optimization.
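A minimal sketch of the classic GS loop helps make the alternation concrete. It assumes that the lens is modeled as an orthonormal 2-D FFT (so the hologram and Fourier planes carry the same energy), a random initial phase, and a simple square target; the function name, grid size, and target are illustrative choices, not the configurations used in [22,23,24]. The recorded Fourier-plane amplitude error is non-increasing for this error-reduction form of GS.

```python
import numpy as np


def gerchberg_saxton(source_amp, target_amp, iterations=50, seed=0):
    """Classic GS loop between a hologram plane and its Fourier (far-field) plane.

    The lens is modelled as an orthonormal 2-D FFT, so energy is preserved and
    target_amp should carry the same total energy as source_amp.
    Returns the hologram phase and the Fourier-plane amplitude error per iteration.
    """
    rng = np.random.default_rng(seed)
    phase = rng.uniform(-np.pi, np.pi, size=source_amp.shape)
    errors = []
    for _ in range(iterations):
        far = np.fft.fft2(source_amp * np.exp(1j * phase), norm="ortho")
        errors.append(float(np.sum((np.abs(far) - target_amp) ** 2)))
        # Keep the far-field phase, impose the desired amplitude.
        far = target_amp * np.exp(1j * np.angle(far))
        near = np.fft.ifft2(far, norm="ortho")
        # Keep the hologram-plane phase, impose the source (illumination) amplitude.
        phase = np.angle(near)
    return phase, errors


n = 32
source = np.ones((n, n))
target = np.zeros((n, n))
target[12:20, 12:20] = 1.0
target *= np.sqrt(np.sum(source ** 2) / np.sum(target ** 2))  # match total energy
phase, errors = gerchberg_saxton(source, target)
```

Note that the objective (the amplitude mismatch in a single Fourier plane) is fixed by the structure of the loop itself, which illustrates the rigidity discussed above.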

Despite these applications, challenges persist in generating phase holograms because the objective function used in the optimization is non-convex. In computational holography, non-convexity poses a significant challenge because stochastic gradient descent (SGD) algorithms tend to get trapped in local minima, which frequently prevents them from reaching globally optimal solutions and demands exhaustive hyperparameter tuning to achieve effective convergence. Although precomputation methods and specialized loss functions, such as those based on standard deviation or relative entropy, can mitigate some convergence issues, these approaches still depend critically on the correct selection and tuning of input parameters. The sensitivity of these algorithms to hyperparameters further exacerbates the problem, as it requires fine-tuning specific to each holographic application, which increases the complexity and development time of synthesizing high-quality holograms. Several works analyze these challenges and propose solutions. D. P. Kingma and J. Ba [25] introduced Adam, a first-order stochastic optimization algorithm based on adaptive estimates of lower-order moments, which is widely used to minimize non-convex cost functions, including custom losses tailored to specific holographic applications, and whose hyperparameters typically require little tuning. C. Depeursinge [17] presents a computer-generated hologram (CGH) method that incorporates a precomputation procedure and a standard deviation-based loss function, highlighting the challenges of non-convex optimization and the use of SGD.
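To make the adaptive update rules concrete, the sketch below implements the textbook Adam update [25] and the RMSprop update for a single scalar parameter and applies both to a toy quadratic. It is illustrative only: the toy objective, learning rates, and step counts are assumptions, and framework implementations (for example, the Keras optimizers) may differ in minor details such as where the stabilizing epsilon enters.

```python
import math


def adam(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Textbook Adam for a scalar parameter."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g    # second-moment (uncentred variance) estimate
        m_hat = m / (1 - beta1 ** t)           # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def rmsprop(grad, x0, lr=0.01, rho=0.9, eps=1e-7, steps=2000):
    """Textbook RMSprop: scale each step by a running RMS of past gradients."""
    x, v = x0, 0.0
    for _ in range(steps):
        g = grad(x)
        v = rho * v + (1 - rho) * g * g
        x -= lr * g / (math.sqrt(v) + eps)
    return x


def grad_f(x):
    """Gradient of the toy objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


x_adam = adam(grad_f, 0.0)
x_rms = rmsprop(grad_f, 0.0)
print(x_adam, x_rms)  # both values end close to 3.0
```

Both methods normalize the step by a running estimate of the gradient magnitude, which is what makes them less sensitive to the raw gradient scale than plain SGD; neither guarantees escape from local minima on a non-convex objective.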

Recent advances in multi-plane phase retrieval have significantly improved the capabilities of holographic imaging systems. Descloux et al. [26] introduced a combined multi-plane phase retrieval and super-resolution optical fluctuation imaging method for four-dimensional (4D) cellular microscopy, achieving high-resolution volumetric imaging of dynamic cellular processes. Their approach uses a joint optimization framework that combines phase retrieval across multiple planes with super-resolution techniques, enabling the reconstruction of complex biological structures with improved spatial resolution. Similarly, Huang et al. [27] proposed a dual-plane coupled phase retrieval method for holographic imaging without prior information, which reconstructs waves from complex objects without prior knowledge by leveraging data from two nearby planes. This method improves phase retrieval accuracy and reduces reconstruction artifacts by exploiting the coupling between the two planes, offering improved image quality in holographic systems.

In recent years, deep neural networks have emerged as a powerful tool in hologram generation and optimization, achieving significant advances in reconstruction quality and speed. Shi et al. [28] proposed a deep neural network-based approach to generate photorealistic 3D holograms in real time, using a deep learning architecture that learns to map 3D scenes directly to phase holograms. This method markedly improves artifact removal and the visual quality of the reconstructed images, opening new possibilities for real-time visualization applications. Peng et al. [29] introduced neural holography with closed-loop (camera-in-the-loop) training, in which a neural network is trained using images captured directly from the real optical system. This approach allows the model to learn and compensate for imperfections and non-idealities of the physical system, resulting in highly accurate images.

While these methods focus on phase retrieval techniques to improve image quality, our proposed multiplane optimization approach instead targets the optimization of phase hologram generation itself. By integrating machine learning optimizers such as RMSprop and Adam and leveraging Graphics Processing Unit (GPU) acceleration, our method minimizes the reconstruction error across multiple focal planes strategically selected during hologram generation. This improves the robustness and accuracy of holographic reconstructions over a range of depths and enhances computational efficiency through custom propagation layers implemented within a machine learning framework (TensorFlow and Keras).

Our research addresses this problem by significantly improving the objective function for phase hologram generation. The main contribution of this study is incorporating a modified objective function that minimizes the holographic reconstruction error at three different distances: one before the reference distance, another exactly at the reference distance, and a third after the reference distance. The proposal aims to mitigate the effects of non-convex optimization by providing a more robust and accurate reconstruction of the hologram over the entire range of relevant depths.

The improved objective function is based on angular spectrum theory applied to wave propagation. Angular spectrum theory is fundamental to the analysis of electromagnetic wave propagation [30]. It decomposes a wave into components with different propagation directions, known as angular components. Each component behaves like a plane wave, which makes it easier to understand how complex waves propagate through different media; this makes the technique instrumental in digital holography, as it allows wavefronts to be modeled and simulated at multiple focal planes [31]. By manipulating the angular spectrum of a hologram, it is possible to tune the phase of the light and improve the fidelity of the three-dimensional reconstruction, which is essential in applications requiring accurate depth representation, such as holographic tomography [32]. Optimizing the hologram's phase to minimize errors across multiple focal planes achieves higher fidelity in three-dimensional reconstruction. The proposed approach is therefore particularly relevant in applications where accurate depth representation is crucial, such as holographic tomography and medical visualization.
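In the angular spectrum method, a field is propagated by a distance $z$ by multiplying its Fourier spectrum by the transfer function $H(f_x, f_y; z) = \exp\left(i 2\pi z \sqrt{1/\lambda^2 - f_x^2 - f_y^2}\right)$ for $f_x^2 + f_y^2 < 1/\lambda^2$ (and zero for the evanescent components) and transforming back. The sketch below assumes a square grid with uniform pixel pitch and discards evanescent waves; the function names and the usage values (633 nm wavelength, 8 μm pitch, 5 cm distance) are illustrative assumptions, not parameters of this study.

```python
import numpy as np


def angular_spectrum_transfer(n, dx, wavelength, z):
    """Transfer function H(fx, fy; z) on an n x n grid with pixel pitch dx (metres)."""
    fx = np.fft.fftfreq(n, d=dx)                       # spatial frequencies in cycles/m
    fx2, fy2 = np.meshgrid(fx ** 2, fx ** 2, indexing="xy")
    arg = 1.0 / wavelength ** 2 - fx2 - fy2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))     # longitudinal wave number
    # Propagating components acquire the phase kz*z; evanescent ones (arg <= 0) are dropped.
    return np.where(arg > 0, np.exp(1j * kz * z), 0.0)


def propagate(u0, dx, wavelength, z):
    """Angular spectrum propagation of a square complex field u0 by distance z."""
    h = angular_spectrum_transfer(u0.shape[0], dx, wavelength, z)
    return np.fft.ifft2(np.fft.fft2(u0) * h)


rng = np.random.default_rng(1)
u0 = np.exp(1j * rng.uniform(-np.pi, np.pi, (64, 64)))       # unit-amplitude phase-only field
u1 = propagate(u0, dx=8e-6, wavelength=633e-9, z=0.05)       # forward by 5 cm
u_back = propagate(u1, dx=8e-6, wavelength=633e-9, z=-0.05)  # and back again
```

With this pitch every sampled frequency is propagating, so $|H| = 1$, energy is conserved, and propagating by $-z$ undoes propagation by $+z$; evaluating several values of $z$ with the same spectrum is what makes multiplane modeling inexpensive.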

The primary objective of this study is to develop an improved method for generating phase holograms that minimizes the reconstruction error across multiple focal planes rather than at a single plane. We aim to:

Implement advanced machine learning algorithms, specifically RMSprop and Adam, to enhance optimization.

Employ GPU acceleration, using a processor specialized for parallel computation, to reduce computation time and improve efficiency in tasks such as data processing and complex calculations.

Validate the proposed method through numerical simulations.

Our main contributions are:

We propose a novel multiplane optimization strategy that minimizes reconstruction errors at multiple focal distances. This approach improves the robustness and accuracy of holographic reconstructions.

We implement advanced optimization algorithms within a machine learning framework using TensorFlow and Keras, which enables efficient computation through GPU acceleration.

We provide a comprehensive comparison with traditional single-plane optimization methods, demonstrating the advantages of our approach in terms of convergence speed and reconstruction quality.

We validate the effectiveness of the proposed method through numerical simulations and an evaluation of the quality of the reconstructed images.

While experimental validation is essential to demonstrate practical applicability, in this work, we focus on the theoretical foundations and computational modeling of multiplane optimization in phase holography. By establishing a solid theoretical foundation, we lay the groundwork for future experimental investigations, which will be addressed in subsequent studies.

The remainder of this paper is organized as follows:

Section 2 covers the theoretical foundations of phase holography and the angular spectrum method.

Section 3 discusses the optimization algorithms employed, including their mathematical formulations and relevance to the problem.

Section 4 describes in detail our approach to multiplane optimization, including specific aspects of its implementation and the computational setup used for execution.

Section 5 presents the results of the numerical simulations, followed by a comprehensive discussion.

Finally, Section 7 concludes the paper and suggests directions for future research.

4. Methodology

4.1. Proposed Multiplane Optimization

Multiplane optimization in phase holography is a strategy designed to improve the accuracy of hologram generation by simultaneously addressing reconstruction errors at multiple distances. Unlike traditional methods, which optimize hologram quality in a single plane, this strategy considers multiple propagation planes so that the hologram maintains high fidelity over a range of key distances. Traditionally, optimization is performed by minimizing the error at a single observation plane located at a distance $z$ from the hologram. However, this approach often limits the reconstruction quality because it ignores variations in image quality at other propagation distances. To address this limitation, we propose a multiplane optimization strategy that minimizes the error of the reconstructed image at three key distances: $z - d$, $z$, and $z + d$, where $d$ is an offset distance determined by a prior exhaustive search.

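The proposed objective function minimizes the average error at the three selected distances, allowing a more robust and accurate holographic reconstruction over a broader range of depths. It is defined as:

$$\mathcal{L}_{\text{total}} = \frac{1}{3}\left(\mathrm{MSE}_{z-d} + \mathrm{MSE}_{z} + \mathrm{MSE}_{z+d}\right),$$

where

$$\mathrm{MSE}_{z'} = \frac{1}{N}\sum_{x,y}\left[I_{\text{target}}(x,y) - I_{z'}(x,y)\right]^2, \qquad z' \in \{z-d,\ z,\ z+d\},$$

and

$\mathrm{MSE}_{z}$, $\mathrm{MSE}_{z-d}$, and $\mathrm{MSE}_{z+d}$ represent the mean squared error at the distances $z$, $z-d$, and $z+d$, respectively.

$I_{\text{target}}(x,y)$ is the intensity of the reference image at coordinates $(x,y)$.

$I_{z}(x,y)$, $I_{z-d}(x,y)$, and $I_{z+d}(x,y)$ are the reconstructed intensities at coordinates $(x,y)$ at the distances $z$, $z-d$, and $z+d$, respectively.

$N$ is the total number of pixels in the image.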
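The objective itself is simple to evaluate once the three reconstructed intensities are available: one MSE per plane, each against the same target, averaged over the three planes. The sketch below assumes the intensities are NumPy arrays of equal shape; the function names are hypothetical, and the tiny arrays in the usage are chosen only so the result can be checked by hand.

```python
import numpy as np


def mse(target, recon):
    """Mean squared error over all N pixels."""
    return float(np.mean((target - recon) ** 2))


def multiplane_loss(target, recon_minus, recon_center, recon_plus):
    """Average of the MSE at z - d, z and z + d, all against the same target image."""
    return (mse(target, recon_minus) + mse(target, recon_center) + mse(target, recon_plus)) / 3.0


target = np.zeros((2, 2))
loss = multiplane_loss(target, np.zeros((2, 2)), np.ones((2, 2)), 2 * np.ones((2, 2)))
print(loss)  # (0 + 1 + 4) / 3, about 1.667
```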

The distance $d$ is a critical parameter representing the separation between the central plane at distance $z$ and the adjacent planes at $z - d$ and $z + d$. An exhaustive search was conducted to find the value of $d$ that minimizes the total MSE across these planes. The search range was from … to … meters, with increments of … meters, and the optimal value was $d = 9 \times 10^{-5}$ m (90 μm). In multiplane optimization, we calculate the MSE at positions $z \pm d$ using the same target image as at $z$. This approach seeks to improve the robustness of the hologram against small variations in the axial position of the reconstruction plane. By penalizing the differences between the defocused images at $z \pm d$ and the focused target image, we force the algorithm to find a phase distribution that maintains high reconstruction quality over a range of distances around $z$. This increases the effective depth of focus and makes the system more tolerant of misalignments or uncertainties in the propagation distance. Having defined the optimization strategy, we next establish metrics to evaluate the effectiveness of the generated holograms comprehensively.
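The exhaustive search over $d$ is a plain grid search: evaluate the total multiplane error for each candidate offset and keep the smallest. In the sketch below, `total_mse` is a hypothetical caller-supplied function standing in for a full optimization run at a given $d$; the toy cost (with its minimum placed at 90 μm) and the 10 μm grid are illustrative assumptions and do not reproduce the range or step size used in this study.

```python
def exhaustive_search_d(candidates, total_mse):
    """Return (best_d, best_value) over the candidate offsets.

    total_mse is a hypothetical callable that runs the multiplane optimization
    for a given d and returns the total MSE across the three planes.
    """
    best_d, best_val = None, float("inf")
    for d in candidates:
        val = total_mse(d)
        if val < best_val:
            best_d, best_val = d, val
    return best_d, best_val


def toy_total_mse(d):
    """Illustrative stand-in cost with its minimum at d = 90 um."""
    return (d - 90e-6) ** 2


candidates = [k * 10e-6 for k in range(1, 21)]  # 10 um to 200 um in 10 um steps (illustrative)
best_d, best_val = exhaustive_search_d(candidates, toy_total_mse)
```

Because each evaluation of the real cost requires a complete optimization, the cost of the search grows linearly with the number of candidates, which is why it is performed once, before the main optimization.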

4.2. Evaluating Image Quality

In addition to using the MSE as an error measure, we incorporate the Structural Similarity Index Measure (SSIM) and the Peak Signal-to-Noise Ratio (PSNR) to evaluate the quality of the reconstructed images. These metrics provide a comprehensive assessment of image fidelity, considering factors beyond simple pixel-wise differences.

SSIM is a metric designed to measure the perceived similarity between two images, considering changes in luminance, contrast, and structure. It was developed as a direct improvement over the Universal Quality Index (UQI) [54], which already considered these factors but in a more limited way. SSIM introduces key improvements that better align with the human perception of visual quality [55]. Mathematically, the SSIM between two images $x$ and $y$ is defined as:

$$\mathrm{SSIM}(x,y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},$$

where:

$\mu_x$ and $\mu_y$ are the mean values of images $x$ and $y$, respectively.

$\sigma_x^2$ and $\sigma_y^2$ are the variances of $x$ and $y$.

$\sigma_{xy}$ is the covariance between $x$ and $y$, calculated as:

$$\sigma_{xy} = \frac{1}{N-1}\sum_{i=1}^{N}(x_i - \mu_x)(y_i - \mu_y).$$

The terms $C_1$ and $C_2$ are small constants that avoid instability when the denominators approach zero. SSIM ranges from $-1$ to 1, where 1 indicates structurally identical images.
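A direct transcription of the SSIM formula helps check its behavior. The sketch below evaluates the statistics over the whole image in a single window and uses the common choice $C_1 = (K_1 L)^2$, $C_2 = (K_2 L)^2$ with $K_1 = 0.01$, $K_2 = 0.03$ and data range $L$; these constants, the function name, and the flat-list input are assumptions. Standard implementations (for example, scikit-image's `structural_similarity` or TensorFlow's `tf.image.ssim`) instead compute the index over local Gaussian-weighted windows and average the results, so their values will generally differ from this global version.

```python
def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over two equally sized flat pixel sequences."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2  # stabilising constants
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den


img = [0.0, 0.25, 0.5, 0.75, 1.0]
inverted = [1.0 - p for p in img]
print(global_ssim(img, img))       # 1.0 for identical inputs
print(global_ssim(img, inverted))  # negative: the structure is anti-correlated
```

The inverted example shows why SSIM can fall below zero: identical means and variances still give a negative index when the covariance term is negative.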

PSNR is a metric based on the pixel-wise intensity differences between two images and is defined (in decibels) as:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$

where $\mathrm{MAX}_I$ is the maximum possible pixel value of the image (e.g., 255 for 8-bit images), and MSE is the mean squared error between the images. By incorporating these additional metrics, a more comprehensive evaluation of the reconstructed image quality is achieved. This encompasses the average error, structural similarity, and perceived visual quality, allowing for a more precise and detailed analysis of the reconstruction performance.
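A minimal PSNR sketch, assuming flat pixel sequences and an 8-bit maximum value by default; the function name is hypothetical. Identical images have zero MSE, so the sketch returns infinity in that case rather than dividing by zero.

```python
import math


def psnr(x, y, max_val=255.0):
    """PSNR in dB between two equally sized flat pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


value = psnr([0, 0, 0, 0], [0, 0, 0, 255])
print(value)  # 10*log10(4), about 6.02 dB
```

Here one pixel out of four differs by the full range, so MSE $= 255^2/4$ and the ratio inside the logarithm is exactly 4.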

4.3. Implementation Details

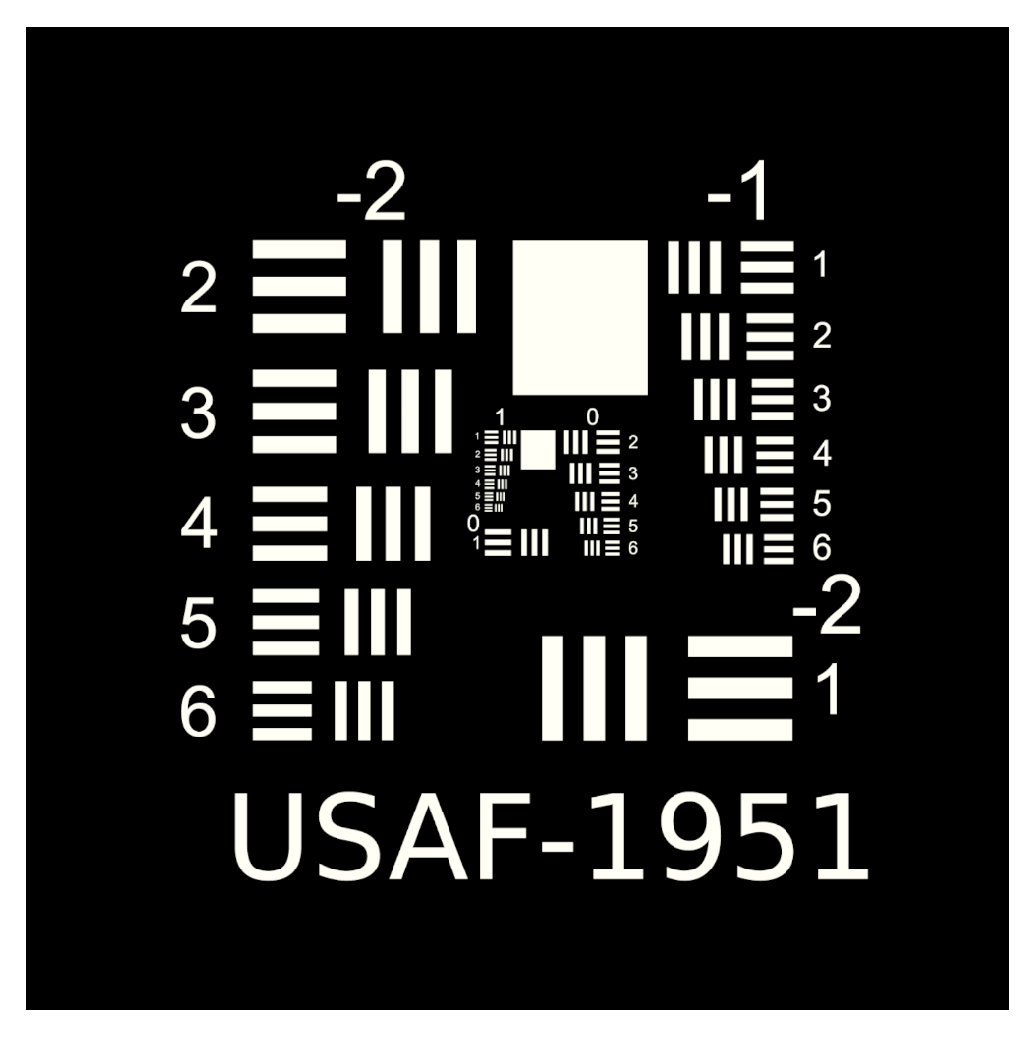

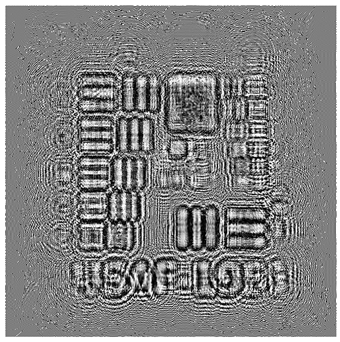

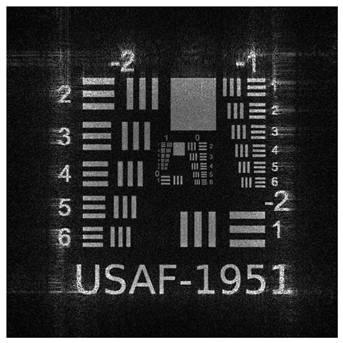

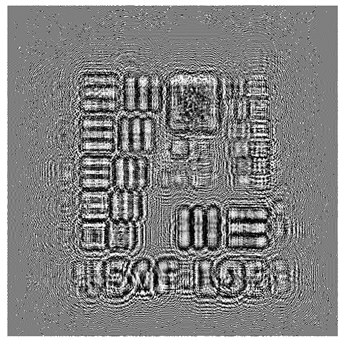

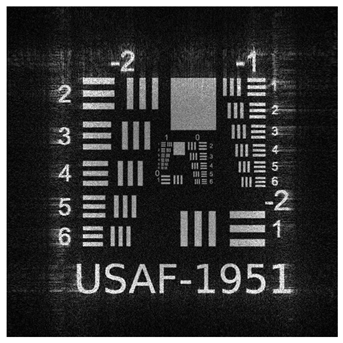

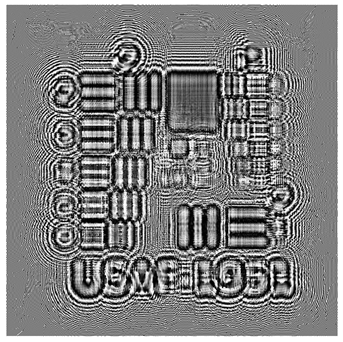

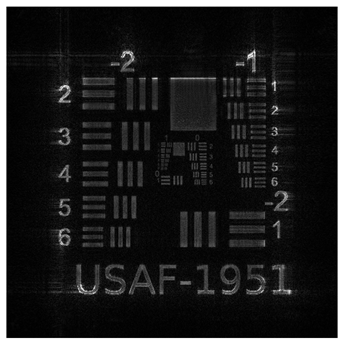

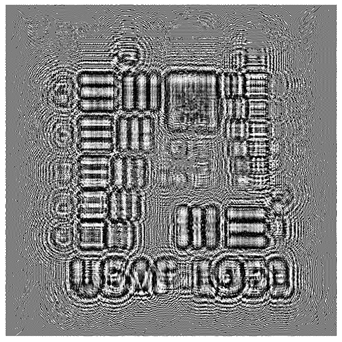

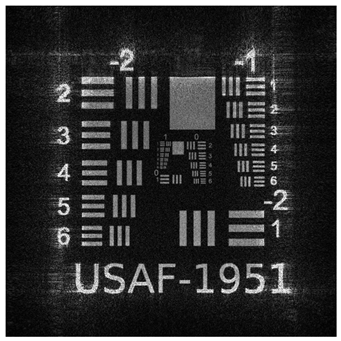

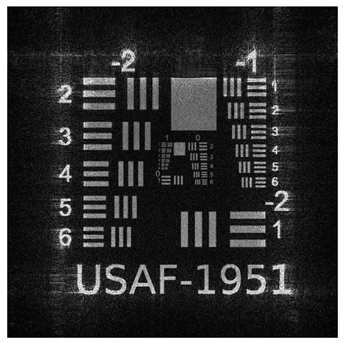

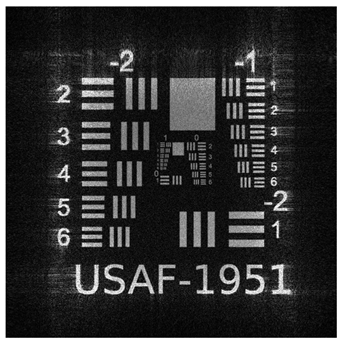

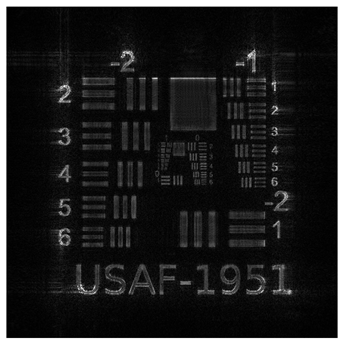

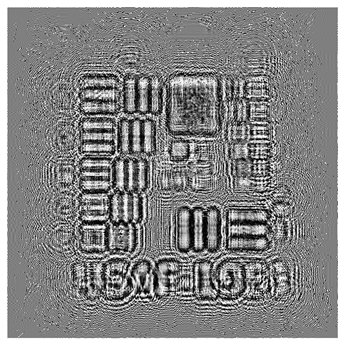

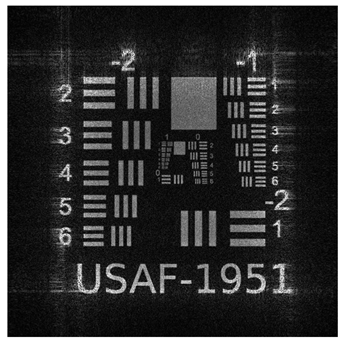

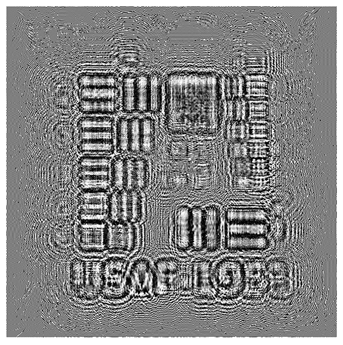

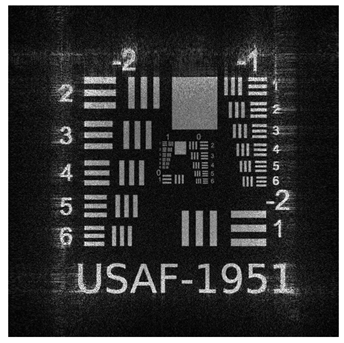

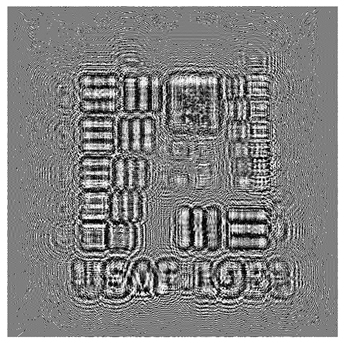

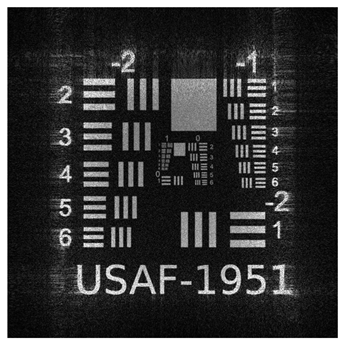

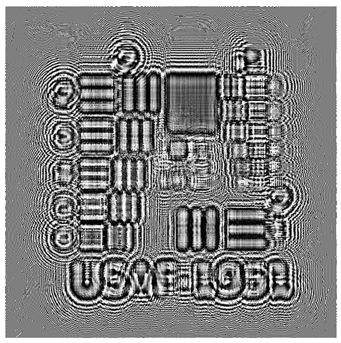

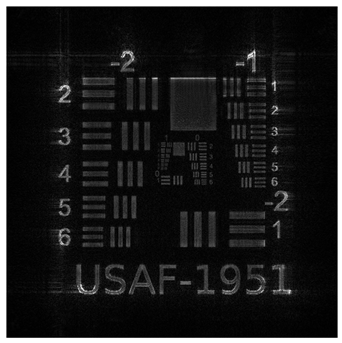

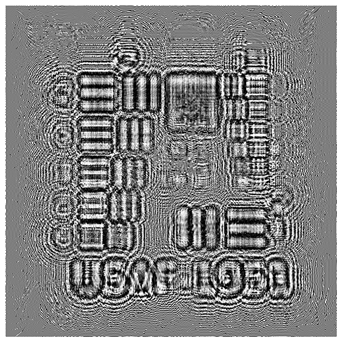

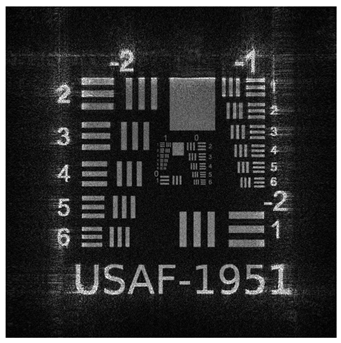

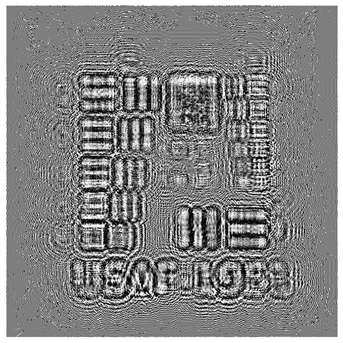

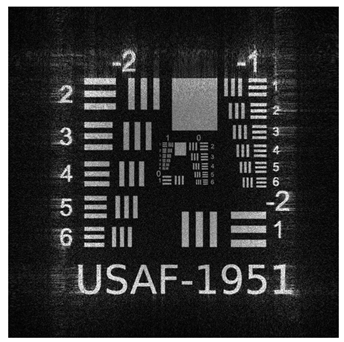

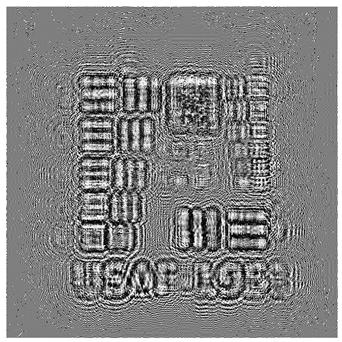

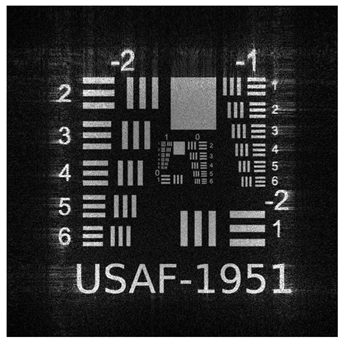

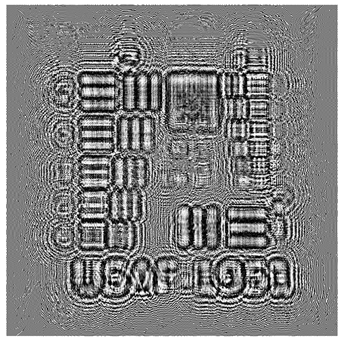

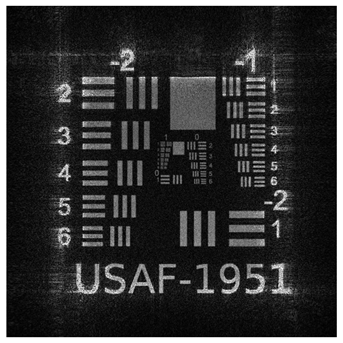

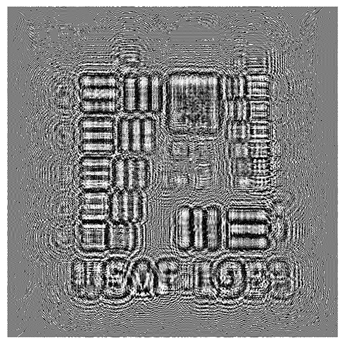

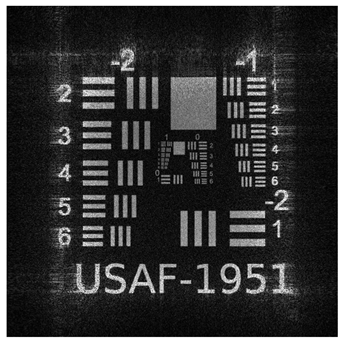

To validate the effectiveness of our multiplane optimization approach, we conducted tests using a standard pattern widely employed to evaluate the resolution of optical systems: the USAF-1951 test chart (Figure 1). This design rigorously assesses the optical system's ability to resolve details at multiple resolution levels, providing a quantitative measure of reconstructed image quality [56].

The model uses the TensorFlow 2.6 library and integrates a custom intermediate layer into a neural network to optimize phase hologram generation. The implementation was run on an ASUS ROG Strix G16 computer with an Intel Core i9-14900HX processor and an NVIDIA GeForce RTX 4060 graphics card (8 GB of GDDR6 memory, 128-bit bus). The software was developed using Python 3.7, CUDA 11.8, and TensorFlow 2.6, and the GPU was used for computationally intensive tasks such as image processing and model parameter tuning during training. The model is evaluated using four optimizers: Adam, Nadam, RMSprop, and SGD. A custom holographic layer implemented in TensorFlow simulates hologram propagation using the angular spectrum method, and the optimization runs on the GPU. During optimization, the reconstruction error is evaluated at the three key distances.

The phase of the hologram is calculated using the angular spectrum method described in the theoretical section and optimized to minimize the error at distances $z - d$, $z$, and $z + d$. The parameter $d$ is determined through a prior exhaustive search: $d$ is varied within a predetermined range, the objective function is evaluated for each value, and the value that minimizes the total error across the three distances is selected for the subsequent optimization.

4.4. Experimental Procedure

This study focuses on the theoretical development and numerical simulation of the proposed multiplane optimization method. By implementing the algorithms within a computational framework, we systematically analyze the method's performance and effectiveness under controlled conditions. This approach isolates the effects of the optimization strategy from confounding variables present in experimental setups, offering a clear understanding of the method's underlying principles and potential benefits.

Phase Hologram Initialization: We compute the initial phase by performing a backpropagation of the object’s optical field, using an interference simulation between the object and reference wavefronts. This process incorporates structural information from the target image, ensuring a consistent and fair starting point for all optimization algorithms.

Hologram Propagation: The hologram is propagated using the angular spectrum method to three specific distances: $z - d$, $z$, and $z + d$.

MSE Calculation: For each propagated plane, the MSE is calculated between the reconstructed and target images.

Phase Hologram Update: The phase hologram is updated using the selected optimization algorithm (e.g., Adam, Nadam, RMSprop, or SGD). This step involves iteratively adjusting the hologram’s phase values to minimize the loss function calculated in the previous step.

Optimization Process Iteration: The propagation, MSE calculation, and phase update steps are repeated for a predefined number of iterations. This iterative process progressively refines the phase hologram and improves the reconstruction quality as the optimization converges toward a (locally) optimal solution.

Data Logging: During the optimization process, MSE values and reconstruction images are logged at specific iteration intervals. This systematic logging allows for monitoring the optimization progress, assessing continuous improvement in reconstruction quality, and detecting potential stagnation or premature convergence.
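The propagation, MSE, and update steps can be sketched without a deep learning framework by writing the gradient of the multiplane loss explicitly. For $u = A e^{i\phi}$, propagated fields $u_j = P_j u$, and per-plane residuals $r_j = |u_j|^2 - I_{\text{target}}$, the chain rule gives $\partial \mathcal{L}/\partial\phi = 2\,\mathrm{Im}\left[\bar{u}\sum_j P_j^{H}(g_j u_j)\right]$ with $g_j = 2 r_j / (3N)$, where the adjoint $P_j^{H}$ applies $\overline{H_j}$ instead of $H_j$. This closed form is our illustration of what an autodiff framework such as TensorFlow would compute; the function names and the numerical values in the usage ($z = 1$ mm, 8 μm pitch, 633 nm, a 32 × 32 grid) are assumptions, with only $d = 90$ μm taken from the text.

```python
import numpy as np


def transfer(n, dx, wavelength, z):
    """Angular spectrum transfer function on an n x n grid (evanescent waves dropped)."""
    fx = np.fft.fftfreq(n, d=dx)
    fx2, fy2 = np.meshgrid(fx ** 2, fx ** 2)
    arg = 1.0 / wavelength ** 2 - fx2 - fy2
    return np.where(arg > 0, np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))), 0.0)


def multiplane_loss_and_grad(phi, amp_src, target_int, transfers):
    """Propagate to each plane, average the intensity MSE, and return dL/dphi.

    The gradient back-propagates the residuals with the adjoint transfer
    function conj(H), written out by hand for illustration.
    """
    u = amp_src * np.exp(1j * phi)
    spectrum = np.fft.fft2(u)
    n_planes, n_pix = len(transfers), target_int.size
    loss, back = 0.0, np.zeros_like(u)
    for h in transfers:
        u_j = np.fft.ifft2(spectrum * h)
        resid = np.abs(u_j) ** 2 - target_int
        loss += np.mean(resid ** 2) / n_planes
        g = 2.0 * resid / (n_pix * n_planes)              # dL/dI_j
        back += np.fft.ifft2(np.conj(h) * np.fft.fft2(g * u_j))
    grad = 2.0 * np.imag(np.conj(u) * back)
    return float(loss), grad


n, dx, wl, z, d = 32, 8e-6, 633e-9, 1e-3, 90e-6  # illustrative values except d
transfers = [transfer(n, dx, wl, zj) for zj in (z - d, z, z + d)]
rng = np.random.default_rng(0)
phi = rng.uniform(-np.pi, np.pi, (n, n))
amp = np.ones((n, n))                 # uniform illumination
target = np.zeros((n, n))
target[8:24, 8:24] = 4.0              # square target with the same total energy
loss, grad = multiplane_loss_and_grad(phi, amp, target, transfers)
```

A finite-difference check on a single pixel is a cheap way to confirm that such a hand-written gradient matches the loss; any of the optimizers listed above can then consume `grad` in the phase update step.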

During hologram reconstruction, unwanted diffraction orders may appear and distort the reconstructed image. In our methodology, the computational model considers only the desired diffraction order and omits the others, which simplifies the mathematical model, facilitates optimization, and reduces the computational cost. In addition, to ensure a fair comparison between the optimization algorithms, we use an initial phase based on an interference simulation between the object and reference wavefronts. Specifically, we backpropagate the object's optical field and keep only the phase resulting from this backpropagation. This approach incorporates structural information about the object into the initial phase, improving the convergence and effectiveness of the optimization algorithms. Mathematically, the initial phase is computed as

$$U_0(f_x,f_y) = \mathcal{F}\left\{A_{\text{target}}(x,y)\, e^{i\phi_0(x,y)}\right\},$$

$$u_b(x,y) = \mathcal{F}^{-1}\left\{U_0(f_x,f_y)\, e^{-i k_z z}\right\},$$

$$\phi_{\text{init}}(x,y) = \arg\left[u_b(x,y)\right],$$

where $\phi_0(x,y)$ is an initial phase assigned based on a reference image, $A_{\text{target}}(x,y) = \sqrt{I_{\text{target}}(x,y)}$ is the target amplitude, and $k_z = 2\pi\sqrt{1/\lambda^2 - f_x^2 - f_y^2}$. The Fourier transform $U_0$ is calculated, the backpropagation factor $e^{-i k_z z}$ is applied, and the backpropagated field $u_b$ is obtained by the inverse Fourier transform. The initial phase is set as $\phi_{\text{init}} = \arg(u_b)$. This methodological choice ensures that all optimization algorithms start from the same physical condition, allowing a fair and consistent performance comparison.
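The initial-phase computation amounts to one angular spectrum backpropagation followed by keeping the phase. The sketch below assumes a square grid, discards evanescent components, and models only the backpropagation step (not the full interference simulation with a reference wave); the function name is hypothetical, and because the text does not specify $\phi_0$ beyond "assigned based on a reference image", it is left as an input, with the zero phase in the usage being an arbitrary illustrative choice.

```python
import numpy as np


def backprop_initial_phase(target_int, phi0, dx, wavelength, z):
    """phi_init = arg( F^-1{ F{sqrt(I_target) exp(i phi0)} * exp(-i kz z) } )."""
    n = target_int.shape[0]
    fx = np.fft.fftfreq(n, d=dx)
    fx2, fy2 = np.meshgrid(fx ** 2, fx ** 2)
    arg = 1.0 / wavelength ** 2 - fx2 - fy2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    back_factor = np.where(arg > 0, np.exp(-1j * kz * z), 0.0)  # backpropagation factor
    u_obj = np.sqrt(target_int) * np.exp(1j * phi0)              # object field at the target plane
    u_b = np.fft.ifft2(np.fft.fft2(u_obj) * back_factor)         # field back at the hologram plane
    return np.angle(u_b)                                         # keep only the phase


rng = np.random.default_rng(0)
target = rng.uniform(0.1, 1.0, (32, 32))
phi0 = np.zeros((32, 32))  # hypothetical choice of phi_0
phi_init = backprop_initial_phase(target, phi0, dx=8e-6, wavelength=633e-9, z=1e-3)
```

As a sanity check, with $z = 0$ no backpropagation occurs and the returned phase is simply $\phi_0$ wherever the target amplitude is nonzero.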

Algorithm 1 summarizes the iterative optimization, which adjusts the hologram phase using a multiplane loss function to reduce reconstruction errors in three strategic planes. Implementing custom propagation layers in a simple neural network environment facilitates evaluating the hologram's performance in each plane, improving its stability and versatility in different applications. This approach optimizes reconstruction accuracy at the desired distance and increases the hologram's resilience to variations in position, broadening its applicability.

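Algorithm 1 Multiplane Optimization

Inputs:

Target image $I_{\text{target}}$

Source image $I_{\text{source}}$

Physical parameters: wavelength $\lambda$, distance $z$, difference $d$, sizes $N_x$, $N_y$, extents $L_x$, $L_y$

Initialization:

Compute spacings and coordinates:

$\Delta x = L_x / N_x$, $\Delta y = L_y / N_y$

$x$, $y$, $f_x$, $f_y$ (spatial coordinates and frequencies)

Compute wave number in z:

$k_z = 2\pi\sqrt{1/\lambda^2 - f_x^2 - f_y^2}$

Define propagation distances:

$z_1 = z - d$, $z_2 = z$, $z_3 = z + d$

Compute transfer functions:

$H_j = \exp(i k_z z_j)$, for $j = 1, 2, 3$

Process images to obtain amplitudes:

$A_{\text{target}} = \sqrt{I_{\text{target}}}$

$A_{\text{source}} = \sqrt{I_{\text{source}}}$

Estimate initial phase $\phi_0$ via inverse backpropagation

Optimization:

Initialize $\phi \leftarrow \phi_0$

for $t = 1$ to $T$ do

for $j = 1$ to 3 do

Compute field: $u = A_{\text{source}} \exp(i\phi)$

Propagate field: $u_j = \mathcal{F}^{-1}\{\mathcal{F}\{u\} \cdot H_j\}$

Obtain amplitude: $A_j = |u_j|$

end for

Compute loss: $\mathcal{L} = \frac{1}{3}\sum_{j=1}^{3}\frac{1}{N}\sum_{x,y}\left[I_{\text{target}}(x,y) - A_j^2(x,y)\right]^2$

Update phase $\phi$ using $\nabla_{\phi}\mathcal{L}$ (optimizers: Adam, Nadam, SGD, RMSprop)

end for

Output:

Optimized phase mask $\phi$

Reconstructed images $A_j^2$, for $j = 1, 2, 3$

Metrics report: MSE, SSIM, and PSNR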
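Algorithm 1 can be prototyped end to end in a few lines of NumPy. The sketch below is an illustration under stated assumptions, not the TensorFlow/Keras implementation described in Section 4.3: it uses a square grid, drops evanescent components, writes the gradient of the three-plane intensity MSE in closed form (propagating residuals back with $\overline{H_j}$), and applies a textbook Adam update; all function names, the learning rate, the iteration count, the random initial phase, and the physical values except $d = 90$ μm are hypothetical.

```python
import numpy as np


def transfer(n, dx, wavelength, z):
    """Angular spectrum transfer function H_j (evanescent components dropped)."""
    fx = np.fft.fftfreq(n, d=dx)
    fx2, fy2 = np.meshgrid(fx ** 2, fx ** 2)
    arg = 1.0 / wavelength ** 2 - fx2 - fy2
    return np.where(arg > 0, np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))), 0.0)


def loss_and_grad(phi, amp_src, target_int, transfers):
    """Three-plane intensity MSE and its closed-form gradient with respect to phi."""
    u = amp_src * np.exp(1j * phi)
    spectrum = np.fft.fft2(u)
    loss, back = 0.0, np.zeros_like(u)
    scale = target_int.size * len(transfers)
    for h in transfers:
        u_j = np.fft.ifft2(spectrum * h)
        resid = np.abs(u_j) ** 2 - target_int
        loss += np.sum(resid ** 2) / scale
        back += np.fft.ifft2(np.conj(h) * np.fft.fft2(2.0 * resid / scale * u_j))
    return float(loss), 2.0 * np.imag(np.conj(u) * back)


def multiplane_optimize(target_int, amp_src, phi0, dx, wavelength, z, d,
                        steps=200, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    """Algorithm 1 sketch: Adam updates of the hologram phase on the three-plane loss."""
    n = target_int.shape[0]
    transfers = [transfer(n, dx, wavelength, zj) for zj in (z - d, z, z + d)]
    phi, m, v, history = phi0.copy(), np.zeros_like(phi0), np.zeros_like(phi0), []
    for t in range(1, steps + 1):
        loss, g = loss_and_grad(phi, amp_src, target_int, transfers)
        history.append(loss)                         # data logging
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat, v_hat = m / (1 - beta1 ** t), v / (1 - beta2 ** t)
        phi = phi - lr * m_hat / (np.sqrt(v_hat) + eps)
    field = np.fft.fft2(amp_src * np.exp(1j * phi))
    recon = [np.abs(np.fft.ifft2(field * h)) ** 2 for h in transfers]  # I at z-d, z, z+d
    return phi, recon, history


n = 32
target = np.zeros((n, n))
target[8:24, 8:24] = 4.0
rng = np.random.default_rng(0)
phi, recon, history = multiplane_optimize(
    target, np.ones((n, n)), rng.uniform(-np.pi, np.pi, (n, n)),
    dx=8e-6, wavelength=633e-9, z=1e-3, d=90e-6)
print(history[0], history[-1])  # the loss should drop over the iterations
```

Swapping the Adam lines for another update rule (plain SGD, RMSprop, or Nadam) is the only change needed to compare optimizers from the same initial phase, mirroring the comparison described above.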