Automatic Weight-Bearing Foot Series Measurements Using Deep Learning

Abstract

1. Introduction

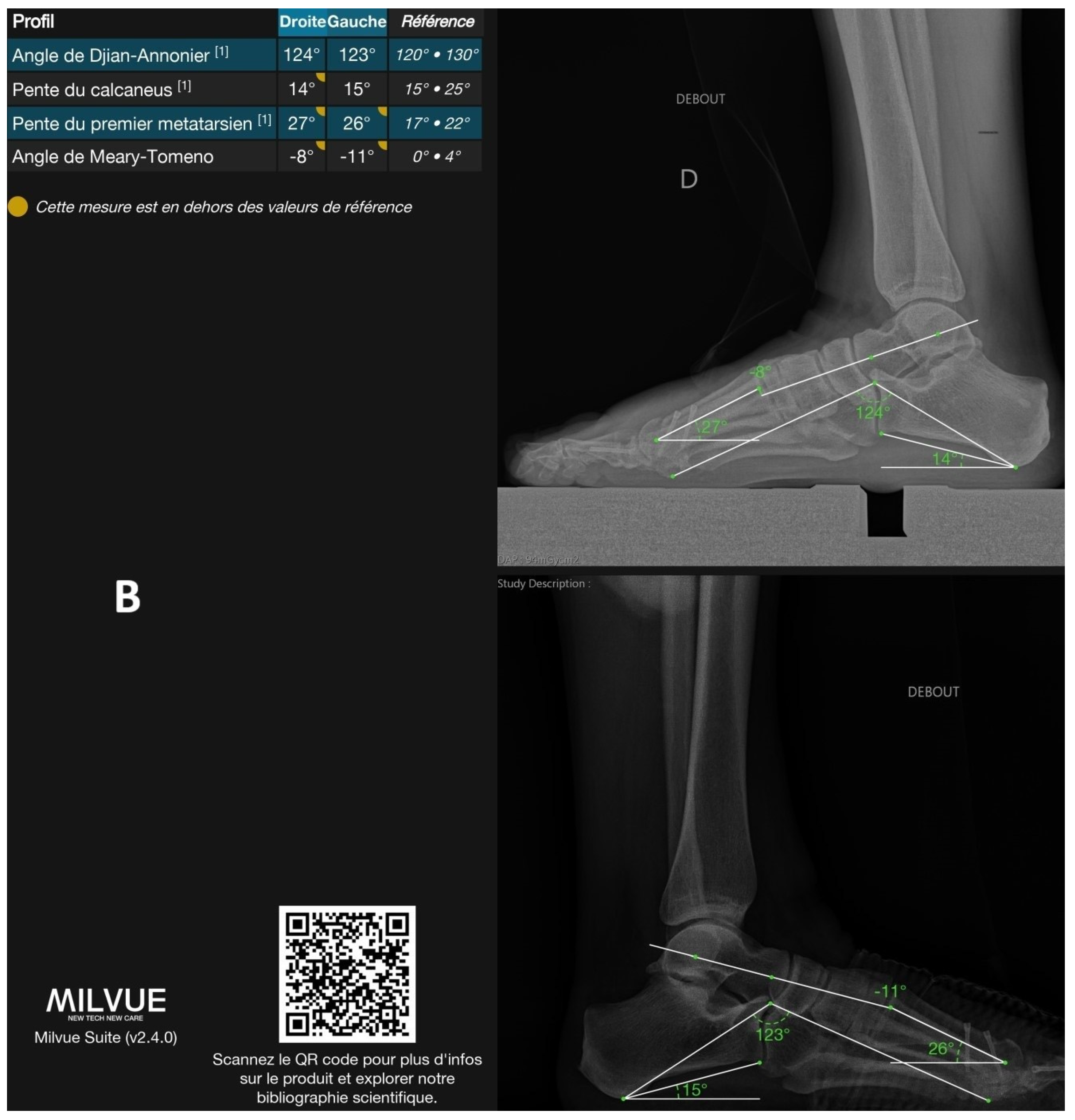

1.1. Clinical Context and Challenges

1.2. Related Work

1.3. Objectives of the Study

2. Materials and Methods

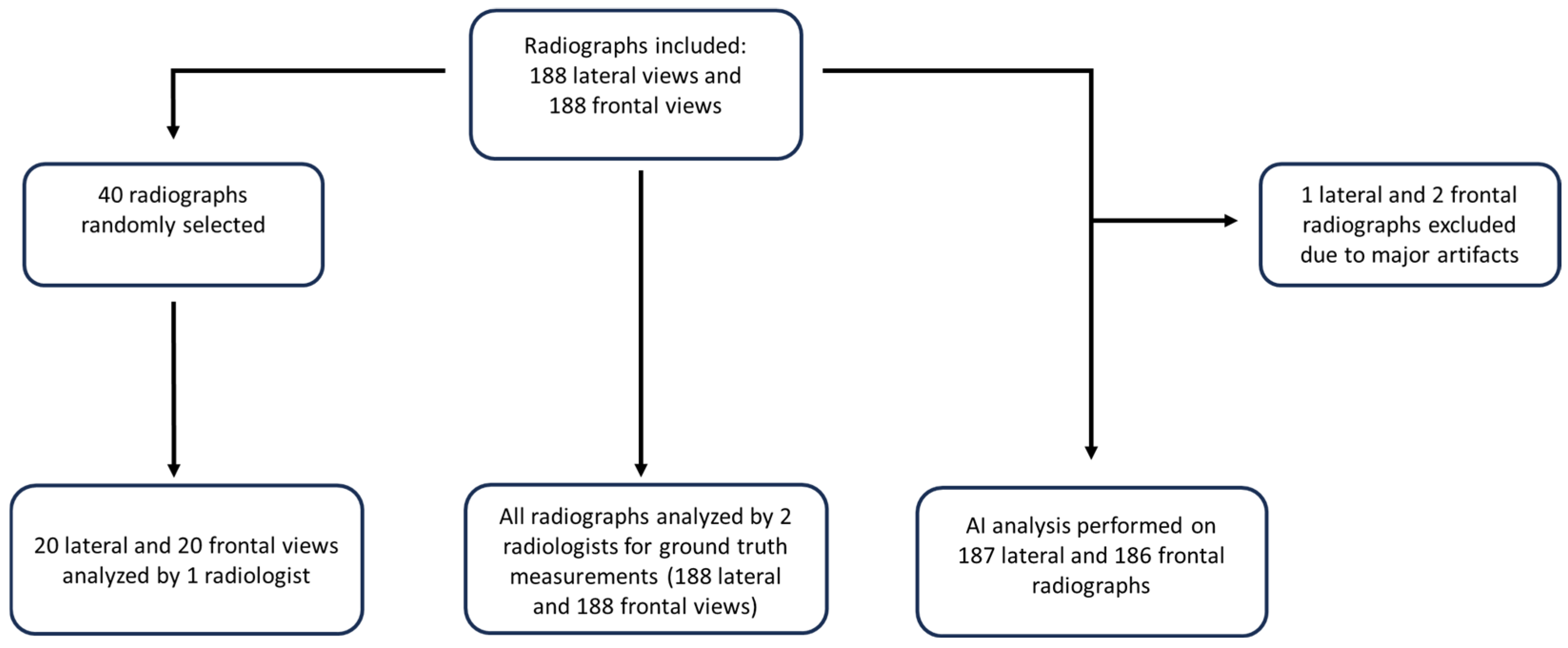

2.1. Study Design

2.2. Data Source and Processing

2.3. Ground-Truth Measurements and Inter-Reader Variability

2.4. Statistical Analyses

3. Results

3.1. General Results

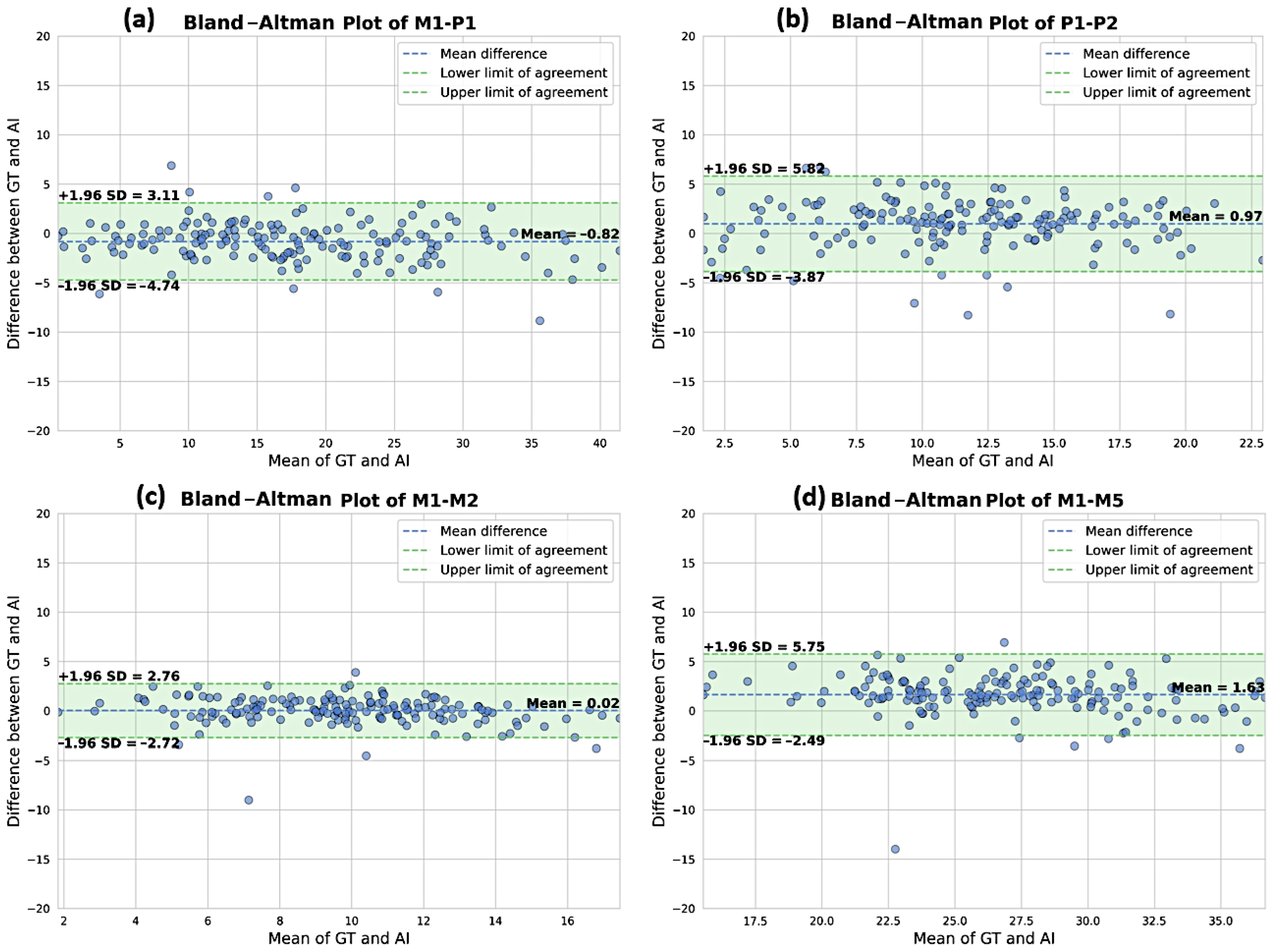

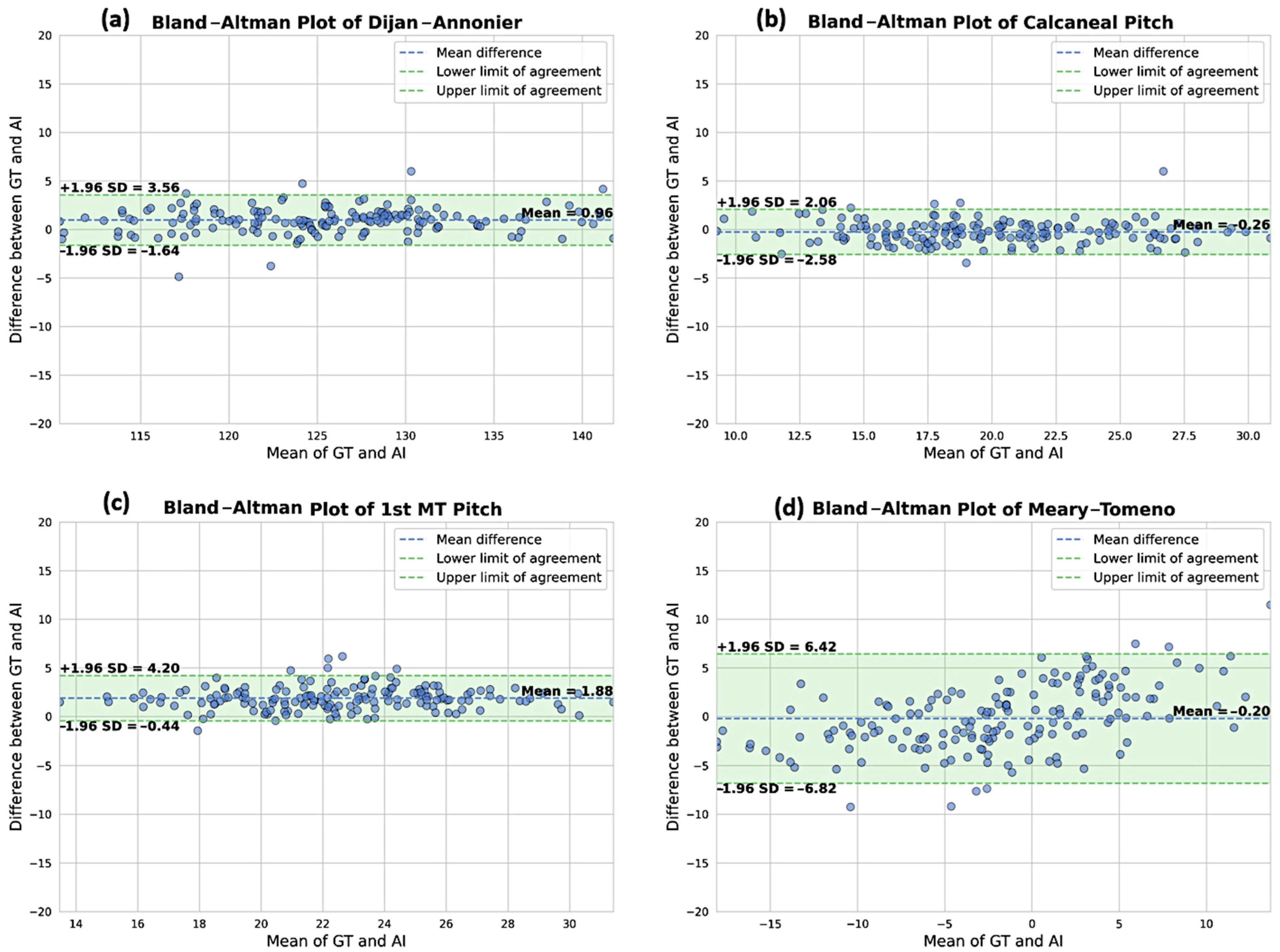

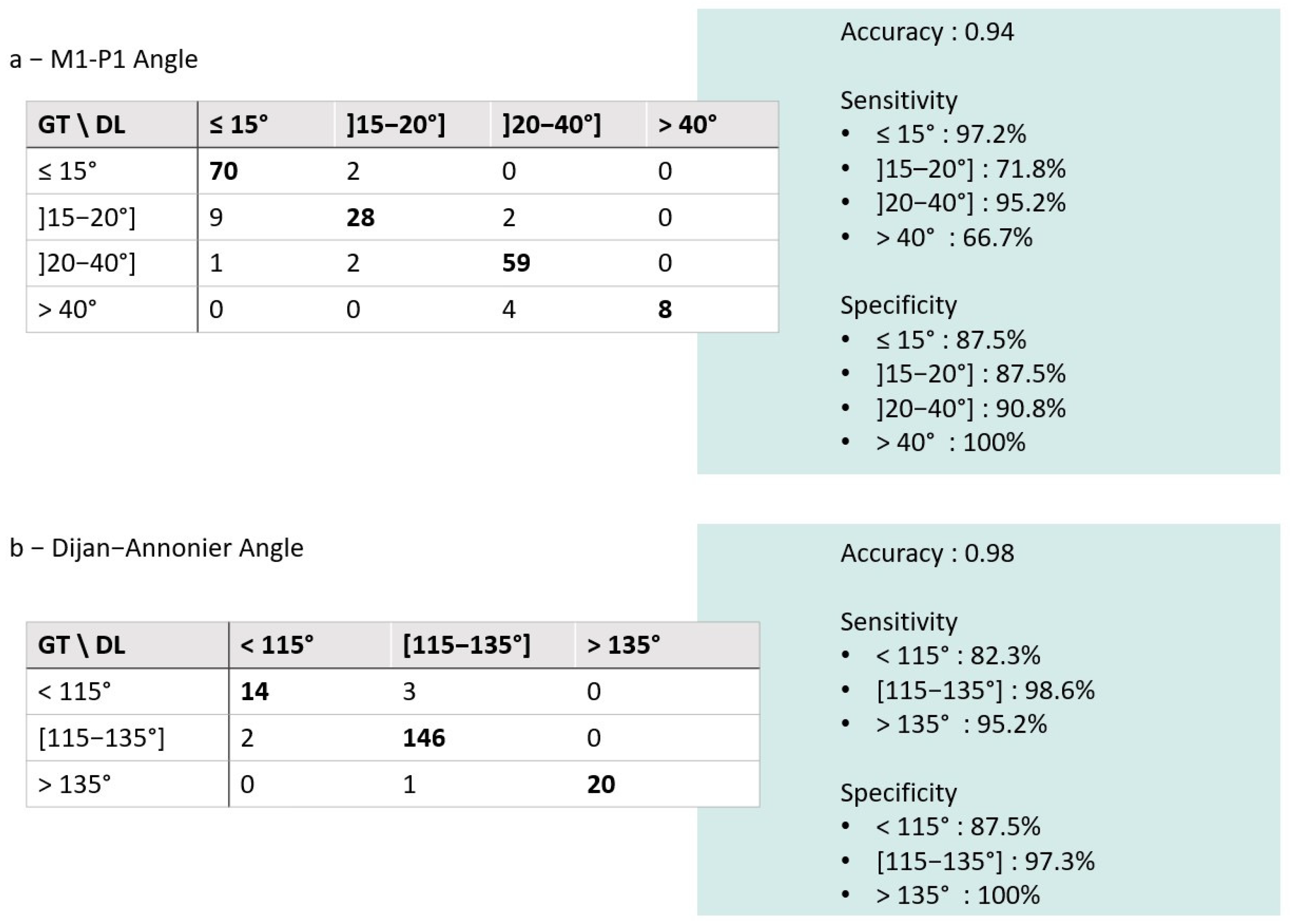

3.2. Comparison of the DL Solution to the Ground Truth

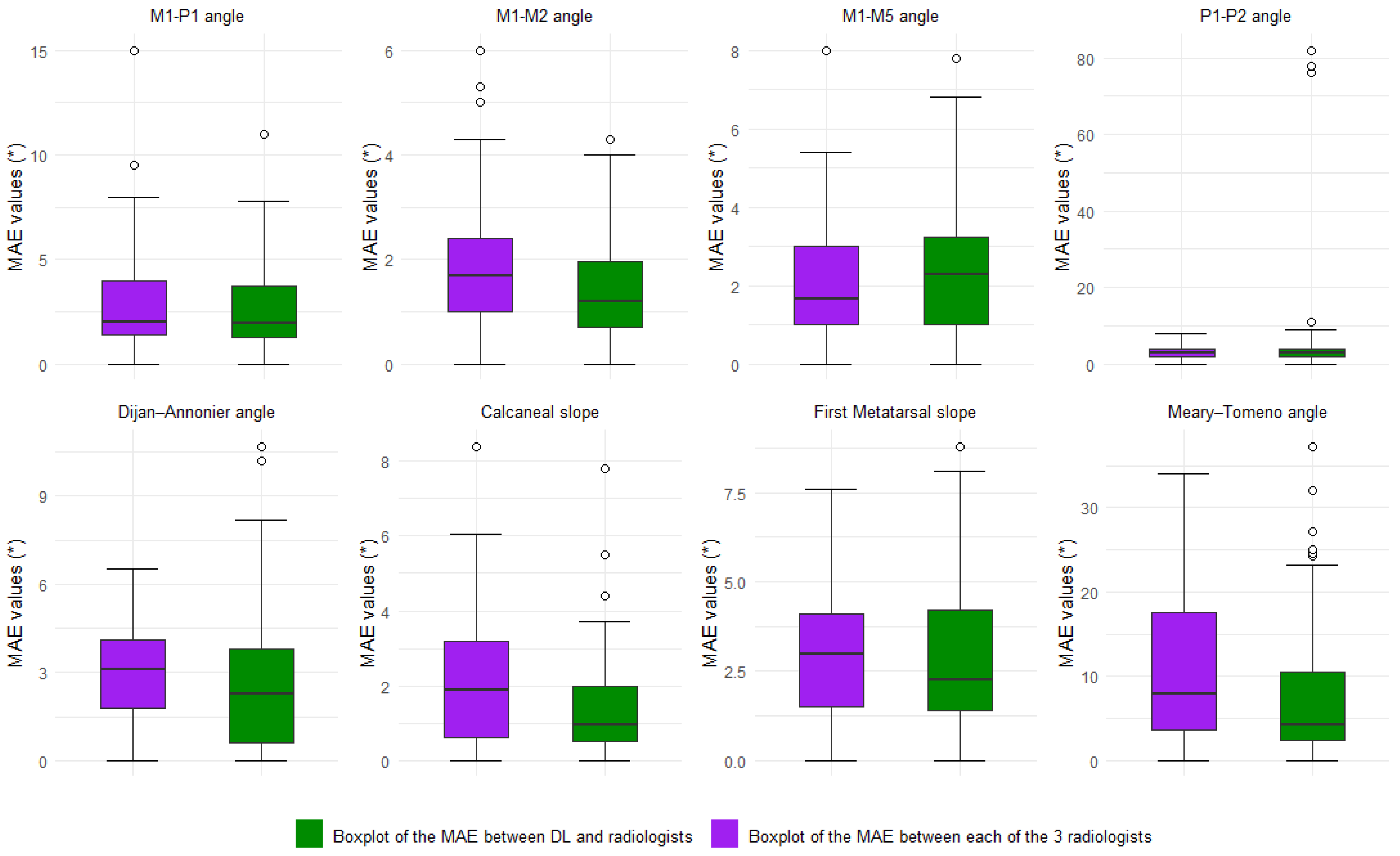

3.3. Inter-Reader Variability and Comparison to the DL Model

3.4. The Assessment of Time to Measurements

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| DL | Deep Learning |
| CNN | Convolutional Neural Network |
| ROI | Region of Interest |
| GT | Ground Truth |
| IRB | Institutional Review Board |
| CLAIM | Checklist for Artificial Intelligence in Medical Imaging |
| PACS | Picture Archiving and Communication System |
| DICOM | Digital Imaging and Communications in Medicine |
| MAE | Mean Absolute Error |
| CI | Confidence Interval |
| NMAE | Normalized Mean Absolute Error |
| ICC | Intraclass Correlation Coefficient |
| TP | True Positive |
| TN | True Negative |
| FP | False Positive |
| FN | False Negative |
| Sn | Sensitivity (TP/(TP + FN)) |
| Sp | Specificity (TN/(TN + FP)) |
| SD | Standard Deviation |
| HVA | Hallux Valgus Angle |
| IMA | Intermetatarsal Angle |
| M1-P1, M1-M2, etc. | Abbreviations for Metatarsal and Phalangeal Angles (e.g., 1st Metatarsal–1st Phalanx) |
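
The sensitivity and specificity definitions listed above (Sn = TP/(TP + FN), Sp = TN/(TN + FP)) can be illustrated with a minimal Python sketch. The function names and the boolean labels below are hypothetical and serve only to show the arithmetic; they are not taken from the study.

```python
from typing import Sequence, Tuple


def confusion_counts(predicted: Sequence[bool],
                     reference: Sequence[bool]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for paired boolean labels."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    tp = tn = fp = fn = 0
    for p, r in zip(predicted, reference):
        if p and r:
            tp += 1
        elif not p and not r:
            tn += 1
        elif p and not r:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


def sensitivity(tp: int, fn: int) -> float:
    """Sn = TP / (TP + FN)."""
    if tp + fn == 0:
        raise ValueError("sensitivity is undefined without positive cases")
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Sp = TN / (TN + FP)."""
    if tn + fp == 0:
        raise ValueError("specificity is undefined without negative cases")
    return tn / (tn + fp)


# Hypothetical labels: True means "abnormal" for one angle on one radiograph.
dl_labels = [True, True, False, False, True]
gt_labels = [True, False, False, False, True]
tp, tn, fp, fn = confusion_counts(dl_labels, gt_labels)
print(f"Sn = {sensitivity(tp, fn):.2f}, Sp = {specificity(tn, fp):.2f}")
```

Both helpers raise an error instead of returning a value when the denominator is zero, since the ratio is undefined in that case.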

| Parameter group | Parameter | N. of cases | DL vs. GT ICC (95% CI) | DL vs. GT MAE (°) (95% CI) | DL vs. GT NMAE | DL vs. GT bias (°) (95% CI) |
|---|---|---|---|---|---|---|
| Frontal | M1-P1 | 186 | 0.91 (0.87; 0.93) | 2.27 (1.56; 3.55) | 19.7% | −0.44 (−1.25; 0.91) |
| Frontal | M1-M2 | 186 | 0.96 (0.94; 0.97) | 0.96 (0.82; 1.12) | 40.5% | −0.01 (−0.21; 0.20) |
| Frontal | M1-M5 | 186 | 0.94 (0.80; 0.97) | 2.15 (1.92; 2.41) | 67.7% | 1.59 (1.26; 1.92) |
| Frontal | P1-P2 | 186 | 0.51 (0.33; 0.63) | 3.16 (2.03; 4.84) | 127.7% | 1.96 (0.76; 3.65) |
| Lateral | Djian–Annonier | 187 | 0.99 (0.97; 0.99) | 1.38 (1.21; 1.58) | 21.1% | 0.88 (0.61; 1.10) |
| Lateral | Calcaneal slope | 187 | 0.99 (0.98; 0.99) | 0.92 (0.81; 1.04) | 20.0% | −0.25 (−0.42; −0.06) |
| Lateral | 1st MT slope | 187 | 0.93 (0.06; 0.98) | 1.90 (1.73; 2.07) | 68.3% | 1.86 (1.68; 2.04) |
| Lateral | Meary–Tomeno | 187 | 0.94 (0.92; 0.96) | 2.83 (2.49; 3.16) | 61.4% | −0.07 (−0.61; 0.53) |
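
The MAE and bias columns in the table above can be illustrated with a short Python sketch. It assumes that bias is the mean signed difference DL minus GT; the sign convention is not stated here, so treat it as an assumption. The angle values are hypothetical. NMAE is left out because the normalization used is not specified in this text.

```python
from statistics import mean
from typing import Sequence


def _check_pairs(dl: Sequence[float], gt: Sequence[float]) -> None:
    """Make sure both series are non-empty and paired one to one."""
    if len(dl) != len(gt) or len(dl) == 0:
        raise ValueError("dl and gt must be non-empty and of equal length")


def mean_absolute_error(dl: Sequence[float], gt: Sequence[float]) -> float:
    """MAE: mean of |DL - GT| over all paired measurements (degrees)."""
    _check_pairs(dl, gt)
    return mean(abs(d - g) for d, g in zip(dl, gt))


def bias(dl: Sequence[float], gt: Sequence[float]) -> float:
    """Bias: mean signed difference, assumed here to be DL - GT (degrees)."""
    _check_pairs(dl, gt)
    return mean(d - g for d, g in zip(dl, gt))


# Hypothetical M1-P1 angles in degrees for four radiographs.
dl_angles = [14.0, 22.5, 9.0, 30.0]
gt_angles = [15.0, 21.0, 9.5, 31.5]
print(f"MAE = {mean_absolute_error(dl_angles, gt_angles):.2f} deg")
print(f"bias = {bias(dl_angles, gt_angles):.2f} deg")
```

A bias near zero with a non-zero MAE, as in the M1-M2 row, indicates errors that scatter on both sides of the ground truth rather than a systematic offset.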

| Parameter group | Parameter | ICC(2,3) All Rads (95% CI) | ICC(2,2) DL vs. GT (95% CI) | ICC(2,2) Rad vs. GT (95% CI) |
|---|---|---|---|---|
| Frontal | M1-P1 | 0.98 (0.96; 0.99) | 0.91 (0.87; 0.93) | 0.97 (0.93; 0.99) |
| Frontal | M1-M2 | 0.92 (0.82; 0.97) | 0.96 (0.94; 0.97) | 0.89 (0.71; 0.96) |
| Frontal | M1-M5 | 0.95 (0.89; 0.98) | 0.94 (0.80; 0.97) | 0.93 (0.82; 0.98) |
| Frontal | P1-P2 | 0.91 (0.81; 0.96) | 0.51 (0.33; 0.63) | 0.87 (0.66; 0.95) |
| Lateral | Djian–Annonier | 0.99 (0.95; 0.99) | 0.99 (0.97; 0.99) | 0.98 (0.94; 0.99) |
| Lateral | Calcaneal slope | 0.97 (0.94; 0.99) | 0.99 (0.98; 0.99) | 0.96 (0.88; 0.98) |
| Lateral | 1st MT slope | 0.89 (0.52; 0.96) | 0.93 (0.06; 0.98) | 0.93 (0.81; 0.97) |
| Lateral | Meary–Tomeno | 0.82 (0.61; 0.93) | 0.94 (0.92; 0.96) | 0.89 (0.66; 0.96) |
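
The column labels ICC(2,2) and ICC(2,3) in the table above suggest the two-way random-effects, absolute-agreement, average-measures coefficient in the Shrout and Fleiss notation. Under that assumption, a minimal pure-Python sketch of ICC(2,k) looks as follows. The function name `icc2k` and the sample angles are hypothetical, and the confidence intervals reported in the table are not reproduced here.

```python
from typing import Sequence


def icc2k(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,k): two-way random effects, absolute agreement, mean of k raters.

    `ratings` holds one row per subject and one column per rater.
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2:
        raise ValueError("need at least 2 subjects and 2 raters")
    if any(len(row) != k for row in ratings):
        raise ValueError("every subject needs exactly one rating per rater")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares of the two-way ANOVA without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = sum(
        (ratings[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n)
        for j in range(k)
    )

    ms_rows = ss_rows / (n - 1)               # between-subjects mean square
    ms_cols = ss_cols / (k - 1)               # between-raters mean square
    ms_err = ss_err / ((n - 1) * (k - 1))     # residual mean square

    denominator = ms_rows + (ms_cols - ms_err) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when the ratings show no variance")
    return (ms_rows - ms_err) / denominator


# Hypothetical angles in degrees: one row per foot, columns = (DL, GT).
angles = [[14.0, 15.0], [22.5, 21.0], [9.0, 9.5], [30.0, 31.5]]
print(f"ICC(2,2) = {icc2k(angles):.3f}")
```

With two columns (DL and GT) the function yields an ICC(2,2); with three reader columns it yields an ICC(2,3). Because the between-raters mean square enters the denominator, a systematic offset between raters lowers the coefficient even when the ratings are perfectly correlated.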

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tanzilli, J.; Parpaleix, A.; de Oliveira, F.; Chaouch, M.A.; Tardieu, M.; Huard, M.; Guibal, A. Automatic Weight-Bearing Foot Series Measurements Using Deep Learning. AI 2025, 6, 144. https://doi.org/10.3390/ai6070144
