Abstract

Mind wandering is a common issue among schoolchildren and academic students, often undermining the quality of learning and teaching effectiveness. Current detection methods mainly rely on eye trackers and electrodermal activity (EDA) sensors, focusing on external indicators such as facial movements but neglecting voice detection. These methods are often cumbersome, uncomfortable for participants, and invasive, requiring specialized, expensive equipment that disrupts the natural learning environment. To overcome these challenges, a new algorithm has been developed to detect mind wandering during reading aloud. Based on external indicators like the blink rate, pitch frequency, and reading rate, the algorithm integrates these three criteria to ensure the accurate detection of mind wandering using only a standard computer camera and microphone, making it easy to implement and widely accessible. An experiment with ten participants validated this approach. Participants read aloud a text of 1304 words while the algorithm, incorporating the Viola–Jones model for face and eye detection and pitch-frequency analysis, monitored for signs of mind wandering. A voice activity detection (VAD) technique was also used to recognize human speech. The algorithm achieved 76% accuracy in predicting mind wandering during specific text segments, demonstrating the feasibility of using noninvasive physiological indicators. This method offers a practical, non-intrusive solution for detecting mind wandering through video and audio data, making it suitable for educational settings. Its ability to integrate seamlessly into classrooms holds promise for enhancing student concentration, improving the teacher–student dynamic, and boosting overall teaching effectiveness. By leveraging standard, accessible technology, this approach could pave the way for more personalized, technology-enhanced education systems.

1. Introduction

Mind wandering is a phenomenon in which an individual engaged in a specific task begins to shift their focus to unrelated thoughts, thereby becoming distracted from the task at hand. This phenomenon significantly impacts productivity, learning, and task performance [1,2].

Mind wandering is a prevalent and universal phenomenon experienced by many people daily [3]. It occurs in school, during academic studies, and even throughout a doctorate. It is common for individuals to read a text, only for their minds to “drift away” during reading, which affects their comprehension and learning abilities. Although mind wandering during reading is widespread, it is only in recent years that researchers have begun to explore this phenomenon. It has since become a critical focus in cognitive science due to its significant impact on productivity, learning, and overall task performance [4]. Moreover, recent studies have assessed the level of understanding in classroom environments by detecting students’ emotional states during lectures, utilizing the Viola–Jones algorithm [5], which is widely used for object recognition, particularly for eye detection. There are also other emerging object detection methods, such as stereo imaging, which uses dual cameras to capture depth information [6], and the deep differentiation segmentation neural network, which employs deep learning for semantic segmentation to accurately identify objects in complex environments [7].

The detection of mind wandering holds immense potential across a wide range of applications [8]. It can enhance the quality of pedagogy in large classrooms, optimize individual learning potential, and improve time management for learners who find it challenging to recognize when their attention drifts away from educational content [9,10]. This technology could offer valuable insights for both educators and students, enabling a more targeted and effective learning experience.

Previous studies have explored various mind-wandering detection techniques, such as identification through facial features [11,12,13], eye-tracking, and electrodermal activity (EDA) sensors [14,15]. Alternative techniques include gaze-based mind-wandering detection [16], eye movement analysis using hidden Markov models to identify patterns associated with attentional shifts [17], and EEG signal classification [18]. Other research has highlighted the impact of different listening tasks on mind wandering [19]. However, existing approaches require complex, inaccessible, and sometimes expensive equipment. The testing procedures are also often inconvenient for the subject, which can affect identification accuracy. Furthermore, these approaches mainly rely on a single physical factor and base identification solely on it.

Despite these efforts, there remains a need for a precise detection technique that leverages both vocal characteristics and external biological markers indicative of mind wandering. The method should also be noninvasive, affordable, and accessible, allowing identification to be performed easily. Additionally, it is crucial to develop an algorithm that identifies mind wandering based on multiple criteria rather than a single criterion, thereby optimizing detection accuracy. This approach would support the hypothesis that mind wandering is a complex phenomenon involving a combination of factors and indicative signs that manifest concurrently with wandering thoughts.

To address these limitations, we developed an algorithm that detects mind wandering based on three criteria: blink rate, pitch frequency, and reading rate. The algorithm offers a convenient method for identifying mind wandering while reading aloud, as it relies only on basic tools, a camera and a microphone, to collect the data. Another advantage of using these tools is that participants can move more freely during the experiment, allowing for more natural behavior than with other methods.

Our main goal was to detect the number of mind-wandering segments (10 s each) that a person experiences while reading and to identify the specific sentences in which mind wandering occurs. This detection relies on identifying pitch-frequency abnormalities, analyzing blink rates, and assessing the reading rate relative to text difficulty.

This approach introduces a new concept: identifying mind wandering based on both external and vocal factors to provide a more accurate assessment. To test it, we conducted an experiment with ten subjects who read a text aloud. The recordings were then analyzed using the algorithm, which identified 76% of the sections in which the subjects reported that their minds had wandered.

Our research addresses a critical issue that impacts learning in schools, external courses, and higher education. Solving the problem of mind wandering has the potential to significantly enhance and streamline the learning process for a wide range of individuals, from students preparing for graduation exams to surgeons mastering advanced procedures before essential surgeries.

2. Experiment

The experiment involved ten student participants. Each participant was asked to read a text consisting of 1304 words, which was specifically designed to eliminate any prior familiarity that could influence the results. Immediately before the reading task, each participant was given a brief document explaining the concept of mind wandering, so that the objective of the study was understood and the concept remained fresh during the task. Participants were instructed to raise their hand whenever they felt their mind had wandered, while continuing to read the text. The hand-raising served as a probe, enabling a comparison between the participants’ self-reports and the algorithm’s mind-wandering detections. The experiment was conducted in a controlled, quiet environment to minimize distractions and to reduce external factors that might interfere with the participant’s performance or the accuracy of the results. To monitor and record the participants, Zoom Workplace (version 6.3.11) software was used for both video and audio capture, as shown in Figure 1a. To ensure optimal face and eye recognition using the Viola–Jones model, participants were instructed to blur their backgrounds while recording, as shown in Figure 1b. This facilitated smoother, higher-quality recognition. The reading process was continuous, without the repetition of words (to avoid counting the same word twice in cases of misspeaking), and participants were asked to avoid sharp movements to maintain the reliability of the analysis.

Figure 1.

Experimental recording setup. (a) External view showing the computer and camera. (b) Zoom video image.

The reading times of the participants varied, with the fastest completing the text in 6 min (36 segments), while the slowest took 9 min and 20 s (56 segments). Videos were analyzed at 60 frames per second (3600 frames per minute), so the number of frames processed varied with the participants’ reading times: 21,600 frames for the fastest reader and 33,600 frames for the slowest. The algorithm was validated using dozens of short video and audio clips, each one to two minutes long. These clips were selected and analyzed to test the algorithm’s reliability and accuracy in detecting the desired patterns. Examining a diverse set of recordings allowed us to check that the algorithm handled various scenarios and performed consistently across different inputs, and to fine-tune it before the experiment’s recordings were analyzed.

Each participant’s reading was analyzed using an algorithm based on three criteria to identify sections of the text where mind wandering was detected. The algorithm’s results were then compared to the participants’ hand raises to verify the accuracy of the number of instances and their corresponding locations.

3. Method

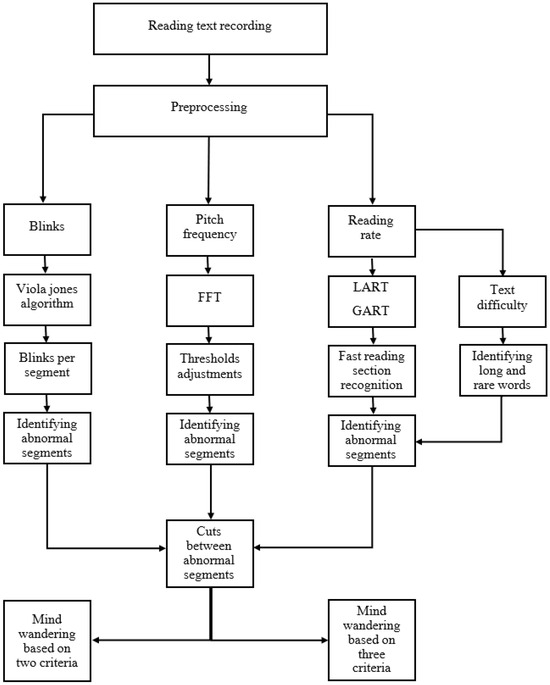

In our study, three primary criteria were used to evaluate and detect mind wandering: the blink rate, pitch-frequency abnormalities, and the average reading time ratio based on the text difficulty. Each criterion was selected for its relevance and impact on the research outcomes, as outlined below. Figure 2 illustrates our new algorithm, which detects mind wandering during reading. The process begins by recording a participant reading a target text, followed by a preprocessing step in which the audio and video files were extracted and matched to the appropriate criteria. Both recordings were trimmed to align precisely with the reading activity, starting when the participant began reading and ending immediately upon text completion, so that only relevant portions were analyzed and silent intervals were eliminated. The processed files were then evaluated based on three key parameters: the blink frequency, pitch frequency, and reading rate. An outlier analysis was performed for each parameter in 10 s segments, with outliers defined differently for each criterion. Finally, we examined the segments in which anomalies were detected in two parameters as well as those in which anomalies occurred across all three.

Figure 2.

Block diagram of mind-wandering detection based on three criteria.

The algorithm was implemented in MATLAB (version R2024a) using the following toolboxes. The Computer Vision Toolbox enabled advanced image and video processing tasks, such as object detection and motion tracking, which were essential for analyzing visual data. The Image Processing Toolbox supported tasks like image enhancement, filtering, and segmentation, all crucial for preparing and refining visual content. The Audio Toolbox provided specialized tools for the processing and analysis of sound data. Finally, the Signal Processing Toolbox facilitated the manipulation and transformation of signals, enabling the detailed analysis of audio and visual signals through filtering, spectral analysis, and other signal-related techniques.

Let us delineate each criterion individually.

3.1. Blink Rate

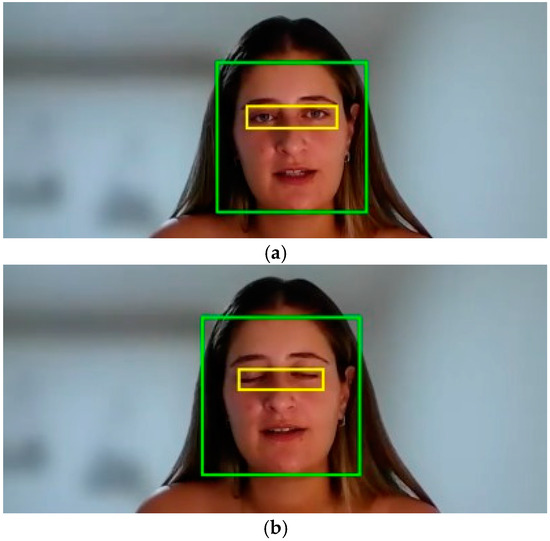

The first criterion, blink rate, focused on detecting above-average blink rates within a text segment. Studies have shown that a high blink rate can indicate mind wandering, suggesting that the person may not be fully engaged with the text. An increased number of blinks may also indicate a lack of comprehension or difficulty in absorbing the content, signaling that the individual needs to focus more on the passage being read [20,21]. The criterion for identification was established as follows: each text segment was analyzed in 10 s intervals, with blinks recorded in each interval. As shown in Figure 3, blinks were identified using the Viola–Jones algorithm, which is particularly effective for detecting small objects, in contrast to more advanced models such as YOLO. The Viola–Jones algorithm is widely used for real-time object recognition, particularly face and eye detection. This model relies on three fundamental steps for fast and efficient object identification. First, it computes an integral image, in which each entry holds the sum of all pixels above and to the left of that position. The sum over any rectangular area can then be obtained from only four lookups, which greatly reduces the computational load required to compute rectangular features.

Figure 3.

Blink detection during subject’s reading in (a) non-blink and (b) blink situations.

Additionally, Haar-like features detect specific patterns, such as edges, lines, and contrast variations within the image. For example, an eye-detection feature identifies darker regions (eyes) within lighter areas (skin). Finally, a cascade of classifiers, structured in sequential layers, filters out regions in the image that do not contain the object of interest. Each layer passes only plausible candidate regions to the next, enabling faster identification by concentrating computational resources on relevant areas while disregarding non-relevant regions.
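The integral-image and Haar-like feature steps can be illustrated with a minimal sketch. This is not the MATLAB implementation used in the study; the function names and the tiny 4 × 4 test image are assumptions chosen for illustration, and only a single two-rectangle feature is shown rather than a trained cascade.

```python
def integral_image(img):
    """Return an (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above and to the left, inclusive of (y, x).
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, obtained from four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_haar(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: upper half minus lower half (height must be even)."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return upper - lower


# Hypothetical image: a bright band (skin) above a dark band (eye region).
img = [
    [9, 9, 9, 9],
    [9, 9, 9, 9],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
ii = integral_image(img)
print(rect_sum(ii, 0, 0, 4, 4), two_rect_haar(ii, 0, 0, 4, 4))
```

A large absolute feature value signals a strong light-to-dark transition inside the window, which is the kind of contrast pattern the cascade stages test for.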

Following eye detection, the blinking detection process is initiated. This involves segmenting the video data into individual frames, each converted to grayscale to enhance facial features. To further highlight the eye region, each eye-area frame is transformed into a binary image, where each pixel is classified as white or black. This binary conversion helps differentiate between open and closed eyes and darker and lighter areas within the eye region.

The binary classification process was achieved through adaptive thresholding, where each pixel’s classification was based on its surrounding intensity, accounting for variations in shadows and lighting within each frame. After obtaining these data in each frame, the algorithm calculated the eye aspect ratio (EAR) to measure changes in the vertical distance between the eyelids relative to the horizontal width of the eye. When the eye is open, the EAR remains relatively high; during a blink, when the eyes are closed, this value drops significantly.

To minimize false detections, the EAR value from the preceding frame was stored, and a blink was detected only when the current EAR value showed a substantial decrease compared to the previous frame and dropped below a specified threshold. A debounce time was implemented to prevent duplicate detections, ensuring that consecutive frames of the same blink were not counted as separate events. After determining the number of blinks in each segment, the average number of blinks per segment across the entire text was calculated. This value was defined as the threshold, and segments exceeding it were identified as potential indicators of mind wandering.
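The blink-counting logic described above can be sketched as follows, assuming a per-frame EAR series is already available. The EAR threshold, minimum drop, and debounce time are hypothetical values, since the study does not state them, and the per-segment threshold is taken to be the mean blink count per segment.

```python
def detect_blinks(ear, fps, ear_threshold=0.2, min_drop=0.05, debounce_s=0.25):
    """Return the frame indices at which a blink onset is detected.

    ear_threshold, min_drop and debounce_s are illustrative assumptions.
    """
    debounce_frames = int(debounce_s * fps)
    blinks = []
    last_blink = None
    for i in range(1, len(ear)):
        dropped = (ear[i - 1] - ear[i]) >= min_drop  # sharp decrease vs. previous frame
        below = ear[i] < ear_threshold               # eye considered closed
        debounced = last_blink is None or (i - last_blink) > debounce_frames
        if dropped and below and debounced:
            blinks.append(i)
            last_blink = i
    return blinks


def blinks_per_segment(blink_frames, n_frames, fps, segment_s=10):
    """Count blinks in consecutive segments of segment_s seconds."""
    frames_per_segment = fps * segment_s
    n_segments = -(-n_frames // frames_per_segment)  # ceiling division
    counts = [0] * n_segments
    for f in blink_frames:
        counts[f // frames_per_segment] += 1
    return counts


def flag_above_mean(counts):
    """Flag segments whose blink count exceeds the mean count per segment."""
    mean = sum(counts) / len(counts)
    return [c > mean for c in counts]
```

With 60 frames per second and 10 s segments, each segment covers 600 frames, so a 6 min recording yields 36 counts.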

This criterion was chosen based on the premise that the quick and real-time detection of abnormalities, combined with the established link between a high blinking frequency and mind wandering, provides a strong foundation for the overall algorithm that integrates these criteria.

3.2. Pitch Frequency

The second criterion we intended to assess concerning mind wandering was the reader’s pitch frequency, focusing on investigating the fundamental frequency. The frequency of the human voice is a complex characteristic that varies across individuals, offering valuable information about a speaker’s physiological features, emotional state, and linguistic context. Moreover, voice pitch analysis provides crucial insights across various fields, from medical diagnostics to cognitive science, making it a fundamental and indispensable element in many contemporary studies. When someone or something creates sound waves, those sound waves vibrate at different frequencies. The frequency of these vibrations determines the pitch of a sound. High-frequency sound waves produce high-pitched noises, while low-frequency sound waves produce low-pitched noises.

In our study, the audio signal was divided into short intervals of ten seconds each. This duration strikes a balance between temporal resolution and computational efficiency, being long enough to capture significant pitch variations and cognitive engagement patterns while still allowing for a manageable analysis of mind wandering [22]. Shorter intervals may not adequately represent the underlying mental processes during sustained-attention tasks.

After filtering out noise, a Hamming window was applied to each frame to minimize spectral leakage during the fast Fourier transform (FFT) process [23,24]. The frequency range was limited to 50–300 Hz to correspond with the typical pitch frequencies observed in adult speech during reading tasks. This ensured a focus on relevant pitch variations that may correlate with cognitive processes.
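A minimal sketch of the windowing and band-limited spectral step is given below. It evaluates the discrete Fourier transform directly for the bins between 50 and 300 Hz instead of calling an FFT routine, which gives the same bin values, and it takes the strongest bin as the pitch estimate. That peak-picking rule, the frame length, and the sampling rate in the usage lines are assumptions for illustration, not the study's settings.

```python
import cmath
import math


def hamming(n_samples):
    """Symmetric Hamming window of length n_samples."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n_samples - 1))
            for i in range(n_samples)]


def dominant_frequency(frame, fs, f_min=50.0, f_max=300.0):
    """Frequency (Hz) of the strongest DFT bin inside [f_min, f_max]."""
    n = len(frame)
    windowed = [s * w for s, w in zip(frame, hamming(n))]
    k_min = math.ceil(f_min * n / fs)   # first bin at or above f_min
    k_max = math.floor(f_max * n / fs)  # last bin at or below f_max
    best_k, best_mag = k_min, -1.0
    for k in range(k_min, k_max + 1):
        # Direct evaluation of DFT bin k; an FFT computes all bins at once.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(windowed))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * fs / n


# Hypothetical test tone: 120 Hz sampled at 8 kHz, 0.1 s frame (10 Hz bin spacing).
fs = 8000
frame = [math.sin(2 * math.pi * 120 * i / fs) for i in range(800)]
print(dominant_frequency(frame, fs))
```

The frequency resolution equals the sampling rate divided by the frame length, so longer frames give finer pitch estimates at the cost of temporal resolution.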

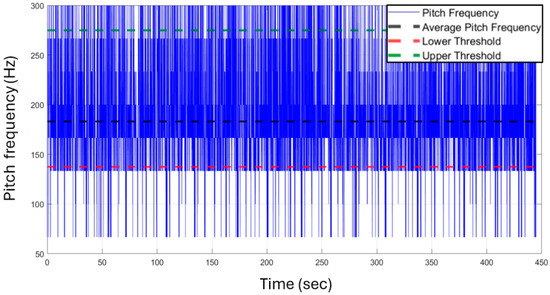

The signal was transformed into the frequency domain using the Fourier transform to calculate the average frequency for each interval. From the data collected on this frequency (such as amplitude, the autocorrelation function, and the identification of peaks and wave periodicity), the mean frequency was quantified by selecting the high-frequency bins in each segment and adjusting them to the pitch frequency within the interval. We then determined the average frequency for the entire audio signal and established a threshold range: the upper bound was defined as 1.5 times the mean frequency and the lower bound as 0.75 times the mean frequency, which facilitates the detection of significant pitch deviations. Previous studies have demonstrated that this method reduces pitch tracking errors and performs reliably across various speakers [25].

Following the calculation of the threshold range, the average number of deviations within each interval was determined, as shown in Figure 4. Previous research has associated exceeding the upper limit of the threshold range with mind wandering and, in some cases, with stress or panic. Furthermore, findings indicate that readers tend to experience more mind wandering during reading aloud than during silent reading. The intervals during which these pitch deviations occurred were documented and assessed for their potential as indicators of mind wandering. Presenting the average pitch frequency alongside the number of deviations observed in each segment highlights fluctuations in pitch throughout the reading process, offering insights into the reader’s cognitive state.

Figure 4.

Pitch frequency and threshold detection during the subject’s speech.
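The threshold range and the per-segment deviation count can be sketched as below, assuming a list of pitch estimates (in Hz) taken at a fixed rate. The function names and the number of estimates per segment are hypothetical. How many deviations make a segment abnormal is not stated here, so the sketch stops at the counts.

```python
def pitch_bounds(pitch_track, upper_factor=1.5, lower_factor=0.75):
    """Threshold range derived from the mean pitch of the whole recording."""
    mean_pitch = sum(pitch_track) / len(pitch_track)
    return lower_factor * mean_pitch, upper_factor * mean_pitch


def deviations_per_segment(pitch_track, estimates_per_segment):
    """Count pitch estimates outside the threshold range in each segment."""
    lower, upper = pitch_bounds(pitch_track)
    counts = []
    for start in range(0, len(pitch_track), estimates_per_segment):
        segment = pitch_track[start:start + estimates_per_segment]
        counts.append(sum(1 for f in segment if f < lower or f > upper))
    return counts


# Hypothetical pitch track with two segments of four estimates each.
track = [100, 100, 100, 100, 100, 200, 100, 60]
print(pitch_bounds(track), deviations_per_segment(track, 4))
```

For a speaker with a mean pitch of 120 Hz, for example, the range would run from 90 Hz to 180 Hz.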

3.3. Reading Rate

The last criterion is the reading rate, which depends on two main factors: the relation between the LART and the GART, and the difficulty of the text. First, it is necessary to explain two important concepts:

The global response time (GRT) is the average time it takes to move from one word to another, calculated over the entire recording duration up to a certain point.

The local response time (LRT) is the average time it takes to move from one word to another, calculated over a small, recent window of words.

Previous studies suggest that when the LRT < 0.55 GRT, this may indicate that participants’ minds are wandering [1]. The conclusion was that if there were two reading sections with the same time length and LRT1 < LRT2, the subject read more words in the first section within the same time interval. Based on this conclusion, we defined two new concepts:

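The global average reading time (GART) is defined as the total number of words in the text divided by the total time taken to read it, measured in seconds, as shown in the following equation:

\[ \mathrm{GART} = \frac{W_{\text{total}}}{T_{\text{total}}} \tag{1} \]

where \(W_{\text{total}}\) is the total number of words in the text and \(T_{\text{total}}\) is the total reading time in seconds.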

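The local average reading time (LART) is defined as the number of words read over a 10 s interval, divided by the duration (10 s), as expressed in the following equation:

\[ \mathrm{LART} = \frac{W_{10\,\text{s}}}{10} \tag{2} \]

where \(W_{10\,\text{s}}\) is the number of words read in the interval.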

A calculation was subsequently performed throughout the text based on these intervals. The Butterworth filter (3) was applied to preserve audio quality [26], defined as follows:

\[ H(s)\,H(-s) = \frac{1}{1 + \left( -\dfrac{s^{2}}{\omega^{2}} \right)^{n}} \tag{3} \]

where ω is the cutoff frequency, s is the complex frequency variable, and n is the filter order, which determines the filter’s roll-off. Setting the cutoff frequency at 400 Hz isolates the essential components of the human voice, especially the lower-pitched elements that carry much of the speech’s intelligibility, while filtering out higher-frequency noise and non-speech sounds that may interfere with the analysis. This form of the filter is a low-pass filter that focuses on the low frequencies of the voice. Next, we applied the voice activity detection (VAD) technique, which includes the power signal calculation (4), to identify and distinguish between speech and non-speech segments [27,28], using the signal power analysis equation:

\[ P = \frac{1}{N} \sum_{n=0}^{N-1} \left| x[n] \right|^{2} \tag{4} \]

Here, N represents the total number of samples in the audio segment analyzed for its power, and x[n] is the discrete-time signal. Averaging the squared samples over the observed period provides a reliable measure of the signal’s average power. After filtering the audio and calculating the signal’s power, the subsequent calculation was performed based on the LART and GART:

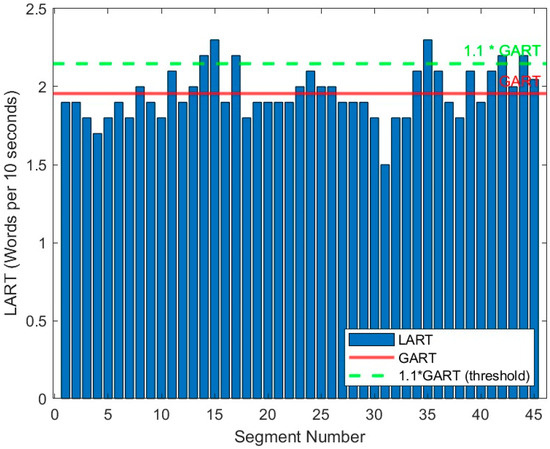

When the LART > GART, the reading rate in an interval is higher than the global average reading rate. Because the interval is only 10 s long, however, the LART may exceed the GART slightly by chance, which does not clearly indicate that the reader was rushing through that specific section. To increase the accuracy of our prediction, a confidence threshold of 10% was set heuristically. This threshold was found to be reliable for minimizing measurement errors, and it contributes to a clear distinction between interval reading rates and the global average. As a result, a suspicion of mind wandering is flagged when the LART > 1.1 GART. Figure 5 shows the detection process of mind wandering using the reading rate condition.

Figure 5.

LART and GART as functions of segment number during reading aloud for mind-wandering detection.
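The reading rate condition can be sketched in a few lines, assuming the number of words read in each 10 s segment is already known (for example, from the voice activity detection step). The function names are hypothetical, and the sketch treats every segment as a full 10 s, which ignores a shorter final segment.

```python
def gart(total_words, total_seconds):
    """Global average reading time: words per second over the whole text."""
    return total_words / total_seconds


def lart(words_in_segment, segment_seconds=10.0):
    """Local average reading time: words per second within one segment."""
    return words_in_segment / segment_seconds


def reading_rate_flags(words_per_segment, segment_seconds=10.0, margin=0.10):
    """Flag segments in which LART > (1 + margin) * GART."""
    total_seconds = segment_seconds * len(words_per_segment)
    g = gart(sum(words_per_segment), total_seconds)
    return [lart(w, segment_seconds) > (1.0 + margin) * g
            for w in words_per_segment]


# Hypothetical word counts for four consecutive 10 s segments.
print(reading_rate_flags([20, 30, 20, 10]))
```

For the fastest reader in the experiment (1304 words in 6 min), the GART is about 3.62 words per second, so a 10 s segment satisfies the condition only when it contains 40 or more words.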

The second factor influencing our reading ratio criterion is text difficulty, one of the primary factors affecting the reader’s performance [29].

Our study divided the text into 10 s segments (individualized for each participant), and two primary factors were evaluated in each segment. The first factor is the number of long words (those with more than four letters) in the segment. Each word is assigned a score: a long word receives a value of 1, while a short word is assigned a value of 0.

The second aspect examined was the frequency of words within the language [30]. A straightforward calculation was employed to assess word frequency: a word database containing millions of Hebrew words represented the frequency of words in the language.

We then summed the occurrences of all unique words in the text (excluding repetitions) and divided this total by the number of unique words. This yielded a threshold value used to define what constitutes a “frequent” word. Any word that appeared more frequently than this threshold (based on its occurrences in the language) was classified as a common word and assigned a value of 0. Conversely, a word that appeared less frequently than this threshold was considered uncommon and assigned a value of 1. Following this, a difficulty score was assigned to each text segment based on its composition, with the calculation based on the following equation:

A word could be both long and uncommon, thus contributing a maximum value of 2 to a segment’s score. This provided a score for each segment, and by calculating the average score across all segments, we established a threshold that defines the average difficulty of a segment within the text. Segments with scores above this threshold were categorized as difficult-to-read sections.

Finally, a segment was flagged as abnormal under the reading rate criterion only when both conditions were met: the segment was difficult to read and it also satisfied the LART and GART condition.
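The difficulty scoring can be sketched as follows. The sketch assumes that a segment's score is the sum of its word scores, which is one reading of the description above, and it uses a tiny made-up English frequency table in place of the Hebrew word database. All names and counts are hypothetical.

```python
def uncommon_threshold(text_words, language_counts):
    """Mean language frequency over the unique words of the text."""
    unique = set(text_words)
    return sum(language_counts.get(w, 0) for w in unique) / len(unique)


def word_score(word, language_counts, threshold, long_len=4):
    """1 point if the word has more than long_len letters, 1 more if it is uncommon."""
    is_long = 1 if len(word) > long_len else 0
    is_uncommon = 1 if language_counts.get(word, 0) < threshold else 0
    return is_long + is_uncommon


def segment_scores(segments, language_counts):
    """Difficulty score per segment (assumed here to be the sum of word scores)."""
    all_words = [w for seg in segments for w in seg]
    threshold = uncommon_threshold(all_words, language_counts)
    return [sum(word_score(w, language_counts, threshold) for w in seg)
            for seg in segments]


def difficult_segments(scores):
    """Flag segments whose score exceeds the mean segment score."""
    mean = sum(scores) / len(scores)
    return [s > mean for s in scores]


# Made-up frequency table and two short segments, for illustration only.
language_counts = {"the": 1000, "cat": 200, "sat": 100, "quantum": 5, "entanglement": 3}
segments = [["the", "cat", "sat"], ["quantum", "entanglement", "the"]]
scores = segment_scores(segments, language_counts)
print(scores, difficult_segments(scores))
```

Under the reading rate criterion, a segment would then be flagged only if it is marked difficult here and also satisfies LART > 1.1 GART.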

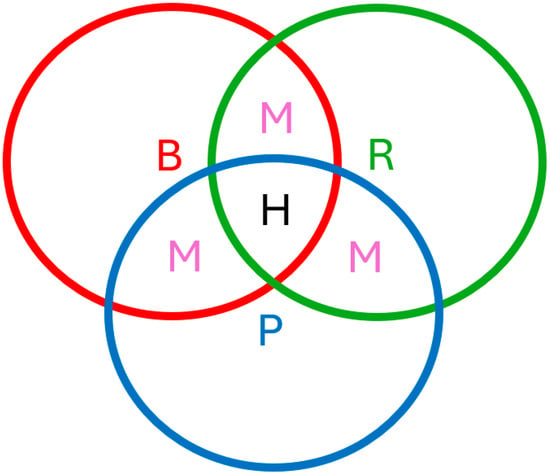

After applying the established criteria to each segment, the segmentation was conducted based on two and three criteria. Each segmentation was represented as a vector: a medium-probability vector, where a value of 1 indicates the presence of abnormalities within the same segment based on two criteria, and a high-probability vector, where a value of 1 indicates the presence of abnormalities based on all three criteria. Thus, the detection of mind wandering can be defined across three probability vector levels as follows:

\[
\begin{aligned}
\text{Low} &= B \cup R \cup P,\\
\text{Medium} &= (B \cap R) \cup (B \cap P) \cup (P \cap R),\\
\text{High} &= B \cap R \cap P.
\end{aligned}
\]

In this equation, B represents blink rate, R represents reading rate, and P represents pitch frequency, providing varying insight into cognitive states. The low-probability case, characterized by the presence of only a single indicator, is intentionally disregarded. This is because the presence of a single signal, like blink or minor pitch-frequency variations, is not sufficient to reliably indicate mind wandering, as these may result from external factors such as environmental conditions, eye strain, or conversation dynamics. By focusing on medium- and high-probability scenarios, the model ensures the more accurate detection of mind wandering, supported by multiple physiological signals. This approach thereby reduces errors in the detection system and contributes to a more robust, reliable model.

Figure 6 shows the relationship between the criteria (B—blinks, R—reading rate, P—pitch frequency) and the probability vectors produced by the algorithm. A medium-probability vector for mind wandering was generated for the segments where abnormalities were detected in two criteria. The high-probability vector for mind wandering was created for cases where abnormalities were detected in all three criteria. A low-probability vector was added for cases where at least one of the criteria was met.

Figure 6.

Venn diagram of our abnormalities analysis: B—blinks, R—reading rate, P—pitch frequency, M—medium-probability vector, and H—high-probability vector.
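The three probability vectors can be derived from per-segment flags for B, R, and P as in the sketch below. The function name and the sample flags are hypothetical. Because the medium-probability vector is the union of the pairwise intersections, a segment flagged by all three criteria also appears in it.

```python
def probability_vectors(b, r, p):
    """Return (low, medium, high) 0/1 vectors from per-segment criterion flags."""
    low, medium, high = [], [], []
    for bi, ri, pi in zip(b, r, p):
        n_flags = int(bi) + int(ri) + int(pi)
        low.append(1 if n_flags >= 1 else 0)     # at least one criterion
        medium.append(1 if n_flags >= 2 else 0)  # at least two criteria
        high.append(1 if n_flags == 3 else 0)    # all three criteria
    return low, medium, high


# Hypothetical flags for four segments.
b = [1, 1, 0, 1]
r = [0, 1, 0, 1]
p = [0, 0, 0, 1]
low, medium, high = probability_vectors(b, r, p)
print(low, medium, high)
```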

4. Results

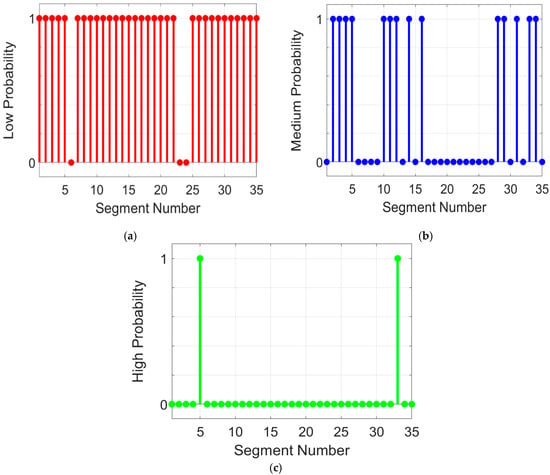

Figure 7a–c show the mind-wandering detection results for subject five, visualizing each vector and the resulting classification of every segment over the complete text: the low-probability vector (Figure 7a), the medium-probability vector (Figure 7b), and the high-probability vector (Figure 7c). These figures show how each text segment is classified according to the number of criteria that identified abnormalities, which makes it easier to judge the likelihood and significance of mind wandering in each segment. Figure 7b shows the timing of mind-wandering occurrences during the reading task, spanning segments 1–5, 10–12, 14, 16, 28–29, 31, and 33–34, with at least two indicators present. Segments 5 and 33 exhibit three indicators, as Figure 7c illustrates. From these results, our tool can extract the relevant sentences from the text when medium and high probabilities of mind wandering are detected, which can then be used to enhance learning by targeting key moments of disengagement.

Figure 7.

Mind-wandering detection results over the segment number for subject 5: (a) low-probability vector. (b) medium-probability vector. (c) high-probability vector.

Table 1 presents the results for our participants, including the total number of hand raises throughout the text and the algorithm’s identifications of medium and high probabilities of mind wandering. It also breaks down the medium-probability cases by the pair of indicators (B and R, B and P, P and R) that detected mind wandering. The algorithm predicts more mind-wandering episodes than the subjects reported by raising their hands. This is plausible because a subject experiencing mind wandering may be unable to report it accurately, even in retrospect. Consequently, it is reasonable to expect the algorithm’s identifications to be more frequent.

Table 1.

Self-reported and algorithm-detected mind-wandering results for ten subjects.

5. Discussion

Our primary analysis focused on the medium-probability and high-probability vectors. After analyzing all subjects, we found that detecting mind wandering with a high probability within a specific segment, based on the convergence of all three criteria, posed significant challenges, as evidenced by the low number of identifications presented in Table 1. This difficulty primarily stems from the factors influencing the reading rate criterion, namely text complexity and the LART. As a result, it was relatively rare to observe simultaneous deviations across all three criteria within a single ten-second segment. However, a thorough analysis could be performed by comparing each subject’s self-reports with the medium-probability vector.

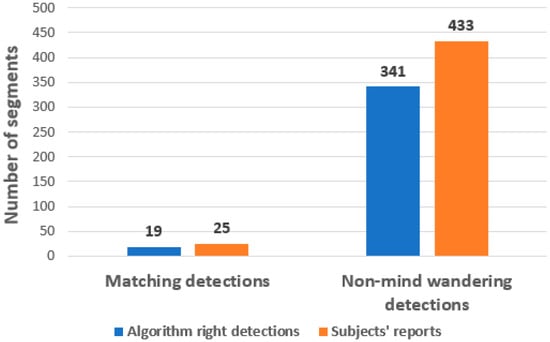

First, it is essential to define a key term, matching detection, which is central to our analysis. This term represents the match between the detection of mind wandering predicted by the algorithm and the self-reported mind wandering (hand raising). A match is valid if the algorithm detected mind wandering within a range of up to two segments (20 s) before the subject raised their hand. This is because participants’ reports of wandering thoughts are always made retrospectively, independent of real-time monitoring. Another important term is non-mind-wandering detections, which refers to instances when the model did not detect mind wandering, and the subjects did not self-report it (did not raise their hand).
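The matching rule can be sketched as below, assuming 0-based segment indices and a window that covers the segment of the hand raise together with the two segments before it. Whether the hand-raise segment itself belongs to the window is an assumption, and the function name and sample data are hypothetical.

```python
def matching_detections(medium, hand_raise_segments, lookback=2):
    """Count hand raises preceded by a detection within `lookback` segments."""
    matches = 0
    for h in hand_raise_segments:
        start = max(0, h - lookback)
        # Window: the hand-raise segment and up to `lookback` segments before it.
        if any(medium[start:h + 1]):
            matches += 1
    return matches


# Hypothetical medium-probability vector and hand-raise segments.
medium_vector = [0, 1, 0, 0, 0, 0, 1, 0]
hand_raises = [3, 5, 7]
print(matching_detections(medium_vector, hand_raises))
```

The recognition rate is then the number of matches divided by the number of hand raises.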

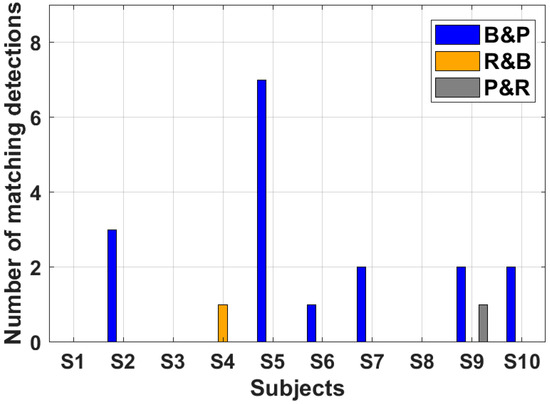

After an in-depth analysis of the detection results compared to the subjects’ hand raises, along with optimization and identification of ‘matching detections’ for each participant, Figure 8 shows that a specific pair of criteria, B and P (highlighted in blue), resulted in the highest number of correct identifications, with 17 accurate detections, representing 68% of the self-reported detections. By combining the results of R and B, and P and R, our detection accuracy improved by an additional 8%.

Figure 8.

Matching detection identification for each subject by two criteria.

As Figure 9 shows, the algorithm identified 19 of the 25 mind-wandering instances reported by the subjects, a recognition rate of 76%. Closer analysis indicates that the reading rate criterion eliminated many of the algorithm’s identifications because it involves two conditions (the reading time of the section and the difficulty of the text). Figure 9 also shows the prediction quality for non-mind-wandering detections: out of 433 segments in which participants did not report mind wandering (did not raise their hand), the algorithm correctly classified 341, an accuracy of 78.75%. This demonstrates the algorithm’s capability to identify when mind wandering is not occurring, which is critical for distinguishing between mind wandering and focused states.
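The percentages reported here follow directly from the counts in the text. The short sketch below reproduces the arithmetic; the helper name `percentage` is illustrative only. If the self-reports are treated as ground truth, the first rate plays the role of sensitivity and the second of specificity, although the two are counted in different units (reported instances versus ten-second segments).

```python
def percentage(correct: int, total: int) -> float:
    """Return correct/total as a percentage rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100.0 * correct / total, 2)


# Counts taken from the text above.
reported_instances = 25   # self-reported mind-wandering instances
matched_bp_only = 17      # matched by the B and P pair alone
matched_all_pairs = 19    # matched by any pair of criteria
non_mw_segments = 433     # segments without a hand raise
non_mw_correct = 341      # of those, segments with no detection

print(percentage(matched_bp_only, reported_instances))    # 68.0
print(percentage(matched_all_pairs, reported_instances))  # 76.0
print(percentage(non_mw_correct, non_mw_segments))        # 78.75
```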

Figure 9.

Algorithm identifications out of total detections.

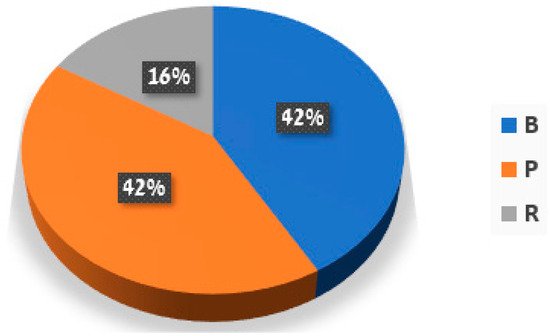

The next step in our analysis examined how the three criteria were divided and how much each contributed to detecting mind wandering in the medium-probability vector. As Figure 10 shows, only a minority (16%) of identifications were based on the reading rate criterion, whereas the other two criteria each accounted for 42%.

Figure 10.

Contribution of each criterion to detecting mind wandering for medium probability.

Table 2 compares our detection method with other state-of-the-art approaches. Our method achieved the highest accuracy among them, with a mind-wandering detection rate of 76%. We attribute this performance to the combination of pairs of criteria, (B and P)∪(R and B)∪(P and R), which enhances both reliability and accuracy. Additionally, our main feature is more straightforward and user-friendly than the more complex methods employed in previous studies. Its precision, simplicity, and ease of use make the method suitable for real-world applications, particularly in classroom environments. It provides a practical and efficient solution for monitoring student engagement and facilitating timely interventions that can enhance learning experiences and educational outcomes.
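The union of criterion pairs, (B and P)∪(R and B)∪(P and R), can be written as a simple per-segment rule. The sketch below is an illustration under stated assumptions: each criterion is represented as a list of Boolean flags, one per ten-second segment, where `True` means the criterion deviated in that segment. The function names and the example flags are hypothetical, and the thresholds that produce the flags are not reproduced here.

```python
from typing import List, Sequence


def medium_probability(blink: Sequence[bool],
                       pitch: Sequence[bool],
                       rate: Sequence[bool]) -> List[bool]:
    """Flag segments where at least two of the three criteria agree."""
    return [
        bool((b and p) or (r and b) or (p and r))
        for b, p, r in zip(blink, pitch, rate)
    ]


def high_probability(blink: Sequence[bool],
                     pitch: Sequence[bool],
                     rate: Sequence[bool]) -> List[bool]:
    """Flag segments where all three criteria converge."""
    return [bool(b and p and r) for b, p, r in zip(blink, pitch, rate)]


# Hypothetical flags for four consecutive segments.
blink_flags = [True, True, False, False]
pitch_flags = [True, False, False, True]
rate_flags = [True, True, False, False]

print(medium_probability(blink_flags, pitch_flags, rate_flags))
# [True, True, False, False]
print(high_probability(blink_flags, pitch_flags, rate_flags))
# [True, False, False, False]
```

Every high-probability segment also satisfies the pair rule, which is consistent with the text: convergence of all three criteria is rarer than agreement between any two.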

Table 2.

Comparison between mind-wandering detection methods.

Despite the strengths of the study, certain limitations must be considered when interpreting the results. First, the sample size was relatively small, consisting of only ten participants. Future studies should include a larger number of subjects to enhance the robustness and reliability of the findings. Second, the participant pool was composed primarily of university students. To improve the generalizability of the results, subsequent research should evaluate the effectiveness of the algorithm across a broader population, including school-aged students, postgraduate learners (e.g., PhD candidates), and adults involved in education-related professions, such as teachers, trainers, or instructional designers, who may also benefit from improved learning quality.

6. Conclusions

Based on the analysis of the results, our research proposes a new method for noninvasively detecting mind wandering during reading aloud. This approach, which uses a computer camera and microphone, implements an algorithm that detects wandering thoughts without the additional external aids that other methods require. These external aids often disrupt the natural reading process and, as a result, influence the accuracy of prediction outcomes.

A key contribution of this research is an algorithm that integrates multiple indicators, both visual and vocal (blink rate, reading rate, and pitch frequency), into a unified model for mind-wandering detection. Unlike previous methods that rely on a single indicator or specialized equipment, our algorithm analyzes three distinct factors, enabling more comprehensive and accurate detection of mind wandering. Furthermore, the ability to process these criteria using standard, widely available equipment (such as a webcam and microphone) distinguishes this method from existing approaches, which often require costly and cumbersome tools. The proposed algorithm proved effective in a practical setting: in a validation test involving ten subjects reading aloud, it detected 76% of the mind-wandering instances reported by participants. For non-mind-wandering segments, the model achieved a detection accuracy of 78.75%.

These experimental results underscore our algorithm’s accuracy and reliability when measured against self-reported data, mainly due to its ability to analyze multiple cognitive indicators simultaneously. Additionally, the practical advantages of our method are evident: the required equipment consists of only a standard computer with a webcam, a microphone, and basic software such as Zoom, making the method noninvasive and easy to implement. This setup offers a flexible and accessible alternative to more complex detection systems that rely on specialized devices, such as eye trackers for blink detection, voice analysis systems for pitch frequency, and gaze-tracking systems for reading rate. These more advanced tools, while accurate, often require significant calibration and specialized hardware and can be uncomfortable or restrictive in real-world settings.

In contrast, our method uses essential, widely available equipment, providing a low-cost, noninvasive solution that does not require complex hardware or extensive calibration. It can detect multiple cognitive indicators in real time without sacrificing accuracy. Furthermore, the algorithm is efficient and operates without requiring advanced computational power or deep learning models, making it a practical and accessible tool across various environments. Our system enhances user comfort and flexibility by avoiding specialized tools, allowing for seamless implementation in educational or professional settings with minimal resource demands.

An essential feature of our model is its ability to extract the relevant sentence where mind wandering is detected. This capability can be instrumental in real-time teaching environments, helping students and instructors focus on specific parts of the material that require further clarification. By identifying and presenting the key sentences where mind wandering occurs, the model minimizes the time spent on redundant content and ensures that the learning process is more targeted and efficient, ultimately leading to better comprehension and engagement.

Author Contributions

D.M. conceived the project, provided overall supervision, and guided all aspects of the research, with a particular focus on mentoring A.R., E.B.B., M.S. and N.A. throughout the process. M.Y. contributed significantly to the educational framework, particularly in developing the methodology for measuring reading rate and text difficulty. He also provided essential guidance on the overall methodological approach, including the design and validation of the experimental procedures. A.R., E.B.B. and M.S. were responsible for software development, conducting the experiments, and decoding the data. N.A. contributed to the educational analysis and played a key role in integrating educational outcomes with the software results. All authors contributed to the writing of the manuscript, and jointly reviewed and wrote the response letter to the reviewers. All figures and tables were prepared by A.R., E.B.B., M.S. and N.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was approved by the Institutional Review Board (IRB) of the Holon Institute of Technology. The research was conducted in accordance with the institutional ethical guidelines and the principles of the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study. Each participant received a detailed explanation of the experimental protocol and voluntarily agreed to take part by signing a consent form, in accordance with the ethical guidelines of the Holon Institute of Technology.

Data Availability Statement

The data collected and analyzed in this study are not publicly available due to ethical restrictions and privacy considerations. All relevant analyses derived from the data are presented in the manuscript. The raw image data and participant information are securely stored in accordance with institutional guidelines at the Holon Institute of Technology and are not shared to protect participant confidentiality.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Franklin, M.S.; Smallwood, J.; Schooler, J.W. Catching the mind in flight: Using behavioral indices to detect mindless reading in real-time. Psychon. Bull. Rev. 2011, 18, 992–997.

- Unsworth, N.; McMillan, B.D. Mind wandering and reading comprehension: Examining the roles of working memory capacity, interest, motivation, and topic experience. J. Exp. Psychol. Learn. Mem. Cogn. 2013, 39, 832–842.

- He, H.; Chen, Y.; Li, T.; Li, H.; Zhang, X. The role of focus back effort in the relationships among motivation, interest, and mind wandering: An individual difference perspective. Cogn. Res. Princ. Implic. 2023, 8, 43.

- Franklin, M.S.; Mooneyham, B.W.; Baird, B.; Schooler, J.W. Thinking one thing, saying another: The behavioral correlates of mind-wandering while reading aloud. Psychon. Bull. Rev. 2014, 21, 205–210.

- Avital, N.; Egel, I.; Weinstock, I.; Malka, D. Enhancing real-time emotion recognition in classroom environments using convolutional neural networks: A step towards optical neural networks for advanced data processing. Inventions 2024, 9, 113.

- Tan, F.; Yu, Z.; Liu, K.; Li, T. Detection and positioning system for foreign body between subway doors and PSD. In Proceedings of the 2021 6th International Conference on Smart Grid and Electrical Automation (ICSGEA), Kunming, China, 29–30 May 2021; pp. 296–298.

- Tan, F.; Zhao, M.; Zhai, C. Foreign object detection in urban rail transit based on deep differentiation segmentation neural network. Heliyon 2024, 10, e37072.

- Leszczynski, M.; Chaieb, L.; Reber, T.P.; Derner, M.; Axmacher, N.; Fell, J. Mind wandering simultaneously prolongs reactions and promotes creative incubation. Sci. Rep. 2017, 7, 10197.

- Lowder, M.W.; Gordon, P.C. Focus takes time: Structural effects on reading. Psychon. Bull. Rev. 2015, 22, 1733–1738.

- Thomson, D.R.; Seli, P.; Besner, D.; Smilek, D. On the link between mind wandering and task performance over time. Conscious. Cogn. 2014, 27, 14–26.

- Lee, T.; Kim, D.; Park, S.; Kim, D.; Lee, S.-J. Predicting mind-wandering with facial videos in online lectures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, New Orleans, LA, USA, 18–24 June 2022; pp. 2104–2113.

- Bosch, N.; D’Mello, S.K. Automatic detection of mind wandering from video in the lab and the classroom. IEEE Trans. Affect. Comput. 2021, 12, 910–921.

- Turan, C.; Neergaard, K.D.; Lam, K.-M. Facial expressions of comprehension (FEC). IEEE Trans. Affect. Comput. 2022, 13, 20–34.

- Brishtel, I.; Khan, A.A.; Schmidt, T.; Dingler, T.; Ishimaru, S.; Dengel, A. Mind wandering in a multimodal reading setting: Behavior analysis & automatic detection using eye-tracking and an EDA sensor. Sensors 2020, 20, 2546.

- Hutt, S.; Wong, A.; Papoutsaki, A.; Baker, R.S.; Gold, J.I.; Mills, C. Webcam-based eye tracking to detect mind wandering and comprehension errors. Psychon. Bull. Rev. 2023, 56, 1–17.

- Singha, S. Gaze-Based Mind Wandering Detection Using Deep Learning. Master’s Thesis, Texas A&M University, Texas, USA, 2021.

- Lee, H.-H.; Chen, Z.-L.; Yeh, S.-L.; Hsiao, J.H.; Wu, A.-Y. When eyes wander around: Mind-wandering as revealed by eye movement analysis with hidden Markov models. Sensors 2021, 21, 7619.

- Avital, N.; Nahum, E.; Carmel Levi, G.; Malka, D. Cognitive state classification using convolutional neural networks on gamma-band EEG signals. Appl. Sci. 2024, 14, 5223.

- Taraban, O.; Heide, F.; Woollacott, M.; Chan, D. The effects of a mindful listening task on mind-wandering. Mindfulness 2016, 8, 433–443.

- Hollander, J.; Huette, S. Extracting blinks from continuous eye-tracking data in a mind-wandering paradigm. Conscious. Cogn. 2022, 100, 103303.

- Smilek, D.; Carrier, J.S.; Cheyne, J.A. Out of mind, out of sight: Eye blinking as an indicator and embodiment of mind wandering. Psychol. Sci. 2009, 21, 786–789.

- Cheng, M.J.; Rabiner, L.R.; Rosenberg, A.E.; McGonegal, C.A. Comparative performance study of several pitch detection algorithms. J. Acoust. Soc. Am. 1975, 58, 61–62.

- Gautam, G.; Shrestha, S.; Cho, S. Spectral analysis of rectangular, Hanning, Hamming, and Kaiser windows for digital FIR filter. Int. J. Adv. Smart Converg. 2015, 4, 138–144.

- Kumar, G.G.; Sahoo, S.K.; Meher, P.K. 50 years of FFT algorithms and applications. Circuits Syst. Signal Process. 2019, 38, 5665–5698.

- Hirst, D.J.; de Looze, C. Measuring speech: Fundamental frequency and pitch. In Cambridge Handbook of Phonetics; Cambridge University Press: Cambridge, UK, 2021; pp. 336–361.

- Podder, P.; Hasan, M.M.; Islam, M.R.; Sayeed, M. Design and implement Butterworth, Chebyshev-I, and elliptic filters for speech signal analysis. Int. J. Comput. Appl. 2014, 98, 12–18.

- Graf, S.; Herbig, T.; Buck, M.; Schmidt, G. Features for voice activity detection: A comparative analysis. EURASIP J. Adv. Signal Process. 2015, 91, 1–14.

- Wu, J.; Zhang, X.-L. An efficient voice activity detection algorithm by combining statistical model and energy detection. EURASIP J. Adv. Signal Process. 2011, 18, 1–9.

- Mills, C.; Graesser, A.; Risko, E.F.; D’Mello, S.K. Cognitive coupling during reading. J. Exp. Psychol. Gen. 2017, 146, 872–883.

- Leroy, G.; Kauchak, D. The effect of word familiarity on actual and perceived text difficulty. J. Am. Med. Inform. Assoc. 2013, 21, 169–172.

- Drummond, J.; Litman, D. In the zone: Towards detecting student zoning out using supervised machine learning. In Proceedings of the International Conference on Intelligent Tutoring Systems, Pittsburgh, PA, USA, 14–18 June 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 306–315.

- Dias da Silva, M.R.; Postma, M. Wandering minds, wandering mice: Computer mouse tracking as a method to detect mind wandering. Comput. Hum. Behav. 2020, 112, 106464.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).