ConvNeXt with Context-Weighted Deep Superpixels for High-Spatial-Resolution Aerial Image Semantic Segmentation

Abstract

1. Introduction

- (1) Global context projection: the framework explicitly models scene–object relationships by projecting global contextual information into local feature representations, which improves the modeling of spatial dependencies across large scenes.

- (2) Context-weighted superpixel embeddings: the framework generates superpixel-level feature embeddings weighted by global context, yielding discriminative region-level semantic representations while preserving fine-grained details.

- (3) Superpixel-guided upsampling: the framework uses region-level shape priors from superpixels to guide the upsampling process, refining object boundaries and reducing spatial misalignment in the segmentation predictions.
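
The three contributions can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on stated assumptions: the global context step is assumed to follow the GCNet-style block cited in this paper [19] (simplified, with LayerNorm omitted); the pixel-to-superpixel association `q` is assumed to be a soft assignment matrix of the kind predicted by FCN-based superpixel methods [58], replaced here by a hard 2 × 2 block grid; and reusing the context attention map as the per-pixel weight for superpixel pooling is a hypothetical reading of "context-weighted". All function names are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def global_context(feat, w_k, w_1, w_2):
    """Simplified GCNet-style block (assumption). feat: (C, H, W);
    w_k: (C,); w_1: (C_r, C); w_2: (C, C_r). Returns (output, attention)."""
    c, h, w = feat.shape
    x = feat.reshape(c, h * w)                # (C, N) flattened pixels
    attn = softmax(w_k @ x)                   # (N,) weight of each pixel in the scene summary
    ctx = x @ attn                            # (C,) global context vector
    delta = w_2 @ np.maximum(w_1 @ ctx, 0.0)  # bottleneck transform with ReLU
    return feat + delta[:, None, None], attn  # add the same context to every pixel

def superpixel_embed(x, q, pix_w):
    """Hypothetical context-weighted superpixel pooling.
    x: (C, N) pixel features; q: (N, K) pixel-to-superpixel association
    (rows sum to 1); pix_w: (N,) nonnegative per-pixel weights."""
    m = q * pix_w[:, None]                    # (N, K) weighted memberships
    return (x @ m) / (m.sum(axis=0) + 1e-8)   # (C, K) weighted mean per superpixel

def superpixel_upsample(emb, q):
    """Map superpixel embeddings back to pixels through the association."""
    return emb @ q.T                          # (C, N)

# Toy run: random features, hard 2x2-block superpixels on a 4x4 grid.
rng = np.random.default_rng(0)
C, H, W, K = 8, 4, 4, 4
feat = rng.normal(size=(C, H, W))
out, attn = global_context(feat, rng.normal(size=C),
                           rng.normal(size=(2, C)), rng.normal(size=(C, 2)))
labels = (np.arange(H)[:, None] // 2) * 2 + (np.arange(W)[None, :] // 2)
q = np.eye(K)[labels.reshape(-1)]             # (N, K) one-hot stand-in for a learned q
x = out.reshape(C, -1)
emb = superpixel_embed(x, q, attn)            # context-weighted region embeddings
x_hat = superpixel_upsample(emb, q)           # back to full pixel resolution
```

With a one-hot `q` the upsampled map is constant inside each superpixel, which is the sense in which superpixel shapes act as a boundary prior; a learned soft `q` would instead blend neighboring superpixels near edges.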

2. Related Works

2.1. General Semantic Segmentation

2.2. Semantic Segmentation in Aerial Images

3. Methods

3.1. Symmetric Encoder

3.2. Deep Superpixel Module with Integrated Contextual Attention

3.3. Decoder

3.4. Loss Function

4. Experimental Setting

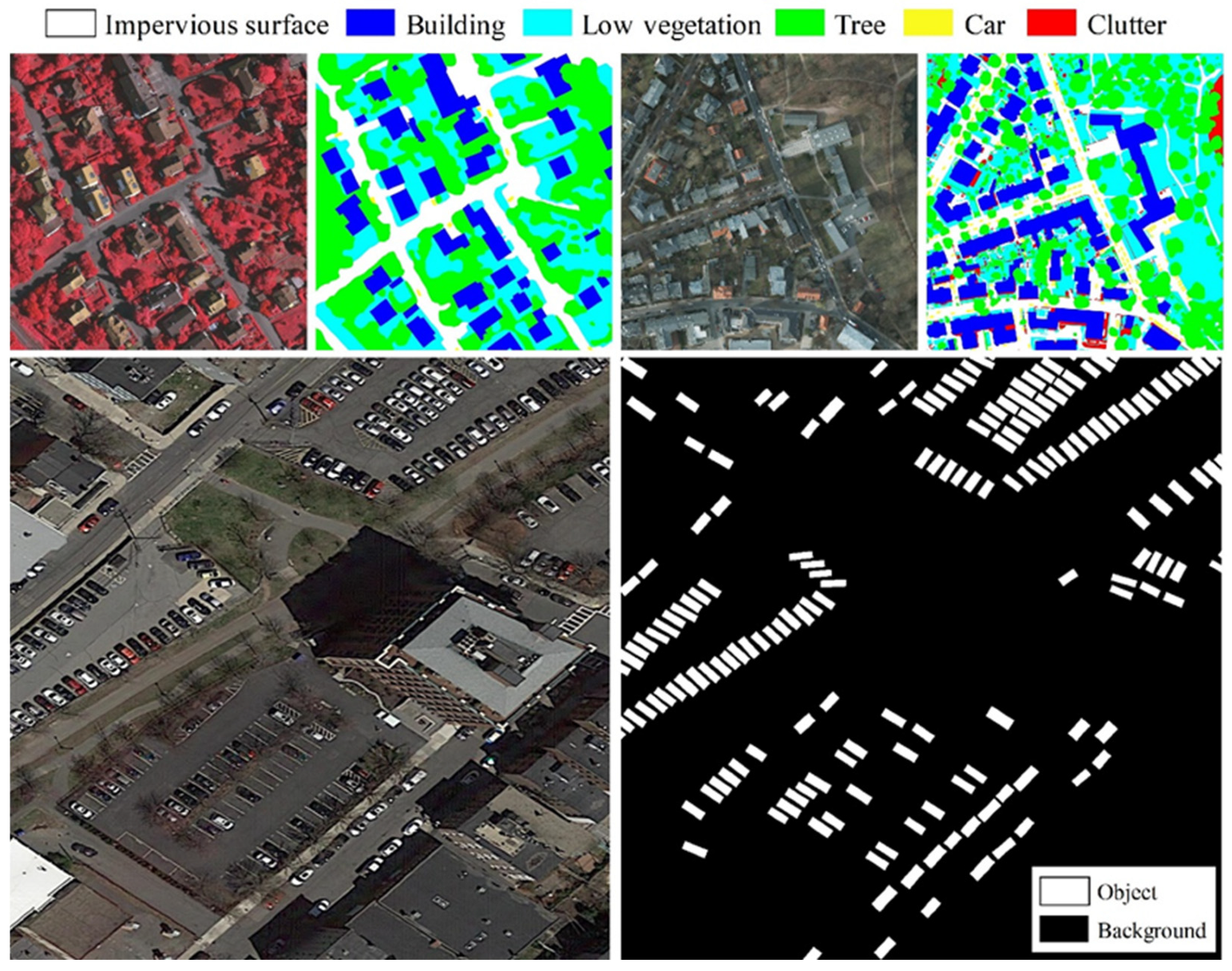

4.1. Datasets

1. Vaihingen Dataset.

2. Potsdam Dataset.

3. UV6K Dataset.

4.2. Implementation Details

5. Results and Analysis

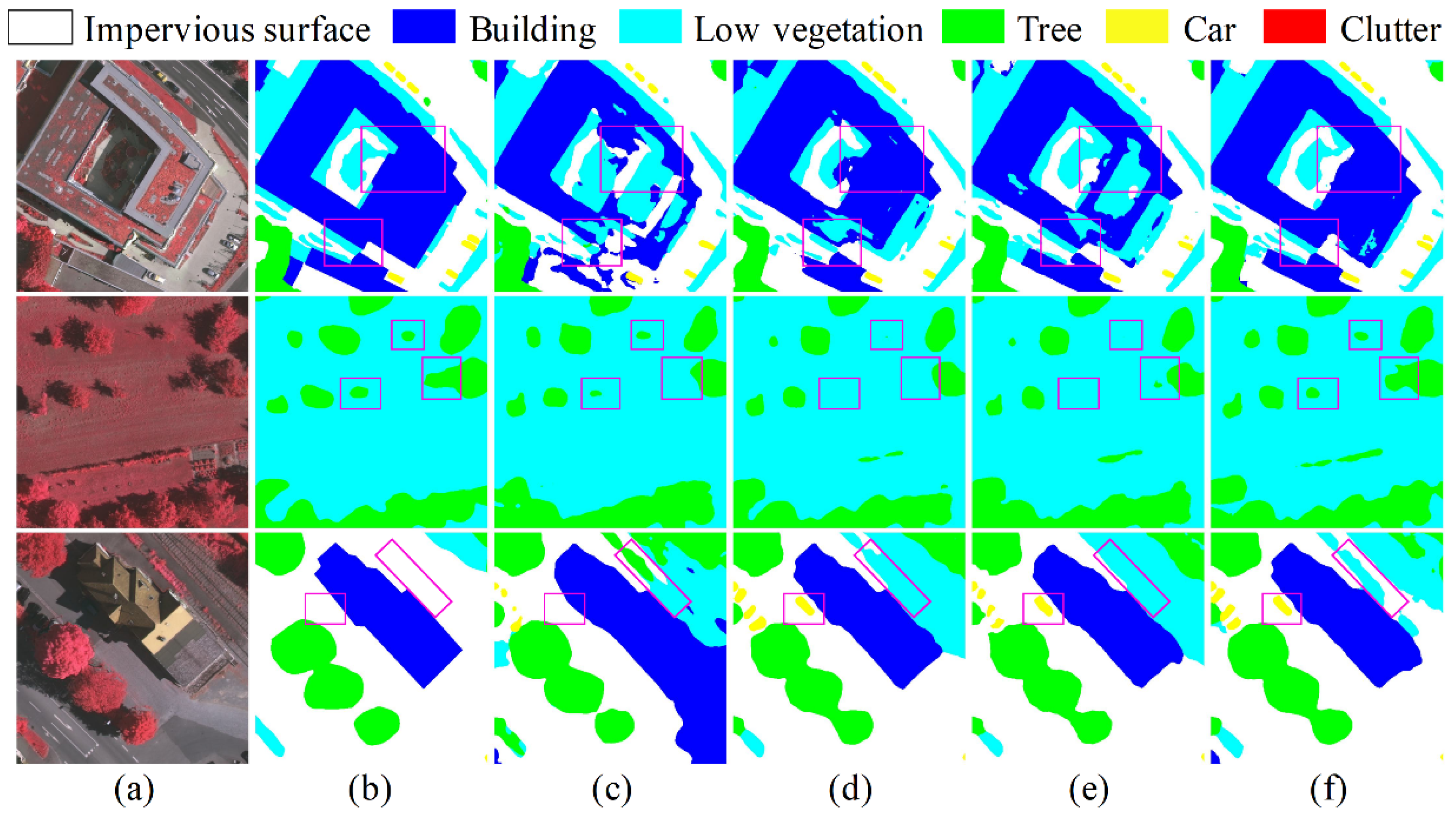

5.1. Results and Comparison on the Vaihingen Dataset

5.2. Results and Comparison on the Potsdam Dataset

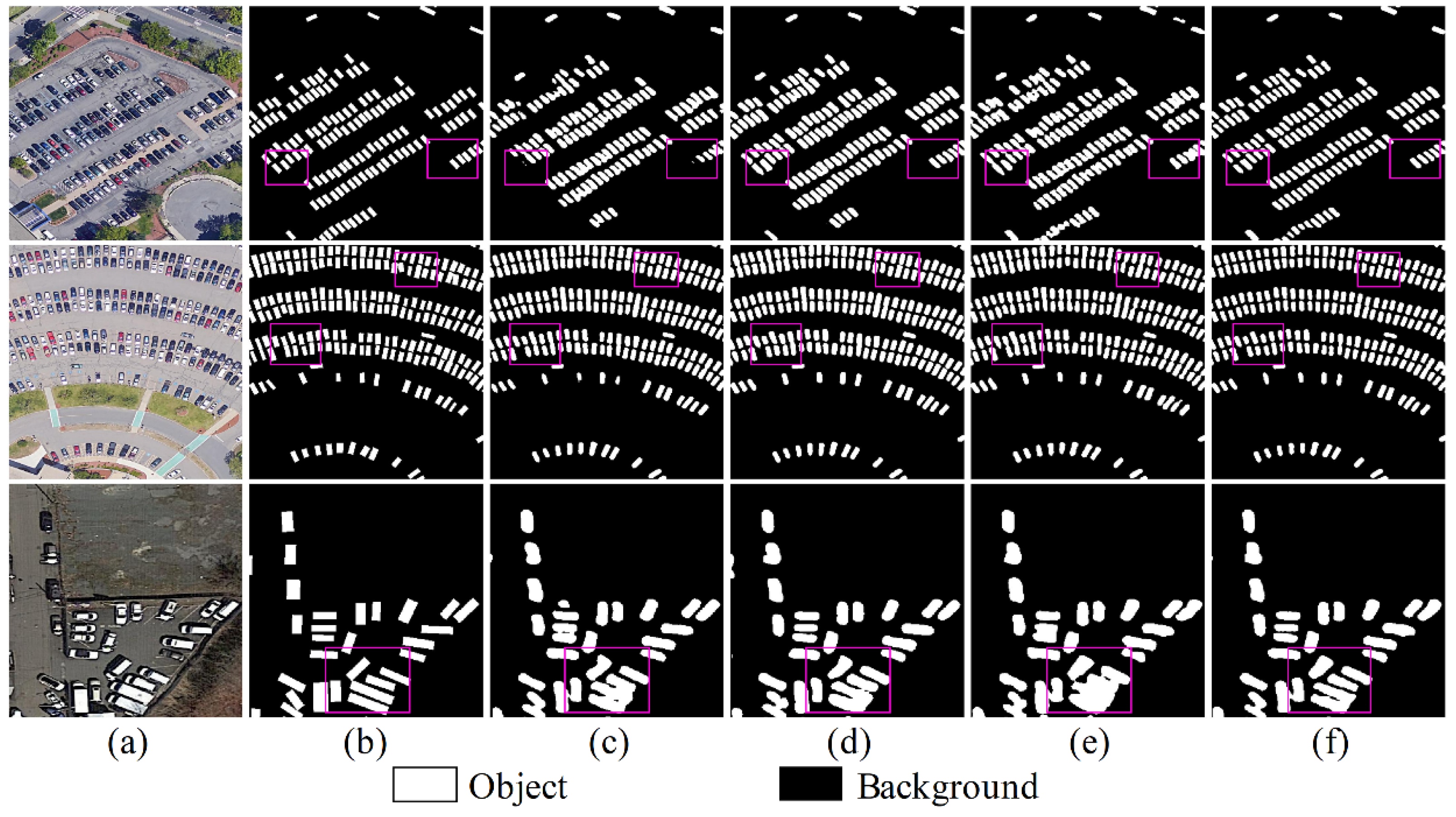

5.3. Results and Comparison on the UV6K Dataset

5.4. Ablation Studies

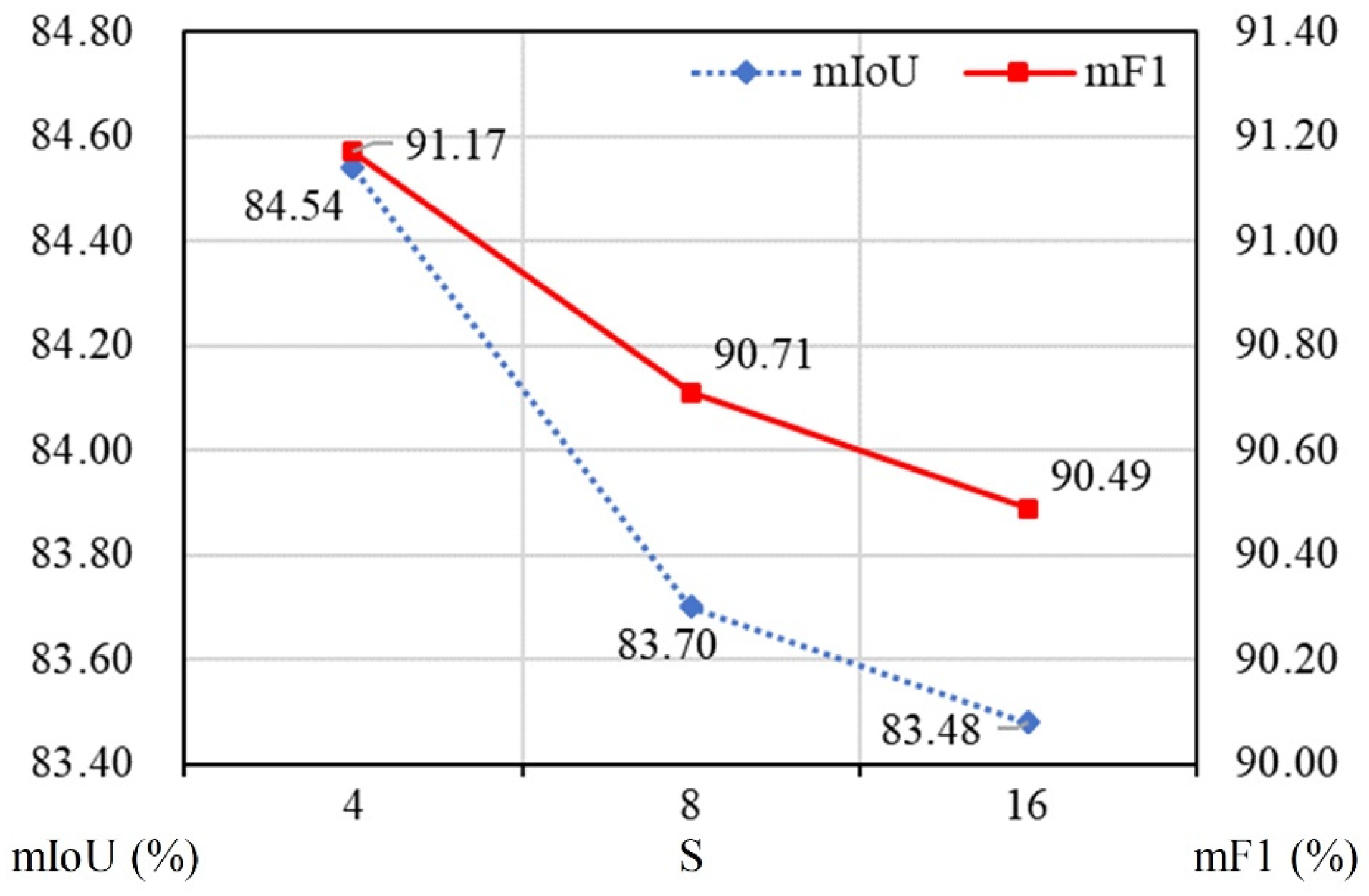

5.5. Sensitivity Analysis of the Spixel Module

5.6. Model Complexity Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Name | Kernel | Stride | Input | OutDim |
|---|---|---|---|---|
| Input | | | | |
| Image | | | | H × W × 3 |
| Stem | | | | |
| Conv0 | 4 × 4, 128 | 4 | Image | |
| Encoder feature extractor | | | | |
| Conv_S2 | | 1 | Conv0 | |
| Conv_S3 | | 2 | Conv_S2 | |
| Conv_S4 | | 2 | Conv_S3 | |
| Conv_S5 | | 2 | Conv_S4 | |
| PPM_feat | Pyramid Pooling Module | 1 | Conv_S5 | |
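
The strides in the table fix the spatial resolution of each stage. The sketch below propagates an input size through them and counts the parameters of the 4 × 4, 128-channel stem. The 512 × 512 input size is an assumption for illustration (the tile size is not given here), and the parameter count assumes a standard convolution with bias applied to a 3-channel image; the helper names are hypothetical.

```python
def stage_resolutions(h, w, layers):
    """Propagate (H, W) through sequential (name, stride) pairs.
    Assumes each stride divides the current size exactly."""
    sizes = {"Image": (h, w)}
    cur = (h, w)
    for name, stride in layers:
        assert cur[0] % stride == 0 and cur[1] % stride == 0, name
        cur = (cur[0] // stride, cur[1] // stride)
        sizes[name] = cur
    return sizes

def conv_params(k, c_in, c_out, bias=True):
    """Weights (k * k * c_in * c_out) plus an optional bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)

# Strides copied from the Appendix A table; each layer feeds the next one.
LAYERS = [("Conv0", 4), ("Conv_S2", 1), ("Conv_S3", 2),
          ("Conv_S4", 2), ("Conv_S5", 2), ("PPM_feat", 1)]

sizes = stage_resolutions(512, 512, LAYERS)  # 512 x 512 is an assumed tile size
stem = conv_params(4, 3, 128)                # 4 x 4 kernel, RGB in, 128 out
for name, (h, w) in sizes.items():
    print(f"{name}: {h} x {w}")
print("stem parameters:", stem)
```

The cumulative stride is 4 × 1 × 2 × 2 × 2 = 32, so the pyramid pooling module operates on a map 1/32 the size of the input in each dimension.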

| Method | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | mIoU (%) | mF1 (%) |
|---|---|---|---|---|---|---|---|
| the proposed model | 88.52 ± 0.25 | 93.79 ± 0.15 | 74.86 ± 0.45 | 82.54 ± 0.19 | 82.57 ± 0.74 | 84.46 ± 0.09 | 91.07 ± 0.10 |

| Method | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | mIoU (%) | mF1 (%) |
|---|---|---|---|---|---|---|---|
| the proposed model | 92.50 ± 0.12 | 97.30 ± 0.15 | 83.17 ± 0.27 | 82.85 ± 0.20 | 96.44 ± 0.11 | 90.45 ± 0.12 | 93.58 ± 0.08 |

| Method | Backbone | IoU (%) | F1 (%) |
|---|---|---|---|
| the proposed model | ConvNeXt-T | 64.40 ± 0.06 | 78.35 ± 0.05 |

References

1. Lin, Y.; Zhang, M.; Gan, M.; Huang, L.; Zhu, C.; Zheng, Q.; You, S.; Ye, Z.; Shahtahmassebi, A.; Li, Y.; et al. Fine Identification of the Supply–Demand Mismatches and Matches of Urban Green Space Ecosystem Services with a Spatial Filtering Tool. J. Clean. Prod. 2022, 336, 130404.
2. Lin, Y.; An, W.; Gan, M.; Shahtahmassebi, A.; Ye, Z.; Huang, L.; Zhu, C.; Huang, L.; Zhang, J.; Wang, K. Spatial Grain Effects of Urban Green Space Cover Maps on Assessing Habitat Fragmentation and Connectivity. Land 2021, 10, 1065.
3. He, T.; Hu, Y.; Guo, A.; Chen, Y.; Yang, J.; Li, M.; Zhang, M. Quantifying the Impact of Urban Trees on Land Surface Temperature in Global Cities. ISPRS J. Photogramm. Remote Sens. 2024, 210, 69–79.
4. Fang, C.; Fan, X.; Wang, X.; Nava, L.; Zhong, H.; Dong, X.; Qi, J.; Catani, F. A Globally Distributed Dataset of Coseismic Landslide Mapping via Multi-Source High-Resolution Remote Sensing Images. Earth Syst. Sci. Data 2024, 16, 4817–4842.
5. Victor, N.; Maddikunta, P.K.R.; Mary, D.R.K.; Murugan, R.; Chengoden, R.; Gadekallu, T.R.; Rakesh, N.; Zhu, Y.; Paek, J. Remote Sensing for Agriculture in the Era of Industry 5.0—A Survey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5920–5945.
6. Lehouel, K.; Saber, C.; Bouziani, M.; Yaagoubi, R. Remote Sensing Crop Water Stress Determination Using CNN-ViT Architecture. AI 2024, 5, 618–634.
7. Banerjee, S.; Reynolds, J.; Taggart, M.; Daniele, M.; Bozkurt, A.; Lobaton, E. Quantifying Visual Differences in Drought-Stressed Maize through Reflectance and Data-Driven Analysis. AI 2024, 5, 790–802.
8. Zhao, W.; Du, S.; Emery, W.J. Object-Based Convolutional Neural Network for High-Resolution Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3386–3396.
9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; pp. 234–241.
10. Chen, L.C.; Barron, J.T.; Papandreou, G.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Task-Specific Edge Detection Using CNNs and a Discriminatively Trained Domain Transform. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4545–4554.
11. Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017.
12. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.
13. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.
14. Wang, J.; Zheng, Y.; Wang, M.; Shen, Q.; Huang, J. Object-Scale Adaptive Convolutional Neural Networks for High-Spatial Resolution Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 283–299.
15. Liu, S.; Zhao, D.; Zhou, Y.; Tan, Y.; He, H.; Zhang, Z.; Tang, L. Network and Dataset for Multiscale Remote Sensing Image Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 2851–2866.
16. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2011–2023.
17. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
18. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.
19. Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-Local Networks Meet Squeeze-Excitation Networks and Beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.
20. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the ICCV, Virtual, 11–17 October 2021; pp. 9992–10002.
21. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.
22. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like Transformer for Efficient Semantic Segmentation of Remote Sensing Urban Scene Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.
23. Meng, X.; Yang, Y.; Wang, L.; Wang, T.; Li, R.; Zhang, C. Class-Guided Swin Transformer for Semantic Segmentation of Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517505.
24. Sun, D.; Bao, Y.; Liu, J.; Cao, X. A Lightweight Sparse Focus Transformer for Remote Sensing Image Change Captioning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 18727–18738.
25. Wang, Z.; Gao, F.; Dong, J.; Du, Q. Global and Local Attention-Based Transformer for Hyperspectral Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2024, 22, 5500405.
26. Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 11966–11976.
27. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
28. Ye, Z.; Fu, Y.; Gan, M.; Deng, J.; Comber, A.; Wang, K. Building Extraction from Very High Resolution Aerial Imagery Using Joint Attention Deep Neural Network. Remote Sens. 2019, 11, 2970.
29. Li, R.; Liu, W.; Yang, L.; Sun, S.; Hu, W.; Zhang, F.; Li, W. DeepUNet: A Deep Fully Convolutional Network for Pixel-Level Sea-Land Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3954–3962.
30. Liu, B.; Li, B.; Sreeram, V.; Li, S. MBT-UNet: Multi-Branch Transform Combined with UNet for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2024, 16, 2776.
31. Lateef, F.; Ruichek, Y. Survey on Semantic Segmentation Using Deep Learning Techniques. Neurocomputing 2019, 338, 321–348.
32. Ye, Z.; Tan, X.; Dai, M.; Chen, X.; Zhong, Y.; Zhang, Y.; Ruan, Y.; Kong, D. A Hyperspectral Deep Learning Attention Model for Predicting Lettuce Chlorophyll Content. Plant Methods 2024, 20, 22.
33. Ma, F.; Sun, X.; Zhang, F.; Zhou, Y.; Li, H.-C. What Catch Your Attention in SAR Images: Saliency Detection Based on Soft-Superpixel Lacunarity Cue. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5200817.
34. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.
35. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 205–218.
36. Liu, W.; Liu, J.; Luo, Z.; Zhang, H.; Gao, K.; Li, J. Weakly Supervised High Spatial Resolution Land Cover Mapping Based on Self-Training with Weighted Pseudo-Labels. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102931.
37. Liu, S.; Cao, S.; Lu, X.; Peng, J.; Ping, L.; Fan, X.; Teng, F.; Liu, X. Lightweight Deep Learning Model, ConvNeXt-U: An Improved U-Net Network for Extracting Cropland in Complex Landscapes from Gaofen-2 Images. Sensors 2025, 25, 261.
38. Chen, D.; Ma, A.; Zhong, Y. Semi-Supervised Knowledge Distillation Framework for Global-Scale Urban Man-Made Object Remote Sensing Mapping. Int. J. Appl. Earth Obs. Geoinf. 2023, 122, 103439.
39. Dong, D.; Ming, D.; Weng, Q.; Yang, Y.; Fang, K.; Xu, L.; Du, T.; Zhang, Y.; Liu, R. Building Extraction from High Spatial Resolution Remote Sensing Images of Complex Scenes by Combining Region-Line Feature Fusion and OCNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4423–4438.
40. Dai, L.; Zhang, G.; Zhang, R. RADANet: Road Augmented Deformable Attention Network for Road Extraction From Complex High-Resolution Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5602213.
41. Lyu, Y.; Vosselman, G.; Xia, G.-S.; Yilmaz, A.; Yang, M.Y. UAVid: A Semantic Segmentation Dataset for UAV Imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119.
42. Feng, L.; Chen, S.; Zhang, C.; Zhang, Y.; He, Y. A Comprehensive Review on Recent Applications of Unmanned Aerial Vehicle Remote Sensing with Various Sensors for High-Throughput Plant Phenotyping. Comput. Electron. Agric. 2021, 182, 106033.
43. Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A.; Zhang, L. FarSeg++: Foreground-Aware Relation Network for Geospatial Object Segmentation in High Spatial Resolution Remote Sensing Imagery. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13715–13729.
44. Zheng, Z.; Yu, S.; Jiang, S. A Domain Adaptation Method for Land Use Classification Based on Improved HR-Net. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4400911.
45. Ma, Y.; Deng, X.; Wei, J. Land Use Classification of High-Resolution Multispectral Satellite Images with Fine-Grained Multiscale Networks and Superpixel Post Processing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3264–3278.
46. Formichini, M.; Avizzano, C.A. A Comparative Analysis of Deep Learning-Based Segmentation Techniques for Terrain Classification in Aerial Imagery. AI 2025, 6, 145.
47. Zhang, C.; Harrison, P.A.; Pan, X.; Li, H.; Sargent, I.; Atkinson, P.M. Scale Sequence Joint Deep Learning (SS-JDL) for Land Use and Land Cover Classification. Remote Sens. Environ. 2020, 237, 111593.
48. Li, Z.; Zhang, H.; Lu, F.; Xue, R.; Yang, G.; Zhang, L. Breaking the Resolution Barrier: A Low-to-High Network for Large-Scale High-Resolution Land-Cover Mapping Using Low-Resolution Labels. ISPRS J. Photogramm. Remote Sens. 2022, 192, 244–267.
49. Li, Y.; Zhou, Z.; Qi, G.; Hu, G.; Zhu, Z.; Huang, X. Remote Sensing Micro-Object Detection under Global and Local Attention Mechanism. Remote Sens. 2024, 16, 644.
50. Zhao, W.; Peng, S.; Chen, J.; Peng, R. Contextual-Aware Land Cover Classification with U-Shaped Object Graph Neural Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6510705.
51. Dong, B.; Zheng, Q.; Lin, Y.; Chen, B.; Ye, Z.; Huang, C.; Tong, C.; Li, S.; Deng, J.; Wang, K. Integrating Physical Model-Based Features and Spatial Contextual Information to Estimate Building Height in Complex Urban Areas. Int. J. Appl. Earth Obs. Geoinf. 2024, 126, 103625.
52. Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A Novel Transformer Based Semantic Segmentation Scheme for Fine-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105.
53. Ye, Z.; Lin, Y.; Dong, B.; Tan, X.; Dai, M.; Kong, D. An Object-Aware Network Embedding Deep Superpixel for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2024, 16, 3805.
54. Zhou, X.; Zhou, L.; Gong, S.; Zhong, S.; Yan, W.; Huang, Y. Swin Transformer Embedding Dual-Stream for Semantic Segmentation of Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 175–189.
55. Liang, S.; Hua, Z.; Li, J. Hybrid Transformer-CNN Networks Using Superpixel Segmentation for Remote Sensing Building Change Detection. Int. J. Remote Sens. 2023, 44, 2754–2780.
56. Fang, F.; Zheng, K.; Li, S.; Xu, R.; Hao, Q.; Feng, Y.; Zhou, S. Incorporating Superpixel Context for Extracting Building from High-Resolution Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 1176–1190.
57. Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11209, pp. 432–448.
58. Yang, F.; Sun, Q.; Jin, H.; Zhou, Z. Superpixel Segmentation with Fully Convolutional Networks. In Proceedings of the CVPR, Seattle, WA, USA, 14–19 June 2020; pp. 13961–13970.
59. Yuan, Y.; Chen, X.; Wang, J. Object-Contextual Representations for Semantic Segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 173–190.
60. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.
61. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4015–4026.
62. Ma, X.; Wu, Q.; Zhao, X.; Zhang, X.; Pun, M.-O.; Huang, B. SAM-Assisted Remote Sensing Imagery Semantic Segmentation with Object and Boundary Constraints. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5636916.
63. Ma, X.; Zhang, X.; Pun, M.-O. RS3Mamba: Visual State Space Model for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405.
64. Cai, Y.; Fan, L.; Fang, Y. SBSS: Stacking-Based Semantic Segmentation Framework for Very High-Resolution Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5600514.

| Method | Backbone | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | mIoU (%) | mF1 (%) |
|---|---|---|---|---|---|---|---|---|
| DeepLabv3+ [12] | ResNet-101 | 73.76 | 84.48 | 63.96 | 77.46 | 61.29 | 72.19 | 83.28 |
| OCRNet [59] | HRNet-48 | 87.62 | 92.84 | 74.55 | 82.46 | 82.73 | 84.04 | 90.71 |
| SegFormer [34] | MiT-B5 | 87.46 | 92.72 | 72.81 | 82.13 | 78.13 | 82.65 | 90.00 |
| UNetFormer [22] | ResNet-18 | 85.76 | 92.78 | 67.33 | 83.22 | 80.75 | 81.97 | 89.85 |
| RS3Mamba [63] | R18-MambaT | 86.62 | 93.83 | 67.84 | 83.66 | 81.97 | 82.78 | 90.34 |
| SAM-RS [62] | Swin-B | 88.20 | 94.53 | 69.16 | 84.53 | 83.64 | 84.01 | 91.08 |
| ESPNet [53] | ConvNeXt-T | 88.40 | 93.63 | 74.50 | 82.12 | 82.94 | 84.32 | 91.06 |
| the proposed model | ConvNeXt-T | 88.70 | 93.84 | 74.69 | 82.35 | 83.13 | 84.54 | 91.17 |
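
The IoU, mIoU, and mF1 columns follow the usual confusion-matrix definitions: per class, IoU = TP / (TP + FP + FN) and F1 = 2TP / (2TP + FP + FN), each then averaged over classes. The sketch below assumes the metrics are accumulated over the whole test set into one confusion matrix and that an ignore label (the value 255 here is a hypothetical choice) masks pixels excluded from evaluation; the exact protocol, such as whether eroded boundary labels are used, is not stated in this section. The toy labels are illustrative only.

```python
import numpy as np

def confusion_matrix(gt, pred, num_classes, ignore_index=255):
    """Rows index ground truth, columns index prediction; ignored pixels are dropped."""
    valid = gt != ignore_index
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def seg_metrics(cm):
    """Per-class IoU plus class-averaged mIoU and mF1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class c but labeled otherwise
    fn = cm.sum(axis=1) - tp  # labeled as class c but predicted otherwise
    iou = tp / np.maximum(tp + fp + fn, 1e-12)
    f1 = 2 * tp / np.maximum(2 * tp + fp + fn, 1e-12)
    return iou, iou.mean(), f1.mean()

# Toy 2 x 3 label maps with three classes.
gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
cm = confusion_matrix(gt, pred, num_classes=3)
iou, miou, mf1 = seg_metrics(cm)
print(cm)
print("IoU:", iou, "mIoU:", miou, "mF1:", mf1)
```

For a binary task such as the UV6K comparison, the same functions apply with two classes, reporting the IoU and F1 of the foreground class.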

| Method | Backbone | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | mIoU (%) | mF1 (%) |
|---|---|---|---|---|---|---|---|---|
| DeepLabv3+ [12] | ResNet-101 | 87.27 | 92.94 | 76.24 | 75.2 | 93.2 | 84.97 | 89.88 |
| GCNet [19] | ResNet-50 | 87.47 | 93.24 | 77.04 | 79.67 | 91.70 | 85.82 | / |
| OCRNet [59] | HRNet-48 | 91.53 | 96.04 | 80.7 | 80.75 | 95.86 | 88.98 | 92.69 |
| SegFormer [34] | MiT-B5 | 91.85 | 96.58 | 82.21 | 82.21 | 95.47 | 89.66 | 93.11 |
| SBSS-MS [64] | ConvNeXt-T | 88.95 | 94.88 | 79.42 | 81.82 | 93.34 | 87.68 | / |
| ESPNet [53] | ConvNeXt-T | 92.40 | 97.21 | 82.59 | 82.50 | 95.95 | 90.13 | 93.54 |
| the proposed model | ConvNeXt-T | 92.63 | 97.50 | 83.26 | 82.95 | 96.59 | 90.59 | 93.69 |

| Method | Backbone | IoU (%) | F1 (%) |
|---|---|---|---|
| DeepLabv3+ [12] | ResNet-101 | 53.63 | 69.82 |
| OCRNet [59] | HRNet-48 | 56.79 | 72.44 |
| SegFormer [34] | MiT-B5 | 63.68 | 77.81 |
| FarSeg++ [43] | ResNet-50 | 64.40 | 78.30 |
| ESPNet [53] | ConvNeXt-T | 63.28 | 77.51 |
| the proposed model | ConvNeXt-T | 64.46 | 78.39 |

| Method | ConvNeXt-T | GC-Module | Spixel | mIoU (%) | mF1 (%) | Δparams (M) |
|---|---|---|---|---|---|---|
| Baseline | √ | | | 83.13 | 90.37 | 0 |
| w/ global context | √ | √ | | 83.62 | 90.58 | 1.190 |
| w/ deep superpixel | √ | | √ | 84.18 | 90.98 | 0.005 |
| The proposed model | √ | √ | √ | 84.54 | 91.17 | 1.195 |
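
The ablation gains can be read off relative to the baseline. The short calculation below uses only the values in the table. It confirms that the parameter overheads add up exactly (1.190 M + 0.005 M = 1.195 M), and it shows that the combined mIoU gain (1.41 points) is smaller than the sum of the two individual gains (0.49 + 1.05 = 1.54 points), which suggests the two modules' improvements are not fully additive.

```python
# (mIoU %, mF1 %, extra parameters in M) copied from the ablation table.
ABLATION = {
    "Baseline": (83.13, 90.37, 0.000),
    "w/ global context": (83.62, 90.58, 1.190),
    "w/ deep superpixel": (84.18, 90.98, 0.005),
    "The proposed model": (84.54, 91.17, 1.195),
}

base_miou, base_mf1, _ = ABLATION["Baseline"]

# Gain of each configuration over the ConvNeXt-T baseline, in points.
gains = {name: (round(miou - base_miou, 2), round(mf1 - base_mf1, 2))
         for name, (miou, mf1, _) in ABLATION.items()}

# Sum of the single-module mIoU gains, to compare with the full model.
sum_of_parts = round(gains["w/ global context"][0] + gains["w/ deep superpixel"][0], 2)

# Do the per-module parameter overheads add up to the full model's overhead?
params_add_up = abs(ABLATION["w/ global context"][2] + ABLATION["w/ deep superpixel"][2]
                    - ABLATION["The proposed model"][2]) < 1e-9

for name, (d_miou, d_mf1) in gains.items():
    print(f"{name}: +{d_miou:.2f} mIoU, +{d_mf1:.2f} mF1")
print("sum of single-module mIoU gains:", sum_of_parts)
print("parameter overheads add up:", params_add_up)
```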

| Model | Backbone | FLOPs (G) | Parameters (M) | Memory (MB) | FPS (images/s) | mIoU (%) |
|---|---|---|---|---|---|---|
| DeepLabv3+ [12] | ResNet-101 | 127.45 | 60.21 | 1913 | 13.58 | 72.19 |
| OCRNet [59] | HRNet-48 | 82.74 | 70.53 | 1080 | 9.17 | 84.04 |
| SegFormer [34] | MiT-B5 | 3.14 | 2.55 | 1185 | 6.46 | 82.65 |
| UNetFormer [22] | ResNet-18 | 5.87 | 11.68 | 167 | 2.87 | 81.97 |
| RS3Mamba [63] | R18-MambaT | 28.25 | 43.32 | 2332 | / | 82.78 |
| SAM-RS [62] | Swin-B | 50.84 | 96.14 | 5176 | 3.50 | 84.01 |
| ConvNeXt-U [37] | ConvNeXt | 15.61 | 80.30 | 669 | 16.13 | 60.43 |
| the proposed model | ConvNeXt-T | 133.21 | 83.69 | 1087 | 2.99 | 84.54 |
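
FPS figures depend on hardware, input size, batch size, and numerical precision, none of which are specified in this table. A minimal, framework-agnostic timing sketch is shown below. `measure_fps` and `dummy_infer` are hypothetical names; `infer` stands for any single-image inference callable, and warm-up calls are excluded from timing. For GPU inference, the device should be synchronized before each clock read (for example with `torch.cuda.synchronize()` in PyTorch), since kernels are launched asynchronously.

```python
import time

def measure_fps(infer, inputs, warmup=5, repeats=20):
    """Images per second for `infer` applied to each item of `inputs`.
    Warm-up calls are run first and are not timed."""
    for x in inputs[:warmup]:
        infer(x)
    # On a GPU, synchronize the device here before starting the clock.
    n = 0
    start = time.perf_counter()
    for _ in range(repeats):
        for x in inputs:
            infer(x)
            n += 1
    # On a GPU, synchronize the device here before stopping the clock.
    elapsed = time.perf_counter() - start
    return n / elapsed

# Usage with a stand-in workload instead of a real segmentation model.
calls = []

def dummy_infer(x):
    calls.append(1)
    return sum(v * v for v in x)

fps = measure_fps(dummy_infer, [list(range(1000))] * 4, warmup=2, repeats=5)
print(f"{fps:.1f} images/s")
```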

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ye, Z.; Lin, Y.; Gan, M.; Tan, X.; Dai, M.; Kong, D. ConvNeXt with Context-Weighted Deep Superpixels for High-Spatial-Resolution Aerial Image Semantic Segmentation. AI 2025, 6, 277. https://doi.org/10.3390/ai6110277

Ye Z, Lin Y, Gan M, Tan X, Dai M, Kong D. ConvNeXt with Context-Weighted Deep Superpixels for High-Spatial-Resolution Aerial Image Semantic Segmentation. AI. 2025; 6(11):277. https://doi.org/10.3390/ai6110277

Chicago/Turabian Style: Ye, Ziran, Yue Lin, Muye Gan, Xiangfeng Tan, Mengdi Dai, and Dedong Kong. 2025. "ConvNeXt with Context-Weighted Deep Superpixels for High-Spatial-Resolution Aerial Image Semantic Segmentation." AI 6, no. 11: 277. https://doi.org/10.3390/ai6110277

APA Style: Ye, Z., Lin, Y., Gan, M., Tan, X., Dai, M., & Kong, D. (2025). ConvNeXt with Context-Weighted Deep Superpixels for High-Spatial-Resolution Aerial Image Semantic Segmentation. AI, 6(11), 277. https://doi.org/10.3390/ai6110277