Attention-Based Multi-Agent RL for Multi-Machine Tending Using Mobile Robots

Abstract

1. Introduction

- Address a realistic machine-tending scenario with decentralized execution, part picking, and delivery;

- Design a novel dense reward function specifically for this scenario;

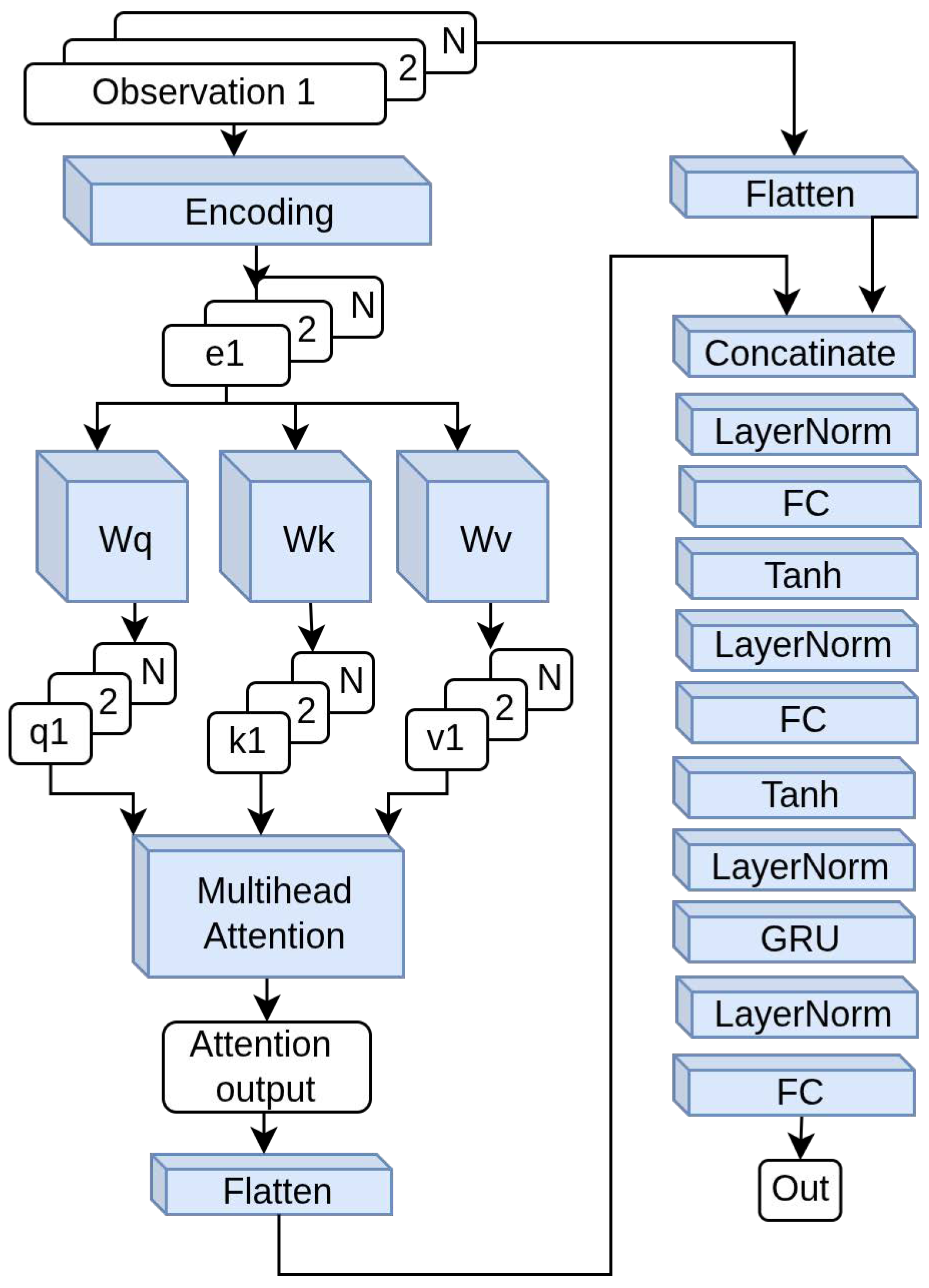

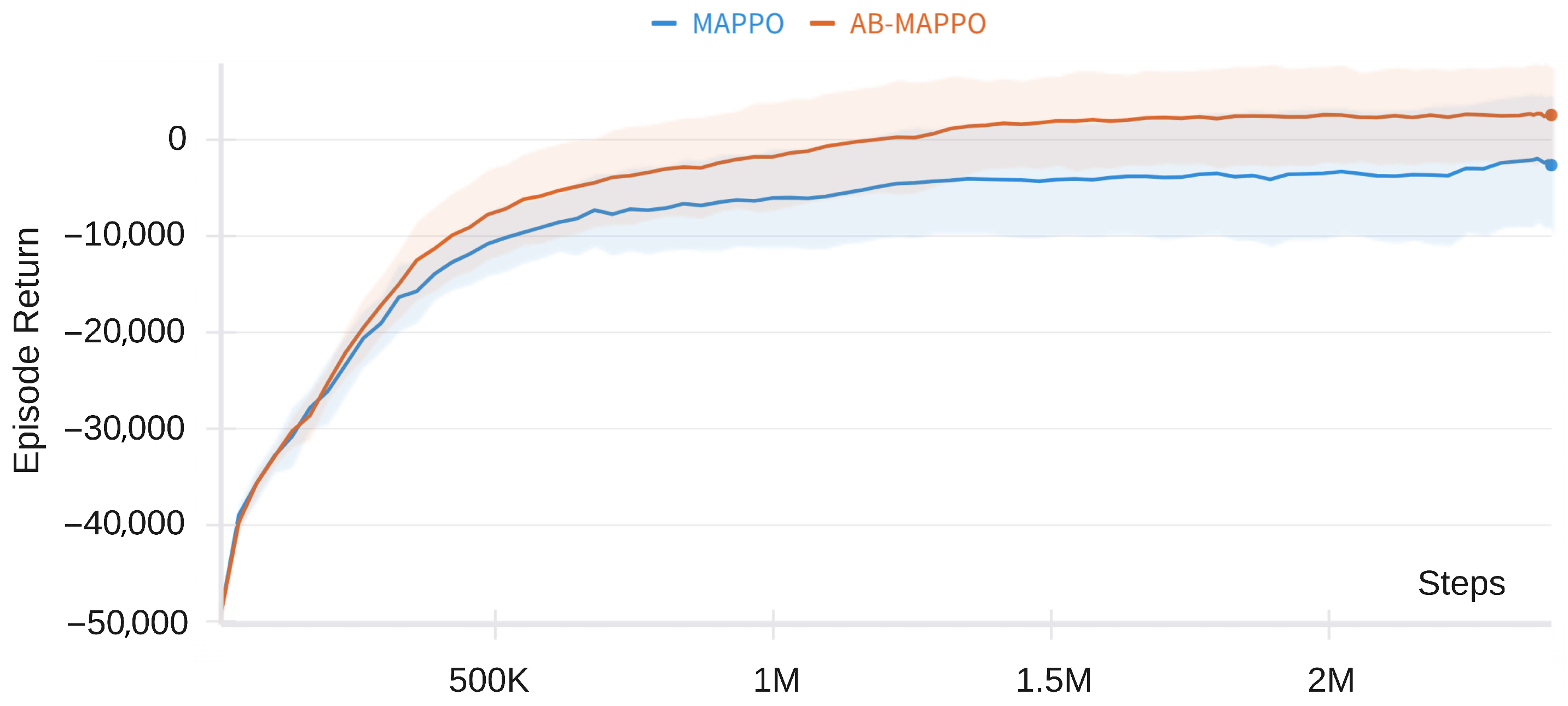

- Design an attention-based encoding technique and incorporate it into a working new model (AB-MAPPO).

2. Background and Related Work

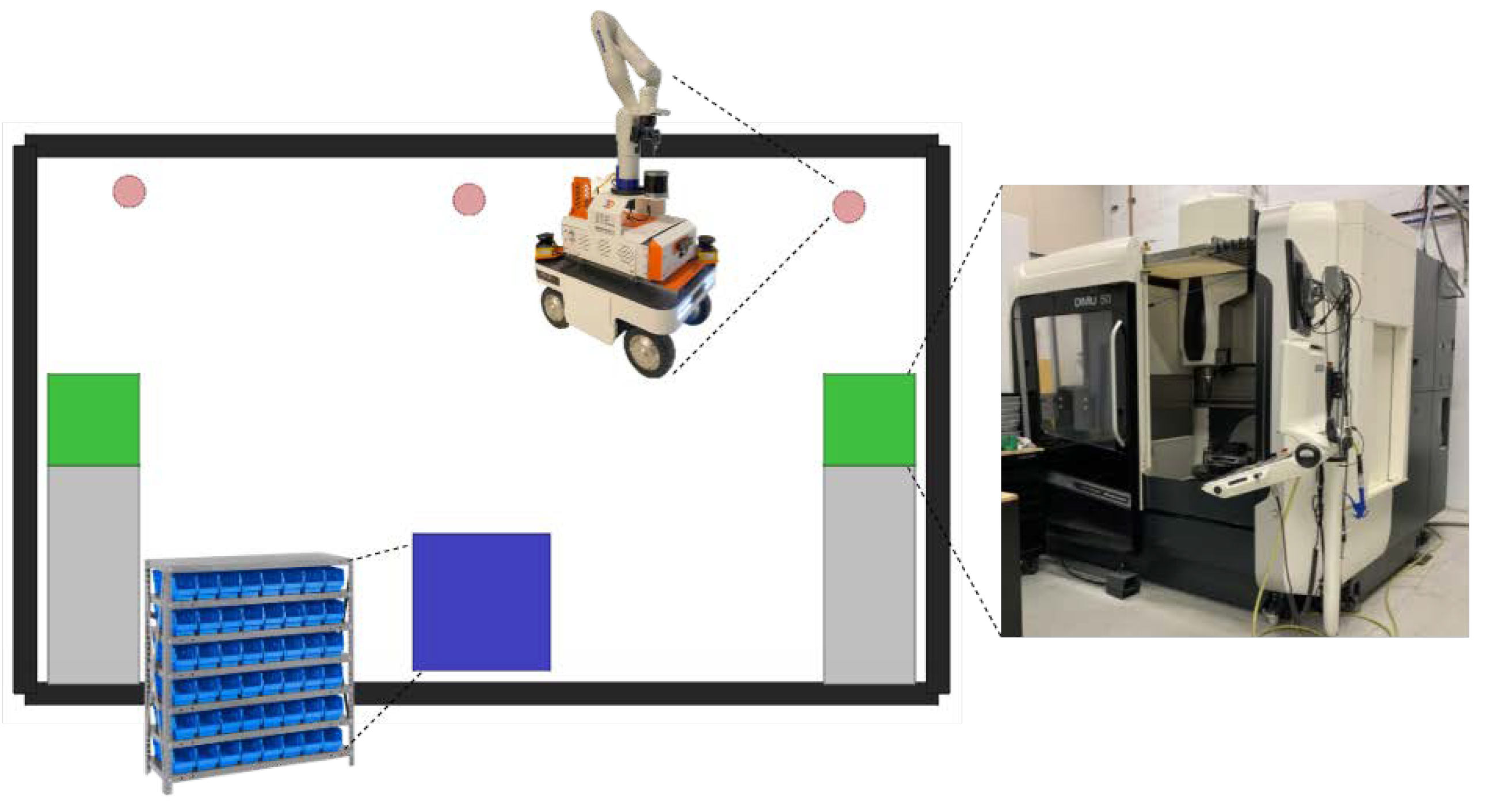

2.1. Machine Tending

2.2. RL for Machine Tending

3. Problem Definition

- Coordination: Agents autonomously determine their targets to optimize machine usage and minimize collision risks.

- Navigation: Agents must navigate efficiently to and from machines and transport parts to the storage area, while maintaining safety by avoiding collisions with other agents and static obstacles.

- Cooperative–Competitive Dynamics: Agents compete for parts; the first to arrive at a machine can claim the part, requiring others to wait for the next availability.

- Temporal Reasoning: Agents must sequence their actions correctly, ensuring machines are fed or parts are collected and delivered at appropriate times.

4. Methodology

4.1. MAPPO Backbone

4.2. Novel Attention-Based Encoding for MAPPO

4.3. Observation Design

- The agent’s absolute position and a “has part” flag indicating whether it has picked up a part but not yet placed it.

- The relative positions of each machine, accompanied by a flag indicating the readiness of a part at each location.

- The relative position of the storage area.

- The relative positions of other agents, along with their respective “has part” flags.
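The four observation components listed above can be flattened into a single vector per agent. The following is a minimal sketch of one possible layout, not the authors' implementation: the names `AgentState`, `MachineState`, and `build_observation`, the ordering of the entries, and the use of plain 2D tuples are all assumptions made for illustration. It covers only the components listed above.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]


@dataclass
class AgentState:  # hypothetical container, not from the paper
    pos: Vec2
    has_part: bool  # picked up a part but not yet placed it


@dataclass
class MachineState:  # hypothetical container, not from the paper
    pos: Vec2
    part_ready: bool


def rel(target: Vec2, origin: Vec2) -> Vec2:
    """Position of `target` relative to `origin`."""
    return (target[0] - origin[0], target[1] - origin[1])


def build_observation(
    me: AgentState,
    machines: Sequence[MachineState],
    storage_pos: Vec2,
    others: Sequence[AgentState],
) -> List[float]:
    # 1) Own absolute position and "has part" flag.
    obs = [me.pos[0], me.pos[1], float(me.has_part)]
    # 2) Relative position of each machine plus its part-ready flag.
    for m in machines:
        dx, dy = rel(m.pos, me.pos)
        obs += [dx, dy, float(m.part_ready)]
    # 3) Relative position of the storage area.
    obs += list(rel(storage_pos, me.pos))
    # 4) Relative position of every other agent plus its "has part" flag.
    for o in others:
        dx, dy = rel(o.pos, me.pos)
        obs += [dx, dy, float(o.has_part)]
    return obs
```

Under this assumed layout, the vector length is 3 + 3M + 2 + 3(N − 1) for M machines and N agents, so it grows linearly with the number of entities.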

4.4. Reward Design

- Pick Reward: Granted when an agent without a part reaches a machine with a ready part.

- Place Reward: Awarded when an agent carrying a part reaches the storage area and places the part.

- Collision Penalty: Incurred upon collision with other agents, walls, machines, or the storage area.

- Distance Rewards: Progress towards the closest machine with a ready part and towards the storage area is rewarded to provide continuous feedback. Each reward is calculated from the reduction in distance to the target between consecutive steps, multiplied by a scaling factor.

- Uncollected Parts Penalty: To discourage idle machines, a penalty is applied at each step for uncollected parts, calculated as the number of steps a part i has remained uncollected multiplied by a scaling factor u.

- Time Penalty: Imposed to encourage movement, particularly when no other rewards or penalties are being applied.
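To make the interaction of these terms concrete, the sketch below combines them into a single per-step reward using the values from Appendix A. It rests on several assumptions that the text does not confirm: the terms are simply summed, the time penalty is applied at every step, and with the fixed uncollected-parts penalty enabled each waiting part costs a constant amount per step instead of an amount that grows with waiting time. The names `RewardParams` and `step_reward` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class RewardParams:
    # Values taken from the Appendix A table.
    pick: float = 15.0
    place: float = 30.0
    collision: float = -10.0
    distance_scale: float = 10.0
    uncollected: float = -1.0
    time: float = -0.01
    fixed_uncollected: bool = True


def step_reward(
    p: RewardParams,
    *,
    picked: bool,
    placed: bool,
    collided: bool,
    prev_dist: float,  # distance to the current target at the previous step
    curr_dist: float,  # distance to the current target at this step
    uncollected_steps: Sequence[int],  # per machine: steps its part has waited
) -> float:
    reward = p.time  # assumption: time penalty applied at every step
    if picked:
        reward += p.pick
    if placed:
        reward += p.place
    if collided:
        reward += p.collision
    # Dense shaping term: positive when the agent moved closer to its target.
    reward += p.distance_scale * (prev_dist - curr_dist)
    for steps in uncollected_steps:
        if steps > 0:
            # Fixed mode: constant cost per waiting part (assumption).
            # Increasing mode: cost grows with the number of waiting steps.
            reward += p.uncollected if p.fixed_uncollected else p.uncollected * steps
    return reward
```

The caller is expected to choose the target (closest machine with a ready part, or the storage area) before computing `prev_dist` and `curr_dist`; that choice is left outside the sketch.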

5. Experiments

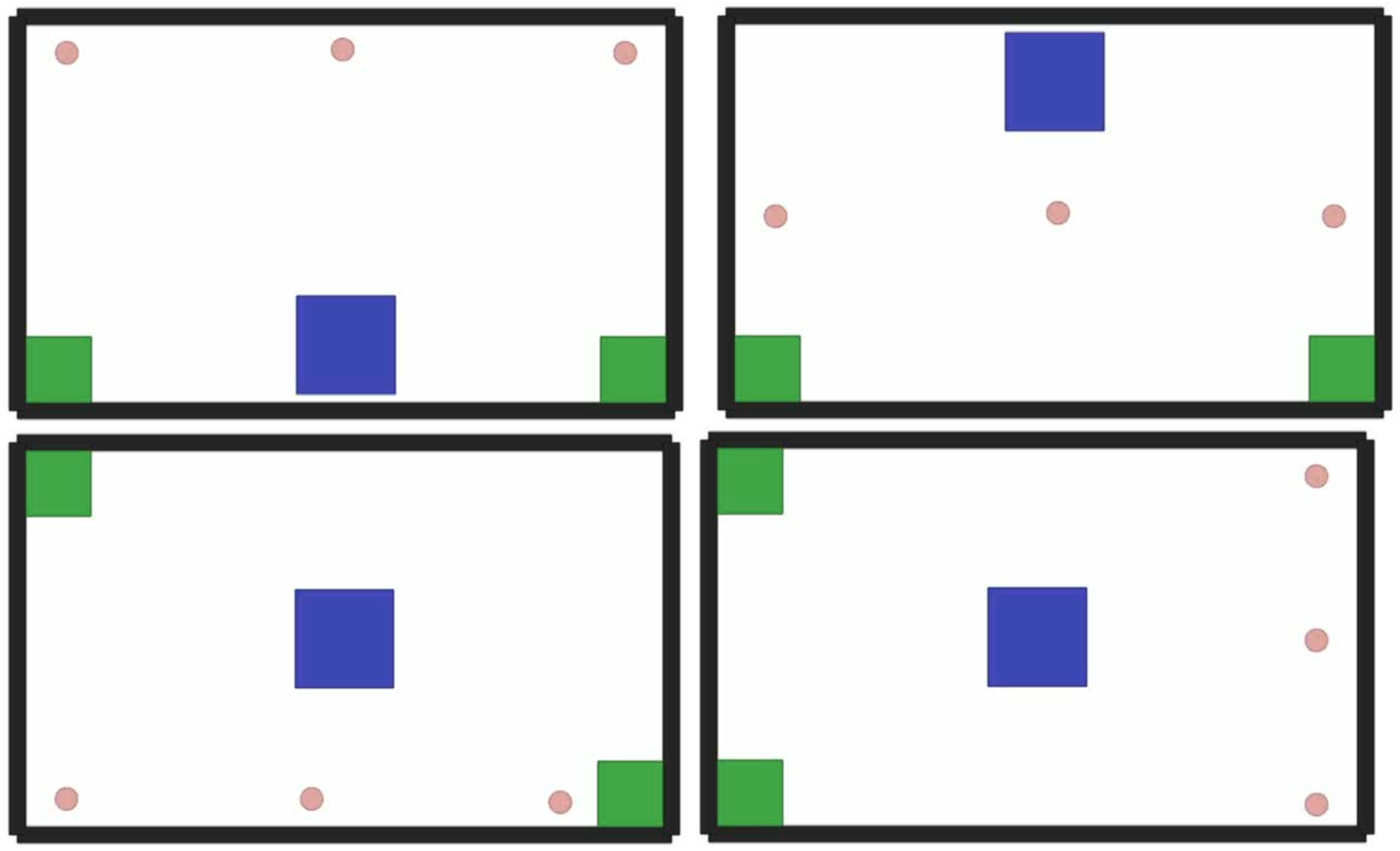

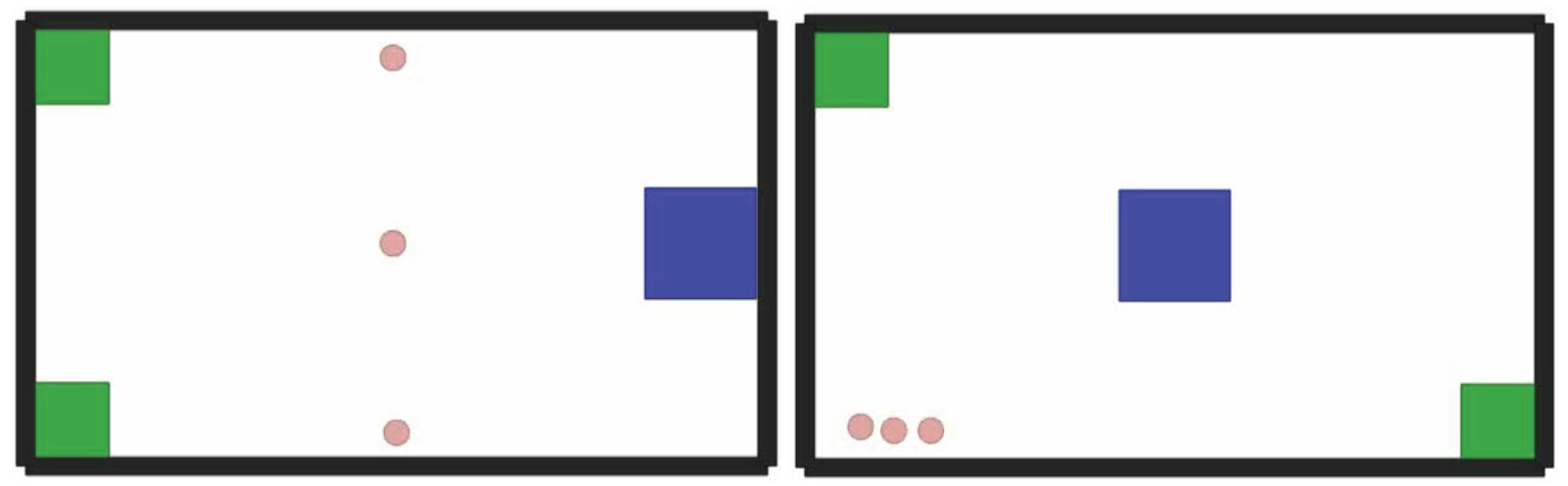

5.1. Simulation Setup

5.2. Evaluation Procedure

- Total Number of Collected Parts: Measures the efficiency of part collection by agents.

- Total Number of Delivered Parts: Assesses the effectiveness of the delivery process.

- Total Number of Collisions: Calculated as the cumulative collisions across all agents, indicating the safety of navigation and interaction.

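- Machine Utilization (MU): $MU_i = P_i^{\mathrm{col}} / P_i^{\max}$ and $\mathrm{Avr}(MU) = \frac{1}{M}\sum_{i=1}^{M} MU_i$, where $MU_i$ is the utilization rate of machine i, calculated by dividing the number of parts collected from machine i ($P_i^{\mathrm{col}}$) by the maximum number of parts it could have produced ($P_i^{\max}$). The average utilization across all machines, $\mathrm{Avr}(MU)$, is the mean of the $MU_i$ values over all M machines.

- Agent Utilization (AU): $AU_i = C_i / \bar{C}$ and $\mathrm{Avr}(AU) = \frac{1}{N}\sum_{i=1}^{N} AU_i$, where $AU_i$ is the utilization of agent i, based on the number of parts it collected ($C_i$) relative to the average expected number of parts per agent ($\bar{C}$). The average utilization for all agents, $\mathrm{Avr}(AU)$, is the mean of the $AU_i$ values over all N agents.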
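The two utilization metrics reduce to per-entity ratios followed by a mean. The sketch below shows that arithmetic; the function names are hypothetical, and the way the expected parts per agent is obtained in the usage note (total machine capacity divided by the number of agents) is an assumption, not something the text states.

```python
from typing import List, Sequence, Tuple


def machine_utilization(
    collected: Sequence[int], max_parts: Sequence[int]
) -> Tuple[List[float], float]:
    """Per-machine utilization and its average over all machines."""
    if not collected or len(collected) != len(max_parts):
        raise ValueError("need one collected count and one maximum per machine")
    rates = [c / m for c, m in zip(collected, max_parts)]
    return rates, sum(rates) / len(rates)


def agent_utilization(
    collected_by_agent: Sequence[int], expected_per_agent: float
) -> Tuple[List[float], float]:
    """Per-agent utilization and its average over all agents."""
    if not collected_by_agent or expected_per_agent <= 0:
        raise ValueError("need at least one agent and a positive expectation")
    rates = [c / expected_per_agent for c in collected_by_agent]
    return rates, sum(rates) / len(rates)
```

For example, with two machines that could each have produced 10 parts and from which 5 and 3 parts were collected, `machine_utilization([5, 3], [10, 10])` gives per-machine rates of 0.5 and 0.3. If the expected parts per agent is taken as 20 / 2 = 10 for two agents that collected 4 parts each, `agent_utilization([4, 4], 10.0)` gives 0.4 for both agents.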

5.3. Results and Adaptability

6. Ablation Study

6.1. Experiments on Observation Design

6.2. Experiments on Reward Design

6.3. Experiments on Combinations of Options

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Model and Training Parameters

| Parameter | Value |
|---|---|
| Reward Sharing | False |
| Pick Reward | 15 |
| Place Reward | 30 |
| Collision Penalty | −10 |
| Distance Rewards Scale | 10 |
| Fixed Uncollected Parts Penalty | True |
| Uncollected Penalty | −1 |
| Time Penalty | −0.01 |
| Encoding Vector Size | 18 |
| Object Embedding Size | 18 |
| Number of Attention Heads | 3 |
| Attention Embedding Size | 18 |
| Learning Rate | 7 × |
| Optimizer | Adam |
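The attention sizes in the table above imply that each of the 3 heads works on a slice of 18 / 3 = 6 dimensions. The sketch below illustrates that split with plain scaled dot-product self-attention over a set of entity embeddings. It is only an illustration of the sizes, not the AB-MAPPO encoder: it omits the learned query, key, value, and output projections that a real attention layer would have, and the function names are hypothetical.

```python
import math
from typing import List

EMBED_SIZE = 18  # "Attention Embedding Size" from the table
NUM_HEADS = 3    # "Number of Attention Heads" from the table
HEAD_DIM = EMBED_SIZE // NUM_HEADS  # 6 dimensions per head


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def head_attention(
    q: List[float], keys: List[List[float]], values: List[List[float]]
) -> List[float]:
    """Scaled dot-product attention of one query over all keys and values."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    weights = softmax(scores)
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(q))]


def multi_head_self_attention(entities: List[List[float]]) -> List[List[float]]:
    """Each entity attends to all entities; heads use disjoint 6-dim slices.

    Assumption: identity projections, so queries, keys, and values are the
    raw embedding slices. A trained model would use learned projections.
    """
    out = []
    for e in entities:
        row: List[float] = []
        for h in range(NUM_HEADS):
            lo, hi = h * HEAD_DIM, (h + 1) * HEAD_DIM
            slices = [x[lo:hi] for x in entities]
            row += head_attention(e[lo:hi], slices, slices)
        out.append(row)  # concatenated heads, back to EMBED_SIZE entries
    return out
```

The output keeps one 18-dimensional vector per entity, so the number of entities (machines, agents, storage) can vary without changing the per-entity size.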

Appendix B. Simulation Setup Details

| Parameter | Value |
|---|---|
| Machine mass | 1 kg (the default value in VMAS) |
| Machine length | 20 cm |
| Machine width | 20 cm |
| Agent mass | 1 kg |
| Agent radius | 3.5 cm |
| Storage mass | 1 kg |
| Storage length | 0.3 |
| Storage width | 0.3 |
| Collidables | Agents, walls, machine blockers, machines, storage |
| Position noise | Zero |
| Velocity damping | Zero |

References

- Kugler, L. Addressing labor shortages with automation. Commun. ACM 2022, 65, 21–23.

- Heimann, O.; Niemann, S.; Vick, A.; Schorn, C.; Krüger, J. Mobile Machine Tending with ROS2: Evaluation of system capabilities. In Proceedings of the 2023 IEEE 28th International Conference on Emerging Technologies and Factory Automation (ETFA), Sinaia, Romania, 12–15 September 2023; IEEE: New York, NY, USA, 2023; pp. 1–4.

- Fan, T.; Long, P.; Liu, W.; Pan, J. Fully distributed multi-robot collision avoidance via deep reinforcement learning for safe and efficient navigation in complex scenarios. arXiv 2018, arXiv:1808.03841.

- Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep reinforcement learning for multiagent systems: A review of challenges, solutions, and applications. IEEE Trans. Cybern. 2020, 50, 3826–3839.

- Singh, B.; Kumar, R.; Singh, V.P. Reinforcement learning in robotic applications: A comprehensive survey. Artif. Intell. Rev. 2022, 55, 945–990.

- Guo, X.; Shi, D.; Yu, J.; Fan, W. Heterogeneous Multi-Agent Reinforcement Learning for Zero-Shot Scalable Collaboration. arXiv 2024, arXiv:2404.03869.

- Zhou, T.; Zhang, F.; Shao, K.; Li, K.; Huang, W.; Luo, J.; Wang, W.; Yang, Y.; Mao, H.; Wang, B.; et al. Cooperative multi-agent transfer learning with level-adaptive credit assignment. arXiv 2021, arXiv:2106.00517.

- Lobos-Tsunekawa, K.; Srinivasan, A.; Spranger, M. MA-Dreamer: Coordination and communication through shared imagination. arXiv 2022, arXiv:2204.04687.

- Agarwal, A.; Kumar, S.; Sycara, K. Learning transferable cooperative behavior in multi-agent teams. arXiv 2019, arXiv:1906.01202.

- Long, Q.; Zhou, Z.; Gupta, A.; Fang, F.; Wu, Y.; Wang, X. Evolutionary population curriculum for scaling multi-agent reinforcement learning. arXiv 2020, arXiv:2003.10423.

- Chen, G.; Yao, S.; Ma, J.; Pan, L.; Chen, Y.; Xu, P.; Ji, J.; Chen, X. Distributed non-communicating multi-robot collision avoidance via map-based deep reinforcement learning. Sensors 2020, 20, 4836.

- Li, M.; Belzile, B.; Imran, A.; Birglen, L.; Beltrame, G.; St-Onge, D. From assistive devices to manufacturing cobot swarms. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; IEEE: New York, NY, USA, 2023; pp. 234–240.

- Jia, F.; Jebelli, A.; Ma, Y.; Ahmad, R. An Intelligent Manufacturing Approach Based on a Novel Deep Learning Method for Automatic Machine and Working Status Recognition. Appl. Sci. 2022, 12, 5697.

- Al-Hussaini, S.; Thakar, S.; Kim, H.; Rajendran, P.; Shah, B.C.; Marvel, J.A.; Gupta, S.K. Human-supervised semi-autonomous mobile manipulators for safely and efficiently executing machine tending tasks. arXiv 2020, arXiv:2010.04899.

- Chen, Z.; Alonso-Mora, J.; Bai, X.; Harabor, D.D.; Stuckey, P.J. Integrated task assignment and path planning for capacitated multi-agent pickup and delivery. IEEE Robot. Autom. Lett. 2021, 6, 5816–5823.

- Behbahani, S.; Chhatpar, S.; Zahrai, S.; Duggal, V.; Sukhwani, M. Episodic Memory Model for Learning Robotic Manipulation Tasks. arXiv 2021, arXiv:2104.10218.

- Burgess-Limerick, B.; Haviland, J.; Lehnert, C.; Corke, P. Reactive Base Control for On-The-Move Mobile Manipulation in Dynamic Environments. IEEE Robot. Autom. Lett. 2024, 9, 2048–2055.

- Zhu, K.; Zhang, T. Deep reinforcement learning based mobile robot navigation: A review. Tsinghua Sci. Technol. 2021, 26, 674–691.

- Wu, P.; Escontrela, A.; Hafner, D.; Abbeel, P.; Goldberg, K. Daydreamer: World models for physical robot learning. In Proceedings of the Conference on Robot Learning. PMLR, Atlanta, GA, USA, 6–9 November 2023; pp. 2226–2240.

- Iriondo, A.; Lazkano, E.; Susperregi, L.; Urain, J.; Fernandez, A.; Molina, J. Pick and place operations in logistics using a mobile manipulator controlled with deep reinforcement learning. Appl. Sci. 2019, 9, 348.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Yu, C.; Velu, A.; Vinitsky, E.; Gao, J.; Wang, Y.; Bayen, A.M.; Wu, Y. The surprising effectiveness of PPO in cooperative multi-agent games. Adv. Neural Inf. Process. Syst. 2022, 35, 24611–24624.

- Lowe, R.; Wu, Y.I.; Tamar, A.; Harb, J.; Pieter Abbeel, O.; Mordatch, I. Multi-agent actor-critic for mixed cooperative-competitive environments. Adv. Neural Inf. Process. Syst. 2017, 30, 6380–6392.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Huang, H.; Chen, T.; Wang, H.; Hu, R.; Luo, W.; Yao, Z. Mappo method based on attention behavior network. In Proceedings of the 2022 10th International Conference on Information Systems and Computing Technology (ISCTech), Guilin, China, 28–30 December 2022; IEEE: New York, NY, USA, 2022; pp. 301–308.

- Phan, T.; Ritz, F.; Altmann, P.; Zorn, M.; Nüßlein, J.; Kölle, M.; Gabor, T.; Linnhoff-Popien, C. Attention-based recurrence for multi-agent reinforcement learning under stochastic partial observability. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 27840–27853.

- Agrawal, A.; Won, S.J.; Sharma, T.; Deshpande, M.; McComb, C. A multi-agent reinforcement learning framework for intelligent manufacturing with autonomous mobile robots. Proc. Des. Soc. 2021, 1, 161–170.

- Abouelazm, A.; Michel, J.; Zöllner, J.M. A review of reward functions for reinforcement learning in the context of autonomous driving. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju, Republic of Korea, 2–5 June 2024; IEEE: New York, NY, USA; pp. 156–163.

- Bettini, M.; Kortvelesy, R.; Blumenkamp, J.; Prorok, A. VMAS: A Vectorized Multi-Agent Simulator for Collective Robot Learning. In Proceedings of the 16th International Symposium on Distributed Autonomous Robotic Systems, Montbéliard, France, 28–30 November 2022.

- Samvelyan, M.; Rashid, T.; De Witt, C.S.; Farquhar, G.; Nardelli, N.; Rudner, T.G.; Hung, C.M.; Torr, P.H.; Foerster, J.; Whiteson, S. The starcraft multi-agent challenge. arXiv 2019, arXiv:1902.04043.

- Kurach, K.; Raichuk, A.; Stańczyk, P.; Zając, M.; Bachem, O.; Espeholt, L.; Riquelme, C.; Vincent, D.; Michalski, M.; Bousquet, O.; et al. Google research football: A novel reinforcement learning environment. In Proceedings of the AAAI conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 4501–4510.

- Papoudakis, G.; Christianos, F.; Schäfer, L.; Albrecht, S.V. Benchmarking Multi-Agent Deep Reinforcement Learning Algorithms in Cooperative Tasks. In Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks (NeurIPS), Online, 6–14 December 2021.

| Metric | MAPPO | AB-MAPPO |
|---|---|---|
| Collected * | 10.73(1.7) | 12.63(1.39) |
| Delivered * | 9.79(1.69) | 10.96(1.13) |
| Collisions | 2.16(0.6) | 1.92(0.84) |
| Avr(MU) * | 0.54(0.02) | 0.63(0.01) |
| Avr(AU) * | 0.54(0.03) | 0.63(0.06) |

| Exp | Collected | Delivered | Collisions | Avr(MU) | Avr(AU) |
|---|---|---|---|---|---|
| Baseline(B) | 7.66(0.7) | 6.29(0.7) | 13.47(4.2) | 0.38(0.2) | 0.38(0.0) |
| B + Ready steps (PRS) | 1.26(0.1) | 0.01(0.0) | 10.25(3.3) | 0.06(0.0) | 0.06(0.0) |
| B − Velocity (RV) | 9.25(1.1) | 7.8(1.1) | 13.96(1.8) | 0.46(0.1) | 0.46(0.0) |
| B − Normalization (RN) | 7.78(1.2) | 6.24(1.3) | 8.67(2.5) | 0.39(0.2) | 0.39(0.1) |
| B + Corner rep. (PCR) | 9.18(0.5) | 7.74(0.5) | 7.72(1.3) | 0.46(0.2) | 0.46(0.0) |
| B + Blockers info. (PBI) | 8.15(1.0) | 6.64(1.0) | 9.22(1.9) | 0.41(0.3) | 0.41(0.0) |
| B − Walls info. (RWI) | 7.49(0.1) | 5.78(0.2) | 9.78(2.0) | 0.38(0.3) | 0.37(0.1) |

| Exp | Collected | Delivered | Collisions | Avr(MU) | Avr(AU) |
|---|---|---|---|---|---|
| Baseline(B) | 7.66(0.7) | 6.29(0.7) | 13.47(4.2) | 0.38(0.2) | 0.38(0.0) |
| B − Time Penalty (RT) | 6.72(1.8) | 5.4(1.9) | 18.33(9.0) | 0.34(0.0) | 0.34(0.0) |
| B − Reward Sharing (RRS) | 8.44(0.7) | 7.39(0.5) | 27.25(9.8) | 0.42(0.2) | 0.42(0.1) |
| B − Utilization Penalty (RUP) | 4.79(0.6) | 3.46(0.2) | 6.58(2.1) | 0.24(0.1) | 0.24(0.0) |
| B + Increasing Util. Pen. (IUP) | 1.55(0.1) | 0.28(0.2) | 167.48(53.7) | 0.08(0.0) | 0.08(0.0) |
| B − Distance Rewards (RDR) | 6.57(2.6) | 4.99(3.4) | 7.57(4.6) | 0.33(0.2) | 0.33(0.1) |

| Exp | Collected | Delivered | Collisions | Avr(MU) | Avr(AU) |
|---|---|---|---|---|---|
| RV + PBI | 9.03(1.4) | 7.56(1.5) | 11.59(1.1) | 0.45(0.2) | 0.45(0.1) |
| RV + RRS | 10.2(1.0) | 8.74(0.8) | 15.02(1.0) | 0.51(0.1) | 0.51(0.1) |
| RV + PCR | 9.8(0.7) | 8.16(1.0) | 7.64(2.0) | 0.49(0.2) | 0.49(0.1) |
| RV + PCR + RSS + RRS | 8.51(0.6) | 7.43(0.5) | 21.26(1.6) | 0.43(0.4) | 0.43(0.1) |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abdalwhab, A.B.M.; Beltrame, G.; Kahou, S.E.; St-Onge, D. Attention-Based Multi-Agent RL for Multi-Machine Tending Using Mobile Robots. AI 2025, 6, 252. https://doi.org/10.3390/ai6100252
