1. Introduction

In the era of globalization and high mobility, cross-border activities such as tourism, student exchange programs, and business travel have become an integral part of modern life. As the number of people traveling to various countries increases, the ability to understand and use foreign currencies has become an increasingly important necessity. According to reports from the International Monetary Fund (IMF) and the Central Intelligence Agency (CIA), more than 180 official currencies circulate globally, excluding digital or crypto currencies, most of which have not yet obtained legal status in many countries [1]. These currencies are used in approximately 195 countries and territories, and each has unique physical characteristics, such as its shape, size, material, and design.

Although this diversity reflects the richness of global culture, it also poses challenges for travelers, especially older adults and individuals with visual impairments, in recognizing and handling local currencies. Even frequent travelers often struggle to distinguish denominations and adapt to exchange rate fluctuations [2]. Similarities in color, size, or design among currencies can cause confusion, particularly when denominations are printed in foreign scripts. Additional factors, such as damaged banknotes, poor lighting, or time pressure during transactions, further hinder accurate identification. These issues can lead to discomfort or financial losses, underscoring the need for practical and accessible currency identification systems [3,4,5].

Various approaches have been developed to recognize coins and banknotes. Conventional methods, such as mechanical and electromagnetic techniques, rely on measuring physical properties and magnetic responses; however, these are generally used in large machines in shopping centers or banks and are not suitable for portable use [6]. For banknote recognition, technologies such as magnetic, UV, and infrared sensors are commonly applied in ATMs and vending machines, but these methods require specialized hardware and are difficult to adapt to new or updated currency designs [7]. As a modern alternative, image-based methods supported by Artificial Intelligence (AI) are increasingly used. By using cameras to detect coins and banknotes, these approaches offer greater flexibility and adaptability than traditional methods [8,9].

A wide range of deep learning frameworks and models has been applied to image analysis in both research and practice. Well-known libraries such as TensorFlow [10], Keras [11], and PyTorch [12] are frequently employed in prior studies; they provide flexible infrastructure for developing and deploying convolutional and transformer-based models and enable scalable training across diverse hardware platforms. Landmark advances in image classification, such as AlexNet [13], demonstrated the power of deep convolutional neural networks on large-scale datasets like ImageNet, while region-based approaches such as R-CNN [14] extended these methods to object detection by combining region proposals with CNN features. Collectively, these developments illustrate the breadth of deep learning methods for image analysis and highlight the trade-offs among accuracy, computational cost, and deployment feasibility.

Prior work has explored banknote recognition under challenging conditions, including perspective changes, clutter, partial occlusion, and even overlapping notes. For example, ref. [15] proposed a three-stage Faster R-CNN pipeline that includes coin detection, while ref. [16] applied robust feature matching with homography and shape analysis to overlapping banknotes. However, these systems typically target a single currency at a time (often banknotes only) and are not deployed as an on-device deep learning model with an optional server-assisted mode or integrated currency-conversion functionality.

In this study, an AI model based on YOLOv11 object detection technology was developed to recognize foreign coins and banknotes, even when they are partially occluded within a single image. The system also integrates a feature to convert detected values into the user’s preferred currency based on the latest exchange rates, supporting real-time, practical use in diverse environments. The primary contributions of this work can be summarized as follows:

Simultaneous recognition of both coins and banknotes from multiple currencies within a single image, including scenarios where items are overlapping or partly occluded.

Hybrid inference strategy that integrates an embedded TensorFlow Lite (TFLite) model for real-time on-device detection with an optional server-assisted mode for higher accuracy.

Integrated currency conversion module that provides real-time value translation based on current exchange rates.

The remainder of this paper is organized as follows. Section 2 reviews related works, including the development of YOLO and prior studies on currency recognition. Section 3 describes the proposed system design, covering the overall architecture, dataset construction, and model comparison. Section 4 details the system development, including the development environment, model training, server design, mobile model conversion, and application features. Section 5 evaluates system performance, reporting response time, real-world accuracy under occlusion, and a comparative analysis against existing research. Finally, Section 6 concludes the paper with a summary of findings, limitations, and deployment considerations.

2. Related Works

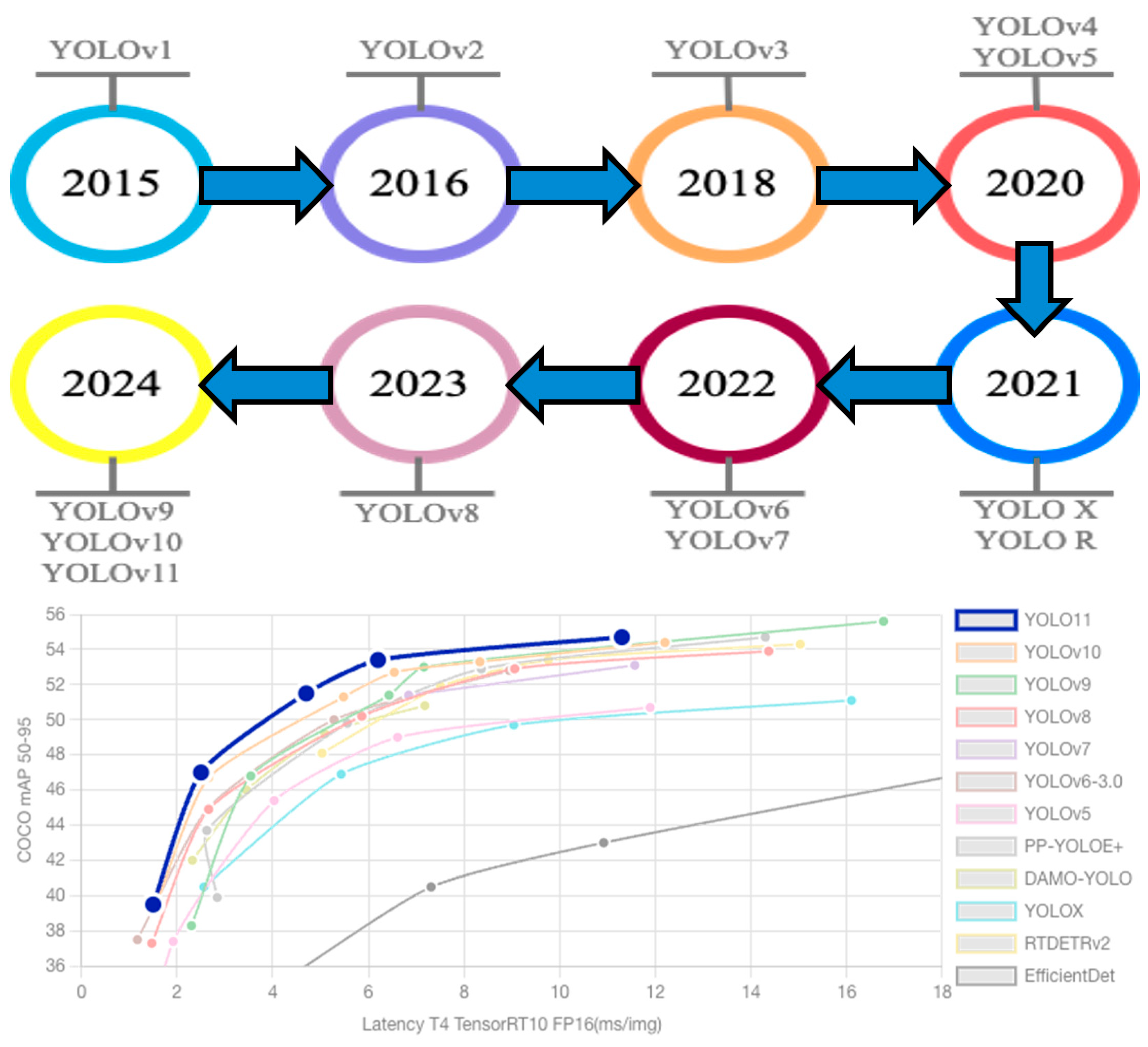

You Only Look Once (YOLO) is a state-of-the-art object detection framework that has advanced substantially since its introduction by Joseph Redmon in 2015. Utilizing a grid-based mechanism to predict bounding boxes and class probabilities simultaneously, YOLO enables high-speed and efficient detection, making it suitable for real-time applications [

17]. Over time, it has incorporated architectural improvements such as multi-scale anchor boxes, optimized backbone networks, and advanced loss functions like Complete Intersection over Union (CIoU), enhancing its accuracy and adaptability to diverse object scales. A summary of YOLO’s development from 2015 to 2024 is presented in

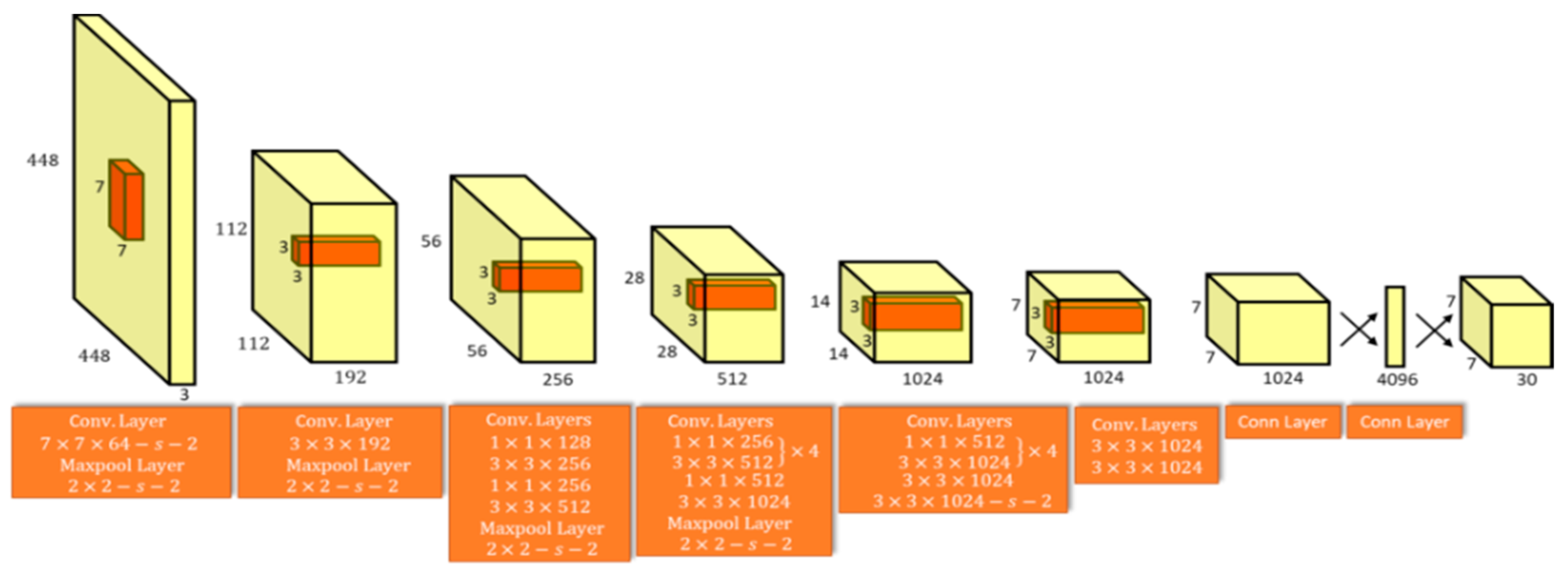

Figure 1, and the overall architecture is shown in

Figure 2.

Compared to two-stage detectors such as Faster R-CNN, YOLO achieves significantly lower latency by performing detection in a single stage, directly predicting class probabilities and bounding box coordinates from full images. This single-stage design eliminates the separate region-proposal stage, minimizes computational overhead, and allows YOLO to maintain high accuracy while running efficiently on consumer-grade hardware. This combination of speed and efficiency is YOLO’s central advantage and has established it as a benchmark among lightweight detection models [18,19]. Successive YOLO versions have introduced modules such as the Cross-Stage Partial with Spatial Attention (C2PSA) block, Spatial Pyramid Pooling Fast (SPPF), and enhanced backbone networks, which collectively improve feature extraction for small or densely packed objects. These characteristics make YOLO particularly advantageous for applications that demand a balance of speed, accuracy, and resource efficiency.

As shown in Figure 2, the overall YOLO architecture consists of a backbone network that extracts hierarchical features from input images, a neck module that aggregates multi-scale feature maps through mechanisms such as feature pyramid networks (FPN) or path aggregation networks (PAN), and the head, which performs dense prediction of bounding box coordinates, objectness scores, and class probabilities. This streamlined pipeline enables end-to-end training and inference while preserving real-time detection capability.

Previous research on currency identification, covering both coins and banknotes, has addressed several major challenges; these studies and the research gaps they leave are summarized in Table 1.

As shown in Table 1, several prior studies have addressed currency recognition under challenging conditions. As mentioned previously, refs. [15,16] proposed methods that detect currency under folding, partial occlusion, and overlapping scenarios, using a three-stage Faster R-CNN pipeline and a selective feature-matching approach combined with homography and shape analysis, respectively. While these studies advanced the field, both were limited to single-currency settings and desktop-based implementations, without simultaneous multi-currency recognition, mobile deployment, or currency conversion capabilities.

Other studies [20,21,22,23,24,25,26] primarily applied YOLO-based or handcrafted methods across various currencies and settings. These works achieved notable recognition accuracy but typically focused on either coins or banknotes in isolation and lacked full support for real-time mobile inference. In addition, most relied on web platforms, classical computer vision pipelines, or specialized hardware such as line-scan sensors, reducing portability and usability in everyday contexts.

Taken together, these prior works can be grouped into three categories: (i) detection or matching pipelines such as Faster R-CNN [15] or selective feature matching [16], which were robust in controlled tasks but remained limited to single-currency desktop applications; (ii) YOLO-based approaches [20,21,22], which reported strong performance but typically addressed coins or banknotes separately, with limited attention to overlapping or occluded cases; and (iii) alternative methods such as Teachable Machine [23,24], SIFT-based approaches [20], or line-scan hardware systems [25], which lacked robustness, required handcrafted features, or depended on specialized equipment. These recurring limitations indicate that none of the reviewed works simultaneously addresses mobile deployment, mixed-currency recognition, and robustness under occlusion in a unified system.

In summary, studies [15,16,18,19,20,21,22,23,24,25,26] show significant progress but do not offer fully integrated, real-time mobile solutions. They cannot handle coins and banknotes from multiple currencies simultaneously while also supporting mixed denominations, occlusion, and real-time conversion.

The novelty of this research lies in its holistic integration of key features from [20,21], combined with several enhancements specifically targeting the detection of mixed and partly covered currency. To achieve this, the proposed system uses a high-performance object detection model trained on a purpose-built dataset and embedded within a mobile application that employs a hybrid inference strategy, combining lightweight TensorFlow Lite on-device detection with optional server-side refinement.

3. System Design

3.1. System Architecture and Workflow

As illustrated in Figure 3, the system architecture consists of three core components: a mobile application, an object detection model (deployed in embedded and server-side forms), and an external API for currency exchange. The mobile application integrates a lightweight embedded model capable of performing real-time, offline detection of coins and banknotes directly from the camera, including cases where items are partly covered or overlapping.

For improved accuracy, users can capture an image and transmit it to the server, which hosts a full-scale object detection model that produces more precise identification results in structured JSON format. Additionally, the system supports real-time currency conversion via an open API, allowing users to view equivalent values in their preferred currency.

The workflow, shown in Figure 4, begins with collecting images of coins and banknotes under various conditions to ensure dataset diversity and robustness, particularly for recognizing mixed and partially occluded currency. The dataset is labeled in Roboflow with bounding boxes and currency classes. Preprocessing and augmentation steps, including rotation, flipping, and brightness adjustments, are applied to improve generalization.

Several object detection algorithms, including SSD, RetinaNet, YOLOv8, and YOLOv11, are compared to identify the most accurate and efficient model. The selected model is configured and trained using the prepared dataset, then converted into a mobile-friendly format. Finally, it is integrated into the application, which supports real-time inference both offline and online and provides visual detection and currency conversion features to enhance user convenience.

3.2. Dataset

The dataset used in this study consists of 46 denomination classes from four widely circulated currencies: the US Dollar (USD), Euro (EUR), Chinese Yuan (CNY), and Korean Won (KRW). Each class includes 200 images, for a total of 9200 class-balanced samples. Images were collected from open-access sources such as Kaggle, Google Images, Medium, and personal collections to capture diverse real-world conditions, including cases where coins and banknotes are mixed or partially covered. The personal collection samples were captured using various smartphone cameras (iOS and Android). Only currently circulating denominations were included to ensure practical relevance. All images were curated for consistency and quality by verifying class balance and removing anomalies.

Annotation was performed in Roboflow using rectangular bounding boxes to define currency objects. Each labeled object was tagged with its currency and denomination, serving as ground truth for training. The dataset was first partitioned into training, validation, and test sets using a 7:2:1 ratio. Augmentation was then applied only to the training set to improve model robustness. The augmentation operations included geometric transformations (rotation, zoom crop), color adjustments (grayscale, brightness, exposure), noise injection, and motion blur to simulate real-world imperfections. After augmentation, the dataset expanded to 22,198 images.
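The split-then-augment order described above can be sketched as follows. This is a minimal illustration, assuming (for stratification only) that each image is assigned one primary class, which simplifies the real dataset in which an image may contain several currencies; the function name `split_per_class` and the seed are hypothetical. Splitting before augmentation guarantees that augmented copies of a training image never leak into the validation or test sets.

```python
import random
from collections import defaultdict

def split_per_class(samples, ratios=(0.7, 0.2, 0.1), seed=42):
    """Split (image_path, class_name) pairs into train/val/test per class.

    Hypothetical helper: each class is shuffled and cut 7:2:1 so that every
    split keeps the class balance of the original dataset.
    """
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append(path)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    splits = {"train": [], "val": [], "test": []}
    for label, paths in sorted(by_class.items()):
        rng.shuffle(paths)
        n = len(paths)
        n_train = round(n * ratios[0])
        n_val = round(n * ratios[1])
        splits["train"] += [(p, label) for p in paths[:n_train]]
        splits["val"] += [(p, label) for p in paths[n_train:n_train + n_val]]
        # Whatever remains after train and val goes to the test split.
        splits["test"] += [(p, label) for p in paths[n_train + n_val:]]
    return splits
```

With 46 classes of 200 images each, this yields 140/40/20 images per class, i.e., 6440 training, 1840 validation, and 920 test images before any augmentation is applied to the training split.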

Preprocessing steps included resizing images to 320 × 320 pixels, contrast enhancement, and pixel normalization.
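The three preprocessing steps can be illustrated with a short NumPy sketch. The paper does not specify the resize interpolation or the contrast-enhancement method, so this example assumes nearest-neighbour resizing and a per-channel min-max contrast stretch; the function name `preprocess` is hypothetical, and Roboflow's built-in operations may differ.

```python
import numpy as np

def preprocess(image, size=320):
    """Resize an H x W x C uint8 image to size x size, stretch contrast, normalize to [0, 1]."""
    h, w = image.shape[:2]
    # Nearest-neighbour resize by sampling row and column indices (assumed interpolation).
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    resized = image[rows][:, cols].astype(np.float32)

    # Per-channel min-max contrast stretch to the full 0-255 range (assumed method).
    lo = resized.min(axis=(0, 1), keepdims=True)
    hi = resized.max(axis=(0, 1), keepdims=True)
    stretched = (resized - lo) / np.maximum(hi - lo, 1e-6) * 255.0

    # Pixel normalization: scale intensities to [0, 1].
    return stretched / 255.0
```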

Figure 5 illustrates images of the coins and banknotes used in the dataset, while Table 2 summarizes the classes and the distribution of images before and after augmentation.

3.3. Model Comparison

Four popular one-stage detectors, YOLOv8, YOLOv11, SSD, and RetinaNet, were evaluated to identify the most suitable model for recognizing mixed and partly covered foreign coins and banknotes. These models were selected because each has been considered state-of-the-art at different points in the development of object detection, with distinct trade-offs in speed, accuracy, and architectural design [27]. SSD introduced real-time one-stage detection with relatively lightweight computation, RetinaNet addressed the class imbalance problem using focal loss, and the YOLO family has continually advanced single-stage detection by improving speed and accuracy for real-time tasks.

Other detectors such as Faster R-CNN, EfficientDet, and DETR were not adopted in this study because of their comparatively higher computational demands, slower inference speeds, or lack of mobile-oriented implementations, which make them less suitable for deployment on smartphones. By focusing on SSD, RetinaNet, and the latest YOLO variants, this study balances representativeness of the major one-stage detector families against practical feasibility for real-time mobile applications. For the YOLO family, the medium-sized variants (YOLOv8-m and YOLOv11-m) were used.

All models were trained on the same dataset under identical conditions to ensure fair and reproducible results. Training parameters were standardized where possible, with 500 epochs, 320 × 320 image resolution, and a batch size of 32. Stochastic Gradient Descent (SGD) was used as the optimizer across all models, with a common weight decay of 0.0005 and momentum values close to 0.9.

Model performance was assessed using three key metrics: Average Precision (AP), Average Recall (AR), and Mean Average Precision (mAP). AP summarizes the precision-recall trade-off for a single class and therefore penalizes false positives, AR evaluates the ability to detect all relevant objects, and mAP provides an overall performance score across classes and Intersection over Union (IoU) thresholds. The results guided the selection of the most effective model, YOLOv11, for integration into the system.

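Let TP, FP, and FN denote True Positives, False Positives, and False Negatives, respectively. The equations for the performance metrics are:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$AP = \int_{0}^{1} p(r)\,dr \tag{3}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{4}$$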

In Equation (3), $p(r)$ denotes precision as a function of recall $r$ along the precision-recall curve; in Equation (4), $N$ is the number of classes and $AP_i$ is the average precision for class $i$. Furthermore, mAP@0.5 is the mean AP computed at an IoU threshold of 0.5, and mAP@0.5:0.95 is the mean AP averaged over IoU thresholds from 0.5 to 0.95 in increments of 0.05.
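Equations (1) to (4) can be computed directly, as in the minimal sketch below. It evaluates the integral in Equation (3) with all-point (VOC-style) interpolation, one common choice; other toolkits, such as the COCO evaluator, sample 101 recall points instead, so reported values can differ slightly. The function names are illustrative, not the evaluation code used in this study.

```python
def precision_recall(tp, fp, fn):
    """Equations (1) and (2) from raw detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(recalls, precisions):
    """Equation (3): area under the PR curve with all-point interpolation.

    `recalls` must be sorted ascending; `precisions[i]` is measured at `recalls[i]`.
    """
    # Pad so the curve starts at recall 0 and ends at recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """Equation (4): mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

# IoU thresholds averaged for mAP@0.5:0.95 (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]
```

For example, a class whose detections reach precision 1.0 at recall 0.5 and precision 0.5 at recall 1.0 gets AP = 0.5 × 1.0 + 0.5 × 0.5 = 0.75.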

4. System Development

4.1. Development Environment

The proposed system was implemented as an Android mobile application with a backend server supporting full-scale inference. Android was chosen for its global availability, affordability, and flexible development environment [28]. Development was carried out on a dual-boot PC (Windows 11 and Ubuntu 24.10) with an Intel Core i9-14900K processor (Intel Corporation, Santa Clara, CA, USA), 64 GB RAM, and an NVIDIA RTX 4090 GPU (NVIDIA Corporation, Santa Clara, CA, USA). Ubuntu was used for model training with CUDA acceleration, while Windows hosted app development and API testing.

The heavy neural computation for both training and full-scale inference was performed on this same PC, which was responsible for dataset preprocessing, model training, and server-assisted inference. This setup enabled high-throughput and low-latency detection when the mobile device was connected. In contrast, the Android application executed inference on-device using a TensorFlow Lite version of YOLOv11, optimized for reduced model size and computational load. This division ensures that the system can operate independently (offline) while also benefiting from enhanced performance when server connectivity is available.

The mobile application, built in Kotlin using Android Studio (v2024.1.1.12), was tested on a Google Pixel 4 XL (Android 14) equipped with a Qualcomm Snapdragon 855 processor (Qualcomm Incorporated, San Diego, CA, USA), which includes an octa-core CPU, an Adreno 640 GPU, and 6 GB of RAM. The backend API was developed with FastAPI (v0.116.1), and both the API and the model training workflows used Python (v3.12.2); FastAPI's asynchronous request handling supports efficient processing of images containing mixed and partly covered currency.

4.2. Model Training and Performance Comparison

The four object detection models were trained on the same dataset with settings kept as consistent as possible: 500 epochs, 320 × 320 image resolution, a batch size of 32, a weight decay of 0.0005, and the SGD optimizer.

The YOLOv8 and YOLOv11 models used an initial learning rate of 0.01, a momentum of 0.937, and a cosine annealing learning rate schedule with warm-up during the first three epochs. RetinaNet used an initial learning rate of 0.001 and a momentum of 0.9, together with a CosineAnnealingLR scheduler (T_max = 200). Lastly, SSD used an initial learning rate of 0.001, a momentum of 0.9, and a gradual cosine decay schedule.
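The learning-rate schedules listed above can be made concrete with a short sketch. The YOLO function assumes a linear warm-up over the first three epochs followed by cosine decay to `lr0 * lrf`, where `lrf = 0.01` is an assumed final-rate factor that the paper does not state; Ultralytics' own warm-up operates per iteration and differs in detail. The second function is the closed form that the PyTorch documentation gives for `CosineAnnealingLR`.

```python
import math

def yolo_lr(epoch, epochs=500, lr0=0.01, lrf=0.01, warmup_epochs=3):
    """Assumed schedule: linear warm-up, then cosine decay from lr0 to lr0 * lrf."""
    if epoch < warmup_epochs:
        # Ramp linearly towards lr0 during the warm-up epochs.
        return lr0 * (epoch + 1) / warmup_epochs
    # Fraction of the post-warm-up training completed, in [0, 1].
    t = (epoch - warmup_epochs) / max(epochs - warmup_epochs, 1)
    return lr0 * (lrf + (1 - lrf) * (1 + math.cos(math.pi * t)) / 2)

def cosine_annealing_lr(epoch, lr0=0.001, t_max=200, eta_min=0.0):
    """Closed form of PyTorch's CosineAnnealingLR (as used for RetinaNet above)."""
    return eta_min + (lr0 - eta_min) * (1 + math.cos(math.pi * epoch / t_max)) / 2
```

Evaluating `cosine_annealing_lr` at epochs 200 and 400 shows that, under this closed form, the rate reaches its minimum at T_max and returns to its initial value at 2 × T_max, which is relevant whenever T_max (200 here) is shorter than the training run (500 epochs).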

With these settings, the models were evaluated to identify the most suitable algorithm for detecting mixed and partly covered foreign currency. Performance was measured by precision, recall, mAP@0.5, and mAP@0.5:0.95.

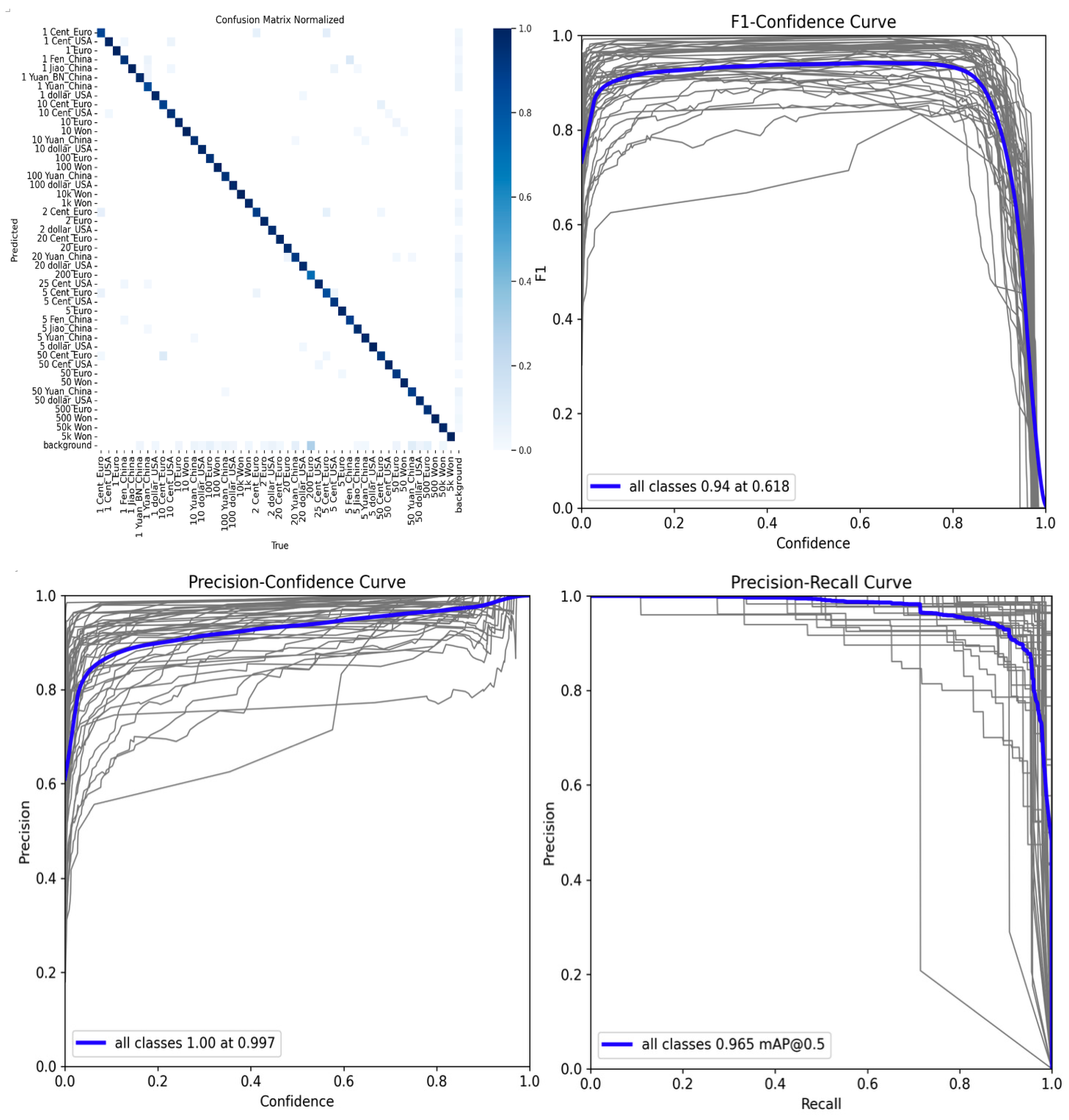

YOLOv11 achieved the highest scores, with a precision of 0.951, recall of 0.935, mAP@0.5 of 0.965, and mAP@0.5:0.95 of 0.906, demonstrating excellent robustness under diverse conditions, including overlapping and occluded currency. YOLOv8 also performed strongly but slightly below YOLOv11. SSD showed moderate accuracy and struggled with small or partly covered objects, while RetinaNet produced the lowest results, with frequent missed detections.

Based on these findings, YOLOv11 was selected as the most suitable model for deployment within the mobile application.

Table 3 summarizes the performance comparison of all four models. Supporting visualizations, including the confusion matrix, precision-recall curve, F1-confidence curve, and loss curves, are presented in Figure 6, further illustrating YOLOv11’s stable learning behavior.

For completeness, the mathematical formulation of YOLOv11 and its training process is summarized as follows. YOLOv11 formulates object detection as a direct regression and classification problem. For each grid cell, the network outputs a vector:

$$\mathbf{y} = \left(b_x,\, b_y,\, b_w,\, b_h,\, c,\, p_1,\, p_2,\, \ldots,\, p_K\right)$$

where $(b_x, b_y, b_w, b_h)$ are the bounding box center coordinates and dimensions, $c$ is the objectness score, and $(p_1, p_2, \ldots, p_K)$ are the class probabilities for $K$ currency denominations ($K = 46$ in this study).

The training objective minimizes a composite loss function:

$$\mathcal{L} = \mathcal{L}_{CIoU} + \mathcal{L}_{cls} + \mathcal{L}_{obj}$$

where $\mathcal{L}_{CIoU}$ penalizes bounding box regression errors via the Complete IoU loss, $\mathcal{L}_{cls}$ is the cross-entropy classification loss, and $\mathcal{L}_{obj}$ is the binary cross-entropy loss for objectness prediction.

Model parameters $\theta$ are updated iteratively using SGD:

$$\theta_{t+1} = \theta_t - \eta\, \nabla_{\theta} \mathcal{L}(\theta_t)$$

where $\eta$ denotes the learning rate. This formulation provides the mathematical foundation of the applied model and training procedure.
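The $\mathcal{L}_{CIoU}$ term can be illustrated for a single box pair with the framework-free sketch below, which follows the standard Complete-IoU definition: one minus IoU, plus the squared center distance normalized by the squared diagonal of the smallest enclosing box, plus a weighted aspect-ratio consistency term. Boxes use the $(b_x, b_y, b_w, b_h)$ parameterization above. This is an illustrative sketch, not the vectorized implementation inside Ultralytics.

```python
import math

def ciou_loss(pred, target, eps=1e-9):
    """Complete-IoU loss for two boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = target

    # Convert both boxes to corner coordinates.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    t_x1, t_y1, t_x2, t_y2 = tx - tw / 2, ty - th / 2, tx + tw / 2, ty + th / 2

    # Plain intersection over union.
    iw = max(0.0, min(p_x2, t_x2) - max(p_x1, t_x1))
    ih = max(0.0, min(p_y2, t_y2) - max(p_y1, t_y1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared center distance over squared diagonal of the enclosing box.
    cw = max(p_x2, t_x2) - min(p_x1, t_x1)
    ch = max(p_y2, t_y2) - min(p_y1, t_y1)
    rho2 = (px - tx) ** 2 + (py - ty) ** 2
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain 1 − IoU, the distance term keeps the loss informative when boxes do not overlap: two disjoint 2 × 2 boxes whose centers are 10 units apart give 1 + 100/148 ≈ 1.68 rather than a flat 1.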

In the precision-recall, F1-confidence, and precision-confidence plots in Figure 6, the thin gray curves correspond to individual currency classes, while the bold blue curve represents the average performance across all classes. In the loss and metric plots, the solid blue lines indicate the raw training and validation values, whereas the dotted orange lines show smoothed trends for readability. These results indicate that the model converged stably, with consistent precision and recall across classes, demonstrating robustness in the mixed-currency detection task.

4.3. Server Development

The server module was implemented using FastAPI, a lightweight, high-performance web framework for asynchronous RESTful API development in Python [

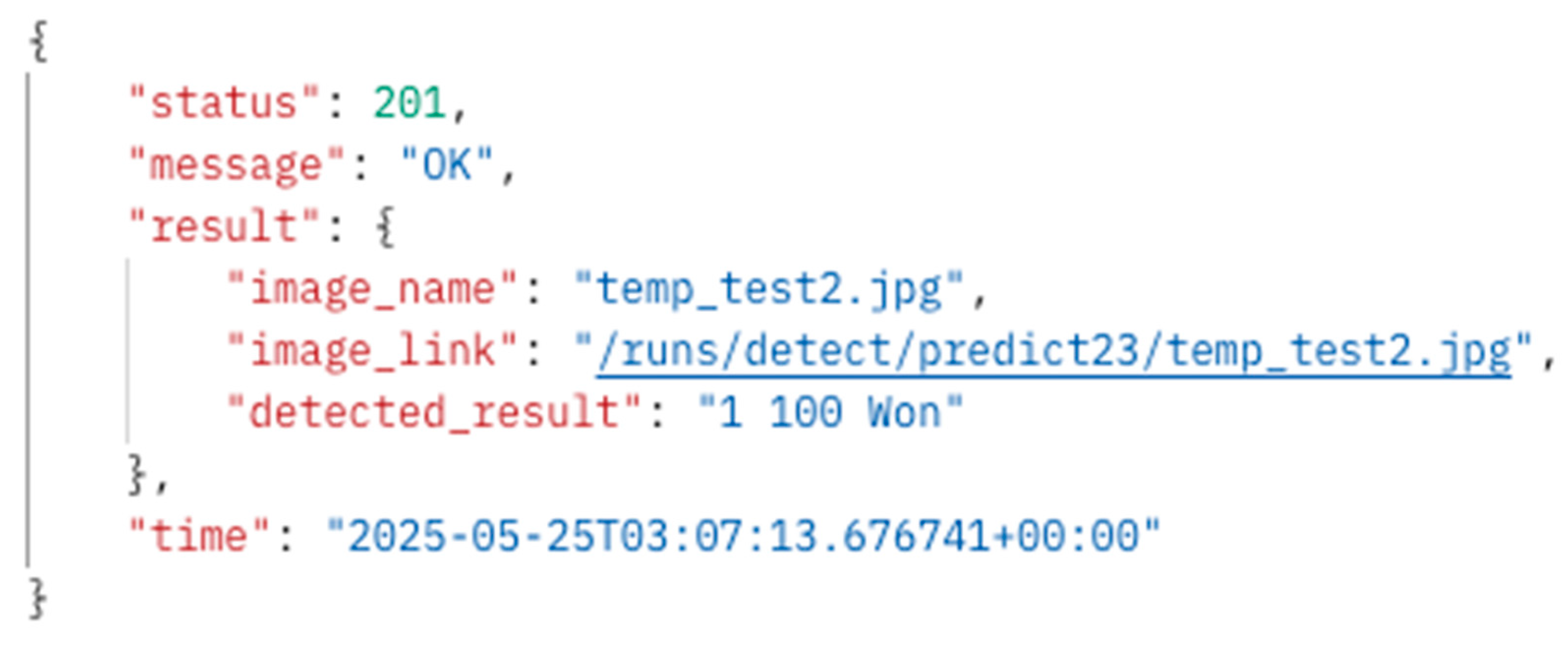

29]. This API serves as the backend endpoint for receiving images from the mobile application, running inference with the full-size trained YOLOv11 model via the Ultralytics library, and returning results in structured JSON format.

When an image is received, the server saves it to a temporary directory, loads the detection model, and processes the input to generate an annotated image with bounding boxes. It then counts each detected currency denomination and formats the results into human-readable descriptions in which the count precedes the denomination (e.g., “2 500 Won” for two 500 Won coins and “1 1 Dollar” for a single 1-dollar piece). The server also creates a static URL for retrieving the annotated image. Finally, the API returns a JSON response containing the detected denominations, filename, image URL, and a UTC timestamp.

Figure 7 shows an example response with the relevant metadata.
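The counting and formatting step can be sketched independently of the web framework. The example below assumes the detected class names have already been extracted (with Ultralytics, for instance, from `result.names` indexed by `result.boxes.cls`); the function `build_response`, the JSON field names, and the `/static/` URL path are hypothetical and need not match the actual response in Figure 7.

```python
from collections import Counter
from datetime import datetime, timezone

def build_response(labels, filename, base_url):
    """Build a JSON-serializable summary from detected class labels (hypothetical schema)."""
    counts = Counter(labels)
    # One human-readable line per denomination: "<count> <denomination>".
    detections = [f"{n} {label}" for label, n in sorted(counts.items())]
    return {
        "detections": detections,
        "filename": filename,
        # Assumed static route under which annotated images are served.
        "image_url": f"{base_url.rstrip('/')}/static/{filename}",
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
```

For example, labels `["500 Won", "500 Won", "1 Dollar"]` yield the descriptions `"1 1 Dollar"` and `"2 500 Won"`, matching the format described above. Keeping this logic in a plain function also makes it easy to unit-test without starting the server.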

4.4. Model Conversion for Mobile Application

The trained YOLOv11 model required conversion into a format suitable for mobile inference to enable real-time object detection directly on devices. It was exported from its native PyTorch format to TensorFlow Lite (TFLite), which is optimized for low memory usage, fast performance, and efficient deployment on Android.

The conversion process begins by loading the original PyTorch model (.pt) and using the Ultralytics export() utility to transform it sequentially through the ONNX and TensorFlow SavedModel formats before producing a TFLite (.tflite) file. The resulting model is lightweight and compatible with Android’s TensorFlow Lite Interpreter, enabling on-device inference with reduced latency and improved privacy, as images can be processed locally without server communication [30].

After export, the TFLite model is reloaded for test inference to validate consistency with the original PyTorch version. While TFLite conversion greatly reduces model size and computational demands, it can introduce trade-offs, such as slight decreases in detection accuracy or limited support for certain custom operations [31].
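The paper does not state how consistency between the PyTorch and TFLite models was measured. One simple option, sketched here under that assumption, is to run both models on the same image and compute the fraction of reference (PyTorch) detections that the TFLite model reproduces with the same label and an IoU of at least 0.5. The helper names, the greedy matching, and the threshold are illustrative choices; the Ultralytics `export(format="tflite")` call that produces the model is not shown.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def agreement_rate(ref_dets, tflite_dets, iou_thr=0.5):
    """Fraction of reference detections matched by a TFLite detection.

    Each detection is (label, (x1, y1, x2, y2)). Matching is greedy: a match
    needs the same label and IoU >= iou_thr, and each TFLite box is used once.
    """
    unused = list(tflite_dets)
    matched = 0
    for label, box in ref_dets:
        best, best_iou = None, iou_thr
        for j, (t_label, t_box) in enumerate(unused):
            iou = box_iou(box, t_box)
            if t_label == label and iou >= best_iou:
                best, best_iou = j, iou
        if best is not None:
            unused.pop(best)  # consume the matched TFLite detection
            matched += 1
    return matched / len(ref_dets) if ref_dets else 1.0
```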

4.5. Model Application Development

4.5.1. Real-Time Detection Using Embedded Model

This mobile application component performs real-time object detection using the embedded TensorFlow Lite version of the YOLOv11 model stored as a .tflite file. At startup, the app loads the model and metadata, including class labels and anchor boxes. The live camera feed is processed via Android’s CameraX and ImageAnalysis frameworks for asynchronous frame analysis.

Each frame is passed to the model, which outputs detected objects with class labels and confidence scores. Labels are mapped to currency denominations, converted into numeric values (e.g., “50 Cent_USA” to 0.5 USD), and aggregated by currency type. Detected amounts and bounding boxes are rendered dynamically on the interface, providing immediate visual feedback. This offline implementation is optimized for low-latency inference and supports real-time recognition of mixed and partly covered currency, even in limited-connectivity scenarios.
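The label-to-value mapping and per-currency aggregation can be sketched as follows. The app itself is written in Kotlin; this Python version only illustrates the logic. Apart from “50 Cent_USA”, which appears in the text, the label vocabulary in `UNITS` and the 0.5 confidence cut-off are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical label vocabulary: "<amount> <unit>_<region>" -> (ISO code, value of one unit).
UNITS = {
    "Cent_USA": ("USD", 0.01),
    "Dollar_USA": ("USD", 1.0),
    "Cent_EU": ("EUR", 0.01),
    "Euro_EU": ("EUR", 1.0),
    "Won_Korea": ("KRW", 1.0),
    "Jiao_China": ("CNY", 0.1),
    "Yuan_China": ("CNY", 1.0),
}

def label_to_value(label):
    """Map a class label such as '50 Cent_USA' to ('USD', 0.5)."""
    amount, unit = label.split(" ", 1)
    currency, scale = UNITS[unit]
    return currency, float(amount) * scale

def totals_by_currency(detections, min_conf=0.5):
    """Sum detected values per currency, skipping low-confidence detections."""
    totals = defaultdict(float)
    for label, conf in detections:
        if conf >= min_conf:
            currency, value = label_to_value(label)
            totals[currency] += value
    return dict(totals)
```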

4.5.2. Backend Inference for High-Accuracy Detection

This function provides a server-assisted mode to achieve higher detection accuracy. When the capture button is pressed, the current frame is encoded in JPEG format and sent to the server, which processes the image using the full-scale YOLOv11 model, whose computational demands exceed what mobile devices can handle efficiently. After inference, the backend returns an annotated image with bounding boxes and a summary of detected denominations. The application parses the JSON response and displays both the visual output and the numerical breakdown of recognized currency. This approach is particularly valuable in scenarios requiring higher precision, such as cluttered backgrounds or partially covered banknotes and coins.

4.5.3. Currency Conversion Feature

The final stage of the workflow allows users to convert detected currency totals into a target currency using up-to-date exchange rates. When the results page loads, the app queries a public currency API to retrieve the list of available currencies, which is displayed in a dropdown menu.

After the user selects a target currency and initiates conversion, the app fetches exchange rates for each detected base currency (USD, KRW, CNY, EUR) relative to the selected target. It then calculates and aggregates the converted amounts into a single summary. This feature provides a clear overview of total value in the preferred currency, supporting practical use cases for travelers handling mixed and partly covered currency.
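The aggregation step reduces to a weighted sum: each per-currency total is multiplied by its exchange rate to the target currency, and the results are added. The sketch below assumes the rates have already been fetched and stored in a dictionary keyed by `(base, target)`; the paper does not name the API, so the data layout, the function name, and the rate values in the example are illustrative only, not real market rates.

```python
def convert_totals(totals, target, rates):
    """Convert per-currency totals into a single amount in `target`.

    `rates[(base, target)]` is the assumed price of one unit of `base` in
    `target`, as returned by the exchange-rate API.
    """
    converted = 0.0
    for base, amount in totals.items():
        # Amounts already in the target currency need no conversion.
        rate = 1.0 if base == target else rates[(base, target)]
        converted += amount * rate
    return converted
```

With illustrative rates of 0.9 EUR per USD and 0.0007 EUR per KRW, totals of 1.5 USD and 500 KRW convert to 1.35 + 0.35 = 1.70 EUR.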

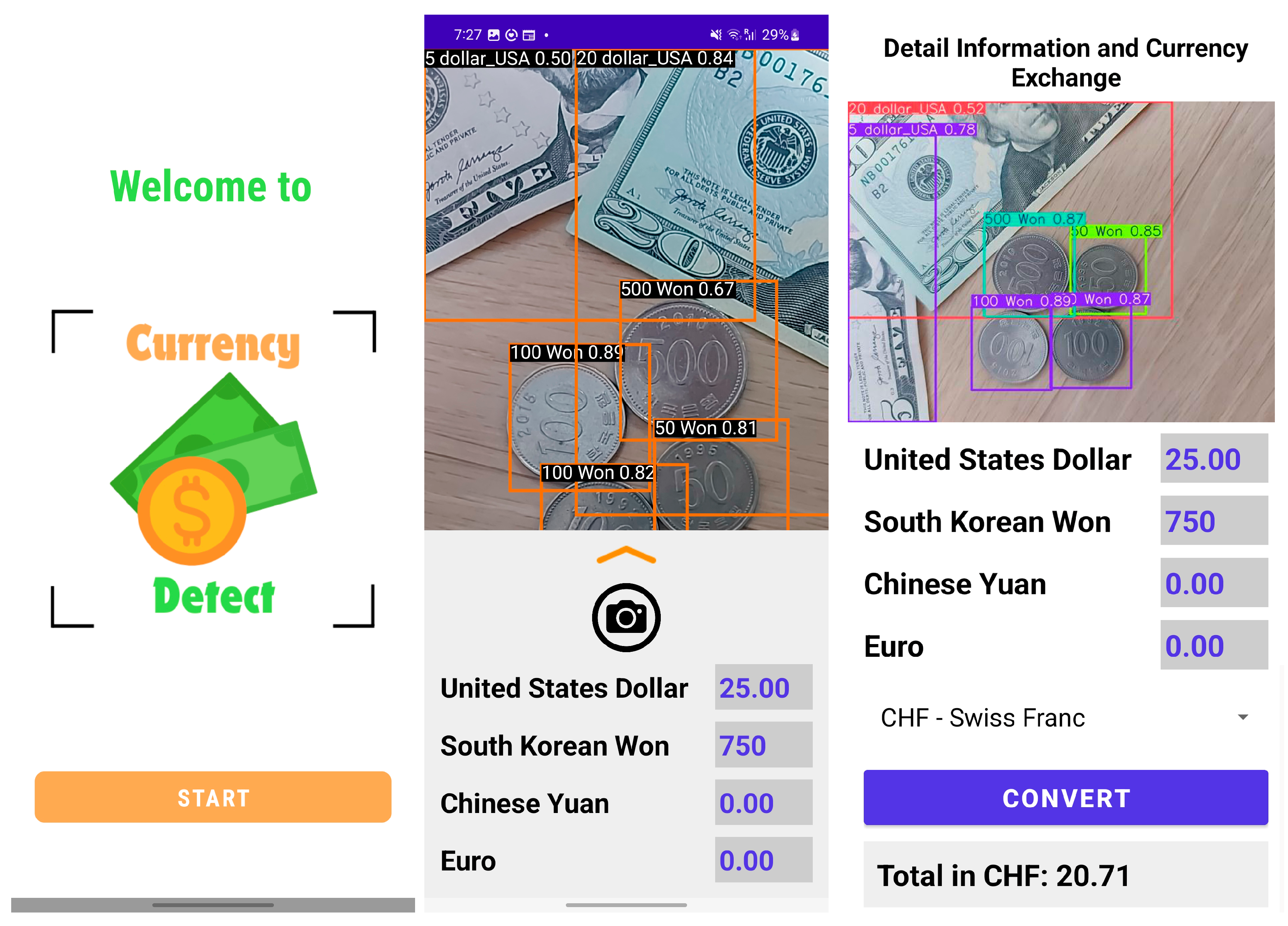

4.5.4. Mobile Application Screenshot

Figure 8 illustrates the mobile application interface. The left panel shows the welcome screen with a minimalist layout featuring the logo and a START button to begin detection. The central view depicts real-time recognition, where the embedded YOLOv11 model continuously processes camera input to localize and classify coins and banknotes, including mixed and partly covered currency. Detected objects are outlined with bounding boxes and labeled with class names and confidence scores, while currency totals are calculated dynamically for USD, KRW, CNY, and EUR. The right panel displays the results screen after server-side inference, showing the annotated image with improved accuracy, currency totals, a dropdown menu for selecting the target currency, and a CONVERT button to calculate converted amounts.

5. Performance Evaluation

This section evaluates the performance of the proposed system, focusing on response time and detection accuracy. For response time measurement, two experiments were conducted: onboard inference and server-side inference. Onboard processing time was recorded over 100 trials to quantify latency between pointing the camera at currency and receiving detection results. Similarly, server-based response time was measured across 100 trials, capturing the interval from image capture to retrieval of annotated results.

Accuracy assessment consisted of 400 test trials across various scenarios, involving random arrangements of mixed and partly covered currency in different positions and orientations. Detected outputs were compared against ground truth labels to compute the accuracy. This dual evaluation provides a comprehensive understanding of the system’s responsiveness, precision, and suitability for real-world use.

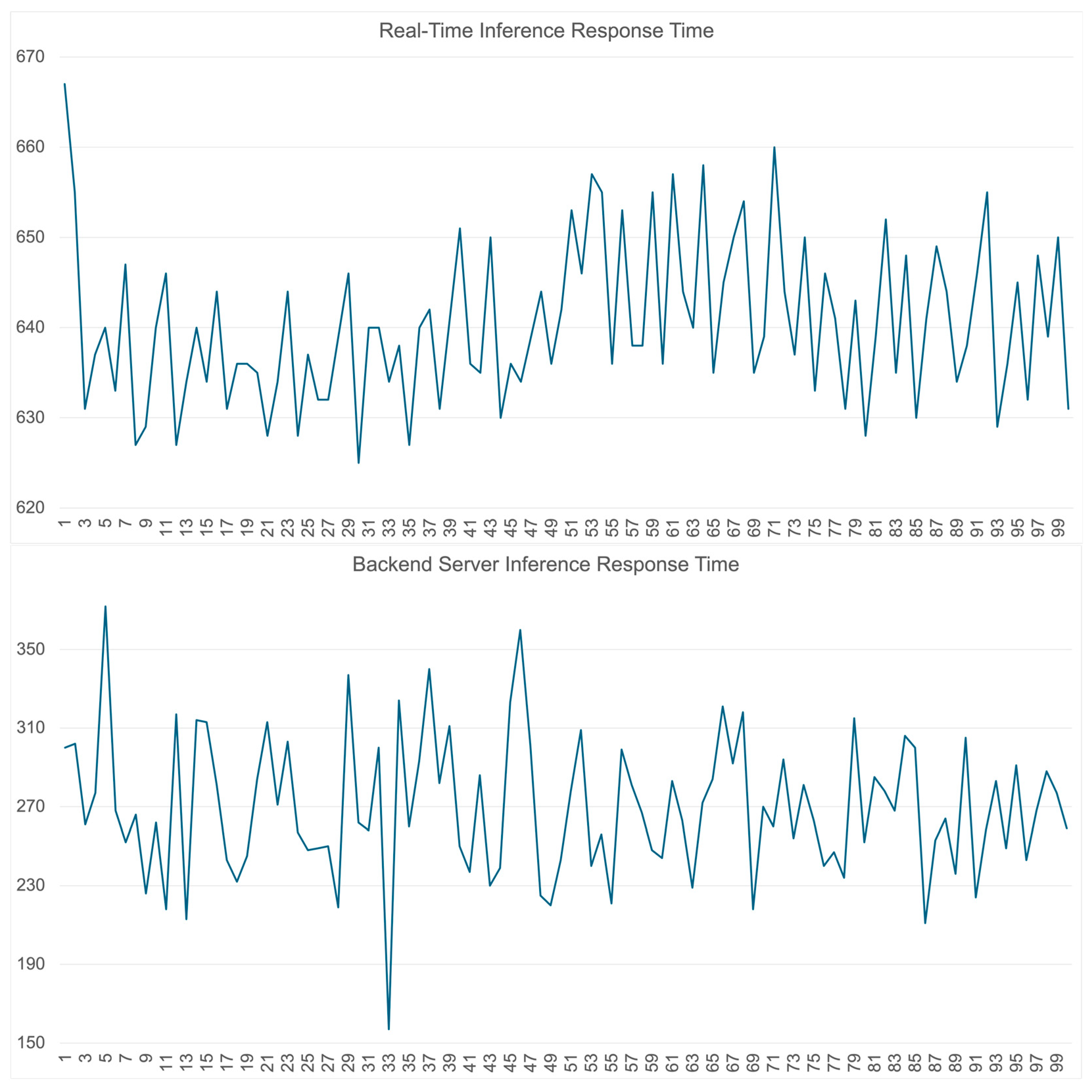

5.1. Response Time

For each inference mode, 100 trials were conducted to assess system responsiveness. Real-time onboard inference exhibited a minimum response time of 625 ms, a maximum of 667 ms, and an average of 640.38 ms (standard deviation: 8.73 ms), indicating stable performance. In contrast, server-based inference achieved a lower mean response time of 269.73 ms, ranging from 157 ms to 372 ms, but showed higher variability (standard deviation: 35.36 ms). This improvement is attributed to the high-performance server with GPU acceleration, which offloads computation from the device.
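The reported statistics, and the frame-rate equivalent used in the discussion of real-time performance below, can be reproduced from raw timings with the standard library. This sketch assumes the sample standard deviation (`statistics.stdev`); the paper does not state whether sample or population deviation was used, and the function name is illustrative.

```python
import statistics

def summarize_latency(samples_ms):
    """Summary statistics for a list of response times in milliseconds."""
    mean = statistics.mean(samples_ms)
    return {
        "min": min(samples_ms),
        "max": max(samples_ms),
        "mean": mean,
        "stdev": statistics.stdev(samples_ms),  # sample standard deviation (assumed)
        "fps": 1000.0 / mean,  # equivalent frame rate if inferences ran back to back
    }
```

Applied to the on-device mean of 640.38 ms, the last field gives 1000 / 640.38 ≈ 1.56 FPS.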

Figure 9 illustrates the comparative response time distributions.

While server-based inference demonstrated superior speed, it is more susceptible to variability due to factors such as network conditions and server workload. Notably, the server in this study was hosted locally with minimal network latency. In practical deployments, especially over cellular networks or with remote services, response times are likely to fluctuate more.

In contrast, on-device inference, though slower, is fully independent of network connectivity and provides consistent performance. Although the average latency of 640.38 ms (≈1.56 FPS) does not meet the strict computer vision definition of “real-time” (typically around 24–30 FPS), it constitutes sub-second responsiveness that is acceptable for interactive mobile applications. In this paper, the term “real-time” therefore refers to application-level responsiveness rather than video frame-rate benchmarks. The measured response time also compares favorably with existing benchmarks: as reported in [32], the standard YOLOv11-m model running on a CPU averages 889.04 ms per inference, considerably slower than the 640.38 ms achieved here. This difference is likely due mainly to the scaled-down TensorFlow Lite implementation optimized for mobile platforms.

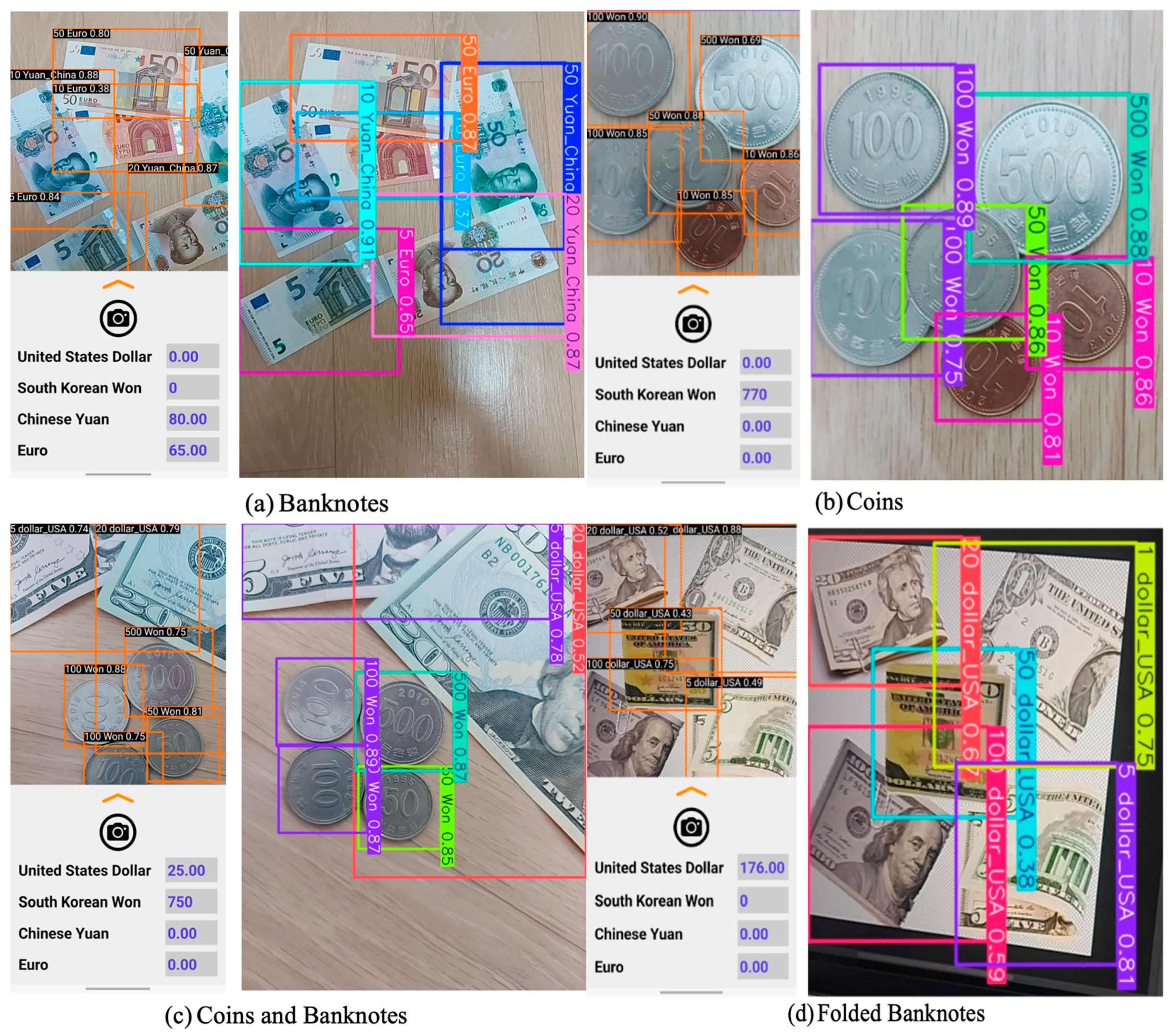

5.2. Real-World Accuracy

The evaluation tested accuracy on folded or partially covered coins and banknotes to assess performance under realistic conditions with occlusion and deformation. Each test included random arrangements of different denominations and currencies in varied positions and orientations to emulate diverse usage environments. The smartphone camera was pointed directly at the arranged objects, capturing images under natural usage conditions before running inference.

For this category, 400 inference trials were conducted. During each trial, the system performed both real-time onboard detection and server-side inference. Leveraging the hybrid architecture, which automatically selects the more accurate result, the evaluation recorded only the higher-performing output per trial.

Table 4 summarizes the detailed results.

The results showed that across 400 trials, the average accuracy for folded or partially covered currency was 93.7%. These findings indicate that although partial occlusion, shadowing, and deformed contours present challenges, the system maintains robust performance under complex conditions.
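The paper does not spell out how per-trial accuracy was computed. The sketch below shows one plausible definition under that assumption: the share of ground-truth items whose denomination label is correctly detected (multiset intersection, so a duplicated prediction cannot be credited twice), with the better of the on-device and server results kept for each trial, as described above, and the scores averaged over trials. Note that this definition does not penalize extra (false-positive) detections; the function names are hypothetical.

```python
from collections import Counter

def trial_accuracy(predicted, ground_truth):
    """Share of ground-truth labels matched by predicted labels in one trial."""
    # Multiset intersection keeps min(count_pred, count_truth) per label.
    correct = sum((Counter(predicted) & Counter(ground_truth)).values())
    return correct / len(ground_truth)

def hybrid_accuracy(trials):
    """Mean accuracy over (on_device, server, ground_truth) trials, keeping the better mode."""
    scores = [
        max(trial_accuracy(on_device, gt), trial_accuracy(server, gt))
        for on_device, server, gt in trials
    ]
    return sum(scores) / len(scores)
```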

Real-time inference on the device offers immediate feedback and works independently of network conditions. In contrast, server-based inference leverages greater computational resources to improve accuracy in visually challenging scenarios. This complementary design balances speed and precision, enabling the system to adapt to diverse operational needs.

Figure 10 shows example detection results from both modes.

Beyond the partially covered and folded scenarios, other environmental disturbances were considered, such as damaged banknotes and varying illumination. The system was not explicitly tested on destroyed or heavily damaged banknotes, because its primary purpose is to help travelers and other users recognize valid currency that can still be spent or kept for later use. Severely damaged or destroyed notes generally lack financial utility, so their recognition falls outside the intended scope of this work. However, the system was evaluated under varying illumination conditions, including brightly lit environments, dim indoor settings, and cases where the smartphone flash was activated.

Across these scenarios, the detector maintained stable accuracy, demonstrating resilience to moderate lighting variations. Therefore, although the system is not designed for destroyed currency, it is adaptable to real-world variability in lighting and environmental conditions.

5.3. Comparison with Existing Research

A comparative analysis was conducted to benchmark the proposed system against existing approaches in foreign currency recognition.

Table 5 summarizes the detection accuracy reported in related studies.

The proposed system achieved an accuracy of 93.67%, outperforming most prior methods, including YOLOv5-based [21], Teachable Machine-based [23,24], and SIFT-based [25] implementations. Only refs. [20,26] reported higher accuracies (97% and 100%, respectively).

The exceptionally high accuracy reported in [26] must be understood in the context of its acquisition setup. Their method employed a commercial line-scan sensor with LED illumination, producing standardized, noise-free images of single banknotes under controlled conditions. Our system, by contrast, processes natural smartphone photographs that include background clutter, shadows, glare, and partial occlusion, while supporting mixed scenes of coins and banknotes from multiple currencies within a single frame. Furthermore, ref. [26] focused on classification of isolated banknotes by denomination and orientation, whereas this paper’s task requires full object detection and localization.

Similarly, the strong results in [20] (97% accuracy) were achieved under simpler conditions. That study focused exclusively on banknotes, involved fewer classes, and did not account for complex real-world scenarios such as overlapping coins and banknotes. In addition, their implementation was web-based and server-side only, without the constraints of on-device mobile deployment. These factors contribute to higher accuracy but reduce applicability in mobile, real-time contexts.

Taken together, while refs. [20,26] report higher raw accuracies, their approaches rely on narrower tasks, controlled environments, or specialized hardware. By contrast, the system in this paper delivers competitive accuracy while also addressing the far more challenging scenario of real-time, on-device recognition of mixed, occluded, and multi-currency items, supported by an integrated currency conversion module.

Overall, this solution demonstrates a balanced trade-off among accuracy, practicality, and ease of use. By leveraging YOLOv11’s advanced object detection, it delivers competitive performance while supporting real-time recognition of mixed and partly covered currency, making it well suited for travelers, older adults, and visually impaired users.

6. Conclusions and Future Studies

This research presents a real-time mobile object detection system for recognizing mixed and partly covered foreign coins and banknotes using YOLOv11 deep learning techniques. Developed as an Android application, it combines embedded on-device inference with an optional backend server to improve accuracy and provide live currency conversion. Evaluations confirmed the system’s ability to detect multiple denominations in a single image, even when items are overlapping, folded, or partially occluded.

Among the four tested models—YOLOv8, YOLOv11, SSD, and RetinaNet—YOLOv11 demonstrated the highest performance, achieving a precision of 0.951, recall of 0.935, and mAP@0.5 of 0.965. Accordingly, the final system integrates a TensorFlow Lite version of YOLOv11-m for offline inference and a full-scale version deployed via FastAPI for higher-accuracy scenarios.
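
The evaluation reports precision and recall separately. For readers who want a single summary figure, the F1 score (their harmonic mean) can be derived from the reported YOLOv11 values; the snippet below does so. This is a derived quantity computed for illustration, not a metric reported by the evaluation itself.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for YOLOv11 in this paper.
print(f"{f1_score(0.951, 0.935):.3f}")  # 0.943
```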

Key features include a real-time camera interface, server-assisted analysis, dynamic results display, and integrated currency conversion. Performance testing showed robust results: real-time mobile inference achieved a response time of 640.38 ms, while backend inference reduced latency to 269.73 ms. In real-world accuracy tests, the system correctly detected and identified 93.67% of partly covered or deformed currency.

These findings confirm the value of both inference modes: on-device processing enables offline operation with predictable performance, while server-based inference improves precision in visually complex conditions. Overall, the system provides a practical and accessible solution to support travelers, older adults, and visually impaired users.

Nevertheless, the proposed system has several limitations. First, the dataset is currently restricted to four major currencies (USD, EUR, CNY, KRW) and does not yet cover the full diversity of global currencies. Second, although the system is robust to overlap and partial occlusion, extreme cases of clutter, folding, or strong glare may still reduce detection accuracy. Third, the present implementation has been developed and tested on Android devices only, limiting cross-platform accessibility. Finally, while the hybrid inference strategy balances accuracy and latency, the server-assisted mode relies on stable network connectivity, which may not always be available in practice. Addressing these limitations is part of our planned future work, including expansion to additional currencies, optimization for iOS devices, and the integration of self-retraining capabilities to improve long-term adaptability.

Future work will explore self-retraining capabilities through a community-driven contribution system. Users will be able to upload images of unsupported currencies and annotate them with bounding boxes and labels via the application interface. These user-contributed samples will be aggregated into a centralized repository, and once sufficient data are collected for a given denomination, the system will trigger a periodic server-managed retraining process to incorporate the new classes into the detection pipeline. Updated models will then be redistributed to client devices through automated updates, ensuring that the system remains current and extensible. This feature will move the system toward continual learning and semi-supervised object detection, in which models adapt dynamically to new data distributions.
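
The trigger condition for the planned retraining, that sufficient data have been collected for a given denomination, could be checked by a periodic server job along the lines of the sketch below. The `ContributionPool` class, the label strings, and the 200-sample default threshold are hypothetical choices for illustration; the paper does not fix these values.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ContributionPool:
    """Hypothetical server-side tally of user-annotated samples per denomination."""
    min_samples: int = 200  # assumed threshold, not taken from the paper
    counts: Counter = field(default_factory=Counter)
    known_classes: Set[str] = field(default_factory=set)  # classes the model already has

    def add_sample(self, label: str) -> None:
        # Called once per accepted user annotation (bounding box plus label).
        self.counts[label] += 1

    def classes_ready_for_training(self) -> List[str]:
        """New classes with enough annotated samples to join the next retraining run."""
        return sorted(label for label, n in self.counts.items()
                      if label not in self.known_classes and n >= self.min_samples)
```

A scheduled job would call `classes_ready_for_training()` on each run and start retraining only when the returned list is non-empty, which keeps retraining periodic rather than triggered by every upload.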

In parallel, broader user studies will be conducted to evaluate the system’s effectiveness in real-world use. These studies will measure indicators such as recognition accuracy, error rate, task completion time, and subjective user experience among both travelers and visually impaired participants.
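
As an illustration of how the planned indicators might be tabulated per participant group, the sketch below computes recognition accuracy, error rate, and median task completion time from per-task records. The `Session` record, the group names, and the definition of error rate as the share of incorrect identifications are assumptions; subjective user experience would come from questionnaires and is not modeled here.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, List


@dataclass
class Session:
    group: str           # hypothetical label, e.g. "traveler" or "visually_impaired"
    correct: bool        # did the participant identify the currency correctly?
    completion_s: float  # seconds from pointing the camera to reading the result


def summarize(sessions: List[Session]) -> Dict[str, Dict[str, float]]:
    """Per-group accuracy, error rate, and median task completion time."""
    by_group: Dict[str, List[Session]] = {}
    for s in sessions:
        by_group.setdefault(s.group, []).append(s)

    summary: Dict[str, Dict[str, float]] = {}
    for group, items in by_group.items():
        accuracy = mean(1.0 if s.correct else 0.0 for s in items)
        summary[group] = {
            "accuracy": accuracy,
            "error_rate": 1.0 - accuracy,  # assumed: share of incorrect identifications
            # The median is less sensitive than the mean to a few very slow attempts.
            "median_time_s": median(s.completion_s for s in items),
        }
    return summary
```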

Additional plans include iOS support, voice interaction and audio feedback for accessibility, and backend optimization for large-scale deployment scenarios.

By pursuing these enhancements, the system aims to remain adaptive, inclusive, and scalable, while also contributing to research on adaptive model retraining and user-centered evaluation of AI systems.