Application of Conversational AI Models in Decision Making for Clinical Periodontology: Analysis and Predictive Modeling

Abstract

1. Introduction

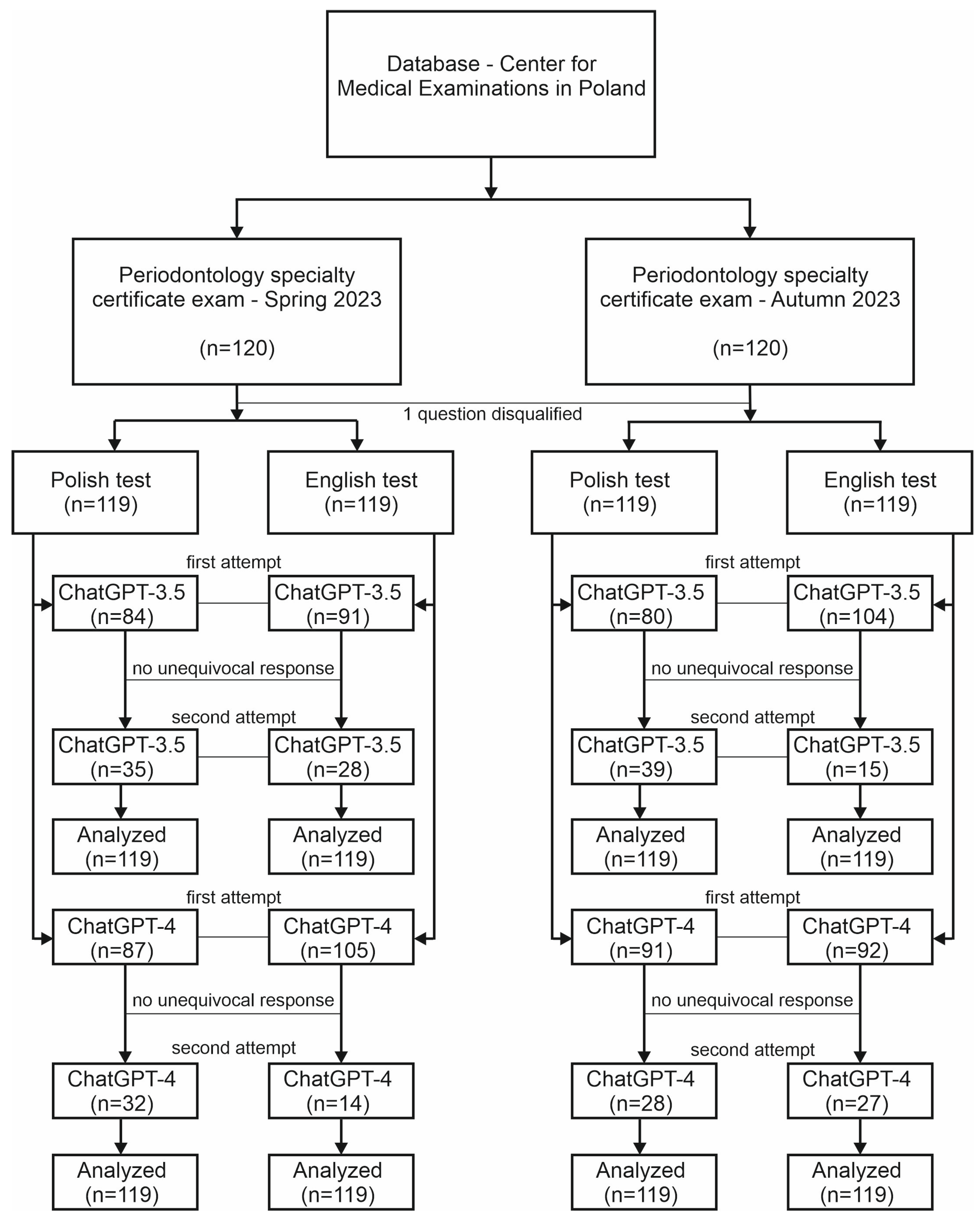

2. Materials and Methods

2.1. Research Strategy and Questions

2.2. Statistical Analysis

3. Results

3.1. ChatGPT Prompts—The First and Second Attempts

3.2. Comparison of ChatGPT-3.5 and ChatGPT-4

3.3. Comparison of Examination Languages—Polish and English

3.4. Performance of ChatGPT-3.5 and ChatGPT-4 Based on the Difficulty Index of Questions

3.5. Assessment of the Agreement of Incorrect Responses in the Periodontology Specialty Certificate Examination for Dentists and ChatGPT-3.5/ChatGPT-4

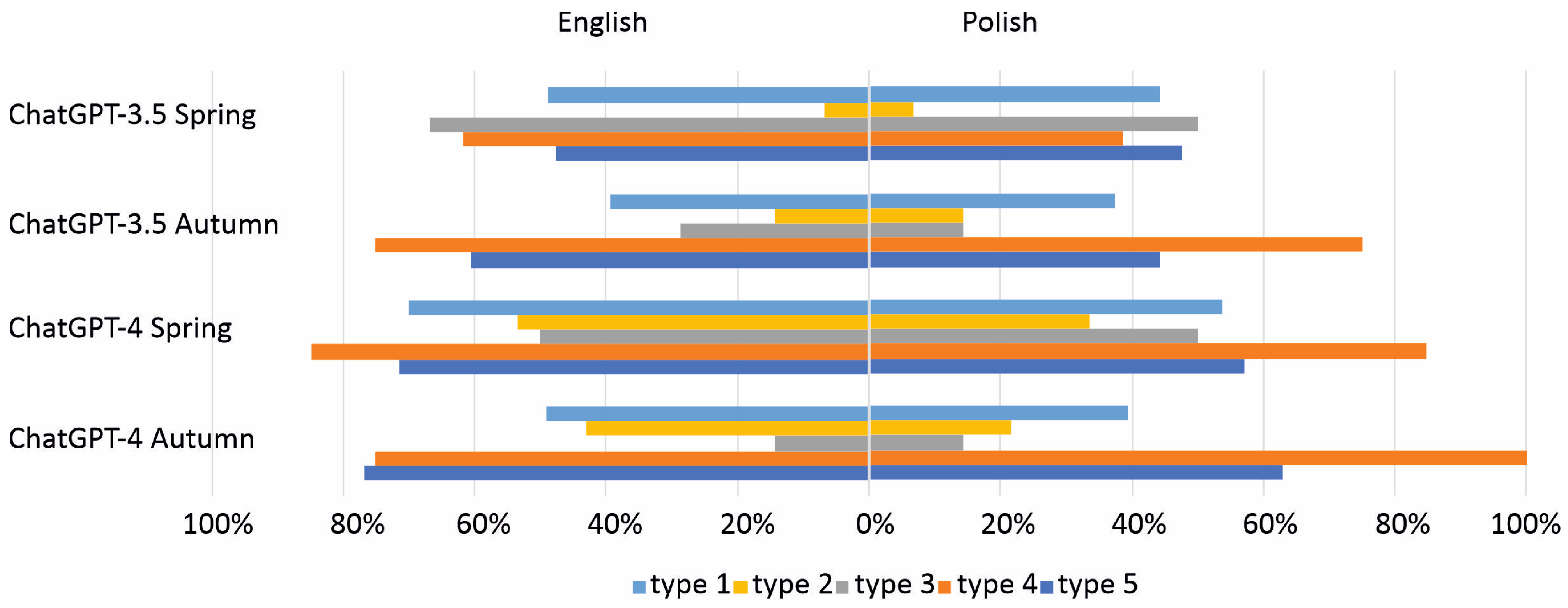

3.6. Comparison of Examination Languages, Polish and English, by Question Type

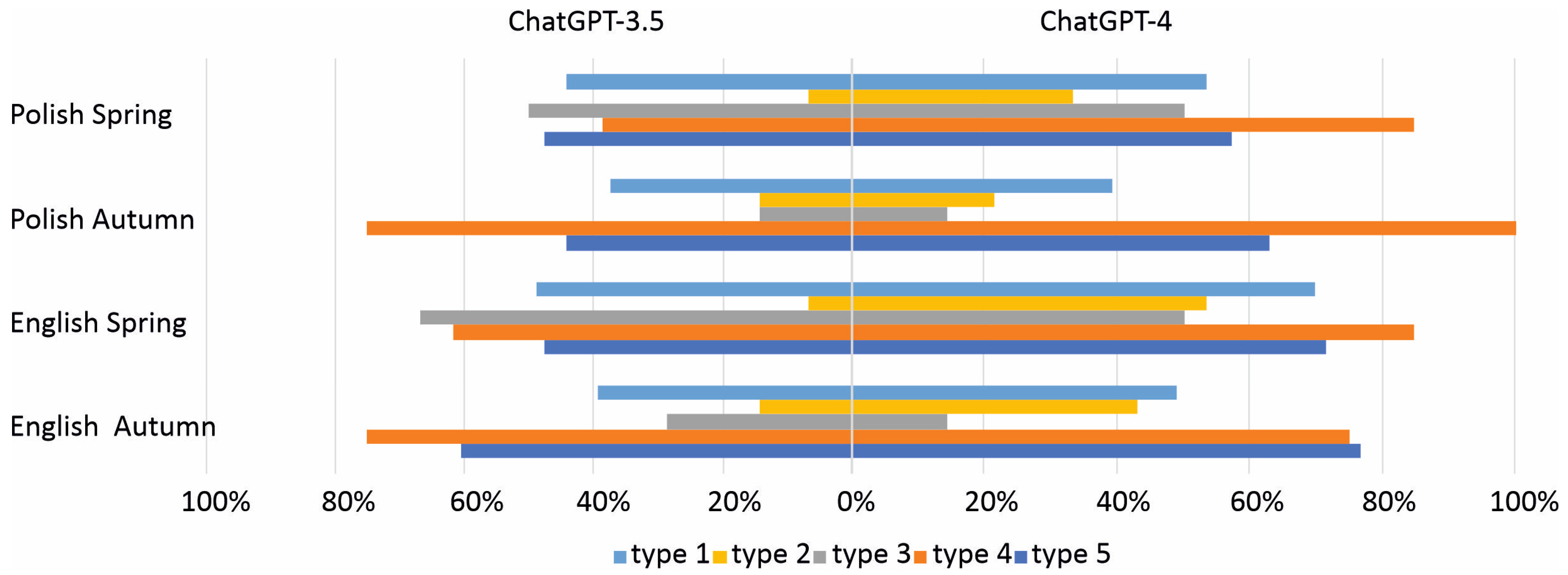

3.7. Comparison of ChatGPT-3.5 and ChatGPT-4 by Question Type

3.8. Application of Logistic Regression in Performance of Conversational AI Models in Decision Making for Clinical Periodontology

4. Discussion

5. Limitations

6. Conclusions

- ChatGPT-4 can pass the Specialty Certificate Examination in Periodontology.

- ChatGPT-4 significantly outperforms its predecessor, ChatGPT-3.5, in answering periodontology-related queries.

- ChatGPT-3.5 and ChatGPT-4 operate more efficiently in English than in Polish.

- ChatGPT sometimes provides invalid answers.

- ChatGPT has difficulty with different questions than dentists do.

- The efficiency of ChatGPT is lower for more difficult queries.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Specialty Certificate Exam | Session | ChatGPT-3.5 (n = 119) | ChatGPT-4 (n = 119) | p-Value * |
|---|---|---|---|---|
| Polish | Spring 2023 | 48 (40.3%) | 66 (55.5%) | 0.0195 |
| Polish | Autumn 2023 | 44 (37.0%) | 55 (46.2%) | 0.1480 |
| English | Spring 2023 | 54 (45.4%) | 82 (68.9%) | 0.0002 |
| English | Autumn 2023 | 53 (44.5%) | 68 (57.1%) | 0.0518 |
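
The p-values in the ChatGPT-3.5 versus ChatGPT-4 comparison can be checked directly from the counts. The sketch below is illustrative only. It assumes a Pearson chi-square test without continuity correction, which this section does not confirm, and the function name `chi_square_2x2` is hypothetical. Under that assumption, the Polish Spring 2023 row (48 of 119 versus 66 of 119) gives a p-value that agrees with the reported 0.0195.

```python
import math


def chi_square_2x2(count_a, n_a, count_b, n_b):
    """Pearson chi-square test (no continuity correction) for two proportions.

    Assumption: the test choice is illustrative, not confirmed by the source.
    """
    observed = [
        [count_a, n_a - count_a],
        [count_b, n_b - count_b],
    ]
    row_totals = [n_a, n_b]
    col_totals = [
        observed[0][0] + observed[1][0],
        observed[0][1] + observed[1][1],
    ]
    total = n_a + n_b

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, the chi-square survival function
    # reduces to erfc(sqrt(x / 2)), so no external library is needed.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Polish exam, Spring 2023: ChatGPT-3.5 (48/119) vs ChatGPT-4 (66/119)
chi2, p = chi_square_2x2(48, 119, 66, 119)
print(f"chi2 = {chi2:.3f}, p = {p:.4f}")
```

The same call with the English Spring 2023 counts (54 and 82 of 119) can be used to check that row as well.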

| Specialty Certificate Exam | Session | ChatGPT-3.5 n | ChatGPT-3.5 No. (%) | ChatGPT-4 n | ChatGPT-4 No. (%) | p-Value * |
|---|---|---|---|---|---|---|
| Polish | Spring 2023 | 84 | 37 (44.1%) | 87 | 60 (69.0%) | 0.0010 |
| Polish | Autumn 2023 | 80 | 30 (37.5%) | 91 | 52 (57.1%) | 0.0103 |
| English | Spring 2023 | 91 | 46 (50.6%) | 105 | 77 (73.3%) | 0.0010 |
| English | Autumn 2023 | 104 | 50 (48.1%) | 92 | 62 (67.4%) | 0.0064 |

| Specialty Certificate Exam | Session | ChatGPT-3.5 n | ChatGPT-3.5 No. (%) | ChatGPT-4 n | ChatGPT-4 No. (%) | p-Value * |
|---|---|---|---|---|---|---|
| Polish | Spring 2023 | 35 | 11 (31.4%) | 32 | 6 (18.8%) | 0.2336 |
| Polish | Autumn 2023 | 39 | 14 (35.9%) | 28 | 3 (10.7%) | 0.0195 |
| English | Spring 2023 | 28 | 8 (28.6%) | 14 | 5 (35.7%) | 0.6369 |
| English | Autumn 2023 | 15 | 3 (20.0%) | 27 | 6 (22.2%) | 0.8664 |

| ChatGPT | Session | Polish (n = 119) | English (n = 119) | p-Value * |
|---|---|---|---|---|
| ChatGPT-3.5 | Spring 2023 | 48 (40.3%) | 54 (45.4%) | 0.4319 |
| ChatGPT-3.5 | Autumn 2023 | 44 (37.0%) | 53 (44.5%) | 0.2351 |
| ChatGPT-4 | Spring 2023 | 66 (55.5%) | 82 (68.9%) | 0.0325 |
| ChatGPT-4 | Autumn 2023 | 55 (46.2%) | 68 (57.1%) | 0.0918 |

| Group | Incorrect Answers | Correct Answers | p-Value * |
|---|---|---|---|
| ChatGPT-3.5 Spring 2023 | n = 71 | n = 48 | |
| mean (SD) | 0.79 (0.22) | 0.86 (0.21) | |
| range (min–max) | 0.25–1.00 | 0.25–1.00 | |
| median (Q1–Q3) | 0.75 (0.75–1.00) | 1.00 (0.75–1.00) | 0.0477 |
| ChatGPT-4 Spring 2023 | n = 53 | n = 66 | |
| mean (SD) | 0.75 (0.25) | 0.87 (0.17) | |
| range (min–max) | 0.25–1.00 | 0.50–1.00 | |
| median (Q1–Q3) | 0.75 (0.50–1.00) | 1.00 (0.75–1.00) | 0.0125 |
| ChatGPT-3.5 Autumn 2023 | n = 75 | n = 44 | |
| mean (SD) | 0.73 (0.27) | 0.76 (0.29) | |
| range (min–max) | 0.00–1.00 | 0.00–1.00 | |
| median (Q1–Q3) | 0.83 (0.50–1.00) | 0.83 (0.67–1.00) | 0.5308 |
| ChatGPT-4 Autumn 2023 | n = 64 | n = 55 | |
| mean (SD) | 0.71 (0.27) | 0.79 (0.28) | |
| range (min–max) | 0.00–1.00 | 0.00–1.00 | |
| median (Q1–Q3) | 0.83 (0.50–1.00) | 0.83 (0.67–1.00) | 0.0414 |

| Group | Incorrect Answers | Correct Answers | p-Value * |
|---|---|---|---|
| ChatGPT-3.5 Spring 2023 | n = 65 | n = 54 | |
| mean (SD) | 0.78 (0.24) | 0.87 (0.17) | |
| range (min–max) | 0.25–1.00 | 0.50–1.00 | |
| median (Q1–Q3) | 0.75 (0.75–1.00) | 1.00 (0.75–1.00) | 0.0487 |
| ChatGPT-4 Spring 2023 | n = 37 | n = 82 | |
| mean (SD) | 0.72 (0.23) | 0.86 (0.20) | |
| range (min–max) | 0.25–1.00 | 0.25–1.00 | |
| median (Q1–Q3) | 0.75 (0.50–1.00) | 1.00 (0.75–1.00) | 0.0009 |
| ChatGPT-3.5 Autumn 2023 | n = 66 | n = 53 | |
| mean (SD) | 0.73 (0.27) | 0.76 (0.29) | |
| range (min–max) | 0.00–1.00 | 0.00–1.00 | |
| median (Q1–Q3) | 0.83 (0.50–1.00) | 0.83 (0.67–1.00) | 0.2867 |
| ChatGPT-4 Autumn 2023 | n = 51 | n = 68 | |
| mean (SD) | 0.68 (0.29) | 0.80 (0.25) | |
| range (min–max) | 0.00–1.00 | 0.00–1.00 | |
| median (Q1–Q3) | 0.67 (0.50–1.00) | 0.83 (0.67–1.00) | 0.0213 |

| Specialty Certificate Exams | Session | ChatGPT-3.5 Cohen’s Kappa [95% CI *] | ChatGPT-4 Cohen’s Kappa [95% CI] |
|---|---|---|---|
| Polish | Spring 2023 | 0.38 [0.24, 0.52] | 0.41 [0.22, 0.60] |
| Polish | Autumn 2023 | 0.55 [0.41, 0.70] | 0.51 [0.33, 0.69] |
| English | Spring 2023 | 0.53 [0.38, 0.68] | 0.51 [0.30, 0.72] |
| English | Autumn 2023 | 0.49 [0.34, 0.64] | 0.38 [0.19, 0.58] |
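
As a reminder of what the kappa values measure, the sketch below computes Cohen's kappa from a 2×2 agreement table. It is a minimal illustration. The counts are hypothetical (this section does not report the underlying contingency tables), and how the authors coded dentist and ChatGPT responses for this comparison is not stated here, so the function name `cohen_kappa_2x2` and its arguments are assumptions.

```python
def cohen_kappa_2x2(both_incorrect, only_a_incorrect, only_b_incorrect, both_correct):
    """Cohen's kappa for two raters with binary outcomes.

    Arguments are the four cells of the 2x2 agreement table
    (rater A vs rater B). All names are illustrative.
    """
    n = both_incorrect + only_a_incorrect + only_b_incorrect + both_correct

    # Observed agreement: proportion of items on the diagonal.
    p_observed = (both_incorrect + both_correct) / n

    # Chance agreement from the marginal totals of each rater.
    a_incorrect = both_incorrect + only_a_incorrect
    b_incorrect = both_incorrect + only_b_incorrect
    a_correct = n - a_incorrect
    b_correct = n - b_incorrect
    p_expected = (a_incorrect * b_incorrect + a_correct * b_correct) / n**2

    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical counts, NOT taken from the study.
kappa = cohen_kappa_2x2(both_incorrect=40, only_a_incorrect=10,
                        only_b_incorrect=20, both_correct=30)
print(f"kappa = {kappa:.2f}")
```

Kappa corrects raw agreement for the agreement expected by chance, so a value of 0 means chance-level agreement and 1 means perfect agreement.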

| Variable | Univariate OR (95% CI) | Univariate p-Value | Multivariate OR (95% CI) | Multivariate p-Value |
|---|---|---|---|---|
| Type of question | | | | |
| 1 | 0.90 (0.62–1.30) | 0.5556 | | |
| 2 | 0.25 (0.13–0.50) | 0.0001 | 0.29 (0.14–0.60) | 0.0008 |
| 3 | 0.53 (0.23–1.25) | 0.1461 | | |
| 4 | 2.77 (1.32–5.83) | 0.0071 | 2.98 (1.35–6.58) | 0.0068 |
| 5 | 1.67 (1.15–2.44) | 0.0076 | 1.55 (1.02–2.34) | 0.0397 |
| Session | | | | |
| Autumn | 0.78 (0.54–1.11) | 0.1671 | | |
| Spring | 1.29 (0.90–1.85) | 0.1671 | | |
| AI Model | | | | |
| ChatGPT-3.5 | 0.61 (0.42–0.88) | 0.0077 | 0.58 (0.40–0.86) | 0.0057 |
| ChatGPT-4 | 1.64 (1.14–2.36) | 0.0077 | 1.71 (1.17–2.51) | 0.0057 |
| Difficulty index | 3.57 (1.66–7.69) | 0.0011 | 4.07 (1.86–8.92) | 0.0005 |
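
For a single binary predictor, the univariate odds ratio from logistic regression equals the cross-product ratio of the corresponding 2×2 table, so the AI Model row can be checked by hand. The sketch below is illustrative. It assumes the table above refers to the Polish exam and pools the Spring and Autumn 2023 counts reported earlier (ChatGPT-4: 66 + 55 of 238; ChatGPT-3.5: 48 + 44 of 238). That pooling is an assumption, as is the Wald interval and the hypothetical function name `odds_ratio_wald`, although the result agrees with the reported 1.64 (1.14–2.36).

```python
import math


def odds_ratio_wald(count_a, n_a, count_b, n_b, z=1.96):
    """Odds ratio of group A vs group B with a Wald 95% confidence interval.

    Assumption: a Wald interval on the log-odds scale; the source does not
    state which interval the authors used.
    """
    a, b = count_a, n_a - count_a  # group A: successes, failures
    c, d = count_b, n_b - count_b  # group B: successes, failures

    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts.
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)

    lower = math.exp(log_or - z * se_log_or)
    upper = math.exp(log_or + z * se_log_or)
    return odds_ratio, lower, upper


# Pooled Polish-exam counts (assumed pooling): ChatGPT-4 vs ChatGPT-3.5
or_value, ci_low, ci_high = odds_ratio_wald(66 + 55, 238, 48 + 44, 238)
print(f"OR = {or_value:.2f} ({ci_low:.2f}-{ci_high:.2f})")
```

Swapping the two groups gives the reciprocal odds ratio, which is why the ChatGPT-3.5 and ChatGPT-4 rows mirror each other and share one p-value.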

| Variable | Univariate OR (95% CI) | Univariate p-Value | Multivariate OR (95% CI) | Multivariate p-Value |
|---|---|---|---|---|
| Type of question | | | | |
| 1 | 0.82 (0.57–1.19) | 0.3007 | | |
| 2 | 0.31 (0.17–0.56) | 0.0001 | 0.36 (0.19–0.68) | 0.0018 |
| 3 | 0.51 (0.23–1.16) | 0.1077 | | |
| 4 | 2.51 (1.15–5.51) | 0.0212 | 2.97 (1.27–6.92) | 0.0118 |
| 5 | 1.91 (0.30–2.80) | 0.0010 | 1.82 (1.19–2.80) | 0.0063 |
| Session | | | | |
| Autumn | 0.78 (0.54–1.11) | 0.1680 | | |
| Spring | 1.29 (0.90–1.85) | 0.1680 | | |
| AI Model | | | | |
| ChatGPT-3.5 | 0.48 (0.33–0.69) | 0.0001 | 0.44 (0.30–0.65) | <0.0001 |
| ChatGPT-4 | 2.09 (1.45–3.01) | 0.0001 | 1.82 (1.19–2.80) | <0.0001 |
| Difficulty index | 4.59 (2.16–9.76) | 0.0001 | 5.58 (2.53–12.27) | <0.0001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Camlet, A.; Kusiak, A.; Świetlik, D. Application of Conversational AI Models in Decision Making for Clinical Periodontology: Analysis and Predictive Modeling. AI 2025, 6, 3. https://doi.org/10.3390/ai6010003