From Trustworthy Principles to a Trustworthy Development Process: The Need and Elements of Trusted Development of AI Systems

Abstract

1. Introduction

2. Theoretical Foundations of Trustworthy Processes for Operationalizing AI Governance

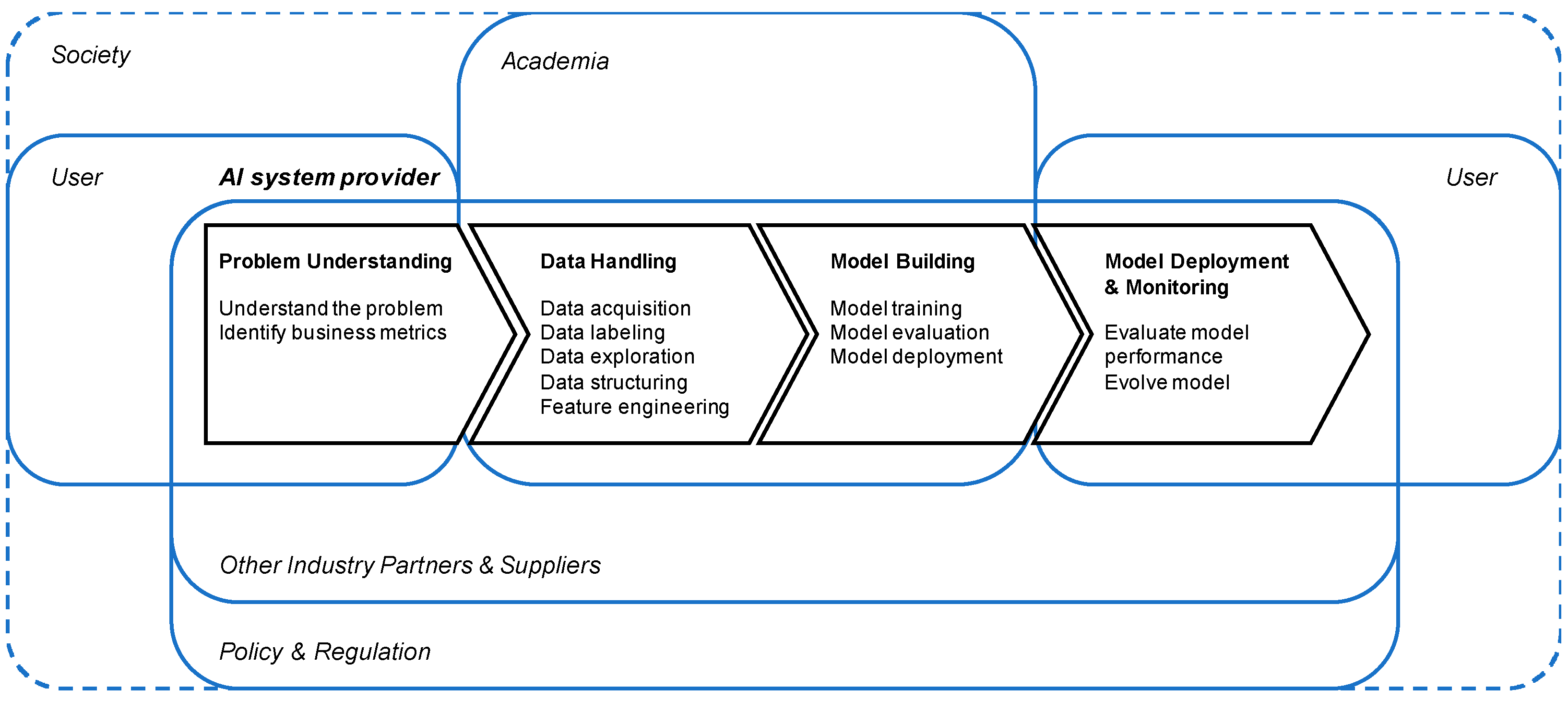

2.1. The Need for Trust in the AI Ecosystem

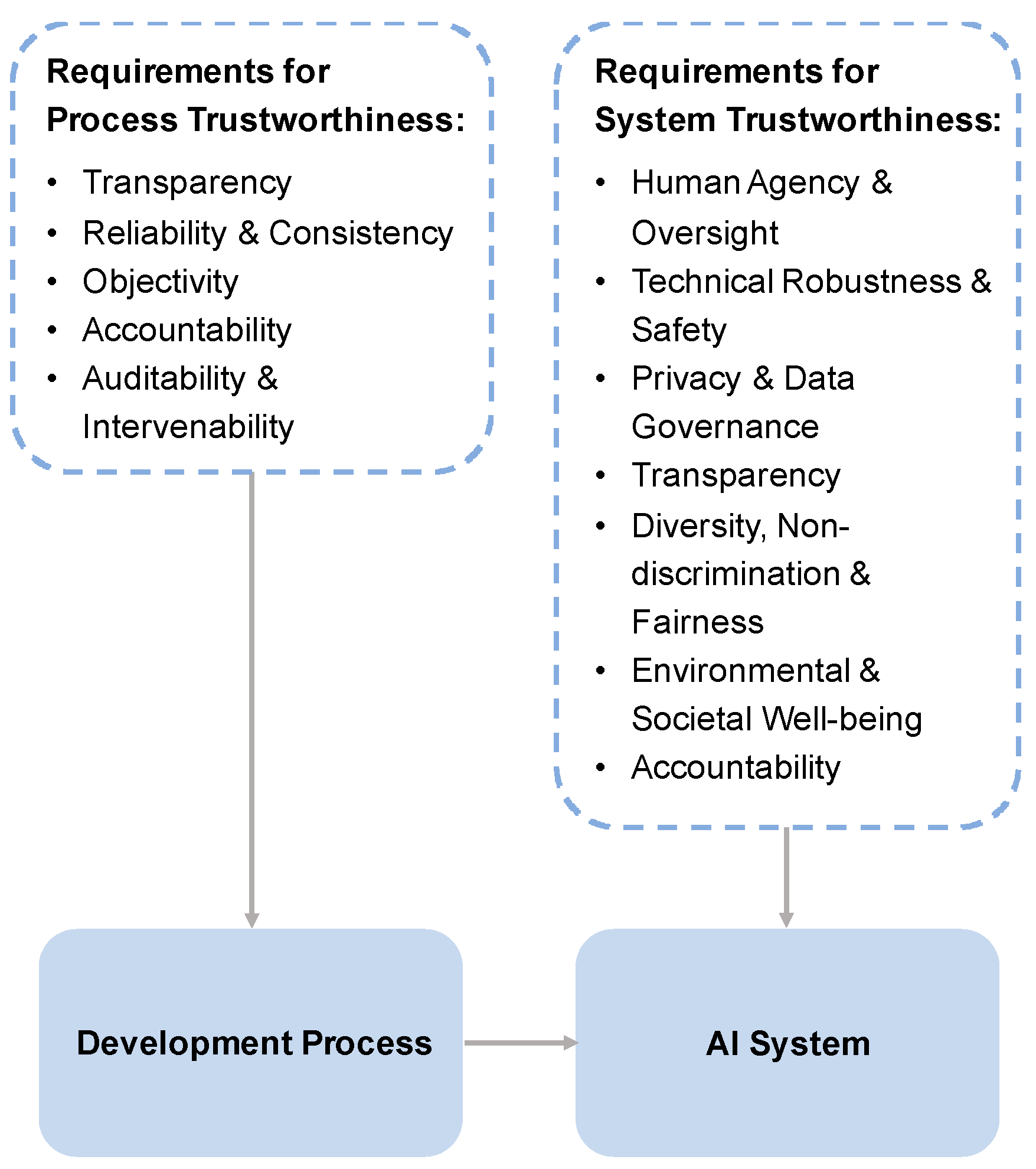

2.2. Characteristics of Trustworthy AI Development Processes

2.3. Moving towards Trustworthy AI Development

3. Methodology

3.1. Data Collection

3.2. Data Analysis

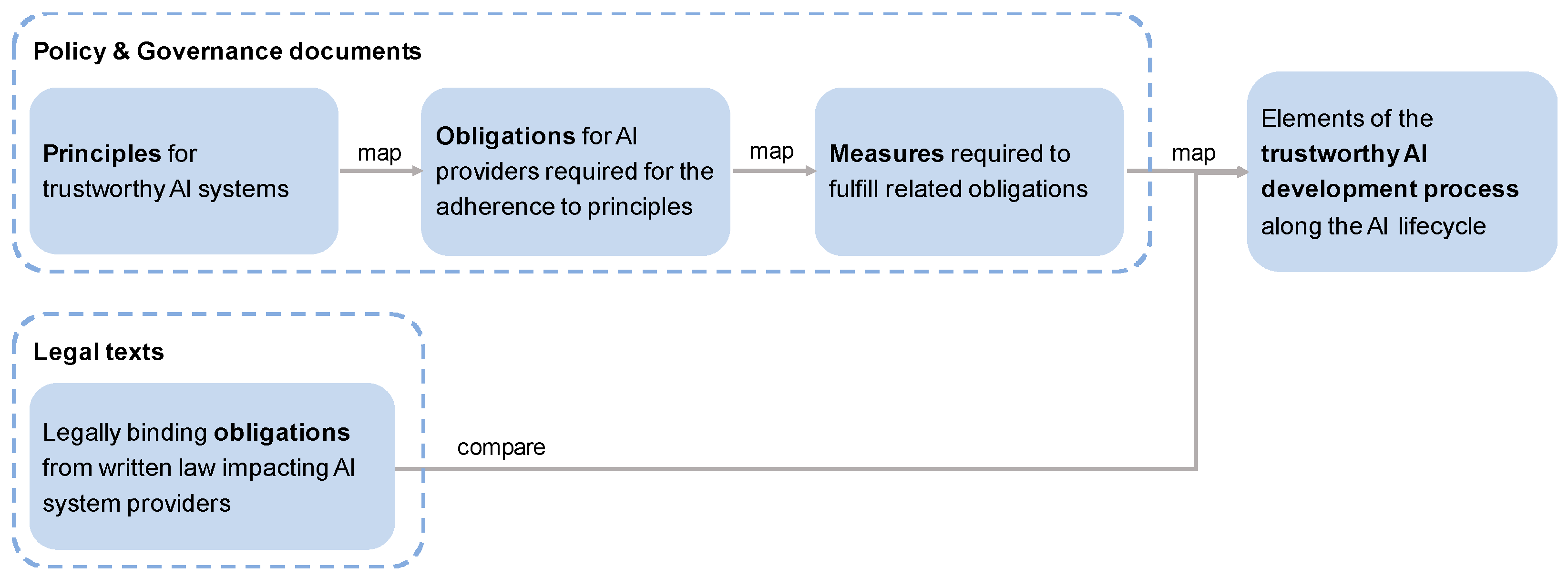

4. From Trustworthy Principles to a Trustworthy Development Process

4.1. From Principles to Obligations

4.2. From Obligations to Measures

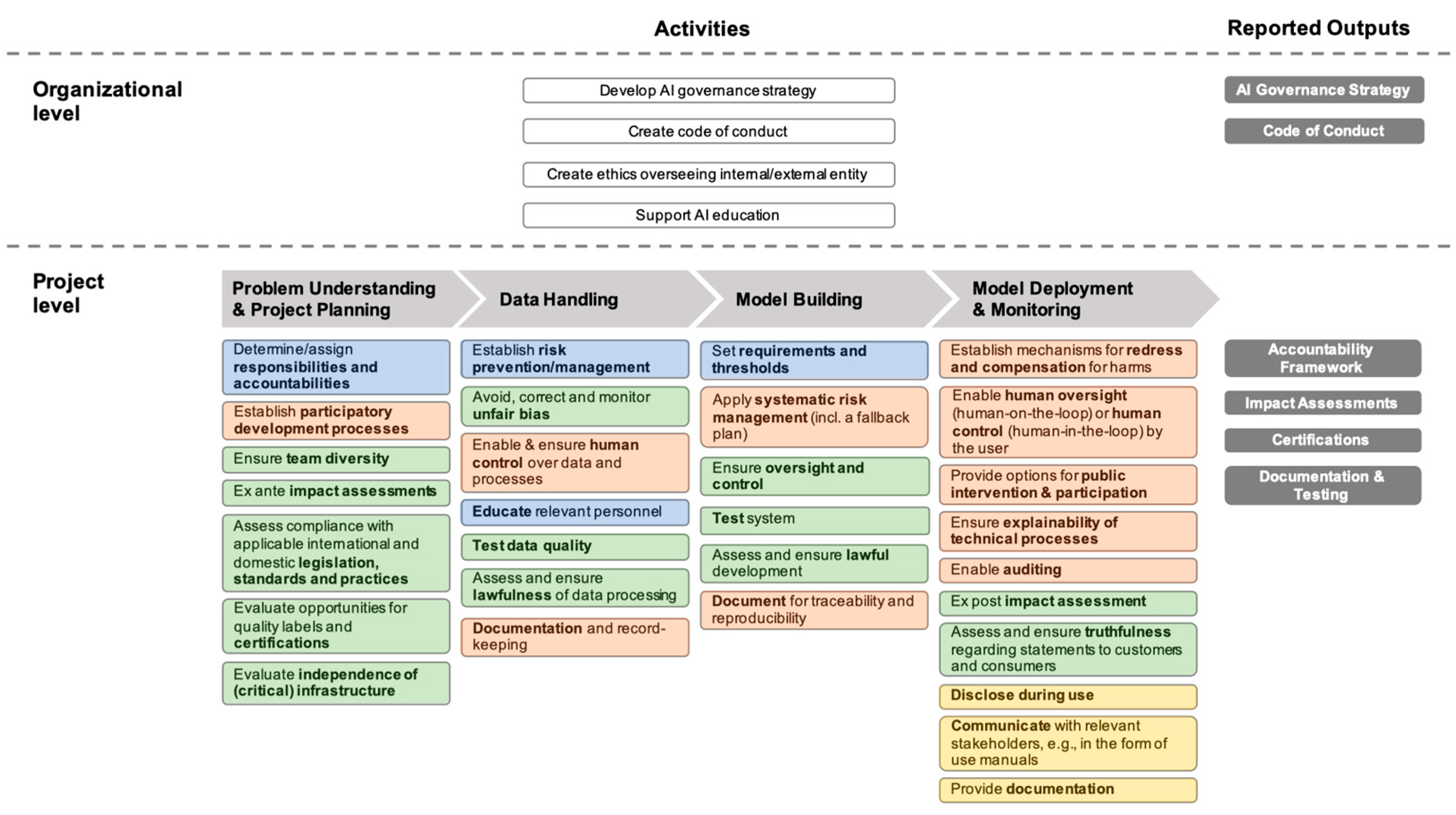

4.2.1. Measures According to AI Governance Documents

4.2.2. Comparison to Legal Perspective

4.3. From Measures to Process

5. Discussion

5.1. Process Trustworthiness of Current Responsible AI Development

5.2. Next Steps in Trustworthy AI Development

5.3. Limitations

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Principle | Obligations |
|---|---|
| Human Agency and Oversight | Ensure human autonomy/agency/determination<br>Respect and protect fundamental/human rights<br>Ensure human oversight<br>Enable system termination<br>Promote human augmentation |
| Technical Robustness and Safety | Ensure safety<br>Ensure accuracy<br>Ensure security<br>Ensure reliability<br>Ensure robustness<br>Ensure validity<br>Ensure reproducibility<br>Ensure resilience to attack<br>Ensure traceability<br>Establish a fallback plan<br>Ensure system quality<br>Ensure verification |
| Privacy and Data Governance | Ensure privacy<br>Ensure data protection<br>Ensure data quality<br>Control data access<br>Ensure lawful data processing<br>Prevent data misuse/overuse<br>Ensure data security<br>Ensure data integrity<br>Foster data risk awareness |
| Transparency | Enable explainability of technical processes<br>Communicate system capabilities and limitations<br>Explain related human decisions/reasoning<br>Ensure traceability of datasets and processes<br>Inform about AI interaction<br>Promote AI education<br>Allow access for auditing<br>Communicate intended use<br>Ensure explicability<br>Allow for intervention<br>Ensure independence<br>Ensure transparency on responsibilities<br>Ensure truthfulness |
| Diversity, Non-Discrimination, and Fairness | Avoid/correct/monitor unfair bias<br>Ensure non-discrimination<br>Ensure diversity and inclusion<br>Ensure equity, equality, and solidarity<br>Ensure accessibility<br>Ensure lawful development<br>Enable multi-stakeholder engagement<br>Enable compensation and remedy in case of discrimination<br>Ensure peace and justice<br>Define fairness<br>Enable opportunity for correction |
| Societal and Environmental Well-Being | Prevent and reduce harm<br>Monitor social impact<br>Do more good than harm<br>Ensure environmental friendliness<br>Ensure proportionality to legitimate aim<br>Ensure sustainability<br>Monitor democratic impact<br>Prevent misuse<br>Establish multi-stakeholder dialog<br>Ensure right foundation<br>Ensure scientific foundation |
| Accountability | Ensure auditability<br>Provide documentation and information<br>Assess general impacts<br>Determine/assign responsibilities<br>Allow for redress<br>Establish appropriate oversight<br>Establish ethics overseeing internal/external entity<br>Establish measurement mechanisms<br>Ensure public engagement<br>Control access<br>Foster accountability by design<br>Create codes of conduct<br>Collect feedback<br>Ensure harm compensation |

| | Non-Binding | Binding * |
|---|---|---|
| Plan | Create codes of conduct | Develop AI governance strategies regarding:<br>Set requirements and thresholds for: |
| Create and establish | Establish participatory development processes through:<br>Ensure team diversity regarding backgrounds, cultures, disciplines<br>Establish risk prevention/management regarding:<br>Educate relevant personnel<br>Ensure oversight and control regarding: | Apply systematic risk management (incl. a fallback plan)<br>Enable human oversight (human-on-the-loop) or human control (human-in-the-loop) by the user to: |
| Assess and evaluate | Ex ante impact assessments regarding:<br>Evaluate independence of (critical) infrastructure<br>Ex post impact assessment regarding: | Assess compliance with applicable international and domestic legislation, standards, and practices<br>Test data quality regarding:<br>Assess and ensure truthfulness regarding statements to customers and consumers |
| Document and communicate | Support AI education through: | Documentation and record-keeping of: |
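Read as a process, the measures table assigns each measure to a phase and marks it as non-binding or binding. The Python sketch below is a hypothetical illustration of that reading, not the authors' method. It encodes a small excerpt of the table (omitting the sub-items that follow "regarding:" and similar colons) and produces a phase-ordered checklist, optionally limited to binding measures. The names `PHASES`, `Bindingness`, `Measure`, `MEASURES` and `checklist` are assumptions introduced here, and treating the table rows as a sequential order of phases is likewise an assumption.

```python
from dataclasses import dataclass
from enum import Enum

# Phase names follow the rows of the measures table; treating them as an
# ordered sequence is an assumption made for this sketch.
PHASES = ("Plan", "Create and establish", "Assess and evaluate", "Document and communicate")


class Bindingness(Enum):
    NON_BINDING = "non-binding"
    BINDING = "binding"


@dataclass(frozen=True)
class Measure:
    """Hypothetical record for one table entry: phase, bindingness, and text."""
    phase: str
    bindingness: Bindingness
    description: str

    def __post_init__(self) -> None:
        # Reject phases that do not appear in the table.
        if self.phase not in PHASES:
            raise ValueError(f"unknown phase: {self.phase!r}")


# A small excerpt of the table, without the sub-items that follow "regarding:".
MEASURES = [
    Measure("Plan", Bindingness.NON_BINDING, "Create codes of conduct"),
    Measure("Create and establish", Bindingness.NON_BINDING, "Educate relevant personnel"),
    Measure("Create and establish", Bindingness.BINDING,
            "Apply systematic risk management (incl. a fallback plan)"),
    Measure("Assess and evaluate", Bindingness.NON_BINDING,
            "Evaluate independence of (critical) infrastructure"),
    Measure("Assess and evaluate", Bindingness.BINDING,
            "Assess compliance with applicable international and domestic legislation, "
            "standards, and practices"),
    Measure("Document and communicate", Bindingness.NON_BINDING, "Support AI education"),
    Measure("Document and communicate", Bindingness.BINDING, "Documentation and record-keeping"),
]


def checklist(measures, binding_only=False):
    """Return (phase, description) pairs ordered by phase.

    sorted() is stable, so measures within one phase keep their listed order.
    """
    order = {phase: index for index, phase in enumerate(PHASES)}
    selected = [m for m in measures
                if not binding_only or m.bindingness is Bindingness.BINDING]
    return [(m.phase, m.description) for m in sorted(selected, key=lambda m: order[m.phase])]


if __name__ == "__main__":
    for phase, description in checklist(MEASURES, binding_only=True):
        print(f"[{phase}] {description}")
```

Run as a script, it prints the three binding measures of the excerpt in phase order. Calling `checklist(MEASURES)` without `binding_only` returns all seven excerpted entries, which mirrors the table's point that non-binding measures complement, rather than replace, the binding ones.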

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hohma, E.; Lütge, C. From Trustworthy Principles to a Trustworthy Development Process: The Need and Elements of Trusted Development of AI Systems. AI 2023, 4, 904-925. https://doi.org/10.3390/ai4040046
