Abstract

The union of Edge Computing (EC) and Artificial Intelligence (AI) has brought forward the Edge AI concept to provide intelligent solutions close to the end-user environment, for privacy preservation, low latency to real-time performance, and resource optimization. Machine Learning (ML), as the most advanced branch of AI in the past few years, has shown encouraging results and applications in the edge environment. Nevertheless, edge-powered ML solutions are more complex to realize due to the joint constraints from both edge computing and AI domains, and the corresponding solutions are expected to be efficient and adapted in technologies such as data processing, model compression, distributed inference, and advanced learning paradigms for Edge ML requirements. Despite the fact that a great deal of the attention garnered by Edge ML is gained in both the academic and industrial communities, we noticed the lack of a complete survey on existing Edge ML technologies to provide a common understanding of this concept. To tackle this, this paper aims at providing a comprehensive taxonomy and a systematic review of Edge ML techniques, focusing on the soft computing aspects of existing paradigms and techniques. We start by identifying the Edge ML requirements driven by the joint constraints. We then extensively survey more than twenty paradigms and techniques along with their representative work, covering two main parts: edge inference, and edge learning. In particular, we analyze how each technique fits into Edge ML by meeting a subset of the identified requirements. We also summarize Edge ML frameworks and open issues to shed light on future directions for Edge ML.

1. Introduction

The tremendous success of Artificial Intelligence (AI) technologies [1] in the past few years has been driving both industrial and societal transformations through domains such as Computer Vision (CV), Natural Language Processing (NLP), Robotics, Industry 4.0, Smart Cities, etc. This success is mainly brought by deep learning, providing the conventional Machine Learning (ML) techniques with the capabilities of processing raw data and discovering intricate structures [2]. Daily human activities are now immersed with AI-enabled applications from content search and service recommendation to automatic identification and knowledge discovery.

The existing ML models, especially deep learning models, such as GPT-4 [3], Segment Anything [4], OVSeg [5], Make-A-Video [6], and Stable Diffusion [7], tend to rely on complex model structures and large model size to provide competitive performances. For instance, the largest WuDao 2.0 model [8] trained on 4.9TB of data has surpassed state-of-the-art levels on nine benchmark tasks with a striking 1.75 trillion parameters. As a matter of fact, large models have clear advantages on multi-modality, multi-task, and benchmark performance. However, such models require a relatively very large training datasets to be built as well as a large amount of computing resources during the training and inference phases. This dependency makes them usually closed to public access, and unsuitable to be directly deployed for end devices or even the small/medium enterprise level to provide real-time, offline, or privacy-oriented services.

In parallel with ML development, Edge Computing (EC) was firstly proposed in 1990 [9]. The main principle behind EC is to bring the computational resources at locations closer to end-users. This was intended to deliver cached content, such as images and videos, that are usually communication-expensive, and prevent heavy interactions with the main servers. This idea has later evolved to host applications on edge computing resources [10]. The recent and rapid proliferation of connected devices and intelligent systems has been further pushing EC from the traditional base station level or the gateway level to the end device level. This offers numerous technical advantages such as low latency, mobility, and location awareness support to delay-sensitive applications [11]. This serves as a critical enabler for emerging technologies like 6G, extended reality, and vehicle-to-vehicle communications, to mention only a few.

Edge ML [12], as the ML instantiation powered by EC and a union of ML and EC, has brought the processing in ML to the network edge and adapted ML technologies to the edge environment. In this work, the edge environment refers to the end-user side pervasive environment composed of devices from both the base station level and the end device level. In classical ML scenarios, users run ML applications on their resource-constrained devices (e.g., mobile phones, and Internet of Things (IoT) sensors and actuators), while the core service is performed on the cloud server. In Edge ML, either optimized models and services are deployed and executed in the end-user’s device, or the ML models are directly built on the edge side. This computing paradigm provides ML applications with advantages such as real-time immediacy, low latency, offline capability, enhanced security and privacy, etc.

In order to illustrate the transformative potential and versatility of Edge ML across different sectors, we briefly explore several application sectors where Edge ML has already demonstrated substantial impact. The applications represent just a fraction of the potential of Edge ML, while the breadth and depth of Edge ML applications are expanding with the technique’s evolution and its increased adoption.

- Healthcare: In healthcare, Edge ML enables real-time patient monitoring and personalized treatment strategies. Wearable sensors and smart implants equipped with Edge ML can process data locally, providing immediate health feedback [13]. This advancement permits the early detection of health irregularities and swift responses to potential emergencies, while also maintaining patient data privacy by avoiding the need for data transmission for analysis. Furthermore, in telemedicine, Edge ML could be used to interpret diagnostic imaging locally and provide immediate feedback to remote healthcare professionals, improving patient care efficiency and outcomes.

- Autonomous Vehicles: Edge ML is a key enabler for the advancements in the field of autonomous vehicles, which includes both Unmanned Aerial Vehicles (UAVs) and self-driving cars. These vehicles are packed with a myriad of sensors and cameras that generate enormous amounts of data per second [14]. Processing this data in real-time is crucial for safe and efficient operation, and sending all the data to a cloud server is impractical due to latency and bandwidth constraints. Edge ML, with its capability to process data at the edge, can help in reducing the latency, and enhancing real-time responses.

- Smart City: Edge ML plays a crucial role in the realization of smart cities [15], where real-time data processing is paramount. Applications such as intelligent traffic light control, waste management, and urban planning greatly benefit from ML models that can analyze sensor data on-site and respond promptly to changes in the urban environment. Moreover, Edge ML can power public safety applications such as real-time surveillance systems for crime detection and prevention. Here, edge devices like surveillance cameras equipped with ML algorithms can detect unusual activities or behaviours and alert relevant authorities in real time, potentially preventing incidents and enhancing overall city safety.

- Industrial IoT (IIoT): In the realm of Industrial IoT [16], Edge ML is instrumental in predictive maintenance and resource management. With ML models running at the edge, real-time anomaly detection can be carried out to anticipate equipment failures and proactively schedule maintenance. Additionally, Edge ML can optimize operational efficiency by monitoring production line performance, tracking resource usage, and automating quality control processes.

However, the Edge ML’s core research challenge remains how to adapt ML technologies to edge environmental constraints such as limited computation and communication resources, unreliable network connections, data sensitivity, etc., while keeping similar or acceptable performance. Research work was carried out in the past few years tackling different aspects of this meta-challenge, such as: model compression [17], transfer learning [18], and federated learning [19].

With the above-mentioned promising results in diverse areas, we noticed that very little work has been realized to deliver a systematic view of relevant Edge ML techniques, rather focusing on Edge ML in specific contexts. One example worth reporting is Wang et al. [20,21], who present a comprehensive survey on the convergence of edge computing and deep learning, which covers aspects of hardware, communication, models, as well as edge applications and edge optimization. The work is a good reference as an Edge ML technology stack. On the other hand, the analysis of edge ML paradigms are rather brief without a comprehensive analysis of diverse related problems and the matching solutions. Abbas et al. [22] review the role and impact of the relevant ML techniques in addressing the safety, security, and privacy challenges in the specific context of the IoT systems. Mustafa et al. [23] center around two significant themes in edge computing: Wireless Power Transfer (WPT) and Mobile Edge Computing (MEC). Their work surveys the methodologies of offloading tasks in MEC and WPT to end devices, and analyzes how the conjunction of WPT and MEC offloading can help overcome limitations in smart device battery lifetime and task execution delay. Murshed et al. [24] introduce a machine learning survey at the network edge, for which the training, inference, and deployment aspects are briefly summarized, and the technique coverage is limited and only focuses on representative techniques such as federated learning and quantization.

Compared to the existing works that briefly review the representative techniques, our paper aims to provide a panoramic view of Edge ML requirements, and offers a comprehensive technique review for edge machine learning on the soft computing aspects of model training and model inference. Throughout our review process, we strive for comprehensiveness, including more than twenty technique categories and over fifty techniques in this paper. Our work fills a significant gap in the literature by providing a single point of reference that offers extensive coverage of the Edge ML field. The paper delivers a more complete picture of the landscape of Edge ML, allowing readers to understand the full breadth and depth of the available techniques, their respective advantages and limitations, and their fit within different Edge ML contexts.

In contrast with [23], our paper concentrates on the integration of ML techniques in the edge environment, dealing with the aspects of data processing, model compression, distributed inference, and advanced learning paradigms to explore a broader range of techniques and paradigms. In comparison with [24], which covers representative works in topics of training, inference, applications, frameworks, software, and hardware, our paper focuses on model training and inference computing aspects, including a far richer list of techniques (e.g., in the edge learning section, we include thirteen technique categories benefiting Edge ML, compared to the three training techniques presented in [24]). We also analyze in detail the Edge ML requirements to provide a broad taxonomy and show how each technique can satisfy different Edge ML requirements as a systematic review.

Specifically, our paper answers the three following questions:

- What are the computational and environmental constraints and requirements for ML on the edge?

- What are the Edge ML techniques to train intelligent models or enable model inference while meeting Edge ML requirements?

- How can existing ML techniques fit into an edge environment regarding these requirements?

To answer the three above questions, this review is realized by firstly identifying the Edge ML requirements, and then individually reviewing existing ML techniques and analyzing if and how each technique can fit into edge by fulfilling a subset of the requirements. Following this methodology, our goal is to be as exhaustive as possible in the work coverage and provide a panoramic view of all relevant Edge ML techniques with a special focus on machine learning for model training and inference at the edge. Other topics, such as Edge ML hardware [25] and edge communication [26], are beyond the scope of this paper. As such, we do not discuss them in this review.

The remainder of the paper is organized as follows: Section 2 introduces the Edge ML motivation driven by the requirements. Section 3 provides an overview of all the surveyed edge ML techniques. In Section 4 and Section 5, we describe each technique and analyze them, respectively, in relation to Edge ML requirements. Section 6 summarizes the technique review part, and Section 7 briefly introduces the frameworks supporting Edge ML implementation. Section 8 identifies the open issues and future directions in Edge ML. Section 9 concludes our work and sheds light on future perspectives.

2. Edge Machine Learning: Requirements

In the context of machine learning, be it supervised learning, unsupervised learning, or a reinforcement learning, an ML task could be either a training or an inference. As in every technology, it is critical to understand the underlying requirements that ensure proper expectations. By definition, the edge infrastructure is generally resource-constrained in terms of the following: computation power, i.e., processor and memory; storage capacity, i.e., auxiliary storage; and communication capability, i.e., network bandwidth. ML models, on the other hand, are commonly known to be hardware-demanding, with computationally expensive and memory-intensive features. Consequently, the union of EC and ML exhibits both constraints from edge environment and ML models. When designing edge-powered ML solutions, requirements from both the hosting environment and the ML solution itself need to be considered and fulfilled for suitable, effective, and efficient results.

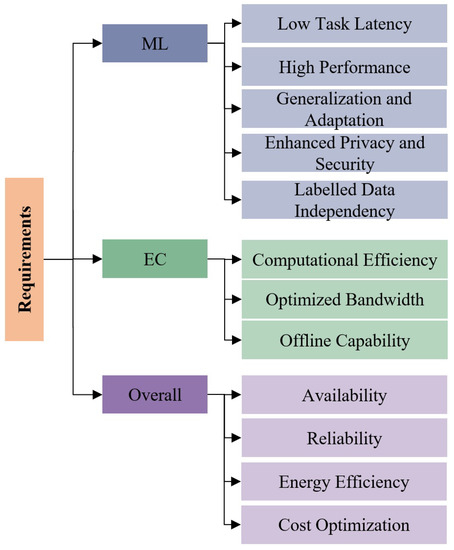

We introduce in this section the Edge ML requirements, structured in three categories: (i) ML requirements, (ii) EC requirements, and (iii) overall requirements, which are composite indicators from ML and EC for Edge ML performance. The three categories of requirements are summarized in Figure 1.

Figure 1.

Edge ML Requirements.

2.1. ML Requirements

We foresee five main requirements an ML system should consider: (i) Low Task Latency, (ii) High Performance, (iii) Generalization and Adaptation, (iv) Labelled Data Independence, and (v) Enhanced Privacy and Security. We detail these in the following.

- Low Task Latency: Task latency refers to the end-to-end processing time for one ML task, in seconds (s), and is determined by both ML models and the supporting computation infrastructure. Low task latency is important to achieve fast or real-time ML capabilities, especially for time-critical use-cases such as autonomous driving. We use the term task latency instead of latency to differentiate this concept from communication latency, which describes the time for sending a request and receiving an answer.

- High Performance: The performance of an ML task is represented by its results and measured by general performance metrics such as top-n accuracy, and f1-score in percentage points (pp), as well as use-case-dependent benchmarks such as General Language Understanding Evaluation (GLUE) benchmark for NLP [27] or Behavior Suite for reinforcement learning [28].

- Generalization and Adaptation: The models are expected to learn the generalized representation of data instead of the task labels, so as to be easily generalized to a domain instead of specific tasks. This brings the models’ capability to solve new and unseen tasks and realize a general ML directly or with a brief adaptation process. Furthermore, facing the disparity between learning and prediction environments, ML models can be quickly adapted to specific environments to solve the environmental specific problems.

- Enhanced Privacy and Security: The data acquired from edge carry much private information, such as personal identity, health status, and messages, preventing these data from being shared in a large extent. In the meantime, frequent data transmission over a network threatens data security as well. The enhanced privacy and security requires the corresponding solution to process data locally and minimize the shared information.

- Labelled Data Independence: The widely applied supervised learning in modern machine learning paradigms requires large amounts of data to train models and generalize knowledge for later inference. However, in practical scenarios, we cannot assume that all data in the edge are correctly labeled. The independence of labelled data indicates the capability of an Edge ML solution to solve one ML task without labelled data or with few labelled data.

2.2. EC Requirements

Three main edge environmental requirements of EC impact the overall Edge ML technology: (i) Computational Efficiency, (ii) Optimized Bandwidth, and (iii) Offline Capability, summarized below.

- Computational Efficiency: Refers to the efficient usage of computational resources to complete an ML task. This includes both processing resources measured by the number of arithmetic operations (OPs), and the required memory measured in MB.

- Optimized Bandwidth: Refers to the optimization of the amount of data transferred over network per task, measured by MB/Task. Frequent and large data exchanges over a network can raise communication and task latency. An optimized bandwidth usage expects Edge ML solutions to balance the data transfer over the network and local data processing.

- Offline Capability: The edge connectivity of edge devices is often weak and/or unstable, requiring operations to be performed on the edge directly. The offline capability refers to the ability to solve an ML task when network connections are lost or without a network connection.

2.3. Overall Requirements

The global requirements are composite indicators from ML and environmental requirements for Edge ML performance. We specify four overall requirements in this category: (i) Availability, (ii) Reliability, (iii) Energy Efficiency, and (iv) Cost Optimization.

- Availability: Refers to the percentage of time (in percentage points (pp)) that an Edge ML solution is operational and available for processing tasks without failure. For edge ML applications, availability is paramount because these applications often operate in real-time or near-real-time environments, and downtime can result in severe operational and productivity loss.

- Reliability: Refers to the ability of a system or component to perform its required functions under stated conditions for a specified period of time. Reliability can be measured using various metrics such as Mean Time Between Failures (MTBF) and Failure Rate.

- Energy Efficiency: Energy efficiency refers to the number of ML tasks obtained per power unit, in Task/J. The energy efficiency is determined by both the computation and communication design of Edge ML solutions and their supporting hardware.

- Cost optimization: Similar to energy consumption, edge devices are generally low-cost compared to cloud servers. The cost here refers to the total cost of realizing one ML task in an edge environment. This is again determined by both the Edge ML software implementation and its supporting infrastructure usage.

It should be noted that, depending on the nature of Edge ML applications, one Edge ML solution does not necessarily fulfill all the requirements above. The exact requirements for each specific Edge ML application vary according to each requirement’s critical level to an application. For example, for autonomous driving, the task latency requirement is much more critical than the power consumption and cost optimization requirements.

3. Techniques Overview

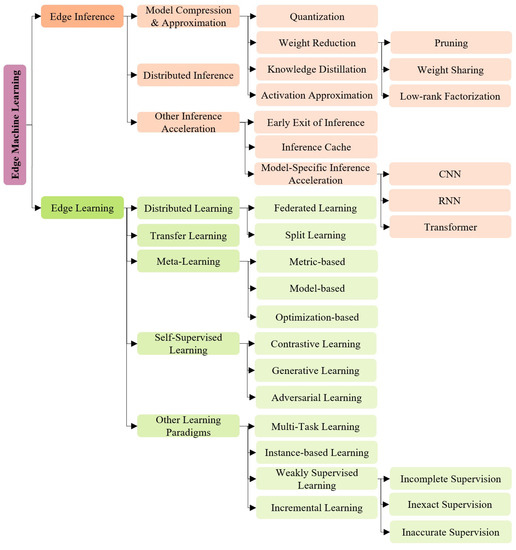

Figure 2 shows a global view of edge Machine Learning techniques reviewed in this paper. We structure the related techniques into: (i) edge inference, and (ii) edge learning. The edge inference category introduces the technologies to accelerate the task latency of ML model inference. This is performed through, e.g., compressing existing models to consume less hardware resources or by dividing existing models into several parts for parallel inference collaboration. The edge learning category introduces solutions to directly build ML models on the edge side by learning locally from edge data. We detail the categories in the next sections.

Figure 2.

Edge ML Technique Overview.

Before introducing the details of each reviewed technique, we go through three basic machine learning paradigms, i.e., supervised learning, unsupervised learning, and reinforcement learning, to lay the theoretical foundation of ML. Briefly, supervised learning involves using an ML model to learn a mapping function between input data and the target variable from the labeled dataset. Unsupervised learning directly describes or extracts relationships in unlabeled data without any guidance from labelled data. Reinforcement learning is the process that an ML agent continuously interacts with its environment, performs actions to obtain awards, and learns to achieve a goal by the trial-and-error method.

Extending the work from [29], we give below the formal definition of the three basic learning paradigms. The objective of the definition is not merely to offer a conventional understanding of these methods, but more importantly, to create a conceptual bridge between these mainstream learning techniques and their specialized applications and adaptations for edge learning techniques, which helps to understand how these well-established AI techniques transform when applied to the context of Edge computing. Breakthroughs have been made in all three ML learning paradigms to derive meaningful data insights and bring intelligent capabilities, and the reviewed techniques in this paper all fit into the three general machine learning paradigms.

3.1. Supervised Learning

Supervised learning learns a function mapping inputs to the corresponding outputs with the help of a labeled dataset D of m samples , in which are ML model parameters (e.g., weights and biases in the case of neural network). The learning process aims at finding optimal or sub-optimal values for specific to the dataset D that minimizes an empirical loss function through a training process (e.g., backward propagation in the case of neural network) as:

where stands for “supervised learning”. In practice, the labelled dataset D is often divided into training, validation, and testing datasets to train the model, guide the training process, and evaluate model performance after training, respectively [30].

Finding globally optimal values of is computationally expensive, while in practice the training process is commonly an approximation to find sub-optimal values guided by a predefined meta-knowledge including the initial model parameters , the training optimizer, and learning rate in the case of neural network, as:

where is an optimization procedure that uses predefined meta-knowledge , dataset D and loss function to continuously update the model’s parameters and output final .

3.2. Unsupervised Learning

Training an ML model in the unsupervised manner is mostly similar to the supervised learning processing, except that the learned function mapping input to the same input or other inputs. Unsupervised learning only uses unlabeled dataset of n sample to determine values specific to the dataset that minimize an empirical loss function through a training process, as:

where stands for “unsupervised learning”. Furthermore, the same approximation is applied to unsupervised learning to efficiently fit the to :

In addition to the above unsupervised learning paradigm which is used to train ML models, other unsupervised learning techniques such as clustering [31] apply predefined algorithms and computing steps to directly generate expected outputs (e.g., data clusters) from . In such context, the unsupervised learning approximates the values of specific algorithms’ hyperparameters as:

3.3. Reinforcement Learning

In the classic scenario of reinforcement learning where agents know the state at any given time step, the reinforcement learning paradigm can be formalized into a Markov Decision Process (MDP) as , where S is the set of states, A the set of actions, P the transition probability distribution defining the transition probability from to via , the reward function, the probability distribution over initial states, the discount factor prioritizing short- or long-term rewards by respectively decreasing or increasing it, T the maximum number of time steps. At a time step , a policy function , usually represented by a model in the case of deep reinforcement learning, is used to determine the action that an agent performs at state , where is the parameters of the policy function; after the action , the agent receives an award and enters into a new state . The interaction between agent and environment stops until a criterion is met, such as the rewards are maximized.

The objective of the reinforcement learning is to make agents learn to act and maximize the received rewards as follows:

where stands for “reinforcement learning”, and is the expectation over possible trajectories .

Similar to supervised and unsupervised learning, the sub-optimum of are searched via an approximation process:

where is an optimization procedure that uses predefined meta-knowledge , the given MDP M and loss function to produce final .

4. Edge Inference

Edge inference techniques seek to enable large model inference on edge devices and accelerate the inference efficiency. The techniques can be categorized into three main groups: (i) model compression and approximation, (ii) distributed inference, and (iii) other inference acceleration techniques.

4.1. Model Compression and Approximation

A large amount of redundancy among the ML model parameters (e.g., neural network weights) has been observed [32], showing that a small subset of the weights is sufficient to reconstruct the entire neural network. Model compression and approximation are methods to transform ML models into smaller-size or approximate models with low-complexity computations. This is performed with the objective to reduce the memory use and the arithmetic operations during the inference, while keeping acceptable performances. Model compression and approximation can be broadly classified into three categories [33]: (i) Quantization, (ii) Weight Reduction, and (iii) Activation Function Approximation. We discuss these categories in the following:

4.1.1. Quantization

Quantization is the process of converting ML model parameters (i.e., weights and bias in neural networks) and activation outputs, represented in Floating Point (FP) format of high precision such as FP64 or FP32, into a low-precision format and then perform computing tasks such as training or inference. Different formats of quantization can be summarized as:

- Low-Precision Floating-Point Representation: A floating-point parameter describes binary numbers in the exponential form with an arbitrary binary point position such as 32-bit floating point (FP32), 16-bit floating point (FP16), 16-bit Brain Floating Point (BFP16) [34].

- Fixed-Point Representation: A fixed-point parameter [35] uses predetermined precision and binary point locations. Compared to a high-precision floating-point representation, the fixed-point parameter representation can offer faster, cheaper, and more power-efficient arithmetic operations.

- Binarization and Terrorization: Binarization [36] is the quantization of parameters into just two values, typically −1, 1 with a scaling factor. The terrorization [37] on the other hand adds the value 0 to the binary value set to express 0 in models.

- Logarithmic Quantization: In a logarithmic quantization [38], parameters are quantized into powers of two with a scaling factor. Work in [39] shows that a weight’s representation range is more important than its precision in preserving network accuracy. Thus, logarithmic representations can cover wide ranges using fewer bits, compared to the other above-mentioned linear quantization formats.

In addition to the main exploited data types, AI-specific data formats and several quantization contributions exist in the literature and are introduced in Table 1.

Table 1.

AI-specific data formats and model quantization works.

To produce the corresponding quantized model, post-training quantization and quantization-aware training can be applied. Given an existing trained model, post-training quantization directly converts the trained model parameters and/or activation according to the conversion needs, to reduce model size and improve task latency during the inference phase. On the other hand, and instead of quantizing existing models, quantization-aware training is a method that trains an ML model by emulating inference-time quantization, which has proved to be better for model accuracy [57]. During the training of a neural network, quantization-aware training simulates low-precision behavior in the forward pass, while the backward pass based on backward propagation remains the same. The training process takes into account both errors from training data labels as well as quantization errors which accumulate in the total loss of the model, and hence the optimizer tries to reduce them by adjusting the parameters accordingly. Similarly, the work [58] analyzes the significant trade-off between energy efficiency and model accuracy, showing that the application of repair methods (e.g., ReAct-Net [59]), could largely offset the accuracy loss after quantization. Zhou et al. [60] analyzed various data precision combinations, concluding that accuracy deteriorates rapidly when weights are quantized to fewer than four bits. The work [61] investigates the impact of data representation and bit-width reduction on CNN resilience, particularly in the context of safety-critical and resource-constrained systems. The results indicate that fixed-point data representation offers a superior trade-off between memory footprint reduction and resilience to hardware faults, especially for the LeNet-5 network, achieving a 4× memory footprint reduction at the expense of less than 0.45% critical faults without requiring network retraining.

Overall, moving from high-precision floating-point to lower-precision data representations is especially useful for ML models on edge devices with only low precision operation support such as Application-Specific Integrated Circuit (ASIC) and Field Programmable Gate Arrays (FPGA) to facilitate the trade-off between task accuracy and task latency. Quantization reduces the precision of parameters and/or activation, and thereby decreases the inference task latency by reducing the consumption of computing resources, while the workload reduction brought by cheaper arithmetic operations leads to energy and cost optimization as well. Moreover, quantization techniques make it feasible to run models on low-resource edge devices, increasing the availability of the application by allowing it to function with low connectivity. One the other hand, the techniques can also reduce the application robustness or resilience to hardware faults. Reduced precision leads to a model that is more susceptible to errors due to slight changes in the input or due to hardware faults, and thus decreases the reliability.

4.1.2. Weight Reduction

Weight reduction is a class of methods that removes redundant parameters from through pruning and parameter approximation. We reviewed the three following categories of methods in this paper:

- Pruning. The process of removing redundant or non-critical weights and/or nodes from models [17]: weight-based pruning removes connections between nodes (e.g., neurons in neural network) by setting relevant weights to zero to make the ML models sparse, while node-based pruning removes all target nodes from the ML model to make the model smaller.

- Weight Sharing. The process of grouping similar model parameters into buckets and reuse shared weights in different parts of the model to reduce model size or among models [62] to facilitate the model structure design.

- Low-rank Factorization. The process of decomposing the weight matrix into several low-rank matrices by uncovering explicit latent structures [63].

A node-based pruning method is introduced in [64] to remove redundant neurons in trained CNNs. In this work, similar neurons are grouped together following a similarity evaluation based on squared Euclidean distances and then pruned away. Experiments showed that the pruning method can remove up to 35% nodes in AlexNet with a 2.2% accuracy loss on the dataset of ImageNet [65]. A grow-and-prune paradigm is proposed in [66] to complement network pruning to learn both weights and compact Deep neural networks(DNNs) architectures during training. The method iteratively tunes the architecture with gradient-based growth and pruning of neurons and weight. Experimental results showed the compression ratio of 15.7× and 30.2× for AlexNet and VGG-16 network, respectively. This delivers a significant parameter and arithmetic operation reduction relative to pruning-only methods. In practice, pruning is often combined with a post-tuning or a retraining process to improve the model accuracy after pruning [67]. A Dense–Sparse–Dense training method is presented in [68], which introduces a post-training step to re-dense and recover the original model symmetric structure to increase the model capacity. This was shown to be efficient as it improves the classification accuracy by 1.1% to 4.3% on ResNet-50 [69], ResNet-18 [69], and VGG-16 [70]. The pruning method of SparseGPT is proposed in [71], showing that the large-scale generative pretrained transformer (GPT) family models can be pruned to at least 50% sparsity in one-shot, without any retraining, at minimal loss of accuracy. The driving idea behind this is an approximate sparse regression solver that runs entirely locally and relies solely on weight updates designed to preserve the input–output relationship for each layer. We also examine the layer-wise weight-pruning method presented in [72]. The method relies on a differential evolutionary layer-wise weight pruning operating in two distinct phases—a model-pruning phase, which analyzes each layer’s pruning sensitivity and guides the pruning process, and a model-fine-tuning phase, where removed connections are considered for recovery to improve the model’s capacity. Notably, the approach achieved impressive compression ratios of at least 10× across different models, with a standout 29× compression achieved for AlexNet. Another notable development in the field of structural pruning is the Dependency Graph (DepGraph) method [73]. This method represents a breakthrough in tackling the complex task of any structural pruning across a broad variety of neural architectures, from CNNs and RNNs to GNNs and Transformers. DepGraph introduces an automated system that models the dependency between layers to effectively group parameters that can be pruned together. The method demonstrates a promising performance across a multitude of tasks and architectures, including ResNet, DenseNet, MobileNet, and Vision transformer for images, GAT for graph, DGCNN for 3D point cloud, alongside LSTM for language.

The aforementioned pruning methods are static, as they permanently change the original network structure which may lead to a decrease in model capability. On the other hand, dynamic pruning [74] determines at run-time which layers, image channels (for CNN), or neurons would not participate in further model computing during a task. A dynamic channel pruning is proposed in [75]. This method dynamically selects which channel to skip or to process using feature boosting and suppression, which is achieved by the use of a side network trained together along the CNN to guide channel amplification and omission. This work achieved a 2× acceleration on ResNet-18 with 2.54% top-1 and 1.46% top-5 accuracy loss, respectively.

A multi-scale weight-sharing method is introduced in [76] to share weights among the convolution kernels of the same layer. To share kernel weights for multiple scales, the shared tuple of kernels is designed to have the same shape, and different kernels in the shared tuple are applied to different scales. With approximately 25% fewer parameters, the shared-weight ResNet model provides similar performance compared to the baseline ResNets [69]. Instead of looking up tables to locate the shared weight for each connection, HashedNets is proposed in [77] to randomly group connection weights into hash buckets via a low-cost hash function. These weights are tuned to adjust to the HashedNets weight sharing architecture with standard back-propagation during the training. Evaluations showed that HashedNets achieved a compression ratio of 64% with an around 0.7% accuracy improvement against a five-layer CNN baseline with the MNIST dataset [78]. Furthermore, the recent work [79] uses a gradient-based method to determine a threshold for attention scores at runtime, thereby effectively pruning inconsequential computations without significantly affecting model accuracy. Their proposed bit-serial architecture, known as LeOPArd, leverages this gradient-based thresholding to enable early termination, resulting in a significant boost in computational speed (1.9× on average) and energy efficiency (3.9× on average), with a minor trade-off in accuracy (<0.2% degradation). The related work of pruning is summarized in Table 2.

Table 2.

Summary of pruning works and main results.

Structured matrices use repeated patterns within matrices to represent model weights to reduce the number of parameters. The circulant matrix, in which all row vectors are composed of the same elements and each row vector is shifted one element to the right relative to the preceding row vector, are often used as the structured matrix to provide a good compression and accuracy for RNN-type models [80,81]. The Efficient Neural Architecture Search (Efficient NAS) via parameter sharing is proposed in [82], in which only one shared set of model parameters is trained for several model architectures, also known as child models. The shared weights are used to compute the validation losses of different architectures. Sharing parameters among child models allows efficient NAS to deliver strong empirical performances for neural network design and use fewer GPU FLOP than automatic model design approaches. The NAS approach has been successfully applied to design model architectures for different domains [83] including CV and NLP.

As for low-rank factorization, to find the optimal decomposed matrices to substitute the original weight matrix, Denton et al. [84] analyze three decomposition methods on pre-trained weight matrices: (i) singular-value decomposition, (ii) canonical polyadic decomposition, and (iii) blustering approximation. Experimental results on a 15-layer CNN demonstrate that singular-value decomposition achieved the best performance by a compression ratio of 2.4× to 13.4× on different layers along with a 0.84% point of top-one accuracy loss in the ImageNet dataset. A more recent work [85] proposes a data-aware low-rank compression method (DRONE) for weight matrices of fully-connected and self-attention layers in large-scale NLP models. As weight matrices in NLP models, such as BERT [86], do not show obvious low-rank structures, a low-rank computation could still exist when the input data distribution lies in a lower intrinsic dimension. The proposed method considers both the data distribution term and the weight matrices to provide a closed-form solution for the optimal rank-k decomposition. Experimental results show that DRONE can achieve 1.92× speedup on the Microsoft Research Paraphrase Corpus (MRPC) [87] task with only 1.5% loss in accuracy, and when DRONE is combined with distillation, it reaches 12.3× speedup on natural language inference tasks of MRPC, Corpus of Linguistic Acceptability (CoLA) [88], and Semantic Textual Similarity (STS) [89].

Overall, weight reduction directly reduces the ML model size by removing uncritical parameters. When performing tasks after weight reduction, ML models use less memory and require fewer arithmetic operations, which directly reduce the task latency with less workload and improve the computational resource efficiency. This is critical for time-sensitive applications to improve the perceived availability and responsiveness of the system. In addition, such improvements contribute to optimized energy consumption and cost. Similar to quantization, the weight-reduction techniques potentially make the model less resilient to certain types of hardware faults and such decrease the reliability. For example, a fault that affects a critical weight in a pruned network might have a bigger impact on the output than the same fault in an unpruned network, simply because there are fewer weights to ‘absorb’ the fault.

4.1.3. Knowledge Distillation

Knowledge Distillation is a procedure where a neural network is trained on the output of another network along with the original targets in order to transfer knowledge between the ML model architectures [90]. In this process, a large and complex network, or an ensemble model, is trained with a labelled dataset for a better task performance. Afterwards, a smaller network is trained with the help of the cumbersome model via a loss function L, measuring the output difference of the two models. This small network should be able to produce comparable results, and in the case of over-fitting, it can even be made capable of replicating the results of the cumbersome network.

A knowledge-distillation framework for a fast objects detection task is proposed in [91]. To address the specific challenges of object detection in the form of regression, region proposals, and less-voluminous labels, two aspects are considered: (i) a weighted cross-entropy loss, to address the class imbalance, and (ii) a teacher-bounded loss, to handle the regression component and adaptation layers to better learn from intermediate teacher distributions. Evaluations with the datasets of Pattern Analysis, Statistical Modelling and Computational Learning (PASCAL) [92], Karlsruhe Institute of Technology and Toyota Technological Institute (KITTI) [93], and COCO showed accuracy improvements by 3% to 5%. Wen et al. [94] argued that overly uncertain supervision of teachers can negatively influence the model’s results. This is due to the fact that the knowledge from a teacher is useful but still not exactly right compared with a ground truth. Knowledge adjustment and dynamic temperature distillation are introduced in this work to penalize incorrect supervision and overly uncertain predictions from the teacher, making student models more discriminatory. Experiments on CIFAR-100 [95], CINIC-10 [96], and Tiny ImageNet [97] showed nearly state-of-the-art method accuracy.

MiniVit [98] proposes to compress vision transformers with weight sharing across layers and weight distillation. A linear transformation is added on each layer’s shared weights to increase weight diversity. Three types of distillation for transformer blocks are considered in this work: (i) prediction-logit distillation, (ii) self-attention distillation, and (iii) hidden-state distillation. Experiments showed MiniViT can reduce the size of the pre-trained Swin-B transformer by 48% while achieving an increase of 1.0% in Top-1 accuracy on ImageNet.

Overall, knowledge distillation directly reduces the ML model size by simplifying model structures. Compared to the source model, the target model has a more compact and distilled structure with less parameters. Hence the workload of a task is reduced, leading to a better computational efficiency, higher availability, low task latency, and optimized energy consumption and cost. The distilled models potentially have higher reliability because they exert less stress on the hardware, reducing the likelihood of hardware faults or overheating. However, depending on the specific setup, the faults could have a greater impact on the model applications.

4.1.4. Activation Approximation

Besides the neural network’s size complexity, i.e., in terms of the number of parameters, and architecture complexity, i.e., in the terms of layers, activation functions also impact the task latency of a neural network. Activation functions approximation replaces non-linear activation functions (e.g., sigmoid and tanh) in ML models with less computationally expensive functions (e.g., ReLU) to simplify the calculation or convert the computationally expensive calculation to series of lookup tables.

In an early work [99], the Piece-wise Linear Approximation of Non-linear Functions (PLAN) was studied. The sigmoid function was approximated by a combination of straight lines, and the gradient of the lines were chosen such that all the multiplications were replaced by simple shift operations. Compared to sigmoid and tanh, Hu et al. [100] show that ReLU, among other linear functions, is not only less computationally expensive but also proved to be more robust to handle the neural network vanishing gradient problem, in which the error dramatically decreases along with the back-propagation process in deep neural networks.

Activation approximation improves the computing resource usage by reducing the required number of arithmetic operations in ML models, and thus decreases the task latency with an acceptable increase in task error.

4.2. Distributed Inference

Distributed Inference divides ML models into different partitions and carries out a collaborative inference by allocating partitions to be distributed over edge resources and computing in a distributed manner [101].

The target edge resources to distribute the inference task can be broadly divided into three levels: (i) local processors in the same edge device [102], (ii) interconnected edge devices [101], and (iii) edge devices and cloud servers [103]. Among the three levels, an important research challenge is to identify the partition points of ML models by measuring data exchanges between layers to balance the usage of local computational resources and bandwidth among distributed resources.

To tackle the tightly coupled structure of CNN, a model parallelism optimization is proposed in [104], where the objective is to distribute the inference on edge devices via a decoupled CNN structure. The partitions are optimized based on channel group to partition the convolutional layers and then an input-based method to partition the fully connected layers, further exposing the high degree of parallelism. Experiments show that the decoupled structure can accelerate the inference of large-scale ResNet-50 by 3.21× and reduce 65.3% memory use with 1.29% accuracy improvement. Another distributed inference framework is also proposed in [105] to decompose a complex neural network into small neural networks and apply class-aware pruning on each small neural network on the edge device. The inference is performed in parallel while considering the available resources on each device. The evaluation shows that the framework achieves up to 17× speed-up when distributing a variant of VGG-16 over 20 edge devices, with around a 0.5% loss in accuracy.

Distributed inference can improve the end-to-end task latency by increasing the computing parallelism over a distributed architecture. At a price of bandwidth usage and network dependency, the overall energy efficiency and cost are optimized. By distributing the inference task, the load on individual devices is reduced, allowing more tasks to be processed concurrently, which can increase the availability of the ML application. In a distributed configuration, if one node fails, the task can be reassigned to another node, thereby increasing the overall system reliability.

4.3. Other Inference Acceleration Techniques

There exist other ways for accelerating inference in the literature. These have been categorized in a separate category as they are not as popular as the previously discussed techniques. These include: (i) Early Exit of Inference (EEoI), (ii) Inference Cache, and (iii) Model-Specific Inference Acceleration. We briefly review them in the following.

4.3.1. Early Exit of Inference (EEoI)

The Early Exit of Inference (EEoI) is powered by a deep network architecture augmented with additional side branch classifiers [106]. This allows prediction results for a large portion of test samples to exit the network early via these branches when samples can already be inferred with high confidence.

BranchyNet, proposed in [106], is based on the observation that features learned at an early layer of a network may often be sufficient for the classification of many data points. By adding branch structures and exit criteria to neural networks, BranchyNet is trained by solving a joint optimization problem on the weighted sum of the loss functions associated with the exit points. During the inference, BranchyNet uses the entropy of a classification result as a measure of confidence in the prediction at each exit point and allows the input sample to exit early if the model is confident in the prediction. Evaluations have been conducted with LeNet [78], AlexNet, and ResNet on MNIST, CIFAR-10 datasets, showing BranchyNet can improve accuracy and significantly reduce the inference time of the network by 2×–6×.

To improve the modularity of the EEoI methods, a plug-and-play technique named Patience-based Early Exit is proposed in [107] for single-branch models (e.g., ResNet, Transformer). The work couples an internal classifier with each layer of a pre-trained language model and dynamically stops inference when the intermediate predictions of the internal classifiers remain unchanged for a pre-defined number of steps. Experimental results with the ALBERT model [108] show that the technique can reduce the task latency by up to 2.42× and slightly improve the model accuracy by preventing it from overthinking and exploiting multiple classifiers for prediction.

EEoI can statistically improve the latency of inference tasks by reducing the inference workload at the price of a decrease in the accuracy. By increasing throughput, the technique leads to better availability. The side branch classifiers slightly increase the memory use during inference, while the task computational efficiency is higher as in most of cases where side branch classifiers can stop the inference earlier. In scenarios where a high level of certainty is needed, an early exit might introduce a higher probability of error, potentially compromising the reliability of the system. Generally, a correctly designed and trained EEoI technique is able to improve energy efficiency and optimize cost.

4.3.2. Inference Cache

Inference Cache saves models or models’ inference results to facilitate future inferences of similar interest. This is motivated by the fact that ML tasks requested by nearby users within the coverage of an edge node may exhibit spatio-temporal locality [109]. For example, users within the same area might request recognition tasks for the same object of interest, which introduces redundant computation of deep learning inference.

Besides the Cachier [109], which caches ML models with edge server for recognition applications and shows 3× speedup in task latency, DeepCache [110] targets the cache challenge for a continuous vision task. Given input video streams, DeepCache firstly discovers the similarity between consecutive frames and identifies reusable image regions. During inference, DeepCache maps the matched reusable regions on feature maps and fills the reusable regions with cached feature map values instead of real Convolutional Neural Network (CNN) execution. Experiments show that DeepCache saves up to 47% inference execution time and reduces system energy consumption by 20% on average. A hybrid approach, semantic memory design (SMTM), is proposed in [111], combining inference cache with EEoI. In this work, low-dimensional caches are compressed with an encoder from high-dimensional feature maps of hot-spot classes. During the inference, SMTM extracts the intermediate features per layer and matches them with the cached features in fast memory: once matched, SMTM skips the rest of the layers and directly outputs the results. Experiments with AlexNet, GoogLeNet [112], ResNet50, and MobileNet V2 [113] show that SMTM can speed up the model inference over standard approaches with up to 2× and prior cache designs with up to 1.5× with only 1% to 3% accuracy loss.

Inference cache methods show their advantages of reducing task latency on continuous inference tasks or task batches. Since the prediction is usually made together with current input and previous caches, the accuracy can drop slightly. On the computational efficiency front, the cache lookup increases the computing workload and memory usage, while the global computational efficiency is improved across tasks, as the inference computation for each data sample does not start from scratch. Energy consumption and cost are reduced in the context of tasks sharing spatio-temporal similarity.

4.3.3. Model-Specific Inference Acceleration

Besides the above-mentioned edge inference techniques that can, in theory, be applied to most of ML model structures, other research efforts aim at accelerating the inference process for specific model structures. We briefly review the representative methods of inference acceleration for three mainstream neural network structures: (i) CNN, (ii) Recurrent Neural Network (RNN), and (iii) Transformers.

For CNN models, MobileNets [46] constructs small and low-latency models based on depth-wise separable convolution. This factorizes a standard convolution into a depth-wise convolution and a convolution, as a trade off between latency and accuracy during inference. The latest version of MobileNets V3 [114] adds squeeze and excitation layers [115] to the expansion-filtering-compression block in MobileNets V2 [113]. As a result, it gives unequal weights to different channels from the input when creating the output feature maps. Combined with a later neural architecture search and NetAdapt [116], MobileNets V3-Large reaches 75.2% accuracy and 156ms inference latency on ImageNet classification with a single-threaded core on a Google Pixel 1 phone. GhostNet [117] also uses a depth-wise convolution to reduce the required high parameters and FLOPs induced by normal convolution: given an input image, instead of applying the filters on all the channels to generate one channel of the output, the input tensors are sliced into individual channels and the convolution is then applied only on one slice. During inference, x% of the input is processed by standard convolution and the output of this is then passed to the second depth-wise convolution to generate the final output. Experiments demonstrate that GhostNet can achieve higher recognition performance, i.e., 75.7% better top-1 accuracy than MobileNets V3 with similar computational cost on the ImageNet dataset. However, follow-up evaluations show that depth-wise convolution is more suitable for ARM/CPU and not friendly for GPU; thus, it does not provide a significant inference speedup in practice.

A real-time RNN acceleration framework is introduced in [118] to accelerate RNN inference for automatic speech recognition. The framework consists of a block-based structured pruning and several specific compiler optimization techniques including matrix reorder, load-redundant elimination, and a compact data format for pruned model storage. Experiments achieve real-time RNN inference with a Gated Recurrent Unit (GRU) model on an Adreno 640-embedded GPU and show no accuracy degradation when the compression rate is not higher than 10×.

Motivated by the way we pay visual attention to different regions of an image or correlate words in one sentence, a transformer is proposed in [119] showing encouraging results in various machine learning domains [120,121]. On the downside, transformer models are usually slower than competitive CNN models [122] in terms of task latency due to the massive number of parameters, quadratic-increasing computation complexity with respect to token length, non-foldable normalization layers, and lack of compiler-level optimizations. Current research efforts, such as [123,124], mainly focus on simplifying the transformer architecture to fundamentally improve inference latency, among which the recent EfficientFormer [125] achieves 79.2% top-1 accuracy on ImageNet-1K with only 1.6ms inference latency on an iPhone 12. In this work, a latency analysis is conducted to identify the inference bottleneck on different layers of vision transformer, and the EfficientFormer relies on a dimension-consistent structure design paradigm that leverages hardware-friendly 4D MetaBlocks and powerful 3D multi-scale hierarchical framework blocks along with a latency-driven slimming method to deliver real-time inference at MobileNet speed.

Generally, model-specific inference acceleration techniques lower the workload of an inference task and thus reduce the task latency within the same edge environment, resulting in higher availability. Though computational resources usage can vary among techniques, most work reports an acceptable accuracy loss in exchange for a considerable decrease in resources usage. In the case of model over-fitting, inference acceleration can improve the accelerated model accuracy. The total energy consumption and cost are therefore reduced. Under the assumption that the cached data are properly managed, the ML system provides consistent responses to the same input, which can enhance the reliability of the system.

5. Edge Learning

Edge learning techniques directly build ML models on native edge devices with local data. Distributed learning, transfer learning, meta-learning, self-supervised learning, and other learning paradigms fitting into Edge ML are reviewed in this section to tackle different aspects of Edge ML requirements.

5.1. Distributed Learning

Compared to cloud-based learning in which raw or pre-processed data are transmitted to cloud for model training, distributed learning (DL) in the edge divides the model training workload onto the edge nodes, i.e., edge servers and/or edge clients, to jointly train models with a cloud server by taking advantage of individual edge computational resources. Modern distributed learning approaches tend to only transmit locally updated model parameters or locally calculated outputs to the aggregation servers, i.e., cloud or edge, or the next edge node: in the server–client configuration, the aggregation server constructs the global model with all shared local updates [126]. On the other hand, in the peer-to-peer distributed learning setup, the model construction is achieved in an incremental manner along with the participating edge nodes together [127].

Distributed learning can be applied to all three basic ML paradigms, namely, supervised learning, unsupervised learning, and reinforcement learning. Instead of learning from one optimization procedure , distributed learning constructs a global model by aggregating the optimization results of all participant nodes, as formalized by Equation (8):

where is the optimization procedure driven by the meta-knowledge of the participant node , and n is the number of distributed learning nodes. stands for the data used for learning, which can be for example the labelled dataset D for supervised learning, the unlabelled dataset for unsupervised learning, or the MDP M for reinforcement learning. is the corresponding loss on the given data and ⨆ is the aggregation algorithm (e.g., FedAvg [128] in the case of Federated Learning) to update the model by the use of all participants’ optimization results (e.g., model parameters, gradients, outputs, etc.).

The edge distributed learning results into two major advantages:

- Enhanced privacy and security: Edge data often contain sensitive information related to personal or organizational matters that the data owners are reluctant to share. By transmitting only updated model parameters instead of the data, the distributed learning on the edge trains ML models in a privacy-preserving manner. Moreover, the reduced frequency of data transmission enhances the data security by restraining sensitive data only to the edge environment.

- Communication and bandwidth optimization: Uploading data to the cloud leads to a large transmission overhead and is the bottleneck of current learning paradigm [129]. A significant amount of communication is reduced by processing data in the edge nodes, and bandwidth usage optimized via edge distributed learning.

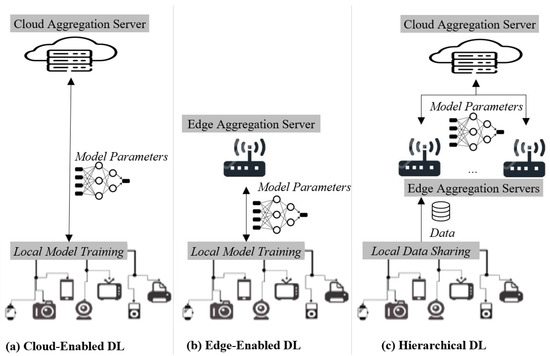

From the architectural perspective, there are three main organizational architectures [19,20] that exist to achieve distributed learning in the server–client configuration, as illustrated in Figure 3 and introduced as follows:

Figure 3.

The distributed learning architectures available in the literature.

- Cloud-enabled DL. Given a number of distributed and interconnected edge nodes, cloud-enabled DL (see Figure 3a) constructs the global model by aggregating in the cloud the local models’ parameters. These parameters are computed directly in each edge device. Periodically, the cloud server shares the global model parameters to all edge nodes so that the upcoming local model updates are made on the latest global model.

- Edge-enabled DL. In contrast to cloud-enabled DL, Edge-enabled DL (see Figure 3b) uses a local and edge server to aggregate model updates from its managed edge devices. Edge devices, with the management range of an edge server, contribute to the global model training on the edge aggregation server. Since the edge aggregation server is located near the edge devices, edge-enabled DL does not necessitate communications between the edge and the cloud, which thus reduces the communication latency and brings task offline capability. On the other hand, edge-enabled DL is often resource-constrained and can only support a limited number of clients. This usually results in a degradation in task performance over time.

- Hierarchical DL. Hierarchical DL employs both cloud and edge aggregation servers to build the global model. Generally, edge devices within the range of a same-edge server transmit local data to the corresponding edge aggregation server to individually train local models, and then local models’ parameters are shared with the cloud aggregation server to construct the global model. Periodically, the cloud server shares the global model parameters to all edge nodes (i.e., servers and devices), so that the upcoming local model updates are made on the latest global model. By this means, several challenges of distributed learning, such as Non-Identically Distributed Data (Non-IID) [130], imbalanced class [131], and the heterogeneity of edge devices [132] with diverse computation capabilities and network environments, can be targeted in the learning design. In fact, as each edge aggregation server is only responsible for training the local model with the collected data, the cloud aggregation server does not need to deal with data diversity and device heterogeneity across the edge nodes.

In the following, we review two distributed learning paradigms in the context of Edge ML: (i) federated learning, and (ii) split learning.

5.1.1. Federated Learning

Federated Learning (FL) [126] enables edge nodes to collaboratively learn a shared model while keeping all the training data on edge nodes, decoupling the ability to conduct machine learning from the need to store the data in the cloud. In each communication round, the aggregation server distributes the global model’s parameters to edge training nodes, and each node trains its local model instance with newly received parameters and local data. The updated model parameters are then transmitted to the aggregation server to update the global model. The aggregation is commonly realized via federated average (FedAvg) [128] or Quantized Stochastic Gradient Descent (QSGD) [133] for neural networks, involving multiple local Stochastic Gradient Descent (SGD) updates and one aggregation by the server in each communication round.

FL is being widely studied in the literature. In particular, the survey in [19] summarizes and compares more than forty existing surveys on FL and edge computing regarding the covered topics. According to the distribution of training data and features among edge nodes, federated learning can be divided into three categories [134]: (i) Horizontal Federated Learning (HFL), (ii) Vertical Federated Learning (VFL), and (iii) Federated Transfer Learning (FTL). HFL refers to the federating learning paradigm where training data across edge nodes share the feature space but have different ones in samples. VFL federates models trained from data sharing the sample IDs but different feature space across edge nodes. Finally, FTL refers to the paradigm where data across edge nodes are correlated but differ in both samples and feature space.

HFL is widely used to handle homogeneous feature spaces across distributed data. In addition to the initial work of FL [126], showing considerable latency and throughput when performing the query suggestion task in mobile environments. HFL is highly popular in the healthcare domain [135] where it is, for instance, used to learn from different electronic health records across medical organizations without violating patients’ privacy and improve the effectiveness of data-hungry analytical approaches. To tackle the limitation that HFL does not handle heterogeneous feature spaces, the continual horizontal federated learning (CHFL) approach [136] splits models into two columns corresponding to common features and unique features, respectively, and jointly trains the first column by using common features through HFL and locally trains the second column by using unique features. Evaluations demonstrate that CHFL can handle uncommon features across edge nodes and outperform the HFL models with are only based on common features.

As a more challenging subject than HFL, VFL is studied in [137] to answer the entity resolution question, which aims at finding the correspondence between samples of the datasets and learning from the union of all features. Since loss functions are normally not separable over features, a token-based greedy entity-resolution algorithm is proposed in [137] to integrate the constraint of carrying out entity resolution within classes on a logistic regression model. Furthermore, most studies of VFL only support two participants and focus on binary class logistic regression problems. A Multi-participant Multi-class Vertical Federated Learning (MMVFL) framework is proposed in [138]. MMVFL enables label sharing from its owner to other VFL participants in a privacy-preserving manner. Experiment results on two benchmark multi-view learning datasets, i.e., Handwritten and Caltech7 [139], show that MMVFL can effectively share label information among multiple VFL participants and match multi-class classification performance of existing approaches.

As an extension of the federated learning paradigm, FTL deals with the learning problem of correlated data from different sample spaces and feature spaces. FedHealth [140] is a framework for wearable healthcare targeting the FTL as a union of FL and transfer learning. The framework performs data aggregation through federated learning to preserve data privacy and builds relatively personalized models by transfer learning to provide adapted experiences in edge devices. To address the data scarcity in FL, an FTL framework for cross-domain prediction is presented in [141]. The idea of the framework is to share existing applications’ knowledge via a central server as a base model, and new models can be constructed by converting a base model to their target-domain models with limited application-specific data using a transfer learning technique. Meanwhile, the federated learning is implemented within a group to further enhance the accuracy of the application-specific model. The simulation results on COCO and PETS2009 [142] datasets show that the proposed method outperforms two state-of-the-art machine learning approaches by achieving better training efficiency and prediction accuracy.

Besides the privacy-preserving nature of FL [143], and in addition to the research efforts on HFL, VFL, and FTL, challenges have been raised in federated learning oriented to security [144], communication [145], and limited computing resources [146]. This is important as edge devices usually have higher task and communication latency and are in vulnerable environments. In fact, low-cost IoT and Cyber-Physical System (CPS) devices are generally vulnerable to attacks due to the lack of fortified system security mechanisms. Recent advances in cyber-security for federated learning [147] reviewed several security attacks targeting FL systems and the distributed security models to protect locally residual data and shared model parameters. With respect to the parameter aggregation algorithm, the commonly used FedAvg employs the aggregation server to centralize model parameters; thus, attacking the central server breaks the FL’s security and privacy. Decentralized FedAvg with momentum (DFedAvgM) [148] is presented on edge nodes that are connected by an undirected graph. In DFedAvgM, all clients perform stochastic gradient descent with momentum and communicate with their neighbors only. The convergence is proved under trivial assumptions, and evaluations with ResNet-20 on CIFAR-10 dataset demonstrate no significant accuracy loss when local epoch is set to 1.

From a communication perspective, although FL evades transmitting training data over a network, the communication latency and bandwidth usage for weights or gradients shared among edge nodes are inevitably introduced. The trade-off between communication optimization and the aggregation convergence rate is studied in [149]. A communication-efficient federated learning method with Periodic Averaging and Quantization (FedPAQ) is introduced. In FedPAQ, models are updated locally at edge devices and only periodically averaged at the aggregation server. In each communication round between edge training devices and the aggregation server, only a fraction of devices participate in the parameters aggregation. Finally, a quantization method is applied to quantize local model parameters before sharing with the server. Experiments demonstrate a communication–computation trade-off to improve communication bottleneck and FL scalability. Furthermore, knowledge distillation is used in communication-efficient federated learning technique FedKD [150]. In FedKD, a small mentee model and a large mentor model learn and distill knowledge from each other. It should be noted that only the mentee model is shared by different edge nodes and learns collaboratively to reduce the communication cost. In such a configuration, different training nodes have different local mentor models, which can better adapt to the characteristics of local datasets to achieve personalized model learning. Experiments with datasets on personalized news recommendations, text detection, and medical named entity recognition show that FedKD maximally can reduce 94.89% of communication cost and achieve competitive results with centralized model learning.

Federated learning on resource-constrained devices limit both communication and learning efficiency. The balance between convergence rate and allocated resources in FL is studied in [151], where an FL algorithm FEDL is introduced to treat the resource allocation as an optimization problem. In FEDL, each node solves its local training approximately till a local accuracy level is achieved. The optimization is based on the Pareto efficiency model [152] to capture the trade-off between the wall-clock training time and edge nodes energy consumption. Experimental results show that FEDL outperforms the vanilla FedAvg algorithm in terms of convergence rate and test accuracy. Moreover, computing resources can be not only limited but also heterogeneous at edge devices. A heterogeneity-aware federated learning method, Helios, is proposed in [153] to tackle the computational straggler issue. This implies that the edge devices with weak computational capacities among heterogeneous devices may significantly delay the synchronous parameter aggregation. Helios identifies each device’s training capability and defines the corresponding neural network model training volumes. For straggling devices, a soft-training method is proposed to dynamically compress the original identical training model into the expected volume through a rotating neuron training approach. Thus, the stragglers can be accelerated while retaining the convergence for local training as well as federated collaboration. Experiments show that Helios can provide up to training acceleration and maximum 4.64% convergence accuracy improvement in various collaboration settings.

Table 3 summarizes the reviewed works related to FL topics and challenges. Besides the efforts for security, communication, and resources, a personalized federated learning paradigm is proposed in [154], so that each client has their own personalized model as a result of federated learning. As the existence of a connected subspace containing diverse low-loss solutions between two or more independent deep networks has been discovered, the work combines this property with the model mixture-based personalized federated learning method for improved performance of personalization. Experiments on several benchmark datasets demonstrated that the method achieves consistent gains in both personalization performance and robustness to problematic scenarios possible in realistic services.

Table 3.

FL related work.

Overall, FL is designed primarily to protect data privacy during model training. Sharing models and performing distributed training increases the computation parallelism and reduces the communication cost, and thus reduces both the end-to-end training task latency and the communication latency. Moreover, specific FL design can provide enhanced security, optimized bandwidth usage and efficient computing resource usage. The edge-enabled FL as an instance of the edge-enabled DL can further bring offline capability to ML models. Generally, since local devices can continue training on the local data even if the network connection is down, which can improve the availability of the application during network failures or disruptions. Implementation requires the careful management and coordination of updates from multiple devices, handling devices with differing computational capabilities, and dealing with potential delays in communication. These factors can impact the reliability of applications of Federated Learning.

5.1.2. Split Learning

As another distributed collaborative training paradigm of ML models for data privacy, Split Learning (SpL) [155] divides neural networks into multiple sections. Each section is trained on a different node, either a server or a client. During the training phase, the forward process firstly computes the input data within each section and transmits the outputs of the last layer of each section to the next section. Once the forward process reaches the last layer of the last section, a loss is computed on the given input. The backward propagation shares the gradients reversely within each section and from the first layer of the last section to the previous sections. During the backward propagation, the model parameters are updated in the meantime. The data used during the training process are stored across servers or clients which take part in the collaborative training. However, none of the involved edge nodes can review data from other sections. The neural network split into sections and trained via SpL is called Split Neural Network (SNN).

The SpL method proposed in [155] splits the training between high-performance servers and edge clients, and orchestrates the training over sections into three steps: (i) training request, (ii) tensor transmission, and (iii) weights update. Evaluations with VGG and Resnet-50 models on MNIST, CIFAR-10, and ImageNet datasets show a significant reduction in the required computation operations and communication bandwidth by edge clients. This is because only the first few layers of SNN are computed on the client side, and only the gradients of few layers are transmitted during backward propagation. When a large number of clients are involved, the validation accuracy and convergence rate of SpL are higher than FL, as general non-convex optimization averaging models in a parameter space could produce an arbitrarily bad model [156].

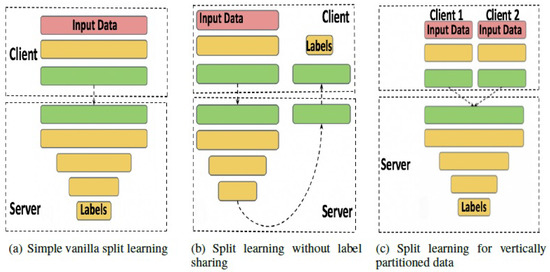

The configuration choice to split a neural network across servers and clients are subject to design requirements and available computational resources. The work in [157] presents several configurations of SNN catering to different data modalities, of which Figure 4 illustrates three representative configurations: (i) in vanilla SpL, each client trains a partial deep network up to a specific layer known as the cut layer, and the outputs at the cut layer are sent to a server which completes the rest of the training. During the parameters update, the gradients are back-propagated at the server from its last layer until the cut layer. The rest of the back propagation is completed by the clients. (ii) In the configuration of SpL without label sharing, the SNN is wrapped around at the end layers of the servers. The outputs of the server layers are sent back to clients to obtain the gradients. During backward propagation, the gradients are sent from the clients to servers and then back again to clients to update the corresponding sections of the SNN. (iii) SpL for vertically partitioned data allows multiple clients holding different modalities of training data. In this configuration, each client holding one data modality trains a partial model up to the cut layer, and the cut layer from all the clients are then concatenated and sent to the server to train the rest of the model. This process is continued back and forth to complete the forward and backward propagation. Although the configurations show some versatile applications for SNN, other configurations remain to be explored.

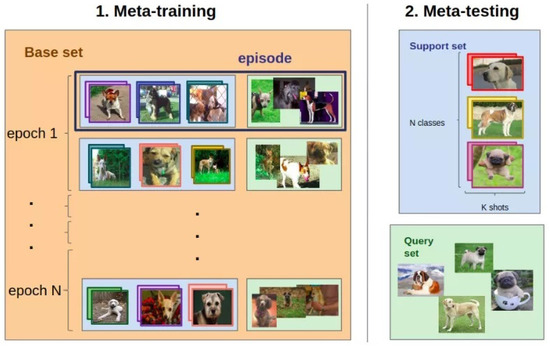

Figure 4.