Abstract

Despite several solutions and experiments have been conducted recently addressing image super-resolution (SR), boosted by deep learning (DL), they do not usually design evaluations with high scaling factors. Moreover, the datasets are generally benchmarks which do not truly encompass significant diversity of domains to proper evaluate the techniques. It is also interesting to remark that blind SR is attractive for real-world scenarios since it is based on the idea that the degradation process is unknown, and, hence, techniques in this context rely basically on low-resolution (LR) images. In this article, we present a high-scale (8×) experiment which evaluates five recent DL techniques tailored for blind image SR: Adaptive Pseudo Augmentation (APA), Blind Image SR with Spatially Variant Degradations (BlindSR), Deep Alternating Network (DAN), FastGAN, and Mixture of Experts Super-Resolution (MoESR). We consider 14 datasets from five different broader domains (Aerial, Fauna, Flora, Medical, and Satellite), and another remark is that some of the DL approaches were designed for single-image SR but others not. Based on two no-reference metrics, NIQE and the transformer-based MANIQA score, MoESR can be regarded as the best solution although the perceptual quality of the created high-resolution (HR) images of all the techniques still needs to improve.

1. Introduction

Medical imaging [1,2,3,4], internet video delivery [5,6,7], surveillance and security via person identification [8,9,10], and remote sensing [11,12,13,14,15,16] are just some examples of real-world applications where image super-resolution (SR) has been used. In SR, we aim at recovering high-resolution (HR) images from low-resolution (LR) ones. This is a non-trivial and generally ill-posed problem since multiple HR images exist corresponding to a unique LR image [17]. It is important to remark that, in this article, image resolution means precisely the dimensionality of the image. For instance, an image has a resolution of pixels. This is not to be confused with other definitions of resolution existing in certain communities, such as remote sensing (spatial resolution, radiometric resolution, temporal resolution, …).

Several classical methods have been designed for image SR, such as bicubic interpolation and Lanczos resampling [18], edge-based methods [19], statistical methods [20], among others. But, naturally, with all the developments related to deep learning (DL) and deep neural networks (DNNs) [21], a significant number of strategies have been proposed addressing SR via DL/DNNs as reported in recent secondary studies [11,17]. Among the DL techniques, convolutional neural networks (CNNs) [13,22,23,24], generative adversarial networks (GANs) [12,14,25,26], and attention-based networks [15,27,28] have been employed to solve image SR problems.

The majority of studies published up to now focus on supervised SR where models are trained with both LR images and the corresponding HR ones [11,17]. But, in reality, it is not easy to have images from the same scene but with distinct resolutions so that having the pairs LR-HR for training is not as direct as it is supposed to be. Hence, in unsupervised SR [29], only unpaired LR-HR images are available for training, so that the models are more able to learn real-world LR-HR mappings.

But an even more attractive strategy for real-world scenarios is blind image SR [30,31] which is based on the idea that the degradation process/kernels is/are unknown, and, hence, techniques in this context rely basically on LR images, not requiring the high-resolution ones. There is an increasing interest in blind image SR.

Despite the huge number of proposed techniques and experiments boosted by DL techniques [11,17], they do not usually design evaluations with high scaling factors, capping it at 2× or 4×. One of the exceptions is the experiment presented in [11] where authors considered 2×, 4×, and 8× scaling factors. However, the authors considered a multi-sensor remote sensing dataset consisting of mostly publicly available very HR satellite images. Even if the images are from different satellites and regions, eventually the diversity of the images and feature spaces are not enough to proper evaluate SR techniques.

Several other datasets have been used for training image SR approaches, such as BSDS300 [32], BSDS500 [33], DIV2K [34], PIRM [35], Set5 [36], Set14 [37], and Urban100 [38]. These datasets comprise different categories but still lack of images of other domains. For instance, all the datasets above do not seem to present images obtained via satellite sensors or medical images. It is interesting to stress that images taken by sensors embedded in satellites or airplanes have considerable differences compared to natural images due to different shooting content and shooting methods [39]. Moreover, as previously remarked, evaluations are usually limited to the 4× scaling factor. In [40], results are presented for the 8× scaling factor but considering only the Set5 and Set14 datasets. We believe that performing experiments addressing not only high scaling factors (e.g., 8×) but also datasets of different domains is very important to better identify the most adequate image SR approaches.

Image quality assessment (IQA) metrics (methods) are roughly divided into two categories: full-reference (FR-IQA) and no-reference (NR-IQA) [41]. In FR-IQA, we evaluate the similarity between a distorted image and a given reference image, and classical metrics which have been extensively used are peak signal-to-noise ratio (PSNR) [11] and structural similarity index measure (SSIM) [42]. On the other hand, NR-IQA metrics are proposed to assess image quality without a reference image, being more suitable for perceptual quality. Natural image quality evaluator (NIQE) [43] and perception index (PI) [35] are two traditional metrics. However, recent metrics can be devised based on DNNs, as presented in the New Trends in Image Restoration and Enhancement (NTIRE) workshop held in 2022 at the Conference on Computer Vision and Pattern Recognition (CVPR 2022) [41]. And, for the first time, NTIRE 2022 Challenge on Perceptual Image Quality Assessment had a track addressing NR-IQA. We believe that for evaluating approaches in a completely “blind” setting, NR-IQA metrics are more interesting since they do not demand reference images, only the LR ones.

In this article, we present a high-scale (8×) controlled experiment which evaluates five recent DL techniques tailored for blind image SR: Adaptive Pseudo Augmentation (APA) [44], Blind Image SR with Spatially Variant Degradations (BlindSR) [45], Deep Alternating Network (DAN) [46], FastGAN [47], and Mixture of Experts Super-Resolution (MoESR) [48]. Relying basically on public sources, we adapt and create 14 LR image datasets (each one with 100 samples) from five different broader domains: Aerial, Fauna, Flora, Medical, and Satellite. Another distinctive characteristic of our evaluation is that some of the DL approaches were designed for single-image SR but others not.

Two NR-IQA metrics were selected, being the classical NIQE and the recent vision transformer(ViT)-based multi-dimension attention network for no-reference image quality assessment (MANIQA) score [49], to assess the techniques. The MANIQA model was the winner of the NTIRE 2022’s NR-IQA track obtaining a performance considerably higher than classical strategies, such as PI and NIQE.

The contributions of this study are:

- We design and execute a controlled experiment considering five recent blind image SR approaches and focusing on a specific large scaling factor (8×). Note that we decided to present a more detailed analysis of the results, trying to explain in more depth the behaviours of the approaches. We also perform a correlation analysis taking into account the HR images produced by the two best DL techniques. We believe that independent and unbiased evaluations are important to indicate the most suitable approaches to be selected by professionals who need to work with image SR in their practical settings;

- We adapt and create 14 LR image datasets from five different broader domains: Aerial, Fauna, Flora, Medical, and Satellite. We believe that making available these datasets to the community, obtained from quite distinct domains, is interesting to provide other possibilities to evaluate the blind image SR techniques considering not only single-image but non-single-image techniques (we had indeed done this);

- We consider a recent DNN-based NR-IQA metric (MANIQA score) in addition to a classical one (NIQE), and present some remarks by using such metrics.

This article is structured as follows. Section 2 briefly presents the theoretical background and related work. In Section 3, we show in detail the design of our experiment. Results are in Section 4 while Section 5 discusses some important points. In Section 6, conclusions and feature directions are pointed out.

2. Background

This section presents an overview of the theoretical background related to image SR. More details can be seen elsewhere [11,17]. At the end, we also discuss about related work. The goal of image SR is to recover the corresponding HR images from the LR images. The LR image, , is usually modelled as the output of the following degradation

where means a degradation mapping function, is the corresponding HR image, and represents the parameters of the degradation process. When the degradation process ( and ) is unknown, which is very common to happen, and only LR images are available for the techniques, we have the so called blind image SR. In this case, the idea is to recover an HR approximation, , of the ground truth HR image, , from the LR image as presented below

where is the SR model and are the parameters of .

Despite of the fact that the degradation process is usually unknown, several studies model the degradation as follows

where ↓ is a downsampling operation and s is a scaling factor. But, some studies [50] do such a degradation modelling as presented below

where means the convolution between a blur kernel, , and the HR image, , and s is a scaling factor. Moreover, is some additive white Gaussian noise with standard deviation .

2.1. Metrics

As previously mentioned, there are several metrics for IQA, being full-reference (FR-IQA) or without relying on a reference (NR-IQA). We present here a brief discussion about the two NR-IQA metrics we have considered in this research: NIQE [43] and MANIQA score [49].

The main motivation to develop NIQE is that, at the time it was proposed, NR-IQA models required knowledge about anticipated distortions in the form of training samples and corresponding human opinion scores. Thus, NIQE is a completely blind approach which only uses measurable deviations from statistical regularities observed in natural images, without training on human-rated distorted images and, hence, without any exposure to distorted images. The idea is to construct a “quality aware” collection of statistical features based on a successful space domain natural scene statistic (NSS) model.

NIQE is derived by computing 36 identical NSS features from patches of the same size, , from the image to be analysed, fitting them with a multivariate Gaussian model (MVG) [51], then comparing its MVG fit to the natural MVG model. They considered a patch of dimension (resolution) , although other dimensions were also evaluated. The quality of the distorted image is expressed as the distance between the quality aware NSS feature model and the MVG fit to the features extracted from the distorted image, as shown below

where and are the mean vectors of the natural MVG model and the distorted image’s MVG model, respectively. Furthermore, and are the covariance matrices of the natural MVG model and the distorted image’s MVG model, respectively. Thus, the lower the NIQE value, the better the perceptual quality of the generated HR image.

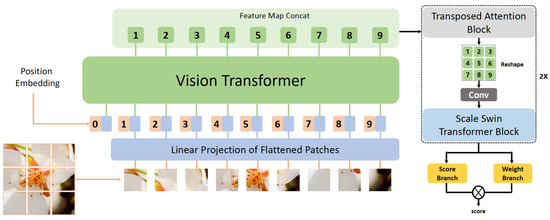

While NIQE is a very traditional metric, the MANIQA score is a very recent one. Top approach of the NTIRE 2022’s NR-IQA track [41], the motivation for the development of the MANIQA model and its corresponding score is that NR-IQA metrics/methods are limited when assessing images created by GAN-based image restoration algorithms [26,52]. As shown in Figure 1, the MANIQA model consists of four components: feature extractor using ViT [53], transposed attention block, scale swin transformer block, and a dual-branch structure for patch-weighted quality prediction.

Figure 1.

The MANIQA model. The score is the metric related to the image. Source: adapted from [49].

In more detail, a distorted image is cropped into patches of dimension 8 × 8. Then, the patches are inputted into the ViT for extracting the features. Transposed attention block and scale swin transformer block are used to strengthen the global and local interaction. A dual-branch structure is proposed for predicting the weight and score of each patch. Notice that the higher the MANIQA score, the better the perceptual quality of the generated HR image.

2.2. Related Work

This study is a controlled experiment within blind image SR via DL/DNNs. Basically every study within blind image SR presents experimental evaluations and, to a lesser or greater extent, they are related to ours. As mentioned in Section 1, even if there are many techniques and experiments proposed boosted by DL techniques, as corroborated by secondary studies [11,17], in general the studies do not consider high scaling factors such as 8×. When there are exceptions [11,40], there is no significant diversity of images and feature spaces to proper evaluate the image SR approaches, taking into account quite distinct broader domains such as medicine images, images obtained by satellites via sensors with different characteristics, and images more “usual” like those of animal’s faces.

It is worth noting that dealing with this range of different domains is important to better classify the techniques in terms of their performances. In other words, the wider/general the assessments are, the better. Furthermore, independent evaluations, like the one presented in this article, are valuable to guide professionals in making the correct decisions in choosing the most appropriate blind image SR solution. Our study addresses all these previous points and these are the main differences between our effort and other already published articles.

3. Experiment Design

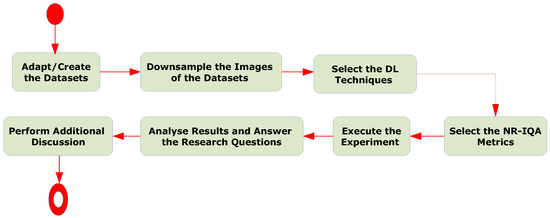

In this section, we describe the main design options of the controlled experiment to assess the performance of the five DL techniques for blind image SR: APA, BlindSR, DAN, FastGAN, and MoESR. Figure 2 presents the workflow (activity diagram) related to this study as a whole. Such a figure emphasises the main steps, detailed below, related to the design of the experiment and ends with an additional discussion of relevant points observed when carrying out the experiment (also detailed below).

Figure 2.

The workflow of this study.

3.1. Research Questions and Variables

The research questions (RQs) related to this experiment are:

- RQ_1—Which out of the five algorithms for blind image SR is the best regarding the metrics NIQE and MANIQA score? And which can be considered the best overall?

- RQ_2—Does the two top approaches present similar behaviours when deriving HR images?

The motivation for RQ_1 is self-explained. Regarding RQ_2, the idea here is to perceive whether the two best approaches present similar behaviours. In other words, are the images detected as having the best, as well as the worst, quality perceptions, based on the MANIQA scores, somewhat “common” to both best DL techniques? Our goal here is to see whether the best algorithms “agree” in terms of the HR images they create based only on the LR ones. The independent variables are the DL models. The dependent variables are the values of the metrics, i.e., NIQE and MANIQA score.

3.2. Datasets

The 14 datasets comprise five different broader domains: Aerial, Fauna, Flora, Medical, and Satellite. Note that the Aerial broader domain means data/images obtained at altitudes lower than 100 km (62 miles; Kármán Line) above the mean sea level. For example, images obtained by sensors attached/embedded in airplanes or unmanned aerial vehicles (drones). The Satellite broader domain relates to the space and it implies data/images obtained from sensors, usually onboard satellites, which are at a minimum altitude of 100 km above the mean sea level.

Table 1 and Table 2 present details about the datasets we created based on publicly released images. In Table 2, note that the original resolution of the images in the datasets are diverse. Some source datasets, like condoaerial and massachbuildings, are formed by HR images where all samples have the same resolution. The plantpat dataset has HR images too but there are several different resolutions. Others like catsfaces and isaid have HR, LR, and even medium resolution images. Three Satellite datasets, amazonia1, cbers4a, and deepglobe, are composed only by LR images and all have the same resolution.

Table 1.

Datasets: description and source.

Table 2.

Datasets: original resolution of the images.

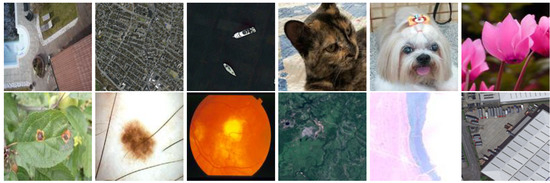

Given the different domains and image resolutions (in some cases, as shown in Table 2, we just had at our disposal LR images), we therefore decided to default the input LR images to a resolution of 128 × 128 pixels. Thus, we downsampled the images which have higher resolution than that based on the bicubic interpolation method as many others have been doing [11,17]. Figure 3 presents some LR images from the datasets we created.

Figure 3.

LR images from our datasets. Top row, left to right: condoaerial, massachbuildings, ships, catsfaces, dogsfaces, flowers; Bottom row, left to right: plantpat, melanomaisic, structretina, amazonia1, cbers4a, isaid.

Note that some criticise doing such a resizing because real-world cameras actually accomplish a series of operations (demosaicing, denoising, …) to finally produce 8-bit Red-Green-Blue (RGB) images [17]. Thus, RGB images have lost lots of original signals, becoming significantly different from the original images taken by the camera. Therefore, it would not be interesting to directly use the manually downsampled RGB images obtained via, for instance, a bicubic interpolation method for image SR.

However, we must emphasise that our main goal is to perform an experiment using DL techniques for blind image SR, based on different domains, in order to provide some sort of recommendation to professionals who need to choose a technique that best fits their needs. Although the issues cited earlier exist, they impact equally all the DL techniques and hence they do not compromise our analysis.

In addition to standardising the resolution of the LR input images, we also considered the same small number of images in the datasets: 100 samples. There are more details about this point in the next section.

3.3. Amount of Required Images

Taking into account the amount of required images, recent DL techniques for image SR are created assuming a single image, known as single-image SR, but they can also be devised assuming that there are a few (limited) number of samples, which we can call few-shot image SR. Note that, here, we consider that few-shot SR assumes few but more than one image. There are also other possibilities, like zero-shot SR presented by [54], where authors coped with unsupervised SR by training image-specific SR networks at test time rather than training a model on huge datasets.

Traditionally, when researchers propose a new single-image SR technique, they naturally compare their approach to other single-image SR strategies. However, it would be nice to compare single-image SR techniques to non-single-image ones to perceive whether it is advantageous to consider approaches which rely on a unique image or, eventually, it is better to adopt solutions which require more than one image but not so many of them.

Thus, three of the selected techniques were designed addressing single-image SR, i.e., BlindSR, DAN, and MoESR. The other two, APA and FastGAN, are GAN-based approaches requiring a few samples indeed and were not conceived for single-image SR. Since the two latter did require more than one sample but not too many, we limited the size of the datasets to 100 LR images, as mentioned in the previous section. In Section 3.4, we provide an overview of such DL strategies.

All runnings were performed using a Bull Sequana X1120 computing node of the SDumont supercomputer (https://sdumont.lncc.br/machine.php?pg=machine (accessed on 23 July 2023)), where such a node has 4× NVIDIA Volta V100 graphics processing units (GPUs). Each run was limited to four days being considered the latest model when the execution exceeded this time.

3.4. DL Techniques

In this section, we briefly describe the selected DL techniques starting with the single-image approaches. In [45], authors proposed a framework that can achieve blind image SR in a fully automated manner, while also meeting the practical scaling needs of video production. We name it here as BlindSR and such a framework is composed of three main components. The first one is a degradation-aware SR network used to generate an HR image based on a LR input image and the corresponding blur kernel. Secondly, a kernel discriminator is trained to analyse the output HR image and predict errors caused by incorrect blur kernel input. Finally, an optimisation procedure aims to recover both the degradation kernel and the HR image by minimising the predicted error using their kernel discriminator.

DAN solves the blind image SR problem via an alternating optimisation algorithm which restores an HR image and estimates the corresponding blur kernel alternately [46]. Thus, these two subproblems are handled both via convolutional neural modules namely Restorer and Estimator, respectively. More specifically, the Restorer restores an HR image based on the predicted Estimator’s kernel, and the Estimator estimates a blur kernel with the help of the restored HR image.

Some blind SR techniques train a unique degradation-aware network (for multiple kernels) on external datasets like BlindSR described above [45]. But there are performance problems with these approaches. There are also self-supervised techniques [55] but they are usually costly and there is limited information to learn from a single image. Aiming at benefiting from the best characteristics of both types of solution, MoESR [48] was proposed considering different experts for different degradation kernels. For every input image, the technique predicts the degradation kernel and super-resolve the LR image using the most adequate kernel-specific expert. To predict the degradation kernel, they use two components in combination. Image Sharpness Evaluator (ISE) assesses the sharpness of the images generated by the experts. These evaluations are used by the Kernel Estimation Network (KEN) to estimate the kernel and select the best pretrained expert network.

Regarding the non-single-image techniques, the greatest motivation to create APA [44] is related to the main challenge in training GANs with limited (few) data: the risk of discriminator overfitting which can lead to unstable training dynamics [56,57]. To deal with this issue, the technique called Adaptive Pseudo Augmentation, or APA, regularises the discriminator without introducing any external augmentations or regularisation terms. Unlike previous approaches that relied on standard data augmentations [56,57], APA leverages the generator within the GAN itself to provide the augmentation, a more natural way of regularisation of the overfitting of the discriminator. In accordance with the authors, the APA approach is simple and more adaptable to different settings and training conditions than model regularisation, without requiring manual tuning.

Other few-shot image SR solution and based on GANs is FastGAN [47]. Its authors were driven by the realisation that training GANs on high-fidelity (HR) images often necessitates a vast number of training images and large-scale GPU clusters. To address the challenge of few-shot image synthesis via GAN with minimal computing costs, FastGAN was proposed as a lightweight GAN architecture which produces high-quality results at a resolution of 1024 × 1024 pixels. Their technique involves incorporating two techniques: a skip-layer channel-wise excitation module and a self-supervised discriminator trained as a feature-encoder.

3.5. Metrics

As we have already mentioned, NIQE and the MANIQA score were the selected metrics. NIQE was calculated considering the HR images (1024 × 1024 pixels) generated by the techniques. However, according to its authors, the MANIQA model [49] is best suited for 224 × 224 pixel images. Thus, we downsampled the created HR image (1024 × 1024 pixels) to a resolution of 224 × 224 pixels in order to calculate the MANIQA score.

Note that such a degradation approach accomplished to calculate the MANIQA score was the same for all generated HR images and, therefore, there is no favouring of a certain technique in relation to the obtained value. Furthermore, we got the MANIQA score considering a subset of the datasets and HR images (1024 × 1024) and we did not notice a great difference in the scores compared to when we used the reduced images (224 × 224). Thus, we followed the authors’ suggestions and considered the images in the lowest resolution (224 × 224) as input for calculating the MANIQA score.

As shown in Table 2, and as we have already pointed out, the original images of some datasets are HR ones. But there are datasets which have only original LR images and not the corresponding HR samples. In addition to that, NR-IQA metrics are more in line with the reality when we deal with a blind setting. These are the reasons to select only NR-IQA metrics for our experiment.

4. Results

In this section, we present the results of our experiment considering the research questions we have defined earlier.

4.1. RQ_1

4.1.1. NIQE

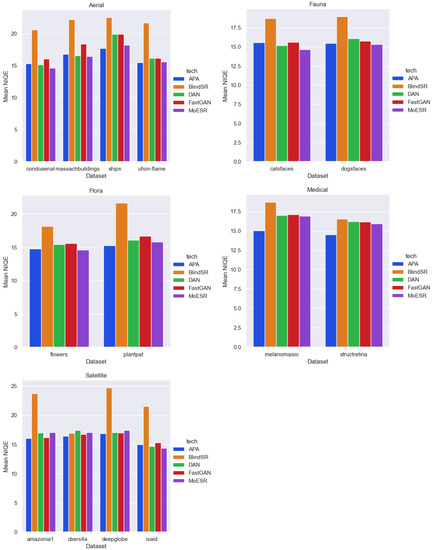

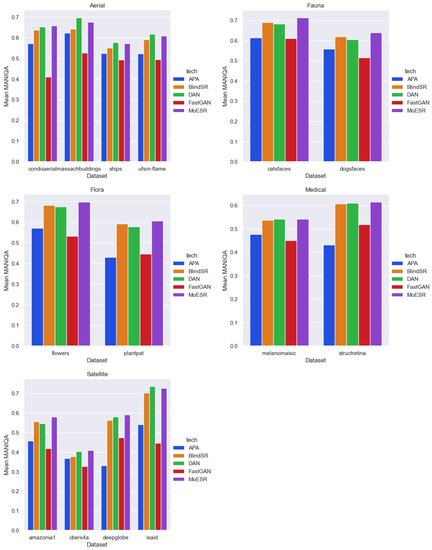

Table 3 presents the NIQE values in the perspective of the broader domain. In this case, we consider the mean NIQE values for all the datasets of a certain domain where the best (minimum) and worst (maximum) values, and the techniques which produced them, are shown. On the other hand, Table 4 presents a finer analysis per dataset and also considering the mean NIQE values. Recall that the lower the NIQE value, the better the perceptual quality. Figure 4 shows all mean NIQE values considering all broader domains and datasets.

Table 3.

Mean NIQE values: broader domains.

Table 4.

Mean NIQE values: datasets.

Figure 4.

Mean NIQE values: all broader domains and datasets. From top left: Aerial, Fauna, Flora, Medical, and Satellite.

Results show that if we consider the broader domain (Table 3), MoESR was the most outstanding approach being the best in four out of the five domains. Only in the Medical domain APA was the top technique. Moreover, BlindSR was the worst strategy with the maximum NIQE values for all domains. However, in the analysis per dataset (Table 4), APA was the best technique obtaining the minimum NIQE values in eight out of 14 datasets. MoESR was the best in six of them. BlindSR was, again, the worst of all the techniques.

Since MoESR was the best in one perspective and APA was the winner in the other analysis, we performed an improvement evaluation to reach a final decision in accordance with the NIQE value. In other words, the point is not only to say that a DL technique is better than the other but how much better it is. The improvement metric, , is calculated as follows

where B and W are the best and worst value of the metric, respectively, comparing both techniques under the same dataset. In Table 5, we show the improvement of MoESR over APA regarding NIQE where in all datasets in this table, MoESR was superior than APA. As highlighted in bold, the catsfaces dataset was the one where MoESR got the highest improvement (6.472). In this case, and . Hence, to know the APA’s NIQE (W), we calculate:

Table 5.

Improvement of MoESR over APA.

The idea is that the improvement is the additional gain that the best approach has compared to the worst one. This is because the lower the NIQE value the better.

In Table 6 is the other way around, where we can see the improvement of APA over MoESR. The reasoning is the same as presented in Equations (6) and (7). Thus, the highest improvement (12.489) of APA over MoESR was obtained in the melanomaisic dataset. Note that this improvement of APA is almost twice the best improvement of MoESR. Furthermore, the average improvement () is also higher favouring APA over MoESR. Thus, based on the NIQE metric, the best approach was APA followed by MoESR. The worst was BlindSR.

Table 6.

Improvement of APA over MoESR.

4.1.2. MANIQA

In Table 7, the MANIQA scores are shown in the perspective of the broader domain while Table 8 presents the per dataset analysis. As previously, we show the mean values of the metric. Recall that the higher the MANIQA score, the better the perceptual quality. Figure 5 shows all mean MANIQA scores considering all broader domains and datasets.

Table 7.

Mean MANIQA scores: broader domains.

Table 8.

Mean MANIQA scores: datasets.

Figure 5.

Mean MANIQA scores: all broader domains and datasets. From top left: Aerial, Fauna, Flora, Medical, and Satellite.

In the broader domain perspective, MoESR was the best (three out of five top results) followed by DAN (two best positions). Unlike the NIQE metric, where APA was considered the best DL technique overall, it performed poorly in accordance with the MANIQA score, occupying the penultimate place (in two domains, Flora and Medical, APA was the worst approach). FastGAN was the worst of all the techniques. Again unlike the results presented in the previous section, here we have complete agreement between the broader domain and the per dataset perspectives. Thus, considering each dataset on its own, MoESR got again the top place (nine best MANIQA scores) while DAN was the second best technique (five best MANIQA scores). Likewise, APA got the penultimate place (three worst MANIQA scores) and FastGAN was the worst of all the DL techniques.

Despite such an agreement, we accomplished the improvement analysis to check these results considering the two best DL models: MoESR and DAN. But, we slightly changed the way to calculate the improvement metric as shown below

where B and W are the best and worst value of the metric, respectively, comparing both techniques under the same dataset. And now, the idea is that the improvement is the additional gain that the worst approach needs to reach the best one. This is because the higher the MANIQA score the better. Thus, we use the following equation:

Table 9 presents the improvement of DAN over MoESR while Table 10 shows the opposite (MoESR over DAN). As we can see in bold in both tables, the highest improvement of MoESR (6.069) is almost twice the highest due to DAN (3.245). Moreover, MoESR’s average improvement is also better than the DAN’s average improvement. These results confirm the previous conclusions and MoESR was the best technique followed by DAN.

Table 9.

Improvement of DAN over MoESR.

Table 10.

Improvement of MoESR over DAN.

Considering both metrics, NIQE and MANIQA score, we can state that MoESR was the most outstanding approach. It presented a consistent performance under NIQE, being the second best technique, and, as we have just said, it got the top place based on the MANIQA score. Note that we saw contradictory performances regarding APA where it was the best strategy evaluated via NIQE and almost the worst approach, if we take into account the MANIQA score.

4.2. RQ_2

Question RQ_2 is about the similarity of behaviours between the two best approaches according to the MANIQA score: MoESR and DAN. Here, our intention is to see whether the two best models “agree” in terms of the HR images they create. Thus, we took the 10 images with the best MANIQA scores from both techniques, for each dataset, and generated two sets and , meaning the scores of the images from MoESR and DAN, respectively. Hence, we just calculated the cardinality of a new set () derived from the intersection between the previous two sets:

We also did the same and calculated the cardinality of the set of non-common elements, , such that:

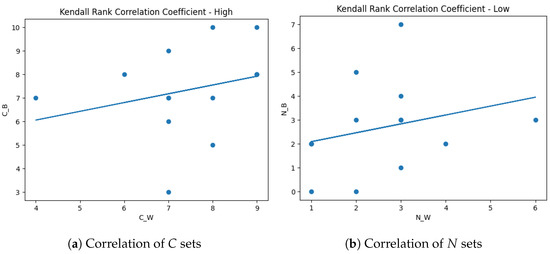

For instance, for the condoaerial dataset, . This implies that seven out of the 10 images with the highest MANIQA scores are common to MoESR and DAN, and, hence, there is an agreement between both techniques regarding these images. Thus, , representing that three out of the 10 images are not common showing disagreement. The average value of the cardinalities of all common sets is while for the non-common case is .

We did the same with the 10 images with lowest scores and created the sets and due to MoESR and DAN, respectively. Likewise, we calculated the cardinality of and for each dataset, just replacing the high sets with the low ones in the Equations (10) and (11). For example, for the amazonia1 dataset, and , meaning that six images with the lowest scores are common and four are not common. The average value of the cardinalities of all common sets is while for the non-common case is .

Finally, we obtained the Kendall Rank Correlation Coefficient (Kendall’s coefficient) for two situations. The first case is considering and and the second situation is based on and . In both cases, showing a good correlation between each pair of variables. Figure 6 shows the correlation in detail where C_W, C_B, N_W, N_B means , , , , respectively.

Figure 6.

Correlation analysis.

The interpretation of these results is that the images detected as having the best, as well as the worst, perceptual qualities, based on the MANIQA scores, are somewhat “common” to both techniques. Hence, we can conclude that both approaches present similar behaviours. It is important to emphasise that the relevance of this analysis is to realise whether the two best approaches generate HR images with better or worse perception qualities in a relatively uniform manner, taking the LR images as input.

5. Discussion

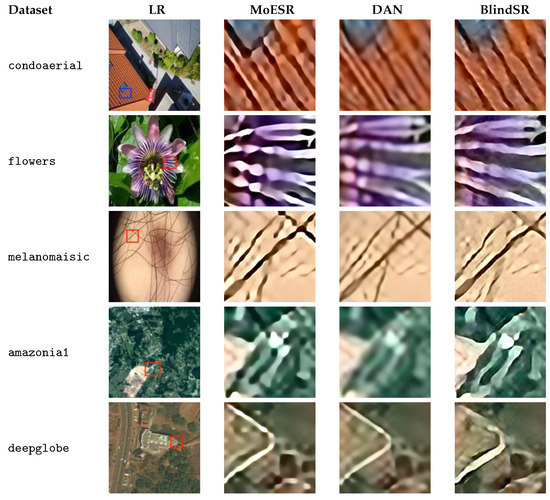

We start this section by stressing some points based on a visual analysis. Figure 7 shows two LR images and pieces of the corresponding HR images generated by the three single-image blind SR techniques. The top HR image generated by DAN, based on an LR image of the isaid Satellite dataset, is the one with the highest MANIQA score (0.850084) of all images. We clearly see that the roof divisions of the building appear more vivid in the image created by DAN. MoESR’s image appears blurred while BlindSR’s image seems to present something like a salt-and-pepper noise.

Figure 7.

Visual analysis: highest and lowest MANIQA scores.

On the other hand, the bottom HR image created by BlindSR is the one with the lowest MANIQA score (0.240234) of all images. The LR image is from the cbers4a Satellite dataset where the scene presents water and land. This is a cloudy scene obtained by the CBERS-4A satellite and it is interesting to realise that such bright images from cbers4a, in general, produced the lowest MANIQA scores (see Figure 5).

Other LR images and the corresponding pieces of HR images created by the three single-image blind SR models are presented in Figure 8. MoESR produced the highest MANIQA score for the top images (condoaerial, flowers, melanomaisic, amazonia1) while DAN produced the highest score for the bottom one (deepglobe).

Figure 8.

Other created HR images by the single-image blind SR approaches.

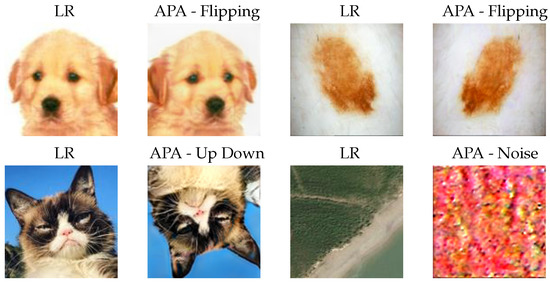

Since APA and FastGAN are GAN-based non-single image techniques, some known issues of GANs appeared here. In order to use APA in a custom dataset, three phases are required: prepare the dataset, training, and inference for generating images. As usual, the training is the more demanded step and APA indeed exceeded the limit we set up (four days) not finishing the training. We considered its latest model for inference. We used 4× NVIDIA Volta V100 GPUs and the datasets are very small (100 samples). It is important to mention that other DL techniques demanded only one out of the four GPUs and the limit was enough for them. Thus, APA is a very “heavy” model. Furthermore, some HR images were flipped and others upside down compared to the source LR images. There are even cases where the images were not indeed generated (it is likely that the images are basically noise).

Figure 9 shows these cases where we have the LR image and the corresponding APA’s HR image. Note that we downsampled the APA images to 128 × 128 pixels for this figure to compare to the LR images. Moreover, the deepglobe image is here just to show a sample of the dataset since APA’s output seems to be basically noise.

Figure 9.

Some issues presented by APA. Top row, left to right: dogsfaces, melanomaisic; Bottom row, left to right: catsfaces, deepglobe.

As for FastGAN, the required phases to use it are training and inference, and its training is considerably faster/lighter than APA. But both FastGAN and APA presented a very known problem related to GANs: mode collapse [58]. It happens when the generator model produces a not very significant set of images that fail to capture the full diversity of the real data distribution. Thus, the fake created samples are quite similar or even identical. In Figure 10, we see some images from FastGAN where mode collapse occurs. Again, we downsampled the HR images for better presentation.

Figure 10.

Mode collapse in FastGAN: condoaerial.

Based on the MANIQA scores (Section 4.1.2), APA and FastGAN were the worst techniques. Thus, we can conclude based on the results of our experiment that, for blind image SR, single-image and non-GAN-based approaches are the best way to go.

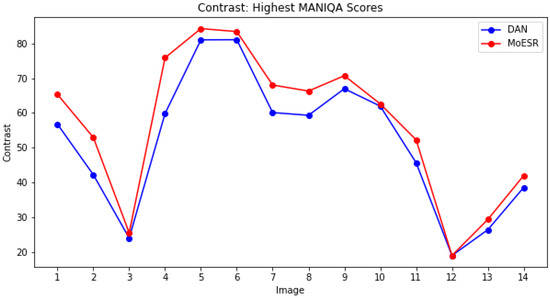

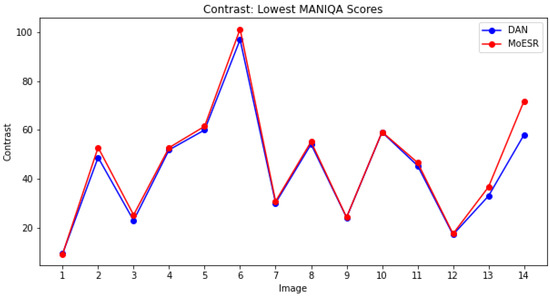

Considering the best two DL techniques in accordance with the MANIQA score, we may say that the HR images created by MoESR are sharper than the ones of DAN. Figure 11 presents the measures of the maximum overall contrast of the images with the highest MANIQA scores taking into account both techniques and the 14 datasets. For instance, for the amazonia1 dataset, the image derived by MoESR has higher MANIQA score than the one of DAN and, thus, we considered the former and the corresponding DAN’s image. As for isaid, DAN’s image got higher MANIQA score and, thus, this image and the corresponding MoESR’s image were analysed. Figure 12 follows the same reasoning but now the images are the ones with the lowest MANIQA scores.

Figure 11.

Highest MANIQA scores: contrast.

Figure 12.

Lowest MANIQA scores: contrast.

Since the higher the maximum overall contrast the sharper the image, we can realise that the images of MoESR are sharper than the ones of DAN. But, note that perceptual quality is not necessarily the same thing as sharpness. An image with higher contrast does not imply that it has a better perceptual quality than one with lower contrast.

A possible explanation for the images created by MoESR being sharper than DAN’s images, and sometimes present oversharpening, is the combination of the perfomances of ISE and KEN. The ISE component is trained to detect blurry or oversharpened regions and predicts errors from the ground-truth image. Since KEN uses the sharpness measures from ISE to estimate the kernel and select the best pretrained model, misleading evaluations of sharpness by ISE may compromise the decision made by the KEN component.

On the other hand, HR images generated by DAN are generally blurry which might lead to a poor perceptual quality. In DAN, the kernel is initialised by Dirac function, and it is also reshaped and then reduced by principal component analysis (PCA) [59]. The kernel is reduced by PCA and, thus, the estimator only needs to estimate the PCA result of the blur kernel. There is naturally loss of information when using PCA for dimensionality reduction, and recent evaluations show that PCA results are not as reliable and robust as it is usually assumed to be [60]. This is a possible explanation for this issue related to DAN.

As mentioned in Section 4, there is not an agreement between the ranking of best and worst techniques considering a classical metric (NIQE) and a DNN-based one (MANIQA score). While APA presented the best performance under NIQE, it is almost the worst solution according to the MANIQA score. Thus, we believe that relying on a more recent NR-IQA metric, like the MANIQA score and which presented quite superior performance [41] than traditional metrics, is more advisable.

Notice that we do not have the mean opinion scores (MOSs) of the HR images as we do not submit them for observers to assign it. But, looking at the HR images generated by all DL techniques for all sets (see the datasets repository of this research), it is not difficult to see that the perception quality of the images as a whole needs to improve. Note that, in MoESR and DAN, the generation of the HR images had to be done in two stages, using the 2× and 4× scaling factors in sequence. In general, there are several blurred images and others with oversharpening. Despite the sophistication of the evaluated DNNs, we believe that new approaches, addressing larger scaling factors, are necessary for the future.

6. Conclusions

Given the significant number of DL techniques/DNNs that are being created at a rapid pace, it is relevant to perform independent and unbiased controlled experiments to suggest the most suitable approaches for professionals. This article is in line with this reasoning, where we presented a large scaling factor (8×) experiment which evaluated recent DL techniques tailored for blind image SR: APA, BlindSR, DAN, FastGAN, and MoESR. Some of the techniques were designed for single-image while others for non-single-image blind SR. In addition to selecting a larger scaling factor, another difference in this study is that we showed a more detailed analysis of the results, also focusing on explaining in depth the behaviours of the approaches. Mostly based on publicly released sources of images, we adapted and created 14 LR image datasets from five different broader domains to provide a significant range of distinct images to the techniques. We also considered two NR-IQA metrics, the classical NIQE and the DNN-based MANIQA score.

Results show that the MoESR model was the most outstanding approach followed by DAN. GAN-based approaches presented classical issues, such as mode collapse and noise, in some cases. The outcomes of our experiment suggest that single-image and non-GAN-based techniques are more promising for blind image SR, although such a conclusion needs more experimentation to be completely confirmed. By visually inspecting the HR images created by the techniques, we may state that, despite the remarkable solutions, new strategies are required for larger scaling factor requirements.

As future directions, we firstly intend to carry out even higher scaling factor experiments, such as 16×. We will also increase not only the amount of blind image SR approaches to assess but also the number of datasets to get more evidence about the conclusions we have made. Other recent NR-IQA metrics will also be considered in these new controlled experiments.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Supporting code (https://github.com/vsantjr/DL_BlindSR (accessed on 23 July 2023)) as well as the datasets (https://www.kaggle.com/datasets/valdivinosantiago/dl-blindsr-datasets (accessed on 23 July 2023)) related to this study are publicly available.

Acknowledgments

This research was developed within the project Classificação de imagens via redes neurais profundas e grandes bases de dados para aplicações aeroespaciais (Image classification via Deep neural networks and large databases for aeroSpace applications—IDeepS). The IDeepS (https://github.com/vsantjr/IDeepS (accessed on 23 July 2023)) project is supported by the Laboratório Nacional de Computação Científica (LNCC/MCTI, Brazil) via resources of the SDumont supercomputer (http://sdumont.lncc.br (accessed on 23 July 2023)). Mateus de Souza Miranda helped in the creation of the amazonia1 and cbers4a datasets.

Conflicts of Interest

The author declares no conflict of interest.

References

- Yamashita, K.; Markov, K. Medical Image Enhancement Using Super Resolution Methods. In Proceedings of the Computational Science—ICCS, Amsterdam, The Netherlands, 3–5 June 2020; Krzhizhanovskaya, V.V., Závodszky, G., Lees, M.H., Dongarra, J.J., Sloot, P.M.A., Brissos, S., Teixeira, J., Eds.; Springer: Cham, Switzerland, 2020; pp. 496–508. [Google Scholar]

- Huang, Y.; Shao, L.; Frangi, A.F. Simultaneous Super-Resolution and Cross-Modality Synthesis of 3D Medical Images Using Weakly-Supervised Joint Convolutional Sparse Coding. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5787–5796. [Google Scholar] [CrossRef]

- Ahmad, W.; Ali, H.; Shah, Z.; Azmat, S. A new generative adversarial network for medical images super resolution. Sci. Rep. 2022, 12, 9533. [Google Scholar] [CrossRef]

- Gupta, R.; Sharma, A.; Kumar, A. Super-Resolution using GANs for Medical Imaging. Procedia Comput. Sci. 2020, 173, 28–35. [Google Scholar] [CrossRef]

- Liborio, J.M.; Melo, C.; Silva, M. Internet Video Delivery Improved by Super-Resolution with GAN. Future Internet 2022, 14, 364. [Google Scholar] [CrossRef]

- Yeo, H.; Jung, Y.; Kim, J.; Shin, J.; Han, D. Neural Adaptive Content-Aware Internet Video Delivery. In Proceedings of the 13th USENIX Conference on Operating Systems Design and Implementation, Carlsbad, CA, USA, 8–10 October 2018; pp. 645–661. [Google Scholar]

- Yeo, H.; Do, S.; Han, D. How will Deep Learning Change Internet Video Delivery? In Proceedings of the HotNets-XVI: Proceedings of the 16th ACM Workshop on Hot Topics in Networks, Palo Alto, CA, USA, 30 November–1 December 2017; pp. 57–64. [Google Scholar]

- Rasti, P.; Uiboupin, T.; Escalera, S.; Anbarjafari, G. Convolutional Neural Network Super Resolution for Face Recognition in Surveillance Monitoring. In Proceedings of the Articulated Motion and Deformable Objects, Palma de Mallorca, Spain, 13–15 July 2016; Perales, F.J., Kittler, J., Eds.; Springer: Cham, Switzerland, 2016; pp. 175–184. [Google Scholar]

- Zhou, E.; Fan, H.; Cao, Z.; Jiang, Y.; Yin, Q. Learning Face Hallucination in the Wild. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 3871–3877. [Google Scholar] [CrossRef]

- Zhu, S.; Liu, S.; Loy, C.C.; Tang, X. Deep Cascaded Bi-Network for Face Hallucination. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 614–630. [Google Scholar]

- Wang, P.; Bayram, B.; Sertel, E. A comprehensive review on deep learning based remote sensing image super-resolution methods. Earth-Sci. Rev. 2022, 232, 104110. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Z.; Yi, P.; Wang, G.; Lu, T.; Jiang, J. Edge-Enhanced GAN for Remote Sensing Image Superresolution. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5799–5812. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Z.; Yi, P.; Jiang, J.; Xiao, J.; Yao, Y. Deep Distillation Recursive Network for Remote Sensing Imagery Super-Resolution. Remote Sens. 2018, 10, 1700. [Google Scholar] [CrossRef]

- Xu, Y.; Luo, W.; Hu, A.; Xie, Z.; Xie, X.; Tao, L. TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images. Remote Sens. 2022, 14, 2425. [Google Scholar] [CrossRef]

- Niu, B.; Wen, W.; Ren, W.; Zhang, X.; Yang, L.; Wang, S.; Zhang, K.; Cao, X.; Shen, H. Single Image Super-Resolution via a Holistic Attention Network. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 191–207. [Google Scholar]

- Zhang, J.; Xu, T.; Li, J.; Jiang, S.; Zhang, Y. Single-Image Super Resolution of Remote Sensing Images with Real-World Degradation Modeling. Remote Sens. 2022, 14, 2895. [Google Scholar] [CrossRef]

- Wang, Z.; Chen, J.; Hoi, S.C.H. Deep Learning for Image Super-Resolution: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3365–3387. [Google Scholar] [CrossRef]

- Duchon, C.E. Lanczos Filtering in One and Two Dimensions. J. Appl. Meteorol. Climatol. 1979, 18, 1016–1022. [Google Scholar] [CrossRef]

- Sun, J.; Xu, Z.; Shum, H.Y. Image super-resolution using gradient profile prior. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar] [CrossRef]

- Xiong, Z.; Sun, X.; Wu, F. Robust Web Image/Video Super-Resolution. IEEE Trans. Image Process. 2010, 19, 2017–2028. [Google Scholar] [CrossRef] [PubMed]

- Egger, J.; Pepe, A.; Gsaxner, C.; Jin, Y.; Li, J.; Kern, R. Deep learning-a first meta-survey of selected reviews across scientific disciplines, their commonalities, challenges and research impact. PeerJ Comput. Sci. 2021, 7, e773. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 184–199. [Google Scholar]

- Kim, J.; Lee, J.; Lee, K. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654. [Google Scholar] [CrossRef]

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140. [Google Scholar] [CrossRef]

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114. [Google Scholar] [CrossRef]

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Cheng, X.; Li, X.; Yang, J.; Tai, Y. SESR: Single Image Super Resolution with Recursive Squeeze and Excitation Networks. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 147–152. [Google Scholar] [CrossRef]

- Bulat, A.; Yang, J.; Tzimiropoulos, G. To Learn Image Super-Resolution, Use a GAN to Learn How to Do Image Degradation First. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 187–202. [Google Scholar]

- Gu, J.; Lu, H.; Zuo, W.; Dong, C. Blind Super-Resolution with Iterative Kernel Correction. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1604–1613. [Google Scholar] [CrossRef]

- Yue, Z.; Zhao, Q.; Xie, J.; Zhang, L.; Meng, D.; Wong, K.K. Blind Image Super-resolution with Elaborate Degradation Modeling on Noise and Kernel. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 2118–2128. [Google Scholar] [CrossRef]

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423. [Google Scholar] [CrossRef]

- Arbeláez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour Detection and Hierarchical Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916. [Google Scholar] [CrossRef]

- Agustsson, E.; Timofte, R. NTIRE 2017 Challenge on Single Image Super-Resolution: Dataset and Study. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1122–1131. [Google Scholar] [CrossRef]

- Blau, Y.; Mechrez, R.; Timofte, R.; Michaeli, T.; Zelnik-Manor, L. The 2018 PIRM Challenge on Perceptual Image Super-Resolution. In Proceedings of the Computer Vision—ECCV 2018 Workshops, Munich, Germany, 8–14 September 2018; Leal-Taixé, L., Roth, S., Eds.; Springer: Cham, Switzerland, 2019; pp. 334–355. [Google Scholar]

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Morel, M.A. Low-Complexity Single-Image Super-Resolution based on Nonnegative Neighbor Embedding. In Proceedings of the British Machine Vision Conference, Surrey, UK, 3–7 September 2012; Bowden, R., Collomosse, J., Mikolajczyk, K., Eds.; BMVA Press: Durham, UK, 2012; pp. 1–10. [Google Scholar]

- Zeyde, R.; Elad, M.; Protter, M. On Single Image Scale-Up Using Sparse-Representations. In Proceedings of the Curves and Surfaces, Avignon, France, 24–30 June 2010; Boissonnat, J.D., Chenin, P., Cohen, A., Gout, C., Lyche, T., Mazure, M.L., Schumaker, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 711–730. [Google Scholar]

- Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5197–5206. [Google Scholar] [CrossRef]

- Yuan, Z.; Tang, C.; Yang, A.; Huang, W.; Chen, W. Few-Shot Remote Sensing Image Scene Classification Based on Metric Learning and Local Descriptors. Remote Sens. 2023, 15, 831. [Google Scholar] [CrossRef]

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep Back-Projection Networks for Super-Resolution. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1664–1673. [Google Scholar] [CrossRef]

- Gu, J.; Cai, H.; Dong, C.; Ren, J.S.; Timofte, R.; Gong, Y.; Lao, S.; Shi, S.; Wang, J.; Yang, S.; et al. NTIRE 2022 Challenge on Perceptual Image Quality Assessment. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 950–966. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212. [Google Scholar] [CrossRef]

- Jiang, L.; Dai, B.; Wu, W.; Loy, C.C. Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 21655–21667. [Google Scholar]

- Cornillère, V.; Djelouah, A.; Yifan, W.; Sorkine-Hornung, O.; Schroers, C. Blind Image Super-Resolution with Spatially Variant Degradations. ACM Trans. Graph. 2019, 38, 1–13. [Google Scholar] [CrossRef]

- Luo, Z.; Huang, Y.; Li, S.; Wang, L.; Tan, T. Unfolding the Alternating Optimization for Blind Super Resolution. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 5632–5643. [Google Scholar]

- Liu, B.; Zhu, Y.; Song, K.; Elgammal, A. Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021. [Google Scholar]

- Emad, M.; Peemen, M.; Corporaal, H. MoESR: Blind Super-Resolution using Kernel-Aware Mixture of Experts. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 4009–4018. [Google Scholar] [CrossRef]

- Yang, S.; Wu, T.; Shi, S.; Lao, S.; Gong, Y.; Cao, M.; Wang, J.; Yang, Y. MANIQA: Multi-dimension Attention Network for No-Reference Image Quality Assessment. arXiv 2022, arXiv:2204.08958. [Google Scholar] [CrossRef]

- Zhang, K.; Zuo, W.; Zhang, L. Learning a single convolutional super-resolution network for multiple degradations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3262–3271. [Google Scholar] [CrossRef]

- Anderson, M.; Adali, T.; Li, X.L. Joint Blind Source Separation With Multivariate Gaussian Model: Algorithms and Performance Analysis. IEEE Trans. Signal Process. 2012, 60, 1672–1683. [Google Scholar] [CrossRef]

- Gu, J.; Shen, Y.; Zhou, B. Image Processing Using Multi-Code GAN Prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3009–3018. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021. [Google Scholar]

- Shocher, A.; Cohen, N.; Irani, M. Zero-Shot Super-Resolution using Deep Internal Learning. In Proceedings of the The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3118–3126. [Google Scholar] [CrossRef]

- Bell-Kligler, S.; Shocher, A.; Irani, M. Blind Super-Resolution Kernel Estimation Using an Internal-GAN. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alche-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32. [Google Scholar]

- Karras, T.; Aittala, M.; Hellsten, J.; Laine, S.; Lehtinen, J.; Aila, T. Training Generative Adversarial Networks with Limited Data. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 12104–12114. [Google Scholar]

- Zhao, S.; Liu, Z.; Lin, J.; Zhu, J.-Y.; Han, S. Differentiable Augmentation for Data-Efficient GAN Training. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 7559–7570. [Google Scholar]

- Srivastava, A.; Valkov, L.; Russell, C.; Gutmann, M.U.; Sutton, C. VEEGAN: Reducing Mode Collapse in GANs using Implicit Variational Learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Von Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]

- Mackiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342. [Google Scholar] [CrossRef]

- Elhaik, E. Principal Component Analyses (PCA)-based findings in population genetic studies are highly biased and must be reevaluated. Sci. Rep. 2022, 12, 14683. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).