Advantage Actor-Critic for Autonomous Intersection Management

Abstract

1. Introduction

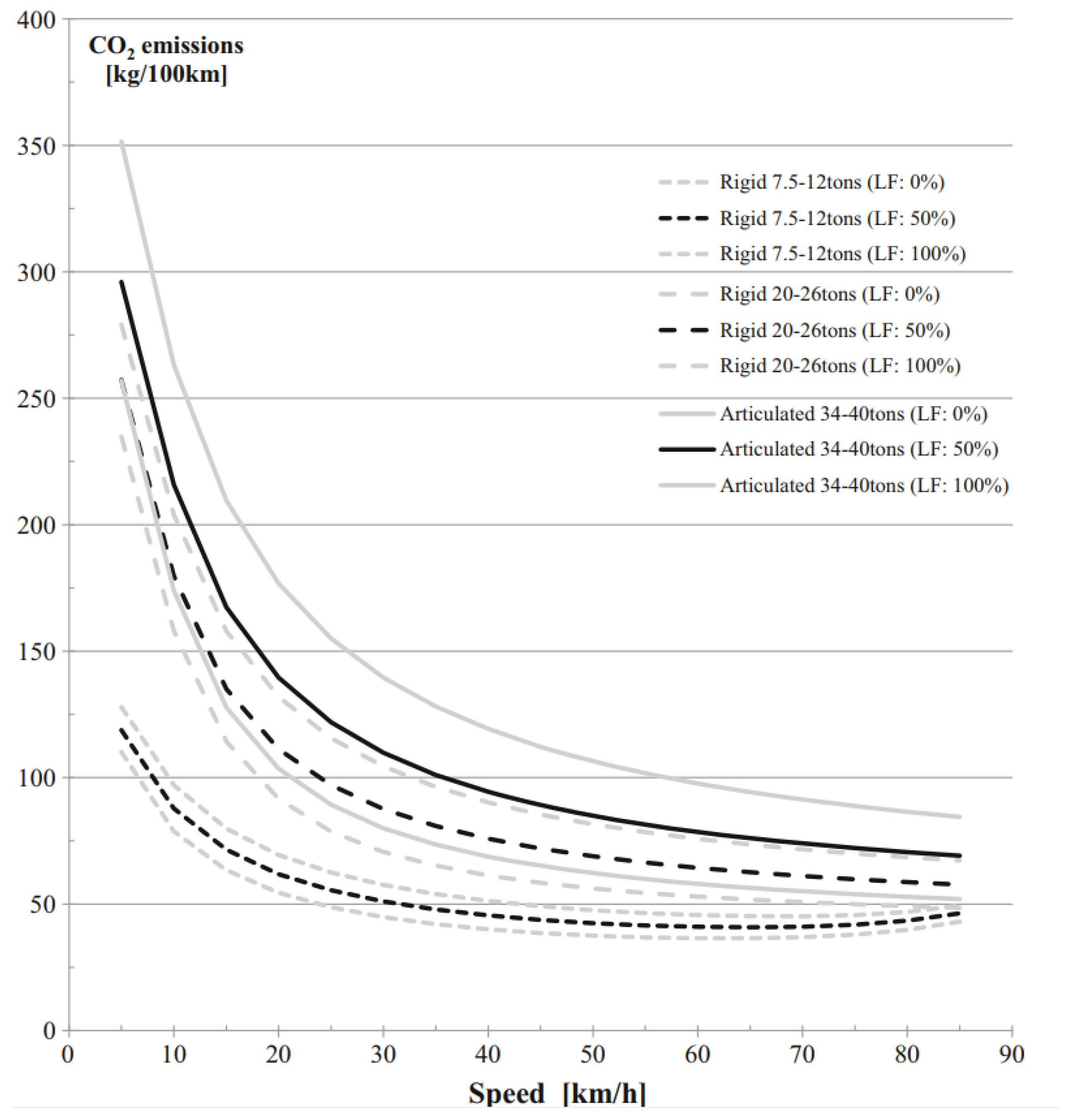

1.1. Background

1.2. Motivation

2. Related Work

2.1. Autonomous Intersection Management

2.2. Resource Reservation Methods

2.3. Trajectory Planning Methods

2.4. Reinforcement Learning for Traffic Control

2.5. Intersection Throughput and Fairness

3. Autonomous Intersection Management

- Priority Assignment Model: The model maintains a priority list and a waiting list for assigned and unassigned vehicles, respectively. It receives requests from vehicles and stores them in the waiting list. At each time step, the priority assignment model assigns priority to the vehicles in the lanes selected by the agent and then adds those vehicles to the priority list. In general, this model applies to several types of intersection: crossroads, roundabouts, misaligned intersections, ramp merges, deformed intersections, X-intersections, T-intersections, and Y-intersections.

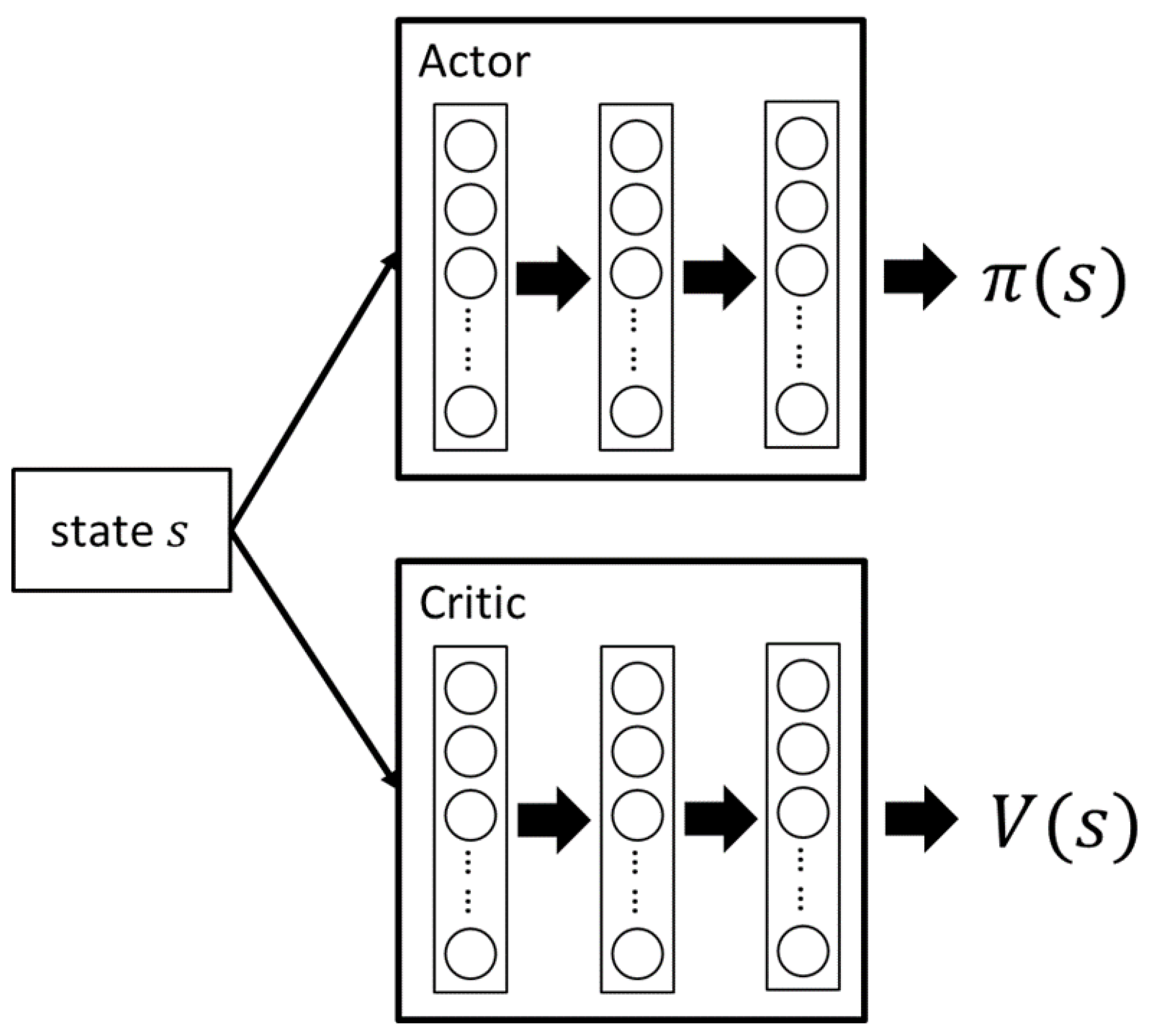

- Agent: The agent carries out the priority assignment policy by selecting which vehicles may cross the intersection. It continually revises this policy based on previous experience. At every time step, the agent collects state information from the environment, chooses the vehicles to pass, and sends this action to the priority assignment model. After priority assignment, the action is executed and the vehicles allowed to pass receive the priority needed to cross the intersection. The environment then transitions to a new state and the agent receives a reward. The main objective of the agent in this study is to learn an effective, ideally optimal, policy that maximizes its cumulative reward.

- Environment: The environment refers to the information obtained by monitoring the traffic. It consists of the queue size, the vehicle waiting times, and the number of vehicles inside the intersection.
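The sketch below shows one way the priority assignment model, the agent's action, and the environment state could fit together. It is a minimal illustration that rests on several hypothetical choices, none of which come from the authors' implementation. The names `Vehicle` and `PriorityAssignmentModel` are illustrative. Queue sizes and wait times are aggregated per lane. The agent's action is represented as a set of lane labels.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Vehicle:
    # Hypothetical vehicle record: identifier, incoming lane label, accumulated wait time (s).
    vid: int
    lane: str
    wait_time: float = 0.0


@dataclass
class PriorityAssignmentModel:
    # Vehicles that have requested to cross but have not been granted priority yet.
    waiting: List[Vehicle] = field(default_factory=list)
    # Vehicles that have been granted priority to cross the intersection.
    priority: List[Vehicle] = field(default_factory=list)

    def request(self, vehicle: Vehicle) -> None:
        """Store an incoming crossing request in the waiting list."""
        self.waiting.append(vehicle)

    def assign(self, lanes: Set[str]) -> List[Vehicle]:
        """Grant priority to every waiting vehicle in the lanes chosen by the agent."""
        granted = [v for v in self.waiting if v.lane in lanes]
        self.waiting = [v for v in self.waiting if v.lane not in lanes]
        self.priority.extend(granted)
        return granted

    def observe(self, inside: int) -> Dict[str, object]:
        """Assumed state layout: per-lane queue size, per-lane total wait, vehicles inside."""
        queue_size: Dict[str, int] = {}
        total_wait: Dict[str, float] = {}
        for v in self.waiting:
            queue_size[v.lane] = queue_size.get(v.lane, 0) + 1
            total_wait[v.lane] = total_wait.get(v.lane, 0.0) + v.wait_time
        return {"queue": queue_size, "wait": total_wait, "inside": inside}


model = PriorityAssignmentModel()
for vid, lane in enumerate(["W", "E", "W", "N"]):
    model.request(Vehicle(vid, lane, wait_time=float(vid)))
state = model.observe(inside=0)  # what the agent would see before acting
granted = model.assign({"W"})    # hypothetical action: let the west lane pass
```

In this example, the hypothetical action `{"W"}` moves both west-lane vehicles from the waiting list to the priority list. The east and north requests stay queued for a later time step.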

4. Advantage Actor-Critic for Autonomous Intersection Management

4.1. State Space

4.2. Action Space

4.3. Reward

4.4. Learning

Algorithm 1: The A2C-based Intersection Management Algorithm.

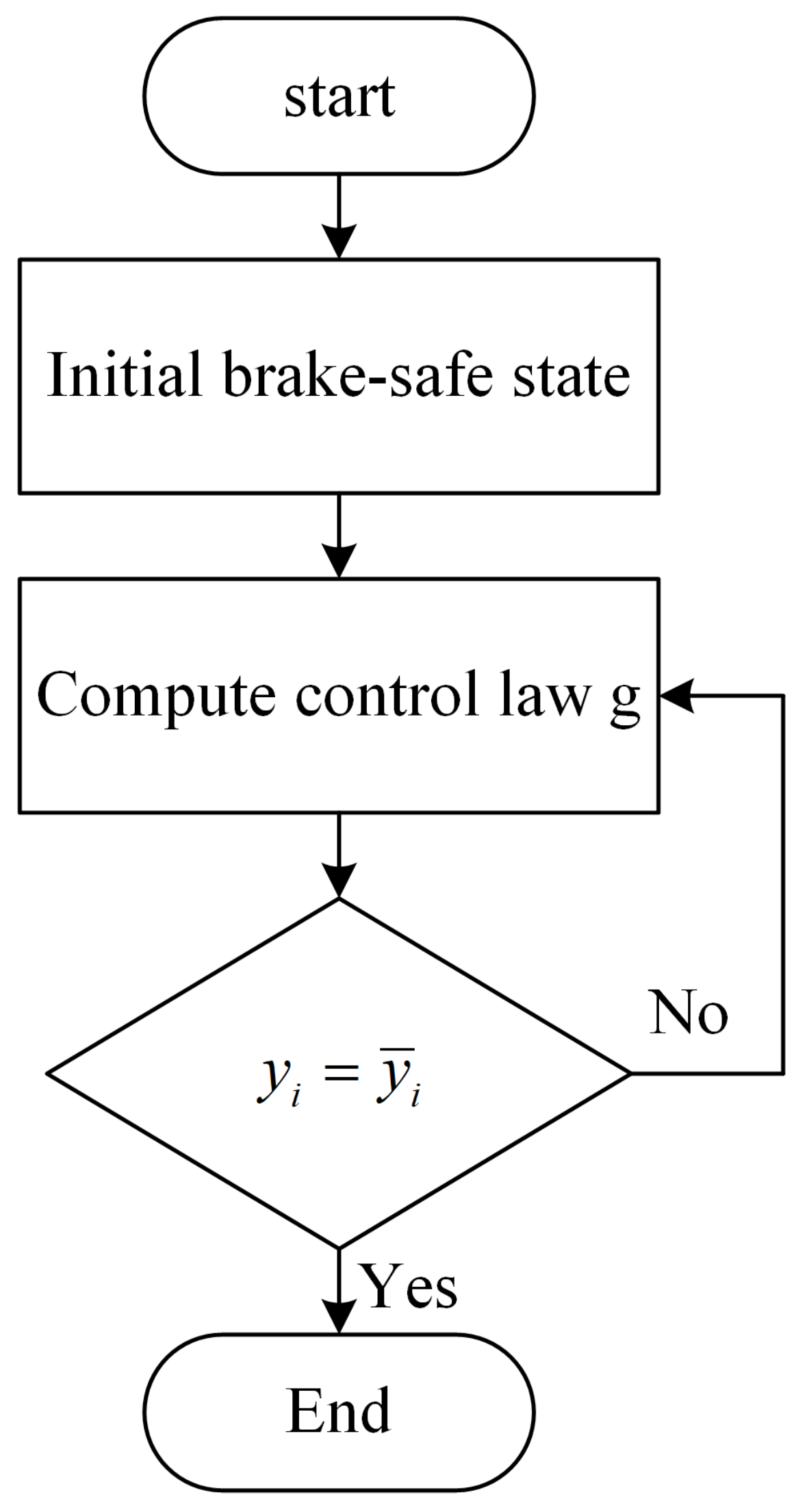
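The body of Algorithm 1 is not reproduced in the text. As a generic illustration, the sketch below covers the advantage actor-critic update it builds on, which is the synchronous counterpart of the asynchronous method in [7]. For one rollout, it computes:

- discounted n-step returns bootstrapped from the critic's value of the last state;
- the advantages $A_t = R_t - V(s_t)$;
- the resulting actor and critic loss values.

It uses the discount factor of 0.99 listed in the simulation parameters. The function names, the categorical policy over logits, and the omission of an entropy bonus are assumptions of this sketch, not the authors' code. It only evaluates the losses; a real implementation would minimize them by gradient descent on the actor and critic networks.

```python
import math
from typing import List, Sequence, Tuple

GAMMA = 0.99  # discount factor from the simulation parameter table


def n_step_returns(rewards: Sequence[float], bootstrap_value: float,
                   gamma: float = GAMMA) -> List[float]:
    """Discounted returns R_t = r_t + gamma * R_{t+1}, seeded with V(s_T)."""
    returns = []
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-probabilities of a categorical policy."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def a2c_losses(rewards: Sequence[float], values: Sequence[float],
               bootstrap_value: float, logits: Sequence[Sequence[float]],
               actions: Sequence[int],
               gamma: float = GAMMA) -> Tuple[float, float, List[float]]:
    """Return (actor loss, critic loss, advantages), averaged over one rollout."""
    returns = n_step_returns(rewards, bootstrap_value, gamma)
    advantages = [R - v for R, v in zip(returns, values)]
    n = len(rewards)
    # Policy-gradient term: -log pi(a_t | s_t) * A_t, with the advantage held constant.
    actor = -sum(log_softmax(l)[a] * adv
                 for l, a, adv in zip(logits, actions, advantages)) / n
    # The critic regresses V(s_t) toward the n-step return (mean squared advantage).
    critic = sum(adv ** 2 for adv in advantages) / n
    return actor, critic, advantages
```

Take a rollout with rewards `[1, 0, 1]`, critic values of 0.5 everywhere, and a bootstrap value of 0. The returns are approximately `[1.9801, 0.99, 1.0]` and the advantages approximately `[1.4801, 0.49, 0.5]`.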

4.5. Brake-Safe Control

5. Experiments and Results Discussion

5.1. Simulation Environment

5.2. Simulation Setup

5.3. Simulation Results

- Average Wait Time (AWT): If the velocity of a vehicle in an incoming lane is below 0.1 m/s, we regard the vehicle as being in the wait queue. The AWT is given by $\mathrm{AWT} = \frac{1}{N}\sum_{i=1}^{N} w_i$, where $w_i$ denotes the wait time of vehicle $i$ and $N$ denotes the number of waiting vehicles.

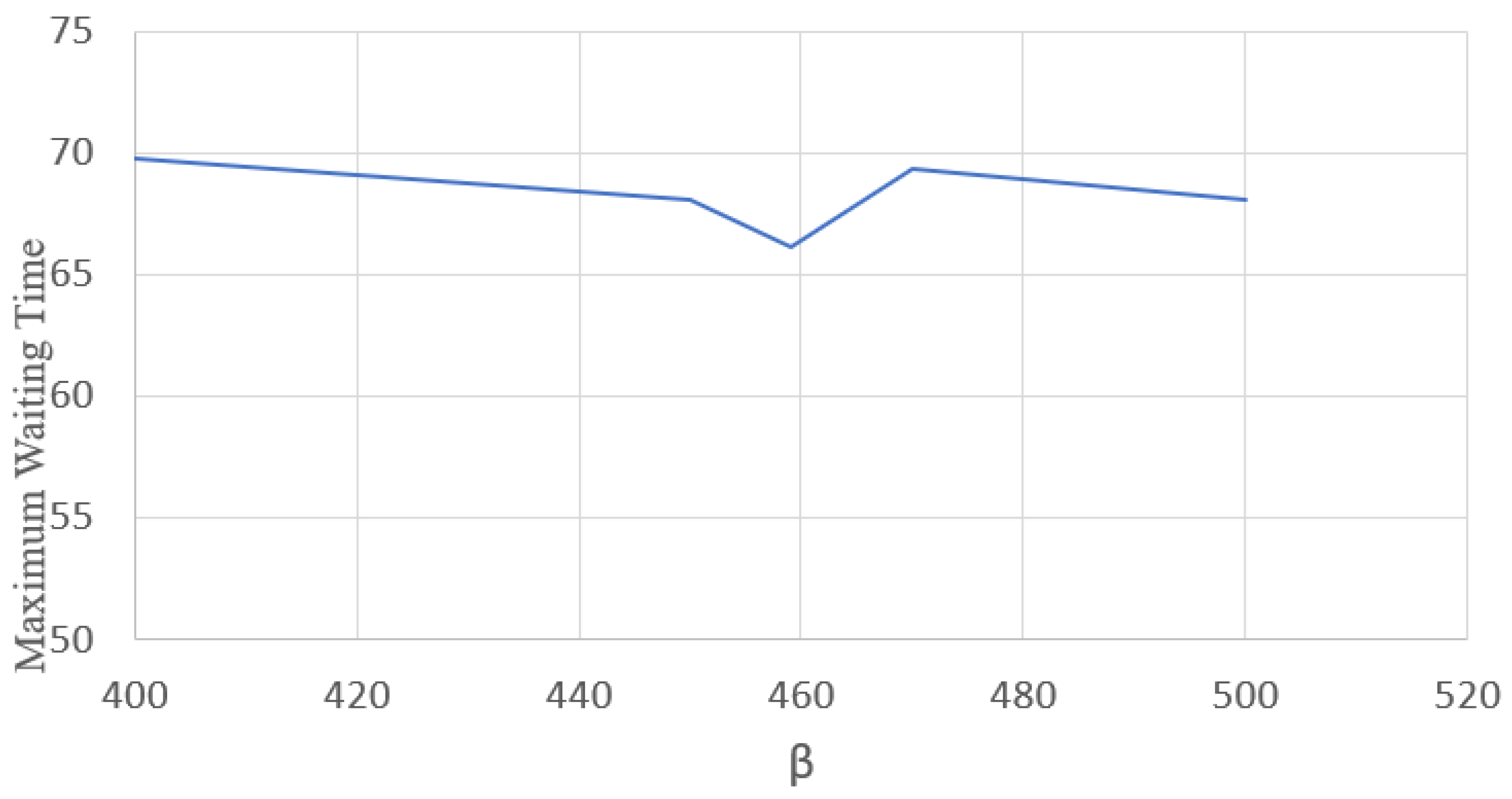

- Maximum Wait Time (MWT): The MWT is the longest wait time experienced by any vehicle during the simulation. It expresses how long a vehicle must wait in the worst case.

- Throughput: The throughput is the number of vehicles that exit the intersection during the simulation.

- Fairness: The fairness metric used in our simulations is Jain’s fairness index [8], given by $J = \frac{\left(\sum_{i=1}^{N} w_i\right)^2}{N \sum_{i=1}^{N} w_i^2}$, where $w_i$ is the wait time of vehicle $i$ and $N$ is the number of vehicles. If all vehicles have the same wait time, the fairness index is 1. The index decreases as the disparity in vehicle wait times increases.
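The metric definitions above map directly onto a few helper functions. This is a minimal sketch, and several details are assumptions:

- the function names are hypothetical;
- throughput is computed from a list of exited vehicle IDs;
- Jain's index returns 1.0 when nobody waited, a case where the formula is otherwise undefined.

```python
from typing import Iterable, Sequence

WAIT_SPEED_THRESHOLD = 0.1  # m/s; slower vehicles in an incoming lane count as queued


def is_waiting(speed: float) -> bool:
    """True if a vehicle in an incoming lane is considered to be in the wait queue."""
    return speed < WAIT_SPEED_THRESHOLD


def average_wait_time(waits: Sequence[float]) -> float:
    """AWT = (1/N) * sum of w_i; assumes at least one waiting vehicle."""
    return sum(waits) / len(waits)


def maximum_wait_time(waits: Sequence[float]) -> float:
    """MWT: the worst-case wait time over all vehicles."""
    return max(waits)


def throughput(exited_vehicle_ids: Iterable[int]) -> int:
    """Number of distinct vehicles that exited the intersection."""
    return len(set(exited_vehicle_ids))


def jain_fairness(waits: Sequence[float]) -> float:
    """Jain's index (sum w_i)^2 / (N * sum w_i^2), which lies between 1/N and 1."""
    squares = sum(w * w for w in waits)
    if squares == 0.0:
        return 1.0  # assumption: nobody waited, so every vehicle was treated equally
    return sum(waits) ** 2 / (len(waits) * squares)
```

These helpers show why fairness complements AWT. With four vehicles that each wait 10 s, the index is 1.0. Waits of 0, 0, 0, and 40 s give the same AWT of 10 s, but the index drops to 0.25, which is the minimum value of 1/N for N = 4.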

5.4. Evaluation of A2CAIM

5.5. Comparison with FFS and GAMEOPT Methods

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Chang, G.L.; Xiang, H. The Relationship between Congestion Levels and Accidents; Technical Report, MD-03-SP 208B46; State Highway Administration: Baltimore, MD, USA, 2003.

- Kellner, F. Exploring the impact of traffic congestion on CO2 emissions in freight distribution networks. Logist. Res. 2016, 9, 21.

- Kamal, M.A.S.; Imura, J.; Hayakawa, T.; Ohata, A.; Aihara, K. Intersection Coordination Scheme for Smooth Flows of Traffic Without Using Traffic Lights. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1136–1147.

- IEEE Std 1609.0-2013; IEEE Guide for Wireless Access in Vehicular Environments (WAVE)—Architecture. IEEE: Piscataway, NJ, USA, 2014; pp. 1–78.

- Dresner, K.; Stone, P. A Multiagent Approach to Autonomous Intersection Management. J. Artif. Intell. Res. 2008, 31, 591–656.

- Qian, X.; Altché, F.; Grégoire, J.; de La Fortelle, A. Autonomous Intersection Management systems: Criteria, implementation and evaluation. IET Intell. Transp. Syst. 2017, 11, 182–189.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the 33rd International Conference on Machine Learning (PMLR), New York, NY, USA, 19–24 June 2016.

- Jain, R.K.; Chiu, D.M.W.; Hawe, W.R. A Quantitative Measure of Fairness and Discrimination; Eastern Research Laboratory, Digital Equipment Corporation: Hudson, MA, USA, 1984.

- Cascetta, E. Transportation Systems Engineering: Theory and Methods; Springer Science & Business Media: Berlin, Germany, 2013; Volume 49.

- Parks-Young, A.; Sharon, G. Intersection Management Protocol for Mixed Autonomous and Human-Operated Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 1–11.

- Bindzar, P.; Macuga, D.; Brodny, J.; Tutak, M.; Malindzakova, M. Use of Universal Simulation Software Tools for Optimization of Signal Plans at Urban Intersections. Sustainability 2022, 14, 2079.

- Li, G.; Wu, J.; He, Y. ActorRL: A Novel Distributed Reinforcement Learning for Autonomous Intersection Management. arXiv 2022, arXiv:2205.02428.

- Musolino, G.; Rindone, C.; Vitetta, A. Models for Supporting Mobility as a Service (MaaS) Design. Smart Cities 2022, 5, 206–222.

- Schepperle, H.; Böhm, K. Agent-based traffic control using auctions. In International Workshop on Cooperative Information Agents; Springer: Berlin, Germany, 2007; pp. 119–133.

- Naumann, R.; Rasche, R.; Tacken, J.; Tahedi, C. Validation and simulation of a decentralized intersection collision avoidance algorithm. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Boston, MA, USA, 12 November 1997; pp. 818–823.

- Naumann, R.; Rasche, R.; Tacken, J. Managing autonomous vehicles at intersections. IEEE Intell. Syst. Their Appl. 1998, 13, 82–86.

- VanMiddlesworth, M.; Dresner, K.; Stone, P. Replacing the stop sign: Unmanaged intersection control for autonomous vehicles. In Proceedings of the 7th International Joint Conference on Autonomous Agents and Multiagent Systems, Estoril, Portugal, 12–16 May 2008; Volume 3, pp. 1413–1416.

- Gregoire, J.; Bonnabel, S.; de La Fortelle, A. Optimal cooperative motion planning for vehicles at intersections. arXiv 2013, arXiv:1310.7729.

- Gregoire, J.; Bonnabel, S.; De La Fortelle, A. Priority-Based Coordination of Robots. CoRR 2014, abs/1602.01783. Available online: https://hal.archives-ouvertes.fr/hal-00828976/file/priority-based-coordination-of-robots.pdf (accessed on 5 December 2022).

- Chen, L.; Englund, C. Cooperative intersection management: A survey. IEEE Trans. Intell. Transp. Syst. 2016, 17, 570–586.

- Chen, L.; Englund, C. Manipulator trajectory planning based on work subspace division. Concurr. Comput. Pract. Exp. 2022, 34, 570–586.

- Li, C.-G.; Wang, M.; Sun, Z.-G.; Lin, F.-Y.; Zhang, Z.-F. Urban Traffic Signal Learning Control Using Fuzzy Actor-Critic Methods. In Proceedings of the Fifth International Conference on Natural Computation, Tianjin, China, 14–16 August 2009; Volume 1, pp. 368–372.

- Jin, J.; Ma, X. Adaptive Group-Based Signal Control Using Reinforcement Learning with Eligibility Traces. In Proceedings of the IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 2412–2417.

- Mikami, S.; Kakazu, Y. Genetic Reinforcement Learning for Cooperative Traffic Signal Control. In Proceedings of the First IEEE Conference on Evolutionary Computation. IEEE World Congress on Computational Intelligence, Orlando, FL, USA, 27–29 June 1994; Volume 1, pp. 223–228.

- Ha-li, P.; Ke, D. An intersection signal control method based on deep reinforcement learning. In Proceedings of the 10th International Conference on Intelligent Computation Technology and Automation (ICICTA), Changsha, China, 9–10 October 2017; pp. 344–348.

- Zhang, C.; Jin, S.; Xue, W.; Xie, X.; Chen, S.; Chen, R. Independent Reinforcement Learning for Weakly Cooperative Multiagent Traffic Control Problem. IEEE Trans. Veh. Technol. 2021, 7, 7426–7436.

- Chanloha, P.; Usaha, W.; Chinrungrueng, J.; Aswakul, C. Performance Comparison between Queueing Theoretical Optimality and Q-Learning Approach for Intersection Traffic Signal Control. In Proceedings of the Fourth International Conference on Computational Intelligence, Modelling and Simulation, Kuantan, Malaysia, 25–27 September 2012; pp. 172–177.

- Liu, W.; Liu, J.; Peng, J.; Zhu, Z. Cooperative Multi-agent Traffic Signal Control system using Fast Gradient-descent Function Approximation for V2I Networks. In Proceedings of the IEEE International Conference on Communications (ICC), Sydney, Australia, 10–14 June 2014; pp. 2562–2567.

- Teo, K.T.K.; Yeo, K.B.; Chin, Y.K.; Chuo, H.S.E.; Tan, M.K. Agent-Based Traffic Flow Optimization at Multiple Signalized Intersections. In Proceedings of the 8th Asia Modelling Symposium, Taipei, Taiwan, 23–25 September 2014; pp. 21–26.

- Prashanth, L.A.; Bhatnagar, S. Threshold Tuning Using Stochastic Optimization for Graded Signal Control. IEEE Trans. Veh. Technol. 2012, 61, 3865–3880.

- El-Tantawy, S.; Abdulhai, B. An Agent-based Learning Towards Decentralized and Coordinated Traffic Signal Control. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems, Funchal, Portugal, 19–22 September 2010; pp. 665–670.

- Araghi, S.; Khosravi, A.; Johnstone, M.; Creighton, D. Q-learning Method for Controlling Traffic Signal Phase Time in a Single Intersection. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013; pp. 1261–1265.

- Yau, K.A.; Qadir, J.; Khoo, H.L.; Ling, M.H.; Komisarczuk, P. A Survey on Reinforcement Learning Models and Algorithms for Traffic Signal Control. ACM Comput. Surv. 2017, 50, 1–34.

- Pasin, M.; Scheuermann, B.; Moura, R.F.d. Vanet-based Intersection Control with a Throughput/Fairness Tradeoff. In Proceedings of the 8th IFIP Wireless and Mobile Networking Conference (WMNC), Munich, Germany, 5–7 October 2015; pp. 208–215.

- Wu, J.; Ghosal, D.; Zhang, M.; Chuah, C. Delay-Based Traffic Signal Control for Throughput Optimality and Fairness at an Isolated Intersection. IEEE Trans. Veh. Technol. 2018, 67, 896–909.

- Madrigal Arteaga, V.M.; Pérez Cruz, J.R.; Hurtado-Beltrán, A.; Trumpold, J. Efficient Intersection Management Based on an Adaptive Fuzzy-Logic Traffic Signal. Appl. Sci. 2022, 12, 6024.

- Guney, M.A.; Raptis, I.A. Scheduling-based optimization for motion coordination of autonomous vehicles at multilane intersections. J. Robot. 2020, 2020, 6217409.

- Wang, M.I.C.; Wen, C.H.P.; Chao, H.J. Roadrunner+: An Autonomous Intersection Management Cooperating with Connected Autonomous Vehicles and Pedestrians with Spillback Considered. ACM Trans. Cyber-Phys. Syst. (TCPS) 2021, 6, 1–29.

- Zhang, Y.; Liu, L.; Lu, Z.; Wang, L.; Wen, X. Robust autonomous intersection control approach for connected autonomous vehicles. IEEE Access 2020, 8, 124486–124502.

- Suriyarachchi, N.; Chandra, R.; Baras, J.S.; Manocha, D. GAMEOPT: Optimal Real-time Multi-Agent Planning and Control at Dynamic Intersections. arXiv 2022, arXiv:2202.11572.

- Janisch, J. Let’s Make an A3C: Theory. Available online: https://jaromiru.com/2017/02/16/lets-make-an-a3c-theory/ (accessed on 16 February 2017).

- Thomas, P.S.; Brunskill, E. Policy Gradient Methods for Reinforcement Learning with Function Approximation and Action-Dependent Baselines. arXiv 2017, arXiv:1706.06643.

- Vitetta, A. Sustainable Mobility as a Service: Framework and Transport System Models. Information 2022, 13, 346.

- Abdoos, M.; Mozayani, N.; Bazzan, A.L.C. Traffic Light Control in Nonstationary Environments Based on Multi Agent Q-learning. In Proceedings of the 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1580–1585.

- Krajzewicz, D.; Erdmann, J.; Behrisch, M.; Bieker, L. Recent Development and Applications of SUMO—Simulation of Urban MObility. Int. J. Adv. Syst. Meas. 2012, 5, 128–138.

| Phase | Code | Movement |
|---|---|---|
| I | EWG | Turn right from west or east |
| II | EWY | Wait |
| III | EWLG | Turn left from west or east |
| IV | EWLY | Wait |
| V | SNG | Turn right from north or south |
| VI | SNY | Wait |
| VII | SNLG | Turn left from north or south |
| VIII | SNLY | Wait |

| Component | Specification |
|---|---|
| CPU | Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz |
| Memory | 16 GB DDR4 |
| Operating System | Windows 10 (64-bit) |
| Programming Language | Python 2.7 |

| Origin (row) / Destination (column) | West | East | South | North |
|---|---|---|---|---|
| West | - | 0.3 | 0.035 | 0.085 |
| East | 0.3 | - | 0.085 | 0.035 |
| South | 0.085 | 0.035 | - | 0.2 |
| North | 0.035 | 0.085 | 0.2 | - |

| Simulation Parameter | Value |
|---|---|
| Car moving length | 5 m |
| Cooperative zone car length | 50 m |
| Maximum velocity | 10 m/s |
| Maximum acceleration | 4 m/s² |
| Minimum acceleration | −5 m/s² |
| Brake-safe control time step | 0.1 s |
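Section 4.5 names a brake-safe controller but does not spell out its rule. The sketch below illustrates how the kinematic limits above could be enforced at the 0.1 s control step. It applies a simple brake-safe rule that is an assumption of this sketch, not the authors' stated method. A vehicle without priority accelerates only if it could still stop before a hypothetical `stop_line`, the intersection entry, using maximum deceleration; otherwise it brakes. Reading the "Minimum acceleration" of −5 m/s² as the maximum braking rate is also an assumption.

```python
from typing import Tuple

MAX_SPEED = 10.0  # m/s, "Maximum velocity"
MAX_ACCEL = 4.0   # m/s^2, "Maximum acceleration"
MAX_DECEL = 5.0   # m/s^2, magnitude of "Minimum acceleration" (assumed braking limit)
DT = 0.1          # s, "Brake-safe control time step"


def stopping_distance(v: float) -> float:
    """Distance needed to stop from speed v under full braking: v^2 / (2 * MAX_DECEL)."""
    return v * v / (2.0 * MAX_DECEL)


def brake_safe_step(pos: float, v: float, stop_line: float) -> Tuple[float, float]:
    """Advance one DT step for a vehicle without priority, never passing stop_line.

    Assumes the vehicle starts brake-safe: pos + stopping_distance(v) <= stop_line.
    """
    # Candidate: constant acceleration over the step, capped so speed never exceeds MAX_SPEED.
    accel = min(MAX_ACCEL, (MAX_SPEED - v) / DT)
    v_next = v + accel * DT
    pos_next = pos + 0.5 * (v + v_next) * DT  # exact for constant acceleration
    if pos_next + stopping_distance(v_next) <= stop_line:
        return pos_next, v_next
    # Otherwise brake at full deceleration.
    if v <= MAX_DECEL * DT:
        # The vehicle comes to rest within this step, after exactly its stopping distance.
        return pos + stopping_distance(v), 0.0
    v_next = v - MAX_DECEL * DT
    return pos + 0.5 * (v + v_next) * DT, v_next
```

Consider a vehicle starting 30 m from the stop line at 10 m/s. It cruises until braking becomes necessary and then comes to rest at the line, because full braking from 10 m/s needs exactly 10 m. Each braking step preserves the quantity `pos + stopping_distance(v)`, so the vehicle cannot overshoot.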

| Parameter | Value |
|---|---|
| Simulation length | 200 s |
| Inflow rate | 100 vehicles/min |
| Discount factor | 0.99 |

| Time step (s) | AWT (s) | MWT (s) | Throughput (vehicles) | Fairness |
|---|---|---|---|---|
| 2.5 | 36.76 | 79.05 | 162.0 | 0.67 |
| 3.0 | 37.59 | 66.09 | 193.1 | 0.73 |
| 3.5 | 39.83 | 79.64 | 204.9 | 0.67 |
| 4.0 | 32.93 | 65.63 | 213.3 | 0.70 |

| Inflow rate (vehicles/min) | Method | AWT (s) | MWT (s) | Throughput (vehicles) | Fairness |
|---|---|---|---|---|---|
| 125 | A2CAIM | 33.6 | 65 | 230.2 | 0.78 |
| 125 | GAMEOPT | 37.1 | 110 | 210.4 | 0.6 |
| 125 | FFS | 38 | 120 | 220 | 0.4 |
| 100 | A2CAIM | 32.93 | 65.63 | 213.3 | 0.7 |
| 100 | GAMEOPT | 36 | 108 | 180 | 0.5 |
| 100 | FFS | 31.73 | 108.51 | 227.1 | 0.32 |
| 75 | A2CAIM | 27.34 | 63.32 | 193.2 | 0.63 |
| 75 | GAMEOPT | 24 | 82 | 150 | 0.42 |
| 75 | FFS | 24.25 | 89.56 | 204 | 0.24 |
| 50 | A2CAIM | 15.51 | 59.14 | 157.5 | 0.43 |
| 50 | GAMEOPT | 17.2 | 70 | 120 | 0.4 |
| 50 | FFS | 7.74 | 43.01 | 138.7 | 0.09 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ayeelyan, J.; Lee, G.-H.; Hsu, H.-C.; Hsiung, P.-A. Advantage Actor-Critic for Autonomous Intersection Management. Vehicles 2022, 4, 1391-1412. https://doi.org/10.3390/vehicles4040073


Chicago/Turabian Style: Ayeelyan, John, Guan-Hung Lee, Hsiu-Chun Hsu, and Pao-Ann Hsiung. 2022. "Advantage Actor-Critic for Autonomous Intersection Management." Vehicles 4, no. 4: 1391-1412. https://doi.org/10.3390/vehicles4040073
