A Machine Learning Approach to Detect Denial of Sleep Attacks in Internet of Things (IoT)

Abstract

1. Introduction

- Dataset Creation: We constructed a dataset that models several battery-depleting Denial of Sleep (DoSl) attacks in a ContikiOS-based IoT environment simulated with COOJA. The dataset contains power-consumption and node-level features, and every instance is labelled for supervised learning [5].

- Machine Learning Evaluation: We evaluated classical ML models, both linear and non-linear, on a multi-class classification task. The models were assessed on their ability to distinguish non-compromised nodes from different attack scenarios, such as sleep deprivation, UDP flooding, wormhole, and externally generated legitimate-request attacks.

- Deep Learning Application: We applied DL to the dataset by training an ANN and an LSTM-based RNN model to capture complex attack patterns that conventional ML methods could not reveal.

- Analysis and Future Directions: We discuss the strengths and weaknesses of the tested models and suggest future work, including considerations for real-time implementation and validation on real-world IoT architectures.

2. Related Works

3. Implementation and Evaluation of the Proposed Solution

3.1. Dataset Generation Process

- UDP Flood Attack Simulation: For this attack, the network consisted of twelve motes arranged in a mesh topology utilizing the RPL (Routing Protocol for Low-Power and Lossy Networks) stack over UDP. The simulation ran continuously for three hours, during which each mote’s power consumption in various operational modes was recorded using the Powertrace application. To simulate realistic network activity patterns and avoid synchronization artifacts, mote response time intervals were randomized for each hour, effectively diversifying the duty cycles and capturing a broad range of operational conditions.

- Wormhole Attack Simulation: This simulation was conducted using the same twelve-node network topology, with a key modification applied to the MAC layer of ContikiOS (the framer-nullmac.c file). This modification disabled the transmission of acknowledgement (ACK) frames from receivers to senders. As a result, victim nodes extended their listening periods, remaining active longer than usual while waiting for ACKs that never arrived. This behavior led to increased radio-on time and accelerated battery depletion, a tactic commonly used in energy-draining attacks. The simulation spanned three hours, during which power consumption data was collected from ten motes to evaluate the impact of the wormhole attack on their energy profiles.

- Externally Generated Legitimate Request Attack: This third attack type resembles barrage attacks where victim nodes are overwhelmed by a high volume of valid, seemingly benign requests. This scenario involved six motes, split evenly between victim and normal operation roles. The motes ran the Sky-websense application within ContikiOS, while an external Java program orchestrated periodic communications at randomized intervals to simulate legitimate requests that kept victim motes awake, thereby accelerating battery depletion. This setup was constrained by computational and simulation limits but was instrumental in capturing the nuanced behavior of legitimate request flooding attacks.
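
Powertrace reports the time a mote spends in each operational mode as timer ticks, and these are commonly converted to energy as ticks × current × voltage / ticks-per-second. The following is a minimal sketch of that conversion, not the authors' processing pipeline. The current draws, the 3 V supply, and the 32,768 ticks-per-second rate are typical Tmote Sky figures assumed here for illustration; the function and constant names are hypothetical.

```python
# Assumed electrical parameters (typical Tmote Sky figures, NOT taken from the paper).
SUPPLY_VOLTAGE_V = 3.0
TICKS_PER_SECOND = 32768  # assumed timer resolution of the simulated platform
CURRENT_MA = {"cpu": 1.8, "lpm": 0.0545, "transmit": 19.5, "listen": 21.8}


def ticks_to_energy_mj(ticks: int, mode: str) -> float:
    """Convert time spent in one mode (in timer ticks) to energy in millijoules."""
    seconds = ticks / TICKS_PER_SECOND
    # mA * V * s = mJ
    return seconds * CURRENT_MA[mode] * SUPPLY_VOLTAGE_V


def sample_energy_mj(sample: dict) -> dict:
    """Convert every known mode of one Powertrace-style sample to millijoules."""
    return {
        mode: ticks_to_energy_mj(ticks, mode)
        for mode, ticks in sample.items()
        if mode in CURRENT_MA
    }


if __name__ == "__main__":
    # One second of CPU time and half a second of radio listening.
    print(sample_energy_mj({"cpu": 32768, "listen": 16384}))
```

Under these assumptions, a victim mote whose radio stays in listen mode longer accumulates energy roughly in proportion to the extra listen ticks, which is the effect the three attack simulations are designed to expose.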

3.2. Dataset Features and Their Advantages

3.3. Machine Learning Model Development and Evaluation

3.3.1. Logistic Regression

3.3.2. Support Vector Machine (SVM)

3.3.3. K-Nearest Neighbors (KNN)

3.3.4. Decision Tree (DT)

3.3.5. Random Forest (RF)

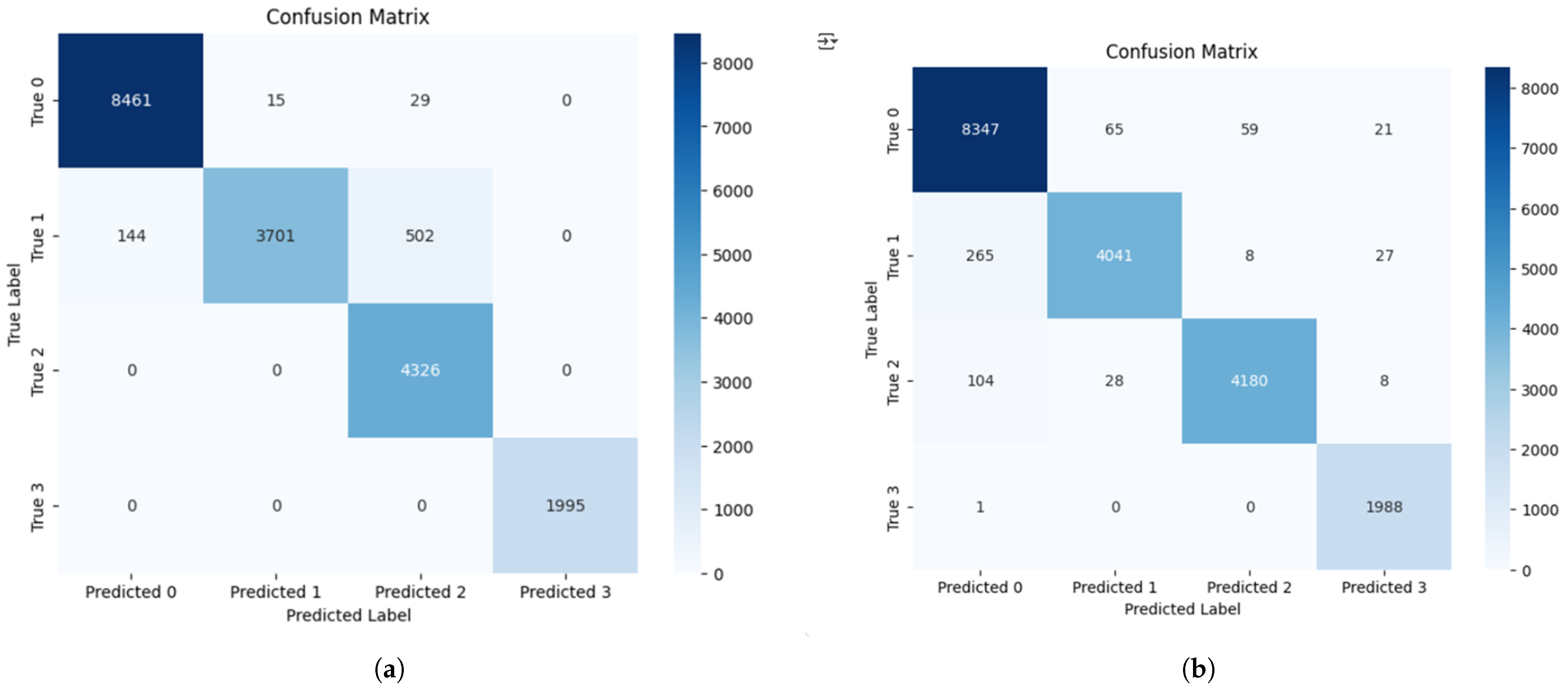

3.3.6. Artificial Neural Networks (ANN)

3.3.7. Recurrent Neural Network (LSTM)

4. Results and Evaluation of the Developed Models

4.1. Classification Accuracy and Overall Performance

4.2. F1-Score and Class-Wise Evaluation

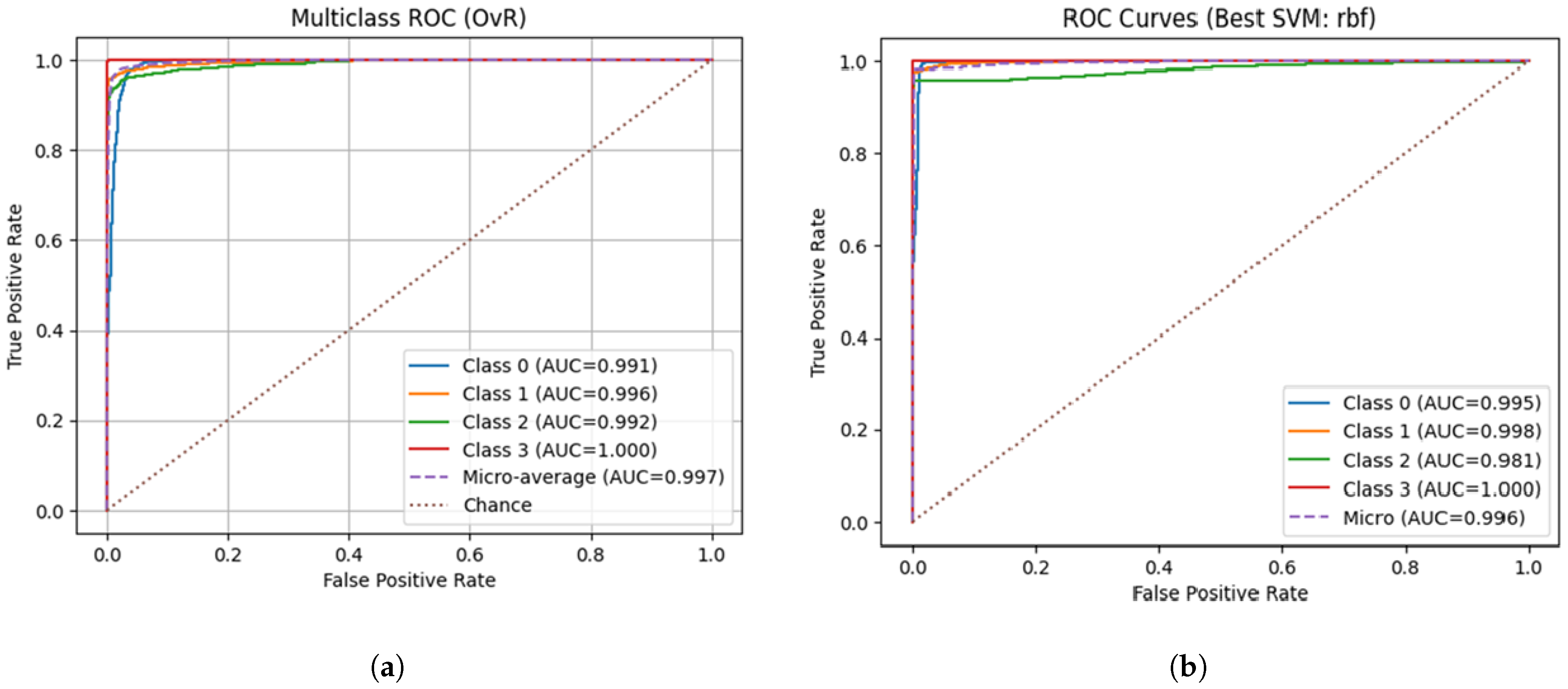

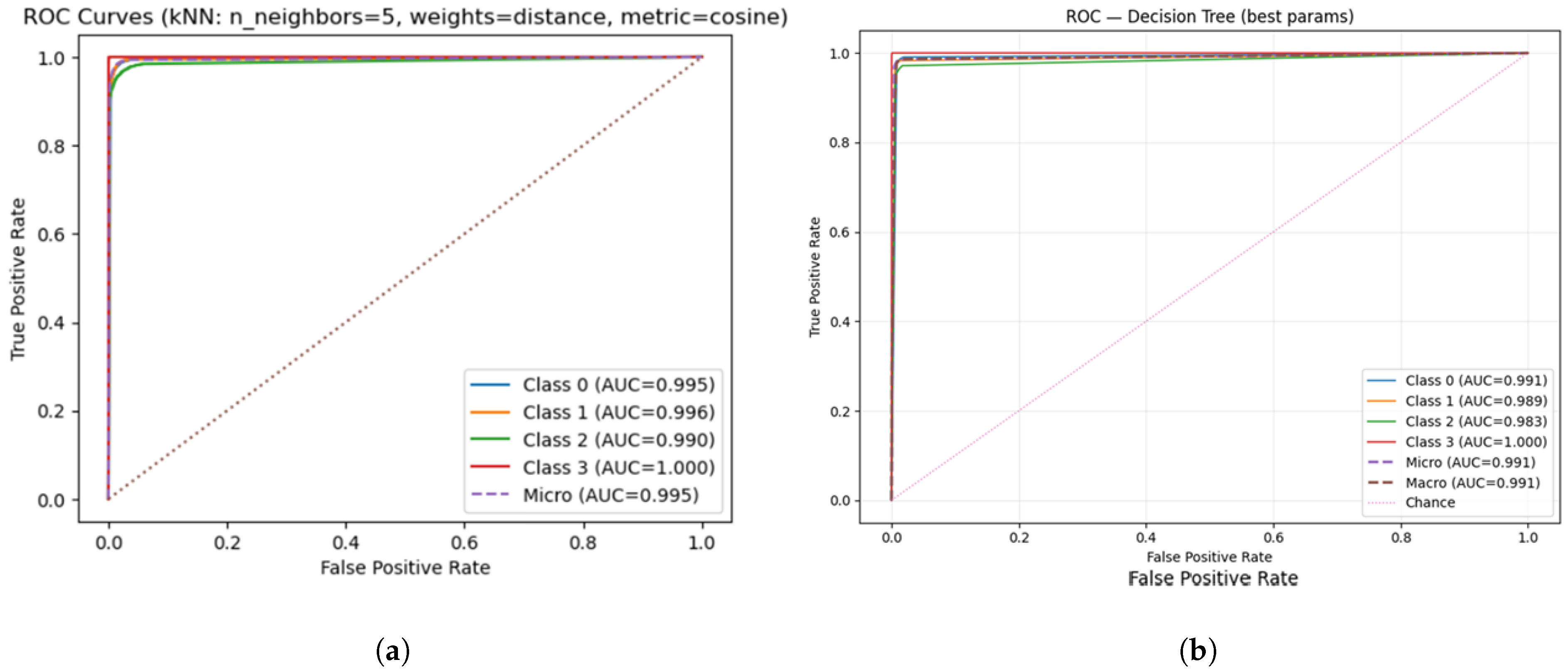

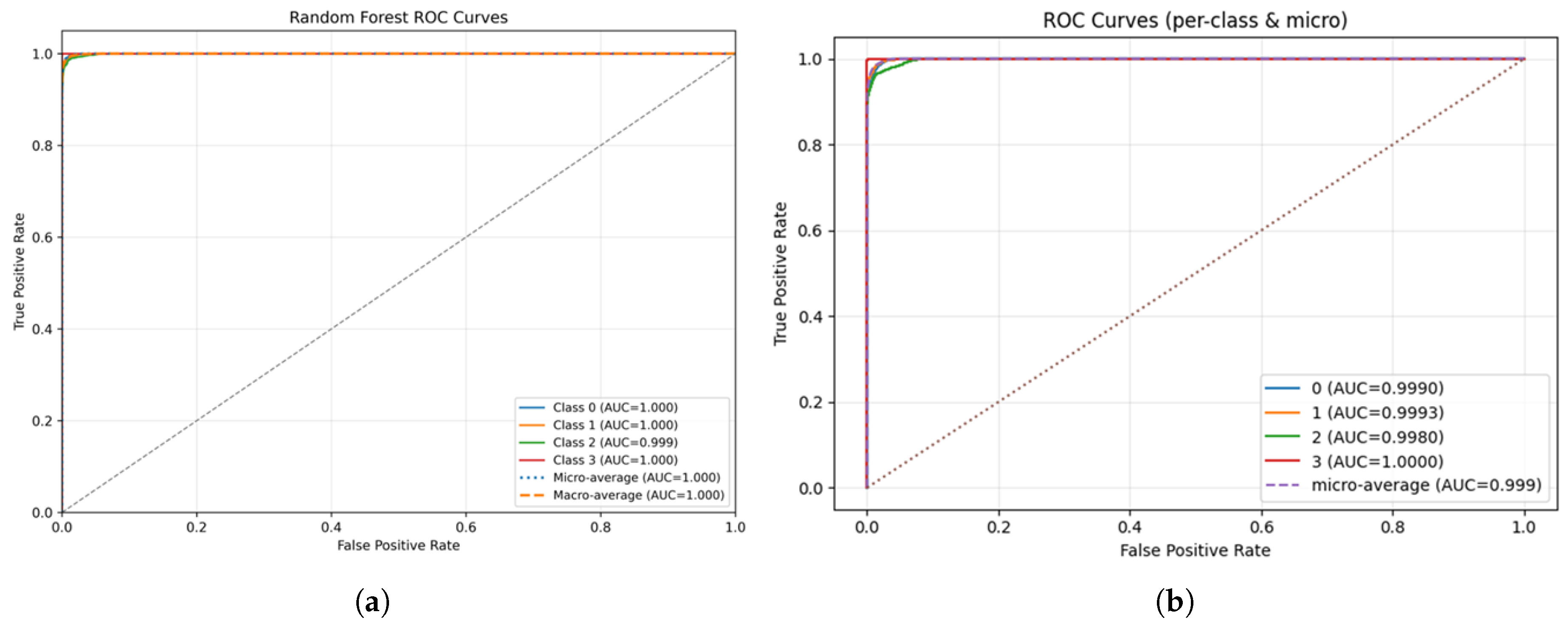

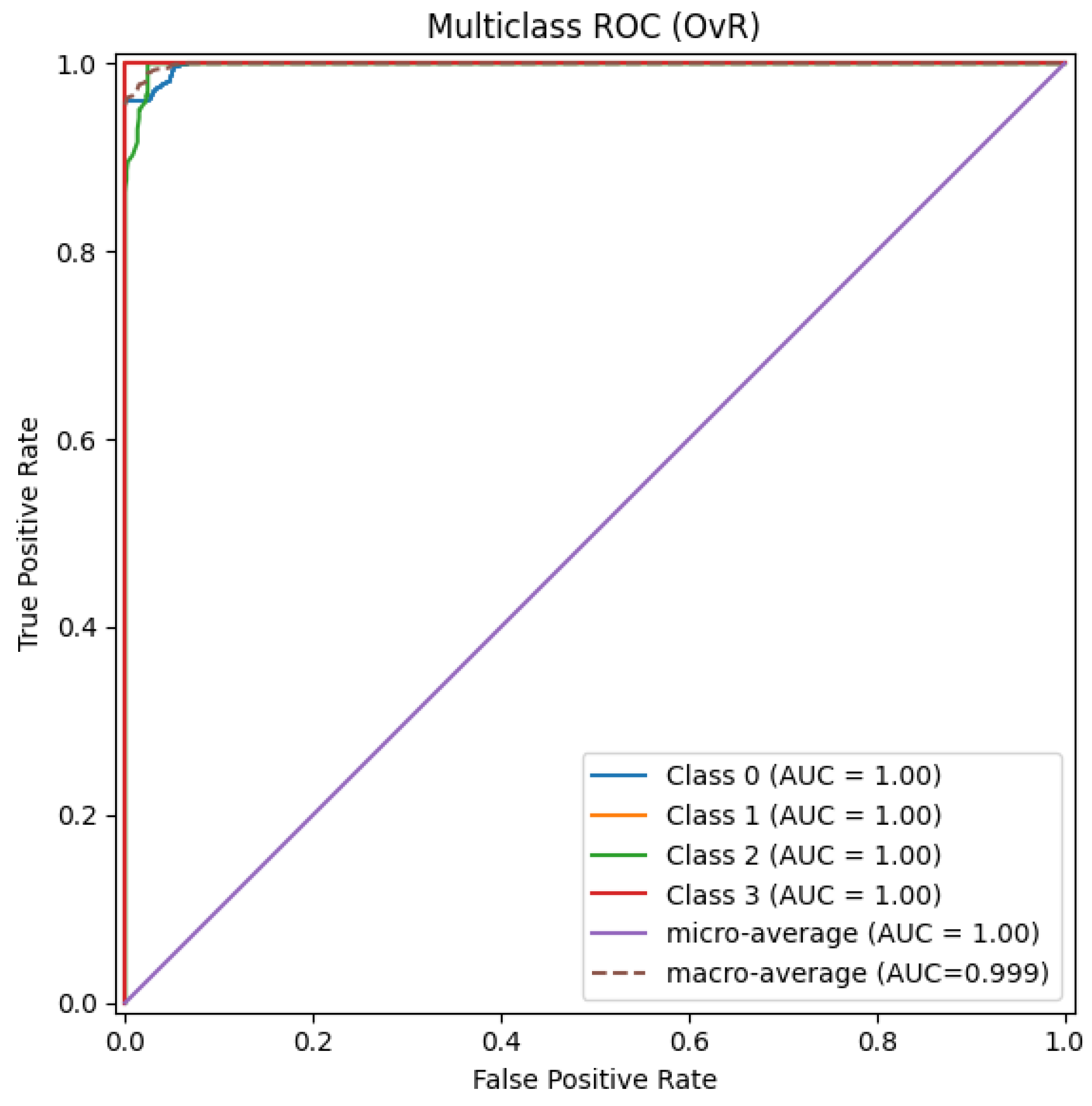

4.3. Receiver Operating Characteristic (ROC) Analysis

4.4. Temporal Split Evaluation and Model Generalization

4.5. Ablation on Cumulative Energy Counters

4.6. Feasibility and Resource Cost Considerations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| DoSl | Denial of Sleep |
| ML | Machine Learning |
| DL | Deep Learning |
| ROC | Receiver Operating Characteristic |
| UDP | User Datagram Protocol |
| SMACK | Short Message Authentication ChecK |
| WBAN | Wireless Body Area Network |
| WSN | Wireless Sensor Networks |
| CoAP | Constrained Application Protocol |
| mW | milliwatts |
| mJ | millijoules |
| BSD | Berkeley Software Distribution |
| lpm | Low-Power Mode |
| LR | Logistic Regression |
| SVM | Support Vector Machine |
| KNN | K-Nearest Neighbors |
| DT | Decision Tree |
| RF | Random Forest |
| ANN | Artificial Neural Networks |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |
| IoMT | Internet of Medical Things |
| TPR | True Positive Rate |
| FPR | False Positive Rate |
| AUC | Area Under the Curve |

References

- Haseeb, M.; Hussain, H.I.; Slusarczyk, B.; Jermsittiparsert, K. Industry 4.0: A Solution Towards Technology Challenges of Sustainable Business Performance. J. Open Innov. Technol. Mark. Complex. 2019, 5, 154.

- Joglekar, S.; Kadam, S.; Dharmadhikari, S. Industry 5.0: Analysis, Applications and Prognosis. In Global Perspectives on the Next Generation of Service Science; IGI Global: Hershey, PA, USA, 2023; pp. 1–28.

- Pirretti, M.; Zhu, S.; Vijaykrishnan, N.; McDaniel, P.; Kandemir, M.; Brooks, R. The Sleep Deprivation Attack in Sensor Networks: Analysis and Methods of Defense. Int. J. Distrib. Sens. Netw. 2006, 2, 267–287.

- Boubiche, D.E.; Bilami, A. A Defense Strategy against Energy Exhausting Attacks in Wireless Sensor Networks. J. Emerg. Technol. Web Intell. 2013, 5, 18–27.

- Dissanayake, I.; Weerasinghe, H.D.; Welhenge, A. A generation of dataset towards an Anomaly-Based Intrusion Detection System to detect Denial of Sleep Attacks in Internet of Things (IoT). In Proceedings of the 2022 22nd International Conference on Advances in ICT for Emerging Regions (ICTer), Colombo, Sri Lanka, 30 November–1 December 2022; pp. 92–97.

- Gupta, R.; Srivastava, D.; Sahu, M.; Tiwari, S.; Ambasta, R.K.; Kumar, P. Artificial intelligence to deep learning: Machine intelligence approach for drug discovery. In A Step towards Advanced Drug Discovery; Springer: Singapore, 2021; pp. 1–25.

- Ye, W.; Heidemann, J.; Estrin, D. An Energy-Efficient MAC Protocol for Wireless Sensor Networks. In Proceedings of the Twenty-First Annual Joint Conference of the IEEE Computer and Communications Societies, New York, NY, USA, 23–27 June 2002; Volume 3, pp. 1560–1569.

- van Dam, T.; Langendoen, K. An adaptive energy efficient MAC protocol for wireless sensor networks. In Proceedings of the 1st International Conference on Embedded Networked Sensor Systems, Los Angeles, CA, USA, 5–7 November 2003; ACM: New York, NY, USA, 2003; pp. 171–180.

- Polastre, J.; Hill, J.; Culler, D. Versatile Low Power Media Access for Wireless Sensor Networks. In Proceedings of the 2nd International Conference on Embedded Networked Sensor Systems, Baltimore, MD, USA, 3–5 November 2004; ACM: New York, NY, USA, 2004; pp. 95–107.

- Buettner, M.; Yee, G.V.; Anderson, E.; Han, R. X-MAC: A Short Preamble MAC Protocol for Duty-Cycled Wireless Sensor Networks. In Proceedings of the 4th International Conference on Embedded Networked Sensor Systems, Boulder, CO, USA, 31 October–3 November 2006; ACM: Boulder, CO, USA, 2006; pp. 307–320.

- Dunkels, A. The ContikiMAC Radio Duty Cycling Protocol; SICS Technical Report T2011:13; Swedish Institute of Computer Science: Stockholm, Sweden, 2011.

- Gehrmann, C.; Tiloca, M.; Hoglund, R. SMACK: Short Message Authentication ChecK Against Battery Exhaustion in the Internet of Things. In Proceedings of the 2015 12th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Seattle, WA, USA, 22–25 June 2015; IEEE: Chicago, IL, USA, 2015; pp. 1–6.

- Maddikunta, P.K.R.; Srivastava, G.; Gadekallu, T.R.; Deepa, N.; Boopathy, P. Predictive model for battery life in IoT networks. IET Intell. Transp. Syst. 2020, 14, 1388–1395.

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A Detailed Analysis of the KDD CUP 99 Data Set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; IEEE: Chicago, IL, USA, 2009; pp. 1–9.

- Moustafa, N.; Slay, J. UNSW-NB15: A Comprehensive Data set for Network Intrusion Detection systems. In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; IEEE: Chicago, IL, USA, 2015; pp. 1–6.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy, Funchal, Portugal, 22–24 January 2018; SCITEPRESS: Funchal, Portugal, 2018; pp. 108–116.

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the Development of Realistic Botnet Dataset in the Internet of Things for Network Forensic Analytics: Bot-IoT Dataset. Future Gener. Comput. Syst. 2019, 100, 779–796.

- Hamza, A.; Gharakheili, H.H.; Benson, T.A.; Sivaraman, V. Detecting Volumetric Attacks on IoT Devices via SDN-Based Monitoring of MUD Activity. In Proceedings of the 2019 ACM Symposium on SDN Research, San Jose, CA, USA, 3–4 April 2019; pp. 1–9.

- Essop, I.; Ribeiro, J.C.; Papaioannou, M.; Zachos, G.; Mantas, G.; Rodriguez, J. Generating Datasets for Anomaly-Based Intrusion Detection Systems in IoT and Industrial IoT Networks. Sensors 2021, 21, 1528.

- Mohd, N.; Singh, A.; Bhadauria, H.S. A Novel SVM Based IDS for Distributed Denial of Sleep Strike in Wireless Sensor Networks. Wirel. Pers. Commun. 2020, 111, 1999–2022.

- Mukhtar, M.; Lashari, M.H.; Alhussein, M.; Karim, S.; Aurangzeb, K. Energy-Efficient Framework to Mitigate Denial of Sleep Attacks in Wireless Body Area Networks. IEEE Access 2024, 12, 66584–66598.

- Alsaedi, A.; Mahmood, A.; Moustafa, N.; Tari, Z.; Anwar, A. TON_IoT Telemetry Dataset: A New Generation Dataset of IoT and IIoT for Data-Driven Intrusion Detection Systems. IEEE Access 2020, 8, 165130–165150.

- Peterson, J.M.; Leevy, J.L.; Khoshgoftaar, T.M. A Review and Analysis of the Bot-IoT Dataset. In Proceedings of the 2021 IEEE International Conference on Service-Oriented System Engineering (SOSE), Oxford, UK, 23–26 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 47–56.

- Koppula, M.; Leo Joseph, L.M.I. A Real-World Dataset “IDSIoT2024” for Machine Learning/Deep Learning-Based Cyber Attack Detection System for IoT Architecture. In Proceedings of the 3rd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT 2025), Coimbatore, India, 17–19 July 2025; IEEE: Piscataway, NJ, USA, 2025.

- Tareq, I.; Elbagoury, B.M.; El-Regaily, S.; El-Horbaty, E.-S.M. Analysis of ToN-IoT, UNW-NB15, and Edge-IIoT Datasets Using DL in Cybersecurity for IoT. Appl. Sci. 2022, 12, 9572.

- Elsadig, M.A. Detection of Denial-of-Service Attack in Wireless Sensor Networks: A Lightweight Machine Learning Approach. IEEE Access 2023, 11, 101246–101260.

- Bani-Yaseen, T.; Tahat, A.; Kastell, K.; Edwan, T.A. Denial-of-Sleep Attack Detection in NB-IoT Using Deep Learning. J. Telecommun. Digit. Econ. 2022, 10, 1–17.

- Sharma, A.; Rani, S.; Shabaz, M. An Optimized Stacking-Based TinyML Model for Attack Detection in IoT Networks. PLoS ONE 2025, 20, e0329227.

- Muzakkar, B.A.; Mohamed, M.A.; Kadir, M.F.A.; Mohamad, Z.; Jamil, N. Recent advances in energy efficient-QoS aware MAC protocols for wireless sensor networks. Int. J. Adv. Comput. Res. 2018, 8, 11–26.

- Han, K.; Luo, J.; Liu, Y.; Vasilakos, A.V. Algorithm Design for Data Communications in Duty-Cycled Wireless Sensor Networks: A Survey. IEEE Commun. Surv. Tutor. 2016, 18, 1222–1245.

- Tang, L.; Sun, Y.; Gurewitz, O.; Johnson, D.B. PW-MAC: An Energy-Efficient Predictive-Wakeup MAC Protocol for Wireless Sensor Networks. In Proceedings of the 2011 Proceedings IEEE INFOCOM, Shanghai, China, 10–15 April 2011; IEEE: Chicago, IL, USA, 2011; pp. 261–272.

- Goumidi, H.; Pierre, S. Real-Time Anomaly Detection in IoMT Networks Using Stacking Model and a Healthcare Specific Dataset. IEEE Access 2025, 13, 70352–70365.

| Reference | Approach or Dataset | Attack Types | Models | Key Outcomes | Notes |
|---|---|---|---|---|---|
| MAC Protocols [7,8,9,10,11,29,30,31] | Low-power MAC protocols (duty cycling, sync) | General energy-saving | N/A | Reduces battery use, but limited vs. sleep deprivation attacks | Struggle with battery exhaustion attacks |
| SMACK [12] | Authentication mechanism at app layer (CoAP), tested on CC2538 | Battery exhaustion (general) | N/A | Energy-efficient but limited vs. barrage attacks | Packet validation overhead |
| [19] | COOJA simulation dataset (approximately 11k benign + approximately 11k malicious) | UDP flood only | N/A | Dataset limited to single attack type | Not enough for broad IDS |
| Our previous work [5] | COOJA simulation | UDP flood, wormhole, barrage-like | N/A | Broader attack coverage | No ML implementation was carried out at that time; only the dataset preparation approach was presented |
| [20] | Opnet simulation, 1190 samples | Battery exhaustion | SVM | 90.8% accuracy; high TPR | Smaller dataset, accuracy might drop with scale |
| [21] | Energy-efficient framework by optimizing energy utilization | Battery exhaustion with repetitive tasks | N/A | Increased lifetime | Smaller simulation, with only repetitive tasks concerned |
| [22,23,24,25] | Traffic-centric IoT/IIoT | Multi-class intrusions (DoS/DDoS, etc.) | CART; DenseNet121; InceptionTime; various ML | Good accuracy, low training cost | Predominantly packet/flow features; lacks energy/sleep-state telemetry, limited for DoSl detection |
| [26,27] | Energy-aware IDS directions | Denial of Service attacks, Hello Flood DoSl | DT, KNN, RF, XGBoost; LSTM, GRU | 99.5% (WSN-DS); 98.99% accuracy and ROC-AUC 0.9889 for DoSl in NB-IoT | Traffic-based features; limited-attack focus; no device-level power telemetry |
| [28] | ToN-IoT dataset (≈461 k samples, 10 attack types); TinyML stacking on MCU | General multi-class intrusions | TinyML-Stacking (compact DT + small NN → LR meta) | 99.98% accuracy; 0.12 ms inference; 0.01 mW on Arduino Nano 33 BLE Sense | Traffic-centric; no sleep-state/energy telemetry |

| Feature Title | Description |
|---|---|
| all_cpu | Power consumption of CPU from the beginning |
| all_lpm | Power consumption of low-power mode from the beginning |
| all_transmit | Power consumed for data transmission from the beginning |
| all_listen | Power consumed to listen to data from the beginning |
| all_idle_listen | Power consumed for idle listening from the beginning |
| cpu | Power consumption of CPU for that duty cycle |
| lpm | Power consumption of low-power mode for that duty cycle |
| transmit | Power consumed for data transmission for that duty cycle |
| listen | Power consumed to listen to data for that duty cycle |
| idle_listen | Power consumed for idle listening for that duty cycle |
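
The feature table separates cumulative counters (the `all_*` columns, measured from the beginning) from per-duty-cycle values. As a rough illustration of how the two groups relate, the sketch below derives per-cycle values by differencing consecutive cumulative readings. It assumes the cumulative counters are monotonic and start at zero before the first sample; the helper name is hypothetical and this is not presented as the authors' preprocessing code.

```python
from typing import Dict, List

MODES = ["cpu", "lpm", "transmit", "listen", "idle_listen"]


def per_cycle_from_cumulative(samples: List[Dict[str, float]]) -> List[Dict[str, float]]:
    """Derive per-duty-cycle values by differencing consecutive cumulative counters.

    Each input sample must contain the keys all_cpu, all_lpm, all_transmit,
    all_listen and all_idle_listen. Counters are assumed to start at zero.
    """
    previous = {mode: 0.0 for mode in MODES}
    rows = []
    for sample in samples:
        row = dict(sample)  # keep the cumulative columns alongside the derived ones
        for mode in MODES:
            cumulative = sample[f"all_{mode}"]
            row[mode] = cumulative - previous[mode]
            previous[mode] = cumulative
        rows.append(row)
    return rows


if __name__ == "__main__":
    readings = [
        {f"all_{m}": 10.0 for m in MODES},
        {f"all_{m}": 25.0 for m in MODES},
        {f"all_{m}": 45.0 for m in MODES},
    ]
    print([row["cpu"] for row in per_cycle_from_cumulative(readings)])
```

Because the cumulative columns grow with elapsed simulation time, they can encode how far into a run a sample was taken, which is one reason to evaluate models with and without them.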

| Feature | Correlation Ratio |
|---|---|
| all_cpu | 0.334 |
| all_lpm | 0.192 |
| all_transmit | 0.400 |
| all_listen | 0.321 |
| all_idle_listen | 0.331 |
| cpu | 0.337 |
| lpm | 0.324 |
| transmit | 0.057 |
| listen | 0.334 |
| idle_listen | 0.499 |
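
For readers unfamiliar with the measure, the correlation ratio quantifies how much of a numeric feature's variance is explained by a categorical label. The sketch below uses the standard textbook definition, the square root of the between-class sum of squares divided by the total sum of squares. Whether the table reports this quantity or its square cannot be recovered from the text, so treat the function (a hypothetical helper) as an illustration rather than the authors' exact computation.

```python
from collections import defaultdict
from math import sqrt
from typing import Hashable, Sequence


def correlation_ratio(labels: Sequence[Hashable], values: Sequence[float]) -> float:
    """Return sqrt(between-class sum of squares / total sum of squares)."""
    groups = defaultdict(list)
    for label, value in zip(labels, values):
        groups[label].append(value)

    overall_mean = sum(values) / len(values)
    between = sum(
        len(group) * (sum(group) / len(group) - overall_mean) ** 2
        for group in groups.values()
    )
    total = sum((value - overall_mean) ** 2 for value in values)
    # A constant feature carries no class information.
    return sqrt(between / total) if total > 0 else 0.0


if __name__ == "__main__":
    classes = ["normal", "normal", "udp_flood", "udp_flood"]
    print(correlation_ratio(classes, [1.0, 1.0, 3.0, 3.0]))  # classes fully separate the feature
    print(correlation_ratio(classes, [1.0, 3.0, 1.0, 3.0]))  # class means are identical
```

A value near 1 means the class label almost fully determines the feature, while a value near 0, as for `transmit` in the table, means the feature's mean barely differs between classes.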

| Model | Parameters | Held-Out 25% Test Set | K-Fold Cross Validation |
|---|---|---|---|
| Logistic Regression | penalty = l2, solver = lbfgs, C = 965.9184849666412, tol = , class_weight = None | 96.42% | 96.43% |
| SVM | kernel = rbf, C = 194.94884560528726, gamma = 0.639323158597893, class_weight = balanced, tol = , max_iter = 100,000 | 98.32% | 98.39% |
| KNN | n_neighbors = 5, weights = distance, metric = cosine | 97.33% | 97.60% |
| Decision Tree | criterion = gini, splitter = random, max_depth = 40, min_samples_split = 2, min_samples_leaf = 2, max_features = None, class_weight = None, ccp_alpha = 0.0 | 97.86% | 97.29% |
| Random Forest | n_estimators = 410, max_depth = 29, min_samples_split = 10, min_samples_leaf = 7, bootstrap = True, ccp_alpha = 0.0, max_features = 0.8430774202347376, class_weight = balanced | 98.57% | 98.65% |
| ANN | n_hidden_layers = 2, activation_hidden = relu, use_bn = True, dropout = 0.07816451844305984, l2 = 0.0018067201114756084, optimizer = nadam, lr = 0.0024901124769445967, lr_schedule = cosine, batch_size = 512, epochs = 130, units_0 = 1024, units_1 = 256 | 96.65% | 98.22 ± 0.39% |
| LSTM | batch_size = 64, epochs = 120, units_1 = 128, bidir_1 = False, drop_1 = 0.372, use_second = True, units_2 = 96, bidir_2 = False, drop_2 = 0.251, dense_units = 256, dense_drop = 0.021, optimizer = nadam, lr = 0.0018 | 97.92% (15% chronological holdout) | Chronological split (no random k-fold) |
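
The parameter names listed for the classical models match scikit-learn's estimator arguments, so the configurations could plausibly be reproduced as sketched below. This assumes scikit-learn was the library used, which the table itself does not state. The `tol` values are missing from the table and are therefore left at library defaults, and `penalty = l2` is left implicit because it is scikit-learn's default for logistic regression.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Hyperparameters copied from the table; tol is omitted because its value is not recoverable.
models = {
    "Logistic Regression": LogisticRegression(
        solver="lbfgs", C=965.9184849666412, class_weight=None
    ),
    "SVM": SVC(
        kernel="rbf",
        C=194.94884560528726,
        gamma=0.639323158597893,
        class_weight="balanced",
        max_iter=100_000,
    ),
    "KNN": KNeighborsClassifier(n_neighbors=5, weights="distance", metric="cosine"),
    "Decision Tree": DecisionTreeClassifier(
        criterion="gini",
        splitter="random",
        max_depth=40,
        min_samples_split=2,
        min_samples_leaf=2,
        max_features=None,
        class_weight=None,
        ccp_alpha=0.0,
    ),
    "Random Forest": RandomForestClassifier(
        n_estimators=410,
        max_depth=29,
        min_samples_split=10,
        min_samples_leaf=7,
        bootstrap=True,
        ccp_alpha=0.0,
        max_features=0.8430774202347376,
        class_weight="balanced",
    ),
}

if __name__ == "__main__":
    for name, model in models.items():
        print(name, type(model).__name__)
```

Each estimator can then be fitted with `model.fit(X_train, y_train)` on the ten power features. Any feature scaling applied before the SVM and KNN models is not specified in the table and would have to be chosen separately.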

| Model | F1 (Non-Compromised) | F1 (UDP Flood) | F1 (Wormhole) | F1 (Legitimate Request) | F1-Macro |
|---|---|---|---|---|---|
| Logistic Regression | 0.9643 | 0.9655 | 0.9473 | 0.9943 | 0.9679 |
| SVM | 0.9850 | 0.9822 | 0.9735 | 0.9975 | 0.9845 |
| KNN | 0.9806 | 0.9675 | 0.9538 | 0.9946 | 0.9741 |
| Decision Tree | 0.9839 | 0.9745 | 0.9637 | 0.9956 | 0.9794 |
| Random Forest | 0.9898 | 0.9806 | 0.9784 | 0.9950 | 0.9859 |
| ANN | 0.9761 | 0.9531 | 0.9469 | 0.9937 | 0.9675 |
| LSTM | 0.9779 | 1.0000 | 0.9502 | 1.0000 | 0.9820 |
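
The macro-averaged F1 score is the unweighted mean of the per-class F1 scores, so every class counts equally regardless of how many samples it has. The short check below recomputes the macro column from three rows of the table. The values are copied from the table, and the tolerance allows for rounding of the reported figures.

```python
from statistics import mean
from typing import Sequence

# Per-class F1 in the order: non-compromised, UDP flood, wormhole, legitimate request.
PER_CLASS_F1 = {
    "Logistic Regression": [0.9643, 0.9655, 0.9473, 0.9943],
    "Random Forest": [0.9898, 0.9806, 0.9784, 0.9950],
    "LSTM": [0.9779, 1.0000, 0.9502, 1.0000],
}
REPORTED_MACRO = {"Logistic Regression": 0.9679, "Random Forest": 0.9859, "LSTM": 0.9820}


def macro_f1(per_class: Sequence[float]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    return mean(per_class)


if __name__ == "__main__":
    for model, scores in PER_CLASS_F1.items():
        computed = macro_f1(scores)
        agrees = abs(computed - REPORTED_MACRO[model]) < 1e-4
        print(f"{model}: computed {computed:.5f}, reported {REPORTED_MACRO[model]}, agrees={agrees}")
```

Across all models the wormhole class has the lowest per-class score, so it is the class that pulls each macro average down the most.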

| Model | Accuracy | F1-Macro |
|---|---|---|
| Logistic Regression | 0.870 | 0.839 |
| SVM (RBF) | 0.573 | 0.509 |
| KNN | 0.886 | 0.866 |
| Decision Tree | 0.844 | 0.856 |
| Random Forest | 0.760 | 0.780 |
| ANN | 0.964 | 0.962 |
| RNN | 0.969 | 0.971 |
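
A temporal evaluation holds out the most recent samples instead of a random subset, so that a model is never tested on data recorded before its training data. The sketch below shows one way to build such a split, using the 15% holdout fraction reported for the LSTM as the default. The function name and the per-sample timestamp input are assumptions made for illustration; the exact splitting procedure used in the study is not described in this section.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def chronological_split(
    samples: Sequence[T],
    timestamps: Sequence[float],
    holdout_fraction: float = 0.15,
) -> Tuple[List[T], List[T]]:
    """Split samples so that the latest `holdout_fraction` of them form the test set."""
    if len(samples) != len(timestamps):
        raise ValueError("samples and timestamps must have the same length")
    if len(samples) < 2:
        raise ValueError("need at least two samples to split")
    if not 0.0 < holdout_fraction < 1.0:
        raise ValueError("holdout_fraction must be strictly between 0 and 1")

    # Order indices by time, then cut once: everything after the cut is held out.
    order = sorted(range(len(samples)), key=lambda i: timestamps[i])
    n_test = min(len(samples) - 1, max(1, round(len(samples) * holdout_fraction)))
    cut = len(samples) - n_test
    train = [samples[i] for i in order[:cut]]
    test = [samples[i] for i in order[cut:]]
    return train, test


if __name__ == "__main__":
    data = list(range(20))
    times = [19 - i for i in range(20)]  # sample 0 is the most recent
    train, test = chronological_split(data, times)
    print(len(train), test)
```

Unlike a shuffled split, this arrangement exposes models that rely on time-dependent features: their accuracy drops when the held-out period lies outside the range seen during training.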

| Model | All Features | No Cumulative | Δ Accuracy (percentage points) |
|---|---|---|---|
| LR | 0.870 | 0.668 | −20.2 |
| SVM | 0.573 | 0.611 | +3.8 |
| KNN | 0.886 | 0.685 | −20.1 |
| DT | 0.844 | 0.753 | −9.1 |
| RF | 0.760 | 0.677 | −8.3 |
| ANN | 0.964 | 0.736 | −22.8 |
| RNN | 0.969 | 0.911 | −5.8 |
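
The ablation removes the cumulative energy counters and compares accuracy against the full feature set. The sketch below illustrates both steps: selecting the non-cumulative features by their `all_` prefix, and expressing the change in accuracy in percentage points. The helper names are hypothetical, and the accuracy values are copied from the table.

```python
from typing import Dict, List, Tuple

FEATURES = [
    "all_cpu", "all_lpm", "all_transmit", "all_listen", "all_idle_listen",
    "cpu", "lpm", "transmit", "listen", "idle_listen",
]

# (accuracy with all features, accuracy without cumulative counters), from the table.
ABLATION: Dict[str, Tuple[float, float]] = {
    "LR": (0.870, 0.668),
    "SVM": (0.573, 0.611),
    "KNN": (0.886, 0.685),
    "ANN": (0.964, 0.736),
    "RNN": (0.969, 0.911),
}


def without_cumulative(features: List[str]) -> List[str]:
    """Keep only per-duty-cycle features by dropping the cumulative all_* counters."""
    return [name for name in features if not name.startswith("all_")]


def accuracy_delta_pp(acc_all: float, acc_ablated: float) -> float:
    """Change in accuracy after ablation, in percentage points."""
    return (acc_ablated - acc_all) * 100.0


if __name__ == "__main__":
    print(without_cumulative(FEATURES))
    for model, (acc_all, acc_ablated) in ABLATION.items():
        print(f"{model}: {accuracy_delta_pp(acc_all, acc_ablated):+.1f} pp")
```

Read this way, the table shows that the RNN loses the least accuracy among the models that degrade, while the SVM is the only model that improves slightly without the cumulative counters.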

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Dissanayake, I.; Welhenge, A.; Weerasinghe, H.D. A Machine Learning Approach to Detect Denial of Sleep Attacks in Internet of Things (IoT). IoT 2025, 6, 71. https://doi.org/10.3390/iot6040071
