Acoustic Trap Design for Biodiversity Detection

Abstract

1. Introduction

2. Related Work

3. System Design

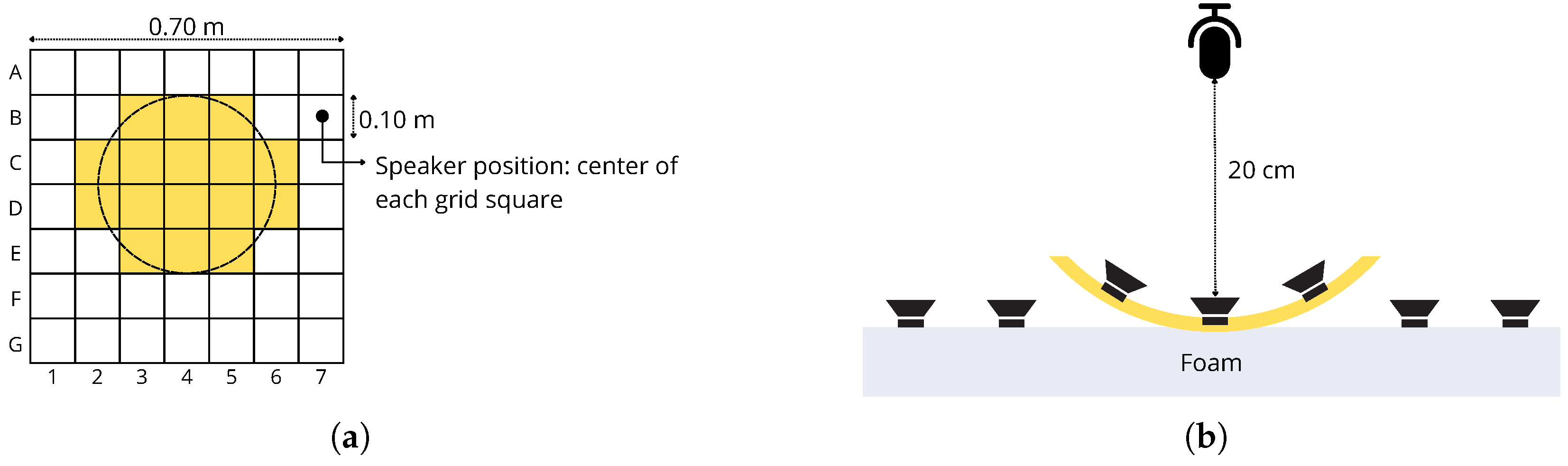

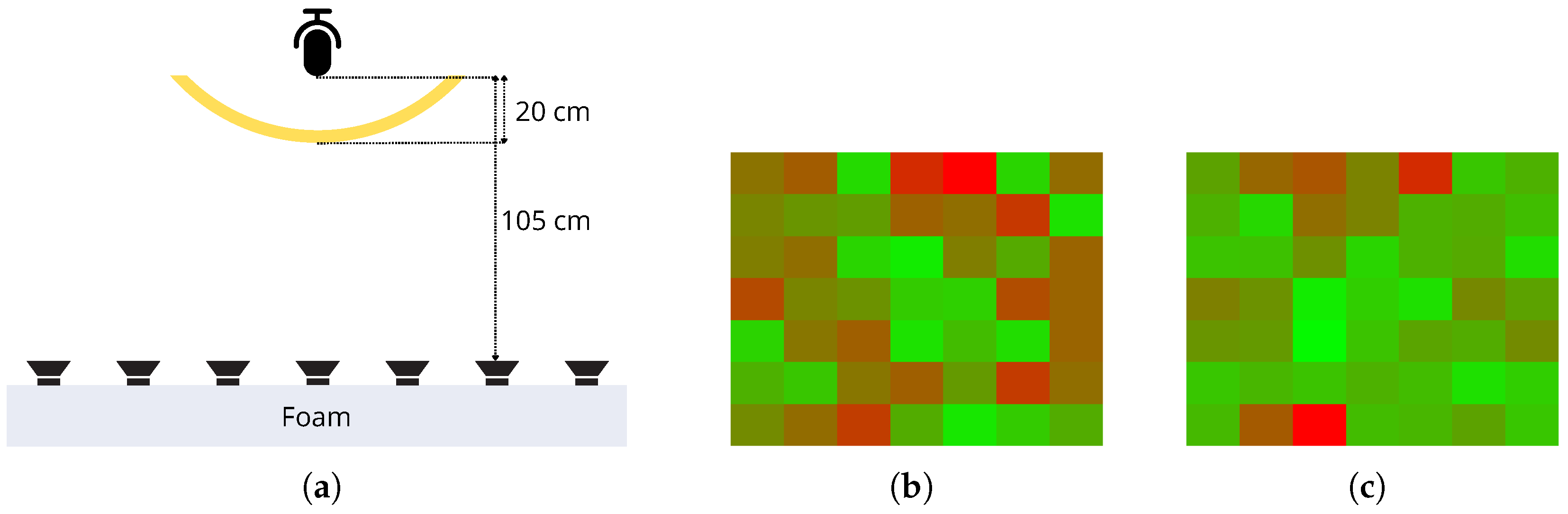

3.1. Mechanical Design

- The microphone had to be protected from wind and rain;

- The passage for the cable connecting the microphone to the hardware system had to be weatherproof;

- The hardware system itself (as described in Section 3.2) also required protection from rain; and

- The height of the satellite dish above the ground had to be adjustable so that it could be aligned with the height of the surrounding vegetation.

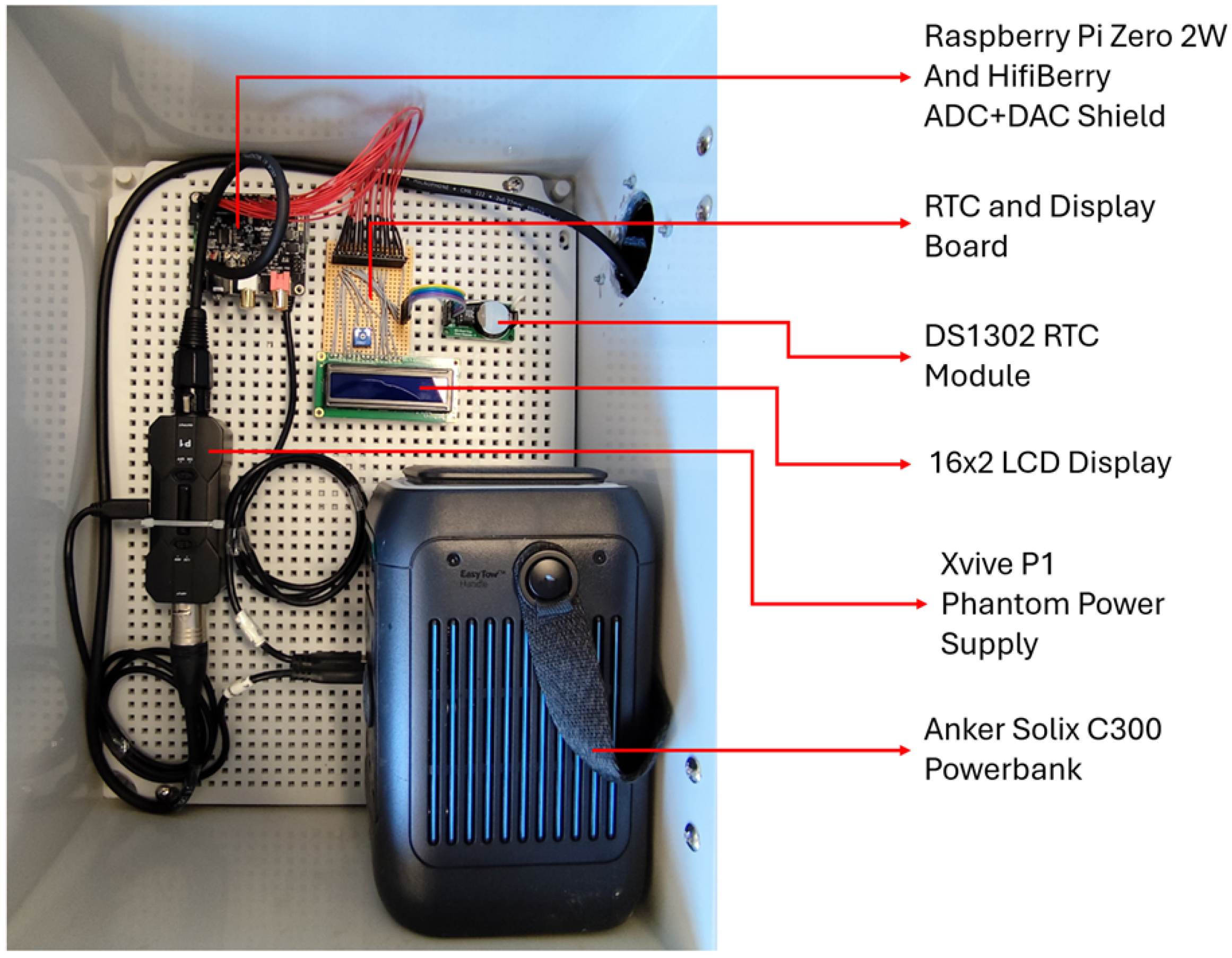

3.2. Hardware Design

3.2.1. Electronic Design

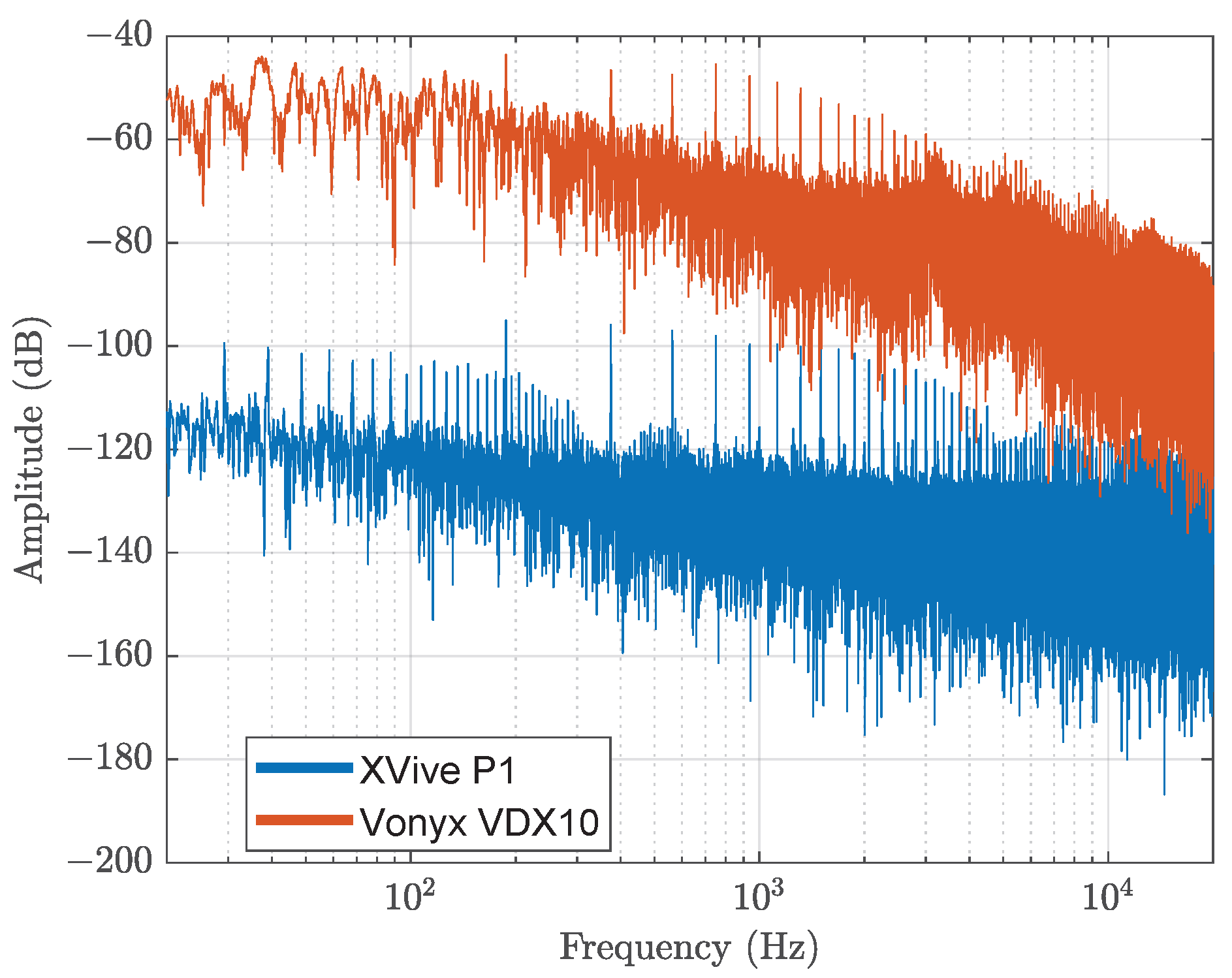

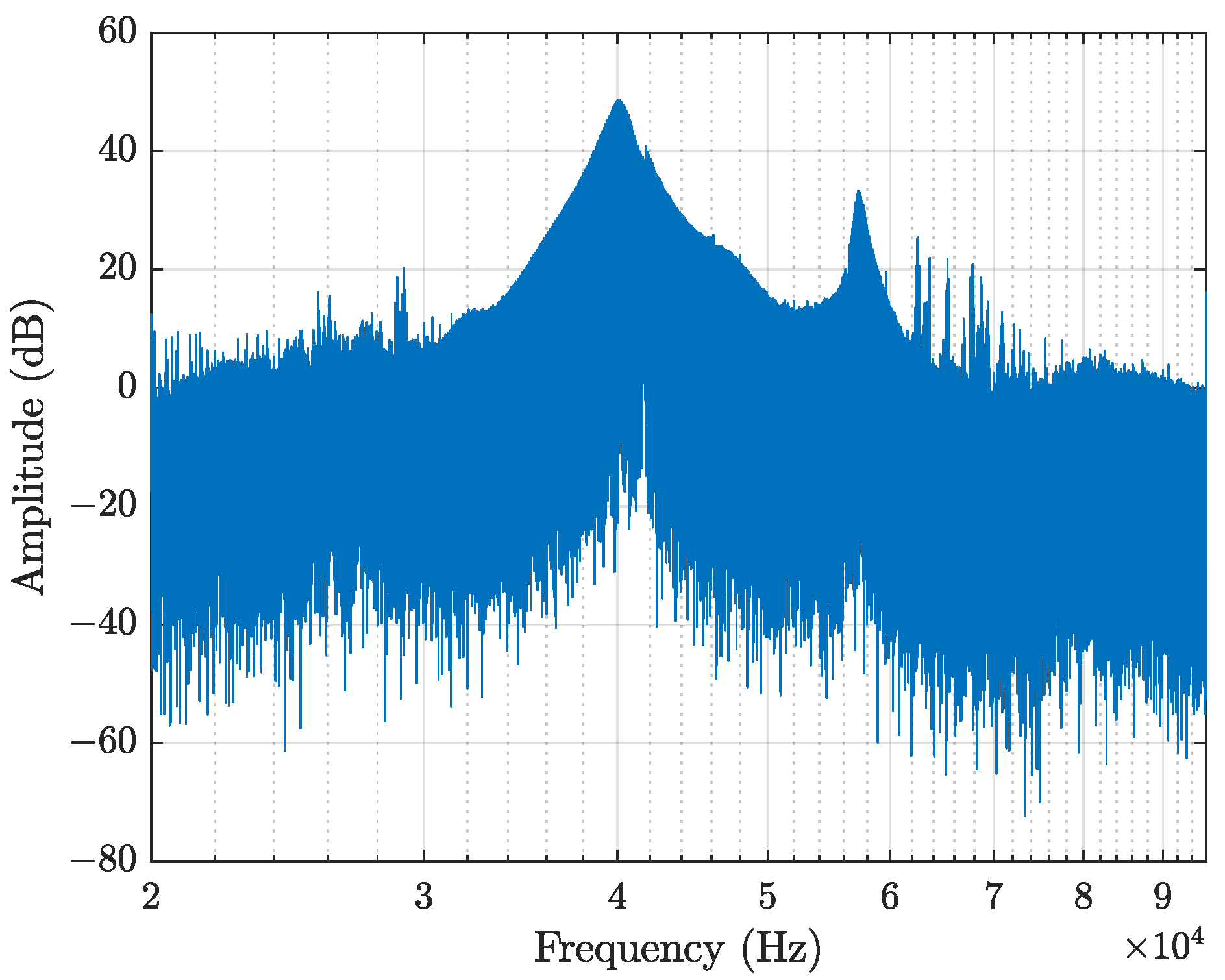

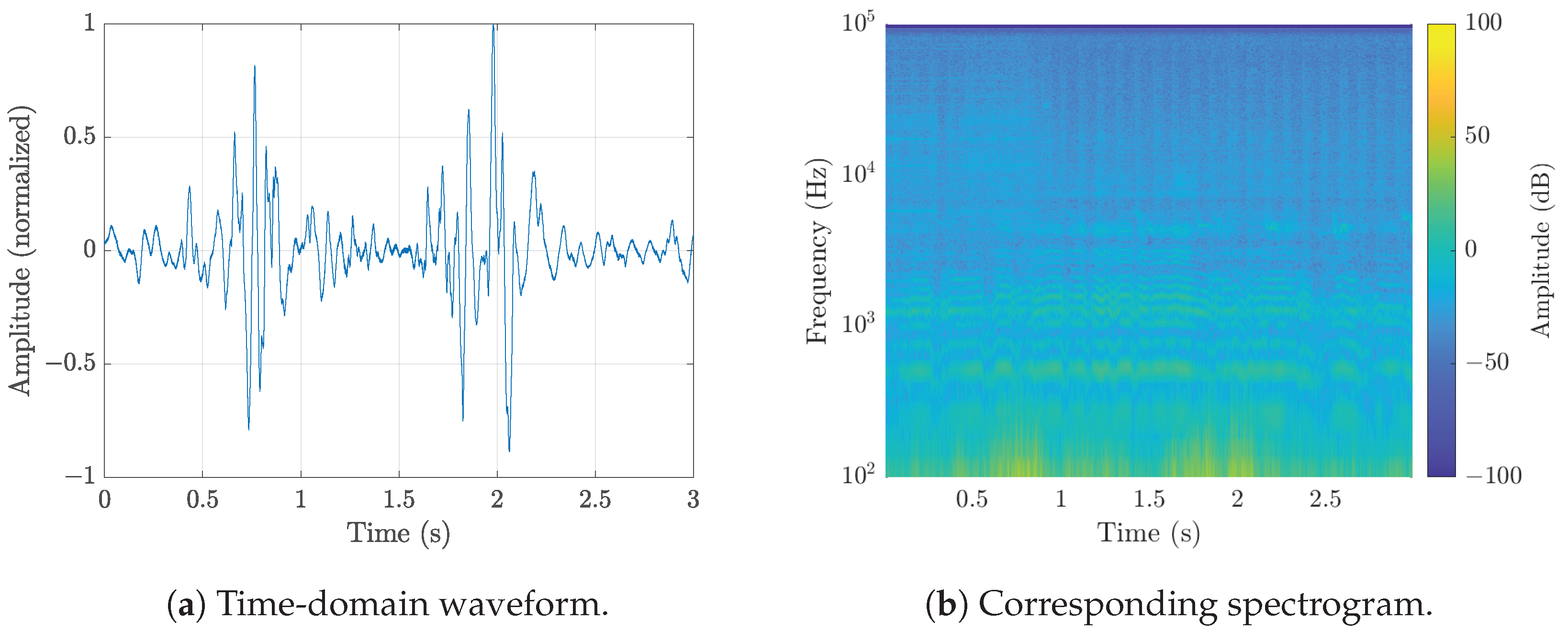

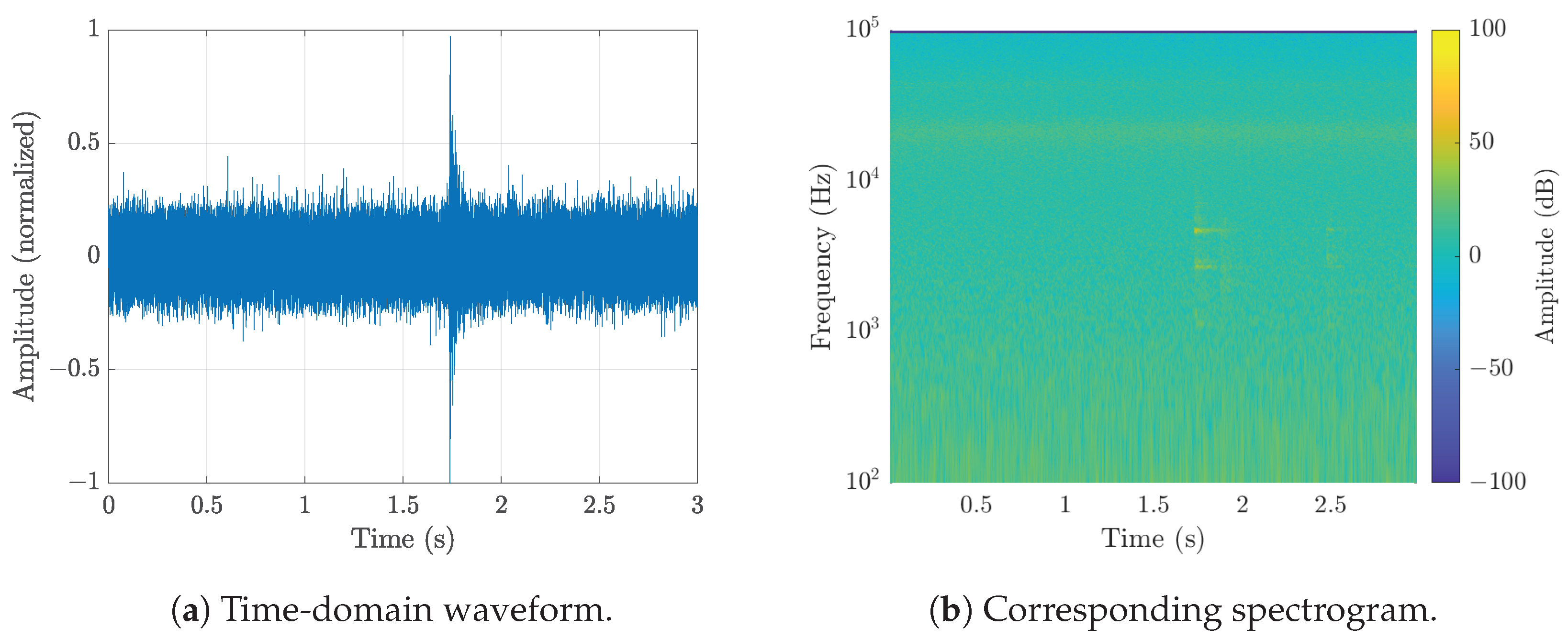

3.2.2. Microphone

3.3. Software Design

3.3.1. Audio Recording and Compression

3.3.2. Raspberry Pi Integration

4. Evaluation of the System

4.1. Runtime Analysis

1. Raspberry Pi Zero 2 W together with the HiFiBerry DAC+ADC Pro and RTC shield;

2. Xvive P1 phantom power supply with a connected artificial microphone load (cf. Section 3.2.2).

4.2. Real World Experiments

5. Insights, Lessons Learned, and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DAC | Digital-to-Analog Converter |
| ADC | Analog-to-Digital Converter |
| GPIO | General Purpose Input/Output |
| RTC | Real-Time Clock |
| LCD | Liquid Crystal Display |
| PCB | Printed Circuit Board |
| SNR | Signal-to-Noise Ratio |
| FLAC | Free Lossless Audio Codec |
| FFmpeg | Fast Forward Moving Picture Experts Group |
| WAV | Waveform Audio File Format |
| PCM | Pulse Code Modulation |
| AI | Artificial Intelligence |

References

1. Zwerts, J.A.; Stephenson, P.; Maisels, F.; Rowcliffe, M.; Astaras, C.; Jansen, P.A.; van Der Waarde, J.; Sterck, L.E.; Verweij, P.A.; Bruce, T.; et al. Methods for wildlife monitoring in tropical forests: Comparing human observations, camera traps, and passive acoustic sensors. Conserv. Sci. Pract. 2021, 3, e568.

2. Mutanu, L.; Gohil, J.; Gupta, K.; Wagio, P.; Kotonya, G. A Review of Automated Bioacoustics and General Acoustics Classification Research. Sensors 2022, 22, 8361.

3. Chung, A.W.L.; To, W.M. Mapping Soundscape Research: Authors, Institutions, and Collaboration Networks. Acoustics 2025, 7, 38.

4. Stowell, D. Computational bioacoustics with deep learning: A review and roadmap. PeerJ 2022, 10, e13152.

5. Sharma, S.; Sato, K.; Gautam, B.P. A Methodological Literature Review of Acoustic Wildlife Monitoring Using Artificial Intelligence Tools and Techniques. Sustainability 2023, 15, 7128.

6. Alberti, S.; Stasolla, G.; Mazzola, S.; Casacci, L.P.; Barbero, F. Bioacoustic IoT Sensors as Next-Generation Tools for Monitoring: Counting Flying Insects through Buzz. Insects 2023, 14, 924.

7. Passias, A.; Tsakalos, K.A.; Rigogiannis, N.; Voglitsis, D.; Papanikolaou, N.; Michalopoulou, M.; Broufas, G.; Sirakoulis, G.C. Insect Pest Trap Development and DL-Based Pest Detection: A Comprehensive Review. IEEE Trans. AgriFood Electron. 2024, 2, 323–334.

8. Kyalo, H.; Tonnang, H.; Egonyu, J.; Olukuru, J.; Tanga, C.; Senagi, K. Automatic synthesis of insects bioacoustics using machine learning: A systematic review. Int. J. Trop. Insect Sci. 2025, 45, 101–120.

9. European Parliament and Directorate-General for Parliamentary Research Services; Daheim, C.; Poppe, K.; Schrijver, R. Precision Agriculture and the Future of Farming in Europe: Scientific Foresight Study; European Parliament: Brussels, Belgium, 2016.

10. Dokic, K.; Blaskovic, L.; Mandusic, D. From machine learning to deep learning in agriculture – the quantitative review of trends. IOP Conf. Ser. Earth Environ. Sci. 2020, 614, 012138.

11. Xie, J.; Hu, K.; Zhu, M.; Guo, Y. Data-driven analysis of global research trends in bioacoustics and ecoacoustics from 1991 to 2018. Ecol. Inform. 2020, 57, 101068.

12. Schoeman, R.P.; Erbe, C.; Pavan, G.; Righini, R.; Thomas, J.A. Analysis of Soundscapes as an Ecological Tool. In Exploring Animal Behavior Through Sound: Volume 1: Methods; Springer International Publishing: Cham, Switzerland, 2022; pp. 217–267.

13. Zhang, M.; Yan, L.; Luo, G.; Li, G.; Liu, W.; Zhang, L. A novel insect sound recognition algorithm based on MFCC and CNN. In Proceedings of the 2021 6th International Conference on Communication, Image and Signal Processing (CCISP), Chengdu, China, 19–21 November 2021; IEEE: New York, NY, USA, 2021; pp. 289–294.

14. Hill, A.P.; Prince, P.; Piña Covarrubias, E.; Doncaster, C.P.; Snaddon, J.L.; Rogers, A. AudioMoth: Evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol. Evol. 2018, 9, 1199–1211.

15. Vella, K.; Capel, T.; Gonzalez, A.; Truskinger, A.; Fuller, S.; Roe, P. Key Issues for Realizing Open Ecoacoustic Monitoring in Australia. Front. Ecol. Evol. 2022, 9.

16. Verma, R.; Kumar, S. AviEar: An IoT-based Low Power Solution for Acoustic Monitoring of Avian Species. IEEE Sensors J. 2024, 24, 42088–42102.

17. Manzano-Rubio, R.; Bota, G.; Brotons, L.; Soto-Largo, E.; Pérez-Granados, C. Low-cost open-source recorders and ready-to-use machine learning approaches provide effective monitoring of threatened species. Ecol. Inform. 2022, 72, 101910.

18. Larsen, A.S.; Schmidt, J.H.; Stapleton, H.; Kristenson, H.; Betchkal, D.; McKenna, M.F. Monitoring the phenology of the wood frog breeding season using bioacoustic methods. Ecol. Indic. 2021, 131, 108142.

19. Kahl, S.; Wood, C.M.; Eibl, M.; Klinck, H. BirdNET: A deep learning solution for avian diversity monitoring. Ecol. Inform. 2021, 61, 101236.

20. Nokelainen, O.; Lauha, P.; Andrejeff, S.; Hänninen, J.; Inkinen, J.; Kallio, A.; Lehto, H.J.; Mutanen, M.; Paavola, R.; Schiestl-Aalto, P.; et al. A mobile application–based citizen science product to compile bird observations. Citiz. Sci. Theory Pract. 2024, 9, 24.

21. Sanborn, A.F.; Phillips, P.K. Scaling of sound pressure level and body size in cicadas (Homoptera: Cicadidae; Tibicinidae). Ann. Entomol. Soc. Am. 1995, 88, 479–484.

22. Madhusudhana, S.; Klinck, H.; Symes, L.B. Extensive data engineering to the rescue: Building a multi-species katydid detector from unbalanced, atypical training datasets. Philos. Trans. R. Soc. B 2024, 379, 20230444.

23. Müller, J.; Mitesser, O.; Schaefer, H.M.; Seibold, S.; Busse, A.; Kriegel, P.; Rabl, D.; Gelis, R.; Arteaga, A.; Freile, J.; et al. Soundscapes and deep learning enable tracking biodiversity recovery in tropical forests. Nat. Commun. 2023, 14, 6191.

24. Branding, J.; von Hörsten, D.; Wegener, J.K.; Böckmann, E.; Hartung, E. Towards noise robust acoustic insect detection: From the lab to the greenhouse. KI-Künstliche Intell. 2023, 37, 157–173.

25. Ribeiro, A.P.; da Silva, N.F.F.; Mesquita, F.N.; Araújo, P.d.C.S.; Rosa, T.C.; Mesquita-Neto, J.N. Machine learning approach for automatic recognition of tomato-pollinating bees based on their buzzing-sounds. PLoS Comput. Biol. 2021, 17, e1009426.

26. Çoban, E.B.; Perra, M.; Pir, D.; Mandel, M.I. EDANSA-2019: The Ecoacoustic Dataset from Arctic North Slope Alaska. In Proceedings of the DCASE, Nancy, France, 3–4 November 2022.

27. Karar, M.E.; Reyad, O.; Abdel-Aty, A.H.; Owyed, S.; Hassan, M.F. Intelligent IoT-Aided Early Sound Detection of Red Palm Weevils. Comput. Mater. Contin. 2021, 69, 4095–4111.

28. Zamanian, H.; Pourghassem, H. Insect identification based on bioacoustic signal using spectral and temporal features. In Proceedings of the 2017 Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 2–4 May 2017; IEEE: New York, NY, USA, 2017; pp. 1785–1790.

29. Noda, J.J.; Travieso-González, C.M.; Sánchez-Rodríguez, D.; Alonso-Hernández, J.B. Acoustic classification of singing insects based on MFCC/LFCC fusion. Appl. Sci. 2019, 9, 4097.

30. Prince, P.; Hill, A.; Piña Covarrubias, E.; Doncaster, P.; Snaddon, J.L.; Rogers, A. Deploying acoustic detection algorithms on low-cost, open-source acoustic sensors for environmental monitoring. Sensors 2019, 19, 553.

31. Yin, M.S.; Haddawy, P.; Ziemer, T.; Wetjen, F.; Supratak, A.; Chiamsakul, K.; Siritanakorn, W.; Chantanalertvilai, T.; Sriwichai, P.; Sa-ngamuang, C. A deep learning-based pipeline for mosquito detection and classification from wingbeat sounds. Multimed. Tools Appl. 2023, 82, 5189–5205.

32. Branding, J.; von Hörsten, D.; Böckmann, E.; Wegener, J.K.; Hartung, E. InsectSound1000 An insect sound dataset for deep learning based acoustic insect recognition. Sci. Data 2024, 11, 475.

33. Faiß, M.; Stowell, D. Adaptive representations of sound for automatic insect recognition. PLoS Comput. Biol. 2023, 19, e1011541.

34. Hexasoft. Acheta Domestica Femelle. 2006. CC0 1.0 Universal Public Domain Dedication. Available online: https://commons.wikimedia.org/wiki/File:Acheta_domestica_femelle.png (accessed on 13 September 2025).

35. Almbauer, M. Erdhummel (Bombus terrestris) 2. 2018. CC0 1.0 Universal Public Domain Dedication. Available online: https://commons.wikimedia.org/wiki/File:Erdhummel_(Bombus_terrestris)2.jpg (accessed on 13 September 2025).

36. Heng, V. Episyrphus balteatus. 2022. CC0 1.0 Universal Public Domain Dedication. Available online: https://commons.wikimedia.org/wiki/File:Episyrphus_balteatus_200921170.jpg (accessed on 13 September 2025).

37. Krisp, H. Rote Keulenschrecke (Gomphocerippus rufus) Weiblich. 2020. CC BY 4.0 International. Available online: https://commons.wikimedia.org/wiki/File:Rote_Keulenschrecke_Gomphocerippus_rufus_weiblich.jpg (accessed on 13 September 2025).

38. Gabler, P. Tettigonia viridissima. 2021. CC0 1.0 Universal Public Domain Dedication. Available online: https://commons.wikimedia.org/wiki/File:Tettigonia_viridissima_156296293.jpg (accessed on 13 September 2025).

39. Santer, R.D.; Allen, W.L. Optimising the colour of traps requires an insect’s eye view. Pest Manag. Sci. 2024, 80, 931–934.

40. Yin, M.S.; Haddawy, P.; Nirandmongkol, B.; Kongthaworn, T.; Chaisumritchoke, C.; Supratak, A.; Sa-Ngamuang, C.; Sriwichai, P. A lightweight deep learning approach to mosquito classification from wingbeat sounds. In Proceedings of the Conference on Information Technology for Social Good, Rome, Italy, 9–11 September 2021; pp. 37–42.

41. Sarria-S, F.A.; Morris, G.K.; Windmill, J.F.; Jackson, J.; Montealegre-Z, F. Shrinking wings for ultrasonic pitch production: Hyperintense ultra-short-wavelength calls in a new genus of neotropical katydids (Orthoptera: Tettigoniidae). PLoS ONE 2014, 9, e98708.

42. Microchip Technology. ATmega328/P: 8-bit AVR Microcontrollers with 32K Bytes In-System Programmable Flash; Microchip Technology Inc.: Chandler, AZ, USA, 2016.

43. Microchip Technology. SAM3X/SAM3A 32-Bit ARM Cortex-M3 Microcontroller; Microchip Technology Inc.: Chandler, AZ, USA, 2012.

44. Lytrix. Teensy4-i2s-TDM: Teensy 4 I2S TDM Audio Library with AK4619 Support. 2025. Available online: https://github.com/Lytrix/Teensy4-i2s-TDM (accessed on 25 August 2025).

45. Raspberry Pi Foundation. Raspberry Pi Zero 2 W Technical Specifications; Technical Product Page; Raspberry Pi Foundation: Cambridge, UK, 2021.

46. HiFiBerry Team. HiFiBerry DAC+ADC Pro Hardware Specification; Technical Datasheet; HiFiBerry: Zurich, Switzerland, 2023; Available online: https://www.hifiberry.com/docs/data-sheets/datasheet-dac-adc-pro/ (accessed on 21 July 2025).

47. Maxim Integrated. DS1302: Trickle-Charge Timekeeping Chip; Rev 2; Datasheet; Maxim Integrated: San Jose, CA, USA, 2022.

48. Balingbing, C.B.; Kirchner, S.; Siebald, H.; Kaufmann, H.H.; Gummert, M.; Van Hung, N.; Hensel, O. Application of a multi-layer convolutional neural network model to classify major insect pests in stored rice detected by an acoustic device. Comput. Electron. Agric. 2024, 225, 109297.

49. Banga, K.S.; Kotwaliwale, N.; Mohapatra, D.; Babu, V.B.; Giri, S.K.; Bargale, P.C. Assessment of bruchids density through bioacoustic detection and artificial neural network (ANN) in bulk stored chickpea and green gram. J. Stored Prod. Res. 2020, 88, 101667.

50. Robles-Guerrero, A.; Saucedo-Anaya, T.; Guerrero-Mendez, C.A.; Gómez-Jiménez, S.; Navarro-Solís, D.J. Comparative study of machine learning models for bee colony acoustic pattern classification on low computational resources. Sensors 2023, 23, 460.

51. Zhang, R.R. PEDS-AI: A novel unmanned aerial vehicle based artificial intelligence powered visual-acoustic pest early detection and identification system for field deployment and surveillance. In Proceedings of the 2023 IEEE Conference on Technologies for Sustainability (SusTech), Portland, OR, USA, 19–22 April 2023; IEEE: New York, NY, USA, 2023; pp. 12–19.

52. XVive. P1 Portable Phantom Power. Available online: https://xvive.com/audio/product/p1-portable-phantom-power/ (accessed on 17 July 2025).

53. Vonyx. VDX10 Phantom Power. Available online: https://www.maxiaxi.de/vonyx-vdx10-phantomspeisung-48-volt-universal-phantom-power-supply-phantom-leistungsversorgung-mit-adapter-fur-studio-kondensator-mikrofone/ (accessed on 17 July 2025).

54. Morris, G.; Mason, A. Covert stridulation: Novel sound generation by a South American katydid. Naturwissenschaften 1995, 82, 96–98.

55. Anderson, M.; Anderson, B. An Analysis of Data Compression Algorithms in the Context of Ultrasonic Bat Bioacoustics. Bachelor’s Thesis, Linnaeus University, Department of Computer Science and Media Technology, Växjö, Sweden, 2022.

56. Low, M.L.; Naranjo, M.; Yack, J.E. Survival sounds in insects: Diversity, function, and evolution. Front. Ecol. Evol. 2021, 9, 641740.

57. Nordic Semiconductor. Power Profiler Kit II Current Measurement Tool for Embedded Development, 1st ed.; Nordic Semiconductor: Trondheim, Norway, 2020.

58. Deutscher Wetterdienst (DWD). Climate Data Center (CDC): Precipitation and Wind Data for Lower-Saxony, April 2025. 2025. Available online: https://www.dwd.de (accessed on 6 August 2025).

| Microphone | Used in Study | Price (July 2025) | SNR | Sensitivity | Directivity |
|---|---|---|---|---|---|
| Adafruit I2S MEMS microphone | Balingbing et al., 2024 [48] | $6.95 (∼€6.00) | 65 dB | −26 dBV/Pa | Omnidirectional |
| CZN-15E electret condenser microphone | Banga et al., 2020 [49] | €0.50 | 60 dB | −58 dBV/Pa | Omnidirectional |
| Brüel & Kjær Type 4955 microphone | Branding et al., 2023 [24] | €5,200.00 | 87.5 dB | 0.83 dBV/Pa | Omnidirectional |
| Electret MAX4466 | Robles-Guerrero et al., 2023 [50] | $6.95 (∼€6.00) | 60 dB | −44 dBV/Pa | Omnidirectional |
| Primo low-cost EM172 | Yin et al., 2023 [31] | £12.78 (∼€15) | 80 dB | −28 dBV/Pa | Omnidirectional |
| Røde VideoMic Me cardioid mini-shotgun microphone | Zhang, 2023 [51] | €79.99 | 75 dB | −33 dBV/Pa | Directional |
| Behringer B-5 | Present study | €31.00 | 78 dB (cardioid), 76 dB (omnidirectional) | −38 dBV/Pa | Two interchangeable capsules: omnidirectional and directional |
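The sensitivity column is expressed in dBV/Pa, i.e. decibels relative to 1 V of output per pascal of sound pressure, which makes the spread between devices easy to underestimate. The sketch below converts those values to mV/Pa and, as a rough comparison, derives an equivalent self-noise level from each SNR. It assumes that every SNR figure is referenced to 94 dB SPL (1 Pa), a common datasheet convention; the individual datasheets are not reproduced here, so that reference level and any frequency weighting are assumptions. The Adafruit I2S MEMS microphone is left out because it delivers a digital I2S stream, so a voltage-based sensitivity does not translate directly.

```python
# Sensitivity (dBV/Pa) and SNR (dB) of the analog microphones in the comparison table.
# For the Behringer B-5, the cardioid-capsule SNR (78 dB) is used.
MICROPHONES = {
    "CZN-15E": (-58.0, 60.0),
    "Brüel & Kjær Type 4955": (0.83, 87.5),
    "Electret MAX4466": (-44.0, 60.0),
    "Primo EM172": (-28.0, 80.0),
    "Røde VideoMic Me": (-33.0, 75.0),
    "Behringer B-5 (cardioid)": (-38.0, 78.0),
}

# Assumption: SNR figures are referenced to 94 dB SPL (1 Pa); individual
# datasheets may use a different reference level or weighting.
REFERENCE_SPL_DB = 94.0


def sensitivity_mv_per_pa(dbv_per_pa: float) -> float:
    """Convert a sensitivity in dBV/Pa (dB re 1 V/Pa) to mV/Pa."""
    return 1000.0 * 10.0 ** (dbv_per_pa / 20.0)


def self_noise_db_spl(snr_db: float, reference_spl_db: float = REFERENCE_SPL_DB) -> float:
    """Equivalent self-noise implied by an SNR quoted against reference_spl_db."""
    return reference_spl_db - snr_db


for name, (sens_dbv, snr_db) in MICROPHONES.items():
    print(f"{name:26s} {sensitivity_mv_per_pa(sens_dbv):9.2f} mV/Pa, "
          f"self-noise ~{self_noise_db_spl(snr_db):.1f} dB SPL")
```

Under these assumptions, the B-5's −38 dBV/Pa corresponds to roughly 12.6 mV/Pa and its 78 dB SNR to a self-noise of about 16 dB SPL, whereas the Brüel & Kjær Type 4955 delivers about 1.1 V/Pa.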

| Raspberry Pi power, mean (mW) | Raspberry Pi power, std (mW) | Phantom power, mean (mW) | Phantom power, std (mW) | System total, mean (mW) | System total, std (mW) | Est. runtime at 10 Wh (h) | Est. runtime at 20 Wh (h) | Est. runtime at 50 Wh (h) | Est. runtime at 100 Wh (h) | Est. runtime at 288 Wh (h) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1170.72 | 213.96 | 326.84 | 1.56 | 1497.56 | 214.04 | 6.68 | 13.36 | 33.39 | 66.78 | 192.31 |
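The runtime columns are consistent with a simple energy budget: battery capacity divided by the mean total power draw (for example, 288 Wh / 1.49756 W ≈ 192.3 h). The sketch below reproduces that arithmetic under the assumption of an ideal battery, i.e. the full nominal capacity is usable and converter losses, self-discharge, and temperature effects are ignored, so field runtimes will likely be somewhat shorter. The function name is illustrative only.

```python
# Mean power draw in mW, as listed in the runtime analysis table.
RASPBERRY_PI_MW = 1170.72  # Raspberry Pi Zero 2 W + HiFiBerry DAC+ADC Pro + RTC shield
PHANTOM_MW = 326.84        # Xvive P1 phantom power supply with artificial microphone load


def estimated_runtime_h(capacity_wh: float, load_mw: float) -> float:
    """Ideal runtime in hours: nominal capacity (Wh) divided by constant mean power (W).

    Assumes the full nominal capacity is usable; ignores converter losses,
    self-discharge, and temperature effects.
    """
    return capacity_wh / (load_mw / 1000.0)


total_mw = RASPBERRY_PI_MW + PHANTOM_MW  # about 1497.56 mW

for capacity in (10, 20, 50, 100, 288):
    print(f"{capacity:>4} Wh -> {estimated_runtime_h(capacity, total_mw):7.2f} h")
```

Because the phantom supply's draw is almost constant (standard deviation 1.56 mW), nearly all of the variability in the total comes from the Raspberry Pi side.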

| Trap No. | Size (GB) | File Count |
|---|---|---|
| 1 | 189 | 35,189 |
| 2 | 192 | 33,736 |
| 3 | 158 | 29,057 |
| 4 | 179 | 36,385 |
| 5 | 191 | 34,864 |
| 6 | 192 | 35,306 |
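Dividing each trap's data volume by its file count gives the average size of one recording file, which serves as a quick consistency check across the six traps. A minimal sketch, assuming the sizes are given in decimal units (1 GB = 1000 MB); the helper name is hypothetical.

```python
# Recorded data per trap as listed in the table: (size in GB, number of files).
TRAP_DATA = {
    1: (189, 35_189),
    2: (192, 33_736),
    3: (158, 29_057),
    4: (179, 36_385),
    5: (191, 34_864),
    6: (192, 35_306),
}


def mean_file_size_mb(size_gb: float, file_count: int) -> float:
    """Average file size in MB, assuming decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000.0 / file_count


total_gb = sum(size for size, _ in TRAP_DATA.values())
total_files = sum(count for _, count in TRAP_DATA.values())

for trap, (size_gb, count) in TRAP_DATA.items():
    print(f"Trap {trap}: {mean_file_size_mb(size_gb, count):.2f} MB per file")
print(f"All traps: {total_gb} GB in {total_files} files, "
      f"{mean_file_size_mb(total_gb, total_files):.2f} MB per file on average")
```

Across all six traps this amounts to 1101 GB in 204,537 files, or roughly 5.4 MB per file on average.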

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Seyidbayli, C.; Fengler, B.; Szafranski, D.; Reinhardt, A. Acoustic Trap Design for Biodiversity Detection. IoT 2025, 6, 58. https://doi.org/10.3390/iot6040058

Seyidbayli C, Fengler B, Szafranski D, Reinhardt A. Acoustic Trap Design for Biodiversity Detection. IoT. 2025; 6(4):58. https://doi.org/10.3390/iot6040058

Chicago/Turabian Style: Seyidbayli, Chingiz, Bárbara Fengler, Daniel Szafranski, and Andreas Reinhardt. 2025. "Acoustic Trap Design for Biodiversity Detection" IoT 6, no. 4: 58. https://doi.org/10.3390/iot6040058

APA Style: Seyidbayli, C., Fengler, B., Szafranski, D., & Reinhardt, A. (2025). Acoustic Trap Design for Biodiversity Detection. IoT, 6(4), 58. https://doi.org/10.3390/iot6040058