Abstract

This paper presents a novel video encoding and decoding method aimed at enhancing security and reducing storage requirements, particularly for CCTV systems. The technique merges two video streams of matching frame dimensions into a single stream, optimizing disk space usage without compromising video quality. The combined video is secured using an Advanced Encryption Standard (AES)-based shift algorithm that rearranges pixel positions, preventing unauthorized access. During decoding, the AES shift is reversed, enabling precise reconstruction of the original videos. This approach provides a space-efficient and secure solution for managing multiple video feeds while ensuring accurate recovery of the original content. The experimental results demonstrate that the transmission time for the encoded video is consistently shorter than that for transmitting the video streams separately. This, in turn, reduces energy consumption across diverse outdoor and indoor video datasets, highlighting the improvements in both transmission efficiency and energy savings achieved by the proposed scheme.

1. Introduction

In recent times, advances in computing technologies, along with the fall in the cost of Closed-Circuit Television (CCTV) cameras, have led to their widespread deployment for various industrial and consumer use cases, commonly referred to as Internet of Video Things (IoVT) applications [1]. These include surveillance for law and order, environmental monitoring, smart city management, retail use cases, and intelligent transportation solutions, to name a few. In particular, CCTV cameras for surveillance purposes have become a ubiquitous sight on streets worldwide and in indoor settings.

The rapid growth of digital video applications in surveillance systems has intensified the need for scalable, efficient, and secure storage solutions. CCTV systems [2] generate massive amounts of video data, especially in static environments where only objects in motion are recorded within the frame. As the use of these systems expands, the effective management of large video datasets, while ensuring the security and integrity of sensitive footage, has emerged as a significant challenge for both industry and academia.

Traditional methods for storing and transmitting video data often fall short when applied to modern surveillance environments. Originally designed for entertainment or broadcasting purposes, these methods are not optimized for the long-term storage, secure access, or quality preservation required by surveillance footage. Moreover, the large volume of video data from multiple camera feeds exacerbates the problem, driving up storage costs and complicating data retrieval. Network bandwidth limitations further hinder the real-time transmission of high-resolution video streams, particularly in distributed surveillance networks.

At the core of this issue is the trade-off among storage efficiency, video quality, and security. To address these limitations, we propose a novel framework specifically designed for surveillance applications. Our approach merges two video streams from two different cameras where frame dimensions from both streams are the same, offering both storage efficiency and security. By combining two video streams frame-by-frame, our method reduces storage requirements by half without sacrificing video quality. The encoding and decoding processes are designed to maintain all important visual information required for surveillance tasks. The system employs intelligent compression and pixel rearrangement techniques that minimize data loss while keeping key details intact. This ensures that, upon decoding, the two original video streams can be effectively reconstructed with negligible quality degradation sufficient to support reliable monitoring and analysis.

Security is another critical concern in surveillance systems, as unauthorized access to video data could have severe consequences. To protect the integrity of video data, we embed Advanced Encryption Standard (AES) [3] encryption directly into the encoding process. This ensures that video data remain secure during both storage and transmission, without adding significant computational overhead or degrading video quality. Integrating encryption within the encoding framework also eliminates the need for additional post-processing steps, providing seamless protection.

Our proposed framework is particularly suitable for surveillance systems that generate large volumes of video data and operate under bandwidth constraints. For example, in large-scale CCTV networks, our method allows multiple feeds to be recorded simultaneously in a single stream, significantly reducing the required storage space on central servers. When needed, the original footage can be fully reconstructed without any loss of detail. The experimental results demonstrate that our proposed approach achieves a significant reduction in video data size compared to the raw uncompressed data. Furthermore, the proposed method achieves lower average transmission time and energy consumption than transmitting the videos separately using the baseline codec. This makes our framework a practical solution for modern surveillance systems, where both storage efficiency and security are critical.

The remainder of this paper is organized as follows: Section 2 reviews existing approaches to video storage and security in surveillance systems. Section 3 outlines the core challenges our work addresses. Section 4 highlights the major contributions of our research, while Section 5 delves into the technical details of our proposed encoding and decoding framework. Section 6 presents experimental results demonstrating the effectiveness of our approach in real-world surveillance environments. Finally, Section 7 offers concluding remarks and discusses future research directions.

2. Literature Survey

Efficient video transmission and compression are crucial for multimedia applications, as they aim to reduce bandwidth usage while preserving video quality. Traditional methods have largely focused on frame-by-frame compression, but recent research has introduced more advanced techniques that leverage frame-level analysis, including splicing, interpolation, and reconstruction. These approaches have proven effective in enhancing compression efficiency and improving transmission performance.

A typical video compression system consists of two primary components: an encoder that compresses the video and a decoder that reconstructs it, together forming a codec. Modern video compression techniques can be broadly categorized into three main types: intra-frame, inter-frame, and block-based compression.

Inter-frame-based compression has advanced significantly, as demonstrated by an approach utilizing adaptive Fuzzy Inference Systems (FIS) [4]. This method compresses video by extracting and compressing statistical features from adjacent frames. The adaptive FIS is trained to compress the features of two adjacent frames into one, reducing data redundancy between frames and improving compression efficiency. Another effective approach is the differential reference frame coder [5], which combines differential coding with intra-prediction to minimize spatial redundancy in video frames. This method reduces memory bandwidth consumption by applying semi-fixed-length coding to the residuals from the differential coding process. It was evaluated on HD 1080p video sequences and has the added benefit of supporting random access to reference frame blocks. In the realm of image compression, fractal block coding has emerged as a promising technique [6]. This method relies on two key principles: (i) image redundancy can be minimized by leveraging the self-transformable properties of images at the block level, and (ii) original images can be approximated using fractal transformations. By generating a fractal code, the encoder iteratively applies transformations to converge on a fractal approximation of the original image. This technique has demonstrated effective compression of monochrome images at bit-per-pixel rates that rival vector quantization methods.

Building on the principles of fractal compression, a more advanced fractal video encoder has been developed, offering improved performance [7]. This searchless fractal encoder uses adaptive spatial subdivision to eliminate the need for domain block matching, which previously slowed down the encoding process. As a result, this technique achieves faster encoding and decoding, outperforming the state-of-the-art encoder at low bit rates, particularly for high-motion video sequences, as measured by the structural dissimilarity metric and encoding speed.

Video compression continues to be a critical area of research, especially as the demand for high-resolution content grows. The challenges of meeting bandwidth constraints while supporting high-quality video have driven the development of increasingly sophisticated compression algorithms. A comprehensive review of recent advancements in this field [8] highlights the growing role of artificial intelligence in compression techniques. AI-based models, particularly those leveraging deep learning, are emerging as the most promising candidates for setting new industry standards in video compression, offering both computational efficiency and scalability for high-definition video.

Image encryption and authentication have gained significant attention in recent years due to increasing concerns over secure image transmission and verification. Xue et al. [9] proposed a novel multi-image authentication, encryption, and compression scheme that integrates Double Random Phase Encoding (DRPE) with Compressive Sensing (CS) to simultaneously address image security and authentication challenges. Their approach leverages a random sampling matrix to downsample multiple plaintext images and combines them into a single composite image, which is then encrypted using DRPE to generate batch authentication information. This method enhances authentication efficiency by embedding authentication data and ciphertext images into a carrier image within an Encryption-Then-Compression (ETC) framework. The scheme outperforms traditional single-image and double-image authentication methods by improving authentication quality and reducing the size of authentication information, enabling effective batch authentication without compromising image reconstruction quality. This work highlights the potential of combining compressive sensing and DRPE techniques to advance secure and efficient multi-image transmission systems.

Begum et al. [10] proposed a secure and efficient compression technique that integrates encryption with the Burrows–Wheeler Transform (BWT) to ensure both data confidentiality and compression performance. Their approach scrambles the input data using a secret key and applies BWT, followed by Move-To-Front (MTF) and Run-Length Encoding (RLE), to achieve high compression ratios. The method embeds the cryptographic principles of confusion and diffusion into the compression workflow, providing strong security without compromising compression efficiency. The experimental results supported the compression performance, and a security analysis confirmed sensitivity to the secret key and plaintext. However, their approach works only on a single video data stream.

Abdo et al. [11] proposed a hybrid approach for enhancing the security and compression of data streams in cloud computing environments. Their technique combines multiple layers of robust symmetric encryption algorithms with a compression algorithm to ensure both data confidentiality and transmission efficiency. This dual-layered method is designed to be suitable for real-time applications. Its effectiveness was validated through performance metrics such as processing time, space-saving percentage, and a randomness test, with results supporting the randomness of the ciphertext. This makes the method reliable for securing sensitive cloud data without compromising compression quality. However, their approach does not consider the case of combining two video streams.

Majumder et al. [12] presented an energy-efficient, lossless transmission scheme for transmitting streaming video data over a wireless channel from visual sensors. Their approach exploits temporal correlations in video frames to eliminate redundant information. However, their proposed approach works only on single video streams and does not incorporate any aspect of data security.

“System and Method for H.265 Encoding” presents a two-stage motion estimation approach to improve the efficiency of H.265 encoding [13,14]. It begins with a coarse search to identify approximate motion vectors, followed by a refined local search for precise vector selection based on cost optimization. The method also filters adjacent motion vectors from neighboring blocks to enhance prediction accuracy. To support real-time encoding, the system adopts a seven-stage pipelined architecture, enabling parallel processing across encoding tasks. This design significantly reduces computational load while maintaining high compression performance, making it a valuable advancement in H.265 encoding techniques.

3. Problem Statement

The rapid advancement of video compression techniques has led to substantial reductions in data size while preserving high visual fidelity. Methods such as inter-frame compression, fractal block coding, and adaptive fuzzy inference systems [4,5,6,7] have effectively minimized redundancies within video data, thereby enhancing compression efficiency and transmission speed. Innovations like searchless fractal encoders [7] have further boosted performance, particularly in high-motion scenarios typical of surveillance environments.

Despite these improvements, significant challenges remain in the context of video surveillance systems. Continuous recording from multiple cameras generates massive volumes of video data, placing considerable strain on storage infrastructure. As the deployment of high-resolution cameras expands, the demand for storage grows exponentially. Traditional compression techniques, while effective to some extent, often fall short in addressing the dual requirements of substantial storage reduction and strong data security.

Additionally, transmitting continuous surveillance streams to centralized storage servers significantly increases communication costs, particularly in terms of energy consumption and transmission time. These limitations further emphasize the necessity for a comprehensive solution tailored to the unique constraints and requirements of surveillance environments.

In surveillance applications, the integrity and fidelity of the video content are paramount. Even minor quality degradation can compromise the utility of footage for critical tasks such as forensic analysis and evidence collection. Consequently, there is an urgent need for a novel approach that can simultaneously optimize storage, maintain video quality, and ensure the bandwidth-efficient, secure transmission and archiving of video data.

4. Our Contribution

In response to the challenges of secure and efficient video storage in surveillance systems, we introduce a novel framework that enhances both video security and storage optimization through an innovative encoding and decoding mechanism. Our approach merges two video streams frame-by-frame into a single composite frame, significantly reducing the overall storage footprint. By employing an encoding format, we ensure that the original quality of each video stream is fully preserved during the merging and retrieval processes, avoiding any loss of detail that could compromise the effectiveness of surveillance footage.

A key component of our contribution is the integration of an advanced shifting technique directly within the encoding process. This enhances the security of the combined video streams, ensuring that video data remain protected throughout storage and transmission, while mitigating risks of unauthorized access or tampering. Importantly, our method allows for the seamless reconstruction of the original video streams without any degradation in quality, making it ideally suited for security-critical applications such as CCTV surveillance systems. By securely combining two video feeds into a single stream and applying robust encryption, our technique achieves substantial reductions in storage requirements while maintaining the full fidelity of the original videos. This approach is particularly valuable in surveillance environments where the secure management of large volumes of video data is essential, yet storage space is limited.

Our experimental results demonstrate that our proposed scheme achieves a substantial reduction in energy consumption across various video datasets encompassing both indoor and outdoor scenarios, highlighting significant improvements in both transmission efficiency and energy savings. Our solution not only optimizes storage efficiency but also ensures that the security of sensitive surveillance footage is never compromised, providing a practical and scalable solution for modern surveillance systems.

5. Proposed Framework

In this work, we present a novel encoding and decoding framework aimed at optimizing video transmission and storage efficiency while enhancing security. The proposed technique merges two video streams on a frame-by-frame basis using a predefined set of rules, followed by the application of AES-based shifting encryption [15] to secure the data. After transmission, the combined video is decoded and the original streams are reconstructed at the receiver’s end.

Our proposed approach offers two advantages: reduced storage requirements and enhanced security. By merging the two video streams, the total disk space needed is significantly reduced, offering a more efficient solution compared to transmitting each video separately. Additionally, the combination of video streams and AES encryption provides a robust layer of protection, making it much harder for unauthorized entities to access the original content. The framework is specifically designed for video streams with matching frame sizes to ensure seamless merging and accurate reconstruction during the decoding process.

The video processing begins by extracting frames from the input video streams and saving them as Portable Network Graphics (PNG) [16] images. This approach preserves the original quality of the frames, ensuring no data are lost during the extraction process and capturing every detail accurately. Once the frames are extracted, the corresponding frames from both videos are combined. This process involves taking the pixel data from each frame and alternating pixels between the two to create a single, combined frame. These combined frames are then saved for further use.

In cases where one video contains more frames than the other, the extra frames from the longer video are appended to the combined set. This ensures that all frames are included in the final output, preserving the integrity of both video streams. Furthermore, the combined video stream may be compressed using the HEVC video codec to further reduce the size of the video data to be transmitted.
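The frame sequencing described above can be sketched as follows. This is a minimal illustration and not the authors' implementation; the function name `plan_combined_sequence` and the `"pair"`, `"first"`, and `"second"` labels are hypothetical.

```python
def plan_combined_sequence(n_first, n_second):
    """Describe the combined stream as a list of (kind, index) entries.

    kind is "pair" for a frame built from both videos, or "first"/"second"
    for a leftover frame copied from the longer video (labels are illustrative).
    """
    paired = min(n_first, n_second)                 # frames that can be merged
    plan = [("pair", i) for i in range(paired)]
    plan += [("first", i) for i in range(paired, n_first)]    # extras, if any
    plan += [("second", i) for i in range(paired, n_second)]  # extras, if any
    return plan


plan = plan_combined_sequence(5, 3)
print(plan)
```

Under this scheme the combined stream holds as many frames as the longer input, instead of the sum of both frame counts.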

To secure the combined frames, AES encryption is applied. The AES shift algorithm rearranges the pixel positions within each frame, making the data unreadable to unauthorized users without altering the actual pixel values. This step enhances the security of the data, ensuring that they remain protected during transmission.

During the decryption process, the AES re-shift algorithm is used to restore the pixel positions to their original order. By applying the same shift pattern that was used during encryption, the combined frames are accurately reconstructed, maintaining the original structure of the data. Finally, the original video streams are retrieved from the combined frames by examining each frame and separating the pixel data belonging to each of the two original videos. This process reconstructs the individual frames, allowing for the recovery of both video streams.

5.1. Encoder Side-Combining Process

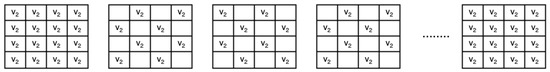

The process begins by extracting frames from the videos and saving them as PNG images to maintain high quality, thereby ensuring accurate visual representation. The number of frames that can be combined is the smaller of the two videos' frame counts, ensuring that each paired frame is processed without omission. Once the frames are extracted and the frame count is determined, the combining phase begins.

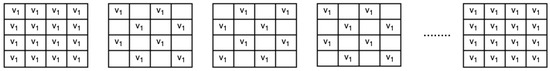

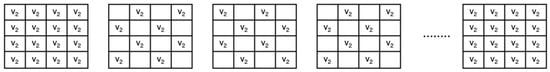

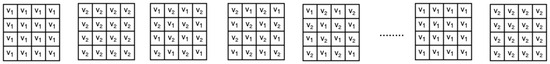

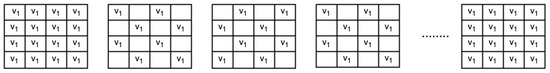

In the initial step, the first frame from the first video and the first frame from the second video are transmitted exactly as they are, with no modifications, as depicted in Figure 1 and Figure 2 using a grid representation. For the second frame, an alternating pattern is applied in which the source video of each pixel depends on the parity of its row and column: within odd rows, even columns take pixels from one video and odd columns from the other, and even rows follow their own even/odd column assignment. For the third frame, the roles of the two videos are reversed. This alternation continues for all subsequent frames in the combined video, as shown in Figure 3. Once all paired frames have been combined, any remaining frames from the first video are appended in full, followed by any extra frames from the second video. This approach maintains the original structure of both videos at the expense of some information loss due to the skipping of alternate rows from each frame, as specified in encoding Algorithm 1. The missing pixels are recreated through interpolation by decoding Algorithm 2, described in Section 5.4.
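A minimal sketch of one such alternating pattern is given below. It assumes a checkerboard rule, in which a pixel comes from the first frame when the sum of its 0-based row and column indices is even; the exact assignment used in Algorithm 1 may differ. The function name `interleave` and the `flip` parameter are hypothetical.

```python
def interleave(frame_a, frame_b, flip=False):
    """Build one combined frame by alternating pixels between two frames.

    Assumed checkerboard rule (0-based indices): a pixel comes from frame_a
    when (row + col) is even and from frame_b otherwise. flip=True swaps the
    two roles, as would be done for the next frame in the sequence.
    """
    if len(frame_a) != len(frame_b) or any(
        len(ra) != len(rb) for ra, rb in zip(frame_a, frame_b)
    ):
        raise ValueError("frames must have matching dimensions")
    combined = []
    for r, (row_a, row_b) in enumerate(zip(frame_a, frame_b)):
        out_row = []
        for c, (pa, pb) in enumerate(zip(row_a, row_b)):
            from_a = ((r + c) % 2 == 0) != flip
            out_row.append(pa if from_a else pb)
        combined.append(out_row)
    return combined


a = [["a"] * 4 for _ in range(2)]   # toy 2x4 frame from the first video
b = [["b"] * 4 for _ in range(2)]   # toy 2x4 frame from the second video
print(interleave(a, b))
print(interleave(a, b, flip=True))
```

Because the roles flip from one frame to the next, a pixel position that is dropped for one video in a given frame is present for that video in the neighbouring frames, which is what makes temporal interpolation at the decoder possible.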

Figure 1. The first input video depicted in frames.

Figure 2. The second input video depicted in frames.

Figure 3. Combined video in frames.

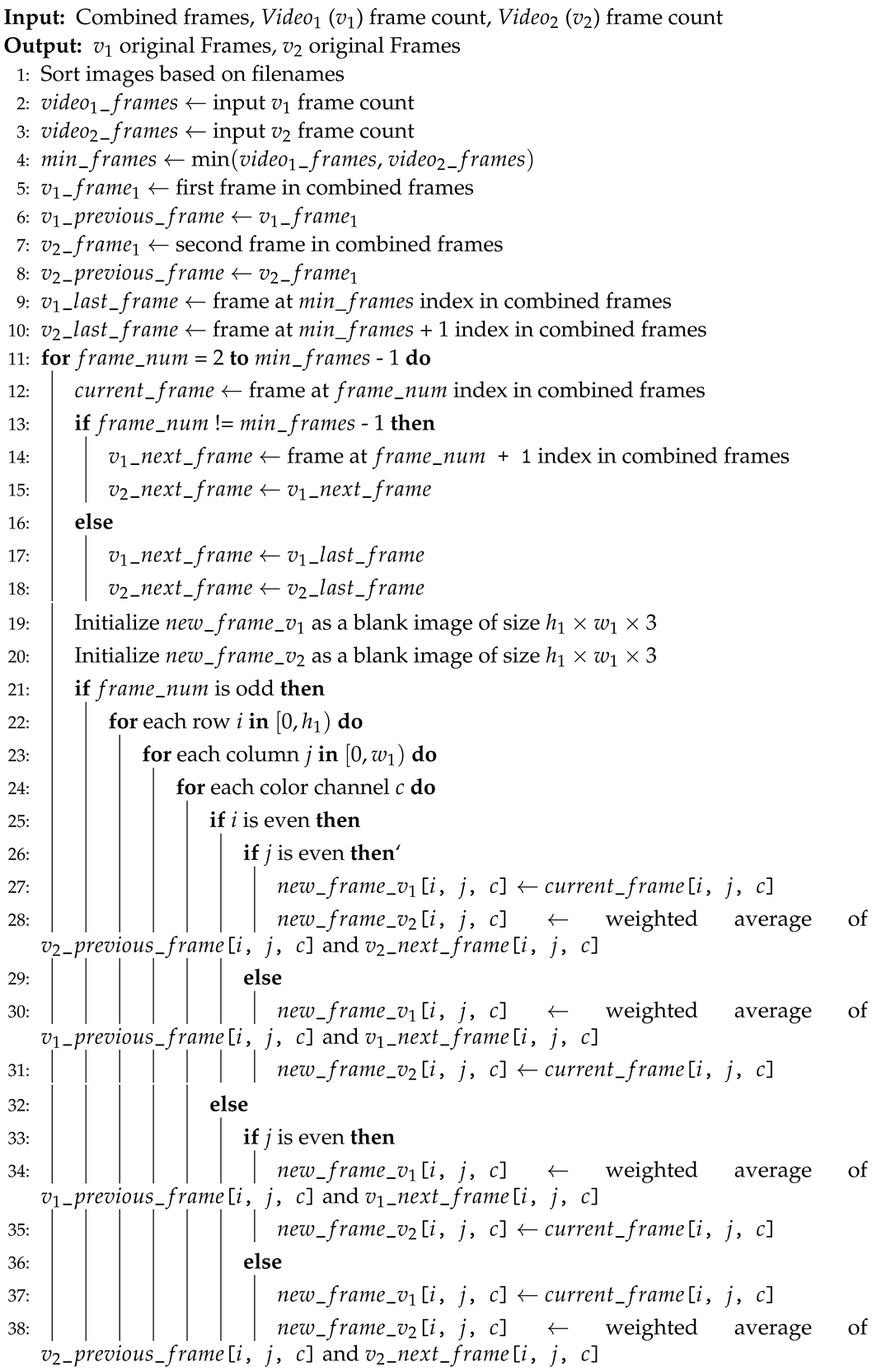

Algorithm 1. Encoder side.

Algorithm 2. Decoder side.

After the combined frames are generated and stored as high-quality PNG images to preserve pixel integrity, the final sequence may optionally be encoded using the H.265 (HEVC) video codec at a frame rate of 30 fps. The HEVC codec employs block-based hybrid compression techniques, including motion estimation, transform coding, quantization, and entropy coding, to significantly reduce the bit rate while maintaining visual quality. When applied, this source coding step further compresses the combined video, enabling more efficient transmission and storage.
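As an illustration of this optional step, the sketch below builds a command line for encoding a numbered PNG sequence with HEVC at 30 fps. It assumes the ffmpeg tool with its libx265 encoder, which the paper does not name; the file names and the helper `hevc_encode_command` are hypothetical.

```python
def hevc_encode_command(frame_pattern, output_path, fps=30):
    """Build an ffmpeg argument list that encodes numbered PNG frames with libx265."""
    return [
        "ffmpeg",
        "-framerate", str(fps),   # input frame rate of the image sequence
        "-i", frame_pattern,      # e.g. "combined/frame_%05d.png" (hypothetical path)
        "-c:v", "libx265",        # HEVC/H.265 software encoder
        output_path,
    ]


command = hevc_encode_command("combined/frame_%05d.png", "combined.mp4")
print(" ".join(command))
# To execute it: import subprocess; subprocess.run(command, check=True)
```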

5.2. AES Encryption

To further enhance the security of the combined video, the frames undergo encryption using the AES shift algorithm. Each frame is secured with a unique 128-bit key, ensuring that the pixel arrangement differs from frame to frame. This method prevents unauthorized users from easily deciphering the original content, as the decryption requires access to the specific key used for each frame. The encryption process begins with key generation for each frame, followed by the creation of a shift table. This table specifies how the pixels are rearranged within each frame, using AES encryption in Electronic Codebook (ECB) [17] mode. The pixel indices are encrypted, and a shift value is extracted from the first four bytes of the encrypted data, which is then used to swap pixels and randomize the frame’s layout.
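The following sketch illustrates how a key-derived shift table can scramble pixel positions without changing pixel values. To stay within the Python standard library, it substitutes HMAC-SHA256 for the AES-ECB encryption of each pixel index, so it is a stand-in for the scheme described above and not the scheme itself. The swap rule (pixel i is exchanged with the pixel at the shift value modulo the pixel count) and the names `shift_table` and `scramble` are assumptions.

```python
import hashlib
import hmac


def shift_table(key, n_pixels):
    """Derive one shift value per pixel index from the frame key.

    Stand-in: HMAC-SHA256 replaces the AES-ECB encryption of the index.
    """
    table = []
    for index in range(n_pixels):
        block = index.to_bytes(16, "big")              # one 16-byte block per index
        digest = hmac.new(key, block, hashlib.sha256).digest()
        # Shift value from the first four bytes, reduced to a valid position.
        table.append(int.from_bytes(digest[:4], "big") % n_pixels)
    return table


def scramble(pixels, key):
    """Swap pixel i with pixel table[i], for i = 0..n-1, on a copy."""
    out = list(pixels)
    for i, j in enumerate(shift_table(key, len(out))):
        out[i], out[j] = out[j], out[i]
    return out


key = bytes(range(16))        # example 128-bit key
frame = list(range(12))       # flattened 3x4 frame of pixel values
hidden = scramble(frame, key)
print(hidden)
```

Since every step is a swap, the multiset of pixel values is preserved and the process can be undone by replaying the same swaps in reverse order.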

The shift table is applied to each frame, scrambling pixel positions and thereby masking the original visual content. For simulation purposes, each frame’s key is stored in a JavaScript Object Notation (JSON) file [18], allowing the pixel arrangement to be accurately reversed during decryption. In practical deployments, however, any standard secure key exchange protocol, such as Diffie–Hellman, RSA-encrypted key exchange, or TLS, can be used to transmit the key securely. Once encryption is complete, the frames are reassembled into a video stream and transmitted over the network, ready for reconstruction on the decoder side.
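The per-frame key handling used for simulation might look like the sketch below. The JSON layout (frame index mapped to a hex-encoded key) and the function names are assumptions, since the paper does not specify the file structure.

```python
import json
import secrets


def generate_frame_keys(n_frames):
    """Create one random 128-bit key per frame, hex-encoded for JSON."""
    return {str(i): secrets.token_bytes(16).hex() for i in range(n_frames)}


def keys_to_json(keys):
    """Serialize the key table to a JSON string (write it to a file as needed)."""
    return json.dumps(keys, indent=2)


def key_from_json(text, frame_index):
    """Recover the raw 16-byte key of one frame from the JSON text."""
    return bytes.fromhex(json.loads(text)[str(frame_index)])


keys = generate_frame_keys(3)
text = keys_to_json(keys)
print(len(key_from_json(text, 0)) * 8, "bit key for frame 0")
```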

Figure 4 and Figure 5 depict the original frames extracted from the first and second input videos, respectively. Figure 6 illustrates the combined frames generated by merging the inputs using an alternating pixel pattern. Subsequently, Figure 7 shows the AES-based pixel shift encryption applied to the combined frames, demonstrating the secure scrambling of pixel arrangements.

Figure 4. Original frames of the first input video.

Figure 5. Original frames of the second input video.

Figure 6. Combined video in frames.

Figure 7. Pixel shifts for frame encoding.

5.3. Reverse AES Pixel Shift

To undo the AES pixel shift applied during the encoding, the shift table generated for each frame is used. The shift table maps the pixel positions before and after the encryption, and the restoration involves reversing this mapping:

- Retrieving the AES Key: The key used during the encoding of each frame is retrieved from a JSON file. This key is required to regenerate the shift table used to scramble the pixels.

- Recreating the Shift Table: Using the retrieved key, the original shift table is recreated. This table specifies how the pixels were rearranged during encryption.

- Generating the Reverse Shift Table: A reverse shift table is constructed to map the current pixel positions back to their original locations, effectively undoing the AES-based randomization.

- Restoring the Image: The reverse shift table is applied to each frame, restoring the pixel positions to their original state. This process is repeated for every frame in the combined video sequence, ensuring accurate recovery of the original pixel arrangement.
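The reverse-table step above amounts to inverting a permutation. The sketch below assumes the forward shift table is expressed as a permutation `perm` with `scrambled[i] = original[perm[i]]`; this representation and the helper names are illustrative choices, not taken from the paper.

```python
def reverse_table(perm):
    """Invert a permutation given as scrambled[i] = original[perm[i]]."""
    inverse = [0] * len(perm)
    for scrambled_pos, original_pos in enumerate(perm):
        inverse[original_pos] = scrambled_pos
    return inverse


def apply_table(pixels, table):
    """Return a new list whose entry i is pixels[table[i]]."""
    return [pixels[t] for t in table]


perm = [2, 0, 3, 1]                       # hypothetical forward shift table
original = ["p0", "p1", "p2", "p3"]
scrambled = apply_table(original, perm)
restored = apply_table(scrambled, reverse_table(perm))
print(scrambled, restored)
```

Building the inverse takes one pass over the table, so restoring a frame costs time linear in the number of pixels.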

5.4. Decoder Side—Reconstruction Process

To restore the original videos from their combined and encrypted form, several steps are undertaken. The first step involves identifying the total number of frames in both input videos. This is essential to ensure that all frames are accounted for, thus facilitating complete reconstruction of both videos.

The combined video contains alternating frames from the two source videos, and the extraction of frames is performed as follows: The first frame of the combined video is assigned to the first video, while the second frame is allocated to the second video. As extraction continues with subsequent frames, missing pixels are recovered: the decoder analyzes the surrounding frames and interpolates by averaging the corresponding pixel values from adjacent frames. This technique fills the gaps in the data left by the interleaving. Note that the first frame from each video is transmitted without any modifications, as these serve as reference frames necessary for reconstructing subsequent frames. For example, as illustrated in Figure 8, to restore a missing pixel at a particular position, the decoder takes the average of the pixel values at that position in the adjacent frames. This process continues until all interleaved frames from both videos are fully extracted and restored.
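The averaging step can be sketched as follows for grayscale frames. The sketch assumes that missing pixels are marked with `None` and that the average is rounded down with integer division; both are illustrative choices, as is the function name `fill_missing`.

```python
def fill_missing(prev_frame, partial, next_frame):
    """Fill None entries of `partial` with the mean of its two temporal neighbours.

    All three frames are lists of rows of integer pixel values; `partial`
    holds None wherever the pixel was not carried in the combined frame.
    """
    restored = []
    for r, row in enumerate(partial):
        out_row = []
        for c, value in enumerate(row):
            if value is None:
                # Integer average of the same position in adjacent frames.
                value = (prev_frame[r][c] + next_frame[r][c]) // 2
            out_row.append(value)
        restored.append(out_row)
    return restored


prev_frame = [[10, 20], [30, 40]]
partial = [[None, 22], [33, None]]
next_frame = [[14, 20], [30, 50]]
print(fill_missing(prev_frame, partial, next_frame))
```

For color frames the same rule would be applied per channel.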

Figure 8. Reconstructed first video depicted in frames.

After handling the interleaved frames, attention turns to any remaining frames that were originally part of either input video but were appended at the end of the combined video. These residual frames are separated and assigned back to their respective videos. Once all frames from both videos have been reconstructed, as shown in Figure 8 and Figure 9, the final step converts the frame sequences back into their respective video formats. This completes the restoration process, leaving both videos recovered and ready for playback.

Figure 9. Reconstructed second video depicted in frames.

Figure 10. Reconstructed frames of the first video.

Figure 11. Reconstructed frames of the second video.

The proposed framework efficiently optimizes video transmission and storage while providing a robust layer of security. The combination of alternating frame merging and pixel-shift encryption ensures reduced disk space usage and enhanced protection against unauthorized access. This novel approach not only secures video data but also ensures seamless reconstruction of the original streams.

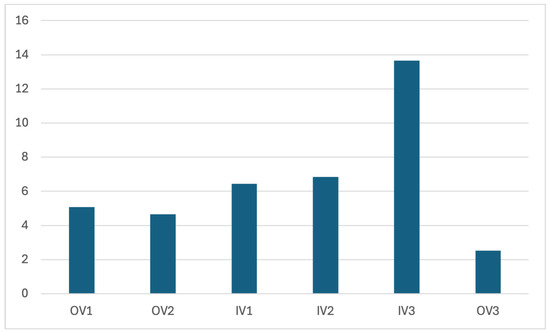

6. Experimental Results

In this section, we present the results of our experimental evaluation, obtained through simulation using the proposed framework. To evaluate our proposed scheme, we used a total of six video datasets encompassing a variety of scenes for both outdoor and indoor scenarios: four from the Urbantracker project [19,20] and two from the WiseNET CCTV video datasets, which provide multi-camera, multi-space video sets for various indoor settings [21,22]. The WiseNET project captures a diverse set of human actions in an indoor environment, such as walking around, standing, sitting, remaining motionless, entering or leaving a space, and group merges and splits. The details of the individual videos used in our experiments are as follows:

- Outdoor Video 1: The first outdoor video corresponds to the René Lévesque video from Urbantracker [19]. It was captured from an office building, offering a view of three intersections and containing about 20 objects per scene simultaneously. The video consists of 1000 frames encoded at 30 frames per second (fps) with a Variable Bit Rate (VBR).

- Outdoor Video 2: This video corresponds to the St Marc video dataset from Urbantracker. It captures the intersection of Saint Marc and Maisonneuve in Montréal and provides a scenario where people move as a group. Each scene has up to 14 objects simultaneously, including vehicles, bicycles, and pedestrians. The video contains 1000 frames and was encoded at 30 fps with a variable bit rate.

- Outdoor Video 3: The third outdoor video comes from the Sherbrooke video in Urbantracker and captures the Sherbrooke/Amherst intersection in Montréal. The CCTV camera in this video is placed a couple of meters above the ground and captures cars and pedestrians, with each scene containing about seven objects simultaneously. The video consists of 1001 frames encoded at 30 fps with a variable bit rate.

- Indoor Video 1: This indoor CCTV camera footage was obtained from the set_2 video dataset in the WiseNET project [21] and corresponds to the video file video2_1.avi. The video was encoded using the Xvid MPEG-4 codec at 30 fps with a variable bit rate.

- Indoor Video 2: This indoor video was obtained from the video file video2_3.avi of the set_2 video dataset in the WiseNET project. The video was encoded using the Xvid MPEG-4 codec at 30 fps with a variable bit rate.

- Indoor Video 3: This indoor video was taken from the Atrium video in the Urbantracker project, filmed at École Polytechnique Montréal. It offers a view of pedestrians moving around, filmed from inside the building. The dataset consists of 4540 frames encoded using the Xvid MPEG-4 codec at 30 fps with a variable bit rate.

Figure 12 and Figure 13 show sample frames from the different video datasets used in our experiments. The samples show that the datasets we consider represent a diverse set of deployment scenarios with varying background and foreground information.

Figure 12.

Sample frames of , and , respectively.

Figure 13.

Sample frames of , and , respectively.

6.1. Quality and Size Assessment Metrics

To evaluate the information loss introduced by our proposed method of encoding two videos simultaneously, we employed multiple metrics to assess the similarity between the original and the reconstructed video frames. Additionally, since IoT deployment scenarios often involve battery-powered devices and wireless transmission protocols, we evaluated the impact of our proposed scheme on key transmission metrics, namely the transmission time and the energy required to transmit the video files, based on the standard, operating at Gbps on the 5 GHz band [23,24,25].

6.1.1. Peak Signal-to-Noise Ratio (PSNR)

The Peak Signal-to-Noise Ratio (PSNR) [26] is computed in decibels and serves as a measure of quality between the original and compressed (or reconstructed) images. Higher PSNR values correspond to better image quality.

The PSNR is computed using the Mean-Square Error (MSE), which represents the cumulative squared difference between the original and compressed images. A lower MSE indicates lower reconstruction error. The MSE and PSNR are given by the following equations:

$$\mathrm{MSE} = \frac{1}{M N} \sum_{m=1}^{M} \sum_{n=1}^{N} \left[ I_1(m,n) - I_2(m,n) \right]^2$$

$$\mathrm{PSNR} = 10 \log_{10}\left( \frac{R^2}{\mathrm{MSE}} \right)$$

Here, $I_1$ and $I_2$ denote the original and reconstructed images, M and N denote the dimensions of the image, and R represents the maximum possible pixel value (e.g., 255 for an 8-bit image). A PSNR value above 30 dB typically indicates high-quality reconstruction.
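As an illustration of the definitions above, the following minimal sketch computes the MSE and PSNR of a single pair of frames. It assumes the frames are NumPy arrays of identical shape with 8-bit pixel values; the function names `mse` and `psnr` are illustrative and are not taken from our implementation.

```python
import numpy as np


def mse(original: np.ndarray, reconstructed: np.ndarray) -> float:
    """Mean-square error between two frames of identical dimensions."""
    if original.shape != reconstructed.shape:
        raise ValueError("frames must have identical dimensions")
    # Convert to float first so that uint8 subtraction cannot wrap around.
    diff = original.astype(np.float64) - reconstructed.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(original: np.ndarray, reconstructed: np.ndarray,
         max_value: float = 255.0) -> float:
    """PSNR in decibels; infinite when the two frames are identical."""
    error = mse(original, reconstructed)
    if error == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / error))
```

For example, two 8-bit frames that differ by exactly 10 gray levels at every pixel have an MSE of 100 and a PSNR of about 28.13 dB, while a lossless reconstruction yields an infinite PSNR.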

6.1.2. Structural Similarity Index (SSIM)

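The Structural Similarity Index (SSIM) [27] quantifies image similarity by considering luminance, contrast, and structure. This metric evaluates the perceived quality of an image by comparing corresponding windows of two images. The SSIM between two image windows x and y is given by

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $\mu_x$ and $\mu_y$ are the means of x and y, $\sigma_x^2$ and $\sigma_y^2$ are their variances, and $\sigma_{xy}$ is the covariance between x and y. Constants $C_1$ and $C_2$ are used to stabilize the division, defined as

$$C_1 = (K_1 L)^2, \qquad C_2 = (K_2 L)^2$$

with L being the dynamic range of the pixel values, and $K_1 = 0.01$, $K_2 = 0.03$ (the default values given in [27]). SSIM values have an upper bound of 1, and values closer to 1 indicate higher structural similarity between images.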
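The SSIM definition can be sketched for a single window as follows. This is a simplified illustration, not the full metric: practical implementations slide a (typically Gaussian-weighted) window over the image and average the local scores. The function name `ssim_window`, the use of population variances, and the defaults $K_1 = 0.01$ and $K_2 = 0.03$ are assumptions of this sketch.

```python
import numpy as np


def ssim_window(x, y, dynamic_range: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM of two equally sized image windows, treated as one global window."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("windows must have identical dimensions")

    # Stabilizing constants C1 and C2.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Under these assumptions, identical windows score 1, and a window compared with its intensity-inverted copy scores below 0 because the covariance becomes negative.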

6.1.3. Transmission Time

The Transmission Time () represents the total duration from the start to the end of transmitting a message [23]. It is calculated using the formula

$$\text{Transmission Time} = \frac{\text{Message Size}}{\text{Bandwidth}}$$

In this equation, Message Size refers to the size of the video being transmitted, while Bandwidth denotes the available transmission rate, which, in this case, is Gbps [25]. For example, if the video size is MB, the transmission time would be approximately 184 ms.
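The calculation can be sketched as a small helper. The function name `transmission_time_ms` and the numbers in the usage note are purely illustrative assumptions; they are not the link rate or file sizes used in our experiments.

```python
def transmission_time_ms(message_size_bytes: float, rate_bps: float) -> float:
    """Time in milliseconds to push a message through a link of the given rate.

    message_size_bytes: size of the video file in bytes.
    rate_bps: available transmission rate in bits per second.
    """
    if rate_bps <= 0:
        raise ValueError("transmission rate must be positive")
    message_size_bits = message_size_bytes * 8
    return message_size_bits / rate_bps * 1000.0
```

For instance, a hypothetical 10 MB file (10,000,000 bytes) sent over a hypothetical 1 Gbps link would take about 80 ms. The sketch ignores protocol overhead, retransmissions, and propagation delay.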

6.1.4. Energy Consumption

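Energy consumption refers to the total amount of energy used during the transmission process. It is typically measured in joules (J). The energy per bit ($E_b$) is given by

$$E_b = \frac{P_t}{R}$$

where $P_t$ is the transmitted power in watts and R is the data rate, which is Gbps in this case. According to standards [25], the average transmitted power is 20 dBm. To convert power from dBm to watts, we use the following formula:

$$P_{\mathrm{W}} = 10^{(P_{\mathrm{dBm}} - 30)/10}$$

so that 20 dBm corresponds to 0.1 W. The energy required to transmit a single bit is calculated as

$$E_b = \frac{0.1\ \mathrm{W}}{R}$$

The total energy consumption ($E_{\mathrm{total}}$) for transmitting a video is

$$E_{\mathrm{total}} = E_b \times \text{Message Size (in bits)}$$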
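The energy calculation can be sketched in the same way. The helper names below are illustrative, and the data rate and file size in the usage note are hypothetical placeholders rather than the values used in our experiments; only the 20 dBm transmit power comes from the text above.

```python
def dbm_to_watts(power_dbm: float) -> float:
    """Convert a power level from dBm to watts."""
    return 10.0 ** ((power_dbm - 30.0) / 10.0)


def energy_per_bit_joules(power_watts: float, rate_bps: float) -> float:
    """Energy spent per transmitted bit: transmit power divided by data rate."""
    if rate_bps <= 0:
        raise ValueError("data rate must be positive")
    return power_watts / rate_bps


def transmission_energy_joules(message_size_bytes: float,
                               power_dbm: float,
                               rate_bps: float) -> float:
    """Total energy to transmit a message of the given size."""
    e_bit = energy_per_bit_joules(dbm_to_watts(power_dbm), rate_bps)
    return e_bit * message_size_bytes * 8
```

With a transmit power of 20 dBm (0.1 W), a hypothetical 10,000,000-byte file on a hypothetical 1 Gbps link would consume about 0.008 J. Because the energy is proportional to the number of bits sent, any reduction in file size translates into the same relative reduction in transmission energy.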

6.1.5. Video Multi-Method Assessment Fusion (VMAF)

Video Multi-method Assessment Fusion (VMAF) [28] is a perceptual video quality metric developed by Netflix to assess the visual quality of video content by combining multiple elementary quality features using machine learning. It integrates metrics such as detail loss, blockiness, and sharpness to more accurately reflect the human perception of video quality. VMAF scores range from 0 to 100, where a score of 100 denotes perfect perceptual fidelity with the reference video, and a score closer to 0 indicates significant quality degradation.

- 90–100: Excellent quality—visually indistinguishable from the original.

- 80–90: Very good—minor differences visible only on close inspection.

- 70–80: Good—some visible artifacts but acceptable.

- 50–70: Fair—noticeable degradation, reduced viewing experience.

- <50: Poor—significant degradation with distracting artifacts.

Due to its strong correlation with subjective human visual assessments, VMAF has become a widely adopted standard in video compression and streaming research.
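When reporting results, the interpretation bands listed above can be applied programmatically. The sketch below is only a convenience helper. The function name `vmaf_band` is hypothetical, and assigning a boundary score (e.g., exactly 90) to the higher band is an assumption, since the listed ranges share their endpoints.

```python
def vmaf_band(score: float) -> str:
    """Map a VMAF score in [0, 100] to its qualitative band."""
    if not 0.0 <= score <= 100.0:
        raise ValueError("VMAF scores lie between 0 and 100")
    if score >= 90.0:
        return "Excellent"
    if score >= 80.0:
        return "Very good"
    if score >= 70.0:
        return "Good"
    if score >= 50.0:
        return "Fair"
    return "Poor"
```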

6.1.6. Video Quality Metric (VQM)

Video Quality Metric (VQM) [29] is a full-reference perceptual video quality assessment tool developed by the National Telecommunications and Information Administration (NTIA). It quantifies perceived visual degradation by comparing a distorted video against its original version using a set of features derived from spatial, temporal, and chromatic information. VQM evaluates the video based on features such as edge energy loss (si_loss), gain of detail (si_gain), horizontal-vertical detail loss (hv_loss), chroma spread, chroma extreme, and temporal activity changes (ct_ati_gain). These features are weighted and combined through a linear regression model trained on subjective human ratings:

$$\mathrm{VQM} = -0.2097\,\mathit{si\_loss} + 0.5969\,\mathit{hv\_loss} + 0.2483\,\mathit{hv\_gain} + 0.0192\,\mathit{chroma\_spread} - 2.3416\,\mathit{si\_gain} + 0.0431\,\mathit{ct\_ati\_gain} + 0.0076\,\mathit{chroma\_extreme}$$

Each component in the VQM equation corresponds to a specific type of perceptual distortion or enhancement:

- si_loss (Spatial Information Loss): measures the loss of fine edge detail or sharpness in the distorted video compared to the original. A higher loss results in lower perceived quality and, thus, the term has a negative weight.

- hv_loss (Horizontal-Vertical Detail Loss): captures degradation in directional edge features, particularly horizontal and vertical structures. An increase in this term raises the VQM score, indicating reduced quality.

- hv_gain (Horizontal-Vertical Detail Gain): detects the introduction of unnatural detail, such as ringing or edge enhancement. While it may appear as additional detail, such gains often reflect distortions and are penalized.

- chroma_spread (Chroma Spread): reflects the degree of inconsistency or dispersion in the chroma (color) components. A wider spread suggests color degradation or inconsistency.

- si_gain (Spatial Information Gain): identifies artificially introduced spatial details, which may result from oversharpening or encoding artifacts. This term has a strong negative weight, indicating that such gains significantly degrade perceptual quality.

- ct_ati_gain (Contrast-Temporal Activity Gain): measures unnatural changes in contrast over time, such as flickering or unstable motion, which negatively affect viewing experience.

- chroma_extreme (Chroma Extreme): detects extreme and non-natural chromatic shifts not present in the reference video, contributing to color-related distortions.
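The weighted combination of these components can be sketched as follows. The weights are those of the NTIA General Model as we understand them from [29] and should be checked against that reference before reuse. The feature names, the `vqm_score` function, and the omission of the clipping and scaling steps of the full model are assumptions of this sketch. Extracting the seven feature values from a video pair is the substantial part of VQM and is not shown.

```python
from typing import Mapping

# Assumed weights of the NTIA General Model (see [29]); verify before reuse.
VQM_WEIGHTS = {
    "si_loss": -0.2097,
    "hv_loss": 0.5969,
    "hv_gain": 0.2483,
    "chroma_spread": 0.0192,
    "si_gain": -2.3416,
    "ct_ati_gain": 0.0431,
    "chroma_extreme": 0.0076,
}


def vqm_score(features: Mapping[str, float]) -> float:
    """Linear combination of already extracted VQM feature values."""
    missing = VQM_WEIGHTS.keys() - features.keys()
    if missing:
        raise KeyError(f"missing VQM features: {sorted(missing)}")
    return sum(weight * features[name]
               for name, weight in VQM_WEIGHTS.items())
```

A video pair with no measured distortion (all features equal to zero) yields a score of 0, consistent with lower VQM values denoting better quality.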

The VQM score typically ranges from 0 (perfect quality) to >20 (very poor quality):

- 0–3: Excellent—minimal or no perceptual distortion.

- 3–6: Good—slight degradation but overall acceptable.

- 6–10: Fair—noticeable distortions affect viewing comfort.

- >10: Poor—severe visual artifacts; degraded user experience.

6.2. Quality and Size Assessment Results

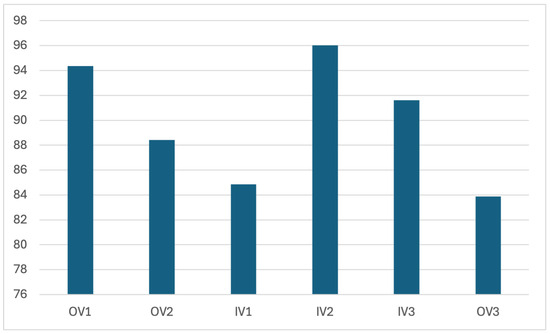

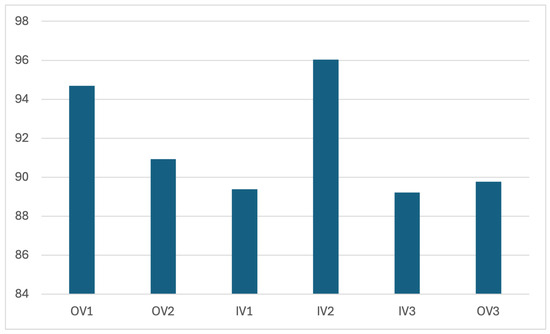

To assess the performance of our proposed scheme on the visual quality and size metrics discussed in Section 6.1, we first considered the scenario where no video compression algorithm is used to encode either the raw frames or the frames resulting from our proposed approach. The results of our experiments are presented in Tables 1–6 and Figures 14–17.

Table 1.

Comparison of file sizes, PSNR, and SSIM values for and .

Table 2.

Comparison of video transmission time and energy consumption for and .

Table 3.

Comparison of file sizes, PSNR and SSIM values for and .

Table 4.

Comparison of transmission time and energy consumption for and .

Table 5.

Comparison of file sizes, PSNR, and SSIM values for and .

Table 6.

Comparison of transmission time and energy consumption for and .

Figure 14.

VMAF values for 100 frames.

Figure 15.

VMAF values for 300 frames.

Figure 16.

VQM values for 100 frames.

Figure 17.

VQM values for 300 frames.

The first deployment scenario we considered was where two sets of outdoor CCTV video data are combined. Table 1 presents a comparison between the original and encoded file sizes for videos and , along with the corresponding PSNR and SSIM values obtained by comparing the original and decoded videos. The evaluation was conducted using test files with varying frame counts and file sizes (measured in ), which are available in the repository [30]. The results indicate that the encoded version of the videos requires significantly less storage space, demonstrating the efficiency of our framework in transmitting both videos together. Higher values of PSNR indicate lower distortion and better signal retention, while SSIM values closer to 1 signify high structural similarity of the reconstructed videos relative to the originals.

Table 2 compares the transmission characteristics of and transmitted individually versus when they are combined using our proposed method. The third column shows the combined information for Videos and transmitted individually, along with their respective frame sizes (measured in ). The fourth column depicts the output of our proposed algorithm, which combines Videos 1 and 2, detailing the frames and their sizes (measured in ). The Transmission Time column lists the calculated transmission time (measured in milliseconds) for both the combined video streams () and the encoded video. The final column, Energy Consumed (measured in ), provides a comparison of the energy consumption data.

Our experimental results indicate that both transmission time and energy consumption were significantly reduced for the encoded video compared to the combined video streams . For smaller frame sizes (e.g., ), the encoded video reduced transmission time by approximately , with similar energy savings of around . As the frame sizes increased (e.g., ), the encoded video achieved even greater reductions, with transmission time cut by almost and energy consumption reduced by nearly . The results in Table 2 demonstrate that the encoding technique not only enhances transmission efficiency but also provides substantial energy savings, making it an effective solution for optimizing both time and power consumption in video transmission.

To evaluate the performance of our proposed scheme on indoor CCTV video data, we experimented with a combination of feeds and . Table 3 presents a comparison of the frame counts and file sizes of the original and encoded videos for Indoor Video 1 () and Indoor Video 2 (), along with the PSNR and SSIM values, while Table 4 compares the transmission time and energy consumption for and .

The third deployment scenario we considered was combining an outdoor video feed with an indoor video feed. For this, we utilized and . Table 5 and Table 6 capture the scenario where is combined with indoor video data from .

From Table 2, Table 4, and Table 6, we see that for 300 frames from each of the video streams, the gain in both transmission time and energy efficiency is about . Combined with the fact that the PSNR and SSIM values of all the videos indicate high quality of the respective reconstructed videos (as seen from Table 1, Table 3 and Table 5), the above results clearly show the benefits of our proposed scheme.

6.2.1. VMAF Results

6.2.2. VQM Results

The VQM values obtained for the proposed method using 100 frames and 300 frames, respectively, for each video in the three deployment scenarios, are presented in Figure 16 and Figure 17.

The relatively high VQM score observed for the video, as shown in Figure 16 and Figure 17, is primarily attributed to the poor quality of the original frames themselves. To support this, we evaluated the quality of the original frames using no-reference image quality metrics such as BRISQUE [31] and blurriness. The average BRISQUE score for the original frames was found to be , which indicates moderate to heavy distortion, as scores above 50 typically signify noticeable artifacts, noise, or blurriness. Additionally, the average blurriness score was , suggesting that while the frames are not extremely blurred, they do exhibit a moderate level of softness. These findings confirm that the original input frames for were of suboptimal quality, which directly impacts the VQM results. Since VQM is a full-reference metric that compares the reconstructed video against the original, any degradation in the source frames inherently affects the measured quality of the output. Therefore, the elevated VQM values for do not indicate poor reconstruction performance but rather reflect the limitations of the original video quality.

6.3. Comparison with H.265

Given the current popularity of H.265-based encoding of videos for CCTV camera feeds, we compared our proposed encoding method against the H.265 codec for three deployment scenarios: + , + , and + . First, the combined set of frames in each original video pair was encoded using the H.265 codec. Similarly, the set of video frames obtained by applying our proposed scheme was also encoded using the H.265 codec. We then compared the performance of the H.265-based encoding with that of our proposed approach coupled with H.265 encoding. The results of our experiments are depicted in Table 7 and Table 8. In Table 8, video bitrate is defined as the amount of video data transmitted per unit time. Typically, a higher bitrate implies a larger file size and better video quality. The bitrate can be calculated using the formula

Here, the file size is measured in s (), and the duration is obtained by dividing the number of frames by the frame rate.

In our tests, we used a frame rate of 30 frames per second (fps).
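The bitrate computation described above can be sketched as follows. The sketch assumes that the file size is given in bytes and that the bitrate is reported in kilobits per second; the units used in Table 8 may differ, and the function name `video_bitrate_kbps` is illustrative.

```python
def video_bitrate_kbps(file_size_bytes: float, frame_count: int,
                       fps: float = 30.0) -> float:
    """Average bitrate in kbit/s of a video file.

    The duration is obtained by dividing the number of frames by the
    frame rate, as described in the text.
    """
    if frame_count <= 0 or fps <= 0:
        raise ValueError("frame count and frame rate must be positive")
    duration_s = frame_count / fps
    return file_size_bytes * 8 / duration_s / 1000.0
```

For example, a hypothetical 1,250,000-byte file containing 300 frames at 30 fps lasts 10 s and therefore has an average bitrate of 1000 kbit/s.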

Table 7.

Quality and size comparison of proposed scheme with H.265.

Table 8.

Comparison of proposed scheme with H.265: transmission time, energy and bitrate.

Our experimental results demonstrate that the proposed encoding scheme combined with H.265 encoding preserves high visual quality, as indicated by the PSNR and SSIM values under the Decoded Video-1 and Decoded Video-2 columns in Table 7.

From Table 8, we see that our approach consistently achieves lower transmission time and energy consumption, with an average reduction of in transmission time and in energy consumption compared to H.265-based encoding for the original video-pairs.

In addition to generating considerable size reductions, a combination of our proposed scheme with the H.265 encoding scheme also achieves a reduction in bitrate (as seen from Table 8) without sacrificing video quality (as observed from the PSNR and SSIM values in Table 7), thereby highlighting the efficiency of our approach in terms of bandwidth usage.

Table 9 depicts the compression of the original raw files obtained by using the H.265 encoding scheme vis-à-vis our proposed scheme combined with H.265 encoding. We measured the resulting file size obtained by the two encoding methods against the raw file sizes and calculated the compression ratio obtained for each encoding method using the following formula:

Table 9.

Video data compression results.

Collectively, these results validate the advantages of our proposed scheme in achieving efficient video compression while maintaining good visual quality of the individual videos.
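For readers who wish to reproduce size comparisons of this kind, the sketch below shows two common ways of expressing compression relative to a raw file. Both definitions are assumptions of this sketch (raw size divided by encoded size, and the percentage of space saved); they are not necessarily the exact formula used for Table 9, and the function names are illustrative.

```python
def compression_ratio(raw_size_bytes: float, encoded_size_bytes: float) -> float:
    """Ratio of raw size to encoded size; larger means stronger compression."""
    if raw_size_bytes <= 0 or encoded_size_bytes <= 0:
        raise ValueError("file sizes must be positive")
    return raw_size_bytes / encoded_size_bytes


def space_saving_percent(raw_size_bytes: float, encoded_size_bytes: float) -> float:
    """Percentage of the raw size that is saved by encoding."""
    if raw_size_bytes <= 0 or encoded_size_bytes < 0:
        raise ValueError("raw size must be positive and encoded size non-negative")
    return (1.0 - encoded_size_bytes / raw_size_bytes) * 100.0
```

For example, encoding a hypothetical 1000-byte raw file into 250 bytes corresponds to a compression ratio of 4 and a space saving of 75%.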

7. Conclusions

The proposed framework presents a cutting-edge solution for enhancing the storage efficiency and security of video data, particularly in CCTV surveillance systems. By utilizing a novel pixel-alternating technique to merge frames from multiple video sources, our method significantly reduces storage requirements while preserving the clarity and coherence of surveillance footage. The seamless integration of encryption within the encoding and decoding process ensures robust protection against unauthorized access and tampering, both during storage and transmission.

A key advantage of this approach is its adaptability to variations in frame counts, enabling smooth and precise video reconstruction without compromising visual detail. This preservation of integrity is crucial for forensic analysis in security applications. Additionally, the system’s modular design allows for easy integration into diverse video processing workflows, making it a versatile and scalable solution for contemporary surveillance needs. Experimental results highlight a substantial reduction in both transmission time and energy consumption for the encoded video compared to individually transmitted videos.

As part of our future work, we plan to extend the proposed approach by deploying it on embedded hardware platforms such as the Raspberry Pi and NVIDIA Jetson Nano. This will facilitate real-time video processing and enable seamless integration into practical, resource-constrained environments. Additionally, we aim to explore enhanced security mechanisms for robust video data transmission, with a particular focus on applications in CCTV surveillance systems.

In summary, our proposed framework optimizes the management of large-scale video data in surveillance systems, combining efficient storage solutions with advanced security measures. We observed an average gain in transmission time and energy efficiency for all the three different deployment scenarios. Its implementation in CCTV systems not only enhances the accessibility and protection of critical footage but also addresses the growing challenges of video storage and security in modern surveillance environments. As such, it represents a significant advancement in the field, offering a reliable and scalable response to the evolving demands of video data management.

Author Contributions

Conceptualization, A.B.; methodology, A.B.; software, S.C.S.; validation, A.B. and K.S.; formal analysis, A.B. and S.C.S.; investigation and data curation, A.B. and S.C.S.; writing—original draft preparation and writing—review and editing, S.C.S., A.B., and K.S.; supervision, A.B. and K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

This study utilized two publicly available datasets, each of which is referenced in Section 6.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT-4 and Grammarly for the purposes of grammar, sentence structure, spelling, and punctuation corrections. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Chen, C.W. Internet of Video Things: Next-generation IoT with Visual Sensors. IEEE Internet Things J. 2020, 7, 6676–6685.

2. Caputo, A.C. Digital Video Surveillance and Security; Butterworth-Heinemann: Oxford, UK, 2014.

3. Abdullah, A. Advanced Encryption Standard (AES) Algorithm to Encrypt and Decrypt Data. Int. J. Sci. Res. 2017, 6, 11.

4. Putra, A.B.W.; Gaffar, A.F.O.; Wajiansyah, A.; Qasim, I.H. Feature-Based Video Frame Compression Using Adaptive Fuzzy Inference System. In Proceedings of the 2018 IEEE International Symposium on Advanced Intelligent Informatics (SAIN), Yogyakarta, Indonesia, 29–30 August 2018; pp. 49–55.

5. Silveira, D.; Povala, G.; Amaral, L.; Zatt, B.; Agostini, L.; Porto, M. A Real-Time Architecture for Reference Frame Compression for High Definition Video Coders. In Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS), Lisbon, Portugal, 24–27 May 2015.

6. Jacquin, A.E. Image coding based on a fractal theory of iterated contractive image transformations. IEEE Trans. Image Process. 1992, 1, 18–30.

7. Lima, V.; Schwartz, W.; Pedrini, H. Fast Low Bit-Rate 3D Searchless Fractal Video Encoding. In Proceedings of the 2011 24th SIBGRAPI Conference on Graphics, Patterns and Images, Alagoas, Brazil, 28–31 August 2011; pp. 189–196.

8. Ibaba, A.; Adeshina, S.; Aibinu, A.M. A Review of Video Compression Optimization Techniques. In Proceedings of the 2021 1st International Conference on Multidisciplinary Engineering and Applied Science (ICMEAS), Abuja, Nigeria, 15–16 July 2021; pp. 1–5.

9. Xue, L.; Ai, C.; Ge, Z. Multi-image authentication, encryption and compression scheme based on double random phase encoding and compressive sensing. Phys. Scr. 2025, 100, 035539.

10. Begum, M.B.; Deepa, N.; Uddin, M.; Kaluri, R.; Abdelhaq, M.; Alsaqour, R. An efficient and secure compression technique for data protection using burrows-wheeler transform algorithm. Heliyon 2023, 9, e17602.

11. Abdo, A.; Karamany, T.S.; Yakoub, A. A hybrid approach to secure and compress data streams in cloud computing environment. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 101999.

12. Majumder, P.; Sinha, B.P.; Sinha, K. Deep Learning Aided Energy-Efficient Lossless Video Data Transmission from IoVT Visual. In Innovations in Systems and Software Engineering; Springer: Berlin/Heidelberg, Germany, 2025.

13. Zhang, S.; Chen, H.; Zhang, S.; Delong, H.E. System and Method for H. 265 Encoding. U.S. Patent 11,956,452, 9 April 2024.

14. Li, Z.-N.; Drew, M.; Liu, J. Modern video coding standards: H.264, H.265, and H.266. Fundam. Multimed. 2021, 423–478.

15. Liu, S.; Li, Y.; Jin, Z. Research on Enhanced AES Algorithm Based on Key Operations. In Proceedings of the 2023 IEEE 5th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 11–13 October 2023; pp. 318–322.

16. Roelofs, G. Chapter 19. PNG Lossless Image Compression. In Lossless Compression Handbook; Academic Press: Cambridge, MA, USA, 2003.

17. Rahmadani, R.; Maulana, B.; Mendoza, M.; Hutabarat, A.; Aritonang, L. Combination of Rot13 Cryptographic Algorithm and ECB (Electronic Code Book) in Data Security. In Proceedings of the 4th Annual Conference of Engineering and Implementation on Vocational Education, Medan, Indonesia, 20 October 2022.

18. Pezoa, F.; Reutter, J.; Suarez, F.; Ugarte, M.; Vrgoč, D. Foundations of JSON Schema. In Proceedings of the ACM SIGMOD International Conference on Management of Data, San Francisco, CA, USA, 26 June–1 July 2016; pp. 263–273.

19. Urban Tracker Dataset. Available online: https://www.jpjodoin.com/urbantracker/dataset.html (accessed on 1 May 2025).

20. Jodoin, J.-P.; Bilodeau, G.-A.; Saunier, N. Urban Tracker: Multiple Object Tracking in Urban Mixed Traffic. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV14), Steamboat Springs, CO, USA, 24–26 March 2014.

21. WiseNET CCTV Video Dataset. Available online: https://www.kaggle.com/datasets/abdelrhmannile/wisenet?resource=download (accessed on 25 May 2025).

22. Marroquin, R.; Dubois, J.; Nicolle, C. Ontology for a Panoptes building: Exploiting contextual information and a smart camera network. Semant. Web 2018, 9, 803–828.

23. Forouzan, B.A. Data Communications and Networking; McGraw-Hill: New York, NY, USA, 2013.

24. WiFi Standards Explained: Compare 802.11be, 802.11ac, 802.11ax and More. Available online: https://community.fs.com/article/802-11-standards-explained.html (accessed on 1 May 2025).

25. Cisco Catalyst 9136 Series Access Point Hardware Installation Guide. Available online: https://www.cisco.com/c/en/us/td/docs/wireless/access_point/c9136i/install-guide/b-hig-9136i/appendix.pdf (accessed on 1 May 2025).

26. PSNR Documentation. Available online: https://www.mathworks.com/help/vision/ref/psnr.html (accessed on 1 May 2025).

27. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

28. Rassool, R. VMAF reproducibility: Validating a perceptual practical video quality metric. In Proceedings of the 2017 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Cagliari, Italy, 7–9 June 2017; pp. 1–2.

29. Pinson, M.H.; Wolf, S. A new standardized method for objectively measuring video quality. IEEE Trans. Broadcast. 2004, 50, 312–322.

30. Video Simulation Data Repository. Available online: https://github.com/swarnalatha-97/Video-simulation-data/tree/main (accessed on 1 May 2025).

31. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-Reference Image Quality Assessment in the Spatial Domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).