IoT Security-Quality-Metrics Method and Its Conformity with Emerging Guidelines

Abstract

1. Introduction

2. Background and Necessity for This Study

2.1. Question 1: Does Any Existing Literature or Standard Covering Cybersecurity-Quality-Control Measures for IoT throughout the Product Lifecycle Exist?

2.2. Question 2: Does Any Reason for Visualizing the Cybersecurity Control Measures Exist?

3. Past Research on IoT Security Quality

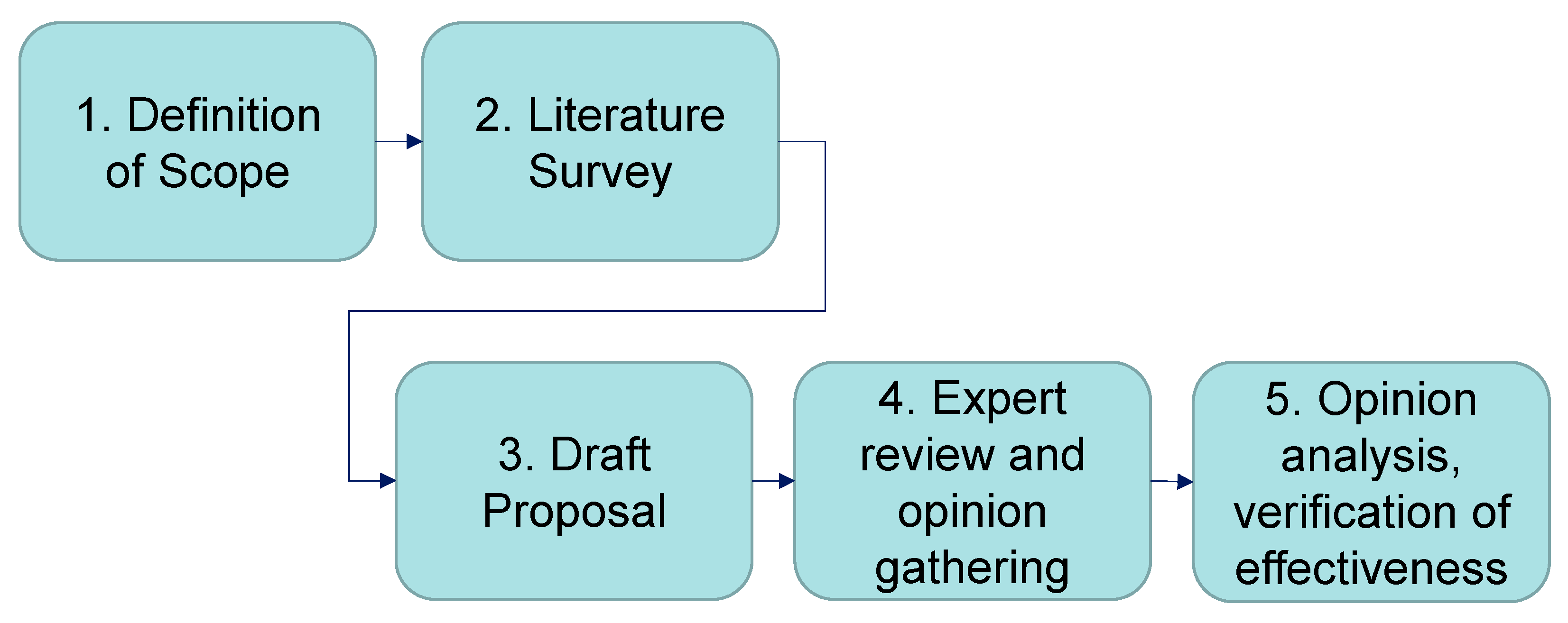

3.1. Research Method

3.2. Definition of Scope (Step 1)

3.3. Results of Literature Review (Step 2)

4. Itemizing IoT Security-Quality Metrics

4.1. Definition of IoT Security

4.2. Requirements of IoT Security Quality

4.3. Transparency Model of IoT Security Quality

- The “security by design” area comprises two parts, namely, the process quality and corresponding product quality [46]. Those in charge of product planning and those in charge of determining basic specifications are mainly responsible for this area.

- The “security assurance assessment” area covers the results of evaluating whether the product is secure as designed. Those in charge of product development and those in charge of quality assurance are responsible for this area.

- The “security production” area covers security management during production. Those in charge of manufacturing the product are responsible for this area.

- The “security operation” area encompasses after-sales security monitoring and incident response. Those in charge of customer support and maintenance, together with the product security incident response team (PSIRT), are responsible for this area.

- The “compliance with law, regulation, and international standard” area confirms that public or industry requirements have been fulfilled. Because compliance with industry standards and regulations is relevant to all areas, all members, not just the product manager, are responsible for this area.

4.4. Draft Proposal Development of Security-Quality Metrics

4.4.1. Software Quality

4.4.2. GQM Method

4.4.3. Setting Goals for Each Area

4.4.4. Setting Sample Questions and Metrics for Each Goal (Steps 3–4)

- (a) Do the metrics make sense to IoT vendors?

- (b) What are the criteria for the metrics?

- (c) Will they interfere with the existing development process?

5. Evaluation of Various IoT Security Guidelines with the Sample Metrics

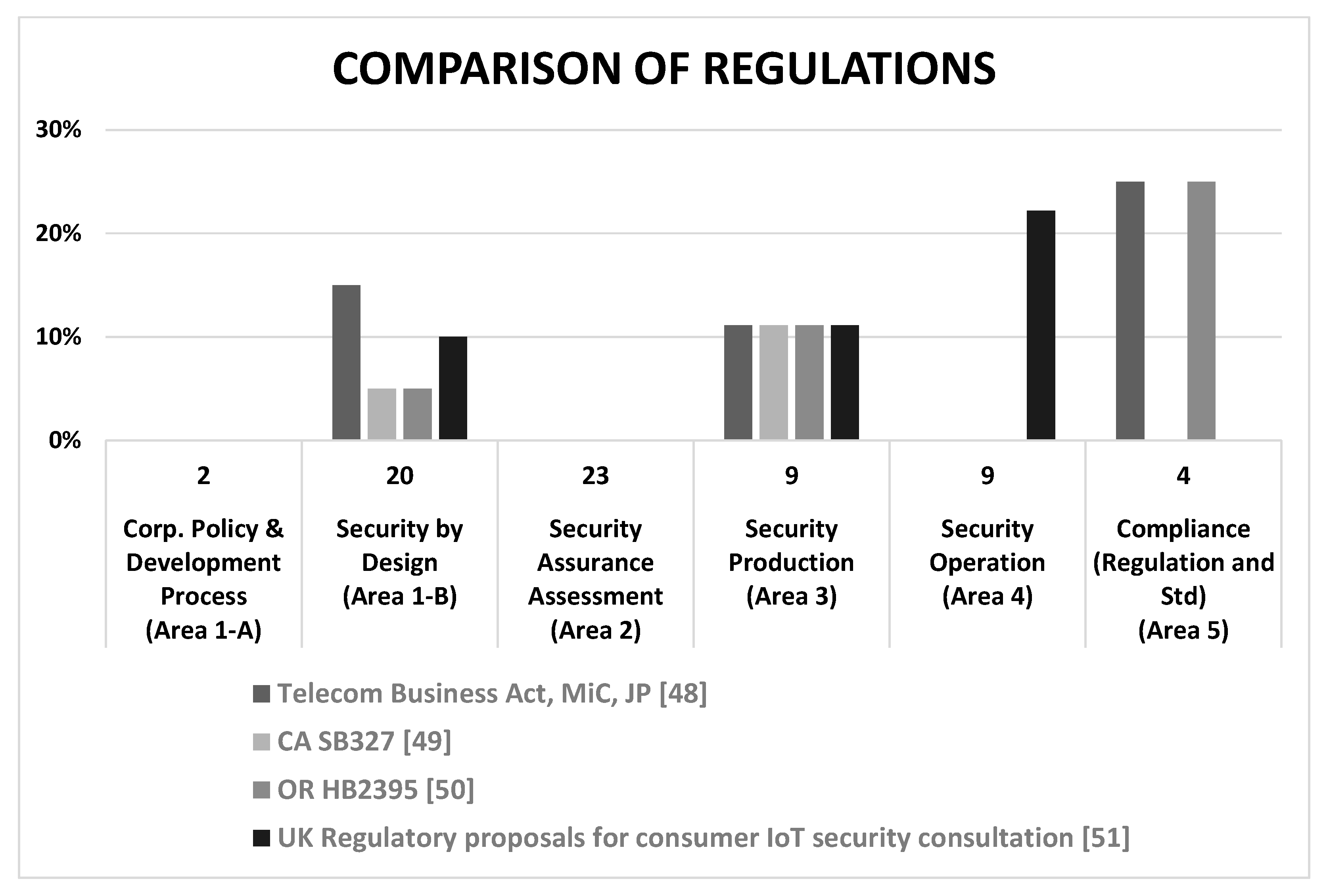

5.1. Regulations

5.2. Baseline Guidance

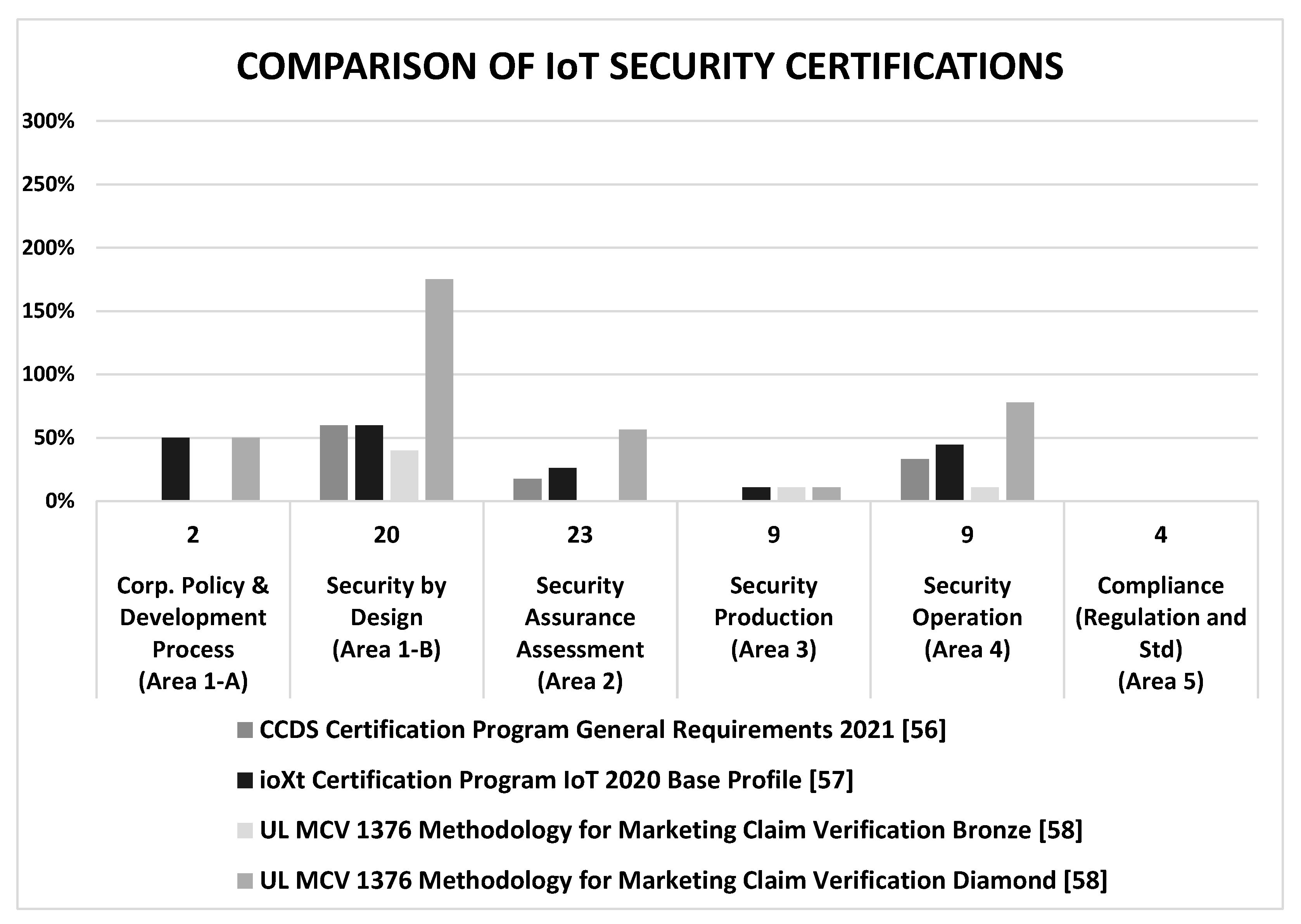

5.3. Certifications

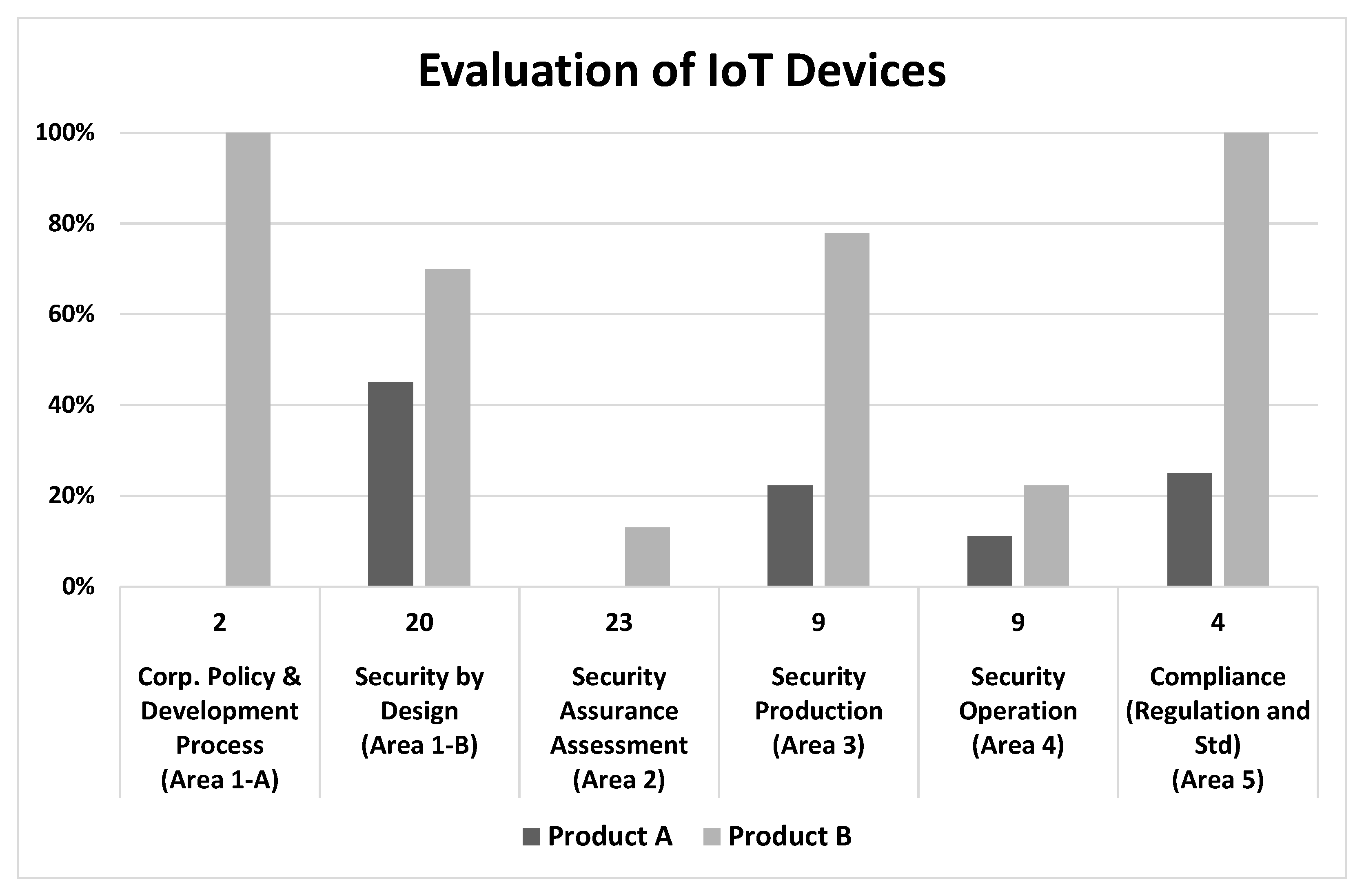

6. Evaluation of IoT Devices with the Proposed Method

6.1. Target IoT Devices

- They are consumer products that can be purchased online and in stores.

- Full-HD recording is the main selling point.

- GPS location recording.

- Wi-Fi (wireless) connectivity with a smartphone.

- 16 GB storage space.

- Easy to install and start using, powered from a cigarette-lighter socket.

- Downloadable applications for smartphones and PCs that can be connected to and functionally linked with a dashcam.

- Product design: shape and color.

- Price: Product A is cheaper than Product B.

6.2. Evaluation with the Proposed Method

6.3. Evaluation Result

7. Discussion of Findings

8. Future Directions

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Area 1: Security by Design

| Question | Sub-Question | Metrics |
|---|---|---|
| Does the company recognize the importance of handling product security? | Does the company have a product-security policy? | It is documented. = 1; There is no policy defined. = 0 |
| | Is the product-security-development process defined? | It is documented. = 1; There is no process defined. = 0 |
| Is security considered from the planning/design stage? | Is threat analysis performed? | There is an analysis result. = 1; It is not performed, or there is no result. = 0 |
| | Is risk assessment based on threat analysis performed? | There is an assessment result. = 1; It is not performed, or there is no result. = 0 |
| | Are threats selected for countermeasures based on the risk assessment, and is the risk-mitigation countermeasure design implemented? | There is a list of threats to be protected against. = 1; There is no list of threats to be treated. = 0 |
| | | There is a security countermeasure design document. = 1; There is no countermeasure design. = 0 |
| | Are the threats excluded from countermeasures clear? | There is a list of accepted threats. = 1; There is no list of accepted threats. = 0 |
| | Are methods for reducing the threats excluded from countermeasures, and alerts about them, described in manuals, etc.? | There is a document for users. = 1; There is no document. = 0 |
| | Is the handling of personal information taken into consideration? | There is a list of personal information to be handled. = 1; There is no list, or it is not considered. = 0 |
| Are secure development methods adopted? | Are secure coding rules applied? | Secure coding rules are applied. = 1; No rules are applied. = 0 |
| Are all the software components composing the product listed? | Is the adopted OS clear? | The OS name and version are clear. = 1; It is not clear. = 0 |
| | Is the adopted open-source software clear? | All of the open-source software names and versions are clear. = 1; Some or none of the OSS is clear. = 0 |
| | Is the adopted outsourced software clear? | Vendor name, component name, version, and country of origin of the outsourced software can be confirmed. = 1; It is not clear. = 0 |
| | Is the self-designed software clear? | The software name and version are confirmed. = 1; It is not clear. = 0 |
| | | Outsourcing vendor, component name, and version are confirmed. = 1; It is not clear. = 0 |
| Is there a security maintenance feature for the IoT product? | Is there software update capability? | The product is capable of updating software. = 2 (automatic), = 1 (manual); There is no update capability. = 0 |
| | Is there a software configuration self-verification function? (For automatic updates) | There is a function. = 1; There is no function. = 0 |
| | Is there an access control feature? | There is a function. = 1; There is no function. = 0 |
| | Is there an encryption feature? | There is a function. = 1; There is no function. = 0 |
| | Is there a logging function? | There is a function. = 1; There is no function. = 0 |
| | Is there a deactivation function or a fallback operation function when the security maintenance service ends? | There is a function. = 1; There is no function. = 0 |
| Is the IoT product designed with consideration of disposal? | Is there a function to delete user data for disposal? | There is a function. = 1; There is no function. = 0 |
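The component-listing sub-questions above lend themselves to a machine-checkable inventory. The following is a minimal sketch, not the authors' tooling: the `Component` record, its field names, and the choice to report a component kind that is absent from the product as "not applicable" (`None`) are all our assumptions. It scores the OS, OSS, outsourced, and self-designed sub-questions as 0/1.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Component:
    """One entry in a hypothetical software-component inventory."""
    name: str
    version: Optional[str]
    kind: str  # "os", "oss", "outsourced", or "self" (self-designed)
    vendor: Optional[str] = None          # checked only for outsourced software
    origin_country: Optional[str] = None  # checked only for outsourced software


def is_identified(c: Component) -> bool:
    """Apply the per-kind criteria from the Area 1 table to a single component."""
    if not c.name or not c.version:
        return False
    if c.kind == "outsourced":
        # Outsourced software also needs its vendor and country of origin.
        return bool(c.vendor and c.origin_country)
    return True


def inventory_metrics(components: List[Component]) -> Dict[str, Optional[int]]:
    """Return 1 if every component of a kind is identified, 0 if any is not,
    and None when the product contains no component of that kind."""
    metrics: Dict[str, Optional[int]] = {}
    for kind in ("os", "oss", "outsourced", "self"):
        group = [c for c in components if c.kind == kind]
        if not group:
            metrics[kind] = None  # treated as "not applicable" in this sketch
        else:
            metrics[kind] = int(all(is_identified(c) for c in group))
    return metrics


parts = [
    Component("ExampleOS", "4.2", "os"),
    Component("libexample", None, "oss"),  # version unknown, so OSS scores 0
]
print(inventory_metrics(parts))  # {'os': 1, 'oss': 0, 'outsourced': None, 'self': None}
```

A stricter reading of the table would score an empty kind as 0 ("not clear"); which convention fits depends on how an evaluator distinguishes "nothing to list" from "not listed".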

Appendix A.2. Area 2: Security Assurance Assessment

| Question | Sub-Question | Metrics |
|---|---|---|
| Is the product evaluated to ensure it is secure as designed? | Does the source code violate secure coding rules? | There are assessment results showing compliance with the rules. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It is not confirmed. = 0 |
| | Has static analysis of the source code confirmed that there are no vulnerabilities in the source code? | There are results of the static analysis. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Is the software free of known vulnerabilities? | There are evaluation results with the date. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Has it been confirmed that the latest security patches are applied to the OS/OSS? | There is a confirmation result. = 1; It is not confirmed. = 0 |
| | | The version of the applied patch is confirmed. = 1; There is no confirmation. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Has the implementation of preventive measures against HW analysis been confirmed? | There is confirmation that JTAG, UART, etc. are blocked. = 1; There is no confirmation. = 0 |
| | Is it verified that no unnecessary communication ports are open and that the open ports are not vulnerable? | There are evaluation results with the date. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Is it verified that there are no zero-day vulnerabilities? (Has a fuzzing assessment been performed?) | There are evaluation results with the date. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Have the security features and vulnerabilities of the outsourced software been evaluated? (Has the acceptance assessment been conducted?) | There are evaluation results with the date. = 1; There is no result. = 0 |
| | | Assessment tool name and version are confirmed. = 1; They are not confirmed. = 0 |
| | | The name of the evaluator is verified. = 1; It cannot be confirmed. = 0 |
| | Has the security service level of the cloud services been verified? | There is a contract (SLA clause) in place and confirmed. = 1; There is no confirmation. = 0 |
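Most Area 2 assessments repeat the same three metrics: a (dated) result, the assessment tool's name and version, and the evaluator's name. A minimal sketch of recording that evidence and scoring it, assuming a hypothetical `AssessmentRecord` structure of our own design (the tool and evaluator names below are placeholders):

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple


@dataclass
class AssessmentRecord:
    """Hypothetical evidence record for one Area 2 assessment (e.g., fuzzing)."""
    assessment: str
    result_date: Optional[date] = None  # date of the documented result, if any
    tool_name: Optional[str] = None
    tool_version: Optional[str] = None
    evaluator: Optional[str] = None


def evidence_metrics(rec: AssessmentRecord) -> Tuple[int, int, int]:
    """Score the three recurring Area 2 metrics (result, tool, evaluator) as 0/1."""
    has_result = int(rec.result_date is not None)
    has_tool = int(bool(rec.tool_name and rec.tool_version))  # both name and version needed
    has_evaluator = int(bool(rec.evaluator))
    return has_result, has_tool, has_evaluator


fuzzing = AssessmentRecord("fuzzing", date(2021, 9, 15), "ExampleFuzzer", None, "A. Tester")
print(evidence_metrics(fuzzing))  # (1, 0, 1): the tool version was not recorded
```

Keeping the three items in one record makes it hard to file a result without also noting who produced it and with which tool, which is the traceability the table asks for.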

Appendix A.3. Area 3: Security Production

| Question | Sub-Question | Metrics |
|---|---|---|
| Is the product produced in a secure manufacturing process? | Is the identity of the line manager verified for in-house production? | All employees are identified. = 1; Not all persons in the factory are identified. = 0 |
| | | There is a record of access control to the production site. = 1; There is no record of access control. = 0 |
| | Has the ODM producer’s manufacturing process been verified? | Company name and country of production are confirmed. = 1; It is hard to confirm who manufactures the product. = 0 |
| | | The results of the production process audit are confirmed. = 1; There is no confirmation. = 0 |
| | Is production controlled so that only genuine parts are used? | Certificates of authorized parts are verified. = 1; There is no confirmation. = 0 |
| | Is the production process capable of setting a unique ID and password for each device? | It is capable of setting a unique ID and password for each device. = 1; It is not capable. = 0 |
| Is there a security measure in place for the production system? | Is it possible to detect cyberattacks such as malware infiltration and virus infections on production systems? | It is capable of attack detection. = 1; It is not capable. = 0 |
| | Are security measures in place for production systems? | Security measures for the production system are in place. = 1; There is no security countermeasure on the production system. = 0 |
| | Is coordination with the CSIRT in place for incident response? | The CSIRT cooperates on factory incidents. = 1; There is no incident response readiness. = 0 |
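One production-side metric asks whether each device can be given a unique ID and password. As a hedged illustration only, the hypothetical helper below assigns sequential serial IDs and draws an independent random password per device from Python's `secrets` module; the `DEV-` prefix, the six-digit serial format, and the password length are arbitrary choices, not requirements from the paper or the guidelines.

```python
import secrets
from typing import Dict


def provision_batch(count: int, serial_prefix: str = "DEV") -> Dict[str, str]:
    """Assign each device in a production batch a unique ID and its own random password.

    Hypothetical helper: the ID format and password length are illustrative choices.
    """
    credentials: Dict[str, str] = {}
    for n in range(count):
        device_id = f"{serial_prefix}-{n:06d}"  # sequential, therefore unique within the batch
        # token_urlsafe(12) encodes 12 bytes from the OS CSPRNG as 16 URL-safe characters.
        credentials[device_id] = secrets.token_urlsafe(12)
    # A repeated password is astronomically unlikely, but the check is cheap.
    if len(set(credentials.values())) != len(credentials):
        raise RuntimeError("duplicate password generated; regenerate the batch")
    return credentials


batch = provision_batch(3)
print(sorted(batch))  # ['DEV-000000', 'DEV-000001', 'DEV-000002']
```

On a real line the resulting mapping is itself sensitive; passwords would typically be printed on the device label or delivered securely rather than kept in plaintext on the production system.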

Appendix A.4. Area 4: Security Operation

| Question | Sub-Question | Metrics |
|---|---|---|
| Is there a product security response team for the products in the market? | Is there an operational system to monitor vulnerability information for products? | SOC (security operation system) is in place. = 1; There is no system to monitor vulnerabilities. = 0 |
| | Is there an incident response system for products? | PSIRT (product security incident response team) is in place. = 1; There is no response system. = 0 |
| | Is the incident response process defined? | The incident response process is documented. = 1; There is no process defined. = 0 |
| | Is there a contact point for receiving vulnerability information? | The contact information is publicly available. = 1; There is no contact information. = 0 |
| | Is there a personal information handling policy and management system in place? | There are a policy and a management system. = 1; There is no policy and management system. = 0 |
| Is there a system for the stable operation of IoT products? | Is there a system monitoring the operational status of the cloud services with which the IoT products work? | The cloud operator’s contact information is clarified. = 1; There is no means to check the cloud operation. = 0 |
| | | It is capable of checking the status of cloud operation. = 1; It is not capable of checking the cloud operation. = 0 |
| | Is it capable of managing customer information for the service in use? | It is capable of managing customer information based on documented management rules. = 1; It is not capable. = 0 |
| Are restrictions on product security support clearly stated? | Are the warranty period and exemptions for security service/maintenance provided? | The security service/maintenance that the company provides is clarified. = 1; It is not clarified. = 0 |
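The Area 4 metric "The contact information is publicly available" can be met in several ways. One widely used convention, not referenced in the paper, is a `security.txt` file served at `/.well-known/security.txt` as specified in RFC 9116, where `Contact` (at least once) and `Expires` (exactly once) are required. A minimal sketch that renders such a file; the e-mail address and policy URL are placeholders:

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


def build_security_txt(contacts: List[str], policy_url: Optional[str] = None,
                       valid_days: int = 180) -> str:
    """Render a minimal security.txt body (RFC 9116) that publishes a vulnerability contact point."""
    if not contacts:
        raise ValueError("RFC 9116 requires at least one Contact field")
    lines = [f"Contact: {uri}" for uri in contacts]
    # Expires is mandatory, must appear exactly once, and uses an RFC 3339 timestamp.
    # RFC 9116 recommends an expiry less than a year ahead, hence the 180-day default.
    expires = datetime.now(timezone.utc) + timedelta(days=valid_days)
    lines.append("Expires: " + expires.strftime("%Y-%m-%dT%H:%M:%SZ"))
    if policy_url:
        lines.append(f"Policy: {policy_url}")  # optional link to the disclosure policy
    return "\n".join(lines) + "\n"


print(build_security_txt(["mailto:psirt@example.com"], "https://example.com/disclosure-policy"))
```

Because `Expires` is computed from the current time, the file has to be regenerated periodically, which also gives the PSIRT a natural prompt to check that the contact still works.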

Appendix A.5. Area 5: Law, Regulation, and International Standard

| Question | Sub-Question | Metrics |
|---|---|---|
| Does the product comply with the product-security laws and regulations of the region where it is sold? | Does the product meet legal and regulatory requirements? | There are evaluation results that meet the requirements. = 1; There is no evaluation result. = 0 |
| | Does the product have the required certifications or conformity statements, if necessary? | After confirming the necessity of a certification/conformity certificate, the acquisition result can be confirmed. = 1; The need for a certification/conformity certificate has not been confirmed. = 0 |
| Does the product comply with the required international standards? | Does the product have the required certifications or conformity statements, if necessary? | After confirming the necessity of a certification/conformity certificate, the acquisition result can be confirmed. = 1; The need for a certification/conformity certificate has not been confirmed. = 0 |
| Does the product comply with private security certification? | Has the product acquired certification of conformity with a standard that is required or voluntarily adopted? | After confirming the necessity or voluntary acquisition of a certification/conformity certificate, the acquisition result can be confirmed. = 1; The need for a certification/conformity certificate has not been decided. = 0 |

Appendix B

| Name of Source | Doc Type | Year | Country | Issued by | Org Type |
|---|---|---|---|---|---|
| Telecom Business Act [48] | Law/Regulation | 2020 | Japan | Ministry of Internal Affairs and Communications | Government |
| State Bill 327 [49] | Law/Regulation | 2020 | USA | State of California | Government |
| House Bill 2395 [50] | Law/Regulation | 2020 | USA | State of Oregon | Government |
| Consumer IoT Security Consultation [51] | Law/Regulation | 2020 | UK | Department for Digital, Culture, Media & Sport | Government |
| EN 303 645 v2.1 [55] | Baseline Standard | 2020 | EU | European Telecommunications Standards Institute (ETSI) | SDO |
| NISTIR 8259 [52], 8259A [53] | Baseline Standard | 2020 | USA | National Institute of Standards and Technology (NIST) | SDO |
| Baseline Security Recommendations for IoT [37] | Baseline Standard | 2017 | EU | ENISA (European Union Agency for Cybersecurity) | Government |
| The C2 Consensus on IoT Device Security Baseline Capabilities [54] | Baseline Standard | 2019 | USA | Council to Secure the Digital Economy (CSDE) | Industry |
| IoT Common Security Requirements Guidelines 2021 [56] | Certification | 2020 | Japan | Consumer Connected Device Security Council (CCDS) | Industry |
| ioXt 2020 Base Profile ver.1.0 [57] | Certification | 2020 | USA | ioXt Alliance, Inc. | Industry |
| Methodology for Marketing Claim Verification: Security Capabilities Verified to level Bronze/Silver/Gold/Platinum/Diamond, UL MCV 1376 [58] | Certification | 2019 | USA | UL LLC | Industry |

References

1. Xu, T.; Wendt, J.B.; Potkonjak, M. Security of IoT Systems: Design Challenges and Opportunities; IEEE/ACM ICCAD: San Jose, CA, USA, November 2014.

2. Oh, S.; Kim, Y.-G. Security Requirements Analysis for the IoT; Platcon: Busan, Korea, 2017.

3. Alaba, F.A.; Othman, M.; Abaker, I.; Hashem, T.; Alotaibi, F. Internet of Things security: A survey. J. Netw. Comput. Appl. 2017, 88, 10–28.

4. Mahmoud, R.; Yousuf, T.; Aloul, F.; Zualkernan, I. Internet of things (IoT) security: Current status, challenges and prospective measures. In Proceedings of the 2015 10th International Conference for Internet Technology and Secured Transactions (ICITST), London, UK, 14–16 December 2015.

5. Kolias, C.; Kambourakis, G.; Stavrou, A.; Voas, J. DDoS in the IoT: Mirai and Other Botnets. Computer 2017, 50, 80–84.

6. Krebs, B. Who Makes the IoT Things Under Attack? Krebs on Security: Virginia, USA, 3 October 2016. Available online: https://krebsonsecurity.com/2016/10/who-makes-the-iot-things-under-attack/ (accessed on 15 September 2021).

7. The Hong Kong Computer Emergency Response Team Coordination Centre (HKCERT) and the Hong Kong Productivity Council (HKPC). Device (Wi-Fi) Security Study; Hong Kong, China, March 2020. Available online: https://www.hkcert.org/f/blog/263544/95140340-8c09-4c9a-8c32-cedb3eb26056-DLFE-14407.pdf (accessed on 15 September 2021).

8. Baxley, B. From BIAS to Sweyntooth: Eight Bluetooth Threats to Network Security; Bastille Networks: San Francisco, CA, USA, December 2020; Available online: https://www.infosecurity-magazine.com/opinions/bluetooth-threats-network/ (accessed on 15 September 2021).

9. Vaccari, I.; Cambiaso, E.; Aiello, M. Remotely Exploiting AT Command Attacks on ZigBee Networks. Secur. Commun. Netw. 2017, 2017, 1723658.

10. Vallois, V.; Guenane, F.; Mehaoua, A. Reference Architectures for Security-by-Design IoT: Comparative Study. In Proceedings of the 2019 Fifth Conference on Mobile and Secure Services (MobiSecServ), Miami Beach, FL, USA, 2–3 March 2019.

11. ISO/IEC DIS 27400. Cybersecurity: IoT Security and Privacy—Guidelines; ISO: Geneva, Switzerland, 2021; Available online: https://www.iso.org/standard/44373.html (accessed on 13 December 2021).

12. Gillies, A. Improving the quality of information security management systems with ISO 27000. TQM J. 2011, 23, 367–376.

13. Baldini, G.; Skarmeta, A.; Fourneret, E.; Neisse, R.; Legeard, B.; Gall, F.L. Security certification and labelling in Internet of Things. In Proceedings of the 2016 IEEE 3rd World Forum on Internet of Things (WF-IoT), Reston, VA, USA, 12–14 December 2016.

14. Costa, D.M.; Eixeira, E.N.; Werner, C.M.L. Software Process Definition using Process Lines: A Systematic Literature Review. In Proceedings of the XLIV Latin American Computer Conference (CLEI), Sao Paulo, Brazil, 1–5 October 2018.

15. Haufe, K.; Brandis, K.; Colomo-Palacios, R.; Stantchev, V.; Dzombeta, S. A process framework for information security management. Int. J. Inf. Syst. Proj. Manag. 2016, 4, 27–47.

16. Siddiqui, S.T. Significance of Security Metrics in Secure Software Development. Int. J. Appl. Inf. Syst. 2017, 12, 10–15.

17. Pino, F.J.; García, F.; Piattini, M. Software process improvement in small and medium software enterprises: A systematic review. Softw. Qual. J. 2008, 16, 237–261.

18. Humphrey, W.S. Managing the Software Process; Addison-Wesley: Boston, MA, USA, 1989; pp. 247–286. ISBN 978-0-201-18095-4.

19. Jones, C. Patterns of large software systems: Failure and success. Computer 1995, 28, 86–87.

20. ISO/IEC 30141. Internet of Things (IoT)—Reference Architecture; ISO: Geneva, Switzerland, 2018; Available online: https://www.iso.org/standard/65695.html (accessed on 18 October 2021).

21. Atta, N.; Talamo, C. Digital Transformation in Facility Management (FM). IoT and Big Data for Service Innovation. In Digital Transformation of the Design, Construction and Management Processes of the Built Environment; Research for Development; Springer: Berlin, Germany, December 2019; pp. 267–278.

22. CCMB-2017-04-001. Common Criteria for Information Technology Security Evaluation Part 1: Introduction and General Model Ver. 3.1, Rev. 5, The Common Criteria. April 2017; p. 2. Available online: https://www.commoncriteriaportal.org/files/ccfiles/CCPART1V3.1R5.pdf (accessed on 15 September 2021).

23. ISASecure. IEC 62443—EDSA Certification. Available online: https://www.isasecure.org/en-US/Certification/IEC-62443-EDSA-Certification-(In-Japanese) (accessed on 15 September 2021). (In Japanese)

24. Mendes, N.; Madeira, H.; Durães, J. Security Benchmarks for Web Serving Systems. In Proceedings of the 2014 IEEE 25th International Symposium on Software Reliability Engineering (ISSRE), Naples, Italy, 3–6 November 2014.

25. Oliveira, R.; Raga, M.; Laranjeiro, N.; Vieira, M. An approach for benchmarking the security of web service frameworks. Future Gener. Comput. Syst. 2020, 110, 833–848.

26. Bernsmed, K.; Jaatun, M.G.; Undheim, A. Security in service level agreements for cloud computing. In Proceedings of the 1st International Conference on Cloud Computing and Services Science (CLOSER 2011), Noordwijkerhout, The Netherlands, 7–9 May 2011; Available online: https://jaatun.no/papers/2011/CloudSecuritySLA-Closer.pdf (accessed on 15 September 2021).

27. Keoh, S.L.; Kumar, S.S. Securing the Internet of Things: A Standardization Perspective. IEEE Internet Things J. 2014, 1, 265–275.

28. Granjal, J.; Monteiro, E.; Silva, J.S. Security for the Internet of Things: A Survey of Existing Protocols and Open Research Issues. IEEE Commun. Surv. Tutor. 2015, 17, 1294–1312.

29. Aversano, L.; Bernardi, M.L.; Cimitile, M.; Pecori, R. A systematic review on Deep Learning approaches for IoT security. Comput. Sci. Rev. 2021, 40, 100389.

30. Ahmad, R.; Alsmadi, I. Machine learning approaches to IoT security: A systematic literature review. Internet Things 2021, 14, 100365.

31. Samann, F.E.F.; Zeebaree, S.R.; Askar, S. IoT Provisioning QoS based on Cloud and Fog Computing. J. Appl. Sci. Technol. Trends 2021, 2, 29–40.

32. Zikria, Y.B.; Ali, R.; Afzai, M. Next-Generation Internet of Things (IoT): Opportunities, Challenges, and Solutions. Sensors 2021, 21, 1174.

33. Fizza, K.; Barnerjee, A.; Mitra, K.; Jayaraman, P.P.; Ranjan, R.; Patel, P.; Georgakopoulos, D. QoE in IoT: A vision, survey and future directions. Discov. Internet Things 2021, 1, 4.

34. Report on the Current Status and Awareness of Security Measures among IoT Product and Service Developers; Information-Technology Promotion Agency: Tokyo, Japan, March 2018; Available online: https://www.ipa.go.jp/files/000065094.pdf (accessed on 15 September 2021).

35. Ito, K.; Morisaki, S.; Goto, A. A Study toward Quality Metrics for IoT Device Cybersecurity Capability; International Studies Association (ISA) Asia-Pacific: Singapore, July 2019; p. 22. Available online: https://pure.bond.edu.au/ws/portalfiles/portal/34024127/ISA_AP_Singapore_2019_Full_Program.pdf (accessed on 15 September 2021).

36. Wohlin, C. Guidelines for Snowballing in Systematic Literature Studies and a Replication in Software Engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering (EASE ’14), London, UK, 13–14 May 2014; Article No. 38, pp. 1–10.

37. Baseline Security Recommendations for IoT; European Union Agency for Cybersecurity (ENISA): Attiki, Greece, 2017; ISBN 978-92-9204-236-3.

38. Framework for Improving Critical Infrastructure Cybersecurity v1.1; National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, April 2018.

39. IoT Security Guidelines for Endpoint Ecosystem, Ver. 2.2; The GSM Association (GSMA): Atlanta, GA, USA, February 2020; Available online: https://www.gsma.com/iot/wp-content/uploads/2020/05/CLP.13-v2.2-GSMA-IoT-Security-Guidelines-for-Endpoint-Ecosystems.pdf (accessed on 15 September 2021).

40. Abdulrazig, A.; Norwawi, N.; Basir, N. Security measurement based on GQM to improve application security during requirements stage. Int. J. Cyber-Secur. Digit. Forensics (IJCSDF) 2012, 1, 211–220. Available online: http://oaji.net/articles/2014/541-1394063127.pdf (accessed on 15 September 2021).

41. Yahya, F.; Walters, R.J.; Wills, G. Using Goal-Question-Metric (GQM) Approach to Assess Security in Cloud Storage. Lect. Notes Comput. Sci. 2017, 10131, 223–240.

42. Dodson, D.; Souppaya, M.; Scarfone, K. Mitigating the Risk of Software Vulnerabilities by Adopting a Secure Software Development Framework (SSDF); NIST: Gaithersburg, MD, USA, April 2020.

43. Security Development Lifecycle (SDL). Microsoft. 2008. Available online: https://www.microsoft.com/en-us/securityengineering/sdl/ (accessed on 15 September 2021).

44. Secure SDLC (Software Development Life Cycle). Synopsys. Available online: https://www.synopsys.com/blogs/software-security/secure-sdlc/ (accessed on 15 September 2021).

45. SDLC (Secure Development Life Cycle); PwC: Tokyo, Japan, 2019; Available online: https://www.pwc.com/jp/ja/services/digital-trust/cyber-security-consulting/product-cs/sdlc.html (accessed on 15 September 2021). (In Japanese)

46. Cybersecurity Strategy; National Center of Incident Readiness and Strategy of Cybersecurity (NISC): Tokyo, Japan, September 2015. Available online: https://www.nisc.go.jp/active/kihon/pdf/cs-senryaku-kakugikettei.pdf (accessed on 15 September 2021). (In Japanese)

47. Basili, V.; Caldiera, C.; Rombach, D. Goal, question, metric paradigm. In Encyclopedia of Software Engineering; Wiley: Hoboken, NJ, USA, 1994; Volume 2, pp. 527–532. ISBN 1-54004-8. Available online: http://www.kiv.zcu.cz/~brada/files/aswi/cteni/basili92goal-question-metric.pdf (accessed on 15 September 2021).

48. Terminal Conformity Regulation of Telecommunications Business Law; Part 10 of Section 34; Ministry of Internal Affairs and Communications of Japan: Tokyo, Japan, January 2020. Available online: https://elaws.e-gov.go.jp/document?lawid=360M50001000031 (accessed on 15 September 2021). (In Japanese)

49. California Senate Bill No. 327; CA, USA, September 2018. Available online: https://leginfo.legislature.ca.gov/faces/billTextClient.xhtml?bill_id=201720180SB327 (accessed on 15 September 2021).

50. Oregon House Bill 2395; OR, USA, July 2019. Available online: https://legiscan.com/OR/text/HB2395/id/2025565/Oregon-2019-HB2395-Enrolled.pdf (accessed on 15 September 2021).

51. Department for Digital, Culture, Media & Sport, UK. Consultation on Regulatory Proposal on Consumer IoT Security. 2020. Available online: https://www.gov.uk/government/consultations/consultation-on-regulatory-proposals-on-consumer-iot-security (accessed on 15 September 2021).

52. Foundational Cybersecurity Activities for IoT Device Manufacturers; NISTIR 8259; NIST: Gaithersburg, MD, USA, 2020.

53. IoT Device Cybersecurity Capability Core Baseline; NISTIR 8259A; NIST: Gaithersburg, MD, USA, 2020.

54. Council to Secure the Digital Economy. The C2 Consensus on IoT Device Security Baseline Capabilities; USA, 2019. Available online: https://securingdigitaleconomy.org/wp-content/uploads/2019/09/CSDE_IoT-C2-Consensus-Report_FINAL.pdf (accessed on 15 September 2021).

55. Cybersecurity for Consumer Internet of Things: Baseline Requirements, EN 303 645. V2.1.1; ETSI: Sophia Antipolis, France, June 2020; Available online: //www.etsi.org/deliver/etsi_en/303600_303699/303645/02.01.01_60/en_303645v020101p.pdf (accessed on 15 September 2021).

56. IoT Common Security Requirements Guidelines 2021; Connected Consumer Device Security Council of Japan (CCDS): Tokyo, Japan, November 2020; Available online: https://www.ccds.or.jp/english/contents/CCDS_SecGuide-IoTCommonReq_2021_v1.0_eng.pdf (accessed on 15 September 2021).

57. ioXt 2020 Base Profile Ver. 1.00; ioXt Alliance, Inc.: Newport Beach, CA, USA, April 2020; Available online: https://static1.squarespace.com/static/5c6dbac1f8135a29c7fbb621/t/5ede6a88e6a927219ee86bb2/1591634577949/ioXt_2020_Base_Profile_1.00_C-03-29-01.pdf (accessed on 15 September 2021).

58. UL MCV 1376, Methodology for Marketing Claim Verification: Security Capabilities Verified to Level Bronze/Silver/Gold/Platinum/Diamond (IoT Security Rating); Underwriter Laboratories LLC.: Northbrook, IL, USA, June 2019; Available online: https://www.shopulstandards.com/PurchaseProduct.aspx?UniqueKey=40671 (accessed on 13 December 2021).

59. Botella, P.; Burgues, X.; Carvallo, J.P.; Franch, X. ISO/IEC 9126 in practice: What do we need to know? In Proceedings of the 1st Software Measurement European Forum (SMEF), Rome, Italy, 28–30 January 2004.

60. Olsson, T.; Runeson, P. V-GQM: A feed-back approach to validation of a GQM study. In Proceedings of the Seventh International Software Metrics Symposium, London, UK, 4–6 April 2001.

61. Othman, B.; Angin, L.; Weffers, P.; Bhargava, B. Extending the Agile Development Process to Develop Acceptably Secure Software. IEEE Trans. Dependable Secur. Comput. 2014, 11, 497–509.

62. Oueslati, H.; Rahman, M.M.; Othmane, L.B. Literature Review of the Challenges of Developing Secure Software Using the Agile Approach. In Proceedings of the 2015 10th International Conference on Availability, Reliability and Security (ARES), Toulouse, France, 24–27 August 2015.

63. Yasar, H.; Kontostathis, K. Where to Integrate Security Practices on DevOps Platform. Int. J. Secur. Softw. Eng. 2016, 7, 39–50.

64. Constante, F.M.; Soares, R.; Pinto-Albuquerque, M.; Mendes, D.; Beckers, K. Integration of Security Standards in DevOps Pipelines: An Industry Case Study. In Product-Focused Software Process Improvement (PROFES 2020); Springer: Turin, Italy, 2020; pp. 434–451.

65. Guide to Secure the Quality of IoT Devices and Systems; Information Technology Promotion Agency of Japan (IPA): Tokyo, Japan, February 2019; Available online: https://www.ipa.go.jp/files/000064877.pdf (accessed on 15 September 2021). (In Japanese)

66. Jinesh, M.K.; Abhiraj, K.S.; Bolloiu, S.; Brukbacher, S. Future-Proofing the Connected World: 13 Steps to Develop Secure IoT; CSA: Bellingham, WA, USA, January 2016.

67. Security Guidelines for Product Categories—Automotive on-Board Devices—Ver. 2.0; CCDS: Tokyo, Japan, May 2017. Available online: http://ccds.or.jp/english/contents/CCDS_SecGuide-Automotive_On-board_Devices_v2.0_eng.pdf (accessed on 15 September 2021).

68. IoT Security Assessment Checklist Ver. 3; GSMA: Atlanta, GA, USA, September 2018; Available online: https://www.gsma.com/security/resources/clp-17-gsma-iot-security-assessment-checklist-v3-0/ (accessed on 15 September 2021).

69. IoT Security Evaluation and Assessment Guideline; CCDS: Tokyo, Japan, June 2017; Available online: http://ccds.or.jp/public/document/other/guidelines/CCDS_IoT%E3%82%BB%E3%82%AD%E3%83%A5%E3%83%AA%E3%83%86%E3%82%A3%E8%A9%95%E4%BE%A1%E6%A4%9C%E8%A8%BC%E3%82%AC%E3%82%A4%E3%83%89%E3%83%A9%E3%82%A4%E3%83%B3_rev1.0.pdf (accessed on 15 September 2021). (In Japanese)

| Requirements | Aspect |
|---|---|
| R1: Describing the development process transparently | 1: Security policy of an IoT vendor |
| | 2: Quality of security development process |
| R2: Describing the cybersecurity capability properly | Quality of product cybersecurity performance |
| R3: Responding to the market needs and/or requirements | 1: Covering the requirements by law or regulation |
| | 2: Following the recommendations of international standards and guidelines |
| R4: Security support program (post-market) | Security monitoring, receiving the vulnerability input, update, etc. |
| R5: Any items gaining the user’s trust | - |

| Area | Goals |
|---|---|
| 1-A. Security by Design (Corporate Policy & Development Process Standard) | G1A-1: To provide secure products which gain the trust of customers. |
| | G1A-2: To define the corporate standard of secure development processes so that all products provided can be manufactured with security throughout the product lifecycle. |
| 1-B. Security by Design (Security measures, Secure Development) | G1B: To develop secure products based on the defined development standard from the planning stage of the product lifecycle. |
| 2. Security Assurance Assessment | G2: To evaluate and confirm that secure products are developed as designed. |
| 3. Security Production | G3-1: To carry out production with a secure production operating system to avoid containing security risks. |
| | G3-2: To secure the supply continuity. |
| 4. Security Operation | G4: To take prompt actions to minimize the damage to customers when a security risk becomes apparent in the provided product. |
| 5. Compliance with Law, Regulation, and International Standard | G5: To provide products complying with laws, regulations, and international standards of the destination market. |
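These goals sit at the top of the GQM hierarchy that the Appendix A tables refine into questions, sub-questions, and metrics. The text here does not prescribe how metric values are aggregated, so the sketch below assumes a simple ratio of achieved to attainable points per goal. The class names, the flattening of sub-questions into questions, and the example scores are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Metric:
    description: str
    score: int          # value observed for the product under evaluation
    max_score: int = 1  # most metrics are 0/1; update capability allows 2 (automatic)

    def __post_init__(self) -> None:
        if not 0 <= self.score <= self.max_score:
            raise ValueError(f"score {self.score} outside 0..{self.max_score}")


@dataclass
class Question:
    text: str
    metrics: List[Metric] = field(default_factory=list)


@dataclass
class Goal:
    goal_id: str
    area: str
    questions: List[Question] = field(default_factory=list)

    def attainment(self) -> float:
        """Share of attainable points achieved across every metric under this goal."""
        achieved = sum(m.score for q in self.questions for m in q.metrics)
        possible = sum(m.max_score for q in self.questions for m in q.metrics)
        return achieved / possible if possible else 0.0


g1b = Goal("G1B", "1-B. Security by Design", [
    Question("Is threat analysis performed?", [Metric("analysis result exists", 1)]),
    Question("Is there software update capability?",
             [Metric("update capability (manual)", 1, max_score=2)]),
])
print(f"{g1b.goal_id}: {g1b.attainment():.2f}")  # G1B: 0.67
```

A ratio keeps goals with different numbers of metrics comparable, at the cost of weighting every metric point equally; a weighted scheme would be an equally valid design choice.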

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ito, K.; Morisaki, S.; Goto, A. IoT Security-Quality-Metrics Method and Its Conformity with Emerging Guidelines. IoT 2021, 2, 761-785. https://doi.org/10.3390/iot2040038
