The coronavirus (COVID-19) pandemic has continued to have a global impact on society since its initial spread began in late 2019 [1]. One of the most visible ways the coronavirus has affected society’s daily errands and routines is the widespread use of facial masks, in many cases under government or commercial mandates, intended to slow the spread of the virus by impeding the transfer of respiratory droplets from the nose and mouth [2]. Several US states now legally mandate that people wear masks before entering a public space where social distancing is not practical, such as a grocery store. As of early 2021, additional federal mandates [3,4] have been put into place, further extending those requirements.

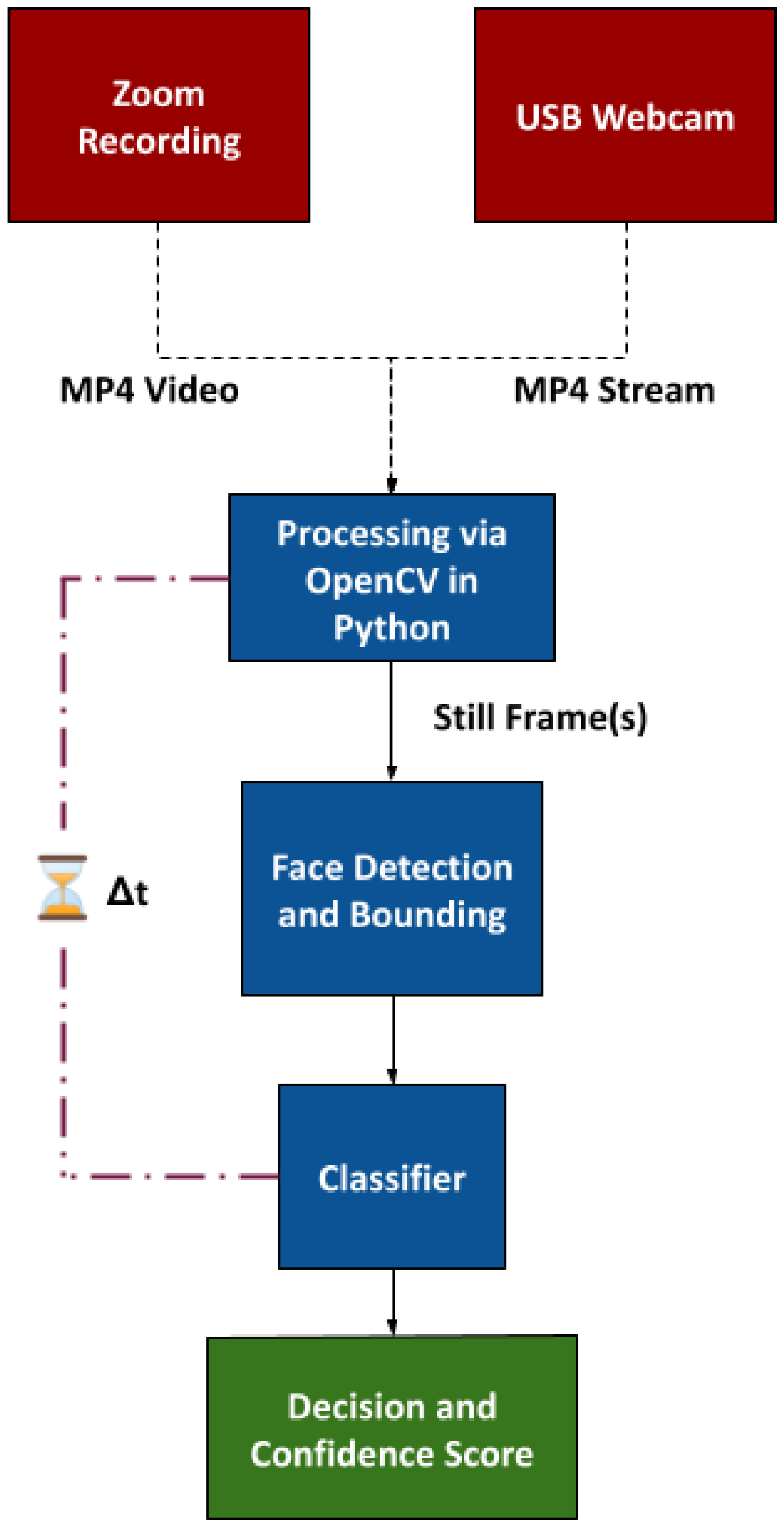

Independent of questions of mask efficacy or policy, this paper seeks to determine how effectively compliance with masking guidelines can be gauged, since it is uncertain how many people actually follow these mandates. Further, the paper seeks to validate the technical capability and implementation feasibility of a sub-$100, Raspberry Pi-scale solution suitable for installation at the front door of a store or other congested passageway.

1.1. Motivation

A widespread problem since the inception of the pandemic has been not just the virus itself, but its airborne spread, which is believed to be directly correlated with mask usage [

5]. One particular challenge in the present environment is that of mask adherence, which has largely been delegated to ‘gatekeeper’ store employees that can themselves get sick and/or lead to altercations when prompting incoming patrons to don their masks [

6,

7,

8]. Industry is increasingly adopting

Internet of Things (IoT) devices that incorporate machine learning for various applications [

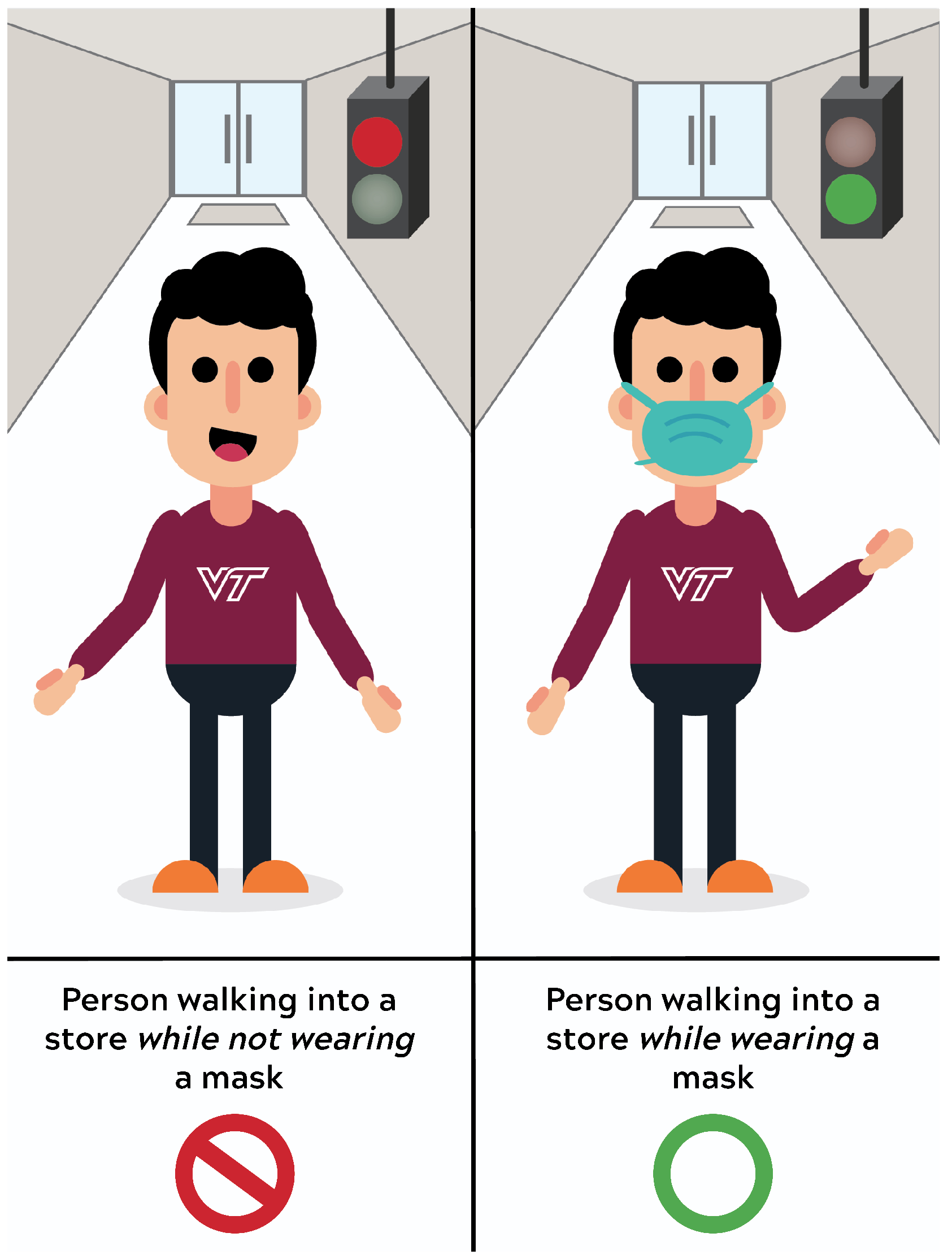

9]. It is clear that an IoT device presents an ideal solution for helping society adapt to the challenges presented by the pandemic. Therefore, we want to test the feasibility of a more automated machine-based solution that is capable of giving a binary decision as to whether individuals are wearing masks, which may be converted to a visual green/red light that indicates permission to enter; a notional example of this process is depicted in

Figure 1. For maximum marketability and deployment versatility, the solution is designed to be capable of processing collected data without requiring cloud or other third-party computing services to ensure that inference decisions are relayed to users reliably and immediately. Our solution lays the initial foundation and identifies the challenges of what we envision will be a fully-automatic ‘mask compliance’ assessment IoT device that can record, analyze, upload, and communicate data to other entities such as epidemiologists, state health departments, human resources personnel, or consumer marketing researchers.

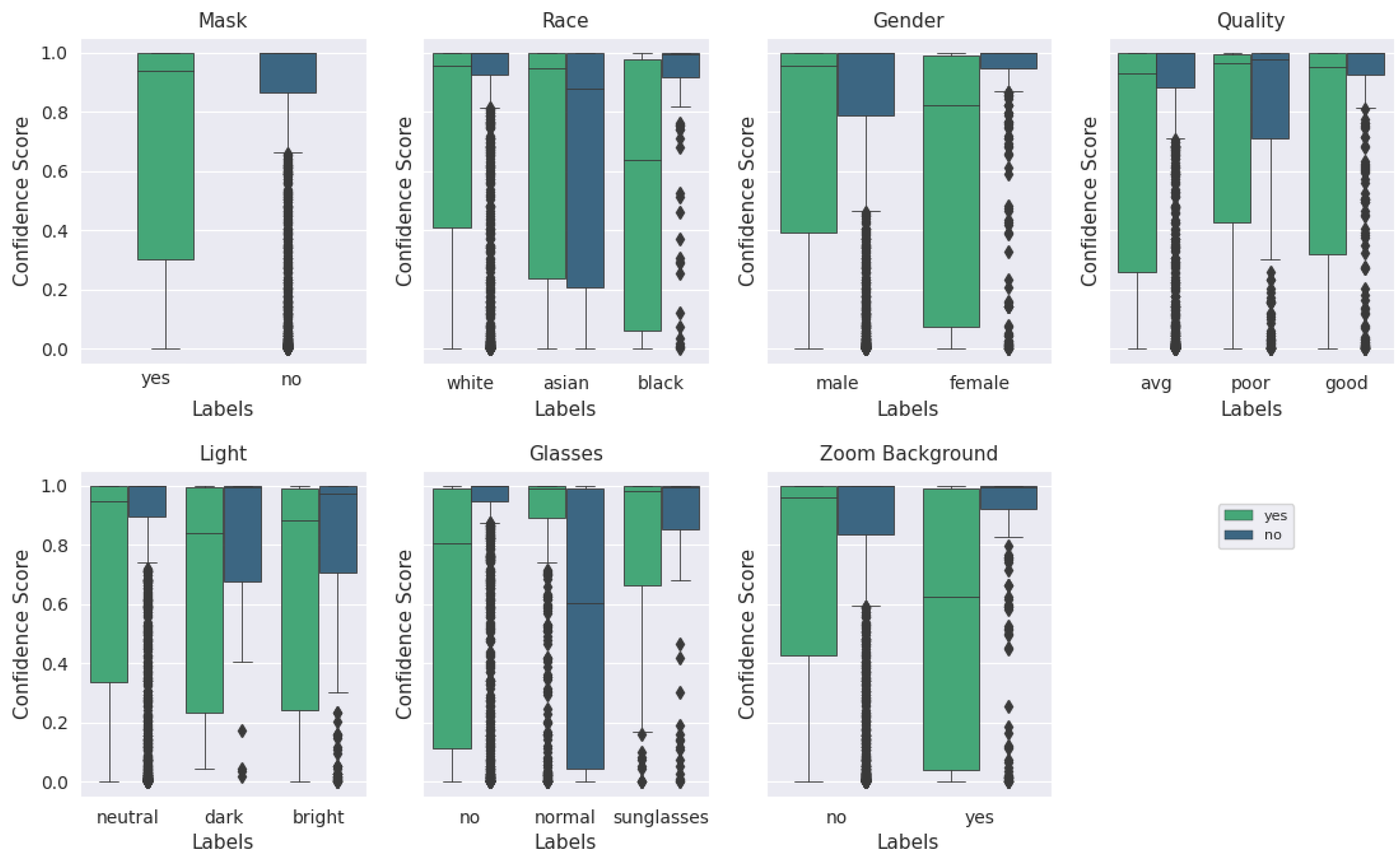

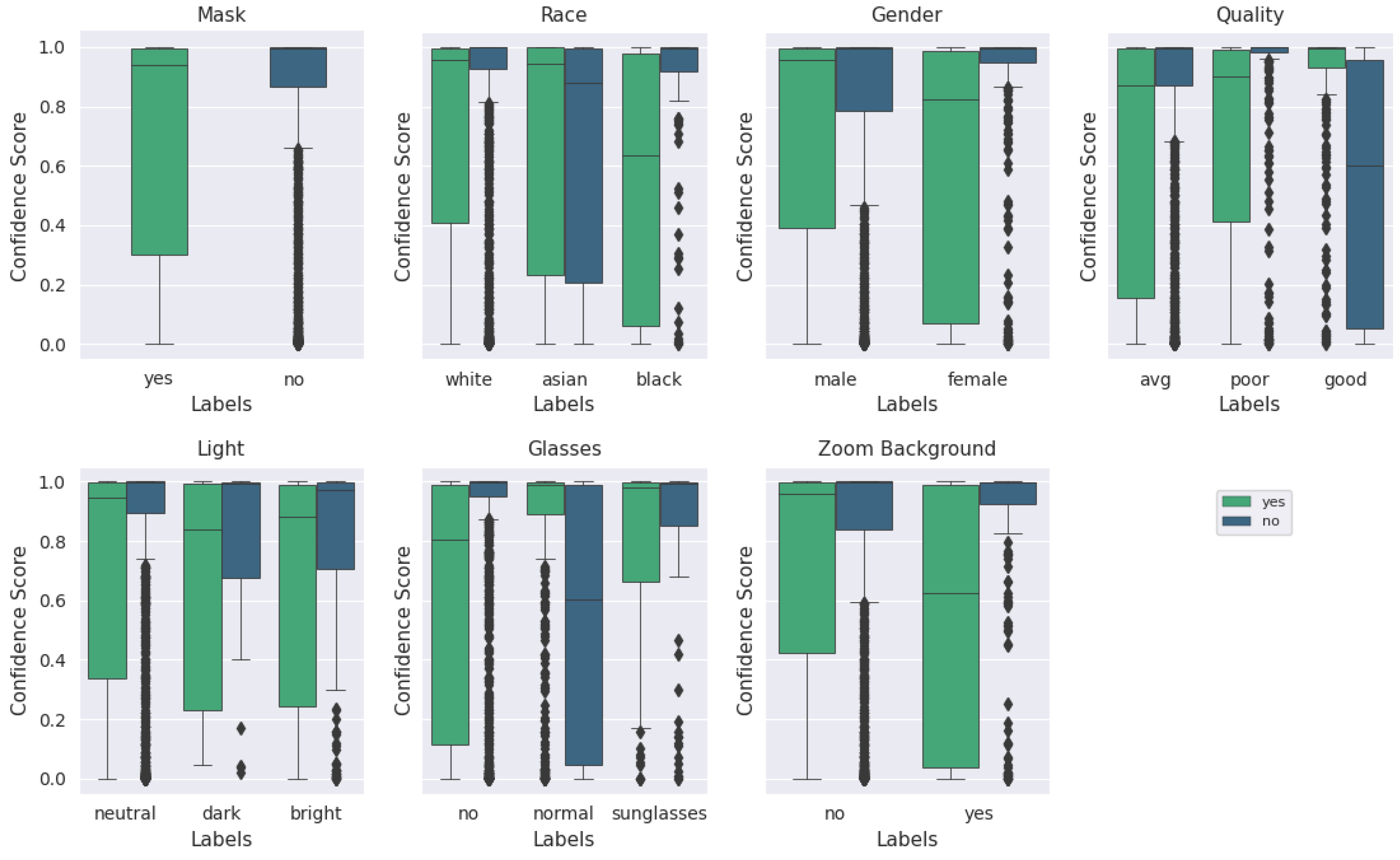

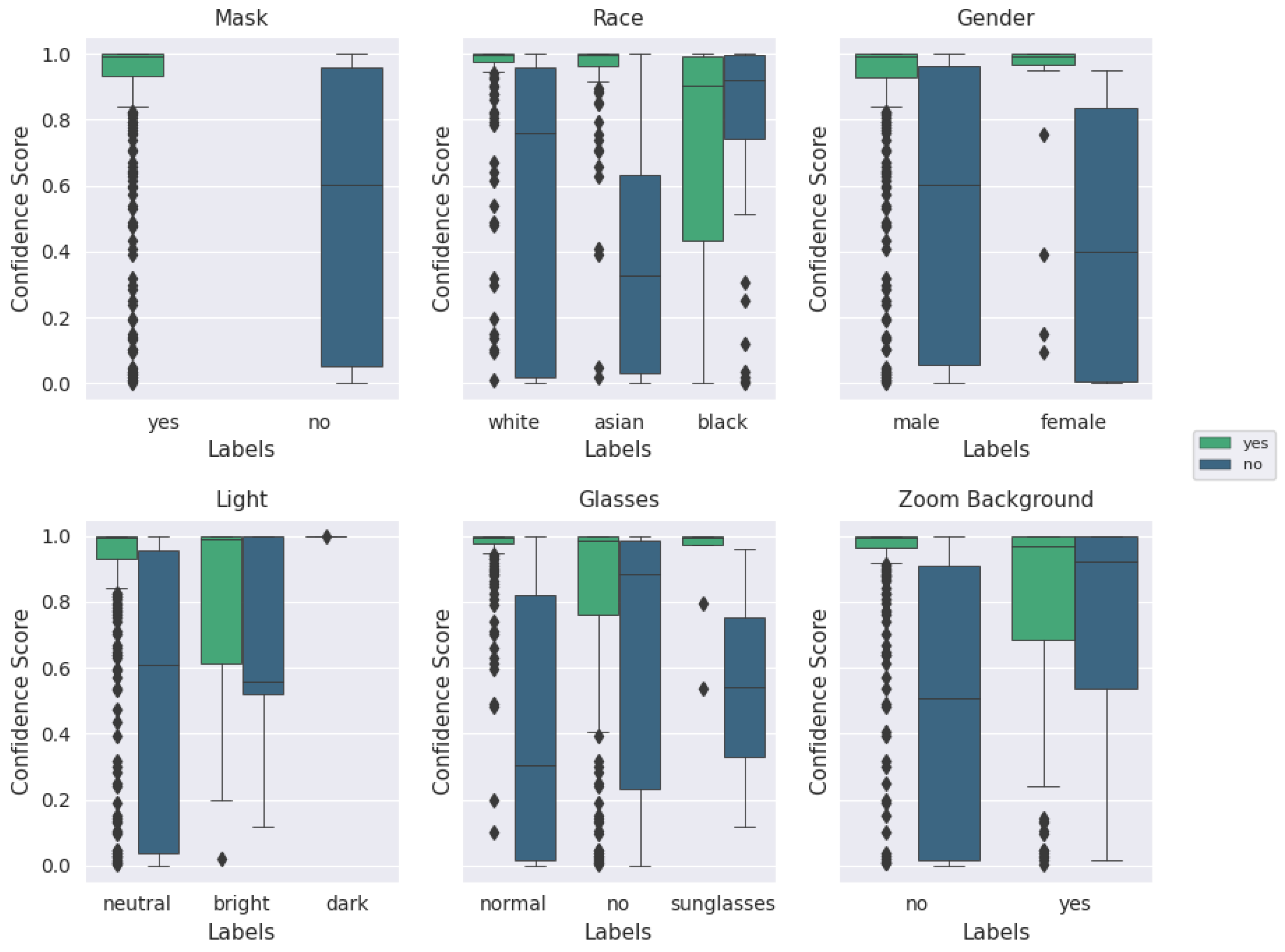

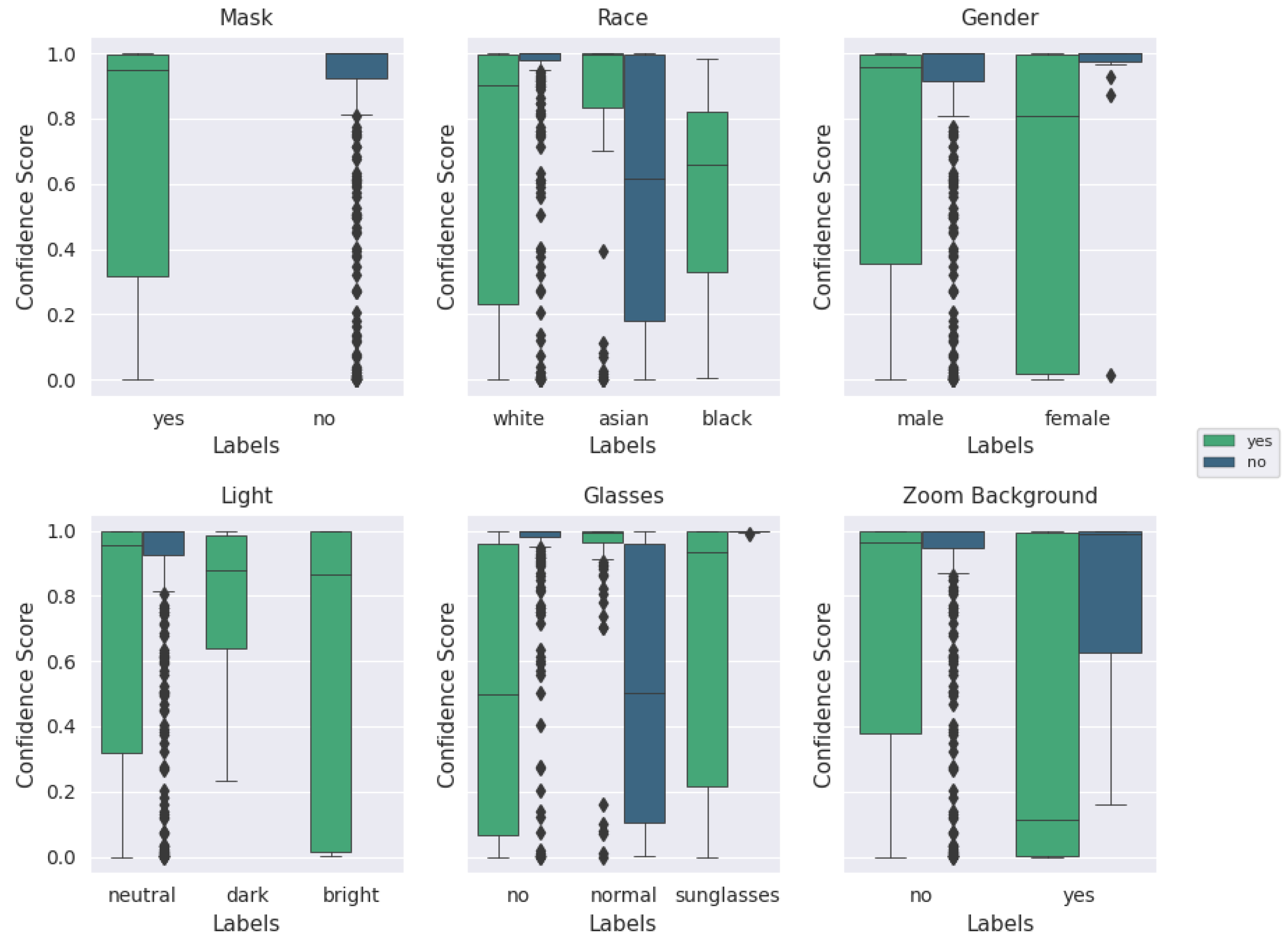

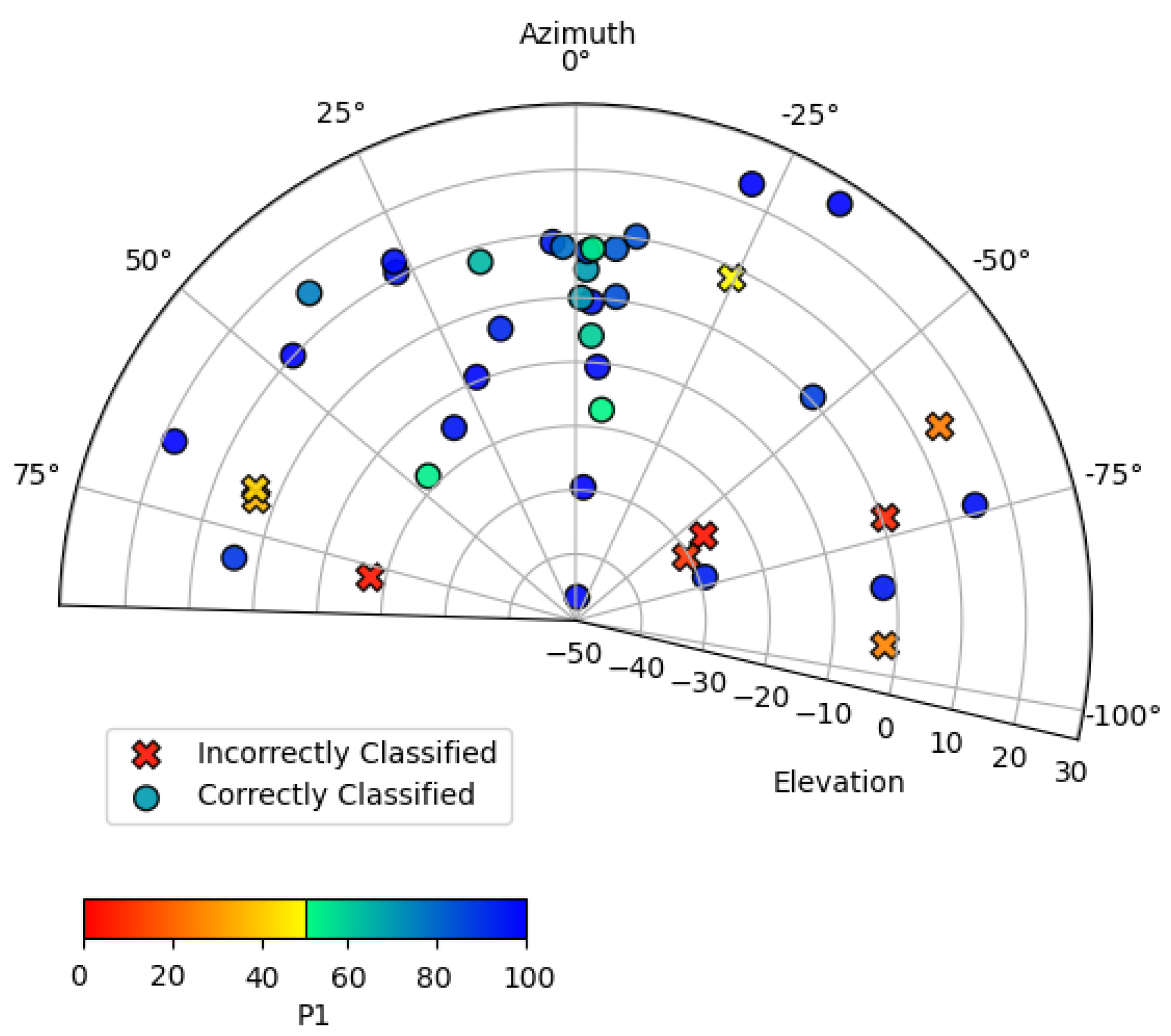

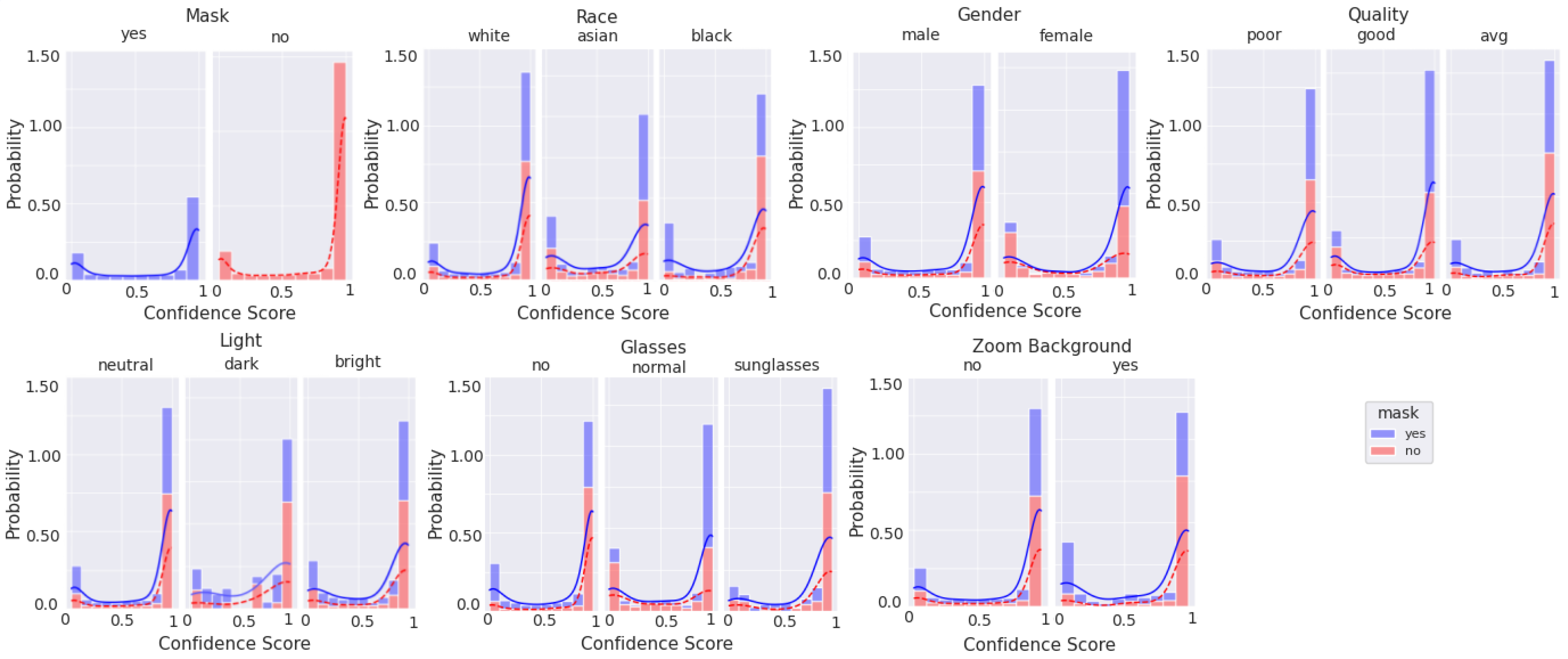

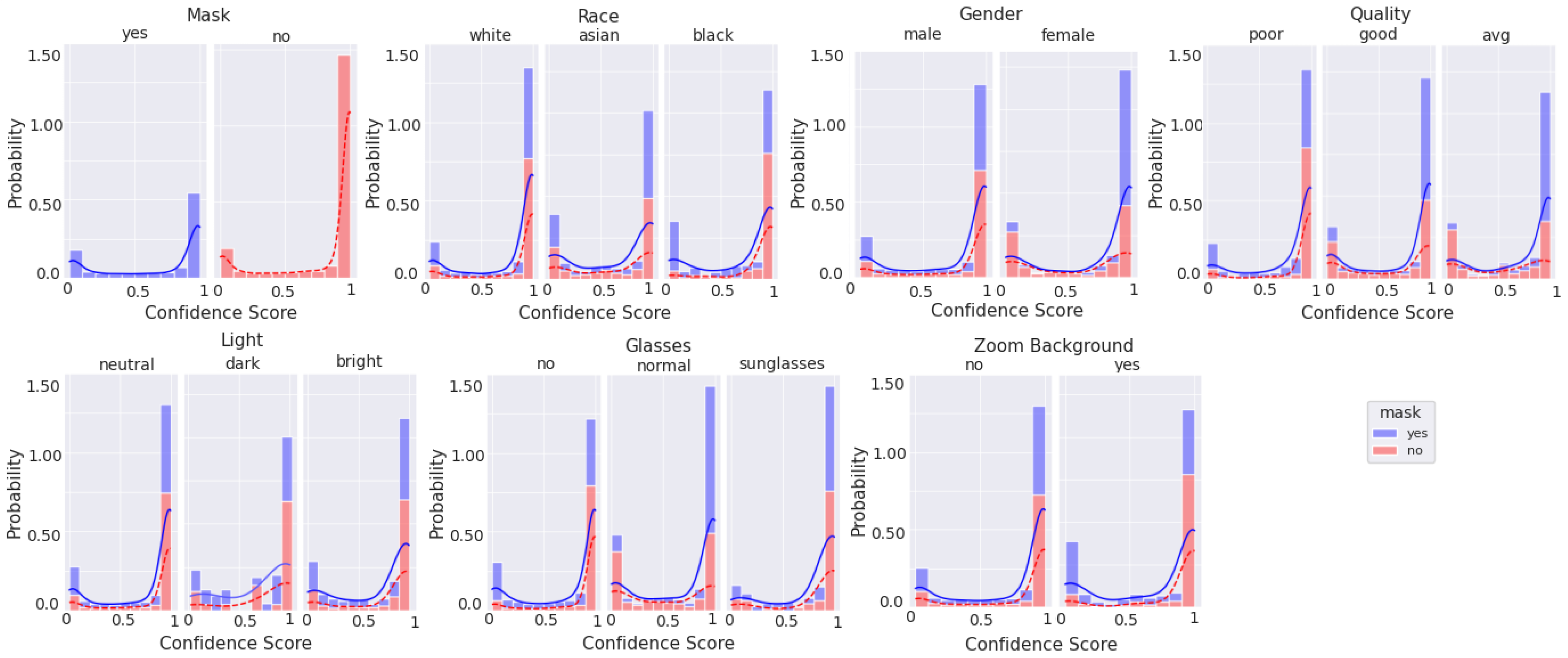

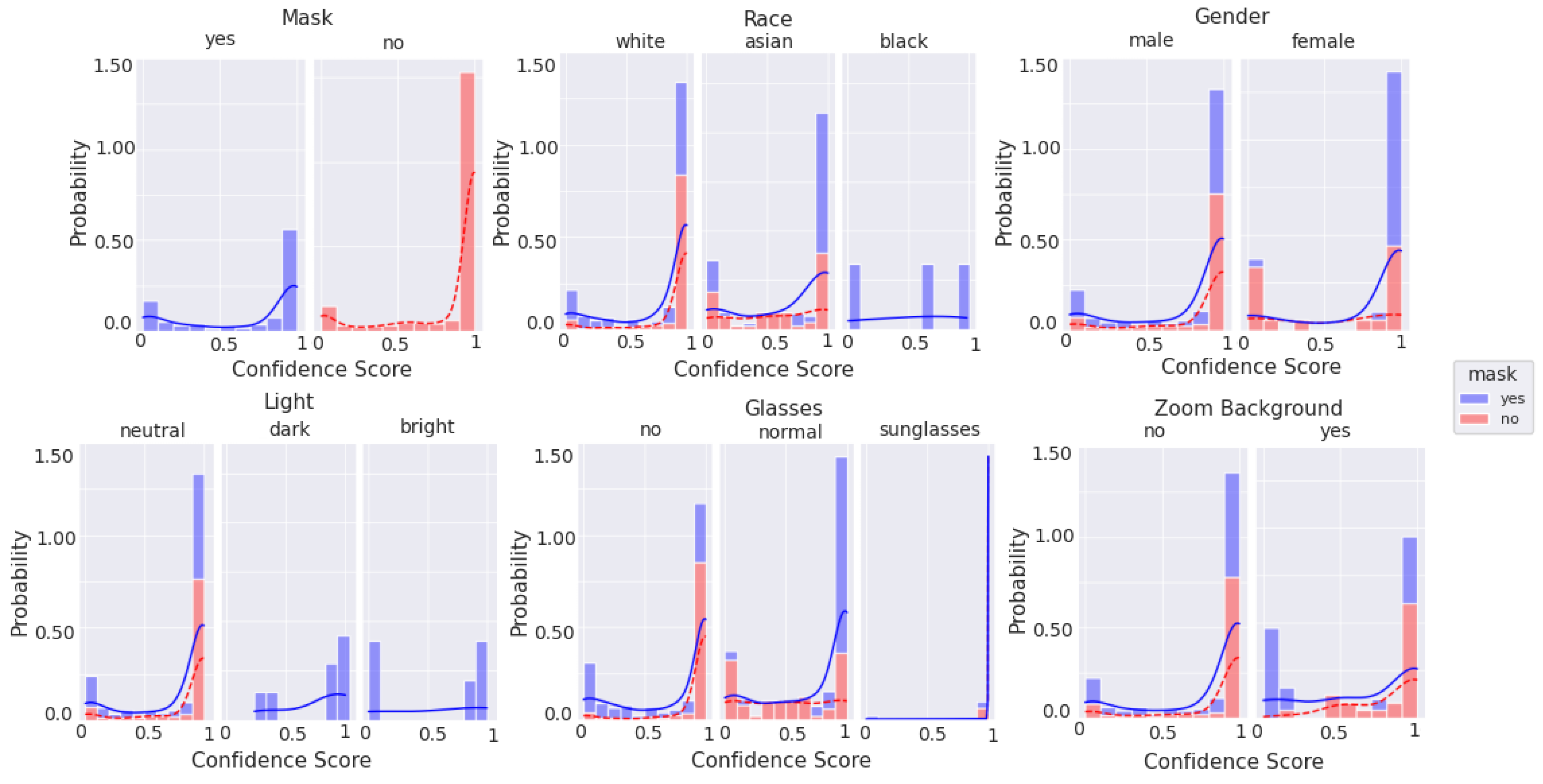

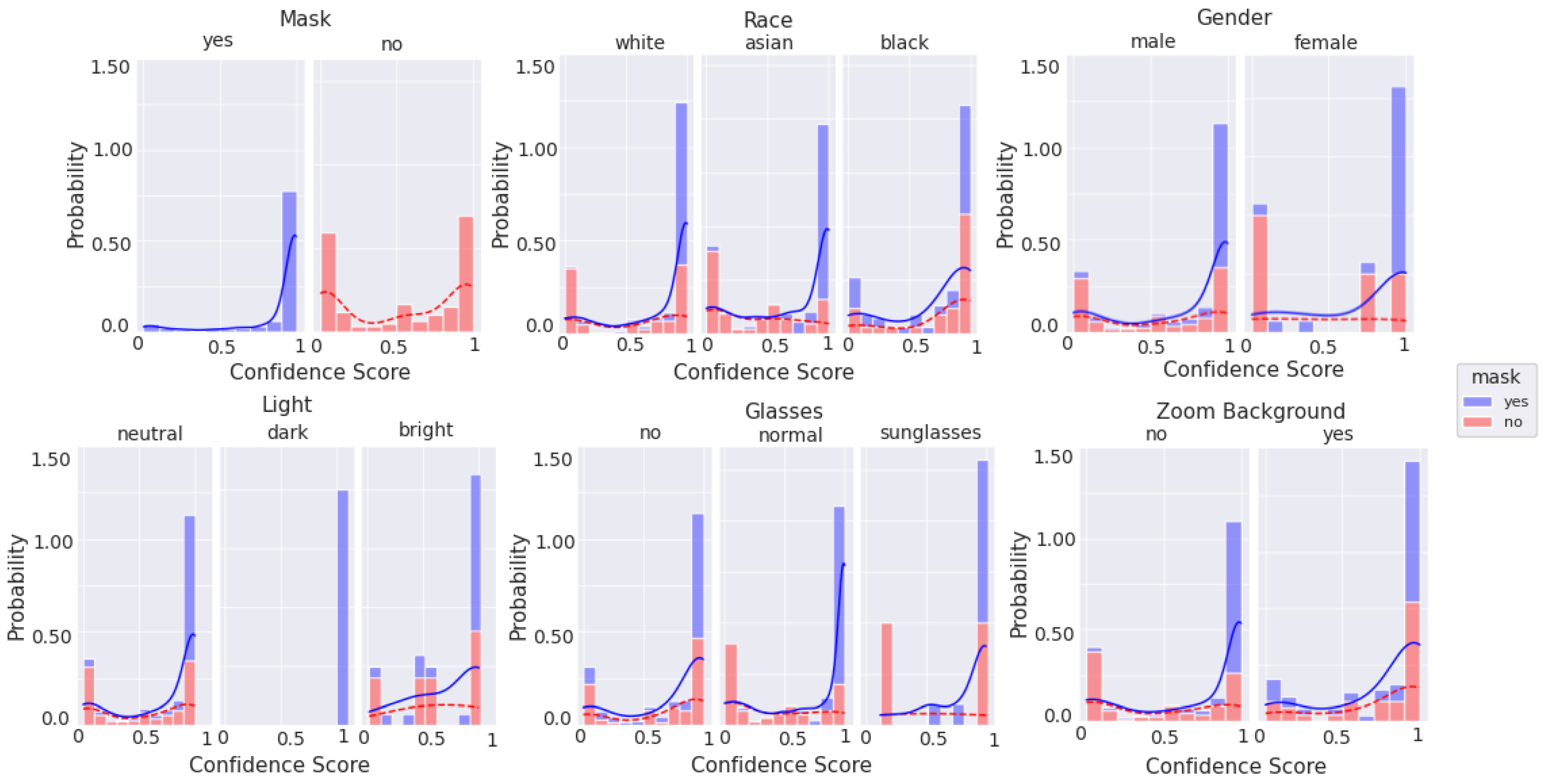

Lab-based mask recognition using machine learning techniques routinely achieves over 99% accuracy on pristine images [10]. However, to be acceptable in practice, that performance level must be retained in a real-world deployment, on non-pristine images, and at a cost and form factor that are realistic for a store to deploy. Further, knowing that many machine learning solutions are susceptible to degradation resulting from training dataset mismatch with respect to ethnicity [11], gender [12], image quality [13], lighting conditions [14], and combinations of these parameters with other characteristics [15,16,17], we chose to intentionally bombard the neural network model with different presentation attacks to quantify how quickly performance degrades.

1.3. Prior Work

There are several existing mechanisms for face detection [18,19], which seeks to identify the presence and location of a face, as opposed to face recognition [20], which seeks to assign an identity to a face and is not the focus of this paper. These mechanisms include feature-based object detection [21], local binary patterns [22,23], and deep learning-based approaches [24,25,26].

Viola-Jones face detection [21] is an object detection algorithm that identifies faces in an image using a cascade of classifiers built on Haar features. Haar features [27] are differences between the sums of pixel intensities in adjacent rectangular subwindows of a detection window; these features are then used to find regions such as the eyes and nose of a human face based on their intensity levels. The input images are represented as integral images, which reduces the time needed to compute Haar features. A modified AdaBoost algorithm is used for classifier construction, selecting the most relevant features based on a threshold set by positive and negative image examples [28,29]. The classifiers are then cascaded, which keeps the false-positive rate low and lets most non-face windows be rejected by the early, inexpensive stages.
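To make the integral-image trick and the cascade concrete, the following NumPy sketch computes an integral image, a horizontal two-rectangle Haar feature using four array lookups per rectangle, and a toy cascade with early rejection. The function names, the weak-classifier tuple layout, and the restriction to a single feature type are illustrative assumptions; this is not OpenCV's implementation, and AdaBoost training of the weights and thresholds is not shown.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with a leading row/column of zeros,
    so ii[y, x] equals the sum of img[:y, :x]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, y, x, h, w):
    """Sum of the h-by-w rectangle with top-left corner (y, x) in four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]

def two_rect_feature(ii, y, x, h, w):
    """Horizontal two-rectangle Haar feature: left half minus right half.
    Assumes w is even (hypothetical simplification)."""
    half = w // 2
    return rect_sum(ii, y, x, h, half) - rect_sum(ii, y, x + half, h, half)

def cascade_accepts(ii, stages):
    """Evaluate a toy cascade on one window.
    Each stage is (weak_classifiers, stage_threshold); each weak classifier is
    (feature_fn, threshold, polarity, alpha) and votes alpha when
    polarity * f < polarity * threshold, as in Viola-Jones."""
    for weak_classifiers, stage_threshold in stages:
        score = 0.0
        for feature_fn, thr, polarity, alpha in weak_classifiers:
            if polarity * feature_fn(ii) < polarity * thr:
                score += alpha
        if score < stage_threshold:
            return False  # early rejection: later stages are never evaluated
    return True
```

For example, a 4 × 4 window whose left two columns have intensity 10 and right two columns intensity 0 yields a two-rectangle feature value of 80, and the whole-window `rect_sum` is also 80; every rectangle sum costs the same four lookups regardless of its size.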

Convolutional neural networks (CNNs) are a type of neural network that can be applied to myriad tasks, such as image classification, audio processing, and object recognition. Face detection and recognition are natural applications for CNNs, for which many different architectures exist. For example, MobileNetV2 [30] is a model architecture that incorporates inverted residual blocks with linear bottlenecks to decrease network complexity and size. The ResNet [31] (residual neural network) architecture uses deep residual learning to ease the training of very deep networks while keeping complexity and computation low, and to improve performance. ‘Shortcuts’ between layers preserve the inputs from previous layers, which are then added to the outputs of the succeeding layers. Common configurations of ResNet have 18, 34, 50, 101, or 152 weighted layers; after the initial convolutional layer, one max-pooling layer is used, and before the final fully-connected layer, one average-pooling layer is used. MTCNN [25] (multitask cascaded convolutional neural networks) is a framework that uses cascaded CNNs to infer face and landmark locations from images. Three stages of CNNs are used to find and calibrate bounding boxes, as well as to find coordinates for the nose, the left and right mouth corners, and the left and right eyes of a human face.
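The shortcut idea in ResNet can be illustrated with a deliberately simplified NumPy sketch. It assumes 1 × 1 convolutions (a per-pixel matrix multiply) and omits batch normalization, whereas ResNet itself uses 3 × 3 convolutions with batch normalization; the function names are hypothetical. The point shown is only that the block computes y = ReLU(F(x) + x), so the input passes through unchanged when the learned residual F is zero.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def conv1x1(x, w):
    """1x1 convolution on an (H, W, C_in) tensor with weights (C_in, C_out):
    equivalent to a matrix multiply applied independently at every pixel."""
    return x @ w

def residual_block(x, w1, w2):
    """Simplified basic residual block: y = ReLU(F(x) + x) with
    F(x) = conv(ReLU(conv(x))). w2 must map back to x's channel count
    so the shortcut addition is shape-compatible."""
    f = conv1x1(relu(conv1x1(x, w1)), w2)  # residual branch F(x)
    return relu(f + x)                     # shortcut adds the block input back
```

With all-zero weights, the residual branch vanishes and a nonnegative input is returned unchanged, which is why stacking such blocks does not make the identity mapping harder to learn.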

One significant difficulty for facial detection and recognition systems is occlusion, which introduces large variations in typical facial features. Over the past few decades, a wide variety of facial detection and recognition methods have been formulated and refined to achieve high accuracy under occlusion. In [32], an adversarial face detector is trained to detect and segment occluded faces and areas on the face. Three facial datasets, including masked faces, were assembled in [33] from images scraped from the internet and images photo-edited to show faces with masks on. That work also introduces a method for recognizing masked faces, which improves accuracy over traditional facial recognition architectures by training a multi-granularity model on the aforementioned datasets in combination with existing face datasets. In [34], a masked face dataset is created and locally linear embedding (LLE) CNNs are introduced for detection of masked faces.

Despite these works, we found it difficult to locate a large masked face dataset with enough image feature diversity to resemble real-world images. Dataset augmentation can be used to increase both the feature variation a model sees during training and the overall size of a dataset.
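As a minimal illustration of augmentation increasing both dataset size and feature variation, the sketch below appends randomly perturbed copies of each image while keeping its label. The particular perturbations (horizontal flip, global brightness scaling, additive Gaussian noise), their ranges, and the function names are assumptions chosen for illustration; they are not the augmentation pipeline used in this work.

```python
import numpy as np

def augment(img, rng):
    """Return a randomly perturbed copy of an (H, W, 3) uint8 image.
    Perturbation types and ranges are illustrative assumptions."""
    out = img.astype(np.float64)
    if rng.random() < 0.5:
        out = out[:, ::-1, :]               # horizontal flip
    out *= rng.uniform(0.6, 1.4)            # global brightness change
    out += rng.normal(0.0, 8.0, out.shape)  # sensor-like additive noise
    return np.clip(out, 0, 255).astype(np.uint8)

def augment_dataset(images, labels, copies, seed=0):
    """Keep the originals and append `copies` augmented variants of each
    image, each variant inheriting its source image's label."""
    rng = np.random.default_rng(seed)
    aug_x, aug_y = list(images), list(labels)
    for img, label in zip(images, labels):
        for _ in range(copies):
            aug_x.append(augment(img, rng))
            aug_y.append(label)
    return np.stack(aug_x), np.array(aug_y)
```

Because the perturbations here are drawn independently of the original data's statistics, this simple scheme corresponds to the kind of distribution-agnostic augmentation that the study discussed next found less effective than distribution-aware sampling.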

In [35], the quantity and quality of simulated, captured (collected), and augmented training data are analyzed for their impact on a radio frequency classification task. Simulated datasets are created via random generation based on set characteristic distributions. Dataset augmentation is carried out in two ways: by sampling from existing captured data and modifying it to obtain uniform signal characteristic distributions in the resulting dataset, and by random selection from a Gaussian joint kernel density estimate (KDE) of the captured data. One key result shows that augmentation using the KDE of the captured data increases test performance compared to augmentation that does not take into account the distributions of known data parameters, but that in both cases augmentation may improve generalization and performance compared to training solely on the original dataset. One caveat is that when dataset quality decreases and difficulty increases beyond a certain degree (the exact threshold was not computed by the authors) while the sample quantity remains fixed, model generalization and performance tend to decrease, especially when using augmented data that was generated without consideration of the existing dataset distribution.
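Sampling from a Gaussian KDE, the distribution-aware augmentation described above, reduces to two steps: pick a captured data point uniformly at random, then add Gaussian noise whose covariance is the kernel bandwidth matrix. The NumPy sketch below assumes Scott's rule for the bandwidth (the default of SciPy's `gaussian_kde`); the bandwidth choice, the function name, and treating each row as a vector of signal characteristics are assumptions, not details taken from [35].

```python
import numpy as np

def kde_sample(data, n_samples, seed=0):
    """Draw synthetic parameter vectors from a Gaussian KDE fitted to `data`
    of shape (n, d). Bandwidth follows Scott's rule (an assumption here):
    kernel covariance = sample covariance * n ** (-2 / (d + 4))."""
    data = np.asarray(data, dtype=np.float64)
    n, d = data.shape
    factor = n ** (-1.0 / (d + 4))  # Scott's rule scaling factor
    cov = np.atleast_2d(np.cov(data, rowvar=False)) * factor**2
    rng = np.random.default_rng(seed)
    # Step 1: choose kernel centers, i.e., random captured samples.
    centers = data[rng.integers(0, n, size=n_samples)]
    # Step 2: perturb each center with noise drawn from the kernel.
    noise = rng.multivariate_normal(np.zeros(d), cov, size=n_samples)
    return centers + noise
```

Because every synthetic sample is anchored to a real one, the generated set inherits the joint distribution of the captured characteristics, slightly smoothed by the kernel, rather than imposing a uniform distribution on them.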

Additionally, the number of data samples required for 100% and 95% accuracy, estimated from linear trend and logistic fits, is found to be potentially lower for augmented data than for the original dataset only on less difficult data. For example, to obtain 95% accuracy on the least difficult data analyzed in the paper, the KDE-based augmented dataset required approximately 58% and the non-KDE augmented dataset 167% of the number of samples per class that the original dataset required; on the most difficult data, the KDE-based augmented dataset required 180% and the non-KDE augmented dataset 330%. Although more augmented data may therefore be needed for high performance, collecting data in the context of the referenced paper is a time-consuming procedure; generating augmented data significantly decreases the time required to obtain sufficient samples for 95% accuracy, by a margin on the order of years.

Essentially, the results relevant to the work presented in the following sections of this paper are that (1) utilizing the statistical properties of the original dataset when assembling an augmented dataset is crucial to ensuring model generalization is improved and (2) the augmented dataset will have an overall lower quality of data than the original dataset and should include a greater number of samples from each class than the original dataset to ensure high performance on more difficult test data.

Independent measures of quality and other properties may cross-check and/or improve the confidence of a neural network’s classification decision. These measures may also improve performance, since human-assigned categorical labels are prone to human error and consequently to mislabeled data, which has a negative impact on classification performance [36]. BRISQUE (Blind/Referenceless Image Spatial Quality Evaluator) [37] is a spatial image quality assessment (IQA) method that does not use a reference image. The statistical regularity of natural images (i.e., images captured by a camera rather than created on a computer) is altered when distortions are present. Based on this, BRISQUE extracts features from the distribution of an image's MSCN (Mean-Subtracted Contrast-Normalized) coefficients and from the pairwise products of neighboring coefficients in four directions. For these inputs, the best-fit mean, shape, and variance parameters of a symmetric or asymmetric generalized Gaussian distribution are used in the feature vector, which is then mapped to the final quality score via regression. Furthermore, we believe that BRISQUE is a reasonable method for real-time image quality assessment because it is highly computationally efficient. In the paper, using a 1.8 GHz computer with 2 GB of RAM, a 512 × 768 image took only 1 s to process. This is much faster than two other IQA algorithms, DIIVINE [38] and BLIINDS-II [39], which took 149 and 70 s, respectively.
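The first stage of BRISQUE feature extraction, computing MSCN coefficients and their pairwise neighbor products, can be sketched in NumPy as follows. The 7 × 7 Gaussian window with sigma = 7/6 and the stabilizing constant C = 1 are common choices in reference implementations and are treated here as assumptions; the subsequent generalized Gaussian fitting and the regression to a quality score are not shown, and the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(size=7, sigma=7 / 6):
    """Normalized 1-D Gaussian window (7 taps, sigma 7/6 assumed)."""
    x = np.arange(size) - (size - 1) / 2
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def blur(img, k):
    """Separable 2-D Gaussian filtering with edge-replicated borders;
    output has the same shape as `img`."""
    pad = len(k) // 2
    padded = np.pad(img, pad, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def mscn(img, C=1.0):
    """MSCN coefficients (I - mu) / (sigma + C), where mu and sigma are
    Gaussian-weighted local mean and standard deviation."""
    img = img.astype(np.float64)
    k = gaussian_kernel()
    mu = blur(img, k)
    var = blur(img * img, k) - mu * mu
    sigma = np.sqrt(np.abs(var))  # abs guards against tiny negative round-off
    return (img - mu) / (sigma + C)

def pairwise_products(m):
    """Products of neighboring MSCN coefficients in the four orientations:
    horizontal, vertical, main diagonal, and anti-diagonal."""
    return {
        "H": m[:, :-1] * m[:, 1:],
        "V": m[:-1, :] * m[1:, :],
        "D1": m[:-1, :-1] * m[1:, 1:],
        "D2": m[:-1, 1:] * m[1:, :-1],
    }
```

A perfectly flat image yields MSCN coefficients of (numerically) zero everywhere, since each pixel equals its local mean; distortions such as blur or noise change the shape of the coefficient histograms, which is what the fitted distribution parameters capture.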