Abstract

Accurately identifying the boundaries of agricultural land is critical to the effective management of its resources. This includes the determination of property and land rights, the prevention of non-agricultural activities on agricultural land, and the effective management of natural resources. There are various methods for accurate boundary detection, including traditional measurement methods and remote sensing, and the choice of the best method depends on specific objectives and conditions. This paper proposes the use of convolutional neural networks (CNNs) as an efficient and effective tool for the automatic recognition of agricultural land boundaries. The objective of this research paper is to develop an automated method for the recognition of agricultural land boundaries using deep neural networks and Sentinel 2 multispectral imagery. The Buinsky district of the Republic of Tatarstan, Russia, which is known to be an agricultural region, was chosen for this study because of the importance of the accurate detection of its agricultural land boundaries. Linknet, a deep neural network architecture with skip connections between encoder and decoder, was used for semantic segmentation to extract arable land boundaries, and transfer learning using a pre-trained EfficientNetB3 model was used to improve performance. The Linknet + EfficientNetB3 combination for semantic segmentation achieved an accuracy of 86.3% and an F1 score of 0.924 on the validation sample. The results showed a high degree of agreement between the predicted field boundaries and the expert-validated boundaries. According to the results, the advantages of the method include its speed, scalability, and ability to detect patterns outside the study area. It is planned to improve the method by using different neural network architectures and previously recognized land use classes.

1. Introduction

Agricultural territories, especially fertile expanses of arable land, are recognized as invaluable assets pivotal to food production, necessitating meticulous stewardship [1]. The emphasis on the precise delineation of these lands is underscored by their significant bearing on numerous operational facets. For instance, meticulous mapping is indispensable for orchestrating effective land use planning and management, fostering an environment in which lands are exclusively earmarked for agricultural undertakings, thereby averting soil degradation and environmental detriment [2].

By crafting clear agricultural boundaries, planners can orchestrate optimized land usage, safeguarding vital resources from non-agricultural encroachment. This approach is fundamental to sustaining the health and productivity of farmlands, enabling farmers and land managers to exert finer control over natural resources such as water and soil. Recognizing the exact contours of croplands, for instance, facilitates the targeted application of irrigation, averting water wastage and thereby conserving precious water resources [3].

Moreover, this precision plays a vital role in soil management, a critical element in sustaining soil fertility and optimizing crop yields [4]. The legal ramifications are equally significant, with the clear delineation of property and tenure rights being central to fostering sustainable land utilization. A clear demarcation prevents disputes and encroachment, averting potential conflicts and legal complications, thus fostering a conducive environment for the effective conservation and management of agricultural lands.

Furthermore, it is noteworthy that adept agricultural management often hinges on the precise knowledge of the land’s extent and crop types cultivated therein. This knowledge empowers policymakers to devise comprehensive strategies addressing critical concerns, such as food security, land utilization, and environmental safeguarding. For instance, well-defined land boundaries can catalyze the formulation of policies that bolster food crop production while thwarting the conversion of agricultural lands for non-agricultural purposes [5].

Monitoring agricultural efficacy demands the procurement of precise and dependable data pertaining to the extent of agricultural lands [6,7,8,9]. The exact demarcation of these lands facilitates data acquisition, which can critically influence the assessment of agricultural performance metrics, such as yield, soil quality, and environmental impacts. This data repository becomes a cornerstone in guiding resource distribution decisions and shaping agricultural policies.

Accurate field boundary acquisition can be achieved through various techniques, including traditional survey methods and contemporary remote sensing technologies. Established methods, such as total station surveying [10], GPS/GNSS surveying, and unmanned aerial photography [11,12], offer reliable, albeit sometimes time-consuming, solutions for acquiring precise field boundaries. While tachymetric surveying promises high accuracy, it demands a considerable time investment and specialized personnel. Conversely, GPS surveying offers a more economical and swifter alternative, despite being potentially prone to environmental disruptions.

Aerial photography has emerged as a proficient tool, capable of capturing extensive agricultural expanses with commendable accuracy. Additionally, remote sensing avenues such as satellite imagery and LiDAR [13] employ aerial or satellite photography to secure precise field boundaries. While satellite imagery offers extensive coverage capabilities, its efficacy is somewhat contingent on the resolution of the images captured. LiDAR, on the other hand, guarantees high precision, albeit at a higher cost and requiring skilled personnel for its operation.

The methodology chosen for detecting field boundaries is contingent upon a myriad of factors, including the scale of the field, the resources at one’s disposal, and the requisite degree of accuracy. Although survey techniques promise precision, they are often marred by their time-intensive nature. Conversely, remote sensing methodologies, though efficient and capable of encompassing vast agricultural areas, are not devoid of challenges. A critical aspect of these methods is the role of operators in delineating land boundaries, a task that necessitates specialized skills and software applications, predominantly geographic information systems. These methodologies, though exact, often entail diminished productivity, with a notable issue being the subjectivity inherent to interpreting the features demarcating agricultural land boundaries. Thus, enhancing the objectivity and bolstering the productivity of these interpretations, without sacrificing the quality of boundary recognition, emerge as paramount objectives for the advancement of remote sensing methodologies. In light of these challenges, we propose the integration of convolutional neural networks (CNNs) as a progressive solution.

In recent years, CNNs have experienced a surge in popularity in the domain of object boundary mapping. Their ascent can be attributed to their ability to discern and delineate object boundaries within images with acute accuracy, thereby positioning them as ideal candidates for initiatives such as agricultural boundary delineation [14,15,16,17].

A significant advantage of utilizing CNNs for object boundary mapping is their intrinsic ability to assimilate and adapt to the distinctive features and nuances of the objects under scrutiny. Through the training phase on an image dataset characterized by known object boundaries, the network acquires the capability to discern the patterns and attributes unique to those objects. This, in turn, facilitates the accurate mapping of boundaries for analogous objects in subsequent images [18]. Additionally, CNNs are proficient at managing images of high complexity and variability. In the context of agricultural mapping, imagery often encapsulates diverse elements such as crops, soil variants, and water bodies, which present considerable challenges in terms of accurate differentiation and mapping. Nevertheless, CNNs demonstrate the capacity to analyze these multifaceted images and identify object boundaries with remarkable precision, despite their inherent complexity and variability [19].

Furthermore, CNNs are adept at delineating object boundaries with a high degree of accuracy and efficiency, establishing them as potent instruments for mapping extensive land parcels. This attribute is particularly salient in the sphere of agricultural land mapping, wherein the rapid and accurate mapping of large swathes of land is pivotal to fostering effective land use planning and management strategies.

Embarking on this study, our primary objective was to conceptualize an automated mechanism for detecting the boundaries of agricultural lands, leveraging the capabilities of deep neural networks alongside Sentinel 2 multispectral imagery. To attain this objective, the following tasks were outlined and completed:

- Acquire and preprocess Sentinel 2 imagery for use in training and testing deep neural networks.

- Select an appropriate deep neural network architecture for the boundary detection task.

- Train and validate a deep neural network using labeled crop boundaries on Sentinel 2 imagery.

- Evaluate the performance of the trained network on validation images by comparing the predicted boundaries to the ground truth data.

- Analyze the results of the experiment and draw conclusions about the effectiveness of using deep neural networks and multispectral Sentinel 2 imagery for automatic cropland boundary detection.

2. Materials and Methods

Buinsky municipal district was chosen as the study area. Buinsky district is a district of the Republic of Tatarstan, which is a federal subject of Russia. The district is located in the western part of Tatarstan and covers an area of about 1500 square kilometers. It borders the Apastovsky district to the north, the Tetyushsky district to the east, the Drozhzhanovsky district to the south, and the Yalchiksky district of the Chuvash Republic to the west [20].

The landscape of Buinsky district is characterized by hills and forests, with many rivers and streams flowing through the area. The largest river in the district is the Sviyaga River, which flows through the entire district from south to north. Other important rivers are the Cherka and the Karla.

The climate of the Buinsky district is moderately continental, with cold winters and warm summers. The average temperature in January, the coldest month, is about −11 degrees Celsius, and the average temperature in July, the warmest month, is about 19 degrees Celsius. The area receives an average of 500–600 mm of precipitation per year, with most of it falling in the summer months.

The population of Buinsky district is about 44,000 people, with most of the population living in rural areas. The largest settlement area is the town of Buinsk, which is located in the central part of the district and serves as its administrative center.

The economy of the Buinsky district is mainly based on agriculture, with crops such as wheat, barley, and potatoes. Animal husbandry is also an important industry in the district, and cattle, pigs, and poultry are raised. In addition to agriculture, the district also has several small businesses, including manufacturing and retail trade.

The choice of the area was determined by its agricultural focus and the importance of being able to correctly and accurately determine the boundaries of its arable land.

To solve the problem of semantic segmentation, i.e., the delineation of agricultural land boundaries, we used Linknet, a deep neural network architecture widely used for image segmentation tasks [18]. It is based on a fully convolutional network (FCN) and has an encoder–decoder structure with skip connections between the encoder and decoder. The skip connections help preserve spatial information during upsampling and allow the decoder to learn from the high-resolution features of the encoder. This makes Linknet well suited for tasks such as object detection and segmentation [21].

Linknet consists of several layers of convolution and pooling operations in the encoder, followed by a corresponding number of layers of transposed convolution and upsampling operations in the decoder. Skip connections are added between the corresponding layers in the encoder and decoder. To speed up convergence during training, Linknet also uses batch normalization and rectified linear units (ReLU).
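
The encoder–decoder layout described above can be illustrated with a small shape-bookkeeping sketch. It is schematic only: the number of stages and the channel widths are hypothetical, and the element-wise addition used to merge encoder and decoder feature maps follows the original Linknet design as we understand it rather than the exact configuration used in this study.

```python
def encoder_decoder_shapes(size=256, channels=(32, 64, 128, 256)):
    """Trace (height, width, channels) through a schematic encoder-decoder.

    Each encoder stage halves the spatial size; each decoder stage doubles it
    and is merged with the encoder feature map of the same resolution.
    Channel widths are illustrative assumptions, not values from the paper.
    """
    encoder = []
    h = size
    for c in channels:
        h //= 2                                  # strided convolution or pooling
        encoder.append((h, h, c))

    decoder = []
    x = encoder[-1]
    # Walk back up, pairing each decoder output with the encoder stage above it.
    for skip in reversed(encoder[:-1]):
        up = (x[0] * 2, x[1] * 2, skip[2])       # transposed convolution
        assert up == skip, "an additive skip needs identical shapes"
        x = up                                   # element-wise sum keeps the shape
        decoder.append(x)
    return encoder, decoder


enc, dec = encoder_decoder_shapes()
print("encoder:", enc)
print("decoder:", dec)
```

The sketch makes one design constraint visible: an additive skip connection only works if the decoder restores exactly the spatial size and channel count of the matching encoder stage.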

To further improve Linknet’s performance, transfer learning using pre-trained models has been applied. A commonly used pre-trained model is DenseNet [22], which is a deep neural network architecture that uses dense connections between layers to improve information flow and gradient propagation.

To use DenseNet for transfer learning, the model is first pre-trained on a large dataset such as ImageNet to learn common features such as edges, corners, and textures. The weights of the pre-trained model are then fixed, and the Linknet decoder network is attached to the last few layers of the DenseNet model. The entire network is then trained on the target dataset to learn the specific features needed to solve the segmentation problem.

Another popular pre-trained model for transfer learning that has been used is EfficientNet [23], which is a family of deep neural network architectures that scale in size and complexity to improve performance on a variety of tasks. EfficientNetB3 is one of the most commonly used versions and has shown superior performance compared to that of other pre-trained models, such as ResNet and Inception.

To use EfficientNetB3 for transfer learning, the same approach can be used as with DenseNet. The pre-trained model is trained on a large dataset such as ImageNet, and then the Linknet decoder network is attached to the last few layers of the EfficientNetB3 model. The entire network is then trained on the target dataset to learn specific features for the segmentation task.

In the preliminary phase of our research, we embarked on a comprehensive scrutiny of credible data repositories, encompassing high-resolution satellite imagery, up-to-date land use maps, and widespread field investigations. This multifaceted analysis paved the way for the discernment and categorization of diverse land cover segments prevalent within the focal area of our study.

The successful categorization of these segments stemmed from an in-depth acquaintance with the regional agricultural landscape, a knowledge base that was significantly augmented through meticulous field surveys. These surveys stood as an invaluable resource, channeling firsthand observations and expert insights, thereby facilitating the precise demarcation of various land cover classifications.

To fortify the reliability and accuracy of these user-generated class values, we instituted a process of cross-verification. This approach involved aligning the identified class values with scholarly sources and established databases, thereby enhancing their legitimacy and relevance within the context of the study region.

Upon the affirmation of these class values, they were integrated as benchmarks in the validation phase, serving as a trusted reference point for scrutinizing the predictive prowess of the model. This approach fostered a detailed assessment of the model’s output, spotlighting its strengths and pinpointing potential avenues for refinement.

This strategy not only allowed for nuanced insight into the model’s efficacy but also charted a pathway for prospective advancements, aimed at perpetually honing its accuracy in delineating cropland boundaries. Through a methodical and objective selection and justification of user-defined class values, we have underscored their pivotal role in the validation paradigm. Consequently, this has elevated the trustworthiness and precision of the model’s predictions in mapping cropland boundaries, paving the way for a promising progression of continual improvement and refinement in the subsequent stages of this research endeavor.

The training, verification, and implementation of the model were performed using Sentinel 2 satellite imagery [24]. Sentinel 2 imagery is widely recognized as one of the best data sources for agricultural field delineation. Using Sentinel 2 imagery for this purpose has several advantages.

First, Sentinel 2 imagery provides high-resolution data with a spatial resolution of up to 10 m, which is suitable for detecting small-scale objects such as agricultural field boundaries. These high-resolution data allow field boundaries to be accurately defined and delineated, making it easier to map and manage agricultural land.

Second, Sentinel 2 imagery provides data in 13 spectral bands, including visible, near-infrared, and shortwave infrared. Different types of land cover, including vegetation, soil, and water bodies, can be identified using this spectral information. This is particularly important for agricultural mapping, where it is necessary to distinguish between crops, bare soil, and other features, such as irrigation systems and water bodies.

Third, Sentinel 2 imagery has a high temporal resolution: images are acquired every 5 days. This frequent data collection allows farmland to be monitored throughout the growing season, providing information on crop growth, health, and productivity. This information is critical for effective land use planning and management, allowing farmers and land managers to make informed decisions about crop management, irrigation, and fertilization.

Another benefit of Sentinel 2 imagery is its free availability through the European Space Agency’s Copernicus program. This makes it an affordable and accessible option for mapping agricultural field boundaries, especially for small farmers and land managers who do not have access to expensive commercial imagery.

Finally, Sentinel 2 imagery is supported by a range of image processing and analysis software and tools. These include open-source tools, such as QGIS and the Sentinel Application Platform (SNAP), which provide various functions for processing and analyzing Sentinel 2 imagery for agricultural land mapping.

The analysis used a three-channel composite Sentinel 2 image for the 2021 growing season, with the standard deviation of the Normalized Difference Vegetation Index (NDVI) as channel 1, the near-infrared spectral band (833–835.1 nm) as channel 2, and the red band (664.5–665 nm) as channel 3. This combination makes it possible to better distinguish the ground line, which theoretically should correspond to unpaved roads separating cropland, as well as cut-off forest areas and pastures, which have less variability in the vegetation curve than plowed land.

Our methodology relies on Google Earth Engine (GEE) [25], a cloud-based platform for planetary-scale geospatial analysis. We use the Python libraries earthengine-api (the Python client for GEE), eemont [26] (an extension of earthengine-api for advanced preprocessing), and geemap [27,28] (an interactive mapping tool).

For our study, we prepare data from the Sentinel 2 surface reflectance (COPERNICUS/S2_SR) [29] dataset that intersects our region of interest. Images captured between April and July 2021, within the growing season, are preprocessed, and the Normalized Difference Vegetation Index (NDVI), a crucial indicator of vegetation health, is calculated for each.

The standard deviation of the NDVI is computed across the image collection, providing insights into the temporal variability of vegetation health. Median reflectance values are also calculated for the near-infrared (B8) and red (B4) bands of the Sentinel 2 dataset. These images are multiplied by a factor of 10,000 to convert them from floating-point numbers to short integers, optimizing storage space.
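
The per-pixel arithmetic behind these three layers can be sketched in plain Python. This is a minimal illustration rather than the Earth Engine code used in the study: the function names are hypothetical, reflectance is assumed to lie in the 0 to 1 range, and the population standard deviation is assumed because the text does not specify which estimator is applied.

```python
from statistics import median, pstdev


def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def composite_pixel(nir_series, red_series, scale=10_000):
    """Return (NDVI std, median NIR, median red) as scaled integers.

    The series hold one reflectance value per acquisition date.
    """
    ndvi_series = [ndvi(n, r) for n, r in zip(nir_series, red_series)]
    values = (pstdev(ndvi_series), median(nir_series), median(red_series))
    return tuple(round(v * scale) for v in values)


# Illustrative values only: a plowed field greening up versus a stable forest.
plowed = composite_pixel([0.20, 0.35, 0.50], [0.15, 0.10, 0.05])
forest = composite_pixel([0.40, 0.42, 0.41], [0.05, 0.05, 0.05])
print(plowed, forest)
```

In this toy example the plowed pixel has a much larger NDVI standard deviation than the forest pixel, which is the contrast the first channel of the composite is meant to capture.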

The processed layers are combined into a single composite image, and this composite is then added to the interactive map, providing a visual representation of the calculated indices (source code available in Google Colab via https://clck.ru/35Zmrj, accessed on 1 January 2023).

Finally, we initiate an export task that sends the composite image to Google Drive in the form of a GeoTIFF file. The image is exported at a scale of 10 m using the EPSG:32639 coordinate reference system, ensuring spatial consistency with other geospatial datasets.

The resulting composite is converted to uint8 format with a 5% percentile cutoff to minimize the effect of noise and to maintain an 8-bit color scale in each channel, which is required for pattern recognition using convolutional neural networks.
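
A percentile-based conversion of this kind can be sketched as follows. The text gives only a "5% percentile cutoff", so the symmetric clipping at the 5th and 95th percentiles, the nearest-rank percentile lookup, and the function name are all assumptions made for illustration.

```python
def stretch_to_uint8(values, cut=5.0):
    """Clip to the [cut, 100 - cut] percentiles and rescale linearly to 0..255."""
    ordered = sorted(values)

    def percentile(p):
        # Nearest-rank style lookup; adequate for a sketch.
        idx = round(p / 100 * (len(ordered) - 1))
        return ordered[idx]

    lo, hi = percentile(cut), percentile(100 - cut)
    if hi == lo:                        # flat channel: avoid division by zero
        return [0 for _ in values]

    out = []
    for v in values:
        clipped = min(max(v, lo), hi)   # outliers collapse onto the cut values
        out.append(round(255 * (clipped - lo) / (hi - lo)))
    return out


channel = list(range(101))              # stand-in for one flattened channel
stretched = stretch_to_uint8(channel)
print(stretched[:8], stretched[-8:])
```

Clipping before rescaling keeps a few extreme pixels from compressing the remaining values into a narrow part of the 8-bit range.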

From the resulting image, an area of ~45,000 ha is selected for training and testing the neural network. For this purpose, all cultivated areas are manually digitized without gaps using WorldView-3 imagery with 0.5 m resolution, and the resulting vector layer is rasterized onto the 10 m raster grid of the satellite image. The resulting image–mask pair is sliced by a sliding window into 256 × 256 pixel squares called patches, with each patch overlapping its neighbor by 50%.

The slicing process begins with parameter initialization, which defines key inputs such as the input files, label files, window size, stride, label type, and output format. This phase allows for flexibility and customization to meet different geospatial needs. Once the parameters are set, data verification ensures the consistency and integrity of the input files: it checks that the input files are single-band rasters with matching attributes such as rows, columns, projections, and transformations, so that subsequent analysis can rely on consistent geospatial information.

After data verification, features and labels are generated. Input files are processed to construct feature and label arrays, in which labels are classified based on user-defined class values. This step extracts the relevant information from the geospatial data and prepares it for subsequent machine learning tasks. In addition, a coordinate grid of top-left patch coordinates is created to ensure proper alignment and georeferencing of the generated image patches. These image patches, or “chips”, are then generated based on the specified stride and window size, allowing for efficient data organization and analysis.

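
The patch grid can be sketched as below. This is a minimal sketch: the function names are hypothetical, rasters are represented as nested lists for simplicity, and windows that would extend past the raster edge are dropped, which is an assumption the text does not confirm.

```python
def patch_origins(height, width, window=256, stride=128):
    """Top-left (row, col) of every full window that fits inside the raster."""
    rows = range(0, height - window + 1, stride)
    cols = range(0, width - window + 1, stride)
    return [(r, c) for r in rows for c in cols]


def cut_patches(image, mask, window=256, stride=128):
    """Yield aligned (image_patch, mask_patch) pairs from 2-D nested lists."""
    for r, c in patch_origins(len(image), len(image[0]), window, stride):
        img = [row[c:c + window] for row in image[r:r + window]]
        msk = [row[c:c + window] for row in mask[r:r + window]]
        yield img, msk


# A stride of half the window gives the 50% overlap between neighbors.
origins = patch_origins(512, 512)
print(len(origins), origins[:4])

# Tiny demonstration raster so the slicing itself is easy to inspect.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]
pairs = list(cut_patches(grid, grid, window=2, stride=1))
print(pairs[0])
```

Using the same origins for the image and the mask is what keeps every patch pair pixel-aligned.
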
This results in a dataset in which 80% of the data are used to train the neural network and 20% are used for validation and testing. Training is performed in a Python 3.8 environment using the TensorFlow machine learning framework. To artificially enlarge the training sample, augmentations such as compression and stretching, mirroring, and cropping with rotation are applied to the images, which increases the training sample to more than 30,000 image–mask pairs. To minimize the risk of overfitting and underfitting, training-monitoring functions are used that reduce the learning rate when training reaches a “learning plateau” and stop training if the loss function does not decrease over 5 epochs. The loss function is based on the Jaccard coefficient, a measure of the agreement between the predicted image and the true image.
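
A Jaccard-based loss is commonly written as one minus the (soft) Jaccard index. The sketch below follows that convention; the exact formulation and smoothing constant used in the study are not stated in the text, so both are assumptions, as are the function names.

```python
def jaccard_index(pred, truth, eps=1e-7):
    """Soft Jaccard index for flat sequences of values in [0, 1].

    `eps` is a small smoothing term (an assumed value) that avoids division
    by zero when both masks are empty.
    """
    intersection = sum(p * t for p, t in zip(pred, truth))
    union = sum(pred) + sum(truth) - intersection
    return (intersection + eps) / (union + eps)


def jaccard_loss(pred, truth):
    """Loss that falls to 0 as the prediction approaches the reference mask."""
    return 1.0 - jaccard_index(pred, truth)


truth = [1, 1, 0, 0]
print(jaccard_loss([1, 1, 0, 0], truth))   # perfect overlap
print(jaccard_loss([1, 0, 0, 0], truth))   # half of the reference recovered
print(jaccard_loss([0, 0, 1, 1], truth))   # no overlap
```

Because the index is computed from sums of products, the same expression works on predicted probabilities as well as on hard 0/1 masks.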

The trained neural network model is applied to the satellite image of the entire Buinsky district.

3. Results

In the course of training and verifying the neural network models, the Linknet + EfficientNetB3 combination was selected because of its significantly better accuracy and training speed, so results are reported for this combination only.

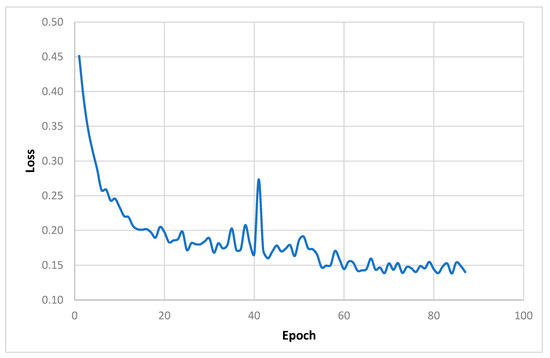

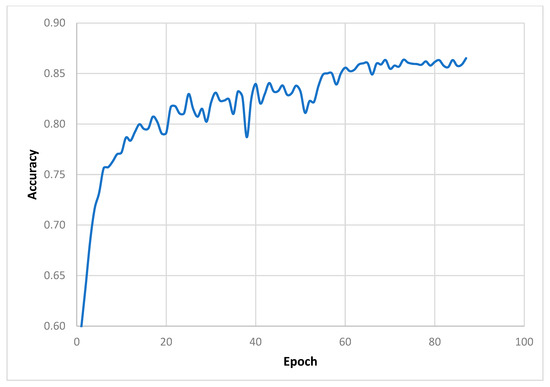

The neural network was trained iteratively for 87 epochs, after which the loss function stopped decreasing and leveled off at 0.07 (Figure 1). The accuracy of boundary extraction on the test sample, which was not involved in training, was 86.3% (Figure 2), with an F1 score of 0.924.

Figure 1. Loss function changes in the neural network.

Figure 2. Accuracy variation in the neural network on the test dataset.
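
For reference, the two metrics reported above can be computed from binary masks as in the following sketch. It assumes per-pixel evaluation with cropland as the positive class; the text does not state how the reported values were aggregated, and the function names are hypothetical.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for flat binary masks (1 = cropland)."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def pixel_accuracy(pred, truth):
    """Share of pixels whose predicted class matches the reference."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(pred, truth):
    """Harmonic mean of precision and recall for the cropland class."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
print(pixel_accuracy(pred, truth), f1_score(pred, truth))
```

Accuracy counts true negatives while F1 ignores them, so the two values need not move together; F1 can exceed accuracy when the positive class covers most of the scene.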

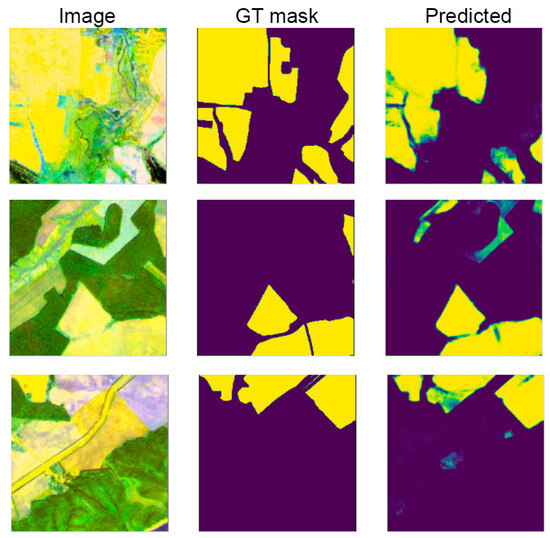

Visually evaluating the recognition results, we note a high degree of correspondence between the predicted field boundaries and the reference boundaries obtained by the expert method (Figure 3). The existing noise can be filtered out by using matrix filters as well as by selecting an appropriate pixel intensity threshold when reclassifying the output into cropland and background classes.

Figure 3. Results of testing the trained neural network on fragments of satellite composites (image). GT mask: boundaries of the fields selected by the expert method; predicted: predicted boundaries.
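
The noise-filtering idea mentioned above (an intensity threshold followed by a matrix filter) can be sketched as follows. The 0.5 cutoff and the 3 × 3 majority filter are illustrative choices, not the settings used in the study, and the function names are hypothetical.

```python
def threshold(prob, cutoff=0.5):
    """Reclassify a probability raster into cropland (1) and background (0)."""
    return [[1 if p >= cutoff else 0 for p in row] for row in prob]


def majority_filter(mask):
    """3 x 3 majority vote; at the borders only existing neighbors are counted."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [mask[i][j]
                      for i in range(max(0, r - 1), min(h, r + 2))
                      for j in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = 1 if 2 * sum(window) > len(window) else 0
    return out


# An isolated false-positive pixel in the background is removed ...
speck = [[0.1] * 5 for _ in range(5)]
speck[2][2] = 0.9
print(majority_filter(threshold(speck)))

# ... and a one-pixel hole inside a field is filled.
hole = [[0.9] * 5 for _ in range(5)]
hole[2][2] = 0.1
print(majority_filter(threshold(hole)))
```

A filter of this size also smooths genuine one-pixel features, so at 10 m resolution it may erase narrow separators such as unpaved roads; the window size would need to be chosen with that trade-off in mind.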

It is worth noting that it was not always possible to separate adjacent arable fields from one another. This is because the native resolution of Sentinel 2 images (10 m) is not always sufficient when arable lands are separated by unpaved roads or narrow forest belts. In general, however, the ability of the model to recognize arable land boundaries can be rated as rather high.

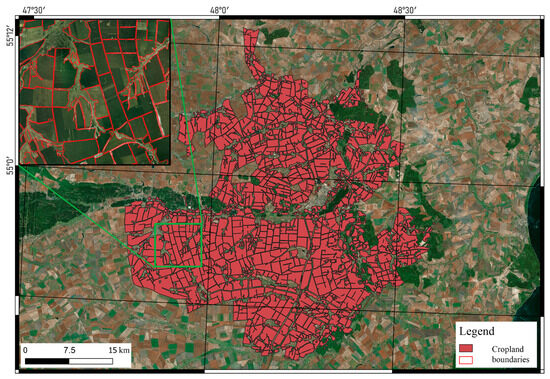

The trained and verified model was applied to the whole Buinsky district. The results of the recognition of arable land boundaries in the study area are shown in Figure 4.

Figure 4. Results of automated mapping of cropland boundaries in Buinsky district.

4. Discussion

Our study’s findings underscore the efficacy of the synergistic approach involving Linknet and EfficientNetB3 in training neural networks for agricultural land boundary delineation across the entire Buinsky district. This methodology not only excels in mapping cropland boundaries with notable accuracy (86.3%), but also markedly curtails the requisite time and computational resources, establishing itself as a formidable tool for large-scale, high-resolution image analyses. The substantial concordance between the predicted field boundaries and those derived through expert methodologies reaffirms the potential of this tactic, which corroborates the observations documented in previous research commending the proficiency of machine learning algorithms in geospatial analyses [30,31].

However, our exploration also brings to light several challenges. A primary issue is the diminished accuracy in pinpointing the exact demarcation of boundaries when compared to high-resolution satellite imagery, largely attributed to the constrained resolution of Sentinel 2 imagery. This drawback, echoed in other scholarly works [32], signals a wider challenge encountered in the domain of remote sensing data analyses.

Furthermore, the current strategy occasionally misclassifies areas not utilized in agricultural rotation, such as pastures, identifying them as arable lands with perennial grasses. A viable solution seems to be the adoption of multitemporal data series to effectively mask fields that do not conform to regular cropland characteristics.

Additionally, the analysis unearthed a discrepancy spanning 10,000 hectares between the interpreted arable land area in the Buinsky district (89,000 hectares) and the data recorded in the database of indicators of municipal entities of the Russian Federation (99,000 hectares). This disparity arises from the federal database accounting for perennial grasses, a facet not entirely encompassed in our interpretation. This calls for an improvement in methodologies to precisely account for perennial grasses and other non-crop vegetation, hinting at the prospective advantages of incorporating multitemporal data series to enhance crop detection accuracy [33,34].

4.1. Methodological Considerations in Cropland Boundary Detection

The automated mapping of cropland boundaries is a cornerstone in the conservation and management of agricultural resources. At this juncture, a critical discussion contrasting semantic segmentation with traditional image classification and object-based image analysis (OBIA) becomes indispensable in forging more refined and accurate methodologies. This segment delves into these methodologies, explicating their underlying principles, applications, and potential advantages in cropland boundary detection.

4.1.1. Traditional Image Classification

Historically, image analysis has predominantly leaned on image classification techniques, which categorize images into distinct classes based on dominant features or objects encapsulated within the frame. While competent in general scene categorization, this method operates on a somewhat coarse scale, focusing more on the overarching aspects of the image rather than intricate details, potentially bypassing complex patterns and boundaries critical in delineating cropland boundaries with precision.

4.1.2. Object-Based Image Analysis (OBIA)

OBIA has emerged as a progressive approach, concentrating on segmenting an image into significant objects, subsequently categorizing them into different classes grounded on their attributes and spatial relationships. This method integrates not only spectral characteristics but also spatial and contextual information, paving the way for a more nuanced and detailed image analysis. In the sphere of cropland boundary detection, OBIA manifests the potential to identify and delineate intricate patterns and structures, offering a superior level of accuracy compared to its traditional counterparts.

4.1.3. Semantic Segmentation

Semantic segmentation, deeply rooted in deep learning paradigms, offers a finer granularity in image analysis, segmenting the image into sections each associated with a specific class or category, thereby facilitating a detailed pixel-level analysis. This technique recognizes the contextual relationships among various regions in the image, fostering a holistic understanding and classification of the segments within the image. In the context of cropland boundary detection, semantic segmentation demonstrates proficiency in distinguishing subtle variances between adjacent regions, potentially enabling a more accurate delineation of boundaries between similar crop types, an aspect often overlooked by traditional image classification.

4.1.4. Comparative Insights and Future Directions

Navigating the complex terrain of cropland boundary detection reveals the potential synergy of methodologies as a promising route. While traditional image classification lays the foundational groundwork, the amalgamation of OBIA and semantic segmentation promises a deeper, more nuanced analysis. These advanced techniques, focusing on object recognition and pixel-level analysis, respectively, complement each other in the detection of the complex patterns and structures characteristic of cropland boundaries.

As we envision the future, we anticipate further explorations to harness the synergistic potential of these methodologies, fostering advancements in the precision and depth of cropland boundary detection. This investigation accentuates the importance of this exploration, advocating for a concerted effort to validate and leverage these methodologies, contributing significantly to the progressive evolution of this domain.

In orchestrating our study, identifying the optimal channels to integrate into our convolutional neural network model was a critical phase. The selection of the NDVI, red, and infrared channels is substantiated by empirical evidence highlighting their effectiveness in vegetation analyses. The NDVI, a reliable indicator of plant health and vitality, is derived from the red and near-infrared (NIR) channels and has been established as a vital tool in monitoring crop growth stages and assessing vegetation health. Preliminary assessments have revealed that the NDVI channel considerably facilitated the alignment between predicted and actual vegetation boundaries, a contribution reflected quantitatively in the high accuracy rates during the model validation phase. Concurrently, the red channel, recognized for its sensitivity to chlorophyll absorption, emerged as a crucial instrument in distinguishing vegetation from non-vegetation zones, offering a clear demarcation of cropland boundaries and aiding in the accurate extraction of crop patterns and features. In tandem, the infrared channel, which distinguishes vegetation features based on reflective properties, significantly bolstered the model’s ability to discern complex patterns within the data, a contribution substantiated through quantitative analyses of model performance metrics. It should be mentioned that the present convolutional neural network models are limited to recognizing a maximum of three channels at a time, which restricts the incorporation of a more diversified dataset that could potentially enrich the analysis. Notwithstanding this limitation, the selected channels have proven to be potent in demarcating cropland boundaries, as validated by the high accuracy rates noted in our study.

In forthcoming research, we intend to explore ways of extending convolutional neural network models so that a more varied dataset can be included for a more comprehensive analysis.

Looking forward, it would be worthwhile to investigate methods for enhancing the resolution of the images used to train the neural network models. Higher resolution could improve the accuracy of boundary detection and allow more precise differentiation between fields separated by unpaved roads or narrow forest strips. Additionally, a more diverse training dataset that includes perennial grasses and other non-crop vegetation could reduce errors in crop detection.

In conclusion, while our study demonstrates the potential of machine learning algorithms in crop detection, it also highlights the need for continued research into, and refinement of, these methods. Future studies should aim to address the challenges identified here and to develop more accurate and robust crop recognition algorithms.

5. Conclusions

In this study, a method for the automated recognition of cropland boundaries using deep neural networks was developed and tested. Overall, statistical and visual verification shows that the results are satisfactory and comparable to manual interpretation. The clear advantages of the methodology are its speed (recognizing the land boundaries of the Buinsky district of the Republic of Tatarstan took less than a minute) and its ability to scale beyond the municipality and region, since it relies on image recognition rather than on the spectral characteristics of a particular study area. In the future, it is planned to refine the methodology, to test other neural network architectures, and to use the preliminary recognition of land use classes to resolve the problem of interpreting the boundaries of meadows and grasslands with perennial grasses.

Funding

The study was funded by the Russian Science Foundation Project No. 23-27-00292, https://rscf.ru/en/project/23-27-00292/, accessed on 1 January 2023 (methodology and experiment) and was carried out in accordance with the Strategic Academic Leadership Program “Priority 2030” of the Kazan Federal University (translating and proofreading).

Data Availability Statement

The authors will provide research materials upon request to the corresponding author.

Acknowledgments

The authors would like to express their gratitude to the reviewers and their important comments and suggestions, which greatly improved the manuscript.

Conflicts of Interest

The author declares no conflict of interest.

References

- Fritz, S.; See, L.; McCallum, I.; You, L.; Bun, A.; Moltchanova, E.; Duerauer, M.; Albrecht, F.; Schill, C.; Perger, C.; et al. Mapping Global Cropland and Field Size. Glob. Chang. Biol. 2015, 21, 1980–1992.

- Mukharamova, S.; Saveliev, A.; Ivanov, M.; Gafurov, A.; Yermolaev, O. Estimating the Soil Erosion Cover-Management Factor at the European Part of Russia. ISPRS Int. J. Geo Inf. 2021, 10, 645.

- Dai, Z.Y.; Li, Y.P. A Multistage Irrigation Water Allocation Model for Agricultural Land-Use Planning under Uncertainty. Agric. Water Manag. 2013, 129, 69–79.

- Dumanski, J.; Onofrei, C. Techniques of Crop Yield Assessment for Agricultural Land Evaluation. Soil Use Manag. 1989, 5, 9–15.

- Appendini, K.; Liverman, D. Agricultural Policy, Climate Change and Food Security in Mexico. Food Policy 1994, 19, 149–164.

- De Castro, V.D.; da Paz, A.R.; Coutinho, A.C.; Kastens, J.; Brown, J.C. Cropland Area Estimates Using Modis NDVI Time Series in the State of Mato Grosso, Brazil. Pesq. Agropec. Bras. 2012, 47, 1270–1278.

- Eitelberg, D.A.; van Vliet, J.; Verburg, P.H. A Review of Global Potentially Available Cropland Estimates and Their Consequences for Model-based Assessments. Glob. Chang. Biol. 2015, 21, 1236–1248.

- Lingwal, S.; Bhatia, K.K.; Singh, M. Semantic Segmentation of Landcover for Cropland Mapping and Area Estimation Using Machine Learning Techniques. Data Intell. 2023, 5, 370–387.

- Potapov, P.; Turubanova, S.; Hansen, M.C.; Tyukavina, A.; Zalles, V.; Khan, A.; Song, X.-P.; Pickens, A.; Shen, Q.; Cortez, J. Global Maps of Cropland Extent and Change Show Accelerated Cropland Expansion in the Twenty-First Century. Nat. Food 2021, 3, 19–28.

- Usmanov, B.; Nicu, I.C.; Gainullin, I.; Khomyakov, P. Monitoring and Assessing the Destruction of Archaeological Sites from Kuibyshev Reservoir Coastline, Tatarstan Republic, Russian Federation. A Case Study. J. Coast. Conserv. 2018, 22, 417–429.

- Gafurov, A.M. Possible Use of Unmanned Aerial Vehicle for Soil Erosion Assessment. Uchenye Zap. Kazan. Univ. Ser. Estestv. Nauk. 2017, 159, 654–667.

- Gafurov, A. The Methodological Aspects of Constructing a High-Resolution DEM of Large Territories Using Low-Cost UAVs on the Example of the Sarycum Aeolian Complex, Dagestan, Russia. Drones 2021, 5, 7.

- Revenga, J.C.; Trepekli, K.; Oehmcke, S.; Jensen, R.; Li, L.; Igel, C.; Gieseke, F.C.; Friborg, T. Above-Ground Biomass Prediction for Croplands at a Sub-Meter Resolution Using UAV–LiDAR and Machine Learning Methods. Remote Sens. 2022, 14, 3912.

- Scott, G.J.; England, M.R.; Starms, W.A.; Marcum, R.A.; Davis, C.H. Training Deep Convolutional Neural Networks for Land–Cover Classification of High-Resolution Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 549–553.

- Jiang, H.; Zhang, C.; Qiao, Y.; Zhang, Z.; Zhang, W.; Song, C. CNN Feature Based Graph Convolutional Network for Weed and Crop Recognition in Smart Farming. Comput. Electron. Agric. 2020, 174, 105450.

- Alam, M.; Wang, J.-F.; Guangpei, C.; Yunrong, L.; Chen, Y. Convolutional Neural Network for the Semantic Segmentation of Remote Sensing Images. Mobile Netw. Appl. 2021, 26, 200–215.

- Gafurov, A. Mapping of Rill Erosion of the Middle Volga (Russia) Region Using Deep Neural Network. ISPRS Int. J. Geo Inf. 2022, 11, 197.

- Gafurov, A.M.; Yermolayev, O.P. Automatic Gully Detection: Neural Networks and Computer Vision. Remote Sens. 2020, 12, 1743.

- Bhosle, K.; Musande, V. Evaluation of Deep Learning CNN Model for Land Use Land Cover Classification and Crop Identification Using Hyperspectral Remote Sensing Images. J. Indian Soc. Remote Sens. 2019, 47, 1949–1958.

- Yermolaev, O.P.; Mukharamova, S.S.; Maltsev, K.A.; Ivanov, M.A.; Ermolaeva, P.O.; Gayazov, A.I.; Mozzherin, V.V.; Kharchenko, S.V.; Marinina, O.A.; Lisetskii, F.N. Geographic Information System and Geoportal «River Basins of the European Russia». IOP Conf. Ser. Earth Environ. Sci. 2018, 107, 012108.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Iandola, F.; Moskewicz, M.; Karayev, S.; Girshick, R.; Darrell, T.; Keutzer, K. DenseNet: Implementing Efficient ConvNet Descriptor Pyramids. arXiv 2014, arXiv:1404.1869.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-Scale Geospatial Analysis for Everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Montero, D. Eemont: A Python Package That Extends Google Earth Engine. J. Open Source Softw. 2021, 6, 3168.

- Wu, Q.; Lane, C.R.; Li, X.; Zhao, K.; Zhou, Y.; Clinton, N.; DeVries, B.; Golden, H.E.; Lang, M.W. Integrating LiDAR Data and Multi-Temporal Aerial Imagery to Map Wetland Inundation Dynamics Using Google Earth Engine. Remote Sens. Environ. 2019, 228, 1–13.

- Wu, Q. Geemap: A Python Package for Interactive Mapping with Google Earth Engine. J. Open Source Softw. 2020, 5, 2305.

- Sentinel-2—Missions—Sentinel Online. Available online: https://copernicus.eu/missions/sentinel-2 (accessed on 24 June 2023).

- Stewart, A.J.; Robinson, C.; Corley, I.A.; Ortiz, A.; Ferres, J.M.L.; Banerjee, A. TorchGeo: Deep Learning with Geospatial Data. arXiv 2022, arXiv:2111.08872.

- Mazzia, V.; Khaliq, A.; Chiaberge, M. Improvement in Land Cover and Crop Classification Based on Temporal Features Learning from Sentinel-2 Data Using Recurrent-Convolutional Neural Network (R-CNN). Appl. Sci. 2019, 10, 238.

- Di Tommaso, S.; Wang, S.; Lobell, D.B. Combining GEDI and Sentinel-2 for Wall-to-Wall Mapping of Tall and Short Crops. Environ. Res. Lett. 2021, 16, 125002.

- Sani, D.; Mahato, S.; Sirohi, P.; Anand, S.; Arora, G.; Devshali, C.C.; Jayaraman, T.; Agarwal, H.K. High-Resolution Satellite Imagery for Modeling the Impact of Aridification on Crop Production. arXiv 2022, arXiv:2209.12238.

- Obadic, I.; Roscher, R.; Oliveira, D.A.B.; Zhu, X.X. Exploring Self-Attention for Crop-Type Classification Explainability. arXiv 2022, arXiv:2210.13167.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).