1. Introduction

Traffic anomaly detection is an important research topic in Artificial Intelligence (AI) and computer vision, as it directly affects road safety and urban mobility. Traffic anomalies such as accidents, vehicle breakdowns, or unusual pedestrian movements are unexpected events that can cause injuries or fatalities and create serious problems for emergency response and traffic management. According to the World Health Organization (WHO), road accidents cause around 1.19 million deaths each year and between 20 and 50 million injuries, many of which lead to long-term disabilities [1]. These incidents not only have a high human cost but also place a heavy burden on healthcare systems and lead to major economic losses. This highlights the need for reliable and fast detection methods capable of identifying and anticipating such events.

Rapid emergency response is essential to minimize the impact of traffic anomalies. The golden hour principle in emergency medicine [2] stresses the importance of acting within the first 60 min of severe injuries to improve survival rates. However, most existing traffic monitoring systems rely on human operators monitoring multiple video feeds, which leads to delays due to fatigue, distractions, and cognitive overload. To overcome these limitations, AI-based anomaly detection systems have emerged as a crucial solution, significantly improving the speed and accuracy of anomaly identification.

In our previous work [3], we presented an intelligent system for detecting traffic anomalies using advanced deep learning techniques that predicted future frames using features extracted from the current ones. Although the method achieved promising results, it showed limitations in dynamic environments where vehicles and pedestrians appear and disappear at different speeds and directions, leading to noisy predictions and lower reliability.

To address this limitation, this paper presents an improved framework for real-time traffic anomaly detection that enhances both accuracy and efficiency. The proposed model introduces several key improvements:

Enhanced temporal context: Instead of analyzing a single frame, the model processes sequences of n consecutive frames, providing contextual knowledge to improve anomaly detection accuracy.

Temporal correlation: Taking advantage of the n-frame context window, a correlation matrix is added to the system to enrich the temporal features extracted from the sequence.

Focused detection mechanism: The integration of an Interaction Field enables the model to prioritize dynamic elements, reducing the impact of static background noise and improving reconstruction reliability.

These improvements collectively contribute to a more practical and scalable AI-driven anomaly detection system, ensuring real-time performance while maintaining architectural simplicity. By minimizing computational overhead, the proposed approach offers a more efficient alternative to existing solutions, making it feasible for deployment on resource-constrained hardware and large-scale traffic monitoring infrastructures.

The remainder of this paper is organized as follows:

Section 2 reviews relevant literature on anomaly detection in traffic scenarios, highlighting key advancements and challenges.

Section 3 presents the proposed methodology, detailing the architectural modifications introduced to enhance anomaly detection performance.

Section 4 describes the experimental setup and discusses the obtained results.

Finally, Section 5 summarizes the conclusions and outlines potential directions for future improvements.

2. Related Work

Recent advances in anomaly detection for traffic scenarios have focused on using computer vision and artificial intelligence (AI) techniques to enhance accuracy and real-time responsiveness. Researchers have developed methods that tackle challenges such as distinguishing anomalies from normal behaviors, reducing false detections, and ensuring timely identification of critical events. Existing methodologies can be broadly categorized into feature-based approaches, generative models, and deep learning-based architectures, each offering different trade-offs between accuracy, computational cost, and scalability.

A deep pyramidal network has been proposed for anomaly detection, leveraging multi-scale pyramidal structures and perceptual loss to enhance feature learning [4]. This approach encodes normal images into a low-dimensional latent space and reconstructs them, demonstrating strong performance on the MVTec dataset. However, its effectiveness in complex traffic environments remains limited due to challenges in handling intricate textures and dynamic scene variations. Another approach, SimpleNet, addressed anomaly detection by integrating a feature adaptor to minimize domain bias and an anomaly feature generator to synthesize anomalies within the feature space [5]. Evaluations on the MVTec AD benchmark revealed its ability to bridge the gap between academic research and industrial applications. Generative Adversarial Networks (GANs) have also been explored for anomaly detection, as seen in ADGAN [6]. This method searches image representations within the latent space of a GAN generator, iteratively refining both latent vectors and generator parameters. ADGAN has achieved competitive performance across various benchmarks, showcasing the potential of generative methods in anomaly detection tasks.

While significant progress has been made in image-based anomaly detection, addressing anomalies in video sequences presents additional complexities due to temporal dynamics. To improve anomaly detection and localization in surveillance videos, a method integrating topic models with a spatiotemporal classifier was introduced [7]. This “projection model” enhances detection accuracy and provides precise spatiotemporal localization of anomalies. In addition, a real-world surveillance video dataset was proposed to encourage further advancements in anomaly detection research. Spatiotemporal adversarial networks have also been utilized to detect anomalies in video sequences [8]. These models learn patterns corresponding to normal behaviors, enabling them to identify deviations indicative of anomalies. Experiments demonstrate competitive performance and interpretable visualizations of detected anomalies. A different approach to video anomaly detection was introduced through future frame prediction techniques [9]. By enforcing spatial (appearance) and temporal (motion) constraints, this method ensures consistency between predicted and ground-truth frames. Notably, it was the first to explicitly incorporate a temporal constraint into video prediction tasks. Deep convolutional neural networks (CNNs) have also been applied to anomaly detection, focusing on the correlation between object appearances and their associated motions [10]. This model is optimized for robustness against diverse anomalies, improving reliability in real-world applications.

Unsupervised learning approaches have demonstrated strong potential in improving anomaly detection. One such approach leverages textual descriptions alongside a pre-trained vision-language model (CLIP) [11]. This model achieves results comparable to weakly supervised techniques without requiring labeled data. Expanding on this trend of using large pre-trained models, AADC-Net was introduced as a multimodal network to specifically address data limitations and imbalances [12]. This approach leverages both pre-trained Large Language Models and Vision-Language Models to enhance understanding with minimal supervision. Furthermore, it integrates a DEtection TRansformer model for visual feature extraction, which notably eliminates the need for bounding box supervision. Also leveraging the cross-modal capabilities of CLIP, other research has addressed the limitations of visual-only approaches by developing a weakly supervised framework that incorporates audio-visual collaboration [13]. This method introduces an efficient audio-visual fusion mechanism and a novel audio-visual prompt to adapt the frozen CLIP backbone without full retraining.

Another significant development is S3R, a self-supervised learning framework that models feature-level anomalies via sparse representations [14]. By integrating Normal and De-Normal modules, S3R consistently outperforms existing methods in both one-class and weakly supervised settings. Addressing the dual challenges of high computational cost and the tendency of deep networks to overlook anomalies due to their strong generalization ability, another self-supervised approach was recently introduced [15]. This method proposes Anomaly Distance Learning, which uses self-supervised signals to actively maximize the feature gap between normal and abnormal samples, and a Context-Aware Skip Connection to selectively preserve normal information. To further enhance temporal modeling in video anomaly detection, Spatiotemporal Long Short-Term Memory (ST-LSTM) combined with adversarial training was proposed [16]. This approach effectively captures unified memory representations of spatial appearances and temporal variations, improving anomaly detection performance. Following this line of memory optimization, researchers have proposed a Multi-Memory-Augmented dual-flow network [17]. This approach not only utilizes memory units to explicitly manage the diversity of normal patterns but also addresses the distance metric by introducing a novel “curvy metric”.

Addressing video anomaly detection from another perspective, several works have leveraged weakly supervised and few-shot learning approaches. Lu et al. proposed a few-shot learning approach designed to detect anomalies in previously unseen scenes with only limited frames for adaptation [18]. The proposed method automatically learns robust spatiotemporal features, making it effective in adapting quickly to new scenarios. To reduce reliance on extensive manual annotations, a weakly supervised framework based on Multiple Instance Learning (MIL) has been developed [19]. This method applies a ranking mechanism to video clips extracted via a Two-Stream Inflated 3D (I3D) network, enhancing detection accuracy. Traditional MIL-based ranking loss methods, while useful for anomaly classification, often fail to fully leverage the abundance of normal data, which makes them susceptible to misclassification errors. To overcome this limitation, a diffusion-based normality learning pretraining step has been introduced [20]. This approach trains a Global–Local Feature Encoder (GLFE) exclusively on normal videos, capturing both short- and long-range temporal dependencies through a Transformer block and a pyramid of dilated convolutions. The method integrates a Co-Attention module for dynamic feature fusion and employs Multi-Sequence Contrastive loss to enhance anomaly discrimination. Further leveraging diffusion models, the approach in [21] tackles the challenge of small abnormal objects, which are often missed by feature-level methods, by proposing a novel patch-based diffusion model engineered to capture fine-grained local information. Observing that anomalies manifest as deviations in both appearance and motion, this method also introduces innovative motion and appearance conditions that are integrated into the diffusion process.

A multimodal and multiscale weakly supervised method has also been introduced to improve anomaly detection in challenging video conditions such as blurring and occlusions [22]. This framework extracts RGB and optical flow features using a pre-trained I3D network, filters redundancies with an Attention De-redundancy (AD) module, and models long- and short-term dependencies using a Multi-scale Feature Learning (MFL) module. Finally, an Adaptive Feature Fusion (AFF) module dynamically integrates the most relevant appearance and motion features.

Extending these concepts specifically to traffic scenarios, researchers have proposed tailored approaches to detect and respond promptly to traffic anomalies. Motion Interaction Field (MIF) has been employed to model vehicle interactions using Gaussian kernels, significantly improving anomaly detection and localization performance [23]. This method, inspired by water wave dynamics, successfully captures key aspects of traffic interactions and outperforms traditional approaches. A multimodal large language model (MLLM)-based framework has been introduced to automate the detection of safety-critical events in driving videos [24]. By leveraging multi-stage question-answering (QA) techniques, logical and visual reasoning capabilities are enhanced, leading to improved accuracy and detection efficiency. To improve response times in traffic anomaly detection, transfer learning combined with synthetic image generation has been employed [25]. EfficientNetB1 and MobileNetV2 models have demonstrated notable performance, showcasing their potential in enhancing road safety. Another vision-based system optimized for anomaly detection in smart cities, I3D-CONVLSTM2D, integrates RGB and optical flow data using transfer learning [26]. Designed for edge IoT devices, this system effectively addresses real-world challenges and dataset limitations. Deep representation modeling using denoising autoencoders has been explored to enhance real-time anomaly detection reliability [27]. By learning normal vehicle behaviors, this approach improves the identification of unusual incidents while reducing false positives.

Several works have incorporated temporal information into neural networks to improve detection stability. For example, R-UNet [28] integrates recurrent blocks and attention mechanisms for temporal dependency modeling, and DDoS-UNet [29] adds temporal context for MRI super-resolution. These architectures demonstrate the advantages of temporal modeling, even outside the traffic domain.

Despite the significant progress, existing models still face challenges such as high false positive rates due to occlusions and poor adaptability to different environments. CNN-based methods often fail to fully capture temporal dynamics in traffic flow. To address these issues, this work proposes TGU-Net, a framework that introduces temporal correlation modeling and object-masked reconstruction to achieve more accurate and reliable anomaly detection in real-time traffic scenarios. The following section describes the proposed methodology in detail.

3. Methodology

The model extends our previous architecture [3], depicted in Figure 1, by incorporating temporal information and a focused detection strategy. The base architecture is built upon a convolutional U-Net [30], where skip connections link encoder and decoder stages to preserve spatial details. While U-Net is commonly used for image segmentation, its ability to reconstruct high-quality images makes it suitable for predicting future frames in traffic scenes.

The main improvements introduced in this work focus on better capturing temporal dependencies, refining spatial feature extraction, and improving the robustness of anomaly detection.

3.1. Data Acquisition

During early experiments, we observed that the quality of image reconstruction was limited, resulting in relatively low Peak Signal-to-Noise Ratio (PSNR) values. This was mainly due to the lack of large, high-resolution public datasets suitable for training deep generative models in realistic traffic environments. Although alternative anomaly detection datasets exist, many are characterized by low visual quality [31], outdated simulation models [32], or excessive noise and non-traffic-specific anomalies [33].

To overcome this limitation, we created a synthetic dataset (

https://github.com/Maarioo01/CARLAccident accessed on 12 November 2025) using CARLA simulator [

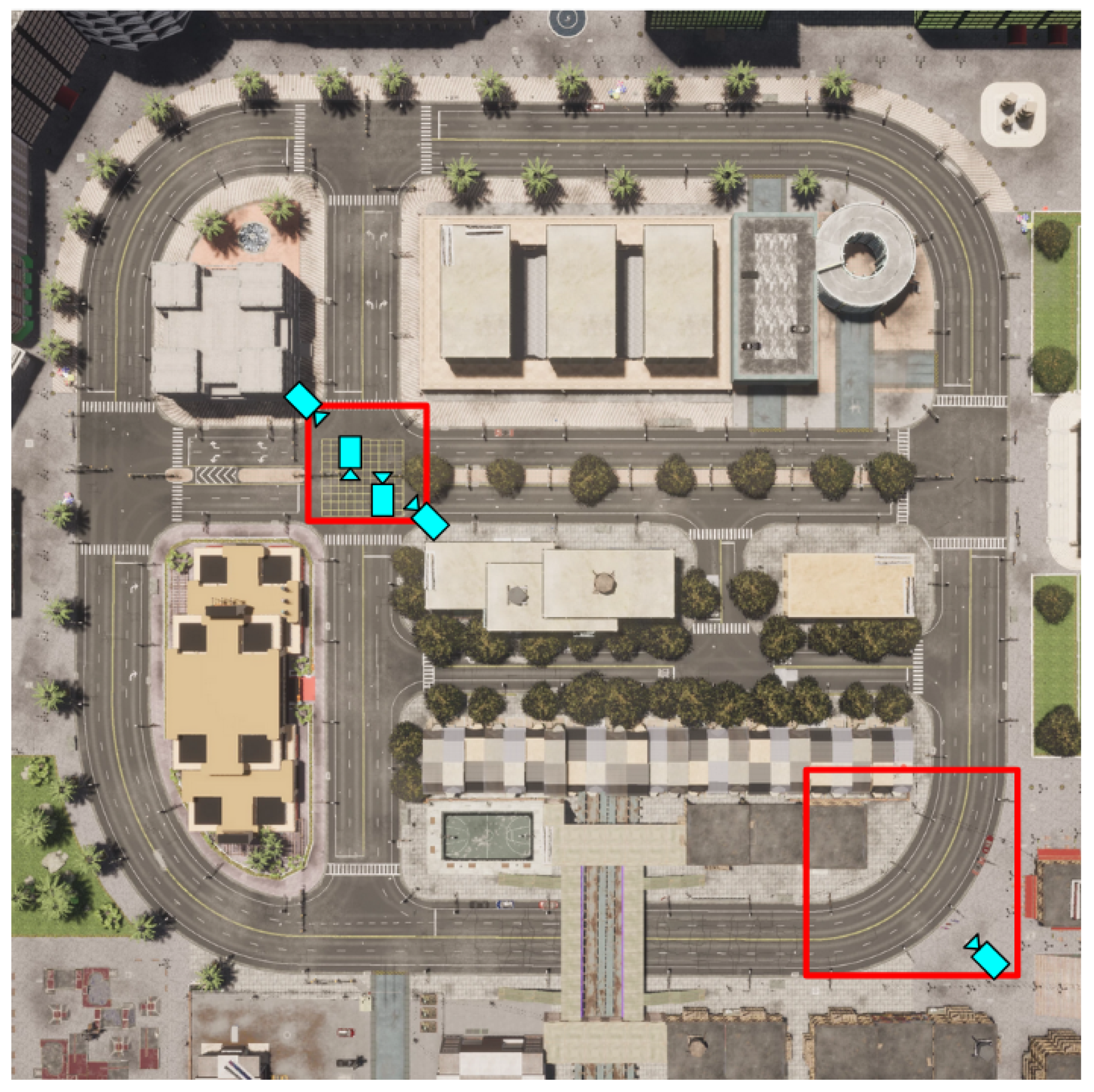

34] designed for anomaly detection. For dataset collection, two specific locations from the built-in Town10 map were selected, as shown in

Figure 2.

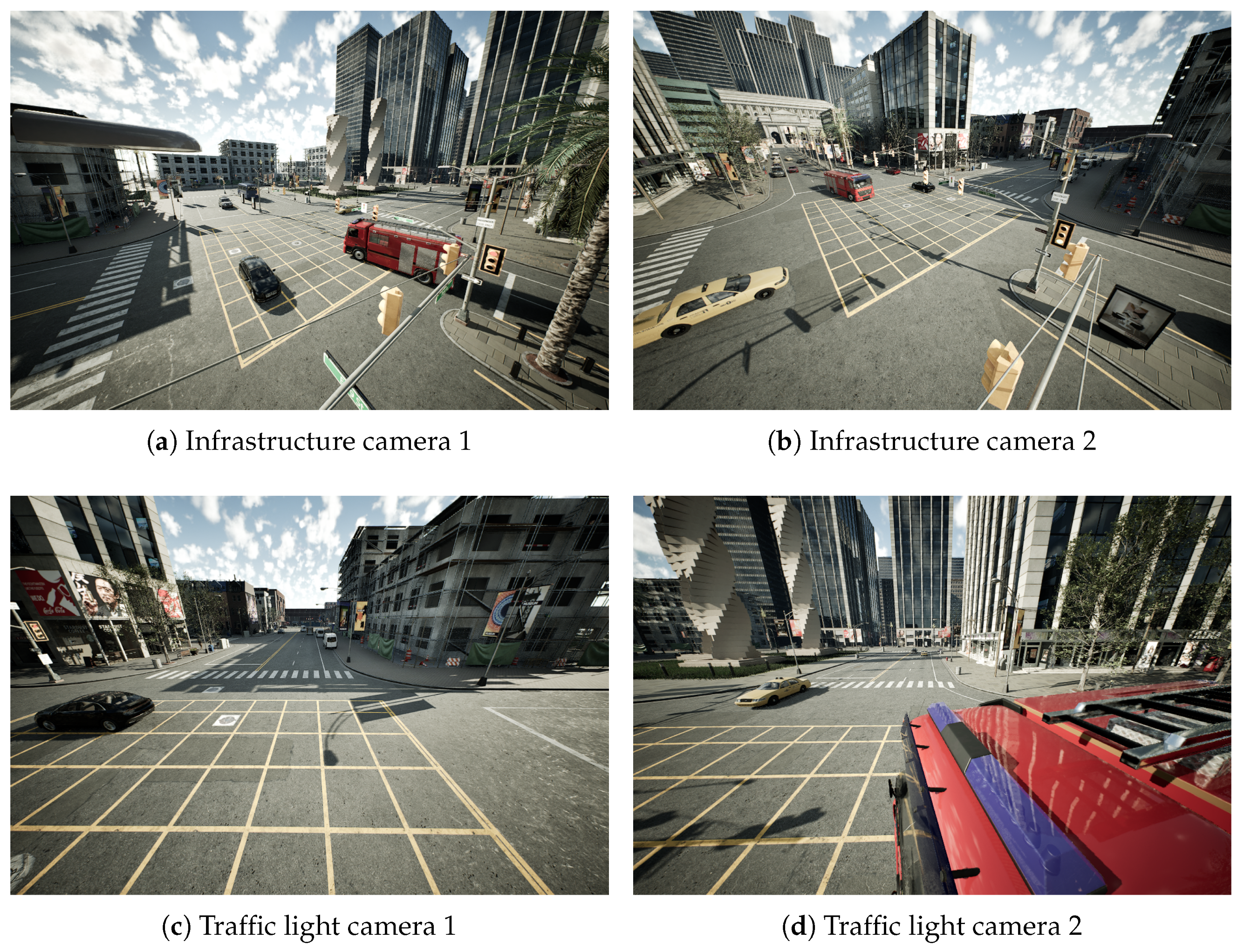

Intersection scenario: A four-way intersection equipped with four cameras placed at different angles to cover all directions, as illustrated in Figure 3.

Curved road scenario: A single camera positioned on a curved road to capture vehicles approaching from both directions, as shown in Figure 4.

A total of five cameras were deployed in CARLA, each configured with a resolution of 1280 × 720 pixels and a 120° field of view (FoV), ensuring extensive coverage of the selected scenarios.

To generate data, a large number of dynamic agents, including cars, trucks, motorcycles, and pedestrians, were introduced using an automated simulation script. This setup ensured a natural traffic flow within the virtual environment, facilitating the collection of high-quality “regular driving” sequences.

3.2. Proposed Architecture

The temporally enhanced framework proposed in this work is illustrated in Figure 5. Given a sequence I of n frames, the images are first resized and processed by the encoder E to extract the main feature representation tensor. In parallel, a Correlation Matrix is computed to capture pixel-level dependencies within I. This step is critical for preserving temporal context; without it, the system would interpret I as n independent frames rather than as a temporally coherent sequence. The Correlation Matrix is then flattened and fed into a Temporal Modulation Module. This module consists of two distinct linear layers that transform the flattened matrix into two vectors of equal dimension: a scale and a shift. The latent space is then generated by applying an Affine Transformation to the feature tensor using these two modulation factors.

This method ensures a more sophisticated and dynamic integration of temporal context into the visual features. By conditionally scaling and shifting the spatial features based on the history captured by the Correlation Matrix, we ensure that the temporal dynamics are incorporated in a non-additive manner, maximizing the fusion efficiency without increasing the dimensionality of the feature representation available to the decoder D.
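The scale-and-shift modulation described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the names `linear`, `temporal_modulation`, `gamma`, and `beta` are hypothetical, the modulation is assumed to be element-wise on a flat feature vector, and plain Python lists stand in for the tensors and linear layers that a deep learning framework would provide.

```python
def linear(weights, bias, x):
    """Dense layer y = W x + b on plain Python lists."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def temporal_modulation(features, corr_flat, scale_layer, shift_layer):
    """Scale and shift the features with factors derived from the flattened
    correlation matrix (assumed form: z = gamma * f + beta, element-wise)."""
    gamma = linear(scale_layer[0], scale_layer[1], corr_flat)  # scale vector
    beta = linear(shift_layer[0], shift_layer[1], corr_flat)   # shift vector
    return [g * f + b for g, f, b in zip(gamma, features, beta)]


# Toy example: 3 features and a flattened 2x2 correlation matrix.
features = [1.0, -2.0, 0.5]
corr_flat = [1.0, 0.8, 0.8, 1.0]
zero_w = [[0.0] * 4 for _ in range(3)]      # zero weights: only the bias acts
scale_layer = (zero_w, [2.0, 2.0, 2.0])     # gamma = 2 for every feature
shift_layer = (zero_w, [0.5, 0.5, 0.5])     # beta = 0.5 for every feature
latent = temporal_modulation(features, corr_flat, scale_layer, shift_layer)
```

Because the output has the same length as the input features, the temporal information is fused without enlarging the representation passed to the decoder, which is the property the paragraph above emphasizes.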

At the final stage, the predicted frame generated by our model must be compared with the ground-truth frame to evaluate the normality of the scene. However, in most anomaly cases, many background elements such as the road surface, buildings, traffic lights, or trees are not directly involved in the event. Moreover, since reconstructions of normal situations are never perfectly accurate at the pixel level, we introduced an additional step in the anomaly detection stage. After obtaining the predicted frame, the ground-truth frame is processed with YOLOv11 [35] as the object detector to identify traffic-related elements of the scene and generate a mask M. This mask is then applied to both frames, resulting in masked versions of the predicted and ground-truth frames. Finally, the masked images are compared using PSNR, from which a similarity score is derived to determine whether the observed situation corresponds to a normal event or an anomaly.

3.3. Temporal Context

One major limitation of the initial approach [3] was the lack of temporal knowledge in the input data used for frame reconstruction. The model was originally designed to predict the next frame based on a single input image, which proved insufficient for accurately capturing dynamic traffic scenes. Given that urban environments are characterized by constantly moving objects, such as vehicles and pedestrians suddenly entering the field of view, this limited temporal awareness resulted in frequent reconstruction errors.

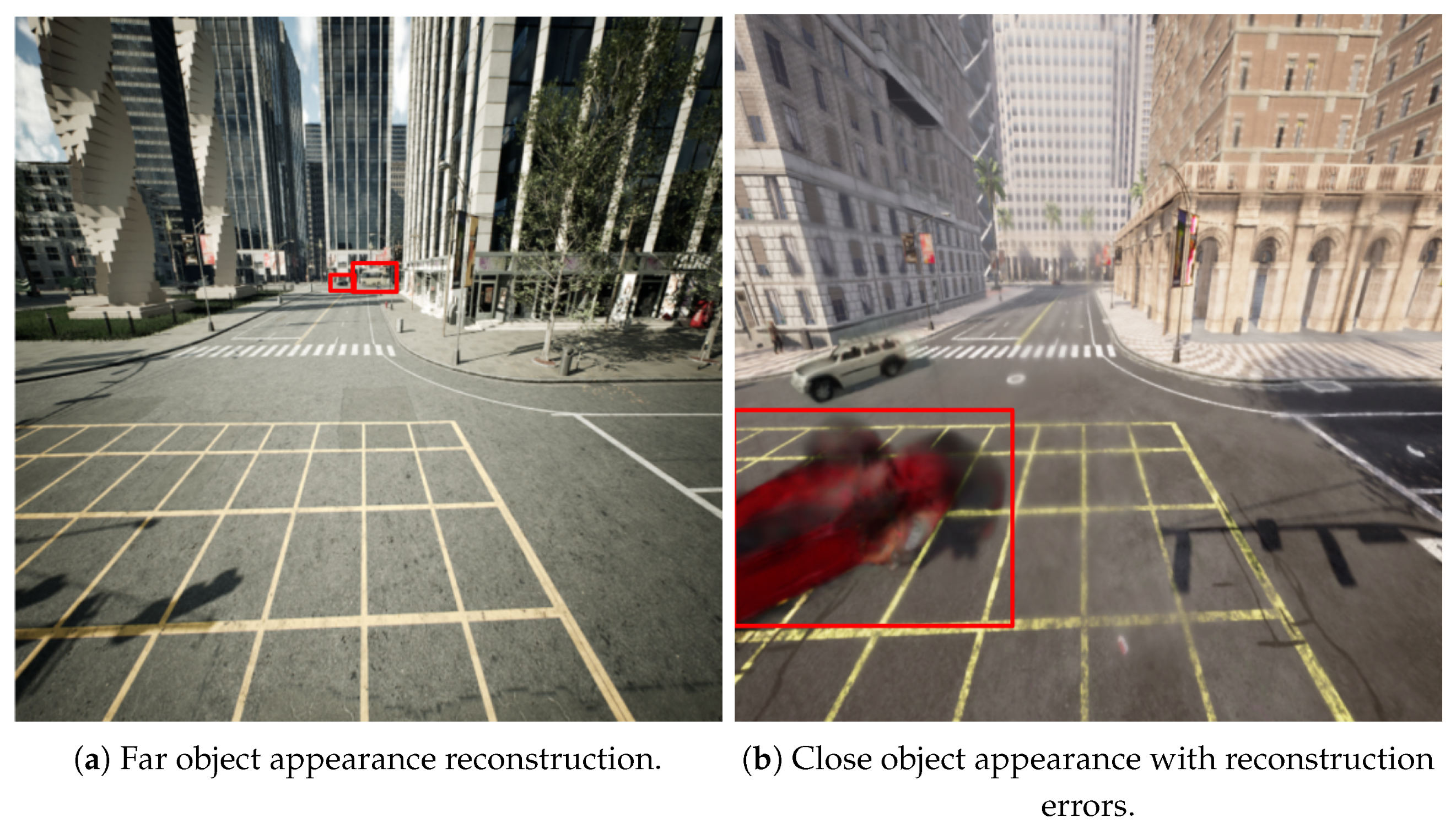

This limitation was especially evident when the model attempted to reconstruct newly introduced objects, such as a car or pedestrian suddenly appearing in the scene. In these cases, significant reconstruction errors occurred because the model lacked prior context to predict the presence and shape of objects that had not been visible in the previous frame. The severity of these errors largely depended on the object’s position relative to the camera:

Distant objects: When an object appeared far from the camera, as shown in Figure 6a, perspective effects caused it to occupy a relatively small area in the image. Consequently, the reconstruction error was distributed over a limited number of pixels, leading to only a minor drop in PSNR.

Close objects: In contrast, when a new object entered the scene close to the camera, as shown in Figure 6b, it occupied a significantly larger portion of the image. This led to substantial reconstruction errors, resulting in a sharp drop in PSNR. Consequently, the system often misinterpreted this drop as an anomaly, increasing the estimated probability of anomaly and triggering a false positive detection.

Further analysis showed that this issue was most pronounced in the initial frames when a new object first entered the scene. At this stage, partial occlusion, caused by the camera’s perspective, limited the model’s ability to infer the complete structure of the object. However, as the object became fully visible, the reconstruction stabilized, and the PSNR returned to normal levels.

The primary cause of this issue was the model’s reliance on a single-frame input, which lacked sufficient temporal information for accurate predictions. To overcome this limitation, the training structure was modified to use a sequence of n consecutive frames instead of a single one. However, while increasing the input to a sequence of n frames enhances the temporal view, relying solely on image features from the encoder E is insufficient to capture frame-to-frame dependencies explicitly. Therefore, the correlation matrix is calculated to provide an explicit, low-dimensional representation of the pixel-level temporal consistency across the sequence. This information is then merged with the extracted feature tensor to construct an enriched latent space with stronger temporal awareness. The goal of this adjustment is to provide the model with a more comprehensive representation of scene dynamics, improving its ability to anticipate temporal variations while reducing the noise caused by newly appearing objects within the camera’s field of view.
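To make the idea of an explicit frame-to-frame representation concrete, the sketch below builds an n × n correlation matrix for a sequence of n frames. The text does not state how the matrix is computed, so the use of the Pearson correlation between flattened pixel intensities is an assumption made purely for illustration, and the function names are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equally sized flattened frames."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    denom = sqrt(var_a * var_b)
    return cov / denom if denom > 0 else 0.0  # constant frames carry no signal


def correlation_matrix(frames):
    """n x n matrix whose entry (i, j) compares frame i with frame j."""
    return [[pearson(fi, fj) for fj in frames] for fi in frames]


def flatten(matrix):
    """Row-major flattening, ready to feed a linear layer."""
    return [value for row in matrix for value in row]


# Three tiny "frames" of four pixels each (already flattened).
frames = [[0, 1, 2, 3], [0, 2, 4, 6], [3, 2, 1, 0]]
corr = correlation_matrix(frames)
corr_flat = flatten(corr)  # length n * n = 9
```

With this choice, a sequence of n frames yields only n² values, which fits the description of a low-dimensional summary of temporal consistency.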

However, this limitation was not fully overcome, as the unpredictable emergence of new objects continues to make perfect reconstruction a persistent challenge. This highlights an avenue for future work focused on improving the model’s capacity to anticipate and reconstruct previously unseen objects in complex traffic environments.

3.4. Generative

The Generative part of our architecture, built on the U-Net encoder–decoder structure [30], benefits significantly from the enhanced latent space. Our approach refines the temporal integration by moving beyond the original design [3], which relied only on image features propagated through skip connections. The key adjustment is the use of the Affine Transformation for the construction of the latent space. By applying the temporal modulation factors (scale and shift) derived from the correlation matrix to the bottleneck features, we overcome the issue of insufficient temporal knowledge that affected the single-frame baseline. This modulation allows the model to selectively emphasize elements within the visual features based on the observed temporal consistency of the scene, leading to more accurate predictions and improved robustness against reconstruction noise due to dynamic objects. The decoder D then utilizes this temporally enriched latent space and the spatial details from the skip connections to reconstruct the subsequent frame.

3.5. Anomaly Detection

Once the predicted frame is generated, the anomaly detection part is applied to decide whether the scene is anomalous. In our previous work [3], anomaly evaluation was performed by directly comparing the predicted frame with the ground-truth frame to compute a similarity score. However, this initial approach exhibited several limitations. Changes between training and inference scenarios, including variations in objects, introduced noise into the reconstruction due to the inherent uncertainty of the generative model. Moreover, since the predicted frame cannot perfectly replicate the ground-truth pixels, the accumulation of minor reconstruction errors in irrelevant regions (e.g., buildings, road surface, distant objects) degraded the accuracy of anomaly detection, despite these areas not being crucial to distinguish normal from anomalous situations.

To mitigate these issues, we integrate a post-processing strategy that emphasizes the most relevant elements of the scene. We intentionally apply the masking after the generative phase for two reasons: (1) It ensures the encoder E has access to the full image context to extract the most comprehensive features, maximizing the reconstruction quality for all scenes, including those with subtle anomalies. (2) Using the mask M derived from the ground-truth frame guarantees that the mask accurately highlights all present objects. Specifically, the ground-truth frame, which is free from reconstruction noise, is processed with a state-of-the-art object detector [35] to extract bounding boxes of traffic-related entities such as vehicles, pedestrians, and traffic lights. From these detections, a mask M is generated to highlight only the relevant elements. This mask is then applied to both the predicted and the ground-truth frames, producing their masked counterparts and thereby filtering out irrelevant regions that would otherwise introduce noise. Then, the PSNR is computed between the masked frames to determine whether the observed situation corresponds to a normal event or an anomaly.

However, the performance of this system relies on the robustness of the object detector [35]. While its high precision proved sufficient for the diverse traffic behaviours and collisions generated in our dataset, a failure to detect severely distorted objects or objects in highly congested scenes during real anomalies could lead to false negatives in the masked comparison. For future deployment in real scenarios, maintaining system reliability will require continuous monitoring and fine-tuning of the object detection module with real data to ensure that all safety-relevant elements are consistently masked.
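The masked comparison can be illustrated with a small, self-contained sketch. It assumes that bounding boxes are already available as `(x1, y1, x2, y2)` pixel tuples with exclusive right and bottom edges, that frames are single-channel 2D lists, and that masking simply zeroes pixels outside the boxes; all function names are hypothetical and the object detector itself is not part of the example.

```python
from math import log10, inf


def boxes_to_mask(height, width, boxes):
    """Binary mask that is 1 inside any detected bounding box, 0 elsewhere."""
    mask = [[0] * width for _ in range(height)]
    for x1, y1, x2, y2 in boxes:
        for y in range(max(0, y1), min(height, y2)):
            for x in range(max(0, x1), min(width, x2)):
                mask[y][x] = 1
    return mask


def apply_mask(frame, mask):
    """Zero out every pixel that does not belong to a detected object."""
    return [[p * m for p, m in zip(f_row, m_row)]
            for f_row, m_row in zip(frame, mask)]


def psnr(a, b, max_value=255.0):
    """Peak Signal-to-Noise Ratio between two equally sized frames."""
    count = sum(len(row) for row in a)
    mse = sum((x - y) ** 2
              for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / count
    return inf if mse == 0 else 10 * log10(max_value ** 2 / mse)


# 4x4 ground truth; the prediction has a large background error at (0, 0)
# and a small error on the detected object at (2, 2).
truth = [[100] * 4 for _ in range(4)]
pred = [row[:] for row in truth]
pred[0][0] = 150
pred[2][2] = 110

mask = boxes_to_mask(4, 4, [(1, 1, 3, 3)])
full_score = psnr(pred, truth)
masked_score = psnr(apply_mask(pred, mask), apply_mask(truth, mask))
```

In this toy case the background error dominates the unmasked score, while the masked score reflects only the error on the detected object, which mirrors the motivation given above for filtering out irrelevant regions.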

4. Experiments and Results

Building on TGU-Net, a series of experiments was conducted to evaluate the effectiveness of the proposed approach. The main goal was to analyze the impact of object masking, assessing whether filtering out static objects enhances anomaly detection, and to compare the performance gained from adding the correlation matrix against previously explored modifications [3].

4.1. Generated Dataset

As mentioned in Section 3.1, the lack of large, high-quality datasets for traffic anomaly detection motivated the creation of a CARLA-based synthetic dataset. Using the same simulator configuration described earlier, we collected both training and testing data under realistic traffic conditions. In total, 508,685 frames were collected for training, including 406,948 frames from the intersection scenario and 101,737 frames from the curved road. All training sequences represent normal traffic behaviors, without any anomalies.

To evaluate the model’s performance in detecting traffic anomalies, a separate dataset was generated with a combination of normal and anomalous events. The anomalies were created manually by introducing controlled collisions between vehicles under various conditions. This dataset includes:

1270 anomalous frames, grouped into different sequences and covering various types of collisions, including frontal, lateral, and rear-end impacts. These sequences were manually generated to ensure a diverse range of scenarios.

2651 normal frames, also structured in distinct sequences, representing routine traffic behavior.

For preprocessing, the same pipeline was applied to both training and testing datasets. First, the entire set of frames was grouped into sequences of n consecutive frames. Each sequence was then resized to the network input resolution, providing the final input sequence for E. Finally, the target to be predicted is defined as the frame immediately following the selected sequence.
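The grouping step can be sketched as a sliding window over the ordered frames. The stride of one frame (overlapping windows) is an assumption, since the text does not say whether consecutive sequences overlap, and `make_samples` is a hypothetical helper name. Resizing is omitted.

```python
def make_samples(frames, n, stride=1):
    """Pair each window of n consecutive frames with the frame that follows it.

    frames: ordered list of frames (any objects, e.g. file paths or arrays)
    n:      number of input frames per sequence
    stride: step between window starts (1 = overlapping windows, an assumption)
    """
    samples = []
    for start in range(0, len(frames) - n, stride):
        inputs = frames[start:start + n]   # the n-frame context window
        target = frames[start + n]         # the immediate next frame
        samples.append((inputs, target))
    return samples


# Integers stand in for frames to keep the example readable.
samples = make_samples(list(range(6)), n=3)
```

Here the six placeholder frames yield three samples, the first being `([0, 1, 2], 3)`. Setting `stride=n` would instead produce non-overlapping sequences.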

4.2. Implementation Details

With the training datasets prepared and organized, the next phase was training. TGU-Net was developed and trained using PyTorch 2.0.0+cu118 on two NVIDIA RTX 4090 GPUs (USA, Santa Clara, California) with 24 GB of VRAM each, using a workstation equipped with a 13th Gen Intel® Core™ i9-13900K (USA, Arizona, Chandler) and 64 GB of RAM. The high memory capacity was essential for managing large batch sizes and high-resolution images, effectively minimizing memory-related bottlenecks. In alignment with the goal of computational efficiency, we measured the end-to-end inference time, encompassing the full pipeline: sequence loading, feature extraction, frame reconstruction, object detection, masking, and final PSNR calculation. The average inference time achieved was ∼0.0785 s per sequence (approximately 12.7 sequences per second) when running on a single NVIDIA RTX 4090 GPU. This performance supports the framework’s suitability for real-time deployment in traffic monitoring applications.

The experiments were conducted using an architecture composed of an encoder–decoder module, with a middle feature representation f whose dimension d is fixed to 1024. Model training was performed using the Adam optimizer [36], combined with a learning rate scheduler to ensure stable convergence. The initial learning rate was set to 0.01 and decreased by 10% every two epochs. A batch size of 20 was adopted, and model training proceeded for a maximum of 100 epochs, with early stopping implemented to terminate the process when no further improvements were detected.
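As a worked illustration of the schedule, the sketch below computes the learning rate per epoch, reading "decreased by 10% every two epochs" as a multiplication by 0.9 at every second epoch. That reading, and the function name `learning_rate`, are assumptions; in PyTorch the same step decay would typically be expressed with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.9)`.

```python
def learning_rate(epoch, initial=0.01, decay=0.9, step=2):
    """Step decay: multiply the rate by `decay` once every `step` epochs.

    epoch is zero-based, so epochs 0 and 1 use the initial rate.
    """
    return initial * decay ** (epoch // step)


# Learning rate for the first six epochs.
schedule = [learning_rate(epoch) for epoch in range(6)]
```

Under this reading the rate stays at 0.01 for the first two epochs, drops to 0.009 for the next two, and then to roughly 0.0081.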

The loss function was defined as a weighted combination of Mean Squared Error (MSE) and Structural Similarity Index Measure (SSIM), ensuring a balance between pixel-wise accuracy and perceptual similarity. The MSE term is computed between the ground-truth and predicted images over N elements (pixels × channels), while the SSIM term is computed from the mean intensities, variances, and covariance of the two images, together with small stabilizing constants. Two weights control the relative contribution of the two terms.

While the MSE measures the average squared difference between corresponding pixels, with lower values indicating greater similarity, SSIM evaluates the perceptual quality of an image by comparing luminance, contrast, and structure, producing values between −1 and 1, where values closer to 1 indicate better reconstruction.
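For reference, one common way to write such a combined loss is given below. The MSE and SSIM expressions are the standard definitions and match the quantities listed above; the symbols $x$, $\hat{x}$, $\alpha$, $\beta$ and the use of $1 - \mathrm{SSIM}$ as the term to minimize are assumptions, since the exact form used in this work is not reproduced here.

```latex
\begin{aligned}
\mathcal{L}(x, \hat{x}) &= \alpha \, \mathrm{MSE}(x, \hat{x})
  + \beta \, \bigl(1 - \mathrm{SSIM}(x, \hat{x})\bigr), \\
\mathrm{MSE}(x, \hat{x}) &= \frac{1}{N} \sum_{i=1}^{N} \bigl(x_i - \hat{x}_i\bigr)^2, \\
\mathrm{SSIM}(x, \hat{x}) &=
  \frac{\bigl(2 \mu_x \mu_{\hat{x}} + C_1\bigr)\bigl(2 \sigma_{x\hat{x}} + C_2\bigr)}
       {\bigl(\mu_x^2 + \mu_{\hat{x}}^2 + C_1\bigr)\bigl(\sigma_x^2 + \sigma_{\hat{x}}^2 + C_2\bigr)}
\end{aligned}
```

Here $x$ and $\hat{x}$ denote the ground-truth and predicted images, $\mu$ the mean intensities, $\sigma^2$ the variances, $\sigma_{x\hat{x}}$ the covariance, $C_1$ and $C_2$ the stabilizing constants, and $\alpha$, $\beta$ the weights of the two terms.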

In addition, the model’s performance was evaluated using several key metrics to assess its effectiveness and the impact of the proposed enhancements. Three main evaluation strategies were adopted: PSNR, accuracy, and a custom delay function.

First, PSNR measures the quality of the reconstructed scenes by quantifying the difference between generated and ground-truth images. In the context of anomaly detection, PSNR is particularly effective, as it emphasizes the ratio between signal peaks and noise, making it more resilient to localized outliers. In this work, PSNR values were also converted to confidence percentages to estimate the likelihood of an anomaly occurring. The PSNR is calculated as

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)$$

where MAX represents the maximum possible pixel value and MSE is the Mean Squared Error.

Subsequently, to complement PSNR, classification metrics were used, including accuracy and recall. These metrics offered a comprehensive view of the system’s ability to correctly distinguish between normal and anomalous sequences, based on a threshold applied to the confidence score derived from PSNR.
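The thresholding step and the two metrics can be sketched as follows. The confidence scores are taken as given, because the conversion from PSNR to a confidence percentage is not specified here; the threshold of 0.5 and the function names are hypothetical.

```python
def classify(confidences, threshold=0.5):
    """Flag a sample as anomalous when its confidence reaches the threshold."""
    return [c >= threshold for c in confidences]


def accuracy_and_recall(predicted, actual):
    """Accuracy over all samples and recall over the truly anomalous ones."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # anomalies detected
    tn = sum(1 for p, a in pairs if not p and not a)  # normal kept normal
    fn = sum(1 for p, a in pairs if not p and a)      # anomalies missed
    accuracy = (tp + tn) / len(pairs)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, recall


# Anomaly confidences for five sequences and their true labels.
confidences = [0.9, 0.2, 0.7, 0.4, 0.8]
labels = [True, False, False, True, True]
accuracy, recall = accuracy_and_recall(classify(confidences), labels)
```

In this toy example three of the five sequences are classified correctly, and two of the three true anomalies are recovered.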

Finally, a custom delay function was implemented to evaluate the model’s responsiveness. This function measures the number of frames between the actual occurrence of an anomaly and the point at which the system detects it with 100% confidence.
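A minimal sketch of such a delay measure is shown below. It assumes per-frame anomaly confidences in the range 0 to 1 and a known onset frame index; the function name `detection_delay` and the choice to return `None` when the anomaly is never detected are illustrative assumptions.

```python
def detection_delay(confidences, onset_frame, full_confidence=1.0):
    """Frames elapsed between the anomaly onset and the first frame at which
    the confidence reaches `full_confidence`; None if that never happens."""
    for index in range(onset_frame, len(confidences)):
        if confidences[index] >= full_confidence:
            return index - onset_frame
    return None


# The anomaly starts at frame 2 and is detected with full confidence at frame 4.
per_frame_confidence = [0.1, 0.2, 0.6, 0.9, 1.0, 1.0]
delay = detection_delay(per_frame_confidence, onset_frame=2)
```

A smaller delay means the system reacts sooner after the event, which matters for the emergency response times discussed in the introduction.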

4.3. Evaluation of PSNR Across Different Situations

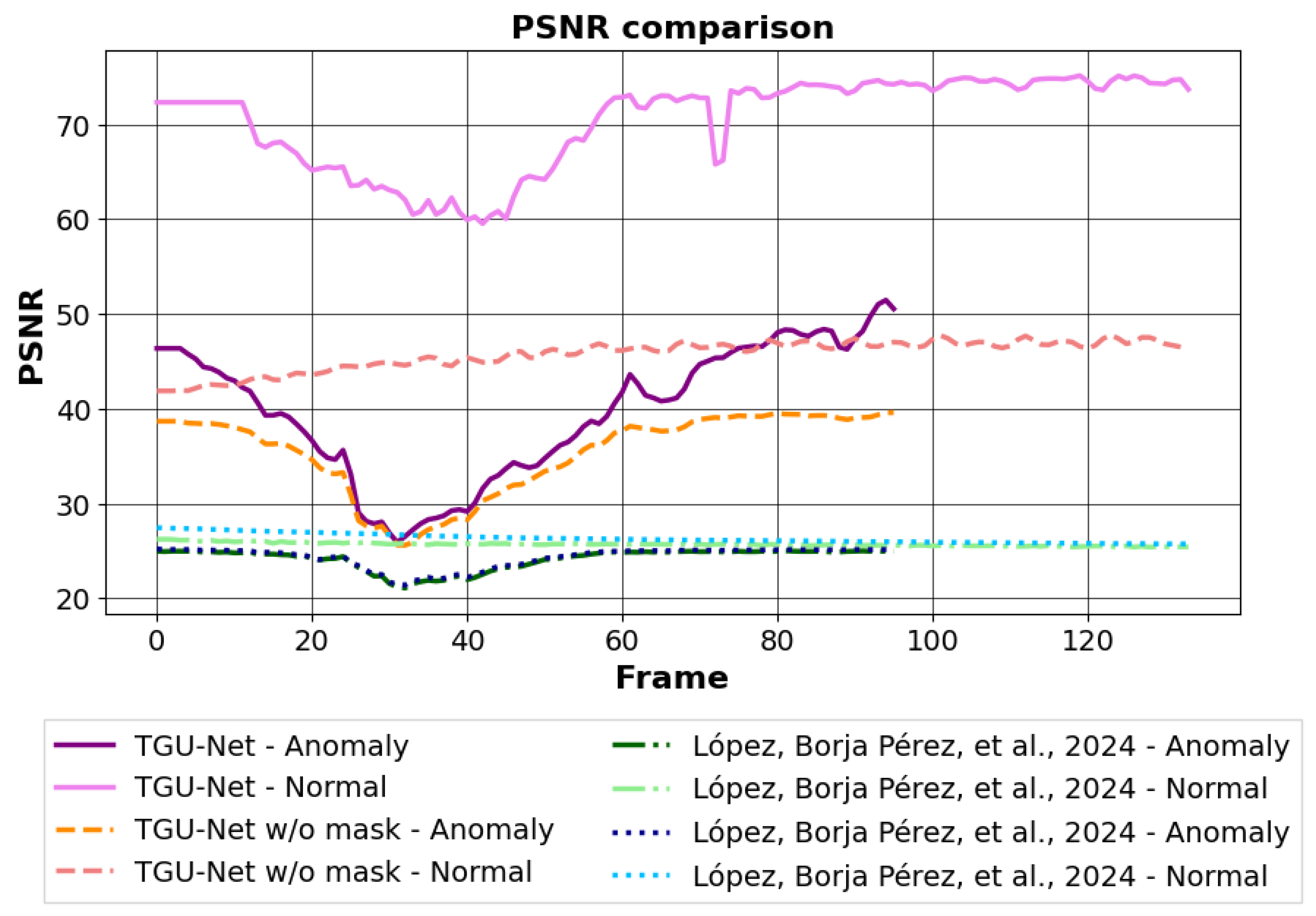

Based on the evaluation metrics defined above, the experimental analysis was designed to systematically assess the effectiveness of the proposed TGU-Net framework. In this section, we present the results obtained from applying the model to diverse traffic scenarios, with the aim of quantifying the improvements introduced by the temporal and anomaly detection modules. As a first step, PSNR was evaluated across different test situations to provide a direct comparison between our proposed implementation, its ablated version without the anomaly detection stage, and the U-Net and U-NetR baselines from our previous work [3]. The corresponding results are summarized in Table 1.

As expected, anomalous scenes consistently yield lower PSNR values than normal ones, reflecting the model’s difficulty in accurately reconstructing anomalies. However, the most significant insight is that the TGU-Net w/o mask not only achieves higher absolute values than the baseline models overall but also exhibits a much clearer separation between normal and anomalous scenarios. This distinction is critical for improving anomaly classification performance.

Nonetheless, its performance remains below that of the TGU-Net with the proposed anomaly detection part, underlining the critical role of the object-masking mechanism. This significant gap achieved by the final model reinforces its effectiveness in separating normal and anomalous frames, offering a more robust foundation for traffic anomaly detection systems.

To better visualize these differences, two scenarios were selected from the same camera viewpoint and environment, one depicting an anomaly and the other showing normal behavior. The corresponding frame sequences were processed using all the evaluated models. As shown in Figure 7, each pair of lines represents the PSNR values obtained from a specific model when applied to the normal and anomalous scenes, respectively.

This visualization offers a clearer understanding of the effectiveness of TGU-Net in distinguishing between normal and anomalous scenes. Not only does it consistently yield the highest PSNR values, but it also maintains a well-defined separation between the two categories. Specifically, the large separation between the masked TGU-Net and the unmasked TGU-Net lines demonstrates the efficacy of the masking strategy in suppressing background noise and stabilizing the metric in normal scenes, which is a key justification for preferring it over unmasked evaluation. In contrast, the models from [3] exhibit significantly lower PSNR values, with only minimal differentiation between normal and anomalous situations.

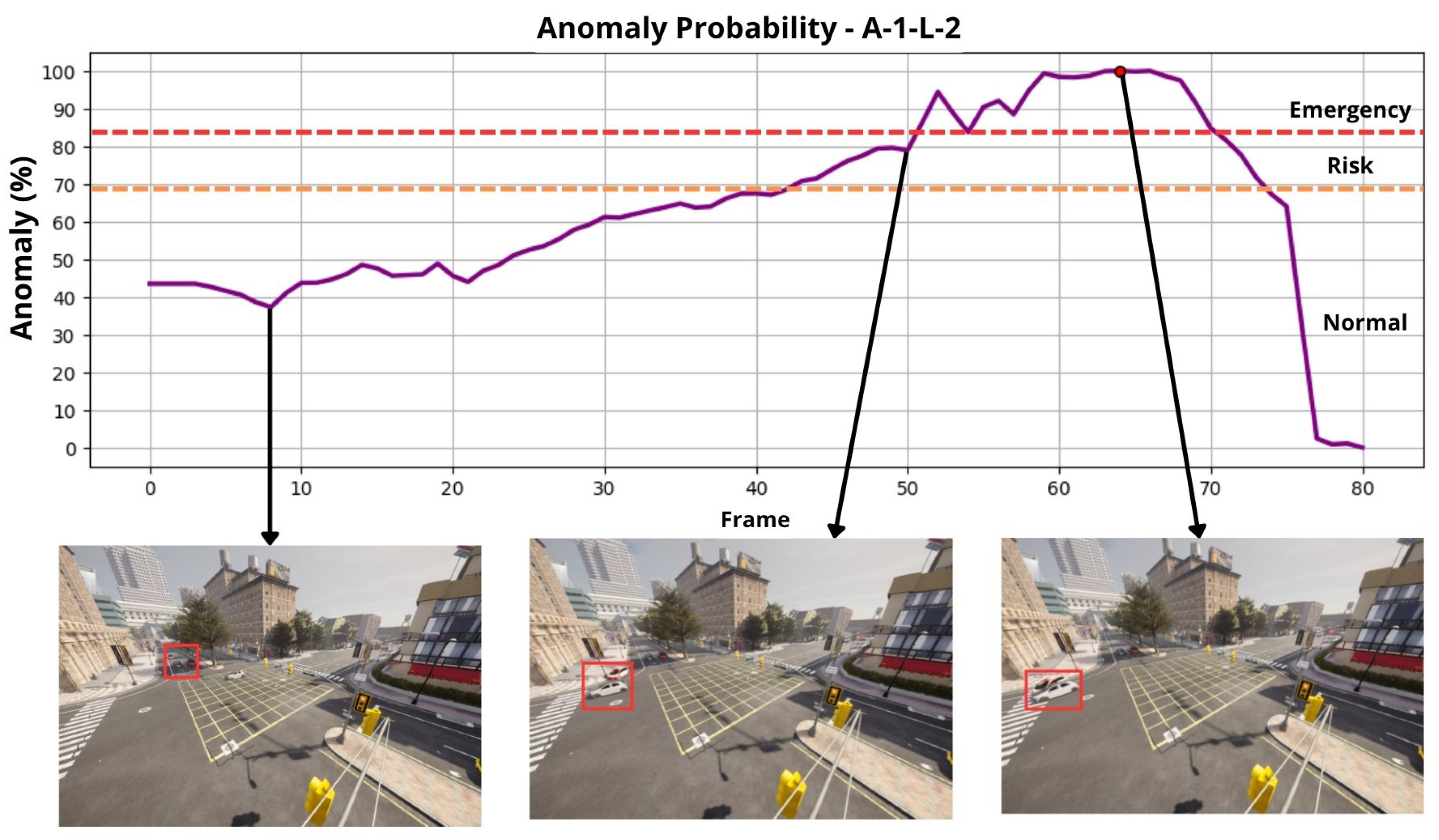

4.4. Assessment of Anomaly Detection Confidence

As outlined earlier, PSNR values are used to estimate the probability of an anomaly. To further analyze this aspect, an offline evaluation was conducted to examine the evolution of PSNR during anomalous sequences. Specifically, accident scenarios were processed with the proposed TGU-Net and the corresponding PSNR values were tracked over time. These values were subsequently normalized to a percentage scale

$$\mathrm{Confidence}\,(\%) = 100 \times \frac{\mathrm{PSNR}_{\max} - \mathrm{PSNR}}{\mathrm{PSNR}_{\max} - \mathrm{PSNR}_{\min}}$$

so that the lowest observed PSNR ($\mathrm{PSNR}_{\min}$) was mapped to a 100% anomaly confidence and the highest ($\mathrm{PSNR}_{\max}$) to 0%.
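The mapping described above can be sketched as an inverted min-max normalization over the PSNR values observed in a sequence. This is a minimal illustration that assumes a linear mapping; the function name `anomaly_confidence` and the handling of a constant sequence are assumptions, not details given in the text.

```python
def anomaly_confidence(psnr_values):
    """Map PSNR values to anomaly confidence in percent.

    The lowest observed PSNR maps to 100 and the highest to 0
    (assumption: linear min-max scaling between the two).
    """
    lowest, highest = min(psnr_values), max(psnr_values)
    if highest == lowest:
        # No variation in the sequence: report no anomaly evidence.
        return [0.0 for _ in psnr_values]
    span = highest - lowest
    return [100.0 * (highest - p) / span for p in psnr_values]


if __name__ == "__main__":
    print(anomaly_confidence([30.0, 25.0, 20.0]))  # [0.0, 50.0, 100.0]
```

Because the scale is anchored to the observed extremes, this form suits the offline analysis described here. An online deployment would need reference values for the minimum and maximum fixed in advance.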

As illustrated in Figure 8, this transformation facilitates the adjustment of stage limits, enabling the design of adaptive thresholds that can distinguish between three levels of risk: normal operation, potential risk, and emergency anomaly. This approach not only enhances interpretability but also provides the basis for deploying the system in real-time safety applications.
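A three-level decision of this kind can be expressed as two cut-offs on the confidence percentage. The sketch below is illustrative only: the threshold values of 50% and 80% and the function name `risk_level` are hypothetical, since the stage limits used in this work are not stated in this section.

```python
def risk_level(confidence, risk_threshold=50.0, emergency_threshold=80.0):
    """Classify an anomaly confidence (0-100) into one of three stages.

    Both thresholds are hypothetical placeholders to be tuned per camera.
    """
    if confidence >= emergency_threshold:
        return "emergency anomaly"
    if confidence >= risk_threshold:
        return "potential risk"
    return "normal operation"


if __name__ == "__main__":
    for value in (10.0, 65.0, 100.0):
        print(value, risk_level(value))
```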

4.5. Accuracy Evaluation of TGU-Net and Models in [3]

To further evaluate the model’s performance at different confidence levels used for anomaly classification, we analyzed its classification metrics, including accuracy, precision, recall, and F1 score. These metrics provide a comprehensive view of the model’s ability to correctly detect anomalies while minimizing false positives. The corresponding results are presented in Table 2.
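For reference, the four metrics follow directly from the confusion counts of a binary normal/anomalous decision. The sketch below uses the standard definitions; the function name `classification_metrics` and the toy labels are illustrative and are not taken from Table 2.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = anomalous)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Toy example: every anomaly is found, but one normal scene is flagged.
    truth = [1, 1, 0, 0, 0]
    predicted = [1, 1, 1, 0, 0]
    print(classification_metrics(truth, predicted))
```

In this toy case recall is 1.0 while precision drops to 2/3, which is the pattern of perfect recall with false alarms that these metrics are meant to expose.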

The baseline models achieve perfect recall, indicating that they successfully identify all anomalous cases. However, this comes at the cost of significantly lower precision due to a high number of false positives. These results imply that while both models are sensitive to detecting anomalies, they frequently misclassify normal scenes as anomalies, resulting in a high false alarm rate.

The TGU-Net w/o the mask already shows a notable improvement over the baseline approaches. It achieves higher accuracy and maintains perfect recall, successfully identifying all anomalous instances. Nevertheless, the presence of some false alarms indicates that residual noise in the scene, likely from static or irrelevant elements, can still cause occasional misclassification.

The final TGU-Net further enhances these results, achieving the highest accuracy and maintaining perfect recall, leading to a robust F1-score. This balance reflects the model’s strong capability in correctly identifying all anomalous cases while also minimizing false positives. These gains underscore the effectiveness of the object-masking strategy, which reduces background noise and allows the model to focus on dynamic, high-risk elements within the traffic scene, resulting in more consistent and trustworthy predictions.

4.6. Frame Delay Evaluation

Finally, to assess the timeliness of each model’s detection, we introduce a custom delay metric. This metric quantifies the reaction time of the model, measured in frames, from the onset of an anomalous event to its definitive detection. We formally define the delay $D$ for a single anomalous event as

$$D = F_{D} - F_{GT}$$

where $F_{GT}$ (Ground Truth Frame) is the frame index where the anomalous event actually begins and $F_{D}$ (Detection Frame) is the first frame index at which the model’s confidence score reaches its maximum value (100%), indicating a confident and complete detection.

Table 3 presents a comparative analysis of the ground truth frame ($F_{GT}$), the model’s detection frame ($F_{D}$), and the resulting delay ($D$) for the TGU-Net, its mask-free variant, and the two baseline architectures.
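The delay computation can be sketched as a search for the first frame whose confidence reaches 100%, followed by a subtraction of the ground-truth onset frame. This is a minimal illustration assuming zero-based frame indices and the delay taken as detection frame minus ground-truth frame, so that a negative result means the anomaly was flagged before its annotated onset. The function name `detection_delay` and the `None` return for sequences that never reach full confidence are assumptions.

```python
def detection_delay(confidences, gt_frame, full_confidence=100.0):
    """Frames between the annotated onset and the first full-confidence frame.

    Returns a negative value when detection precedes the onset, and None
    when the confidence never reaches `full_confidence`.
    """
    for frame_index, confidence in enumerate(confidences):
        if confidence >= full_confidence:
            return frame_index - gt_frame
    return None


if __name__ == "__main__":
    scores = [0.0, 20.0, 60.0, 100.0, 100.0]
    print(detection_delay(scores, gt_frame=2))  # 1: detected one frame late
    print(detection_delay(scores, gt_frame=4))  # -1: detected one frame early
```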

The models in [3] demonstrate inconsistent behavior in early anomaly detection, often exhibiting significant delays. In multiple cases, they recognize anomalies well after the actual event, limiting their practicality in real-time applications.

The TGU-Net without the anomaly detection part shows notable improvement in early detection compared with the models in [3]. In many scenarios, it successfully anticipates anomalies before they happen. However, its performance remains inconsistent in certain cases, occasionally failing to outperform the baseline models.

Within this context, the TGU-Net achieves a superior balance between early detection and prediction stability. It consistently reduces detection delays, often identifying anomalies at the exact moment they occur, or even slightly in advance, without losing precision. Moreover, it shows enhanced temporal stability, avoiding the fluctuations seen in the unmasked version, which occasionally introduced minor delays. As shown in Figure 9, in the A-4-L-3 scenario all models converge around frame 30. However, TGU-Net and its unmasked variant reach full confidence earlier. Notably, the TGU-Net achieves 100% detection confidence precisely at the anomalous frame, as marked by the dotted horizontal line.

In summary, the TGU-Net demonstrates strong performance by enabling early and stable anomaly detection with sustained confidence. While the version without the anomaly detection part also surpasses the baseline models in early detection, it falls short in terms of precision and consistency. These results underscore the contribution of the TGU-Net model to both detection stability and overall accuracy.