Exploiting Spiking Neural Networks for Click-Through Rate Prediction in Personalized Online Advertising Systems

Abstract

1. Introduction

1.1. Personalized Online Advertising Systems

1.2. Spiking Neural Networks (SNNs)

1.3. Challenge and Objectives

- Design and develop novel SNN-based models tailored to the specific requirements and challenges of personalized online advertising systems, considering several complex factors such as user behavior patterns and information;

- Investigate and understand the potential advantages of SNN-based models over traditional ANN-based models in predicting CTR in the context of personalized online advertising;

- Conduct empirical experiments and comparative analysis to quantitatively assess the performance of the SNN-based models in terms of CTR prediction accuracy, efficiency, and adaptability in comparison to traditional ANN-based models;

- Provide insights for improving advertising effectiveness for both businesses and users. More accurate CTR predictions let businesses optimize ad placement and targeting, which improves campaign performance and ROI, while users see more relevant ads, which improves their online experience and engagement.

2. Related Work and Contribution

2.1. Related Work

- Detection of weather images. Weather information transmitted from a location at regular intervals affects, directly or indirectly, the living conditions of the people who live there. Toğaçar et al. (2020) examine whether weather can be predicted from weather images with current computing technology [14]. They demonstrate that SNNs used in conjunction with deep learning models can achieve successful results;

- Recognition of emotional states. Luo et al. (2020) propose a method for recognizing emotional states that combines spiking neural networks (SNNs) with electroencephalography (EEG) [15]. Two datasets are used to validate the proposed method. The results show that combining the variance data processing technique with SNNs classifies emotional states more accurately. Overall, this work outperforms the benchmark approaches and demonstrates the advantages of SNNs for emotional state classification;

- Event-based optical flow estimation. Kosta and Roy (2023) focus on improving optical flow estimation using SNNs with learnable neuronal dynamics [16]. Experiments on the Multi-Vehicle Stereo Event-Camera dataset and the DSEC-Flow dataset show a reduction in average endpoint error (AEE) compared to state-of-the-art ANNs.

- Combining convolutional neural networks (CNNs) and Factorization Machines (FMs). She and Wang (2018) developed a model that extracts high-impact features with a CNN and classifies them with an FM, which can learn the relationships between mutually distinct feature components [17]. The experimental results show that the CNN–FM hybrid model improves the accuracy of advertising CTR prediction compared to the single-structure models;

- Optimized Deep Belief Nets (DBNs). Chen et al. (2019) focus on increasing the training efficiency of a prediction model using DBNs by proposing a network optimization fusion model based on a stochastic gradient descent algorithm and an improved particle swarm optimization algorithm. The experiment results show that the fusion method improved the training efficiency of deep belief nets, resulting in a better CTR accuracy compared to the traditional models [18];

- Enhanced Parallel Deep CTR model. Current deep CTR models, such as Deep and Cross Networks (DCN), suffer from insufficient information sharing, which limits their effectiveness. Chen et al. (2021) propose an Enhanced Deep & Cross Network (EDCN) with lightweight, model-agnostic bridge and regulation modules, to enhance CTR prediction accuracy [19]. Extensive testing and real-world deployment on Huawei’s online advertising platform show that EDCN outperforms the baseline models;

- Deep Field-Embedded Factorization Machines (Deep FEFMs). Pande (2021) showed that a Deep FEFM-based model has much lower complexity than other Field Factorization Machine-based models [20]. Deep FEFM combines the interaction vectors learned by the FEFM component with a deep neural network and is therefore able to learn higher-order interactions. In comprehensive experiments on two publicly available real-world datasets, Deep FEFM outperformed the existing state-of-the-art shallow and deep models;

- Ensembling multiple models to improve results. Viktoratos and Tsadiras (2022) proposed a novel approach to designing personalized advertisement systems by leveraging device and application-related variables from cold-start users [21]. The system trains and tests state-of-the-art machine learning models on existing datasets and uses a deep learning ensembler to predict CTR.

2.2. Contribution

- This research proposes a novel approach, which is the first to investigate the use of SNNs in the CTR prediction problem;

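- The SNN-based models developed and tested here are competitive with current state-of-the-art CTR prediction approaches while being more energy efficient: in the reported results, TRA–SNN comes within 0.2% of the AUC of FinalMLP and requires on average about 24% fewer MACs than FinalMLP and about 75% fewer than DCN-V2, which could have a significant impact on the online advertising industry;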

- The introduced SNN-based models demonstrate a versatile approach that can be applied to various domains beyond CTR prediction, such as financial forecasting, healthcare diagnostics, autonomous systems, and natural language processing. This work contributes to neural network research by providing a framework for implementing SNNs in real-world applications with temporal dynamics and event-driven data processing, guiding researchers and practitioners in leveraging SNNs’ unique characteristics like energy efficiency and temporal information processing across diverse problem domains;

- Potential economic and social impact for businesses and users. By improving the accuracy of ad targeting, businesses can increase revenue through higher click-through rates and conversions, while also providing a more personalized and relevant ad experience for users. This not only leads to economic benefits through improved resource allocation and increased sales but also enhances user satisfaction by reducing irrelevant ad exposure, ultimately fostering a more efficient and user-friendly digital ecosystem.

3. Materials and Methods

- Phase 1—Well-known advertising datasets were selected from publicly available sources and preprocessed to ensure quality and consistency;

- Phase 2—A baseline machine learning algorithm, a baseline artificial neural network (ANN), and a spiking neural network (SNN) were developed, along with more advanced state-of-the-art models, all tailored to click-through rate (CTR) classification;

- Phase 3—The models were trained and tested on the datasets. All models were fine-tuned by exploring various neuron classes, learning rules, and network topologies to optimize CTR prediction performance. Testing and validation used standard evaluation metrics, including AUC-ROC and accuracy, and the models were refined iteratively based on the experimental results. Finally, the results were analyzed to evaluate the effectiveness of SNN-based approaches for CTR prediction, highlighting their strengths and areas for future improvement in computational advertising.
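As a minimal sketch of the evaluation metrics named in Phase 3, the following pure-Python functions compute accuracy, log loss, and AUC-ROC from labels and predicted click probabilities. The function names, the 0.5 decision threshold, and the toy data are illustrative assumptions rather than the authors' evaluation code, and the pairwise AUC shown here is quadratic in the number of samples, so it only suits small examples.

```python
import math


def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum(int(p >= threshold) == y for y, p in zip(y_true, y_prob))
    return hits / len(y_true)


def log_loss(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


def auc_roc(y_true, y_prob):
    """Probability that a random click is scored above a random non-click.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 1 = click, 0 = no click.
y_true = [1, 0, 1, 0, 0]
y_prob = [0.9, 0.2, 0.4, 0.6, 0.1]

print(accuracy(y_true, y_prob))
print(log_loss(y_true, y_prob))
print(auc_roc(y_true, y_prob))
```

AUC-ROC depends only on how the scores rank clicks against non-clicks, whereas accuracy depends on the chosen threshold, which is one reason both are usually reported together for imbalanced click data.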

3.1. Phase 1—Dataset Selection and Preprocessing

3.2. Phase 2—Models

3.2.1. Logistic Regression

3.2.2. Deep Baseline Model

3.2.3. RSynaptic Model

- I_syn—Synaptic current;

- I_in—Input current;

- U—Membrane potential;

- U_thr—Membrane threshold;

- S_out—Output spike;

- R—Reset mechanism;

- α—Synaptic current decay rate;

- β—Membrane potential decay rate;

- V—Explicit recurrent weight.
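The symbols above can be tied together in a short simulation. This is a sketch only, assuming the discrete-time form commonly used for a recurrent synaptic neuron, I_syn[t+1] = α·I_syn[t] + V·S_out[t] + I_in[t+1] and U[t+1] = β·U[t] + I_syn[t+1] − R·U_thr, with a subtractive reset and a scalar recurrent weight. The function names and parameter values are hypothetical and are not taken from the paper.

```python
def rsynaptic_step(i_in, i_syn, u, s_prev, alpha, beta, v, u_thr=1.0):
    """One update of a single recurrent synaptic neuron (assumed form)."""
    r = s_prev  # reset R fires when the neuron spiked at the previous step
    i_syn = alpha * i_syn + v * s_prev + i_in   # synaptic current with feedback
    u = beta * u + i_syn - r * u_thr            # membrane potential, subtract reset
    s_out = 1.0 if u > u_thr else 0.0           # output spike
    return s_out, i_syn, u


def simulate(inputs, alpha=0.8, beta=0.9, v=0.1):
    """Run the neuron over a sequence of input currents and collect spikes."""
    i_syn, u, s = 0.0, 0.0, 0.0
    spikes = []
    for i_in in inputs:
        s, i_syn, u = rsynaptic_step(i_in, i_syn, u, s, alpha, beta, v)
        spikes.append(s)
    return spikes


# Constant input current of 0.3 for five time steps (illustrative values).
spikes = simulate([0.3] * 5)
print(spikes)
```

Because the synaptic current accumulates before it drives the membrane, the neuron needs a few steps of input before its first spike, which is the second-order behaviour that distinguishes this model from the simpler leaky neuron.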

3.2.4. Leaky Model

- I_in—Input current;

- U—Membrane potential;

- U_thr—Membrane threshold;

- R—Reset mechanism;

- β—Membrane potential decay rate.
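A minimal sketch of how these quantities interact, assuming the usual discrete-time leaky integrate-and-fire update U[t+1] = β·U[t] + I_in[t+1] − R·U_thr with a subtractive reset. The function name and the values of β and the input current are illustrative assumptions.

```python
def leaky_step(i_in, u, beta, u_thr=1.0):
    """One update of a leaky integrate-and-fire neuron (assumed form)."""
    r = 1.0 if u > u_thr else 0.0        # reset if the previous potential crossed threshold
    u = beta * u + i_in - r * u_thr      # decay, integrate input, subtract on reset
    s_out = 1.0 if u > u_thr else 0.0    # spike when the new potential exceeds threshold
    return s_out, u


u = 0.0
leaky_spikes = []
for _ in range(6):
    s, u = leaky_step(0.4, u, beta=0.9)
    leaky_spikes.append(s)

print(leaky_spikes)
```

With a constant input the potential climbs toward the threshold, fires, and is pulled back by the reset, so the spike rate reflects the input strength.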

3.2.5. RLeaky Model

- I_in—Input current;

- U—Membrane potential;

- U_thr—Membrane threshold;

- S_out—Output spike;

- R—Reset mechanism;

- β—Membrane potential decay rate;

- V—Explicit recurrent weight.
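The effect of the recurrent weight V can be sketched by extending the leaky update with a feedback term, assuming the form U[t+1] = β·U[t] + I_in[t+1] + V·S_out[t] − R·U_thr. A scalar V and the specific parameter values are assumptions made for illustration. With V = 0 the update reduces to the plain leaky neuron.

```python
def rleaky_step(i_in, u, s_prev, beta, v, u_thr=1.0):
    """One update of a recurrent leaky neuron (assumed form, scalar V)."""
    # The previous output spike feeds back through V and also triggers the reset.
    u = beta * u + i_in + v * s_prev - s_prev * u_thr
    s_out = 1.0 if u > u_thr else 0.0
    return s_out, u


def run_rleaky(inputs, beta, v):
    """Simulate a single neuron and return its spike train."""
    u, s, spikes = 0.0, 0.0, []
    for i_in in inputs:
        s, u = rleaky_step(i_in, u, s, beta, v)
        spikes.append(s)
    return spikes


inputs = [0.4] * 8
no_feedback = run_rleaky(inputs, beta=0.9, v=0.0)   # behaves like the leaky neuron
excitatory = run_rleaky(inputs, beta=0.9, v=0.5)    # own spikes add extra drive

print(no_feedback)
print(excitatory)
```

A positive V makes each spike raise the next membrane potential, so the same input yields more spikes. In a trained network V is learned, which lets the layer use its own recent activity as context.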

3.2.6. Deep & Cross Network v2 Model (DCN v2)

- Cross-Layer—The cross-layer network represents the core innovation of DCN models. DCN-v2 significantly improves upon its predecessor by replacing the single weight vector of DCN-v1 with a weight matrix W, substantially increasing the model’s expressiveness. This enhancement enables more sophisticated feature interaction modeling while maintaining the network’s ability to learn explicit high-order feature crosses efficiently;

- Deep Layers—A standard fully connected network with multiple hidden layers and ReLU activation, applied to the same input embeddings as the cross-layer network. It learns implicit high-order feature interactions;

- Integration Layer—The final layer combines representations from both the cross and deep networks. For binary classification tasks like CTR prediction, this typically involves a single output neuron with sigmoid activation that produces the final prediction probability by leveraging the complementary strengths of both network components.
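The cross layer described above can be sketched with the published DCN-v2 update x_{l+1} = x_0 ⊙ (W·x_l + b) + x_l, where ⊙ is the element-wise product. The pure-Python helper and the small weight values below are illustrative assumptions, not the configuration used in this work.

```python
def cross_layer(x0, xl, w, b):
    """DCN-v2 cross layer: x0 * (W @ xl + b) + xl, with * element-wise."""
    d = len(x0)
    proj = [sum(w[i][j] * xl[j] for j in range(d)) + b[i] for i in range(d)]
    return [x0[i] * proj[i] + xl[i] for i in range(d)]


x0 = [1.0, 2.0]                   # input embedding (toy values)
w = [[0.5, 0.0], [0.0, 0.5]]      # full weight matrix W (a vector in DCN-v1)
b = [0.0, 1.0]

x1 = cross_layer(x0, x0, w, b)    # first cross layer: second-order terms in x0
x2 = cross_layer(x0, x1, w, b)    # stacking raises the interaction order by one

print(x1)
print(x2)
```

Each layer multiplies by x0 again, so the interaction order grows with depth, while the residual term keeps the lower-order crosses available to later layers.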

3.2.7. Final MLP Model

- A. Feature Selection Module—Dynamically selects relevant features for each MLP branch using attention-like gating. It contains two independent gates, one for each MLP branch, and can use specific features as context for gating decisions. Sigmoid gates multiply the feature embeddings element-wise.

- B. Dual MLP Branches—Both branches follow the same pattern but can have different configurations:

  - MLP1: Focuses on high-capacity feature learning (more parameters);

  - MLP2: Focuses on refined, compact representations (fewer parameters);

  - Different Dropouts: Varying regularization strengths;

- C. Interaction Aggregation Module—The most sophisticated component, a multihead bilinear interaction module. Its formulation uses the following symbols:

  - x, y: Outputs from MLP1 and MLP2;

  - W_x, W_y: Linear projection weights;

  - W_h: Bilinear interaction matrix for head h;

  - H: Number of heads (default: 2);

- D. Output Layer—Converts logits to probabilities for CTR prediction. The output range [0, 1] represents the click probability.
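A sketch of how the aggregation and output steps could fit together, using the symbols listed above. It assumes the two-stream fusion form logit = b + W_x·x + W_y·y + Σ_h x_hᵀ W_h y_h, where x and y are split into H equal chunks, followed by a sigmoid. This form is an assumption based on the listed symbols, and the helper names and toy values are hypothetical.

```python
import math


def split_heads(vec, num_heads):
    """Split a vector into num_heads contiguous chunks of equal size."""
    size = len(vec) // num_heads
    return [vec[h * size:(h + 1) * size] for h in range(num_heads)]


def bilinear(xh, wh, yh):
    """Bilinear form xh^T W_h yh for one head."""
    return sum(xh[i] * wh[i][j] * yh[j]
               for i in range(len(xh)) for j in range(len(yh)))


def aggregate(x, y, w_x, w_y, w_heads, bias=0.0):
    """Combine the two branch outputs into a click probability."""
    linear = sum(a * b for a, b in zip(w_x, x)) + sum(a * b for a, b in zip(w_y, y))
    num_heads = len(w_heads)
    interaction = sum(
        bilinear(xh, wh, yh)
        for xh, wh, yh in zip(split_heads(x, num_heads), w_heads,
                              split_heads(y, num_heads))
    )
    logit = bias + linear + interaction
    return 1.0 / (1.0 + math.exp(-logit))    # output layer: sigmoid to [0, 1]


x = [1.0, 2.0, 0.5, -1.0]                    # output of MLP1 (toy values)
y = [0.5, 0.0, 1.0, 2.0]                     # output of MLP2 (toy values)
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0] * 4

p = aggregate(x, y, zeros, zeros, [identity, identity])   # H = 2 heads
print(p)
```

Splitting into heads replaces one large bilinear matrix with H smaller ones, which reduces the parameter count of the interaction term by roughly a factor of H.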

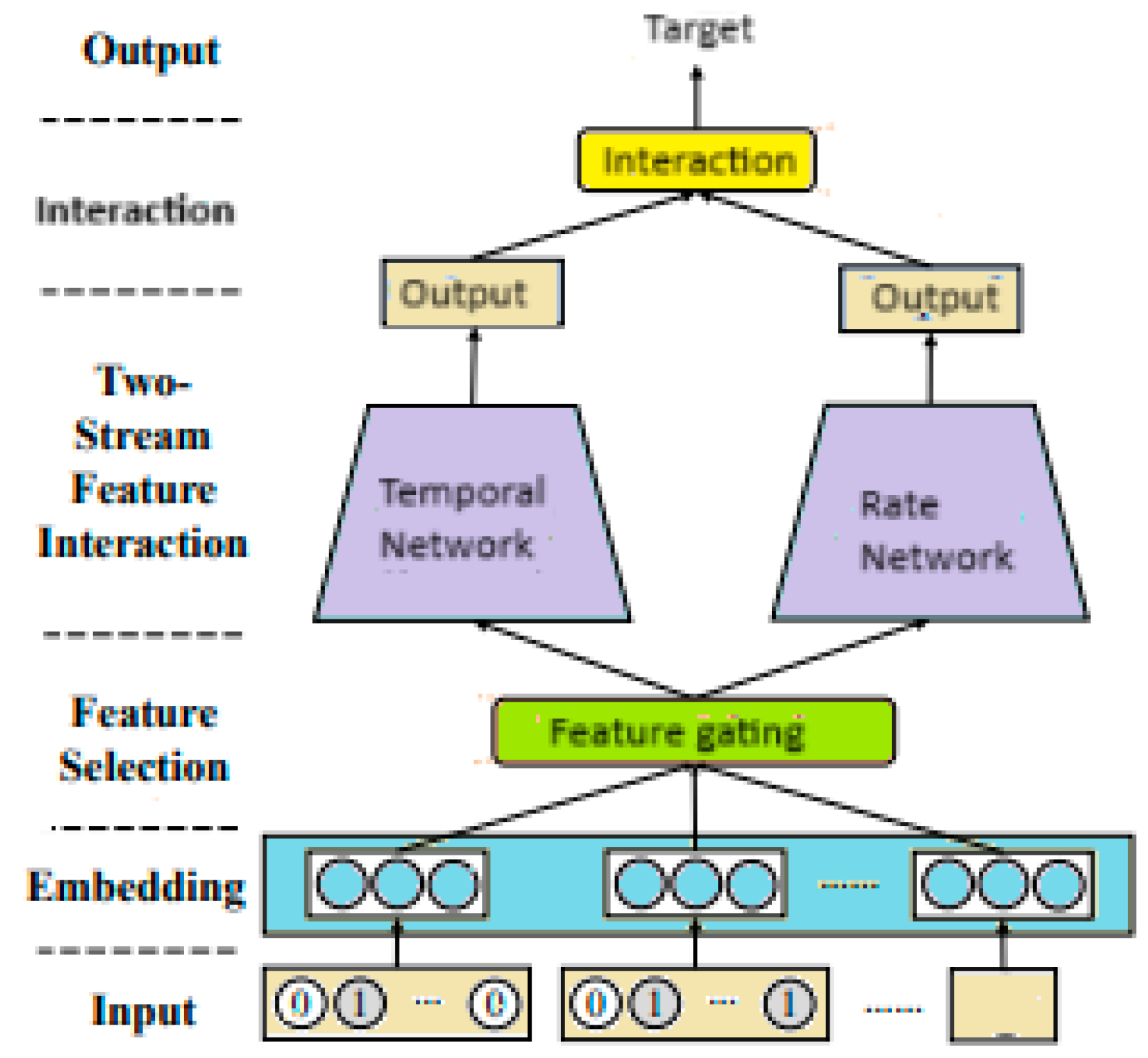

3.2.8. Temporal Rate Spike with Attention NN Model (TRA–SNN)

- A. Feature Selection Module—Similar to FinalMLP, attention-based feature selection identifies important feature interactions. Multi-head self-attention with 2 attention heads captures inter-field dependencies and contextual relationships. Gating with a sigmoid is then used to create two feature representations from the flattened embeddings.

- B. Temporal and Rate SNN Branches

- C. Interaction Aggregation Module

  - b is the batch size and nL the feature dimension.
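The sigmoid gating step of the Feature Selection Module, which produces two representations from one flattened embedding, can be sketched as follows. The sketch assumes each gate has the form σ(W·e + b) ⊙ e. The attention stage is omitted, and the function names and all weights are hypothetical placeholders.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(embedding, weights, bias):
    """Element-wise sigmoid gate: sigmoid(W e + b) * e."""
    d = len(embedding)
    g = [sigmoid(sum(weights[i][j] * embedding[j] for j in range(d)) + bias[i])
         for i in range(d)]
    return [g[i] * embedding[i] for i in range(d)]


e = [2.0, -4.0]                              # flattened embedding (toy values)
w_zero = [[0.0, 0.0], [0.0, 0.0]]

# Two independent gates yield two views of the same embedding,
# one for each downstream branch.
branch_a_input = gate(e, w_zero, [0.0, 0.0])     # every gate equals 0.5
branch_b_input = gate(e, w_zero, [20.0, 20.0])   # gates near 1, almost pass-through

print(branch_a_input)
print(branch_b_input)
```

Since the gates are learned separately, each branch can emphasise a different subset of features even though both start from the same embedding.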

3.3. Phase 3—Training and Testing Models

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, J.; Kong, X.; Xia, F.; Bai, X.; Wang, L.; Qing, Q.; Lee, I. Artificial Intelligence in the 21st Century. IEEE Access 2018, 6, 34403–34421.

- Neural Networks and Deep Learning. Available online: https://www.ijareeie.com/upload/2025/february/27_Neural.pdf (accessed on 10 October 2023).

- de Medeiros, M.M.; Hoppen, N.; Maçada, A.C.G. Data science for business: Benefits, challenges and opportunities. Bottom Line 2020, 33, 149–163.

- Huang, P.; Gong, B.; Chen, K.; Wang, C. Energy-Efficient Neural Network Acceleration Using Most Significant Bit-Guided Approximate Multiplier. Electronics 2024, 13, 3034.

- Stanojevic, A.; Woźniak, S.; Bellec, G.; Cherubini, G.; Pantazi, A.; Gerstner, W. High-performance deep spiking neural networks with 0.3 spikes per neuron. Nat. Commun. 2024, 15, 6793.

- Sakalauskas, V.; Kriksciuniene, D. Personalized Advertising in E-Commerce: Using Clickstream Data to Target High-Value Customers. Algorithms 2024, 17, 27.

- Deng, S.; Tan, C.-W.; Wang, W.; Pan, Y. Smart Generation System of Personalized Advertising Copy and Its Application to Advertising Practice and Research. J. Advert. 2019, 48, 356–365.

- Research and Market Ltd. Online Advertising Market—Growth, Trends, COVID-19 Impact, and Forecasts (2021–2026). 2021. Available online: https://www.researchandmarkets.com/reports/4602258/online-advertising-market-growth-trends#product--toc (accessed on 10 October 2023).

- Yang, Y.; Zhai, P. Click-through rate prediction in online advertising: A literature review. Inf. Process. Manag. 2022, 59, 102853.

- Viktoratos, I.; Tsadiras, A. Personalized Advertising Computational Techniques: A Systematic Literature Review, Findings, and a Design Framework. Information 2021, 12, 480.

- Islam, R.; Majurski, P.; Kwon, J.; Sharma, A.; Tummala, S.R.S.K. Benchmarking Artificial Neural Network Architectures for High-Performance Spiking Neural Networks. Sensors 2024, 24, 1329.

- Varshika, M.L.; Corradi, F.; Das, A. Nonvolatile Memories in Spiking Neural Network Architectures: Current and Emerging Trends. Electronics 2022, 11, 1610.

- Ponulak, F.; Kasinski, A. Introduction to spiking neural networks: Information processing, learning and applications. Acta Neurobiol. Exp. 2011, 71, 409–433.

- Toğaçar, M.; Ergen, B.; Cömert, Z. Detection of weather images by using spiking neural networks of deep learning models. Neural Comput. Appl. 2020, 33, 6147–6159.

- Luo, Y.; Fu, Q.; Xie, J.; Qin, Y.; Wu, G.; Liu, J.; Jiang, F.; Cao, Y.; Ding, X. EEG-Based Emotion Classification Using Spiking Neural Networks. IEEE Access 2020, 8, 46007–46016.

- Kosta, A.K.; Roy, K. Adaptive-SpikeNet: Event-based Optical Flow Estimation using Spiking Neural Networks with Learnable Neuronal Dynamics. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

- She, X.; Wang, S. Research on Advertising Click-Through Rate Prediction Based on CNN-FM Hybrid Model. In Proceedings of the 2018 10th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 25–26 August 2018; pp. 56–59.

- Chen, J.; Zhang, Q.; Wang, S.; Shi, J.; Zhao, Z. Click-through Rate Prediction Based on Deep Belief Nets and Its Optimization. J. Softw. 2019, 30, 3665–3682.

- Chen, B.; Wang, Y.; Liu, Z.; Tang, R.; Guo, W.; Zheng, H.; Yao, W.; Zhang, M.; He, X. Enhancing Explicit and Implicit Feature Interactions via Information Sharing for Parallel Deep CTR Models. In Proceedings of the CIKM ’21: 30th ACM International Conference on Information & Knowledge Management, New York, NY, USA, 1–5 November 2021.

- Pande, H. Field-Embedded Factorization Machines for Click-through rate prediction. arXiv 2021.

- Viktoratos, I.; Tsadiras, A. A Machine Learning Approach for Solving the Frozen User Cold-Start Problem in Personalized Mobile Advertising Systems. Algorithms 2022, 15, 72.

- Mao, K.; Zhu, J.; Su, L.; Cai, G.; Li, Y.; Dong, Z. FinalMLP: An Enhanced Two-Stream MLP Model for CTR Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Washington, DC, USA, 7–14 February 2023.

- Zhang, W.; Mi, X. A study on the efficiency and accuracy of neural network model to optimize personalized recommendation of teaching content. Appl. Math. Nonlinear Sci. 2025, 10, 1–23.

- Yang, L.; Shami, A. On hyperparameter optimization of machine learning algorithms: Theory and practice. Neurocomputing 2020, 415, 295–316.

- Weerts, H.J.P.; Mueller, A.C.; Vanschoren, J. Importance of Tuning Hyperparameters of Machine Learning Algorithms. arXiv 2020.

- Zhu, H.; Liu, S.; Xu, W.; Dai, J. Linearithmic and unbiased implementation of DeLong’s algorithm for comparing the areas under correlated ROC curves. Expert Syst. Appl. 2024, 246, 123194.

- Muir, D.R.; Sheik, S. The road to commercial success for neuromorphic technologies. Nat. Commun. 2025, 16, 3586.

| Parameter | Digix | Avazu |
|---|---|---|
| Branch Architecture | 256–128–64 | 150–150–150 |
| Gradient Approximation Strategy | Sigmoid surrogate | Sigmoid surrogate |
| Learning Rate | 0.0001 | 0.0001 |
| Reset Mechanism (SNNs) | None | Subtract |
| Number of Time Steps (SNNs) | 4 | 15 |
| Optimizer | Adam | Adam |
| Batch Size | 2086 | 2086 |
| Embedding Size | 40 | 32 |
| Activation Functions | ReLU in hidden layers, sigmoid in output | ReLU in hidden layers, sigmoid in output |
| Loss Function | BCE | MSE |
| Epochs | 10 | 10 |
| Dropout | 0.1 | 0.1 |
| Weight Initialization | Xavier | Xavier |
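The "Sigmoid surrogate" entry refers to a common way of training SNNs with backpropagation: the forward pass keeps the non-differentiable spike threshold, while the backward pass substitutes the derivative of a steep sigmoid. A minimal sketch follows. The slope value of 25 and the function names are assumptions, since the table does not state them.

```python
import math


def spike_forward(u, u_thr=1.0):
    """Forward pass: Heaviside step on the membrane potential."""
    return 1.0 if u > u_thr else 0.0


def spike_surrogate_grad(u, u_thr=1.0, slope=25.0):
    """Backward pass: derivative of sigmoid(slope * (u - u_thr)) w.r.t. u."""
    s = 1.0 / (1.0 + math.exp(-slope * (u - u_thr)))
    return slope * s * (1.0 - s)


for u in (0.5, 0.9, 1.0, 1.1, 1.5):
    print(u, spike_forward(u), spike_surrogate_grad(u))
```

The surrogate gradient peaks at the threshold, where it equals slope / 4, and vanishes far from it, so weight updates concentrate on neurons that were close to firing.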

| Model Name | AUC ROC | Accuracy | Log Loss | PI (%) |
|---|---|---|---|---|
| Logistic Regression | 0.6550 | 0.785 | 0.4743 | 15.0 |
| Leaky | 0.7307 | 0.792 | 0.4502 | 3.16 |
| RLeaky | 0.7411 | 0.796 | 0.4418 | 1.71 |
| RSynaptic | 0.7487 | 0.798 | 0.4397 | 0.68 |
| Deep Baseline | 0.7502 | 0.801 | 0.4356 | 0.48 |
| DCN-V2 | 0.7531 | 0.808 | 0.4334 | 0.09 |
| TRA–SNN | 0.7538 | 0.809 | 0.4331 | - |
| FinalMLP | 0.7552 | 0.810 | 0.4319 | −0.19 |
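The PI (%) column appears consistent with the relative AUC improvement of TRA–SNN over each model, that is 100 × (AUC_TRA–SNN − AUC_model) / AUC_model. This reading is inferred from the numbers in the table, not from a stated definition. The short check below recomputes the column under that assumption.

```python
def pct_improvement(reference_auc, model_auc):
    """Relative AUC improvement of the reference model over another model, in %."""
    return 100.0 * (reference_auc - model_auc) / model_auc


# AUC ROC values copied from the table above.
auc = {
    "Logistic Regression": 0.6550,
    "Leaky": 0.7307,
    "RLeaky": 0.7411,
    "RSynaptic": 0.7487,
    "Deep Baseline": 0.7502,
    "DCN-V2": 0.7531,
    "FinalMLP": 0.7552,
}
tra_snn_auc = 0.7538

pi = {name: round(pct_improvement(tra_snn_auc, value), 2)
      for name, value in auc.items()}

for name, value in pi.items():
    print(f"{name}: {value}")
```

Under this reading every row matches the table to two decimals except logistic regression, which comes out near 15.08 against the listed 15.0. A negative value, as for FinalMLP, means that model has the higher AUC.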

| Model Name | AUC ROC | Accuracy | Log Loss | PI (%) |
|---|---|---|---|---|
| Logistic Regression | 0.6178 | 0.8000 | 0.4926 | 15.18 |
| Leaky | 0.6906 | 0.8038 | 0.4694 | 3.04 |
| RLeaky | 0.6944 | 0.8045 | 0.4613 | 2.48 |
| RSynaptic | 0.7073 | 0.8061 | 0.4585 | 0.61 |
| Deep Baseline | 0.7098 | 0.8075 | 0.4547 | 0.25 |
| DCN-V2 | 0.7111 | 0.8093 | 0.4523 | 0.07 |
| TRA–SNN | 0.7116 | 0.8096 | 0.4521 | - |
| FinalMLP | 0.7119 | 0.8097 | 0.4512 | −0.04 |

| Model Name | MACs (Avazu) | MACs (Digix) | Average EEI (%) |
|---|---|---|---|
| DCN-V2 | 1.2417 | 1.5826 | 75 |
| FinalMLP | 0.4180 | 0.5099 | 24 |
| TRA–SNN | 0.3142 | 0.3924 | - |
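The Average EEI (%) column appears consistent with the mean, over the two datasets, of the relative MAC reduction achieved by TRA–SNN, that is 100 × (MACs_model − MACs_TRA–SNN) / MACs_model. This interpretation is inferred from the table values and is not a stated definition. The sketch below recomputes it.

```python
def reduction_pct(baseline_macs, reference_macs):
    """Relative reduction in MACs of the reference model versus a baseline, in %."""
    return 100.0 * (baseline_macs - reference_macs) / baseline_macs


# (Avazu, Digix) MAC counts copied from the table above; units as reported there.
macs = {
    "DCN-V2": (1.2417, 1.5826),
    "FinalMLP": (0.4180, 0.5099),
}
tra_snn_macs = (0.3142, 0.3924)

eei = {
    name: sum(reduction_pct(m, t) for m, t in zip(values, tra_snn_macs)) / len(values)
    for name, values in macs.items()
}

for name, value in eei.items():
    print(f"{name}: {value:.2f}")
```

Rounded to whole percentages, the results match the 75 and 24 reported in the table.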


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Uruqi, A.; Viktoratos, I. Exploiting Spiking Neural Networks for Click-Through Rate Prediction in Personalized Online Advertising Systems. Forecasting 2025, 7, 38. https://doi.org/10.3390/forecast7030038
