Expansions for the Conditional Density and Distribution of a Standard Estimate

Abstract

1. Introduction and Summary

2. Multivariate Edgeworth Expansions

3. The Conditional Density and Distribution

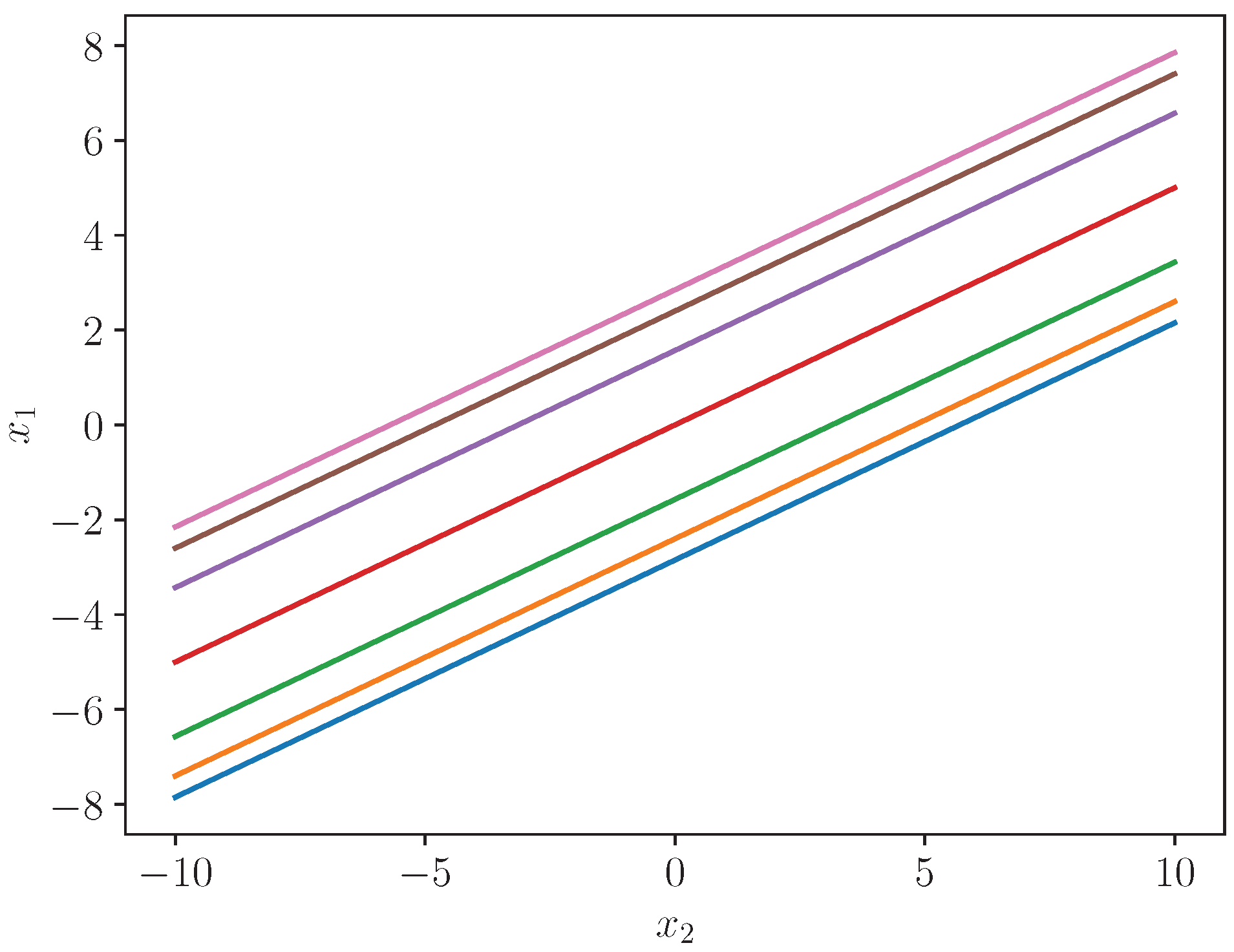

4. The Case

5. Conclusions

6. Discussion

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Conditional Moments

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Withers, C.S. Expansions for the Conditional Density and Distribution of a Standard Estimate. Stats 2025, 8, 98. https://doi.org/10.3390/stats8040098
