Abstract

The current replication crisis relating to the non-replicability and the untrustworthiness of published empirical evidence is often viewed through the lens of the Positive Predictive Value (PPV) in the context of the Medical Diagnostic Screening (MDS) model. The PPV is misconstrued as a measure that evaluates ‘the probability of rejecting $H_0$ when false’, after being metamorphosed by replacing its false positive/negative probabilities with the type I/II error probabilities. This perspective gave rise to a widely accepted diagnosis that the untrustworthiness of published empirical evidence stems primarily from abuses of frequentist testing, including p-hacking, data-dredging, and cherry-picking. It is argued that the metamorphosed PPV misrepresents frequentist testing and misdiagnoses the replication crisis, promoting ill-chosen reforms. The primary source of untrustworthiness is statistical misspecification: invalid probabilistic assumptions imposed on one’s data. This is symptomatic of the much broader problem of the uninformed and recipe-like implementation of frequentist statistics without proper understanding of (a) the invoked probabilistic assumptions and their validity for the data used, (b) the reasoned implementation and interpretation of the inference procedures and their error probabilities, and (c) warranted evidential interpretations of inference results. A case is made that Fisher’s model-based statistics offers a more pertinent and incisive diagnosis of the replication crisis, and provides a well-grounded framework for addressing the issues (a)–(c), which would unriddle the non-replicability/untrustworthiness problems.

1. Introduction

All approaches to statistical inference revolve around three common elements:

- (A) Substantive subject matter information (however vague, specific, or formal) which specifies the questions of interest. This often comes in the form of an aPriori Postulated (aPP) model, say $\mathcal{M}_{\varphi}(\mathbf{z})$, based on an equation with an error term (usually white-noise) attached. The quintessential example of an aPP model is the linear model whose error term is assumed to be NIID, where ‘NIID’ stands for ‘Normal (N), Independent (I) and Identically Distributed (ID)’.

- (B) Appropriate data $\mathbf{x}_0$ that could shed light on the pertinence of the substantive information framed by $\mathcal{M}_{\varphi}(\mathbf{z})$.

- (C) A set of probabilistic assumptions imposed (directly or indirectly via unobservable error terms) on data $\mathbf{x}_0$, comprising the invoked statistical model, say $\mathcal{M}_{\theta}(\mathbf{x})$, whose validity underwrites the reliability of inference and the trustworthiness of the ensuing evidence.

For the discussion that follows, it is important to define the key concepts incisively. An empirical study is said to be replicable if its statistical results: (i) can be independently confirmed with very similar or consistent results by other researchers, (ii) using the same or akin data, and (iii) studying the same phenomenon of interest. Statistical evidence is said to be untrustworthy when: (a) any of the probabilistic assumptions comprising $\mathcal{M}_{\theta}(\mathbf{x})$ is invalid for data $\mathbf{x}_0$, which (b) undermines the optimality and reliability of the ensuing inference procedures, and/or (c) the inference results are misinterpreted by eliciting unwarranted evidence relating to the parameters $\theta$.

In a highly influential paper, Ioannidis [1] made a case that “most published research findings are false” by proposing a widely accepted explanation based on:

- [S1] Viewing the untrustworthiness of evidence problem at a discipline-wide level as an instantiation of the Positive Predictive Value (PPV) measure of the Medical Diagnostic Screening (MDS) model, after replacing its false positive/negative probabilities with the type I/II error probabilities, and adding a prior distribution for the unknown parameter $\theta$.

- [S2] Inferring that the non-replicability stems primarily from abuses of frequentist testing that inflate the nominal into a higher actual type I error probability, yielding high rates of rejections of true null hypotheses, which lower the replication rates.

During the last two decades, the replication crisis literature has taken presumptions [S1]–[S2] at face value and focused primarily on amplifying how the non-replication of published empirical results provides prima facie evidence of their untrustworthiness, pointing the finger at several abuses of frequentist testing, including p-hacking, data-dredging, optional stopping, double dipping, and HARKing; see Baker [2], Höffler [3], Wasserstein et al. [4]. The apparent non-replicability has been affirmed in several disciplines (Simmons et al. [5]; Camerer et al. [6] inter alia). As a result of that, several leading statisticians and journal editors called for reforms (Benjamin et al. [7]; Shrout and Rodgers [8] inter alia), which include:

- [i] replacing p-values with Confidence Intervals (CIs) and effect sizes, and

- [ii] redefining statistical significance by reducing the conventional threshold of $\alpha = 0.05$ to a smaller value, say $\alpha = 0.005$; see Johnson et al. [9].

The primary objective of this paper is twofold. First, to argue that the untrustworthiness of published empirical evidence has been endemic in several scientific disciplines since the 1930s, and the leading contributor to the untrustworthiness has been statistical misspecification: invalid probabilistic assumptions imposed on the data in (C) above. An effective way to corroborate that is to replicate several influential papers in a particular research area with the same or akin data, and affirm or deny their trustworthiness or/and replicability by first testing the validity of their invoked probabilistic assumptions; see Andreou and Spanos [10], Do and Spanos [11]. In contrast, Ioannidis [1] makes no such attempt to corroborate his claims, but instead employs a metaphor, based on the PPV, that invokes stipulations [S1]–[S2] grounded on several questionable presuppositions.

Second, to put forward an alternative explanation about the main sources of the untrustworthiness of published empirical evidence and propose ways to ameliorate the problem. It is argued that the untrustworthiness stems primarily from a much broader problem relating to the uninformed and recipe-like implementation of frequentist statistics (a) without proper understanding of the invoked statistical model $\mathcal{M}_{\theta}(\mathbf{x})$ and its validity for data $\mathbf{x}_0$, (b) using incongruous implementations of frequentist inference procedures that misconstrue their error probabilities, as well as (c) invoking unwarranted evidential interpretations of their results, such as misinterpreting ‘accept $H_0$’ (no evidence against $H_0$) as ‘evidence for $H_0$’, or misinterpreting ‘reject $H_0$’ (evidence against $H_0$) as ‘evidence for a particular $H_1$’. As incisively argued by Stark and Saltelli [12], p. 41:

“…Practitioners go through the motions of fitting models, computing p-values or confidence intervals, or simulating posterior distributions. They invoke statistical terms and procedures as incantations, with scant understanding of the assumptions or relevance of the calculations, or even the meaning of the terminology. This demotes statistics from a way of thinking about evidence and avoiding self-deception to a formal “blessing” of claims”.

It is argued that Fisher’s [13] model-based frequentist inference framework provides effective ways to address both the non-replicability and the untrustworthiness problems by dealing with the issues (a)–(c) raised above. Special emphasis is placed on distinguishing between ‘inference results’, such as a point estimate, an observed Confidence Interval (CI), an effect size, and accept/reject $H_0$, which are too coarse and unduly ‘data-specific’, and their inductive generalizations framed as evidence for germane inferential claims relating to the ‘true’ $\theta$, say $\theta^*$. The latter are outputted by the post-data severity evaluation (SEV) of testing results. This distinction is crucial since inference results are not often replicable with ‘akin’ data, but the SEV inferential claims would be replicable when the invoked statistical model is statistically adequate (valid assumptions) for the particular data.

Section 2 provides a summary of Fisher’s [13] model-based frequentist statistics, with particular emphasis on the statistical adequacy of $\mathcal{M}_{\theta}(\mathbf{x})$, (implicitly) invoked by all model-based inferences (frequentist and Bayesian), as a prelude to the discussion. Section 3 revisits the MDS model (a bivariate simple Bernoulli) underlying the PPV to compare and contrast its false positive/negative rates with the Neyman-Pearson (N-P) type I/II error probabilities. Section 4 elaborates on the uninformed and recipe-like implementation of frequentist statistics by highlighting several unwarranted evidential interpretations of their results. Particular emphasis is placed on the role of establishing the statistical adequacy of $\mathcal{M}_{\theta}(\mathbf{x})$ to ensure that the actual error probabilities approximate closely the nominal ones, rendering them tractable. Section 5 discusses how the post-data severity (SEV) evaluation of the accept/reject results (Mayo and Spanos [14]) transmutes them into replicable evidence which could give rise to learning from data about the phenomena of interest.

2. Model-Based Frequentist Statistics

2.1. Fisher’s Model-Based Statistical Framing

This section provides a bird’s eye view of frequentist inference, in general, and N-P testing in particular, in an attempt to preempt needless confusion relating to what is traditionally known as the Null Hypothesis Significance Testing (NHST) practice; see Nickerson [15], Spanos [16].

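Fisher [13] founded model-based statistical induction that revolves around the concept of a prespecified parametric statistical model, whose generic form is:

$$\mathcal{M}_{\theta}(\mathbf{x}) = \{ f(\mathbf{x}; \theta),\ \theta \in \Theta \},\quad \mathbf{x} \in \mathbb{R}_X^n, \tag{1}$$

where $f(\mathbf{x}; \theta)$ denotes the distribution of the sample $\mathbf{X} := (X_1, \ldots, X_n)$, $\mathbb{R}_X^n$ the sample space, and $\Theta$ the parameter space. $\mathcal{M}_{\theta}(\mathbf{x})$ can be viewed as a particular parameterization ($\theta \in \Theta$) of the observable stochastic process underlying data $\mathbf{x}_0$. $\mathcal{M}_{\theta}(\mathbf{x})$ provides an ‘idealized’ description of a statistical mechanism that could have given rise to $\mathbf{x}_0$. The main objective of model-based frequentist inference is to give rise to learning from data $\mathbf{x}_0$ by narrowing down $\Theta$ to a small neighborhood around the ‘true’ value of $\theta$ in $\Theta$, say $\theta^*$, whatever value that happens to be; see Spanos [17] for further discussion.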

The statistical adequacy of $\mathcal{M}_{\theta}(\mathbf{x})$ for data $\mathbf{x}_0$ plays a crucial role in securing the reliability of inference and the trustworthiness of evidence because it ensures that the actual error probabilities approximate closely the nominal ones, enabling the ‘control’ of these unobservable probabilities. As a result, when $\mathcal{M}_{\theta}(\mathbf{x})$ is misspecified:

- (a) the distribution of the sample in (1) is erroneous,

- (b) rendering the likelihood function invalid,

- (c) distorting the sampling distribution of any relevant statistic (estimator, test, predictor).

In turn, (a)–(c) (i) give rise to ‘non-optimal’ inference procedures, and (ii) induce sizeable discrepancies between the actual and nominal error probabilities, arguably the most crucial contributor to the untrustworthiness of empirical evidence. Applying a 0.05 significance level test when the actual type I error probability is 0.97 (Spanos [18], Table 15.5) will give rise to untrustworthy evidence. Hence, the only way to keep track of the relevant error probabilities is to establish the statistical adequacy of $\mathcal{M}_{\theta}(\mathbf{x})$ to forefend the unreliability of inference stemming from (a)–(c), using thorough Mis-Specification (M-S) testing; see Spanos [19]. This will secure the optimality and reliability of the ensuing inferences, giving rise to trustworthy evidence.
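The gap between nominal and actual error probabilities can be illustrated with a small simulation. The sketch below is not taken from the paper: it assumes a two-sided t-type test of a zero mean, uses the Normal threshold as a large-sample stand-in for the Student's t one, and generates dependence through an AR(1) process with a hypothetical coefficient `rho`. All function names are illustrative.

```python
import random
from statistics import NormalDist


def ar1_sample(n, rho, rng):
    """Draw n observations from a zero-mean AR(1) process with unit marginal variance."""
    x = rng.gauss(0.0, 1.0)
    out = [x]
    innov_sd = (1.0 - rho ** 2) ** 0.5  # keeps Var(X_t) = 1 for every t
    for _ in range(n - 1):
        x = rho * x + rng.gauss(0.0, innov_sd)
        out.append(x)
    return out


def actual_type1_rate(n, rho, alpha=0.05, reps=2000, seed=12345):
    """Monte Carlo rejection frequency of a true null (mean = 0) at nominal level alpha."""
    rng = random.Random(seed)
    # Assumption: Normal threshold used as a large-n approximation to Student's t.
    crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    rejections = 0
    for _ in range(reps):
        x = ar1_sample(n, rho, rng)
        mean = sum(x) / n
        var = sum((xi - mean) ** 2 for xi in x) / (n - 1)
        t_stat = (n ** 0.5) * mean / (var ** 0.5)
        if abs(t_stat) > crit:
            rejections += 1
    return rejections / reps


rate_iid = actual_type1_rate(n=100, rho=0.0)  # Independence holds
rate_dep = actual_type1_rate(n=100, rho=0.8)  # Independence is invalid
print("nominal 0.05 | IID:", rate_iid, "| AR(1), rho=0.8:", rate_dep)
```

Under independence the rejection frequency stays close to the nominal level, whereas under the assumed dependence it is several times larger, even though the null is true in both cases.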

2.2. Frequentist Inference: Estimation

Example 1. Consider the simple Normal model:

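The sampling distributions of the optimal estimators of $\mu$ and $\sigma^2$, the sample mean $\bar{X}_n$ and the sample variance $s^2$, evaluated under factual reasoning ($\theta = \theta^*$) are:

and (iii) $\bar{X}_n$ and $s^2$ are independent, where $\chi^2(n-1)$ denotes the chi-square distribution with $(n-1)$ degrees of freedom (d.f.). Assumption (iii) implies: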

for any well-behaved (Borel) functions ; see Spanos [16]. The factual () reasoning ensures that and , yielding:

This gives rise to the sampling distribution of the pivot:

where $St(n-1)$ denotes the Student’s t distribution with $(n-1)$ d.f.

(4) underlies the Uniformly Most Accurate (UMA) CI for $\mu$:

where $c_{\alpha/2}$ denotes the distribution threshold of the relevant tail area with $\alpha/2$ probability. The optimal property of UMA denotes a CI with the shortest expected length.
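As a reading aid, the standard textbook forms behind Example 1 can be sketched as follows. The notation ($\bar{X}_n$, $s^2$, $\mu^*$, $\sigma_*^2$, $c_{\alpha/2}$) is assumed here for illustration and may differ in detail from the numbered equations (2)–(5).

```latex
% Simple Normal model (assumed notation)
X_t \sim \mathrm{NIID}(\mu, \sigma^2), \quad t = 1, 2, \ldots, n

% Estimators of \mu and \sigma^2
\bar{X}_n = \frac{1}{n}\sum_{t=1}^{n} X_t, \qquad
s^2 = \frac{1}{n-1}\sum_{t=1}^{n} (X_t - \bar{X}_n)^2

% Sampling distributions under factual reasoning (\mu = \mu^*, \sigma^2 = \sigma_*^2)
\bar{X}_n \sim N\!\left(\mu^*, \frac{\sigma_*^2}{n}\right), \qquad
\frac{(n-1)s^2}{\sigma_*^2} \sim \chi^2(n-1)

% Pivot: the unknown \sigma_* cancels because \bar{X}_n and s^2 are independent
\frac{\sqrt{n}\,(\bar{X}_n - \mu^*)}{s} \sim St(n-1)

% Two-sided (1-\alpha) Confidence Interval for \mu
P\!\left(\bar{X}_n - c_{\alpha/2}\frac{s}{\sqrt{n}} \le \mu \le
         \bar{X}_n + c_{\alpha/2}\frac{s}{\sqrt{n}};\ \mu = \mu^*\right) = 1 - \alpha
```

The coverage probability $1-\alpha$ attaches to the random interval before the data are observed, not to the observed interval computed from $\mathbf{x}_0$.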

2.3. Neyman-Pearson (N-P) Testing

Example 1 (continued). In the context of (2), testing the hypotheses:

gives rise to the Uniformly Most Powerful (UMP) $\alpha$-significance level N-P test:

(Lehmann and Romano [20]) where $C_1(\alpha)$ is the rejection region, and the threshold $c_{\alpha}$ is determined by the prespecified $\alpha$.

The distribution of the test statistic $\tau(\mathbf{X})$ evaluated using hypothetical reasoning (what if $\mu = \mu_0$):

ensures that underlying the evaluations of:

The sampling distribution of $\tau(\mathbf{X})$ evaluated under $H_1$ (what if $\mu = \mu_1$) is:

where $\delta_1$ is the noncentrality parameter, and (10) is used to evaluate the type II error probability and the power for a given $\mu_1$:

It should be emphasized that these error probabilities are assigned to a particular N-P test, e.g., $T_{\alpha}$, to calibrate its generic (for any $\mathbf{x} \in \mathbb{R}_X^n$) capacity to detect different discrepancies from $\mu = \mu_0$; see Neyman and Pearson [21].
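How the power calibrates a test's capacity to detect discrepancies can be sketched numerically. The example below is a simplification, not the paper's t-test: it assumes $\sigma$ is known, so that the Normal distribution replaces the noncentral Student's t, and the function name, the discrepancies, and the sample sizes are all hypothetical.

```python
from statistics import NormalDist


def power_one_sided(mu1, mu0=0.0, sigma=1.0, n=50, alpha=0.05):
    """Power of the one-sided test of mu = mu0 against mu > mu0, sigma assumed known.

    Under mu = mu1 the statistic sqrt(n) * (mean - mu0) / sigma is N(delta, 1),
    with delta = sqrt(n) * (mu1 - mu0) / sigma, so power = 1 - Phi(c_alpha - delta).
    """
    z = NormalDist()
    c_alpha = z.inv_cdf(1.0 - alpha)  # rejection threshold fixed by alpha
    delta = (n ** 0.5) * (mu1 - mu0) / sigma
    return 1.0 - z.cdf(c_alpha - delta)


for n in (25, 100, 400):
    row = [round(power_one_sided(mu1, n=n), 3) for mu1 in (0.0, 0.1, 0.2, 0.5)]
    print("n =", n, "power at mu1 = 0, 0.1, 0.2, 0.5:", row)
```

At $\mu_1 = \mu_0$ the power equals $\alpha$, and for any fixed discrepancy it increases with $n$. These probabilities describe the test procedure for every possible sample; they say nothing about a particular observed sample.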

The primary role of these error probabilities is to operationalize the notions of ‘statistically significant/insignificant’ in the form of ‘accept/reject $H_0$ results’. The optimality of N-P tests revolves around an in-built trade-off between the type I and II error probabilities, and an optimal N-P test is derived by prespecifying $\alpha$ at a low value and minimizing the type II error probability $\beta(\mu_1)$, or equivalently maximizing the power $1 - \beta(\mu_1)$. In summary, the error probabilities have several key attributes (Spanos [16]):

- [i] They are assigned to the test procedure to ‘calibrate’ its generic (for any $\mathbf{x} \in \mathbb{R}_X^n$) capacity to detect different discrepancies from $\mu = \mu_0$.

- [ii] They cannot be conditional on $\theta$, an unknown constant (not a random variable).

- [iii] There is a built-in trade-off between the type I and II error probabilities.

- [iv] They frame the accept/reject rules in terms of ‘statistical approximations’ based on the distribution of $\tau(\mathbf{X})$ evaluated using hypothetical reasoning.

- [v] They are unobservable since they revolve around $\theta^*$, the true value of $\theta$.

This was clearly explained in Fisher’s 1955 reply to a letter from John Tukey: “A level of significance is a probability derived from a hypothesis [hypothetical reasoning], not one asserted in the real world” (Bennett [22] p. 221).

2.4. An Inconsistent Hybrid Logic Burdened with Confusion?

In an insightful discussion, Gigerenzer [23] describes the traditional narrative of statistical testing created by textbook writers in psychology during the 1950s and 1960s as based on ‘a hybrid logic’: “Neither Fisher nor Neyman and Pearson would have accepted this hybrid as a theory of statistical inference. The hybrid logic is inconsistent from both perspectives and burdened with conceptual confusion” (p. 324).

It is argued that Fisher’s model-based statistical induction could provide a unifying ‘reasoning’ that elucidates the similarities and differences between Fisher’s inductive inference and Neyman’s inductive behavior; see Halpin and Stam [24].

Using the t-test in (7), the two perspectives have several common components:

(i) a prespecified statistical model $\mathcal{M}_{\theta}(\mathbf{x})$,

(ii) the framing of hypotheses in terms of $\theta \in \Theta$,

(iii) a test statistic $\tau(\mathbf{X})$,

(iv) a null hypothesis $H_0$,

(v) the sampling distribution of $\tau(\mathbf{X})$ evaluated under $H_0$, and

(vi) a probability threshold $\alpha$ (0.05, 0.01, etc.) to decide when $H_0$ is discordant/rejected.

The N-P perspective adds to the common components (i)–(vi):

(vii) re-interpreting $\alpha$ as a prespecified (pre-data) type I error probability,

(viii) an alternative hypothesis $H_1$ to supplement $H_0$,

(ix) the sampling distribution of $\tau(\mathbf{X})$ evaluated under $H_1$, and

(x) the type II error probability and the power of a test.

These added components frame an optimal theory of N-P testing based on constructing an optimal test statistic and framing a rejection region to maximize the power.

The key to fusing the two perspectives is the ‘hypothetical reasoning’ underlying the derivation of both sampling distributions in (v) and (ix). The crucial difference is that the type I and II (power) error probabilities are pre-data because they calibrate the test’s generic (for all $\mathbf{x} \in \mathbb{R}_X^n$) capacity to detect discrepancies from $H_0$, but the p-value is a post-data error probability since its evaluation is based on the observed $\tau(\mathbf{x}_0)$.

The traditional textbook narrative considers Fisher’s significance testing, based on $H_0$: $\mu = \mu_0$ and guided by the p-value, problematic since the absence of an explicit alternative $H_1$ renders the power and the p-value ambivalent, e.g., one-sided or two-sided? This, however, misconstrues Fisher’s significance testing since his p-value is invariably one-sided because, post-data, the sign of $\tau(\mathbf{x}_0)$ designates the relevant tail. In fact, the ambivalence originates in the N-P-laden definition of the p-value: ‘the probability of obtaining a result ‘equal to or more extreme’ than the one observed when $H_0$ is true’. The clause ‘equal to or more extreme’ is invariably (mis)interpreted in terms of $H_1$. Indeed, the p-value is related to $\alpha$ by interpreting $p(\mathbf{x}_0)$ as the smallest significance level for which a true $H_0$ would have been rejected; see Lehmann and Romano [20]. A more pertinent post-data definition of the p-value that averts this ambivalence is: ‘the probability of all sample realizations $\mathbf{x} \in \mathbb{R}_X^n$ that accord less well (in terms of $\tau(\mathbf{x})$) with $H_0$ than $\mathbf{x}_0$ does, when $H_0$ is true’; see Spanos [18].

Similarly, the power of a test comes into play with just the common components (i)–(vi) since the probability threshold $\alpha$ defines the distribution threshold $c_{\alpha}$ for the probability of detecting discrepancies of the form $\mu_1 = \mu_0 + \gamma$ using the non-central Student’s t in (10), originally derived by Fisher [25]. Indeed, Fisher [26], pp. 21–22, was the first to recognize the effect of increasing $n$ on the power (which he called sensitivity): “By increasing the size of the experiment, we can render it more sensitive, meaning by this that it will allow of the detection of … quantitatively smaller departures from the null hypothesis”, which is particularly useful in experimental design; see Box [27].

The above arguments suggest that when the underlying hypothetical reasoning and the pre-data vs. post-data error probabilities are delineated, there is no substantial conflict or conceptual confusion between the Fisher and N-P perspectives. What remains problematic, however, is that neither the p-value nor the accept/reject results provide cogent evidence because they are too coarse to designate a small neighborhood containing $\theta^*$ to engender any genuine learning from data via $\mathcal{M}_{\theta}(\mathbf{x})$. The post-data severity (SEV) evaluation offers such an evidential interpretation in the form of a discrepancy from $\mu = \mu_0$ warranted by the test $T_{\alpha}$ and data $\mathbf{x}_0$ with high enough probability; see Section 5.

3. The Medical Diagnostic Screening Perspective

3.1. Revisiting the MDS Statistical Model

The statistical analysis of MDS was pioneered by Yerushalmy [28] and Neyman [29]. The concept of the false-positive (negative) rate relates to the proportion of results of a medical diagnostic test indicating falsely that a medical condition exists (does not exist). ‘Sensitivity’ denotes the proportion of positives that are correctly identified, and ‘specificity’ is the proportion of negatives that are correctly identified.

Example 2. Viewing the MDS in the context of model-based inference, the invoked statistical model is a simple (bivariate) Bernoulli (Ber) model:

where $X = 1$ (test positive), $X = 0$ (test negative), $Y = 1$ (disease), and $Y = 0$ (no disease); see Spanos [30]. The model is often presented in the form of the $2 \times 2$ contingency table in Table 1 (Bishop et al. [31]):

Table 1.

$2 \times 2$ contingency table.

The MDS revolves around several measures relating to the ‘effectiveness’ of the screening, as shown in Table 2, representing the parameters of substantive interest in terms of which inferences are often framed.

Table 2.

Medical Diagnostic Screening Measures.

The relevant data for the MDS are usually generated under ‘controlled conditions’ in order to secure the validity of the IID assumptions, and the results are based on a large number of medical tests, say $n$, carried out with lab-prepared specimens that are known to be positive or negative; see Senn [32]. This renders the outcomes $(X = x, Y = y)$ observable events whose frequencies can be used to estimate not only the probabilities in Table 1, but also the parameters of interest in Table 2. The unknown parameters are estimated using Maximum Likelihood (ML):

where $\mathbb{I}(\cdot)$ is the indicator function and $n_{xy}$ denotes the frequency in each cell $(x, y)$ of the table. ML estimators are used since they are invariant to the reparametrizations needed to estimate the parameters of interest (Table 2); see Greenhouse and Mantel [33], Nissen-Meyer [34].
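Because every MDS measure is a function of observable cell frequencies, each can be estimated by plugging in relative frequencies. The sketch below uses the conventional definitions of the screening measures, with $X$ the test result and $Y$ the disease status as above; the function name and the cell counts are hypothetical and are not the data behind Table 3.

```python
def mds_measures(n11, n10, n01, n00):
    """Plug-in estimates of screening measures from a 2x2 table of counts.

    n_xy is the number of specimens with test result X = x and disease status Y = y.
    """
    n = n11 + n10 + n01 + n00
    return {
        "prevalence": (n11 + n01) / n,        # P(Y=1)
        "sensitivity": n11 / (n11 + n01),     # P(X=1 | Y=1)
        "specificity": n00 / (n00 + n10),     # P(X=0 | Y=0)
        "false_positive": n10 / (n00 + n10),  # P(X=1 | Y=0)
        "false_negative": n01 / (n11 + n01),  # P(X=0 | Y=1)
        "ppv": n11 / (n11 + n10),             # P(Y=1 | X=1)
        "npv": n00 / (n00 + n01),             # P(Y=0 | X=0)
    }


# Hypothetical counts, chosen only to illustrate the calculation.
measures = mds_measures(n11=90, n10=50, n01=10, n00=850)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts both sensitivity and specificity are high, yet the PPV is noticeably lower because the condition is rare. Every quantity here is computed from observed frequencies, which is what sets these measures apart from the N-P error probabilities.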

Example 2 (empirical). The ML estimates in (13), in the form of the observed relative frequencies based on are given in Table 3. The point estimates of the MDS measures in Table 2 are shown in Table 4, where the specificity is reasonable, but the PPV indicates that the screening is not reliable enough.

Table 3.

$2 \times 2$ table of relative frequencies.

Table 4.

Estimates of MDS measures.

Note that all the above probabilities are estimable since X and Y are observable (Senn [32]), in contrast to the N-P error probabilities that revolve around $\theta^*$.

3.2. The PPV and Its Impertinent Metamorphosis

The PPV is a well-known measure of the effectiveness of a MDS evaluating: ‘the probability that a patient has the disease, given that the patient tests positive’:

derived from the probabilities of the binary observable events in Table 1.

Ioannidis [1] metamorphosed the PPV by (a) replacing the false positive/negative with the type I/II error probabilities:

where $(1 - \beta)$ is the power, and (b) adding a prior:

where $\theta^*$ denotes the ‘true’ $\theta$, to define the Metamorphosed PPV (M-PPV):

aiming to evaluate ‘the probability of rightful rejections of $H_0$ (when false)’.

Unfortunately, the substitutions used in (17) are incongruous since the two sides in (15) have nothing in common. In particular, the type I/II error probabilities are testing-based, grounded on hypothetical reasoning ($H_0$ or $H_1$), and satisfying features [i]–[v] (Section 2.3). In contrast, the false positive/negative probabilities are estimation-based, grounded on factual reasoning ($\theta = \theta^*$), and belying [i]–[v] since:

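- [i]* They are assigned to the observable events ‘false positive’ ($X = 1$ when $Y = 0$) and ‘false negative’ ($X = 0$ when $Y = 1$).

- [ii]* They are observable conditional probabilities.

- [iii]* There is no trade-off between the false positive and the false negative probabilities.

- [iv]*

- [v]* They are observable probabilities that can be estimated using data $\mathbf{x}_0$.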

Example 3. In the field of psychology, assume a prior probability of 0.1 (10% false nulls), a power that is high enough, and an actual type I error probability that is higher than the nominal one due to abuses of the p-value. These values yield an M-PPV which Ioannidis [1] would interpret as evidence that “most published research findings [in psychology] are false”.
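The arithmetic behind such an evaluation can be sketched as follows, assuming that (17) takes the usual Bayes-rule form with the power and the type I error probability substituted for the sensitivity and the false positive rate. The function name is illustrative, and the values 0.8 and 0.15 are hypothetical choices made here; only the 10% share of false nulls comes from the example.

```python
def m_ppv(prior_false_null, power, alpha):
    """Metamorphosed PPV: share of rejections that are rejections of false nulls.

    Assumed form: power * pi / (power * pi + alpha * (1 - pi)).
    """
    rightful = power * prior_false_null            # rejections of false nulls
    wrongful = alpha * (1.0 - prior_false_null)    # rejections of true nulls
    return rightful / (rightful + wrongful)


# Hypothetical inputs: 10% false nulls, power 0.8, inflated versus nominal alpha.
inflated = m_ppv(prior_false_null=0.1, power=0.8, alpha=0.15)
nominal = m_ppv(prior_false_null=0.1, power=0.8, alpha=0.05)
print("M-PPV with alpha = 0.15:", round(inflated, 3))
print("M-PPV with alpha = 0.05:", round(nominal, 3))
```

The result swings from below one half to above it purely as a function of the inputs, none of which is estimated from data. This is the sense in which the evaluation is conjectural.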

This evaluation raises serious questions of pertinence. ‘Why would the power denoting the generic capacity of a N-P test to detect all discrepancies for all relating to detecting an arbitrary discrepancy with be of any interest in practice?’ Why 10% false nulls? Given that for any even when one assumes (erroneously) that such an assignment makes sense.

To reveal the problems with the evaluation of the M-PPV at a more practical level, consider the task of collecting (IID) data, analogous to the MDS data in Table 3, from the ‘population’ of all published empirical studies in psychology. Such a task is instantly rendered impossible by the fact that ‘$H_0$ is true/false’ is unobservable since it revolves around $\theta^*$. This is why Ioannidis makes no such attempt and instead evaluates the M-PPV by plucking numbers from thin air.

As mentioned above, a more effective procedure to make a case for the untrustworthiness of published empirical evidence would be to replicate the most cited empirical papers in a particular research field with the same or akin data, and affirm or deny their untrustworthiness/non-replicability, by probing their statistical adequacy first. A recent example of such replication is discussed in Do and Spanos [11], which relates to the most famous empirical relationship in macroeconomics, the Phillips curve. Some of the most highly cited/influential published papers in major economics journals since the late 1950s have been replicated using the original data. Their replication confirmed their numerical results with minor differences in precision. However, when the statistical adequacy is probed, all these papers were found to be statistically misspecified, rendering their empirical findings untrustworthy.

By focusing on the untrustworthiness at a discipline-wide level, the M-PPV has nothing to say about the virtues or flaws of the individual papers. Therefore, an empirical study in psychology that does an excellent job of forefending statistical misspecification and erroneous interpretations of its inference results should not be dismissed as untrustworthy by association, just because one’s conjectural evaluation yields a low M-PPV. Worse, the Bonferroni-type corrections for the questionable practices blamed by the replication literature make sense only at the level of an individual study. Indeed, such adjustments will be pointless when that study is statistically misspecified, since the latter would render the error probabilities intractable by the induced sizeable discrepancies between the actual and nominal ones (Section 4.1).

In summary, Ioannidis [1] makes his case using an incongruous metaphor free of any empirical evidence in conjunction with highly questionable claims, including:

- [a] The accept/reject results are in essence misconstrued as evidence for $H_0$/$H_1$.

- [b] The observable/conditional false positive/negative probabilities of the PPV are viewed as equivalent to the unobservable/unconditional type I/II error probabilities.

- [c] Imputing a contrived ‘prior’ in (16) into N-P testing, by viewing the falsity of $H_0$ and $H_1$ at a discipline-wide level as a ‘bag of nulls, a proportion of which is false’.

- [d] Invoking a direct causal link between the replicability and the trustworthiness of empirical evidence.

3.3. Could the M-PPV Shed Any Light on Untrustworthiness?

The question that naturally arises is why the case by Ioannidis [1], grounded on the M-PPV, has been so widely accepted. A plausible explanation could be that its apparent credibility stems from the same uninformed and recipe-like implementation of statistics, which cursorily uses the terms ‘type I/II error probabilities’ and ‘false positive/negative probabilities’ interchangeably, ignoring their fundamental differences in their nature and crucial attributes [i]–[v] vs. [i]*–[v]*.

To make the impertinence of this claim more transparent, consider using the same Bernoulli model in (12) for the Berkeley admissions data (Freedman et al. [35] pp. 17–20), which would alter the MDS terminology of the random variables (Table 5).

Table 5.

MDS vs. Berkeley admissions.

This would leave unchanged the statistical analysis in Table 1, Table 3 and Table 4, but the MDS terminology in Table 2 would seem absurd for the data on potential gender discrimination in the admissions. In fact, any attempt to relate P(denying admission ∣ female) and P(denying admission ∣ male) to the power of a test and the type I error probability, respectively, would be considered ludicrous.

Regrettably, the M-PPV has created a sizeable literature, including in philosophy of science, based on selecting different values for the quantities in (17), and plotting the M-PPV as a function of the odds ratio or the power to propose various impertinent diagnoses and remedies for untrustworthiness; see Nosek et al. [36] and Munafò et al. [37]. For instance, the argument by Bird [38] that: “If most of the hypotheses under test are false, then there will be many false hypotheses that are apparently supported by the outcomes of well conducted experiments and null-hypothesis significance tests with a type-I error rate of 5%. Failure to recognize this is to commit the fallacy of ignoring the base rate” (p. 965), is ill-thought-out. First, the charge that frequentist testing is vulnerable to the base-rate fallacy is erroneous since frequentist error probabilities cannot be conditional on a constant $\theta$; see Spanos [30]. Second, statistical hypotheses share no similitude to ‘a bag of lottery tickets one of which will win’ since parameters are unknown constants and take values over a continuum, hence $\theta^* \neq \theta_0$ will invariably be the case in practice, with $\theta^* = \theta_0$ only by happenstance. Worse, Bird’s [38] recommendation that the trustworthiness of evidence will improve by: “Seek(ing) means of generating more likely research hypotheses to be true” (p. 968) reveals an arrant misunderstanding of how N-P testing gives rise to learning about $\theta^*$ from data $\mathbf{x}_0$. Indeed, interrogatory N-P probing using optimal tests will yield more reliable inferences, irrespective of how many null values have been probed, since learning is attained irrespective of whether $H_0$ is accepted or rejected; see Section 5.

4. Uninformed Implementation of Statistical Modeling and Inference

A strong case can be made that the widespread abuse of frequentist testing is only symptomatic of a much broader problem relating to the uninformed, and recipe-like, implementation of statistical methods that contributes to untrustworthy evidence, in several different ways, the most important of which are the following:

- (a) Statistical misspecification: invalid probabilistic assumptions are imposed on the particular data $\mathbf{x}_0$, by ignoring the approximate validity (statistical adequacy) of the probabilistic assumptions comprising the statistical model $\mathcal{M}_{\theta}(\mathbf{x})$. Statistical misspecification is endemic in disciplines like economics where probabilistic assumptions are often assigned to unobservable error term(s), but what matters for the reliability of inference and the trustworthiness of the ensuing evidence is whether the probabilistic assumptions imposed (indirectly) on the observable process underlying the data are valid or not; see Spanos [18].

- (b) Unwarranted evidential interpretations of inference results, including: (i) claiming that an optimal point estimate $\hat{\theta}(\mathbf{x}_0)$ implies that $\hat{\theta}(\mathbf{x}_0) \simeq \theta^*$ for $n$ large enough, (ii) attaching the coverage probability to observed CIs, (iii) misinterpreting testing accept/reject results by detaching them from their particular statistical context (Spanos [17]), and (iv) conflating nominal and actual error probabilities by ignoring Bonferroni-type adjustments stemming from abuses of N-P testing. Focusing on (iv), however, overlooks the forest for the trees, since: “p values are just the tip of the iceberg.” (Leek and Peng [39]).

- (c) Questionable statistical modeling practices which include (i) foisting an aPriori Postulated (aPP) substantive model $\mathcal{M}_{\varphi}(\mathbf{z})$ on the data using a curve-fitting procedure, and (ii) evaluating its ‘appropriateness’ using goodness-of-fit/prediction measures, without recognizing that the latter is neither necessary nor sufficient for statistical adequacy, which is invariably ignored; see Spanos [18].

In practice, an aPriori Postulated (aPP) (substantive) model $\mathcal{M}_{\varphi}(\mathbf{z})$ should be nested within its implicit statistical model $\mathcal{M}_{\theta}(\mathbf{x})$ comprising (a) the probabilistic assumptions imposed (often implicitly) on the observable process underlying $\mathbf{x}_0$ and (b) the ensuing parametrization $\theta \in \Theta$, providing (c) the crucial link between $\mathcal{M}_{\varphi}(\mathbf{z})$ and the real-world mechanism that generated $\mathbf{x}_0$. The statistical parameters $\theta$ are framed to relate them to the substantive parameters $\varphi$, relating the two via restrictions, say $G(\theta, \varphi) = 0$, which need to be tested to ensure that $\mathcal{M}_{\varphi}(\mathbf{z})$ is substantively adequate vis-a-vis data $\mathbf{x}_0$.

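Example 4. Consider the substantive model $\mathcal{M}_{\varphi}(\mathbf{z})$ given by the Capital Asset Pricing Model (CAPM):

where the variables denote the portfolio returns, the market returns, and the returns of a risk-free asset, respectively, with $\mathcal{M}_{\theta}(\mathbf{x})$ being the underlying statistical (linear regression) model.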

The parameters of the two models are related via the substantive restrictions:

For the estimated $\mathcal{M}_{\varphi}(\mathbf{z})$ to yield trustworthy evidence, (a) $\mathcal{M}_{\theta}(\mathbf{x})$ should be statistically adequate for data $\mathbf{x}_0$, and (b) the restrictions in (19) should not belie the data $\mathbf{x}_0$, which is seldom the case in published empirical papers; see Spanos [18].

4.1. Statistical Adequacy and Replication in Practice

A particularly important contributor to untrustworthy evidence is the statistical misspecification of the invoked $\mathcal{M}_{\theta}(\mathbf{x})$. Statistically adequate models are (approximately) replicable with akin data because they are unique. In contrast, there are numerous ways $\mathcal{M}_{\theta}(\mathbf{x})$ can be statistically misspecified; see Spanos [18]. Indeed, untrustworthy evidence is easy to replicate when practitioners employ the same uninformed and recipe-like implementation of statistical methods, ignoring their statistical adequacy: “... an analysis can be fully reproducible and still be wrong.” (Leek and Peng [39]). This calls into question the Ioannidis [1] stipulations [S1]–[S2], since statistical misspecification is the primary source of untrustworthiness, (i) giving rise to ‘non-optimal’ inference procedures, and (ii) inducing sizeable discrepancies between the actual and nominal error probabilities (Section 2.1). These discrepancies render any Bonferroni-type adjustments for p-hacking, data-dredging, multiple testing and cherry-picking irrelevant. Hence, a crucial precondition for relating replication/non-replication to trustworthiness/untrustworthiness is to establish the statistical adequacy of the invoked $\mathcal{M}_{\theta}(\mathbf{x})$ using akin data.

Example 5. Consider two studies based on akin data sets , , invoking the same simple Normal model in (2), giving rise to the following results:

with the standard errors in brackets, and denoting the residuals.

Taking the results in (20) at face value, Table 6 compares them and their ensuing CIs and N-P tests whose p-values are given in square brackets. An informal test of the difference between the two means, : vs. : yields rendering the results of the two studies almost identical. Despite that, it is not obvious how an umpire could opine whether the results with data constitute a successful replication with trustworthy evidence of the results with

Table 6.

Statistical Inference Results.

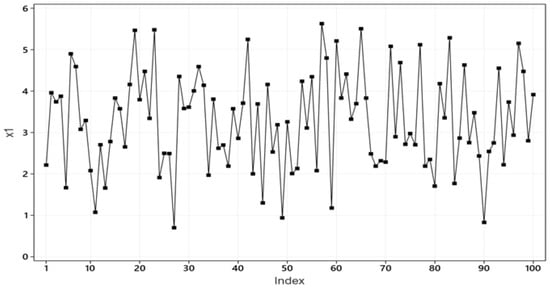

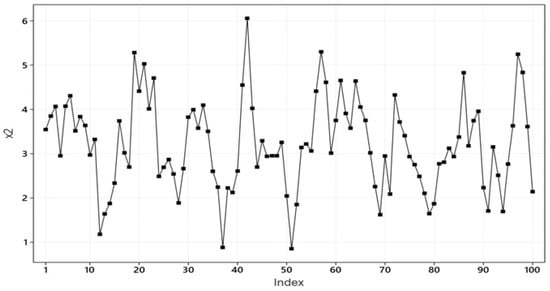

To answer that, one needs to evaluate the statistical adequacy of both estimated models in (20) using informal t-plots of the data combined with formal M-S testing (Spanos [19]). The t-plot for data (Figure 1) exhibits no obvious departures from the NIID assumptions, but the ‘irregular cycles’ exhibited by (Figure 2) indicate a likely departure from ‘Independence’.

Figure 1.

t-plot of data .

Figure 2.

t-plot of data .

The validity of the IID assumptions can be tested using the runs test (Spanos [18]):

where R-number of runs, , and depend only on The Anderson-Darling (A-D) tests for Normality are also given in Table 7.

Table 7.

Simple M-S tests for IID.

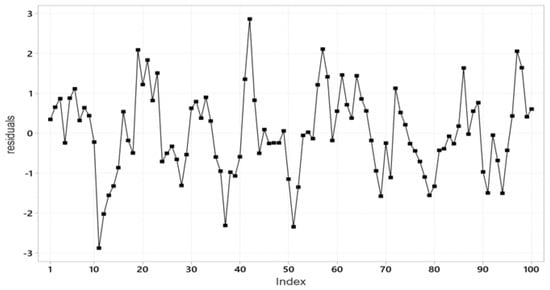
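As a rough illustration of how a runs-based M-S test for IID can be computed, the sketch below implements a runs up-and-down test. It assumes that R counts the runs of consecutive increases and decreases in the time-ordered data, and that under IID its mean and variance are (2n − 1)/3 and (16n − 29)/90, both depending only on n. These are the standard results for this version of the test, but the extracted text does not show the paper's formula, so the match is an assumption. The function name is hypothetical, and ties between adjacent observations are assumed away.

```python
import math
from statistics import NormalDist


def runs_up_down_test(x):
    """Return (R, z, two-sided p-value) for the runs up-and-down test."""
    n = len(x)
    # Direction of each successive change: True for up, False for down.
    ups = [b > a for a, b in zip(x, x[1:])]
    # A new run starts whenever the direction changes.
    runs = 1 + sum(u != v for u, v in zip(ups, ups[1:]))
    mean_r = (2 * n - 1) / 3       # E(R) under IID
    var_r = (16 * n - 29) / 90     # Var(R) under IID
    z = (runs - mean_r) / math.sqrt(var_r)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return runs, z, p_value


if __name__ == "__main__":
    trending = list(range(50))                  # one long upward run
    alternating = [i % 2 for i in range(50)]    # direction flips every step
    print(runs_up_down_test(trending))
    print(runs_up_down_test(alternating))
```

Too few runs (a large negative z) point to positive dependence or trending, which is the kind of departure suggested by irregular cycles in a t-plot; too many runs (a large positive z) point to negative dependence.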

The M-S testing results in Table 7 confirm that the simple Normal model in (2) is statistically adequate for data , but misspecified with data since the ‘Independence’ assumption is invalid. This is corroborated by the t-plot of the residuals in Figure 3, which exhibits irregular cycles similar to those in Figure 2. This implies that the inference results in Table 6 based on will be unreliable, since the non-independence induces sizeable discrepancies between the actual and nominal error probabilities; see Spanos [18].

Figure 3.

t-plot of data .

Thus, the results based on data do not represent a successful replication (with trustworthy evidence) of those based on data . To secure trustworthy evidence for any inferential claim using data one needs to respecify the original in (2) by selecting an alternative statistical model aiming to account for the dependence mirrored by the irregular cycles in Figure 2 and Figure 3.

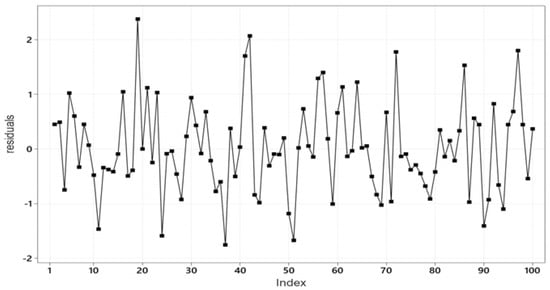

An obvious choice in this case is to replace the assumptions of IID with Markov dependence and stationarity, which give rise to an Autoregressive [AR(1)] model; see Spanos [18]. Estimating the AR(1) model with data yields:

The t-plot of the residuals from (22) in Figure 4 indicates that the Markov dependence assumption accounts for the irregular cycles exhibited by the residuals in Figure 2 and Figure 3. The statistical adequacy of (22) is confirmed by the M-S tests:

Figure 4.

t-plot of data .
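The respecification step can be sketched in code. The example below is illustrative only: it simulates a hypothetical AR(1) series (the paper's data are not reproduced here) and estimates the intercept and the autoregressive coefficient by least squares of x_t on a constant and x_{t-1}. All function names and parameter values are assumptions made for the illustration.

```python
import random


def simulate_ar1(n, a0, a1, seed=0):
    """Simulate x_t = a0 + a1 * x_{t-1} + e_t with e_t ~ N(0, 1)."""
    rng = random.Random(seed)
    x = a0 / (1 - a1)  # start at the stationary mean
    series = []
    for _ in range(n):
        x = a0 + a1 * x + rng.gauss(0.0, 1.0)
        series.append(x)
    return series


def fit_ar1(x):
    """Least-squares fit of x_t on (1, x_{t-1}); returns (a0, a1, residuals)."""
    y, z = x[1:], x[:-1]
    m = len(y)
    ybar, zbar = sum(y) / m, sum(z) / m
    szz = sum((zi - zbar) ** 2 for zi in z)
    szy = sum((zi - zbar) * (yi - ybar) for zi, yi in zip(z, y))
    a1 = szy / szz
    a0 = ybar - a1 * zbar
    residuals = [yi - a0 - a1 * zi for yi, zi in zip(y, z)]
    return a0, a1, residuals


if __name__ == "__main__":
    data = simulate_ar1(2000, a0=2.0, a1=0.6)
    a0_hat, a1_hat, resid = fit_ar1(data)
    print(f"a0_hat={a0_hat:.3f}, a1_hat={a1_hat:.3f}")
```

The residuals returned by `fit_ar1` are what one would then subject to t-plots and M-S tests, since the respecified model yields trustworthy evidence only if its own probabilistic assumptions are valid for the data.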

4.2. Fallacious Evidential Interpretations of Inference Results

Another crucial source of the untrustworthiness of evidence is unwarranted and fallacious evidential interpretations of unduly data-specific inference results as evidence for particular inferential claims.

Example 1 (continued). The optimality of the point estimators,

, does not entail:

where ≃ indicates approximate equality. The claims in (23) are unwarranted since and ignore the relevant uncertainty associated with and rely exclusively on single point, from the sampling distributions, and; see Spanos [16]. Invoking asymptotic properties for and such as consistency, will not justify the claims in (23). As argued by Le Cam [40] p. xiv: “... limit theorems ‘as n tends to infinity’ are logically devoid of content about what happens at any particular n”.

(b) It is important to emphasize that the problem with the claim also extends to estimation-based effect sizes; see Ellis [41]. For instance, in the case of testing the differences between two means, Cohen’s is nothing more than an estimate relating to the estimator of . In that sense, the claim that is a variation on the same unwarranted claim: for n large enough; see Spanos [16].

(c) The inferential claim associated with (5) relates to the random CI overlaying with probability but does not extend to Spanos [16]:

As Neyman [42], p. 288, argued: “…valid probability statements about random variables usually cease to be valid if the random variables are replaced by their particular values”. That is, the factual reasoning makes no sense post-data [ has occurred].

(d) It is also well-known that interpreting the ‘accept/reject results’ as evidence for $H_0$/$H_1$ is unsound, giving rise to two related fallacies (Mayo and Spanos [14]):

Fallacy of acceptance: misinterpreting ‘accept $H_0$’ (no evidence against $H_0$) as evidence for $H_0$. This could easily arise in cases where n is too small and the test has insufficient power to detect an actual discrepancy .

Fallacy of rejection: misinterpreting ‘reject $H_0$’ (evidence against $H_0$) as evidence for a particular $H_1$. This could easily arise when n is very large and the test is sensitive enough to detect tiny discrepancies; see Spanos [43].

The above arguments call into question the M-PPV-inspired reforms relating to replacing p-values with CIs and effect sizes, and redefining statistical significance by reducing the conventional thresholds for , as ill-thought-out.

5. Testing Results vs. the Post-Data Severity (SEV) Evaluation

The reason why the accept/reject results are not routinely replicable with akin data is that they are sensitive to (i) the framing of and , (ii) the prespecified and (iii) the sample size n. A principled procedure transmuting the unduly data-dependent accept/reject results into evidence relating to is the post-data severity (SEV) evaluation, guided by the sign and magnitude of . A hypothesis H ( or ) passes a severe test with data if (Mayo and Spanos [14]):

- (C-1) accords with H, and

- (C-2) with very high probability, test would have produced a result that ‘accords less well’ with H than does, if H were false.

5.1. Case 1: Reject

Example 6. Consider the simple Bernoulli model:

where and the hypotheses:

It can be shown that the t-type test (Lehmann and Romano [20]):

is optimal in the sense of Uniformly Most Powerful (UMP). The sampling distribution of evaluated under (hypothetical: what if ) is:

where the ‘scaled’ Binomial distribution, can be approximated (≃) by the N, which is used to evaluate and the p-value:

The sampling distribution of under (hypothetical: what if ) is:

whose probabilities can be approximated using:

(31) is used to derive the type II error and the power of the test in (27) which increases monotonically with and and decreases with .
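The behavior of the power can be checked numerically. The sketch below is an illustration under stated assumptions, not the paper's code: it assumes one-sided hypotheses of the form θ ≤ θ0 vs. θ > θ0, a test statistic d(X) = √n(X̄ − θ0)/√(θ0(1 − θ0)), and a Normal approximation under θ = θ1 with mean δ1 = √n(θ1 − θ0)/√(θ0(1 − θ0)) and variance θ1(1 − θ1)/(θ0(1 − θ0)). The function name and the numerical values are hypothetical.

```python
import math
from statistics import NormalDist


def approx_power(theta0, theta1, n, alpha):
    """Approximate P(d(X) > c_alpha; theta = theta1) for the one-sided test."""
    c_alpha = NormalDist().inv_cdf(1 - alpha)
    s0 = math.sqrt(theta0 * (1 - theta0))
    s1 = math.sqrt(theta1 * (1 - theta1))
    delta1 = math.sqrt(n) * (theta1 - theta0) / s0  # mean of d(X) under theta1
    # Standardize with the standard deviation of d(X) under theta1, s1 / s0.
    return 1 - NormalDist().cdf((c_alpha - delta1) * s0 / s1)


if __name__ == "__main__":
    # A fixed, tiny discrepancy of 0.001 from theta0 = 0.5 (illustrative values).
    for n in (100, 10_000, 1_000_000, 10_000_000):
        print(n, round(approx_power(0.5, 0.501, n, alpha=0.05), 4))
```

For a fixed tiny discrepancy such as 0.001, the approximate power is barely above α at n = 100 but close to 1 at n = 10,000,000, which is why a rejection with a very large n need not indicate a substantively meaningful discrepancy.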

Example 6 (continued). For the simple Bernoulli model in (25), the relevant data refer to newborns for 2020 in Cyprus, 5190 male and 4740 female , i.e., . The optimal test in (27), based on and yields:

This, combined with suggest that condition C-1 implies that accords with , and condition C-2 indicates that the relevant event relates to: “outcomes that accord less well with than does”, i.e., event : and its probability relates to the inferential claim :

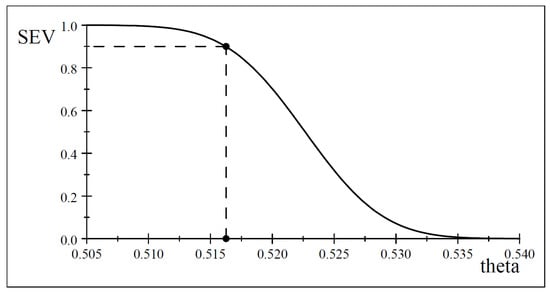

where is a prespecified (high enough) probability. The evaluation of (32), based on (31) gives rise to the severity curve depicted in Figure 5, assigning probabilities relating to the warrant with data of different discrepancies .

Figure 5.

Severity curve for data .

The largest discrepancy warranted with severity by test and data is ; see Table 8. How does the post-data SEV evaluation convert the unduly data-specific accept/reject results into evidence, giving rise to learning from data about ? While the SEV evaluation is always attached to in (27), as it relates to the relevant inferential claim , warranted with probability could be viewed as narrowing down the coarse accept/reject result indicating that to the much narrower for .

Table 8.

for ‘reject ’ and ‘accept ’ with (.
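The calculations behind a severity curve of this kind can be sketched as follows. This is an illustration under stated assumptions, not a reproduction of Table 8: the null value θ0 = 0.5 is inferred from the surrounding discussion, the severity of the claim θ > θ0 + γ is taken to be P(d(X) ≤ d(x0); θ = θ0 + γ), and that probability is evaluated with a Normal approximation whose variance is computed at θ1 = θ0 + γ. Only the birth counts come from the text; the function and variable names are hypothetical.

```python
import math
from statistics import NormalDist

BOYS, GIRLS = 5190, 4740   # Cyprus 2020 newborns, as reported in the text
N = BOYS + GIRLS           # 9930
THETA0 = 0.5               # assumed null value (not shown in the extracted text)
XBAR = BOYS / N            # observed proportion of boys


def d_stat(xbar, n, theta0):
    """Standardized distance between the sample proportion and theta0."""
    return math.sqrt(n) * (xbar - theta0) / math.sqrt(theta0 * (1 - theta0))


def severity(xbar, n, theta0, gamma):
    """Approximate SEV for the claim theta > theta0 + gamma."""
    theta1 = theta0 + gamma
    s0 = math.sqrt(theta0 * (1 - theta0))
    s1 = math.sqrt(theta1 * (1 - theta1))
    delta1 = math.sqrt(n) * gamma / s0
    return NormalDist().cdf((d_stat(xbar, n, theta0) - delta1) * s0 / s1)


D0 = d_stat(XBAR, N, THETA0)
P_VALUE = 1 - NormalDist().cdf(D0)

if __name__ == "__main__":
    print(f"d(x0) = {D0:.3f}, p-value = {P_VALUE:.2e}")
    for gamma in (0.005, 0.010, 0.015, 0.020, XBAR - THETA0):
        print(f"gamma = {gamma:.5f}: SEV = {severity(XBAR, N, THETA0, gamma):.3f}")
```

Under these assumptions the severity falls as γ grows and equals 0.5 when θ0 + γ coincides with the point estimate, so the point estimate itself is never a discrepancy warranted with high severity.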

5.2. Case 2: Accept

Example 6 (continued). Consider replacing in (26) with the Nicholas Bernoulli value . Applying the UMP test yields:

indicating ‘accept ’ at but given the relevant for the inferential claim, is:

whose evaluation is based on (31), which is identical to that of rejecting based on in Figure 5, despite the change of the result from reject to accept ! Indeed, comparing the results for and in Table 8, it is clear that even though the discrepancies are different (, the two curves are identical . As a result, for the same inferential claim, the largest discrepancy at probability from (reject ) coincides with the smallest discrepancy from (accept ). Also, the low severity () for the point estimate ensures that will never be a warranted discrepancy, i.e., there is strong evidence against the claim .
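The coincidence of the two severity curves can be verified numerically. In the sketch below, which is an illustration under stated assumptions and not the paper's code, the severity of the claim θ > θ1 is computed from d(x0) and a Normal approximation, once with θ0 = 0.5 and once with θ0 = 18/35. The value 18/35 is the one commonly attributed to Nicholas Bernoulli; the extracted text does not show it, so it is an assumption here, as are the function names.

```python
import math
from statistics import NormalDist

N = 5190 + 4740            # Cyprus 2020 newborns, as reported in the text
XBAR = 5190 / N            # observed proportion of boys


def d_stat(xbar, n, theta0):
    """Standardized distance between the sample proportion and theta0."""
    return math.sqrt(n) * (xbar - theta0) / math.sqrt(theta0 * (1 - theta0))


def severity(xbar, n, theta0, theta1):
    """Approximate P(d(X) <= d(x0); theta = theta1), the SEV of theta > theta1."""
    s0 = math.sqrt(theta0 * (1 - theta0))
    s1 = math.sqrt(theta1 * (1 - theta1))
    delta1 = math.sqrt(n) * (theta1 - theta0) / s0
    return NormalDist().cdf((d_stat(xbar, n, theta0) - delta1) * s0 / s1)


NULL_VALUES = {"theta0 = 0.5": 0.5, "theta0 = 18/35 (assumed)": 18 / 35}
GRID = [0.505, 0.510, 0.515, 0.520, 0.525]

if __name__ == "__main__":
    for label, theta0 in NULL_VALUES.items():
        print(label, f"d(x0) = {d_stat(XBAR, N, theta0):.3f}")
        print([round(severity(XBAR, N, theta0, t1), 4) for t1 in GRID])
```

The test statistic changes with θ0, but (d(x0) − δ1) scaled by the standard deviation under θ1 reduces algebraically to √n(x̄ − θ1)/√(θ1(1 − θ1)), which does not involve θ0. That is why, under this approximation, the severity attached to a given θ1 is the same for both null values.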

What renders the SEV evaluation different is that it calibrates the warranted with high enough probability. In that sense, it can be viewed as a testing-based effect size that provides a more reliable evaluation of the ‘scientific effect’; see Spanos [16]. This should be contrasted with the estimation-based effect size measure, (Ellis [41]), which is as fallacious as the claim that for n large enough.

Statistical vs. substantive significance. The SEV evaluation would also address this problem by relating the discrepancy from () warranted by test and data with high probability to the substantively determined value . For example, in human biology (Hardy, [44]) it is known that the substantive value for the ratio of boys to all newborns is . Comparing with the severity-based warranted discrepancy, 0.01556 () suggests that the statistically determined includes the substantive value since . In fact, the SEV evaluation of is .

5.3. Post-Data Severity and the Trustworthiness of Evidence

The above discussion suggests that similar point estimates, observed CIs, and testing results do not guarantee a successful replication and/or trustworthy evidence. Although the statistical adequacy of the underlying statistical models is necessary, it is not sufficient, especially when the sample sizes n of such studies differ.

An obvious way to address this issue is to use the distinction between statistical results and evidence and compare the warranted discrepancies from the null value, by the tests in question with high enough severity. To illustrate, consider an example that utilizes similar data from two different countries, three centuries apart.

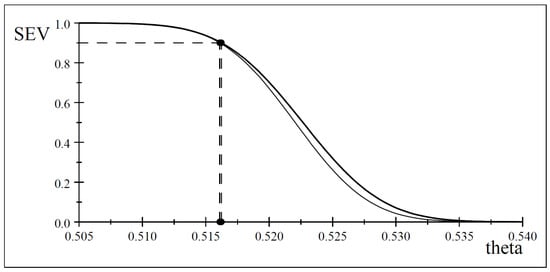

Example 6 (continued). To bring out the replicability of the SEV evidential claims, consider data that refer to newborns during 1668 in London (England), 6073 boys and 5560 girls (Arbuthnot [45]), 352 years before the Cyprus 2020 data. The optimal test in (27) yields with rejecting : This result is close to with from Cyprus for 2020, but the question of interest is whether the latter constitutes a successful replication of the 1668 data. Using , the warranted discrepancy from by test and London data is which is almost identical to that with the Cyprus data , affirming the replicability of the evidence that .

The two severity curves in Figure 6 for data and are almost identical for the range of values of that matters (), despite the fact that vs. Hence, for a statistically adequate , the SEV could provide a more robust measure of replicability of trustworthy evidence, than point estimates, effect sizes, observed CIs, or p-values; see Spanos [16].

Figure 6.

Severity curves for data and .
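A comparison of this kind can be sketched with the two sets of birth counts reported in the text. The code below is an illustration under stated assumptions, not a reproduction of Figure 6: it assumes θ0 = 0.5, takes the severity of θ > θ1 to be P(d(X) ≤ d(x0); θ = θ1), and evaluates it with a Normal approximation whose variance is computed at θ1. The dictionary layout and the function names are hypothetical.

```python
import math
from statistics import NormalDist

THETA0 = 0.5  # assumed null value (not shown in the extracted text)

# (boys, girls) as reported in the text.
SAMPLES = {
    "Cyprus 2020": (5190, 4740),
    "London 1668": (6073, 5560),
}


def d_stat(boys, girls, theta0=THETA0):
    """Test statistic for the proportion of boys against theta0."""
    n = boys + girls
    xbar = boys / n
    return math.sqrt(n) * (xbar - theta0) / math.sqrt(theta0 * (1 - theta0))


def severity(boys, girls, theta1, theta0=THETA0):
    """Approximate SEV for the claim theta > theta1."""
    n = boys + girls
    s0 = math.sqrt(theta0 * (1 - theta0))
    s1 = math.sqrt(theta1 * (1 - theta1))
    delta1 = math.sqrt(n) * (theta1 - theta0) / s0
    return NormalDist().cdf((d_stat(boys, girls, theta0) - delta1) * s0 / s1)


if __name__ == "__main__":
    for name, (boys, girls) in SAMPLES.items():
        curve = [round(severity(boys, girls, t1), 3)
                 for t1 in (0.505, 0.510, 0.515, 0.520)]
        print(f"{name}: n = {boys + girls}, d(x0) = {d_stat(boys, girls):.3f}, "
              f"SEV curve = {curve}")
```

At θ1 = 0.515, for instance, the two severities differ by less than 0.01 under this approximation, even though the sample sizes and the values of the test statistic differ.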

The key to enabling the evaluation to circumvent the problem with different sample sizes n is that the two components in use the same n to evaluate the discrepancy , addressing the above-mentioned fallacies of acceptance and rejection. In contrast, the power of is evaluated using where is based on the prespecified and the p-value is evaluated only under , N, rendering both evaluations vulnerable to the large/small n problems.

The SEV can be used to address other foundational problems, including distinguishing between statistical and substantive significance, as well as providing a testing-based effect size for the magnitude of the ‘substantive’ effect. An example is given in Spanos [17], where a published paper reports a t-test for an estimated regression coefficient claimed to be significant at with and a p-value . The SEV evaluation of that claim indicates that the warranted magnitude of the coefficient would be considerably smaller at with high enough severity.

6. Summary and Conclusions

The case made by Ioannidis [1], based on M-PPV, misrepresents frequentist testing and misdiagnoses the replication crisis, due to its incongruous stipulations [S1]–[S2] based on highly questionable presuppositions [a]–[d]. The most ill-chosen incongruousness is the colligating of the false positive/negative with the type I/II error probabilities. This led to promoting ill-informed reforms, including replacing p-values with CIs and effect sizes and lowering .

The above discussion has made a case that the non-replicability and the untrustworthiness of empirical evidence stem primarily from the uninformed and recipe-like implementation of statistical modeling and inference. This ignores fundamental issues relating to (a) establishing the statistical adequacy of the invoked statistical model for data , (b) the reasoned implementation of frequentist inference and the pertinent interpretation of their error probabilities, as well as (c) warranted evidential interpretations of inference results. Fisher’s model-based statistics provides an incisive explanation for the non-replication/untrustworthiness of empirical evidence and an appropriate framework for addressing (a)–(c). The statistical adequacy of is necessary for securing the trustworthiness of empirical evidence and ensuring the crucial link between trustworthiness and replicability, as both are key aspects firmly attached to individual studies. The inveterate problem with frequentist testing is that the accept/reject results are too coarse and unduly data-specific to provide cogent evidence for . This problem can be addressed using their post-data SEV evaluation to output the discrepancy warranted by data with high probability, which reduces the coarseness of these results. From this perspective, abuses of frequentist testing represent the tip of the untrustworthy evidence iceberg, with the remainder stemming mainly from statistical/substantive misspecification.

As Stark and Saltelli [12] aptly argue: “The problem is one of cargo-cult statistics – the ritualistic miming of statistics rather than conscientious practice. This has become the norm in many disciplines, reinforced and abetted by statistical education, statistical software, and editorial policies” (p. 40).

A redeeming value of the Ioannidis [1] case might be that its provocative title raised awareness of the endemic untrustworthiness of published empirical evidence. This could potentially initiate a fertile dialogue leading to ameliorating this thorny problem. This could happen when the focus is redirected to the reasoned implementation of frequentist modeling and inference guided by statistical adequacy. That is, replicating influential empirical papers in a particular research area with the same or akin data, and evaluating their statistical and substantive adequacy, represents the most effective way to establish the untrustworthiness of published empirical evidence.

The endemic untrustworthiness of published empirical evidence in most disciplines could be redressed but would require a lot of difficult changes, including: (i) a substantial overhaul of the teaching of probability theory and statistics, (ii) crucial changes in the current statistical software to include M-S testing, and (iii) changes in the editorial policies for papers that rely on statistical inference requiring corroboration of the statistical adequacy for the invoked statistical models.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are publicly available.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| PPV | Positive Predictive Value |
| M-PPV | Metamorphosed PPV |
| MDS | Medical Diagnostic Screening |
| NIID | Normal, Independent and Identically Distributed |
| M-S | Mis-Specification |
| N-P | Neyman–Pearson |
| UMP | Uniformly Most Powerful |
| CAPM | Capital Asset Pricing Model |
| SEV | post-data severity evaluation |

References

- Ioannidis, J.P.A. Why most published research findings are false. PLoS Med. 2005, 2, e124.

- Baker, M. Reproducibility crisis. Nature 2016, 533, 353–366.

- Hoffler, J.H. Replication and Economics Journal Policies. Am. Econ. Rev. 2017, 107, 52–55.

- Wasserstein, R.L.; Schirm, A.L.; Lazar, N.A. Moving to a world beyond ‘p < 0.05’. Am. Stat. 2019, 73, 1–19.

- Simmons, J.P.; Nelson, L.D.; Simonsohn, U. False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allow Presenting Anything as Significant. Psychol. Sci. 2011, 22, 1359–1366.

- Camerer, C.F.; Dreber, A.; Forsell, E.; Ho, T.H.; Huber, J.; Johannesson, M.; Kirchler, M.; Almenberg, J.; Altmejd, A.; Chan, T.; et al. Evaluating replicability of laboratory experiments in economics. Science 2016, 351, 1433–1436.

- Benjamin, D.J.; Berger, J.O.; Johannesson, M.; Nosek, B.A.; Wagenmakers, E.J.; Berk, R.; Bollen, K.A.; Brembs, B.; Brown, L.; Camerer, C.; et al. Redefine statistical significance. Nat. Hum. Behav. 2017, 33, 6–10.

- Shrout, P.E.; Rodgers, J.L. Psychology, science, and knowledge construction: Broadening perspectives from the replication crisis. Annu. Rev. Psychol. 2018, 69, 487–510.

- Johnson, V.E.; Payne, R.D.; Wang, T.; Asher, A.; Mandal, S. On the Reproducibility of Psychological Science. J. Am. Stat. Assoc. 2017, 112, 1–10.

- Andreou, E.; Spanos, A. Statistical adequacy and the testing of trend versus difference stationarity. Econom. Rev. 2003, 22, 217–237.

- Do, H.P.; Spanos, A. Revisiting the Phillips curve: The empirical relationship yet to be validated. Oxf. Bull. Econ. Stat. 2024, 86, 761–794.

- Stark, P.B.; Saltelli, A. Cargo-cult statistics and scientific crisis. Significance 2018, 15, 40–43.

- Fisher, R.A. On the mathematical foundations of theoretical statistics. Philos. Trans. R. Soc. A 1922, 222, 309–368.

- Mayo, D.G.; Spanos, A. Severe Testing as a Basic Concept in a Neyman-Pearson Philosophy of Induction. Br. J. Philos. Sci. 2006, 57, 323–357.

- Nickerson, R.S. Null Hypothesis Significance Testing: A review of an old and continuing controversy. Psychol. Methods 2000, 5, 241–301.

- Spanos, A. Revisiting noncentrality-based confidence intervals, error probabilities, and estimation-based effect sizes. J. Math. Stat. Psychol. 2021, 104, 102580.

- Spanos, A. Revisiting the Large n (Sample Size) Problem: How to Avert Spurious Significance Results. Stats 2023, 6, 1323–1338.

- Spanos, A. Probability Theory and Statistical Inference: Empirical Modeling with Observational Data; Cambridge University Press: Cambridge, UK, 2019.

- Spanos, A. Mis-Specification Testing in Retrospect. J. Econ. Surv. 2018, 32, 541–577.

- Lehmann, E.L.; Romano, J.P. Testing Statistical Hypotheses; Springer: New York, NY, USA, 2005.

- Neyman, J.; Pearson, E.S. On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. A 1933, 231, 289–337.

- Bennett, J.H. Statistical Inference and Analysis: Selected Correspondence of RA Fisher; Clarendon Press: Oxford, UK, 1990.

- Gigerenzer, G. The superego, the ego, and the id in statistical reasoning. In A Handbook for Data Analysis in the Behavioral Sciences: Methodological Issues; Psychology Press: London, UK, 1993; pp. 311–339.

- Halpin, P.F.; Stam, J.H. Inductive Inference or Inductive Behavior: Fisher and Neyman-Pearson Approaches to Statistical Testing in Psychological Research (1940–1960). Am. J. Psychol. 2006, 119, 625–653.

- Fisher, R.A. Properties of Hh functions. In Introduction to the British Association of Mathematical Tables; British Association: London, UK, 1931; Volume 1, p. 26.

- Fisher, R.A. The Design of Experiments; Oliver and Boyd: Edinburgh, UK, 1935.

- Box, J.F. R.A. Fisher, The Life of a Scientist; Wiley: New York, NY, USA, 1978.

- Yerushalmy, J. Statistical problems in assessing methods of medical diagnosis, with special reference to X-ray techniques. Public Health Rep. (1896–1970) 1947, 62, 1432–1449.

- Neyman, J. Outline of statistical treatment of the problem of diagnosis. Public Health Rep. (1896–1970) 1947, 62, 1449–1456.

- Spanos, A. Is Frequentist Testing Vulnerable to the Base-Rate Fallacy? Philos. Sci. 2010, 77, 565–583.

- Bishop, Y.V.; Fienberg, S.E.; Holland, P.W. Discrete Multivariate Analysis; MIT Press: Cambridge, MA, USA, 1975.

- Senn, S. Statistical Issues in Drug Development, 3rd ed.; Wiley: New York, NY, USA, 2021.

- Greenhouse, S.W.; Mantel, N. The evaluation of diagnostic tests. Biometrics 1950, 6, 399–412.

- Nissen-Meyer, S. Evaluation of screening tests in medical diagnosis. Biometrics 1964, 20, 730–755.

- Freedman, D.; Pisani, R.; Purves, R. Statistics, 3rd ed.; Norton: New York, NY, USA, 1998.

- Nosek, B.A.; Hardwicke, T.E.; Moshontz, H.; Allard, A.; Corker, K.S.; Dreber, A.; Fidler, F.; Hilgard, J.; Struhl, M.K.; Nuijten, M.B.; et al. Replicability, robustness, and reproducibility in psychological science. Annu. Rev. Psychol. 2022, 73, 719–748.

- Munafò, M.R.; Nosek, B.A.; Bishop, D.V.M.; Button, K.S.; Chambers, C.D.; Percie du Sert, N.; Simonsohn, U.; Wagenmakers, E.J.; Ware, J.J.; Ioannidis, J.P.A. A manifesto for reproducible science. Nat. Hum. Behav. 2017, 1, 1–9.

- Bird, A. Understanding the Replication Crisis as a Base Rate Fallacy. Br. J. Philos. Sci. 2021, 72, 965–993.

- Leek, J.T.; Peng, R.D. Statistics: P values are just the tip of the iceberg. Nature 2015, 520, 520–612.

- Le Cam, L. Asymptotic Methods in Statistical Decision Theory; Springer: New York, NY, USA, 1986.

- Ellis, P.D. The Essential Guide to Effect Sizes: Statistical Power, Meta-Analysis, and the Interpretation of Research Results; CUP: Cambridge, UK, 2010.

- Neyman, J. Note on an article by Sir Ronald Fisher. J. R. Stat. Soc. Ser. B 1956, 18, 288–294.

- Spanos, A. How the Post-Data Severity Converts Testing Results into Evidence for or against Pertinent Inferential Claims. Entropy 2024, 26, 95.

- Hardy, I.C.W. (Ed.) Sex Ratios: Concepts and Research Methods; Cambridge University Press: Cambridge, UK, 2002.

- Arbuthnot, J. An argument for Divine Providence, taken from the constant regularity observed in the birth of both sexes. Philos. Trans. 1710, 27, 186–190.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).